Abstract

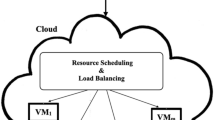

With the increasing and elastic demand for cloud resources, finding an optimal task scheduling mechanism has become a challenge for cloud service providers. Because task lengths and processing demands vary over time, and because cloud resources are dynamic and heterogeneous, existing myopic task scheduling solutions, which aim to maximize immediate scheduling performance, are inefficient and sacrifice long-term system performance in terms of resource utilization and response time. In this paper, we propose an optimal solution for foresighted task scheduling in a cloud environment. Since the dynamics of the virtual machines' queue lengths are not known a priori at run time, we propose an online reinforcement learning approach for foresighted task allocation. By adapting to environmental conditions and responding to unsteady requests, reinforcement learning can yield a long-term increase in system performance. The evaluation results show that the proposed method not only reduces the response time and makespan of submitted tasks but also, as a secondary goal, increases resource efficiency.
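To make the idea of online, foresighted task allocation concrete, the following is a minimal sketch using plain tabular Q-learning. It is not the algorithm of this paper. The toy queueing model (one arrival per step, Bernoulli service completions), the reward (negative total backlog as a rough proxy for response time), and every name and parameter value in it are assumptions chosen for illustration only.

```python
import random
from collections import defaultdict


def run_q_learning_scheduler(service_probs=(0.4, 0.8), steps=20000, alpha=0.1,
                             gamma=0.9, epsilon=0.1, max_queue=8, seed=0):
    """Toy online Q-learning scheduler (illustrative assumptions throughout).

    State  : tuple of VM queue lengths.
    Action : index of the VM that receives the arriving task.
    Reward : negative total backlog after the step.
    """
    rng = random.Random(seed)
    num_vms = len(service_probs)
    q_table = defaultdict(float)      # Q[(state, action)], initialised to 0
    queues = [0] * num_vms
    total_backlog = 0

    def greedy(state):
        # Action with the highest current Q-value (ties go to the lowest index).
        return max(range(num_vms), key=lambda a: q_table[(state, a)])

    for _ in range(steps):
        state = tuple(queues)
        # Epsilon-greedy exploration: the queue dynamics are unknown up front,
        # so the agent must occasionally try non-greedy assignments.
        if rng.random() < epsilon:
            action = rng.randrange(num_vms)
        else:
            action = greedy(state)

        # One task arrives per step; a task sent to a full queue is dropped.
        queues[action] = min(queues[action] + 1, max_queue)

        # Each busy VM finishes one task with its own (heterogeneous) probability.
        for vm, p in enumerate(service_probs):
            if queues[vm] > 0 and rng.random() < p:
                queues[vm] -= 1

        reward = -sum(queues)
        next_state = tuple(queues)

        # Standard Q-learning update. The discounted term gamma * best_next is
        # what makes the policy foresighted; gamma = 0 would give a myopic rule.
        best_next = max(q_table[(next_state, a)] for a in range(num_vms))
        td_error = reward + gamma * best_next - q_table[(state, action)]
        q_table[(state, action)] += alpha * td_error

        total_backlog += sum(queues)

    return q_table, total_backlog / steps


if __name__ == "__main__":
    table, avg_backlog = run_q_learning_scheduler()
    print(f"visited state-action pairs: {len(table)}")
    print(f"average backlog per step: {avg_backlog:.3f}")
```

Setting `gamma=0` in this sketch turns the learner into a myopic scheduler that only values the immediate backlog, which gives a simple way to compare the two behaviours on the same toy model.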

Acknowledgements

We would like to express our gratitude to Fatemeh Ahmadi for her great assistance in implementing the simulation scenarios discussed in this article.

Cite this article

Mostafavi, S., Hakami, V. A Stochastic Approximation Approach for Foresighted Task Scheduling in Cloud Computing. Wireless Pers Commun 114, 901–925 (2020). https://doi.org/10.1007/s11277-020-07398-9

DOI: https://doi.org/10.1007/s11277-020-07398-9