Abstract

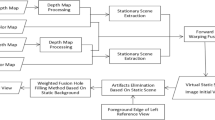

With recent progress in multi-view devices and the associated signal processing techniques, stereoscopic viewing has reached the public and attracted growing interest. Depth perception in human vision requires two different video sequences, one for each eye. These sequences can be either captured by 3D-enabled cameras or synthesized as needed. The primary contribution of this paper is a pair of transformation models, one for stationary scenes and one for non-stationary objects in a given view. The models can be used to produce the corresponding stereoscopic video as a viewer would have seen it at the original scene. The model for stationary scenes estimates depth from the vanishing point and vanishing lines of the given video. The model for non-stationary regions estimates depth by combining motion analysis of those regions with the stationary-scene model. The performance of the models is evaluated using subjective 3D video quality evaluation and objective quality evaluation of the synthesized views. A comparison with the ground truth and with VSRS, a well-known multi-view video synthesis algorithm that requires six views to complete synthesis, is also presented. The results show that the proposed method provides better perceptual 3D video quality with natural depth perception.

About this article

Cite this article

Han, CC., Hsiao, HF. Depth Estimation and Video Synthesis for 2D to 3D Video Conversion. J Sign Process Syst 76, 33–46 (2014). https://doi.org/10.1007/s11265-013-0805-8

DOI: https://doi.org/10.1007/s11265-013-0805-8