Abstract

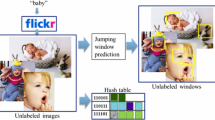

Automatically assigning keywords to images is of great interest as it allows one to retrieve, index, organize and understand large collections of image data. Many techniques proposed for image annotation in the last decade give reasonable performance on standard datasets. However, most of these works fail to compare their methods with simple baseline techniques to justify the need for complex models and subsequent training. In this work, we introduce a new and simple baseline technique for image annotation that treats annotation as a retrieval problem. The proposed technique utilizes global low-level image features and a simple combination of basic distance measures to find nearest neighbors of a given image. The keywords are then assigned using a greedy label transfer mechanism. The proposed baseline method outperforms the current state-of-the-art methods on two standard datasets and one large Web dataset. We believe that such a baseline measure will provide a strong platform to compare and better understand future annotation techniques.
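The retrieval-style pipeline described above can be illustrated with a minimal sketch. The abstract does not give the details, so everything here is an assumption made for illustration: the feature names (`color`, `texture`), the use of L1 distance, the equal-weight average of per-feature distances scaled to [0, 1], and the particular greedy transfer rule (take the nearest neighbor's keywords first, ranked by training-set frequency, then fill remaining slots from the other neighbors by how often each keyword occurs among them). All function names are hypothetical.

```python
from collections import Counter


def l1(a, b):
    """L1 distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def combined_distances(query, train_feats):
    """Average the per-feature distances, each scaled to [0, 1].

    Assumption: equal weights for all features; the abstract only says
    "a simple combination of basic distance measures".
    """
    n = len(train_feats)
    total = [0.0] * n
    for name in query:
        d = [l1(query[name], t[name]) for t in train_feats]
        scale = max(d) or 1.0  # avoid division by zero if all distances are 0
        for i in range(n):
            total[i] += d[i] / scale
    return [t / len(query) for t in total]


def transfer_labels(query, train_feats, train_labels, k=3, n_labels=2):
    """Greedy label transfer from the k nearest training images (assumed rule)."""
    dist = combined_distances(query, train_feats)
    neighbors = sorted(range(len(dist)), key=lambda i: dist[i])[:k]

    # Step 1: keywords of the nearest neighbor, most frequent in training first.
    global_freq = Counter(w for labels in train_labels for w in labels)
    nearest = sorted(train_labels[neighbors[0]],
                     key=lambda w: (-global_freq[w], w))
    chosen = nearest[:n_labels]

    # Step 2: fill remaining slots from the other neighbors by local frequency.
    if len(chosen) < n_labels:
        local = Counter(w for i in neighbors[1:]
                        for w in train_labels[i] if w not in chosen)
        rest = sorted(local, key=lambda w: (-local[w], w))
        chosen += rest[:n_labels - len(chosen)]
    return chosen


# Toy data (invented for this example): two global features per image.
train_feats = [
    {"color": [1.0, 0.0], "texture": [0.0, 1.0]},
    {"color": [0.9, 0.1], "texture": [0.1, 0.9]},
    {"color": [0.0, 1.0], "texture": [1.0, 0.0]},
    {"color": [0.8, 0.2], "texture": [0.2, 0.8]},
]
train_labels = [["sky"], ["sky", "sea"], ["car", "road"], ["sea", "boat"]]
query = {"color": [1.0, 0.0], "texture": [0.05, 0.95]}

print(transfer_labels(query, train_feats, train_labels, k=3, n_labels=2))
```

No model is trained in this sketch: annotation reduces to a nearest-neighbor lookup followed by a deterministic ranking of the neighbors' keywords, which is what makes such a method usable as a baseline.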

Cite this article

Makadia, A., Pavlovic, V. & Kumar, S. Baselines for Image Annotation. Int J Comput Vis 90, 88–105 (2010). https://doi.org/10.1007/s11263-010-0338-6

DOI: https://doi.org/10.1007/s11263-010-0338-6