Abstract

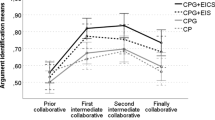

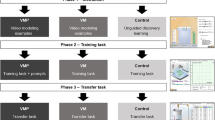

This study examined how different forms of instructional scaffolding influenced students' actual use of evaluation skills to improve argumentation quality during college science inquiry. Source representation scaffolds supported multi-faceted reflection on complex source properties while managing cognitive load. Students were given an online annotation tool (treatment) in addition to a checklist (control). Goal instructions were intended to induce critical task perceptions and evaluation standards. Balanced reasoning goals (treatment) replaced a typical persuasion goal (control). Students from one introductory biology course were assigned to one of the four conditions of the two-by-two design, which was implemented over one academic semester. We examined student arguments during and after the interventions to measure evidence quality, reasoning, and conceptual integration. Overall, the scaffolds did not influence argumentation independently; rather, we detected complex interaction effects across scaffolds and classroom dynamics. For low-prior-knowledge groups, annotation increased argumentation quality when complemented by balanced goals. For mixed-prior-knowledge groups, annotation alone influenced argumentation, independent of goal instructions. Transfer effects were marginal yet promising for low-prior-knowledge groups. The results supported the synergistic integration of the two scaffolding functions, yet suggested possible difficulties in using and sustaining scaffold effects in complex classroom situations.

Notes

The authors acknowledge Intel® Innovation in Education for permission to use the tool in this research.

Cite this article

Kim, S.M., Hannafin, M.J. Synergies: effects of source representation and goal instructions on evidence quality, reasoning, and conceptual integration during argumentation-driven inquiry. Instr Sci 44, 441–476 (2016). https://doi.org/10.1007/s11251-016-9381-1

DOI: https://doi.org/10.1007/s11251-016-9381-1