Abstract

The era of 5G networks is approaching fast, and its commercialization is planned for 2020. However, numerous aspects still need to be solved and standardized before an average end-user can benefit from them on a daily basis. The 5G technology is supposed to be faster, provide services of higher quality, and better address the evolving needs of customers. As a consequence, the 5th generation network needs to be implemented with efficiency and flexibility in mind; it therefore fits well with the concepts of virtualization, which enable the sharing of physical resources among different operators, services, and applications. In this paper, we present an overview of these concepts, resulting from discussions between academic researchers and active network architects, and we describe the operation of a model that is most likely to emerge in such a complex network environment.

1 Introduction

The upcoming Olympic Games (the 2018 Winter Olympics in South Korea, the 2020 Summer Olympics in Japan, and the 2022 Winter Olympics in China [1]), combined with bandwidth-hungry social communication models (omnipresent voice and video transmissions accompanied by cloud-based photo sharing and extended reality services), stimulate the demand for 5th generation wireless network (abbreviated 5G) technology. 5G has been defined by the 5G Infrastructure Public Private Partnership (5G PPP) in the form of Key Performance Indicators (KPIs) [2], which compare the envisioned 5G networks with the networks currently in use. A thousandfold increase in data volume per geographical area, up to 100 times higher typical user data rates, and an end-to-end latency below 1 ms are just examples of the highly demanding challenges that need to be tackled in the upcoming years. Therefore, the technology behind the 5G architecture is expected to provide a several-fold improvement in capacity and delay compared with networks of previous generations. Moreover, 5G is being designed to resist the performance degradation associated with large numbers of simultaneous users, allowing Machine-to-Machine (M2M) [3] [X1] and Internet of Things (IoT) [4] communication to blossom.

It is envisaged that 5G networks need to deliver the facilities necessary for the further development of various vertical sectors of industry and the economy. Following [5], five key verticals have been identified: e-health, factories of the future, automotive, energy, and media and entertainment. Stable provision of numerous services to stakeholders from these sectors will only be possible when the network architecture and the implemented technological solutions can precisely reflect their stringent and often contradictory requirements. For example, the expected supported user mobility may be much more demanding for media and entertainment than for the energy sector. Similarly, positioning-accuracy requirements in the automotive sector could be highly challenging, whereas this will not usually be the case for energy-consumption metering. Such examples demonstrate the need for flexible and adaptive solutions: the 5G network architecture should enable the distributed and elastic allocation of network functions specific to each vertical industry [5].

The future of communication networks will be shaped by the requirements imposed by the KPIs identified above, which entail the ongoing development of new paradigms for flexible yet accurate designs of the access and core parts of the network architecture. The application of virtualization techniques, together with the further expansion of software-defined (radio) networks, is among the possible enablers [6,7,8,9]. Moreover, the extremely demanding data rates stated in the KPIs lead to the development of new radio access solutions (such as the utilisation of millimetre waves [10, 11], massive MIMO schemes [12, 13], or new waveforms [14]) and to the design of novel approaches to spectrum usage and regulation (such as the already mentioned use of higher frequencies, and flexible spectrum management allowing for spectrum sharing [15,16,17,18]). All these changes also have to be reflected by standardisation bodies, such as the 3rd Generation Partnership Project (3GPP), which plans to release the first standard defining the 5G network by 2020 [19].

Having in mind the plethora of possible technical solutions on one side and the variety of demands originating from different verticals on the other, in this paper we concentrate on one specific aspect of the 5G architecture, i.e. virtualisation and sharing paradigms. Our motivation for this selection is at least threefold. First, many research activities regarding the virtualisation of wireless communication networks are currently pursued around the world. Worth mentioning are projects funded by the European Commission, such as COHERENT [20,21,22], which concentrates on network abstraction and the utilisation of annotated network graphs to enhance network virtualisation; 5G NORMA [23], focused on dynamic adaptive sharing of network resources between operators; and 5G Superfluidity [24,25,26], which aims at proposing a cloud-based concept that will allow for innovative use cases of 5G networks; as well as activities initiated by the National Science Foundation in the United States [27]. Second, the key industry players (such as mobile network operators) foresee significant benefits from the application of virtualisation in wireless communication networks, as supported by recent press releases on so-called 5G trials [28]. Finally, numerous solutions under the umbrella of network virtualisation have already been applied with great success in practice. However, these refer mainly to the backbone parts of communication networks (e.g. virtualisation of core IP/MPLS networks by telecommunications operators) and to data centres (e.g. virtualisation of operating systems).
In the area of wireless networks, examples of such practices are the presence and popularity of so-called Mobile Virtual Network Operators (MVNOs), and infrastructure sharing among geographically overlapping operators aimed at optimising capital expenditure (CAPEX), which enables the emergence of service providers that have neither network infrastructure nor frequency spectrum of their own. This evolution entails significant changes in network management, deployment and development, but it is only possible due to the accurate implementation of resource virtualisation and sharing concepts. Although the application of network sharing approaches in 5G networks seems generally well justified, many aspects need to be discussed in detail, and the pros and cons of these concepts need to be evaluated.

The objective of this review and position paper is to summarize and discuss the implications of current approaches to resource sharing in telecommunication networks, to position them against the ideas under development, and to introduce and justify perspectives for a three-layer model in relation to operational practices, market constraints, and technology development. Therefore, aside from the comprehensive review, the key contribution of this paper is a simplified and abstracted model that arose from the authors' experience in designing, operating and studying both deployed and academically investigated network structures and technologies.

This review and position paper is structured as follows. In Sect. 2 we analyse virtualisation and sharing approaches suitable for wireless and wired networks, which constitute the rationale for the authors' position on the architecture of future communication networks. Next, Sect. 3 discusses the three-layered technical and operational model expected to emerge in future communication networks, accompanied by a presentation of the range of technologies that can facilitate resource sharing in practice. Finally, Sect. 4 analyses market and regulatory constraints, while Sect. 5 concludes the paper.

2 Resource virtualisation and sharing

The virtualisation concept is very broad and, although traditionally attributed to operating systems, it can also be applied to applications, services, networks and more. It is often considered an enabler of better resource utilisation and higher efficiency through sharing between different interested parties. Thus, it can be used to reduce operational costs and to increase flexibility while maintaining a product-level degree of standardisation. It is therefore reasonable to consider it a key element of the 5G architecture.

As a term, virtualisation represents a broad idea of separating the requests for resources or services from the actual underlying resources (i.e. infrastructure or software). The practical implementation of this concept leads to the introduction of a dedicated abstraction layer which is placed between the computing, storage or networking hardware (physical resources layer), and the services running on top of the underlying infrastructure. This, in consequence, leads to the isolation of virtual servers (often called virtual machines), containers or processes and to the hardware-independence of the applied solutions [29]. In that context, sharing refers to the process of simultaneous or non-simultaneous (i.e. consecutive) usage of available resources by different stakeholders or services.

In principle, virtualisation as an idea cannot be implemented without sharing the available set of resources. Moreover, as these resources may be virtualised, the stakeholders or services (understood in a very broad sense, e.g. network operators, mobile users, virtual operating systems, processes) do not necessarily need to know which physical resources have ultimately been utilised by them. This idea has been applied successfully in microprocessors and in computer science, leading, e.g., to the fast development and popularity of virtual machines (understood as numerous virtual instances of a system or a computer operated on a single physical device) and to the cloudification of operations and resources. In a broader context, this success of resource virtualisation paved the way for network softwarisation via the so-called Software Defined Networking (SDN) and Network Function Virtualisation (NFV) [6, 9, 30].

Software Defined Networking provides a level of abstraction over network configuration and operation, as it moves the management duties from individual network devices to an abstracted control layer, which is primarily designed to operate centrally, possibly in a cloud-based but still centrally operated manner. Since SDN aims at enabling dynamic network control in a feature-rich and distributed environment, its architecture is based on three layers, i.e. the infrastructure layer (responsible for forwarding and data processing), the controller layer, and the application layer [31]. OpenFlow is a well-known protocol that standardises access to the forwarding plane of an Internet Protocol (IP) network device (a switch or a router). Network Function Virtualisation can be a valuable addition to SDN, as the main objective of NFV is to logically separate particular network functions, such as firewalls, Network Address Translation (NAT) routers and load-balancers, from the underlying hardware and infrastructure [32], and to move them to a virtualised and often distributed computing environment. The concept of NFV has gained significant attention in the last few years. The development of the general idea is increasingly visible, since numerous service providers, vendors, and research institutes have combined their efforts under the aegis of the European Telecommunications Standards Institute (ETSI) in the so-called Industry Specification Group for NFV, created by seven of the world's leading telecom network operators in November 2012 [33].

5G architecture development efforts are not only aimed at implementing virtualisation and sharing concepts; these concepts also seem to impact, and benefit from, the whole telecommunications ecosystem. Therefore, the next subsections overview the most common approaches to, and objectives of, sharing in wired and wireless networks, since 5G systems are intended to be based on both.

2.1 Resource sharing in wired networks

Currently, the most common form of wired infrastructure sharing concerns the last mile. This type of sharing typically comes in two flavours: Local Loop Unbundling (LLU) and Bitstream Access (BSA). LLU means sharing the local loop (usually a copper pair) with another operator, and thus it is a physical form of sharing [34]. BSA refers to the creation of an overlaying broadband data transport service, and thus it is a virtual form of sharing [35].

Historically, these forms of operation were imposed on incumbent telecom operators through regulatory processes, which obliged them to make their copper networks available to other market players. It has been observed that LLU typically has a positive effect on broadband penetration in the early years [36], but in later years, as the market matures and offers a higher level of competition, such a form of market stimulation is no longer needed [36].

In recent years, many operators have changed their standpoint and started to treat open access to their infrastructure as an opportunity to discourage competing operators from building overlapping networks, since the competitors can lease the existing infrastructure instead. This approach is especially visible in Northern Europe, where the Open Access Network (OAN) model has been developed [37]. As depicted in Fig. 1, this model separates the roles of service provider, network operator and network owner, and thus takes the sharing concept to the extreme. The network owner becomes a wholesale infrastructure operator that does not provide end-user services but leaves these responsibilities to dedicated service providers, which are charged for the right to use the underlying infrastructure and data transport services.

Fig. 1 Open access network model [37]

The OAN model is also quite flexible, because the three roles can be aggregated but also distributed among various parties. For example, in one case a network operator may also provide services to end customers, while in another case multiple network operators may be required. Various countries, and even various regions within a country, often exhibit different needs, customer expectations and cost models, and thus require separate analyses. In general, however, such an ownership model can be applied in most cases where network sharing is required from an economic point of view.

On the one hand, the OAN model increases competition among service providers; on the other hand, it may lead to a monopoly of the network owner. Such a monopoly may result in limited access to Next Generation Access (NGA) networks [38] and broadband Internet, as well as in reduced network quality. Therefore, from the end-user's perspective, it is valid to conclude that there should be at least two competing infrastructures. Many countries, for example Poland [39], implement regulations defining rules for telecommunication installations in public buildings and collective residential buildings, which introduce the obligation to install different types of last-mile media (cables), i.e. copper twisted pair, coaxial cable and optical fibre, and to terminate them in a distribution frame that allows easy access by various operators.

In summary, sharing concepts for wired networks are especially attractive and needed in rural areas and in developing markets, where they allow operators to share the entry costs and limit operational costs. The same applies to those European Union (EU) countries which are lagging behind the EU average in terms of NGA and broadband deployment, as evidenced in the European Commission’s Digital Agenda Scoreboard [40].

2.2 Resource sharing in wireless networks

Applying the ideas of virtualisation and sharing to wireless communication networks may require a complete redefinition of the architecture of such networks and new paradigms describing the processes of design, deployment and management. A general end-user (whether a mobile user of a cellular network or a user connected wirelessly to a fibre-optic home router) may use services offered by the service provider, while the latter is not aware of exactly which physical resources (provided by the infrastructure operator) will be used to deliver the requested service. In that case, the service provider interacts with the infrastructure operator at the software level, usually by exchanging control messages through a dedicated Application Programming Interface (API). Let us briefly discuss two simplified use cases.

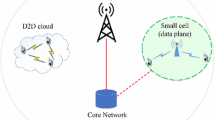

In the first case, the mobile user would like to transfer a portion of data at the desired rate and hence sends a request to the service provider (using the means and resources identified in the service license agreement). The service provider then realises this request using the resources currently available in the heterogeneous radio access network, which is a mixture of IEEE 802.11-compliant access points, cellular networks and point-to-point links. Moreover, if the user is mobile, the service provider is able to provide seamless data transfer based on handover between different access technologies. The role of the service provider would be to orchestrate the available resources (delivered by infrastructure operators) and to use virtualised network functions, such as firewalls and load-balancers, to deliver virtual services to end-users. This case is illustrated in Fig. 2, where the service provider offers a set of services to end-users, i.e. clients (in this example, four services numbered from 1 to 4), such as video streaming or file transfer. When the user sends a request for a particular service (in our case, service number 3), this information is relayed to the network orchestration system, which selects the best underlying wireless technology for the realisation of the request. In the example, the network orchestrator selected the 4G network to fulfil that particular request.
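The orchestrator's selection step can be sketched in Python. This is a minimal sketch under assumed, simplified criteria (free capacity, a latency bound and mobility support); the class and function names (`AccessNetwork`, `ServiceRequest`, `select_access`), the "most free capacity" tie-breaking rule and all numbers are hypothetical and do not describe any particular orchestration system.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class AccessNetwork:
    name: str                 # e.g. "WLAN", "4G", "PtP" (illustrative)
    free_capacity_mbps: float
    latency_ms: float
    supports_mobility: bool   # e.g. handover support for mobile users

@dataclass
class ServiceRequest:
    service_id: int
    rate_mbps: float
    max_latency_ms: float
    user_is_mobile: bool

def select_access(request: ServiceRequest, networks: List[AccessNetwork]) -> Optional[str]:
    """Return the name of the chosen access network, or None if no network
    can serve the request. Among feasible networks, the one with the most
    free capacity is chosen (assumed policy) and the rate is reserved."""
    feasible = [
        net for net in networks
        if net.free_capacity_mbps >= request.rate_mbps
        and net.latency_ms <= request.max_latency_ms
        and (net.supports_mobility or not request.user_is_mobile)
    ]
    if not feasible:
        return None
    best = max(feasible, key=lambda net: net.free_capacity_mbps)
    best.free_capacity_mbps -= request.rate_mbps  # reserve capacity
    return best.name

networks = [
    AccessNetwork("WLAN", 50.0, 5.0, False),
    AccessNetwork("4G", 30.0, 20.0, True),
    AccessNetwork("PtP", 500.0, 2.0, False),
]
# Service 3, requested by a mobile user: only 4G supports mobility here.
print(select_access(ServiceRequest(3, 8.0, 50.0, True), networks))  # 4G
```

With these illustrative values, the mobile request for service 3 can only be served by the 4G network, mirroring the example in Fig. 2; a real orchestrator would also weigh cost, energy and policy constraints.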

Another example refers to a situation where an IEEE 802.11 Wireless Local Area Network (WLAN) provider deploys a set of N access points (APs) over a certain area and, utilising the benefits offered by virtualisation and sharing, creates a set of M virtual access points that are visible to end-users. In such a case, the end-user is associated with a certain virtual AP, i.e. it uses a specific Service Set Identifier (SSID), but the user is not aware of which actual device (WLAN access point) physically handles the data transmission, as shown in Fig. 3. Note that the gain of such an approach is not an increased capacity of the WLAN system (the total capacity depends directly on the available physical devices and occupied channels). The benefits lie in fully software-based and remote management of the available networks. For example, a new WLAN network dedicated to a single event may be created without any modification of the physical structure of the network.
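The mapping of M virtual APs onto N physical APs can be illustrated with a small controller model. It is a sketch that assumes equal, fixed capacity per physical AP and a least-loaded association policy; the class `VirtualWlan` and its methods are hypothetical. It reflects the two points made above: SSIDs are created purely in software, and the total capacity is bounded by the physical APs regardless of how many SSIDs exist.

```python
from collections import defaultdict
from typing import Dict, List

class VirtualWlan:
    """Hypothetical controller mapping virtual APs (SSIDs) onto physical APs."""

    def __init__(self, physical_aps: List[str], capacity_per_ap_mbps: float):
        # Assumption: every physical AP offers the same fixed capacity.
        self.capacity: Dict[str, float] = {ap: capacity_per_ap_mbps for ap in physical_aps}
        self.ssid_to_aps: Dict[str, List[str]] = {}
        self.clients: Dict[str, List[str]] = defaultdict(list)  # physical AP -> clients

    def create_ssid(self, ssid: str, aps: List[str]) -> None:
        """Create a virtual AP in software only; no hardware change."""
        unknown = [ap for ap in aps if ap not in self.capacity]
        if unknown:
            raise ValueError(f"unknown physical APs: {unknown}")
        self.ssid_to_aps[ssid] = list(aps)

    def associate(self, client: str, ssid: str) -> str:
        """The client only sees the SSID; the controller picks the least
        loaded physical AP serving that SSID."""
        ap = min(self.ssid_to_aps[ssid], key=lambda a: len(self.clients[a]))
        self.clients[ap].append(client)
        return ap

    def total_capacity(self) -> float:
        # Bounded by the physical APs, regardless of the number of SSIDs.
        return sum(self.capacity.values())

wlan = VirtualWlan(["ap1", "ap2", "ap3"], capacity_per_ap_mbps=100.0)  # N = 3
wlan.create_ssid("corporate", ["ap1", "ap2", "ap3"])
wlan.create_ssid("event", ["ap2", "ap3"])          # M = 2, no hardware touched
print(wlan.associate("alice", "corporate"))        # ap1
print(wlan.associate("bob", "event"))              # ap2
print(wlan.associate("carol", "corporate"))        # ap3
print(wlan.total_capacity())                       # 300.0
```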

Both cases show the variety of ways in which virtualisation and sharing paradigms can be effectively applied in wireless networks. However, the benefits of virtualisation and sharing have already been exploited, at least to a certain extent, in contemporary wireless networks. In many countries, traditional Mobile Network Operators (MNOs) work in parallel with MVNOs. While the former are understood here as entities offering dedicated services and utilising their own infrastructure (i.e. managed and operated directly by them), the virtual operators do not possess actual infrastructure. They may, for example, utilise (or lease) the physically deployed devices, frequencies, licenses, etc. that belong to other stakeholders, either MNOs or a dedicated entity called the Mobile Virtual Network Enabler (MVNE).

Another example involves infrastructure sharing, where some MNOs agree to share their infrastructure to further reduce costs. A typical situation occurs in highly saturated or remote (hard-to-access) areas, where it is more cost-effective for a new operator (who becomes a service provider in that case) to lease resources that belong to an operator already well established in that region (such as spectrum, processing capabilities in base stations, physical space for antennas or cards in the cabinets, operation and maintenance staff, etc.) than to deploy a new base station. Following [41, 42], various types of sharing can be identified, e.g. mast sharing, site sharing, radio-access-network sharing, network roaming and core-network sharing. Such a variety of opportunities indicates the large potential of wireless network virtualisation.

3 Three-layered model of future communication networks

The above observations lead us to the conclusion that future communication networks will require a revision of the traditional understanding of their architecture. Following [6], we envisage that a generalized three-layer architecture can be applied, as shown in Fig. 4. This high-level approach assumes that the practical implementation of virtualization in every kind of network (wired, wireless or mixed) will lead to a functional split between the services offered to end-users and the physical resources utilized and maintained underneath. The three layers are the infrastructure layer, which forms the basis for the orchestration layer, and the service layer located on top of it.

Let us notice that the three-layer structure may be implemented in various ways, i.e. some layers may be merged (e.g. contemporary MNOs operate in all three layers, whereas an MVNO covers the orchestration and service layers) or further split (e.g. the infrastructure layer may be split, based on spectrum management, into a control layer and a radio-access infrastructure layer, where the former is responsible for the management of available resources, licenses and agreements between various stakeholders, whereas the latter deploys and maintains only the hardware for radio access). As an evolution of the networks used so far, the 5G architecture will both benefit from decades of rapid technological development, combining these advances to meet changing needs, and introduce new ideas into the world of emerging 5th generation networks.
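The merging of layers described above can be expressed as a simple actor-to-layer mapping. This is an illustrative sketch only: the `Layer` enum, the `ROLES` table and `missing_lower_layers` are hypothetical names, and the assignments merely restate the MNO and MVNO examples from the text plus a pure infrastructure operator. The helper lists the lower layers an actor does not cover itself and must therefore obtain from others.

```python
from enum import Enum

class Layer(Enum):
    INFRASTRUCTURE = 1
    ORCHESTRATION = 2
    SERVICE = 3

# Actor-to-layer assignments restating the examples in the text.
ROLES = {
    "MNO": {Layer.INFRASTRUCTURE, Layer.ORCHESTRATION, Layer.SERVICE},
    "MVNO": {Layer.ORCHESTRATION, Layer.SERVICE},
    "infrastructure operator": {Layer.INFRASTRUCTURE},
}

def missing_lower_layers(actor):
    """Layers below the actor's topmost layer that it does not cover
    itself and therefore has to obtain (e.g. lease) from other actors."""
    covered = ROLES[actor]
    top = max(layer.value for layer in covered)
    return [layer for layer in Layer if layer.value < top and layer not in covered]

for actor in ROLES:
    print(actor, [layer.name for layer in missing_lower_layers(actor)])
```

For the MVNO, the helper returns only the infrastructure layer, which corresponds to the leasing relationship described earlier; the MNO and the pure infrastructure operator depend on no lower layer.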

The key aspects and mechanisms suitable for the layers of the presented model are therefore discussed in the following subsections. The concept behind each layer and its main challenges are followed by the most promising technological solutions to support these efforts. Note that it is not possible today to answer precisely how these challenges will or should be addressed. Furthermore, considerable effort will be needed to tackle the problems at hand and bring the concept of the three-layer model closer to reality.

3.1 Infrastructure layer

The first (bottom) layer of the proposed model refers to the physically available resources and will be constituted by so-called infrastructure operators. This layer will represent the stakeholders offering the possibility of using different resources, such as access points, base stations, masts, routers and optical cables, as well as licensed frequency bands in radio spectrum sharing models. In the context of wireless network access, one may imagine companies deploying only masts and building backbone IP/MPLS networks to provide wholesale access to the cellular system. A similar situation can take place in wired networks, where infrastructure operators will build, own and operate passive (e.g. cabling, racks) and active (e.g. routers, power supply systems) infrastructure. Another possible direction is that the operation and maintenance of already deployed infrastructure will be outsourced to dedicated companies (external or subsidiary) acting as infrastructure operators, i.e. companies specialised in the management of the infrastructure layer. To be able to share resources with different wholesale customers, infrastructure operators need not only to maintain the network but also to operate it in a way that supports flexible orchestration. Only then will the separation, simultaneity and accounting of individual services be possible. Therefore, hardware and software vendors, as well as network architects, have to investigate and address the challenges discussed in the next subsection.

3.1.1 Challenges

There are several challenges in the infrastructure layer when implementing resource sharing:

- First, a common interface with a set of SDKs is needed to control and coordinate the usage of various access technologies. The orchestrator (described in the following section) has to be able to communicate effectively with any supported access technology, regardless of the manufacturer or vendor of the device. This aspect will require a common agreement (probably in the form of a standard) between various stakeholders.
- Additionally, control-information overhead is needed for effective coordination of the underlying access technologies. However, including it in the information exchange consumes additional power and bandwidth.
- One may expect increased complexity of transmitter and receiver architectures if resource sharing is implemented at the level of the physical medium, e.g. access to fibre or spectrum.
- On the other hand, if resource sharing is implemented at a higher layer, i.e. above the MAC layer, the challenge is to abstract the available resources reliably; e.g. the throughput of a wireless network varies depending on the channel conditions and the interference-plus-noise floor. Also, a dedicated mapping tool will be needed that projects the transmit opportunities offered by a specific transmission technique (e.g. a MIMO transmission scheme, multipoint transmission such as Coordinated MultiPoint (CoMP), enhanced Inter-Cell Interference Coordination (eICIC), etc.) into a form understandable by the orchestration layer, as proposed in, e.g., [43].
- Finally, mostly in wireless transmission, additional interference can be expected as a result of inaccurate and not always reliable decisions of the orchestrator.
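One way a mapping tool might abstract a fluctuating radio link into a single figure for the orchestration layer is sketched below. It assumes the Shannon bound C = B log2(1 + SINR) as the rate model, a low percentile of observed SINR samples as a conservative operating point, and a fixed efficiency factor; the function names and the default values of `percentile` and `efficiency` are hypothetical choices, not taken from [43].

```python
import math
from typing import Sequence

def shannon_rate_mbps(bandwidth_mhz: float, sinr_db: float) -> float:
    """Shannon bound C = B * log2(1 + SINR); MHz in, Mbit/s out."""
    sinr_linear = 10 ** (sinr_db / 10)
    return bandwidth_mhz * math.log2(1 + sinr_linear)

def advertised_capacity_mbps(bandwidth_mhz: float,
                             sinr_samples_db: Sequence[float],
                             percentile: float = 0.1,
                             efficiency: float = 0.6) -> float:
    """Abstract a fluctuating link into one conservative figure: the
    Shannon rate at a low percentile of the observed SINR samples,
    scaled by an assumed implementation-efficiency factor."""
    if not sinr_samples_db:
        raise ValueError("need at least one SINR sample")
    rates = sorted(shannon_rate_mbps(bandwidth_mhz, s) for s in sinr_samples_db)
    index = int(percentile * (len(rates) - 1))
    return efficiency * rates[index]

samples_db = [0.0, 3.0, 10.0, 15.0, 20.0]  # hypothetical SINR measurements
print(advertised_capacity_mbps(20.0, samples_db))  # 12.0 for a 20 MHz channel
```

Taking a low percentile rather than the mean trades advertised capacity for reliability, which is exactly the tension described in the challenge above.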

3.1.2 Supporting technologies

To tackle the aforementioned challenges, a number of solutions related to different aspects of network architecture will come into use, as presented below.

Cognitive radio

Cognitive Radio is a concept of a radio that is aware of its operating environment (e.g. wireless devices transmitting in the neighbourhood, propagation channel characteristics, regulatory policies) and of its own capabilities (e.g. transmit power, possible bandwidth and carrier frequency, battery utilization) [44]. Therefore, it can be a crucial element of a distributed (uncoordinated) Dynamic Spectrum Access (DSA) system. Interestingly, both its reactions to the acquired knowledge and the knowledge itself should be described by means of a high-level XML-like language [44]. As such, this technology should be deployable in a virtualised 5G network, as its operation (e.g. association with a given access point, support of a given service) can be dynamically adjusted.

This technology can increase throughput by utilising spectral resources left unused at a given location, time and frequency by the spectrum license owners (called primary users), which is a crucial feature of a 5G system, as rapidly increasing throughput requirements are limited by the available spectrum resources. Such Cognitive Radio operation should be enabled only if primary users do not experience harmful interference caused by the additional users authorised to use the spectrum, as in the Licensed Shared Access (LSA) approach. This places a strong research emphasis on the design of reliable, yet computationally simple, spectrum sensing algorithms [45] to detect active primary users. From the transmitter perspective, a proper waveform has to be used [17] to maximise throughput without deteriorating the operation of the primary users detected during the spectrum sensing phase. Such an agile approach to spectrum utilisation can be used by different MNOs to share the available bandwidth in a licensed band. Interestingly, it is probably the only technology that allows device-to-device communication to be established in a licensed band without access to the network infrastructure (e.g. to information provided by the control channel). In addition, Cognitive Radio technology can be used to share an unlicensed band with other transmissions utilising the same or another radio access technology.
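Energy detection is one of the simplest spectrum sensing algorithms and illustrates the kind of computationally simple detector referred to above (it is not necessarily the approach of [45]). The sketch assumes real-valued samples, a perfectly known noise variance, and a Gaussian (central limit) approximation of the test statistic when setting the threshold for a target false-alarm probability `p_fa`; the function names and the test signal are hypothetical.

```python
import math
import random
from statistics import NormalDist
from typing import Sequence

def energy_threshold(noise_var: float, n: int, p_fa: float) -> float:
    """Threshold on the mean energy of n real Gaussian noise samples.
    Under H0 (no primary user) the statistic has mean noise_var and
    variance 2 * noise_var**2 / n; a Gaussian approximation is used."""
    q_inv = NormalDist().inv_cdf(1.0 - p_fa)
    return noise_var * (1.0 + q_inv * math.sqrt(2.0 / n))

def primary_user_present(samples: Sequence[float], noise_var: float,
                         p_fa: float = 0.01) -> bool:
    """Energy detector: compare the mean sample energy with the threshold."""
    n = len(samples)
    statistic = sum(x * x for x in samples) / n
    return statistic > energy_threshold(noise_var, n, p_fa)

rng = random.Random(7)
noise_var = 1.0
noise = [rng.gauss(0.0, math.sqrt(noise_var)) for _ in range(2000)]
# Hypothetical primary-user signal: a sinusoid buried in the same noise.
received = [2.0 * math.cos(2 * math.pi * 0.05 * n) + w for n, w in enumerate(noise)]
print(primary_user_present(noise, noise_var, p_fa=1e-6))
print(primary_user_present(received, noise_var, p_fa=1e-6))
```

In practice, uncertainty in the noise variance limits the reliability of energy detection at low signal-to-noise ratios, which is one reason more elaborate detectors are studied.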

Software defined radio

Software defined radio (SDR) is a technology that shifts as many operations of a wireless transceiver as possible to the digital domain [46]. In the past, all computationally intensive signal processing operations in wireless transceivers were carried out in Application-Specific Integrated Circuits (ASICs). Any modification of, e.g., the operational bandwidth, the modulation scheme or the Media Access Control (MAC) layer required an ASIC redesign, which consumed a large amount of time, energy and money. In an SDR transceiver, only the radio frequency operations, e.g. amplification, frequency shifting and filtering, have to be carried out in the analogue domain. The remaining operations, after the Analog-to-Digital Converter or before the Digital-to-Analog Converter, are carried out in the digital domain using Digital Signal Processors (DSPs) or Field-Programmable Gate Arrays (FPGAs). Most importantly, digital processing is not limited to the MAC layer, decoding or demodulation; it is currently possible to implement the Intermediate Frequency stage in this domain as well. Therefore, SDR emerges as an important enabler for service provision in a heterogeneous 5G network, especially when combined with the Cognitive Radio approach.
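Implementing the Intermediate Frequency stage digitally means, in essence, mixing the sampled IF signal with a numerically generated complex exponential, then low-pass filtering and decimating. The sketch below shows this digital down-conversion in plain Python with an integrate-and-dump (boxcar) filter, chosen for brevity rather than performance; the sampling rate, IF and tone offset are arbitrary illustrative values, and a real SDR would use proper FIR filters on a DSP or FPGA.

```python
import cmath
import math
from typing import List, Sequence

def digital_downconvert(samples: Sequence[float], fs: float, f_if: float,
                        decim: int) -> List[complex]:
    """Digital IF stage: mix a real IF signal to complex baseband, then
    low-pass filter and decimate with an integrate-and-dump (boxcar) filter."""
    out = []
    for start in range(0, len(samples) - decim + 1, decim):
        acc = 0j
        for n in range(start, start + decim):
            # Numerically controlled oscillator: exp(-j*2*pi*f_if*n/fs)
            acc += samples[n] * cmath.exp(-2j * math.pi * f_if * n / fs)
        out.append(acc / decim)
    return out

def estimate_frequency(baseband: Sequence[complex], fs_out: float) -> float:
    """Frequency from the average phase step between consecutive samples."""
    acc = sum(b * a.conjugate() for a, b in zip(baseband, baseband[1:]))
    return cmath.phase(acc) * fs_out / (2 * math.pi)

fs, f_if, offset, decim = 1_000_000, 200_000, 5_000, 50   # Hz, Hz, Hz, factor
# Sampled IF signal: a tone 5 kHz above the chosen intermediate frequency.
x = [math.cos(2 * math.pi * (f_if + offset) * n / fs) for n in range(100_000)]
baseband = digital_downconvert(x, fs, f_if, decim)
print(round(estimate_frequency(baseband, fs / decim)))
```

The recovered baseband tone rotates at about 5 kHz, i.e. the offset of the received tone from the chosen IF; moving to another channel within the digitised band only requires changing `f_if` in software.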

From the user and service provider perspective, it is not important which frequency resources and which radio-access technology will be used for service provisioning (as a result of network virtualisation). However, the user terminal should be able to reconfigure itself to a desired carrier frequency, load the appropriate radio-access technology modules and start transmission. The main cost of SDR is typically the higher energy consumption of FPGA or DSP boards in comparison with ASIC modules. Moreover, analogue front-ends have to be wideband and must not introduce distortions into the signal, which results in higher cost and energy consumption.

Software defined networks

An approach similar to SDR has gained a lot of attention in the context of network virtualization, where the idea of Software Defined Networking (SDN) plays a key role. In a nutshell, SDN separates the control plane from the data (forwarding) plane and centralises network control. The idea behind this separation arose from the need for effective dynamic management of processing power and storage resources in modern computing environments. Typically, access to the forwarding plane of a network is provided by the OpenFlow communications protocol. This technology has become a popular technical enabler from the network point of view, as it allows the underlying infrastructure of wired and wireless networks to be treated in an abstracted way and traffic to be steered in the most efficient way.
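The match-action principle behind the separation of forwarding and control can be sketched as a priority-ordered flow table. This is a conceptual model only: `FlowRule`, `FlowTable` and the action strings are hypothetical and do not reproduce OpenFlow's message formats or data structures; a table miss is simply handed to the controller here.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class FlowRule:
    priority: int
    match: Dict[str, Any]      # header fields that must all be equal
    actions: List[str]         # e.g. ["output:2"] or ["drop"]
    packet_count: int = 0      # per-rule counter, useful for accounting

@dataclass
class FlowTable:
    rules: List[FlowRule] = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        """Control plane: the controller adds a rule to the switch."""
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def process(self, packet: Dict[str, Any]) -> List[str]:
        """Data plane: apply the highest-priority rule whose fields match."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                rule.packet_count += 1
                return rule.actions
        return ["send_to_controller"]  # table miss

table = FlowTable()
table.install(FlowRule(10, {"vlan_id": 100}, ["output:2"]))
table.install(FlowRule(20, {"vlan_id": 100, "ip_dst": "10.0.0.5"}, ["drop"]))
print(table.process({"in_port": 1, "vlan_id": 100, "ip_dst": "10.0.0.9"}))  # ['output:2']
print(table.process({"in_port": 1, "vlan_id": 100, "ip_dst": "10.0.0.5"}))  # ['drop']
print(table.process({"in_port": 1, "vlan_id": 200}))  # ['send_to_controller']
```

The controller decides what goes into the table, while the switch only matches headers and applies actions; this division is what allows the forwarding plane to be programmed centrally.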

Physical and logical service separation

Similarly to fixed spectral separation (frequency allocation) in the radio domain, wired communication can be isolated using physical service tunnelling, i.e. by assigning dedicated physical resources to distinct services. Such physical service tunnels provide a strict way to separate parties within a network, since each party obtains a dedicated and isolated transmission domain, such as dedicated point-to-point optical fibres (often called dark fibres) or copper twisted pairs in the last mile (the local loop). Different optical channels (wavelengths) in Wavelength-Division Multiplexing (WDM) systems, as well as different time slots in Time-Division Multiplexing (TDM) systems, can serve the same purpose.

Besides the aforementioned methods or, more commonly, in conjunction with them, various logical separation mechanisms are currently applied in core and access networks. Logical service separation is most often implemented as a virtual tunnel dedicated to a given service in order to separate it from other services, usually at one of the layers of the Open Systems Interconnection (OSI) reference model. Logical separation between services is a basic building block for dynamic, on-demand sharing and virtualisation, as it allows the creation of services that keep the data destined for a given party (operator, company, or end-customer) apart from other traffic. In Wi-Fi networks, this may be achieved with the Basic Service Set Identifier (BSSID) and the Service Set Identifier (SSID), while the most common approaches in wired networks involve Virtual Local Area Networks (VLANs) defined in the IEEE 802.1Q standard, Link Aggregation according to the IEEE 802.1AX-2008 standard, and services based on Multiprotocol Label Switching (MPLS), such as Virtual Leased Line (VLL), Virtual Private LAN Service (VPLS), and Virtual Private Routed Network (VPRN). Some protocols often considered outdated today, such as Frame Relay (FR) and Asynchronous Transfer Mode (ATM), may still be in use as well. Moreover, some network functions, e.g. routers and firewalls, may be virtualised for different sets of services, i.e. made available as NFV modules.
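As an example of logical separation at layer 2, an IEEE 802.1Q tag carries a 12-bit VLAN Identifier (VID) that keeps the frames of different services apart on a shared link. The sketch below packs and unpacks the 4-byte tag: the Tag Protocol Identifier 0x8100, followed by the 3-bit Priority Code Point (PCP), the 1-bit Drop Eligible Indicator (DEI) and the VID. Which VID is assigned to which service or operator is an assumption left to the network operator, and the function names are illustrative.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier of a customer VLAN tag


def pack_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build a 4-byte IEEE 802.1Q tag (network byte order)."""
    if not 1 <= vid <= 4094:  # VID values 0 and 4095 are reserved
        raise ValueError("VID must be in 1..4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("invalid PCP or DEI")
    tci = (pcp << 13) | (dei << 12) | vid  # Tag Control Information
    return struct.pack("!HH", TPID_8021Q, tci)


def unpack_vlan_tag(tag: bytes) -> tuple:
    """Return (vid, pcp, dei) extracted from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 0x1
```

A switch port configured for a given service would accept only frames carrying that service's VID, which is what keeps the traffic of different parties logically apart on one physical link.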

3.2 Orchestration layer

The second layer consists of a dedicated software platform responsible for the management, operation and orchestration of heterogeneous resources delivered from the infrastructure layer, and for delivering these combined virtual resources to the service providers operating on top of the orchestration layer. Contemporary Mobile Virtual Network Enablers (MVNEs) would belong to this layer. More broadly, a company in the second layer, i.e. a resource provider, may be responsible for collecting the resources originating from various infrastructure operators. For instance, such a company may have access to two WLAN access points delivered by infrastructure operator A, ten WLAN access points from operator B, 20 MHz of LTE-A spectrum from cellular infrastructure operator C, and a set of interleaved 10 MHz bands under the Licensed Shared Access (LSA) paradigm from operator D. It may also have access to the Fibre To The Home (FTTH) and Hybrid Fibre Coaxial (HFC) wired networks of a residential operator, whose Customer Premises Equipment (CPE) allows a virtual access point, i.e. an additional SSID, to be run on each home-grade router and WLAN access point [47]. Having such a set of physically available resources, the resource provider could create a set of virtual resources and offer them to service providers (located in the service layer). For example, it may provide wireless Internet access at 1 Gbit/s, a throughput achieved by jointly using WLAN and cellular resources with load balancing.
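The load-balanced 1 Gbit/s example can be sketched as follows: the resource provider pools the capacities collected from several infrastructure operators and splits a requested rate across them in proportion to each link's free capacity, so that every link is loaded to the same fraction. The function, the link names and the capacity figures are hypothetical, and proportional splitting is only one of many possible load-balancing policies.

```python
def split_rate(requested_mbps: float, capacities: dict) -> dict:
    """Split a requested rate across pooled links in proportion to free capacity.

    capacities: link name -> available capacity in Mbit/s (hypothetical values)
    Raises ValueError if the pooled capacity cannot satisfy the request.
    """
    total = sum(capacities.values())
    if requested_mbps > total:
        raise ValueError(f"only {total} Mbit/s available in the pool")
    # Every link carries the same fraction of its own capacity.
    return {link: requested_mbps * cap / total for link, cap in capacities.items()}
```

With a pool of 300 Mbit/s (WLAN, operator A), 500 Mbit/s (WLAN, operator B) and 400 Mbit/s (LTE-A, operator C), a 1 Gbit/s request loads each link to about 83 % of its capacity.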

3.2.1 Challenges

Currently, network-wide orchestration often covers only specific technologies or functionalities. The main reason is that many hardware vendors expose only a limited set of equipment functionalities through dedicated management protocols or APIs. For example, even for the widely implemented Simple Network Management Protocol (SNMP), which is almost 30 years old, vendors rarely offer full read and write access to all the functions available through the Command Line Interface (CLI) or Graphical User Interface (GUI). Moreover, orchestration platforms need to implement various communication protocols and integrate with many APIs that change over time. Thus, keeping orchestration mechanisms up to date with all the functionalities exposed in recent software releases of various vendors, technologies and platforms is a very challenging task. Possibly the best approach would be to identify a common (most likely abstracted) interface, or to define a translation tool that converts technology-specific messages into a unified form.
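The translation-tool idea can be sketched as a registry of per-vendor adapters that convert technology-specific status records into one unified representation consumed by the orchestrator. Both input formats are invented for illustration: vendor A's record loosely borrows IF-MIB object names, vendor B's is an arbitrary JSON-style record, and neither corresponds to a real vendor's API. Supporting a new vendor or software release then means adding one adapter rather than changing the orchestrator.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class UnifiedLinkStatus:
    """Vendor-neutral link view consumed by the orchestrator."""
    link_id: str
    up: bool
    capacity_mbps: float


def from_vendor_a(msg: dict) -> UnifiedLinkStatus:
    # Hypothetical SNMP-style record: ifOperStatus 1 = up, ifSpeed in bit/s.
    return UnifiedLinkStatus(msg["ifName"], msg["ifOperStatus"] == 1,
                             msg["ifSpeed"] / 1e6)


def from_vendor_b(msg: dict) -> UnifiedLinkStatus:
    # Hypothetical JSON-style record: state string, bandwidth in Gbit/s.
    return UnifiedLinkStatus(msg["port"], msg["state"].lower() == "up",
                             msg["bandwidth_gbps"] * 1000)


ADAPTERS: Dict[str, Callable[[dict], UnifiedLinkStatus]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def translate(source: str, msg: dict) -> UnifiedLinkStatus:
    """Convert a technology-specific message into the unified form."""
    adapter = ADAPTERS.get(source)
    if adapter is None:
        raise ValueError(f"no adapter registered for {source!r}")
    return adapter(msg)
```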

Another challenge is to define an effective and precise way of presenting the transmission opportunities to the service layer, in other words, how to map the underlying technologies effectively into resources that could be offered (and sold) to the service providers. The immediate solution is to define a virtual resource unit (e.g. a virtual transmission block) and project the transmission opportunities delivered by the network provider onto these virtual resource units. Although the idea is conceptually simple, it is not straightforward to realize in practice.
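A minimal sketch of such a projection, under the assumption that a virtual resource unit (VRU) is simply a fixed slice of deliverable throughput: each transmission opportunity is described by its throughput and expected latency, opportunities that cannot meet the requested latency bound are discarded, and the rest are quantized into whole units. The unit size, the field names and the technology labels are hypothetical; a real definition would also have to capture reliability, coverage and time granularity.

```python
import math
from dataclasses import dataclass

VRU_MBPS = 5.0  # hypothetical size of one virtual resource unit, in Mbit/s


@dataclass(frozen=True)
class TransmitOpportunity:
    technology: str          # e.g. "WLAN-5GHz", "LTE-A"
    throughput_mbps: float   # deliverable throughput in the scheduling period
    latency_ms: float        # expected one-way latency


def to_vrus(opportunities, max_latency_ms):
    """Count whole VRUs per technology among opportunities meeting a latency bound."""
    units = {}
    for op in opportunities:
        if op.latency_ms > max_latency_ms:
            continue  # cannot back a unit with this latency guarantee
        n = math.floor(op.throughput_mbps / VRU_MBPS)  # partial units are not offered
        units[op.technology] = units.get(op.technology, 0) + n
    return units
```

The difficulty noted above shows up even here: the result depends on which attributes (only latency in this sketch) are allowed to qualify an opportunity for a unit.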

3.2.2 Supporting technologies

In order to support fine-grained control of virtualised networks and services, with the ability to create, modify and delete services on demand, various configuration and management protocols can be used to build and execute centralised, software-controlled logic for the whole network. Many telecommunications systems are managed today with Transaction Language 1 (TL1), SNMP, and the Common Object Request Broker Architecture (CORBA), as well as with newer protocols such as Technical Report 069 (TR-069), the Extensible Messaging and Presence Protocol (XMPP), or the Network Configuration Protocol (NETCONF) with YANG data models and tools such as ConfD, accompanied by the increasing popularity of the Python programming language for network orchestration. Aside from network configuration mechanisms, there are existing concepts and tools, discussed below, that can be used to support the orchestration of future networks.
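Since NETCONF and Python are mentioned together above, here is a minimal sketch that only builds (it does not send) a NETCONF `<edit-config>` RPC using the Python standard library. The envelope uses the NETCONF base namespace defined in RFC 6241, and the payload uses the namespace of the `ietf-interfaces` YANG module to set an interface description. The interface name, the description and the message ID are hypothetical; a real deployment would send the message over an SSH-based NETCONF session, for example with a client library such as ncclient. Note that `ET.register_namespace` changes module-wide serialization state.

```python
import xml.etree.ElementTree as ET

NC_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"      # NETCONF base namespace
IF_NS = "urn:ietf:params:xml:ns:yang:ietf-interfaces"  # ietf-interfaces YANG module

# Serialize with readable prefixes instead of auto-generated ns0/ns1.
ET.register_namespace("", NC_NS)
ET.register_namespace("if", IF_NS)


def build_edit_config(message_id: str, if_name: str, description: str) -> str:
    """Build (but do not send) an <edit-config> RPC for the running datastore."""
    rpc = ET.Element(f"{{{NC_NS}}}rpc", {"message-id": message_id})
    edit = ET.SubElement(rpc, f"{{{NC_NS}}}edit-config")
    target = ET.SubElement(edit, f"{{{NC_NS}}}target")
    ET.SubElement(target, f"{{{NC_NS}}}running")
    config = ET.SubElement(edit, f"{{{NC_NS}}}config")
    # Payload: set the description of one interface (hypothetical values).
    interfaces = ET.SubElement(config, f"{{{IF_NS}}}interfaces")
    interface = ET.SubElement(interfaces, f"{{{IF_NS}}}interface")
    ET.SubElement(interface, f"{{{IF_NS}}}name").text = if_name
    ET.SubElement(interface, f"{{{IF_NS}}}description").text = description
    return ET.tostring(rpc, encoding="unicode")
```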

Network function virtualization

Virtualization of network resources is considered an efficient tool for optimizing network services. In particular, NFV handles various network functions running on virtual machines, which are in turn built on an abstraction of the underlying hardware, such as routers or switches. NFV management is designed to handle numerous virtual components.

Several interesting solutions have been proposed, e.g. the NFV Management and Orchestration (MANO) framework specified by the ETSI NFV Industry Specification Group (ISG). It consists of three key functional blocks: the NFV Orchestrator, the VNF Manager and the Virtualized Infrastructure Manager. In our three-layer model, NFV itself generally belongs to the infrastructure layer, whereas the management it requires belongs to the orchestration layer.
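The division of responsibilities among the three MANO functional blocks can be pictured with a toy model: the NFV Orchestrator deploys a network service as a chain of VNFs, the VNF Manager instantiates each VNF, and the Virtualized Infrastructure Manager hands out compute capacity. The class names, the method names and the vCPU-only resource model are hypothetical simplifications, not the interfaces defined by ETSI.

```python
from dataclasses import dataclass, field


@dataclass
class VIM:
    """Virtualized Infrastructure Manager: owns the compute pool (vCPUs only)."""
    free_vcpus: int

    def allocate(self, vcpus: int) -> bool:
        if vcpus > self.free_vcpus:
            return False
        self.free_vcpus -= vcpus
        return True


@dataclass
class VNFManager:
    """VNF Manager: lifecycle of individual VNF instances."""
    vim: VIM
    running: list = field(default_factory=list)

    def instantiate(self, vnf: str, vcpus: int) -> bool:
        if self.vim.allocate(vcpus):
            self.running.append(vnf)
            return True
        return False


@dataclass
class NFVOrchestrator:
    """NFV Orchestrator: composes VNFs into a network service."""
    vnfm: VNFManager

    def deploy_service(self, chain: list) -> bool:
        # Deploys (name, vcpus) pairs in order and stops at the first VNF that
        # cannot be placed; rollback of already placed VNFs is omitted for brevity.
        return all(self.vnfm.instantiate(name, cpus) for name, cpus in chain)
```

In the three-layer model, the compute pool behind the VIM sits in the infrastructure layer, while all three managers run in the orchestration layer.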

SDN controller platforms

SDN controllers provide network-wide intelligence in SDN networks. They enable global network management through the logical centralization of control functions, allowing multiple network devices to be controlled as a single infrastructure element. Data flows are thus controlled at the level of an abstract global network view rather than device by device. Well-known SDN controller platforms include Floodlight [48], OpenDaylight [49], and OpenContrail [50].
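The following minimal sketch shows what controlling the network as one infrastructure element can mean: the controller holds the global topology, computes an end-to-end path (here by breadth-first search, i.e. the fewest hops), and only then derives the per-switch forwarding rules. The topology, the node names and the rule format are hypothetical and do not reflect the northbound APIs of Floodlight, OpenDaylight or OpenContrail.

```python
from collections import deque


def shortest_path(topology: dict, src: str, dst: str) -> list:
    """Breadth-first search over the controller's global view (hop-count metric)."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nbr in topology.get(node, []):
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    if dst not in prev:
        return []  # destination unreachable
    path, node = [], dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


def flow_rules(topology: dict, src: str, dst: str) -> dict:
    """Turn one end-to-end path into per-switch rules.

    The endpoints are hosts, so only intermediate nodes (switches) get rules.
    """
    path = shortest_path(topology, src, dst)
    return {hop: {"match": {"dst": dst}, "forward_to": nxt}
            for hop, nxt in zip(path[1:-1], path[2:])}
```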

Operations support systems

Various Operations Support Systems (OSS) [51] implement network orchestration, although typically only for the access network. This is because OSS mostly support the processes involved in activating and deactivating services for individual customers, as well as in maintaining the network inventory. Therefore, OSS are usually not involved in orchestrating complete networks. Nevertheless, when adequately modified and extended, they might be useful for orchestrating currently operated networks as these are incorporated into 5G networks.

3.3 Service layer

The service layer, located on top of the two lower layers, represents the stakeholders responsible for end-user-centric marketing and sales, customer service, and service management and delivery. In such an approach, the end-user contacts the service layer company, i.e. the service provider, to agree on the set of necessary and desired services. These services will most likely be based on the Internet Protocol (IP), since it is currently the de facto standard for carrying application traffic within and between different types of packet networks. Hence, upon a request initiated by any IP-based end-user application, service providers will allocate particular virtual resources delivered by resource providers and provide the necessary facilities to the client. As an example, a 4K TV provider can use the resources delivered by broadcasters (for regular TV channels), cellular networks (for video streaming) or WLAN access points (for offloading some of the traffic). In a different case, a company offering video surveillance services may use cellular networks for transferring video signals, while using a dedicated residential machine-to-machine network for citizens, based on IEEE 802.15.4, for short message transmission. In both cases, the service provider need not own any hardware and may only sell services to the end-customers. This approach supports any type of IP-based application, provided that the end-user device can communicate via different access technologies, so that resource utilization can be optimized by link aggregation (channel bonding), load balancing, parallel transmissions, etc.

Alternatively, in efforts to optimize the utilization of network and computing platforms, novel alternatives to IP-based networking are being investigated, such as Named Data Networking (NDN), which introduces a data-centric network architecture and addressing, supported by efficient data caching [52] [X2].
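To make the data-centric idea concrete, the following sketch models the three tables an NDN forwarder consults when a request (an Interest) for named data arrives: the Content Store (the cache), the Pending Interest Table (PIT) and the Forwarding Information Base (FIB, searched by longest name-prefix match). It is a heavily simplified, hypothetical model with no timeouts, nonces or cache eviction, and it is not the NDN Forwarding Daemon (NFD) implementation.

```python
class NdnNode:
    def __init__(self, fib: dict):
        self.cs = {}      # Content Store: name -> cached data
        self.pit = {}     # Pending Interest Table: name -> faces awaiting data
        self.fib = fib    # name prefix -> outgoing face

    def on_interest(self, name: str, in_face: str):
        """Return ('data', payload), ('aggregated', None), ('forward', face) or ('drop', None)."""
        if name in self.cs:           # cache hit: answer locally
            return ("data", self.cs[name])
        if name in self.pit:          # same data already requested upstream
            self.pit[name].add(in_face)
            return ("aggregated", None)
        face = self._longest_prefix_match(name)
        if face is None:
            return ("drop", None)
        self.pit[name] = {in_face}
        return ("forward", face)

    def on_data(self, name: str, payload: bytes) -> set:
        """Cache arriving data and return the faces that were waiting for it."""
        self.cs[name] = payload
        return self.pit.pop(name, set())

    def _longest_prefix_match(self, name: str):
        parts = name.strip("/").split("/")
        for i in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:i])
            if prefix in self.fib:
                return self.fib[prefix]
        return None
```

Repeated requests for the same named content are either aggregated in the PIT or served from the cache, which is how NDN reduces redundant upstream traffic.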

3.3.1 Challenges

One of the key challenges in a virtualized world, where the end-user is not aware of the underlying technology, is to propose an effective method for random access to the medium, authentication, and authorization. These processes are carried out in various ways depending on the technology and the applied communication standard. Consequently, a tool is needed for seamless authentication, authorization, and channel access based on a personalized key. Work on vertical handover or, more generally, media-independent handover may be treated as the baseline for further research on this topic [53,54,55].
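One possible building block for technology-independent authentication is a challenge-response exchange based on a personalized key shared by the end-user and the service provider, so that the same check can run whether the user attaches via WLAN, cellular or another access network. The sketch below uses HMAC-SHA-256 from the Python standard library; the function names are hypothetical, and key provisioning, session management and mapping onto actual access-network procedures (e.g. EAP) are outside its scope.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Service provider side: a fresh random nonce for each attempt."""
    return secrets.token_bytes(16)


def respond(user_key: bytes, challenge: bytes, access_tech: str) -> bytes:
    """Terminal side: prove possession of the personalized key.

    The access technology label is bound into the MAC, so a response computed
    for one access network does not verify for another.
    """
    return hmac.new(user_key, challenge + access_tech.encode(), hashlib.sha256).digest()


def verify(user_key: bytes, challenge: bytes, access_tech: str, response: bytes) -> bool:
    expected = respond(user_key, challenge, access_tech)
    return hmac.compare_digest(expected, response)  # constant-time comparison
```

Because the challenge has a fixed length of 16 bytes, concatenating it with the technology label cannot produce ambiguous inputs to the MAC.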

A related challenge is security. New techniques for guaranteeing secure service delivery require thorough investigation, as new threats may be identified.

3.4 Comparison of the proposed architecture and the traditional approach

Having proposed the three-layer model, in this section we compare it with the traditional approach. In fact, it is impossible to state which reference scenario would be best for the comparison, as various models and technologies exist on the market today. We therefore take as an example the model in which all layers are located within the administrative domain of a single operator, i.e. a scenario in which the mobile network operator owns the spectrum licenses, deploys and manages the whole infrastructure, and is responsible for providing services to the end-users. The comparison is presented in a structured form in Table 1.

3.5 Exemplary use case

To illustrate the three-layer model, let us consider an exemplary use case. We assume that the local community needs some specific wireless services, e.g. reliable delivery of 4K video. At the same time, regular cellular users have to be served with agreed Quality-of-Service (QoS) parameters. Let us also assume that there exists an LTE-A cellular infrastructure, a set of densely deployed WLAN access points and some microwave links. Moreover, households are connected to fibre-based Internet.

In the traditional case, there will be at least one mobile network operator (MNO) in possession of the entire cellular infrastructure, delivering LTE-A connectivity to the end-users. The MNO may not be interested (for various reasons) in offloading data traffic to WLAN access points that are not under its control, unless the Licensed Assisted Access (LAA) scheme is guaranteed. Fixed Internet connectivity will be provided by an Internet Service Provider (ISP), which may also deploy its own WLAN infrastructure. Agreements between the MNOs and ISPs may be necessary to guarantee seamless vertical handover between these types of networks. Moreover, the end-user has to sign at least two agreements (one with the MNO and one with the ISP) for connectivity services. To offer the required wireless delivery of 4K video, the MNO could either invest in its network or try to establish a new virtual operator responsible for managing this part of the services. The same MNO infrastructure would then be used to serve both the typical cellular users and the 4K users. In light of recent achievements in this area, network slicing could be a well-tailored solution to this problem. Following [56], various services may be provided within dedicated network slices, each consisting of a set of virtual functions run on the same infrastructure. These virtual functions are managed by an orchestrator, which is in charge of configuring the slice according to the specific requirements of the service.

Let us now analyse the same situation under the three-layer model. One may then imagine a company responsible for deploying and managing the entire infrastructure (including the wireless part, consisting of cellular and WLAN elements, as well as the wired part, i.e. fibre links). This wholesale infrastructure provider has no interest in personal (single-user) marketing and only sells “network capacity” through an orchestrator to a service provider. The service provider, located at the top of the three-layer model, is a typical service company that does not own any underlying hardware; it only offers services to clients based on agreements between the company and its end-users. The orchestrating company (located in the middle layer) creates the slice based on the infrastructure delivered by the infrastructure provider.

Let us examine this example in more detail. Classical cellular users, who make phone calls and browse the Internet, require X resources offered by the orchestrator (the exact mathematical definition of a resource is beyond the scope of this paper, but one may refer to the concept of virtualized resources discussed in, e.g., [43]). At the same time, the 4K users will require Y resources, where Y will probably be much larger than X. As the underlying infrastructure is virtualized, it is the role of the orchestrator to map these X resources to physical resources using all available transmission techniques (e.g. radio spectrum or optical bandwidth). For example, one required set of X resources may be guaranteed by network access via WLAN channels in the 5 GHz band connected to the fibre network. If these bands are already occupied, the orchestrator may switch to LTE-A resource blocks with a certain MIMO transmission scheme, in which case the traffic will go through the cellular infrastructure. At the same time, the 4K users will be scheduled to use the available beamforming technology, as it may be the easiest way to deliver the required Y resources. In both cases, the work done by the orchestrator is transparent to the end-user, regardless of whether the service is delivered using an LTE-A massive MIMO scheme or via the WLAN infrastructure. Note that in such an approach, user authentication is required only between the service provider and the client, so there may be no need to distinguish between SIM card users, SSID owners, or any other authentication model. Of course, the critical issues are to guarantee the delivery of the required services and to keep end-user security at the highest level [57, 58]. However, as this is a novel concept, much work remains to be done in this direction.
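The orchestrator's decision in this example can be sketched as a greedy mapping: demands are served in order, each from the first technology on its preference list that still has enough spare capacity, mirroring the fall-back from 5 GHz WLAN to LTE-A described above. Resource amounts are in abstract units, since the paper deliberately leaves their definition open; the service names, pool names, capacities and preference lists are hypothetical.

```python
def map_demands(demands, capacity):
    """Greedy mapping of virtual demands onto physical resource pools.

    demands:  list of (service, amount, [preferred technologies in order])
    capacity: technology -> free amount (abstract units); updated in place
    Returns service -> chosen technology, or None if no pool can host it.
    """
    placement = {}
    for service, amount, preferences in demands:
        placement[service] = None
        for tech in preferences:
            if capacity.get(tech, 0) >= amount:
                capacity[tech] -= amount
                placement[service] = tech
                break
    return placement
```

Here each demand is served by a single pool; a real orchestrator could also split one demand across several pools or revisit earlier decisions when new demands arrive.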

4 Market and regulatory constraints

Contemporary operators may welcome the evolving virtualisation and sharing paradigms, since these allow them to focus solely on operational costs and revenues. However, this may lead to the consolidation of specific functions or network layers in a single specialised entity.

Therefore, a company deciding to become only a service provider without its own infrastructure needs to analyse the risks associated with having limited (minimal) influence on the network or networks it operates on. In such a case, an agreed form of monitoring network quality and reliability must be defined in a Service Level Agreement (SLA), i.e. the part of the network sharing contract that specifies the acceptable network parameters, such as packet loss and two-way delay, or the maximum time within which a network failure should be resolved. Sharing agreements between operators (providers) are usually regulated by law; therefore, the opinions of different regulators on these matters are presented below.
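A minimal sketch of how the SLA parameters mentioned above (packet loss, two-way delay and maximum failure-resolution time) could be checked against monitoring data collected by a service provider. The threshold values and the structure of the measurement record are hypothetical; real SLAs also define measurement methods, averaging periods and penalties.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sla:
    max_packet_loss: float        # fraction, e.g. 0.001 = 0.1 %
    max_two_way_delay_ms: float
    max_repair_time_h: float


@dataclass(frozen=True)
class Measurement:
    packet_loss: float
    two_way_delay_ms: float
    repair_times_h: tuple         # durations of failures in the reporting period


def sla_violations(sla: Sla, m: Measurement) -> list:
    """Return human-readable violations (an empty list means compliance)."""
    issues = []
    if m.packet_loss > sla.max_packet_loss:
        issues.append(f"packet loss {m.packet_loss:.3%} > {sla.max_packet_loss:.3%}")
    if m.two_way_delay_ms > sla.max_two_way_delay_ms:
        issues.append(f"two-way delay {m.two_way_delay_ms} ms > {sla.max_two_way_delay_ms} ms")
    late = [t for t in m.repair_times_h if t > sla.max_repair_time_h]
    if late:
        issues.append(f"{len(late)} failure(s) resolved later than {sla.max_repair_time_h} h")
    return issues
```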

In January 2016, the Polish Office of Competition and Consumer Protection (Pol. Urząd Ochrony Konkurencji i Konsumentów, UOKiK) published an opinion [59] stating that sharing passive infrastructure may, in general, have both positive and negative effects on the market, especially on competition. UOKiK states that it is desirable to support sharing in less attractive, sparsely urbanised areas where it is difficult for more than one telecommunications network to deliver high-quality services. However, sharing infrastructure in urban areas in Poland does not seem necessary and, from the point of view of market competition, may even be undesirable. In general, due to the potential risks that infrastructure sharing can cause, UOKiK will actively monitor and analyse the effects of such collaborations on the Polish market, and network sharing will be assessed after each analysis of the market environment.

The French Autorité de Régulation des Communications Electroniques et des Postes (ARCEP) published an opinion [60] on two sharing agreements, stating that such cooperation between operators can only be allowed for a limited time. The first part of the opinion concerns a 2G/3G roaming agreement between two operators, Free Mobile and Orange; ARCEP demands that this agreement be terminated immediately. The second part of the opinion refers to the agreement between SFR and Bouygues Telecom on sharing their 2G/3G/4G networks. ARCEP wants to verify whether this agreement results in improved coverage and quality of service for customers, and requires that it be valid only for a limited time.

The above shows that regulators’ opinions on infrastructure sharing differ. However, with regard to spectrum sharing, there seems to be a consensus between regulators and operators that shared spectrum access is desirable, as it enables high spectrum utilisation, provided that sharing is managed and interference is controlled by means of careful frequency allocation. Moreover, regulators aim to stimulate both market competition and efficient use of radio frequencies. This view has been expressed, for example, by the British Office of Communications (Ofcom) [61]. In general, Ofcom’s goals are to increase the amount of license-exempt spectrum and to extend spectrum sharing. Its study predicts that WLAN and mobile Internet will soon become congested, which is why spectrum sharing is crucial in three scenarios:

- Indoor WLAN: to facilitate the extension of the currently allocated frequency bands in the 5 GHz range and the sharing of additional bands with existing services;
- Small cell outdoor: to prevent the deployment of many competing small cell networks, which could cause significant interference;
- Internet of Things: to provide spectrum in situations where small amounts of data need to be sent infrequently over short distances.

In the United States, the Federal Communications Commission (FCC) also supports spectrum sharing [62], having specified new rules for the use of the 3550–3700 MHz band and made it available to the new Citizens Broadband Radio Service (CBRS). The scheme provides for the spectrum to be shared between existing licensed incumbents and two new categories of users: Priority Access (operators holding commercial licenses) and General Authorized Access (free of charge, without the need for a license). Implementing such a scheme effectively moves beyond the traditional division into licensed and unlicensed frequency bands and is a first step towards dynamic and efficient frequency allocation.
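The tiered access principle can be illustrated by a toy allocator that grants each channel to the highest-priority tier requesting it: incumbents first, then Priority Access, then General Authorized Access. This is a deliberate simplification with hypothetical user and channel names; the actual CBRS framework relies on a Spectrum Access System, protection areas and power limits that are not modelled here, and General Authorized Access users share channels opportunistically rather than exclusively.

```python
from enum import IntEnum


class Tier(IntEnum):
    # Lower value = higher priority.
    INCUMBENT = 0
    PRIORITY_ACCESS = 1
    GENERAL_AUTHORIZED_ACCESS = 2


def assign_channels(requests):
    """requests: list of (user, tier, channel). Returns channel -> user.

    For each channel, the request from the highest-priority tier wins;
    ties within a tier go to the earliest request.
    """
    winners = {}
    for user, tier, channel in requests:
        current = winners.get(channel)
        if current is None or tier < current[1]:
            winners[channel] = (user, tier)
    return {ch: user for ch, (user, _) in winners.items()}
```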

5 Conclusions

In this paper, we have presented perspectives on resource virtualisation and sharing in the context of the evolution of wireless and wired networks towards the 5th generation. The discussed concepts are expected to shape the future of networking in the coming years, changing the telecommunications landscape, most likely into the form of the three-layer model described in this paper. Various paradigms and technologies that facilitate effective resource sharing are available today, while new ones are still being developed. It is therefore reasonable to assume that a 5G network will operate not only on different networking technologies but also under different configuration and operational regimes to best meet area-specific needs. Moreover, a virtualised and shared model of operation generally seems to increase operators’ efficiency and lower the entry barrier for new service providers. From the user’s perspective, it can reduce service prices and provide access to a wider range of services. However, resource sharing not only presents new technical and operational challenges but is also subject to various legal and regulatory aspects and constraints. Sharing models therefore need to be flexible and allow for modifications during their lifespan. Models of cooperation are continually evolving because the expectations of the owners and users of different network layers tend to differ and vary over time. Furthermore, country-specific regulations may introduce restrictions; hence, these models should be designed and applied with well-defined exit strategy procedures.

Notes

The exact definition of the random access channel is one of the research challenges identified in the next section.

References

Jin-young, C. (2016). BusinessKorea Korea’s Premier Business Portal, 29 March 2016 [Online]. Available http://www.businesskorea.co.kr/english/news/ict/14236-5g-competition-olympic-games-northeast-asian-countries-5g-race. Accessed 7 August 2017.

5GPPP: Key performance indicators [Online]. Available https://5g-ppp.eu/kpis/. Accessed 7 August 2017.

Mehmood, Y., Haider, N., Imran, M., Timm-Giel, A., & Guizani, M. (2017). M2M communications in 5G: state-of-the-art architecture, recent advances, and research challenges. IEEE Communications Magazine, 55(9), 194–201.

Mavromoustakis, C. X., Mastorakis, G., & Batalla, J. M. (Eds.). (2016). Internet of Things (IoT) in 5G mobile technologies. New York: Springer.

5G PPP: 5G empowering vertical industries, 2016. [Online]. Available http://5g-ppp.eu/wp-content/uploads/2016/02/BROCHURE_5PPP_BAT2_PL.pdf. Accessed 7 August 2017.

Liang, C., & Yu, F. R. (2015). Wireless network virtualization: A survey, some research issues and challenges. IEEE Communications Surveys & Tutorials, 17(1), 358–380. First Quarter.

Khan, I., Belqasmi, F., Glitho, R., Crespi, N., Morrow, M., & Polakos, P. (2016). Wireless sensor network virtualization: A survey. IEEE Communications Surveys & Tutorials, 18(1), 553–576. First Quarter.

Blenk, A., Basta, A., Reisslein, M., & Kellerer, W. (2016). Survey on network virtualization hypervisors for software defined networking. IEEE Communications Surveys & Tutorials, 18(1), 655–685. First Quarter.

Li, Y., & Chen, M. (2015). Software-defined network function virtualization: A survey. IEEE Access, 3, 2542–2553.

Rappaport, T., Heath, R. W., Jr., Daniels, R. C., & Murdock, J. N. (2014). Millimeter wave wireless communications. Englewood Cliffs: Pearson Education.

Kutty, S., & Sen, D. (2016). Beamforming for millimeter wave communications: An inclusive survey. IEEE Communications Surveys & Tutorials, 18(2), 949–973.

Agiwal, M., Roy, A., & Saxena, N. (2016). Next generation 5G wireless networks: A comprehensive survey. IEEE Communications Surveys & Tutorials, 99, 1–1.

Marzetta, T. L. (2010). Noncooperative cellular wireless with unlimited numbers of base station antennas. IEEE Transactions on Wireless Communications, 9(11), 3590–3600.

Banelli, P., Buzzi, S., Colavolpe, G., Modenini, A., Rusek, F., & Ugolini, A. (2014). Modulation formats and waveforms for 5G networks: Who will be the heir of OFDM?: An overview of alternative modulation schemes for improved spectral efficiency. IEEE Signal Processing Magazine, 31(6), 80–93.

Mustonen, M., Matinmikko, M., Palola, M., Yrjölä, S., & Horneman, K. (2015). An evolution toward cognitive cellular systems: licensed shared access for network optimization. IEEE Communications Magazine, 53(5), 68–74.

Kliks, A., Holland, O., Basaure, A., & Matinmikko, M. (2015). Spectrum and license flexibility for 5G networks. IEEE Communications Magazine, 53(7), 42–49.

Bogucka, H., Kryszkiewicz, P., & Kliks, A. (2015). Dynamic spectrum aggregation for future 5G communications. IEEE Communications Magazine, 53(5), 35–43.

Tehrani, R. H., Vahid, S., Triantafyllopoulou, D., Lee, H., & Moessner, K. (2016). Licensed spectrum sharing schemes for mobile operators: A survey and outlook. IEEE Communications Surveys & Tutorials.

Flore, D.: Tentative 3GPP timeline for 5G, 3GPP [Online]. Available http://www.3gpp.org/news-events/3gpp-news/1674-timeline_5g. Accessed 7 August 2017.

COHERENT: EU funded H2020 research project: Coordinated control and spectrum management for 5G heterogeneous radio access networks (COHERENT) [Online]. Available http://www.ict-coherent.eu/. Accessed 7 August 2017.

Kostopoulos, A., et al. (2016). Scenarios for 5G networks: The COHERENT approach. In 23rd International conference on telecommunications, Thessaloniki, Greece.

Agapiou, G., Kostopoulos, A., Kuo, F.-C., Chen, T., Kliks, A., Goldhamer, M., et al. (2016). Developing a flexible spectrum management for 5G heterogeneous radio access networks. In European conference on networks and communications (EuCNC), Athens, Greece.

5G NORMA: EU funded H2020 research project: 5G Novel radio multiservice adaptive network architecture (5G NORMA) [Online]. Available https://5gnorma.5g-ppp.eu/. Accessed 7 August 2017.

Chiaraviglio, L., et al. (2017). An economic analysis of 5G superfluid networks. In 2017 IEEE 18th international conference on high performance switching and routing (HPSR).

Chiaraviglio, L., et al. (2017). Optimal superfluid management of 5G networks. In Proceedings of 3rd IEEE conference on network softwarization (IEEE NetSoft), Bologna, Italy.

Shojafar, M., et al. (2017). P5G: A bio-inspired algorithm for the superfluid management of 5G networks. In IEEE GLOBECOM.

National Science Foundation: Advanced wireless research platforms: sustaining U.S. leadership in future wireless technologies, 9 June 2016, [Online]. Available https://www.nsf.gov/pubs/2016/nsf16096/nsf16096.jsp?org=NSF. Accessed 7 August 2017.

Warwick, M. (2016). Injecting some adrenaline into the plodding NFV effort, 3 August 2016, [Online]. Available http://www.telecomtv.com/articles/nfv/injecting-some-adrenaline-into-the-plodding-nfv-effort-13864/.

VMWare white paper: Virtualization Overview, [Online]. Available https://www.vmware.com/pdf/virtualization.pdf. Accessed 7 August 2017.

ETSI: Network functions virtualisation: An introduction, benefits, enablers, challenges & call for action, October 2012. [Online]. https://portal.etsi.org/nfv/nfv_white_paper.pdf. Accessed 7 August 2017.

SDxCentral: Understanding the SDN architecture, [Online]. Available https://www.sdxcentral.com/sdn/definitions/inside-sdn-architecture/. Accessed 7 August 2017.

ETSI (2013). Network functions virtualisation (NFV); Use cases. ETSI GS NFV.

ETSI: NFV industry specification group [Online]. Available http://www.etsi.org/technologies-clusters/technologies/nfv. Accessed 7 August 2017.

de Bijl, P., & Peitz, M. (2005). Local loop unbundling in Europe: Experience, prospects and policy challenges. International University in Germany working paper no. 29/2005.

Bouckaert, J., Dijk, T., & Verboven, F. (2010). Access regulation, competition, and broadband penetration: An international study. Telecommunications Policy, 34(11), 661–671.

Nardotto, M., Valletti, T., & Verboven, F. (2015). Unbundling the incumbent: Evidence from UK broadband. Journal of the European Economic Association, 13(2), 330–362.

Forzati, M., Larsen, C. P., & Mattsson, C. (2010). Open access networks, the Swedish experience. In 12th international conference on transparent optical networks, Munich.

Wong, E. (2012). Next-generation broadband access networks and technologies. Journal of Lightwave Technology, 30(4), 597–608.

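Rozporządzenie Ministra Transportu, Budownictwa i Gospodarki Morskiej z dnia 6 listopada 2012 r. zmieniające rozporządzenie w sprawie warunków technicznych, jakim powinny odpowiadać budynki i ich usytuowanie [Regulation of the Minister of Transport, Construction and Maritime Economy of 6 November 2012 amending the regulation on the technical conditions to be met by buildings and their location], 2012.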

Europe’s Digital Progress Report 2016: Connectivity—broadband market developments in the EU, 2016. [Online]. Available http://ec.europa.eu/newsroom/dae/document.cfm?action=display&doc_id=15807. Accessed 7 August 2017.

GSMA: mobile infrastructure sharing [Online]. Available http://www.gsma.com/publicpolicy/wp-content/uploads/2012/09/Mobile-Infrastructure-sharing.pdf. Accessed 7 August 2017.

Meddour, D.-E., Rasheed, T., & Gourhant, Y. (2011). On the role of infrastructure sharing for mobile network operators in emerging markets. Computer Networks, 55(10), 1576–1591. 16 May.

Khatibi, S., Caeiro, L., Ferreira, L. S., Correia, L. M., & Nikaein, N. (2017). Modelling and implementation of virtual radio resources management for 5G Cloud RAN. EURASIP Journal on Wireless Communications and Networking.

Mitola, J., & Maguire, G. Q. (1999). Cognitive radio: making software radios more personal. IEEE Personal Communications, 6(4), 13–18.

Cichon, K., Kliks, A., & Bogucka, H. (2016). Energy-efficient cooperative spectrum sensing: A survey. IEEE Communications Surveys & Tutorials, PP(99), 1–29.

Tuttlebee, W. H. W. (1999). Software-defined radio: facets of a developing technology. IEEE Personal Communications, 6(2), 38–44.

Musznicki, B., Kowalik, K., Kołodziejski, P., & Grzybek, E. (2016). Development and operation of INEA mobile and stationary Wi-Fi hotspot network. In ISWCS 2016, Thirteenth International Symposium on Wireless Communication Systems, 20–23 September 2016.

Floodlight: Project floodlight [Online]. Available http://www.projectfloodlight.org/. Accessed 7 August 2017.

Opendaylight: OpenDaylight—Open source SDN platform, [Online]. Available https://www.opendaylight.org/. Accessed 7 August 2017.

OpenContrail: OpenContrail, An open-source network virtualization platform for the cloud [Online]. Available http://www.opencontrail.org/. Accessed 7 August 2017.

Hanrahan, H. (2007). Network convergence: Services, applications, transport, and operations support. Hoboken: Wiley.

Zhang, L., Afanasyev, A., Burke, J., et al. (2014). Named data networking. ACM SIGCOMM Computer Communication Review, 44(3), 66–73.

Khattab, O., & Alani, O. (2014). Algorithm for seamless vertical handover in heterogeneous mobile networks. In 2014 Science and Information Conference, London.

Ahmed, A., Boulahia, L. M., & Gaiti, D. (2014). Enabling vertical handover decisions in heterogeneous wireless networks: A state-of-the-art and a classification. IEEE Communications Surveys & Tutorials, 16(2), 776–811.

De La Oliva, A., Banchs, A., Soto, I., Melia, T., & Vidal, A. (2008). An overview of IEEE 802.21: Media-independent handover services. IEEE Wireless Communications, 15(4), 96–103.

Bega, D., Gramaglia, M., Banchs, A., Sciancalepore, V., Samdanis, K., & Costa-Perez, X. (2017). Optimising 5G infrastructure markets: The business of network slicing. In IEEE INFOCOM 2017, IEEE Conference on Computer Communications, Atlanta, GA, USA, pp. 1–9.

Shin, S., Wang, H., & Gu, G. (2015). A first step toward network security virtualization: From concept to prototype. IEEE Transactions on Information Forensics and Security, 10(10), 2236–2249.

Zhu, L., Yu, F. R., Tang, T., & Ning, B. (2016). An integrated train-ground communication system using wireless network virtualization: Security and quality of service provisioning. IEEE Transactions on Vehicular Technology, 65(12), 9607–9616.

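UOKiK: Stanowisko UOKiK w zakresie podejścia do oceny współpracy operatorów telekomunikacyjnych [Position of UOKiK (Office of Competition and Consumer Protection) on the approach to assessing cooperation between telecommunications operators], 15 January 2016. [Online]. Available https://www.uokik.gov.pl/download.php?plik=17459. Accessed 7 August 2017.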

ARCEP: Mobile network sharing (ARCEP publishes guidelines on roaming and mobile network sharing for consultation), 12 January 2016. [Online]. Available http://www.arcep.fr/index.php?id=8571&tx_gsactualite_pi1%5Buid%5D=1825&L=1.

Ofcom: The future role of spectrum sharing for mobile and wireless data services, 30 April 2014. [Online]. Available http://stakeholders.ofcom.org.uk/binaries/consultations/spectrum-sharing/statement/spectrum_sharing.pdf. Accessed 7 August 2017.

Federal Communications Commission: Report and order and second further notice of proposed rulemaking, 21 April 2015. [Online]. Available http://transition.fcc.gov/Daily_Releases/Daily_Business/2015/db0421/FCC-15-47A1.pdf. Accessed 7 August 2017.

Bogucka, H., Kryszkiewicz, P., & Kliks, A. (2015). Dynamic spectrum aggregation for future 5G communications. IEEE Communications Magazine, 53(5), 35–43.

ETSI (2013). Network functions virtualisation (NFV); Use cases. ETSI GS NFV.

Floodlight: Project Floodlight [Online]. Available http://www.projectfloodlight.org/.

Acknowledgements

The work has been funded by the EU H2020 project COHERENT (Contract No. 671639).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kliks, A., Musznicki, B., Kowalik, K. et al. Perspectives for resource sharing in 5G networks. Telecommun Syst 68, 605–619 (2018). https://doi.org/10.1007/s11235-017-0411-3

DOI: https://doi.org/10.1007/s11235-017-0411-3