Abstract

Active inference offers a unified theory of perception, learning, and decision-making at computational and neural levels of description. In this article, we address the worry that active inference may be in tension with the belief–desire–intention (BDI) model within folk psychology because it does not include terms for desires (or other conative constructs) at the mathematical level of description. To resolve this concern, we first provide a brief review of the historical progression from predictive coding to active inference, enabling us to distinguish between active inference formulations of motor control (which need not have desires under folk psychology) and active inference formulations of decision processes (which do have desires within folk psychology). We then show that, despite a superficial tension when viewed at the mathematical level of description, the active inference formalism contains terms that are readily identifiable as encoding both the objects of desire and the strength of desire at the psychological level of description. We demonstrate this with simple simulations of an active inference agent motivated to leave a dark room for different reasons. Despite their consistency, we further show how active inference may increase the granularity of folk-psychological descriptions by highlighting distinctions between drives to seek information versus reward—and how it may also offer more precise, quantitative folk-psychological predictions. Finally, we consider how the implicitly conative components of active inference may have partial analogues (i.e., “as if” desires) in other systems describable by the broader free energy principle to which it conforms.

1 Introduction

In the contemporary sciences of mind and brain, as well as in the areas of modern philosophy devoted to those sciences, the broad family of approaches labelled with the term “predictive processing” has gained considerable traction. Although not always clearly demarcated as such, this is an umbrella term covering a number of distinct models of the mind and brain, many of which have different theoretical commitments and targets of explanation. Early catalysts of this recent rise to prominence were papers highlighting the possibility that predictive coding algorithms, already widely used within the information and computer sciences (e.g., for data compression), might also represent a plausible means by which the brain accomplishes perception. These algorithms are computationally and energetically efficient, in that they simply encode predictions and prediction error signals (i.e., the magnitude of deviations between predicted and observed input). In this scheme, the gain in efficiency is enabled by compression: information needs to be processed only when it is not predicted; that is, beliefs about the causes of sensory input are only updated when doing so is necessary to minimize prediction error (i.e., to find a set of beliefs that make accurate predictions about sensory input).

Inspired by the success of predictive coding, it was subsequently proposed that predictive processing might offer a unifying framework for understanding brain processes generally, including learning, decision-making, and motor control. With respect to decision-making and motor control, an influential proposal for accomplishing this unification is active inference, which casts these control processes in terms of a similar prediction-error minimization process—in this case, by moving the body to minimize prediction error with respect to a set of prior beliefs about where the body should be. However, a counterintuitive aspect of this proposal is that it postulates nothing that, at first pass, might count as explicitly conative; that is, the framework seemingly contains only doxastic, belief-like elements and no conative, reward- or desire-like elements.

In this article, we address the worry that active inference models may be in tension with the belief–desire–intention (BDI) model within folk psychology—namely, the notion that we form intentions based on specific combinations of beliefs and desires (i.e., propositional attitudes such as “believing that X” and “desiring that Y”). This worry arises because active inference models do not explicitly include terms for desires (or other conative constructs) at the mathematical level of description (e.g., for examples of this worry in relation to broader predictive processing theories of the brain, see Clark, 2019; Dewhurst, 2017; Yon et al., 2020). If this worry is founded, it could be taken either to imply that active inference models are implausible because they do not capture central aspects of cognition, or—to the extent that these models are successful—to imply that desires should be eliminated from scientific theories of psychology (i.e., eliminativism; Churchland, 1981). To resolve this concern, we first provide a brief review of the historical progression from predictive coding to current active inference models, which allows us to distinguish active inference formulations of motor control (which need not have desires under folk psychology) and active inference formulations of decision processes (which do have desires within folk psychology). We then show that, despite a superficial tension when viewed at the mathematical level of description, the active inference formalism contains terms that are readily identifiable as desires (and related conative constructs) at the psychological level of description. We then discuss the additional insights that may be offered by active inference and implications for current debates.

2 From predictive coding to active inference

In the late 1990s, Rao and Ballard offered a convincing demonstration of how predictive coding could explain the particular receptive field properties of neurons in the visual cortex (Rao & Ballard, 1999). Friston and colleagues subsequently further developed this line of work by proposing a more extensive theory of how predictive coding could account for both micro- and meso-scale brain structure (e.g., patterns of synaptic connections in cortical columns, patterns in feedforward and feedback connections in cortical hierarchies), while also explaining a wide range of empirical findings within functional neuroimaging (fMRI) and electroencephalography (EEG) research (Bastos et al., 2012; Friston, 2005; Kiebel et al., 2008); for a recent review of empirical studies testing this theory, see Walsh et al. (2020). An inherent feature of predictive coding—namely, the weighting of prediction errors by their expected reliability or precision—also offered an attractive theory of selective attention (Feldman & Friston, 2010), while the processes by which predictions and expected precisions were updated over longer timescales further offered an attractive, biologically plausible (Hebbian; Brown et al., 2009) model of learning (Bogacz, 2017). These theories were also synergistic with previous (and ongoing) developments in the field of computer vision (Hinton & Zemel, 1994; Hinton et al., 1995), which has successfully employed similar prediction error minimization algorithms for unsupervised learning in artificial neural networks.

This line of work ultimately raised the possibility that predictive coding could offer a unifying principle by which to understand the brain as a whole—that is, that the entire brain may be constantly engaged in generating predictions and then updating beliefs when those predictions are violated. In this view, the brain can be envisioned as a kind of multi-level “prediction machine”, in which each level of representation in a neural hierarchy (encoding beliefs at different spatiotemporal scales and levels of integration/abstraction) attempts to remain accurate in its predictions about activity patterns at the level below (i.e., where the bottom level is sensory input itself; Clark, 2015). This could account for many empirical findings spanning both lower- and higher-level perceptual processes (e.g., from edge detection to facial recognition; Walsh et al., 2020) and across many sensory modalities (i.e., from both inside and outside of the body; Seth, 2013; Smith et al., 2017).

However, to be a complete theory of the brain, predictive coding would need to be extended beyond its origins as a theory of perception. The most blatantly missing piece of the puzzle was motor control. There was also a seeming incompatibility with the very possibility of motor control, due to the tension between a predictive coding account of proprioception (i.e., perception of body position) and the ability to change body position. Namely, if predictive coding were applied to proprioception, beliefs about body position should simply be updated via (precision-weighted) proprioceptive prediction errors as with any other sensory modality (i.e., based on afferent signals from the body). As such, neither precision, prediction, nor prediction error signals (i.e., the sole ingredients in predictive coding) appeared able to account for the brain’s role in controlling the activity of skeletal muscle to change body position. In other words, if all neural signaling were construed only in terms of the elements in predictive coding, it was unclear how proprioceptive prediction signals could both change in response to input from the body (e.g., as in vision or audition) while also allowing for change in body position when an animal moves throughout its environment. Additional motor command signals seemed necessary.

The major proposal put forward to solve this problem was called “active inference” (Adams et al., 2013; Brown et al., 2011; Friston et al., 2010). This proposal offered a theory of how prediction signals within the proprioceptive domain could essentially act as motor commands so long as they were transiently afforded the right influence on spinal reflex arcs whenever movement was required. In short, if proprioceptive prediction signals were highly weighted (i.e., such that they were not updated by contradictory sensory information about body position), they could modulate the set points within spinal reflex arcs, leading the body to move to the position associated with the new set point (i.e., corresponding to the descending prediction). This conception of motor control was entirely consistent with a long pedigree of theorizing, ranging from ideomotor theory through to the equilibrium point hypothesis and perceptual control theory (Feldman, 2009; Mansell, 2011). Active inference further suggested that the concept of precision-weighting could be extended such that, instead of simply encoding the predictability of sensory input, an analogous weighting mechanism could also be used to control when predictions about body position were updated through traditional predictive coding (perception) and when they were instead used to move the body to positions consistent with descending predictions (i.e., acting as motor commands). Thus, instead of the passive perceptual inference process associated with traditional predictive coding models, this type of inference was “active” in the sense that prediction error could be minimized by moving the body into predicted positions when those predictions were assigned the right (dynamically controlled) precision weighting. Since then, similar models have also been used to explain both cortical and sub-cortical visceromotor control processes and related aspects of how the brain perceives and regulates the internal state of the body (Harrison et al., 2021; Petzschner et al., 2017, 2021; Pezzulo et al., 2015; Seth, 2013; Seth & Critchley, 2013; Smith et al., 2017; Stephan et al., 2016; Unal et al., 2021).

Crucially, however, this was a low-level theory of how predictions could accomplish motor control. As with predictive coding in perception, these predictions, prediction errors, and precision signals were assumed to be sub-personal (non-conscious) processes and did not correspond to conscious expectations or conscious surprise. Thus, the suggestion was not that we consciously choose to believe our body is in a different position when we desire to adopt that position, or that our conscious beliefs about body position always generate action. We can clearly [and in some cases falsely (Litwin, 2020)] believe our body is in one position while trying to move it to another (Yon et al., 2020). Even more crucially for the purposes of this paper, this “first-generation” active inference framework was not a theory of decision-making. In other words, it did not explain how we decide—or plan—where to move our body; it only explained how body movement can be executed using the predictive coding apparatus once a decision has been made.

In some discussions (e.g., see Clark, 2015), this motor control theory has also been considered in a hierarchical control setting in which higher-level, compact motor plans are progressively unpacked through descending levels—ultimately resulting in low-level predictions that control many motor processes in parallel over extended timescales. For example, the plan to eat ice cream could set a lower-level plan to walk to the fridge and open the door, which could set a yet lower-level plan to take a sequence of steps, and so forth, down to the control of spinal reflex arcs. This is also consistent with some discussions of goal hierarchies (where higher-level goals set lower-level goals) elsewhere in the active inference and broader psychology literatures (e.g., see Badre, 2008; Pezzulo et al., 2018). However, these control processes are still constrained by intention-formation at the highest level; that is, the aforementioned hierarchical system ultimately implements control of an action sequence after a plan has been selected through a decision process that was not included in these models.

Incorporating decision-making has recently led to yet further extensions of these models into the realm of discrete state-space Markov decision processes (Da Costa et al., 2020a; Friston et al., 2016, 2017a, 2017b, 2018; Parr & Friston, 2018b). Importantly, while these extensions are also referred to as “active inference”, formally speaking, they are distinct from the prediction-based models of motor control described above. For clarity, we will refer to the motor control version as “motor active inference” (mAI) and the decision-making version as “decision active inference” (dAI). For the purposes of this paper, the crucial distinction is that, unlike mAI models, dAI models explicitly describe a process in which decisions are made about what to do in order to generate some observations and not others (i.e., because some observations are preferred over others or are expected to be more informative than others; described below). In contrast, mAI does not make decisions. Instead, once a decision has been made about what to do (i.e., once a planned sequence of actions has been selected), mAI uses proprioceptive prediction signals to move the body to carry out the decided action sequence (i.e., proprioceptive predictions play the role of motor commands, as described above). In recent “mixed models,” this has been simulated quantitatively, where a dAI model can have an mAI model placed below it as a lower hierarchical level. The higher-level dAI model can then feed a decided action sequence down to the lower-level mAI model, which can then implement that action sequence by dynamically modulating the set point within a simulated reflex arc (Friston et al., 2017b). For example, the dAI level can decide on a sequence of locations to attend to while reading, and the lower mAI level can then move the eye to point toward the decided sequence of locations.

As will be discussed in more detail below, the formalism underlying dAI models is built from the same belief-like elements (predictions, prediction errors, precisions, and so forth) as are predictive coding models. Notably, unlike other leading models of decision-making—the prime example being reinforcement learning (Sutton & Barto, 2018)—there is nothing in dAI models that is explicitly labeled (at the mathematical level of description) in terms of conative constructs such as value, reward, goals, motivations, and so forth. Formally, these models simply come equipped with a set of probability distributions (i.e., “Bayesian beliefs” in the technical sense) about actions, states, and outcomes. One of these distributions encodes prior expectations about the observations that will most probably be received a priori (i.e., the preferred observations described above), and the model infers the sequence of actions expected to result in these observations (while simultaneously seeking out observations that will maximize confidence in current beliefs about the state of the world). Note that, in hierarchical models, “observations” can also correspond to posterior (Bayesian) beliefs over lower-level states.

This follows from a more fundamental principle—the “free energy principle” (FEP)—from which all flavors of predictive coding and active inference can be derived. This will be discussed further below, but—in short—the FEP starts from a truism, and works through the implications of that truism: namely, that we observe physical systems that exist, in the sense that they persist as systems with measurable properties over appreciable temporal and spatial scales (Friston, 2020). Living creatures are a subset of systems that exist; indeed, for organisms to survive, they must remain in a limited range of phenotypic states [i.e., those states that are consistent with their survival, broadly construed (Ramstead et al., 2018)]. By definition, these phenotypic states must be occupied with a higher probability than deleterious states (e.g., those leading to loss of structural integrity and death). That these phenotypic states are occupied more frequently means that the organism will observe the sensory consequences of occupying those states more frequently (e.g., as a human being, my survival entails that I will continue to perceive, with high probability, the sensory consequences of being on land as opposed to being underwater; conversely, being on land would be a low-probability observation for a fish). As such, again by definition, only those organisms that seek out such high-probability observations (i.e., those that are implicitly “expected,” given their phenotype) will continue to exist. Under the FEP, the value functions commonly used in other approaches to decision-making and control (e.g., reinforcement learning) are replaced with prior preferences for these “phenotypically expected” observations (Friston, 2011; Hipolito et al., 2020).

This reformulation can, in turn, be made more precise mathematically. It is formalized as the requirement that organisms continually seek out observations that maximize the evidence for their phenotype. The phenotype of the organism is given a statistical interpretation in this context: it is defined as a joint probability density over all possible combinations of states and observations, \(p\left( {o, s} \right)\), under a model (Ramstead et al., 2020a). This joint probability density is called a generative model, because it specifies what observations will be generated by different unobservable states of the world. Thus, under the FEP, to exist is to continually generate observations that provide evidence for one’s phenotype. However, directly assessing the evidence for one’s phenotype (namely, the marginal probability of observations \(\left( o \right)\) given a model \(\left( m \right)\), \(p(o|m)\)) is often mathematically intractable. Fortunately, there is a tractable way of estimating \(p(o|m)\): finding beliefs that minimize a statistical quantity called variational free energy \(\left( F \right)\). Variational free energy admits different decompositions that clarify its different roles. One such decomposition expresses it as the complexity of one’s beliefs minus the accuracy of those beliefs (as measured by how well they predict incoming sensory data). A simple way to think about minimizing complexity is that, while searching for beliefs that maximize accuracy, an agent also seeks to change its prior beliefs as little as possible. Perception, learning, decision-making, and action under the FEP will thus result in the most parsimonious (most accurate and least complex) beliefs.
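As a rough illustration of why minimizing variational free energy gives a tractable handle on model evidence, the following minimal sketch computes complexity minus accuracy for a discrete model and compares it with the surprisal, \(-\ln p(o|m)\). The two-state prior and the likelihood values are hypothetical numbers chosen only for illustration; they are not taken from any published model.

```python
import math


def free_energy(q, prior, likelihood_o):
    """Complexity minus accuracy for discrete beliefs q over hidden states."""
    # Complexity: KL divergence between the candidate beliefs q and the prior.
    complexity = sum(qi * math.log(qi / pi) for qi, pi in zip(q, prior) if qi > 0)
    # Accuracy: expected log likelihood of the received observation under q.
    accuracy = sum(qi * math.log(li) for qi, li in zip(q, likelihood_o) if qi > 0)
    return complexity - accuracy


# Hypothetical model (assumed values): two hidden states, one received observation.
prior = [0.5, 0.5]           # p(s)
likelihood_o = [0.9, 0.2]    # p(o | s) for the observation that was received

# In this tiny model the evidence p(o) can be computed exactly by summing over states.
evidence = sum(p * l for p, l in zip(prior, likelihood_o))
exact_posterior = [p * l / evidence for p, l in zip(prior, likelihood_o)]
surprisal = -math.log(evidence)

f_unchanged = free_energy(prior, prior, likelihood_o)            # beliefs left at the prior
f_posterior = free_energy(exact_posterior, prior, likelihood_o)  # beliefs at the exact posterior

print(f"surprisal        = {surprisal:.4f}")
print(f"F (prior as q)   = {f_unchanged:.4f}")
print(f"F (posterior q)  = {f_posterior:.4f}")
```

In this sketch, free energy never falls below the surprisal and touches it only when the beliefs equal the exact posterior, which is why searching over beliefs for the lowest \(F\) serves as a stand-in for evaluating \(p(o|m)\) directly.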

However, the terms above refer to existing observations, while dAI requires selecting actions that are expected to generate preferred (phenotype-consistent) observations in the future. For example, because remaining hydrated is “expected” given the phenotype of all animals, actions should be chosen in advance to ensure the observation of sufficient hydration in the future (e.g., drinking water before hydration levels get too low). Because variational free energy can only be evaluated when an observation is present, organisms must technically choose actions that minimize the related quantity of expected free energy (most often denoted \(G\)), which is the free energy expected under the outcomes anticipated when following a sequence of actions, given one’s model of the world. In the neural process theory associated with dAI, one type of prediction error signal (“state” prediction error) drives perceptual belief updating via minimizing \(F\), while decision-making can be expressed as being driven by another type of prediction error (“outcome” prediction error), which corresponds (in part) to the expected deviation between the observations expected under each possible decision and the phenotype-congruent (preferred) observations that organisms seek out a priori; e.g., see (Friston et al., 2018). Thus, current formulations of active inference appeal to forward-looking decision processes (i.e., plans or policies) aimed at maximizing phenotype-consistent observations.

It is important to emphasize that this cursory outline of the development of ideas within “predictive processing” is incomplete. There is much more to the story for both predictive coding and active inference (both mAI and dAI), and several other predictive processing (or “Bayesian brain”) algorithms and implementations have been proposed: e.g., see Knill and Pouget (2004), Mathys et al. (2014), Teufel and Fletcher (2020). Here, we have also focused on the series of theories motivated by the FEP. Nonetheless, our cursory historical sketch is sufficient to understand the problem we seek to tackle in the present paper.

3 Preliminary considerations

Before diving into the formalism of active inference, it is important at the outset to consider two broad points. The first of these pertains to the distinction between personal (conscious) and sub-personal (unconscious) processes. The second point pertains to levels of description and their potential conflation. With respect to the first point, dAI models are largely taken to describe sub-personal, non-conscious processes. For example, dAI does not claim that people will feel subjectively surprised when prediction errors are generated, nor does it claim that people are aware of the types of prior expectations that interact with those prediction errors (e.g., for an example of unconscious visual priors, see Ramachandran, 1988). The construct of “surprisal” associated with prediction errors in the mathematics is not synonymous with folk-psychological surprise. It is just a measure of evidence for a generative model; it measures how “surprising” some data are, given a model of how that data might have been generated.

The relationship between personal (consciously accessible) and sub-personal (unconscious) computation therefore remains an open question. That said, some specific dAI models have been used to describe higher-level cognitive processes that can generate conscious beliefs and verbal reports (Smith et al., 2019a, 2019b; Whyte & Smith, 2021; Whyte et al., 2021). In these cases, the inferential (prediction-error minimization) processes can still be seen as sub-personal or unconscious, but the resulting beliefs and decisions themselves are assumed to enter awareness. As a general rule, however, sub-personal processes in dAI should not be expected to map one-to-one with consciously accessible or personal-level processes.

With respect to the second point, in the present context, it is important to avoid inappropriately conflating mathematical levels of description with psychological levels of description. The fact that a probability distribution plays the role of a prior (Bayesian) belief in a mathematical formalism does not entail, ipso facto, that it should be identified with a belief at a psychological level of description. A Bayesian belief is a formal mathematical object, while a psychological belief is not. We should therefore avoid the mistake of over-reifying mathematical descriptions. Cast a slightly different way, we should not make the mistake of conflating the referents of natural language terms like “belief” when used in mathematics versus when used in folk psychology.

As we will see below, one can non-problematically use a probability distribution (Bayesian belief) to represent desired outcomes—but simply view this aspect of the dAI formalism as a useful mathematical approach to allow desires to fit neatly into a fully Bayesian scheme. Put a slightly different way, one may consider dAI to be a good model of human decision-making without interpreting the distribution encoding desired outcomes as a belief in the psychological sense. On this view, the mathematical terminology, in and of itself, does not entail anything about consistency with (or the general empirical plausibility of) folk psychology. Assessing consistency instead requires identification of the (terminology-independent) functional roles played by each element in both dAI and folk-psychology, and then determining whether the same functional roles are present in each. Assessing plausibility further requires testing the empirical predictions of these frameworks.

To the extent that behavioral predictions from dAI models accurately capture patterns in a real organism’s behavior—that is, to the extent they can account for behavior better than available alternative models—an argument can also be made for a less deflationary view. Under this view, if computational and folk-psychological predictions converge (Kiefer, 2020)—and no other available theory can account for behavior equally well—this could entail that the mathematical structure of dAI is more than a useful descriptive model, but that it instead captures the true information processing structure underlying and enabling folk psychology and related abilities. In this case, the crucial point remains that one should not conflate mathematical and psychological levels of description. However, dAI models might nonetheless offer more detailed information about the true form of folk-psychological categories/processes. For example, they might entail that conative states like motivations and desired outcomes take the functional form of probability-like distributions that are integrated with beliefs to form intentions; or they might increase the granularity of folk psychology by highlighting that a term like “desire” actually has multiple referents at the algorithmic level (i.e., the exact opposite of the eliminativist claim that it has no referent).

Here, we do not specifically defend the more versus less deflationary views described above. Our aims are instead to: (1) demonstrate that there is a clear isomorphism between the elements of dAI models and those of the BDI model; and then (2) show how—provided one does not assume that probability distributions in computational models must be identified with beliefs at the psychological level—there will be no tension between dAI and the BDI model. With this as a starting point, we will now concisely lay out the minimal components of the formalism required to illustrate our central argument.

4 Variational free energy

First, we define a set of states of the world (\(s\)) that a decision-making agent could occupy at each time point (\(\tau\)), with a probability distribution \(p\left( {s_{\tau = 1} } \right)\) encoding beliefs about the probability of currently occupying each state at the start of a decision-making process (e.g., a trial in an experimental task). We then define a set of beliefs about possible sequences of actions the agent could entertain, termed “policies” and denoted by \(\pi\). Each policy can be considered a model that predicts a specific sequence of state transitions. Therefore, we must write down the way the agent believes it will move between states from one time point to the next, given each possible policy, \(p\left( {s_{\tau + 1} |s_{\tau } , \pi } \right)\). At each time point, the agent receives observations (\(o_{\tau }\)), and must use these observations to infer beliefs over states based on a “likelihood” mapping that specifies how states generate observations, \(p(o_{\tau } |s_{\tau } )\). Because each policy is a model of a sequence of state transitions, and each state generates specific observations, one can calculate a prior belief about the observations expected under each policy, \(p(o_{\tau } |\pi )\), and observed outcomes can then provide different amounts of evidence for some (policy-specific) models over others. Finally, because we minimize free energy to approximate optimal belief updating, we also define an (approximate) posterior belief distribution denoted as \(q\left( {s_{\tau } } \right)\) that will be updated with each new observation.
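To make these ingredients concrete, the sketch below writes them down for a hypothetical two-state, two-policy model and derives the observations expected under each policy, \(p(o_{\tau } |\pi )\). The names `D`, `B`, and `A` for the initial-state prior, transition beliefs, and likelihood mapping follow a common convention in the dAI literature but are assumptions here, as are all state labels and numerical values.

```python
# Hypothetical two-state, two-policy model; every number is an illustrative assumption.
STATES = ["dark", "light"]

# D[state] = p(s_1): the agent believes it starts in the dark.
D = [1.0, 0.0]

# B[policy][next_state][current_state] = p(s_{tau+1} | s_tau, pi)
B = {
    "stay": [[1.0, 0.0], [0.0, 1.0]],   # states never change
    "leave": [[0.0, 0.0], [1.0, 1.0]],  # any state leads to "light"
}

# A[observation][state] = p(o_tau | s_tau): observations are noisy cues to the state.
A = [[0.9, 0.1],
     [0.1, 0.9]]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def predicted_observations(policy, horizon):
    """Return the list of p(o_tau | pi) for tau = 1..horizon."""
    states = list(D)
    predictions = []
    for _ in range(horizon):
        predictions.append(matvec(A, states))  # map state beliefs to expected observations
        states = matvec(B[policy], states)     # roll state beliefs forward one step
    return predictions


stay = predicted_observations("stay", 2)
leave = predicted_observations("leave", 2)
print("stay :", stay)
print("leave:", leave)
```

Both policies predict the same first observation, because they share the same starting state, but they diverge at the second time point. This divergence is what allows later observations to favor one policy-specific model over the other.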

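With this setup in place, we can now calculate the variational free energy \(\left( F \right)\) for each policy as follows:

\[F\left( \pi \right) = D_{KL} \left[ {q\left( {s_{\tau } |\pi } \right)\parallel p\left( {s_{\tau } |\pi } \right)} \right] - E_{{q\left( {s_{\tau } |\pi } \right)}} \left[ {\ln p\left( {o_{\tau } |s_{\tau } } \right)} \right]\]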

The first term on the right-hand side is the “complexity” term, which is the Kullback–Leibler (KL) divergence between prior and approximate posterior beliefs (i.e., quantifying how much beliefs change after a new observation). Larger changes in beliefs lead to higher \(F\) values and are therefore disfavored. The second term on the right reflects predictive accuracy (i.e., the probability of observations given beliefs about states under the model). Greater accuracy leads to lower \(F\) values and is therefore favored. Minimizing \(F\) thus maximizes accuracy while penalizing large changes in beliefs. As a result, finding the set of posterior beliefs that minimizes \(F\) will approximate the best explanation of how observations are generated. This can be done because the free energy is a functional of beliefs (probability distributions), but a function of observations. Thus, the observations can be held fixed, and the beliefs varied, until the ones associated with a variational free energy minimum (model evidence maximum) are found. In the accompanying neural process theory, this is accomplished by minimizing state prediction errors (\(sPE\)) via neuronal dynamics that perform a gradient descent on variational free energy.
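The idea of holding observations fixed while varying beliefs can be sketched with a simple iterative scheme. The code below is a minimal illustration, not the published neural process theory: it assumes a single time point, a hypothetical two-state model, and an update rule in which a state prediction error (log prior plus log likelihood minus log current belief) nudges a log-scale quantity that is then passed through a softmax. The function names, step size, and number of iterations are all assumptions.

```python
import math


def softmax(values):
    """Normalized exponential, shifted by the maximum for numerical stability."""
    top = max(values)
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def infer_states(prior, likelihood_o, steps=64, rate=0.5):
    """Iteratively reduce state prediction errors with the observation held fixed."""
    v = [math.log(p) for p in prior]  # log-scale "depolarization", initialized at the prior
    q = softmax(v)                    # current beliefs over states
    for _ in range(steps):
        # State prediction error: what prior and likelihood imply, minus current belief.
        spe = [
            math.log(p) + math.log(l) - math.log(qi)
            for p, l, qi in zip(prior, likelihood_o, q)
        ]
        v = [vi + rate * e for vi, e in zip(v, spe)]
        q = softmax(v)
    return q


# Hypothetical values (assumed): flat prior, observation more likely under state 0.
prior = [0.5, 0.5]
likelihood_o = [0.9, 0.2]

q = infer_states(prior, likelihood_o)
evidence = sum(p * l for p, l in zip(prior, likelihood_o))
exact = [p * l / evidence for p, l in zip(prior, likelihood_o)]
print("iterative beliefs:", q)
print("exact posterior  :", exact)
```

In this toy case the iterated beliefs settle on the exact Bayesian posterior, which is the free energy minimum for a model this small. In larger models the same kind of scheme yields only an approximation.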

When applied to describe the right types of higher-level cognitive processes (see below), these kinds of beliefs about states have a fairly straightforward correspondence to psychological beliefs. For example, you may believe there is a plate of spaghetti in front of you after getting visual input most consistent with spaghetti, leading to a precise probability distribution encoding a very high probability over the state of “the presence of spaghetti”. Imprecise probability distributions over states could (again, when applied to describe the right cognitive processes) correspond to states of psychological uncertainty (e.g., not knowing what is in front of you in a dark room).

5 Expected free energy

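Decision-making does not only require beliefs about past and present states (based on past and present observations). It also requires making predictions about future states and future observations. This requires taking the average (i.e., expected) free energy, \(G\left( \pi \right)\), given anticipated outcomes under each policy that one might choose:

\[G\left( \pi \right) = D_{KL} \left[ {q\left( {o_{\tau } |\pi } \right)\parallel p\left( {o_{\tau } } \right)} \right] + E_{{q\left( {s_{\tau } |\pi } \right)}} \left[ {H\left[ {p\left( {o_{\tau } |s_{\tau } } \right)} \right]} \right]\]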

Taking this average converts the complexity of the free energy into risk (the first term) while the predictive inaccuracy becomes ambiguity (the second term). This means that a policy is more likely if it minimizes risk and ambiguity, when read in this technical sense. In what follows, we will unpack the constituents of expected free energy to see what they look like intuitively.

The first (risk) term on the right-hand side encodes the anticipated difference (KL divergence) between the two quantities most central to our argument about the link between dAI and the BDI ontology. The first is \(q(o_{\tau } |\pi )\), which corresponds to the observations you expect if you chose to do one thing versus another. When applying a model to the right cognitive processes (see below), this maps well onto the colloquial notion of psychological-level expectations about (i.e., anticipation of) what one will observe. For example, imagine that you accidentally went skydiving with a broken parachute. In this case, you would have a precise expectation/belief that you will smash into the ground and die, no matter what action you choose. With each passing second, this folk-psychological belief will likely grow stronger and stronger, despite your desire not to smash into the ground. This term, \(q(o_{\tau } |\pi )\), therefore plays a role analogous to a common type of folk-psychological belief or expectation, and not the role of a desire.

The second quantity in the risk term within the expected free energy, \(p\left( {o_{\tau } } \right)\), is a policy-independent prior over observations. This encodes the observations that are congruent with an organism’s phenotype; namely, those observations that are consistent with the survival, reproduction, and other related goals of the organism. This distribution is most often called the “prior preference distribution” within the dAI literature. As can be seen in the equation, selecting actions that minimize \(G\left( \pi \right)\)—which can be done in neural dAI models by minimizing an outcome prediction error (\(oPE\)) signal—involves minimizing the difference between these phenotype-congruent prior expectations and the anticipated outcomes under a policy. Put more simply, the agent tries to infer which action sequence will generate outcomes that are as close as possible to phenotype-congruent outcomes. As discussed further below, this is essentially isomorphic with saying the agent chooses what it believes is most likely to get it what it desires.

The second (ambiguity) term on the right-hand side of the equation reflects the expected entropy (\({\text{H}}\)) of the likelihood function for a given state, where \({\text{H}}[p(o_{\tau } |s_{\tau } )] = - \sum p(o_{\tau } |s_{\tau } ){\text{ln}}[p(o_{\tau } |s_{\tau } )]\). Entropy measures the uncertainty of a distribution, where a flatter (lower precision) distribution has higher entropy. Here, this simply means that an agent who minimizes \(G\) will also seek out states with the most precise (least ambiguous) mapping to observations. In other words, the agent will take actions expected to generate the most informative outcomes (e.g., turning on a light when in a dark room).
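
The risk and ambiguity terms can be made concrete with a small sketch of the dark room case. Everything specific here is an assumption for illustration: the four hidden states (room contents crossed with lighting), the likelihood values, and the flat preference distribution, which is chosen so that only the ambiguity term differs between policies.

```python
import numpy as np

def kl(q, p):
    """D_KL[q || p] for discrete distributions, treating 0 ln 0 as 0."""
    q, p = np.asarray(q, float), np.asarray(p, float)
    mask = q > 0
    return float(np.sum(q[mask] * (np.log(q[mask]) - np.log(p[mask]))))

def entropy(p):
    """Shannon entropy H[p] = -sum p ln p, treating 0 ln 0 as 0."""
    p = np.asarray(p, float)
    mask = p > 0
    return float(-np.sum(p[mask] * np.log(p[mask])))

def expected_free_energy(q_s, A, prior_pref):
    """Return (G, risk, ambiguity) for one policy at one future time step.

    q_s: q(s|pi); A[o, s]: p(o|s); prior_pref: p(o).
    """
    q_o = A @ q_s                                   # q(o|pi)
    risk = kl(q_o, prior_pref)
    ambiguity = float(sum(q_s[s] * entropy(A[:, s]) for s in range(len(q_s))))
    return risk + ambiguity, risk, ambiguity

# Hypothetical states: [cat/dark, dog/dark, cat/lit, dog/lit]
# Hypothetical observations: [cat-like shape, dog-like shape]
A = np.array([[0.5, 0.5, 0.95, 0.05],
              [0.5, 0.5, 0.05, 0.95]])
prior_pref = np.array([0.5, 0.5])                   # flat: no outcome is preferred

q_s_stay = np.array([0.5, 0.5, 0.0, 0.0])           # stay in the dark
q_s_light = np.array([0.0, 0.0, 0.5, 0.5])          # turn on the light

G_stay, risk_stay, amb_stay = expected_free_energy(q_s_stay, A, prior_pref)
G_light, risk_light, amb_light = expected_free_energy(q_s_light, A, prior_pref)
print(f"stay : G={G_stay:.3f} (risk={risk_stay:.3f}, ambiguity={amb_stay:.3f})")
print(f"light: G={G_light:.3f} (risk={risk_light:.3f}, ambiguity={amb_light:.3f})")
```

Under these assumed values the risk is the same for both policies, so the lower \(G\) for turning on the light comes entirely from reduced ambiguity.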

The posterior probability distribution over policies can now be expressed as:

\[p\left( \pi \right) = \sigma \left( {{\text{ln}}E\left( \pi \right) - F\left( \pi \right) - \gamma G\left( \pi \right)} \right)\]

This says that the most likely policies are those that minimize expected free energy, under constraints afforded by the quantities \(E\left( \pi \right)\) and \(F\left( \pi \right)\) (the \(\sigma\) symbol indicates a “softmax” function that converts the result back to a proper probability distribution with non-negative values summing to one). The vector \(E\left( \pi \right)\) is used to encode a fixed prior over policies that can be used to model habits. The \(F\left( \pi \right)\) term scores the variational free energy of past and present observations under each policy (i.e., the evidence these observations provide for each policy). In other words, it reflects how well each policy predicts the observations that have thus far been received. Note that this is only relevant when policies are anchored to a particular point in time (e.g., policies that involve particular sequences from some initial state, such as waiting for a period of time before responding to a ‘go cue’). With these kinds of policies, observable outcomes render some policies more likely than others. In the illustrative simulations below, we will use this kind of sequential policy to illustrate how the past and future underwrite policy selection.

The question now arises regarding how much weight to afford a policy based on habits or evidence from the past relative to the expected free energy of observations in the future. This balance depends upon the gamma (\(\gamma\)) term, which is a precision estimate for beliefs about expected free energy. This controls how much model-based predictions about outcomes (given policies) contribute to policy selection (i.e., how much model predictions about action outcomes are “trusted”) (Hesp et al., 2021). Lower values for \(\gamma\) cause the agent to act more habitually (i.e., more in line with the baseline behavior encoded in the \(E\left( \pi \right)\) vector) and with less confidence in its plans. The competition between \(E\left( \pi \right)\) and \(G\left( \pi \right)\) for influencing policy selection—as in cases with a precise \(E\left( \pi \right)\) distribution and/or a small to moderate value of \(\gamma\)—may in some cases be able to capture the conflict individuals experience when they feel an automatic “pull” toward one action despite the explicit belief that another action would be more effective for achieving a goal.
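
The competition between habit and model-based value can be sketched numerically. The example assumes the softmax form \(\sigma \left( {{\text{ln}}E\left( \pi \right) - F\left( \pi \right) - \gamma G\left( \pi \right)} \right)\) for the posterior over policies, and the values of \(E\), \(F\) and \(G\) are hypothetical, chosen so that the habit favors one policy while the expected free energy favors the other.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax."""
    x = np.asarray(x, float)
    e = np.exp(x - x.max())
    return e / e.sum()

def policy_posterior(E, F, G, gamma):
    """p(pi) = softmax(ln E - F - gamma * G)."""
    return softmax(np.log(E) - F - gamma * G)

E = np.array([0.8, 0.2])   # hypothetical habit: strong prior toward policy 0
F = np.array([0.0, 0.0])   # past observations do not favor either policy
G = np.array([2.0, 0.5])   # policy 1 has the lower expected free energy

for gamma in (0.2, 1.0, 4.0):
    p = policy_posterior(E, F, G, gamma)
    print(f"gamma={gamma:>3}: p(pi) = {np.round(p, 3)}")
```

With a low \(\gamma\) the habitual policy dominates in this sketch, whereas a high \(\gamma\) lets the policy with the lower \(G\) win, which mirrors the conflict described above between an automatic “pull” and an explicit belief about what would work best.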

This precision is updated with each new observation, allowing the agent to increase or decrease its confidence (i.e., how much it “trusts” its model of the future). The update to \(\gamma\) is made via a hyperprior beta (\(\beta\)), the rate parameter of a gamma distribution, as follows:

Here, the arrow (\(\leftarrow\)) indicates iterative value updating (until convergence), \(\beta_{0}\) corresponds to a prior on \(\beta\), and \(p\left( {\pi_{0} } \right)\) corresponds to \(p\left( \pi \right)\) before an observation has been made to generate \(F\left( \pi \right)\); that is, \(p\left( {\pi_{0} } \right) = \sigma \left( {{\text{ln}}E\left( \pi \right) - \gamma G\left( \pi \right)} \right)\). In the context of the present paper, the quantity \(\left( {p\left( \pi \right) - p\left( {\pi_{0} } \right)} \right) \cdot \left( { - G\left( \pi \right)} \right)\) within the value that updates \(\beta\) (i.e., \(\beta_{update}\)) is of some relevance, due to literature discussing its potential link to affective states (Hesp et al., 2021). This term can be thought of as a type of prediction error indicating whether a new observation provides evidence for or against beliefs about \(G\left( \pi \right)\)—that is, whether \(G\left( \pi \right)\) is consistent or inconsistent with the \(F\left( \pi \right)\) generated by a new observation. When this update leads \(\gamma\) to increase in value (i.e., when confidence in \(G\left( \pi \right)\) increases), it is suggested that this can act as evidence for a positive affective state, while if it instead leads \(\gamma\) to decrease in value (i.e., when confidence in \(G\left( \pi \right)\) decreases), it is suggested that this can act as evidence for a negative affective state.
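
The qualitative behavior of this update can be explored with a short sketch. The exact update rule is not recoverable from the text here, so the form used below is an assumption based on a commonly used formulation of active inference: \(\beta_{update} \leftarrow \beta - \beta_{0} + \left( {p\left( \pi \right) - p\left( {\pi_{0} } \right)} \right) \cdot \left( { - G\left( \pi \right)} \right)\), followed by \(\beta \leftarrow \beta - \beta_{update} /\psi\) and \(\gamma \leftarrow 1/\beta\). The step size `psi`, the iteration count, and all numerical values are hypothetical.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax."""
    x = np.asarray(x, float)
    e = np.exp(x - x.max())
    return e / e.sum()

def update_gamma(E, F, G, beta0=1.0, psi=2.0, n_iter=16):
    """Iteratively update the expected free energy precision gamma = 1 / beta.

    Assumed update form (see lead-in); psi is a hypothetical step size.
    """
    beta = beta0
    gamma = 1.0 / beta
    for _ in range(n_iter):
        p0 = softmax(np.log(E) - gamma * G)       # p(pi_0): before the observation
        p = softmax(np.log(E) - F - gamma * G)    # p(pi): after the observation
        beta_update = beta - beta0 + (p - p0) @ (-G)
        beta = beta - beta_update / psi
        gamma = 1.0 / beta
    return gamma

E = np.array([0.5, 0.5])            # no habit
G = np.array([2.0, 0.5])            # the model favors policy 1

F_consistent = np.array([1.5, 0.2])    # new observation also supports policy 1
F_inconsistent = np.array([0.2, 1.5])  # new observation supports policy 0 instead

gamma_up = update_gamma(E, F_consistent, G)
gamma_down = update_gamma(E, F_inconsistent, G)
print(f"gamma after consistent evidence:   {gamma_up:.3f}")
print(f"gamma after inconsistent evidence: {gamma_down:.3f}")
```

Starting from \(\gamma = 1\), evidence that agrees with \(G\left( \pi \right)\) raises the precision in this sketch and evidence that disagrees lowers it, which is the direction of change that the text links to positive and negative affective states.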

Returning to the equation for posteriors over policies above, we note that this is often referred to as encoding how “likely” a policy is. However, in psychological-level terms it could also be more intuitively described as encoding the overall “drive” to choose one course of action over another. As we have seen, this drive is composed of two major influences: the prior over policies \(E\left( \pi \right)\) and the expected free energy \(G\left( \pi \right)\). While \(E\left( \pi \right)\) maps well to habitual influences, \(G\left( \pi \right)\) reflects the inferred value of each policy based on beliefs (e.g., \(q(o_{\tau } |\pi )\) and \(p(o_{\tau } |s_{\tau } )\)) and desired outcomes (i.e., \(p\left( {o_{\tau } } \right)\)). The most likely policy under \(G\left( \pi \right)\) (i.e., the policy with the lowest expected free energy) could therefore be plausibly identified with the intentions of the agent, which also follow from beliefs and desires in the BDI model. In the absence of habit-like influences, this intention would become the policy the agent felt most driven to choose in \(p\left( \pi \right)\).

However, even when chosen policies are determined by intentions, this need not always translate into congruent action. This is because standard precision parameters (controlling noise in action selection or motor control) are also typically included (especially when fitting active inference models to empirical data). In dAI models, this takes the form:

Here \(\alpha\) is an inverse temperature parameter, where lower values increase the probability that enacted behaviors will differ from those entailed by the most likely policy. In simulations, the precision is usually set to a very high value—so that the action is sampled from the policy with the greatest posterior probability.
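
The effect of \(\alpha\) can be illustrated with a sketch of one common way to implement noisy action selection. This scheme is assumed here rather than taken from the text: the posterior over policies is first marginalized onto the action each policy prescribes at the current time step, and the log of that marginal is then scaled by \(\alpha\) and passed through a softmax. The function name and all numbers are hypothetical.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax."""
    x = np.asarray(x, float)
    e = np.exp(x - x.max())
    return e / e.sum()

def action_distribution(policy_post, current_action, n_actions, alpha):
    """p(u) = softmax(alpha * ln sum_pi p(pi) [pi prescribes u now])."""
    marginal = np.zeros(n_actions)
    for p, u in zip(policy_post, current_action):
        marginal[u] += p                         # pool policies sharing an action
    return softmax(alpha * np.log(marginal + 1e-16))

policy_post = np.array([0.7, 0.2, 0.1])  # hypothetical posterior over three policies
current_action = [0, 1, 1]               # action each policy prescribes right now

for alpha in (0.1, 1.0, 16.0):
    p_u = action_distribution(policy_post, current_action, 2, alpha)
    print(f"alpha={alpha:>4}: p(u) = {np.round(p_u, 4)}")

# Sampling an action under low precision can depart from the most likely policy
rng = np.random.default_rng(0)
p_u = action_distribution(policy_post, current_action, 2, alpha=0.1)
samples = rng.choice(2, size=1000, p=p_u)
print("fraction of trials choosing the less likely action:", (samples == 1).mean())
```

With a high \(\alpha\) the action prescribed by the most probable policy is chosen almost deterministically, while a low \(\alpha\) pushes the choice toward chance.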

This completes our brief review of the mathematical framework that underwrites active inference. Having now discussed the active inference formulation, we turn to the main issue that we seek to resolve.

6 Addressing concerns about the (apparent) purely doxastic ontology of active inference

The problem of concern to us here is whether the sub-personal (i.e., non-conscious) inferential processes derived from the FEP—and those under dAI in particular—can be reconciled with, or instead represent a challenge to, traditional folk-psychological descriptions of planning and decision-making that include conative ontologies. Specifically, while there are fairly straightforward analogues to beliefs and intentions in active inference models, it has been argued that there is nothing at the level of the mathematics that—at least at first glance—can be mapped to folk-psychological desires (Yon et al., 2020).

The worry is that if active inference models can explain cognition and decision-making without appealing to constructs that can be mapped onto the commonsense notion of desires, then this could be seen as threatening our intuitive, folk-psychological understanding of ourselves as agents. Such a situation would also pressure the traditional BDI model of folk psychology that is prominent in philosophy (e.g., Bratman, 1987). The BDI model is a model of human agency that explains what it means to act intentionally. In the BDI model, beliefs (of the factual and instrumental sort) and desires combine to form intentions. For instance: I desire food, I believe there is food in the fridge, and I believe that going to the fridge is a means of obtaining food, so I form the intention to go to the fridge to get food. The problem that arises here is that, if one appeals only to the formal properties of the constructs posited by active inference (i.e., probability distributions over states that are “belief-like,” at least prima facie), then the intention-formation processes that figure in dAI break from folk psychology. Instead of a belief-desire-intention model, we have something like a belief-belief-intention model (e.g., I believe a priori that whatever I do will lead me to observe myself eating food, that there is food in the fridge, and that going to the fridge is a means of obtaining food, and so I form the intention to go to the fridge to get food). This seems to be in tension with first-person experience. For example, when hungry, I do not experience myself as believing that “whatever I do will lead me to eat food”; in fact, I can desire food while being concerned specifically because I don’t believe I will find food to eat.

Over the past several years, much has been made of this apparent lack of desires within dAI, especially within the philosophy of cognitive science. In some cases, this concern has also targeted mAI, although this can be seen as misplaced once mAI is understood as purely a theory of motor control after decisions have been made (see Footnote 3).

One prominent example of this concern is the “dark room problem” (Friston et al., 2012; Seth et al., 2020; Sun & Firestone, 2020; Van de Cruys et al., 2020). In a nutshell, the concern is that, if agents only act to minimize prediction error (as opposed to acting under the impetus of a conative, desire-like state), then they ought to simply seek out very stable, predictable environments (such as a dark room) and stay there. Without desires, they would have no reason to leave the dark room. Thus, something like expected rewards, goals, desires, etc. would be required to account for the motivation to leave a dark room.

As the reader may already gather, this concern is somewhat misplaced in the context of dAI (Badcock et al., 2019; Seth et al., 2020; Van de Cruys et al., 2020). This is for at least two reasons. First, the phenotype-specific prior expectations that an organism acts to fulfill are usually inconsistent with staying in a dark room (e.g., because organisms “expect” [mathematically speaking] to perform homeostasis-preserving actions, such as seeking out water when dehydrated). As we have seen, this follows directly from the core formalism that underwrites active inference. That is, contrary to the thrust of sporadic criticisms, the \(p\left( {o_{\tau } } \right)\) term associated with an organism’s preferences is not an ad hoc addition to save dAI from a fatal objection, but a necessary consequence when deriving the form of the equation for expected free energy—given that an organism must continue to make some observations with a higher probability than others in order to remain alive.

Second, as we have seen above, the expected free energy also entails a type of information-seeking drive that would motivate an agent to turn on a light in a dark room simply because it minimizes ambiguity (e.g., enabling the agent to ‘see’ what is in the room). Minimizing \(G\) in this way also drives the agent to seek out observations that will reduce uncertainty about the best way to (subsequently) bring about phenotype-congruent (preferred) outcomes. Here, information gain is equal to this reduction in uncertainty (i.e., expected surprise or prediction error); so, an agent seeking to minimize expected free energy will first sample informative (salient) observations. Another way to put this is that, under dAI, an agent doesn’t simply seek to minimize prediction-error with respect to its current sensory input; it instead seeks to minimize prediction-error with respect to its global beliefs about the environment. This actually entails seeking out the observations expected to generate prediction errors, such that uncertainty is minimized for the generative model as a whole.

Another key aspect of this type of global prediction-error minimization process is that it pertains not only to beliefs about states in the present, but also to beliefs about the past and the future. For example, turning on a light updates beliefs not only about the room an individual is currently in, but also about: (1) the room they were in prior to turning on the light, and (2) the room they expect to be in in the future if they choose to sit still versus walk out the door. This is fundamental in the sense that dAI brings a new set of unknowns to the table; namely, the agent needs to infer the plans (policies) that are being enacted over time. This means there is a distinction between minimizing prediction error in the moment and forming beliefs about what to do based upon minimizing the prediction error expected following an action (i.e., where minimizing expected prediction error is equivalent to minimizing uncertainty). Thus, in addition to the drive to generate preferred outcomes, it is this sensitivity to epistemic affordances that dissolves concerns such as the dark room problem. As noted above, the first thing you do when entering a dark room is to turn on a light to resolve ambiguity and maximize information gain.

This important example highlights the care that needs to be taken when using words like ‘surprise’. From the point of view of vanilla free energy minimization (i.e., mAI), surprise was read as surprisal (i.e., prediction errors in the moment). In contrast, the epistemic affordance of information gain in the future is more closely related to the folk psychological notion of ‘salience’ (e.g., hearing a sound and feeling motivated to look in the direction of the sound to learn what has occurred). This imperative to minimize expected free energy—via minimization of uncertainty (i.e., expected surprise) through actively seeking informative observations—therefore aligns well with our folk-psychological concept of curiosity.

It is also worth noting that dAI makes an additional distinction between the aforementioned drive to minimize uncertainty about states and the further drive to learn the parameters of a generative model—such as learning the probability of observations given states (e.g., visiting a new place to see what it’s like to be there). This has been referred to as ‘intrinsic motivation’ (Barto et al., 2013; Oudeyer & Kaplan, 2007; Schmidhuber, 2010) as well as the drive to seek ‘novelty’ (Schwartenbeck et al., 2019), but more generally involves a drive to learn what will be observed when visiting an unfamiliar state. Thus, in addition to desires, dAI captures familiar folk-psychological experiences associated with the drive to both know about one’s current state and learn what will happen when choosing to move to other states (among other parameters in a generative model).

A further aspect of the dAI formalism worth considering here is the prior over policies, \(E\left( \pi \right)\). As touched on above, when an agent repeatedly chooses a policy, this term increases the probability that the agent will continue to select that policy in the future. At the level of the formalism, this corresponds to an agent coming to “expect” that it will choose a policy simply because it has chosen that policy many times in the past. This can be thought of as a type of habitization process, but it doesn’t have any direct connection to preferred outcomes. This is because \(E\left( \pi \right)\) is not informed by any other beliefs in the agent’s model. The resulting effect is that, if a policy has been chosen a sufficient number of times in the past, future behavior can become insensitive to expected action outcomes (i.e., similar to outcome desensitization effects observed empirically; e.g., see Dickinson, 1985; Graybiel, 2008). However, in many cases this type of habit formation will be indirectly linked to preferred outcomes. For example, under the assumption that the agent repeatedly chose a policy because it was successful at maximizing reward, \(E\left( \pi \right)\) would come to promote selection of this reward-maximizing policy directly (and without the need for other model-based processes, which can have advantages in terms of minimizing computational/metabolic costs). As in outcome desensitization, this only becomes problematic when contingencies in the environment change over time. This highlights an important distinction between this type of habit formation and other mechanisms promoting habit-like patterns of behavior in dAI. For example, actions can also become resistant to change after repeated experience because agents build up highly confident beliefs about the reward probabilities under each action; e.g., within \(p(o_{\tau } |s_{\tau } )\). If contingencies change, it can take a very large number of trials for agents to unlearn such beliefs. However, unlike with the influence of \(E\left( \pi \right)\), a direct sensitivity to preferred outcomes still remains present in this case (although diminished).

The main point here, however, is that building up a prior over policies in \(E\left( \pi \right)\) offers yet another reason that dAI agents will not remain in dark rooms. This is because, before building up such priors, policies will be chosen to gain information and/or achieve preferred outcomes. After repeated policy selection, these priors will then simply solidify those patterns of behavior. At the level of the formalism, this involves a Bayesian belief about which policies will be chosen (and therefore does not have a desire-like world-to-mind direction of fit). However, at the psychological level, \(E\left( \pi \right)\) appears to correspond well to cases where individuals feel compulsive motivations to act in particular ways, despite contrary beliefs about expected action outcomes.

To summarize, dark room-style problems, which arise from the apparent lack of desires in dAI, simply do not occur when behavior is explicitly simulated using active inference (as we also show in quantitative simulations below in Fig. 1). This is because decision-making in dAI is motivated by both an intrinsic curiosity (information-seeking drive) and a drive to solicit a priori expected outcomes (prior preferences) under the generative model (i.e., which play a functional role analogous to desired outcomes). Learning priors over policies can also result in solidification of this information-/reward-seeking behavior. We elaborate on these points below.

Aside from the dark room issue, a second concern worth briefly highlighting here is that dAI can appear to preclude pessimistic expectations. To return to our example above about skydiving with a broken parachute: smashing into the ground is highly expected but certainly not consistent with phenotype-congruent expectations. In other words, we can expect one thing but prefer another. This concern can be resolved by highlighting two different types of expectations in dAI (i.e., prior expectations over states vs. over outcomes), which we demonstrate more formally below.

Nevertheless, the relationship between dAI’s sub-personal (algorithmic and implementation) level of description and its appropriate conceptualization at a folk-psychological level of description has yet to be fully elaborated. In the following, we show a plausible mapping between the dAI formalism and levels of description that appeal to BDI-type ontologies. We demonstrate how apparent conflicts between folk psychology and dAI largely disappear when highlighting the way specific Bayesian beliefs at the mathematical level of description can be straightforwardly identified with desires at a psychological level of description (i.e., that these Bayesian beliefs play the same functional role as representations of desired outcomes). These results are broadly consistent with arguments within a recent paper by Clark (2019). This recent paper considers a number of concerns about the presence of desires/motivations in the broader predictive processing paradigm and also shows how these can be accommodated by various types of interconnected prior beliefs. However, there are some important differences between our argument and these previous considerations. First, we map folk-psychological constructs to specific elements of the formalism employed in current implementations of active inference, as opposed to the broader theoretical constructs within the predictive processing paradigm (see Footnote 4). Second, while this prior paper defended the idea that the single construct of a prior belief plays the role of both beliefs and desires, we highlight how distinct elements in the dAI formalism can be mapped to beliefs and desires. We also motivate a squarely non-eliminativist position with respect to such constructs and suggest that the set of theoretical primitives out of which active inference models are built is sufficient, not only to recover the categories of folk psychology, but also to potentially nuance them with more fine-grained distinctions.

7 Desired outcomes in active inference

In the previous sections, we have highlighted quantities in the formalism that are clear candidates for beliefs and intentions. Intentions map straightforwardly to policies with the lowest values for \(G\left( \pi \right)\). Almost all other variables in a dAI model are candidates for traditional psychological beliefs if used to model the right kinds of high-level cognitive processes. For example, depending on certain modelling choices: “do I believe I’m in the living room or the kitchen?” could correspond to \(p\left( {s_{\tau } } \right)\); “do I believe I will fall asleep if I stay in the living room?” could correspond to \(p\left( {s_{\tau + 1} |s_{\tau } , \pi } \right)\); “do I believe I will feel my heart beat faster if I’m afraid?” could correspond to \(p(o_{\tau } |s_{\tau } )\); and “do I believe I will feel full if I eat another bite?” could correspond to \(q(o_{\tau } |\pi )\). However, as noted above, this will only be the case when modelling these types of high-level processes. The same exact abstract quantities could apply to fully unconscious processes involving things like prior expectations about edge orientations in visual cortex, expected changes in blood pressure given a change in parasympathetic tone, and so forth.

To complete the mapping to the BDI structure of folk psychology now requires incorporating desires. Here, we argue that desired outcomes map in a fully isomorphic manner to the prior preferences, \(p\left( {o_{\tau } } \right)\), incorporated within the expected free energy; that is, the set of Bayesian beliefs encoded in \(p\left( {o_{\tau } } \right)\) plays a functional role identical to representations of desired outcomes at a psychological level, whenever applied to model the relevant (presumably high) levels and types of cognitive processes. At the high levels in a hierarchical model associated with folk psychology, desired outcomes are simply the posterior beliefs at the next level below (e.g., desiring to observe oneself in the state of being wealthy). In contrast, at the lowest levels of the neural hierarchy, where observations correspond to sensory data, it is expected that \(p\left( {o_{\tau } } \right)\) fixes homeostatic ranges of variables within the body to maintain survival (Pezzulo et al., 2015, 2018; Smith et al., 2017; Stephan et al., 2016; Unal et al., 2021). In this case, the brain has an unconscious drive to keep blood glucose levels, blood osmolality levels, heart rate, and other such variables within ranges consistent with long-term survival. These drives are not ‘desires’ in the conscious, folk-psychological sense, but they are expected to ground the rest of the hierarchical system to (learn to) desire and seek out other things precisely because they ultimately maintain observations of visceral states within these “expected” homeostatic ranges. For example, I might learn to desire going to a specific restaurant because being in the “at that restaurant” state is expected to generate the observation of food, and eating food is expected (lower in the hierarchy) to generate the observation of increased blood glucose levels, and so forth (Tschantz et al., 2021). 
Or, if a cue is observed that predicts an impending drop in blood glucose levels, the brain may take the “action” of temporarily increasing blood glucose levels (changing the setpoint in a visceral reflex arc) to counter that impending drop (Stephan et al., 2016; Unal et al., 2021). This idea of (unconscious) mechanisms promoting the selection of either skeletomotor or visceromotor actions now so as to prevent anticipated future deviations from homeostatic ranges is referred to as allostasis (for specific generative models and simulations, see Stephan et al., 2016; Tschantz et al., 2021).

When a dAI model is used to simulate conscious, goal-directed choice (e.g., choosing what restaurant to go to, but not choosing whether to increase heart rate), our argument is that \(p\left( {o_{\tau } } \right)\) will always (and must) be able to successfully fill the functional role of representing desired outcomes within the BDI framework. That is, any case of desire-driven behavior will be modellable using the right specification of \(p\left( {o_{\tau } } \right)\). For example, if the highest value in an outcome space was specified for observing “tasting ice cream” in \(p\left( {o_{\tau } } \right)\), and the policy space included “don’t move” or “walk to the ice cream truck and buy ice cream”, a dAI agent will infer that walking to the ice cream truck and buying ice cream is the policy with the lowest expected free energy; that is, it will form the intention to go buy ice cream. In addition, when considering the range of cases involving goal-directed choice, we have been unsuccessful at identifying examples where \(p\left( {o_{\tau } } \right)\) would play a role inconsistent with representing desired outcomes. As such, if the semantics and functional role of desired outcomes are never inconsistent with the role of \(p\left( {o_{\tau } } \right)\), and the role of \(p\left( {o_{\tau } } \right)\) is always consistent with the semantics and functional role of desires, then active inference does effectively contain desired outcomes.
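
The ice cream example can be worked through numerically as a minimal sketch. It assumes that each outcome is observed unambiguously, so that only the risk term of the expected free energy matters, and it uses hypothetical values for the anticipated outcomes under each policy and for the preference strengths. Preferences are specified as unnormalized log values and passed through a softmax, which is a modelling convenience rather than something stated in the text.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax."""
    x = np.asarray(x, float)
    e = np.exp(x - x.max())
    return e / e.sum()

def risk(q_o, prior_pref):
    """D_KL[q(o|pi) || p(o)], treating 0 ln 0 as 0."""
    q_o, prior_pref = np.asarray(q_o, float), np.asarray(prior_pref, float)
    mask = q_o > 0
    return float(np.sum(q_o[mask] * (np.log(q_o[mask]) - np.log(prior_pref[mask]))))

outcomes = ["no ice cream", "tasting ice cream"]
policies = ["don't move", "walk to the ice cream truck and buy ice cream"]

# q(o | pi): hypothetical beliefs about what each policy leads to (rows = policies)
q_o_given_pi = np.array([[0.99, 0.01],
                         [0.05, 0.95]])

def intention(pref_logits):
    """Return the policy with the lowest G, and G for every policy."""
    prior_pref = softmax(pref_logits)            # p(o): the desired outcomes
    G = np.array([risk(q, prior_pref) for q in q_o_given_pi])
    return policies[int(np.argmin(G))], G

chosen_weak, G_weak = intention([0.0, 0.5])      # mild desire for ice cream
chosen_strong, G_strong = intention([0.0, 4.0])  # strong desire for ice cream

print("weak desire  :", chosen_weak, np.round(G_weak, 3))
print("strong desire:", chosen_strong, np.round(G_strong, 3))
```

In both cases the agent forms the intention to buy ice cream, and the gap in \(G\) between the two policies widens as the preference becomes more precise. This is one way to read \(p\left( {o_{\tau } } \right)\) as encoding the strength of a desire as well as its object.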

This is consistent with recent empirical work that has used dAI to model behavior in reinforcement learning and reward-seeking tasks (Markovic et al., 2021; Sajid et al., 2021; Smith et al., 2020, 2021a, 2021b), and with other work demonstrating that dAI meets criteria for Bellman optimality (i.e., optimal reward-seeking within reinforcement learning) in certain limiting cases (Da Costa et al., 2020b). In these cases, \(p\left( {o_{\tau } } \right)\) is used to encode the strength of the relative preferences for winning and losing money or points (e.g., subjective reward value), being exposed to positive or negative emotional stimuli, and so forth. It is worth highlighting, however, that unlike reinforcement learning agents, the goal of dAI agents is not to maximize cumulative reward per se. Instead, dAI agents seek to reach (and maintain) a target distribution (where this distribution can be interpreted as rewarding). Indeed, recent work building on dAI has shown how both perception and action can be cast as jointly minimizing the divergence from this type of target distribution with distinct directions of fit, and has illustrated how both information-seeking and reward-seeking behavior emerge from this objective (Hafner et al., 2020). This underlies the close link with maintaining homeostasis discussed above, and the selection of allostatic policies that can prevent predicted future deviations from homeostasis.

This also has some theoretical overlap with current models of motivated action in experimental psychology. For example, incentive salience models posit that motivation is directed at desired states/incentives—enhanced by the current (abstract) distance from those states and modulated by cues that signify the availability of actions to reach those states (e.g., being hungry and perceiving cues signifying the availability of food will each magnify drives to eat; Berridge, 2018). Homeostatic reinforcement learning models have also posited links between reward magnitudes and the distance with which one travels toward homeostatic states (Keramati & Gutkin, 2014). In reinforcement learning tasks such as those mentioned above, drives toward homeostasis-based target distributions can then be associated with cues (e.g., money, social acceptance) that predict the ability to reach those distributions. During learning within such tasks, individuals can then come to look as though they prefer other observations because they learn (within \(p(o_{\tau } |s_{\tau } )\)) that those observations are generated by states that also generate preferred outcomes (e.g., looking as though they have a desire to hear a tone due to learning that a tone is generated by a state that also generates a reward).

Another important point to highlight is that because \(p\left( {o_{\tau } } \right)\) also symmetrically encodes undesired or aversive outcomes, this motivates intentions to avoid those outcomes and can implement avoidance learning within \(p(o_{\tau } |s_{\tau } )\) in equivalent fashion (e.g., coming to look as though one dislikes seeing a light due to the expectation that it is generated by a state that also generates a painful shock). So dAI can successfully capture both ends of this conative axis.

However, it is important to clarify that the associative reward learning within \(p(o_{\tau } |s_{\tau } )\) described above (akin to learning a reward function in which agents acquire a mapping from states to rewards/punishments) does not involve changing the shape of the prior preference distribution \(p\left( {o_{\tau } } \right)\) itself. It instead involves learning which states/actions will reliably generate preferred observations. Learning prior preferences themselves would instead entail that an agent comes to prefer outcomes more and more each time they are observed (i.e., independent of their relationship to other preferred outcomes). For example, simply hearing an initially neutral tone several times in the absence of reward would, under this mechanism, lead that tone to be more and more preferred (i.e., because its prior probability would continually increase). This could be one way of accounting for “mere exposure” effects (Hansen & Wänke, 2009; Monahan et al., 2000), in which brief (and even subliminal) presentation of neutral stimuli can increase the preference for those stimuli. However, individuals show a contrasting preference for novel stimuli in other cases (e.g., preferences for familiar faces but for novel scenes; Liao et al., 2011), and such effects might also be explained through associative learning or epistemic drives (e.g., familiar faces may be associated with safe interactions, whereas novel scenes might carry greater amounts of information). That said, epistemic drives and preference learning may also interact. For example, recent simulation work has modelled the behavior of dAI agents within novel environments that do not contain rewards (Sajid et al., 2021). In such cases, agents actively explore the environment until uncertainty is resolved, and then gravitate toward the states that were visited most frequently (i.e., which were most “familiar” and generated the outcomes most often observed during epistemic foraging). 
Related simulation work has also explored how organisms learn action-oriented models of their ecological niche, where these models need not be fully accurate—but simply contain the generative structure and prior preferences most useful for guiding adaptive behavior within that niche (Tschantz et al., 2020).
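
As a minimal sketch of the preference-learning mechanism described above, the following example assumes that \(p\left( {o_{\tau } } \right)\) is parameterized by concentration counts (in the style of a Dirichlet prior) that are incremented each time an outcome is observed. The function names, the learning rate, and the two-outcome setup (“tone” vs. “silence”) are illustrative assumptions rather than details taken from the cited simulations.

```python
def normalize(counts):
    """Convert concentration counts into a probability distribution."""
    total = sum(counts)
    return [c / total for c in counts]


def learn_preferences(counts, observations, learning_rate=1.0):
    """Increment the count of each observed outcome (assumed update rule)."""
    updated = list(counts)
    for outcome_index in observations:
        updated[outcome_index] += learning_rate
    return updated


# Hypothetical outcomes: index 0 = "tone", index 1 = "silence".
initial_counts = [1.0, 1.0]   # flat prior preferences before any exposure
exposures = [0] * 8           # the tone is heard 8 times, never paired with reward
exposed_counts = learn_preferences(initial_counts, exposures)

p_before = normalize(initial_counts)
p_after = normalize(exposed_counts)

print("p(o) before exposure:", p_before)
print("p(o) after exposure: ", p_after)
```

Under these assumptions, the prior probability of the tone rises from 0.5 to 0.9 through exposure alone, with no reference to its relationship to other preferred outcomes. This is the pattern that a preference-learning account of “mere exposure” effects would require.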

Learning \(p\left( {o_{\tau } } \right)\) could offer an alternative to modelling associative learning in some cases, but this would also make distinct predictions in others. For example, unlike learning \(p(o_{\tau } |s_{\tau } )\), learning \(p\left( {o_{\tau } } \right)\) would also entail that strongly non-preferred outcomes (e.g., getting stabbed in the leg) would become more and more preferred if the agent were forced to continually endure them—to the point that the agent would eventually seek them out voluntarily. It is unclear how plausible these predictions are in many cases, and they would depend on a number of assumptions. As one example, assumptions would need to be made about the initial precision of \(p\left( {o_{\tau } } \right)\) prior to learning, where high precision could significantly slow preference changes (e.g., perhaps negative preferences for biological imperatives such as tissue damage are sufficiently precise that they effectively prevent preference learning). That said, there are also cases where individuals seek out pain or choose to remain in long-term maladaptive (e.g., abusive) situations. However, these cases are complex, and explanations of such behaviors have been proposed based on associative reinforcement learning, uncertainty avoidance, and various types of interpersonal dependence that need not appeal to familiarity effects (Crapolicchio et al., 2021; Lane et al., 2018; Nederkoorn et al., 2016; Reitz et al., 2015). It will be important to test the competing predictions of associative learning and preference learning through model comparison in future empirical research. 
A central point, however, is that—while the possibility of preference learning remains consistent with the idea that \(p\left( {o_{\tau } } \right)\) always represents desired outcomes in the BDI framework (e.g., the agent just comes to desire getting stabbed in the leg)—the proposed mechanisms of preference learning in dAI could come into tension with folk-psychological intuitions and allow empirical research to find evidence for one versus the other (that is, if the role of \(p\left( {o_{\tau } } \right)\) in the dAI formalism is taken to be more than a convenient mathematical tool for specifying reward).
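
The dependence on initial precision can be illustrated with a small count-based sketch. Here we assume (hypothetically) that prior preferences are stored as concentration counts, so that larger initial counts correspond to a more precise prior that changes more slowly. The outcome labels and all numerical values are illustrative assumptions.

```python
def probability_after_exposure(initial_counts, outcome_index, n_exposures):
    """Probability assigned to an outcome after repeated forced exposure,
    assuming each exposure adds one count to that outcome."""
    counts = list(initial_counts)
    counts[outcome_index] += n_exposures
    return counts[outcome_index] / sum(counts)


# Hypothetical outcomes: index 0 = "pain", index 1 = "no pain".
# Both priors start at p(pain) = 0.1 but differ in precision (total counts).
imprecise_prior = [1.0, 9.0]
precise_prior = [100.0, 900.0]

p_imprecise = probability_after_exposure(imprecise_prior, 0, 20)
p_precise = probability_after_exposure(precise_prior, 0, 20)

print("imprecise prior, p(pain) after 20 exposures:", round(p_imprecise, 3))
print("precise prior,   p(pain) after 20 exposures:", round(p_precise, 3))
```

In this sketch, the imprecise prior ends up assigning the initially non-preferred outcome a probability of 0.7, whereas the precise prior remains close to its starting value of 0.1. This is one way in which sufficiently precise negative preferences could effectively prevent preference learning.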

Another learning-related point worth briefly returning to involves habit acquisition, which is also widely studied empirically. As touched upon above, the priors over policies encoding habits in dAI do not have a conative (world-to-mind) direction of fit and can appear purely epistemic from the perspective of the formalism (see Footnote 5). However, when habits are acquired through repeated selection of policies that maximize desired outcomes, they will indirectly drive decision-making toward continuing to achieve those outcomes (i.e., if environmental contingencies are stable). Thus, one could see the implicit logic underlying their functional role as indirectly serving conative aims (and note that this also applies to solidifying effective information-seeking behavior). These habits also compete with explicit intentions (i.e., the influence of expected free energy) for control of action selection in dAI. While this doesn’t correspond well to desires, it does appear capable of capturing other types of felt motivational force. Namely, this competition has a plausible isomorphism with cases where one feels a strong urge to act in one way, despite an explicit belief that adopting a different course of action would be more effective. This dynamic also has a resemblance to theories in reinforcement learning that posit an uncertainty-based competition between model-based and model-free control, which have also garnered empirical support (Daw et al., 2005, 2011; Dolan & Dayan, 2013). Thus, to the extent that this type of motivational force is considered broadly conative in nature, it may capture another relevant (and psychologically intuitive) aspect of the phenomenology of decision-making.
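
The competition between habits and explicit intentions can be sketched numerically. The example below assumes the commonly used form in which the posterior over policies is proportional to a habit prior \(E(\pi )\) multiplied by \(\exp ( - \gamma G(\pi ))\), where \(G(\pi )\) is the expected free energy and \(\gamma\) is its precision. The variational free energy term is omitted for simplicity, and the function names and numerical values are illustrative assumptions.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def policy_posterior(habit_prior, expected_free_energy, gamma=1.0):
    """q(pi) proportional to E(pi) * exp(-gamma * G(pi))."""
    scores = [math.log(e) - gamma * g
              for e, g in zip(habit_prior, expected_free_energy)]
    return softmax(scores)


# Two hypothetical policies. Policy 1 has the lower expected free energy,
# i.e., it is the one the agent explicitly expects to be more effective.
G = [2.0, 1.0]

without_habit = policy_posterior([0.5, 0.5], G)
with_habit = policy_posterior([0.9, 0.1], G)  # strong habit favoring policy 0

print("no habit:     ", [round(p, 3) for p in without_habit])
print("strong habit: ", [round(p, 3) for p in with_habit])
```

With a flat habit prior, the policy with lower expected free energy dominates. With a strong habit prior, the habitual policy becomes the more probable one even though its expected free energy is higher, which parallels the felt urge to act against one's explicit beliefs about what would be most effective.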

As stressed above, however, this does not plausibly map to the motivational force of desires themselves, since it does not have the correct direction of fit. In contrast, there are other elements of the formalism that do correspond well to the motivational force or felt urgency of desires. We turn to these next.

8 The motivational force of desires

As opposed to desired outcomes, one might also wonder about a different reading of “desire”. This reading is not about the “thing that is desired”, but instead about the transient presence of the motivational force to approach a thing that we desire (or to avoid something that we find aversive). We now illustrate how this aspect of conative states, which is arguably also a part of folk psychology, can be captured within dAI.

The key point here is that \(p\left( {o_{\tau } } \right)\) does not only encode which outcomes are more desired than others. It also encodes how strongly each is desired. This corresponds to the precision of the distribution over outcomes. For example, assume there are two observations, “ice cream” and “no ice cream”. Now consider the following possible distributions:

In this case, the first and second distributions both specify preferences for ice cream (left entry), but the second distribution entails a stronger motivational force than the first.
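
To make this comparison concrete, the following sketch uses two hypothetical distributions over [“ice cream”, “no ice cream”]. The numerical values are assumptions chosen for illustration, not values taken from the simulations reported here. It computes two simple summaries of each distribution: the log ratio of preferences (larger values indicate a stronger relative preference) and the entropy (lower values indicate higher precision).

```python
import math


def log_preference_ratio(p):
    """ln p(ice cream) - ln p(no ice cream); larger means stronger preference."""
    return math.log(p[0]) - math.log(p[1])


def entropy(p):
    """Shannon entropy in nats; lower entropy means a more precise distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


# Hypothetical prior preference distributions (illustrative values only).
weak_desire = [0.6, 0.4]
strong_desire = [0.9, 0.1]

for label, p in [("weak", weak_desire), ("strong", strong_desire)]:
    print(label,
          "log ratio:", round(log_preference_ratio(p), 3),
          "entropy:", round(entropy(p), 3))
```

Both hypothetical distributions favor ice cream, but the second has a larger log preference ratio and lower entropy, which is the sense in which it is more precise and would carry greater motivational force.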

To see how these can have distinct, motivation-like influences on forming intentions to achieve desired outcomes, we show some simple example simulations in Fig. 1 [see the “Appendix” and Supplementary Code for technical details regarding the generative model, and see Smith et al. (2022) for a detailed explanation of how these dAI simulations are implemented; Supplementary Code can be found at: https://github.com/rssmith33/Active-Inference-and-Folk-Psychology]. To illustrate this, however, we will add an additional element to the ice cream example. Namely, we will simulate a case where there is ice cream in the fridge, but where the kitchen is currently dark and so the agent doesn’t know whether the fridge is to the left or to the right. In this case, the agent can either first flip a light switch to see where the fridge is, or it can just guess and try to “feel its way” to the left or the right. Crucially, if the agent is hungry for ice cream, then the longer it takes to find the ice cream, the more aversive the hunger becomes. So, getting ice cream sooner is more preferred than getting it later (if hungry).

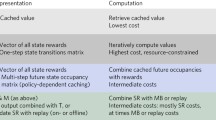

Fig. 1 Simulation of an active inference agent deciding whether to turn on a light in a dark room when motivated versus not motivated to find the fridge to eat some ice cream. In the 3 “action” panels, cyan dots indicate chosen actions and darker colors indicate higher probability (confidence) in the choice of one action over others. Bottom panels show observed outcomes (cyan dots) and prior preference distributions \(p\left( {o_{\tau } } \right)\), where darker colors indicate stronger desires. The right panel shows the value (cyan) and rate of change in value (black) of the precision estimate for expected free energy (γ) previously linked to affective states (Hesp et al., 2021). In the absence of a desire (flat prior preference distribution; left), or under a weak (less urgent) desire for ice cream, the agent immediately chooses to turn on the light. Under a weak desire, the agent then confidently chooses to approach the fridge to get the ice cream. Unlike when desires are present, no change in γ occurs in these simulations when desires are absent. Under a strong desire, getting ice cream is urgent and so the agent takes a guess about where the fridge is without first flipping the light switch (despite being unconfident about whether to go left or right). See main text for further discussion. We do not describe the generative model or simulations in more detail here, but we provide additional information in the “Appendix” and Supplementary Code to reproduce them. Also see Smith et al. (2022) for details about how these simulations are implemented using the dAI formalism.

In the “No Desire” panel of the figure, the agent is not hungry; that is, \(p\left( {o_{\tau } } \right)\) is a flat distribution (bottom box). In this case, the agent still confidently chooses to flip the switch (minimizing uncertainty about the location of the fridge), but then has no specific drive for what to do next (grey distribution over actions in the second column of the “actions” box), arbitrarily choosing to go right (cyan dot). This illustrates how an agent who minimizes expected free energy will act to leave a dark room simply to maximize information gain, even with no desired outcomes. In the “Weak Desire” panel, we make the agent mildly hungry, where the black and white colors in the bottom box indicate a higher value for ice cream and a lower value for no ice cream in \(p\left( {o_{\tau } } \right)\), respectively. In this case, it chooses to flip the switch and then confidently goes to get ice cream in the fridge on the left. Here, turning on the light is strategic in helping the agent achieve its desires. In the “Strong Desire” panel, we make the agent very hungry (although not clear in the figure, a greater difference in values in \(p\left( {o_{\tau } } \right)\) has been set for observing ice cream vs. no ice cream). Because getting the ice cream is urgent, the agent becomes risk-seeking and immediately goes left without taking time to turn on the light. In this example simulation, the agent gets lucky and finds the ice cream, but it would be expected to choose incorrectly 50% of the time. This illustrates how the precision of \(p\left( {o_{\tau } } \right)\) represents a plausible candidate for the motivational aspect of desire. (Although we have not shown it here, one can also use a baseline preference level for neutral observations to distinguish strongly preferred observations from strongly non-preferred observations, where an agent might instead become risk-averse if it strongly fears not getting ice cream.)

It is important to highlight that this proposed mapping from the precision of \(p\left( {o_{\tau } } \right)\) to magnitude of desire is not only of theoretical interest. In practice, several empirical studies have used model-fitting to identify the value of this precision in individual participants. For example, two studies in psychiatric samples fit this precision within the context of an approach-avoidance conflict task to identify differences in motivations to avoid exposure to unpleasant stimuli; and to identify continuous relationships between this precision and self-reported anxiety and decision uncertainty (Smith et al., 2021a, 2021b). Two other studies in substance users identified individual differences in this precision value while participants performed a three-armed bandit task designed to examine the balance of information- versus reward-seeking behavior (Smith et al., 2020, 2021c). A fifth study quantified this precision while examining the neural correlates of uncertainty within a risk-seeking task (Schwartenbeck et al., 2015). Finally, a sixth study evaluated this precision to explain differences in patterns of selective attention (Mirza et al., 2018). These examples illustrate how, when understood in the context of the current discussion, the precision of \(p\left( {o_{\tau } } \right)\) can provide a precise quantification of differences in the motivational force or felt urgency to achieve a desired outcome.

It is worth noting that one can also see this motivating force as corresponding to the magnitude of the KL divergence, \(D_{KL} [q(o_{\tau } |\pi )\,||\,p\left( {o_{\tau } } \right)]\), within the expected free energy; i.e., the “risk” term. This is because stronger preference values over rewarding outcomes in \(p\left( {o_{\tau } } \right)\) increase this KL divergence and lead the agent to seek reward over information gain. Thus, the precision of prior preferences or the magnitude of this KL divergence can equivalently be identified as playing the functional role of desire-based motivation.
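
The relationship between preference precision and the risk term can be checked with a short calculation. The sketch below computes the KL divergence between a fixed predicted outcome distribution \(q(o_{\tau } |\pi )\) and two hypothetical prior preference distributions. The distributions are illustrative assumptions (an uncertain policy that yields the preferred outcome half of the time, evaluated under weak and strong preferences).

```python
import math


def kl_divergence(q, p):
    """D_KL[q || p] in nats for discrete distributions over the same outcomes."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


# Hypothetical predicted outcomes under some policy: [ice cream, no ice cream].
predicted_outcomes = [0.5, 0.5]

# Hypothetical prior preferences of differing precision.
weak_preferences = [0.6, 0.4]
strong_preferences = [0.9, 0.1]

risk_weak = kl_divergence(predicted_outcomes, weak_preferences)
risk_strong = kl_divergence(predicted_outcomes, strong_preferences)

print("risk under weak preferences:  ", round(risk_weak, 4))
print("risk under strong preferences:", round(risk_strong, 4))
```

For the same predicted outcomes, the risk is roughly 0.02 nats under the weak preferences and roughly 0.51 nats under the strong preferences. In this simplified setting, more precise preferences therefore give the risk term greater weight relative to any information gain a policy might offer.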