Abstract

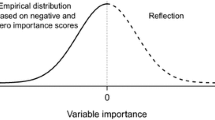

This paper is about variable selection with the random forests algorithm in the presence of correlated predictors. In high-dimensional regression or classification frameworks, variable selection is a difficult task that becomes even more challenging in the presence of highly correlated predictors. First, we provide a theoretical study of the permutation importance measure for an additive regression model. This allows us to describe how the correlation between predictors impacts the permutation importance. Our results motivate the use of the recursive feature elimination (RFE) algorithm for variable selection in this context. This algorithm recursively eliminates the variables using the permutation importance measure as a ranking criterion. Next, various simulation experiments illustrate the efficiency of the RFE algorithm for selecting a small number of variables while keeping a low prediction error. Finally, this selection algorithm is tested on the Landsat Satellite data from the UCI Machine Learning Repository.
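The RFE loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: to stay dependency-free it swaps in an ordinary least-squares fit for the random forest, and it computes permutation importance on the training sample, whereas Breiman's random forests compute it on out-of-bag samples. The helper names (`solve`, `fit_ols`, `permutation_importance`, `rfe`) and the toy data are hypothetical. What the sketch does show is the loop structure: refit on the surviving variables, re-rank them by permutation importance, and drop the least important one.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_ols(X, y):
    """Least squares without intercept (hypothetical stand-in for a random forest)."""
    p = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    beta = solve(XtX, Xty)
    return lambda row: sum(bj * v for bj, v in zip(beta, row))


def mse(model, X, y):
    return sum((yi - model(row)) ** 2 for row, yi in zip(X, y)) / len(y)


def permutation_importance(model, X, y, j, rng):
    """Increase of the mean squared error when column j is randomly permuted."""
    col = [row[j] for row in X]
    rng.shuffle(col)
    X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
    return mse(model, X_perm, y) - mse(model, X, y)


def rfe(X, y, n_keep, rng):
    """Backward elimination: refit, re-rank, remove the least important variable."""
    active = list(range(len(X[0])))
    while len(active) > n_keep:
        X_active = [[row[j] for j in active] for row in X]
        model = fit_ols(X_active, y)
        imp = [permutation_importance(model, X_active, y, k, rng)
               for k in range(len(active))]
        active.pop(min(range(len(active)), key=imp.__getitem__))
    return active


# Toy data: only columns 0 and 2 enter the response.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(400)]
y = [2 * r[0] - 1.5 * r[2] + rng.gauss(0, 0.5) for r in X]
selected = rfe(X, y, n_keep=2, rng=rng)
print(selected)
```

Importances are recomputed after every elimination rather than once at the start. That design choice matters when predictors are correlated, because removing a variable changes the importances of the variables that remain.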

References

Ambroise, C., McLachlan, G.J.: Selection bias in gene extraction on the basis of microarray gene-expression data. Proc. Natl. Acad. Sci. 99, 6562–6566 (2002)

Archer, K.J., Kimes, R.V.: Empirical characterization of random forest variable importance measures. Comput. Stat. Data Anal. 52, 2249–2260 (2008)

Auret, L., Aldrich, C.: Empirical comparison of tree ensemble variable importance measures. Chemometr. Intell. Lab. Syst. 105, 157–170 (2011)

Bi, J., Bennett, K.P., Embrechts, M., Breneman, C.M., Song, M.: Dimensionality reduction via sparse support vector machines. J. Mach. Learn. Res. 3, 1229–1243 (2003)

Biau, G., Devroye, L., Lugosi, G.: Consistency of random forests and other averaging classifiers. J. Mach. Learn. Res. 9, 2015–2033 (2008)

Blum, A.L., Langley, P.: Selection of relevant features and examples in machine learning. Artif. Intell. 97, 245–271 (1997)

Breiman, L.: Bagging predictors. Mach. Learn. 24, 123–140 (1996)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Breiman, L., Friedman, J.H., Olshen, R.A., Stone, C.J.: Classification and Regression Trees. Wadsworth Advanced Books and Software, Pacific Grove (1984)

Bühlmann, P., Rütimann, P., van de Geer, S., Zhang, C.-H.: Correlated variables in regression: clustering and sparse estimation. J. Stat. Plan. Inference 143, 1835–1858 (2013)

Díaz-Uriarte, R., Alvarez de Andrés, S.: Gene selection and classification of microarray data using random forest. BMC Bioinform. 7, 3 (2006)

Genuer, R., Poggi, J.-M., Tuleau-Malot, C.: Variable selection using random forests. Pattern Recogn. Lett. 31, 2225–2236 (2010)

Grömping, U.: Variable importance assessment in regression: linear regression versus random forest. Am. Stat. 63, 308–319 (2009)

Guyon, I., Elisseeff, A.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003)

Guyon, I., Weston, J., Barnhill, S., Vapnik, V.: Gene selection for cancer classification using support vector machines. Mach. Learn. 46, 389–422 (2002)

Hapfelmeier, A., Ulm, K.: A new variable selection approach using random forests. Comput. Stat. Data Anal. 60, 50–69 (2013)

Haury, A.-C., Gestraud, P., Vert, J.-P.: The influence of feature selection methods on accuracy, stability and interpretability of molecular signatures. PLoS One 6, 1–12 (2011)

Ishwaran, H.: Variable importance in binary regression trees and forests. Electron. J. Stat. 1, 519–537 (2007)

Jiang, H., Deng, Y., Chen, H.-S., Tao, L., Sha, Q., Chen, J., Tsai, C.-J., Zhang, S.: Joint analysis of two microarray gene-expression data sets to select lung adenocarcinoma marker genes. BMC Bioinform. 5, 81 (2004)

Kalousis, A., Prados, J., Hilario, M.: Stability of feature selection algorithms: a study on high-dimensional spaces. Knowl. Inf. Syst. 12, 95–116 (2007)

Kohavi, R., John, G.H.: Wrappers for feature subset selection. Artif. Intell. 97, 273–324 (1997)

Křížek, P., Kittler, J., Hlaváč, V.: Improving stability of feature selection methods. Comput. Anal. Images Patterns 4673, 929–936 (2007)

Lazar, C., Taminau, J., Meganck, S., Steenhoff, D., Coletta, A., Molter, C., de Schaetzen, V., Duque, R., Bersini, H., Nowe, A.: A survey on filter techniques for feature selection in gene expression microarray analysis. IEEE/ACM Trans. Comput. Biol. Bioinform. 9, 1106–1119 (2012)

Louw, N., Steel, S.J.: Variable selection in kernel Fisher discriminant analysis by means of recursive feature elimination. Comput. Stat. Data Anal. 51, 2043–2055 (2006)

Maugis, C., Celeux, G., Martin-Magniette, M.-L.: Variable selection in model-based discriminant analysis. J. Multivar. Anal. 102, 1374–1387 (2011)

Meinshausen, N., Bühlmann, P.: Stability selection. J. R. Stat. Soc. Ser. B 72, 417–473 (2010)

Neville, P.G.: Controversy of variable importance in random forests. J. Unified Stat. Tech. 1, 15–20 (2013)

Nicodemus, K.K.: Letter to the editor: on the stability and ranking of predictors from random forest variable importance measures. Brief. Bioinform. 12, 369–373 (2011)

Nicodemus, K.K., Malley, J.D.: Predictor correlation impacts machine learning algorithms: implications for genomic studies. Bioinformatics 25, 1884–1890 (2009)

Nicodemus, K.K., Malley, J.D., Strobl, C., Ziegler, A.: The behaviour of random forest permutation-based variable importance measures under predictor correlation. BMC Bioinform. 11, 110 (2010)

Rakotomamonjy, A.: Variable selection using SVM based criteria. J. Mach. Learn. Res. 3, 1357–1370 (2003)

Rao, C.R.: Linear Statistical Inference and Its Applications. Wiley Series in Probability and Mathematical Statistics: Probability and Mathematical Statistics. Wiley, Hoboken (1973)

Reunanen, J.: Overfitting in making comparisons between variable selection methods. J. Mach. Learn. Res. 3, 1371–1382 (2003)

Scornet, E., Biau, G., Vert, J.-P.: Consistency of random forests. arXiv:1405.2881 (2014)

Strobl, C., Boulesteix, A.-L., Kneib, T., Augustin, T., Zeileis, A.: Conditional variable importance for random forests. BMC Bioinform. 9, 307 (2008)

Svetnik, V., Liaw, A., Tong, C., Wang, T.: Application of Breiman’s random forest to modeling structure-activity relationships of pharmaceutical molecules. In: Proceedings of the 5th International Workshop on Multiple Classifier Systems, vol. 3077, pp. 334–343 (2004)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 58, 267–288 (1996)

Toloşi, L., Lengauer, T.: Classification with correlated features: unreliability of feature ranking and solutions. Bioinformatics 27, 1986–1994 (2011)

van der Laan, M.J.: Statistical inference for variable importance. Int. J. Biostat. 2, 1–33 (2006)

Zhu, R., Zeng, D., Kosorok, M.R.: Reinforcement learning trees. Technical report, University of North Carolina (2012)

Acknowledgments

The authors would like to thank Gérard Biau for helpful discussions and Cathy Maugis for pointing us to the Landsat Satellite dataset. The authors also thank the two anonymous referees for their many helpful comments and valuable suggestions.

Appendix: Proofs

1.1 Proof of Proposition 1

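The random variable \(X_j^{\prime }\) and the vector \({\mathbf {X}}_{(j)}\) are defined as in Section 2. Since \(Y = f({\mathbf {X}}) + \varepsilon \), expanding the squares gives

\[ I(X_j) = \mathbb {E}\big[ (Y - f({\mathbf {X}}_{(j)}))^2 \big] - \mathbb {E}\big[ (Y - f({\mathbf {X}}))^2 \big] = \mathbb {E}\big[ (f({\mathbf {X}}) - f({\mathbf {X}}_{(j)}))^2 \big] + 2\,\mathbb {E}[ \varepsilon f({\mathbf {X}}) ] - 2\,\mathbb {E}[ \varepsilon f({\mathbf {X}}_{(j)}) ] = \mathbb {E}\big[ (f({\mathbf {X}}) - f({\mathbf {X}}_{(j)}))^2 \big], \]

since \( \mathbb {E}[ \varepsilon f({\mathbf {X}}) ] = \mathbb {E}[ f({\mathbf {X}}) \mathbb {E}[ \varepsilon | {\mathbf {X}} ] ] = 0 \) and \( \mathbb {E}[ \varepsilon f({\mathbf {X}}_{(j)}) ] = \mathbb {E}( \varepsilon ) \mathbb {E}[ f({\mathbf {X}}_{(j)}) ] = 0 \). Since the model is additive, \(f({\mathbf {X}}) - f({\mathbf {X}}_{(j)}) = f_j(X_j) - f_j(X_j^{\prime })\), and we have:

\[ I(X_j) = \mathbb {E}\big[ (f_j(X_j) - f_j(X_j^{\prime }))^2 \big] = 2 \operatorname{Var}[f_j(X_j)], \]

as \(X_j\) and \(X_j^{\prime }\) are independent and identically distributed. For the second statement of the proposition, using the fact that \(f_j(X_j)\) is centered, we have:

\[ \operatorname{Var}[f_j(X_j)] = \mathbb {E}[f_j(X_j)^2] = \mathbb {E}\Big[ f_j(X_j) \Big( Y - \sum _{k \ne j} f_k(X_k) - \varepsilon \Big) \Big] = \operatorname{Cov}(Y, f_j(X_j)) - \sum _{k \ne j} \operatorname{Cov}(f_j(X_j), f_k(X_k)), \]

since \(\mathbb {E}[ f_j(X_j) \varepsilon ] = \mathbb {E}[ f_j(X_j) \mathbb {E}[ \varepsilon | {\mathbf {X}} ] ] = 0\).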
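For an additive model, the permutation importance reduces to \(\mathbb {E}[(f_j(X_j) - f_j(X_j^{\prime }))^2] = 2 \operatorname{Var}[f_j(X_j)]\) because \(X_j\) and \(X_j^{\prime }\) are i.i.d., whatever the correlation between \(X_j\) and the other predictors. The Python sketch below checks this by Monte Carlo. It assumes a hypothetical additive model \(Y = X_1 + (X_2^2 - 1) + \varepsilon \) with standard normal predictors of correlation \(\rho \) and \(\varepsilon \sim N(0, 0.5^2)\) independent of \({\mathbf {X}}\). It replaces \(X_j\) by an independent standard normal draw playing the role of \(X_j^{\prime }\). For this model \(2\operatorname{Var}[X_1] = 2\) and \(2\operatorname{Var}[X_2^2 - 1] = 4\).

```python
import math
import random


def f(x1, x2):
    """Hypothetical additive regression function f1(x1) + f2(x2), both parts centered."""
    return x1 + (x2 * x2 - 1.0)


def mc_permutation_importance(j, rho, n=100_000, seed=1):
    """Monte Carlo estimate of E[(Y - f(X_(j)))^2] - E[(Y - f(X))^2] for j in {0, 1}."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x1 = rng.gauss(0.0, 1.0)
        # X2 is N(0, 1) with correlation rho to X1.
        x2 = rho * x1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        y = f(x1, x2) + rng.gauss(0.0, 0.5)
        # X_j': independent draw from the marginal of X_j (standard normal here).
        xj_prime = rng.gauss(0.0, 1.0)
        x_perm = (xj_prime, x2) if j == 0 else (x1, xj_prime)
        total += (y - f(*x_perm)) ** 2 - (y - f(x1, x2)) ** 2
    return total / n


for rho in (0.0, 0.9):
    # Both estimates should stay close to 2 and 4, independently of rho.
    print(rho, mc_permutation_importance(0, rho), mc_permutation_importance(1, rho))
```

The estimates do not depend on \(\rho \). In this model, correlation leaves the population importance of each additive component unchanged. Correlation enters through the regression function itself, as the Gaussian case below shows.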

1.2 Proof of Proposition 2

This proposition is an application of Proposition 1 for a particular distribution. We only show that \( \alpha = C^{-1} \varvec{\tau }\) in that case.

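Since \(({\mathbf {X}}, Y)\) is a multivariate normal vector, the conditional distribution of Y given \({\mathbf {X}}\) is also normal and the conditional mean \(f({\mathbf {x}}) = \mathbb {E}[Y|{\mathbf {X}}={\mathbf {x}}]\) is a linear function: \(f({\mathbf {x}}) = \sum _{j=1}^p \alpha _j x_j\) (see for instance Rao 1973, p. 522). Writing \(\varepsilon = Y - f({\mathbf {X}})\), which satisfies \(\mathbb {E}[\varepsilon | {\mathbf {X}}] = 0\) and hence \(\operatorname{Cov}(X_j, \varepsilon ) = 0\), we have for any \(j \in \{1, \dots , p \}\):

\[ \tau _j = \operatorname{Cov}(X_j, Y) = \operatorname{Cov}\big(X_j, f({\mathbf {X}}) + \varepsilon \big) = \sum _{k=1}^p \alpha _k \operatorname{Cov}(X_j, X_k) = (C \alpha )_j . \]

The vector \(\alpha \) is thus the solution of the equation \({\varvec{\tau }} = C \alpha \), and the expected result is proven since the covariance matrix C is invertible.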
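The formula \(\alpha = C^{-1} \varvec{\tau }\) can be evaluated directly. The Python sketch below assumes, as in Proposition 1, that the importance of a linear component \(f_j(x) = \alpha _j x\) is \(2\operatorname{Var}[\alpha _j X_j] = 2\alpha _j^2 C_{jj}\). The helper names and the numerical values of C and \(\varvec{\tau }\) are hypothetical. Any sub-vector \(({\mathbf {X}}_S, Y)\) is still Gaussian, so the same formula with C and \(\varvec{\tau }\) restricted to S gives the importances after the other variables have been removed. This is a population analogue of what one RFE step recomputes.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def gaussian_importances(C, tau, subset=None):
    """Return {j: 2 * alpha_j**2 * C[j][j]} with alpha = C_S^{-1} tau_S on the subset S."""
    S = list(range(len(tau))) if subset is None else list(subset)
    C_S = [[C[i][k] for k in S] for i in S]
    tau_S = [tau[i] for i in S]
    alpha = solve(C_S, tau_S)
    return {j: 2.0 * a * a * C[j][j] for j, a in zip(S, alpha)}


# X1 and X2 strongly correlated (0.9), X3 independent; all three share the same tau_j.
# (A valid joint Gaussian law exists, e.g. with Var(Y) = 1.)
C = [[1.0, 0.9, 0.0],
     [0.9, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
tau = [0.5, 0.5, 0.5]
print(gaussian_importances(C, tau))                 # X1, X2: 2*(0.5/1.9)**2 ≈ 0.139; X3: 0.5
print(gaussian_importances(C, tau, subset=[0, 2]))  # after dropping X2, X1 goes back to 0.5
```

All three predictors have the same covariance with Y. Even so, the two correlated ones receive a much smaller importance than the independent one, and this gap disappears once one of them is eliminated.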

1.3 Proof of Proposition 3

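The correlation matrix C is assumed to have the form \(C = (1-c) I_p + c \mathbbm {1}\mathbbm {1}^t\). We show that the inverse of C can be decomposed in the same way. Let \(M = a I_p + b \mathbbm {1}\mathbbm {1}^t\) where a and b are real numbers to be chosen later. Then

\[ C M = a(1-c)\, I_p + \big( ac + b(1-c) + bcp \big)\, \mathbbm {1}\mathbbm {1}^t = a(1-c)\, I_p + \big( ac + b(1-c+pc) \big)\, \mathbbm {1}\mathbbm {1}^t , \]

since \(\mathbbm {1}^t \mathbbm {1}= p\), so that \(\mathbbm {1}\mathbbm {1}^t \mathbbm {1}\mathbbm {1}^t = p\, \mathbbm {1}\mathbbm {1}^t\). Thus, \(C M = I_p\) if and only if

\[ a(1-c) = 1 \quad \text{and} \quad ac + b(1-c+pc) = 0, \]

which is equivalent to

\[ a = \frac{1}{1-c} \quad \text{and} \quad b = -\frac{c}{(1-c)(1-c+pc)} \]

(the denominators do not vanish because \(1-c\) and \(1-c+pc\) are the eigenvalues of the invertible matrix C). Consequently, \(C^{-1}_{jk} = M_{jk} = b\) if \(j \ne k\) and \(C^{-1}_{jj} = M_{jj} = a+b\). Finally, since \(\alpha = C^{-1} \varvec{\tau }\) by Proposition 2, we find that for any \(j \in \{1, \dots , p\}\):

\[ \alpha _j = (a+b)\, \tau _j + b \sum _{k \ne j} \tau _k = \frac{\tau _j}{1-c} - \frac{c}{(1-c)(1-c+pc)} \sum _{k=1}^p \tau _k . \]

The second point follows from Proposition 2.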
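The closed form above can be checked numerically with the Python sketch below, which uses hypothetical helper names and values of p, c and \(\varvec{\tau }\). It builds C for given p and c and multiplies it by M with \(a = 1/(1-c)\) and \(b = -c/((1-c)(1-c+pc))\). It then compares \(\alpha _j\) from the formula with the matrix product \(M \varvec{\tau }\). When all \(\tau _j\) equal some \(\tau _0\), the formula simplifies algebraically to \(\alpha _j = \tau _0 / (1-c+pc)\). The quantity \(2\alpha _j^2 = 2\tau _0^2/(1-c+pc)^2\) then shrinks as c or p grows (for \(0 \le c < 1\), \(p \ge 2\)).

```python
def equicorrelated(p, c):
    """C = (1 - c) I_p + c 1 1^t."""
    return [[1.0 if j == k else c for k in range(p)] for j in range(p)]


def inverse_closed_form(p, c):
    """M = a I_p + b 1 1^t; requires c != 1 and 1 - c + p c != 0 (C invertible)."""
    a = 1.0 / (1.0 - c)
    b = -c / ((1.0 - c) * (1.0 - c + p * c))
    return [[a + b if j == k else b for k in range(p)] for j in range(p)]


def matmul(A, B):
    return [[sum(A[i][l] * B[l][k] for l in range(len(B))) for k in range(len(B[0]))]
            for i in range(len(A))]


def alpha_closed_form(tau, c):
    """alpha_j = tau_j / (1 - c) - c / ((1 - c)(1 - c + p c)) * sum_k tau_k."""
    p = len(tau)
    s = sum(tau)
    return [t / (1.0 - c) - c * s / ((1.0 - c) * (1.0 - c + p * c)) for t in tau]


p, c = 5, 0.6
C = equicorrelated(p, c)
M = inverse_closed_form(p, c)
CM = matmul(C, M)
err = max(abs(CM[i][k] - (1.0 if i == k else 0.0)) for i in range(p) for k in range(p))
print(err)  # of the order of floating-point rounding

tau = [0.3, 0.1, 0.4, 0.2, 0.0]
alpha_matrix = [row[0] for row in matmul(M, [[t] for t in tau])]
print(alpha_closed_form(tau, c), alpha_matrix)
```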

Cite this article

Gregorutti, B., Michel, B. & Saint-Pierre, P. Correlation and variable importance in random forests. Stat Comput 27, 659–678 (2017). https://doi.org/10.1007/s11222-016-9646-1

DOI: https://doi.org/10.1007/s11222-016-9646-1