Abstract

Software engineering researchers have, over the years, proposed different critical success factors (CSFs) that are believed to be closely correlated with the success of software projects. To empirically investigate the correlation of CSFs with the success of software projects, we adapt and extend in this work an existing contingency fit model of CSFs. To achieve this objective, we designed an online survey and gathered CSF-related data for 101 software projects in the Turkish software industry. Among our findings is that the top three CSFs having the most significant associations with project success were: (1) team experience with the software development methodologies, (2) team’s expertise with the task, and (3) project monitoring and controlling. A comprehensive correlation analysis between the CSFs and project success indicates positive associations between the majority of the factors and variables; in most cases, however, these associations are not statistically significant. By adding to the body of evidence in this field, the results of the study will be useful for a wide audience. Software managers can use the results to prioritize improvement opportunities in their organizations with respect to the discussed CSFs. Software engineers might use the results to improve their skills in different dimensions, and researchers might use the results to prioritize and conduct in-depth follow-up studies on those factors.

1 Introduction

The software industry has grown at an unprecedented pace during the past decades. The challenges of today’s software systems, in terms of increased complexity, size, and diversity of demand, differ from the challenges of the systems of 50 years ago (Boehm 2006). The challenges and advances in the field of software engineering have inspired process improvement models such as CMMI and SPICE, as well as paradigm shifts in software development such as lean and agile models. Despite these improvements, software development projects continue to have higher failure rates than traditional engineering projects (Savolainen et al. 2012). As a result, understanding the key factors underlying the success and failure of software projects is a critical subject of contemporary software engineering research.

The patterns of success and failure, often referred to as Critical Success Factors (CSFs), are among the best indicators of the lessons learned, adapted, and used by the software industry today. Systematic analyses of success and failure in software projects have been performed in many studies, e.g., (Ahimbisibwe et al. 2015; Lehtinen et al. 2014; Whitney and Daniels 2013; Hashim et al. 2013; Stankovic et al. 2013; Subiyakto and bin Ahlan 2013; Sudhakar 2012; Nasir and Sahibuddin 2011; McLeod and MacDonell 2011; Cerpa et al. 2010; Chow and Cao 2008; Agarwal and Rathod 2006; Weber et al. 2003; Pereira et al. 2008; Drew Procaccino et al. 2002). The notion of CSFs was first introduced by Rockart in 1979 (Rockart 1979; Stankovic et al. 2013) as a method to help managers determine which information is most relevant for successfully attaining their goals in an increasingly complex world.

In this study, we aim to characterize the CSFs and analyze their correlations with the success of software projects by means of a questionnaire-based survey. Our study aims at determining the ranking of CSFs, which is a measure of the importance of CSFs for project success. The ranking is derived by quantitatively ordering the CSFs based on their correlations with software project success, for various aspects of perceived project success. To achieve this goal, we selected as our baseline a CSF model previously developed from a large-scale literature review of 148 primary studies (Ahimbisibwe et al. 2015). Using the Goal, Question, Metric (GQM) approach, we then systematically developed an online questionnaire. We distributed the survey to our network of industry contacts and through various channels, including social media and technology development zones in Turkey. The survey gathered responses for 101 software projects in August–September 2015.

The results of the survey are the basis for a detailed discussion of the relations between CSFs and perceptions of project success. Interesting findings of this study include the relative significance of team factors, such as the team’s experience with the task and with the methodologies, and of organizational factors, such as project monitoring/controlling, as well as the insignificance of customer factors, such as customer experience, and of almost all of the project factors, such as the level of specification changes. The contributions of this paper are three-fold:

-

A review of the existing CSF classification schemes, and the application of necessary improvements to one of the most comprehensive models (Ahimbisibwe et al. 2015),

-

Further empirical evidence on project outcomes and CSFs, obtained by assessing the correlations between the CSFs and various project success metrics, and

-

The first empirical assessment of how the importance rankings of CSFs change across company sizes (larger versus smaller companies), project sizes (in terms of number of team members), and software development methodologies (Agile versus traditional).

The remainder of this paper is structured as follows. Section 2 reviews the background and related work, including the contingency fit model of CSFs that we used. Section 3 discusses the research goal and research methodology. Section 4 presents the demographics and independent statistics of the dataset. Section 5 presents the results and findings of the survey. We then discuss the findings and their implications in Section 6. Finally, in Section 7, we draw conclusions and discuss our ongoing and future work.

2 Background and related work

2.1 Related work

CSFs are defined as the key areas where an organization must perform well on a consistent basis to achieve its mission (Gates 2010). As argued in (Caralli 2004), managers implicitly know and consider these key areas when they set goals and when they direct the operational activities and tasks that are important to achieving those goals. When these key areas of performance are made explicit, they provide a common point of reference for the entire organization. The project management literature in general, and the software project management literature in particular, contain a significant number of studies about CSFs.

Many studies in the literature have attempted to reveal the CSFs of software projects, either by reviewing the related literature or by empirical investigation (e.g., using opinion surveys). Since there are many “primary” studies on success factors and CSFs in software projects, it is impractical to review and discuss each of them in this paper. We thus selected a subset of these studies, which we list in Appendix Table 9, and briefly summarize them in the following.

The studies (Lehtinen et al. 2014; Whitney and Daniels 2013) investigated the reasons for project failures. The study (Weber et al. 2003) focused on predicting project outcomes. Six of the related studies are based on literature reviews of CSFs. Among these, the studies (Ahimbisibwe et al. 2015; Sudhakar 2012; McLeod and MacDonell 2011) offered conceptual models of CSFs for software projects after reviewing and synthesizing the relevant literature. These studies ranked CSFs based on their frequencies of occurrence in the literature. Some studies, such as (Ahimbisibwe et al. 2015), identified and ranked the CSFs for plan-driven and agile projects separately, while others, such as (Sudhakar 2012), did not make such a distinction. Based on a literature review of 177 empirical studies, the study in (McLeod and MacDonell 2011) proposed 18 CSFs in four dimensions: institutional context, people and action, project content, and development processes.

Some of the studies aimed at empirically validating the CSFs, investigating them through surveys or case studies, e.g., (Lehtinen et al. 2014). One of the studies in the set (Chow and Cao 2008) first conducted a literature review to elicit a list of CSFs and then conducted a questionnaire-based survey to assess the importance of each CSF in agile projects.

Among the studies that used opinion surveys as the empirical investigation method, the studies (Stankovic et al. 2013; Chow and Cao 2008) focused only on the CSFs of agile software projects. The study (Cerpa et al. 2010) proposed a regression model to predict project success based on data from multiple companies, and reported prediction accuracies between 67% and 85%. Another study (Agarwal and Rathod 2006) examined how different project roles perceive successful projects in terms of generic factors such as time, cost, and scope. The study (Pereira et al. 2008) investigated regional/cultural differences in the definitions of project success between Chilean and American practitioners, while the study (Drew Procaccino et al. 2002) identified early risk factors and their effect on software project success. The study (Weber et al. 2003) compared the accuracy of several case-based reasoning techniques with that of logistic regression analysis in predicting project success.

The study in (Lyytinen and Hirschheim 1987) presented a survey and classification of the empirical literature on failures in Information Systems (IS). Based on the empirical literature, it classified IS failures into four groups: correspondence failure, process failure, interaction failure, and expectation failure. It also classified the sources of failure into the following categories: IS failure, IS environment failure, IS development failure, and IS development environment failure.

The study in (Poulymenakou and Holmes 1996) presented a contingency framework for the investigation of information systems failure and CSFs. The proposed CSFs were under these groups: users, social interaction, project management, use of a standard method, management of change, and organizational properties.

Another study (Scott and Vessey 2002) discussed how to manage risks in enterprise systems implementations. It proposed a classification of risk (and failure) factors, which were based on several case-study projects in which the authors were involved.

The study in (Tom and Brian 2001) assessed the relationship between user participation and the management of change surrounding the development of information systems. It also proposed a classification of CSFs which were based on a case study in a large organization.

Entitled “Critical success factors in software projects”, the study in (Reel 1999) also proposed a classification of CSFs, based on the author’s experience. It discussed five essential factors for managing a successful software project: (1) Start on the right foot (Set realistic objectives and expectations; Build the right team; Give the team what they think they need); (2) Maintain momentum (Management, quality); (3) Track progress; (4) Make smart decisions; (5) Institutionalize post-mortem analyses. The paper included many interesting observations, e.g., “At least seven of 10 signs of IS project failures are determined before a design is developed or a line of code is written” (Reel 1999).

Last but not least, the work in (Berntsson-Svensson and Aurum 2006) assessed whether practitioners in different industries perceive the effects of various factors on software project success differently. Based on an empirical investigation, the study concluded that the factors that are important for project/product success do indeed differ across industries.

2.2 Reviewing the existing CSF classification schemes

To conduct our empirical investigation, we had to choose a suitable CSF classification scheme from the literature. From the set of studies that we had reviewed (listed in Appendix Table 9 and summarized above), we specifically selected the papers that presented CSF classification schemes. We found that these papers are either regular surveys, SLR papers, or studies based on opinion surveys (questionnaires). The advantage of looking at survey or SLR papers in this area was that each such paper had already reviewed a large number of “primary” studies on CSFs; the resulting CSF classification scheme was thus a synthesis of the literature, which spared us from having to read each of those many primary studies. We therefore reviewed the papers that presented CSF classifications and chose the most suitable one.

At the end of our literature searches for CSF classification schemes, we populated our pool as shown in Table 1, which also shows the coverage of CSFs in each existing CSF classification scheme. We should note that, while we were as rigorous as possible in searching for CSF classification schemes, we did not conduct a systematic literature review (SLR).

After careful analysis and comparison, we decided to adopt the CSF classification by Ahimbisibwe et al. (Ahimbisibwe et al. 2015) for the following reasons:

-

Many of the studies have taken a coarse-grained approach by aggregating a subset of factors into one single CSF, while (Ahimbisibwe et al. 2015) considered the factors at a finer granularity. For example, “Organizational properties” has been considered as one single factor in many studies (e.g., (McLeod and MacDonell 2011)), while (Ahimbisibwe et al. 2015) broke that factor down into, e.g., top management support, organizational culture, leadership, and vision and mission. As another example, the “User” aspect has been considered as a single factor by many studies (e.g., (McLeod and MacDonell 2011)), while (Ahimbisibwe et al. 2015) broke that aspect down into, e.g., user support, experience, and training and education. We believe it is important to assess those aspects separately rather than as one single factor.

-

The study (Ahimbisibwe et al. 2015) has the finest granularity and the largest number of CSFs (38 factors) compared to the other studies.

-

We found that some of the groupings (clusterings) in many of the works are weak and/or partial, and thus need to be improved. For example, “project management” is placed in (McLeod and MacDonell 2011; Lyytinen and Hirschheim 1987; Poulymenakou and Holmes 1996; Scott and Vessey 2002; Tom and Brian 2001) under the “Development processes” group, while it should be placed under the “Project aspects” group. As another example, (McLeod and MacDonell 2011) placed “User participation” and “User training” under the “Development processes” group, while they should be under the “Users” factor. Many classifications are also partial (not providing full coverage of all CSFs): as Table 1 shows, many studies do not cover all types of CSFs. The classification of CSFs proposed by Ahimbisibwe et al. (Ahimbisibwe et al. 2015) appears to be the most suitable one in this set of studies; we therefore chose it for this study and review it in more detail in Section 2.3.

2.3 Reviewing and extending the CSFs model of Ahimbisibwe et al.

As discussed above, after systematically assessing the candidates, we chose as our base model the CSF model developed by Ahimbisibwe et al. (Ahimbisibwe et al. 2015), which provides a framework for investigating the internal and external factors leading to success or failure in software projects.

After reviewing Ahimbisibwe et al.’s model (Ahimbisibwe et al. 2015), we identified several improvement opportunities, which we implemented before using the model in our study; e.g., we added several new CSFs and revised a few existing ones. Fig. 1 shows both the original model as developed by Ahimbisibwe et al. (Ahimbisibwe et al. 2015) (part a) and our improved version (part b). In this model, CSFs are categorized into organizational factors, team factors, customer factors, and moderating variables (contingency/project factors). The variables describing project success are included under Project Success. In our model, organizational factors, team factors, and customer factors are the factors that could be correlated with project success (i.e., the dependent variable). Moderating variables (contingency/project factors) potentially have a moderating effect on the relations between these factors and the variables describing project success, and may also have a negative effect on project success itself (Ahimbisibwe et al. 2015); e.g., higher technical complexity of a project would challenge the success of the project.
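To make the notion of a moderating variable concrete: moderation is commonly tested with an interaction term in a regression model. The Python sketch below is our own illustration, not the procedure used in this study (whose analysis is correlation-based). It assumes one CSF score x, one moderator m (e.g., technical complexity), and a success score y, related by y = b0 + b1·x + b2·m + b3·x·m; a non-zero b3 means that the CSF-success relation depends on the moderator. The helper `fit_moderation` and the synthetic, noise-free data are hypothetical.

```python
import numpy as np

def fit_moderation(x, m, y):
    """Least-squares fit of y = b0 + b1*x + b2*m + b3*x*m (hypothetical helper).

    b3 is the interaction coefficient: a non-zero value means the effect of
    the CSF score x on success y depends on the moderator m.
    """
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    design = np.column_stack([np.ones_like(x), x, m, x * m])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef  # [b0, b1, b2, b3]

# Synthetic, noise-free data for illustration only (not survey data).
rng = np.random.default_rng(0)
x = rng.integers(1, 6, size=50)  # CSF score on a 1-5 scale
m = rng.integers(1, 6, size=50)  # moderator, e.g. technical complexity on 1-5
y = 1.0 + 0.8 * x - 0.3 * m - 0.1 * x * m
b0, b1, b2, b3 = fit_moderation(x, m, y)
print(np.round([b0, b1, b2, b3], 3))
```

With b1 > 0 and b3 < 0, the slope of y with respect to x, b1 + b3·m, shrinks as m grows, which is one way a moderator such as technical complexity could weaken a CSF’s association with success.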

Project success in Fig. 1 is a set of variables that measure and describe success. Revised/added aspects are shown in gray. The rationale behind the changes to the base model is explained next.

-

Based on feedback from our industry partners, we added a fundamental contingency (project) factor: “Software development methodology” (a spectrum between traditional and Agile).

-

“Organizational culture” in the base model was expanded to “Organizational culture and management style”, since management style was discussed in the work of Ahimbisibwe et al. (Ahimbisibwe et al. 2015) but was not explicitly included in the model.

-

“Project planning and controlling” in the base model was divided into separate items: (1) project planning, and (2) project monitoring and controlling, since we believe these two factors are better separated as their quality/impacts could differ in the context of a typical project, e.g., project planning could be perfect, while project monitoring and controlling is conducted poorly.

-

“Customer skill, training and education” and “Customer experience” in the base model are somewhat unclear and overlap with one another. Based on reviews (Ahimbisibwe et al. 2015) and the relevant literature, we therefore refined them into “Customer skill, training and education in IT” and “Customer experience in its own business domain”, since the two aspects should be separated: a customer may have a good skill level in one and not in the other.

-

Based on our recent research (Karapıçak and Demirörs 2013; Garousi and Tarhan 2018) on the need to consider personality types in software team formation, and on discussions with and feedback from our industry partners in the course of our long-term industry-academia collaborations (e.g., (Garousi and Herkiloğlu 2016; Garousi et al. 2016a; Garousi et al. 2016b; Garousi et al. 2015a)), we added “Team building and team dynamics” as a new CSF to the ‘process’ category of project success. Throughout our industrial projects, we have often observed that one by-product of successful projects is a positive influence on team building, improved team dynamics (compared to the state before the project), and enhanced opinions of team members about each other. Previous studies have also reported the importance of this factor (Hoegl and Gemuenden 2001).

-

We revised the dependent-variable category of “project success” to better align it with well-known software quality attributes (both functional and non-functional) by adding several other quality attributes: performance, security, compatibility, maintainability, and transferability. We also renamed “ease of use” to “usability” to give it a broader meaning.

-

Many studies, as well as many practitioners, consider customer satisfaction, e.g., (Ellis 2005; Pinto et al. 2000), and team satisfaction, e.g., (Thompson 2008), to be among the most important project metrics. To address this in the model, we added a new category under project success for the satisfaction of stakeholders, including customer, team, and top-management satisfaction.

3 Research goal and methodology

3.1 Goal and research questions

The goal of the empirical investigation reported in this paper was to characterize and analyze the relationship of CSFs (as proposed by Ahimbisibwe et al. (Ahimbisibwe et al. 2015) and modified in our work as discussed above) with the success level of software projects, from the point of view of software project managers in the context of software projects, for the purpose of benefitting software project managers, and also advancing the body of evidence in this area.

Based on the above goal, we raised the following research questions (RQs):

-

RQ 1: How strongly are CSFs and project success metrics associated (correlated)?

-

RQ 2: What is the ranking of the proposed CSFs based on their correlations with project success metrics? Note that RQ 2 is a follow-up to RQ 1. Once we numerically assess the correlation between the CSFs and project success metrics, we can rank the CSFs based on their correlation values.

-

RQ 3: What is the ranking of the project success metrics based on their correlations with the CSFs? Similar to RQ 2, RQ 3 is also a follow-up to RQ 1. Once we numerically assess the correlation between the CSFs and project success metrics, we can rank the project success metrics.
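The analysis behind RQ 1 to RQ 3 can be illustrated with a short Python sketch. It assumes, as our own choice for ordinal Likert data, Spearman’s rank correlation computed on pairwise-complete observations (so partially answered questionnaires still contribute), and it ranks each CSF by its mean correlation across all success metrics; the statistic and aggregation actually used in the study may differ. The function names and the toy data are hypothetical.

```python
from math import isnan, sqrt
from statistics import mean

def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based positions i..j
        i = j + 1
    return ranks

def pearson(a, b):
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sqrt(sum((x - ma) ** 2 for x in a))
    sb = sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa and sb else float("nan")

def spearman(x, y):
    """Spearman's rho over pairwise-complete observations (None = missing)."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 3:
        return float("nan")
    xs, ys = zip(*pairs)
    return pearson(average_ranks(xs), average_ranks(ys))

def rank_by_mean_rho(items, others):
    """Rank each column in `items` by its mean rho against all columns in `others`."""
    scores = {}
    for name, col in items.items():
        rhos = [r for r in (spearman(col, o) for o in others.values()) if not isnan(r)]
        if rhos:
            scores[name] = mean(rhos)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Toy data: one answer per project (1-5 Likert, None = missing); not survey data.
CSFS = {
    "team_experience": [5, 4, 4, 2, 1, None],
    "customer_experience": [3, 1, 4, 5, 2, 3],
}
SUCCESS = {
    "on_time": [5, 4, 3, 2, 1, 4],
    "quality": [4, 5, 3, 2, 1, None],
}
print(rank_by_mean_rho(CSFS, SUCCESS))  # RQ 2: CSFs ordered by mean rho
```

Calling `rank_by_mean_rho(SUCCESS, CSFS)`, with the roles swapped, ranks the success metrics instead, which corresponds to RQ 3.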

As the research goal and the RQs show, our investigation is an “exploratory” study (Runeson and Höst 2009). To collect data for our study, we designed and executed an online questionnaire-based opinion survey. We discuss the survey design and execution in Section 3.2.

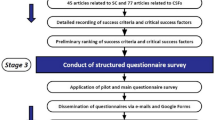

3.2 Survey design and execution

We discuss next the sampling method, survey questions, and survey execution.

3.2.1 Sampling method

In survey-based studies, there are two types of sampling methods: probabilistic and non-probabilistic sampling (Punter et al. 2003; Linåker et al. 2015; Groves et al. 2009). The six sampling methods under probabilistic and non-probabilistic sampling, sorted in descending order of sample randomness, are (Punter et al. 2003; Linåker et al. 2015; Groves et al. 2009): (1) random sampling, (2) stratified sampling, (3) systematic sampling, (4) convenience sampling, (5) quota sampling, and (6) purposive sampling. Probabilistic sampling is a systematic approach in which every member of the entire population is given an equal chance to be selected. Where probabilistic sampling is not possible, researchers use non-probabilistic sampling and take charge of selecting the sample. In this study, probabilistic sampling methods (e.g., stratified sampling) were not possible options, since we did not have access to a large dataset to which probabilistic sampling could be applied (also discussed in Section 6.2).

A-posteriori probability-based (systematic) sampling, where data for various companies is grouped and then a sample is extracted from the data so that every member of the population has statistically an equal chance of being selected, is a way to mimic probability sampling. However, this approach would have required participants to reveal their names or employing companies. Since participants hesitate to report honest opinions when they are not anonymous (as has been observed many times before, e.g., (Groves et al. 2009)), requesting such identifying information would have seriously damaged the quality of the project-related data reported by participants.

In convenience sampling, a type of non-probabilistic sampling, “Subjects are selected because of their convenient accessibility to the researcher” (Lunsford and Lunsford 1995). In all forms of research, it would be ideal to test the entire population, but in most cases the population is so large that it is impossible to include every individual. This is why most researchers rely on sampling techniques such as convenience sampling, the most common sampling technique used in software engineering research (Sjoeberg et al. 2005). After carefully reviewing the pros and cons, and given the constraints elaborated above, we decided to use “convenience sampling”.

Another issue in our survey design, interrelated with the sampling method, is the unit of interest (analysis). Prior research has shown that participants in different roles have different perceptions of project success for the same project (Agarwal and Rathod 2006; Davis 2014). Therefore, the unit of analysis in this survey is a survey participant’s perception of a completed or near-completion software project. Thus, for all the statistics and analyses that we report in Section 4, software projects as perceived by survey participants are the unit of analysis, and the implications should be interpreted in the context of Turkish software projects.

3.2.2 Survey questions

We designed an online survey based on the contingency fit model of CSFs for software projects as explained in Section 2.3. We designed a draft set of questions aiming at covering the CSFs in the contingency fit model while also economizing the number of questions.

Based on our experience in conducting online surveys (Garousi and Zhi 2013; Garousi and Varma 2010; Garousi et al. 2015b; Akdur et al. 2015) and on several survey guidelines and experience reports, e.g., (Punter et al. 2003; Linåker et al. 2015; Torchiano et al. 2017), after the initial design of the survey we sent the questions to eight of our industrial contacts as a pilot phase. The goal of this phase was to ensure that the terminology used in our survey was familiar to the participants. As other studies have shown, e.g., (Garousi and Felderer 2017), researchers and industrial practitioners often use slightly different terminologies, and when conducting joint studies/projects it is important to discuss and agree on a consistent set of terms. The feedback from the industrial practitioners was used to finalize the set of survey questions, while their answers to the survey questions were not included in the survey data. This early feedback was useful for ensuring the proper context for the survey and the familiarity of its terminology for industrial respondents.

After improving the questions based on the feedback from the industry practitioners in the pilot study, we ended up with 53 questions. The complete list of the questions used in the survey is shown in Appendix Table 10. The first set of 12 questions gathered profiles and demographics of the projects, participants, and companies. Most of these questions had quantitative, pre-designed single- or multiple-response answers. For example, the first question, about participants’ gender, required a single-response answer (male or female), while participants were able to provide several answers to the second question (the participant’s current position), as they could have more than one role in their employer organizations.

The remaining questions gathered data on the software project in the context of the set of CSFs and the metrics describing project success. There was a one-to-one relationship between the CSFs/variables describing project success and questions #13–53. For brevity, we do not show the pre-designed possible answers of the questions in Appendix Table 10, but they can be found in an online resource (Garousi et al. 2016c). As Appendix Table 10 shows, these questions (questions 13–53) had 5-point Likert-scale answers, and participants were also given “Not applicable” and “I do not know” choices in each question.
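Because each Likert question also offered “Not applicable” and “I do not know”, these answers need an explicit encoding before any correlation is computed. A minimal Python sketch follows. It assumes hypothetical answer labels (the actual wording is in the online resource) and treats both non-substantive choices, as well as blank answers, as missing values rather than as a neutral midpoint; `encode_answer` is a hypothetical helper.

```python
from typing import Optional

# Hypothetical answer labels for illustration; the survey's actual wording
# is documented in its online resource and may differ.
LIKERT_LEVELS = ["Very low", "Low", "Moderate", "High", "Very high"]
NON_SUBSTANTIVE = {"Not applicable", "I do not know"}

def encode_answer(answer: Optional[str]) -> Optional[int]:
    """Map a Likert label to 1..5; blank, 'Not applicable' and 'I do not know' become None."""
    if answer is None:
        return None
    label = answer.strip()
    if label == "" or label in NON_SUBSTANTIVE:
        return None
    if label not in LIKERT_LEVELS:
        raise ValueError(f"unexpected answer label: {answer!r}")
    return LIKERT_LEVELS.index(label) + 1

# One project's answers to four Likert questions (made-up example).
print([encode_answer(a) for a in ["High", "I do not know", "Very low", None]])
# → [4, None, 1, None]
```

Coding “I do not know” as missing, rather than as the scale midpoint 3, avoids treating a lack of knowledge as a neutral opinion; this is a design choice we assume here, not one stated by the survey.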

3.2.3 Recruitment of subjects and survey execution

To execute the survey, the survey instrument was implemented by using the Google Forms tool (docs.google.com/forms) and hosted on the online file storage service by Google Drive (drive.google.com). Research ethics approval for the survey was obtained from Hacettepe University’s Research Ethics Board in August 2015. Immediately after getting ethics approval, the survey was made available to participants in the time period of August–September 2015. Participants were asked to provide their answers for software projects that were either completed or were near completion. Participation was voluntary and anonymous. Respondents could withdraw from the survey at any time.

To gather data from as many software projects as possible, we established and followed a publicity and execution plan. We sent email invitations to our network of partners/contacts in 55 Turkish software companies (and their departments). In the email invitations, we asked the survey participants to also forward the invitation email (which included the survey URL) to their contacts, in order to maximize the outreach of the survey. Thus, although we were able to monitor the progress weekly, it was not possible for us to determine the ratio of practitioners who received the email and actually filled out the survey (i.e., the response rate). We also made public invitations to the Turkish software engineering community by posting messages on social media, e.g., LinkedIn (we found seven software-engineering-related LinkedIn groups), Facebook, and Twitter, and by sending emails to the management offices of more than 30 Technology Development Zones and Research Parks in Turkey.

As part of this publicity and execution plan, and in order not to disturb practitioners with multiple duplicate invitations (received from multiple sources), we communicated our invitation to each organization through a single point of contact. Our plan included the target sectors, contact person, publicity schedule, and status for each organization. We did not keep track of response rates, since participation in our survey was anonymous; however, an iterative publicity schedule (with three main iterations) allowed us not only to monitor the changes in the survey population but also to get a sense of the response rates after each iteration.

An issue regarding the recruitment of subjects (i.e., completed or near-completion software projects) was that, in some cases, several participants might have provided data for the same project. This problem is called “duplicate entries”, where two or more data points represent the same unit under study (Linåker et al. 2015). After careful review and discussion, we concluded that, although this issue could pose a validity threat, the nature of our study, which assesses the success factors of software projects as perceived by the participants, allows such duplicate entries in our dataset. This is because we assumed that different stakeholders of the same project would have different perceptions regarding the success factors. Thus, as long as each data unit is about a different project and/or from a different participant, it represents a different unit in our dataset. Moreover, we did not expect the same respondent to provide duplicate data for the same project.

Also, similar to previously conducted online SE surveys, e.g., (Garousi and Zhi 2013; Garousi and Varma 2010; Garousi et al. 2015b), we noticed that a number of participants left some of the questions unanswered. This is unfortunately a common phenomenon in almost any online SE survey. Similar to our surveys in Canada (Garousi and Zhi 2013; Garousi and Varma 2010) and Turkey (Garousi et al. 2015b; Akdur et al. 2015), we found partial answers useful, i.e., even if a given participant did not answer all 53 questions, her/his answers to a subset of the questions were useful for our dataset. The survey included data from 101 software projects across Turkey. For replicability, and to enable other researchers to conduct further analysis on our dataset, we have made our raw dataset available in an online resource (Garousi et al. 2016d) (https://doi.org/10.6084/m9.figshare.4012779).

3.3 Quality assessment of the dataset

We performed sanity checks on the data set to assess its quality. One important issue was partially-answered questions and their impact on the dataset. We looked into the number of (complete) answers provided for each question of the online survey; these numbers are shown in Fig. 2. Among the 101 data points, most questions had nearly complete answers, except for the following five questions:

- Q8: Is your company/team certified under any of the process improvement models (e.g., CMMI, ISO 9000, ISO/IEC 15504)? (72 of the 101 respondents answered this); question completion rate = 71.2%. Since the majority of the respondents were developers working on a project basis, and process improvement activities are usually conducted organization-wide, we suspect that many respondents (close to 30) were simply not aware of (formal) process improvement activities based on standard models in their companies and thus left this question unanswered.

- Q9: If your company/team has been certified under CMMI or ISO/IEC 15504, what is its process maturity certification level? Question completion rate = 80.1%. This question is a follow-up to Q8 (process improvement models), and thus the same explanation applies to its many unanswered responses.

- Q18: Organization-wide change management practices: Question completion rate = 86.1%. Since change management is usually conducted organization-wide or by dedicated configuration-management teams, we suspect that developers, whose involvement in and awareness of change-management practices were low, may have hesitated to provide answers.

- Q37: To what extent did the project finish on budget? Question completion rate = 74.2%. Since budgeting is handled at the management level, respondents who were developers most probably could not answer this question.

- Q42: To what extent has the software product/service been able to generate revenues for the organization? Question completion rate = 85.1%.

Since there was no cross-dependency among the questions except between Q8 and Q9, partial data would not have affected the internal and construct validity of our study. Also, since the empty answers did not all fall on the same data points, excluding partially-answered responses would have removed a large proportion of our dataset. Therefore, we did not exclude partial answers.

Furthermore, we wanted to assess the potential impact of partially-completed answers on the results reported in Section 5. Of the five partially-answered questions above, two are about general profiles and demographics and were not included in the results analysis: Q8 (certification under process improvement models) and Q9 (company’s process maturity certification level). Only three questions, i.e., Q18 (change management practices), Q37 (finishing projects on budget) and Q42 (products/services generating revenues), are included in the dataset used for the results analysis in Section 5. When we inspected the data for these questions in particular, we found that the empty answers did not all fall on the same data points. For the correlation analysis, when a data point lacked a value for a given CSF or project success measure, that data point was excluded only from the correlations involving the missing variable (i.e., pairwise deletion) and was retained for all other correlations. In this way, we ensured the soundness of our correlation analysis approach.
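The pairwise-deletion rule described above can be sketched in Python as follows. This is a minimal illustration, not the authors' analysis code: the function name `pairwise_complete`, the use of `None` for an unanswered question, and the sample values are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def pairwise_complete(
    x: Sequence[Optional[int]], y: Sequence[Optional[int]]
) -> Tuple[List[int], List[int]]:
    """Return the paired values of x and y, dropping any data point where either is missing.

    A missing answer is represented as None. Each correlation gets its own
    filtered pairs, so a data point missing one variable still contributes
    to every correlation that does not involve that variable.
    """
    if len(x) != len(y):
        raise ValueError("x and y must cover the same data points")
    kept = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    return [a for a, _ in kept], [b for _, b in kept]


# Hypothetical Likert answers for five projects (None = question left unanswered).
change_management = [4, None, 3, 5, 2]  # e.g., a CSF such as Q18
on_budget = [3, 4, None, 5, 1]          # e.g., a success variable such as Q37

xs, ys = pairwise_complete(change_management, on_budget)
print(xs, ys)  # [4, 5, 2] [3, 5, 1]
```

Only the two projects with a gap in one of the two variables are dropped for this particular pair; they remain available for every other pair of variables.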

4 Demographics of the dataset and independent statistics of the factors

4.1 Profiles and demographics

In order to assess the nature of the set of projects and participants appearing in this study, we extracted and analyzed the profiles and demographics of the participants and the projects in the survey dataset. Since high diversity within the data set was something we wished to achieve, we wanted to understand the level of diversity within the data set and to determine the profiles of the respondents that participated in the projects and the companies that carried out the projects.

4.1.1 Participant profile

In terms of the respondents’ gender profile, 70% of the project data were provided by male participants, while 30% were provided by female participants.

We were interested in the positions held by the respondents in the projects, since people in different software engineering positions often have different perceptions when rating the importance of success factors (Garvin 1988). Participants might hold more than one position in a given project (e.g., a person can be a developer and a tester at the same time), so respondents were allowed to select more than one position. As presented in the bar chart in Fig. 3, most of the respondents were software developers (programmers). Software designer, software architect, and project manager were also common positions in the dataset.

The survey also asked about the city of residence of each respondent. As most of the authors and most of our industry contacts were located in Ankara (the capital city of Turkey), it is not surprising that 71% of the projects were reported by participants from Ankara. Istanbul, the largest city of Turkey, followed with 23% of the dataset. A 2012 study reported that 47% and 33% of Turkish software companies were located in Istanbul and Ankara, respectively (Akkaya et al. 2012). Although the geographic distribution of our sample does not perfectly match the regional breakdown of Turkish software companies, it includes a reasonable mix of respondents from the Turkish cities where the majority of the software industry is located.

Figure 4 shows the individual-value plot of the respondents’ years of work experience. The plot, together with the mean and median values (8.0 and 6.5 years, respectively), indicates a relatively young pool of participants, but there is still a good mix of participants with various levels of experience in software projects.

Another survey question asked about the certifications held by respondents. The most popular were Agile-related certifications and certifications offered by the Project Management Institute (PMI) and by Microsoft Corporation. Certifications reported in the “other” category included The Open Group Architecture Framework (TOGAF), COSMIC (Common Software Measurement International Consortium), and Capability Maturity Model Integration (CMMI) certifications, as well as certifications offered by SAP Corporation, ISACA (Information Systems Audit and Control Association), and Offensive Security.

4.1.2 Company profile

Figure 5 shows the results of the next question, which asked about the target sectors of the products developed (or services offered) by the companies that carried out the projects. Diversity in target sectors is desirable, as project information from various target sectors helps increase the representativeness of the dataset and the generalizability of our results. As shown in Fig. 5, projects from companies producing software for government, IT and telecommunication, military and defense, banking and finance, engineering and manufacturing, and business are all represented in our data set.

We also wanted projects from companies of various sizes, so another survey question gathered this information. Figure 6 shows the percentage distribution of data points by company size, which indicates a good mix of projects from companies of various sizes.

We also wanted to know which process improvement models are used in the companies where the projects in our data set were carried out, since it is reasonable to expect that the use of process improvement models by a company or team would have a positive impact on project outcomes. CMMI and the ISO 9000 family of standards, with 46 and 41 data points respectively, were the most widely used models in the companies in our survey sample. Other models were also mentioned, e.g., ISO/IEC 15504: Information technology — Process assessment, also known as Software Process Improvement and Capability Determination (SPICE), and ISO/IEC 25010: Systems and Software Quality Requirements and Evaluation. Note that since a company might use more than one process improvement model, participants were allowed to report multiple models.

4.1.3 Project profile

As discussed in Section 3.2, we asked participants to fill in the survey in the context of their completed or near-completion projects. However, not all software projects cover the entire software development life-cycle; e.g., in several of our current industry-academia collaborative projects, the project scope has been only software maintenance or only testing. Thus, we wanted to know the number and types of phases followed in the projects. 88 of the projects in our survey followed the entire software development life-cycle. Most of the remaining projects covered at least two phases/activities. Among the projects that consisted of only one phase/activity, five were software requirements / business analysis projects, and one was a software testing project.

Participants were asked about the nature of the projects in terms of development type and were allowed to report multiple development types, e.g., a project might involve both new development and software maintenance. The results reveal that new development projects are prevalent in our dataset, with 82 data points. However, software maintenance projects of the adaptive, perfective, corrective and preventive types are also well represented, with 38, 21, 21, and 13 data points, respectively.

As discussed in Section 3.2, the unit of measurement in this study is a single software project, but in many cases software development activities are part of a larger project. In order to understand the frequency of such cases in our survey data, and to ensure that participants would provide answers related only to the ‘software’ aspects of larger system-level projects, participants were asked about the independence level of their projects. Results show that about 62% of the projects were stand-alone, whereas the remaining 38% were part of larger projects. Participants who selected the latter option were asked to answer the rest of the survey questions based only on the project/activity that is part of the larger project.

4.2 Independent statistics of the factors and variables

In this study, as explained in Sections 2 and 3, we grouped the factors and variables under three categories: critical success factors (CSFs), contingency (project) factors, and project success metrics. As with the profiles and demographics, we wanted diversity among the projects in our survey in terms of these factors and variables. With this aim, we did not encourage participants to report on either successful or failed projects; instead, we let them freely choose the projects they wished to report on, as long as the projects were either completed or near completion. We discuss next the factors in each of the three categories.

4.2.1 Critical success factors

As discussed in Section 2.3, the revised CSF model groups the factors that could relate to project success under three categories: (1) organizational factors, (2) team factors, and (3) customer factors. We report next the independent statistics of the factors in each of these groups.

Organizational factors

Based on the CSF model, we asked participants about the organizational factors below:

- The level of top-management support
- (Maturity of the) organizational culture and management style
- (Maturity of the) vision and mission
- (Maturity of the) project planning
- (Maturity of the) project monitoring and controlling
- (Maturity of the) organization-wide change management practices

As discussed in Section 3.2.2, answers to these questions were selected from 5-point Likert scales, each designed carefully for its question; e.g., answers to the question about top-management support could be chosen from this scale: (1) very low, (2) low, (3) average, (4) high, and (5) very high. Answers for organizational culture and management style could be chosen from: (1) rigid organizational culture (i.e., bureaucratic command and control), (2) slightly rigid, (3) moderate (between rigid and adaptive), (4) slightly adaptive / flexible, and (5) fully adaptive / flexible collaborative organizational culture (i.e., family-like mentoring and nurturing). These categories and Likert scales were largely adopted from the literature, e.g., (Ahimbisibwe et al. 2015).

Distributions (histograms) of the data points in the dataset w.r.t. each of the above factors are shown in Fig. 7, along with the median value for each factor. The medians for all factors, except project planning and project monitoring and controlling, are at the “medium” level. For project planning and for project monitoring and controlling, the medians are “Planned” and “High”, respectively.

The results show that, among the organizational factors, project planning and project monitoring and controlling were the factors with a median at position 4 of the 5-point Likert scale. This indicates that at least half of the participants rated these factors in their projects at level 4 or higher. On the other hand, scores for organizational culture and management style ranged from “rigid” to “adaptive/flexible” almost symmetrically, meaning that the dataset includes projects from across that spectrum.

Team factors

Participants were asked about the following team factors:

- Team commitment
- Internal team communication
- Team empowerment
- Team composition
- Team’s general expertise (in software engineering and project management)
- Team’s expertise in the task and domain
- Team’s experience with the development methodologies

Distributions (histograms) of the data points are shown in Fig. 8. According to the median values shown in Fig. 8, project team commitment, communication among internal stakeholders during the project under study, and team members’ expertise and competency with the assigned tasks are generally rated high. It is noteworthy that the median scores for team composition and team empowerment are lower than those for the other team factors. This suggests that, in some projects, participants were not satisfied with the formation of the team in terms of cohesion, team sense, harmony, and respect among team members.

Customer factors

The survey asked about the following customer factors:

- Customer (client) participation
- Customer (client) support
- Customer skill, training and education in IT
- Customer experience in its own business domain

Figure 9 shows the distributions (histograms) of the data points w.r.t. the above customer factors. The level of customers’ experience in their own business domain is rated highest among the customer factors. The scores for customer participation, customer support, and customer IT skills, training and education show a good mix: while customer participation and support were high in some projects, they were quite poor in others.

4.2.2 Contingency (project) factors

Based on the revised CSF model, the survey asked the participants about the following contingency (project) factors (i.e., moderating variables) in their projects:

- Technological uncertainty
- Technical complexity
- Urgency
- Relative project size
- Specification changes
- Project criticality
- Software development methodology

Distributions of the data points, including the median value for each factor, are shown in Fig. 10. Technological uncertainty in the projects mostly ranges between very few and moderate, whereas technical complexity mostly has moderate to high scores. The response histograms for both the level of urgency and the level of specification changes are slightly shifted towards the higher end of the scale, indicating that these two factors were moderate to high in most projects. In terms of the number of team members, project size mostly falls in the 11 to 50 category or below. However, our survey also includes some very large projects (i.e., projects with more than 100 team members).

The response histogram for project criticality, on the other hand, is more clearly concentrated towards the high end of the scale, showing that our dataset consists mostly of critical projects. The software development methodologies used in the projects are distributed quite evenly across the 5-point Likert scale, which ranges from Agile to plan-based (traditional).

4.2.3 Project success

Variables describing project success in our contingency fit model (Fig. 1) include: (1) process success, (2) product-related factors, and (3) stakeholders’ satisfaction. In order to understand the process outcomes and process success, we asked in the survey about the following factors:

- Budget
- Schedule
- Scope
- Team building and team dynamics

Figure 11 shows the distribution (histogram) of the data points, with the median value for each histogram. Most projects were in good shape regarding team building and team dynamics. Scores for the other three project success variables show that most projects were also in reasonable shape and not challenged in terms of conforming to project scope, budget, and schedule. Regarding projects finishing on budget, 21 and 5 (of 101) responses were “I do not know” and “not applicable”, respectively. This suggests that survey participants found this aspect relatively difficult to assess or, as expected, that they were not part of the top management team and thus were understandably not aware of the projects’ budget-related issues.

According to the CSF model (Fig. 1), metrics describing product-related factors were:

- Overall quality
- Business and revenue generation
- Functional suitability
- Reliability
- Performance
- Usability (ease of use)
- Security
- Compatibility
- Maintainability
- Transferability

Variables describing satisfaction of various stakeholders were as follows:

- Customer (user) satisfaction
- Team satisfaction
- Top management satisfaction

The survey asked the respondents about product characteristics and the level of stakeholders’ satisfaction. Figure 12 shows the results. As shown by the median values, the overall quality of the products/services, as perceived by project members, was mostly high or average.

Business and revenue generation is also rated high by participants. However, we should note that some participants found this project success variable difficult to assess, as 9 and 6 of the data points were “I do not know” and “not applicable”, respectively.

Transferability, with a median of ‘average’, is the lowest-rated of the product-related variables. The histograms for the remaining product-related variables are visibly concentrated towards the high end of the scale, i.e., these variables were rated high by most participants. The relatively high scores for performance and reliability indicate that respondents were mostly satisfied with these quality aspects of the delivered software/service.

Overall, the level of satisfaction of the various stakeholders (i.e., top management, customers, and team members) with the project outputs is high or average in most projects.

We also wanted a single metric to measure the “overall” success of a given project (data point in the dataset), in order to assess and differentiate the success distributions of the projects. For this purpose, we calculated an aggregated metric for each project by averaging all of its 17 success variables and then normalizing the value into the range [0, 1]. Since we used Likert scales, we assumed that the response data could be treated as an interval scale.Footnote 1 We assigned the value ‘4’ to the most positive endpoint of the scale, i.e., when the response indicated that the project was 100% successful with regard to the indicated factor, and the value ‘0’ to the most negative endpoint, i.e., when the response indicated that the project was 0% successful (or 100% unsuccessful) with regard to that factor. For normalization, we divided the average by four. For example, for a data point whose 17 success values are {4, 3, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 1, 4, 4, 4}, the values sum to 64, so the aggregated metric is 64/17 ≈ 3.76 out of 4, or 0.94 after normalization, indicating a highly successful project.
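A minimal Python sketch of the aggregated success metric follows. It assumes that the 17 success scores of a data point have already been coded on the 0 to 4 scale described above and that all 17 are present; how missing answers were treated in the aggregation is not stated here, so the sketch simply rejects incomplete input. The function name `aggregated_success` is hypothetical, and the input is the example data point from the text.

```python
from statistics import mean
from typing import Sequence, Tuple

# 4 process + 10 product + 3 stakeholder-satisfaction variables (see Table 2 codes).
NUM_SUCCESS_VARIABLES = 17


def aggregated_success(scores: Sequence[int], max_score: int = 4) -> Tuple[float, float]:
    """Return (mean score on the 0..max_score scale, mean normalized to [0, 1])."""
    if len(scores) != NUM_SUCCESS_VARIABLES:
        raise ValueError(f"expected {NUM_SUCCESS_VARIABLES} scores, got {len(scores)}")
    if any(not 0 <= s <= max_score for s in scores):
        raise ValueError("each score must lie between 0 and max_score")
    avg = mean(scores)
    return avg, avg / max_score


# The example data point from the text.
example = [4, 3, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 1, 4, 4, 4]
avg, normalized = aggregated_success(example)
print(round(avg, 2), round(normalized, 2))  # 3.76 0.94
```

Treating the Likert codes as interval data is what makes taking the mean meaningful here, which is exactly the assumption stated in the paragraph above.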

As shown in Fig. 13, the overall success measures of the majority of the projects (in the original dataset) are above the middle line, denoting that the data pool in general is slightly skewed towards more successful projects. Since we did not filter the projects entered in the survey based on their success (the survey was open to any project), this skew is simply an outcome of the data collection. The purpose of and need for the ‘balanced’ dataset in Fig. 13 are discussed in the next section.

4.3 Creating a balanced dataset based on project success metrics

As reported above, our original dataset is skewed towards successful projects and thus is not “balanced” in terms of the aggregated project success metric, as visualized in Fig. 13. For the analysis reported in the rest of this paper, in addition to the original dataset, we wanted a dataset that is balanced in terms of the aggregated success metric, i.e., one with a similar number of more successful and less successful projects. Having such a balanced dataset allows us to compare the findings based on the original dataset (skewed towards successful projects) with those based on the balanced dataset. Our approach to deriving the balanced dataset from the original dataset was as follows.

We sorted all the data points (projects) in the original dataset by their aggregated success metric. The minimum and maximum values were 0.53 and 3.76 (out of 4), respectively. We then set the middle cut-off (partition) point at 2.0, the midpoint of the 0 to 4 scale. The more successful category comprised the projects with a success metric >2.0; similarly, the less successful category comprised those with a success metric <2.0. Of the 101 projects, 80 were placed in the more successful category and the remaining 21 in the less successful category. To create a balanced (sub)dataset, we kept the 21 projects in the less successful category, randomly sampled 21 projects from the more successful category, and joined the two sets to create a balanced dataset with 42 projects. Figure 13 shows the boxplots of the aggregated success metrics for both the original and the balanced dataset. As shown in Fig. 13, the balanced dataset is ‘almost’ balanced in terms of the aggregated project success metric: the points (projects) on the two sides of the middle line are not exactly symmetric, so we do not have a ‘perfectly’ balanced case. In the rest of our analysis, we therefore use both the original dataset, which includes all the projects (i.e., 101 data points), and the balanced dataset, which includes an equal number of less and more successful projects (i.e., 42 data points).

We should also mention that repeating the random sampling of 21 projects from the set of 80 more successful projects could yield a slightly different balanced dataset. To avoid further complexity in our analysis, we picked one such sample and used it as the balanced dataset in the analysis of our RQs. Note that, as discussed in Section 3.2, for replicability purposes we have made our raw dataset, which also includes the balanced dataset, available in an online resource (Garousi et al. 2016d).
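The partitioning and sampling steps above can be sketched as follows. This is an illustration under assumptions, not the authors' procedure: the function `balanced_subset`, the fixed random seed (used only so that one particular sample can be reproduced), and the synthetic success values are hypothetical, and projects lying exactly on the 2.0 cut-off are left out because the text assigns them to neither category.

```python
import random
from typing import Dict, List


def balanced_subset(success: Dict[str, float], cutoff: float = 2.0, seed: int = 42) -> List[str]:
    """Keep every project below the cut-off plus an equal-sized random sample of those above it.

    Projects exactly at the cut-off belong to neither group and are left out.
    """
    less = [p for p, s in success.items() if s < cutoff]
    more = [p for p, s in success.items() if s > cutoff]
    if len(more) < len(less):
        raise ValueError("not enough more-successful projects to sample from")
    rng = random.Random(seed)  # fixed seed: re-running reproduces the same sample
    return less + rng.sample(more, len(less))


# Synthetic scores on the 0..4 scale mirroring the reported split: 21 below and 80 above 2.0.
scores = {f"P{i:03d}": 1.5 for i in range(21)}
scores.update({f"P{i:03d}": 3.0 for i in range(21, 101)})

balanced = balanced_subset(scores)
print(len(balanced), sum(scores[p] < 2.0 for p in balanced))  # 42 21
```

Changing `seed` corresponds to the "slightly different balanced dataset" mentioned above; fixing it is one simple way to make a single chosen sample reproducible.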

5 Results and findings

We present the results of our study and answer the three RQs in this section. For brevity, and to avoid repeating the long names of the factors throughout, we refer to factors and variables by codes. The table of codes is provided in Appendix Table 11 (e.g., OF.01 for the ‘Top management support’ factor under organizational factors).

5.1 RQ 1: Correlations between CSFs and project success

For RQ 1, we wanted to assess how strongly CSFs and project success metrics are correlated. To tackle RQ 1, we conducted a correlation analysis between the four categories of CSFs and the three categories of variables describing project success. We do this first for the original dataset (Section 5.1.1) and then for the balanced dataset (Section 5.1.2), whose creation was described in Section 4.3. In addition, we analyzed the presence of cross-correlation among the proposed CSFs, for both the original and balanced datasets, in Section 5.1.3.

5.1.1 Analysis using the original dataset

Table 2 presents the correlation coefficients between proposed CSFs and the variables describing project success. As reviewed in previous sections and shown in Table 2, there are 24 CSFs, categorized into four categories: organizational factors (OF in Table 2), team factors (TF), customer factors (CF), and contingency (project) factors (PF). In the CSF model (Fig. 1), there are 17 variables describing project success, categorized into three categories: (1) process quality (ProcF.01, …, ProcF.04 in Table 2), (2) product quality (ProdF.01, …, ProdF.10), and (3) satisfaction of stakeholders (SF.01, ..., SF.03). Since our data is ordinal, we used Spearman’s rank correlation (rho) to assess the association (relationship) among the variables. We also calculated the level of significance for each correlation and show them in Table 2: ‘*’ (p value <= 0.05) and ‘**’ (p value <= 0.01).
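For readers who want to reproduce such coefficients, Spearman's rho can be computed as the Pearson correlation of the ranks, with tied values receiving the average of the ranks they span (ties are common with Likert data). The following standard-library Python sketch uses hypothetical helper names and invented scores; it is not the tool used in the study. In practice, `scipy.stats.spearmanr` computes the same coefficient together with a p value.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Assign 1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value in sorted order is a tie.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # 0-based positions start..end -> mean 1-based rank
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson's r computed on tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sx * sy)


# Hypothetical Likert scores of one CSF and one success variable over six projects.
csf = [2, 3, 3, 4, 5, 5]
success = [1, 2, 3, 3, 4, 5]
print(round(spearman_rho(csf, success), 3))
```

Because only ranks enter the computation, the coefficient does not depend on the numeric spacing of the Likert codes, which is why it suits ordinal data.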

We should note the issue of a possible family-wise error rate in this analysis, which is “the probability that the family of comparisons contains at least one Type I error” (Howell 2013). Since we have assessed the pair-wise correlations (Spearman’s rho) between the proposed CSFs (rows) and the variables describing project success (columns) in Table 2, one should be careful about the family-wise error rate here. One of the widely-used procedures for controlling this error is the Bonferroni correction (Howell 2013), in which the threshold for acceptable p values of the individual pair-wise correlations is divided by the total number of comparisons (see page 261 of (Malhotra 2016)). The number of comparisons in Table 2 is 24 × 17 = 408. We found that, when applying this Bonferroni correction to the chosen p value levels of 0.05 and 0.01, none of the correlations in Table 2 remain significant.
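The Bonferroni adjustment described above amounts to dividing each significance level by the number of comparisons. A minimal sketch, with hypothetical helper names:

```python
NUM_CSFS = 24               # rows of Table 2
NUM_SUCCESS_VARIABLES = 17  # columns of Table 2


def bonferroni_alpha(alpha: float, num_comparisons: int) -> float:
    """Per-comparison significance threshold under the Bonferroni correction."""
    if num_comparisons < 1:
        raise ValueError("num_comparisons must be positive")
    return alpha / num_comparisons


def significant_after_bonferroni(p_value: float, alpha: float, num_comparisons: int) -> bool:
    """True if a single p value stays significant after the correction."""
    return p_value <= bonferroni_alpha(alpha, num_comparisons)


m = NUM_CSFS * NUM_SUCCESS_VARIABLES  # 408 pair-wise correlations
for alpha in (0.05, 0.01):
    print(f"alpha={alpha}: significant only if p <= {bonferroni_alpha(alpha, m):.3g}")
# The thresholds are about 1.23e-4 (for 0.05) and 2.45e-5 (for 0.01).
```

With 408 comparisons, a correlation that is significant at p = 0.001 on its own no longer passes, which illustrates how strict the correction is for a table of this size.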

For easier analysis, and to not rely exclusively on the level of significance, we classified the correlation values using a widely-used four-category scheme (Cohen 1988), as shown in Table 3 (None or very weak, Weak, Moderate, and Strong). The classified correlation values are shown directly in columns N, W, M, and S of Table 2. Based on these classified values, we added totals for each row and column in Table 2. For each CSF (row) and each project success variable (column), these totals show how strongly it is correlated with the factors in the other group.
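The classification step can be sketched as below. The exact boundaries of Table 3 are not reproduced in this section, so the sketch ASSUMES Cohen's (1988) conventional benchmarks for correlation magnitude (0.1, 0.3, and 0.5) and classifies by the absolute value of rho; the function names and the sample row are hypothetical.

```python
from collections import Counter
from typing import Sequence

# ASSUMPTION: Cohen's (1988) conventional benchmarks for |r|; Table 3's exact bounds may differ.
WEAK, MODERATE, STRONG = 0.1, 0.3, 0.5


def classify(rho: float) -> str:
    """Map a correlation to N (none/very weak), W (weak), M (moderate), or S (strong)."""
    magnitude = abs(rho)
    if magnitude < WEAK:
        return "N"
    if magnitude < MODERATE:
        return "W"
    if magnitude < STRONG:
        return "M"
    return "S"


def tally(rhos: Sequence[float]) -> Counter:
    """Count how many correlations in a row (or column) fall in each class."""
    return Counter(classify(r) for r in rhos)


# Hypothetical rho values for one CSF across a few success variables.
row = [0.05, 0.22, 0.35, 0.54, -0.12]
print(tally(row))
```

Applying `tally` to every row and every column yields the kind of N/W/M/S totals reported in Table 2.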

Out of the total of 408 combinations in Table 2, we found 168 statistically significant (p <= 0.05) associations (98 of which had a p value less than or equal to 0.01) between the proposed CSFs and the variables describing project success. That is, about 41% of the combinations (168/408) show a statistically significant correlation. Most of the associations were positive, with only a very small number of negative associations at a statistically significant level (i.e., eight statistically significant negative associations in total, one of which had p <= 0.01). According to Ahimbisibwe et al.’s CSF model (Ahimbisibwe et al. 2015), one would generally expect contingency (project) factors to be negatively correlated with project success metrics (see Fig. 1). However, this expectation was not observed in our dataset. One possible explanation could be the specific mix of successful/unsuccessful projects in our dataset (as reviewed in Section 4.2.3).

Only one contingency (project) factor (out of seven), i.e., PF.07 (software development methodology), is negatively correlated with four (out of 17) of the variables describing project success at a statistically significant level. Considering that the Likert scale used for PF.07 ranges from (1) Agile to (5) traditional plan-driven, these correlations suggest that the more traditional (plan-driven) a development methodology is, the lower the satisfaction tends to be among all three stakeholder types (users, team members, and top management). We should remind the reader that, as has been widely discussed in the research community, a high correlation does not necessarily imply causality. In the literature, “correlation proves causation” is considered a questionable-cause logical fallacy, also known as “cum hoc ergo propter hoc”, Latin for “with this, therefore because of this” (Hatfield et al. 2006). The issue is a subject of particularly active discussion in the medical community, e.g., (Novella 2009; Nielsen 2012). At the same time, many in the community believe that “Correlation may not imply causation, but it sure can help us insinuate it” (Chandrasekaran 2011). Thus, in this paper we only discuss the existence (or lack) of correlations between various project factors and project success, and we do not intend to imply causality; e.g., if the development methodology is plan-driven, we cannot necessarily say that stakeholder satisfaction will be low. In fact, no single CSF can guarantee project success; by focusing on and improving all CSFs, a project team can at best increase its chances of success.

Interestingly, one of the proposed CSFs, PF.05 (Project Factor – Specification Changes), was not associated with any of the variables describing project success at a statistically significant level (p <= 0.05). On the other hand, among the variables describing project success, ProcF.02 (Characteristics of the Process – Schedule) was associated with only one of the proposed CSFs at a statistically significant level (p <= 0.05), i.e., with OF.04 (Organizational Factor – Project Planning). This makes sense, since project planning and project schedule are closely related and, in general, more careful planning would lead to a more controlled schedule and on-time delivery. Here, the empirical data confirm the commonly held expectation.

In Table 2, we have also calculated the average correlation value for each row and each column. For each CSF (row) and project success variable (column), these averages show how strongly it is correlated with the factors in the other group. The highest possible value of this average correlation metric for a CSF is 1.0, which would denote that it has the strongest possible correlation (rho = 1.0) with all success variables. Thus, the average correlation metric is a suitable one for ranking the CSFs. We use these average correlation values when answering RQ 2 (Ranking of CSFs) in Section 5.2.
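The ranking by average correlation can be sketched as follows. The CSF labels and rho values in the example are invented for illustration and are not taken from Table 2, and the function name is hypothetical. The sketch averages the signed rho values, so that a CSF with strong positive correlations across all success variables approaches 1.0.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def rank_by_average_correlation(table: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Rank rows (CSFs) by the mean of their signed rho values, highest first."""
    averages = {name: mean(rhos) for name, rhos in table.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Invented values for illustration only (not taken from Table 2).
table = {
    "CSF_A": [0.10, 0.20, 0.30],
    "CSF_B": [0.50, 0.40, 0.30],
    "CSF_C": [-0.10, 0.00, 0.10],
}
for name, avg in rank_by_average_correlation(table):
    print(f"{name}: {avg:.2f}")
```

One consequence of averaging signed values is that positive and negative correlations of a CSF can offset each other; averaging absolute values would instead rank by strength regardless of direction.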

Almost all team factors (TF.01 … TF.07) are correlated with all variables describing satisfaction of various stakeholders. Interestingly, among all CSFs, only project monitoring and controlling (OF.05) is significantly and positively correlated with all variables describing project success in product characteristics and stakeholder satisfaction categories. We identified the five most-correlated pairs in Table 2 as follows:

-

OF.05 (Monitoring and controlling) and SF.03 (Top management satisfaction), with rho = 0.54. This denotes that better project monitoring and controlling is usually associated with higher top-management satisfaction.

-

TF.07 (Team’s experience with the development methodologies) and ProdF.08 (Compatibility), with rho = 0.52, which denotes that the higher the team’s experience with the development methodologies, the higher the ability of the delivered software to exchange information with other software systems/environments and/or to perform its required functions, which is also expected.

-

OF.04 (Project planning) and SF.03 (Top management satisfaction), with rho = 0.49, which denotes that better project planning is closely related to top management satisfaction.

-

TF.02 (Internal team communication) and ProcF.04 (Team building and team dynamics), with rho = 0.48, which denotes that the higher the quality of internal team communication, the better the team building and team dynamics at the end of the project.

-

OF.06 (Organization-wide change management practices) and SF.03 (Top management satisfaction), with rho = 0.47, which denotes that more mature organization-wide change management practices are closely related to top management satisfaction.
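Picking the most-correlated pairs from a table such as Table 2 amounts to flattening it into (CSF, success variable, rho) cells and sorting them. Below is a minimal sketch with hypothetical labels and values. Sorting by absolute value is our own choice, made so that strong negative correlations would not be missed; for all-positive top pairs such as those listed above, it gives the same order.

```python
def top_pairs(table, row_names, col_names, k=5):
    """Return the k (row, column, rho) cells with the largest |rho|."""
    cells = [
        (r, c, rho)
        for r, row in zip(row_names, table)
        for c, rho in zip(col_names, row)
    ]
    cells.sort(key=lambda cell: abs(cell[2]), reverse=True)
    return cells[:k]


# Hypothetical 3 x 2 table (not the study's data).
table = [[0.54, 0.10], [0.20, -0.30], [0.05, 0.49]]
best = top_pairs(table, ["X1", "X2", "X3"], ["Y1", "Y2"], k=3)
```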

5.1.2 Analysis using the balanced dataset

We also wanted to know to what extent the correlations between the proposed CSFs and project success would differ if we performed the analysis on the balanced dataset instead of the original one, since the project success metrics in the original dataset are skewed towards more successful projects, as discussed in Sections 4.2.3 and 4.3. Table 4 shows the correlation values and has the same layout as Table 2 (for the original dataset). Unlike Table 2, however, Table 4 also indicates whether correlations remain significant after applying the Bonferroni correction.
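The Bonferroni correction divides the significance level by the number of tests m (equivalently, it multiplies each p-value by m). The sketch below assumes, for illustration only, that the family of tests is the full table of 24 CSFs × 14 success variables (m = 336). The text does not state how the family was defined, and the p-values in the usage lines are hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni correction over one family of tests.

    Returns the per-test threshold, the adjusted p-values (capped at 1),
    and whether each test stays significant.
    """
    m = len(p_values)
    threshold = alpha / m
    adjusted = [min(1.0, p * m) for p in p_values]
    significant = [p <= threshold for p in p_values]
    return threshold, adjusted, significant


# Assumed family size: every CSF x success-variable pair in one table.
N_CSFS, N_SUCCESS_VARS = 24, 14
m = N_CSFS * N_SUCCESS_VARS          # 336 tests under this assumption
per_test_alpha = 0.05 / m            # about 0.000149

# Hypothetical p-values for a three-test family.
threshold, adjusted, significant = bonferroni([0.0001, 0.001, 0.04])
```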

We looked for strong correlations in Table 4 and identified nine correlation coefficients (rho values) of 0.6 or higher, which are shown as red bold values. Even with the Bonferroni correction, these nine correlations were significant at the 0.05 level (see Footnote 2). The strong correlations are between the following CSFs and project success metrics:

1. OF.04 (Project planning) vs. ProcF.04 (Team building and team dynamics) (with rho = 0.65)

2. OF.05 (Project monitoring / control) vs. ProcF.04 (Team building and team dynamics) (with rho = 0.68)

3. OF.05 (Project monitoring / control) vs. ProdF.03 (Functional suitability) (with rho = 0.60)

4. OF.05 (Project monitoring / control) vs. SF.02 (Team satisfaction) (with rho = 0.62)

5. OF.05 (Project monitoring / control) vs. SF.03 (Top management satisfaction) (with rho = 0.61)

6. OF.05 (Project monitoring / control) vs. SF.02 (Team satisfaction) (with rho = 0.62)

7. TF.01 (Team commitment) vs. ProcF.04 (Team building and team dynamics) (with rho = 0.61)

8. TF.06 (Expertise in task and domain) vs. ProdF.01 (Overall quality) (with rho = 0.67)

9. TF.06 (Expertise in task and domain) vs. ProdF.03 (Functional suitability) (with rho = 0.62)

When we compare the pairs listed above with the corresponding correlations in Table 2, none of them reaches 0.6 in the original dataset. Using the balanced dataset thus yields stronger (and significant) top correlations. This suggests that, when studying a population of projects that is “balanced” in terms of success metrics, one could expect to observe stronger top correlations between CSFs and project success metrics.

5.1.3 Cross-correlations among the CSFs

Another issue that we looked into was the presence of cross-correlations among the CSFs themselves. For this purpose, we calculated the correlation coefficient for each pair of the 24 CSFs, resulting in 276 (= 24 × 23 / 2) correlation values. As in the previous analysis, we calculated the cross-correlations among the CSFs for both the original and the balanced dataset.
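Enumerating the unordered CSF pairs explains the count of 276: with 24 CSFs there are C(24, 2) = 24 × 23 / 2 = 276 pairs. The minimal sketch below assumes the responses are held in a dictionary from CSF identifier to one rating per project. The identifiers are placeholders, and `corr` can be any function correlating two sequences (e.g., a Spearman implementation).

```python
from itertools import combinations
from math import comb


def cross_correlations(responses, corr):
    """Correlate every unordered pair of CSFs.

    responses: dict mapping a CSF id to its ratings (one rating per project).
    corr: any correlation function taking two equal-length sequences.
    """
    return {
        (a, b): corr(responses[a], responses[b])
        for a, b in combinations(sorted(responses), 2)
    }


# 24 CSFs yield C(24, 2) = 276 unordered pairs.
placeholder_ids = [f"CSF{i:02d}" for i in range(1, 25)]  # hypothetical IDs
n_pairs = len(list(combinations(placeholder_ids, 2)))
assert n_pairs == comb(24, 2) == 276
```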

Tables 5 and 6 show, for the two datasets, the breakdown of correlation coefficients into ‘None’, ‘Weak’, ‘Moderate’, and ‘Strong’ correlations. Due to the large number of CSFs and CSF pairs, we show the correlation values among all CSFs for the two datasets in Appendix Table 12. As Appendix Table 12 shows, many of the CSFs are, as expected, cross-correlated with other CSFs in both datasets.

In the original dataset, about 66% (= 49% + 16% + 1.5%) of the CSF pairs showed at least weak cross-correlations. We often observe higher correlation values among CSFs in the same CSF category, e.g., organizational factors or team factors. For example, TF.01 (Team commitment) and TF.02 (Internal team communication) have a moderate correlation of 0.49, denoting that, as expected, when one is higher, the other tends to be higher too. Since weak to moderate cross-correlations among many CSFs are expected and normal, we do not believe that they negatively affect our analysis or its results. On the contrary, these cross-correlations enabled us to check the plausibility of the respondents’ answers (“sanity check”), i.e., whether responses to CSFs that we expected to be correlated actually are correlated (e.g., see the above example).
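The ‘None/Weak/Moderate/Strong’ breakdown and the “at least weak” share (every category except ‘None’) can be computed as below. The cutoffs of 0.1, 0.3, and 0.5 on |rho| are an assumption for illustration and not necessarily the thresholds used in this study, although they do classify the TF.01/TF.02 value of 0.49 as moderate, consistent with the text. The input values in the usage line are hypothetical.

```python
from collections import Counter

CATEGORIES = ("None", "Weak", "Moderate", "Strong")


def strength(rho, cutoffs=(0.1, 0.3, 0.5)):
    """Classify |rho| using assumed (illustrative) cutoffs."""
    a = abs(rho)
    if a < cutoffs[0]:
        return "None"
    if a < cutoffs[1]:
        return "Weak"
    if a < cutoffs[2]:
        return "Moderate"
    return "Strong"


def breakdown(rhos):
    """Percentage of pairs per category, plus the 'at least weak' share."""
    counts = Counter(strength(r) for r in rhos)
    shares = {k: 100 * counts[k] / len(rhos) for k in CATEGORIES}
    at_least_weak = 100 - shares["None"]  # = Weak + Moderate + Strong
    return shares, at_least_weak


shares, at_least_weak = breakdown([0.05, 0.2, 0.49, 0.6])
```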

Similarly, in the balanced dataset, about 74% (= 49% + 20% + 5%) of the CSF pairs showed at least weak cross-correlations. Thus, in both cases, the cross-correlations supported the plausibility of the respondents’ answers (“sanity check”).

5.2 RQ 2: Ranking of CSFs based on their correlations with project success metrics

To better understand whether some of the proposed CSFs are more strongly associated with the variables describing project success than others, we ranked the proposed CSFs based on their average correlations, as calculated in Section 5.1.1 for the original dataset and shown in Table 2. To make the factors’ average correlations easier to compare, we visualize the average values of Table 2 in Fig. 14. Since we suspected that weak correlation values could add noise to the plain averages, we also calculated “weighted” averages and show them in Fig. 14 too. For the weighted average, we used the following weights: 1 for none or very weak (N) correlations, 2 for weak (W) correlations, 3 for moderate (M) correlations, and 4 for strong (S) correlations.
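One possible reading of the weighted average is sketched below: each rho is weighted by its strength class (1 = N, 2 = W, 3 = M, 4 = S), and the weighted sum is divided by the sum of the weights. Both this normalization and the |rho| cutoffs are assumptions, since the text specifies only the weights. The two rho values are hypothetical.

```python
def strength_weight(rho, cutoffs=(0.1, 0.3, 0.5)):
    """Map |rho| to the weights 1 (N), 2 (W), 3 (M), 4 (S).

    The cutoffs are assumed for illustration, not taken from the study.
    """
    a = abs(rho)
    if a < cutoffs[0]:
        return 1
    if a < cutoffs[1]:
        return 2
    if a < cutoffs[2]:
        return 3
    return 4


def plain_and_weighted_average(rhos):
    """Plain mean, and mean weighted by correlation strength class."""
    plain = sum(rhos) / len(rhos)
    weights = [strength_weight(r) for r in rhos]
    weighted = sum(w * r for w, r in zip(weights, rhos)) / sum(weights)
    return plain, weighted


plain, weighted = plain_and_weighted_average([0.05, 0.5])
```

With these inputs, the strong value dominates the weighted average (0.41 versus a plain mean of 0.275), which is the intended effect of down-weighting weak, possibly noisy correlations.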

Fig. 14 shows that, according to the plain average values, the three CSFs with the highest average correlations are: (1) OF.05 (Project monitoring and controlling), (2) OF.04 (Project planning), and (3) TF.07 (Team’s experience with the development methodologies). According to the weighted average values, the top-three list changes only slightly, differing in one factor: OF.05 is still ranked first, but the 2nd and 3rd ranked CSFs are TF.07 and TF.06 (Expertise in task and domain).

We also compare in Table 7 our ranking of the proposed CSFs with the ranking of CSFs reported by Ahimbisibwe et al. (Ahimbisibwe et al. 2015). The definitions of our CSFs largely correspond to those in (Ahimbisibwe et al. 2015) (as discussed in Section 2.3). We have, however, only 24 CSFs compared to the 27 CSFs in (Ahimbisibwe et al. 2015), since, as discussed in Section 2.3, we “merged” a few similar CSFs of Ahimbisibwe et al. (Ahimbisibwe et al. 2015). The rankings of CSFs in (Ahimbisibwe et al. 2015) were based on frequency counts, i.e., how often a CSF is mentioned in the surveyed literature. Rather than asking our survey participants which CSFs they would propose and how important they consider them, which could yield subjective and imprecise answers, we provided them with the set of factors and asked them to what degree the factors correlated with the success of projects. These two different ranking methods have produced quite different rankings. Our way of ranking the proposed CSFs can be considered complementary to the one proposed in (Ahimbisibwe et al. 2015).

We visualize in Fig. 15 the CSF rankings in our study versus those in (Ahimbisibwe et al. 2015). The two rankings are quite different, and no visual similarity is apparent. We also wanted to check whether a CSF ranked high in one ranking is necessarily ranked high in the other. A correlation analysis using Pearson’s correlation coefficient did not show any significant correlation between the two rankings (r = 0.17). Despite the lack of a significant overall correlation, the scatter plot in Fig. 15 shows that some factors lying near the bisector (diagonal) of the plot have similar rankings in our study and in Ahimbisibwe et al. (Ahimbisibwe et al. 2015). For example, OF.04, OF.05, and OF.06 were all ranked in the top 10 in both rankings, while PF.03, PF.04, and PF.05 were ranked in the bottom 10 in both.
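The significance check on r = 0.17 can be approximately reproduced with the usual t-statistic for a correlation coefficient, t = r·sqrt(n − 2)/sqrt(1 − r²). The sketch assumes n = 24 paired ranks (our 24 CSFs matched to their counterparts) and compares t against the two-sided 5% critical value for 22 degrees of freedom (about 2.074, from standard t tables). Note that Pearson’s r computed on untied ranks coincides with Spearman’s rho. The four-item rankings in the last line are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson's r for two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t-statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


T_CRIT_DF22 = 2.074  # two-sided alpha = 0.05, df = 22 (standard t table)

t = t_statistic(0.17, 24)
print(round(t, 2), abs(t) > T_CRIT_DF22)  # prints: 0.81 False

r_toy = pearson([1, 2, 3, 4], [2, 1, 4, 3])  # hypothetical rankings
```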

Fig. 15 Visualization of rankings of CSFs in our study (with original dataset) versus the ranking in (Ahimbisibwe et al. 2015)

5.3 RQ 3: Ranking of project success metrics based on strength of association with CSFs

To better understand whether some of the project success metrics are more strongly associated with the set of proposed CSFs than others, we calculated the average correlation value for each project success metric in both datasets, i.e., the average of all values in each column of Tables 2 and 4.

Table 8 shows the average values and the rankings for both datasets. Using the original dataset, the top three project success metrics with the largest average associations with the proposed CSFs are: (1) SF.02 (Team Satisfaction), (2) ProcF.04 (Team building and team dynamics), and (3) SF.03 (Top management satisfaction). Using the balanced dataset, the top three project success metrics are: (1) ProcF.04, (2) SF.02, and (3) ProdF.04 (Reliability).

Interestingly, the two rankings are quite similar. To compare them, we visualize the two rankings as a scatter plot in Fig. 16, which illustrates their similarity. Two metrics lie outside the general trend in this scatter plot: SF.01 (User satisfaction) and ProdF.05 (Performance efficiency). SF.01 is ranked 12th in one dataset and 4th in the other, and ProdF.05 is ranked 13th in one dataset and 4th in the other. We could not find any interpretation for these observations in our data.
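Metrics that lie outside the general trend of two rankings can be identified explicitly by comparing each metric’s positions in the two rankings. Below is a minimal sketch; the metric names, the ranks, and the `min_shift` cutoff of 5 positions are all hypothetical.

```python
def rank_shifts(rank_a, rank_b, min_shift=5):
    """Metrics whose rank differs by at least min_shift between two rankings."""
    return {
        metric: abs(rank_a[metric] - rank_b[metric])
        for metric in rank_a
        if abs(rank_a[metric] - rank_b[metric]) >= min_shift
    }


# Hypothetical ranks of four metrics under two datasets.
original = {"M1": 1, "M2": 2, "M3": 12, "M4": 4}
balanced = {"M1": 2, "M2": 1, "M3": 4, "M4": 3}
outliers = rank_shifts(original, balanced)
```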

6 Discussions and implications of the findings

In this section, we discuss the summary of our findings, lessons learned, and potential threats to the validity of our study.

6.1 Summary of findings and implications

Our findings regarding RQ 1 are summarized below. Note that all observations and results elaborated in Section 5 are based on certain characteristics (the profile) of the dataset; e.g., as discussed in Section 4.2, our dataset mostly includes small to medium projects, mostly concerning new development, with low to medium technological uncertainty and moderate to high technological complexity. Thus, our observations are specific to this dataset, and our findings should be generalized with care.

-

The five most-correlated pairs of CSF and project success metrics (from the original dataset) were (Table 2): (1) OF.05 (Monitoring and controlling) and SF.03 (Top management satisfaction); (2) TF.07 (Team’s experience with the development methodologies) and ProdF.08 (Compatibility); (3) OF.04 (Project planning) and SF.03 (Top management satisfaction); (4) TF.02 (Internal team communication) and ProcF.04 (Team building and team dynamics); and (5) OF.06 (Organization-wide change management practices) and SF.03 (Top management satisfaction). These findings suggest which issues project managers may want to prioritize if they want to increase their chances of project success.

-

The level of specification (requirements) changes (i.e., volatility) was not significantly correlated with any of the project success metrics. Further research is needed to find out whether project success is indeed unrelated to the level of specification changes or whether this result is specific to our sample population.

-

Schedule, as a variable describing project success, is significantly related to only one CSF, namely project planning. That the level of project planning is associated with schedule variance might be an anticipated outcome.

-

Interestingly, among all CSFs, only project monitoring and controlling is significantly and positively correlated with all variables describing project success in product characteristics and stakeholder satisfaction categories.

-

In general, CSFs in organizational and team factors categories have more moderate to strong correlations with the variables describing project success than the ones in customer and project CSF categories.

-

In the balanced dataset, the top six strong correlations between CSFs and project success metrics were: (1) OF.04 (Project planning) and ProcF.04 (Team building and team dynamics); (2) OF.05 (Project monitoring/control) and ProcF.04 (Team building and team dynamics); (3) OF.05 (Project monitoring/control) and SF.02 (Team satisfaction); (4) TF.01 (Team commitment) and ProcF.04 (Team building and team dynamics); (5) TF.06 (Expertise in task and domain) and ProdF.01 (Overall quality); and (6) TF.06 (Expertise in task and domain) and ProdF.03 (Functional suitability).

-

In both the original dataset and the balanced dataset, we observed higher correlation values often among CSFs in the same CSF category, e.g., organizational factors, or team factors.

Our findings regarding RQ 2 are summarized as follows:

-