Abstract

Research and development are central to economic growth, and a key challenge for countries of the global South is that their research performance lags behind that of the global North. Yet, among Southern researchers, a few significantly outperform their peers and can be styled research “positive deviants” (PDs). In this paper we ask: who are those PDs, what are their characteristics and how are they able to overcome some of the challenges facing researchers in the global South? We examined a sample of 203 information systems researchers in Egypt who were classified into PDs and non-PDs (NPDs) through an analysis of their publication and citation data. Based on six citation metrics, we were able to identify and group 26 PDs. We then analysed their attributes, attitudes, practices, and publications using a mixed-methods approach involving interviews, a survey and analysis of publication-related datasets. Two predictive models were developed using partial least squares regression; the first predicted whether a researcher is a PD using individual-level predictors, and the second predicted whether a paper was authored by a PD using publication-level predictors. PDs represented 13% of the researchers but produced about half of all publications, and had almost double the citations of the overall NPD group. At the individual level, there were significant differences between the two groups with regard to research collaborations, capacity development, and research directions. At the publication level, there were differences relating to the topics pursued, publication outlets targeted, and paper features such as length of abstract and number of authors.

Introduction

A nation’s scientific research capability, characterised by its direct engagement in the creation of knowledge, plays a vital role in its sustainable economic development, and the strong correlation between science and technology development and economic development is well documented (King, 2004; Man et al., 2004). Scientific research is required both to create the new technologies and techniques that increase local productivity and economic growth, and to adapt technologies imported from abroad (Goldemberg, 1998). A necessary part of this, in order to build a strong knowledge society with a thriving ‘culture of science’, is the publication and dissemination of research results (Salager-Meyer, 2008).Footnote 1

A clear research divide is visible between the global SouthFootnote 2 and the global North. This can be seen in terms of research investment and capability. For example, the average national expenditure on research and development from 2005 to 2014 was 1.44% of GDP in Northern countries but only 0.38% of GDP in Southern countries (Blicharska et al., 2017), while the number of researchers per million population in 2017 was 4,351 in the global North and 713 in the global South (World Bank, 2020). The divide is also manifest in scientific outputs. In 2018, global North countries produced an average of more than 35,000 scientific and technical journal articles per country while global South countries produced an average of 9,700, or 4,000 if China and India are excludedFootnote 3 (World Bank, 2020). Despite some signs of progress, there also remains an important gap in terms of per-country and per-researcher citation rates between North and South (Confraria et al., 2017; Gonzalez-Brambila et al., 2016). The divide in terms of highly-cited outputs is even starker, with global South researchers (again excluding China and India) authoring less than 2% of the top 1% most-cited articles globally (National Science Board, 2018).

It is this latter issue—low citation rates for Southern research—that forms our particular focus in this paper, and for which a number of explanations have been put forward. Statistical evidence shows that the lower levels of investment and lower relative populations of researchers in the global South are key factors; the latter issue is exacerbated by the brain drain of Southern researchers who relocate to the global North (Man et al., 2004; Pasgaard & Strange, 2013; Salager-Meyer, 2008). Lower levels of English language proficiency are also a factor, given the skew of international journal publication towards English (Confraria et al., 2017; Gonzalez-Brambila et al., 2016; Man et al., 2004). Other recognised institutional exclusion factors and/or biases against Southern researchers include difficulty in securing research grants (Karlsson et al., 2007), and a greater likelihood that reviewers and editors of mainstream scientific journals will reject a paper from a global South institution than a paper of equivalent quality from a global North institution (Gibbs, 1995; Leimu & Koricheva, 2005).

Among the valuable research conducted on this issue to date, there have been three main approaches: country-level statistical analysis, paper-level statistical analysis, and individual-level analysis. While the last of these includes author-related factors, Southern researchers as individuals are rarely investigated. In particular, there has been no previous research focusing on “exceptions to the rule”: those few Southern researchers who are able to achieve much higher research performance than their peers. Pre hoc, it is reasonable to hypothesise that such researchers could provide valuable insights and lessons that might help to better understand and even mitigate the current North–South divide in research outputs and citation. It is therefore the purpose of this paper to specifically study these exceptions by investigating what characterises high-performing researchers and their publications in a global South context.

In order to do this, we make use of the “positive deviance” (PD) approach, given that this attempts to systematically identify and learn from “outliers”—individuals who are performing substantially better than expected and better than their peers, given the resources and socio-economic conditions they are exposed to (Sternin et al., 1997). First used at scale in order to learn from Vietnamese families with well-nourished children in contexts of widespread malnutrition (Sternin, 2002), the positive deviance approach has subsequently spread to other domains (Albanna & Heeks, 2019). However, the conventional PD approach relies heavily on primary data collection to develop a baseline from which positive deviants (PDs) are identified—a process that is both time- and labour-intensive with costs directly proportional to sample size. Recent developments in the availability of digital datasets have presented new possibilities for the identification and understanding of PDs (Albanna & Heeks, 2019). This new digital data-powered approach to positive deviance was seen as particularly relevant for investigation of scientific researchers given the existence of platforms that digitally index and/or analyse the scholarly work of researchers, enabling evaluation of their performance through multiple dimensions and metrics.

For this study, we chose a sample of 203 information systems (IS) researchers from Egypt to identify factors that enabled a few positive deviants to outperform their peers. Positive deviants are defined as researchers who outperform their peers in both productivity (articles published) and impact (article citations). They were identified based on six citation metrics that take into account those two dimensions of performance in different ways. We conducted an analysis of the researchers’ attributes, attitudes, practices, and publications, based on a mixed-methods approach that employed interviews, surveys, and analysis of publication-related datasets. Two methodological innovations were developed in this study. The first was the use of multiple performance metrics in identifying PDs, which enabled us to profile PDs into groups based on those metrics. The second was the identification of extrinsic and intrinsic predictors of PDs’ publications as a way of understanding and reflecting on some of their publication strategies. Hence, this paper has two main contributions. The first is contextual, which is the identification of predictors of high performers or PDs in a Southern country, who face challenges different from those facing researchers in Northern countries. And the second is methodological, where a combination of multiple performance indicators and a number of data sources were used to develop a holistic approach for identifying, profiling and characterising PDs.

In what follows, we first present a review of related work on high-performing researchers before explaining the data sources and methodology of this data-powered positive deviance approach. The methodology steps are then undertaken: defining the study focus, determining the positive deviants, and discovering the features of positive deviants and their published papers. We end with discussion and conclusions.

Related work

There has been a substantial body of research on the predictors of individual-level high research performance over the last four decades. While the terminology of “positive deviants” has not been used, analogous concepts and synonymous terms have been. Relevant literature on highly productive academics includes work studying their attitudes, practices and perceptions in 11 European countries (Kwiek, 2016), and their attributes, perceptions and structural predictors in China, Japan and South Korea (Postiglione & Jung, 2013); a series of studies that investigated the characteristics and work habits of the top (three or four) educational psychology researchers in the US (Kiewra & Creswell, 2000; Patterson-Hazley & Kiewra, 2013) and in Germany (Flanigan et al., 2018); and a paper on the strategies and attributes of highly productive academics in school psychology, who were mainly Americans (Martínez et al., 2011). Other research that has looked into top performers includes Kwiek’s study (2018), which investigated both individual and institutional variables to identify predictors of research success for the top 10% of Polish academics, and Kelchtermans and Veugelers’ (2013) study on top performing Belgian researchers, which investigated the effects of co-authorship, gender and previous top performance. There are also studies on research stars, such as the study by Yair et al. (2017) on Israeli Prize laureates in life and exact sciences, and the study by White et al. (2012) on American researchers in business schools, where individual and situational variables were explored. High achievers were identified in a study by Harris and Kaine (1994) that investigated the preferences and perceptions of high-performing Australian university economists, and highly cited scientists were studied by Parker et al. (2010) and Parker et al. (2013), who sought to identify the social characteristics and opinions of the 0.1% most cited environmental scientists and ecologists worldwide. Eminent scientists were studied by Prpić (1996) to explore the most important predictors of productivity among Croatian scientists, and top-producing researchers were identified in a study by Mayrath (2008), which aimed to understand the attributes of the authors having the most publications in educational psychology journals.

Beneath the factors identified in these studies have lain theoretical models proposed to explain the research performance and outperformance observed, of which three will be mentioned here. The sacred spark theory (Cole & Cole, 1973) states that highly productive researchers have an inner drive and motivation to do science that is fuelled by their love of the work. Other theories look more at the external environment. Utility maximisation theory (Kyvik, 1990) argues that the extent to which researchers research and publish—as opposed to other activities—is determined by the personal utility or benefit they perceive themselves getting; that utility often being significantly determined by external incentives or disincentives that attach to the different activities. Cumulative advantage theory (Merton, 1968) is somewhat similar in identifying external reward systems, and their reinforcement or otherwise of research and publication activity, as important (Cole & Cole, 1973). But it sees researchers who begin with some advantage (either innate or external) being increasingly more productive over time compared to others as they gain further advantage, such as greater likelihood of obtaining research grants, or participating in collaborations.

As summarised next, this work has been of significant value in providing insights into high-performing researchers. However, we can also identify three lacunae which the current paper seeks to address.

First, geographic. It is evident from the above that there is a geographic concentration of such studies on high-income countries of the global North: the one study including China is the sole exception. There has thus been practically no consideration of research performance in the resource-constrained countries of the global South. Addressing this unexplored topic is particularly pressing, given the imperative to improve the contribution of research to national development in these countries, and given that findings from such a study might lead to new context-aware predictors of high research performance that could mitigate some of the challenges reflected in the current North–South divide. Hence the justification for the current paper.

Second, methodological in relation to the dependent variable of performance measurement. The majority of studies identify and rank high-performing researchers based on (i) productivity, as measured by number of articles published (e.g. Harris & Kaine, 1994; Kwiek, 2016; Postiglione & Jung, 2013; Prpić, 1996) or, in the case of some studies, (ii) impact, as measured by number of citations (e.g. Parker et al., 2010, 2013). There are clearly benefits in incorporating both productivity and impact measures, yet this was rarely found in the literature reviewed (Altanopoulou et al., 2012). Recent advances in citation metrics and availability of tools such as Harzing’s Publish or Perish software (Harzing, 2007) provide an opportunity to measure performance along different dimensionsFootnote 4 and using combined measures. The current study therefore combines a number of citation metrics to evaluate researchers, enabling a balanced consideration of both productivity and impact and allowing control for factors like article and author age.

Third, methodological in relation to the independent variables or predictors. Table 1 presents the significant predictors of high performers in research, identified from previous studies and forming a foundation for modelling and analysis for the current study. These can be grouped into seven main categories: Personal or Demographic characteristics such as age, gender and education; Internationalisation and Research Collaboration such as participation in domestic or international research teams; Research Engagement with publishing entities; Research Approach including focus; Academic Roles covering distribution of time between different academic activities; Practices associated with undertaking research; and Institution predictors related to work environment actuality or preferences.

These predictors were almost all identified using qualitative methods such as interviews (Flanigan et al., 2018; Kiewra & Creswell, 2000), quantitative methods such as surveys (Harris & Kaine, 1994; Kwiek, 2016, 2018; Postiglione & Jung, 2013; Prpić, 1996) or a mix of both (Martínez et al., 2011; Mayrath, 2008; Patterson-Hazley & Kiewra, 2013). What is consistent here is the focus on individual researcher-level data. Largely missing has been publication-level data.

Yet there are a number of reasons for thinking that adding in publication-level data can provide valuable additional insights. Publication data can provide insights into some of the individual-level predictors: for example, the amount and type of research collaboration undertaken by high-performing researchers compared to other researchers. Past studies also identify three main groups of high citation predictors: author, journal and paper (Onodera & Yoshikane, 2015; Walters, 2006) and there is some evidence that within these predictors, the most important are those related to the paper (Stewart, 1983). Publication analysis can therefore determine whether the papers of high-performing researchers match characteristics of papers known to be associated with high citation rates, such as title length (Elgendi, 2019), paper length (Onodera & Yoshikane, 2015), number of references (Didegah & Thelwall, 2013) and figures (Haslam et al., 2008), coverage of certain topics (Mann et al., 2006) and keywords (Hu et al., 2020), number of authors (Peng & Zhu, 2012), quality of journal (Davis et al., 2008), etc. Additionally, predictors relevant to the global North–South divide have been identified in a number of paper-focused studies which found that the author’s nationality (whether from the United States or not) (Walters, 2006), the regional focus of the articles (focusing on the United States or Europe) and the language of the journal (Van Dalen & Henkens, 2005) had a significant positive effect on the average citations.

Three particular theories are employed by these studies to examine the factors affecting publication citation. The normative view (Hagstrom, 1965; Kaplan, 1965; Merton, 1973) assumes that science is a normative institution governed by internal rewards and sanctions (Baldi, 1998). According to this perspective, the intrinsic characteristics of papers (i.e. content/quality) are the main driver of citations. The social constructivist view (Gilbert, 1977; Knorr-Cetina, 1981; Latour, 1987) argues that scientific knowledge is socially constructed through the manipulation of political and financial resources, and that citations are used as persuasion tools (Peng & Zhu, 2012). Citing behaviour in this view is driven by a paper’s extrinsic characteristics, such as the location of the cited paper’s author within the stratification structure of science, that would convince the reader of the validity of the arguments (Baldi, 1998). The natural growth mechanism (Glänzel & Schoepflin, 1995) states that citations are driven mainly by the interaction between publication-level time-dependent factors and factors related to the publication outlets. It holds that the characteristics of academic journals (e.g. journal prestige, self-citation rate, maturation speed) will interact with time to affect citations of papers (Peng & Zhu, 2012).

All of this supports incorporation of publication-level predictors of outperformance when considering what predicts outperformance of individual researchers. In this study we therefore use a combination of researcher-level data gathering (via interview and survey) and publication-level analysis, in order to provide a fuller picture of research performance predictors.

Methodology and data

The positive deviance approach consists of five steps: “(1) Define the problem, current perceived causes, challenges and constraints, common practices, and desired outcomes. (2) Determine the presence of positive deviant individuals or groups in the community. (3) Discover uncommon but successful practices and strategies through inquiry and observation. (4) Design activities to allow community members to practice the discovered behaviours. (5) Monitor and evaluate the resulting project or initiative” (Positive Deviance Initiative, 2010). Time and resourcing constraints meant that only the first three steps could be essayed in this study. We also diverged from the traditional approach by using this study as a testbed for what we will call the “data-powered positive deviance” (DPPD) methodology. Where traditional PD relies on freshly- and specifically-gathered field data, the idea behind DPPD is that it uses pre-existing digital data sources instead of—or in conjunction with—traditional data sources. It uses digital datasets to identify positive deviants (those performing unexpectedly well in a specific outcome measure that is digitally recorded, mediated or observed) and potentially also to understand the characteristics and practices of those PDs if digitally recorded (Albanna & Heeks, 2019). The potential of DPPD is that it can mitigate some of the challenges of traditional PD approaches by reducing time, cost and effort, and can add to the positive deviance approach by identifying positive deviants in new ways and domains (Albanna & Heeks, 2019).

Scientometrics—the field of study that focuses on measuring and analysing scientific literature (‘Scientometrics’ 2020)—is well suited for a positive deviance approach because, as reflected in the discussion of literature above, research performance does not follow a normal distribution (O’Boyle & Aguinis, 2012). Instead, it follows a Pareto or power distribution characterised by strong skewness with a long tail to the right that includes a number of high-performing outliers; sufficient to provide a sample of positive deviants. Scientometrics is well suited to DPPD specifically for two reasons. First, because the proliferation of electronic research databases has made it possible to develop scientific evaluation indicators that can be used to digitally measure the performance of researchers (e.g. h-index) and journals (e.g. impact factor). Second, because the emergence of advanced data analytics tools alongside the emergence of a variety of large scale datasets (such as citations, references, publication outlets, usage data, paper content, etc.) has made it possible to not only measure performance, but to also analyse the practices of the researchers, characterise their scientific outputs, and predict their future performance. Specifically, this can be rendered possible through techniques such as network analysis, topic modelling, predictive analytics and co-citation analysis.

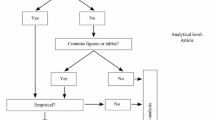

As discussed above, in this study we used a mixed-methods approach to identify PDs and to analyse their practices. In the Define step, we used secondary data from Egyptian university websites and from Google Scholar to set the frame of Egyptian researchers for analysis. In the Determine step, we extracted for each researcher the bibliometric data of all his/her academic outputs that were produced while being affiliated to an Egyptian university, without the exclusion of publication or research type. This data was analysed with statistical software R v3.4.1 to identify the positive deviants and non-positive deviants (NPDs) within the overall population. During Stage 1 of the Discover step, primary data was collected through in-depth interviews from a sample of PDs to explore practices, attitudes and attributes that might distinguish them from NPDs. During Stage 2 of the Discover step, the key findings from Stage 1 plus other predictors of research performance drawn from the literature (see Table 1) were used to design a survey tool. That survey then targeted the whole population and tested if the proposed differentiators were significantly different between the two groups (PDs and NPDs). Finally, in Stage 3 of the Discover step, the Scopus secondary dataset was used as the basis for analysis of researcher publications; extending and validating some of the findings identified in the previous steps. Figure 1 summarises the process used to identify PDs and to discover predictors of their performance, and outlines the structure of findings, presented next.

Findings

Define

The study population comprises IS researchers in Egyptian public universities. A single discipline was chosen to avoid variations in, for example, typical publication and citation rates that arise between different disciplines. Information systems was chosen because of the growing importance of research on digital technologies including technological development and implementation research to economic development, and because a pre-check showed ready presence of a substantial number of Egyptian IS researchers and publications in the main secondary datasets. Egypt was chosen because it was the first author’s home country, with social contacts affording ready access to university departments and staff. Public universities were chosen to ensure that all researchers in the sample worked in a context of similar resource constraints, albeit with slight variations between universities within or outside the Greater Cairo area.

In Egypt there are 29 public universities, 11 of which do not have computer science faculties or IS departments and seven of which do not have an online directory of the IS department staff. So the final sample included 11 universities: Cairo, Ain Shams, Benha, Helwan, Mansoura, Fayoum, Menofeya, Assiut, Zagazig, Kafrelsheikh and Port Said. The total number of faculty members in those universities was 304, but for this study we only included those researchers who hold at least a Master’s degreeFootnote 5 and have published at least one article. This guarantees that they have some publishing experience. Consequently, the final sample that we targeted for this study included 203 researchers who were assistant lecturers, lecturers, associate professors and professors. (In the Egyptian higher education system, the first academic rank is assistant lecturer, which is awarded on obtaining a Master’s degree; promotion to lecturer follows on obtaining a PhD. The subsequent ranks are associate professor and then professor, which are obtained based on both years of experience and publications.)

Using the university websites, the names, degrees and email addresses (if provided) of these researchers were identified, and this information was used to extract their citation data using the Publish or Perish (PoP) software. PoP is a freely accessible software program that extracts data for researchers from a number of sources (e.g. Web of Science, Scopus, Google Scholar) to provide a variety of research citation metrics (Harzing, 2007). The citation data was extracted for the study sample in July 2018. We only included papers that carried an Egyptian university affiliation; that is, publications produced while doing a PhD abroad were excluded to ensure a fair comparison and to reduce the effects of confounding variables associated with universities abroad.

For this study, Google Scholar was chosen as the source for bibliometrics. The choice of Google Scholar was driven by the fact that ISI citation databases, such as Web of Science, limit citations to journals in the ISI databases. They do not count citations from books and conference proceedings, cover mainly English language articles and provide different coverage in different fields. Such databases significantly underestimate researchers’ publications and citations (Ortega, 2015). Prior literature also indicates that Google Scholar outperforms Web of Science in coverage (Kousha & Thelwall, 2007), especially for articles that were published from 1990 onwards (Belew, 2005) and for computer science-related research in which conference papers form a key means of publication (Franceschet, 2010). Additionally, Google Scholar is freely accessible, which makes the DPPD method used in this case study easily replicable in different scientific fields and countries. The main drawback of Google Scholar is that the consistency and accuracy of its data are lower than those of commercial citation-enhanced databases such as Web of Science (Jacso, 2005). Hence, extra time was needed to check the accuracy of the results obtained.

For every researcher six main citation metrics—extracted and derived from PoP query results—were used to measure research performance as shown in Table 2.

In this study positive deviants are researchers who outperformed their peers in at least one of the six citation metrics presented in Table 2. The need to use multiple measures was motivated by the drawbacks of relying only on the h-index. These drawbacks include the influence of the length of a researcher’s scientific career, with the h-index reflecting longevity as much as it reflects quality (Alonso et al., 2009; Van Noorden, 2010), and its insensitivity to highly cited papers (Egghe, 2006). Using multiple measures enabled us to avoid putting certain groups at a disadvantage due to factors such as the length of their research career, the size of their research departments, or their publication strategies. Measures like the m-quotient, the aw-index and the hc-index ensured that outperformance is detected regardless of the publication age of the author or the age of the paper. Similarly, some IS departments are larger than others, enabling them to have more research collaborations; to reduce the potential bias due to this larger pool of research collaborators, the hi-index was employed. We were also interested in researchers with selective publication strategies: those who do not necessarily publish a very high number of papers but who do attain a high impact. This group of researchers can be unfairly assessed using the h-index, which led us to use the g-index. In summary, we can see that these established citation metrics are complementary, as they make different assumptions and have different biases, and that combining the different measures provides a more comprehensive picture of performance.
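To illustrate why these metrics can rank the same researchers differently, the following minimal sketch computes three of them from a list of per-paper citation counts. It uses the standard textbook definitions of the h-index, g-index and m-quotient, not the Publish or Perish implementation, and the two citation profiles are invented for illustration only; they are not data from this study.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations: Sequence[int]) -> int:
    """Largest g such that the top g papers together have at least g^2 citations.

    Assumption: g is capped at the number of papers, as in the common definition.
    """
    ranked = sorted(citations, reverse=True)
    total, g = 0, 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites
        if total >= rank * rank:
            g = rank
    return g


def m_quotient(citations: Sequence[int], years_since_first_paper: int) -> float:
    """h-index divided by the length of the publishing career in years."""
    return h_index(citations) / years_since_first_paper


# Hypothetical profiles: a selective author with a few highly cited papers,
# and a prolific author with many moderately cited papers.
selective = [90, 60, 5, 2, 1, 0]
prolific = [6, 6, 5, 5, 5, 4, 4, 3]

print(h_index(selective), g_index(selective))  # 3 6
print(h_index(prolific), g_index(prolific))    # 5 5
print(m_quotient(prolific, 10))                # 0.5
```

In this invented example the selective author trails on the h-index but leads on the g-index, which is the kind of case the multi-metric definition is intended to capture.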

Determine

Positive deviants are typically identified as specifically-calculated outliers from some measure of central tendency. As can be seen from Fig. 2—violin plotsFootnote 6 of the distribution of each of the six measures across the entire sample—the data here is not normally distributed. Instead, and consistent with the past findings on researcher performance reported above (O’Boyle & Aguinis, 2012) it shows a skewed, Pareto distribution with a long tail above the mean. This makes the mean a skewed indicator of central tendency and invalidates the method of identifying positive deviants or outliers in a normally-distributed population, which would define them as those observations lying beyond two or three standard deviations above the mean.

Instead, we used the median as an indicator of central tendency and employed the interquartile range (IQR) method (Hampel, 1974) to identify positive deviants based on their deviation from the median. In the IQR method, the dataset is divided into four parts; the values that separate the parts are called the first, second, and third quartiles, denoted by Q1, Q2, and Q3, respectively. Q2 is the median of the ordered observations, Q1 is the median of the observations ordered before Q2, and Q3 is the median of the observations ordered after Q2. The IQR is Q3 − Q1, and outliers are defined as observations that lie more than 1.5 × IQR below Q1 or above Q3 (Walfish, 2006). In this case study, PDs were defined as individuals lying above Q3 + 1.5 × IQR in at least one of the six citation metrics that we used as measures of performance. In total, 26 unique PDs were identified, and their average performance metrics in comparison to the NPDs are summarised in Table 3.
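The upper-fence rule can be sketched as follows. This is an illustrative reconstruction rather than the authors’ R code: the quartiles follow the median-of-halves description given in the text (other quantile conventions give slightly different fences), and the researcher labels, metric names and values are hypothetical.

```python
from statistics import median


def quartiles(values):
    """Q1, Q2, Q3 using the median-of-halves rule described in the text."""
    ordered = sorted(values)
    n = len(ordered)
    half = n // 2
    lower = ordered[:half]
    # With an odd number of observations the median itself is left out of both halves.
    upper = ordered[half + 1:] if n % 2 else ordered[half:]
    return median(lower), median(ordered), median(upper)


def upper_fence(values, k=1.5):
    """Threshold Q3 + k * IQR above which an observation counts as an outlier."""
    q1, _, q3 = quartiles(values)
    return q3 + k * (q3 - q1)


def flag_positive_deviants(metrics, k=1.5):
    """Map each researcher to the metrics in which they exceed the upper fence.

    metrics: {metric_name: {researcher: value}}
    """
    flags = {}
    for metric, scores in metrics.items():
        fence = upper_fence(list(scores.values()), k)
        for researcher, value in scores.items():
            if value > fence:
                flags.setdefault(researcher, []).append(metric)
    return flags


# Hypothetical scores for eight researchers on two of the six metrics.
metrics = {
    "h_index": {"r1": 1, "r2": 2, "r3": 2, "r4": 3, "r5": 3, "r6": 4, "r7": 5, "r8": 14},
    "g_index": {"r1": 2, "r2": 3, "r3": 3, "r4": 4, "r5": 4, "r6": 5, "r7": 16, "r8": 6},
}

print(upper_fence(list(metrics["h_index"].values())))  # 8.25
print(flag_positive_deviants(metrics))
# {'r8': ['h_index'], 'r7': ['g_index']}
```

A researcher appearing in the result for any metric would count as a PD under the "at least one of the six metrics" rule.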

Cluster analysis

Hierarchical clustering was used to identify groups of PDs based on the citation metrics in which they were similar, i.e. all members of a cluster are outliers in similar citation metrics. To support this analysis, a binary vector composed of six dummy variables (representing the six citation metrics) was constructed for each of the positive deviants identified. A value of “1” indicates that this PD is an outlier in the corresponding metric and a value of “0” indicates that this PD is not an outlier in this metric. We then used the hclust function of the R cluster packageFootnote 7 to implement complete linkage agglomerative hierarchical clustering using the Gower distance. This method usually yields clusters that are compact and well separated, and the complete linkage criterion ensures direct control of the maximum dissimilarity in each cluster. A graphical representation of the resulting hierarchical tree (i.e. dendrogram) is presented in Fig. 3.Footnote 8
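The clustering step can be illustrated with a small pure-Python sketch. It is not the R code used in the study. It assumes that each dummy variable is treated as a symmetric 0/1 variable, in which case the Gower distance reduces to the share of metrics on which two researchers disagree; the metric order, researcher labels and vectors are hypothetical.

```python
from itertools import combinations

# Assumed order of the six dummy variables in each vector.
METRICS = ("h", "g", "hc", "hi", "aw", "m")


def gower_binary(a, b):
    """Gower distance for symmetric 0/1 variables: fraction of mismatches."""
    return sum(x != y for x, y in zip(a, b)) / len(a)


def complete_linkage(vectors, n_clusters):
    """Agglomerative clustering, merging the pair of clusters whose
    farthest members are closest (complete linkage)."""
    clusters = [[name] for name in vectors]

    def dist(c1, c2):
        return max(gower_binary(vectors[a], vectors[b]) for a in c1 for b in c2)

    while len(clusters) > n_clusters:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: dist(clusters[pair[0]], clusters[pair[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i stays valid
    return [sorted(c) for c in clusters]


# Hypothetical outlier vectors: 1 = outlier in that metric, 0 = not.
vectors = {
    "A": (1, 1, 1, 1, 1, 1),
    "B": (1, 1, 1, 1, 1, 0),
    "C": (0, 1, 0, 0, 0, 0),
    "D": (0, 1, 0, 1, 0, 0),
    "E": (0, 0, 0, 0, 1, 1),
    "F": (0, 0, 0, 0, 0, 1),
}

print(complete_linkage(vectors, 3))
# [['A', 'B'], ['C', 'D'], ['E', 'F']]
```

Cutting the tree at three clusters here groups the invented researchers by the metrics they share as outliers, which mirrors the logic of reading clusters off the dendrogram.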

As shown in Fig. 3, we were able to cluster the 26 researchers into three main clusters as follows.Footnote 9

Cluster 1: Rising stars

This cluster includes six researchers who were outliers in the m-quotient, which discounts longevity and citation skews against junior researchers, and/or in the aw-index, which gives weight to more recent and as yet less cited papers by calculating age-weighted citation rates for the researcher’s papers. Researchers belonging to this group were mainly assistant lecturers and lecturers (with the exception of one associate professor) and they were characterised by a short publication span and a small publication volume (as shown in Table 5).

Cluster 2: Exceptional performers

This cluster includes ten researchers who, between them, were outliers in all six metrics, each being an outlier in at least five. Researcher 46 was an outlier in all metrics except for the hi-index, which might indicate that they have very few single-authored papers or that they usually publish with a large number of authors. Researchers 216, 94 and 214 were outliers in all measures except for the m-quotient. The remaining six researchers were outliers in all six metrics. Researchers belonging to this group are characterised by balancing all performance measures, i.e. productivity, impact and consistency, and by having a high average publication age. They also have the highest average aw-index, indicating sustained production of highly cited articles. They were mainly professors, with the exception of one lecturer and one associate professor.

Cluster 3: Highly cited researchers

This cluster includes seven researchers who are all outliers in the g-index, which gives more weight to highly cited papers. In addition to the g-index, researchers 220 & 227 were outliers in the hi-index which means that they publish mainly individually or with small groups of co-authors; researchers 31 and 89 were outliers in the aw-index, meaning that their highly cited papers are recent, resulting in a high age weighted citation rate. As shown in Table 5, they are characterised by having the longest publication span and the highest number of citations per paper across all clusters.

Table 4 shows the average scores of the three clusters across the six performance measures and Table 5 shows the average scores of those clusters across other relevant performance indicators.

Discover

This study used three separate methodologies—in-depth interviews, surveys and publication analysis—to triangulate data on underlying attributes, practices and attitudes of PDs, thus helping to validate findings. The three methodologies are interrelated and were undertaken sequentially in three stages, with the findings from one stage guiding design of the following stage.

Stage 1: Interviews

The objective of this stage was to identify uncommon strategies and practices among positive deviant researchers, which could be used to guide the design of the Stage 2 survey questionnaire. This stage was incorporated into the methodology because predictors of high research performance used in prior studies did not take into account the particular challenges of global South researchers. Hence there was a need to check the relevance of predictors from past literature and also to explore any additional context-specific predictors.

In order to do this, a semi-structured interview guide (English language version in Appendix A) was developed based on a combination of past literature on high-performing researchers and on the context of global South research. To reduce the need for extensive travel, interviews were restricted to the four universities in Greater Cairo: Cairo, Ain Shams, Helwan and Benha. Those universities were home to 12 of the 26 PDs identified in the Determine phase, all of whom were interviewed along with the heads of the IS departments in each university.Footnote 10 Table 6 shows the distribution of the interviewed PDs across gender and rank.

Permission was obtained for the interviews to be recorded so that the transcript could subsequently be analysed to identify common themes, patterns and explanations. In all, interview data was coded into nine main categories of potential differentiators of PDs:

- Previous education: A number of PDs mentioned that they obtained their PhD degrees from global North universities, explaining how it had a fundamental role in changing how they viewed and practised scientific research.

- Research motives: PDs were seen as having different motives and drivers for conducting and publishing research. Getting a promotion was definitely one of those drivers, especially for early-career researchers. However, most of the PDs mentioned motives related to international recognition, staying competitive and enjoyment of the process of publishing research. A number also mentioned that their research satisfies a personal interest and that publishing on it adds to their satisfaction.

- Research type: Almost all of the interviewed PDs worked on applied and experimental research, while only two focused mainly on theoretical research. In terms of topics, all that stood out were areas avoided by most PDs: only one did research in the management of information systems, and only two showed interest in research that had broader social and developmental impact.

- Research strategies: A number of PDs said that they were more inclined to do incremental research, i.e. building upon previous work, with one of them saying “I do not innovate by finding new problems; I innovate by finding new ways and methods to solve a well-established problem”. Applying for research funding from schemes like the European Region Action Scheme for the Mobility of University Students (ERASMUS+) and the German Academic Exchange Service (DAAD) was also mentioned by a number of them. Another strategy mentioned by most of the PDs was reaching out to foreign authors to conduct collaborative research with them. When asked why they do that, their answers varied. Some said that it ensures better access to resources, with sample statements including “When a paper is accepted in a conference or a journal, their universities can fund their travel expenses or pay for journal submission fees”, and “my research requires computing facilities that are hard to provide here and my research partner in Canada has access to those facilities”. Such resources could include complementary skill sets: “he [foreign collaborator] is good at scientific writing and in the statistical analysis of results and I’m good at coming up with ideas and in the interpretation of results, we were a great team”. Another view was that foreign authorship assisted publication: “foreign authors increase the chances of paper acceptance and reduce the time of acceptance drastically”.

- Publication strategies: PDs were aware of the importance of publishing in indexed journals and conferences (such as those indexed in Scopus or ISI), stating it as a major criterion in selecting where to publish. Within this overall focus, the interviewees’ strategies could be grouped into three main categories: (a) Publishing in international indexed journals and in international indexed conferences. These interviewees saw top-tier international conferences (e.g. the Very Large Databases series) as being as prestigious as journals rated Q1 and Q2 in the SCImago Journal Ranking (SJR)Footnote 11, in addition to providing very high paper visibility. (b) Publishing in international indexed journals and in local indexed conferences; the majority of the interviewed PDs fell into this category due to financial constraints that limited their ability to attend international conferences. They used conferences as a medium to retain ownership of, promote, refine and develop their research ideas before submitting extended versions of papers to journals. “The journal paper should have at least 30% expansion to the work in the conference paper” said one of the PD researchers. (c) Publishing in international indexed journals only: this group could not afford travel to international conferences and could not find any value in publishing in local conferences, stating, for example, that “Journal papers are more respected in Egypt”. A number of PDs also stressed the importance of the publisher, indicating that they had noticed that certain publishers gave their papers very high visibility, which led to more citations. One of them said “I started to focus on publishers instead of journals, because a strong publisher will make a journal powerful but a strong journal without a strong publisher, will die … any paper published by Elsevier will have great visibility, even if it is a new journal … I would rather publish in a Q4 journal published in Elsevier than publish in a Q3 journal published somewhere else”.

- Research direction: A few PDs mentioned that they usually trace their own citations to see what other authors are saying about their work and how their research is evolving, and to get ideas for future work. One of the PDs also mentioned that he follows publishers like ACM, IEEE, Springer and Elsevier to keep informed of new conferences, which in turn indicate hot topics: “if ACM decided to do a conference on recommender systems, this implies that recommender systems are picking up or will become a hot topic”.

- Writing their papers: Interviewees were asked about factors that increase the chances of paper acceptance, and almost all of them agreed on the importance of the paper structure and presentation. One of them even stated that “a well-written average idea is more likely to be accepted than a poorly-written great idea.” Interviewees also mentioned the importance of issues including: showing the contribution clearly and frequently in the paper, mathematical and theoretical validity, recency of references, use of formal and scientific writing, mastery of the English language, and a self-contained abstract showing clearly the contribution, the method used and the study findings or results. They were also asked about the process of writing a paper but nothing seemed unusual in that regard. Finally, they were asked about factors that could increase paper citations. The answers varied but, again, publishing with a reputable foreign author was mentioned as a key factor in attracting citations. One of the PDs said “When you are publishing with a trusted author in the field, people feel comfortable to cite his work”. Publishing in top journals and conferences was also mentioned several times, although a few PDs stated that some of their most highly cited work was published in local journals and conferences. PDs also mentioned the importance of publicising research work, either through sending emails to researchers they thought would benefit from a paper or through making it available on academic networking sites like ResearchGate and Academia. A number of PDs mentioned the role of the title in attracting citations and one stated that “I always try to borrow the same keywords used in the titles of the highly cited related papers, because when they search for them, mine will appear.” Some of them also mentioned that survey papers in new fields guarantee high citation, as does publishing on hot topics at the beginning of their hype cycle.

- Research challenges: PDs were asked about their research challenges and how they were able to overcome them. A number of PDs mentioned that they encounter difficulties in choosing the right journal for their publications. Only one PD suggested a way to overcome this, which was through use of online journal finder tools. PDs mentioned the language barrier, especially with the students they supervise; one PD stated that he uses the paid-for language editing services provided by Elsevier. Another PD said “I asked one of my students to stop his PhD for 3 months just to enhance his English writing by taking courses”. Some of them mentioned having overseas contacts that proofread their work. The prolonged time from submission to acceptance was repeatedly mentioned by PDs as a major challenge, especially when the topics they want to publish are time sensitive. In such cases, they would resort to conferences for early communication of those ideas. Finally, all of them mentioned that the limited financial support they receive from the university (to attend conferences and to publish in open access journals) is a major challenge. The alternative was to self-finance their travel and publishing activities or to seek support from funding agencies. Some PDs also mentioned that they overcame this challenge by having as co-authors their former supervisors from their foreign PhD-granting universities, which would sometimes cover conference travel expenses and journal submission fees.

- Research skills development: A number of PDs mentioned taking scientific and technical writing courses. One of them also mentioned the importance of formal writing, saying that “I paid a lot of attention to learn the formal way of writing; you learn it from observation, trial and error”. Indeed, a number mentioned studying highly cited papers written by top authors to see how they are written and structured, as a means of enhancing their writing skills. Many PDs mentioned using tools such as Grammarly (“A number of powerful researchers I know recommended this tool”, said one PD) and LaTeX (“I could spend a whole day rearranging figures on Word while it takes me a few minutes using Latex”).

In summary, the interviews led to the identification of potential patterns in attributes, attitudes and practices amongst PDs: some similar to those from earlier studies but a number that had not previously been identified. Some of these, such as the use of keywords from titles of highly cited papers, were practices identified by only one interviewee that, while interesting, were not seen to warrant inclusion in the survey questionnaire. But those appearing repeatedly (studying for a PhD abroad, taking scientific and formal writing courses, publishing with reputable foreign authors, etc.) were incorporated into the Stage 2 questionnaire.

Stage 2: Survey

The primary objective of this stage was to validate the findings from the earlier parts of the methodology and to identify predictors of PDs that are significantly different from those of NPDs. An online survey questionnaire (English language version in Appendix B) was developed based on the review of related work (see e.g. Table 1), amended in light of the findings from Stage 1. A message and link to the survey was sent to the whole sample of PDs and NPDs (n = 203) in the 11 universities, including PDs who were interviewed in the previous stage. In total, 90 survey responses were collected: 20 from PDs and 70 from NPDs yielding an overall response rate of 44%.

Survey responses (n = 90) were entered and analysed with the statistical software RStudio. Table 7 shows the distribution of the sample of PDs and NPDs across gender and rank. 70% of the respondents held PhD degrees (i.e. were lecturer rank or above) and 30% had MSc degrees (i.e. were assistant lecturers), and the responses came evenly from males and females. Table 7 also shows a pronounced gender imbalance within the PDs and that seniority still plays a role in being a PD, despite the incorporation of measures of performance that would control for that (e.g. hi-index).

Feature selection

The survey tool had 38 questions covering researcher attributes such as gender and rank; attitudes such as what motivates them to publish research; and practices such as the type of research collaborations they engage in. After transforming categorical variables into dummy variables, the final sample had 90 observations and 185 variables. The next step was to build a predictive model to identify significant predictors of PDs among those 185 variables. Before building such a model, two steps were necessary. The first was to reduce complexity through feature selection, i.e. selecting the predictor (independent) variables that would be used to predict the dependent variable, which in our case was a binary variable with the value of 1 for PD researchers and 0 for NPD researchers. The second was to identify and address potential issues of multicollinearity.

Feature selection was done by running a simple univariate logistic regression (i.e. relation of the dependent variable with each predictor, one at a time) and then including only predictors that met a certain pre-set cut-off for significance to run in the multiple regression. For the simple regression a cut-off of p < 0.1 was used since its purpose was to identify potential predictor variables rather than test a certain hypothesis (Ranganathan et al., 2017). A stricter cut-off point (p < 0.05) was then used in the multiple logistic regression to identify significant predictors of PD. Out of all the explored predictors, 23 were identified as potentially significant predictors as shown in Table 8. Predictors derived from the interviews in Stage 1 are denoted by “(i)”.

Following the construction of the simple univariate regression models, we proceeded to check multicollinearity. Specifically, the strength of the association between all possible pairs of the 23 predictors was determined using the Spearman rank correlation (for numeric variables), Chi square (for categorical variables) and Anova (for pairs involving one categorical and one numerical variable) implementations in the cor function of the caret package.Footnote 12 Many of the predictors identified were significantly correlated with each other, which would be problematic when jointly used in a multiple logistic regression, creating what is referred to as the separation problem (Mansournia et al., 2018). In practice, stepwise regression can be used to overcome this issue, but the problem with this approach is that it might eliminate important predictors that are correlated with the response variable and are important for the user. Partial least squares (PLS) regression instead reduces the predictors to a smaller set of uncorrelated components, or latent variables, and performs least squares regression on these components rather than on the original predictors. It is particularly useful when there are more predictors than observations and when the predictors are highly collinear (Abdi, 2003), and it allows us to retain in the model all the predictors that have strong explanatory power. For that reason, it was our preferred method for multiple regression, as further explained in the following section.

Multiple regression

PLS regression is a technique that reduces the predictors to a small set of uncorrelated components and performs regression on those components instead of performing it on the predictors (Tobias, 1995). The plsRglm packageFootnote 13 implements PLS regression for generalised linear models, an extension of classical PLS regression introduced by Bastien et al. (2005). We used the cv.plsRglm function to identify the ideal number of components to retain in a ten-fold cross-validation (k = 10), using six components (nt = 6) as the maximum number of components to try with each group or fold. After plotting the results of the cross-validation, we decided to retain only two components based on the mis-classed criterion (i.e. components achieving the least number of misclassified observations) and the non-significant predictor criterion (i.e. components that had significant predictors). To calculate the model’s prediction accuracy and its AUC, i.e. the area under the receiver operating characteristics (ROC) curveFootnote 14, which is considered a good metric for evaluating the performance of binary classifiers, we used ten training datasets, each paired with its own test dataset and created with a 70–30 split. Across the ten folds, the model resulted in an average accuracy of 0.78 and an average AUC of 0.70. Significant predictors (p values < 0.05) of the two components we retained are presented in Table 9. The table also shows the estimates of the two retained components across the ten folds, with an average coefficient of 1.5 for component one and 1.64 for component two.
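The two evaluation metrics reported above can be sketched in a few lines of Python. This is an illustration rather than the authors' R code: the fold contents are hypothetical, the 0.5 classification threshold is an assumption, and the AUC is computed through its rank interpretation (the probability that a randomly chosen PD receives a higher predicted score than a randomly chosen NPD, with ties counted as one half).

```python
def accuracy(labels, scores, threshold=0.5):
    """Share of observations classified correctly at the given threshold."""
    predictions = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def mean(values):
    return sum(values) / len(values)


# Hypothetical held-out folds: (true labels, predicted PD probabilities).
toy_folds = [
    ([1, 0, 0, 1, 0], [0.9, 0.2, 0.6, 0.4, 0.1]),
    ([0, 1, 0, 0], [0.3, 0.8, 0.1, 0.4]),
]
fold_accuracy = [accuracy(y, s) for y, s in toy_folds]
fold_auc = [auc(y, s) for y, s in toy_folds]
print(mean(fold_accuracy), mean(fold_auc))
```

Averaging the per-fold values, as in the last lines, corresponds to the "average accuracy" and "average AUC" figures quoted in the text. Because PDs are a minority of the sample, AUC is a useful complement to accuracy: it does not depend on the chosen threshold.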

In the analysis shown in Table 9, significant differences between PDs and NPDs emerged, covering attributes such as gender (PDs were mainly males) and rank (a large number of PDs were professors who are also department chairs). However, it is hard to tell if the latter is a cause or an effect. This is because becoming a department chair in the higher education system in Egypt is mainly based on years of experience rather than academic merit. Additionally, department chairs get the biggest share of MSc and PhD student supervisions, which are also significant predictors of PDs. Having a larger number of students implies a larger number of publications and citations, hence better citation metrics. Differences related to practices included the ways PDs developed their skills, such as taking scientific writing courses and English writing courses and travelling abroad for their PhD degrees. It was also strongly evident that PDs publish more papers with foreign authors. This links to a key difference that persistently appeared, with a relatively high loading, which was doing research with academics overseas. Other collaborations, such as doing research with academics in other universities in Egypt and in other departments in the same university, were also significantly higher among PDs but they were not as strong as collaborations overseas. Practices that were found to be less common among PDs included getting research ideas from publications of researchers online and, surprisingly, doing radical research that suggests new models, frameworks, methods and architectures that were not implemented before. Finally, differences relating to attitudes included how the researchers rated the climate of their department: PDs perceived their departments as more hostile and competitive while NPDs viewed departments as more friendly. PDs also viewed the number of issues as a less important factor when selecting the journals to publish in.

Table 9 also shows that component one included key predictors that are positively correlated with high research performance, while component two was able to better capture the direction of variation of two predictors that are negatively correlated with high research performance (“Rating of department climate” and “I prefer to do radical research that suggests new models, frameworks, methods and architecture that were not implemented before”) and had very small loadings in component one.

We were also interested in developing a model that would exclude non-controllable factors in order to identify transferable practices that could be adopted by other researchers. It excluded gender, rank and being a department chair. Table 10 presents the findings of this model, which resulted in an average accuracy of 0.77 and an average AUC of 0.72. The table also shows the estimates of the two retained components across the ten folds, with an average coefficient of 1.55 for component one and 1.58 for component two. Reassuringly, this model’s predictive power was very close to the average predictive power of the previous model (accuracy 0.78 and AUC 0.70) despite the exclusion of those significant predictors that had a relatively high loading. This model reinforces the results from the previous model on the importance of international research collaboration since “Doing research with academics overseas”, “Establishing research teams overseas” and “Publishing with foreign reputable authors” appeared repeatedly as significant predictors with high loadings across the ten folds. Also evident was the significance of supervising more students (MSc and PhD), having a foreign PhD degree, receiving travel funds, and taking scientific or formal writing courses.

Stage 3: Publication analysis

While Stages 1 and 2 were focused on the identification of individual-level predictors of PDs, Stage 3 is focused on the identification of publication-level predictors. In other words, in this stage, the unit of analysis is the paper instead of the researcher. The general motivation for publication-level analysis was noted above but, in addition, some of the significant predictors identified in the previous stages required validation that could only be done through publication analysis. For instance, while PDs mentioned publishing with foreign authors and this was established as a significant predictor, it was not possible to validate this practice and quantify its prevalence within PD publications, relative to NPD publications, without analysing the actual papers. The same was true for the number of authors, the choice of publication outlet, the frequency of research collaborations, etc. In summary, the objective of Stage 3 is twofold: the first is to quantify and validate some of the findings of Stages 1 & 2 through the analysis of the researchers’ publications. The second is to identify additional predictors of PDs that can be derived directly from their publications.

In this stage we analysed the publication corpus of PDs versus the publication corpus of NPDs. We defined a PD publication as a paper that has at least one PD author from the 26 high-performing researchers identified in the Determine Phase. In contrast, an NPD publication is defined as a paper with at least one NPD author but where none of the authors is a PD. By doing so, we were able to create two mutually exclusive corpora to capture distinguishing characteristics of each. The papers were collected from the Scopus database using the RscopusFootnote 15 data package, which links RStudio to the Scopus database API. For every researcher in the study population (n = 203), a Scopus ID was identified manually through the advanced search form on the Scopus website. This ID was then used to retrieve all the available information associated with their publications, including co-authors, co-author affiliations, abstracts, keywords and titles. For consistency purposes, we excluded publications not having the Egyptian university affiliation and/or produced while researchers were abroad (e.g. during overseas PhD study) or produced after 2018 (since the citation metrics upon which we selected the PDs were calculated in 2018). In total, 991 publication records were extracted for PDs and 677 publications were extracted for NPDs. Those publications were further reduced to 876 unique publications (in total), after excluding duplicate publications and publications that did not have abstracts on Scopus. The final corpus of papers included 392 PD papers and 484 NPD papers. Skews consistent with the Pareto distribution of performance discussed earlier (O’Boyle & Aguinis, 2012) were immediately reflected: PDs make up 13% of the study population but contributed to the creation of 48% of the publications. Those 392 papers were cited 3210 times while NPD papers were cited 1810 times.
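The corpus definitions above amount to a simple precedence rule, which the following Python sketch makes explicit. The paper and author identifiers are hypothetical and the function is illustrative rather than the authors' implementation: any paper with a PD author goes to the PD corpus, and only papers with an NPD author and no PD author go to the NPD corpus.

```python
def split_corpora(papers, pd_ids, npd_ids):
    """Assign each paper to the PD corpus, the NPD corpus, or neither.

    papers: dict mapping a paper id to its list of author ids.
    A paper with at least one PD author is a PD publication, even if
    NPDs are also among its authors.
    """
    pd_corpus, npd_corpus = [], []
    for paper_id, authors in papers.items():
        author_set = set(authors)
        if author_set & pd_ids:
            pd_corpus.append(paper_id)
        elif author_set & npd_ids:
            npd_corpus.append(paper_id)
        # Papers with no sampled author are left out of both corpora.
    return pd_corpus, npd_corpus


# Hypothetical ids: "a1" is a PD, "b1" and "b2" are NPDs, "x9" is outside the sample.
toy_papers = {
    "p1": ["a1", "x9"],
    "p2": ["b1", "b2"],
    "p3": ["a1", "b1"],
    "p4": ["x9"],
}
pd_papers, npd_papers = split_corpora(toy_papers, {"a1"}, {"b1", "b2"})
print(pd_papers, npd_papers)
```

Checking for a PD author first is what makes the two corpora mutually exclusive: a co-authored paper such as "p3" is counted once, on the PD side.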

We proceeded to examine the three types of paper-level predictors of citation rates used in previous studies: (1) “extrinsic” features of the paper that are not directly related to its content (e.g. paper length, number of authors, etc.); (2) “intrinsic” or content-related features such as the topics covered in the paper; and (3) the publication “outlets” of the paper (e.g. conference or journal paper, journal SJR, etc.). The papers’ “extrinsic” features were extracted for each paper using the Scopus API functions. Paper “intrinsic” features were extracted from the paper title, abstract and keywords using a topic modelling technique that is further explained in a subsequent section. The publication “outlet” features were obtained using the sjrdataFootnote 16 package which contains data extracted from the SCImago Journal & CountryFootnote 17 open data portal.

Publication predictors

There is a substantial body of research on publication-level predictors of citation rates. In this study we selected several of those predictors based on three main conditions. The first is their relevance to measuring or validating findings from the previous two stages. The second is their relevance to the issues previously raised in the literature relating to Southern researchers. Finally, we excluded features that are difficult to ascertain or would require manual validation (e.g. gender of authors), subjective features (e.g. title attractiveness) or features that would require extensive additional computation or additional measure development e.g. internationalisation of journals. Table 11 presents the different publication features that were used as predictors, and references studies that used them as potential predictors. How paper topics (i.e. paper intrinsic features) were identified and converted into a feature space is explained in the following section.

Topic extraction

The objective of this analysis is (i) to identify the various research topics of the study population, and (ii) to develop for every paper a vector representing the distribution of its content across the topics identified. The topics resulting from this analysis were used as the paper “intrinsic” features in the regression analysis (presented below) in order to explore whether certain topics are associated with PD papers and others with NPD papers. Figure 4 explains the steps involved in the topic extraction process. Abstracts are considered a compact representation of the whole article, so we used them as a proxy of the paper content to identify the topics of research. We started by extracting publication data for each author from the paper corpus (876 unique abstracts). Standard text mining pre-processing steps were applied to the entire corpus of abstracts: lowercasing, removal of standard stopwords (e.g. a, an, and, the), and stemming of terms to remove pluralisation or other suffixes and to normalise tenses. Additional pre-processing steps involved removing numbers, special characters and white spaces (Kao & Poteet, 2007; Mahanty et al., 2019). An unsupervised topic modelling technique called Latent Dirichlet Allocation (LDA) (Blei et al., 2003) was used to identify the topics within the abstracts corpus. The basic idea of LDA is that articles are represented as a mixture of topics, and each topic is characterised by a distribution over words. LDA was applied over the entire corpus to identify topics and calculate the probability distribution across topics for each document.

Fig. 4 Topic extraction process, developed from Mahanty et al. (2019)
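The pre-processing steps described above can be sketched in Python as follows. This is a simplified illustration, not the pipeline used in the study: the stopword list is a small hypothetical sample, and naive_stem is a crude stand-in for a proper stemming algorithm (it only strips a few common English suffixes).

```python
import re

# Small illustrative stopword list; a real analysis would use a standard list.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "to", "is", "are", "for", "on"}


def naive_stem(token):
    """Very rough suffix stripping; a placeholder for a real stemmer."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(abstract):
    """Lowercase, strip numbers and special characters, drop stopwords, stem."""
    text = abstract.lower()
    text = re.sub(r"[^a-z\s]", " ", text)  # keeps only ASCII letters and white space
    tokens = text.split()                   # split() also collapses extra white space
    return [naive_stem(t) for t in tokens if t not in STOPWORDS]


print(preprocess("The 3 proposed models are tested on 2,000 records!"))
```

The resulting token lists would then be turned into a document-term matrix and passed to an LDA implementation; that step is omitted here because it depends on the library chosen.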

Automatic topic coherence scoring: To develop the LDA model, we need to have a predetermined value for the number of topics (k). A small number of topics can lead to very generic topics and a large number can result in the generation of overlapping and non-comprehensive topics. Hence, we decided to calculate automatically the topic coherence (the degree of semantic similarity between high scoring words in the topic) at every k from 1 to 20, and established that k = 19 achieved the highest topic coherence score as shown in Fig. 5.

Topic labelling: Since the labelling of the topics is not done automatically by LDA, we assigned a relevant label to every topic based on the abstracts and keywords of articles with a probability > 90% of falling into that topic. We then validated those manually-generated labels by checking whether their terms appeared among the automatically generated most frequent words within that topic. Table 12 summarises the topics identified, the topic labels assigned and the most frequent keywords.

Time series analysis: We were also interested in visualising topic prevalence over time for the PDs and NPDs separately, in a similar way to the analysis presented in the study by Mahanty et al. (2019). This was done by calculating the mean topic proportion per year for the PD corpus and for the NPD corpus, as shown in Fig. 6. The first finding was that NPDs had a longer publication span starting in 1988 while PDs had a shorter publication span starting in 1993. However, for better visualisation we used the same chronological scale for both groups (2002–2018). There were topics, such as Classification Models, where PDs were early movers and were then followed by NPDs. We can also clearly see the prevalence of Expert Systems and GIS-related topics in the PD corpus in comparison to the NPD corpus, where there is more prevalence of Neural Networks and Business Process Management & Process Mining. There are also topics that had very similar proportions over time for both groups, such as Social Network Mining.
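As a minimal sketch of the calculation behind this kind of plot, the Python snippet below averages a topic's proportion over all papers published in each year. The document records, topic name and proportions are hypothetical; in practice the per-paper proportions would come from the fitted LDA model, and the function would be run once on the PD corpus and once on the NPD corpus.

```python
from collections import defaultdict


def mean_topic_share_by_year(documents, topic):
    """Average proportion of one topic across the papers of each year."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for doc in documents:
        totals[doc["year"]] += doc["topics"].get(topic, 0.0)
        counts[doc["year"]] += 1
    return {year: totals[year] / counts[year] for year in sorted(totals)}


# Hypothetical per-paper topic proportions for one corpus.
toy_corpus = [
    {"year": 2016, "topics": {"classification_models": 0.5}},
    {"year": 2016, "topics": {"classification_models": 0.25}},
    {"year": 2017, "topics": {"classification_models": 0.75}},
]
print(mean_topic_share_by_year(toy_corpus, "classification_models"))
```

Papers that do not mention the topic contribute a proportion of zero to their year's mean, so years with many off-topic papers pull the average down.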

Feature selection

Table 13 presents the paper features that were used as predictors of PD-authored papers in the multiple logistic regression model. Using the same approach adopted in Stage 2, features with P values less than 0.1 were identified as potential predictors, and these 23 features were selected for the multiple logistic regression model. Subsequently, we calculated all pairwise correlations using the cor function and found that a large number of the features were correlated. Hence, consistent with Stage 2, we used PLS regression for generalised linear models, as it allowed us to retain all the potential predictors that could have strong explanatory power. This is further explained in the following section.
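A minimal sketch of the screening and correlation check is given below. It is written in Python rather than R, so it does not reproduce the cor function. The feature names, P values and data are hypothetical, and the 0.7 cutoff for flagging a pair as correlated is an assumption, since no cutoff is stated in the text.

```python
import math
from itertools import combinations

def screen_by_p_value(p_values, alpha=0.1):
    """Keep features whose univariate P value is below alpha."""
    return [name for name, p in p_values.items() if p < alpha]

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)

def correlated_pairs(columns, names, cutoff=0.7):
    """Feature pairs whose absolute correlation reaches the (assumed) cutoff."""
    pairs = []
    for a, b in combinations(names, 2):
        r = pearson(columns[a], columns[b])
        if abs(r) >= cutoff:
            pairs.append((a, b, round(r, 3)))
    return pairs

# Hypothetical univariate P values and feature columns for five papers.
p_values = {"n_authors": 0.01, "n_affiliations": 0.04,
            "title_length": 0.08, "has_figure": 0.45}
columns = {
    "n_authors": [1, 2, 3, 4, 5],
    "n_affiliations": [1, 1, 2, 3, 3],
    "title_length": [12, 7, 9, 15, 8],
}
selected = screen_by_p_value(p_values)
flagged = correlated_pairs(columns, selected)
```

When many pairs are flagged in this way, ordinary logistic regression coefficients become unstable, which is the motivation for moving to a component-based method such as PLS.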

Multiple regression

We started by using the cv.plsRglm function to identify the ideal number of components to retain in a ten-fold cross-validation (k = 10), using six components (nt = 6) as the maximum number of components to try with each group or fold. After plotting the results of the cross-validation, we decided to retain only three components based on the mis-classed criterion and the non-significant predictor criterion. Cross-validation with a 70–30 split was then applied to ten different samples of training and test datasets to calculate the model’s prediction accuracy and its AUC. Across the ten folds, the model achieved an average accuracy of 0.74 and an average AUC of 0.73. Significant predictors (P values < 0.05) within each of the three components were retained and their loadings are presented in Table 14. The table also shows the estimates of the three retained components across the ten folds, with average coefficients of 1.09 for component one, 0.58 for component two and 0.41 for component three.
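The repeated 70–30 evaluation can be sketched as follows. This is not the plsRglm workflow: a hypothetical one-feature scorer (`fit_midpoint`) stands in for the PLS logistic model, the split is stratified by class as an assumption so that the AUC is always defined, and the data are synthetic.

```python
import math
import random

def auc(labels, scores):
    """AUC as the share of positive-negative pairs ranked correctly (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def accuracy(labels, scores, cutoff=0.5):
    """Share of cases where the thresholded score matches the label."""
    hits = sum((s >= cutoff) == (y == 1) for y, s in zip(labels, scores))
    return hits / len(labels)

def stratified_split(rows, test_frac, rng):
    """Split (x, y) rows into train and test, sampling each class separately."""
    train, test = [], []
    for cls in (0, 1):
        group = [r for r in rows if r[1] == cls]
        rng.shuffle(group)
        n_test = max(1, round(test_frac * len(group)))
        test += group[:n_test]
        train += group[n_test:]
    return train, test

def fit_midpoint(train):
    """Hypothetical stand-in model: logistic score around the midpoint of class means."""
    mean = lambda values: sum(values) / len(values)
    mid = (mean([x for x, y in train if y == 0]) +
           mean([x for x, y in train if y == 1])) / 2
    return lambda x: 1 / (1 + math.exp(-(x - mid)))

def repeated_holdout(rows, fit, n_repeats=10, test_frac=0.3, seed=0):
    """Average accuracy and AUC over repeated random train/test splits."""
    rng = random.Random(seed)
    accs, aucs = [], []
    for _ in range(n_repeats):
        train, test = stratified_split(rows, test_frac, rng)
        predict = fit(train)
        labels = [y for _, y in test]
        scores = [predict(x) for x, _ in test]
        accs.append(accuracy(labels, scores))
        aucs.append(auc(labels, scores))
    return sum(accs) / len(accs), sum(aucs) / len(aucs)

# Synthetic, perfectly separable data: label is 1 when the feature is at least 10.
rows = [(float(i), int(i >= 10)) for i in range(20)]
mean_acc, mean_auc = repeated_holdout(rows, fit_midpoint)
```

Accuracy depends on the 0.5 cutoff, while the AUC depends only on how the scores rank positive against negative cases, which is why the two averages can differ.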

In the analysis (results shown in Table 14), significant differences emerged between the PD and NPD corpuses. Regarding the paper extrinsic features, papers of PDs had longer titles and abstracts, more pages and more references. Their papers also had a larger number of authors and affiliations, which supports the findings of Stage 2 around research collaborations. While Stage 2 showed that PDs are more likely to publish their papers with foreign authors and establish research teams overseas, this analysis enables us to better understand the type of collaborations by showing that they were mainly with authors from US universities. An additional finding was that PD papers more often had titles containing colons.

Paper intrinsic features, represented by the topics covered in a paper, turned out to be an important distinguishing predictor. It seems that PDs publish fewer papers covering business process management and neural networks in comparison to NPDs. The latter can be linked to an earlier finding from Stage 2 that PDs “do not prefer doing radical research that suggests new models, frameworks, methods and architecture that were not implemented before”. One possible explanation could be that neural networks, despite being a cyclical phenomenon, require radical research whenever there is a recurrence. There were also topics that had much larger coverage in PD papers in comparison to NPD papers, e.g. wireless sensor networks and hardware systems.Footnote 18

As for the publication outlet, we can see that PDs published more journal articles and fewer conference papers, an important predictor that persistently appeared with a high loading. Their preferred publishers were Springer, Elsevier and Wiley, with Elsevier having the highest average loading, supporting the comments of one of the PDs we interviewed who believed that Elsevier journals enable better visibility and impact. PDs were also less likely to publish their papers in ACM. The SJR of PD papers was also significantly higher than the SJR of NPD papers, which implies that PD researchers targeted journals with higher quality and impact.

Table 14 also shows that the first component captures variation associated with PD journal articles while the second component appears to relate to PD conference papers, making it possible to infer some of the characteristics of PD publications in either type of outlet. For instance, component one shows that PD journal articles were correlated with a larger number of authors and affiliations, longer abstracts and higher SJR scores. On the other hand, PD conference papers had fewer affiliations and lower SJR values, while still having long abstracts.

Discussion and conclusions

The main motivation of this study was to understand more about research in the global South through a first application of the data-powered positive deviance methodology, which helped identify and understand those researchers who were able to achieve better research outcomes than their peers. We used a combination of data sources (interviews, surveys and publications) and analytical techniques (PLS regression and topic modelling) to identify predictors of positively-deviant information systems researchers in 11 Egyptian public universities. We found that PDs, despite representing roughly one-eighth (13%) of the study population, contributed to the creation of roughly half (48%) of the publications and achieved nearly double (1.7x) the total number of citations of NPDs.

Significant Predictors of PDs and their Publications

Starting with the practices of PDs, a reasonably clear picture emerged from the analysis showing that significantly more PDs had travelled to get their PhD degrees from global North universities in comparison to NPDs. They had been part of multi-country research teams and published papers with reputable foreign authors. It seems that studying abroad did not just equip them with the technical know-how and the degree needed to pursue their academic careers, but also helped them establish channels of collaboration with their supervisors and their PhD-granting universities, long after they returned to their home countries. This confirms findings from previous studies regarding the importance of international research collaborations (Harris & Kaine, 1994; Kwiek, 2016, 2018; Postiglione & Jung, 2013; Prpić, 1996). Another significant predictor of PDs was their receipt of research grants and travel funds. The findings also show that PDs took scientific/formal writing and English language courses.

The attitudes of PDs also differed from those of NPDs in how they perceived their workplaces. PDs viewed their departments as more hostile or competitive while NPDs viewed them as more friendly. This is somewhat at odds with findings from a previous study that high performers preferred working in a relaxed work environment (Harris & Kaine, 1994). Another counter-intuitive finding was that PDs were less inclined to do radical research when compared to NPDs.

In terms of personal attributes, PDs were mainly males and professors, which confirms conclusions from previous studies that identified gender (Kwiek, 2016; Parker et al., 2010; Patterson-Hazley & Kiewra, 2013; Prpić, 1996) and professorship (Kelchtermans & Veugelers, 2013; Kwiek, 2016) as significant predictors of high performance. A significant number of PDs in comparison to NPDs were department chairs at some point in their academic careers (after becoming professors), which is consistent with a number of studies (Kelchtermans & Veugelers, 2013; Patterson-Hazley & Kiewra, 2013). PDs also supervised a larger number of postgraduate students, which would help in the generation of publications. The ability to select higher quality students would likely result in higher quality publications and more citations, and, in Egypt, department chairs have more leverage than any other academic staff member in the choice of students they will supervise. More generally, the direction of causality here is questionable. For example, given that promotions in Egypt are linked to academic performance, it is likely that some of these factors are impacts of above-average research performance, perhaps more so than causes.

While our work did not set out to test particular theories, we can relate findings to all three of the ideas presented earlier. Consistent with the sacred spark notion, PDs clearly did have an internal drive and motivation for undertaking research, though more often mentioned were external rewards or drivers such as promotion, external recognition and competition that fit with utility maximisation. Perhaps most seen was a sense of cumulative advantage with, for example, researchers who undertook their PhDs overseas then building on that advantage in terms of later publications, grants, and promotions.

The majority of predictors of PD papers, resulting from the publication analysis, are in concordance with existing literature on highly cited papers. They confirm conclusions related to the length of the paper (Elgendi, 2019; Onodera & Yoshikane, 2015), abstract (Lokker et al., 2008) and title (Haslam et al., 2008); the number of authors (Didegah & Thelwall, 2013; Elgendi, 2019; Onodera & Yoshikane, 2015), co-author affiliations from overseas institutions (Van Dalen & Henkens, 2001) and references (Davis et al., 2008; He, 2009); and the quality of the journals (Fu & Aliferis, 2010; Peng & Zhu, 2012). New predictors included the identification of topics that significantly distinguished PDs from NPDs, such as “neural networks” and “wireless sensor networks”, along with publishers who were strongly associated with PD papers, such as Elsevier. This thus provides support for theories based around normative, social constructivist and natural growth factors driving publication citation rates.

Predictors relevant to global South challenges

Through this study, we were able to identify predictors of PDs in a global South context that had not been identified in previous studies, and could provide pointers to ways of overcoming challenges specific to Southern researchers. Southern researchers work in contexts of resource limitation, and PD researchers apply more for research grants and travel funds from international funding bodies. Some applications included partners from Northern universities, which increased the chances of securing the funds, as those partners are more familiar with grant procurement processes and more experienced in writing proposals. PDs build long-standing research collaborations with their overseas supervisors and PhD-granting institutions, which may provide further access to research funds either directly or via joint grant applications. In terms of papers, the publication analysis showed that PDs published more journal articles and fewer conference papers. This choice may relate to seeking profile and citations for outputs: avoiding low-visibility local conferences, and selecting journals as more likely to deliver citations than conferences. But it also fits well as a strategy in the context of limited availability of travel funds. The tendency of PDs to publish with more authors and with foreign authors could also help pay for journal publication fees, with fees split across more authors or paid from overseas sources.

Southern researchers were seen to encounter institutional biases that make it harder for them to get published and cited. PDs are more likely to co-publish with foreign authors, especially US authors, which will help compensate for any such biases among editors, reviewers in single-blind or open review systems, and readers. (Seeking out foreign co-researchers and co-authors also acts as a compensation against the local contextual challenge of there being a smaller research population from which to draw research and publication collaborations.) PDs’ preference for working on established research areas rather than on radical research topics may also help in relation to institutional barriers, with research that builds incrementally on existing ideas and literature being more likely to be accepted for publication by referees, and cited by others working in the established area. Any biases against citation of work by Southern researchers may be counteracted by PDs’ publication of papers with more authors and more affiliations than NPDs. Having multiple authors and affiliations increases the likelihood of citations, as each author has their own network and bringing those networks together can increase readership (Elgendi, 2019). Multiple authorship may also enrich the paper through the integration of different perspectives and expertise, which could lead to greater citation (Peng & Zhu, 2012). Similarly, PDs publish papers with a larger number of references which increases paper visibility through citation-based search in databases that allow it, such as Google Scholar (Didegah & Thelwall, 2013), and through the “tit-for-tat” hypothesis i.e. authors tend to cite those who cite them (Webster et al., 2009).Footnote 19 By and large, then, this tends to support social constructivist views of publication citations; showing how contextual factors influence publication—but also how researchers seek to compensate when those factors may tend to reduce citation rates.

Southern researchers work in contexts of lower English proficiency. PDs were shown to take scientific writing and English writing courses more than NPDs, and their greater likelihood of PhD study at a global North university may also have enhanced their command of English.

Methodological innovation

The use of six different citation metrics enabled us to evaluate performance using different dimensions while controlling for factors that could disadvantage certain groups. It also enabled us to identify and profile PD researchers into three main clusters: rising stars, high performers and highly cited researchers. It was not possible to investigate predictors specific to each cluster individually, due to their small sample size, but this could be a possible avenue for future research.