Abstract

Human–computer interaction (HCI) has become an important area for designers and developers worldwide, and research set in national cultural contexts and addressing local challenges is often needed in industry and academia. This study explored HCI research in the Nordic-Baltic countries using bibliometric methods. The results show that activity varies greatly across the region and is dominated by Finland, Sweden, and Denmark, even when adjusting for differences in population size and GDP. Variations in research output were larger for top-tier conferences than for entry-tier conferences and journals. Locally hosted conferences were associated with local increases in research activity. HCI research longevity appears to be an indicator of research maturity and quantity. HCI researchers typically collaborated either with colleagues within the same institution or with researchers from countries outside the Nordic-Baltic region, such as the US and the UK. Collaboration with national and other Nordic-Baltic partners was less common. Collaboration appeared especially prevalent for top-tier conference papers. Top-tier conference papers were also cited more frequently than regional-tier and entry-tier conference papers, yet journal articles were cited the most. One implication of this study is that the HCI research activity gaps across the Nordic-Baltic countries should be narrowed by increasing activity in countries with low research output. To achieve this, first-time authors could receive guidance through collaborations with experienced authors in the same institution or in other labs around the world. More conferences could also be hosted locally. Furthermore, journals may be more effective than conferences if the goal is to accumulate citations.

Introduction

Successful digital transformations in society depend on highly qualified designers and developers with strong human–computer interaction (HCI) competences that can help establish high-quality user experiences in the respective organizational and cultural contexts. It is claimed that HCI has become one of the most important thematic areas within computer science (Tscheligi, 2007), and the inclusion of HCI in the curriculum of computer science and related disciplines is therefore important (Douglas et al., 2002; van der Veer & Van Vliet, 2003). It is also generally accepted that research-based teaching improves quality in higher education (Healey et al., 2010), and one may assume that it is easier for teachers to provide research-based learning experiences if they are research active. Following this rationale, one could argue that each country should have a certain level of HCI research activity in its higher education institutions to meet these competence needs. Research capacity is indeed one of the accreditation criteria for higher education in many countries.

HCI research has been studied in several regional contexts such as India (Gupta, 2015; Kumar, 2013), Brazil (Barbosa et al., 2017; Paschoarelli et al., 2015), Australia (Mubin et al., 2017), New Zealand (Nichols & Cunningham, 2015), Britain (Padilla et al., 2014a, 2014b), and Asia (Sakamoto, 2015). Other regions, including the Nordic and Baltic regions, have received less attention. Helander (1995) argued for comparing Nordic research (in general), as past comparisons have contributed to helpful debates about the quality and international standing of research within the region. The situation in the neighboring Baltic region is particularly relevant due to the transformations that have occurred since the breakup of the Soviet Union (Grančay et al., 2017). This study therefore explored HCI research activity, as observed through publication counts, within the Nordic-Baltic region. The Nordic-Baltic Eight countries are in geographical proximity to each other and will, in the context of this study, be referred to as regional. The Nordic-Baltic Eight are also members of either the EU or the European Free Trade Association, and many strong formal and economic ties exist between them. One could argue that neighboring countries should not differ too much in terms of building competence, to avoid knowledge and economic gaps within the region. All the countries included in this study follow the European Association for Quality Assurance in Higher Education's (ENQA) common Standards and Guidelines for Quality Assurance in the European Higher Education Area (ESG), and one would therefore expect some minimum level of similarity in their education systems. While there are several general studies of research within the Nordic region (Helander, 1995; Persson et al., 1997) and the Baltic region (Lovakov & Agadullina, 2019; Teodorescu & Andrei, 2014; Zavadskas et al., 2011), there have, to the best of the author's knowledge, been no previous studies of Nordic-Baltic HCI research. General bibliometric studies such as Correia et al. (2019) typically omit data from regional conferences such as NordiCHI.

This study predominantly focused on conference publications, as the publication lists of several renowned HCI researchers show that many influential HCI works have been published at conferences rather than in scientific journals. When attending HCI conferences, it is quite noticeable that some countries are more visible in the conference programs than others. Among the Nordic-Baltic countries in particular, papers by Norwegian, Icelandic, Estonian, Lithuanian, and Latvian authors seem uncommon, while papers by Danish, Finnish, and Swedish authors seem comparatively frequent. This observation is supported by bibliometric comparisons of international HCI research, which only list Denmark, Sweden, and Finland (Bartneck & Hu, 2009; Sakamoto, 2015). These studies give no insight into the remaining countries, namely Norway, Iceland, Estonia, Latvia, and Lithuania. One possible explanation for such differences is that journal articles are valued more in certain countries. For example, Norway has a monetary incentive system in which journal articles are rewarded 30% to 300% more than conference papers (Haugen & Sandnes, 2016). Mannocci et al. (2019) observed that Finland published more articles in the International Journal of Human–Computer Studies (IJHCS) than both Sweden and Denmark, while Sweden and Denmark both published more Computer–Human Interaction (CHI) conference papers than Finland.

Grančay et al. (2017) gave valuable insights into the Baltic context. The Baltic research systems appeared journal-oriented, as there was little mention of conference proceedings. Latvia had a strict requirement for scholars to publish in international journals to be promoted to full professor (five papers during the last six years), although there were no strict requirements for the journals to be indexed. Latvian universities with international accreditation ambitions have introduced stricter requirements. Estonia had a centralized publication classification system with several levels (Estonian Research Portal), but universities were free to set their own promotion criteria. Consequently, criteria vary from the very strict (indexed journal articles) to the more flexible, where it is possible to gain status as associate professor without any indexed journal publications. Although criteria have been tightened during the last decades, Grančay et al. (2017) described the system as vague. Lithuanian universities were also free to set their own criteria. Papers in local indexed journals were not considered equal to those in internationally indexed journals, but were rather deemed to be at the level of lower-ranking international journals. Web of Science had a higher standing than Scopus in Lithuania.

Several studies have documented the positive effects of collaboration (Bu, Ding, et al., 2018; Bu, Murray, et al., 2018; Uzzi et al., 2013; Xu et al., 2015), as the collective input from authors with diverse backgrounds tends to increase paper quality. It is thus relevant to explore how, and with whom, Nordic-Baltic HCI researchers collaborate. In particular, to what degree do countries within the Nordic-Baltic region consolidate their efforts through co-authored papers that address common challenges relevant to the region? If empirical evidence indeed confirms large differences in HCI research activity in the Nordic-Baltic region, one may argue that changes are needed, such as training of researchers, more focused research funding and incentives, improvements to the organization of research within universities, and encouragement of increased collaboration.

The following research questions (RQs) were raised:

- RQ1 Research output: How visible are the research activities of the eight Nordic-Baltic countries in different tiers of HCI conferences (top, regional, and entry tiers)?

- RQ2 Publication type: Does the tendency to publish in conferences versus journals vary across the Nordic-Baltic region?

- RQ3 Longevity: What does research activity over time reveal about the HCI research longevity of the Nordic-Baltic countries?

- RQ4 Co-authorship: To what extent do HCI researchers within the Nordic-Baltic region co-author conference papers, and with whom do they collaborate?

- RQ5 Citation impact: How frequently is Nordic-Baltic HCI research cited?

To answer these questions, a bibliometric study was carried out based on publication counts aggregated at the national level as a measure of active research participation. It was an explicit intention not to focus on individual merits, but rather on what HCI researchers achieve collectively.

Related work

Broadus (1987) explored the use of the term bibliometrics in the literature and summarized that “there does seem to be a clearly delineated body of research involving physical units of publications, bibliographic citations, and surrogates for them. The measurement of these items is called, logically, bibliometrics”. Broadus (1987) also clarified the distinction between bibliometrics and the related term scientometrics: “There is a large area of overlap, then, between bibliometrics and scientometrics, but many of the former deal with matters other than the measurement of science, or scientists, or scientific activity, while, on the other hand, many of the measurements interesting to scientometricians are based on data not derived from publication or other forms of communication.” Bibliometric research methods can be useful in understanding research effort, behavior, structures, growth, and impact, and they have also been popular in the fields of human factors (Lee et al., 2005) and HCI (Bartneck & Hu, 2009; Kaye, 2009; Koumaditis & Hussain, 2017). Subfields of HCI have also been studied using bibliometric methods, for example user-centered design (Cho et al., 2020), ubiquitous computing (Silva et al., 2012), the World Wide Web (Agarwal et al., 2016), human–robot interaction (Mubin, Arsalan, et al., 2018; Mubin, Tejlavwala, et al., 2018), accessibility (Sandnes, 2021), mobile technology (Palomäki et al., 2014), intelligent user interfaces (Völkel et al., 2020), human–agent interaction (Mubin et al., 2017), and computer-supported cooperative work (Jacovi et al., 2006; Correia et al., 2013; Keegan et al., 2013; Correia et al., 2018a, 2018b, 2019). Several bibliometric studies of conferences conclude with informed advice to the conference organizers on how improvements can be made (Kostakos, 2015; Mubin et al., 2017). As argued by Kaye (2009), it is healthy to occasionally take an introspective look at a research field.

Bibliometric data facilitate easy comparison of researchers and are increasingly used for recruiting academics, promotion, tenure, and allocating funding (Franceschet, 2010; Haugen & Sandnes, 2016; Sandnes, 2018). Such data must be used with caution, as publication and citation counts can only lend support to causal hypotheses, not establish causality (Sandnes, 2020). An informative example of the ethical use of bibliometric methods can be found in Hammer et al. (2018), where quantitative bibliometric methods were combined with qualitative methods (interviews) to gain a more complete understanding of the phenomenon.

International comparisons of research output

Several studies have used conference publications to compare HCI research in different countries (Bartneck & Hu, 2009; Henry et al., 2007; Sakamoto, 2015). Sweden was the only Nordic-Baltic country mentioned in a study of human–robot interaction (Bartneck, 2011), where it was listed as the 11th most active nation worldwide. In a broader study, Bartneck and Hu (2009) found that only 8% of the world's countries were responsible for 80% of the papers, signaling a lack of geographical diversity. The US contributed the most papers. Sweden, Finland, and Denmark were the only Nordic-Baltic countries listed, together with seven other productive countries. This indicates that Sweden, Finland, and Denmark are indeed influential HCI research nations. Bartneck and Hu also created a ranking based on the h-index, in which Sweden ranked highest (h-index 22), followed by Denmark (18), Finland (16), and Norway (3). None of the other countries studied herein was listed. Moreover, they found that papers that received best-paper awards were not cited more often than a random sample of papers from the same years. Most papers were affiliated with universities; there were twice as many papers affiliated with universities as with companies, and only a fraction of papers were affiliated with research institutions. They also observed a dip in participation at CHI 2000 and attributed this to the conference being hosted outside the US and to the dotcom bubble.
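Since the country ranking above rests on the h-index, a short sketch may help make the metric concrete: a set of papers has index h when h of its papers have at least h citations each. The Python function below applies this definition to a hypothetical list of per-paper citation counts for one country; the function name and the numbers are illustrative and are not taken from Bartneck and Hu (2009), whose exact procedure is not described here.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        # The paper at position `rank` must have at least `rank` citations.
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for the papers of one country.
example = [25, 8, 5, 4, 3, 0]
print(h_index(example))  # 4
```

A single highly cited paper (25 citations here) raises the index by at most one, which is why the h-index rewards a body of consistently cited work rather than isolated successes.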

Six years later, Sakamoto (2015) revisited Bartneck and Hu's study on the grounds that its results were no longer representative due to growth in Asian participation. Other interesting observations in Sakamoto's study were that Finland had moved up two places, past both Sweden and Denmark, that Sweden had fallen three places, and that Denmark's position was unchanged.

A recent study by Mannocci et al. (2019) showed that Denmark was the 14th most productive country at the CHI conference, immediately followed by Sweden and Finland, while Finland had more articles in the IJHCS journal (11th place) than both Sweden (14th place) and Denmark (20th place). They also found that Swedish papers were more frequently cited by CHI conference papers and IJHCS journal articles than papers by Finnish and Danish authors. Finland was included in the top 10 lists for several areas at CHI (machine learning and virtual reality) and IJHCS (human–computer interaction, mobile computing, computer security, and computer games), while Sweden appeared on one top 10 list for CHI (robotics).

Bibliometric studies have not been limited to comparisons of research output across geographical regions. Researchers have also explored how different HCI research topics have grown, shrunk, or remained stable over time (Giannakos et al., 2020; Liu et al., 2014; Padilla et al., 2014a, 2014b). Hornbæk et al. (2019) tracked the use of the term “interaction” at CHI across several decades.

Mubin, Arsalan, et al. (2018) and Mubin, Tejlavwala, et al. (2018) were unable to find any connection between the readability of CHI papers and the region of affiliation. Instead, they found that papers with theoretical content had lower readability than papers presenting artefacts. Yet papers with theoretical contributions were more likely to win awards than papers presenting artefacts.

Longevity and evolution of HCI

Several researchers have evaluated the evolution of the field of HCI at various points in time (see Wulff & Mahling, 1990; Hartson, 1998). Savage et al. (2005) argued that HCI is a relatively young field compared to other human factors areas. They characterized 1978–1988 as the GUI paradigm period and 1989–1999 as the Internet period, and observed that HCI textbooks emerged around 1992–1994. Savage et al. (2005) analyzed the evolution of HCI using articles published in the Human Factors journal between 1984 and 2004. HCI papers emerged in 1995, with a significant increase from 2001 onwards. In a study of 24 years of CHI proceedings, Barkhuus and Rode (2007) found that the use of qualitative methods had increased over the years compared to quantitative methods. An early study by Newman (1994) used bibliometric data to explore the impact of papers reporting radical solutions on the evolution of the HCI field. The take-away message was that papers presenting radical solutions need to be complemented by more elaborate follow-up studies.

Research collaboration

Co-authorship of papers is typically used to study research collaboration (Horn et al., 2004; Melin & Persson, 1996). Co-authored papers have been linked to higher citation counts (Wuchty et al., 2007). It is claimed that scientific impact can be related to the number of authors (Larivière et al., 2015), as a larger research team will typically contain more collective competence than a small team or a single author. Kumar (2015) found that HCI researchers' publication patterns follow Lotka's law, but that HCI research is characterized by many low-producing authors and few high-producing authors compared to other fields. Collaboration between first-time authors and experienced researchers is thus even more important. Bu, Ding, et al. (2018) and Bu, Murray, et al. (2018) pointed out that collaboration, leading to co-authorship, is a means to make better use of limited resources such as time, knowledge, funding, and equipment. One may also argue that major scientific breakthroughs can emerge from transdisciplinary collaborations among diverse teams (Bu, Ding, et al., 2018; Bu, Murray, et al., 2018; Uzzi et al., 2013; Xu et al., 2015).
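Lotka's law, which Kumar (2015) refers to, is usually stated as an inverse power law relating the number of authors to the number of papers they produce. In the general form below, f(n) is the number of authors with n papers, C is the number of authors with a single paper, and the exponent a was close to 2 in Lotka's original data; these symbols are introduced here for illustration only.

```latex
f(n) = \frac{C}{n^{a}}, \qquad a \approx 2
```

With a = 2, there are about a quarter as many authors with two papers as with one, and about one ninth as many with three. In this formulation, a field with relatively many low-producing and few high-producing authors would show a steeper decline, that is, a larger fitted exponent; the exponent reported by Kumar (2015) is not restated here.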

Cross-disciplinary citations have also been analyzed to assess the degree to which different scientific communities draw inspiration from each other. For example, Guha et al. (2013) found a strong connection between CHI and SIGGRAPH, Karamanis et al. (2009) found few connections between HCI and natural language processing, and Panjwani (2004) found that ergonomics was an isolated field, whereas the human factors and HCI fields were intertwined.

As HCI is a multidisciplinary field, it follows that collaboration and co-authorship should be the norm. Still, Bartneck and Hu (2010) claimed that collaboration within the multidisciplinary field of HCI can be challenging. Since collaboration has been identified as key to scientific advancement and impact, it is relevant to investigate the degree of collaboration within the Nordic-Baltic region.

Journal versus conference dichotomy

There seems to be general disagreement within the computer science community regarding the status and value of journal versus conference publications (Franceschet, 2010). Arguments in favor of conferences include rapid publication, which suits a field such as computer science with its rapid paradigm shifts, and the fact that conferences bring people together. On the downside, conferences have been criticized for not allowing enough time between acceptance and camera-ready submission. Conferences may also push authors to follow conference deadlines rather than publish when the work is ready. Franceschet (2010) argued that conferences encourage safe papers that communicate incremental improvements rather than large leaps. Most computer scientists published in both conferences and journals, with similar ratios of approximately 2/3 conference papers and 1/3 journal articles. Moreover, highly cited researchers published only a third as many papers as the most active researchers, while Turing Award winners published only one sixth as many. Franceschet concluded by recommending publishing fewer, more mature works in journals rather than incremental works at conferences. While reporting similar observations, Kim (2019) argues that fields outside computer science need to acknowledge the publication patterns within computer science. In a study of nearly 50,000 conference papers, Lee (2019) found that impact was linked to conference longevity, proceedings with few papers, low acceptance rates, broad scope, papers by authors from different countries, and papers presented in the months January through June and August.

Method

Bibliometric methods were used to collect empirical evidence about HCI research activity, longevity, choice of publication type, collaboration, and citation impact. This study is based on the Nordic-Baltic Eight, which comprises the Nordic countries (Denmark, Finland, Iceland, Norway, and Sweden) and the Baltic countries (Estonia, Latvia, and Lithuania). An underlying assumption of this work is that publication counts reflect research activity: active HCI researchers generate publications, and passive academics do not. No attempt was made to discriminate between different categories of publications within each venue, such as full papers, short papers, posters, demos, workshops, and work-in-progress.

Choice of conferences

Conferences believed to give a representative impression of the state of HCI research were selected. Three general HCI conferences at different tiers were chosen to establish an impression of how the different countries have positioned themselves in terms of ambition (quality) and activity (quantity): CHI (top tier), NordiCHI (regional tier), and HCI International (entry tier). CHI is considered by many HCI researchers to be the premier conference (Bernstein et al., 2012; Grudin, 2006; Pohl & Mottelson, 2019). It has a Computing Research and Education (CORE) A* rating and is ranked first among HCI conferences by Google Scholar. CHI is also a large conference in terms of participants (approximately 3800 in 2019) and proceedings (703 papers in 2019) and was therefore considered to provide a sufficiently large sample. NordiCHI practices rigorous reviews and was regarded as a highly relevant conference due to its regional anchoring. Comparatively, NordiCHI is a small conference (89 papers in 2020), but it is the only international venue regularly hosted in the region. HCI International can be classified as an inclusive conference, with reviews mostly based on extended abstracts. It does not have a CORE rating but is listed in 14th place in the Google Scholar HCI conference rankings. HCI International was considered a relevant venue because it contrasts with and complements both CHI and NordiCHI: it is more accessible to early-career researchers while also being attended by renowned and experienced HCI researchers.

Although early-career researchers and master's and PhD students present their first papers and get feedback on their work at all three venues, HCI International is a tangible option for those whose work was not accepted at CHI, NordiCHI, or other highly competitive conferences such as the ACM Symposium on User Interface Software and Technology (UIST), INTERACT, ACM Designing Interactive Systems (DIS), and the Annual Conference on Intelligent User Interfaces (IUI). It is argued that including a less competitive venue in the sample helps capture a broader and more complete picture of HCI research activity in the region (RQ1). The results thereby reveal more about the (more than) 75% of submissions that never make it to the most competitive conferences. HCI International was considered to provide a sufficiently large sample, as it is one of the largest annual entry-tier HCI conferences in terms of participants (approximately 2350 in 2020) and proceedings size (40 Springer volumes in 2020). It also has a broad thematic scope. The International Conference on Applied Human Factors and Ergonomics (AHFE) is another large entry-level alternative; however, this conference was not included, as several of its thematic areas are outside the scope of HCI.

Choice of databases

Publication data were extracted using Scopus. Scopus and Web of Science (WoS) are often used in general bibliometric studies and in some bibliometric HCI studies (Koumaditis & Hussain, 2017; Kumar, 2013; Meho & Rogers, 2008; Palomäki et al., 2014; Shiau et al., 2016). Although such databases have limited coverage (García-Pérez, 2010; Gehanno et al., 2013; Sandnes & Grønli, 2018; Sandnes & Brevik, 2019), inspections showed that the publication channels chosen for this study were indexed. Scopus facilitates the export of publication records as comma-separated values (CSV) files. Many bibliometric studies of the HCI field make use of the Association for Computing Machinery Digital Library (ACM-DL); however, the metadata are often scraped from the ACM-DL using scripts (Bartneck & Hu, 2009; Sakamoto, 2015). The ACM-DL data are also reported to contain problems, inconsistencies, and spelling mistakes. Examples include Bill Buxton being listed as both William Buxton and W. Buxton, many US authors not listing the US as their country, and gaps such as no CHI conference in 1984 and 4% of affiliations missing from the CHI metadata for 1981, 1982, and 1992.

The HCI Bibliography is another valuable source of HCI research, as it provides a wider view of HCI research than specific databases such as ACM-DL, Scopus, and Web of Science. Unfortunately, the project has not been updated since 2018. Moreover, the database contains limited information about author affiliations and is therefore not suitable for several types of bibliometric analyses.

Procedure

The conference publication records were extracted from Scopus as CSV files using the advanced search option with the queries “(CONF ("human factors in computing" sigchi) AND AFFILCOUNTRY (norway OR sweden OR denmark OR finland OR iceland OR estonia OR latvia OR lithuania))” for CHI, “(CONF (nordichi) …” for NordiCHI, and “(CONF ("HCI international") …” for HCI International. Extracted records were not post-processed and therefore also included non-scientific records such as forewords and editorials. Searches were conducted on October 29, 2020.

A simple Java program was written to extract summary statistics from the CSV files. The statistics computed included the number of publications per year; authorship profiles per country, including the ratio of single-authored papers, the mean number of authored papers, mean citation counts, and the numbers of unique and recurring authors; and collaboration profiles, including intra-institutional, national, Nordic-Baltic, and global collaborations.
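The Java program itself is not reproduced in the paper. As an illustration only, the following Python sketch computes a few of the listed statistics from a Scopus CSV export and classifies a paper's collaboration profile. The column names ("Year", "Author(s) ID", "Cited by"), the assumption of one (institution, country) pair per author, and the precedence of the collaboration categories are assumptions of this sketch, not details taken from the study.

```python
import csv
import io
from collections import Counter

# Country names assumed to identify Nordic-Baltic affiliations.
NORDIC_BALTIC = {"Denmark", "Finland", "Iceland", "Norway", "Sweden",
                 "Estonia", "Latvia", "Lithuania"}


def summary_stats(csv_text):
    """Publications per year, share of single-authored papers, mean citations.

    Assumes Scopus-style columns "Year", "Author(s) ID" (IDs separated by ";")
    and "Cited by" (empty when a record has no citations).
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {"per_year": {}, "single_author_ratio": 0.0, "mean_citations": 0.0}
    per_year = Counter(row["Year"] for row in rows)
    # Count non-empty author IDs per record (exports may end with a trailing ";").
    n_authors = [len([a for a in row["Author(s) ID"].split(";") if a.strip()])
                 for row in rows]
    citations = [int(row["Cited by"] or 0) for row in rows]
    return {
        "per_year": dict(per_year),
        "single_author_ratio": sum(1 for n in n_authors if n == 1) / len(rows),
        "mean_citations": sum(citations) / len(rows),
    }


def collaboration_type(affiliations):
    """Classify one paper from its (institution, country) pairs, one per author.

    The order of the checks (global, Nordic-Baltic, national,
    intra-institutional) is an assumption of this sketch.
    """
    if len(affiliations) < 2:
        return "single author"
    institutions = {inst for inst, _ in affiliations}
    countries = {country for _, country in affiliations}
    if countries - NORDIC_BALTIC:
        return "global"          # at least one partner outside the region
    if len(countries) > 1:
        return "Nordic-Baltic"   # several countries, all within the region
    if len(institutions) > 1:
        return "national"        # one country, several institutions
    return "intra-institutional"


# Hypothetical usage with a three-record export.
sample = "Year,Author(s) ID,Cited by\n2019,111;222;,12\n2019,333;,\n2020,111;444;555;,3\n"
print(summary_stats(sample))
print(collaboration_type([("Univ A", "Norway"), ("Univ B", "Norway")]))  # national
```

Checking the broadest category first means a paper with any partner outside the region is always labeled global, even if it also involves several Nordic-Baltic institutions; other precedence rules are equally defensible.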

Publication statistics were prepared such that a paper was counted once for each country if that country was listed under one of the author affiliations. For example, if a paper was authored by a Danish researcher and two Swedish researchers the paper would count once towards the tallies of both Denmark and Sweden.
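The counting rule described above corresponds to what is often called whole (or full) counting. A minimal Python sketch follows, assuming a Scopus-like affiliation string in which affiliations are separated by semicolons and each ends with the country name after the last comma; real affiliation strings are messier, and this parsing is a simplification rather than the study's code. The institution names are hypothetical.

```python
from collections import Counter


def countries_of(affiliations_field):
    """Return the set of countries named in one record's affiliation string.

    Simplifying assumption: affiliations are separated by ";" and each one
    ends with the country name after the last comma.
    """
    countries = set()
    for affiliation in affiliations_field.split(";"):
        parts = [part.strip() for part in affiliation.split(",") if part.strip()]
        if parts:
            countries.add(parts[-1])
    return countries


def count_per_country(affiliation_fields):
    """Whole counting: each paper adds exactly 1 to every country it lists."""
    tally = Counter()
    for field in affiliation_fields:
        tally.update(countries_of(field))  # a set, so duplicates count once
    return tally


# Hypothetical paper with one Danish and two Swedish affiliations.
paper = ("University A, Copenhagen, Denmark; "
         "University B, Stockholm, Sweden; University C, Uppsala, Sweden")
print(sorted(count_per_country([paper]).items()))  # [('Denmark', 1), ('Sweden', 1)]
```

Because each country is counted once per paper, the country totals can sum to more than the number of papers.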

To investigate whether publication cultures differed across the eight countries in terms of preference for conferences or journals, publication counts for the most notable HCI journals were extracted from Scopus. No authoritative list of key HCI journals endorsed by associations such as ACM and the Institute of Electrical and Electronics Engineers (IEEE) could be found, and Scopus did not seem to have a dedicated HCI subject area. Therefore, the list of journals was based on a list of HCI journals maintained by Matthias Rauterberg, co-founder and co-editor of Entertainment Computing (see http://www.idemployee.id.tue.nl/g.w.m.rauterberg/hci-journals.html). The list of journals was also corroborated against the HCI Bibliography journal list (http://www.hcibib.org/hci-sites/JOURNALS.html) and Google's list of HCI journals. The inclusion criteria were as follows: the journals had to be indexed by Scopus and have a distinct HCI focus. Technical journals not primarily focused on HCI, such as IEEE Transactions on Systems, Man, and Cybernetics, were omitted. The resulting list comprised 12 journals (see Table 1). Journal articles published from 1987 to 2020 were counted. According to Kumar (2014), HCI researchers tend to focus on a smaller set of journals than researchers in other disciplines, whose works are scattered across a wider range of journals. Consequently, the journal list was considered relatively representative.

Analysis

Research activity was analyzed using absolute publication counts, as well as these publication counts normalized according to the respective gross domestic products (GDP) and the country population sizes. Relationships between GDP and research output have been reported in several works (see for instance Hart & Sommerfeld, 1998; Vinkler, 2008; Onyancha, 2020) as well as relationships between GDP per capita and research indicators (see for instance Ye, 2007).

The absolute publication output indicates the respective efforts of the different countries in relation to each other and how they are likely to fare in the global research competition. However, the Nordic-Baltic Eight countries are rather diverse in terms of economy and population. If one assumes that a country with a strong economy is likely to invest more resources in research than a country with an emerging economy, and that a country with many inhabitants is more likely to foster research talent than a country with a smaller population, it makes sense to normalize the publication metrics according to economic and talent-pool dimensions. GDP was used as a measure of economic strength. Dividing a country's publication output by its GDP gives a measure of publication production corrected for economic strength. A large value thus signifies a country with a large publication output relative to the size of its economy, and a low value indicates low output relative to economic size.

Next, population size was used as a measure of the size of the talent pool. Dividing a country's publication output by its population gives a measure of publications per person; a high value thus indicates a country with high activity per person. The data were also normalized using the number of tertiary education graduates in the eight countries in 2018, based on World Bank data, as a representation of education output. The results were quite similar to those obtained using population size, so only the results obtained using population sizes are reported herein.
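Both normalizations amount to dividing a publication count by a denominator and rescaling. The Python sketch below uses two hypothetical countries, A and B, with invented figures; GDP in billions of US dollars is an assumed unit, since the exact GDP units and reference year are not stated in this section. It shows how a smaller country can rank lower on absolute counts but higher once output is normalized.

```python
def normalize(count, denominator, scale=1.0):
    """Publications per `scale` units of the denominator (GDP or population)."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return count / denominator * scale


# Hypothetical countries: A is large, B is small; numbers are illustrative only.
countries = {
    "A": {"papers": 200, "gdp_bn_usd": 500.0, "population": 10_000_000},
    "B": {"papers": 30, "gdp_bn_usd": 30.0, "population": 1_300_000},
}

for name, c in countries.items():
    per_gdp = normalize(c["papers"], c["gdp_bn_usd"])                # papers per billion USD
    per_pop = normalize(c["papers"], c["population"], scale=10_000)  # papers per 10,000 inhabitants
    print(f"{name}: absolute={c['papers']}, per GDP={per_gdp:.2f}, per 10k={per_pop:.3f}")
```

Here A publishes more papers in absolute terms, but B ranks higher on both normalized measures (1.00 versus 0.40 papers per billion USD, and about 0.231 versus 0.200 papers per 10,000 inhabitants).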

Results and discussions

HCI research activity (RQ1)

Results

Figure 1 sheds light on the extent of HCI research output in the Nordic-Baltic region (RQ1), ranking the research output of the Nordic-Baltic countries for the top-tier, regional-tier, and entry-tier conferences. The results show that HCI research activity in the Nordic-Baltic countries varied from low to high. Sweden, Denmark, and Finland exhibited consistently high publication counts of similar magnitude. At the top tier, Norway was in fourth place, but its output was just under 10% of that of its three more productive neighbors. At the regional tier, the output relative to the three top countries was higher for Norway (about 50%), Iceland (about 10%), and Estonia (about 5%). Latvia had no papers at the top-tier or the regional-tier conference, while Lithuania, Iceland, and Estonia had fewer than 5 papers each. All the Nordic-Baltic countries were represented at the entry tier. Norway exhibited the highest productivity at the entry tier, and Estonia's output was also notable (about 30% of that of the most active countries).

Fig. 1 Research intensity within HCI in terms of conference publication output for the Nordic-Baltic countries. Columns list the top-tier (CHI), regional-tier (NordiCHI), and entry-tier (HCI International) conferences. Rows represent the absolute number of publications, publications normalized according to GDP (per 1,000), and publications normalized according to population (per 10,000).

When the top-tier publication counts were normalized according to GDP, Finland ranked top and Sweden fell to third place. Estonia and Iceland rose to fourth and fifth place, and Norway fell to sixth place. Denmark topped the list when publication counts were normalized using population sizes.

Normalizing the regional-tier observations according to both GDP and population revealed a distinct pattern, with Iceland topping the list in both cases. The normalized productivity of Estonia was also much larger, while that of Sweden was drastically smaller.

Entry-tier publication counts normalized according to GDP show that Estonia was well ahead of the other countries, while normalizing according to population put Norway in the lead and Estonia in second place. Under both normalizations, the productivity of Sweden was low.

The proportions of unique and recurring authors give some indication of the maturity of a research environment (see Fig. 2). A unique author was defined as an author with just one publication, and a recurring author as an author with multiple publications. For the top-tier conference, about half of the authors from the three most active countries (Sweden, Denmark, and Finland) were unique and half were recurring, while most of the Norwegian authors of top-tier papers were unique. At the regional-tier and entry-tier conferences, the share of unique authors was generally higher than at the top-tier conference (approximately 2/3 unique and 1/3 recurring authors).
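The unique/recurring split can be sketched as follows. The sketch assumes, hypothetically, that each paper is represented by a list of already disambiguated author identifiers. Authors are counted only within the set of papers passed in, which mirrors the caveat that authors who usually publish elsewhere may be misclassified as unique.

```python
from collections import Counter


def classify_authors(papers: list[list[str]]) -> dict[str, str]:
    """Label each author 'unique' (one paper) or 'recurring' (several papers)."""
    # set() guards against the same author ID being listed twice on one paper
    counts = Counter(a for authors in papers for a in set(authors))
    return {a: "recurring" if n > 1 else "unique" for a, n in counts.items()}


def unique_share(papers: list[list[str]]) -> float:
    """Fraction of distinct authors who appear on exactly one paper."""
    labels = classify_authors(papers)
    return sum(1 for v in labels.values() if v == "unique") / len(labels)


# Hypothetical author IDs for three papers from one country and venue tier.
papers = [["a1", "a2"], ["a1", "a3"], ["a4"]]
print(classify_authors(papers))  # a1 is recurring; a2, a3 and a4 are unique
print(unique_share(papers))      # 0.75
```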

Discussion

In answering RQ1 (extent of Nordic-Baltic HCI research output), the entry-tier results show that there were HCI research activities in all the Nordic-Baltic countries. Yet, the results also confirm that HCI research activity in this region ranged from low to high. Sweden, Denmark, and Finland consistently published the most papers. These three countries are also the most populous in the region and among those with the largest economies. One may speculate that the probability of developing talents into internationally leading researchers is higher if the talent pool is large. Perhaps a minimum critical mass is needed? If we assume that Aminer’s list of the 100 most influential HCI researchers (Aminer, 2016) represents such internationally leading researchers, the list is consistent with this speculation, as two of the three largest Nordic-Baltic countries in terms of HCI research are represented, with a Danish researcher in 88th place and a Finnish researcher in 91st place.

Varying levels of research maturity are one possible explanation for the large variation in research activity in the Nordic-Baltic region, where Sweden, Denmark, and Finland exhibit traits of mature HCI research, while HCI research in the five other countries seemed less developed. The activity levels of Sweden, Denmark, and Finland at the top-tier venue demonstrate that researchers in these countries competed successfully for spots in the highly competitive conference program. The presence of papers from the five other countries at the entry-tier conference confirms that there was indeed HCI activity in all the Nordic-Baltic countries. Perhaps work published at the entry-tier has not yet reached the maturity level that merits publication at the top-tier conference? A possible delay in the HCI research maturity of the Baltic countries could perhaps be explained by their relatively recent independence from the Soviet Union. Estonia’s position as the most active of the Baltic countries in terms of HCI research can possibly be linked to the nation’s intense digitalization efforts; Estonia has received international attention for its success in building up a high-tech industry, implementing online public services, and being an early adopter of computers in schools (Billon et al., 2010; Björklund, 2016).

Research maturity may explain why the top-tier papers had the largest proportion of recurring authors. Scholars gain experience as they build up their publication portfolios, and eventually they may acquire the skills and practices needed to have papers accepted at the top-tier. First-time authors may learn such skills by collaborating with more experienced colleagues. Academic environments populated with experienced HCI researchers are therefore more likely to develop top-tier publishing cultures. Early-career researchers without access to experienced colleagues may find it harder to have papers accepted at the top-tier conferences. It must also be noted that some authors may have been incorrectly classified as unique in this study, since only three conferences were sampled. Some of these authors may in fact have been experienced authors who usually publish their research elsewhere.

A third explanation for the large variation in publication activity across the Nordic-Baltic region could be large differences in population and GDP. The normalized publication counts appear to explain some of the differences in publication output. Indeed, the normalized research activity observations reveal that Iceland, with a population of just over 300,000, is on par with Sweden, Denmark, and Finland at the regional-tier conference. Norway’s HCI research activities at the top-tier and regional-tier conferences seem lower than what could be expected in view of its GDP and population. The normalized publication outputs for Estonia also give a more nuanced impression of reasonable research activity. However, Estonia is the smallest of the three Baltic countries, and one would expect a higher activity level in both Latvia and Lithuania, since both their populations and their economies are larger than those of Estonia. If one subscribes to the assumption that quality in education hinges on faculty members being active researchers, one may hypothesize that HCI education in Latvia and Lithuania is not at the same level as in the other countries. The eight countries have different sociocultural norms, academic traditions, cultures, and research systems. Each of the eight countries faces different challenges, has different needs, and consequently sets different national priorities. National priorities ultimately affect higher education and research, including the degree to which scholars are motivated to publish and how they publish. Indeed, these factors may explain some of the observed differences.

The data only captured texts written in English and published at international conferences and in international journals. Many countries also have national journals and conferences where research is disseminated in the local language. The research activity of some countries may therefore be higher than the results reported herein suggest.

Differences in salary levels may influence how attractive academia is to talent. The observed publication output appears to be related to the salary profiles of the respective countries. For instance, Latvia had the sixth-lowest academic salaries in a study of academic salaries in 28 countries around the world (Altbach et al., 2012), where academic salaries in Norway were found to be twice as high in terms of purchasing power. Similarly, in 2007 the European Commission listed the academic salaries in Estonia and Latvia as the lowest of the countries studied herein, with somewhat higher salaries in Lithuania. One exception is Finland, which had a high publication output despite salaries in the middle of the range.

The normalization of research output was intended to compensate for the large population differences across the Nordic-Baltic region. Abramo et al. (2020) discussed the challenges of comparing the research output of large and small countries and how to make such comparisons as equitable as possible. In their study, the research output of Italy (a large country) was compared with that of Norway (a small country), and the challenges listed included incompatible data and differences in what they term “professor field classification schemes”. Despite these challenges, the authors emphasized the importance of comparing the research outputs of small and large countries, as such comparisons provide policy makers with facts that can be used to strategically analyze the strengths and weaknesses of various fields and disciplines. Especially in areas where a country has too few professors, comparisons with larger countries may facilitate more robust analyses.

Although any nation has the option of stimulating the growth of HCI research through funding and incentives, we should also ask what the international HCI community can do to diversify participation and stimulate global HCI research activity. Would it increase activity levels if representatives in low-performing countries were invited to host future HCI conferences?

Conferences vs journals (RQ2)

Results

To probe whether publication cultures, in terms of a preference for conferences versus journals, could explain the variation in conference publication intensity across the countries (RQ2), publication counts for the most notable HCI journals were extracted (see Table 1). The observations show that the journal article profile was similar to the conference publication profile. Finland was the most active in terms of journal articles, followed by Sweden, Denmark, and Norway. These were followed by Iceland, Estonia, and Lithuania, with noticeably fewer publications. Only one HCI journal article was identified for Latvia. Finland topped the list when the publication counts were normalized using GDP, followed by Iceland, Estonia, Denmark, Sweden, Norway, and Lithuania. Finland also topped the list when the publication counts were normalized according to population size, followed by Iceland, Denmark, Norway, and Sweden. Table 1 shows that Computers in Human Behavior published the largest share of the journal articles by Nordic-Baltic authors (about one third), followed by Behaviour & Information Technology (about one fifth) and International Journal of Human–Computer Studies (approximately one in ten).

To get an indication of the relationship between conference and journal publications (RQ2), the ratio of the total number of conference papers to journal articles was plotted (see Fig. 3), where a low value indicates a tendency towards journal articles and a high value a tendency towards conference publications. Lithuania exhibited the strongest tendency towards journal articles, closely followed by Norway, Finland, Sweden, and Denmark. Iceland exhibited the strongest prevalence of conference publications, while Latvia and Estonia were somewhere in the middle. This is a rough estimate, as both the set of conferences and the set of journals are subsets of all publication channels. Although the absolute ratios could be biased by the sample, the ranking of the ratios may be less sensitive to sampling bias, as the same sample is used for all eight countries.
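The ratio and the resulting ranking can be sketched in a few lines of Python. The function names and counts are hypothetical; the sketch only assumes that each country is described by a pair of totals (conference papers, journal articles) drawn from the same venue sample.

```python
def conf_journal_ratio(conference_papers: int, journal_articles: int) -> float:
    """Conference-to-journal ratio; low values indicate a journal orientation."""
    if journal_articles == 0:
        return float("inf")  # no journal articles: treated as fully conference-oriented
    return conference_papers / journal_articles


def rank_journal_orientation(counts: dict[str, tuple[int, int]]) -> list[str]:
    """Countries ordered from most journal-oriented to most conference-oriented.

    `counts` maps a country to (conference papers, journal articles).
    """
    return sorted(counts, key=lambda c: conf_journal_ratio(*counts[c]))


# Hypothetical counts, not the study's data.
counts = {"X": (40, 20), "Y": (30, 5), "Z": (10, 10)}
print({c: conf_journal_ratio(*n) for c, n in counts.items()})  # {'X': 2.0, 'Y': 6.0, 'Z': 1.0}
print(rank_journal_orientation(counts))                         # ['Z', 'X', 'Y']
```

Because every country is measured against the same subset of venues, a sampling bias shifts all ratios in a similar way, which is why the ranking is expected to be more robust than the absolute values.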

Discussion

The answer to RQ2 is that the tendency to publish in journals versus conferences varied across the Nordic-Baltic region. The differences between Norway and the other Scandinavian countries were smaller for journal articles than for conference papers. Perhaps Norwegian HCI researchers prefer publishing in journals rather than at the top-tier conferences? The Norwegian incentive system could partially explain this, as journal articles are given a higher monetary reward (30% more) than conference papers (classified as book chapters). This could also explain why Norway has more papers at HCI International than at the ACM conferences, as the proceedings of HCI International are published in the Lecture Notes in Computer Science (LNCS) series, which is technically classified as a journal in the Norwegian incentive system (a series with an ISSN). The national incentive system could also explain why Norwegian scholars have twice as many publications in the Journal of Computer-Mediated Communication as the other countries, as this journal is ranked as a top journal (level-2), resulting in a 300% monetary reward compared to ordinary (level-1) journals. A similar explanation could apply to the Computer Supported Cooperative Work journal (level-2), where Norwegian researchers have also published more work than scholars from the other countries. Note that only 4 of the 12 journals listed are classified as top-tier (level-2) journals in the Norwegian incentive system.

It is also noticeable that the journal with the most articles by Nordic-Baltic researchers, namely Computers in Human Behavior, is also the one with the highest journal impact factor among the journals listed (IF: 5.003 in 2019). Could it be that this journal is especially attractive to Nordic-Baltic researchers because of its high impact factor, its reputation, or both? No other obvious relationships between journal impact factor and publication patterns could be observed.

The observed ratio of journal articles to conference papers (31.3%/68.7%) by Nordic-Baltic HCI researchers is not far from the ratio of approximately 1/3 journal articles and 2/3 conference papers observed a decade ago for computer science (Franceschet, 2010), indicating that publishing patterns have not changed much during the last decade. The journal-conference ratios indicate that Lithuania and Norway may be more journal-oriented than the other six countries. However, the observations do not support the hypothesis that Baltic researchers in general tend to publish more in journals than at conferences, as there are more HCI-related conference papers than journal articles for all the Baltic countries. Although Lithuania seems to be the most journal-oriented country, and Estonia and Latvia among the least journal-oriented, the publication counts are too low to provide statistical power. The evidence suggests that Latvian HCI research activity is too low.

The observed dominance of Estonia in journal articles within the Baltic region, followed by Lithuania and Latvia, agrees with the observations of Lovakov and Agadullina (2019) for psychology, where Estonia was affiliated with 26.8% of all psychology journal articles published by post-Soviet countries, Lithuania with 7.5%, and Latvia with only 1.9%.

The results do not echo the journal orientation described by Grančay et al. (2017). They found that most of the Estonian research was published in journals, while the work by Lithuanian researchers was published in both journals and conferences to a similar degree. Their observations do agree with those of this study in that only a small portion of Latvian research was published in journals. The discrepancies may be explained by the source of the data as Grančay et al. (2017) used Web of Science, while this study used Scopus.

Grančay et al. (2017) found that Lithuania had the largest growth in papers in indexed journals and Latvia the smallest. However, Lithuanian research was mostly published in local indexed journals, while most Estonian research was published internationally. Latvian and Estonian researchers also published their work in Lithuanian journals.

The results confirm the expected connection between population size and research output. However, a sizable population and a high GDP may not be enough to generate critical mass. Research output may also depend on how the research is organized. One may ponder whether Norway’s weak presence at the top-tier conference is a result of the incentive system or of inefficient research organization. However, Norway had a strong journal article profile compared with its neighbors, so research organization is probably not the key cause. Imbalances in the rewards for conference papers and journal articles may be a more likely explanation for why Norwegian HCI researchers seem to have prioritized journals over top-tier conferences.

HCI research activity may be viewed as a prerequisite for providing high-quality HCI education. The argument could also be reversed: an intensified effort on HCI education, especially at the graduate and postgraduate levels, may ultimately result in more publications, as most PhD students and some master's students publish their work. Pachoarelli et al. (2015) noted that the publication output within graphic design in Brazil increased with the introduction of more graduate programs in design. Perhaps HCI research output would increase if HCI education were intensified in the Baltic countries?

HCI research longevity (RQ3)

Results

To gain insight into Nordic-Baltic HCI research maturity (RQ3), research productivity was plotted over time (see Figs. 4, 5 and 6). Denmark has the longest HCI research history, with a CHI paper from 1983 entitled “Design practice and interface usability: Evidence from interviews with designers” by Anker Helms Jørgensen, co-authored with researchers from Cambridge, UK. The first CHI paper from Finland appeared in 1986, while the first CHI papers from Sweden and Norway emerged in 1990. CHI papers from Iceland appeared from 2004 onwards. Estonia did not become active at CHI until 2010. The data also show that the activities of Denmark, Sweden, and Finland did not intensify until 2006.

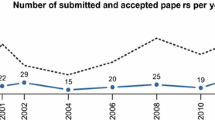

NordiCHI data are limited, as the conference is held biennially and has a shorter history than CHI. Still, the research activities in the respective countries seem stable. Activity peaked in the years when a country hosted the conference, except for Denmark and Iceland: Finland in 2004 and 2014, Sweden in 2008 and 2016, and Norway in 2006 and 2018. Figure 5 also shows the recent emergence of Estonia on the NordiCHI scene in 2014, while Lithuania had one paper in 2004.
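Whether a country's activity peaked in its hosting year can be checked mechanically. The sketch below is hypothetical: it treats a host year as a peak when the country's paper count is strictly higher than in the neighbouring editions, which for a biennial conference are two years earlier and two years later. The paper counts are invented for illustration.

```python
def is_host_peak(series: dict[int, int], host_year: int, step: int = 2) -> bool:
    """True if the count in `host_year` exceeds the counts of the adjacent editions.

    `series` maps conference year -> number of papers from one country.
    `step` is the interval between editions (2 for a biennial conference).
    Editions missing from `series` (e.g. before the first edition) are skipped,
    so years with no papers should be stored as 0 rather than omitted.
    """
    if host_year not in series:
        return False
    neighbours = [series[y] for y in (host_year - step, host_year + step) if y in series]
    return all(series[host_year] > n for n in neighbours)


# Invented counts for a hypothetical country that hosted the conference in 2006.
series = {2002: 3, 2004: 4, 2006: 12, 2008: 5, 2010: 4}
print(is_host_peak(series, 2006))  # True
print(is_host_peak(series, 2008))  # False
```

A peak found this way shows co-occurrence only; it does not by itself establish that hosting caused the increase.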

The HCI International timeline shows that there were no papers from the Nordic-Baltic region before 2007, even though this conference goes back to 1984 and was first branded as HCI International in 1987. There are no obvious trends between 2007 and 2019, apart from 2017 being a generally inactive year for all the Nordic-Baltic countries.

Discussion

The answer to RQ3 is that HCI research longevity in the Nordic-Baltic region differs by several decades, and that HCI research activities in the Nordic countries preceded those in the Baltic countries. The longevity of Denmark, Sweden, and Finland is consistent with their dominance at the top-tier. Norway deviates from this pattern: although its longevity in HCI research suggests maturity, this maturity is not echoed in its research output or in the proportion of recurring authors at the top-tier.

Estonia and Lithuania gained independence from the Soviet Union in the early 1990s, and this may be why the first Lithuanian paper emerged at NordiCHI a decade later, in 2002. The activity in Estonia emerged two decades after independence. Zavadskas et al. (2011) gave a convincing explanation for why Latvia, Lithuania, and Estonia had few publications within certain disciplines after gaining independence. The Soviet Union did not support certain fields of study, including civil engineering and environmental protection, and researchers working within these fields were not allowed to attend conferences on the other side of the “Iron Curtain”. During the Soviet era, publications from the entire Soviet region were all affiliated with the Soviet Union. After gaining independence in 1991, the three Baltic countries chose different strategic paths, which have resulted in three different research profiles. The results presented by Zavadskas et al. (2011), based on work published between 1990 and 2009, exhibited trends similar to those observed herein: research productivity had grown by a factor of 8 in Lithuania and by a factor of 5.5 in Estonia, but only by a factor of 1.9 in Latvia. The authors attributed Lithuania’s growth in research productivity to the indexing of several local journals in Web of Science.

Iceland has had a consistent yet small representation throughout the history of NordiCHI. A closer look at these contributions reveals that the papers were written by a handful of authors, with one author being particularly active. An environment with few researchers is likely to exhibit large variations in research output over time, and small research groups are also more vulnerable.

The observed peaks in national productivity in the years when a country hosted NordiCHI suggest that hosting the conference stimulated national HCI research activity. This is an argument for using conference hosting as a means of stimulating research attention and activity.

The Scopus queries used did not return any HCI International paper prior to 2007. This is probably because the proceedings were published by Elsevier until 1997, and then by Lawrence Erlbaum Associates Inc. from 1999 to 2005, and these earlier volumes may not be indexed in Scopus.

Collaboration (RQ4)

Several studies show that research quality and impact are connected to collaboration (Bu, Ding, et al., 2018; Bu, Murray, et al., 2018; Wuchty et al., 2007). It is therefore relevant to inspect how, and with whom, Nordic-Baltic HCI researchers collaborate. Although international collaboration can take many forms, collaboration is, for pragmatic reasons, observed here in terms of co-authored papers.

Results

To answer RQ4, Figs. 7, 8, and 9 summarize the number of papers co-authored by researchers from several Nordic-Baltic countries at CHI, NordiCHI, and HCI International. Figure 7 shows a moderate intensity of Nordic-Baltic collaboration on top-tier papers (5.5%), limited to Sweden, Finland, Denmark, Norway, and Iceland. There were few regional-tier collaborations in absolute terms, but since there are also fewer regional-tier papers, this tier had the highest relative collaboration intensity (9.0%). The entry-tier had the lowest share of papers with co-authors from several Nordic-Baltic countries (2.3%).
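A relative collaboration intensity of this kind can be sketched as a simple share. The sketch assumes, hypothetically, that each paper is represented by the list of its authors' affiliation countries, and that the denominator is every paper with at least one Nordic-Baltic author; the exact denominator used for the percentages above is not stated in the text.

```python
NORDIC_BALTIC = {
    "Denmark", "Estonia", "Finland", "Iceland",
    "Latvia", "Lithuania", "Norway", "Sweden",
}


def nordic_baltic_intensity(papers: list[list[str]]) -> float:
    """Share of papers co-authored across at least two Nordic-Baltic countries.

    Each paper is a list of author affiliation countries. The denominator is
    every paper with at least one Nordic-Baltic author (an assumption).
    """
    nb_sets = [set(countries) & NORDIC_BALTIC for countries in papers]
    nb_sets = [s for s in nb_sets if s]  # keep papers with a Nordic-Baltic author
    if not nb_sets:
        return 0.0
    return sum(len(s) >= 2 for s in nb_sets) / len(nb_sets)


# Hypothetical affiliation lists for four papers.
papers = [
    ["Sweden", "Sweden"],   # one country only
    ["Sweden", "Finland"],  # Nordic-Baltic collaboration
    ["Denmark", "USA"],     # global, but only one Nordic-Baltic country
    ["Norway"],             # single author
]
print(nordic_baltic_intensity(papers))  # 0.25
```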

There were more collaborations with Iceland at the regional-tier (links to both Sweden and Denmark). Estonia collaborated with Norway at the entry-tier, while Denmark only co-authored papers with Sweden at this tier. There were no collaborations within the Baltic region, and no collaborations between Latvia, Lithuania, and the Nordic countries.

Figures 10 and 11 show the timelines of the most active collaborations; only collaborations with 10 or more co-authored papers were plotted. Figure 10 shows that Nordic collaborations did not really intensify until 2000, although Norway and Denmark had a co-authored paper as early as 1990, and Norway and Sweden had one in 1999. Collaborations between Finland and Denmark, and between Denmark and Sweden, seem to have increased during the last five years, while Sweden-Finland collaboration peaked in 2011.
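The per-pair timelines and the 10-paper inclusion threshold can be sketched as follows. The input format, (year, list of author affiliation countries) per paper, and the function name are hypothetical; the sketch counts a country pair once per paper, regardless of how many authors each country contributes.

```python
from collections import Counter, defaultdict
from itertools import combinations

NORDIC_BALTIC = {
    "Denmark", "Estonia", "Finland", "Iceland",
    "Latvia", "Lithuania", "Norway", "Sweden",
}


def collaboration_timelines(
    papers: list[tuple[int, list[str]]], min_papers: int = 10
) -> dict[tuple[str, str], Counter]:
    """Per-year paper counts for each Nordic-Baltic country pair.

    Pairs with fewer than `min_papers` co-authored papers in total are dropped,
    mirroring the plotting threshold described above.
    """
    timelines: dict[tuple[str, str], Counter] = defaultdict(Counter)
    for year, countries in papers:
        nb = sorted(set(countries) & NORDIC_BALTIC)  # sorted -> canonical pair order
        for pair in combinations(nb, 2):
            timelines[pair][year] += 1
    return {
        pair: years
        for pair, years in timelines.items()
        if sum(years.values()) >= min_papers
    }


# Hypothetical data: 12 Finland-Sweden papers over 2010-2012, 3 Denmark-Sweden papers.
papers = [(2010 + i % 3, ["Sweden", "Finland"]) for i in range(12)]
papers += [(2015, ["Denmark", "Sweden", "Sweden"])] * 3
result = collaboration_timelines(papers)
print(list(result))                         # [('Finland', 'Sweden')]
print(dict(result[("Finland", "Sweden")]))  # {2010: 4, 2011: 4, 2012: 4}
```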

The regional-tier timelines show that Denmark and Sweden have maintained a relatively low yet persistent level of collaboration over time, while there has been a recent increase in collaborations between Finland and Sweden. Collaborations at the entry-tier were all below the inclusion criterion of 10 papers and were therefore not plotted.

To explore the collaboration patterns further, the ratio of single-authored papers was computed for the three conference venues (see Fig. 12), as single-authored papers represent the absence of collaboration. Generally, the percentage of single-authored papers was lowest at the top-tier, higher at the regional-tier, and highest at the entry-tier conference. Lithuania is an anomaly, as it is associated with just one CHI paper. The differences in the ratios of single-authored papers across the countries are smaller at the top-tier and larger at the entry-tier.

Figure 13 shows that the most active countries had just over four authors per top-tier paper and just over three authors per regional-tier and entry-tier paper. The differences across the countries were small. Top-tier papers from Norway had the highest mean number of authors, followed by Estonia, while Iceland had the highest mean number of authors on regional-tier papers.
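Both indicators, the single-authored ratio and the mean number of authors per paper, reduce to simple arithmetic over the author counts of a set of papers. The following sketch uses hypothetical helper names and invented author counts for one country at two tiers.

```python
def single_author_ratio(author_counts: list[int]) -> float:
    """Fraction of papers with exactly one author (no collaboration)."""
    return sum(1 for n in author_counts if n == 1) / len(author_counts)


def mean_authors(author_counts: list[int]) -> float:
    """Mean number of authors per paper."""
    return sum(author_counts) / len(author_counts)


# Invented number of authors per paper for one country at two tiers.
tiers = {
    "top-tier": [4, 5, 3, 4],
    "entry-tier": [1, 3, 2, 1],
}
for tier, counts in tiers.items():
    print(tier, single_author_ratio(counts), mean_authors(counts))
# top-tier 0.0 4.0
# entry-tier 0.5 1.75
```

For a country with only one paper at a venue (such as Lithuania at CHI), both indicators simply describe that single paper, which is why such values are best treated as anomalies.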

There was a high degree of collaboration on Nordic-Baltic research papers, although the degree of Nordic-Baltic collaboration was low. It is therefore relevant to examine the nature of these collaborations in more detail. Figure 14 shows the percentage of papers with intra-institutional collaborations (HEI), national collaborations, Nordic-Baltic collaborations, and collaborations with countries outside the Nordic-Baltic region (global).
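One way to derive the four categories from author affiliations is sketched below. The classification rules are an assumption, not necessarily the exact procedure behind Fig. 14. Seen from a focal country, a paper is labeled HEI if two of that country's authors share an institution, national if that country's authors come from different institutions, Nordic-Baltic if any co-author is from another Nordic-Baltic country, and global if any co-author is from outside the region. A paper can carry several labels, so the percentages need not sum to 100. The `Author` type and the institution codes are hypothetical.

```python
from dataclasses import dataclass

NORDIC_BALTIC = {
    "Denmark", "Estonia", "Finland", "Iceland",
    "Latvia", "Lithuania", "Norway", "Sweden",
}
TYPES = ("HEI", "national", "Nordic-Baltic", "global")


@dataclass(frozen=True)
class Author:
    institution: str
    country: str


def collaboration_types(authors: list[Author], focal: str) -> set[str]:
    """Collaboration types present on one paper, as seen from country `focal`."""
    home = [a.institution for a in authors if a.country == focal]
    types: set[str] = set()
    if len(home) != len(set(home)):  # two focal-country authors share an institution
        types.add("HEI")
    if len(set(home)) > 1:           # focal-country authors from different institutions
        types.add("national")
    others = {a.country for a in authors if a.country != focal}
    if others & NORDIC_BALTIC:
        types.add("Nordic-Baltic")
    if others - NORDIC_BALTIC:
        types.add("global")
    return types


def type_percentages(papers: list[list[Author]], focal: str) -> dict[str, float]:
    """Percentage of the focal country's papers exhibiting each collaboration type."""
    own = [p for p in papers if any(a.country == focal for a in p)]
    labels = [collaboration_types(p, focal) for p in own]
    return {t: 100 * sum(t in s for s in labels) / len(labels) for t in TYPES}


papers = [
    [Author("U1", "Finland"), Author("U1", "Finland")],                          # HEI
    [Author("U1", "Finland"), Author("U9", "USA")],                              # global
    [Author("U1", "Finland"), Author("U2", "Finland"), Author("U3", "Sweden")],  # national, Nordic-Baltic
    [Author("U1", "Finland")],                                                   # single author
]
print(type_percentages(papers, "Finland"))
# {'HEI': 25.0, 'national': 25.0, 'Nordic-Baltic': 25.0, 'global': 25.0}
```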

Collaborations on top-tier papers were dominated by countries outside the Nordic-Baltic region (global). This was followed by collaborations within the same institution. In contrast, national and Nordic-Baltic collaborations were comparatively less common.

At the regional-tier, global collaborations were generally much less common and institutional collaborations much more common, followed by national and Nordic-Baltic collaborations. A similar pattern can be observed for the entry-tier conference, which generally exhibited a lower presence of national, Nordic-Baltic, and global collaborations. Iceland was an exception, as nearly half of its regional-tier papers were co-authored with Nordic collaborators.

Tables 2, 3 and 4 show the five most frequent collaborating countries of each Nordic-Baltic country. The US and the UK are clearly the two most common collaborators on top-tier papers, with Nordic-Baltic collaborators further down the lists (typically in 4th and 5th place).
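A top-five list of this kind can be produced with `collections.Counter`. In the sketch below, which uses hypothetical data and a hypothetical function name, each paper is a list of author affiliation countries, and each partner country is counted at most once per paper.

```python
from collections import Counter


def top_collaborators(papers: list[list[str]], focal: str, k: int = 5) -> list[tuple[str, int]]:
    """The k partner countries that co-authored the most papers with `focal`."""
    counts: Counter = Counter()
    for countries in papers:
        if focal in countries:
            counts.update(set(countries) - {focal})  # each partner once per paper
    return counts.most_common(k)


papers = [
    ["Denmark", "USA"],
    ["Denmark", "USA", "UK"],
    ["Denmark", "UK"],
    ["Denmark", "USA", "USA"],
    ["Denmark", "Sweden"],
    ["Finland", "USA"],  # ignored: no Danish author
]
print(top_collaborators(papers, "Denmark"))
# [('USA', 3), ('UK', 2), ('Sweden', 1)]
```

`most_common` keeps tied countries in the order they were first counted, and that order follows set iteration, which is not reproducible across runs. A real analysis would therefore need an explicit tie-break, for example sorting ties alphabetically.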

The lists of the top five collaborators at the regional-tier contain more Nordic countries, and these generally appear with a higher rank than at the top-tier. The UK appears more frequently than the US. Entry-tier collaborators were geographically more spread out. One common trait was the appearance of the UK and the US as frequent collaborators.

Discussion

The answer to RQ4 is that there has been a moderate level of collaboration within the region. The level of collaboration seems related to the level of activity, as collaboration intensity is higher between Finland, Sweden, and Denmark, and to some extent Norway. If a country yields few publications, it follows that the number of co-authored papers is low.

Given the common historic roots of Norway and Iceland, one may have expected a higher degree of collaboration than was observed. It is probably not practical for the few HCI researchers in Iceland to collaborate broadly, as they must narrow their focus and prioritize their efforts strategically.

It is also interesting to note that the largest share of regional collaborations was observed at the regional-tier (9.0%) and the smallest at the entry-tier (2.3%). Perhaps collaborations help provide the quality needed to have papers accepted at the top-tier (5.5%), while such collaborations are less of a necessity at the entry-tier? Indeed, this pattern is consistent with findings in the literature that diversity of collaborations increases quality (Bu, Ding, et al., 2018; Bu, Murray, et al., 2018; Uzzi et al., 2013; Xu et al., 2015). Collaborators may come from different academic fields and have different degrees of experience. The single-authored paper ratios also agree with this, in that there are fewer single-authored papers at the top-tier than at the entry-tier. Similarly, top-tier papers have more co-authors than entry-tier papers. In other words, the results and the literature appear to support a recommendation that HCI researchers collaborate more to succeed at the top-tier.

At the top-tier, Norway and Estonia exhibited the highest mean numbers of authors, yet both had a relatively low research output at the top-tier. Is it possible that these top-tier papers were accepted because of the collaboration? Do these results indicate that less mature HCI research environments need to rely more on collaboration to compete at the top-tier than more mature research environments do?

Temporal collaboration patterns indicate a recent increase in Nordic-Baltic collaboration especially between Sweden, Denmark, and Finland. If this trend continues, we are likely to experience more regional collaborations between these countries in the years to come. However, no such trend could be observed for the other countries.

A key question is whether regional collaborations are perceived as useful. Perhaps other collaboration constellations provide more benefit? There was a higher ratio of Nordic-Baltic collaborations at the regional-tier than at the two other tiers. Iceland stood out as more active in regional collaboration at the regional-tier, and it also exhibited the highest normalized research output at this tier. Perhaps one explanation is that Iceland is the smallest and most geographically isolated country in the region and therefore used the regional-tier more actively? There were also more Nordic countries on the top-5 lists of regional-tier collaborators than on those of the two other tiers. The low intensity of collaboration between Nordic and Baltic countries and the absence of collaboration among the Baltic countries indicate that there are indeed opportunities for improvement and for regional sharing of experiences and competences, especially in addressing relevant challenges in a regional context. Another perspective is that the success of Nordic-Baltic HCI research may not hinge on collaboration across the region if HCI researchers find collaborators elsewhere.

Top-tier papers were dominated by global collaboration, while entry-tier papers were characterized by intra-institutional collaboration. Perhaps successful competition at the top-tier requires researchers to collaborate with leading researchers outside the region? The US and the UK were generally the most frequent collaborators, and these are also the most active countries at the top-tier (Bartneck & Hu, 2009). It may be a fruitful strategy to seek collaboration with researchers from countries with mature HCI research environments, such as the US and the UK, to draw on their experiences and practices. The observed preference for collaborating outside rather than within the Nordic-Baltic region agrees with previous studies of research collaboration in the Nordic (Persson et al., 1997) and Baltic (Lovakov & Agadullina, 2019) regions. Persson et al. (1997) concluded that international collaboration is highly prevalent among Nordic researchers, but only 10% of these international collaborations occurred within the Nordic region, although this varied across scientific fields. Lovakov and Agadullina (2019) showed that there was a moderate level of collaboration between the Baltic countries. However, among the post-Soviet countries, the collaborations between Estonia and Russia, and between Lithuania and Russia, were the most intense.

The findings by Grančay et al. (2017), based on a broad set of academic fields, agree with the results reported herein in that Estonia demonstrated the highest ratio of authors per paper. Moreover, their observations of international collaboration match the current results: Estonia had the largest percentage of international collaborators (49.1%), followed by Latvia (28.2%) and Lithuania (24.1%). As in the current study, they found that the US and the UK were the most frequent collaborators. Latvia collaborated frequently with Sweden and Lithuania, Estonia with Germany and Finland, and Lithuania with Iran and Belgium. The observations of Grančay et al. (2017) differ from the current observations in terms of single authorship patterns: Estonia and Latvia exhibited the highest ratio of single-authored papers (29%), while only 16.3% of Lithuanian papers had just one author. These discrepancies are probably due to the difference in thematic scope (broad versus HCI-focused). The broad scope will also have yielded a larger dataset.

The dominance of intra-institutional collaborations at the entry-tier may be explained by supervisors publishing master's and PhD work together with their students. It must also be noted that simply observing co-authored papers with authors from different countries only reveals one dimension of international collaboration. Valuable collaboration can take place even if the activities do not result in co-authored papers; for example, the mobility of scholars can be a valuable mechanism for sharing practices and learning.

Citation impact (RQ5)

Results

To answer RQ5, concerning the citation impact of the HCI research published by the eight countries in the three conference tiers and in journals, the mean number of citations per paper was plotted in Fig. 15. Journal articles were cited most frequently, with a mean of 28.0 citations per paper. They were followed by top-tier conference papers (19.8 citations per paper) and regional-tier conference papers (10.3 citations per paper). Entry-tier conference papers had a mean of 3.7 citations per paper.
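
The per-tier figures above are simple means: citation counts grouped by venue type and averaged. The following minimal sketch illustrates the computation. It uses invented toy records rather than the study's Scopus data, and it assumes a hypothetical `(tier, citations)` record layout.

```python
from collections import defaultdict
from statistics import mean

# Invented toy records (venue tier, citation count); not the study's data.
papers = [
    ("journal", 40), ("journal", 16),
    ("top", 30), ("top", 9),
    ("regional", 12), ("regional", 8),
    ("entry", 5), ("entry", 2),
]


def mean_citations_by_tier(records):
    """Return {tier: mean citations per paper} for (tier, citations) records."""
    by_tier = defaultdict(list)
    for tier, citations in records:
        by_tier[tier].append(citations)
    return {tier: mean(counts) for tier, counts in by_tier.items()}


means = mean_citations_by_tier(papers)
ordered = sorted(means, key=means.get, reverse=True)  # highest mean first
for tier in ordered:
    print(f"{tier:>8}: {means[tier]:.1f}")
```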

There were small differences in mean citation counts across countries. Lithuania tops the list for CHI papers simply because there was only one Lithuanian CHI paper, which had 38 citations. Among the most productive countries, Denmark appears to have achieved the highest citation impact, followed by Sweden and Finland. The exception was the entry-tier conference, where Denmark was in fourth place, behind Norway. Papers from Sweden accumulated the highest mean number of citations at the entry-tier.
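
A mean computed from a single paper can top a ranking, as the Lithuanian CHI result shows. One common safeguard is to require a minimum number of papers before a country is ranked. The sketch below is hypothetical throughout. The country labels A to C and their citation counts are invented, and the `min_papers` threshold is an assumption, not a rule applied in this study.

```python
from statistics import mean

# Invented citation counts per country for one venue.
cites = {
    "A": [38],                  # a single paper, as with the one Lithuanian CHI paper
    "B": [50, 30, 20, 10, 5],
    "C": [25, 15, 10],
}


def rank_by_mean(cites_by_country, min_papers=1):
    """Rank countries by mean citations, skipping those with fewer than min_papers papers."""
    eligible = {c: xs for c, xs in cites_by_country.items() if len(xs) >= min_papers}
    return sorted(eligible, key=lambda c: mean(eligible[c]), reverse=True)


print(rank_by_mean(cites))                # ['A', 'B', 'C']
print(rank_by_mean(cites, min_papers=3))  # ['B', 'C']
```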

Discussion

The answer to RQ5 is that research quality, insofar as citation counts reflect it, does not seem to vary much across the Nordic-Baltic countries, since differences in mean citation counts are relatively small. The differences that do exist may partially be explained by the longevity of research activities and the quantity of research. Older papers are more likely to have accumulated more citations than recently published papers. Consequently, countries with a short history, such as the Baltic countries, may not have the same portfolio of highly cited papers. The observed citation patterns for the Baltic countries agree with the general findings of Grančay et al. (2017). Despite small variations, they also found that Lithuania had the highest number of citations per paper (7.0), followed by Estonia (5.8) and Latvia (4.6). The observations also point in a similar direction to results for social science reported by Teodorescu and Andrei (2014), where Lithuania had a higher sum of journal impact factors for its local journals than Estonia and Latvia. They explained that this was because Lithuania had more journals indexed in the Journal Citation Reports/Social Sciences Edition (JCR/SSE) than Latvia and Estonia. Although the impact factors had increased, Teodorescu and Andrei (2014) found that the article influence score had decreased (the article influence score takes the impact of the citing source into consideration). Observations for psychology give a different picture. Results by Lovakov and Agadullina (2019) suggest that Estonia was the post-Soviet country with the highest overall impact on several indicators, although Latvia exhibited the highest mean number of citations per article. As HCI is a comparatively young field, it is possible that HCI research falls outside the scope of these local Baltic journals.
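
Older papers have had more time to accumulate citations. One simple way to partially offset publication age is to divide citations by the number of years since publication. This normalization was not applied in the study. The sketch below assumes an arbitrary census year of 2020 and uses invented counts. More refined field- and year-normalized indicators exist.

```python
def citations_per_year(citations: int, pub_year: int, census_year: int) -> float:
    """Citations divided by years since publication, counting the publication year as year 1."""
    age = census_year - pub_year + 1
    if age < 1:
        raise ValueError("census_year must not precede pub_year")
    return citations / age


# Invented example: an older paper versus a recent one, both counted in 2020.
old = citations_per_year(40, 2001, 2020)  # 40 citations over 20 years
new = citations_per_year(10, 2016, 2020)  # 10 citations over 5 years
print(old, new)
```

In this toy case, the raw counts differ by a factor of four, yet both papers attract citations at the same yearly rate.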

Highly cited papers are more likely to emerge from a large set of papers than from a small one. The high mean citation counts observed for Sweden, Denmark, and Finland are therefore probably also related to their high publication activity.
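
The first sentence above can be made concrete under a simplifying assumption. Suppose each paper independently has probability p of becoming highly cited. Then the chance that at least one of n papers does so is 1 - (1 - p)^n, which grows quickly with n. Both the independence assumption and the value p = 0.01 below are illustrative only.

```python
def prob_at_least_one_highly_cited(n_papers: int, p: float) -> float:
    """P(at least one of n papers is highly cited), assuming each paper
    independently becomes highly cited with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return 1.0 - (1.0 - p) ** n_papers


for n in (10, 100, 1000):
    print(n, round(prob_at_least_one_highly_cited(n, 0.01), 3))
```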

It is not surprising that journal articles were cited more when viewed in the context of traditional computer science. There, journal articles have typically been considered archival and conference papers work-in-progress. In the context of HCI, however, it is somewhat surprising that journal articles were cited more, as HCI conferences seem to receive more attention than journals. Moreover, HCI research from all the Baltic countries was cited, and citations to research from Lithuania were on par with those to research from Sweden, Denmark, and Finland.

The observations match the perception that, among conferences, top-tier papers are cited the most and entry-tier papers the least. One may argue that these results validate the methodological classification of the three conferences into top, regional, and entry-tiers. If the goal is to accumulate citations, HCI researchers should aim to publish their work in top-tier conferences or journals. However, a citation count is an indication of scholarly interest; it is not the ultimate measure of societal impact. Citation context is also important. For example, a negative citation (that is, a citation to work under criticism) still counts positively. It is also relevant to consider the scientific status of the citing source: whether it is highly cited or has no citations, whether it is rigorously peer-reviewed, and whether it represents mature work or work-in-progress. Furthermore, individual citation counts may deviate greatly from the mean. Although top-tier papers have a higher mean citation count than entry-tier papers, some entry-tier papers have higher citation counts than top-tier papers (see Table 5). In fact, 179 of the 1159 CHI papers were never cited, while 370 of the 526 HCI International papers had at least one citation (one HCI International paper was even cited 167 times).
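
The two counts above can be placed on the same scale by converting each into the percentage of papers with at least one citation. The sketch below performs only this arithmetic on the numbers reported in the text. The helper name is hypothetical.

```python
def share_cited(n_total: int, n_cited: int) -> float:
    """Percentage of papers with at least one citation."""
    return 100.0 * n_cited / n_total


chi = share_cited(1159, 1159 - 179)  # 179 of 1159 CHI papers were never cited
hcii = share_cited(526, 370)         # 370 of 526 HCI International papers were cited
print(f"CHI: {chi:.1f}%  HCI International: {hcii:.1f}%")  # CHI: 84.6%  HCI International: 70.3%
```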

It seems to be a common view that researchers should aim to publish their work in high-quality channels (see, for instance, the advice given by Grančay et al., 2017). Nevertheless, one may argue that the availability of different tiers of conferences is beneficial. Inclusive venues such as HCI International may give researchers a platform to present, and get feedback on, unconventional and radical ideas. Competitive top-tier venues such as CHI are also valuable, as they help researchers stretch and aspire to higher quality and larger research projects. It is therefore worth monitoring each country's active participation in these venues. There is likely a connection between the number of publications in top-tier venues and the reputation of a country's HCI research as expressed through ranking lists, since high reputation is a consequence of quality research. One may also ponder whether high publication activity at the entry-tier is a predictor of future presence at the top-tier. Yet it is a matter of concern if researchers only disseminate their work through entry-tier venues. Grančay et al. (2017) recommend limiting the number of times a publication venue may be used to receive a reward; perhaps such mechanisms could help stimulate diversity and quality. It should be noted that the San Francisco Declaration on Research Assessment (DORA) signals that a paper should be assessed on its contents rather than on where it is published.

Limitations

This study represents a snapshot of the situation on the days the searches were carried out. However, the results are expected to be stable, as they represent publication outputs accumulated over many years. Note that observations were based on the country of affiliation and not the type of institution. Hence, the analyses do not discriminate between research conducted at higher education institutions and research carried out at non-educational research institutions and companies.

The Scopus data used in this study were incomplete, as conference proceedings from certain years were missing. Another source of irregularity is conference frequency (annual, biennial, or both), as some conferences have changed from biennial to annual. Moreover, the conferences have different lifetimes. CHI has a long tradition. NordiCHI is held biennially and has a much shorter history, while HCI International was a biennial conference until 2013 and has been an annual event since then. Conference policies may also have been an influencing factor. For example, the introduction of extended abstracts at CHI in 1992 led to a large increase in publications (Bartneck & Hu, 2009). Similarly, the proceedings reveal that CHI started to accept more papers in 2006, resulting in substantial growth (Liu et al., 2014). The increase in work-in-progress slots may have made active conference participation more accessible, thereby resulting in a more diverse country profile.

Database coverage is a challenge, and the empirical evidence provided herein is by no means complete. Although much of the influential HCI research is covered by the sample of conferences and journals, important HCI research papers are also published elsewhere. Examples of other highly relevant conferences include UIST, INTERACT, DIS, and IUI. Some researchers may choose to publish interface implementation-oriented contributions without user tests in IEEE-sponsored conferences whose proceedings appear in the IEEE Xplore digital library. HCI and HCI-adjacent research is also multidisciplinary in nature, and work may therefore appear in other venues used by the respective disciplines.

Conclusions

A bibliometric study of HCI research activity in the Nordic-Baltic countries was reported. The results confirm unbalanced participation in the HCI research communities among the Nordic-Baltic countries, even when adjusting for variations in GDP and population size. Sweden, Denmark, and Finland dominated HCI research with the largest number of published papers, while only a small number of papers were published by authors from Latvia. Sweden, Denmark, Finland, and Norway were the only countries to publish papers consistently at all tiers. Iceland was also consistently active at the regional tier, while Estonia and Lithuania have been consistently present at the entry-tier. Locally hosted conferences were associated with local increases in research activity. Compared to the Nordic countries, the Baltic countries have a much shorter history as independent nations; this is also reflected in the analysis of HCI research longevity. Although Norway and Sweden had similar HCI research longevity, Sweden appears to have reached a more mature research state, matching those of Denmark and Finland.

The Nordic-Baltic HCI papers typically had 4–5 authors, but these co-authors were rarely from other Nordic-Baltic countries or from other institutions in the same country. Collaboration with researchers at the same institution and with researchers outside the Nordic-Baltic region was common, with the US and the UK as frequent partners. Moreover, collaboration within the same institution was most common at the entry-tier, while global collaboration outside the Nordic-Baltic region was more common at the top-tier. These observations are consistent with the view that collaboration helps researchers succeed in publishing at the top-tier.

Citation impact results were consistent with the perceived status of the conference tiers. Top-tier conference papers were cited more frequently than regional-tier papers, and entry-tier papers were cited the least. Journal articles accumulated the most citations. Citation counts did not differ much across countries.

The implication of these results is that HCI research activity should be increased in several of the Nordic-Baltic countries. Countries with low research output could host conferences locally to stimulate research activity. At the institutional level, first-time authors may be encouraged to collaborate with more experienced researchers within or outside their own institution. Furthermore, it may be more effective to publish in journals than in top-tier conferences if the goal is to accumulate citations. It is hoped that the results presented herein will stimulate fruitful debates about the quality and extent of HCI research and research collaborations within the Nordic-Baltic region.

References

Abramo, G., Aksnes, D. W., & D’Angelo, C. A. (2020). Comparison of research performance of Italian and Norwegian professors and universities. Journal of Informetrics, 14(2), 101023. https://doi.org/10.1016/j.joi.2020.101023

Agarwal, S., Mittal, N., & Sureka, A. (2016). A glance at seven ACM SIGWEB series of conferences. ACM SIGWEB Newsletter. https://doi.org/10.1145/2956573.2956578

Altbach, P. G., & Pacheco, I. F. (Eds.). (2012). Paying the professoriate: A global comparison of compensation and contracts. Routledge.

Aminer. (n.d.). 2016 most influential scholars. Retrieved March 22, 2020, from https://www.aminer.cn/mostinfluentialscholar/hci

Barbosa, S. D. J., Silveira, M. S., & Gasparini, I. (2017). What publications metadata tell us about the evolution of a scientific community: The case of the Brazilian human–computer interaction conference series. Scientometrics, 110(1), 275–300. https://doi.org/10.1007/s11192-016-2162-4

Barkhuus, L., & Rode, J. A. (2007). From mice to men: 24 years of evaluation in CHI. In Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/1240624.2180963

Bartneck, C. (2011). The end of the beginning: A reflection on the first five years of the HRI conference. Scientometrics, 86(2), 487–504. https://doi.org/10.1007/s11192-010-0281-x

Bartneck, C., & Hu, J. (2009). Scientometric analysis of the CHI proceedings. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 699–708). https://doi.org/10.1145/1518701.1518810

Bartneck, C., & Hu, J. (2010). The fruits of collaboration in a multidisciplinary field. Scientometrics, 85(1), 41–52. https://doi.org/10.1007/s11192-010-0242-4

Bernstein, M., Cosley, D., DiSalvo, C., Kairam, S., Karger, D., Kriplean, T., Lampe, C., Mackay, W., Terveen, L., Wobbrock, J., & Yardi, S. (2012). Reject me: Peer review and SIGCHI. In CHI '12 extended abstracts on human factors in computing systems (pp. 1197–1200). https://doi.org/10.1145/2212776.2212422

Billon, M., Lera-Lopez, F., & Marco, R. (2010). Differences in digitalization levels: A multivariate analysis studying the global digital divide. Review of World Economics, 146(1), 39–73. https://doi.org/10.1007/s10290-009-0045-y

Björklund, F. (2016). E-government and moral citizenship: The case of Estonia. Citizenship Studies, 20(6–7), 914–931. https://doi.org/10.1080/13621025.2016.1213222

Broadus, R. N. (1987). Toward a definition of “bibliometrics.” Scientometrics, 12(5–6), 373–379. https://doi.org/10.1007/BF02016680

Bu, Y., Ding, Y., Xu, J., Liang, X., Gao, G., & Zhao, Y. (2018a). Understanding success through the diversity of collaborators and the milestone of career. Journal of the Association for Information Science and Technology, 69(1), 87–97. https://doi.org/10.1002/asi.23911

Bu, Y., Murray, D. S., Ding, Y., Huang, Y., & Zhao, Y. (2018b). Measuring the stability of scientific collaboration. Scientometrics, 114(2), 463–479. https://doi.org/10.1007/s11192-017-2599-0

Cho, Y., Park, H. J., & Lim, H. (2020). Research in user-centered design 2009 to 2018: A systematic keyword network analysis. In International conference on human-computer interaction (pp. 11–18). Cham: Springer. https://doi.org/10.1007/978-3-030-50726-8_2