Abstract

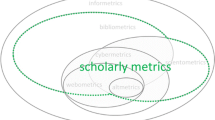

Altmetrics is an emergent research area whereby social media is applied as a source of metrics to assess scholarly impact. In the last few years, interest in altmetrics has grown, giving rise to many questions regarding their potential benefits and challenges. This paper aims to address some of these questions. First, we provide an overview of the altmetrics landscape, comparing tool features, social media data sources, and social media events provided by altmetric aggregators. Second, we conduct a systematic review of the altmetrics literature. A total of 172 articles were analysed, revealing a steady rise in altmetrics research since 2011. Third, we analyse the results of over 80 studies from the altmetrics literature on two major research topics: cross-metric validation and coverage of altmetrics. An aggregated percentage coverage across studies on 11 data sources shows that Mendeley has the highest coverage of about 59% across 15 studies. A meta-analysis across more than 40 cross-metric validation studies shows an overall weak correlation (ranging from 0.08 to 0.5) between altmetrics and citation counts, confirming that altmetrics do indeed measure a different kind of research impact, thus acting as a complement rather than a substitute for traditional metrics. Finally, we highlight open challenges and issues facing altmetrics and discuss future research areas.

Notes

http://altmetrics.org/about/. Accessed 18 Feb 2016.

http://www.niso.org/topics/tl/altmetrics_initiative. Accessed 18 Feb 2016.

Mendeley is an online reference manager for scholarly publications.

http://www.altmetric.com. Accessed 18 Feb 2016.

https://impactstory.org. Accessed 18 Feb 2016.

http://www.plumanalytics.com. Accessed 18 Feb 2016.

http://article-level-metrics.plos.org. Accessed 18 Feb 2016.

https://www.growkudos.com. Accessed 18 Feb 2016.

http://lexiurl.wlv.ac.uk. Accessed 18 Feb 2016.

http://www.snowballmetrics.com. Accessed 18 Feb 2016.

http://readermeter.org. Accessed 18 Feb 2016.

http://50.17.213.175. Accessed 18 Feb 2016.

http://link.springer.com/journal/11192. Accessed 18 Feb 2016.

http://www.journals.elsevier.com/journal-of-informetrics. Accessed 18 Feb 2016.

http://onlinelibrary.wiley.com/journal/10.1002/(ISSN)2330-1643. Accessed 18 Feb 2016.

http://plosone.org. Accessed 18 Feb 2016.

https://www.asis.org/proceedings.html. Accessed 18 Feb 2016.

http://insights.uksg.org/. Accessed 18 Feb 2016.

http://www.emeraldgrouppublishing.com/products/journals/journals.htm?id=AJIM. Accessed 18 Feb 2016.

http://journals.plos.org/plosbiology/. Accessed 18 Feb 2016.

http://www.issi2015.org/en/default.asp. Accessed 18 Feb 2016.

http://crln.acrl.org/. Accessed 18 Feb 2016.

http://recyt.fecyt.es/index.php/EPI/. Accessed 18 Feb 2016.

http://www.nature.com. Accessed 18 Feb 2016.

http://ceur-ws.org/. Accessed 18 Feb 2016.

https://www.meta-analysis.com/. Accessed 27 November 2015.

https://aminer.org/. Accessed 18 Feb 2016.

http://www.papercritic.com/. Accessed 18 Feb 2016.

https://www.zotero.org/blog/studying-the-altmetrics-of-zotero-data/. Accessed 18 Feb 2016.

References

Adie, E., & Roe, W. (2013). Altmetric: Enriching scholarly content with article-level discussion and metrics. Learned Publishing, 26(1), 11–17.

Allen, H. G., Stanton, T. R., Di Pietro, F., & Moseley, G. L. (2013). Social media release increases dissemination of original articles in the clinical pain sciences. PLoS One, 8(7), e68914.

Alperin, J. P. (2015a). Geographic variation in social media metrics: An analysis of Latin American journal articles. Aslib Journal of Information Management, 67(3), 289–304.

Alperin, J. P. (2015b). Moving beyond counts: A method for surveying Twitter users. http://altmetrics.org/altmetrics15/alperin/. Accessed 18 Feb 2016.

Andersen, J. P., & Haustein, S. (2015). Influence of study type on Twitter activity for medical research papers. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Araújo, R. F., Murakami, T. R., De Lara, J. L., & Fausto, S. (2015). Does the global south have altmetrics? Analyzing a Brazilian LIS journal. In Proceedings of the 15th international society of scientometrics and informetrics conference (pp. 111–112).

Bar-Ilan, J. (2012). JASIST@mendeley. In ACM web science conference 2012 workshop.

Bar-Ilan, J. (2014). Astrophysics publications on arXiv, Scopus and Mendeley: a case study. Scientometrics, 100(1), 217–225.

Bar-Ilan, J., Haustein, S., Peters, I., Priem, J., Shema, H., & Terliesner, J. (2012). Beyond citations: Scholars’ visibility on the social Web. arXiv preprint. arXiv:1205.5611.

Bornmann, L. (2014a). Alternative metrics in scientometrics: A meta-analysis of research into three altmetrics. Scientometrics, 103(3), 1123–1144.

Bornmann, L. (2014b). Do altmetrics point to the broader impact of research? An overview of benefits and disadvantages of altmetrics. Journal of Informetrics, 8(4), 895–903.

Bornmann, L. (2014c). Validity of altmetrics data for measuring societal impact: A study using data from Altmetric and F1000Prime. Journal of Informetrics, 8(4), 935–950.

Bornmann, L. (2015a). Interrater reliability and convergent validity of F1000Prime peer review. Journal of the Association for Information Science and Technology, 66(12), 2415–2426.

Bornmann, L. (2015b). Letter to the editor: On the conceptualisation and theorisation of the impact caused by publications. Scientometrics, 103(3), 1145–1148.

Bornmann, L. (2015c). Usefulness of altmetrics for measuring the broader impact of research. Aslib Journal of Information Management, 67(3), 305–319.

Bornmann, L., & Haunschild, R. (2015). Which people use which scientific papers? An evaluation of data from F1000 and Mendeley. Journal of Informetrics, 9(3), 477–487.

Bornmann, L., & Leydesdorff, L. (2013). The validation of (advanced) bibliometric indicators through peer assessments: A comparative study using data from InCites and F1000. Journal of Informetrics, 7(2), 286–291.

Bornmann, L., & Leydesdorff, L. (2015). Does quality and content matter for citedness? A comparison with para-textual factors and over time. Journal of Informetrics, 9(3), 419–429.

Bornmann, L., & Marx, W. (2015). Methods for the generation of normalized citation impact scores in bibliometrics: Which method best reflects the judgements of experts? Journal of Informetrics, 9(2), 408–418.

Bowman, T. D. (2015). Tweet or publish: A comparison of 395 professors on Twitter. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Buschman, M., & Michalek, A. (2013). Are alternative metrics still alternative? Bulletin of the American Society for Information Science and Technology, 39(4), 35–39.

Cabezas-Clavijo, Á., Robinson-García, N., Torres-Salinas, D., Jiménez-Contreras, E., Mikulka, T., Gumpenberger, C., Wernisch, A., & Gorraiz, J. (2013). Most borrowed is most cited? Library loan statistics as a proxy for monograph selection in citation indexes. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 2, pp. 1237–1252).

Chamberlain, S. (2013). Consuming article-level metrics: Observations and lessons. Information Standards Quarterly, 25(2), 4–13.

Chen, K., Tang, M., Wang, C., & Hsiang, J. (2015). Exploring alternative metrics of scholarly performance in the social sciences and humanities in Taiwan. Scientometrics, 102(1), 97–112.

Colledge, L. (2014). Snowball metrics recipe book, 2nd ed. Amsterdam, the Netherlands: Snowball Metrics program partners.

Costas, R., & van Leeuwen, T. N. (2012). Approaching the “reward triangle”: General analysis of the presence of funding acknowledgments and “peer interactive communication” in scientific publications. Journal of the American Society for Information Science and Technology, 63(8), 1647–1661.

Costas, R., Zahedi, Z., & Wouters, P. (2015). Do “altmetrics” correlate with citations? Extensive comparison of altmetric indicators with citations from a multidisciplinary perspective. Journal of the Association for Information Science and Technology, 66(10), 2003–2019.

Cronin, B. (2013). The evolving indicator space (iSpace). Journal of the American Society for Information Science and Technology, 64(8), 1523–1525.

Davis, B., Hulpuş, I., Taylor, M., & Hayes, C. (2015). Challenges and opportunities for detecting and measuring diffusion of scientific impact across heterogeneous altmetric sources. http://altmetrics.org/altmetrics15/davis/. Accessed 18 Feb 2016.

De Winter, J. (2015). The relationship between tweets, citations, and article views for PLOS One articles. Scientometrics, 102(2), 1773–1779.

Edelman, B., Larkin, I., et al. (2009). Demographics, career concerns or social comparison: Who Games SSRN download counts? Harvard Business School.

Eyre-Walker, A., & Stoletzki, N. (2013). The assessment of science: The relative merits of post-publication review, the impact factor, and the number of citations. PLoS Biol, 11(10), e1001675.

Eysenbach, G. (2012). Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. Journal of Medical Internet Research, 13(4), e123.

Fairclough, R., & Thelwall, M. (2015). National research impact indicators from Mendeley readers. Journal of Informetrics, 9(4), 845–859.

Fausto, S., Machado, F. A., Bento, L. F. J., Iamarino, A., Nahas, T. R., & Munger, D. S. (2012). Research blogging: Indexing and registering the change in science 2.0. PLoS One, 7(12), e50109.

Fenner, M. (2013). What can article-level metrics do for you? PLoS Biol, 11(10), e1001687.

García, N. R., Salinas, D. T., Zahedi, Z., & Costas, R. (2014). New data, new possibilities: exploring the insides of Altmetric.com. El profesional de la información, 23(4), 359–366.

Glänzel, W., & Gorraiz, J. (2015). Usage metrics versus altmetrics: Confusing terminology? Scientometrics, 102(3), 2161–2164.

Gordon, G., Lin, J., Cave, R., & Dandrea, R. (2015). The question of data integrity in article-level metrics. PLoS Biol, 13(8), e1002161.

Haak, L. L., Fenner, M., Paglione, L., Pentz, E., & Ratner, H. (2012). ORCID: A system to uniquely identify researchers. Learned Publishing, 25(4), 259–264.

Hammarfelt, B. (2013). An examination of the possibilities that altmetric methods offer in the case of the humanities. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 1, pp. 720–727).

Hammarfelt, B. (2014). Using altmetrics for assessing research impact in the humanities. Scientometrics, 101(2), 1419–1430.

Haunschild, R., Stefaner, M., & Bornmann, L. (2015). Who publishes, reads, and cites papers? An analysis of country information. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Haustein, S., & Larivière, V. (2014). A multidimensional analysis of Aslib proceedings-using everything but the impact factor. Aslib Journal of Information Management, 66(4), 358–380.

Haustein, S., & Larivière, V. (2015). The use of bibliometrics for assessing research: Possibilities, limitations and adverse effects. In Incentives and performance (pp. 121–139). Springer.

Haustein, S., Bowman, T. D., Holmberg, K., Tsou, A., Sugimoto, C. R., & Larivière, V. (2015a). Tweets as impact indicators: Examining the implications of automated “bot” accounts on Twitter. Journal of the Association for Information Science and Technology, 67(1), 232–238.

Haustein, S., Costas, R., & Larivière, V. (2015b). Characterizing social media metrics of scholarly papers: The effect of document properties and collaboration patterns. PLoS One, 10(3), e0120495.

Haustein, S., Bowman, T. D., & Costas, R. (2016). Interpreting ‘Altmetrics’: Viewing acts on social media through the lens of citation and social theories. In C. R. Sugimoto (Ed.), Theories of informetrics and scholarly communication. A Festschrift in honor of Blaise Cronin (pp. 372–406). De Gruyter.

Haustein, S., Peters, I., Bar-Ilan, J., Priem, J., Shema, H., & Terliesner, J. (2013). Coverage and adoption of altmetrics sources in the bibliometric community. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 1, pp. 468–483).

Haustein, S., Peters, I., Bar-Ilan, J., Priem, J., Shema, H., & Terliesner, J. (2014a). Coverage and adoption of altmetrics sources in the bibliometric community. Scientometrics, 101(2), 1145–1163.

Haustein, S., Peters, I., Sugimoto, C. R., Thelwall, M., & Larivière, V. (2014b). Tweeting biomedicine: An analysis of tweets and citations in the biomedical literature. Journal of the Association for Information Science and Technology, 65(4), 656–669.

Haustein, S., & Siebenlist, T. (2011). Applying social bookmarking data to evaluate journal usage. Journal of Informetrics, 5(3), 446–457.

Henning, V. (2010). The top 10 journal articles published in 2009 by readership on Mendeley. Mendeley Blog. http://www.mendeley.com/blog/academic-features/the-top-10-journalarticles-published-in-2009-by-readership-on-mendeley. Accessed 18 Feb 2016.

Hoffmann, C. P., Lutz, C., & Meckel, M. (2015). A relational altmetric? Network centrality on ResearchGate as an indicator of scientific impact. Journal of the Association for Information Science and Technology, 67(4), 765–775.

Holmberg, K. (2015). Online Attention of Universities in Finland: Are the bigger universities bigger online too? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Holmberg, K., & Thelwall, M. (2014). Disciplinary differences in Twitter scholarly communication. Scientometrics, 101(2), 1027–1042.

Hopkins, W. G. (2004). An introduction to meta-analysis. Sportscience, 8, 20–24.

Howison, J., & Bullard, J. (2015). Software in the scientific literature: Problems with seeing, finding, and using software mentioned in the biology literature. Journal of the Association for Information Science and Technology (in press).

Jiang, J., He, D., & Ni, C. (2013). The correlations between article citation and references’ impact measures: What can we learn? In Proceedings of the American society for information science and technology (Vol. 50, pp. 1–4). Wiley Subscription Services, Inc., A Wiley Company.

Knoth, P., & Herrmannova, D. (2014). Towards semantometrics: A new semantic similarity based measure for assessing a research publication’s contribution. D-Lib Magazine, 20(11), 8.

Kousha, K., & Thelwall, M. (2015a). Alternative metrics for book impact assessment: Can choice reviews be a useful source? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Kousha, K., & Thelwall, M. (2015b). An automatic method for assessing the teaching impact of books from online academic syllabi. Journal of the Association for Information Science and Technology (in press).

Kousha, K., & Thelwall, M. (2015c). Can Amazon.com reviews help to assess the wider impacts of books? Journal of the Association for Information Science and Technology, 67(3), 566–581.

Kraker, P., Schlögl, C., Jack, K., & Lindstaedt, S. (2015). Visualization of co-readership patterns from an online reference management system. Journal of Informetrics, 9(1), 169–182.

Kumar, S., & Mishra, A. K. (2015). Bibliometrics to altmetrics and its impact on social media. International Journal of Scientific and Innovative Research Studies, 3(3), 56–65.

Kurtz, M. J., & Henneken, E. A. (2014). Finding and recommending scholarly articles. Beyond bibliometrics: harnessing multidimensional indicators of scholarly impact, pp. 243–259.

Li, X., Thelwall, M., & Giustini, D. (2011). Validating online reference managers for scholarly impact measurement. Scientometrics, 91(2), 461–471.

Li, X., & Thelwall, M. (2012). F1000, Mendeley and traditional bibliometric indicators. In Proceedings of the 17th international conference on science and technology indicators (pp. 451–551).

Lin, J. (2012). A case study in anti-gaming mechanisms for altmetrics: PLoS ALMs and datatrust. http://altmetrics.org/altmetrics12/lin. Accessed 18 Feb 2016.

Lin, J., & Fenner, M. (2013a). Altmetrics in evolution: defining and redefining the ontology of article-level metrics. Information Standards Quarterly, 25(2), 20.

Lin, J., & Fenner, M. (2013b). The many faces of article-level metrics. Bulletin of the American Society for Information Science and Technology, 39(4), 27–30.

Liu, C. L., Xu, Y. Q., Wu, H., Chen, S. S., & Guo, J. J. (2013). Correlation and interaction visualization of altmetric indicators extracted from scholarly social network activities: dimensions and structure. Journal of Medical Internet Research, 15(11), e259.

Liu, J., & Adie, E. (2013). Five challenges in altmetrics: A toolmaker’s perspective. Bulletin of the American Society for Information Science and Technology, 39(4), 31–34.

Loach, T. V., & Evans, T. S. (2015). Ranking journals using altmetrics. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Maflahi, N., & Thelwall, M. (2015). When are readership counts as useful as citation counts? Scopus versus Mendeley for LIS journals. Journal of the Association for Information Science and Technology, 67(1), 191–199.

Maleki, A. (2015a). Mendeley readership impact of academic articles of Iran. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Maleki, A. (2015b). PubMed and ArXiv vs. Gold open access: Citation, Mendeley, and Twitter uptake of academic articles of Iran. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Mas-Bleda, A., Thelwall, M., Kousha, K., & Aguillo, I. F. (2014). Do highly cited researchers successfully use the social web? Scientometrics, 101(1), 337–356.

Mayr, P., & Scharnhorst, A. (2015). Scientometrics and information retrieval: weak-links revitalized. Scientometrics, 102(3), 2193–2199.

Mohammadi, E., & Thelwall, M. (2013). Assessing non-standard article impact using F1000 labels. Scientometrics, 97(2), 383–395.

Mohammadi, E., & Thelwall, M. (2014). Mendeley readership altmetrics for the social sciences and humanities: Research evaluation and knowledge flows. Journal of the Association for Information Science and Technology, 65(8), 1627–1638.

Mohammadi, E., Thelwall, M., Haustein, S., & Larivière, V. (2015a). Who reads research articles? An altmetrics analysis of Mendeley user categories. Journal of the Association for Information Science and Technology, 66(9), 1832–1846.

Mohammadi, E., Thelwall, M., & Kousha, K. (2015b). Can Mendeley bookmarks reflect readership? A survey of user motivations. Journal of the Association for Information Science and Technology, 67(5), 1198–1209.

NISO (2014). NISO alternative metrics (altmetrics) initiative phase 1 white paper. http://www.niso.org/apps/group_public/download.php/13809/Altmetrics_project_phase1_white_paper.pdf. Accessed 18 Feb 2016.

Orduña-Malea, E., Ortega, J. L., & Aguillo, I. F. (2014). Influence of language and file type on the web visibility of top European universities. Aslib Journal of Information Management, 66(1), 96–116.

Ortega, J. L. (2015a). How is an academic social site populated? A demographic study of Google Scholar citations population. Scientometrics, 104(1), 1–18.

Ortega, J. L. (2015b). Relationship between altmetric and bibliometric indicators across academic social sites: The case of CSIC’s members. Journal of Informetrics, 9(1), 39–49.

Paul-Hus, A., Sugimoto, C. R., Haustein, S., & Larivière, V. (2015). Is there a gender gap in social media metrics? In Proceedings of the 15th international society of scientometrics and informetrics conference, pp. 37–45.

Peters, I., Beutelspacher, L., Maghferat, P., & Terliesner, J. (2012). Scientific bloggers under the altmetric microscope. In Proceedings of the American society for information science and technology (Vol. 49, pp. 1–4). Wiley Subscription Services, Inc., A Wiley Company.

Peters, I., Jobmann, A., Hoffmann, C. P., Künne, S., Schmitz, J., & Wollnik-Korn, G. (2014). Altmetrics for large, multidisciplinary research groups: Comparison of current tools. Bibliometrie-Praxis und Forschung, 3(1), 1–19.

Peters, I., Kraker, P., Lex, E., Gumpenberger, C., & Gorraiz, J. (2015). Research data explored: Citations versus altmetrics. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Piwowar, H. (2013). Altmetrics: Value all research products. Nature, 493(7431), 159–159.

Piwowar, H., & Priem, J. (2013). The power of altmetrics on a CV. Bulletin of the American Society for Information Science and Technology, 39(4), 10–13.

Priem, J. (2014). Altmetrics. Beyond Bibliometrics: harnessing multidimensional indicators of scholarly impact, pp. 263–287.

Priem, J., & Hemminger, B. M. (2010). Scientometrics 2.0: Toward new metrics of scholarly impact on the social Web. First Monday, 15(7).

Priem, J., Parra, C., Piwowar, H., & Waagmeester, A. (2012a). Uncovering impacts: CitedIn and total-impact, two new tools for gathering altmetrics. Paper presented at the iConference 2012.

Priem, J., Piwowar, H. A., & Hemminger, B. M. (2012b). Altmetrics in the wild: Using social media to explore scholarly impact. arXiv preprint. arXiv:1203.4745.

Priem, J., Taraborelli, D., Groth, P., & Neylon, C. (2010). Altmetrics: A manifesto. http://altmetrics.org/manifesto. Accessed 18 Feb 2016.

Ringelhan, S., Wollersheim, J., & Welpe, I. M. (2015). I like, I cite? Do Facebook likes predict the impact of scientific work? PLoS One, 10(8), e0134389.

Schlögl, C., Gorraiz, J., Gumpenberger, C., Jack, K., & Kraker, P. (2014). Comparison of downloads, citations and readership data for two information systems journals. Scientometrics, 101(2), 1113–1128.

Shema, H., Bar-Ilan, J., & Thelwall, M. (2014). Do blog citations correlate with a higher number of future citations? Research blogs as a potential source for alternative metrics. Journal of the Association for Information Science and Technology, 65(5), 1018–1027.

Shema, H., Bar-Ilan, J., & Thelwall, M. (2015). How is research blogged? A content analysis approach. Journal of the Association for Information Science and Technology, 66(6), 1136–1149.

Shuai, X., Pepe, A., & Bollen, J. (2012). How the scientific community reacts to newly submitted preprints: Article downloads, Twitter mentions, and citations. PLoS One, 7(11), e47523.

Sotudeh, H., Mazarei, Z., & Mirzabeigi, M. (2015). CiteULike bookmarks are correlated to citations at journal and author levels in library and information science. Scientometrics, 105(3), 2237–2248.

Sud, P., & Thelwall, M. (2015). Not all international collaboration is beneficial: The Mendeley readership and citation impact of biochemical research collaboration. Journal of the Association for Information Science and Technology, 67(8), 1849–1857.

Tang, J., Zhang, J., Yao, L., Li, J., Zhang, L., & Su, Z. (2008). Arnetminer: extraction and mining of academic social networks. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, ACM, pp. 990–998.

Tang, M.-c., Wang, C.-m., Chen, K.-h., & Hsiang, J. (2012). Exploring alternative cyberbibliometrics for evaluation of scholarly performance in the social sciences and humanities in Taiwan. In Proceedings of the American society for information science and technology (Vol. 49, pp. 1–1). Wiley Subscription Services, Inc., A Wiley Company.

Taylor, M. (2013). Exploring the boundaries: How altmetrics can expand our vision of scholarly communication and social impact. Information Standards Quarterly, 25(2), 27–32.

Thelwall, M. (2010). Introduction to LexiURL searcher: A research tool for social scientists. Statistical cybermetrics research group, University of Wolverhampton. http://lexiurl.wlv.ac.uk. Accessed 18 Feb 2016.

Thelwall, M. (2012a). Introduction to webometric analyst 2.0: A research tool for social scientists. Statistical cybermetrics research group, University of Wolverhampton. http://webometrics.wlv.ac.uk. Accessed 18 Feb 2016.

Thelwall, M. (2012b). Journal impact evaluation: A webometric perspective. Scientometrics, 92(2), 429–441.

Thelwall, M., & Fairclough, R. (2015a). Geometric journal impact factors correcting for individual highly cited articles. Journal of Informetrics, 9(2), 263–272.

Thelwall, M., & Fairclough, R. (2015b). The influence of time and discipline on the magnitude of correlations between citation counts and quality scores. Journal of Informetrics, 9(3), 529–541.

Thelwall, M., Haustein, S., Larivière, V., & Sugimoto, C. R. (2013). Do altmetrics work? Twitter and ten other social web services. PLoS One, 8(5), e64841.

Thelwall, M., & Kousha, K. (2014). Academia.edu: Social network or academic network? Journal of the Association for Information Science and Technology, 65(4), 721–731.

Thelwall, M., & Kousha, K. (2015). ResearchGate: Disseminating, communicating, and measuring scholarship? Journal of the Association for Information Science and Technology, 66(5), 876–889.

Thelwall, M., & Maflahi, N. (2015a). Are scholarly articles disproportionately read in their own country? An analysis of Mendeley readers. Journal of the Association for Information Science and Technology, 66(6), 1124–1135.

Thelwall, M., & Maflahi, N. (2015b). Guideline references and academic citations as evidence of the clinical value of health research. Journal of the Association for Information Science and Technology, 67(4), 960–966.

Thelwall, M., & Sud, P. (2015). Mendeley readership counts: An investigation of temporal and disciplinary differences. Journal of the Association for Information Science and Technology (in press).

Thelwall, M., & Wilson, P. (2015a). Does research with statistics have more impact? The citation rank advantage of structural equation modeling. Journal of the Association for Information Science and Technology, 67(5), 1233–1244.

Thelwall, M., & Wilson, P. (2015b). Mendeley readership altmetrics for medical articles: An analysis of 45 fields. Journal of the Association for Information Science and Technology (in press).

Torres-Salinas, D., & Milanés-Guisado, Y. (2014). Presencia en redes sociales y altmétricas de los principales autores de la revista “El Profesional de la Información”. El profesional de la información, 23(3),

Uren, V., & Dadzie, A.-S. (2015). Public science communication on Twitter: A visual analytic approach. Aslib Journal of Information Management, 67(3), 337–355.

Waltman, L., & Costas, R. (2014). F1000 recommendations as a potential new data source for research evaluation: A comparison with citations. Journal of the Association for Information Science and Technology, 65(3), 433–445.

Weller, K. (2015). Social media and altmetrics: An overview of current alternative approaches to measuring scholarly impact. In Incentives and performance (pp. 261–276). Springer.

Weller, K., & Peters, I. (2012). Citations in Web 2.0. In Science and the Internet (pp. 209–222).

Wouters, P., & Costas, R. (2012). Users, narcissism and control: tracking the impact of scholarly publications in the 21st century. SURFfoundation Utrecht.

Yan, K.-K., & Gerstein, M. (2011). The spread of scientific information: Insights from the web usage statistics in PLoS article-level metrics. PLoS One, 6(5), 1–7.

Zahedi, Z., Costas, R., & Wouters, P. (2014a). How well developed are altmetrics? A cross-disciplinary analysis of the presence of ‘alternative metrics’ in scientific publications. Scientometrics, 101(2), 1491–1513.

Zahedi, Z., Costas, R., & Wouters, P. (2015a). Do Mendeley readership counts help to filter highly cited WoS publications better than average citation impact of journals (JCS)? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Zahedi, Z., Fenner, M., & Costas, R. (2014b). How consistent are altmetrics providers? Study of 1000 PLoS One publications using the PLoS ALM, Mendeley and Altmetric.com APIs. In altmetrics 14. Workshop at the web science conference, Bloomington, USA.

Zahedi, Z., Fenner, M., & Costas, R. (2015b). Consistency among altmetrics data provider/aggregators: what are the challenges? In altmetrics15: 5 years in, what do we know? The 2015 altmetrics workshop, Amsterdam.

Zhou, Q., & Zhang, C. (2015). Can book reviews be used to evaluate books’ influence? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Zuccala, A. A., Verleysen, F. T., Cornacchia, R., & Engels, T. C. (2015). Altmetrics for the humanities: Comparing Goodreads reader ratings with citations to history books. Aslib Journal of Information Management, 67(3), 320–336.

Acknowledgments

This research is supported by the National Research Foundation, Prime Minister’s Office, Singapore under its Science of Research, Innovation and Enterprise programme (SRIE Award No. NRF2014-NRF-SRIE001-019).

Appendices

Appendix 1: Overview of cross-metric validation studies

This appendix gives an overview of the cross-metric validation studies discussed in the “Results of studies on cross-metric validation” section. Table 12 lists the studies that compare altmetrics to citations from CrossRef, PubMed, journal-based citations, book citations, and university rankings. Table 13 lists the studies that compare altmetrics to usage metrics. Tables 14 and 15 list the studies that compare altmetrics to other altmetrics.

Appendix 2: Details of the results from the meta-analysis

The following sections give the details of the results from the meta-analysis, answering research questions RQ2.1, RQ2.2, and RQ2.3 presented in “Results of studies on cross-metric validation”. Table 16 gives an overview of the studies covered in the meta-analysis. The results are depicted as forest plots. In a forest plot, the studies considered in each meta-analysis are listed by name according to Table 16, with an additional index appended to the name if a single study reported several results. For each study, the plot reports the correlation value, its lower and upper limits, the Z-value, and the p-value, as well as the sample size, i.e., the total number of data points (research artifacts) considered in the study. The measured correlation of each study is represented by a black square, whose size depicts the study’s weight (according to sample size) in the meta-analysis. The horizontal lines show confidence intervals. The overall measured correlation from the meta-analysis is shown as a diamond, whose width depicts the confidence interval. When the studies are grouped, several diamonds are shown, each representing the overall measured correlation across the group.
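
The quantities reported in a forest plot can be illustrated with a short sketch. The appendix does not state which meta-analytic model produced the pooled values, so the code below assumes a common fixed-effect approach: each correlation is Fisher z-transformed, weighted by n − 3 (the inverse of its approximate variance), averaged, and transformed back. The function names `fisher_ci` and `pool_correlations` and the example studies are hypothetical and are not taken from the paper or from Table 16.

```python
import math
from statistics import NormalDist


def fisher_ci(r, n, level=0.95):
    """Return (lower, upper, z_value, p_value) for one study's correlation.

    Assumes the Fisher z approximation: atanh(r) is roughly normal
    with standard error 1 / sqrt(n - 3).
    """
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95 %
    z_value = z / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z_value)))  # two-sided
    return math.tanh(z - crit * se), math.tanh(z + crit * se), z_value, p_value


def pool_correlations(studies, level=0.95):
    """Return (pooled_r, lower, upper) for a list of (r, n) pairs.

    Assumption: fixed-effect model with weights n - 3, so larger
    studies pull the pooled estimate more strongly.
    """
    weights = [n - 3 for _, n in studies]
    zs = [math.atanh(r) for r, _ in studies]
    total = sum(weights)
    z_bar = sum(w * z for w, z in zip(weights, zs)) / total
    se = 1.0 / math.sqrt(total)
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    return (math.tanh(z_bar),
            math.tanh(z_bar - crit * se),
            math.tanh(z_bar + crit * se))


# Hypothetical studies (correlation, sample size); not data from the paper.
example_studies = [(0.30, 1000), (0.50, 200), (0.10, 5000)]

for r, n in example_studies:
    lo, hi, z_value, p_value = fisher_ci(r, n)
    print(f"r={r:.2f} n={n:5d} CI=[{lo:.3f}, {hi:.3f}] Z={z_value:.2f} p={p_value:.4f}")

pooled, lo, hi = pool_correlations(example_studies)
print(f"pooled r={pooled:.3f} CI=[{lo:.3f}, {hi:.3f}]")
```

In this sketch the per-study rows correspond to the squares and horizontal lines of a forest plot, and the pooled row corresponds to the diamond. A random-effects model would add a between-study variance term to each weight and would generally widen the pooled interval.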

Mendeley

Figure 6 shows the results of the meta-analysis for Mendeley compared to citations. In answer to RQ2.1, the overall correlation was 0.37: 0.631 with Google Scholar (Li et al. 2011, 2012; Bar-Ilan 2012), 0.577 with Scopus (Schlögl et al. 2014; Haustein et al. 2014a; Thelwall and Sud 2015; Maflahi and Thelwall 2015; Li et al. 2012; Bar-Ilan 2012; Bar-Ilan et al. 2012), and 0.336 with WoS (Zahedi et al. 2014a; Mohammadi and Thelwall 2014; Mohammadi et al. 2015a; Li et al. 2011; Maleki 2015b; Zahedi et al. 2015a; Li et al. 2012; Priem et al. 2012b; Bar-Ilan 2012). However, in response to RQ2.2, the overall correlation with citations when considering non-zero datasets was 0.547: 0.65 with Scopus (Thelwall and Wilson 2015b) and 0.543 with WoS (Mohammadi and Thelwall 2014; Mohammadi et al. 2015a; Sud and Thelwall 2015).

In response to RQ2.3, Fig. 7 shows the results of the meta-analysis for Mendeley compared to other altmetrics. The overall correlation was 0.18: 0.016 with Amazon Metrics (Kousha and Thelwall 2015c), 0.557 with CiteULike (Li et al. 2011, 2012; Bar-Ilan et al. 2012), 0.031 with Delicious (Zahedi et al. 2014a), 0.309 with F1000 (Li et al. 2012), 0.070 with Twitter (Zahedi et al. 2014a), and 0.083 with Wikipedia (Zahedi et al. 2014a). The overall correlation with citations and altmetrics was 0.335.

Twitter

Answering RQ2.1, Fig. 8 shows the results of the meta-analysis for Twitter compared to WoS citations, resulting in an overall correlation of 0.108 (Haustein et al. 2015b, 2014b; Zahedi et al. 2014a; Priem et al. 2012b; Maleki 2015b). However, the correlation with citations when only non-zero datasets were considered (in response to RQ2.2) was 0.156: 0.392 with Google Scholar (Eysenbach 2012), 0.229 with Scopus (Eysenbach 2012), and 0.078 with WoS (Thelwall et al. 2013; Haustein et al. 2015b, 2014b).

In answer to RQ2.3, the overall correlation with other altmetrics resulted in 0.151 as shown in Fig. 9: 0.194 with Blogs (Haustein et al. 2015b), 0.125 with Delicious (Zahedi et al. 2014a), 0.32 with Facebook (Haustein et al. 2015b), 0.142 with Google+ (Haustein et al. 2015b), 0.07 with Mendeley (Zahedi et al. 2014a), 0.137 with News (Haustein et al. 2015b), and 0.056 with Wikipedia (Zahedi et al. 2014a). Finally, the overall correlation with citations and altmetrics was 0.111.

CiteULike

Figure 10 shows the results of the meta-analysis for CiteULike compared to citations, resulting in an overall correlation of 0.288: 0.383 with Google Scholar (Li et al. 2012, 2011), 0.257 with Scopus (Li et al. 2012; Liu et al. 2013; Haustein et al. 2015b; Bar-Ilan et al. 2012), and 0.256 with WoS (Priem et al. 2012b; Li et al. 2012, 2011) in answer to RQ2.1. As shown in Fig. 11 and in answer to RQ2.3, the overall correlation with other altmetrics resulted in 0.322: 0.076 with Blogs (Liu et al. 2013), 0.194 with Connotea (Liu et al. 2013), 0.127 with F1000 (Li et al. 2012), and 0.557 with Mendeley (Li et al. 2011). Finally, the overall correlation was 0.302 across citations and altmetrics.

Blogs

Figure 12 shows the results of the meta-analysis for Blogs. The correlation with WoS citations (Haustein et al. 2015b; Priem et al. 2012b) was 0.117 in answer to RQ2.1. However, the correlation with WoS citations when only non-zero datasets were considered (answering RQ2.2) was 0.194 (Thelwall et al. 2013; Haustein et al. 2015b).

In answer to RQ2.3, correlation with other altmetrics was 0.14: 0.076 with CiteULike (Liu et al. 2013), 0.031 with Connotea (Liu et al. 2013), 0.18 with Facebook (Haustein et al. 2015b), 0.196 with Google+ (Haustein et al. 2015b), 0.279 with News (Haustein et al. 2015b), and 0.194 with Twitter (Haustein et al. 2015b). Overall across citations and altmetrics the correlation was 0.135.

F1000

Figure 13 shows the results of the meta-analysis for F1000 compared to citations (in response to RQ2.1), resulting in an overall value of 0.229: 0.18 with Google Scholar (Li et al. 2012; Eyre-Walker and Stoletzki 2013), 0.278 with Scopus (Li et al. 2012; Mohammadi and Thelwall 2013), and 0.25 with WoS (Priem et al. 2012b; Li et al. 2012; Bornmann and Marx 2015; Bornmann and Leydesdorff 2013) (see Footnote 30). As shown in Fig. 14 and in answer to RQ2.3, the overall correlation with altmetrics was 0.22: 0.127 with CiteULike and 0.309 with Mendeley (Li et al. 2012). Finally, the overall correlation was 0.219 across citations and altmetrics.

Facebook

Figure 15 shows the results of the meta-analysis for Facebook across all studies. The correlation with WoS citations (Haustein et al. 2015b; Priem et al. 2012b) was 0.122, in answer to RQ2.1. However, the correlation with WoS citations when only non-zero datasets were considered (answering RQ2.2) was 0.109 (Thelwall et al. 2013; Haustein et al. 2015b). The correlation with other altmetrics was 0.202: 0.18 with Blogs, 0.144 with Google+, 0.161 with News, and 0.32 with Twitter (Haustein et al. 2015b), thus answering RQ2.3. The overall correlation across citations and altmetrics was 0.182.

Google+

Figure 16 shows the results of the meta-analysis for Google+. In response to RQ2.2, the correlation with WoS citations for non-zero datasets was 0.123 (Thelwall et al. 2013; Haustein et al. 2015b). Only one study investigated the correlation between Google+ and other altmetrics and citations (Haustein et al. 2015b). From that study, the correlation with WoS citations (Haustein et al. 2015b) was 0.065, thus answering RQ2.1. The overall correlation with other altmetrics (answering RQ2.3) was 0.165: 0.196 with Blogs, 0.144 with Facebook, 0.179 with News, and 0.142 with Twitter (Haustein et al. 2015b). The overall correlation across citations and altmetrics was 0.145.

Wikipedia

Figure 17 shows the results of the meta-analysis for Wikipedia. In answer to RQ2.1, the correlation with WoS citations (Zahedi et al. 2014a; Priem et al. 2012b) was 0.096. Overall with other altmetrics it was 0.053, thus answering RQ2.3: 0.021 with Delicious, 0.083 with Mendeley, and 0.056 with Twitter (Zahedi et al. 2014a). The overall correlation across citations and altmetrics was 0.076.

Delicious

Figure 18 shows the results of the meta-analysis for Delicious. The correlation with WoS citations (Zahedi et al. 2014a; Priem et al. 2012b) was 0.07, thus answering RQ2.1. Overall with other altmetrics it was 0.059 (answering RQ2.3): 0.031 with Mendeley, 0.125 with Twitter, and 0.021 with Wikipedia (Zahedi et al. 2014a). The overall correlation across citations and altmetrics was 0.064.

Cite this article

Erdt, M., Nagarajan, A., Sin, SC.J. et al. Altmetrics: an analysis of the state-of-the-art in measuring research impact on social media. Scientometrics 109, 1117–1166 (2016). https://doi.org/10.1007/s11192-016-2077-0

DOI: https://doi.org/10.1007/s11192-016-2077-0