Abstract

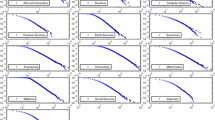

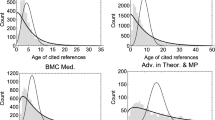

Certain key questions in Scientometrics can only be answered by following a statistical approach. This paper illustrates this point for the following question: how similar are citation distributions with a fixed, common citation window for every science in a static context, and how similar are they when the citation process of a given cohort of papers is modeled in a dynamic context?


Notes

For reasons of space, only methods that belong to the class of target or “cited side” normalization procedures will be discussed in this paper.

Of course, since the beginning of Scientometrics it has generally been believed that citation distributions are highly skewed (Price 1965; Seglen 1992). However, until the results summarized earlier, the empirical evidence, although valuable, was very scant and mostly unsystematic. See Albarrán et al. (2011) for a discussion of the previous literature, including the limitations of the few systematic studies by Schubert and Braun (1986), Glänzel (2007) and Albarrán and Ruiz-Castillo (2011).

In addition, consider the possibility of defining a high-impact indicator over the subset of articles with citations above a high percentile of citation distributions, say the 80th percentile. The distribution of high-impact values for the 219 sub-fields according to an indicator of this type is highly skewed to the right, and it contains some important extreme observations (see Herranz and Ruiz-Castillo 2012).
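The percentile-based high-impact indicator described in this note can be sketched as follows. This is a minimal Python illustration under stated assumptions: the note does not specify the exact indicator, so here it is taken (hypothetically) to be the mean citation count of the articles strictly above the 80th percentile of each sub-field's distribution, with the percentile computed by the nearest-rank method. The sub-field data are invented toy values, not the 219 sub-fields of the study, and the skewness across sub-fields is measured with the moment-based coefficient g1.

```python
import math


def percentile_threshold(citations, pct=80.0):
    """Citation count at the pct-th percentile, using the nearest-rank method (an assumption)."""
    ordered = sorted(citations)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))
    return ordered[rank - 1]


def high_impact_mean(citations, pct=80.0):
    """Hypothetical high-impact indicator: mean citations of the articles strictly above the threshold."""
    threshold = percentile_threshold(citations, pct)
    top = [c for c in citations if c > threshold]
    return sum(top) / len(top) if top else 0.0


def moment_skewness(values):
    """Moment-based skewness g1 = m3 / m2**1.5 (positive means skewed to the right)."""
    n = len(values)
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    return m3 / m2 ** 1.5 if m2 > 0 else 0.0


# Toy citation counts for three hypothetical sub-fields (invented data).
subfields = {
    "A": [0, 0, 1, 2, 3, 5, 8, 13, 21, 34],
    "B": [0, 1, 1, 2, 2, 3, 4, 6, 9, 15],
    "C": [0, 0, 0, 1, 1, 2, 2, 3, 5, 120],
}

indicator = [high_impact_mean(c) for c in subfields.values()]
print(indicator)                   # [27.5, 12.0, 62.5]
print(moment_skewness(indicator))  # positive: right-skewed across sub-fields
```

In this toy input the single extreme paper in sub-field C pulls its indicator value far above the others, which is the kind of extreme observation that makes the cross-sub-field distribution skewed to the right.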

In general, these exceptions are hybrid subject categories, such as “Multidisciplinary sciences”, or poorly defined sub-fields, such as “Engineering, petroleum” or “Biodiversity conservation”, whose papers are also assigned, through the journals in which they have been published, to other, broader subject categories.

This is exactly what Albarrán et al. (2011) find with the dataset mentioned in the section “The skewness of science”: over the 219 sub-fields in the multiplicative case, for example, the average percentage of uncited articles is 24.7 % and the standard deviation is 13.9. Therefore, the coefficient of variation is 0.56 (13.9/24.7), while that for the percentage of articles in class I in Table 1 is 0.05. On the other hand, RC also find that the transformation of Eq. 1 becomes less descriptive beyond the top 10 % of highly cited articles, and that the removal of the bias in the raw data worsens there.
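The coefficient of variation used in this note is the standard deviation divided by the mean, which makes dispersion comparable across quantities with very different averages. A minimal Python sketch follows: the first part reproduces the 0.56 figure from the two summary statistics quoted in the note; the helper for raw per-sub-field percentages is illustrative only and assumes the population standard deviation, since the note does not say which variant was used.

```python
import statistics


def coefficient_of_variation(values):
    """CV = standard deviation / mean, here with the population SD (an assumption)."""
    return statistics.pstdev(values) / statistics.fmean(values)


# Summary statistics reported for uncited articles over the 219 sub-fields
# (multiplicative case), in percentage points.
mean_uncited = 24.7
sd_uncited = 13.9
cv_uncited = sd_uncited / mean_uncited
print(round(cv_uncited, 2))  # 0.56

# Illustrative use on raw per-sub-field percentages (toy values, not study data).
print(coefficient_of_variation([20.0, 30.0]))  # 0.2
```

Because the CV is scale-free, the contrast between 0.56 for uncited articles and 0.05 for class I indicates that the share of uncited articles varies far more across sub-fields than the share of articles in class I.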

References

Albarrán, P., Crespo, J., Ortuño, I., & Ruiz-Castillo, J. (2011). The skewness of science in 219 sub-fields and a number of aggregates. Scientometrics, 88, 385–397.

Albarrán, P., & Ruiz-Castillo, J. (2011). References made and citations received by scientific articles. Journal of the American Society for Information Science and Technology, 62, 40–49.

Arellano, M., & Bonhomme, S. (2011). Nonlinear panel data analysis. Annual Review of Economics, 3, 395–424.

Arellano, M., & Honoré, B. (2001). Panel data models: Some recent developments. In J. Heckman & E. Leamer (Eds.), Handbook of econometrics (Vol. 5). Amsterdam: North Holland.

Azoulay, P., Stuart, T., & Wang, Y. (2012). Matthew: Effect or fable? NBER Working Paper No. 18625. (http://www.nber.org/papers/w18625).

Braun, T., Glänzel, W., & Schubert, A. (1985). Scientometric indicators. A 32-country comparison of publication productivity and citation impact. Singapore: World Scientific Publishing Co. Pty. Ltd.

Clauset, A., Shalizi, C. R., & Newman, M. E. J. (2009). Power-law distributions in empirical data. SIAM Review, 51, 661–703.

Cole, J. R., & Zuckerman, H. (1984). The productivity puzzle: Persistence and change in patterns of publication of men and women scientists. Advances in Motivation and Achievement, 2, 217–258.

Crespo, J. A., Li, Y., & Ruiz-Castillo, J. (2012). The comparison of citation impact across scientific fields. Working paper 12-06. Madrid: Universidad Carlos III. (http://hdl.handle.net/10016/14771).

Diggle, P. J., Heagerty, P., Liang, K.-Y., & Zeger, S. L. (2002). Analysis of longitudinal data (2nd ed.). Oxford: Clarendon Press.

Glänzel, W. (2007). Characteristic scores and scales: A bibliometric analysis of subject characteristics based on long-term citation observation. Journal of Informetrics, 1(1), 92–102.

Glänzel, W. (2011). The application of characteristic scores and scales to the evaluation and ranking of scientific journals. Journal of Information Science, 37, 40–48.

Herranz, N., & Ruiz-Castillo, J. (2012). Multiplicative and fractional strategies when journals are assigned to several sub-fields. Journal of the American Society for Information Science and Technology. (doi:10.1002/asi.22629); in press.

Katz, J. S. (2000). Scale-independent indicators and research evaluation. Science and Public Policy, 27, 23–36.

Kelchtermans, S., & Veugelers, R. (2012). Top research productivity and its persistence: Gender as a double-edged sword. The Review of Economics & Statistics (forthcoming).

Merton, R. K. (1968). The Matthew effect in science. Science, 159, 56–63.

Merton, R. K. (1988). The Matthew effect in science, II: Cumulative advantage and the symbolism of intellectual property. Isis, 79, 606–623.

Moed, H. F., Burger, W. J., Frankfort, J. G., & van Raan, A. F. J. (1985). The use of bibliometric data for the measurement of university research performance. Research Policy, 14, 131–149.

Moed, H. F., De Bruin, R. E., & van Leeuwen, T. N. (1995). New bibliometric tools for the assessment of national research performance: Database description, overview of indicators, and first applications. Scientometrics, 33, 381–422.

Moed, H. F., & van Raan, A. F. J. (1988). Indicators of research performance. In A. F. J. van Raan (Ed.), Handbook of quantitative studies of science and technology (pp. 177–192). Amsterdam: North Holland.

Molenberghs, G., & Verbeke, G. (2005). Models for discrete longitudinal data. New York: Springer.

Price, D. J. de S. (1965). Networks of scientific papers. Science, 149, 510–515.

Radicchi, F., & Castellano, C. (2012a). A reverse engineering approach to the suppression of citation biases reveals universal properties of citation distributions. PLoS One, 7(e33833), 1–7.

Radicchi, F., & Castellano, C. (2012b). Testing the fairness of citation indicators for comparisons across scientific domains: The case of fractional citation counts. Journal of Informetrics, 6, 121–130.

Radicchi, F., Fortunato, S., & Castellano, C. (2008). Universality of citation distributions: Toward an objective measure of scientific impact. Proceedings of the National Academy of Sciences of the United States of America, 105, 17268–17272.

Schubert, A., & Braun, T. (1986). Relative indicators and relational charts for comparative assessment of publication output and citation impact. Scientometrics, 9, 281–291.

Schubert, A., & Braun, T. (1996). Cross-field normalization of scientometric indicators. Scientometrics, 36, 311–324.

Schubert, A., Glänzel, W., & Braun, T. (1983). Relative citation rate: A new indicator for measuring the impact of publications. In D. Tomov & L. Dimitrova (Eds.), Proceedings of the First National Conference with International Participation in Scientometrics and Linguistics of Scientific Text (pp. 80–81). Varna, Bulgaria: ICS.

Schubert, A., Glänzel, W., & Braun, T. (1987a). Subject field characteristic citation scores and scales for assessing research performance. Scientometrics, 12, 267–292.

Schubert, A., Glänzel, W., & Braun, T. (1987b). Subject field characteristic citation scores and scales for assessing research performance. Scientometrics, 12, 267–292.

Schubert, A., Glänzel, W., & Braun, T. (1988). Against absolute methods: Relative scientometric indicators and relational charts as evaluation tools. In A. F. J. van Raan (Ed.), Handbook of quantitative studies of science and technology (pp. 137–176). Amsterdam: Elsevier.

Seglen, P. (1992). The skewness of science. Journal of the American Society for Information Science, 43, 628–638.

Vinkler, P. (1986). Evaluation of some methods for the relative assessment of scientific publications. Scientometrics, 10, 157–177.

Vinkler, P. (2003). Relations of relative scientometric indicators. Scientometrics, 58, 687–694.

Waltman, L., & van Eck, N. J. (2012). Universality of citation distributions revisited. Journal of the American Society for Information Science and Technology, 63, 72–77.

Acknowledgments

Financial support from the Spanish MEC, through Grant No. SEJ2007-67436, as well as conversations with Pedro Albarrán are gratefully acknowledged. A referee report helped to improve the original version of the paper. All shortcomings are the author’s sole responsibility.


About this article

Cite this article

Ruiz-Castillo, J. The role of statistics in establishing the similarity of citation distributions in a static and a dynamic context. Scientometrics 96, 173–181 (2013). https://doi.org/10.1007/s11192-013-0954-3


DOI: https://doi.org/10.1007/s11192-013-0954-3