Abstract

Purpose

Ankle fractures are commonly occurring fractures, especially in the aging population, where they often present as fragility fractures. The disease burden and economic costs to the patient and society are considerable. Choosing accurate outcome measures for the evaluation of the management of ankle fractures in clinical trials facilitates better decision-making. This systematic review assesses the evidence for the measurement properties of patient-reported outcome measures (PROMs) used in the evaluation of adult patients with ankle fractures.

Methods

Searches were performed in CINAHL, EMBASE, Medline and Google Scholar from the date of inception to July 2021. Studies that assessed the measurement properties of a PROM in an adult ankle fracture population were included. The included studies were assessed according to the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) methodology for systematic reviews of PROMs.

Results

In total, 13 different PROMs were identified in the 23 included articles. Only the Ankle Fracture Outcome of Rehabilitation Measure (A-FORM) presented some evidence on content validity. The Olerud-Molander Ankle Score (OMAS) and Self-reported Foot and Ankle Score (SEFAS) displayed good evidence of construct validity and internal consistency. The measurement properties of the OMAS, LEFS and SEFAS were most studied.

Conclusion

The absence of validation studies covering all measurement properties of PROMs used in the adult ankle fracture population precludes the recommendation of a specific PROM to be used in the evaluation of this population. Further research should focus on validation of the content validity of the instruments used in patients with ankle fractures.

Introduction

Patients presenting with an ankle fracture are a common sight in the emergency department. One study demonstrated that approximately one in ten fractures sustained by patients older than 11 years is an ankle fracture [1]. An epidemiological study on ankle fractures of the entire population in the United States estimated 673,214 cases over a period of five years, giving an incidence rate of 4.22/10,000 person years [2]. Ankle fractures occur in all ages and both genders, but with a bimodal distribution curve, with the first peak in young men and a second peak in older women [1]. The link between an increased risk of ankle fractures in the elderly population and a reduction in bone mineral density has been established [3], indicating that ankle fractures in the older female population are considered a predictor for fragility. With increases in life expectancy, it is likely that the frequency of fragility ankle fractures will also rise in the future [4]. Presumably, this will have implications for the management of ankle fractures, considering the challenging nature of fragility fractures and the increasing complexity of patients’ clinical status as they age [5, 6]. With such a heterogeneous patient population and enhanced focus on patient-specific treatment, treatment approaches also differ widely. The estimated cost of surgically treated ankle fractures per patient was $8688–20,414 (2016 USD), with a mean duration of unemployment of 53–90 days [7]. Alongside this trend within the field of orthopedic surgery, there is a need for more accurate outcome measures, reflected in the increased use of patient-reported outcome measures (PROMs) in clinical and research settings in the last decade [8, 9].

A patient-reported outcome (PRO) is defined as “any report of the status of a patient’s health condition that comes directly from the patient without interpretation of the patient’s response by a clinician or anyone else”, and PROMs are the instruments used to measure PROs [10]. The measurement properties of an instrument provide information on the validity, reliability and responsiveness of the instrument in the context of use, and content validity is considered the most important aspect [11]. A recent review identified the Olerud-Molander Ankle Score (OMAS) as the most commonly used primary outcome in the assessment of patients with ankle fractures in clinical trials [12]. The American Orthopedic Foot and Ankle Score (AOFAS), which is considered a partially patient-reported outcome measure, was the fourth most commonly used outcome score for ankle fracture patients. Other reviews found that the AOFAS was the most commonly used instrument in foot and ankle disorders [13, 14], despite repeated concerns about its measurement properties [15,16,17]. However, the quality of an instrument depends on its measurement properties, which should be the main concern when choosing an outcome measure for research and clinical use [9]. A recommendation on which PROM should be used in patients with ankle fracture, based on current evidence on measurement properties, is warranted.

This systematic review assesses the evidence for the measurement properties and the interpretability of PROMs used in the evaluation of adult patients with ankle fractures and adheres to the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) guidelines [11, 18, 19]. It takes into consideration the limitations from previously published systematic reviews [20, 21] by including validation studies of all PROMs and studies in a population mainly composed of ankle fracture patients. This will ensure an adequate representation of the target population and provide a more complete overview of the PROMs validated for use in this context.

Methods

Protocol and registration

The reporting of this review followed the checklist provided in the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement [22, 23]. The protocol has been submitted to the International Prospective Register of Systematic Reviews (PROSPERO) (registration number: CRD42019122800).

Eligibility criteria

Studies that assessed the measurement properties of PROMs in an adult ankle fracture population with the Arbeitsgemeinschaft für Osteosynthesefragen/Orthopaedic Trauma Association (AO/OTA) classification 44 [24], including medial malleolar fracture, were selected for the current systematic literature review. The included studies involved a study population of at least 50% patients with ankle fractures.

The exclusion criteria were as follows: (1) articles in languages other than English or a Scandinavian language; (2) validation of a PROM against a non-PROM instrument, as these studies provide only indirect information on the measurement properties; and (3) proxy-reported PROMs, as these were considered observer-reported outcomes [10].

Data sources and search strategy

A literature search was performed in Medline, EMBASE and CINAHL from the inception of the databases to the 6th of July 2021. Three filters were applied: (1) a PROM-inclusion filter developed by the University of Oxford [25], (2) a validated sensitive search filter for measurement properties by Terwee et al. [26] and (3) an age filter to exclude results indexed with child and adolescent age groups only. A separate search in Google Scholar was performed with the following search phrase: “ankle fracture” validation “patient reported outcomes” “measurement properties”. The search strategy was devised in collaboration with expert research librarians and details are presented in Online Resource 1.

Selection process

The review team consisted of four reviewers. The results from the search strategy were uploaded to Covidence [27]. All titles and abstracts were randomly screened for potential eligibility by two reviewers independently. Any disagreements were discussed between the two reviewers, and if in doubt, the full text was retrieved. The full text was retrieved for all abstracts that were potentially eligible for inclusion and again independently screened by two reviewers. Any disagreements were discussed between the two reviewers, and if consensus was not achieved, a third reviewer was consulted.

The initial screening included screening PROMs used in a more general fracture population. Two reviewers separately performed a secondary final screening of the included articles to retrieve studies limited to those meeting the eligibility criteria for the ankle fracture review.

The first author screened the references of the included articles for potential eligible studies.

Data extraction

The extracted outcome variables were (1) content validity, including PROM development; (2) structural validity; (3) internal consistency; (4) cross-cultural validity/measurement invariance; (5) reliability; (6) measurement error; (7) criterion validity; (8) hypothesis testing for construct validity; (9) responsiveness; and (10) interpretability.

Assessing the methodological quality of the studies

The COSMIN Risk of Bias checklist [19] was applied for the assessment of the methodological quality of the studies. The checklist contained questions for each measurement property, and each question was given a rating of very good, adequate, doubtful or inadequate. The overall rating for each measurement property per study followed the “worst score counts” principle, i.e., the lowest rating given to any question determined the overall rating.
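The rule for combining item ratings can be illustrated with a minimal Python sketch. The function name `overall_quality` and the example ratings are hypothetical and serve only to show how the lowest item rating determines the overall rating; this is not code used in the review.

```python
# Ratings ordered from best to worst, as listed in the text.
RATING_ORDER = ["very good", "adequate", "doubtful", "inadequate"]


def overall_quality(item_ratings):
    """Return the worst rating among the checklist items of one measurement property."""
    if not item_ratings:
        raise ValueError("at least one item rating is required")
    # A higher index in RATING_ORDER means a worse rating, so max() picks the worst one.
    return max(item_ratings, key=RATING_ORDER.index)


# Hypothetical item ratings for two studies.
print(overall_quality(["very good", "adequate", "very good"]))   # adequate
print(overall_quality(["very good", "inadequate", "adequate"]))  # inadequate
```

A single inadequate item therefore caps the overall rating of a study for that measurement property, regardless of how well the remaining items were rated.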

Ratings of PROM development and content validity

PROM development was not considered a measurement property but was taken into account in the assessment of content validity and consisted of (1) PROM design, which accommodates concept elicitation and item generation, and (2) testing of the new PROM, which refers to a cognitive interview or a pilot study. It was a prerequisite when rating the PROM development that the methodological quality did not have an inadequate rating when rating the results against the criteria for good measurement properties.

The evaluation of content validity included three aspects: (1) relevance, (2) comprehensibility, and (3) comprehensiveness. For translations, only the comprehensibility aspect was assessed. Each aspect was rated sufficient, insufficient or indeterminate. PROMs that included the target population for the current review in the development phase were also given a content validity rating by the reviewers.

The results from the development study, content validity studies and reviewers’ ratings were summarized, and an overall rating of sufficient, insufficient or inconsistent was obtained based on the criteria for good content validity [11].

Rating of the remaining measurement properties

The remaining measurement properties were assessed according to the COSMIN criteria for good measurement properties [18], resulting in a rating of sufficient, insufficient or indeterminate per study. Subsequently, the results from all studies on each measurement property were summarized and again rated against the COSMIN criteria for good measurement properties to yield an overall rating of sufficient, insufficient, inconsistent or indeterminate. In the assessment of the methodological quality of studies, twenty percent of the included articles were randomly selected to be independently assessed by two reviewers. Any disagreements or difficulties in ratings were discussed to achieve consensus. If this was not reached, a third reviewer was consulted.

The review team agreed that there are no gold standards in the evaluation of construct validity, except when comparing a shortened version against its original version [28]. Rather, hypotheses were formulated for the validation of construct validity. As there were no limitations to which PROMs were included in this review, it was not feasible to define hypotheses for every possible scenario a priori. Instead, threshold categories for correlations and a ground set of hypotheses were constructed (Online resource 2) [29]: instruments measuring (1) the same construct were expected to have at least moderate to high correlation (r > 0.6), (2) moderate correlation for related constructs (0.3 < r < 0.7), and (3) weak to moderate correlation for weakly related constructs (0.2 < r < 0.4). More specific hypotheses were formulated throughout the review with the expected direction and magnitude of the correlation depending on the construct of each instrument (Online Resource 3).
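As an illustration, the threshold categories above can be written as a small Python sketch. The dictionary keys, function names and example correlations are hypothetical, and the sketch makes one simplifying assumption: it compares only the magnitude of the correlation, whereas the review also specified the expected direction for each hypothesis.

```python
# Expected correlation ranges per type of relationship, taken from the text.
# Bounds are exclusive, matching the strict inequalities in the paragraph above.
EXPECTED_RANGE = {
    "same": (0.6, float("inf")),      # same construct: r > 0.6
    "related": (0.3, 0.7),            # related constructs: 0.3 < r < 0.7
    "weakly related": (0.2, 0.4),     # weakly related constructs: 0.2 < r < 0.4
}


def hypothesis_confirmed(relationship, r):
    """Check whether the magnitude of an observed correlation falls in the expected range."""
    low, high = EXPECTED_RANGE[relationship]
    return low < abs(r) < high


def share_confirmed(results):
    """Return the proportion of confirmed hypotheses among (relationship, r) pairs."""
    confirmed = sum(hypothesis_confirmed(rel, r) for rel, r in results)
    return confirmed / len(results)


# Made-up correlations for illustration only; these are not data from the review.
example = [("same", 0.82), ("same", 0.55), ("related", 0.45), ("weakly related", 0.31)]
print(share_confirmed(example))  # 0.75
```

The proportion returned by `share_confirmed` corresponds to the percentage of confirmed hypotheses reported per instrument in the Results.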

A similar approach was used in the assessment of responsiveness, but hypotheses were formed based on the expected correlation between the change scores of the instruments. The threshold categories for correlation were expected to be lower for change scores than for the scores of instruments at a single time point [30]. When the comparator instrument measures the same construct as the instrument under study, the correlation was expected to be high (r ≥ 0.5). If the comparator instrument measures a related construct, the correlation was expected to be moderate (0.3 < r < 0.5). For external measures with a dichotomous variable, an area under the curve (AUC) of 0.7 or above indicated sufficient ability of the instrument to discriminate between patients who improved and patients who did not improve according to the external measure of change.

Interpretability

Interpretability is not considered a measurement property but refers to “the degree one can assign qualitative meaning” to the PROM score or change in PROM score [31], and is used as additional information when choosing the instrument. Data on distribution of scores, rate of missing items, floor/ceiling effect and minimal important change (MIC) were extracted.

Quality of evidence

The modified Grading of Recommendations, Assessment, Development and Evaluation (GRADE) approach [18, 32] was applied to the summarized results to yield a grading for the quality of evidence. This grading expresses the level of certainty for the summarized results. Each measurement property received a grading of high, moderate, low or very low depending on four factors: (1) risk of bias; (2) inconsistency in the results across studies; (3) imprecision, which referred to the total sample size; and (4) indirectness, i.e., if evidence was derived from different populations or from the context of use.

Recommendations

PROMs in category A are recommended for use in the evaluation of patients with ankle fractures. These PROMs exhibit evidence for sufficient content validity and at least low quality evidence for internal consistency. If there is good evidence for an insufficient measurement property, the PROM is disapproved for use and placed in category C. The remaining PROMs are placed in category B. These could be recommended by obtaining more evidence on sufficient measurement properties with further validation [18].
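The categorization can be sketched in Python as follows. The function and parameter names are hypothetical, and two points are assumptions rather than statements from the text: “good evidence” for an insufficient property is passed in as a ready-made boolean, and category C is checked before category A.

```python
# Quality-of-evidence levels ordered from lowest to highest.
QUALITY_LEVELS = ["very low", "low", "moderate", "high"]


def recommendation_category(content_validity, internal_consistency,
                            ic_quality, good_evidence_insufficient):
    """Assign category A, B or C to a PROM.

    content_validity / internal_consistency: overall ratings, e.g. "sufficient".
    ic_quality: quality of evidence for internal consistency, or None if not graded.
    good_evidence_insufficient: True if any property has good evidence of being insufficient.
    """
    # Assumption: disapproval (category C) takes precedence over category A.
    if good_evidence_insufficient:
        return "C"
    ic_ok = (
        internal_consistency == "sufficient"
        and ic_quality is not None
        and QUALITY_LEVELS.index(ic_quality) >= QUALITY_LEVELS.index("low")
    )
    if content_validity == "sufficient" and ic_ok:
        return "A"
    return "B"


# Hypothetical inputs for illustration only.
print(recommendation_category("sufficient", "sufficient", "low", False))      # A
print(recommendation_category("inconsistent", "sufficient", "high", False))   # B
print(recommendation_category("sufficient", "sufficient", "high", True))      # C
```

Under this logic, a PROM without sufficient content validity cannot reach category A, however strong the evidence for its other properties.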

Results

Study selection

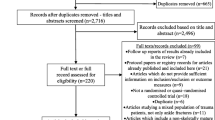

Of the 8339 potential articles for this review, 3107 duplicates were identified and removed before screening commenced. The titles and abstracts of the remaining 5232 articles were screened for eligibility, and 4531 articles were excluded. In the next step, 696 full-text articles were screened by the inclusion/exclusion criteria, and 680 articles were excluded. Five articles were included from the screening of references in the included articles [33,34,35,36,37], one article was included based on a Google Scholar search [38], and one article [39] was included based on a systematic review [21]. In total, 23 articles were included in the review (Fig. 1).

Template from: Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. https://doi.org/10.1136/bmj.n71

PRISMA flow diagram for the search strategy and selection of records.

Study characteristics

Thirteen PROMs were identified (Table 1), and the characteristics of the PROMs under study are reported in Table 2. Comparator instruments identified in the studies are described in Online Resource 4. The 23 included articles described 28 studies, as some articles reported multiple studies assessing different measurement properties. Eleven studies included only surgically treated ankle fractures. Patient ages ranged from 16 to 94 years, with mean ages of 41–58 years. Follow-up times ranged from one month to five years (Table 3).

Measurement properties

One article assessed the measurement properties of several PROMs [40]. Most of the studies assessed multiple measurement properties. No studies assessed cross-cultural validity/measurement invariance or criterion validity. The measurement properties of the OMAS were most frequently assessed. Table 4 presents the results from the Ankle Fracture Outcome of Rehabilitation Measure (A-FORM) and the three most validated instruments. A summary of findings table for all PROMs is presented in Online Resource 5.

PROM development and content validity

Only the A-FORM [41] had a methodologically adequate PROM design, but a lack of cognitive interviews or pilot studies yielded an inadequate rating for methodological quality regarding the total PROM development. The Trauma Expectation Factor Trauma Outcome Measure (TEFTOM) [42] was rated as having inadequate methodology in both PROM design and pilot study measures. Due to inadequate ratings regarding total PROM development, the ratings of both instruments were based on reviewers’ ratings only and achieved the lowest level of evidence.

Three studies included translations [35, 36, 43] and were assessed for comprehensibility as part of the content validity study but were not given a total content validity rating or quality of evidence grading due to lack of validation on the relevance and comprehensiveness aspects.

Structural validity

One study performed confirmatory factor analysis (CFA) on the OMAS, Self-reported Foot and Ankle Score (SEFAS) and Lower Extremity Functional Scale (LEFS) [40], and each met the criteria for a sufficient rating of structural validity (Table 4). However, two other studies demonstrated lack of unidimensionality for the LEFS with Rasch analysis [37, 44]. In addition, exploratory factor analysis (EFA) was performed to explore the dimensionality of the OMAS [45] and LEFS [34], and two subscales were identified in both instruments. The summarized results for the LEFS are conflicting, and the level of evidence was not graded. As the COSMIN guidelines do not define criteria for EFA, the result of these studies did not receive a rating.

Internal consistency

Summarized results from several studies of very good methodological quality yielded a Cronbach’s alpha of 0.76–0.84 and 0.93 for the OMAS and SEFAS, respectively, indicating sufficient internal consistency. Internal consistency parameters were reported for the LEFS and Western Ontario and McMaster Universities Osteoarthritis Index (WOMAC), but these were not rated due to a lack of evidence for sufficient structural validity.
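For readers unfamiliar with the statistic, Cronbach’s alpha can be computed from item-level responses as in the minimal Python sketch below. The function name and the example data are hypothetical. The cutoff of 0.70 in `alpha_sufficient` is the commonly cited COSMIN criterion and is an assumption here, since the text above does not state the cutoff.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(item_scores[0])
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*item_scores))
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def alpha_sufficient(alpha, cutoff=0.70):
    """Assumed criterion: alpha at or above the cutoff counts as sufficient."""
    return alpha >= cutoff


# Made-up responses: three respondents, two perfectly correlated items.
responses = [[1, 1], [2, 2], [3, 3]]
alpha = cronbach_alpha(responses)
print(round(alpha, 2), alpha_sufficient(alpha))  # 1.0 True
```

Alpha is only interpretable for a unidimensional scale, which is why the internal consistency of the LEFS and WOMAC was not rated in the absence of evidence for sufficient structural validity.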

Reliability

The LEFS achieved high quality evidence for sufficient reliability, supported by two studies with adequate methodological quality reporting intraclass correlation coefficient (ICC) of 0.91–0.93 (Table 4). The OMAS, SEFAS and Visual Analogue Scale Foot and Ankle (VAS-FA) had moderate evidence for sufficient reliability, while the TEFTOM and Munich Ankle Questionnaire (MAQ) had low and very low evidence for sufficient reliability, respectively.

Measurement error

The summarized result of the smallest detectable change (SDC) for the OMAS was 9.1–19.0. One study of inadequate methodological quality reported a value of 9.1 and was less decisive for the overall rating. The remaining studies reported values of 12.0 and 19.0, which were higher than the MIC of 9.7 points reported by McKeown et al. This indicated that the instrument cannot separate an important change (from the patients’ perspective) from measurement error between these values, resulting in an insufficient rating. The quality of evidence was downgraded to very low for three reasons: (1) presence of only one study assessing the MIC, (2) only one study of adequate methodological quality, and (3) indirectness due to considerable differences in follow-up times (16 weeks and 4.3 years) (Table 4).
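The comparison between SDC and MIC can be sketched in Python using the OMAS values reported above. The function names are hypothetical. The helper `sdc_from_sem` uses the standard formula SDC = 1.96 × √2 × SEM, which is an assumption in this context, as the text does not describe how the included studies calculated their SDCs.

```python
import math


def sdc_from_sem(sem):
    """Standard formula for the smallest detectable change from the standard error of measurement."""
    return 1.96 * math.sqrt(2) * sem


def measurement_error_rating(sdc, mic):
    """Per-study rating: sufficient only if the SDC is smaller than the MIC."""
    return "sufficient" if sdc < mic else "insufficient"


OMAS_MIC = 9.7  # points, as reported in the text

# SDC values for the OMAS reported in the text.
for sdc in (9.1, 12.0, 19.0):
    print(sdc, measurement_error_rating(sdc, OMAS_MIC))
# 9.1 sufficient
# 12.0 insufficient
# 19.0 insufficient
```

The per-study comparison does not by itself give the overall rating. In the review, the study reporting 9.1 had inadequate methodological quality and carried less weight, so the summarized result was rated insufficient.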

Two studies on the SEFAS provided SDCs between 6.6 and 6.8 [40, 43], with one exhibiting inadequate methodological quality due to lack of stability between measurement points. Both studies reported SDCs higher than the MIC of five points reported in the study by Erichsen et al. [43], but the calculation of this MIC carries a considerable risk of bias due to low sample size and inconsistency in the change score across subgroups.

The LEFS lacked MIC reporting and could not be rated according to the criteria for good measurement properties.

Hypothesis testing for construct validity

The OMAS, WOMAC, LEFS, SEFAS and MAQ had 75% or more confirmed hypotheses. The study validating the Finnish version of the VAS-FA did not clearly define the construct and was rated as having inadequate methodological quality. The TEFTOM and PROMIS-PF CAT were the only instruments with an insufficient overall rating; however, the quality of evidence was low.

Responsiveness

The LEFS achieved a sufficient rating with two confirmed hypotheses (Table 4). The authors used an external measure but did not specify the question, which resulted in a downgrading of the level of evidence. Regarding the MAQ, three hypotheses were confirmed based on the construct approach, correlating the three domains to the same GRS and yielding a sufficient rating with moderate quality of evidence.

Interpretability

The MICs of the OMAS and SEFAS were 9.7 and five points, respectively. The latter included a small sample size of 39 patients, and the data did not present a gradual increase in change scores among patients who improved, which introduces risk of bias in the determination of this value.

A floor effect of 22.4% was reported with the SEFAS at the six-week follow-up. A ceiling effect was reported for the OMAS (17%) and LEFS (27–29%), where both studies had a follow-up time of more than four years (Online Resource 6).

Discussion

Summary of evidence

A recent review of PROMs used as primary outcomes in interventional trials for patients with ankle fractures [12] identified the OMAS as the most commonly used multi-item PROM. In a systematic review assessing measurement properties of PROMs used in foot and ankle disease, the Manchester-Oxford Foot Questionnaire (MOXFQ) was reported to have the best overall psychometric properties [46]. However, the current review illustrates that the MOXFQ is completely absent in validation studies for the ankle fracture population. Collectively, there is still a lack of studies covering all measurement properties of PROMs for patients with ankle fractures. Among the PROMs used in the evaluation of the ankle fracture population, the measurement properties and interpretation of the OMAS, LEFS and SEFAS were most studied. However, there is a consistent lack of validation of the most important measurement property, i.e., content validity, reflecting the uncertainty in covering all aspects of a given construct. Thus, none of the PROMs could be categorized in category A.

Validity and reliability

The OMAS was the most frequently assessed PROM in this study population but lacked a content validity study of good quality. Despite inadequate methodology in PROM development, subsequent content validity studies could provide evidence for sufficient content validity. Of the instruments included in this review, only the A-FORM had an adequate PROM design [41]. The design and concept elicitation were based on a qualitative study on the life impact of ankle fractures [47] but lacked cognitive interviews or pilot tests. The developers of this instrument complied with many of the crucial steps in the development phase of a PROM, rendering a sound foundation for subsequent validation studies. The TEFTOM [42], on the other hand, had severe flaws in the development phase, where the study population was limited to fractures of the ankle and distal tibia. Such a limitation will not suffice to provide an adequate representation of the instrument’s intended population of general trauma patients.

In regard to structural validity, CFA is preferred over EFA for testing existing factor structures [48]. The OMAS and SEFAS appeared to be unidimensional when assessed with CFA [40]. However, the OMAS was also assessed with EFA [45], and two subscales were found, namely, (1) ankle function and (2) ankle symptoms, which indicates a bifactor structure in this instrument.

The LEFS was also assessed with CFA and achieved a sufficient rating of structural validity, but data from the same study showed a better fit with a bidimensional structure [40]. Another study validating the Finnish version of the instrument also found a two-factor structure [34]. Lin et al. [44] performed a Rasch analysis of the LEFS at three different time points. Most of the items were within the acceptable range for goodness-of-fit, but one item (sitting for 1 h) had unacceptable outfit statistics at all time points. The article did not provide enough information for a rating based on criteria for good measurement properties [49], but the Rasch analysis showed a lack of items for patients with greater abilities, drawing attention toward the cautious use of the instrument in patients with high demands or long-term follow-up of ankle fractures. Another Rasch analysis of the LEFS demonstrated disordered item thresholds for the response categories [37]. These studies had a methodological quality of at least adequate rating, but the results were conflicting. No obvious separation of studies into subgroups was identified that could explain the discrepancies. If this instrument was to be used in an ankle fracture population, one should be wary of the possible lack of unidimensionality.

Reliability and measurement error are usually assessed with a test–retest study. Often, the measurement error of an instrument is neglected, and reliability is tested only by providing an ICC. However, the assessment of measurement error, together with MIC values, adds another dimension to the interpretation of the statistical and clinical meaning of the scores. In the current review, the OMAS, SEFAS and LEFS displayed good evidence of sufficient reliability. Measurement error parameters for these instruments were reported, but the lack of MIC values for these instruments in the ankle fracture population made the interpretation incomplete. As an example, only one study reported the MIC for the OMAS [45]. When evaluated together with two other studies that reported an SDC larger than the MIC [40, 50], this implied that the OMAS cannot distinguish a clinically important change from measurement error of the instrument when the change scores lie between these two values. The quality was rated very low due to considerable risk of bias, but it still signifies the importance of reporting the measurement error and MIC.

In the assessment of subjective outcome measures such as PROMs, one can hardly declare an instrument to accommodate nearly perfect validity and reliability, hence the reluctant use of the word “gold standard”. In situations where PROMs are compared to each other, hypotheses are formed based on the assumed construct of each instrument while simultaneously acknowledging the current evidence on the comparator instruments’ measurement properties. The hypothesis testing of construct validity perhaps provides the least information regarding the validity of the application of an instrument since the method depends on the measurement properties of the comparator instruments and on the inquiring hypotheses postulated by the reviewers. However, acquiring evidence on this measurement property is a continuous process, and with growing empirical evidence, demonstration of construct validity is achievable through the process of probing hypotheses. In the current review, the OMAS was subject to the most hypothesis testing, with nine articles of varying methodological quality assessing construct validity, resulting in 75% confirmed hypotheses. The LEFS also had multiple studies of at least adequate methodological quality assessing construct validity with hypothesis testing, resulting in 87% confirmed hypotheses.

Limitations

When methodologically adequate studies are missing in the assessment of content validity, the reviewers’ rating remains the only rating. Depending on the reviewers’ level of knowledge and experience, this can introduce bias in the assessment. Likewise, for the definition of hypothesis testing for construct validity, the categorization of expected correlations was discussed and agreed upon within the review team, but this might differ for other reviewers.

Seven articles were not identified by the main search. Four of the articles did not include the word “fracture” in their title, abstract or keywords [34, 35, 37, 39]. They were also not indexed with subject headings for ankle fractures. The remaining three articles were missed because they lacked the terms or phrases found in the PROM-inclusion filter developed by the University of Oxford [33, 36, 38].

Conclusion

None of the PROMs included in this study received a category A recommendation due to lack of evidence on sufficient content validity and internal consistency. In addition, none of the PROMs had good evidence on an insufficient measurement property, leaving category C empty. Therefore, all PROMs included in this review were assigned to category B. Due to the lack of PROMs in category A, the OMAS, SEFAS and A-FORM received a temporary recommendation of use for evaluative purposes in the ankle fracture population pending additional evidence. Further research should focus on conducting high quality content validity studies for the PROMs used in this context. There is also a significant need for more empirical evidence on the remaining measurement properties of the A-FORM.

References

Court-Brown, C. M., & Caesar, B. (2006). Epidemiology of adult fractures: A review. Injury, 37(8), 691–697. https://doi.org/10.1016/j.injury.2006.04.130

Scheer, R. C., Newman, J. M., Zhou, J. J., Oommen, A. J., Naziri, Q., Shah, N. V., & Uribe, J. A. (2020). Ankle fracture epidemiology in the United States: Patient-related trends and mechanisms of injury. Journal of Foot and Ankle Surgery, 59(3), 479–483. https://doi.org/10.1053/j.jfas.2019.09.016

So, E., Rushing, C. J., Simon, J. E., Goss, D. A., Jr., Prissel, M. A., & Berlet, G. C. (2020). Association between bone mineral density and elderly ankle fractures: A systematic review and meta-analysis. Journal of Foot and Ankle Surgery, 59(5), 1049–1057. https://doi.org/10.1053/j.jfas.2020.03.012

Court-Brown, C. M., Duckworth, A. D., Clement, N. D., & McQueen, M. M. (2018). Fractures in older adults: A view of the future? Injury, 49(12), 2161–2166. https://doi.org/10.1016/j.injury.2018.11.009

van Halsema, M. S., Boers, R. A. R., & Leferink, V. J. M. (2021). An overview on the treatment and outcome factors of ankle fractures in elderly men and women aged 80 and over: A systematic review. Archives of Orthopaedic and Trauma Surgery. https://doi.org/10.1007/s00402-021-04161-y

Kadakia, R. J., Ahearn, B. M., Schwartz, A. M., Tenenbaum, S., & Bariteau, J. T. (2017). Ankle fractures in the elderly: Risks and management challenges. Orthopedic Research and Reviews, 9, 45–50. https://doi.org/10.2147/ORR.S112684

Bielska, I. A., Wang, X., Lee, R., & Johnson, A. P. (2019). The health economics of ankle and foot sprains and fractures: A systematic review of English-language published papers. Part 2: The direct and indirect costs of injury. Foot (Edinburgh, Scotland), 39, 115–121. https://doi.org/10.1016/j.foot.2017.07.003

Churruca, K., Pomare, C., Ellis, L. A., Long, J. C., Henderson, S. B., Murphy, L. E. D., & Braithwaite, J. (2021). Patient-reported outcome measures (PROMs): A review of generic and condition-specific measures and a discussion of trends and issues. Health Expectations, 24(4), 1015–1024. https://doi.org/10.1111/hex.13254

Gagnier, J. J. (2017). Patient reported outcomes in orthopaedics. Journal of Orthopaedic Research, 35(10), 2098–2108. https://doi.org/10.1002/jor.23604

Johnston, B. C., Patrick, D. L., Devji, T., Maxwell, L. J., Bingham III, C. O., Beaton, D., … Guyatt, G. H. (2021). Chapter 18: Patient-reported outcomes. In J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, & V. A. Welch (Eds.), Cochrane handbook for systematic reviews of interventions version 6.2 (updated February 2021). Cochrane. Retrieved from https://training.cochrane.org/handbook

Terwee, C. B., Prinsen, C. A. C., Chiarotto, A., Westerman, M. J., Patrick, D. L., Alonso, J., & Mokkink, L. B. (2018). COSMIN methodology for evaluating the content validity of patient-reported outcome measures: A Delphi study. Quality of Life Research, 27(5), 1159–1170. https://doi.org/10.1007/s11136-018-1829-0

McKeown, R., Rabiu, A. R., Ellard, D. R., & Kearney, R. S. (2019). Primary outcome measures used in interventional trials for ankle fractures: A systematic review. BMC Musculoskeletal Disorders, 20(1), 388. https://doi.org/10.1186/s12891-019-2770-2

Hunt, K. J., & Lakey, E. (2018). Patient-reported outcomes in foot and ankle surgery. Orthopedic Clinics of North America, 49(2), 277–289. https://doi.org/10.1016/j.ocl.2017.11.014

Hijji, F. Y., Schneider, A. D., Pyper, M., & Laughlin, R. T. (2020). The popularity of outcome measures used in the foot and ankle literature. Foot & Ankle Specialist, 13(1), 58–68. https://doi.org/10.1177/1938640019826680

Baumhauer, J. F., McIntosh, S., & Rechtine, G. (2013). Age and sex differences between patient and physician-derived outcome measures in the foot and ankle. Journal of Bone and Joint Surgery (American Volume), 95(3), 209–214. https://doi.org/10.2106/JBJS.K.01467

Guyton, G. P. (2001). Theoretical limitations of the AOFAS scoring systems: An analysis using Monte Carlo modeling. Foot and Ankle International, 22(10), 779–787. https://doi.org/10.1177/107110070102201003

Pinsker, E., & Daniels, T. R. (2011). AOFAS position statement regarding the future of the AOFAS clinical rating systems. Foot and Ankle International, 32(9), 841–842. https://doi.org/10.3113/FAI.2011.0841

Prinsen, C. A. C., Mokkink, L. B., Bouter, L. M., Alonso, J., Patrick, D. L., de Vet, H. C. W., & Terwee, C. B. (2018). COSMIN guideline for systematic reviews of patient-reported outcome measures. Quality of Life Research, 27(5), 1147–1157. https://doi.org/10.1007/s11136-018-1798-3

Mokkink, L. B., de Vet, H. C. W., Prinsen, C. A. C., Patrick, D. L., Alonso, J., Bouter, L. M., & Terwee, C. B. (2018). COSMIN risk of bias checklist for systematic reviews of patient-reported outcome measures. Quality of Life Research, 27(5), 1171–1179. https://doi.org/10.1007/s11136-017-1765-4

Ng, R., Broughton, N., & Williams, C. (2018). Measuring recovery after ankle fractures: A systematic review of the psychometric properties of scoring systems. Journal of Foot and Ankle Surgery, 57(1), 149–154. https://doi.org/10.1053/j.jfas.2017.08.009

McKeown, R., Ellard, D. R., Rabiu, A. R., Karasouli, E., & Kearney, R. S. (2019). A systematic review of the measurement properties of patient reported outcome measures used for adults with an ankle fracture. Journal of Patient-Reported Outcomes, 3(1), 70. https://doi.org/10.1186/s41687-019-0159-5

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2010). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. International Journal of Surgery (London, England), 8(5), 336–341. https://doi.org/10.1016/j.ijsu.2010.02.007

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., & Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, n71. https://doi.org/10.1136/bmj.n71

Meinberg, E. G., Agel, J., Roberts, C. S., Karam, M. D., & Kellam, J. F. (2018). Fracture and dislocation classification compendium-2018. Journal of Orthopaedic Trauma, 32(Suppl 1), S1–S170. https://doi.org/10.1097/BOT.0000000000001063

Mackintosh, A., Comabella, C. C. I., Hadi, M., Gibbons, E., Fitzpatrick, R., & Roberts, N. (2010). PROM group construct & instrument type filters February 2010.

Terwee, C. B., Jansma, E. P., Riphagen, I. I., & de Vet, H. C. (2009). Development of a methodological PubMed search filter for finding studies on measurement properties of measurement instruments. Quality of Life Research, 18(8), 1115–1123. https://doi.org/10.1007/s11136-009-9528-5

Covidence systematic review software. Veritas Health Innovation, Melbourne, Australia. Retrieved from www.covidence.org

Mokkink, L. B., Terwee, C. B., Knol, D. L., Stratford, P. W., Alonso, J., Patrick, D. L., & de Vet, H. C. (2010). The COSMIN checklist for evaluating the methodological quality of studies on measurement properties: A clarification of its content. BMC Medical Research Methodology, 10, 22. https://doi.org/10.1186/1471-2288-10-22

Abma, I. L., Rovers, M., & van der Wees, P. J. (2016). Appraising convergent validity of patient-reported outcome measures in systematic reviews: Constructing hypotheses and interpreting outcomes. BMC Research Notes, 9(1), 226. https://doi.org/10.1186/s13104-016-2034-2

de Vet, H. C. W., Terwee, C. B., Mokkink, L. B., & Knol, D. L. (2011). Measurement in medicine. Cambridge University Press.

Mokkink, L. B., Terwee, C. B., Patrick, D. L., Alonso, J., Stratford, P. W., Knol, D. L., & de Vet, H. C. (2010). The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. Journal of Clinical Epidemiology, 63(7), 737–745. https://doi.org/10.1016/j.jclinepi.2010.02.006

Guyatt, G. H., Oxman, A. D., Vist, G. E., Kunz, R., Falck-Ytter, Y., Alonso-Coello, P., & GRADE Working Group. (2008). GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ, 336(7650), 924–926. https://doi.org/10.1136/bmj.39489.470347.AD

Olerud, C., & Molander, H. (1984). A scoring scale for symptom evaluation after ankle fracture. Archives of Orthopaedic and Trauma Surgery, 103(3), 190–194. https://doi.org/10.1007/BF00435553

Repo, J. P., Tukiainen, E. J., Roine, R. P., Ilves, O., Jarvenpaa, S., & Hakkinen, A. (2017). Reliability and validity of the Finnish version of the Lower Extremity Functional Scale (LEFS). Disability and Rehabilitation, 39(12), 1228–1234. https://doi.org/10.1080/09638288.2016.1193230

Repo, J. P., Tukiainen, E. J., Roine, R. P., Kautiainen, H., Lindahl, J., Ilves, O., & Hakkinen, A. (2018). Reliability and validity of the Finnish version of the Visual Analogue Scale Foot and Ankle (VAS-FA). Foot and Ankle Surgery, 24(6), 474–480. https://doi.org/10.1016/j.fas.2017.05.009

Turhan, E., Demirel, M., Daylak, A., Huri, G., Doral, M. N., & Celik, D. (2017). Translation, cross-cultural adaptation, reliability and validity of the Turkish version of the Olerud-Molander Ankle Score (OMAS). Acta Orthopaedica et Traumatologica Turcica, 51(1), 60–64. https://doi.org/10.1016/j.aott.2016.06.012

Repo, J. P., Tukiainen, E. J., Roine, R. P., Sampo, M., Sandelin, H., & Hakkinen, A. H. (2019). Rasch analysis of the lower extremity functional scale for foot and ankle patients. Disability and Rehabilitation, 41(24), 2965–2971. https://doi.org/10.1080/09638288.2018.1483435

Shah, N. H., Sundaram, R. O., Velusamy, A., & Braithwaite, I. J. (2007). Five-year functional outcome analysis of ankle fracture fixation. Injury, 38(11), 1308–1312. https://doi.org/10.1016/j.injury.2007.06.002

Zelle, B. A., Francisco, B. S., Bossmann, J. P., Fajardo, R. J., & Bhandari, M. (2017). Spanish translation, cross-cultural adaptation, and validation of the American Academy of Orthopaedic Surgeons Foot and Ankle Outcomes Questionnaire in Mexican-Americans with traumatic foot and ankle injuries. Journal of Orthopaedic Trauma, 31(5), e158–e162. https://doi.org/10.1097/BOT.0000000000000789

Garratt, A. M., Naumann, M. G., Sigurdsen, U., Utvag, S. E., & Stavem, K. (2018). Evaluation of three patient reported outcome measures following operative fixation of closed ankle fractures. BMC Musculoskeletal Disorders, 19(1), 134. https://doi.org/10.1186/s12891-018-2051-5

McPhail, S. M., Williams, C. M., Schuetz, M., Baxter, B., Tonks, P., & Haines, T. P. (2014). Development and validation of the ankle fracture outcome of rehabilitation measure (A-FORM). Journal of Orthopaedic and Sports Physical Therapy, 44(7), 488–499. https://doi.org/10.2519/jospt.2014.4980

Suk, M., Daigl, M., Buckley, R. E., Paccola, C. A., Lorich, D. G., Helfet, D. L., & Hanson, B. (2013). TEFTOM: A promising general trauma expectation/outcome measure-results of a validation study on pan-American ankle and distal tibia trauma patients. ISRN Orthopedics, 2013, 801784. https://doi.org/10.1155/2013/801784

Erichsen, J. L., Jensen, C., Larsen, M. S., Damborg, F., & Viberg, B. (2021). Danish translation and validation of the Self-reported Foot and Ankle Score (SEFAS) in patients with ankle related fractures. Foot and Ankle Surgery, 27(5), 521–527. https://doi.org/10.1016/j.fas.2020.06.014

Lin, C. W. C., Moseley, A. M., Refshauge, K. M., & Bundy, A. C. (2009). The lower extremity functional scale has good clinimetric properties in people with ankle fracture. Physical Therapy, 89(6), 580–588. https://doi.org/10.2522/ptj.20080290

McKeown, R., Parsons, H., Ellard, D. R., & Kearney, R. S. (2021). An evaluation of the measurement properties of the Olerud Molander Ankle Score in adults with an ankle fracture. Physiotherapy, 112, 1–8. https://doi.org/10.1016/j.physio.2021.03.015

Jia, Y., Huang, H., & Gagnier, J. J. (2017). A systematic review of measurement properties of patient-reported outcome measures for use in patients with foot or ankle diseases. Quality of Life Research, 26(8), 1969–2010. https://doi.org/10.1007/s11136-017-1542-4

McPhail, S. M., Dunstan, J., Canning, J., & Haines, T. P. (2012). Life impact of ankle fractures: Qualitative analysis of patient and clinician experiences. BMC Musculoskeletal Disorders, 13(1), 224. https://doi.org/10.1186/1471-2474-13-224

Floyd, F. J., & Widaman, K. F. (1995). Factor analysis in the development and refinement of clinical assessment instruments. Psychological Assessment, 7(3), 286–299. https://doi.org/10.1037/1040-3590.7.3.286

Terwee, C. B., Bot, S. D. M., de Boer, M. R., van der Windt, D. A. W. M., Knol, D. L., Dekker, J., & de Vet, H. C. W. (2007). Quality criteria were proposed for measurement properties of health status questionnaires. Journal of Clinical Epidemiology, 60(1), 34–42. https://doi.org/10.1016/j.jclinepi.2006.03.012

Nilsson, G. M., Eneroth, M., & Ekdahl, C. S. (2013). The Swedish version of OMAS is a reliable and valid outcome measure for patients with ankle fractures. BMC Musculoskeletal Disorders, 14(1), 109. https://doi.org/10.1186/1471-2474-14-109

Schultz, B. J., Tanner, N., Shapiro, L. M., Segovia, N. A., Kamal, R. N., Bishop, J. A., & Gardner, M. J. (2020). Patient-reported outcome measures (PROMs): Influence of motor tasks and psychosocial factors on FAAM scores in foot and ankle trauma patients. Journal of Foot and Ankle Surgery, 59(4), 758–762. https://doi.org/10.1053/j.jfas.2020.01.008

Greve, F., Braun, K. F., Vitzthum, V., Zyskowski, M., Muller, M., Kirchhoff, C., & Beirer, M. (2018). The Munich ankle questionnaire (MAQ): A self-assessment tool for a comprehensive evaluation of ankle disorders. European Journal of Medical Research, 23(1), 46. https://doi.org/10.1186/s40001-018-0344-7

Buker, N., Savkin, R., Gokalp, O., & Ok, N. (2017). Validity and reliability of Turkish version of Olerud-Molander ankle score in patients with malleolar fracture. Journal of Foot and Ankle Surgery, 56(6), 1209–1212. https://doi.org/10.1053/j.jfas.2017.06.002

Lash, N., Horne, G., Fielden, J., & Devane, P. (2002). Ankle fractures: Functional and lifestyle outcomes at 2 years. ANZ Journal of Surgery, 72(10), 724–730. https://doi.org/10.1046/j.1445-2197.2002.02530.x

Ponzer, S., Nasell, H., Bergman, B., & Tornkvist, H. (1999). Functional outcome and quality of life in patients with type B ankle fractures: A two-year follow-up study. Journal of Orthopaedic Trauma, 13(5), 363–368. https://doi.org/10.1097/00005131-199906000-00007

Gausden, E. B., Levack, A., Nwachukwu, B. U., Sin, D., Wellman, D. S., & Lorich, D. G. (2018). Computerized adaptive testing for patient reported outcomes in ankle fracture surgery. Foot and Ankle International, 39(10), 1192–1198. https://doi.org/10.1177/1071100718782487

Obremskey, W. T., Brown, O., Driver, R., & Dirschl, D. R. (2007). Comparison of SF-36 and short musculoskeletal functional assessment in recovery from fixation of unstable ankle fractures. Orthopedics, 30(2), 145–151. https://doi.org/10.3928/01477447-20070201-01

Fang, C., Platz, A., Muller, L., Chandy, T., Luo, C. F., Vives, J. M. M., & Babst, R. (2020). Evaluation of an expectation and outcome measurement questionnaire in ankle fracture patients: The Trauma Expectation Factor Trauma Outcomes Measure (TEFTOM) Eurasia study. Journal of Orthopaedic Surgery (Hong Kong), 28(1), 2309499019890140. https://doi.org/10.1177/2309499019890140

Ponkilainen, V. T., Hakkinen, A. H., Uimonen, M. M., Tukiainen, E., Sandelin, H., & Repo, J. P. (2019). Validation of the Western Ontario and McMaster Universities osteoarthritis index in patients having undergone ankle fracture surgery. Journal of Foot and Ankle Surgery, 58(6), 1100–1107. https://doi.org/10.1053/j.jfas.2019.01.018

Ponkilainen, V. T., Tukiainen, E. J., Uimonen, M. M., Hakkinen, A. H., & Repo, J. P. (2020). Assessment of the structural validity of three foot and ankle specific patient-reported outcome measures. Foot and Ankle Surgery, 26(2), 169–174. https://doi.org/10.1016/j.fas.2019.01.009

Acknowledgements

We thank Elisabeth Hundstad Molland and Hilde Elin Sperrevik Magnussen for their contributions to composing the search strategy.

Funding

Open access funding provided by University of Stavanger. This study was funded by the Department of Quality and Health Technology, Faculty of Health Sciences, University of Stavanger and by the Department of Orthopedic Surgery, Stavanger University Hospital, Helse Stavanger HF.

Ethics declarations

Conflict of interest

The authors have no relevant financial or nonfinancial interests to disclose.

Ethical approval

This article does not contain research involving human or animal subjects.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nguyen, M.Q., Dalen, I., Iversen, M.M. et al. Ankle fractures: a systematic review of patient-reported outcome measures and their measurement properties. Qual Life Res 32, 27–45 (2023). https://doi.org/10.1007/s11136-022-03166-3

DOI: https://doi.org/10.1007/s11136-022-03166-3