Abstract

Purpose

A barrier to using health-related quality of life (HRQOL) questionnaires for individual patient management is knowing which score represents a problem deserving attention. We explored using needs assessments to identify HRQOL scores associated with patient-reported unmet needs.

Methods

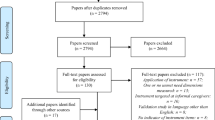

This cross-sectional study included 117 cancer patients (mean age 61 years; 51% men; 77% white) who completed the Supportive Care Needs Survey (SCNS) and EORTC QLQ-C30. SCNS scores were dichotomized as “no unmet need” versus “some unmet need” and served as an external criterion for identifying problem scores. We evaluated the discriminative ability of QLQ-C30 scores using receiver operating characteristic (ROC) analysis. Domains with an area under the ROC curve (AUC) ≥ .70 were examined further to determine how well QLQ-C30 scores predicted presence/absence of unmet need.

Results

We found AUCs ≥ .70 for 6 of 14 EORTC domains: physical, emotional, role, global QOL, pain, fatigue. All 6 domains had sensitivity ≥ .85 and specificity ≥ .50. EORTC domains that closely matched the content of SCNS item(s) were more likely to have AUCs ≥ .70. The appropriate cut-off depends on the relative importance of false positives and false negatives.

Conclusions

Needs assessments can identify HRQOL scores requiring clinicians’ attention. Future research should confirm these findings using other HRQOL questionnaires and needs assessments.

References

Aaronson, N. K., & Snyder, C. (2008). Using patient-reported outcomes in clinical practice: Proceedings of an International Society for Quality of Life Research conference. Quality of Life Research, 17, 1295.

Donaldson, G. (2009). Patient-reported outcomes and the mandate of measurement. Quality of Life Research, 18, 1303–1313.

Aaronson, N. K., Cull, A. M., Kaasa, S., & Sprangers, M. A. G. (1996). The European Organization for Research and Treatment of Cancer (EORTC) modular approach to quality of life assessment in oncology: An update. In B. Spilker (Ed.), Quality of life and pharmacoeconomics in clinical trials (2nd ed., pp. 179–189). Philadelphia: Lippincott-Raven.

Velikova, G., Booth, L., Smith, A. B., et al. (2004). Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. Journal of Clinical Oncology, 22, 714–724.

Gustafson, D. H. (2005). Needs assessment in cancer. In J. Lipscomb, C. C. Gotay, & C. Snyder (Eds.), Outcomes assessment in cancer: Measures, methods, and applications (pp. 305–328). Cambridge: Cambridge University Press.

Sanson-Fisher, R., Girgis, A., Boyes, A., et al. (2000). The unmet supportive care needs of patients with cancer. Cancer, 88, 226–237.

Bonevski, B., Sanson-Fisher, R. W., Girgis, A., et al. (2000). Evaluation of an instrument to assess the needs of patients with cancer. Cancer, 88, 217–225.

Snyder, C. F., Garrett-Mayer, E., Brahmer, J. R., et al. (2008). Symptoms, supportive care needs, and function in cancer patients: How are they related? Quality of Life Research, 17, 665–677.

Snyder, C. F., Garrett-Mayer, E., Blackford, A. L., et al. (2009). Concordance of cancer patients’ function, symptoms, and supportive care needs. Quality of Life Research, 18, 991–998.

Hosmer, D. W., & Lemeshow, S. (2000). Applied logistic regression (2nd ed.). Chichester, New York: Wiley.

Acknowledgments

The authors would like to thank David Ettinger, MD, and Charles Rudin, MD, for assistance in recruiting patients and Danetta Hendricks, MA, and Kristina Weeks, BA, BS, for assistance in coordinating the study. Drs. Snyder, Carducci, and Wu and Ms. Blackford are supported by a Mentored Research Scholar Grant from the American Cancer Society (MRSG-08-011-01-CPPB). This research was also supported by the Aegon International Fellowship in Oncology.

Technical Appendix

The receiver operating characteristic (ROC) curve is a common technique used in medical decision-making research to determine how well a potential classifying variable discriminates between two classes [10]. In the context of this paper, the potential classifying variables are the continuously measured scores on the QLQ-C30, and the two classes are the binary groupings of the SCNS domains/items. As a working example, we use the physical function domain of the QLQ-C30 as the potential classifier and the “work around the home” item from the SCNS as the binary class. For this pairing, 6 individuals had a missing result on the SCNS and 1 had a missing result on the QLQ-C30, leaving 110 patients for the analysis.

The ROC curve is a graphical representation of the trade-off between the sensitivity and specificity of a potential classifier. Sensitivity is the probability that an individual will be correctly classified as “positive” given that he or she is truly positive. Specificity is the probability that an individual will be correctly classified as “negative” given that he or she is truly negative. Writing TP, FN, FP, and TN for the numbers of true positives, false negatives, false positives, and true negatives, sensitivity is also equal to the true positive rate:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity is also equal to 1 minus the false positive rate:

$$\text{Specificity} = \frac{TN}{TN + FP} = 1 - \frac{FP}{FP + TN}$$

Two other commonly used quantities in ROC analyses are the positive predictive value (PPV) and the negative predictive value (NPV). The PPV is the probability that an individual is truly positive given that he or she is classified as positive:

$$\text{PPV} = \frac{TP}{TP + FP}$$

The NPV is the probability that an individual is truly negative given that he or she is classified as negative:

$$\text{NPV} = \frac{TN}{TN + FN}$$
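As a sketch of how these four quantities follow from a 2 × 2 classification table, the Python below computes them from cell counts. The `TwoByTwo` class and its method names are hypothetical conveniences, not part of any software used in the study, and the counts in the usage example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Cell counts of a 2 x 2 classification table (hypothetical helper)."""

    tp: int  # classified positive, truly positive
    fp: int  # classified positive, truly negative
    fn: int  # classified negative, truly positive
    tn: int  # classified negative, truly negative

    def sensitivity(self) -> float:
        # True positive rate: TP / (TP + FN)
        return self.tp / (self.tp + self.fn)

    def false_positive_rate(self) -> float:
        # FP / (FP + TN)
        return self.fp / (self.fp + self.tn)

    def specificity(self) -> float:
        # TN / (TN + FP), which equals 1 - false positive rate
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # Probability of being truly positive given a positive classification
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # Probability of being truly negative given a negative classification
        return self.tn / (self.tn + self.fn)


if __name__ == "__main__":
    # Made-up counts for illustration only (not study data).
    table = TwoByTwo(tp=8, fp=4, fn=2, tn=16)
    print(f"sensitivity = {table.sensitivity():.2f}")
    print(f"specificity = {table.specificity():.2f}")
    print(f"PPV = {table.ppv():.2f}, NPV = {table.npv():.2f}")
```

Note that sensitivity and specificity are conditioned on the true class, so they do not depend on how common unmet needs are in the sample, whereas PPV and NPV are conditioned on the classification and do.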

The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) across the range of possible cut-offs of the classifier. The example in Fig. 3 illustrates three potential curves. The diagonal curve, where sensitivity = 1 − specificity at every point, represents mere guessing: doing no better at classifying individuals than flipping a fair coin. A moderately good classifier is represented by the middle curve, which lies above the diagonal: at a given false positive rate it achieves a higher true positive rate than guessing. An even better classifier is shown by the top curve, which reaches a higher true positive rate than the middle curve at any given false positive rate. A perfect classifier’s curve passes through the point (0, 1), where the true positive rate is 100% and the false positive rate is 0%.
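To make the construction of an ROC curve concrete, the sketch below uses every observed score as a threshold and records the (false positive rate, true positive rate) pair at each. It assumes that higher scores indicate a positive; for a QLQ-C30 functional scale such as physical function, where lower scores indicate worse function, the negated scores could be passed instead. The function name `roc_points` and the toy data are hypothetical.

```python
def roc_points(scores, labels):
    """Return ROC points as (false positive rate, true positive rate) pairs.

    Assumes higher scores indicate a positive; labels are 1 (positive) or 0 (negative).
    Each distinct score is used as a threshold: score >= threshold -> classified positive.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative")

    # A threshold above every score classifies no one as positive.
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    # The lowest threshold classifies everyone as positive, giving (1, 1).
    return points


if __name__ == "__main__":
    # Toy data: both positives outscore both negatives, so the curve
    # passes through (0, 1), as a perfect classifier's should.
    print(roc_points([0.9, 0.8, 0.7, 0.6], [1, 1, 0, 0]))
```

Lowering the threshold can only add individuals to the positive group, so both rates are non-decreasing along the returned list, which is why the points trace a curve from (0, 0) to (1, 1).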

The area under the ROC curve (AUC) is a commonly used measure to describe the performance of the potential classifier. The AUC is the probability that a randomly chosen true positive will be ranked higher by the classifier than a randomly chosen true negative. Thus, an AUC of .50 represents a chance classification, and an AUC of 1.0 represents perfect classification. In our example, there are 26 individuals who are true positives (report unmet needs performing work around the home) and 84 true negatives (do not report unmet needs performing work around the home). The ROC curve in Fig. 4 for the physical function domain of the QLQ-C30 has an AUC of .81, which means that of all possible pairings of the 26 positives and 84 negatives, the positive will be more highly ranked than the negative 81% of the time.
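The probabilistic interpretation of the AUC can be computed directly by comparing every positive with every negative; with 26 positives and 84 negatives, as in the example, there are 26 × 84 = 2,184 such pairs. The sketch below assumes that higher scores indicate a positive and counts tied pairs as one half, a common convention that the text does not specify. The function name `auc_by_pairs` and the toy data are hypothetical.

```python
from itertools import product


def auc_by_pairs(scores, labels):
    """AUC as the share of (positive, negative) pairs in which the positive scores higher.

    Assumes higher scores indicate a positive; ties count as half a pair.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative")

    credit = 0.0
    for p, n in product(positives, negatives):
        if p > n:
            credit += 1.0  # positive correctly ranked above negative
        elif p == n:
            credit += 0.5  # tie: the classifier cannot separate the pair
    return credit / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Toy data: 3 of the 4 positive-negative pairs are ordered correctly.
    print(auc_by_pairs([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))  # prints 0.75
```

This pairwise count equals the area obtained by joining the ROC points with straight lines, which is why an AUC of .50 corresponds to chance and 1.0 to perfect separation.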

In our analysis, once a good classifier was identified, we examined potential cut-offs for the classifier by creating 2 × 2 tables showing the numbers of patients who were correctly and incorrectly classified. The physical function score ranges from 0 to 100 for each individual, with higher scores indicating better function, so patients scoring below a cut-off are classified as positive (having an unmet need with work around the home). For the purpose of illustration, Table 3 presents two potential cut-offs of this score: 80 and 90.

With a cut-off of 80, 31 individuals are classified positive (physical function score below 80) and 79 are classified negative (score of 80 or higher). Among the 31 classified positive, 17 are true positives, which results in a sensitivity of 17/26 = .65. Among the 79 classified negative, 70 are true negatives, which results in a specificity of 70/84 = .83. The PPV and NPV of this cut-off are 17/31 = .55 and 70/79 = .89, respectively. Raising the cut-off to 90 classifies 57 individuals as positive and 53 as negative, resulting in a more sensitive (sensitivity = 22/26 = .85) but less specific test (specificity = 49/84 = .58). The PPV and NPV of this cut-off are 22/57 = .39 and 49/53 = .92, respectively.
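The arithmetic for the two cut-offs can be checked with a few lines of Python. The sketch fills in the remaining cells of each 2 × 2 table from the counts quoted above (26 true positives and 84 true negatives overall, plus the number classified positive and the numbers of true positives and true negatives at each cut-off) and recomputes the four metrics. The helper name `cutoff_summary` is hypothetical.

```python
N_TRUE_POS = 26  # patients reporting an unmet need with work around the home
N_TRUE_NEG = 84  # patients reporting no such unmet need


def cutoff_summary(n_called_pos, tp, tn):
    """Complete the 2 x 2 table from quoted counts and return the four metrics."""
    fp = n_called_pos - tp  # classified positive but truly negative
    fn = N_TRUE_POS - tp    # truly positive but classified negative
    n_called_neg = tn + fn  # everyone classified negative
    if tn + fp != N_TRUE_NEG:
        raise ValueError("counts are inconsistent with the number of true negatives")
    return {
        "sensitivity": tp / N_TRUE_POS,
        "specificity": tn / N_TRUE_NEG,
        "PPV": tp / n_called_pos,
        "NPV": tn / n_called_neg,
    }


if __name__ == "__main__":
    # (number classified positive, true positives, true negatives) from the text
    for cutoff, counts in {80: (31, 17, 70), 90: (57, 22, 49)}.items():
        metrics = cutoff_summary(*counts)
        print(cutoff, {name: round(value, 2) for name, value in metrics.items()})
```

The completed tables also show the trade-off directly: moving from 80 to 90 reduces false negatives from 9 to 4 but increases false positives from 14 to 35.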

Cite this article

Snyder, C.F., Blackford, A.L., Brahmer, J.R. et al. Needs assessments can identify scores on HRQOL questionnaires that represent problems for patients: an illustration with the Supportive Care Needs Survey and the QLQ-C30. Qual Life Res 19, 837–845 (2010). https://doi.org/10.1007/s11136-010-9636-2

DOI: https://doi.org/10.1007/s11136-010-9636-2