Abstract

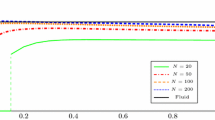

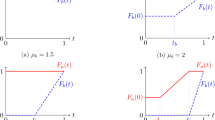

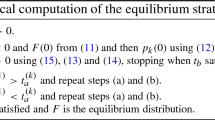

We consider the noncooperative choice of arrival times by individual users, who seek service at a first-come first-served queueing system that opens up at a given time. Each user wishes to obtain service as early as possible, while minimizing the expected wait in the queue. This problem was recently studied within a simplified fluid-scale model. Here, we address the unscaled stochastic system, assuming a finite (possibly random) number of homogeneous users, exponential service times, and linear cost functions. In this setting, we establish that there exists a unique Nash equilibrium, which is symmetric across users, and characterize the equilibrium arrival-time distribution of each user in terms of a corresponding set of differential equations. We further establish convergence of the Nash equilibrium solution to that of the associated fluid model as the number of users is increased. We finally consider the price of anarchy in our system and show that it exceeds 2, but converges to this value for a large population size.


Notes

The relation between N and M is more intricate in the stochastic case, as discussed in Sect. 6.

Exactly the same result is obtained if we keep μ fixed and then scale the resulting distribution G(t) as G(Nt).

Note that only M is required to determine the equilibrium arrival distribution. N then determines the overall system load.

The optimal social cost in the case of N=2 turns out to be \(\mu^{-1}\beta (1+ \log ( \frac{\alpha+\beta}{\beta } ) )\), with the first user arriving at t=0 and the second at \(t= \mu^{-1}\log ( \frac{\alpha+\beta}{\beta} )\). The PoA can still be seen to be larger than 2 relative to this solution.
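The closed-form values in this note can be checked numerically. The sketch below is only an illustration under an assumed cost structure that the note does not spell out: each user pays α per unit of expected waiting plus β per unit of the time at which service starts, and the first user starts service at time 0 at zero cost. The function names are hypothetical.

```python
import math

def second_user_cost(t, alpha, beta, mu):
    """Expected cost of the second user arriving at time t >= 0.

    ASSUMPTION (not stated in the note): cost = alpha * wait
    + beta * (time at which service starts); the first user arrives
    at time 0 and starts service immediately at zero cost.
    """
    # The first user is still in service at time t with probability exp(-mu t);
    # the residual Exp(mu) service time then has mean 1/mu.
    expected_wait = math.exp(-mu * t) / mu
    return alpha * expected_wait + beta * (t + expected_wait)

def optimal_second_arrival(alpha, beta, mu):
    """Arrival time of the second user quoted in the note."""
    return math.log((alpha + beta) / beta) / mu

def optimal_social_cost(alpha, beta, mu):
    """Optimal social cost for N = 2 quoted in the note."""
    return beta / mu * (1.0 + math.log((alpha + beta) / beta))
```

Under this assumed cost, evaluating `second_user_cost` at `optimal_second_arrival` reproduces `optimal_social_cost`, and a grid search over t finds no cheaper arrival time.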

These two options coincide in the fluid model.

It is relatively easy to rule out the case where \(F_{i}\) strongly dominates \(F_{j}\) (or vice versa) over \((t_{0},t_{0}+\epsilon)\), proceeding similarly to case (i). However, such dominance does not follow in general from \(F_{i}\not= F_{j}\). For example, suppose \(F_{i}(t)-F_{j}(t)=t^{3}\sin(1/t)\) for \(t>0\stackrel {\triangle}{=}t_{1}\). This is a continuous function that has an infinite number of sign changes near 0, hence neither \(F_{i}\) nor \(F_{j}\) dominates the other. We therefore resort to a more elaborate argument involving uniqueness of solutions to a certain differential equation.
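The sign changes in this example can be seen by sampling the difference at the points \(1/((k+\tfrac{1}{2})\pi)\), which decrease to 0. The following is a small illustrative sketch; the function names are hypothetical and the sampling points are chosen only for convenience.

```python
import math

def difference(t):
    """The example difference t^3 * sin(1/t) for t > 0."""
    return t ** 3 * math.sin(1.0 / t)

def sample_points(count):
    """Points t_k = 1 / ((k + 1/2) * pi), decreasing to 0 as k grows."""
    return [1.0 / ((k + 0.5) * math.pi) for k in range(count)]

def signs_alternate(count):
    """True if the difference has the sign of (-1)^k at t_k for k < count."""
    return all(
        difference(t) * (-1) ** k > 0
        for k, t in enumerate(sample_points(count))
    )
```

Since the sign flips between every pair of consecutive sample points, no interval to the right of 0 has one distribution dominating the other.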

References

1. Allon, G., Gurvich, I.: Pricing and dimensioning competing large-scale service providers. Manuf. Serv. Oper. Manag. 12(3), 449–469 (2010)

2. Armony, M., Maglaras, C.: On customer contact centers with a call-back option: customer decisions, routing rules, and system design. Oper. Res. 52(2), 271–292 (2004)

3. Glazer, A., Hassin, R.: ?/M/1: On the equilibrium distribution of customer arrivals. Eur. J. Oper. Res. 13, 146–150 (1983)

4. Hale, J.K., Verduyn Lunel, S.M.: Introduction to Functional Differential Equations. Springer, Berlin (1993)

5. Hassin, R., Haviv, M.: To Queue or Not to Queue. Kluwer Academic, Dordrecht (2003)

6. Hassin, R., Kleiner, Y.: Equilibrium and optimal arrival patterns to a server with opening and closing times. IIE Trans. 43(3), 164–175 (2011)

7. Haviv, M.: When to Arrive at a Queue with Tardiness Costs? Technical report, submitted (2010)

8. Honnappa, H., Jain, R.: Strategic arrivals into queueing networks. In: Proc. 48th Annual Allerton Conference, Illinois, pp. 820–827 (2010)

9. Juneja, S., Jain, R.: The Concert/Cafeteria queuing problem: a game of arrivals. In: Proc. ValueTools’09, Fourth ICST/ACM International Conference on Performance Evaluation Methodologies and Tools, Pisa, Italy (2009)

10. Jain, R., Juneja, S., Shimkin, N.: The concert queuing problem: to wait or to be late. Discrete Event Dyn. Syst. 21, 103–138 (2011)

11. Kumar, S., Randhawa, R.S.: Exploiting market size in service systems. Manuf. Serv. Oper. Manag. 12(3), 511–526 (2010)

12. Lasry, J.-M., Lions, P.-L.: Mean field games. Jpn. J. Math. 2(1), 229–260 (2007)

13. Lindsey, R.: Existence, uniqueness, and trip cost function properties of user equilibrium in the bottleneck model with multiple user classes. Transp. Sci. 38(3), 293–314 (2004)

14. Maglaras, C., Zeevi, A.: Pricing and design of differentiated services: approximate analysis and structural insights. Oper. Res. 53(2), 242–262 (2005)

15. McAfee, R.P., McMillan, J.: Auctions with a stochastic number of bidders. J. Econ. Theory 43(1), 1–19 (1987)

16. Newell, G.F.: The morning commute for nonidentical travellers. Transp. Sci. 21(2), 74–88 (1987)

17. Otsubo, H., Rapoport, A.: Vickrey’s model of traffic congestion discretized. Transport. Res. B 42, 873–889 (2008)

18. Rapoport, A., Stein, W.E., Parco, J.E., Seale, D.A.: Equilibrium play in single-server queues with endogenously determined arrival times. J. Econ. Behav. Organ. 55, 67–91 (2004)

19. Seale, D., Parco, J., Stein, W., Rapoport, A.: Joining a queue or staying out: effects of information structure and service time on arrival and staying out decisions. Exp. Econ. 8(2), 117–144 (2005)

20. Vickrey, W.S.: Congestion theory and transport investment. Am. Econ. Rev. 59, 251–260 (1969)

Acknowledgements

The first author would like to thank Yahoo Research India Lab for partially funding this research. This research was additionally supported by the Israel Science Foundation, grant No. 1319/11.


Appendices

Appendix A: Proofs for Sect. 3

Proof of Lemma 3

We use stochastic coupling with the two arrival processes implemented on a common probability space, and with identical service times for all users. Noting that \({\mathbf{A}}(s_{2})-{\mathbf{A}}(s_{1})= \sum_{i} 1_{\{s_{1}<T_{i}\leq s_{2}\}}\) for \(s_{1}<s_{2}\), where \(T_{i}\sim F_{i}\), it follows by the assumed dominance relation in (i) that \({\mathbf{A}}(s_{2})-{\mathbf{A}}(s_{1}) \geq\tilde{{\mathbf{A}}}(s_{2}) - \tilde{{\mathbf{A}}}(s_{1})\) for all \(s_{1}<s_{2}\leq t\). That is, the number of arrivals under \({\mathcal{F}}=\{F_{i}\}\) is at least as large as under \(\tilde{{\mathcal{F}}}\), over any time span up to \(t\). This clearly implies that \({{\mathbf{Q}}}(t)\geq\tilde{{\mathbf{Q}}}(t)\) w.p. 1, hence \(Q(t)\geq\tilde{Q}(t)\). As for (ii), with the same coupling it now follows that \({\mathbf{A}}(t) > \tilde{{\mathbf{A}}}(t)\) with positive probability, hence \({{\mathbf{Q}}}(t) > \tilde{{\mathbf{Q}}}(t)\) on an event of positive probability (e.g., when there are no service completions by time \(t\)), so that \(Q(t) > \tilde{Q}(t)\). Assertion (iii) follows by similar considerations applied to the indicator \(1_{\{{\mathbf{Q}}(t)>0\}}\) in place of \({\mathbf{Q}}(t)\). □
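The pathwise comparison in this coupling argument can be illustrated with a small simulation. This is a sketch under simplifying assumptions that are not part of the proof: the second system is obtained by dropping some arrivals of the first (which gives the required dominance of arrival counts over every interval), and the exponential service times are coupled through a shared stream of potential service completions. All names and parameter values are hypothetical.

```python
import random

def queue_length_at(t, arrival_times, service_ticks):
    """Queue length at time t for a single server.

    service_ticks are potential service completions: a tick removes one
    user if the queue is nonempty and is wasted otherwise.
    """
    events = [(a, "arrival") for a in arrival_times if a <= t]
    events += [(s, "tick") for s in service_ticks if s <= t]
    events.sort()
    queue = 0
    for _, kind in events:
        if kind == "arrival":
            queue += 1
        elif queue > 0:
            queue -= 1
    return queue

def poisson_ticks(rate, horizon, rng):
    """Points of a Poisson process with the given rate on (0, horizon]."""
    ticks, clock = [], rng.expovariate(rate)
    while clock <= horizon:
        ticks.append(clock)
        clock += rng.expovariate(rate)
    return ticks

def coupled_queues(seed, users=8, mu=1.5, t=3.0):
    """Return (Q(t), Q~(t)); the second system sees a subset of the arrivals."""
    rng = random.Random(seed)
    arrivals = [rng.uniform(-1.0, t) for _ in range(users)]
    fewer = [a for a in arrivals if rng.random() < 0.5]
    ticks = poisson_ticks(mu, t, rng)  # service can only start after time 0
    return queue_length_at(t, arrivals, ticks), queue_length_at(t, fewer, ticks)
```

In every sampled path the first component is at least as large as the second, which is the pathwise inequality that the proof then passes to expectations.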

Proof of Lemma 4

It is sufficient to establish the claim for the case where the arrival profiles are the same except for one component, as it then extends to the general case via the triangle inequality. Suppose then that \(F_{i}=\tilde{F}_{i}\) for \(i\geq 2\); we need to show that

It suffices to show that \(P\{{\mathbf{Q}}(t)>0\}=1-P\{{\mathbf{Q}}(t)=0\}\) is Lipschitz continuous. In particular, we argue that

To see this, note that (32) holds whenever \(\| F_{1} - \tilde{F}_{1}\|_{t} > \frac{1}{2}\), since

Now suppose that \((\tilde{F}_{1}(s):s \leq t)\) satisfies the constraint \(\| F_{1} - \tilde{F}_{1}\|_{t} \leq\epsilon\leq\frac{1}{2}\). Then it maximizes \({P}\{\tilde{{\mathbf{Q}}}(t)>0\}\) when

for \(0\leq s<t\) and \(\tilde{F}_{1}(t) = \min(1, {F}_{1}(t)+ \epsilon)\). To see this, consider any distribution function \(H\) such that \(\|F_{1}-H\|_{t}\leq\epsilon\). We can couple an arrival from \(H\) with an arrival from \(\tilde{F}_{1}\) by using the same random variable \(U\), distributed uniformly over \([0,1]\).

Define for any distribution function R(⋅)

Then, since \(H(s) \geq\tilde{F}_{1}(s)\) for \(s<t\), it follows that for \(U<H(t-)\), \(H^{-1}(U)<t\) and

Furthermore, \(U\leq H(t)\) is equivalent to \(H^{-1}(U)\leq t\). It then follows that

so that \(\tilde{F}_{1}^{-1}(U) \leq t\). Hence, \(U\leq H(t)\) implies \(H^{-1}(U) \leq\tilde{F}_{1}^{-1}(U) \leq t\), so that

where \(P\{{\mathbf{Q}}_{H}(t)>0\}\) corresponds to \(P\{{\mathbf{Q}}(t)>0\}\) with distribution \(F_{1}\) replaced by \(H\).

Now it is easy to see that

To see this, let \(U\) again denote a uniform random variable as above. Let \(V=U-\epsilon\) for \(U\geq\epsilon\) and \(V=1-U\) for \(U<\epsilon\). It is easy to see that \(V\) is also uniformly distributed over \([0,1]\).

We develop a stochastic coupling where \(U\) is used to generate samples from \(F_{1}\) and \(V\) is used to generate samples from \(\tilde{F}_{1}\). Samples from the remaining distributions \((F_{i}: 2\leq i\leq N)\) are kept the same in the two systems.

Then note that \(F_{1}^{-1}(U)= \tilde{F}_{1}^{-1}(V)\) except if \(U\leq\epsilon\) or \(U\geq F_{1}(t)\). For \(U>F_{1}(t)+\epsilon\), the arrivals occur after \(t\) under both distributions. Hence, under the two systems, the arrivals that occur before or at time \(t\) have different times with probability at most \(2\epsilon\). Now, (32) easily follows. □
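The claim that the transformed variable is again uniform on [0,1] can be checked with a deterministic grid. The sketch below is only a sanity check, not part of the proof; the function names and the grid size are hypothetical choices.

```python
def shifted_uniform(u, eps):
    """Map U to V as in the proof: V = U - eps if U >= eps, else V = 1 - U."""
    return u - eps if u >= eps else 1.0 - u

def max_grid_deviation(eps, n=10_000):
    """Push a midpoint grid on [0, 1] through the map and compare the sorted
    images with the same grid. A value near 0 is consistent with V being
    uniformly distributed when U is."""
    grid = [(k + 0.5) / n for k in range(n)]
    images = sorted(shifted_uniform(u, eps) for u in grid)
    return max(abs(v - g) for v, g in zip(images, grid))
```

The map sends \([\epsilon,1]\) onto \([0,1-\epsilon]\) by a shift and \([0,\epsilon)\) onto \((1-\epsilon,1]\) by a reflection, so the two images tile the unit interval without overlap.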

Appendix B: Proof of Proposition 1: Symmetry

The proof proceeds through several lemmas. The first establishes that if a certain user assigns a positive probability of arrival to a single point in time, then other users will choose not to arrive at or shortly after that time.

Lemma 12

Let \({\mathcal{F}}\) be an equilibrium profile. Suppose \(F_{i}\) has a point mass at \(t\), namely, \(F_{i}(t)>F_{i}(t-)\). Then

(i) \(F_{j}(t+\epsilon)-F_{j}(t-)=0\) for some \(\epsilon>0\) and all \(j\not=i\).

(ii) In particular, \(F_{j}\) does not have a point mass at \(t\).

Proof

Claim (ii) clearly follows from (i). To establish (i), we show that if user \(j\not= i\) arrives just before t, he will incur a cost that is smaller than if he arrives at t or shortly thereafter. Therefore, arriving at t or shortly thereafter is not a valid choice at equilibrium.

The expected queue size faced by user j arriving at t is

Here, the second equality follows by Lemma 2, the first inequality holds since \(F^{-j}=\sum_{k\not= j}F_{k}\) includes \(F_{i}\) as an additive term, and the last inequality holds since \(F_{i}\) does have a point mass at \(t\) by assumption. Note that \(Q^{-j}(t-\epsilon)\to Q^{-j}(t-)\) as \(\epsilon\downarrow 0\). Observing (7), it follows that \(C_{j}(t-\epsilon_{1},{\mathcal{F}}^{-j}) < C_{j}(t,{\mathcal{F}}^{-j})\) for \(\epsilon_{1}\) small enough.

Further, since the cost is right-continuous (Lemma 7), this inequality extends to \(s>t\), namely \(C_{j}(t-\epsilon_{1},{\mathcal{F}}^{-j}) < C_{j}(s,{\mathcal{F}}^{-j})\) for \(s\in[t,t+\epsilon]\) with \(\epsilon>0\) small enough. This means that arriving at any point in \([t,t+\epsilon]\) is not an optimal choice for \(j\), so that \(F_{j}\) must assign zero probability to that interval. Hence, \(F_{j}(t+\epsilon)-F_{j}(t-)=0\), as claimed. □

Recall from Lemma 1 that \(C_{i}(t,{\mathcal{F}}^{-i}) = c_{i}\) on a set of \(F_{i}\)-measure 1. We wish to establish that this property holds pointwise on \({\mathcal{T}}_{i}\). The next lemma establishes this claim, with the possible exception of points to which other users assign point masses. Later we will show that such point masses do not exist.

Lemma 13

Let \({\mathcal{F}}=\{F_{i}\}\) be an equilibrium profile. Then, for each \(i\),

(i) The support \({\mathcal{T}}_{i}\) of \(F_{i}\) is bounded.

(ii) \(C_{i}(t,{\mathcal{F}}^{-i})=c_{i}\), if both \(t\in{\mathcal{T}}_{i}\) and \(F^{-i}\) has no point mass at \(t\).

(iii) \(C_{i}(t-,{\mathcal{F}}^{-i}) = c_{i}\) for all \(t\in{\mathcal{T}}_{i}\).

Proof

(i) Follows since the cost \(C_{i}(s,{\mathcal{F}}^{-i})\) tends to infinity as \(|s|\to\infty\).

(ii) \(t\in{\mathcal{T}}_{i}\) implies that \((t-\epsilon,t+\epsilon)\) has positive \(F_{i}\)-measure for any \(\epsilon>0\). It follows from Lemma 1(ii) that \(C_{i}(s,{\mathcal{F}}^{-i})=c_{i}\) for some point \(s\in(t-\epsilon,t+\epsilon)\). But \(F^{-i}\) does not have a point mass at \(t\) by assumption, so that by Lemma 7, \(t\) is a continuity point of \(C_{i}(\cdot ,{\mathcal{F}}^{-i})\). It follows that \(C_{i}(t,{\mathcal{F}}^{-i})=c_{i}\) at \(t\) as well.

(iii) Consider again \(t\in{\mathcal{T}}_{i}\). If \(F^{-i}\) has no point mass at \(t\), then \(t\) is a continuity point of \(C_{i}(\cdot,{\mathcal{F}}^{-i})\) by Lemma 7, and the conclusion follows from (ii). Suppose \(F^{-i}\) does contain a point mass at \(t\). Lemma 12 then implies that \(F_{i}\) assigns zero probability to \([t,t+\epsilon)\) for some \(\epsilon>0\). But, as argued in (ii), \((t-\epsilon,t+\epsilon)\) has positive \(F_{i}\)-measure for any \(\epsilon>0\). It follows that \((t-\epsilon,t)\) has positive \(F_{i}\)-measure for all \(\epsilon>0\). It now follows by Lemma 1(ii) that \(C_{i}(s,{\mathcal{F}}^{-i})=c_{i}\) for some point \(s\in(t-\epsilon,t)\), for all \(\epsilon>0\). But since the left limit \(C_{i}(t-,{\mathcal{F}}^{-i})\) exists by Lemma 7, the claim follows. □

We next show that the equilibrium costs are the same for all users. From this, we will subsequently infer that their arrival distribution must be identical as well.

Lemma 14

In any equilibrium profile \({\mathcal{F}}\), the equilibrium costs \(c_{i}\) of the users are all the same.

Proof

It suffices to show that \(c_{j}\leq c_{i}\) for all \(j\not= i\). Fix \(i\) and \(j\). Let \(t_{i}\) be the smallest point in the support \({\mathcal{T}}_{i}\) of \(F_{i}\) (\(t_{i}\) exists since the support is bounded by Lemma 13, and closed by definition). In the next paragraph we will show that \(C_{j}(t_{i}-,{\mathcal{F}}^{-j})\leq c_{i} \); that is, an arrival of \(j\) just before \(t_{i}\) will incur a cost not exceeding \(c_{i}\). (Note that this also applies to an arrival at \(t=t_{i}\) itself, unless \(F_{j}\) has a point mass there.) This inequality clearly implies that \(c_{j}\leq c_{i}\), since \(c_{j}\) minimizes \(C_{j}(t,{\mathcal{F}}^{-j})\).

By definition of \(t_{i}\) we have that \(F_{i}(t)=0\) for \(t<t_{i}\), hence \(F^{-j}(t)\leq F^{-i}(t)\) there. Invoking the monotonicity property in Lemma 3(i), it follows that \(Q^{-j}(t_{i}-)\leq Q^{-i}(t_{i}-)\). Observing (7), this implies that \(C_{j}(t_{i}-,{\mathcal{F}}^{-j})\leq C_{i}(t_{i}-,{\mathcal{F}}^{-i})\). But the latter cost equals \(c_{i}\) by Lemma 13(iii). Therefore, \(C_{j}(t_{i}-,{\mathcal{F}}^{-j})\leq c_{i}\), and the lemma is established. □

Lemma 15

Any equilibrium profile \({\mathcal{F}}\) is symmetric, in the sense that \(F_{i}\) does not depend on \(i\).

Proof

Suppose, to the contrary, that \(F_{i}\not= F_{j}\) for some \(i\) and \(j\). Let \(t_{0}=\max\{t:F_{i}(s)=F_{j}(s),\ s<t\}\) be the maximal time up to which these distributions are identical. Note that \(t_{0}>-\infty\) since the supports of \(F_{i}\) and \(F_{j}\) are bounded, and it may then be easily seen from the definition that the maximum is indeed attained at a finite point. We consider separately the following two cases:

(i) Either \((t_{0},t_{0}+\epsilon)\not\subset{\mathcal{T}}_{i}\) for all \(\epsilon>0\), or \((t_{0},t_{0}+\epsilon)\not\subset{\mathcal{T}}_{j}\) for all \(\epsilon>0\). That is, the support of \(F_{i}\), or that of \(F_{j}\), does not extend continuously beyond \(t_{0}\).

(ii) \((t_{0},t_{0}+\epsilon)\subset{\mathcal{T}}_{i}\cap{\mathcal{T}}_{j}\) for some \(\epsilon>0\). That is, both supports extend to some interval beyond \(t_{0}\).

Case (i): Suppose the stated condition holds for \(i\) (or otherwise swap indices). Observing Assumption 1, there must exist a whole interval \((t_{0},t_{0}+\epsilon)\) which is outside of \({\mathcal{T}}_{i}\). Let \(t_{1}>t_{0}\) be the first point in \({\mathcal{T}}_{i}\) beyond \(t_{0}\) (such a point must exist because \(F_{i}(t_{0})=F_{j}(t_{0})<1\), which follows from \(F_{i}\not= F_{j}\) and the definition of \(t_{0}\)). Then \(F_{i}(t_{1}-)-F_{i}(t_{0})=0\). But this implies that \(F_{j}(t_{1}-)-F_{j}(t_{0})>0\), by definition of \(t_{0}\). It therefore follows that \(F_{j}\) strictly dominates \(F_{i}\) on \((-\infty,t_{1})\) in the sense of Lemma 3, namely \(F_{j}(t)-F_{j}(s)\geq F_{i}(t)-F_{i}(s)\) for all \(s<t<t_{1}\), with strict inequality holding for some \(s<t<t_{1}\). Applying Lemma 3(ii) with \({\mathcal{F}}={\mathcal{F}}^{-i}\) and \(\tilde{{\mathcal{F}}}={\mathcal{F}}^{-j}\), we obtain that \(Q^{-i}(t_{1}-)>Q^{-j}(t_{1}-)\). We next show that this implies \(c_{i}>c_{j}\), contradicting Lemma 14. Indeed, since \(t_{1}\in{\mathcal{T}}_{i}\), Lemma 13(iii) implies that \(C_{i}(t_{1}-, {\mathcal{F}}^{-i})=c_{i}\), while the definition of the equilibrium costs (see Lemma 1) implies that \(c_{j} \leq C_{j}(t_{1}-, {\mathcal{F}}^{-j})\). Since \(Q^{-i}(t_{1}-)>Q^{-j}(t_{1}-)\) gives \(C_{i}(t_{1}-,{\mathcal{F}}^{-i})>C_{j}(t_{1}-,{\mathcal{F}}^{-j})\) by (7), we obtain that \(c_{i}>c_{j}\), in contradiction to Lemma 14.

Case (ii): Here, we shall argue that \(F_{i}(t)=F_{j}(t)\) for \(t\in(t_{0},t_{0}+\epsilon)\). As this stands at odds with the definition of \(t_{0}\), the required contradiction will be established (see note 6).

Let \(k\) stand for \(i\) or \(j\). Since \((t_{0},t_{0}+\epsilon)\subset {\mathcal{T}}_{k}\), it follows by Lemma 12(ii) that \(F^{-k}(t)\) has no point masses for \(t\in(t_{0},t_{0}+\epsilon)\). Therefore, by Lemma 13(ii), \(C_{k}(t,{\mathcal{F}}^{-k})=c_{k}\) on that interval. But \(c_{i}=c_{j}=c_{0}\) by Lemma 14, so that \(C_{i}(t,{\mathcal{F}}^{-i})=C_{j}(t,{\mathcal{F}}^{-j})\equiv c_{0}\) for \(t\in(t_{0},t_{0}+\epsilon)\). We proceed to show that this implies \(F_{i}(t)=F_{j}(t)\) for \(t\in(t_{0},t_{0}+\epsilon)\).

Consider henceforth \(k\in\{i,j\}\) and \(t\in(t_{0},t_{0}+\epsilon)\). As \(F^{-k}\) has no point masses there we have by Lemma 2 that \(Q^{-k}(t)\) is continuous, so that the equality in (6) is in effect. Using the relations in (4) and (3), we obtain

Suppose first that \(t_{0}<0\). We directly obtain from the last equality that

Observe that \(F^{-i}=F_{0}+F_{j}\) and \(F^{-j}=F_{0}+F_{i}\), where \(F_{0}\stackrel{\triangle}{=}\sum_{m\not= i,j} F_{m}\) is common to both. It therefore follows from (34) that \(F_{j}(t)=F_{i}(t)\) on that interval, which contradicts the definition of \(t_{0}\).

We may therefore restrict attention to \(t_{0}\geq 0\). Taking the derivative in (33) and rearranging, we obtain

wherever the derivative exists. But since \(F^{-k}\) has no point masses in \([t_{0},t_{0}+\epsilon)\) (as already observed), the right-hand side may be seen to be continuous in \(t\), so that the derivative exists for all \(t>0\) in that range. A possible exception is for \(t_{0}=0\) (due to the removal of the indicator), where we simply consider the right derivative.

We next interpret (35) as a coupled pair of functional differential equations for \(F^{-i}\) and \(F^{-j}\) over \(t\geq t_{0}\). Let us start with the initial conditions \(F^{-k}(t_{0})\). As we deal with a specific equilibrium profile \({\mathcal{F}}\), we consider \(\{ F_{m}, m\not= i,j\}\) as given and fixed. We further consider \(F_{i}\) and \(F_{j}\) as given up to \(t<t_{0}\) (with \(F_{i}=F_{j}\) there). Since there is no point mass at \(t_{0}\), then by continuity \(F_{i}(t_{0})=F_{j}(t_{0})\) are given as well. Hence, \(F^{-i}(t_{0})=F^{-j}(t_{0})\) are also given and serve as initial conditions for (35).

Observe next that \(P\{{\mathbf{Q}}^{-i}(t)=0\}\) generally depends on \({\mathcal{F}}^{-i}=\{F_{m}, m\not= i\}\). Since we consider \(\{F_{m}, m\not= i,j\}\) as given, \(P\{{\mathbf{Q}}^{-i}(t)=0\}\) is effectively a function of \(F_{j}\) only, hence of \(F^{-i}=F_{0}+F_{j}\). Furthermore, we claim that \(P\{{\mathbf{Q}}^{-i}(t)=0\}\) is Lipschitz continuous in \(F_{j}\), in the sense that

for some constant \(K>0\) and all \(t\geq t_{0}\), where \({\mathbf{Q}}^{-i}\) and \(\tilde{{\mathbf{Q}}}^{-i}\) correspond to \(F_{j}\) and \(\tilde{F}_{j}\), respectively, and \(F_{j}(s)=\tilde{F}_{j}(s)\) for \(s<t_{0}\). The proof of this inequality essentially follows from Lemma 4. Evidently, the same continuity property holds when \(F_{j}\) is replaced by \(F^{-i}=F_{0}+F_{j}\). Similar observations clearly hold with \(i\) and \(j\) interchanged.

It follows that (35) is a retarded functional differential equation (in the sense of [4]) in the pair \((F^{-i},F^{-j})\) over \(t\geq t_{0}\), with initial conditions given at \(t_{0}\), and a Lipschitz continuous right-hand side. Furthermore, \((F^{-i},F^{-j})\) are continuous (given that they contain no point masses). It therefore follows by Theorem 2.2.3 in [4] that these equations have a unique solution in \([t_{0},t_{0}+\epsilon)\). But this solution must be symmetric, namely \(F^{-i}(t)=F^{-j}(t)\), as otherwise we can obtain a different solution by interchanging the two. We have thus shown that \(F_{i}(t)=F_{j}(t)\) for \(t\in(t_{0},t_{0}+\epsilon)\), and the proof of case (ii) by contradiction is complete. □

Proposition 1 now follows as a direct consequence of Lemmas 15 and 12.

Appendix C: Proofs for Sect. 4.3

Proof of Lemma 9

(i) The Lipschitz continuity of \(P_{0}\) in (14) clearly extends to the right-hand side of Eq. (12). Claim (i) now follows by standard results, for example [4, Theorem 2.2.3].

(ii) Continuous dependence of G(⋅) on the initial conditions, uniformly in t, again follows from (14) by standard results, see [4, Theorem 2.2.2]. This clearly extends to G′(t) by the Lipschitz continuity noted in that lemma.

(iii) Consider two solutions \(G\) and \(F\) with \(F(0)>G(0)\). Observe that \(P_{0}(\mathbf{F}_{0})=(1-F(0))^{M}<(1-G(0))^{M}=P_{0}(\mathbf{G}_{0})\), so that \(F'(0)>G'(0)\), and by continuity this extends to \(t\in[0,\epsilon]\) for some \(\epsilon>0\). Suppose now, by contradiction, that \(F'(t)\leq G'(t)\) for some \(t>\epsilon\), and let \(t_{0}\) be the first time for which equality holds (which exists by continuity). But then \(F'(t)>G'(t)\) for all \(t\in[0,t_{0})\), hence \(F(t)>G(t)\) there. By Lemma 3(iii), this entails that \(P_{0}(\mathbf{F}_{t_{0}})<P_{0}(\mathbf{G}_{t_{0}})\), and the FDE implies that \(F'(t_{0})>G'(t_{0})\), which contradicts the definition of \(t_{0}\). □

The following notation will be used in the next proofs. Let

(Recall that \(\tau\) is the first time at which either \(G(t)=1\) or \(G'(t)=0\).) Evidently, \(\tau=\min\{t_{0},t_{1}\}\).

Proof of Proposition 4

The proof proceeds in several steps.

1. Bounded final time: We first observe that \(\tau\) is finite, and in fact uniformly bounded as a function of \(G(0)\in[\gamma_{\min},1]\). Indeed, observe that the expected number of departures by time \(t\) is given by (cf. (4)):

$$E\bigl({\mathbf{D}}(t)\bigr)= \mu E\bigl({\mathbf{B}}(t)\bigr) =\mu\int _0^t \bigl(1- P_0( \mathbf{G}_{s})\bigr) \,ds . $$

But Eq. (12) implies that \(P_{0}(\mathbf{G}_{t})< \frac{\alpha }{\alpha +\beta}\) as long as \(G'(t)>0\), so that \(E({\mathbf{D}}(t))\geq\mu\frac{\beta}{\alpha+\beta}t\). Since \({\mathbf{D}}(t)\) cannot exceed \(M\), the number of potential arrivals, it follows that either \(G'(t)=0\) or \(G(t)=1\) must occur for some \(t \leq\frac{M(\alpha+\beta)}{\mu\beta}\stackrel {\triangle}{=}T_{\max}\). Therefore, \(\tau\leq T_{\max}\) for all \(G(0)\).

2. Monotonicity of \(t_{0}\): Suppose \(\tau=t_{0}\) for some \(G(0)=\gamma\), namely \(G'(\tau)=0\) and \(G(\tau)\leq 1\). Observe that by Lemma 9(iii) both \(G(t)\) and \(G'(t)\) are strictly increasing in \(G(0)\) for any fixed \(t\). It follows directly that \(\tau=t_{0}\) for all \(G(0)<\gamma\), and further that \(t_{0}\) is strictly increasing in \(G(0)\) there.

3. Monotonicity of \(t_{1}\): Suppose \(\tau=t_{1}\) for some \(G(0)=\gamma\), namely \(G'(\tau)\geq 0\) and \(G(\tau)=1\). It similarly follows, by monotonicity of \(G(t)\) and \(G'(t)\) in \(G(0)\), that \(\tau=t_{1}\) for all \(G(0)>\gamma\), and further that \(t_{1}\) is strictly decreasing in \(G(0)\) there.

4. Crossing to infinite \(t_{0}\), \(t_{1}\): Let \(\gamma_{0}=\sup\{G(0)\in[\gamma_{\min},1]:t_{0}<\infty\}\), and \(\gamma_{1}=\inf\{G(0)\in[\gamma_{\min},1]:t_{1}<\infty\}\). Observe that both \(t_{0}\) and \(t_{1}\) are indeed finite for some \(G(0)\) in that range. Indeed, \(t_{0}=0\) for \(G(0)=\gamma_{\min}\) (since then \(G'(0)=0\)), and \(t_{1}=0\) for \(G(0)=1\).

5. \(\gamma_{0}=\gamma_{1}\): We can infer that \(\gamma_{0}=\gamma_{1}\) from the above-mentioned monotonicity properties of \(t_{0}\) and \(t_{1}\). Indeed: \(t_{0}<\infty\) for \(G(0)<\gamma_{0}\); \(t_{1}<\infty\) for \(G(0)>\gamma_{0}\); \(t_{0}\) and \(t_{1}\) cannot both be infinite for the same \(G(0)\) since \(\tau\) is finite; and finally note that if \(t_{0}\) and \(t_{1}\) are both finite then \(t_{0}=t_{1}\) by their definition, so that this can hold for a single value of \(G(0)\) at most, due to the opposite monotonicity of \(t_{0}\) and \(t_{1}\) in \(G(0)\).

6. Properties (i) and (ii): Let \(\gamma^{*}\) denote the common value of \(\gamma_{0}\) and \(\gamma_{1}\). Given the definitions of the latter, we obtain the following:

- For \(G(0)<\gamma^{*}\), \(t_{1}=\infty\) and \(\tau=t_{0}<\infty\); that is, \(G'(\tau)=0\) and \(G(\tau)<1\).

- For \(G(0)>\gamma^{*}\), \(t_{0}=\infty\) and \(\tau=t_{1}<\infty\); that is, \(G'(\tau)>0\) and \(G(\tau)=1\).

In view of the monotonicity properties of \(t_{0}\) and \(t_{1}\), it follows that properties (i) and (ii) of the proposition are satisfied.

□
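The quantities in step 1 are simple enough to evaluate directly. The sketch below computes the bound \(T_{\max}\) and the lower bound on expected departures as stated in the proof. The helper `gamma_min` rests on an additional assumption, inferred from the surrounding text rather than stated in it: that \(G'(0)=0\) holds exactly when \((1-G(0))^{M}=\alpha/(\alpha+\beta)\). All function names are hypothetical.

```python
def t_max(M, alpha, beta, mu):
    """Upper bound T_max = M (alpha + beta) / (mu beta) on the final time tau."""
    return M * (alpha + beta) / (mu * beta)

def departures_lower_bound(t, alpha, beta, mu):
    """Lower bound mu * beta / (alpha + beta) * t on E[D(t)] while G'(t) > 0."""
    return mu * beta / (alpha + beta) * t

def gamma_min(M, alpha, beta):
    """Smallest admissible G(0).

    ASSUMPTION (inferred, not stated in this appendix): G'(0) = 0 exactly
    when the no-arrival probability (1 - G(0))^M equals alpha / (alpha + beta).
    """
    return 1.0 - (alpha / (alpha + beta)) ** (1.0 / M)
```

At \(t=T_{\max}\) the lower bound on expected departures equals \(M\), which is why neither terminal condition can be postponed beyond that time.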

Proof of Theorem 2

Consider \(\gamma^{*}\) from Proposition 4. Clearly, both terminal conditions can hold together only for \(G(0)=\gamma^{*}\). It remains to show that they indeed hold at \(\gamma^{*}\).

Suppose \(G(0)=\gamma^{*}\). Let \(t_{0}\) and \(t_{1}\) be defined as in the last proof. Recall that \(t_{0}\) and \(t_{1}\) cannot both be infinite (since \(\tau\) is bounded), and \(t_{0}=t_{1}\) when both are finite. Therefore, there are three mutually exclusive possibilities at \(\gamma^{*}\):

- Option 1: \(t_{0}=\infty\). That is, \(\tau=t_{1}<\infty\), \(G(\tau)=1\), \(G'(\tau)>0\).

- Option 2: \(t_{0}=t_{1}<\infty\). That is, \(G(\tau)=1\), \(G'(\tau)=0\).

- Option 3: \(t_{1}=\infty\). That is, \(\tau=t_{0}<\infty\), \(G(\tau)<1\), \(G'(\tau)=0\).

Option 2 is just the required property in the theorem statement. Hence, the proof will be complete once we rule out Options 1 and 3.

In the following, we let \(G(t,\gamma)\) and \(\tau(\gamma)\) denote the solution and final time that correspond to \(G(0)=\gamma\), and similarly for \(t_{0}(\gamma)\), \(t_{1}(\gamma)\). Also denote \(\tau^{*}=\tau(\gamma^{*})\). Recall that \(G(t,\gamma)\) and \(G'(t,\gamma)\) are continuous in \(t\) and \(\gamma\) (Lemma 9).

Suppose Option 1 holds at \(G(0)=\gamma^{*}\). By Proposition 4(i), for \(G(0)=\gamma<\gamma^{*}\) we have \(G'(\tau(\gamma),\gamma)=0\), \(G(\tau(\gamma),\gamma)<1\), and \(\tau(\gamma)=t_{0}(\gamma)\) is increasing in \(\gamma\) there. Define \(\hat{\tau}= \lim_{\gamma\uparrow\gamma^{*}} t_{0}(\gamma)\). Consider two cases.

(a) \(\hat{\tau}\leq\tau^{*}\). By continuity of \(G'(t,\gamma)\) in \(\gamma\) and \(t\), we obtain \(G'(\hat{\tau},\gamma^{*}) = \lim_{\gamma\uparrow\gamma ^{*}}G'(t_{0}(\gamma),\gamma)=0\). But since \(\hat{\tau}\leq\tau^{*}\) this means that \(t_{0}(\gamma^{*})=\hat{\tau} <\infty\), contrary to Option 1.

(b) \(\hat{\tau}> \tau^{*}\). This means, in particular, that \(\tau(\gamma)=t_{0}(\gamma)>\tilde {\tau}\) for some \(\tilde{\tau}>\tau^{*}\) and all \(\gamma<\gamma^{*}\) close enough to \(\gamma^{*}\). However, we will use \(G(\tau^{*},\gamma^{*})=1\) and \(G'(\tau^{*},\gamma^{*})>0\) to show that \(G(\tau^{*}+\epsilon,\gamma)>1\) must hold for any \(\epsilon>0\) and \(\gamma<\gamma^{*}\) close enough to \(\gamma^{*}\), in obvious contradiction to \(\tau(\gamma)>\tilde{\tau}\). Indeed, by continuity in \(\gamma\), \(\lim_{\gamma\uparrow\gamma^{*}} G'(\tau^{*},\gamma)= G'(\tau^{*},\gamma^{*}) \stackrel{\triangle}{=} a_{0} > 0\). Therefore, there exists \(\gamma_{1}<\gamma^{*}\) so that \(G'(\tau^{*},\gamma_{1}) \geq\frac{1}{2}a_{0}\). Further, by continuity in \(t\), there exists \(\epsilon_{1}>0\) so that \(G'(t,\gamma_{1}) \geq\frac{1}{4}a_{0}\) for \(t\in[\tau^{*},\tau^{*}+\epsilon_{1}]\). By monotonicity in \(\gamma\), this inequality extends to all \(\gamma\in[\gamma_{1},\gamma^{*})\). Therefore,

$$G\bigl(\tau^*+\epsilon_1,\gamma\bigr) \geq G\bigl(\tau^*,\gamma \bigr)+ \frac{1}{4}a_0 \epsilon_1, \quad\gamma\in[ \gamma_1,\gamma^*) . $$

Now, since \(\lim_{\gamma\uparrow\gamma^{*}}G(\tau^{*},\gamma)= G(\tau^{*},\gamma^{*})=1\), it follows that \(G(\tau^{*}+\epsilon_{1},\gamma)>1\) for \(\gamma\) close enough to \(\gamma^{*}\). But this holds for \(\epsilon_{1}\) arbitrarily small (and in particular for \(\epsilon_{1}<\hat{\tau}\)), which yields the required contradiction. This completes the argument that Option 1 is not possible.

Turning to Option 3, suppose that it holds as stated. That is, \(G(\tau^{*},\gamma^{*})<1\) and \(G'(\tau^{*},\gamma^{*})=0\). We will show that this contradicts property (ii) of \(\gamma^{*}\) in Proposition 4, namely that \(G(\tau(\gamma),\gamma)=1\) and \(G'(\tau(\gamma),\gamma)>0\) for \(G(0)=\gamma>\gamma^{*}\). Define \(\hat{\tau}= \lim_{\gamma\downarrow\gamma^{*}} t_{1}(\gamma )\). Consider again two cases.

(a) \(\hat{\tau}\leq\tau^{*}\). Then \(G(\tau^{*},\gamma^{*})\geq G(\hat{\tau},\gamma^{*}) = \lim_{\gamma \downarrow\gamma ^{*}}G(\tau(\gamma),\gamma)=1\), which contradicts \(G(\tau^{*},\gamma^{*})<1\).

(b) \(\hat{\tau}> \tau^{*}\). Here, analogously to the argument in Option 1(b), we will show that \(G'(\tau^{*}+\epsilon,\gamma)<0\) must hold for \(\epsilon>0\) and \(\gamma>\gamma^{*}\) close enough to \(\gamma^{*}\), which contradicts \(\hat{\tau}>\tau^{*}\). For that purpose, we will require some properties of the second derivative \(G''\). The argument proceeds through several claims.

(b1) For any \(\gamma\), \(G(t)<1\) and \(G'(t)=0\) imply that \(G''(t)<0\).

Indeed, by (12) \(G''(t)=-\frac{\mu}{N-1}\frac{d}{dt}P\{ {\mathbf{Q}}^{-i}(t)=0\}\). But \(\frac{d}{dt}P\{{\mathbf{Q}}^{-i}(t)=0\}>0\) follows similarly to Lemma 6.

(b2) \(G''(t,\gamma)\) is locally Lipschitz continuous in \(t\) and \(\gamma\), and uniformly so while \(G(t,\gamma)\leq 1-\epsilon<1\).

To see this, consider the differential equation (8) jointly with the evolution equations for the queue size probabilities \(p_{t}(k,m)\) in (18). Note that \(P\{{\mathbf{Q}}^{-i}(t)=0\}=\sum_{m=0}^{N-1} p_{t}(0,m)\). Together these equations can be considered as a set of ordinary differential equations, with the right-hand side smooth and uniformly bounded as long as \(1-G(t)\geq\epsilon>0\). The conclusion now follows by expressing \(G''(t)\) in terms of these variables.

(b3) \(G''(t,\gamma)\) is strictly negative in some neighborhood of \((\tau^{*},\gamma^{*})\).

Indeed, recalling that \(G(\tau^{*},\gamma^{*})<1\) and \(G'(\tau^{*},\gamma^{*})=0\), we have by (b1) that \(G''(\tau^{*},\gamma^{*})\stackrel{\triangle}{=}- b_{0}<0\). Further, by the Lipschitz continuity of \(G\) there is an \(\epsilon_{3}>0\) so that \(G(t,\gamma)<1-\epsilon_{3}\) for all \(t\leq\tau^{*}+\epsilon_{3}\) and \(\gamma\in[\gamma^{*},\gamma^{*}+\epsilon_{3}]\). Now (b2) implies that \(G''\) is uniformly Lipschitz in that region, so that \(G''(t,\gamma)< -\frac{1}{2}b_{0}\) for \((t,\gamma)\) as above, possibly with smaller \(\epsilon_{3}>0\).

(b4) Estimating \(G'\): A two-term Taylor expansion for \(G'(t)\) gives

$$G'(t,\gamma)=G'\bigl(\tau^*,\gamma\bigr)+ G''(\zeta,\gamma) \bigl(t-\tau^*\bigr) $$

for some \(\zeta=\zeta(t,\gamma)\in[\tau^{*},t]\). Restricting to \(t\in(\tau^{*},\tau^{*}+\epsilon_{3})\) and \(\gamma\in(\gamma^{*},\gamma^{*}+\epsilon_{3})\) as in (b3), we get

$$G'(t,\gamma) \leq G'\bigl(\tau^*,\gamma\bigr)- \tfrac{1}{2}b_0 \bigl(t-\tau^*\bigr) . $$

Now, since \(\lim_{\gamma\downarrow\gamma^{*}} G'(\tau^{*},\gamma)= G'(\tau^{*},\gamma^{*})=0\), it follows that

$$\lim_{\gamma\downarrow\gamma^*} G'(t,\gamma) <0 $$

for \(t\in(\tau^{*},\tau^{*}+\epsilon_{3})\). This provides the required contradiction to \(\hat{\tau}>\tau^{*}\), so that Option 3 is ruled out.

We are therefore left with Option 2, which establishes the theorem. □
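The dichotomy used in this proof (termination with \(G'(\tau)=0\), \(G(\tau)<1\) below \(\gamma^{*}\), and with \(G(\tau)=1\), \(G'(\tau)>0\) above it) suggests a bisection search for \(\gamma^{*}\). The following is a minimal sketch of that idea, not the authors' computational procedure. The routine `classify` is hypothetical: it stands for any user-supplied code that integrates the equilibrium equation from \(G(0)=\gamma\) and reports which terminal condition occurs first.

```python
def find_gamma_star(classify, lo, hi, tol=1e-9):
    """Bisect on the initial value G(0) = gamma.

    `classify(gamma)` is a HYPOTHETICAL user-supplied routine returning
      "stall" if the solution ends with G'(tau) = 0 and G(tau) < 1,
      "full"  if the solution ends with G(tau) = 1 and G'(tau) > 0.
    The bracket [lo, hi] must start at a "stall" value and end at a "full" one.
    """
    if classify(lo) != "stall" or classify(hi) != "full":
        raise ValueError("bracket must go from a 'stall' value to a 'full' value")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if classify(mid) == "stall":
            lo = mid  # gamma* lies above mid
        else:
            hi = mid  # gamma* lies at or below mid
    return 0.5 * (lo + hi)
```

For example, with a toy classifier that switches from "stall" to "full" at 0.37, `find_gamma_star(toy, 0.0, 1.0)` returns a value within the tolerance of 0.37.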


Cite this article

Juneja, S., Shimkin, N. The concert queueing game: strategic arrivals with waiting and tardiness costs. Queueing Syst 74, 369–402 (2013). https://doi.org/10.1007/s11134-012-9329-3


DOI: https://doi.org/10.1007/s11134-012-9329-3