Abstract

Consider a single-server queueing system with several classes of customers, each having its own renewal input process and its own general service time distribution. Upon completing service, customers may leave or re-enter the queue, possibly as customers of a different class. The server operates under the egalitarian processor sharing discipline. Building on prior work by Gromoll et al. (Ann. Appl. Probab. 12:797–859, 2002) and Puha et al. (Math. Oper. Res. 31(2):316–350, 2006), we establish the convergence of a properly normalized state process to a fluid limit characterized by a system of algebraic and integral equations. We show that this system of equations has a unique solution, both for a stable and for an overloaded queue. We also describe the asymptotic behavior of the trajectories of the fluid limit.


References

[1] Altman, E., Jiménez, T., Kofman, D.: DPS queues with stationary ergodic service times and the performance of TCP in overload. In: Proc. IEEE INFOCOM'04, Hong Kong (2004)

[2] Altman, E., Avrachenkov, K., Ayesta, U.: A survey on discriminatory processor sharing. Queueing Syst. 53, 53–63 (2006)

[3] Athreya, K.B., Rama Murthy, K.: Feller's renewal theorem for systems of renewal equations. J. Indian Inst. Sci. 58(10), 437–459 (1976)

[4] Ben Tahar, A., Jean-Marie, A.: Population effects in multiclass processor sharing queues. In: Proc. Valuetools 2009, Fourth International Conference on Performance Evaluation Methodologies and Tools, Pisa, October 2009

[5] Ben Tahar, A., Jean-Marie, A.: The fluid limit of the multiclass processor sharing queue. INRIA Research Report RR-6867, version 2, April 2009

[6] Berman, A., Plemmons, R.J.: Nonnegative Matrices in the Mathematical Sciences. SIAM Classics in Applied Mathematics, vol. 9 (1994)

[7] Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1968)

[8] Bramson, M.: Convergence to equilibria for fluid models of FIFO queueing networks. Queueing Syst., Theory Appl. 22(1–2), 5–45 (1996)

[9] Bramson, M.: Convergence to equilibria for fluid models of head-of-the-line proportional processor sharing queueing networks. Queueing Syst., Theory Appl. 23(1–4), 1–26 (1997)

[10] Bramson, M.: State space collapse with application to heavy traffic limits for multiclass queueing networks. Queueing Syst., Theory Appl. 30(1–2), 89–148 (1998)

[11] Chen, H., Kella, O., Weiss, G.: Fluid approximations for a processor sharing queue. Queueing Syst., Theory Appl. 27, 99–125 (1997)

[12] Dawson, D.A.: Measure-valued Markov processes. In: École d'Été de Probabilités de Saint-Flour XXI, 1991. Lecture Notes in Mathematics, vol. 1541. Springer, Berlin (1993)

[13] Durrett, R.T.: Probability: Theory and Examples, 2nd edn. Duxbury, Belmont (1996)

[14] Egorova, R., Borst, S., Zwart, B.: Bandwidth-sharing networks in overload. Perform. Eval. 64, 978–993 (2007)

[15] Gromoll, H.C.: Diffusion approximation for a processor sharing queue in heavy traffic. Ann. Appl. Probab. 14, 555–611 (2004)

[16] Gromoll, H.C., Kruk, L.: Heavy traffic limit for a processor sharing queue with soft deadlines. Ann. Appl. Probab. 17(3), 1049–1101 (2007)

[17] Gromoll, H.C., Williams, R.J.: Fluid limits for networks with bandwidth sharing and general document size distributions. Ann. Appl. Probab. 19(1), 243–280 (2009)

[18] Gromoll, H.C., Puha, A.L., Williams, R.J.: The fluid limit of a heavily loaded processor sharing queue. Ann. Appl. Probab. 12, 797–859 (2002)

[19] Gromoll, H.C., Robert, Ph., Zwart, B.: Fluid limits for processor-sharing queues with impatience. Math. Oper. Res. 33(2), 375–402 (2008)

[20] Horn, R., Johnson, C.: Matrix Analysis. Cambridge University Press, Cambridge (1985)

[21] Jean-Marie, A., Robert, P.: On the transient behavior of the processor sharing queue. Queueing Syst., Theory Appl. 17, 129–136 (1994)

[22] Puha, A.L., Williams, R.J.: Invariant states and rates of convergence for the fluid limit of a heavily loaded processor sharing queue. Ann. Appl. Probab. 14, 517–554 (2004)

[23] Puha, A.L., Stolyar, A.L., Williams, R.J.: The fluid limit of an overloaded processor sharing queue. Math. Oper. Res. 31(2), 316–350 (2006)

[24] de Saporta, B.: Étude de la solution stationnaire de l'équation Y(n+1)=a(n)Y(n)+b(n) à coefficients aléatoires. PhD thesis, University of Rennes 1 (2004)

[25] Williams, R.J.: Diffusion approximation for open multiclass queueing networks: sufficient conditions involving state space collapse. Queueing Syst., Theory Appl. 30, 27–88 (1998)

[26] Yashkov, S.F., Yashkova, A.S.: Processor sharing: a survey of the mathematical theory. Autom. Remote Control 68(9), 1662–1731 (2007)

[27] Zhang, J., Dai, J.G., Zwart, B.: Law of large number limits of limited processor-sharing queues. Math. Oper. Res. 34(4), 937–970 (2009)

Additional information

Part of this work was performed while the first author was a CNRS Postdoctoral fellow at LIRMM, then Lecturer at LMRS (UMR 6085 CNRS-Univ. Rouen).

Appendices

Appendix A: Multidimensional renewal equations

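Consider a matrix \(F(t)=(F_{ij}(t))_{i,j}\) of nondecreasing, right-continuous functions with left limits (r.c.l.l.), such that \(F_{ij}(t)=0\) when \(t<0\). Let \(\hat {F}(\theta)\) denote the Laplace–Stieltjes transform of \(F\). With the definitions of matrix-matrix and matrix-vector convolutions assumed in Sect. 1.4, define the renewal matrix as

$$U(t) = \sum_{n=0}^{\infty} F^{*n}(t), \qquad F^{*0}(t) = I\,\mathbf{1}_{\{t\geq 0\}} .$$

Let \(H\) be a vector of measurable and bounded functions. The matrix-renewal equation is the system of equations

$$V_i(t) = H_i(t) + \sum_{j} \int_{0}^{t} V_j(t-s)\,\mathrm{d}F_{ij}(s), \qquad t\geq 0,$$

for all \(i\). In vector notation, this equation can be written as \(V = H + F * V\).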

The following result is quoted from [24] (see also [3, Lemma 2.1]). The notation ϱ(A) is used here for the spectral radius of matrix A.

Lemma A.1

([24], Lemma 3, p. 23)

The function U(t) is finite for all t if, and only if, ϱ(F(0))<1.

If ϱ(F(0))<1, then V=U∗H is the unique measurable and bounded solution to the matrix-renewal equation.
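As an illustration of Lemma A.1 (not part of the original argument), the following Python sketch approximates the solution \(V=U*H\) of the matrix-renewal equation on a uniform grid. It assumes, for simplicity, that each \(F_{ij}\) has a density, so that \(F(0)=0\) and the condition \(\varrho(F(0))<1\) holds trivially. The function names and the rectangle quadrature are illustrative choices. For the scalar kernel \(F(t)=\rho(1-e^{-\mu t})\) and \(H\equiv 1\), the exact solution is \(V(t)=1+\frac{\rho}{1-\rho}\bigl(1-e^{-(1-\rho)\mu t}\bigr)\) for \(\rho\neq 1\), which serves as a check.

```python
import numpy as np

def solve_matrix_renewal(f, H, T, h):
    """Approximate V = H + F * V on [0, T] by a rectangle scheme.

    f : callable, t -> (K, K) array of densities f_ij(t) = dF_ij/dt (assumed to exist)
    H : callable, t -> (K,) array, the bounded forcing term
    Returns the grid t_0, ..., t_N and an array V of shape (N + 1, K).
    """
    n_steps = int(round(T / h))
    t = h * np.arange(n_steps + 1)
    dens = np.array([f(s) for s in t])        # dens[m] = f(t_m), shape (N + 1, K, K)
    K = dens.shape[1]
    V = np.zeros((n_steps + 1, K))
    for n in range(n_steps + 1):
        # h * sum_{m=1}^{n} f(t_m) V(t_n - t_m) approximates (F * V)(t_n);
        # V[:n][::-1] lists V(t_{n-1}), ..., V(t_0) (empty when n = 0).
        conv = np.einsum("mij,mj->i", dens[1:n + 1], V[:n][::-1])
        V[n] = H(t[n]) + h * conv
    return t, V

def exponential_kernel(rho, mu):
    """Density of the scalar kernel F(t) = rho * (1 - exp(-mu t)), as a 1 x 1 matrix."""
    return lambda s: np.array([[rho * mu * np.exp(-mu * s)]])

def exact_solution(t, rho, mu):
    """Closed-form solution for the scalar exponential kernel and H = 1 (rho != 1)."""
    return 1.0 + rho / (1.0 - rho) * (1.0 - np.exp(-(1.0 - rho) * mu * t))
```

The same code also handles \(\rho>1\), where \(V\) grows exponentially; the cost is quadratic in the number of grid points, which is acceptable for a sketch.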

The matrix \(F\) will be called lattice if there exists a real value \(\lambda>0\) such that, as a distribution function, each \(F_{ij}\) is concentrated on \(b_{ij}+n_{ij}\lambda\mathbb{Z}\) (with \(n_{ij}\in\mathbb{N}\), \(b_{ii}=0\)) and if, whenever \(a_{ij}\) is a point of increase of \(F_{ij}\), all values \((a_{ij}+a_{jk}-a_{ki})/\lambda\) are integers. A nonnegative matrix \(F\) is said to be reducible if there exists a permutation matrix \(P\) such that

$$P F P^{\mathsf{T}} = \begin{pmatrix} A & B \\ 0 & C \end{pmatrix},$$

where \(A\) and \(C\) are square matrices, and it is called irreducible if it is not reducible. The following proposition summarizes the asymptotic results we need. They are a direct consequence of the results of [3] or [24]. The irreducibility condition, which is implicit in [3], is explicitly added here.

Proposition A.1

Let \(g\) be a directly Riemann integrable function. Then:

-

(i)

If \(\varrho(F(\infty))=1\), \(F(\infty)\) is irreducible and \(F(\cdot)\) is non-lattice, then, for \(u\) and \(v\) left and right eigenvectors of \(F(\infty)\) for the eigenvalue 1, normalized so that \(u\cdot v=1\),

$$\lim_{t\to\infty} (U_{ij} * g) (t) = \frac{v_i u_j}{u \varGamma v} \int _0^{\infty} g(s) \, \mathrm {d}s ,$$

with

$$\varGamma = \int_{0}^{\infty} s \, \mathrm {d}F(s) = -\frac{\mathrm {d}\hat {F}}{\mathrm {d}\theta}(0) .$$

If one component of \(\varGamma\) is infinite, the limit above is 0.

-

(ii)

If \(\varrho(F(\infty))\not= 1\), and if there exists a positive θ 0 such that \(\varrho(\hat {F}(\theta_{0}))=1\) and \(\hat {F}(\theta_{0})\) is irreducible, then for \(\tilde{u}\) and \(\tilde{v}\) left- and right-eigenvectors of \(\hat {F}(\theta_{0})\) for the eigenvalue 1, such that \(\tilde{u}.\tilde{v}=1\),

$$\lim_{t\to\infty} (U_{ij} * g) (t) e^{-\theta_0 t} =\frac{\tilde{v}_i \tilde{u}_j}{\tilde{u}\tilde{\varGamma}\tilde{v}} \int_0^{\infty} e^{-\theta_0 s}g(s) \, \mathrm {d}s ,$$

where

$$\tilde{\varGamma} = \int_{0}^{\infty} se^{-\theta_0 s}\, \mathrm {d}F(s) = - \frac{\mathrm {d}\hat {F}}{\mathrm {d}\theta}(\theta_0) .$$

If one component of \(\tilde{\varGamma}\) is infinite, the limit above is 0.
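Case (ii) requires locating \(\theta_0\). For a nonnegative matrix the spectral radius is monotone in the entries, and the entries of \(\hat F(\theta)\) are nonincreasing in \(\theta\), so \(\theta\mapsto\varrho(\hat F(\theta))\) is nonincreasing and a bisection suffices. The Python sketch below is illustrative only: the kernel \(F(t)=M(1-e^{-\mu t})\), with a hypothetical irreducible matrix \(M\), is chosen because \(\hat F(\theta)=M\mu/(\mu+\theta)\) gives \(\theta_0=\mu(\varrho(M)-1)\) in closed form. It also computes the normalized eigenvectors and the constant \(\tilde v_i\tilde u_j/(\tilde u\tilde\varGamma\tilde v)\) of case (ii).

```python
import numpy as np

def spectral_radius(A):
    return float(np.max(np.abs(np.linalg.eigvals(A))))

def find_theta0(F_hat, hi=1.0, tol=1e-12):
    """Bisection for the root of rho(F_hat(theta)) = 1 on theta > 0.

    Assumes F_hat(theta) is entrywise nonnegative and nonincreasing in theta,
    so that theta -> rho(F_hat(theta)) is nonincreasing, and rho(F_hat(0)) > 1.
    """
    lo = 0.0
    while spectral_radius(F_hat(hi)) > 1.0:   # root lies beyond hi: enlarge the bracket
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if spectral_radius(F_hat(mid)) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def perron_vectors(A):
    """Left/right Perron eigenvectors of an irreducible nonnegative matrix, with u.v = 1."""
    w, R = np.linalg.eig(A)
    v = np.abs(np.real(R[:, np.argmax(np.real(w))]))
    wl, L = np.linalg.eig(A.T)
    u = np.abs(np.real(L[:, np.argmax(np.real(wl))]))
    return u / (u @ v), v

# Hypothetical kernel F(t) = M (1 - exp(-mu t)): F_hat(theta) = M mu / (mu + theta).
M = np.array([[0.5, 1.0], [0.8, 0.7]])
mu = 2.0
F_hat = lambda th: M * mu / (mu + th)
theta0 = find_theta0(F_hat)
u, v = perron_vectors(F_hat(theta0))
Gamma_tilde = M * mu / (mu + theta0) ** 2             # -dF_hat/dtheta at theta_0
limit_const = np.outer(v, u) / (u @ Gamma_tilde @ v)  # v_i u_j / (u Gamma~ v)
```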

Appendix B: Convergence of shifted convolutions

This section is devoted to the following technical result:

Lemma B.1

Consider:

-

A sequence of increasing functions \(A^{n}:\mathbb{R}_{+}\to\mathbb{R}\) such that \(A^{n}(0)=0\), which converges uniformly on compacts to a Lipschitz-continuous function \(A\) such that \(A(t)>0\) for \(t>0\).

-

A sequence of functions \(f^{n}: \mathbb {R}_{+}^{2} \to \mathbb {R}\) which converges uniformly on every compact subset of \((0,\infty)\times(0,\infty)\) to a continuous function \(f\) which satisfies:

-

for every \(t>0\), the function \(f_t(\cdot):(0,t]\to\mathbb{R}_{+}\) defined by \(f_t(s)=f(t,s)\) is continuous and strictly decreasing, and \(f(t,t)=0\).

Then, for every probability measure \(\nu\) on \(\mathbb{R}_{+}\) and every function \(g\in C_b(\mathbb{R}_{+})\), extended by \(g(x)=0\) for \(x<0\), the following statements hold:

-

(i)

for each \(t>0\), the function \(s\in(0,t]\mapsto\langle g(\cdot-f(t,s)),\nu\rangle\) is continuous, except on the at most countable set of values \(s\) where \(\nu(\{f(t,s)\})\neq 0\);

-

(ii)

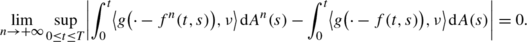

for every ε>0 and for every T>T 0>0 there exists η 0>0 and N 0>0 such that

(B.1)

(B.1)for all n≥N 0 and t∈[T 0,T];

-

(iii)

the sequence of functions \(h_{n}(t)=\int_{0}^{t}\langle g( \cdot- f(t,s) ) , \nu \rangle \, \mathrm {d}A^{n}(s)\) is equicontinuous on finite intervals;

-

(iv)

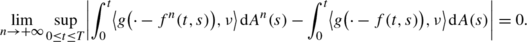

for every T>0, the following convergence holds:

(B.2)

(B.2)
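The Python sketch below illustrates, on a toy instance chosen here (not taken from the paper), the kind of uniform convergence asserted in (iv). It takes \(f^n=f\) with \(f(t,s)=t-s\) (continuous, strictly decreasing in \(s\), with \(f(t,t)=0\)), \(A(t)=t\) with step approximations \(A^n(t)=\lfloor nt\rfloor/n\), the measure \(\nu=\frac12\mathrm{Exp}(1)+\frac12\delta_1\), which has an atom, and \(g=1\) on \(\mathbb{R}_+\), extended by 0 on \((-\infty,0)\). Then \(\langle g(\cdot-c),\nu\rangle=\nu([c,\infty))\) and the limit is \(\int_0^t\nu([u,\infty))\,\mathrm{d}u=\frac12(1-e^{-t})+\frac12\min(t,1)\).

```python
import numpy as np

def tail(c):
    """nu([c, inf)) for the toy measure nu = 0.5 * Exp(1) + 0.5 * delta_1 (c may be <= 0)."""
    c = np.asarray(c, dtype=float)
    return 0.5 * np.exp(-np.maximum(c, 0.0)) + 0.5 * (c <= 1.0)

def h_n(t, n):
    """int_0^t <g(. - (t - s)), nu> dA^n(s), where A^n(s) = floor(n s)/n jumps by 1/n at s = k/n."""
    k = np.arange(1, int(np.floor(n * t + 1e-9)) + 1)
    return float(tail(t - k / n).sum()) / n

def h(t):
    """Limit int_0^t nu([t - s, inf)) ds = 0.5 * (1 - e^{-t}) + 0.5 * min(t, 1)."""
    return 0.5 * (1.0 - np.exp(-t)) + 0.5 * min(t, 1.0)

# Sup-distance on a grid of [0, 3] for increasing n.
grid = np.linspace(0.0, 3.0, 301)
errors = {n: max(abs(h_n(t, n) - h(t)) for t in grid) for n in (10, 100, 1000)}
```

The atom at 1 makes \(s\mapsto\langle g(\cdot-f(t,s)),\nu\rangle\) discontinuous at \(s=t-1\), as allowed by (i); convergence still holds because the limit \(A\) is continuous.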

Proof of (i)

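Denote by \(h_t(s)\) the function

$$h_t(s)=\langle g(\cdot-f(t,s)),\nu\rangle=\int_{\mathbb{R}_{+}} g\bigl(x-f(t,s)\bigr)\,\nu(\mathrm{d}x), \qquad s\in(0,t].$$

Let \(s\leq t\) be such that \(\nu(\{f(t,s)\})=0\), and let \((s_n)\) be a decreasing sequence converging to \(s\). We have

When rewriting the first term in (B.3), we have used the fact that \(s<s_n\) and that \(f_t(\cdot)\) is decreasing. This first term tends to 0 as \(n\to+\infty\) because \(\nu(\{f(t,s)\})=0\). By the continuity of \(f\), and since \(g\) is continuous except possibly at 0, \(g(x-f(t,s_n))-g(x-f(t,s))\to 0\) as \(n\to\infty\) for every \(x\neq f(t,s)\), hence \(\nu\)-a.e. The dominated convergence theorem implies that the second term in (B.3) tends to 0 as well. Hence, \(h_t\) is right-continuous at \(s\). A similar argument proves that it is left-continuous, hence continuous at \(s\). □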

Proof of (ii)

Note that, for all t 1<t 2,

Let \(L\) be the Lipschitz constant of the function \(A(\cdot)\). By the uniform convergence of \(A^{n}(\cdot)\) on the interval \([0,T]\), there exists \(N_0\in\mathbb{N}\) such that

for all \(0\leq t_1\leq t_2\leq T\) and \(n\geq N_0\). Let \(\varDelta =\frac{\varepsilon}{4}+ L (T-T_{0} )\). Note that \(A^{n}(T)-A^{n}(T_0)\leq\varDelta\). The difficulty in proving (B.1) lies in the fact that \(\nu\) can have atoms. Let \(\mathcal{A}\subset \mathbb {R}_{+}\) denote the set of all atoms of \(\nu\), which is at most countable. Let \(\nu^{d}=\sum_{a\in \mathcal{A}}\nu(\{a\})\delta_{a}\) be the Borel measure formed by the atoms of \(\nu\), and let \(\nu^{c}=\nu-\nu^{d}\) be its atomless part. By Lemma A.1 of [18], there exists \(\eta_1>0\) such that for all \(\eta\leq\eta_1\) we have

Since \(\sum_{a\in\mathcal{A}}\nu(\{a\})\leq1\), there exists a finite set \(\mathcal{A}_{\varepsilon}\subset\mathcal{A}\) such that

Let \(T_0<T\), \(t\in[T_0,T]\), \(n\geq N_0\) and \(\eta\leq\eta_1\). We have:

In the third line we have used (B.5), and in the last line the fact that \(A^{n}(t)-A^{n}(T_0)\leq\varDelta\) together with (B.6). If \(\mathcal{A}_{\varepsilon}=\varnothing\), let \(\eta_0=\eta_1\); the right-hand side of (B.7) is then \(\varepsilon/2\). Otherwise, for any \(0<\eta\leq\eta_1\), \(T_0\leq s\leq t\) and \(a\in\mathcal{A}_{\varepsilon}\), we have

This implies, for \(0<\eta\leq\eta_1\), \(t\in[T_0,T]\), \(a\in \mathcal{A}\) and \(n\geq N_0\), that

Since \(n\geq N_0\) and \(f_{t}^{-1}(a+\eta)\leq f_{t}^{-1}((a-\eta)^{+})\leq f_{t}^{-1}(0)=t\leq T\), by (B.4) we have

On the other hand, since \(\nu(\{a\})\bigl( f_{t}^{-1}((a-\eta)^{+}) -f_{t}^{-1}( a+\eta)\bigr)\leq t \nu(\{a\})\) and \(\sum_{a\in\mathcal {A}_{\varepsilon}} t\nu(\{a\})\leq t\), the function of \(\eta\):

is continuous, and there exists \(0<\eta_0<\eta_1\) such that

Finally, using (B.8), (B.9), and (B.10) in (B.7), we have, for \(\eta=\eta_0\),

The inequality (B.1) is therefore proved. This concludes the proof of (ii). □

Proof of (iii)

For each \(n\geq 0\), the function \(h_n(\cdot)\) is continuous on each interval \([0,T]\). Indeed, \(s\mapsto A^{n}(s)\) is a continuous and increasing function, so that it is differentiable almost everywhere, and \(h_n\) can be written as

for all \(t\leq T\). On the other hand, the function

has the following properties. First, for each \(T\geq t\geq 0\), the function \(s\mapsto\lambda(t,s)\) is bounded by \(\|g\|_{\infty}\dot{A}^{n}(s)\), which is integrable independently of \(t\). Second, for each \(T\geq s\geq 0\), the function \(t\mapsto\lambda(t,s)\) is continuous except on a countable set of values \(t\leq T\).

Hence, by Lebesgue's dominated convergence theorem, the function \(h_n\) is continuous on \([0,T]\). To prove the equicontinuity of \((h_n(\cdot),n\geq 0)\) on finite intervals, it suffices to show that, for every \(T>0\) and \(\varepsilon>0\), there exist \(\eta>0\) and \(N_0\in\mathbb{N}\) such that if \(0\leq t_1,t_2\leq T\) and \(|t_2-t_1|<\eta\), then

for all \(n\geq N_0\). Note that for each \(0\leq t_1,t_2\leq T\),

Let \(\varepsilon>0\). By the uniform convergence of \(A^{n}(\cdot)\) on \([0,T]\), there exists \(N_0\in\mathbb{N}\) such that

Let \(T_{1}=\frac{\varepsilon}{16L( \Vert g \Vert _{\infty} \vee1)}\). Inequality (B.12) implies, for \(t_2=T_1\) and \(t_1=0\),

for all \(n\geq N_0\). Thus, for \(t_1\in[0,T_1]\) and \(n\geq N_0\), using the fact that \(A^{n}(0)=0\),

The second inequality follows from the monotonicity of \(A^{n}(\cdot)\), and the third one uses (B.13). Then, by (B.11), (B.12), and (B.14), we have

Since \(t_1\) and \(t_2\) play symmetric roles in (B.11), the same estimate holds when \(t_2\in[0,T_1]\) and \(t_1\in[0,T]\).

It remains to consider the case where \(t_1,t_2\in[T_1,T]\). Let \(t_1,t_2\) be two such numbers with \(\vert t_{2}-t_{1}\vert \leq\frac{3\varepsilon}{16L(\Vert g\Vert _{\infty}\vee 1)}\). We have

for all \(n\geq N_0\). We have used (B.11) in the first inequality, and (B.12) and (B.14) in the last one.

To conclude the proof of the equicontinuity of \(h_n\), it suffices to show that there exists \(\eta_0>0\) such that if \(t_1,t_2\in[T_1,T]\) satisfy \(|t_2-t_1|<\eta_0\), then

for all \(n\geq N_0\). An estimate for the integrand in (B.16) can be derived as follows. We first introduce the shorthand notation \(m_1(s):=f(t_1,s)\) and \(m_2(s):=f(t_2,s)\), omitting the argument \(s\) when no ambiguity occurs. Let \(\varDelta= \frac{\varepsilon}{16(\Vert g \Vert _{\infty}\vee 1)}+ L(T-T_{1})\). From (B.12), \(A^{n}(T)-A^{n}(T_1)\leq\varDelta\) for all \(n\geq N_0\). Next, let \(M>0\) be such that

Such an \(M\) exists since \(\nu\) is a proper probability measure. Therefore, for every fixed \(s\), the integral

can be decomposed according to the intervals \([0,m_1\wedge m_2)\), \([m_1\wedge m_2,m_1\vee m_2)\), \([m_1\vee m_2,M]\) and \((M,+\infty)\). Using the fact that \(g(\cdot)\) vanishes on the negative half-line, we obtain the bound:

Since \(g(\cdot)\) is continuous, it is uniformly continuous on \([0,M]\), and there exists \(\delta>0\) such that

for all \(x,y\in[0,M]\) with \(|x-y|\leq\delta\). On the other hand, since \(f(\cdot,\cdot)\) is continuous on \((0,\infty)\times(0,\infty)\), it is uniformly continuous on the compact set \([T_1,T]\times[T_1,T]\). Hence, for each \(\delta'\in(0,\delta]\) there exists \(\eta(\delta')>0\) such that if \((t_1,s_1),(t_2,s_2)\in[T_1,T]\times[T_1,T]\) and \(|t_2-t_1|+|s_2-s_1|\leq\eta(\delta')\), then \(|f(t_1,s_1)-f(t_2,s_2)|<\delta'\). In particular, for all \(t_1,t_2\in[T_1,T]\) such that \(|t_2-t_1|\leq\eta(\delta')\),

Now let \(s\in[T_1,T]\), \(x\in[f(t_1,s)\vee f(t_2,s),M]\) and \(t_1,t_2\in[T_1,T]\) with \(|t_2-t_1|<\eta(\delta')\). By (B.20), \(|(x-f(t_2,s))-(x-f(t_1,s))|=|f(t_2,s)-f(t_1,s)|\leq\delta'\), and both arguments lie in \([0,M]\), so by (B.19) we have

Note that (B.20) implies \((f(t_1,s)-\delta')^{+}\leq f(t_2,s)\leq f(t_1,s)+\delta'\). Consequently, we have

We combine the estimates (B.17), (B.21), and (B.22) in (B.18) to obtain the following bound:

Coming back to the left-hand side of (B.16), we conclude that for all \(\delta'\in(0,\delta]\), \(t_1,t_2\in[T_1,T]\) such that \(|t_2-t_1|\leq\eta(\delta')\), and \(n\geq N_0\),

where the second inequality uses the fact that \(A^{n}(T)-A^{n}(T_1)\leq\varDelta\) for all \(n\geq N_0\) and \(t_1\leq T\). Applying (ii) with \(\varepsilon\) replaced by \(\varepsilon/(2\|g\|_{\infty})\), there exist \(\delta_0>0\) and \(N_0\in\mathbb{N}\) such that

for all \(n\geq N_0\). Therefore, if \(\delta_0\geq\delta\), the second term on the right-hand side of (B.24) is less than \(\varepsilon/2\); in this case it suffices to take \(\eta_0=\eta(\delta)\), and (B.16) holds. Otherwise, taking \(\delta'=\delta_0\) in (B.24), (B.16) holds with \(\eta_0=\eta(\delta_0)\). □

Proof of (iv)

Fix \(T>0\). Splitting the difference into two terms, we have

For the term (B.25), we reason as follows. Let \(0<t\leq T\). Choose \(N>0\) such that \(A^{n}(t)>0\) for all \(n\geq N\); such an \(N\) exists because \(A^{n}(t)\to A(t)>0\). For all \(n\geq N\), define \(F_n(s)=A^{n}(s)/A^{n}(t)\) if \(s<t\) and \(F_n(s)=1\) if \(s\geq t\). Then \(\{F_n(\cdot);n\geq N\}\) is a sequence of distribution functions that converges weakly to \(F(\cdot)\), where \(F(s)=A(s)/A(t)\) if \(s<t\) and \(F(s)=1\) if \(s\geq t\). By (i), the function \(s\mapsto\langle g(\cdot-f(t,s)),\nu\rangle\) is bounded and continuous, except for countably many values of \(s\in(0,t]\); since \(A\) is continuous, \(F\) has no atoms, so this countable set is \(F\)-null. Then, by the continuous mapping theorem (cf. [13, Theorem 2.3, Chap. 2]):

Then, replacing \(F_n\) and \(F\) by their expressions and using the fact that \(A^{n}(t)\to A(t)\), one deduces

Since the functions in (iii) are equicontinuous and the limit is continuous, this pointwise convergence is uniform on every finite interval, so that (B.25) tends to 0. For the term (B.26), we adopt a strategy similar to that of the proof of (ii). First, we isolate and bound the integral when \(s\) is close to 0: indeed, we have not specified the behavior of \(f(t,s)\) as \(s\to 0\), and the difference \(|f^{n}(t,s)-f(t,s)|\) is not necessarily uniformly bounded for \((t,s)\in[0,T]\times[0,T]\). Next, we discard the unbounded part of the integral with respect to \(\nu\), in order to reduce the integral to a compact set. Finally, we bound the difference on this compact set. The objective is therefore to prove that, for each given \(T>0\), \(g\in C_b(\mathbb{R}_{+})\) and \(\varepsilon>0\), there exists \(N>0\) such that for all \(n\geq N\),

Observe first that steps (B.12)–(B.14) of the proof of (iii) do not depend on the nature of the shift inside \(g\) and remain valid here. For each \(\varepsilon>0\), let therefore \(T_{1}= \frac{\varepsilon}{16L(\Vert g \Vert _{\infty} \vee1)}\): for all \(t\in[0,T_1]\) and \(n\geq N_0\) we have

The next step is to bound the part of the integral in (B.27) for \(t\in[T_1,T]\). To obtain (B.27), it suffices to find some \(N_1>0\) such that for \(n\geq N_1\):

Set \(m_1(s):=f^{n}(t,s)\) and \(m_2(s):=f(t,s)\). The arguments of the proof of (iii) between (B.17) and (B.18) still apply. Set therefore \(\varDelta=\frac{\varepsilon}{16(\Vert g \Vert _{\infty} \vee 1)}+L(T-T_{1})\), and fix \(M>0\) such that (B.17) holds. Then (B.18) holds as well. Since \(f^{n}(\cdot,\cdot)\to f(\cdot,\cdot)\) uniformly on \([T_1,T]\times[T_1,T]\), for each \(\delta'\leq\delta\) there exists \(N_1(\delta')>0\) such that

This bound implies \(|(x-m_1(s))-(x-m_2(s))|=|m_1(s)-m_2(s)|\leq\delta'\leq\delta\) for all \(T_1\leq t,s\leq T\), \(f^{n}(t,s)\vee f(t,s)\leq x\leq M\) and \(n\geq N_1(\delta')\). Then by (B.19), we have

for all \(T_1\leq t,s\leq T\), \(f^{n}(t,s)\vee f(t,s)\leq x\leq M\) and \(n\geq N_1(\delta')\). From (B.29), we have \((f(t,s)-\delta')^{+}\leq f^{n}(t,s)\leq f(t,s)+\delta'\). So,

Coming back to (B.18), we have

for all \(T_1\leq t,s\leq T\) and \(n\geq N_1(\delta')\). The first inequality follows from (B.31), (B.30), and (B.17). The estimate of the integral in (B.28) is then obtained by integrating (B.32) over \([T_1,t]\) with respect to \(\mathrm{d}A^{n}(s)\):

for all \(T_1\leq t\leq T\) and \(n\geq N_1(\delta')\vee N_0\). The last term in (B.33) is estimated in the same way as the last term in (B.24), using (ii). □

Appendix C: Relationship with the single-class case

We begin by stating, in the following lemma, the properties of the sequences \(\{V_k(i);i\geq1\}\) and \(\{V^{0}_{k}(i);i \geq1\}\) defined in Sect. 5.1. When there is no ambiguity, we also denote by \(V_k(x)\) the common distribution function of the random variables \(V_k(i)\), and similarly for \(V^{0}_{k}\).

Lemma C.1

We have the following properties:

-

(i)

The sequence \(\{V_k(i);i\geq1\}\) is i.i.d. with common distribution function given by

$$ V_k (x) = \bigl( e \bigl(I-P'\bigr) (\mathcal{B} * B) (x) \bigr) _k , \tag{C.1}$$

with Laplace transform given by

$$ \hat{V}_k (s) = \bigl( e \bigl( I -P'\bigr) \bigl( I - \hat {B}(s)P'\bigr)^{-1} \hat {B}(s) \bigr) _k , \tag{C.2}$$

and with the first two moments

$$ \mathbb{E}(V_k) = ( e\beta Q)_k , \qquad \mathbb{E}\bigl(V^2_k\bigr) =\bigl( e\bigl( \beta^{(2)}+ 2 \beta P'Q \beta\bigr) Q\bigr)_{k} , \tag{C.3}$$

where \(\beta=\operatorname{diag}\{\langle\chi,\nu_{k}\rangle\}\) and \(\beta^{(2)}=\operatorname{diag}\{\langle\chi^{2},\nu_{k}\rangle\}\).

-

(ii)

The sequence \(\{V^{0}_{k} (i); i \geq1\}\) is i.i.d. with common distribution function given by

$$ V^0_k (x) = \bigl( e\bigl(I-P'\bigr) \bigl(\mathcal{B} * B^0\bigr) (x) \bigr) _k . \tag{C.4}$$

Proof

The proofs of (i) and (ii) are similar; we prove (i). The total service time of a customer of class \(k\) is the sum of one service time \(v_k\) and of the service it requires after the end of this service. The latter duration is distributed according to \(V_j\) with probability \(p_{kj}\), and is zero with probability \(p_{k0}\). The service time after reentering the queue is independent of the first service. Accordingly, we have the identity for distribution functions:

$$V_k(x) = p_{k0}\,B_k(x) + \sum_{j\in\mathcal{K}} p_{kj}\,(B_k * V_j)(x).$$

Expressed in vector-matrix form, with \(V(x) = (V_{k}(x); k\in\mathcal{K})\) (a row vector), we have

and this is a multidimensional renewal equation in the sense of Lemma A.1. By application of the lemma, we obtain \(V(x) = e (I-P') ( \mathcal{B} * B )(x)\), hence (C.1). □
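The moment formulas (C.3) can be checked by simulation. The Python sketch below is an illustration under stated assumptions, not part of the paper: service times at each visit are taken exponential with means \(\beta_k\) (so \(\mathbb{E}v_k^2=2\beta_k^2\)), the two-class routing matrix is hypothetical, `P[k, j]` stores \(p_{kj}\) so that \(P'\) is `P.T`, and \(Q\) is taken to be \((I-P')^{-1}\), the definition under which (C.3) follows from (C.2).

```python
import numpy as np

def moments_C3(P, beta, beta2):
    """E(V_k) = (e beta Q)_k and E(V_k^2) = (e (beta^(2) + 2 beta P' Q beta) Q)_k.

    P[k, j] = p_kj (routing from class k to class j), so P' = P.T; Q = (I - P')^{-1}
    is an assumption here. beta, beta2: per-visit first and second service moments.
    """
    K = len(beta)
    Pt = P.T
    Q = np.linalg.inv(np.eye(K) - Pt)
    e = np.ones(K)
    B1, B2 = np.diag(beta), np.diag(beta2)
    return e @ B1 @ Q, e @ (B2 + 2.0 * B1 @ Pt @ Q @ B1) @ Q

def simulate_V(P, beta, k, n, rng):
    """Monte Carlo of the total service time of n class-k customers (exponential services)."""
    K = len(beta)
    cum = np.cumsum(np.hstack([P, (1.0 - P.sum(axis=1))[:, None]]), axis=1)
    cum[:, -1] = 1.0                        # last column: leave the system
    cls = np.full(n, k)
    total = np.zeros(n)
    alive = np.ones(n, dtype=bool)
    while alive.any():
        idx = np.flatnonzero(alive)
        total[idx] += rng.exponential(beta[cls[idx]])   # one service at the current class
        u = rng.random(idx.size)
        nxt = (u[:, None] > cum[cls[idx]]).sum(axis=1)  # next class, or K for exit
        left = nxt == K
        alive[idx[left]] = False
        cls[idx[~left]] = nxt[~left]
    return total
```

The mean formula is equivalent to solving \((I-P)m=\beta\) for the column vector of means, which gives an independent check of the matrix algebra.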

Coming back to our multiclass queue, a customer taken “at random” in the external input flow will be of class k with probability α k /α e , where \(\alpha_{e} := \sum_{k\in\mathcal{K}}\alpha_{k} = e.\alpha\) is the “equivalent” arrival rate of single-class customers. Accordingly, the service time distribution of such a typical customer should be a mixture of the distributions V k with these probabilities. The following result summarizes the properties of this distribution.

Lemma C.2

Consider the random variable \(v^s\) whose distribution \(B^s\) is the mixture of the \(V_k\) with weights proportional to the arrival rates \(\alpha_k\): \(B^{s}(x)=\sum_{k\in\mathcal{K}} \alpha_{k} V_{k}(x) /\alpha_{e}\). The Laplace transform of this distribution is given by:

Its first two moments \(\beta^{s} = \mathbb {E}{v^{s}}\) and \(\beta^{s,2}= \mathbb {E}{(v^{s})^{2}}\) are given by:

Let the excess life distribution associated to B s(⋅) be denoted by \(B^{s}_{e}(\cdot)\), with first moment \(\beta_{e}^{s}\). Its Laplace transform satisfies the identities:

Proof

The formulas for the Laplace–Stieltjes transform and the moments are direct consequences of Lemma C.1. Expression (C.6) is the application of the classical formula \(\hat {B}^{s}_{e}(\theta) = (1-\hat {B}^{s}(\theta))/(\theta\beta^{s})\). The second expression (C.7) is derived from the first one as

and simplifying. Finally, the expansion (C.8) follows from the fact that \(\beta^{s}_{e} = \beta^{s,2}/(2\beta^{s})\). □
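A quick numerical check of the classical excess-life identities used above, written as a Python sketch. As a hypothetical stand-in for \(B^s\) (which in the text is the \(\alpha\)-weighted mixture of the \(V_k\)), it uses a two-component mixture of exponential distributions with weights `w` and rates `lam` chosen for illustration. It compares \(\hat B^s_e(\theta)=(1-\hat B^s(\theta))/(\theta\beta^s)\) with a direct quadrature of \(\int_0^\infty e^{-\theta x}(1-B^s(x))/\beta^s\,\mathrm{d}x\), and the slope of \(\hat B^s_e\) at 0 with \(\beta^{s,2}/(2\beta^s)\).

```python
import numpy as np

# Hypothetical mixture of exponentials standing in for B^s.
w = np.array([0.3, 0.7])      # mixture weights (sum to 1)
lam = np.array([0.5, 3.0])    # exponential rates

beta_s = float(np.sum(w / lam))              # first moment of v^s
beta_s2 = float(np.sum(2.0 * w / lam**2))    # second moment of v^s

def B_hat(theta):
    """Laplace-Stieltjes transform of the mixture."""
    return float(np.sum(w * lam / (lam + theta)))

def Be_hat(theta):
    """Classical excess-life transform (1 - B_hat(theta)) / (theta * beta_s), theta > 0."""
    return (1.0 - B_hat(theta)) / (theta * beta_s)

def Be_hat_quadrature(theta, x_max=80.0, n=400_001):
    """Trapezoid rule for int_0^inf e^{-theta x} (1 - B(x)) / beta_s dx."""
    x = np.linspace(0.0, x_max, n)
    survival = w @ np.exp(-np.outer(lam, x))       # 1 - B(x)
    y = np.exp(-theta * x) * survival / beta_s
    dx = x[1] - x[0]
    return float(dx * (y.sum() - 0.5 * (y[0] + y[-1])))

eps = 1e-5
beta_e_numeric = (1.0 - Be_hat(eps)) / eps   # approximates -d/dtheta Be_hat at 0
beta_e_formula = beta_s2 / (2.0 * beta_s)
```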

Consider now the processor sharing queue with a single class of customers, arrival rate \(\alpha_e\), and service time distribution \(B^s\), whose first and second moments are denoted by \(\beta^s\) and \(\beta^{s,2}\). We have the following result. Its first two statements are Proposition 2 of [21], and the last one is a simple consequence of them, obtained using flow conservation equations.

Proposition C.1

Assume that \(\alpha_e\beta^s>1\). There exists a unique positive solution \(\theta_0\) to the equation:

If \(L(t)\) is the number of customers in the single-class system at time \(t\), then a.s.:

If \(D(t)\) is the number of customers that have departed from the system by time \(t\), then a.s.:
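The equation defining \(\theta_0\) is not reproduced above. The Python sketch below assumes that it has the standard overload form \(\rho\hat B^s_e(\theta)=1\) with \(\rho=\alpha_e\beta^s\), which by the classical formula \(\hat B^s_e(\theta)=(1-\hat B^s(\theta))/(\theta\beta^s)\) is equivalent to \(\alpha_e(1-\hat B^s(\theta))=\theta\). The function \(\varphi(\theta)=\alpha_e(1-\hat B^s(\theta))-\theta\) is concave, vanishes at 0, has slope \(\alpha_e\beta^s-1>0\) there, and is negative at \(\theta=\alpha_e\), so bisection on \([0,\alpha_e]\) finds the unique positive root. For exponential service with rate \(\mu\), the root is \(\alpha_e-\mu\), the familiar growth rate of an overloaded M/M/1 processor sharing queue.

```python
import numpy as np

def theta0_overload(alpha, B_hat, tol=1e-12):
    """Unique positive root of alpha * (1 - B_hat(theta)) = theta, assuming alpha * beta > 1.

    phi(theta) = alpha * (1 - B_hat(theta)) - theta is concave with phi(0) = 0 and
    phi'(0) > 0, so phi > 0 on (0, theta0) and phi <= 0 on [theta0, inf).
    """
    phi = lambda th: alpha * (1.0 - B_hat(th)) - th
    lo, hi = 0.0, alpha                    # phi(alpha) = -alpha * B_hat(alpha) < 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if phi(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Exponential service with rate mu: B_hat(theta) = mu / (mu + theta), so theta0 = alpha - mu.
mu, alpha = 1.0, 3.0
theta0 = theta0_overload(alpha, lambda th: mu / (mu + th))
```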

We have now gathered enough information to state the correspondence results with the single-class case. We refer to [23] for the terminology associated to the single-class queue.

Lemma C.3

Consider a processor sharing queue with arrival rate \(\alpha_e\) and service time distribution \(B^s\), associated with the Borel measure \(\nu^s\). Assume that the data \((\alpha_e,\nu^s)\) are supercritical. Consider the initial measure \(\xi\) such that

where \(\mathcal{B}(t)=\sum_{n\geq0} ( BP')^{*n}(t)\) and C(t)=(I−B 0(t))+(I−B(t))QP′. Then the function T is given by:

where \(U_{e}(u) = \sum_{n=0}^{\infty} \rho^{n}(B^{s}_{e})^{*n}(u)\), \(H(x)=\int_{0}^{x}\langle1_{(y,+\infty)} , \xi \rangle \,\mathrm{d}y\), and \(B^{s}_{e}(\cdot)\) is the excess life distribution associated with \(B^{s}(\cdot)\). The Laplace–Stieltjes transform of \(T\) is given by

where

Proof

From (4.12), it is easy to see that the Laplace–Stieltjes transform of \(T\) must satisfy \(\hat {T}(\theta) =\hat {H}(\theta) + \rho \hat {B}^{s}_{e}(\theta) \hat {T}(\theta)\), which readily gives (C.9). The expression for \(\hat {H}(\theta)\) uses the identity \(\mathcal{B}*C = Q - \mathcal{B}*B^{0}\) mentioned in the proof of Lemma 4.2, and the fact that if \(H(t) =\int_{0}^{t} h(u)\, \mathrm {d}u\), then \(\hat {H}(\theta) = \theta^{-1}\hat{h}(\theta)\). □

Appendix D: Laws of large numbers for visits and residual times

In the following lemmas we give the properties of the sequences \(\{N_{lk}(i),i\geq1\}\) and \(\{V_{lk}(i,n),i\geq1,n\geq1\}\) introduced in Sect. 5.2, where \(l,k\in \mathcal{K}\). For each \(j=1,\ldots,N_{lk}(i)\), let \(\tilde{V}_{lkk}(i,j)\) be the sum of the service times received by the \(i\)th customer from just after its \(j\)th visit to class \(k\) up to and including its \((j+1)\)st visit. Because customer routing is memoryless (Markovian) and successive service times are independent, for each \(l,k\in\mathcal{K}\) and each \(i\), the sequence \(\{ \tilde{V}_{lkk}(i,j) \}_{j=1}^{N_{lk}(i)}\) is i.i.d. and its common distribution does not depend on \(l\). Accordingly, we drop the reference to \(l\) in the notation, which becomes \(\tilde{V}_{kk}\). For different \(i\), the sequences are independent. Finally, for each \(n=1,\ldots,N_{lk}(i)\), \(V_{lk}(i,n)\) can be expressed on the event \(\{N_{lk}(i)\geq m\}\) as

for all n=1,…,m.

Lemma D.1

For each \(l,k\in\mathcal{K}\), the sequence \(\{N_{lk}(i),i\geq1\}\) is i.i.d. with distribution given by \(\mathbb{P}(N_{lk}(i)=0)=1-f_{lk}\) and, for \(m\geq1\):

Here, \(f_{lk}=Q_{kl}/Q_{kk}\) if \(l\neq k\), and \(f_{kk}=(P'Q)_{kk}/Q_{kk}\).

Proof

In fact, \(N_{lk}(i)\) is the number of visits to state \(k\), starting from state \(l\), of a time-homogeneous Markov chain on \(\mathcal{K}\) with (substochastic) transition matrix \(P\), killed when the customer leaves the system. The sequence is i.i.d. because routing events of different customers are independent. Since the spectral radius of \(P\) is less than 1, we have (D.2) (cf. [13, Sect. 5.3, Chap. 5]). □
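The formulas for \(f_{lk}\) can be checked against a direct simulation of the routing chain. In the Python sketch below (an illustration, not taken from the paper), `P[k, j]` stores \(p_{kj}\), \(Q\) is assumed to be \((I-P')^{-1}\), and the routing matrix is hypothetical. The law (D.2) is not reproduced above, so the check uses the standard geometric form \(\mathbb{P}(N_{lk}=m)=f_{lk}f_{kk}^{m-1}(1-f_{kk})\) for \(m\geq1\), which holds for visit counts of a killed Markov chain.

```python
import numpy as np

def visit_probabilities(P):
    """f[l, k] as in Lemma D.1, from Q = (I - P')^{-1} with P[k, j] = p_kj (assumed)."""
    K = P.shape[0]
    Q = np.linalg.inv(np.eye(K) - P.T)
    d = np.diag(Q)
    f = Q.T / d[None, :]                          # f[l, k] = Q[k, l] / Q[k, k]  (l != k)
    f[np.diag_indices(K)] = np.diag(P.T @ Q) / d  # f[k, k] = (P'Q)_kk / Q_kk
    return f

def simulate_visits(P, l, k, n, rng):
    """Number of entries into class k for n customers starting in class l (start not counted)."""
    K = P.shape[0]
    cum = np.cumsum(np.hstack([P, (1.0 - P.sum(axis=1))[:, None]]), axis=1)
    cum[:, -1] = 1.0                              # column K: leave the system
    cls = np.full(n, l)
    count = np.zeros(n, dtype=int)
    alive = np.ones(n, dtype=bool)
    while alive.any():
        idx = np.flatnonzero(alive)
        u = rng.random(idx.size)
        nxt = (u[:, None] > cum[cls[idx]]).sum(axis=1)
        left = nxt == K
        alive[idx[left]] = False
        stay = idx[~left]
        cls[stay] = nxt[~left]
        count[stay] += (nxt[~left] == k)
    return count
```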

A shorthand notation will be useful in the following. For a fixed element \(k\) of \(\mathcal{K}\) (its value will always be clear from the context), let \(\bar{\mathcal{K}}=\mathcal{K}\setminus\{k\}\).

Lemma D.2

Provided that \(f_{lk}>0\) and \(f_{kk}>0\), respectively, the distributions \(V_{lk}\) and \(\tilde{V}_{kk}\) of the random variables \((V_{lk}(1,1)\mid N_{lk}(1)\geq1)\) and \((\tilde{V}_{kk}(1,1)\mid N_{kk}(1)\geq1)\) are given by

Moreover, we have

for all m≥2 and n=2,…,m.

Proof

Conditioning on the sequence of classes visited by the customer:

Since \(\mathbb{P}(N_{lk}\geq1)=f_{lk}\), we have (D.3). A similar reasoning gives (D.4). Property (D.6) follows from (D.1): since \(V_{lk}(1,n)-V_{lk}(1,n-1)\) is the sum of the service times over one cycle from class \(k\) back to class \(k\), we conclude using (D.4). □

Lemma D.3

For each \(l,k\in\mathcal{K}\), we have

Moreover, we have the following expected value:

Proof

Let us prove (D.7). For each \(l,k\in\mathcal{K}\), denote by

and observe that Ψ lk ∗B l =V lk f lk and \(\varPsi_{kk}=\tilde{V}_{kk}f_{kk}\). It suffices to prove that

For each \(k\in\mathcal{K}\), let \(\hat {B}_{k}\) be the Laplace transform of the distribution \(B_k\), and let \(\hat{\mathcal{B}}\) be the Laplace transform of the matrix function \(\mathcal{B}:=\sum_{n=0}^{\infty}(BP')^{\ast n}\), which is \(\hat{\mathcal{B}}=(I-\hat {B}P')^{-1}\). The formula for inverting a partitioned matrix (see, e.g., [20, p. 18]) yields, for the partition \(\{k\},\bar{\mathcal{K}}\):

Since the Laplace transform of Ψ kk is \((\hat {B}P')_{k\bar {\mathcal{K}}}(I-(\hat {B}P')_{\bar{\mathcal{K}}\bar{\mathcal{K}}})^{-1}(\hat {B}P')_{\bar{\mathcal{K}}k}\) and \(\hat{\mathcal{B}}_{kk}>0\), we have from (D.10)

Hence, \(\sum_{n=0}^{\infty}(\hat{\varPsi}_{kk})^{n}=\hat{\mathcal{B}}_{kk}\) and, by the uniqueness of the Laplace transform, the first identity in (D.9) is satisfied. For the second identity, by definition of \(\varPsi_{lk}\), we have

where \(\hat{\varPsi}_{\bar{\mathcal{K}}k}=(\hat{\varPsi}_{lk},l\in\bar {\mathcal{K}})\) and \(\hat{\varPsi}_{lk}\) is the Laplace transform of \(\varPsi_{lk}\). By (D.11), for \(l\in\bar{\mathcal{K}}\) we have \(\hat{\varPsi}_{lk}=\hat{\mathcal{B}}_{kk}^{-1}\hat{\mathcal{B}}_{kl}\). Then the Laplace transform of \(\sum_{n=0}^{\infty}(\varPsi_{kk})^{\ast (n)}\ast\varPsi_{lk}\) is \(\hat{\mathcal{B}}_{kl}\).

This proves the lemma. □

Lemma D.4

(Convergence of random sums)

Consider a sequence of positive real numbers \(r\to\infty\). For each \(r\), let \(N^{r}\) be an integer-valued random variable with distribution \(\rho^{r}\), and let \(\{ z^{r}_{i} \}_{i=1}^{\infty}\) be a sequence of random variables such that \(z^{r}_{1}\) and the increments \(z^{r}_{i+1} - z^{r}_{i}\) are conditionally independent and have the following conditional distributions:

Let ρ, ν 0 and ν be probability distributions, and let:

Finally, let \(g\) be a Borel-measurable, \(\nu_s\)-a.e. continuous function. Assume that the following holds as \(r\to\infty\): \(\nu^{r}\stackrel{w}{\longrightarrow} \nu\), \(\nu_{0}^{r}\stackrel{w}{\longrightarrow} \nu_{0}\), and \(\rho^{r}\stackrel{w}{\longrightarrow} \rho\). Then the sequence of random variables

converges in distribution.

Proof

Let \(h:\mathbb{R}_{+}\to\mathbb{R}_{+}\) be a bounded continuous function. It suffices to prove that the limit as \(r\to\infty\) of

exists and is finite. Conditioning on the value of N r, we have

Let \(p\geq1\) be such that \(\rho(\{p\})>0\) (which implies \(\rho([p,\infty))>0\)). As a consequence of the \(\nu_s\)-a.e. continuity, \(g\) is a.e. continuous with respect to the measure \(\nu_{0}*\nu^{*(p-1)}\), and each term \(g(x_1+\cdots+x_p)\) is a.e. continuous with respect to the product measure \(\nu_{0}^{r}(\mathrm {d}x_{1}) \times\nu^{r}(\mathrm {d}x_{2})\times\cdots\times\nu^{r}(\mathrm {d}x_{p})\). Since \(h\) is bounded and continuous, the function

is bounded and also a.e. continuous with respect to the product measure. Therefore, the integral inside the second member of (D.12) converges as \(r\to\infty\). Since \(h\) is bounded, the limit is bounded above by \(\|h\|_{\infty}\rho(\{m\})\). This implies the normal convergence of the series on the right-hand side of (D.12). The limit of \(A^{r}\) thus exists and is finite. □

Lemma D.5

Assume that conditions (3.9)–(3.12) and (3.14) hold. For given \(l,k \in \mathcal{K}\), let \(g:\mathbb{R}_{+}\to\mathbb{R}_{+}\) be a Borel-measurable, \((\mathcal{B}_{kl}\ast\nu_{l})\)-a.e. continuous function such that, as \(r\to\infty\),

Then we have, as r→∞

Proof

The proof uses two steps.

- Step 1:

According to Lemma D.3, the sequence \(\{X_{lk}^{r}(i)\}_{i = 1}^{\infty}\) defined by

$$X_{lk}^{r}(i) = \sum_{n=1}^{N_{lk}^{r}(i)}g\bigl(V_{lk}^{r}(i,n)\bigr)$$

is i.i.d. with common expectation

$$ \mathbb{E}^r\bigl(X_{lk}^{r}(1)\bigr) = \bigl\langle g,\mathcal{B}_{kl}^{r}\ast \nu_{l}^{r}\bigr\rangle . \tag{D.14}$$

By (D.5) and (D.6) of Lemma D.2, \(\{ V_{lk}^{r}(1,n)\}_{n=1}^{\infty}\) and \(N^{r}_{lk}(1)\) satisfy the first two conditions of Lemma D.4 with \(\nu_{0}^{r}(\mathrm{d}x)=V^{r}_{lk}(\mathrm{d}x)\) and \(\nu^{r}(\mathrm{d}x)=\tilde{V}^{r}_{kk}(\mathrm{d}x)\), and the distribution of \(N_{lk}^{r}(1)\) is given in Lemma D.1. Under assumptions (3.11) and (3.12), the distributions \(V^{r}_{lk}(\mathrm{d}x)\), \(\tilde{V}^{r}_{kk}(\mathrm{d}x)\) and that of \(N_{lk}^{r}\) converge as \(r\to\infty\), by continuity of the linear operations involved in their definitions in Lemmas D.1 and D.2. On the other hand, it follows from (D.7) in Lemma D.3 that the measure \(\nu_s\) defined in Lemma D.4 corresponds here to \(\mathcal{B}_{kl}\ast \nu_{l}\). The conditions of Lemma D.4 are therefore fulfilled, and the distribution \(\nu^{r}_{g,s}\) of the random sum \(X_{lk}^{r}(1)\) converges weakly to some limit measure \(\nu_{g,s}\).

- Step 2:

Consider the counting process \(\bar{E}_{l}^{r}(t)\) of customers arriving to class \(l\). We apply Lemma A.2 of [18] to this process, with the function \(\chi\) as the lemma's function \(g\), and the measure \(\nu^{r}_{g,s}\) as the lemma's measure \(\nu\). Assumption (A.2) of the lemma holds under assumption (3.10). Assumption (A.3) holds thanks to Lemma D.4. Assumption (A.4) is equivalent to (D.13) because \(\langle\chi, \nu^{r}_{g,s} \rangle = \langle g , \mathcal{B}_{kl}^{r}\ast\nu_{l}^{r} \rangle\), according to (D.14). Assumption (A.5) is trivial, and Assumptions (A.6)–(A.7) are a consequence of (3.14). Therefore,

$$\frac{1}{r}\sum_{i=1}^{r\bar{E}_{l}^{r}(t)} \sum _{n=1}^{N_{lk}^{r}(i)}g\bigl(V_{lk}^{r}(i,n)\bigr) \Rightarrow \alpha_{l} t \langle\chi, \nu_{g,s}\rangle = \alpha_{l} t \langle g , \mathcal{B}_{kl}\ast\nu_{l} \rangle ,$$

which was to be proved.

□


About this article

Cite this article

Ben Tahar, A., Jean-Marie, A. The fluid limit of the multiclass processor sharing queue. Queueing Syst 71, 347–404 (2012). https://doi.org/10.1007/s11134-012-9287-9


DOI: https://doi.org/10.1007/s11134-012-9287-9