Abstract

The Great Plains drought of 1931–1939 was a prolonged socio-ecological disaster with widespread impacts on society, economy, and health. While its immediate impacts are well documented, we know much less about the disaster’s effects on distal human outcomes. In particular, the event’s effects on later life mortality remain almost entirely unexplored. Closing this gap would contribute to our understanding of the long-term effects of place-based stress. To help fill this gap, I use a new, massive, linked mortality dataset to investigate whether young men’s exposure to drought and dust storms in 341 Great Plains counties was linked to a higher risk of death in early-old age. Contrary to expectations, results suggest exposure to drought conditions had no obvious adverse effect among men aged 65 years or older at time of death—rather, the average age at death was slightly higher than for comparable men without exposure. This effect also appears to have been stronger among Plainsmen who stayed in place until the drought ended. A discussion of potential explanations for these counterintuitive results is provided.

Introduction

Almost a century ago, the Great Plains were the setting for what would become one of the most impactful socio-ecological disasters in American history (Arthi, 2018; Egan, 2006; Hurt, 1981; Worster, 1979). In the spring of 1931, unrelenting agricultural drought (Footnote 1) and extreme heat fell upon the middle of the continent, from Texas to the Canadian prairies. Millions of acres of winter wheat, all of it hardy grassland before being plowed under the previous decade, dried out and died, devastating an agrarian economy already reeling from a collapse in the price of wheat (Egan, 2006; McLeman et al., 2014). With little to hold it in place, the overturned soil began to blow away with the strong Plains winds, creating rolling storms of choking dust and stinging sand that assailed the region’s residents. In some parts of the Plains, these conditions lasted 7 or 8 years.

While the drought’s immediate impacts on employment, economy, health, and mortality are well documented, little is known about its effects on distal human outcomes (Arthi, 2018; Cutler et al., 2007). In particular, the drought’s influence on the longevity of individuals who lived through it remains almost entirely unexplored. But it is possible that drought-related stresses increased the risk of premature death. Associations have been found between drought and stress (e.g., O’Brien et al., 2014; Polain et al., 2011; Stain et al., 2011) and between stress and mortality (e.g., Aldwin et al., 2011; Amick et al., 2002; Ferraro & Nuriddin, 2006; Rodgers et al., 2021), and the 1930s drought was a highly stressful period for individuals and families. This was likely even more true for those residing in the region of the Southern Plains that came to be known as the Dust Bowl, where the effects of drought and soil erosion were especially severe (Egan, 2006; Hornbeck, 2012; Worster, 1979) (Footnote 2).

Under such circumstances, drought and blowing dust and sand may have contributed to mortality risk later in life. Throughout the 1930s, isolated spikes in acute mortality were attributed to dust storm exposure, and “dust pneumonia,” in particular, was estimated to be responsible for thousands of excess deaths (Worster, 1979). However, these deaths occurred mainly among the young and elderly despite individuals of all ages being exposed to the same conditions. An unanswered question for those outside these vulnerable age groups is whether the onslaught of environmental insults directly or indirectly led to an elevated risk of premature later-life mortality. The answer may not only help explain the longevity effects of an unprecedented disaster but inform how mortality might be affected by future ecological shocks in an era of climate change-induced aridification (Romm, 2011; Alexander et al., 2018; Cowan et al., 2017). It may also provide a useful historical counterpoint to the understudied yet relevant association between drought and population health (Berman et al., 2017; Lynch et al., 2020).

With this study, I extend to longevity outcomes the approach by Cutler et al. (2007) and Arthi (2018) to relate early-life drought exposure to later-life health and human capital outcomes. Specifically, I investigate whether protracted exposure to the drought’s burdensome conditions as a young man resulted in a higher risk of death in early-old age or later (65 years and older), using a new public-use dataset that links the 1940 full-count Decennial Census to the Social Security Death Master File. Based on the stress-mortality pathway, I hypothesize that young men residing in the drought-afflicted Southern Plains throughout the 1930s experienced higher mortality after age 64 than peers who resided in agricultural regions less affected by drought, dust storms, or soil erosion during the same decade.

Linking drought and its effects to early-old age mortality

Scant research explicitly ties 1930s drought conditions to later-life mortality. However, the links between stress, health, and mortality in other contexts have received much theoretical, empirical, and clinical attention and I draw on some of this work to build the case for potential life span decrease among Great Plains residents. In particular, accelerated aging and biological age are relevant to the sustained and repeated stresses facing these individuals. Both relate to the possibility that the acute ecological and economic shocks at the onset of the drought evolved into chronic stresses as the drought continued through the decade, provoking longevity-reducing reactions inside the body. Broadly, the mechanism linking stress to mortality is the wear and tear that occurs “under the skin”—i.e., at the cellular, molecular, vascular, or biological system level (Hamczyk et al., 2020; Meier et al., 2019)—as the body labors to recover from chronic biochemical stress responses (Elliott et al., 2021; Foo et al., 2019). Biological aging characterizes cellular aging and functional decline in response to stress (Li et al., 2013; Forrester et al., 2021). When this process advances more rapidly than expected given the person’s chronological age, aging is accelerated. This can happen through somatic (physiological) and psychosocial mechanisms, with the latter as particularly important (Lantz et al., 2005; Rentscher et al., 2020).

Among adults, psychosocial stressors are negatively associated with long-term health (Rentscher et al., 2020; Russ et al., 2012; Schneiderman et al., 2005). Adverse outcomes include higher risk of depression, cardiovascular disease, and autoimmune disorders (Cohen et al., 2012). Stressors are also linked to higher mortality through cardiovascular etiologies (Everson-Rose & Lewis, 2005; Forrester et al., 2021; Pedersen et al., 2017). For residents of the Great Plains in the 1930s, persistent drought, heat, and blowing soil offered no shortage of psychosocial stress. Much of this stress originated in crop failures and associated negative consequences. Farm foreclosure, for example, was an especially distressing event (Perkins, quoted in Alston, 1983), as is often the case for farmers who lose their livelihoods in a drought (Bryan et al., 2020). It was also a widely shared experience; in 1933, at the peak of the Great Depression, more than 200,000 farms across the USA were foreclosed (Alston, 1983), a rate of roughly 39 per 1,000 farms. During this period, other psychosocial stresses included job loss, mounting debt, financial insecurity, property damage, and anxiety about when the rains would return to normal (the latter made worse by several incidents of rain sowing false hope throughout the decade) (Egan, 2006; Hurt, 1981).

Physiological stresses, meanwhile, are also associated with poorer long-term health outcomes (Seiler et al., 2020; Walter et al., 2012), and these were experienced with each dust storm that swept through the region. Inhalation of PM10 and PM2.5 mineral and biological particulates in dust and soil can scar lung tissue or invade the alveoli, causing conditions such as pneumoconiosis, sinusitis, asthma, and chronic obstructive pulmonary disease (Manisalidis et al., 2020; Morman & Plumlee, 2013; Schenker et al., 2009; Tong et al., 2017; Uchiyama, 2013). Metals in airborne dust have been shown to suppress immune function (Keil et al., 2018) and cause pulmonary damage (Nemery, 1990). At sub-acute levels, long-term exposure to dust and particulate matter can lead to chronic lung diseases (Alexander et al., 2018; Schenker et al., 2009), which in turn can increase mortality (Chen et al., 2019). Through the 1930s, acute somatic injuries were observed after major dust storms (Alexander et al., 2018), especially among the young and elderly (Burns et al., 2012), and it is highly plausible that sub-acute responses were also widely experienced. Moreover, a mechanical factor to wind events involving larger and heavier soil particles likely stimulated psychosocial stress reactions distinct from those induced by the storm after-effects described above. Aeolian saltation and sand creep regularly buried crops, dwellings, vehicles, and machinery under mounds several feet high or more (Hansen & Libecap, 2004; Burns et al., 2012). During a high wind, the blowing sand was sometimes harsh enough to blind cattle and strip paint from automobiles, making outdoor activities and travel especially unpleasant and dangerous (Egan, 2006). For many families, sealing the indoors and protecting children and belongings from the incursion of dust and sand was next to impossible, inducing despair about quality of life that could severely impact mental health (Burns et al., 2012).

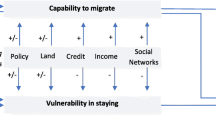

Altogether, conditions in the Great Plains during this period were often extraordinarily adverse. Figure 1 presents a conceptual model of the presumed effects pathways to higher male mortality in early-old age based on these conditions, with drought being the source cause of all effects. In the model, drought directly causes mental and emotional stress among residents while dust storms precipitate chronic somatic and psychosocial injuries. Drought and dust storms also lead to loss of livelihood and its attendant harms, such as insolvency, unemployment, and food insecurity, for individuals tied to the farm economy. These latter outcomes induce psychosocial stresses that, combined with physiological injuries, spur biological reactions that accelerate the aging process, leading to premature death later in life. Lack of data makes it challenging to determine which groups of residents may have been subject to lesser or greater levels of stress, but the consistency of climate and desertification in the Southern Plains during the 1930s (Hansen & Libecap, 2004; Hurt, 1981; Worster, 1979), combined with the regional economy’s focus on wheat as a cash crop (Egan, 2006), supports the presumption of a higher stress exposure baseline for most residents in this region. This confluence of conditions, specific to the Southern Plains, may have been uniquely injurious, even if psychosocial stresses stemming from collapsing agricultural prices were experienced throughout the USA, and not just in the Plains, during the 1930s.

Research design

Study area

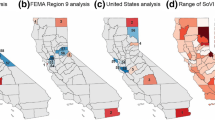

This study takes the form of a natural experiment (Craig et al., 2017; Zarulli, 2013), wherein Great Plains counties subject to extensive drought, soil loss, and windblown dust constitute a treatment region affecting all persons residing within it. Although the entirety of the Plains was subject to the drought, the Southern Plains subregion was also heavily affected by soil erosion and dust storms (Hansen & Libecap, 2004; Hurt, 1981). For example, a cumulative soil erosion map by Hornbeck (2012), using survey data collected in 1934 by the Soil Conservation Service (Online Resource 1), locates the region of moderate to high wind erosion predominantly within Nebraska, Kansas, Oklahoma, Texas, Colorado, and New Mexico. This map is representative of conditions throughout the decade and, consequently, I use it to delineate a geographically compact treatment region constituting 341 Southern Plains counties (Fig. 2) following a list of Great Plains counties by Gutmann et al. (2005) that defines the region by physiography and ecology. To reduce the confounding influence of urban dwellers who may have been less vulnerable to the effects of the drought, I exclude 36 counties home to cities with more than 30,000 residents per the 1930 Census (see Online Resource 2 for the list of excluded counties).

Fig. 2 Study area. Wind-eroded Southern Plains counties (n = 245) in six states (Colorado, Kansas, Nebraska, New Mexico, Oklahoma, and Texas) comprise the main treatment region and are shaded in light brown, while the subset of Dust Bowl counties (n = 96) is marked in red. The counties of the main control region (n = 196) in five states (Illinois, Indiana, Kentucky, Ohio, and Tennessee) are part of the historical corn and winter wheat belts and are shaded in green. Counties within or adjacent to the study area containing cities home to more than 30,000 residents per the 1930 Decennial Census are excluded (see Online Resource 2).

Serving as the control region is a grouping of 196 Midwestern and Southern agricultural counties across five states, occupying the eastern half of the Midwest corn belt and the middle part of the historical corn and winter wheat belt centered along the 38th parallel north (Fig. 2). This region is situated within the Ohio River Basin (also known as the Ohio Valley), a watershed spanning parts of 14 states (ORSANCO, n.d.). Selection of counties into the control region is informed by thematic maps showing the boundaries of the Basin (ORSANCO, n.d.) and the agricultural regions of the country in the 1920s per the Department of Agriculture (Baker, 1922) (Online Resource 3). To reduce the physiographic and cultural heterogeneity of the control region, I exclude Ohio Valley counties on the east identified as part of Appalachia by the Appalachian Regional Commission (ARC, 2009).

Ideally, treatment and control groups in a natural experiment have no significant differences in unobserved characteristics. This allows the use of multivariable regression to identify a treatment effect after controlling for observed population traits (Craig et al., 2017). But while the Great Plains are ecologically unique, the people who settled and farmed the Plains were as eager to profit from the land as those who farmed the corn and wheat belts further east (Worster, 1986). Both treatment and control regions were largely agricultural, focusing on cash crops that led to widespread financial hardship for residents after the late 1920s collapse in commodity prices (Long & Siu, 2018; Worster, 1979). Likewise, both regions showed similar patterns in forced and voluntary sales of farms in the first half of the 1930s, although the pattern was stronger in the Plains than elsewhere in the country (Alston, 1983; Stauber & Regan, 1936). One aspect, however, that distinguishes the two regions is the more humid continental climate of the Ohio Valley, which was less severely impacted by the 1930s drought (Footnote 3).

Even so, one can look more locally for an alternate contrast group. Within the Southern Plains was an area hit especially hard by drought, wind erosion, and dust storms. Due to annual fluctuations in precipitation, this area, popularly known as the Dust Bowl, has never been precisely delineated (McLeman et al., 2014). However, there is consensus from contemporary reports and maps about its general location being coterminous with the Oklahoma and Texas panhandles, southwestern Kansas, southeastern Colorado, and northeastern New Mexico (Porter & Finchum, 2009). Cartography from the US Department of Agriculture’s Natural Resources Conservation Service (NRCS, 2012) reflects this, identifying three overlapping areas centered on the Oklahoma panhandle as having experienced massive soil erosion at different times during the drought (Online Resource 4). For a robustness check, I partition the Plains treatment region into a Dust Bowl-specific alternate treatment area comprising 96 counties, each of which was at least one-half covered by one or more of the three erosion boundaries in the NRCS map. The extent covered by these counties is congruent with the area defined as the Dust Bowl in previous studies, e.g., Long and Siu (2018), Lee and Gill (2015), and Porter and Finchum (2009). The remaining 245 counties, constituting an alternate control area, form an outer ring to the treatment area where soil erosion was significant during the 1930s but not experienced as intensely as in the Dust Bowl.

Data

Mortality data are drawn from CenSoc-DMF (Goldstein et al., 2021), one of two new public-use datasets from UC Berkeley’s CenSoc Project that link records from the 1940 Decennial Census and the Social Security Administration (SSA) at the individual level. CenSoc-DMF specifically links persons identified on the 1940 Census form to records in the SSA Death Master File (DMF), a database of approximately 83 million SSA-reported deaths between 1975 and 2005, representing an estimated 97% of all deaths occurring in the USA in that period (Footnote 4). (Records from the 1940 Census, numbering about 132 million, are maintained in the IPUMS USA database by the Minnesota Population Center (Ruggles et al., 2020).) In version 2.0 of CenSoc-DMF, released November 2020, approximately 7.4 million Census and SSA records are matched on first name, last name, and birthdate using the “ABE” automated matching method (Abramitzky et al., 2012, 2014, 2020). This approach applies an exact matching algorithm to standardized names but allows SSA-reported birth years up to 2 years earlier or later than Census-reported birth years to accommodate misreporting. A subset of matched records, for which Census and SSA birth years match exactly, represents 4.5 million individuals. These “ABE-conservative” records are used in this study as they are less likely to include false matches. Because linkage to SSA records by name is more accurate for men than for women due to the high rate of female surname change upon marriage, current versions of CenSoc-DMF only consist of male decedents (Footnote 5).
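The matching rule described above can be illustrated with a minimal Python sketch. It is not the CenSoc or ABE implementation: the record fields, the `standardize` helper, the sample names, and the choice to drop ambiguous candidates are all assumptions made for illustration, and the sketch does not check that a death record is claimed by only one Census record.

```python
from collections import defaultdict

def standardize(name):
    """Lowercase and keep letters only (a stand-in for real name cleaning)."""
    return "".join(ch for ch in name.lower() if ch.isalpha())

def link_records(census, deaths, max_gap=2):
    """Return (census_id, death_id, conservative) links.

    Names must match exactly after standardization; birth years may differ
    by up to max_gap years. conservative is True when birth years agree.
    """
    by_name = defaultdict(list)
    for d in deaths:
        by_name[(standardize(d["first"]), standardize(d["last"]))].append(d)

    links = []
    for c in census:
        key = (standardize(c["first"]), standardize(c["last"]))
        # Widen the birth-year band one step at a time: 0, 1, ..., max_gap.
        for gap in range(max_gap + 1):
            hits = [d for d in by_name[key]
                    if abs(d["birth_year"] - c["birth_year"]) == gap]
            if len(hits) == 1:
                links.append((c["id"], hits[0]["id"], gap == 0))
                break
            if len(hits) > 1:
                break  # ambiguous at this band (assumption: drop the person)
    return links

# Hypothetical records, invented for this example.
census = [
    {"id": "c1", "first": "John", "last": "O'Neil", "birth_year": 1912},
    {"id": "c2", "first": "Earl", "last": "Hayes", "birth_year": 1910},
    {"id": "c3", "first": "Roy", "last": "Clark", "birth_year": 1914},
]
deaths = [
    {"id": "d1", "first": "JOHN", "last": "ONEIL", "birth_year": 1912},
    {"id": "d2", "first": "Earl", "last": "Hayes", "birth_year": 1911},
    {"id": "d3", "first": "Roy", "last": "Clark", "birth_year": 1913},
    {"id": "d4", "first": "Roy", "last": "Clark", "birth_year": 1915},
]

links = link_records(census, deaths)
conservative = [link for link in links if link[2]]  # exact birth-year matches
```

In this toy example only the first link would survive the conservative filter, which mirrors the logic of keeping records whose Census and SSA birth years agree exactly.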

The DMF window of observed deaths spans 31 years, from 1975 to 2005, with high coverage of deaths for males born from the 1900s to the 1920s. Decedents are left-truncated at 65 years of age, which coincides with the conventional starting age for longevity studies among older adults (e.g., Stoeldraijer et al., 2013; UN Population Division, 2013; Zuo et al., 2018). I focus on the 1909–1915 birth cohorts, encompassing a 7-year span of birth years containing the cohort (1910) that reached age 65 at the start of the observation window. I also impose an upper bound of 90 for age at death for this pooled sample, allowing counts of decedents from each birth cohort to span the same age range within the observation window, thus ensuring all cohorts are equally represented in terms of age at death. This right-truncation excludes 4.6% of observed cases, all of them representing men in the sample who died between ages 91 and 96.
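The interaction between the observation window and the age bounds can be checked with simple year arithmetic. The sketch below is illustrative only; it ignores month of birth and death, so the ages are approximate, and the function and variable names are assumptions.

```python
OBS_START, OBS_END = 1975, 2005   # DMF window of observed deaths
MIN_AGE, MAX_AGE = 65, 90         # truncation bounds applied to the sample
COHORTS = range(1909, 1916)       # birth cohorts 1909 through 1915

def observable_ages(birth_year):
    """Return (youngest age kept, oldest age kept, oldest age in the window).

    Ages are computed as calendar-year differences, an approximation.
    """
    youngest = max(MIN_AGE, OBS_START - birth_year)
    oldest_in_window = OBS_END - birth_year
    return youngest, min(MAX_AGE, oldest_in_window), oldest_in_window

windows = {b: observable_ages(b) for b in COHORTS}

for birth_year, (lo, hi, uncapped) in windows.items():
    print(birth_year, "kept ages", lo, "to", hi, "| window reaches age", uncapped)
```

Under this approximation the youngest cohort (1915) reaches age 90 exactly when the window closes, which is why a cap of 90 gives every cohort the same upper age, while the oldest cohort (1909) could otherwise be observed into its mid-90s.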

Treatment intensity

As discussed above, the Southern Plains and the Ohio Valley represent the treatment and control areas, respectively, of a large-scale natural experiment where severe drought served as the treatment. At a smaller scale, the Dust Bowl counties form a distinct treatment area of extreme hardship within the Southern Plains. Almost all Plains counties were broadly similar in climate, ecology, mismanagement of cropland, and rampant pre-Depression speculation on agricultural commodities (Egan, 2006; Hornbeck, 2012; Worster, 1979, 1986), attributes that collided in the early decades of the twentieth century to create a systemic risk for catastrophic environmental and economic failure (Hornbeck, 2012). Even so, the arrival of drought in the early 1930s soon distinguished the Dust Bowl region from the rest of the Southern Plains, because crop loss, soil erosion, and blowing dust were experienced more intensely in the Dust Bowl than elsewhere (Footnote 6).

This intensity becomes relevant when considering that the drought lasted nearly a decade. Dosage becomes a concern, as individuals who left the Dust Bowl before the end of the drought received less treatment than those who stayed. Between 1930 and 1940, migration flows across the entire Great Plains region resulted in a net loss of approximately 669,000 people or 12% of the population in 1930 (Gutmann et al., 2005). Counties in the Dust Bowl contributed a greater share of out-migrants than elsewhere on the Plains (Hornbeck, 2012; Long & Siu, 2018); indeed, between the 1920s and 1930s, out-migration rates remained high in these counties while falling in the rest of the country (Long & Siu, 2018). In the context of unrelenting drought and economic depression, Dust Bowl out-migration may have been a reasonable response for many men and their families, but it also calls attention to the role of place attachment or rootedness for those who did not migrate. Hostile environmental conditions and economic shocks are decisive push factors for migration (Black et al., 2015; Boustan et al., 2010), yet three-quarters of Dust Bowl residents stayed in place through the entire drought (Burns et al., 2012). As most individuals in the Dust Bowl had been born and raised in the area or elsewhere in the Plains (Hudson, 1986), rootedness may have dissuaded families from relocating, even in the face of an unprecedented socio-natural disaster. Whether drought migrants were positively selected for departure or non-migrants were negatively selected, place attachment carries potential implications for male longevity. To explore this idea, subsets of the treatment groups are designated by their degree of rootedness, as discussed next.

Treatment and control groups

Assignment of individuals to the treatment and control groups or their subsets is based on state and county of residence on April 1, 1940, state and county of residence in 1935, and state or place of birth. These attributes are derived or taken directly from the 1940 full-count Census via the IPUMS USA database (Ruggles et al., 2020). Men are assigned to the main treatment group (n = 24,498) if they were born between 1909 and 1915 and resided in a household per Census Bureau definitions in any of 341 treatment counties in both 1935 and 1940. Main control group members (n = 19,152) are designated in the same manner for any of 196 Ohio Valley counties. In neither of these main groups are members encumbered by restrictions on within-region migration between 1935 and 1940; such constraints are instead used to subset the sample according to a three-step gradient of place attachment. The first subset differs from the full sample by restricting birthplace to states wholly or partially within the study area. For the treatment group, that means any of six Southern Plains states (Colorado, Kansas, Nebraska, New Mexico, Oklahoma, and Texas). For the control group, it means any of five Ohio Valley states (Illinois, Indiana, Kentucky, Ohio, and Tennessee). The second subset further restricts each sample to men who were living in the same county in 1940 as in 1935, while the third subset limits the sample yet further to men who lived in the same house in 1940 as in 1935. Each subset, representing a higher degree of rootedness, is smaller than the preceding one (see Table 1).
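The nested subsets described above can be expressed as a small classification rule. The following Python sketch is an illustration under stated assumptions: the record fields (`birth_year`, `birth_state`, `county_1935`, `county_1940`, `same_house`) and the county labels are hypothetical, and the steps are numbered 1 to 4 with the full sample as step 1.

```python
PLAINS_STATES = {"Colorado", "Kansas", "Nebraska",
                 "New Mexico", "Oklahoma", "Texas"}

def gradient_step(rec, region_counties, region_states):
    """Return the highest place-attachment subset a man belongs to, or None.

    1 = full sample (in the region in both 1935 and 1940)
    2 = also born in a state wholly or partly within the region
    3 = also in the same county in 1935 and 1940
    4 = also in the same house in 1935 and 1940
    A man at step k also belongs to every subset below k.
    """
    if not 1909 <= rec["birth_year"] <= 1915:
        return None
    if (rec["county_1935"] not in region_counties
            or rec["county_1940"] not in region_counties):
        return None
    step = 1
    if rec["birth_state"] in region_states:
        step = 2
        if rec["county_1935"] == rec["county_1940"]:
            step = 3
            if rec["same_house"]:
                step = 4
    return step

# Hypothetical county labels standing in for the 341 treatment counties.
treatment_counties = {"county_A", "county_B"}

example = {"birth_year": 1912, "birth_state": "Kansas",
           "county_1935": "county_A", "county_1940": "county_A",
           "same_house": False}
example_step = gradient_step(example, treatment_counties, PLAINS_STATES)
```

The same function could be reused for the control group by passing the Ohio Valley counties and the five Ohio Valley states instead.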

The same assignment process is used to identify the alternate treatment and control groups of the robustness check, only applied more locally. Men in the treatment group (n = 5056) are so assigned if they resided in any of the 96 Dust Bowl counties in 1935 and 1940, though not necessarily the same one, while men in the control group (n = 20,011) must have resided in any of the study area’s 245 Southern Plains counties outside the Dust Bowl in those same years. Together, these treatment and control groups cover the same counties as found in the main treatment area. The same three-step place attachment gradient is also used to identify subsets of these groups, which also decrease in size with increasing rootedness, as shown in Table 1.

There is, however, an additional grouping element unique to the alternate experiment. To help account for potential confounding caused by Dust Bowl out-migration, I differentiate stayers and leavers within the Dust Bowl treatment group. Men who resided in a Dust Bowl county in both 1935 and 1940 are denoted as stayers, while men who resided in a Dust Bowl county in 1935 but in a non-Dust Bowl county (inside or outside the Southern Plains) in 1940 are denoted as leavers. It is important to note that leavers received considerable exposure to drought; even if they left the region as early as the second half of 1935, they would have still experienced numerous dust storms (including the notorious “Black Sunday” storm of April 14, 1935, that gave rise to the term “Dust Bowl”) and the loss of their agricultural livelihoods during the first few years of the drought (Footnote 7). For simplicity and to address concerns about small cell sizes, the place attachment gradient is applied only to stayers, as even the full sample of leavers is small relative to the sample size of stayers.
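A short Python sketch of the stayer and leaver designation follows. It assumes that a leaver is a man who lived in a Dust Bowl county in 1935 and elsewhere in 1940; the county labels and the function name are hypothetical.

```python
def dust_bowl_status(county_1935, county_1940, dust_bowl, outer_plains):
    """Classify a man for the alternate experiments.

    Returns "stayer", "leaver", "control", or None when the record fits
    none of the groups.
    """
    if county_1935 in dust_bowl:
        # Stayers may have moved, but only between Dust Bowl counties.
        return "stayer" if county_1940 in dust_bowl else "leaver"
    if county_1935 in outer_plains and county_1940 in outer_plains:
        return "control"
    return None

# Hypothetical labels standing in for the 96 and 245 counties.
dust_bowl = {"db_1", "db_2"}
outer_plains = {"sp_1", "sp_2"}

statuses = [
    dust_bowl_status("db_1", "db_2", dust_bowl, outer_plains),
    dust_bowl_status("db_1", "sp_1", dust_bowl, outer_plains),
    dust_bowl_status("db_2", "elsewhere", dust_bowl, outer_plains),
    dust_bowl_status("sp_1", "sp_2", dust_bowl, outer_plains),
]
```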

Methods

I adapt the example of Arthi (2018) in using ordinary least squares (OLS) regression with fixed effects and sample weights to estimate mean age at death as a function of individual and place-based attributes (Footnote 8). Although Gaussian linear regression is inappropriate for modeling life expectancy from complete life tables because human age at death is negatively skewed (Moser et al., 2015), this caveat is less applicable to mortality distributions after age 60, which tend toward Gaussian when conditioned on surviving to the modal age at death (Kannisto, 2001; Robertson & Allison, 2012). In the present study, the imposition of fixed age-at-death truncation values on each birth cohort results in a mean age at death of 77.6 (SD = 7.04), not far from the modal age at death of 80 years.

I run four groups of regressions (the main experiment and three alternate experiments as a robustness check) in a manner resembling a fractional factorial design. Each group includes a regression run on the full sample (baseline) and each step of the place attachment gradient. For each instantiation, the form of the model is the same. Let Yisb represent the DMF-reported age at death (in years) for individual i born in state s in year b; let β1 represent a dichotomous treatment effect and X a vector of potentially confounding covariates with values obtained on Census Day (April 1) 1940; and let θ and γ represent fixed effects for birth state and birth year, respectively. We may then estimate an equation of the specification:
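A form consistent with these definitions can be written as follows, where the treatment indicator $T_i$, the intercept $\beta_0$, and the covariate coefficient vector $\boldsymbol{\delta}$ are notational assumptions introduced here for illustration:

$$
Y_{isb} = \beta_0 + \beta_1 T_i + \mathbf{X}_i^{\prime}\boldsymbol{\delta} + \theta_s + \gamma_b + \varepsilon_{isb}
$$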

where X contains continuous variables for years of education and size of place (town, village, etc.), a factor variable for the number of family members residing with the respondent, and indicator variables for farm household status, housing tenure, marital status, race, farming occupation, and urban status, and ε is the error term. The model also includes an interaction between farm household status and farming occupation to identify nonlinear effects of prolonged drought for a key segment of the population. Birth year and birth state fixed effects are included to help control for important sources of unobserved heterogeneity (Arthi, 2018) and, in the former case, to control for the different left and right ages of truncation for each birth cohort. All work was done in R 4.1.1.
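To make the structure of the model concrete, the following Python sketch fits an OLS regression with a treatment indicator, one continuous covariate, and dummy-coded birth state and birth year fixed effects. It is not the author's code (the study used R) and it omits sample weights and most covariates. The data are synthetic and noise-free, and the "true" coefficients are arbitrary values chosen only to show that the estimator recovers them.

```python
from itertools import product

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def ols(rows, y):
    """Least squares via the normal equations (X'X) beta = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)

STATES = ["Kansas", "Ohio"]   # birth states (first is the reference level)
YEARS = [1909, 1910]          # birth years (first is the reference level)

def design_row(treated, educ, state, year):
    """Intercept, treatment, education, then fixed-effect dummies."""
    return ([1.0, float(treated), float(educ)]
            + [1.0 if state == s else 0.0 for s in STATES[1:]]
            + [1.0 if year == b else 0.0 for b in YEARS[1:]])

# Arbitrary illustrative coefficients: intercept, treatment, education,
# birth-state effect, birth-year effect. These are NOT the study's estimates.
TRUE = [70.0, 0.5, 0.2, 0.3, -0.1]

records = list(product([0, 1], [6, 8, 12], STATES, YEARS))
rows = [design_row(*r) for r in records]
ages = [sum(c * x for c, x in zip(TRUE, row)) for row in rows]

beta = ols(rows, ages)
print([round(b, 4) for b in beta])
```

Dummy-coding the fixed effects, as done here, is only practical for a handful of categories; with many birth states and years, a dedicated regression routine would be the more sensible choice.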

Table 2 describes and summarizes selected covariates for different groups within the analytic sample of observed decedents. Southern Plainsmen as a main treatment group were largely comparable to their Ohio Valley counterparts in marriage rate, family size, and years of completed schooling in 1940, although they were also somewhat more likely to have lived on a farm and owned the house in which they lived. Meanwhile, the Dust Bowl residents among these Plainsmen were slightly more educated than their non-Dust Bowl counterparts and a little less likely to have lived on a farm or owned a home. It is worth noting that the leaver subset of Dust Bowl residents showed distinctly higher levels of education, marriage, and homeownership relative to all other groups, as well as a considerably lower propensity to live on a farm.

One concern with linear regression on truncated data is the potential for downwardly biased coefficients, i.e., effect sizes that tend toward zero. This may occur if sample truncation reduces the observed variation and, consequently, reduces the average differences between groups within the sample (Goldstein & Breen, 2020; Greene, 2005). As a robustness check, I use maximum likelihood estimation (MLE) to identify the parameter estimates of the treatment effect and all covariates, on the assumption that the truncated age-at-death data follow a Gompertzian distribution (Footnote 9). These estimates are used to evaluate the congruity of each effect on average age at death between the OLS and truncated MLE approaches. Information on the MLE implementation used in this paper, newly developed for CenSoc data, is presented in Online Resource 5.
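The idea behind a truncated Gompertz likelihood can be sketched in a few lines of Python. This is a generic textbook formulation, not the implementation described in Online Resource 5: it has no covariates, the truncation bounds of 65 and 91 (ages 65 through 90 in completed years) are an assumption, and the parameter values and ages are arbitrary.

```python
import math

def gompertz_survival(x, a, b):
    """S(x) = exp(-(a / b) * (exp(b * x) - 1)) for hazard h(x) = a * exp(b * x)."""
    return math.exp(-(a / b) * (math.exp(b * x) - 1.0))

def truncated_log_density(x, a, b, lower=65.0, upper=91.0):
    """Log density of age at death x, conditional on lower <= x < upper."""
    log_hazard = math.log(a) + b * x
    log_surv = -(a / b) * (math.exp(b * x) - 1.0)
    # Probability mass of dying inside the observed age window.
    mass = gompertz_survival(lower, a, b) - gompertz_survival(upper, a, b)
    return log_hazard + log_surv - math.log(mass)

def log_likelihood(ages, a, b, lower=65.0, upper=91.0):
    """Sum of truncated log densities; an optimizer would maximize this."""
    return sum(truncated_log_density(x, a, b, lower, upper) for x in ages)

# Arbitrary illustrative values, not estimates from the study.
example_ll = log_likelihood([70.2, 78.5, 84.1], a=5e-5, b=0.09)
```

Dividing by the probability of dying inside the window is what distinguishes this likelihood from an untruncated one; in a fuller model, covariates could be allowed to shift the level of the hazard.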

Results

OLS regression results for the main experiment, where the treatment group is all Southern Plainsmen, and the first alternate experiment, where the treatment group is all Dust Bowl residents, are presented in the left half of Table 3. As a reminder, effects apply to men with Social Security numbers who were aged at least 65 years and not more than 90 years at the time of death. The positive treatment coefficient for the main experiment (β1 = 0.52, p < 0.001) suggests that prolonged exposure to the drought and its effects was strongly linked with a higher mean age at death, which is opposite of the hypothesized outcome. Men residing in the Ohio Valley control region through the 1930s lived an average of 76.6 years, all else equal and without considering covariates, while their counterparts residing in the drought-stricken Southern Plains lived half a year longer. This outcome was not observed in the alternate experiment, which showed no statistically significant difference in mean age at death (β0 = 77.1) between treatment and control.

Regarding covariates, the two experiments produced a similar pattern of effect size and direction, although there were fewer statistically significant effects in the alternate. In the main experiment, each additional year of education increased the average age at death for observed men in the Southern Plains or Ohio Valley by one-fifth of a year (βeduc = 0.22, p < 0.001), whereas the average was about 3 months lower among men who were not currently married or were renting their home in 1940. Residing with two or more additional family members and residence in a nonrural community were negatively associated with male longevity. Nonwhiteness, although only marginally significant, carried a life expectancy penalty of four-and-a-half months (βrace = −0.38, p < 0.1). But perhaps the key finding was the seemingly protective effect of farming; men who lived and worked on farms in 1940 lived 8 months longer than nonfarmers, all else equal (βlived on farm x farm occup = 0.69, p < 0.001). This result conflicts with the expectation underpinning the stated hypothesis, as farmers and farm laborers are conceptually described in the “Linking drought and its effects to early-old age mortality” section as a population subset especially vulnerable to the detriments of the drought.

Despite showing no meaningful treatment effect, the alternate model in Table 3 is notable because the effects for education, farming life (indicated by the farm interaction term), family size, and race remain significant in both it and the main experiment, even though the alternate model contrasts two fundamentally similar groups from within the same Plains region. Taken one step further, when Dust Bowl men are disaggregated as stayers and leavers and contrasted with non-Dust Bowl Plainsmen (Table 4), education and farming life remain the only covariates with a statistically significant effect on longevity in all experiments. Results from the truncated MLE robustness check (see Online Resource 5) demonstrate very limited bias in the OLS estimators from any of the four experiments.

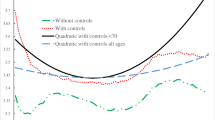

Treatment effects in the four experiments in Tables 3 and 4 are not conditioned on place attachment. To clarify intergroup differences for different levels of rootedness within each experiment, Fig. 3 shows OLS treatment effects across the place attachment gradient ordered on the x-axis by degree of rootedness. For the main experiment (Southern Plainsmen vs. Ohio Valley control group), increasing levels of place attachment do not substantially change the positive treatment effect on longevity, although the most rooted treatment group, whose members lived in the same house in 1940 as in 1935, may be slightly less advantaged.

Fig. 3 Treatment effects for the main and alternate experiments in Tables 3 and 4, disaggregated by degree of rootedness to the Dust Bowl treatment area. Subsets in a group increase in their theorized level of place attachment from left to right: (1) baseline; (2) born in a local state; (3) born in a local state and lived in same county in 1935 and 1940; (4) born in a local state and lived in same house in 1935 and 1940. See “Treatment and control groups” section for details. In all experiments, treatment and control groups are conditioned identically from one gradient step to the next, except for the leavers treatment group, which has no conditioned states.
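The nested conditioning behind the place attachment gradient can be sketched as a simple membership test. This is an illustration only, not the author's code: the `Record` class, its field names, and the function `in_gradient_step` are hypothetical, and the sketch assumes that living in the same house in 1935 and 1940 implies living in the same county.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical linked census record; field names are illustrative."""
    born_in_local_state: bool
    same_county_1935_1940: bool
    same_house_1935_1940: bool


def in_gradient_step(record: Record, step: int) -> bool:
    """Return True if the record satisfies the conditions of a gradient step.

    Steps follow the figure caption: (1) baseline, (2) born in a local state,
    (3) also same county in 1935 and 1940, (4) also same house in 1935 and 1940.
    """
    if step == 1:
        return True  # baseline: no place attachment condition
    if step not in (2, 3, 4):
        raise ValueError("step must be 1, 2, 3, or 4")
    if not record.born_in_local_state:
        return False
    if step == 2:
        return True
    if step == 3:
        # Assumption: staying in the same house also means the same county.
        return record.same_county_1935_1940 or record.same_house_1935_1940
    return record.same_house_1935_1940


# Toy sample of four hypothetical men, from least to most rooted.
sample = [
    Record(False, False, False),
    Record(True, False, False),
    Record(True, True, False),
    Record(True, True, True),
]

# Number of sample members retained at each step of the gradient.
subset_sizes = {
    step: sum(in_gradient_step(r, step) for r in sample) for step in (1, 2, 3, 4)
}
print(subset_sizes)
```

Because each step adds a condition to the previous one, the subsets shrink from left to right along the gradient, which is why estimates for the most rooted groups rest on fewer observations.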

The treatment effect pattern across the gradient is largely repeated for the three alternate experiments, except for the experiment contrasting Dust Bowl leavers with non-Dust Bowl Plainsmen. The overall Plainsmen advantage in old-age longevity relative to counterparts in the Ohio Valley appears to be driven by a two-tiered longevity regime inside the Southern Plains, which favored males who stayed in the drought-ravaged region through the entire decade.

Discussion

By all accounts, the Great Plains drought of the 1930s was an exceptionally difficult period; a time of economic depression, increasing privation, and compounding hardships for those who lived through it. However, in refuting the hypothesized negative association between drought exposure and mortality in early old age, the results of the main experiment suggest that even a disaster of the scale of the 1930s drought was not enough to subdue the men who stayed and waited for the rains to return. Undoubtedly, the residents of the Southern Plains in the 1930s were uncommonly resilient and stoic; known as “next year” people, they held out unyielding hope for a return to normal weather patterns (Hurt, 1981; Worster, 1979) and by the end of the decade were finally rewarded for their patience. Dispositional optimism can act as a psychological buffer against disaster (Gero et al., 2021; Nes & Segerstrom, 2006), and there is a growing body of work to suggest that drought and dust exposure may have few or no meaningfully adverse impacts on health or mortality (e.g., Cutler et al., 2007; Lynch et al., 2020; Menéndez et al., 2017). But the results of this study go as far as to suggest that prolonged drought-related hardships are somehow associated with a longer average life span, all else equal, which strains credibility and prompts the consideration of alternate explanations. Two are described below.

One possibility is that the outcomes presented in this paper may be biased by data constraints. The main concern here is selection bias when defining the analytic sample and the treatment and control groups within it (Elwert & Winship, 2014). The lower bounding of male deaths at age 65, for example, is a constraint that excludes from the analysis any biologically accelerated deaths occurring in midlife instead of early-old age. Truncation makes it impossible to know how many such deaths were unobserved, but if midlife mortality was more prevalent among Southern Plainsmen than among men in the Ohio Valley control group, we could expect a larger proportion of less-frail Plainsmen to reach age 65 (Costa, 2012; Zarulli, 2016). If sufficient data become available in the future to identify midlife deaths in the same proportion as CenSoc-DMF for ages 65 and above, it would be worthwhile to repeat the study. Note that MLE, not linear regression, would be the appropriate modeling approach for deaths observed in middle age (around age 40) and older, as the distribution would now be distinctly exponential.
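The selection mechanism described above can be illustrated with a toy simulation. This is a hedged sketch and not the study's model or data: the frail share, the group means, the standard deviation, and the use of a normal distribution are all invented for illustration. In the simulated exposed group, frail men die earlier than in the control group, yet once deaths below age 65 are dropped, the exposed group can show the higher observed mean age at death.

```python
import random
from statistics import mean

TRUNCATION_AGE = 65  # deaths below this age are unobserved, as in the text


def simulate_ages_at_death(n, frail_share, frail_mean, robust_mean, sd, rng):
    """Draw ages at death from a two-class (frail/robust) mixture.

    All parameters are hypothetical and chosen only to illustrate selection.
    """
    ages = []
    for _ in range(n):
        is_frail = rng.random() < frail_share
        mu = frail_mean if is_frail else robust_mean
        ages.append(rng.gauss(mu, sd))
    return ages


def observed(ages):
    """Keep only deaths at or above the truncation age."""
    return [age for age in ages if age >= TRUNCATION_AGE]


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Control: frail men tend to die in their late 60s.
control = simulate_ages_at_death(50_000, 0.3, 68.0, 80.0, 5.0, rng)
# Exposed: frail men die about 8 years earlier; robust men are unaffected.
exposed = simulate_ages_at_death(50_000, 0.3, 60.0, 80.0, 5.0, rng)

full_gap = mean(exposed) - mean(control)                         # all deaths
observed_gap = mean(observed(exposed)) - mean(observed(control))  # deaths at 65+

print(f"Gap using all deaths:     {full_gap:+.2f} years")
print(f"Gap using deaths at 65+:  {observed_gap:+.2f} years")
```

Under these assumed parameters, the exposure shortens lives on average across all deaths, while the truncated comparison shows the opposite sign because most frail exposed men never enter the observed sample.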

Another possible explanation for the longevity advantage among Plainsmen involves unobserved push/pull migration factors for men who left the Southern Plains and unobserved effects from the New Deal for those who stayed. Leaving the region may have been easier for some men; recall from Table 2 that Dust Bowl leavers had more education and smaller families than stayers. For these men, and all else equal, their departure might be seen as broadly consistent with conventional economic migration theory, which holds that migrants tend to be favorably self-selected for relocation to places with more opportunities (Borjas, 1987; Chiswick, 1999; Gabriel & Schmitz, 1995; Hamilton, 2014). Such migrants may be expected to live longer, too, to the extent that the attributes of self-selected out-migrants were gainfully leveraged later in the life course.Footnote 10 The persistently negative pattern of treatment effects for Dust Bowl leavers, however, is inconsistent with the notion of relatively advantaged out-migrants and may require a different interpretation of observed traits. For example, the fact that leavers were less than half as likely as stayers to live in a farming household in 1940 (Table 2) suggests that leavers may not have succeeded in establishing new livelihoods in nonfarming trades so much as failed to re-establish their farming livelihoods out of state. Such an outcome would likely be a source of stress that could continue to accelerate biological aging. Moreover, the longevity advantage for stayers might have been partly attributable to the effects of various programs to aid struggling farmers (unobserved in this analysis) implemented as part of President Roosevelt’s New Deal, which made available to the young men of the Southern Plains several paths toward gainful employment and the salutary benefits that accompany it.Footnote 11

Ultimately, while the results of this study do not support the expectation of a drought-specific adverse influence on longevity, the potential explanations described above do not deny a role for the accelerated aging hypothesis. Anecdotal evidence speaks of Dust Bowl survivors looking years older than their chronological ages would imply (Egan, 2006); of being worn out and frailer after years of drought and hardship, with ordinary tasks taking more effort than they used to (Burns et al., 2012). Drought, dust, and loss undeniably took a toll, and it seems more plausible that data constraints and migration selection concentrated a larger proportion of physiologically robust men in the Southern Plains than that surviving the drought lengthened a person’s life.

Looking ahead, there are several opportunities to advance this line of inquiry. In addition to investigating longevity outcomes in light of the explanations above, place attachment remains a potentially informative variable. Many individuals and families choose to stay in place even when environmental conditions and long-term change suggest they should move (Adams, 2016). That three-quarters of Dust Bowl residents decided to stay in the Southern Plains despite nearly a decade of agricultural ruin and imposed financial destitution speaks forcefully to the attachment or rootedness stayers had for their farms and communities. Note that place attachment is not always positive—a person may feel trapped by obligations to family, employer, or lender, unable to move away even if he or she wanted to (Adams, 2016). But there is reason to expect that the attachment people felt for the Southern Plains was fundamentally positive, given that voluntary out-migration was often seen as the solution of last resort (Burns et al., 2012). The role of place attachment, of the reluctance to leave a place that has always been home, may be essential to understanding how stayers differed from leavers and how the mortality for both groups varied because of it.

Future work may address other limitations of the present study. For example, drought exposure effects on women’s mortality risk may vary relative to men’s risk owing to gendered differences in physiological response to chronic stress (Ferraro & Nuriddin, 2006; Mayor, 2015; Nielsen et al., 2008). Although the examination of women’s outcomes using name-matched longitudinal linked data is hindered by marriage-based surname changes (Asher et al., 2020), such efforts are necessary to properly evaluate disparities in outcomes for the other half of the population in the wake of a large-scale environmental and agricultural disaster. Samples of sufficient size could potentially be constructed if appropriate additional data were available in the future. On a related note, case selection on attributes other than sex may have possible confounding effects—for example, CenSoc data reflects the large majority of the US population born in the first two decades of the twentieth century only to the extent that Social Security numbers (SSNs) were assigned to all US adults after the Social Security Act was signed into law in August 1935. Fortunately, the use of SSNs to track the employment of US workers for the purpose of determining federal benefits suggests the proportion of adults likely to avoid obtaining an SSN prior to retirement is small. This, coupled with the intensive and national enumeration effort during the 1940 Census, reduces the risk that CenSoc-DMF is systematically biased. Nonetheless, if certain persons, such as undocumented immigrant workers or the precariously employed, were less able, willing, or likely to obtain SSNs before they died, these individuals would be underrepresented.

Other limitations are more conceptual than methodological. For example, the biological and stress-health pathways linking drought to mortality are unclear (Berman et al., 2017; Bryan et al., 2020), which has implications for the conceptual model (Fig. 1) presented in this study.Footnote 12 Resolving this may be a challenge; due to a lack of relevant data, studies tracing early-life pollution exposure to long-term outcomes are in short supply (Currie et al., 2014), while the dearth of contemporaneous attempts to track illness onset following dust storm events (Morman & Plumlee, 2013) complicates the effort to develop an empirical drought-mortality model for historical events. Nevertheless, the results presented here shed new light on the complexities of population-level resilience and rootedness and warrant further research into the long-term outcomes of the unique disaster that was the 1930s drought.

Notes

Agricultural drought refers to one of four main categories of drought, in which the absence of precipitation and soil moisture is sufficient to cause an adverse agricultural outcome such as crop failure or significant yield loss (Wilhite & Glantz, 1985).

The term “Dust Bowl” is often used as a shorthand for the entire Great Plains region during the 1930s drought, but more accurately applies only to the several dozen counties of the Southern Plains, centered on the Oklahoma panhandle, that experienced the greatest amount of erosion (Hurt, 1981; McLeman et al., 2014). I follow the latter usage in this study (see “Study area” section).

See, for example, the monthly maps of drought conditions in the USA by the National Centers for Environmental Information (https://www.ncdc.noaa.gov/temp-and-precip/drought/historical-palmers/psi/193101-193912), which show Palmer drought severity index scores at the sub-state level since January 1900. From early 1933 until the end of the drought, areas east of the Mississippi River and within the Ohio Valley (encompassing the control region) were not as severely and/or consistently affected by drought as areas in the Southern Plains.

To be included in the SSA DMF, a person must have obtained a Social Security number (SSN) prior to death. The Social Security Act of 1935, signed into law by President Franklin Roosevelt in response to the Great Depression (and motivated in part by the plight of Dust Bowl farmers and unemployed), authorized the issuance of SSNs to individuals to track the distribution of unemployment insurance and social welfare benefits.

A sibling datafile known as CenSoc-Numident (Goldstein et al., 2021) contains approximately 7.9 million records for male and female decedents at a sex ratio of approximately 1.29, but observed deaths begin in 1988 rather than 1975 as in CenSoc-DMF. For this study, the larger sample size and wider window of observed deaths in CenSoc-DMF were an advantage in capturing a wider age-at-death range for each birth cohort of interest.

Data are insufficient to know precisely when Dust Bowl leavers migrated between 1935 and 1940, although evidence suggests that the cataclysmic size and intensity of the Black Sunday storm may have been a turning point for many tentative leavers (Burns et al., 2012).

The CenSoc-DMF dataset contains a vector of post-stratification weights for all ABE-conservative linked records intended to reconfigure the sample to the Human Mortality Database (www.mortality.org) on age at death and other variables (see Goldstein & Breen, 2020).

The Gompertz distribution is an exponential distribution commonly used to model human age at death and whose exponential function represents the age-dependent rise in a population’s death rate. In developed countries, the distribution is a largely accurate model of population-scale human mortality from around age 30 to around age 85 (Easton & Hirsch, 2008).

Dust Bowl historians such as Hurt (1981) and Worster (1979) have stated that the popular notion of out-migrating Plainsmen and their families as destitute victims of drought is an inaccurate representation of the migration decision for thousands of farming families. Moving, especially when it involved long distances, was a challenging endeavor that required access to a vehicle and money to pay for fuel and tires—assets that were usually obtained by selling land or possessions. From this perspective, leavers were certainly more advantaged than stayers who would have left if they had had the resources to do so.

New Deal agencies and bodies such as the Farm Security Administration, Soil Conservation Service, and Civilian Conservation Corps provided a range of opportunities to employ idled workers and restore the agricultural viability of the Great Plains.

One implication involves whether the presumed biological pathways to mortality are plausible, considering that data are unavailable regarding the physiological/biological responses that may have occurred between intervention and death within the treated groups.

References

Abramitzky, R., Boustan, L. P., & Eriksson, K. (2012). Europe’s tired, poor, huddled masses: Self-selection and economic outcomes in the age of mass migration. American Economic Review, 102(5), 1832–1856. https://doi.org/10.1257/aer.102.5.1832

Abramitzky, R., Boustan, L. P., & Eriksson, K. (2014). A nation of immigrants: Assimilation and economic outcomes in the age of mass migration. Journal of Political Economy, 122(3), 467–506. https://doi.org/10.1086/675805

Abramitzky, R., Mill, R., & Pérez, S. (2020). Linking individuals across historical sources: A fully automated approach. Historical Methods, 53(2), 94–111. https://doi.org/10.1080/01615440.2018.1543034

Adams, H. (2016). Why populations persist: Mobility, place attachment and climate change. Population and Environment, 37, 429–448. https://doi.org/10.1007/s11111-015-0246-3

Aldwin, C. M., Molitor, N.-T., Spiro, A., Levenson, M. R., Molitor, J., & Igarashi, H. (2011). Do stress trajectories predict mortality in older men? Longitudinal findings from the VA Normative Aging Study. Journal of Aging Research, 2011, 896109. https://doi.org/10.4061/2011/896109

Alexander, R., Nugent, C., & Nugent, K. (2018). The Dust Bowl in the US: An analysis based on current environmental and clinical studies. American Journal of the Medical Sciences, 356(2), 90–96. https://doi.org/10.1016/j.amjms.2018.03.015

Alston, L. J. (1983). Farm foreclosures in the United States during the interwar period. Journal of Economic History, 43(4), 885–903. https://doi.org/10.1017/S0022050700030801

Amick, B. C., McDonough, P., Chang, H., Rogers, W. H., Pieper, C. F., & Duncan, G. (2002). Relationship between all-cause mortality and cumulative working life course psychosocial and physical exposures in the United States labor market from 1968 to 1992. Psychosomatic Medicine, 64(3), 370–381. https://doi.org/10.1097/00006842-200205000-00002

ARC (Appalachian Regional Commission) [cartographer]. (2009). Subregions in Appalachia [map]. https://www.arc.gov/wp-content/uploads/2020/06/Subregions_2009_Map-1.pdf

Arthi, V. (2018). “The dust was long in settling”: Human capital and the lasting impact of the American Dust Bowl. Journal of Economic History, 78(1), 196–230. https://doi.org/10.1017/S0022050718000074

Asher, J., Resnick, D., Brite, J., Brackbill, R., & Cone, J. (2020). An introduction to probabilistic record linkage with a focus on linkage processing for WTC registries. International Journal of Environmental Research and Public Health, 17, 6937. https://doi.org/10.3390/ijerph17186937

Baker, O. E. (1922). A graphic summary of American agriculture, based largely on the Census of 1920. Yearbook of the Department of Agriculture, Publication 878. Washington, DC: U.S. Government Printing Office. https://doi.org/10.5962/bhl.title.25330

Berman, J. D., Ebisu, K., Peng, R. D., Dominici, F., & Bell, M. L. (2017). Drought and the risk of hospital admissions and mortality in older adults in western USA from 2000 to 2013: A retrospective study. Lancet Planetary Health, 1(1), e17–e25. https://doi.org/10.1016/S2542-5196(17)30002-5

Black, D. A., Sanders, S. G., Taylor, E. J., & Taylor, L. J. (2015). The impact of the Great Migration on mortality of African Americans: Evidence from the Deep South. American Economic Review, 105(2), 477–503. https://doi.org/10.1257/aer.20120642

Borjas, G. J. (1987). Self-selection and the earnings of immigrants. American Economic Review, 77(4), 531–553.

Boustan, L. P., Fishback, P. V., & Kantor, S. (2010). The effect of internal migration on local labor markets: American cities during the Great Depression. Journal of Labor Economics, 28(4), 719–746. https://doi.org/10.1086/653488

Bryan, K., Ward, S., Roberts, L., White, M. P., Landeg, O., Taylor, T., & McEwen, L. (2020). The health and well-being effects of drought: Assessing multi-stakeholder perspectives through narratives from the UK. Climatic Change, 163, 2073–2095. https://doi.org/10.1007/s10584-020-02916-x

Burns, K. (Director), Duncan, D., & Dunfey, J. (Producers). (2012). The Dust Bowl [documentary miniseries]. Walpole, NH: Florentine Films, & Washington, DC: WETA-TV.

Chen, C. -H., Wu, C. -D., Chiang, H. -C., Chu, D., Lee, K. -Y., Lin, W. -Y., et al. (2019). The effects of fine and coarse particulate matter on lung function among the elderly. Nature Scientific Reports, 9, 14790. https://doi.org/10.1038/s41598-019-51307-5

Chiswick, B. R. (1999). Are immigrants favorably self-selected? AEA Papers and Proceedings, 89(2), 181–185.

Cohen, S., Janicki-Deverts, D., Doyle, W. J., Miller, G. E., Frank, E., Rabin, B. S., & Turner, R. B. (2012). Chronic stress, glucocorticoid receptor resistance, inflammation, and disease risk. PNAS, 109(16), 5995–5999. https://doi.org/10.1073/pnas.1118355109

Costa, D. L. (2012). Scarring and mortality selection among Civil War POWs: A long-term mortality, morbidity, and socioeconomic follow-up. Demography, 49(4), 1185–1206. https://doi.org/10.1007/s13524-012-0125-9

Cowan, T., Hegerl, G. C., Colfescu, I., Bollasina, M., Purich, A., & Boschat, G. (2017). Factors contributing to record-breaking heat waves over the Great Plains during the 1930s Dust Bowl. Journal of Climate, 30(7), 2437–2461. https://doi.org/10.1175/JCLI-D-16-0436.1

Craig, P., Katikireddi, S. V., Leyland, A., & Popham, F. (2017). Natural experiments: An overview of methods, approaches, and contributions to public health intervention research. Annual Review of Public Health, 38, 39–56. https://doi.org/10.1146/annurev-publhealth-031816-044327

Currie, J., Zivin, J. G., Mullins, J., & Neidell, M. (2014). What do we know about short- and long-term effects of early-life exposure to pollution? Annual Review of Resource Economics, 6, 217–247. https://doi.org/10.1146/annurev-resource-100913-012610

Cutler, D. M., Miller, G., & Norton, D. M. (2007). Evidence on early-life income and late-life health from America’s Dust Bowl era. PNAS, 104(33), 13244–13249. https://doi.org/10.1073/pnas.0700035104

Easton, D. M., & Hirsch, H. R. (2008). For prediction of elder survival by a Gompertz model, number dead is preferable to number alive. AGE, 30, 311–317. https://doi.org/10.1007/s11357-008-9073-0

Egan, T. (2006). The worst hard time: The untold story of those who survived the Great American Dust Bowl. Mariner Books/Houghton Mifflin.

Elliott, M. L., Caspi, A., Houts, R. M., Ambler, A., Broadbent, J. M., Hancox, R. J., et al. (2021). Disparities in the pace of biological aging among midlife adults of the same chronological age have implications for future frailty risk and policy. Nature Aging, 1, 295–308. https://doi.org/10.1038/s43587-021-00044-4

Elwert, F., & Winship, C. (2014). Endogenous selection bias: The problem of conditioning on a collider variable. Annual Review of Sociology, 40, 31–53. https://doi.org/10.1146/annurev-soc-071913-043455

Everson-Rose, S. A., & Lewis, T. T. (2005). Psychosocial factors and cardiovascular diseases. Annual Review of Public Health, 26, 469–500. https://doi.org/10.1146/annurev.publhealth.26.021304.144542

Ferraro, K. F., & Nuriddin, T. A. (2006). Psychological distress and mortality: Are women more vulnerable? Journal of Health and Social Behavior, 47(3), 227–241. https://doi.org/10.1177/002214650604700303

Foo, H., Mather, K. A., Thalamuthu, A., & Sachdev, P. S. (2019). The many ages of man: Diverse approaches to assessing ageing-related biological and psychological measures and their relationship to chronological age. Current Opinion in Psychiatry, 32(2), 130–137. https://doi.org/10.1097/YCO.0000000000000473

Forrester, S. N., Zmora, R., Schreiner, P. J., Jacobs, D. R., Jr., Roger, V. L., Thorpe, R. J., Jr., & Kiefe, C. I. (2021). Accelerated aging: A marker for social factors resulting in cardiovascular events? SSM - Population Health, 13, 100733. https://doi.org/10.1016/j.ssmph.2021.100733

Gabriel, P. E., & Schmitz, S. (1995). Favorable self-selection and the internal migration of young white males in the United States. Journal of Human Resources, 30(3), 460–471.

Gero, K., Aida, J., Shirai, K., Kondo, K., & Kawachi, I. (2021). Dispositional optimism and disaster resilience: A natural experiment from the 2011 Great East Japan Earthquake and Tsunami. Social Science and Medicine, 273, 113777. https://doi.org/10.1016/j.socscimed.2021.113777

Goldstein, J. R., Alexander, M., Breen, C., González, A. M., Menares, F., Snyder, M., & Yildirim, U. (2021). CenSoc Mortality File: Version 2.0. Berkeley, CA: University of California. https://censoc.berkeley.edu

Goldstein, J. R., & Breen, C. (2020). Berkeley Unified Numident Mortality Database: Public administrative records for individual level mortality research [Working paper]. Berkeley, CA: University of California.

Greene, W. H. (2005). Censored data and truncated distributions. SSRN. https://doi.org/10.2139/ssrn.825845

Gutmann, M. P., Deane, G. D., Lauster, N., & Peri, A. (2005). Two population–environment regimes in the Great Plains of the United States, 1930–1990. Population and Environment, 27(2), 191–225. https://doi.org/10.1007/s11111-006-0016-3

Hamczyk, M. R., Nevado, R. M., Barettino, A., Fuster, V., & Andrés, V. (2020). Biological vs. chronological aging: JACC Focus Seminar. Journal of the American College of Cardiology, 75(8), 919–930. https://doi.org/10.1016/j.jacc.2019.11.062

Hamilton, T. G. (2014). Selection, language heritage, and the earnings trajectories of Black immigrants in the United States. Demography, 51(3), 975–1002. https://doi.org/10.1007/s13524-014-0298-5

Hansen, Z. K., & Libecap, G. D. (2004). Small farms, externalities, and the Dust Bowl of the 1930s. Journal of Political Economy, 112(3), 665–694. https://doi.org/10.1086/383102

Hornbeck, R. (2012). The enduring impact of the American Dust Bowl: Short- and long-run adjustments to environmental catastrophe. American Economic Review, 102(4), 1477–1507. https://doi.org/10.1257/aer.102.4.1477

Hudson, J. C. (1986). Who was “forest man?” Sources of migration to the Plains. Great Plains Quarterly, 6, 69–83.

Hurt, R. D. (1981). The Dust Bowl: An agricultural and social history. Nelson-Hall.

Kannisto, V. (2001). Mode and dispersion of the length of life. Population: An English Selection, 13(1), 159–171.

Keil, D. E., Buck, B., Goossens, D., McLaurin, B., Murphy, L., Leetham-Spencer, M., et al. (2018). Nevada desert dust with heavy metals suppresses IgM antibody production. Toxicology Reports, 5, 258–269. https://doi.org/10.1016/j.toxrep.2018.01.006

Lantz, P. M., House, J. S., Mero, R. P., & Williams, D. R. (2005). Stress, life events, and socioeconomic disparities in health: Results from the Americans’ Changing Lives Study. Journal of Health and Social Behavior, 46, 274–288. https://doi.org/10.1177/002214650504600305

Lee, J. A., & Gill, T. E. (2015). Multiple causes of wind erosion in the Dust Bowl. Aeolian Research, 19, 15–36. https://doi.org/10.1016/j.aeolia.2015.09.002

Li, T., & Anderson, J. J. (2013). Shaping human mortality patterns through intrinsic and extrinsic vitality processes. Demographic Research, 28, 341–372. https://doi.org/10.4054/DemRes.2013.28.12

Long, J., & Siu, H. (2018). Refugees from dust and shrinking land: Tracking the Dust Bowl migrants. Journal of Economic History, 78(4), 1001–1033. https://doi.org/10.1017/S0022050718000591

Lynch, K. M., Lyles, R. H., Waller, L. A., Abadi, A. M., Bell, J. E., & Gribble, M. O. (2020). Drought severity and all-cause mortality rates among adults in the United States: 1968–2014. Environmental Health, 19, 52. https://doi.org/10.1186/s12940-020-00597-8

Manisalidis, I., Stavropoulou, E., Stavropoulos, A., & Bezirtzoglou, E. (2020). Environmental and health impacts of air pollution: A review. Frontiers in Public Health, 8, 14. https://doi.org/10.3389/fpubh.2020.00014

Mayor, E. (2015). Gender roles and traits in stress and health. Frontiers in Psychology, 6, 779. https://doi.org/10.3389/fpsyg.2015.00779

McLeman, R. A., Dupre, J., Ford, L. B., Ford, J., Gajewski, K., & Marchildon, G. (2014). What we learned from the Dust Bowl: Lessons in science, policy, and adaptation. Population and Environment, 35, 417–440. https://doi.org/10.1007/s11111-013-0190-z

Meier, H. C. S., Hussein, M., Needham, B., Barber, S., Lin, J., Seeman, T., & Roux, A. D. (2019). Cellular response to chronic psychosocial stress: Ten-year longitudinal changes in telomere length in the Multi-Ethnic Study of Atherosclerosis. Psychoneuroendocrinology, 107, 70–81. https://doi.org/10.1016/j.psyneuen.2019.04.018

Menéndez, I., Derbyshire, E., Carrillo, T., Caballero, E., Engelbrecht, J. P., Romero, L. E., et al. (2017). Saharan dust and the impact on adult and elderly allergic patients: The effect of threshold values in the northern sector of Gran Canaria, Spain. International Journal of Environmental Health Research, 27(2), 144–160. https://doi.org/10.1080/09603123.2017.1292496

Morman, S. A., & Plumlee, G. S. (2013). The role of airborne mineral dusts in human disease. Aeolian Research, 9, 803–829. https://doi.org/10.1016/j.aeolia.2012.12.001

Moser, A., Clough-Gorr, K., & Zwahlen, M. (2015). Modeling absolute differences in life expectancy with a censored skew-normal regression approach. PeerJ, 3, e1162. https://doi.org/10.7717/peerj.1162

National Resources Conservation Service (NRCS) [cartographer]. (2012). Areas subject to severe wind erosion, 1935–1938 [map]. Scale not given. Map ID: m12545a/55. Beltsville, MD: U.S. Department of Agriculture, NRCS, Soil Science and Resource Assessment, Resource Assessment Division. https://www.nrcs.usda.gov/wps/portal/nrcs/detail/national/about/history/?cid=stelprdb1049437

Nemery, B. (1990). Metal toxicity and the respiratory tract. European Respiratory Journal, 3(2), 202–219.

Nes, L. S., & Segerstrom, S. C. (2006). Dispositional optimism and coping: A meta-analytic review. Personality and Social Psychology Review, 10(3), 235–251. https://doi.org/10.1207/s15327957pspr1003_3

Nielsen, N. R., Kristensen, T. S., Schnohr, P., & Grønbæk, M. (2008). Perceived stress and cause-specific mortality among men and women: Results from a prospective cohort study. American Journal of Epidemiology, 168(5), 481–491. https://doi.org/10.1093/aje/kwn157

O’Brien, L. V., Berry, H. L., Coleman, C., & Hanigan, I. C. (2014). Drought as a mental health exposure. Environmental Research, 131, 181–187. https://doi.org/10.1016/j.envres.2014.03.014

Ohio River Valley Water Sanitation Commission (ORSANCO) [cartographer]. (2013). Counties of the Ohio River Basin [map]. In: Characterizing the water-use in the Ohio River Basin [map]. Scale not given. Cincinnati, OH: ORSANCO. https://www.orsanco.org/wp-content/uploads/2016/09/Characterizing-Water-Use-in-the-Ohio-River-Water-Resources-Initiative.pdf

Pedersen, S. S., von Känel, R., Tully, P. J., & Denollet, J. (2017). Psychosocial perspectives in cardiovascular disease. European Journal of Preventive Cardiology, 24(3S), 108–115. https://doi.org/10.1177/2047487317703827

Polain, J. D., Berry, H. L., & Hoskin, J. O. (2011). Rapid change, climate adversity and the next ‘big dry’: Older farmers’ mental health. Australian Journal of Rural Health, 19(5), 239–243. https://doi.org/10.1111/j.1440-1584.2011.01219.x

Porter, J. C., & Finchum, G. A. (2009). Redefining the Dust Bowl region via popular perception and geotechnology. Great Plains Research, 19(2), 201–214.

Rentscher, K. E., Carroll, J. E., & Mitchell, C. (2020). Psychosocial stressors and telomere length: A current review of the science. Annual Review of Public Health, 41, 223–245. https://doi.org/10.1146/annurev-publhealth-040119-094239

Robertson, H. T., & Allison, D. B. (2012). A novel generalized normal distribution for human longevity and other negatively skewed data. PLoS ONE, 7(5), e37025. https://doi.org/10.1371/journal.pone.0037025

Rodgers, J., Cuevas, A. G., Williams, D. R., Kawachi, I., & Subramanian, S. V. (2021). The relative contributions of behavioral, biological, and psychological risk factors in the association between psychosocial stress and all-cause mortality among middle- and older-aged adults in the USA. GeroScience. https://doi.org/10.1007/s11357-020-00319-5

Romm, J. (2011). The next dust bowl. Nature, 478, 450–451. https://doi.org/10.1038/478450a

Ruggles, S., Flood, S., Goeken, R., Grover, J., Meyer, E., Pacas, J., & Sobek, M. (2020). IPUMS USA: Version 10.0. Minneapolis, MN: IPUMS.

Russ, T. C., Stamatakis, E., Hamer, M., Starr, J. M., Kivimäki, M., & Batty, G. D. (2012). Association between psychological distress and mortality: Individual participant pooled analysis of 10 prospective cohort studies. BMJ, 345, e4933. https://doi.org/10.1136/bmj.e4933

Schenker, M. B., Pinkerton, K. E., Mitchell, D., Vallyathan, V., Elvine-Kreis, B., & Green, F. H. Y. (2009). Pneumoconiosis from agricultural dust exposure among young California farmworkers. Environmental Health Perspectives, 117(6), 988–994. https://doi.org/10.1289/ehp.0800144

Schneiderman, N., Ironson, G., & Siegel, S. D. (2005). Stress and health: Psychological, behavioral, and biological determinants. Annual Review of Clinical Psychology, 1, 607–628. https://doi.org/10.1146/annurev.clinpsy.1.102803.144141

Seiler, A., Fagundes, C. P., & Christian, L. M. (2020). The impact of everyday stressors on the immune system and health. In A. Choukèr (Ed.), Stress challenges and immunity in space (pp. 71–92). Cham, Switzerland: Springer.

Stain, H. J., Kelly, B., Carr, V. J., Lewin, T. J., Fitzgerald, M., & Fragar, L. (2011). The psychological impact of chronic environmental adversity: Responding to prolonged drought. Social Science and Medicine, 73(11), 1593–1599. https://doi.org/10.1016/j.socscimed.2011.09.016

Stauber, B. R., & Regan, M. M. (1936). The farm real estate situation, 1935–1936. USDA Circular No. 417. Washington, DC: U.S. Department of Agriculture.

Stoeldraijer, L., van Duin, C., van Wissen, L., & Janssen, F. (2013). Impact of different mortality forecasting methods and explicit assumptions on projected future life expectancy: The case of the Netherlands. Demographic Research, 29, 323–354. https://doi.org/10.4054/DemRes.2013.29.13

Tong, D. Q., Wang, J. X. L., Gill, T. E., Lei, H., & Wang, B. (2017). Intensified dust storm activity and Valley fever infection in the southwestern United States. Geophysical Research Letters, 44(9), 4304–4312. https://doi.org/10.1002/2017GL073524

Uchiyama, I. (2013). Chronic health effects of inhalation of dust or sludge. Japan Medical Association Journal, 56(2), 91–95.

UN Population Division. (2013). Life expectancy and mortality at older ages. PopFacts No. 2013/8. New York: United Nations, Department of Economic and Social Affairs. https://www.un.org/en/development/desa/population/publications/pdf/popfacts/PopFacts_2013-8_new.pdf

Walter, S., Mackenbach, J., Vokó, Z., Lhachimi, S., Ikram, M. A., Uitterlinden, A. G., et al. (2012). Genetic, physiological, and lifestyle predictors of mortality in the general population. American Journal of Public Health, 102(4), e3–e10. https://doi.org/10.2105/AJPH.2011.300596

Wilhite, D. A., & Glantz, M. H. (1985). Understanding the drought phenomenon: The role of definitions. Water International, 10(3), 111–120. https://doi.org/10.1080/02508068508686328

Worster, D. (1979). Dust Bowl: The Southern Plains in the 1930s. Oxford University Press.

Worster, D. (1986). The Dirty Thirties: A study in agricultural capitalism. Great Plains Quarterly, 6, 107–116.

Zarulli, V. (2013). The effect of mortality shocks on the age-pattern of adult mortality. Population, 68(2), 265–292. https://doi.org/10.3917/popu.1302.0303

Zarulli, V. (2016). Unobserved heterogeneity of frailty in the analysis of socioeconomic differences in health and mortality. European Journal of Population, 32, 55–72. https://doi.org/10.1007/s10680-015-9361-1

Zuo, W., Jiang, S., Guo, Z., Feldman, M. W., & Tuljapurkar, S. (2018). Advancing front of old-age human survival. PNAS, 115(44), 11209–11214. https://doi.org/10.1073/pnas.1812337115

Acknowledgements

The author gratefully acknowledges Dr. Joshua Goldstein, Dr. Leora Lawton, Dr. Elizabeth Fussell, and session attendees at the 2021 PAA Annual Meeting for their valuable input and guidance.

Funding

The research leading to these results received funding from the National Institute on Aging under grant #R01AG058940.

Ethics declarations

Competing interests

The author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Atherwood, S. Does a prolonged hardship reduce life span? Examining the longevity of young men who lived through the 1930s Great Plains drought. Popul Environ 43, 530–552 (2022). https://doi.org/10.1007/s11111-022-00398-w

DOI: https://doi.org/10.1007/s11111-022-00398-w