Abstract

It is well known that Sobolev-type orthogonal polynomials with respect to measures supported on the real line satisfy higher-order recurrence relations and these can be expressed as a (2N + 1)-banded symmetric semi-infinite matrix. In this paper, we state the connection between these (2N + 1)-banded matrices and the Jacobi matrices associated with the three-term recurrence relation satisfied by the standard sequence of orthonormal polynomials with respect to the 2-iterated Christoffel transformation of the measure.


1 Introduction

Given a vector of measures (μ0, μ1,⋯, μm) such that μk is supported on a set Ek, k = 0, 1,⋯, m, of the real line, consider the Sobolev inner product

\[\langle f,g\rangle _{S}=\sum _{k=0}^{m}\int _{E_{k}}f^{(k)}(x)g^{(k)}(x)d\mu _{k}(x).\]

Several examples of sequences of orthogonal polynomials with respect to the above inner product have been studied in the literature (see [23] for a recent survey):

1. When Ek, k = 0, 1,⋯, m, are infinite subsets of the real line (continuous Sobolev).

2. When E0 is an infinite subset of the real line and Ek, k = 1,⋯, m, are finite subsets (Sobolev-type).

3. When Em is an infinite subset of the real line and Ek, k = 0,⋯, m − 1, are finite subsets.

In the above cases, the three-term recurrence relation satisfied by every sequence of orthogonal polynomials with respect to a measure supported on an infinite subset of the real line no longer holds. This is a direct consequence of the fact that the operator of multiplication by x is not symmetric with respect to any of the above inner products.

In the Sobolev-type case, one gets a multiplication operator by a polynomial closely related to the support of the discrete measures. In [17], an illustrative example with \(d\mu _{0}=x^{\alpha }e^{-x}dx\), α > − 1, \(x\in \lbrack 0,+\infty )\), and dμk(x) = Mkδ(x), Mk ≥ 0, k = 1, 2,⋯, m, has been studied. In general, there exists a symmetric multiplication operator for a general Sobolev inner product if and only if the measures μ1,⋯,μm are discrete (see [10]). On the other hand, [8] studies the general inner products for which the multiplication operator by a polynomial is symmetric. The representation of such inner products is given there, as well as the associated inner product. Assuming some extra conditions, one gets a Sobolev-type inner product. Notice that these facts are closely related to the higher-order recurrence relations satisfied by the sequences of orthonormal polynomials with respect to the above general inner products. A connection with matrix orthogonal polynomials has been stated in [9].

When dealing with the Sobolev-type inner product, many contributions have emphasized the algebraic properties of the corresponding sequences of orthogonal polynomials in terms of the polynomials orthogonal with respect to the measure μ0. The case m = 1 has been studied in [1], where representation formulas for the new family as well as the distribution of their zeros have been analyzed. The particular case of Laguerre Sobolev-type orthogonal polynomials has been introduced and analyzed in depth in [19]. Outer ratio asymptotics when the measure belongs to the Nevai class and some extensions to a more general framework of Sobolev-type inner products have been analyzed in [22] and [20]. For measures supported on unbounded intervals, asymptotic properties of Sobolev-type orthogonal polynomials have been studied for Laguerre measures (see [14, 24]) and, in a more general framework, in [21].

The aim of our contribution is to analyze the higher-order recurrence relation that a sequence of Sobolev-type orthonormal polynomials satisfies when dμ0 = dμ + Mδ(x − c) and dμ1 = Nδ(x − c), where M, N are nonnegative real numbers. In a first step, we obtain connection formulas between such Sobolev-type orthonormal polynomials and the standard ones associated with the measures dμ and \((x-c)^{2}d\mu\), respectively. A matrix analysis of the five diagonal symmetric matrix associated with such a higher-order recurrence relation is presented, taking into account the QR factorization of the shifted symmetric Jacobi matrix associated with the orthonormal polynomials with respect to the measure dμ. The shifted Jacobi matrix associated with \((x-c)^{2}d\mu\) is RQ (see [4, 16]). Our approach is quite different and is based on the iteration of the Cholesky factorization of the symmetric Jacobi matrices associated with dμ and (x − c)dμ, respectively (see [2, 12]).

These polynomial perturbations of measures are known in the literature as Christoffel perturbations (see [13] and [29]). They constitute examples of linear spectral transformations. The set of linear spectral transformations is generated by Christoffel and Geronimus transformations (see [29]). The connection with matrix analysis appears in [6] and [7] in terms of an inverse problem for bilinear forms. On the other hand, Christoffel transformations of the above type are related to Gaussian rules, as studied in [12]. For a more general framework about perturbations of bilinear forms and Hessenberg matrices as representations of a polynomial multiplication operator in terms of sequences of orthonormal polynomials associated with such bilinear forms, see [3].

The structure of the manuscript is as follows. Section 2 contains the basic background about polynomial sequences orthogonal with respect to a measure supported on an infinite set of the real line. We will call them standard orthogonal polynomial sequences. In Section 3, we deduce the coefficients of the three-term recurrence relation for the orthonormal polynomials associated with the measure \((x-c)^{2}d\mu\). In Section 4, we present several connection formulas between the sequences of standard orthonormal polynomials associated with the measures dμ and \((x-c)^{2}d\mu\) and the orthonormal polynomials with respect to a Sobolev-type inner product. We give alternative proofs to those presented in [15]. In Section 5, we study the five-term recurrence relation that orthonormal polynomials with respect to the Sobolev-type inner product satisfy. Section 6 deals with the connection between the shifted Jacobi matrices associated with the measures dμ and \((x-c)^{2}d\mu\) in terms of QR factorizations. Then, taking into account the Cholesky factorization of the symmetric five diagonal matrix associated with the multiplication operator by \((x-c)^{2}\) in terms of the Sobolev-type orthonormal polynomials, by commuting the factors we get the square of the shifted Jacobi matrix associated with the measure \((x-c)^{2}d\mu\). Finally, in Section 7, we show an illustrative example in the framework of Laguerre-Sobolev-type inner products when c = − 1. Notice that in the literature, authors have focused on the case c = 0 and on the analysis of the differential operator having the above polynomials as eigenfunctions (see [18, 25] and [26]).

2 Preliminaries

Let μ be a finite and positive Borel measure supported on an infinite subset E of the real line such that all the integrals

\[\mu _{n}=\int _{E}x^{n}d\mu (x)\]

exist for n = 0, 1, 2,…. μn is said to be the moment of order n of the measure μ. The measure μ is said to be absolutely continuous with respect to the Lebesgue measure if there exists a non-negative function ω(x) such that dμ(x) = ω(x)dx.

In the sequel, let \(\mathbb {P}\) denote the linear space of polynomials in one real variable with real coefficients, and let {Pn(x)}n≥ 0 be the sequence of polynomials in \(\mathbb {P}\) with leading coefficient equal to one (monic OPS, or MOPS in short), orthogonal with respect to the inner product \(\langle \cdot ,\cdot \rangle _{\mu }:\mathbb {P}\times \mathbb {P}\rightarrow \mathbb {R}\) associated with μ

\[\langle f,g\rangle _{\mu }=\int _{E}f(x)g(x)d\mu (x).\tag{1}\]

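It induces the norm \(||f||_{\mu }^{2}=\langle f,f\rangle _{\mu }\). Under these considerations, these polynomials satisfy the following three-term recurrence relation (TTRR, in short)

\[xP_{n}(x)=P_{n+1}(x)+\beta _{n}P_{n}(x)+\gamma _{n}P_{n-1}(x),\quad n\geq 0,\quad P_{-1}(x)=0,\quad P_{0}(x)=1,\tag{2}\]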

where for every n ≥ 1, γn is a positive real number and βn, n ≥ 0 is a real number.
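As a concrete instance of the TTRR, the following minimal Python sketch evaluates monic Laguerre polynomials. It assumes the monic form \(xP_{n}=P_{n+1}+\beta _{n}P_{n}+\gamma _{n}P_{n-1}\) together with the classical Laguerre coefficients \(\beta _{n}=2n+\alpha +1\) and \(\gamma _{n}=n(n+\alpha )\); the function name `monic_laguerre` is ours and purely illustrative.

```python
def monic_laguerre(n, x, alpha=0):
    """Evaluate the monic Laguerre polynomial P_n at x with the TTRR.

    Assumed coefficients for the weight x^alpha e^{-x} on [0, inf):
    beta_k = 2k + alpha + 1 and gamma_k = k (k + alpha).
    """
    p_prev, p = 0, 1                      # P_{-1} = 0, P_0 = 1
    for k in range(n):
        beta = 2 * k + alpha + 1
        gamma = k * (k + alpha)
        # P_{k+1}(x) = (x - beta_k) P_k(x) - gamma_k P_{k-1}(x)
        p_prev, p = p, (x - beta) * p - gamma * p_prev
    return p


if __name__ == "__main__":
    # For alpha = 0 the recurrence gives P_2(x) = x^2 - 4x + 2.
    x = 3
    print(monic_laguerre(2, x), x * x - 4 * x + 2)
```

Both printed numbers coincide, since two steps of the recurrence with \(\beta _{0}=1\), \(\beta _{1}=3\), \(\gamma _{1}=1\) give \((x-3)(x-1)-1=x^{2}-4x+2\).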

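The n th reproducing kernel for ω(x) is

\[K_{n}(x,y)=\sum _{k=0}^{n}\frac {P_{k}(x)P_{k}(y)}{||P_{k}||_{\mu }^{2}}.\tag{3}\]

Because of the Christoffel-Darboux formula, see [5], it may also be expressed as

\[K_{n}(x,y)=\frac {1}{||P_{n}||_{\mu }^{2}}\frac {P_{n+1}(x)P_{n}(y)-P_{n+1}(y)P_{n}(x)}{x-y}.\tag{4}\]

The confluent formula becomes

\[K_{n}(x,x)=\sum _{k=0}^{n}\frac {[P_{k}(x)]^{2}}{||P_{k}||_{\mu }^{2}}=\frac {P_{n+1}^{\prime }(x)P_{n}(x)-P_{n}^{\prime }(x)P_{n+1}(x)}{||P_{n}||_{\mu }^{2}}.\tag{5}\]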

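We introduce the following usual notation for the partial derivatives of the n th reproducing kernel Kn(x, y)

\[K_{n}^{(i,j)}(x,y)=\frac {\partial ^{i+j}K_{n}(x,y)}{\partial x^{i}\partial y^{j}}=\sum _{k=0}^{n}\frac {P_{k}^{(i)}(x)P_{k}^{(j)}(y)}{||P_{k}||_{\mu }^{2}}.\]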

We will use the expression of the first y-derivative of (3) evaluated at y = c

and the following confluent formulas

whose proof can be found in [15, Sec. 2.1.2].
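The Christoffel-Darboux representation of the kernel can be checked in exact arithmetic. The sketch below is ours and assumes the Laguerre weight with α = 0, for which the monic recurrence coefficients are \(\beta _{k}=2k+1\), \(\gamma _{k}=k^{2}\) and the norms are \(||P_{k}||_{\mu }^{2}=(k!)^{2}\). It compares the defining sum of \(K_{n}(x,y)\) with the closed quotient form.

```python
from fractions import Fraction
from math import factorial


def monic_laguerre_values(n, x):
    """Return [P_0(x), ..., P_n(x)] for the monic Laguerre family, alpha = 0."""
    vals = [Fraction(1)]
    prev = Fraction(0)                    # P_{-1} = 0
    for k in range(n):
        nxt = (x - (2 * k + 1)) * vals[-1] - k * k * prev
        prev = vals[-1]
        vals.append(nxt)
    return vals


def kernel_sum(n, x, y):
    """K_n(x, y) as the sum of P_k(x) P_k(y) / ||P_k||^2, with ||P_k||^2 = (k!)^2."""
    px, py = monic_laguerre_values(n, x), monic_laguerre_values(n, y)
    return sum(px[k] * py[k] / factorial(k) ** 2 for k in range(n + 1))


def kernel_cd(n, x, y):
    """K_n(x, y) from the Christoffel-Darboux quotient (requires x != y)."""
    px, py = monic_laguerre_values(n + 1, x), monic_laguerre_values(n + 1, y)
    num = px[n + 1] * py[n] - py[n + 1] * px[n]
    return num / ((x - y) * factorial(n) ** 2)


if __name__ == "__main__":
    x, y = Fraction(1, 3), Fraction(-1)
    print(kernel_sum(4, x, y) == kernel_cd(4, x, y))
```

Rational arithmetic is used so that the two expressions can be compared with `==` rather than up to a floating-point tolerance.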

We will denote by {pn(x)}n≥ 0 the orthonormal polynomial sequence with respect to the measure μ. Obviously,

Notice that

Using orthonormal polynomials, the Christoffel-Darboux formula (4) reads

and its confluent form is

Next, we define the Christoffel canonical transformation of a measure μ (see [2, 28] and [29]). Let μ be a positive Borel measure supported on an infinite subset \(E\subseteq \mathbb {R}\), and assume that c does not belong to the interior of the convex hull of E. Here and in the sequel, \(\{P_{n}^{[k]}(x)\}_{n\geq 0}\) will denote the MOPS with respect to the inner product

\[\langle f,g\rangle _{[k]}=\int _{E}f(x)g(x)d\mu ^{[k]},\quad d\mu ^{[k]}=(x-c)^{k}d\mu ,\quad k\geq 0.\tag{10}\]

\(\{P_{n}^{[k]}(x)\}_{n\geq 0}\) is said to be the k-iterated Christoffel MOPS with respect to the above standard inner product. If k = 1, we have the Christoffel canonical perturbation of μ. It is well known that, in such a case, Pn(c)≠ 0, and (see [5, (7.3)])

are the monic polynomials orthogonal with respect to the modified measure \(d\mu ^{[1]}\). They are known in the literature as monic kernel polynomials. If k > 1, then we have the k-iterated Christoffel transformation of dμ. In the sequel, we will denote

and \(x_{n,r}^{[k]},\) r = 1, 2,..., n, will denote the zeros of \(P_{n}^{[k]}(x)\) arranged in an increasing order. Since \(P_{n}^{[2]}(x)\) are the polynomials orthogonal with respect to (10) when k = 2 we have

Notice that from (5) we have

and hence the denominator in (11) is nonzero for every n ≥ 0. Thus, from (11), we get

where

Similar determinantal formulas can be obtained for k > 2. For orthonormal polynomials, the above expression reads

or, equivalently,

Furthermore, from [27, Theorem 2.5], we conclude that

On the other hand, taking (12) into account

which implies that

Replacing in (14), the orthonormal version of the connection formula (12) reads

In this paper, we will focus our attention on the following Sobolev-type inner product

\[\langle f,g\rangle _{S}=\int _{E}f(x)g(x)d\mu (x)+Mf(c)g(c)+Nf^{\prime }(c)g^{\prime }(c),\tag{16}\]

where μ is a positive Borel measure supported on \(E=[a,b]\subseteq \mathbb {R}\), c∉E, and M, N ≥ 0. In general, E can be a bounded or unbounded interval of the real line. Let \(\{S_{n}^{M,\;N}(x)\}_{n \geq 0}\) denote the monic orthogonal polynomial sequence (MOPS in short) with respect to (16). These polynomials are known in the literature as Sobolev-type or discrete Sobolev orthogonal polynomials. It is worth pointing out that many properties of the standard orthogonal polynomials are lost when an inner product such as (16) is considered.
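Before moving on, the Christoffel transformation with k = 1 introduced earlier in this section can be illustrated numerically. The sketch below is ours. It assumes the classical kernel-polynomial formula \(P_{n}^{[1]}(x)=(x-c)^{-1}\left [P_{n+1}(x)-\frac {P_{n+1}(c)}{P_{n}(c)}P_{n}(x)\right ]\) from [5], the Laguerre weight \(e^{-x}dx\) on \([0,+\infty )\) (whose moments are k!), and c = − 1. It checks that the resulting polynomials are orthogonal with respect to \((x-c)d\mu\). Polynomials are stored as coefficient lists in ascending powers.

```python
from fractions import Fraction
from math import factorial


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_eval(p, x):
    return sum(a * x ** k for k, a in enumerate(p))


def integrate_laguerre(p):
    """Integral of p(x) e^{-x} over [0, inf), using the moments k!."""
    return sum(a * factorial(k) for k, a in enumerate(p))


def monic_laguerre_coeffs(n):
    """Coefficient lists of P_0, ..., P_n (alpha = 0), built with the TTRR."""
    polys, prev = [[Fraction(1)]], [Fraction(0)]
    for k in range(n):
        cur = polys[-1]
        nxt = poly_mul([Fraction(-(2 * k + 1)), Fraction(1)], cur)
        for i, a in enumerate(prev):
            nxt[i] -= k * k * a           # subtract gamma_k P_{k-1}
        prev = cur
        polys.append(nxt)
    return polys


def divide_by_linear(p, c):
    """Return q with p(x) = (x - c) q(x), assuming p(c) = 0 (synthetic division)."""
    q = [Fraction(0)] * (len(p) - 1)
    carry = Fraction(0)
    for k in range(len(p) - 1, 0, -1):
        carry = p[k] + c * carry
        q[k - 1] = carry
    return q


def kernel_polynomial(n, c, polys):
    """Monic kernel polynomial P_n^{[1]} built from P_n and P_{n+1}."""
    pn, pn1 = polys[n], polys[n + 1]
    ratio = poly_eval(pn1, c) / poly_eval(pn, c)
    num = [a - ratio * (pn[i] if i < len(pn) else 0) for i, a in enumerate(pn1)]
    return divide_by_linear(num, c)


def inner_christoffel(p, q, c):
    """Inner product with respect to (x - c) e^{-x} dx on [0, inf)."""
    return integrate_laguerre(poly_mul(poly_mul(p, q), [-c, Fraction(1)]))


if __name__ == "__main__":
    c = Fraction(-1)
    polys = monic_laguerre_coeffs(5)
    ker = [kernel_polynomial(n, c, polys) for n in range(4)]
    print([[inner_christoffel(p, q, c) == 0 for q in ker] for p in ker])
```

The printed matrix of booleans is `False` on the diagonal and `True` elsewhere, that is, the polynomials are mutually orthogonal for the modified measure.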

3 The TTRR for the 2-iterated orthogonal polynomials

In order to obtain the corresponding symmetric Jacobi matrix, in this section we will find the coefficients of the three-term recurrence relation satisfied by the 2-iterated orthonormal polynomials \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\). First, we deal with the monic orthogonal polynomials \(\{P_{n}^{[2]}(x)\}_{n\geq 0}\). Since it is a standard sequence, we will have

where

In order to obtain the explicit expression of the above coefficients, we first study the numerator in κn. Taking into account (10) and (12), we have

Next, applying (2)

Taking into account (12)

we obtain

Thus,

Next, we study the denominator in the expression of κn. From (12), we have

Hence,

where

Hence, we have proved the following

Proposition 1

The monic sequence \(\{P_{n}^{[2]}(x)\}_{n\geq 0}\) satisfies the three-term recurrence relation

with \(P_{-1}^{[2]}(x)=0\), \(P_{0}^{[2]}(x)=1\), and

where, taking into account the explicit expressions for dn and en given in (12), we also have

Although all the above coefficients are well known in the literature, for the sake of completeness we now present, as a corollary, their counterparts for the three-term recurrence relation satisfied by the 2-iterated Christoffel perturbed orthonormal polynomials \(\{p_{n}^{[2]}(x)\}_{n \geq 0}\), since we will use them in Section 7 for the computation of the entries of the Jacobi matrix J[2]. Observe that the orthonormal version of Proposition 1 is

and, according to [13, Th. 1.29, p. 12–13],

so we can conclude that

Therefore,

As a consequence,

Replacing in κn these alternative expressions for en and dn we have

Therefore,

We have then proved the following

Corollary 1

The orthonormal polynomial sequence \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\) satisfies the three-term recurrence relation

with \(p_{-1}^{[2]}(x)=0\), \(p_{0}^{[2]}(x)=1/\sqrt {\tau _{0}}\), where

where en, and dn are given in (17).

4 Connection formulas for Sobolev-type and standard orthogonal polynomials

As we have seen in the previous section, the connection formulas are the main tool to study the analytical properties of new families of OPS, in terms of other families of OPS with well-known analytical properties. Indeed, the problem of finding such expressions is called the connection problem, and it is of great importance in this context.

In this section, we present some results from [15] which will be useful later. We will give some alternative proofs of them. From now on, let us denote by \(\{s_{n}^{M,\;N}\}_{n\geq 0}\), {pn}n≥ 0 the sequences of polynomials orthonormal with respect to (16) and (1), respectively. We will write

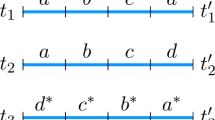

In the sequel, the following notation will be useful. For every \(k\in \mathbb {N}_{0}\), let us define J[k] as the semi-infinite symmetric Jacobi matrix associated with the measure \((x-c)^{k}d\mu\), verifying

\[x\boldsymbol {\bar {p}}^{[k]}=\mathbf {J}_{[k]}\boldsymbol {\bar {p}}^{[k]},\]

where \(\boldsymbol {\bar {p}}^{[k]}\) stands for the semi-infinite column vector with orthonormal polynomial entries \(\boldsymbol {\bar {p}} ^{[k]}=[p_{0}^{[k]}(x),p_{1}^{[k]}(x),p_{2}^{[k]}(x),{\ldots } ]^{\intercal }\), \(\{p_{n}^{[k]}(x)\}_{n\geq 0}\) being the orthonormal polynomial sequence with respect to the measure \((x-c)^{k}d\mu\) in (10). One has \(\boldsymbol {\bar {p}}^{[0]}=\boldsymbol {\bar {p}}=[p_{0}(x),p_{1}(x),p_{2}(x),{\ldots } ]^{\intercal }\), {pn(x)}n≥ 0 being the orthonormal polynomial sequence with respect to the standard measure μ, and J[0] = J is the corresponding Jacobi matrix.
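For k = 0, the relation between the Jacobi matrix and the vector of orthonormal polynomials can be illustrated on a finite section. The sketch below is ours and assumes the Laguerre weight with α = 0, for which the orthonormal polynomials with positive leading coefficient satisfy \(xp_{n}=(n+1)p_{n+1}+(2n+1)p_{n}+np_{n-1}\) with \(p_{0}=1\). It checks the rows of \(\mathbf {J}\boldsymbol {\bar {p}}=x\boldsymbol {\bar {p}}\) that are not affected by truncation.

```python
from fractions import Fraction


def orthonormal_laguerre_values(n, x):
    """[p_0(x), ..., p_n(x)] for the weight e^{-x} on [0, inf)."""
    vals = [Fraction(1)]                  # p_0 = 1: the total mass of e^{-x} dx is 1
    prev = Fraction(0)                    # p_{-1} = 0
    for k in range(n):
        nxt = ((x - (2 * k + 1)) * vals[-1] - k * prev) / (k + 1)
        prev = vals[-1]
        vals.append(nxt)
    return vals


def jacobi_laguerre(size):
    """Leading size x size section of the symmetric Jacobi matrix J."""
    jac = [[Fraction(0)] * size for _ in range(size)]
    for i in range(size):
        jac[i][i] = Fraction(2 * i + 1)
        if i + 1 < size:
            jac[i][i + 1] = jac[i + 1][i] = Fraction(i + 1)
    return jac


def recurrence_residuals(size, x):
    """Rows 0..size-2 of J p - x p; the last row is altered by truncation."""
    p = orthonormal_laguerre_values(size - 1, x)
    jac = jacobi_laguerre(size)
    return [sum(jac[i][j] * p[j] for j in range(size)) - x * p[i]
            for i in range(size - 1)]


if __name__ == "__main__":
    print(recurrence_residuals(7, Fraction(3, 7)))
```

All residuals vanish exactly. Only the last row of the finite section would differ, because it lacks the entry multiplying the next polynomial.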

Next, we will present an expansion of the monic polynomials \(S_{n}^{M,\;N}(x)\) in terms of polynomials Pn(x) orthogonal with respect to μ. When necessary, we refer the reader to [15, Th. 5.1] for alternative proofs to those presented here.

Lemma 1

where

Proof 1

We search for the expansion

where

From these coefficients, (19) follows. Next, considering its first derivative with respect to x, and taking x = c we get the following linear system

It is well known (see [1]) that a linear system as above has a unique solution if and only if the determinant

for each fixed \(n\in \mathbb {N}\). Indeed, it is important to point out that (22) is a necessary and sufficient condition for the existence of every Sobolev-type polynomial \(S_{n}^{M,N}(x)\) of n-th degree. Since, from the very beginning in (16), we restrict ourselves to the case in which M, N ≥ 0, c lies outside the interior of the convex hull of \(E\subseteq \mathbb {R}\), and {Kn(c, c)}n≥ 0, \(\{K_{n}^{(0,\;1)}(c,\;c)\}_{n\geq 0}\), and \(\{K_{n}^{(1,\;1)}(c,\;c)\}_{n\geq 0}\) are all monotonic non-decreasing sequences (this follows from their definitions (5), (7) and (8)), we next check that (22) holds for each fixed \(n\in \mathbb {N}\).

That determinant can be rewritten as

Under the aforementioned conditions, \(MK_{n-1}(c,c)\) (respectively \(NK_{n-1}^{(1,1)}(c,c)\)) equals zero if, and only if \(M=0\) (respectively \(N=0\)). Next, concerning the term in square brackets above, it is a simple matter to observe the following Cauchy-Schwarz inequality

so the term in square brackets is always greater than or equal to zero. Therefore, we can finally assert that

Hence, solving the above linear system for \(S_{n}^{M,\;N}(c)\) and \([S_{n}^{M,\;N}]^{\prime }(c)\) we obtain (20) and (21).

This completes the proof.
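The positivity argument in the proof above can be illustrated numerically. The sketch below is ours. It assumes the Laguerre weight with α = 0 and c = − 1, and it assumes that the determinant of the 2 × 2 system has the form \(1+MK_{n-1}(c,c)+NK_{n-1}^{(1,1)}(c,c)+MN\left [K_{n-1}(c,c)K_{n-1}^{(1,1)}(c,c)-(K_{n-1}^{(0,1)}(c,c))^{2}\right ]\), which is what one obtains when the coefficient matrix has diagonal entries \(1+MK_{n-1}(c,c)\), \(1+NK_{n-1}^{(1,1)}(c,c)\) and off-diagonal entries \(NK_{n-1}^{(0,1)}(c,c)\), \(MK_{n-1}^{(1,0)}(c,c)\).

```python
from fractions import Fraction
from math import factorial


def values_and_derivatives(n, c):
    """[P_k(c)] and [P_k'(c)], k = 0..n, for monic Laguerre polynomials (alpha = 0)."""
    vals, ders = [Fraction(1)], [Fraction(0)]
    pv, pd = Fraction(0), Fraction(0)     # P_{-1}(c) and P_{-1}'(c)
    for k in range(n):
        beta, gamma = 2 * k + 1, k * k
        nv = (c - beta) * vals[-1] - gamma * pv
        # derivative of the TTRR: P_{k+1}' = P_k + (x - beta_k) P_k' - gamma_k P_{k-1}'
        nd = vals[-1] + (c - beta) * ders[-1] - gamma * pd
        pv, pd = vals[-1], ders[-1]
        vals.append(nv)
        ders.append(nd)
    return vals, ders


def kernels_at(n, c):
    """Return K_n(c,c), K_n^{(0,1)}(c,c), K_n^{(1,1)}(c,c) with ||P_k||^2 = (k!)^2."""
    vals, ders = values_and_derivatives(n, c)
    k00 = sum(v * v / factorial(k) ** 2 for k, v in enumerate(vals))
    k01 = sum(v * d / factorial(k) ** 2 for k, (v, d) in enumerate(zip(vals, ders)))
    k11 = sum(d * d / factorial(k) ** 2 for k, d in enumerate(ders))
    return k00, k01, k11


def sobolev_determinant(n, c, M, N):
    """Determinant of the 2 x 2 system, in the form assumed in the lead-in."""
    k00, k01, k11 = kernels_at(n - 1, c)
    return 1 + M * k00 + N * k11 + M * N * (k00 * k11 - k01 ** 2)


if __name__ == "__main__":
    c = Fraction(-1)
    for n in range(1, 6):
        k00, k01, k11 = kernels_at(n - 1, c)
        print(n, k00 * k11 - k01 ** 2 >= 0, sobolev_determinant(n, c, 1, 1) >= 1)
```

The bracketed term is a Gram determinant of the two vectors \((P_{k}(c)/||P_{k}||_{\mu })_{k}\) and \((P_{k}^{\prime }(c)/||P_{k}||_{\mu })_{k}\), which is why the Cauchy-Schwarz inequality applies.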

From the above lemma, we can also express \(S_{n}^{M,\;N}(x)\) as follows

In terms of the orthonormal polynomials, (19) becomes

As a direct consequence of Lemma 1, we get the following result concerning the norm of the Sobolev-type polynomials \(S_{n}^{M,\;N}\)

Lemma 2

For \(c\in \mathbb {R}_{+}\) the norm of the monic Sobolev-type polynomials \(S_{n}^{M,\;N}\), orthogonal with respect to (16) is

Proof 2

From (19), we have

and according to (16) we get

This completes the proof.

Next, we represent the Sobolev-type orthogonal polynomials in terms of the polynomial kernels associated with the sequence of orthonormal polynomials {pn(x)}n≥ 0 and its derivatives. Another proof of this result can be found in [15, Prop. 5.6, p. 115].

Lemma 3

The sequence of Sobolev-type orthonormal polynomials \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\) can be expressed as

where

Proof 3

From (9)

Substituting the above relations into (23) yields

This completes the proof.

Next, we expand the polynomials {pn(x)}n≥ 0 in terms of the polynomials \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\). This result is already addressed in [15, Prop. 5.7, p.116] as well as in [11] but we include here an alternative proof.

Lemma 4

The sequence of polynomials {pn(x)}n≥ 0, orthonormal with respect to dμ, is expressed in terms of the 2-iterated orthonormal polynomials \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\) as follows

where

Proof 4

Taking into account (12), (15) and (14), for the first coefficient, we immediately have

For the second coefficient, from (14), we have

Finally, for the last coefficient, we get

This completes the proof.

Next, let us obtain a third representation for the Sobolev-type OPS in terms of the polynomials orthonormal with respect to (x − c)2dμ. This expression will be very useful to find the connection of these polynomials with the matrix orthogonal polynomials, and we include the proof for the convenience of the reader.

Theorem 1

Let \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\) be the sequence of Sobolev-type polynomials orthonormal with respect to (16), and let \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\) be the sequence of polynomials orthonormal with respect to the inner product (10) with k = 2. Then, the following expression holds

where,

Proof 5

For γn,n, matching the leading coefficients of \(s_{n}^{M,\;N}(x)\) and \(p_{n}^{[2]}(x)\), it is straightforward to see that

Next, from (15),

For γn− 1,n we need some extra work. From (24), we have

The last integral can be computed using (23)

Thus,

Finally, for the last coefficient, we have

This completes the proof.

5 The five-term recurrence relation

In this section, we will obtain the five-term recurrence relation that the sequence of Sobolev-type orthonormal polynomials \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\) satisfies. We use orthonormal polynomials because all the matrices associated with the multiplication operators we are dealing with are symmetric. Later on, we will derive an interesting relation between the five diagonal matrix H associated with the multiplication operator by \((x-c)^{2}\) in terms of the orthonormal basis \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\), and the tridiagonal Jacobi matrix J[2] associated with the three-term recurrence relation satisfied by the 2-iterated orthonormal polynomials \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\).

To do that, we will use the following remarkable fact

Proposition 2

The multiplication operator by \((x-c)^{2}\) is a symmetric operator with respect to the discrete Sobolev inner product (16). In other words, for any \(p(x), q(x)\in \mathbb {P}\), it satisfies

\[\langle (x-c)^{2}p(x),q(x)\rangle _{S}=\langle p(x),(x-c)^{2}q(x)\rangle _{S}.\tag{26}\]

Proof 6

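The proof is a straightforward consequence of (16): since \((x-c)^{2}p(x)\) and its first derivative vanish at x = c, the discrete terms of the inner product drop out and both sides reduce to \(\int _{E}(x-c)^{2}p(x)q(x)d\mu (x)\).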
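The symmetry of the multiplication operator by \((x-c)^{2}\), and the failure of symmetry for multiplication by x, can be checked on a small example. The sketch below is ours. It assumes the Laguerre weight \(e^{-x}dx\) on \([0,+\infty )\) (moments k!) and the parameters c = − 1, M = N = 1, and it stores polynomials as integer coefficient lists in ascending powers.

```python
from math import factorial


def poly_mul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_eval(p, x):
    return sum(a * x ** k for k, a in enumerate(p))


def poly_der(p):
    return [k * a for k, a in enumerate(p)][1:]


def sobolev_inner(p, q, c=-1, M=1, N=1):
    """Integral of p q e^{-x} over [0, inf) + M p(c) q(c) + N p'(c) q'(c)."""
    integral = sum(a * factorial(k) for k, a in enumerate(poly_mul(p, q)))
    point = M * poly_eval(p, c) * poly_eval(q, c)
    deriv = N * poly_eval(poly_der(p), c) * poly_eval(poly_der(q), c)
    return integral + point + deriv


if __name__ == "__main__":
    p = [1, 2, 0, 3]                      # 1 + 2x + 3x^3
    q = [-2, 0, 1]                        # x^2 - 2
    h = [1, 2, 1]                         # (x - c)^2 = (x + 1)^2 for c = -1
    x = [0, 1]                            # the polynomial x
    print(sobolev_inner(poly_mul(h, p), q) == sobolev_inner(p, poly_mul(h, q)))
    print(sobolev_inner(poly_mul(x, p), q) == sobolev_inner(p, poly_mul(x, q)))
```

The first comparison holds and the second one fails. For multiplication by x, the integral and the mass term cancel, and the difference of the two sides is \(N\left [p(c)q^{\prime }(c)-p^{\prime }(c)q(c)\right ]\), which is nonzero for this choice of p and q.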

Next, we will obtain the coefficients of the aforementioned five-term recurrence relation. Let us consider the Fourier expansion of \((x-c)^{2}s_{n}^{M,\;N}(x)\) in terms of \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\)

where

From (26),

Hence, \(\rho _{k,n}=0\) for k = 0,…, n − 3. Taking into account that

and using [24, Th. 1, p. 174] we get

Notice that

Next, using the connection formula (25), we have

Introducing the following notation

(27) reads as the following

Theorem 2

The sequence \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\) satisfies a five-term recurrence relation as follows

where, by convention,

6 A matrix approach

In this section, we will deduce an interesting relation between the five diagonal matrix H, which represents the multiplication operator by \((x-c)^{2}\) in the basis of the Sobolev-type orthonormal polynomials, and the Jacobi matrix J[2] associated with the 2-iterated orthonormal polynomials \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\).

First, we deal with the matrix representation of (30)

\[(x-c)^{2}\mathbf {\bar {s}}^{M,\;N}=\mathbf {H}\mathbf {\bar {s}}^{M,\;N},\tag{31}\]

where H is the five diagonal semi-infinite symmetric matrix

and \(\mathbf {\bar {s}}^{M,\;N}=[s_{0}^{M,\;N}(x),s_{1}^{M,\;N}(x),s_{2}^{M,\;N}(x), {\ldots } ]^{\intercal }\).
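The five diagonal structure of H can be observed without using the explicit recurrence coefficients. The sketch below is ours. It assumes the Laguerre weight \(e^{-x}dx\) and c = − 1, M = N = 1, builds the monic Sobolev-type polynomials by exact Gram-Schmidt orthogonalization of the monomials, and computes the Gram matrix \(\langle (x-c)^{2}S_{i},S_{j}\rangle _{S}\). Its entries differ from those of H only by the normalization factors \(||S_{i}||_{S}||S_{j}||_{S}\), so the zero pattern is the same.

```python
from fractions import Fraction
from math import factorial


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_eval(p, x):
    return sum(a * x ** k for k, a in enumerate(p))


def poly_der(p):
    return [k * a for k, a in enumerate(p)][1:]


def sobolev_inner(p, q, c, M, N):
    """Integral of p q e^{-x} over [0, inf) + M p(c) q(c) + N p'(c) q'(c)."""
    integral = sum(a * factorial(k) for k, a in enumerate(poly_mul(p, q)))
    point = M * poly_eval(p, c) * poly_eval(q, c)
    deriv = N * poly_eval(poly_der(p), c) * poly_eval(poly_der(q), c)
    return integral + point + deriv


def monic_sobolev_basis(n, c, M, N):
    """Monic Sobolev-type polynomials S_0, ..., S_n by exact Gram-Schmidt."""
    basis = []
    for deg in range(n + 1):
        mono = [Fraction(0)] * deg + [Fraction(1)]
        new = list(mono)
        for s in basis:
            coef = sobolev_inner(mono, s, c, M, N) / sobolev_inner(s, s, c, M, N)
            for i, a in enumerate(s):
                new[i] -= coef * a
        basis.append(new)
    return basis


def multiplication_gram(n, c=Fraction(-1), M=1, N=1):
    """Matrix of <(x - c)^2 S_i, S_j>_S for i, j = 0..n."""
    basis = monic_sobolev_basis(n, c, M, N)
    h = [c * c, -2 * c, Fraction(1)]      # coefficients of (x - c)^2
    return [[sobolev_inner(poly_mul(h, si), sj, c, M, N) for sj in basis]
            for si in basis]


if __name__ == "__main__":
    G = multiplication_gram(6)
    for row in G:
        print(["0" if entry == 0 else "*" for entry in row])
```

The printed pattern has nonzero entries only on the main diagonal and the two diagonals on each side of it. Exact rational arithmetic is used so that the zeros outside the band are exact.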

Proposition 3

From (25), we get

\[\boldsymbol {\bar {s}}^{M,\;N}=\mathbf {T}\boldsymbol {\bar {p}}^{[2]},\tag{33}\]

where T is the lower triangular, semi-infinite, and nonsingular matrix with positive diagonal entries

and \(\boldsymbol {\bar {p}}^{[2]}=[p_{0}^{[2]}(x),p_{1}^{[2]}(x),p_{2}^{[2]}(x), {\ldots } ]^{\intercal }\).

We will denote by J the Jacobi matrix associated with the orthonormal sequence {pn(x)}n≥ 0, with respect to the measure dμ. As a consequence, we have

Let J[2] be the Jacobi matrix associated with the 2 −iterated OPS \(\{p_{n}^{[2]}(x)\}_{n\geq 0}\). Notice that from

we get

Starting with J − cI, and assuming c is located to the left of supp(μ), all its leading principal submatrices are positive definite, so we get the following Cholesky factorization

\[\mathbf {J}-c\mathbf {I}=\mathbf {LL}^{\intercal }.\tag{36}\]

Here, L is a lower bidiagonal matrix with positive diagonal entries. From [3], we know

\[\mathbf {L}^{\intercal }\mathbf {L}=\mathbf {J}_{[1]}-c\mathbf {I}=\mathbf {L}_{1}\mathbf {L}_{1}^{\intercal },\tag{37}\]

where L1 is a lower bidiagonal matrix with positive diagonal entries. Notice that if c is located to the right of the support, then one must deal with the Cholesky factorization of the matrix cI −J.

Next, we show that the five diagonal matrix H associated with (30) can be given in terms of the five diagonal matrix \((\mathbf {J}_{[2]}-c\mathbf {I)}^{2}\). Combining (31) with (33), we get

Hence, we state the following

Proposition 4

The semi-infinite five diagonal matrix H can be obtained from the matrix \(\left (\mathbf {J}_{[2]}-c\mathbf {I}\right )^{2}\) as follows

Next, we repeat the above process commuting the order of the factors in \(\mathbf {L}_{1}\mathbf {L}_{1}^{\intercal }\). Thus,

\[\mathbf {L}_{1}^{\intercal }\mathbf {L}_{1}=\mathbf {J}_{[2]}-c\mathbf {I}.\tag{39}\]

From (37), we have \(\mathbf {L}_{1}=\mathbf {L}^{\intercal }\mathbf {LL}_{1}^{-\intercal }\), and replacing this expression as above, it yields

Notice that \(\mathbf {R}=\left (\mathbf {LL}_{1}\right )^{\intercal }\) is an upper triangular matrix, with positive diagonal entries because L and L1 are lower bidiagonal matrices. Now, for the matrix \(\mathbf {Q}=\mathbf {LL}_{1}^{-\intercal }\), we have

Next, from (37), \(\mathbf {L}_{1}\mathbf {L}_{1}^{\intercal }=\mathbf {L}^{\intercal }\mathbf {L}.\) Thus,

as well as

This means that Q is an orthogonal matrix. Thus, we have proved the following

Proposition 5

The positive definite matrix J[2] − cI can be factorised as follows

\[\mathbf {J}_{[2]}-c\mathbf {I}=\mathbf {RQ},\tag{40}\]

where R is an upper triangular matrix, and Q is an orthogonal matrix, i.e. \(\mathbf {QQ}^{\intercal }=\mathbf {Q}^{\intercal } \mathbf {Q}=\mathbf {I}\).

Notice that the above result has also been proved in [12], but the fact that \(\mathbf {QQ}^{\intercal }=\mathbf {I}\) also holds is not proved therein.
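The chain of factorizations above can be reproduced on a finite section. The sketch below is ours. It assumes the Laguerre Jacobi matrix with α = 0 (diagonal entries 2n + 1, off-diagonal entries n + 1) and c = − 1, and it uses plain Python lists with hand-written helpers instead of a linear algebra library. It performs two Cholesky steps with the factors commuted, and then forms \(\mathbf {R}=(\mathbf {LL}_{1})^{\intercal }\) and \(\mathbf {Q}=\mathbf {LL}_{1}^{-\intercal }\). On a finite section only the leading blocks of \(\mathbf {L}^{\intercal }\mathbf {L}\) and \(\mathbf {L}_{1}^{\intercal }\mathbf {L}_{1}\) agree with the semi-infinite matrices, but the algebraic identities between the factors hold exactly.

```python
from math import sqrt


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def cholesky(a):
    """Lower triangular L with a = L L^T (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = sqrt(s) if i == j else s / low[j][j]
    return low


def inverse_lower(low):
    """Inverse of a lower triangular matrix by forward substitution."""
    n = len(low)
    inv = [[0.0] * n for _ in range(n)]
    for col in range(n):
        for i in range(col, n):
            rhs = 1.0 if i == col else 0.0
            s = rhs - sum(low[i][k] * inv[k][col] for k in range(col, i))
            inv[i][col] = s / low[i][i]
    return inv


def shifted_laguerre_jacobi(n, c):
    """Leading n x n section of J - cI for orthonormal Laguerre polynomials, alpha = 0."""
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = 2 * i + 1 - c
        if i + 1 < n:
            a[i][i + 1] = a[i + 1][i] = i + 1.0
    return a


def qr_from_cholesky(a):
    low = cholesky(a)                                 # J - cI = L L^T
    a1 = matmul(transpose(low), low)                  # L^T L, section of J_[1] - cI
    low1 = cholesky(a1)                               # L^T L = L1 L1^T
    a2 = matmul(transpose(low1), low1)                # L1^T L1, section of J_[2] - cI
    r = transpose(matmul(low, low1))                  # R = (L L1)^T
    q = matmul(low, transpose(inverse_lower(low1)))   # Q = L L1^{-T}
    return q, r, a2


def max_abs_diff(a, b):
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


if __name__ == "__main__":
    A = shifted_laguerre_jacobi(6, -1.0)
    Q, R, A2 = qr_from_cholesky(A)
    print(max_abs_diff(matmul(Q, R), A))              # J - cI = Q R
    print(max_abs_diff(matmul(R, Q), A2))             # J_[2] - cI = R Q
    print(max_abs_diff(matmul(Q, transpose(Q)), matmul(transpose(Q), Q)))
```

All three printed numbers are at the level of rounding error, in agreement with the identities \(\mathbf {QR}=\mathbf {LL}^{\intercal }\), \(\mathbf {RQ}=\mathbf {L}_{1}^{\intercal }\mathbf {L}_{1}\) and \(\mathbf {QQ}^{\intercal }=\mathbf {Q}^{\intercal }\mathbf {Q}=\mathbf {I}\).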

Taking into account the previous result, we come back to (36) to observe

Thus, we can summarize the above as follows

Proposition 6

Let J be the symmetric Jacobi matrix such that

If \(\boldsymbol {\bar {p}=}[p_{0}(x),p_{1}(x),p_{2}(x),\ldots ]^{\intercal }\) is the infinite vector associated with the orthonormal polynomial sequence with respect to dμ and we assume pn(c)≠ 0 for n ≥ 1, then the following factorization

holds. Here, R is an upper triangular matrix, and Q is an orthogonal matrix, i.e. \(\mathbf {QQ}^{\intercal }=\mathbf {Q}^{\intercal } \mathbf {Q}=\mathbf {I}\). Under these conditions,

where J[2] is the symmetric Jacobi matrix such that \(x \boldsymbol {\bar {p}}^{[2]}=\mathbf {J}_{[2]} \boldsymbol {\bar {p}}^{[2]}\), where \(\boldsymbol {\bar {p}}^{[2]}\) is the infinite vector associated with the orthonormal polynomial sequence with respect to \((x-c)^{2}d\mu\).

Observe that this is an alternative proof of Theorem 3.3 in [4].

Since J[2] is a symmetric matrix, from (40) and \(\mathbf {QQ}^{\intercal }=\mathbf {I}\), we easily observe

Thus,

Proposition 7

The square of the positive definite symmetric matrix J[2] − cI has the following factorization

where R is an upper triangular matrix. Furthermore

Next, we are ready to prove that there is a very close relation between the five diagonal semi-infinite symmetric matrix H defined in (32), and the lower triangular, semi-infinite, nonsingular matrix T defined in (34).

We will use the following notation. Let \(\boldsymbol {\bar {f}}\) be any semi-infinite column vector with polynomial entries \(\boldsymbol {\bar {f}} =[f_{0}(x),f_{1}(x),f_{2}(x),\ldots ]^{\intercal }\). Then, \(\langle \boldsymbol {\bar {f}},\boldsymbol {\bar {g}}\rangle\) will represent the given inner product of \(\boldsymbol {\bar {f}}\) and \(\boldsymbol {\bar {g}}\) componentwise, that is we get the following semi-infinite square matrix

Next, let us recall (33), i.e. \(\boldsymbol {\bar {s}}^{M,\;N}= \boldsymbol {T \bar {p}}^{[2]}.\) Let us consider the inner product

where \(\langle \boldsymbol {\bar {p}}^{[2]},\boldsymbol {\bar {p}}^{[2]}\rangle _{\lbrack 2]}=\mathbf {I}\) because we deal with orthonormal polynomials. On the other hand, from (16) and (31), one has

where again \(\langle \boldsymbol {\bar {s}}^{M,\;N},\boldsymbol {\bar {s}}^{M,\;N}\rangle _{S}=\mathbf {I}\) since we deal with orthonormal polynomials. Thus, we have proved the following

Theorem 3

The five diagonal semi-infinite symmetric matrix H defined in (32), has the following Cholesky factorization

where T is the lower triangular, semi-infinite matrix defined in (34).

Finally, from (33) and (31), we have

According to (35), we get

Next, from (41), we obtain

Therefore,

and, as a consequence,

Theorem 4

For any positive Borel measure dμ supported on an infinite subset \(E\subseteq \mathbb {R}\), if J is the corresponding semi-infinite symmetric Jacobi matrix, and assuming that c does not belong to the interior of the convex hull of E, then for the 2-iterated perturbed measure \((x-c)^{2}d\mu\) such that J[2] is the corresponding semi-infinite symmetric Jacobi matrix, we get

This is the symmetric version of Theorem 5.3 in [6], where the authors use another kind of factorization based on monic orthogonal polynomials.

7 An example with Laguerre polynomials

In [14] and Section 5, the coefficients of (30) for the monic Laguerre Sobolev-type orthogonal polynomials have been deduced. In the sequel, we illustrate the matrix approach presented in the previous section for the Laguerre case with a particular example. First, let us denote by \(\{\ell _{n}^{\alpha }(x)\}_{n\geq 0}\), \(\{\ell _{n}^{\alpha ,\;[2]}(x)\}_{n\geq 0}\), \(\{s_{n}^{M,\;N}(x)\}_{n\geq 0}\) the sequences of orthonormal polynomials with respect to the inner products (1), (10) and (16), respectively, when \(d\mu (x)=x^{\alpha }e^{-x}dx\), α > − 1, is the classical Laguerre measure supported on \((0,+\infty )\).

In order to obtain compact expressions of the matrices, in this section we particularize all those presented in the previous section to the choice of parameters α = 0, c = − 1, M = 1, and N = 1. Under these conditions, the explicit expressions of the sequences of orthogonal polynomials appearing in our study can be deduced with any symbolic algebra package such as, for example, Wolfram Mathematica.

From Section 5, we know

On the other hand, from (33), we obtain

Notice that if we multiply T by its transpose, then one recovers H according to the statement of Theorem 3.

The tridiagonal symmetric Jacobi matrix associated with the standard orthonormal family \(\{\ell _{n}^{\alpha ,\;[2]}(x)\}_{n\geq 0}\) reads

and from this expression it is straightforward to check Proposition 4. Next, from the symmetric Jacobi matrix

associated with \(\{\ell _{n}^{\alpha }(x)\}_{n\geq 0}\), we can implement the Cholesky factorization \(\mathbf {J}-c\mathbf {I}=\mathbf {LL}^{\intercal }\) in such a way that the lower bidiagonal matrix is

Following (37), we commute the order of L and its transpose to obtain \(\mathbf {L}^{\intercal }\mathbf {L}=\mathbf {J}_{[1]}-c \mathbf {I}\), where

The computation of a new Cholesky factorization of J[1] − cI yields \(\mathbf {L}_{1}\mathbf {L}_{1}^{\intercal }\), where

Commuting the order of the matrices in the decomposition, we finally deduce the expression (39), i.e. \(\mathbf {L}_{1}^{\intercal } \mathbf {L}_{1}=\mathbf {J}_{[2]}-c\mathbf {I}\). With these last matrices in mind, we find R and Q in Proposition 5. Thus,

and

Observe that Q is a matrix whose rows are orthogonal vectors, and multiplying (44) above by its transpose (in this order) we get

Notice that we implement our algorithm with finite matrices. Notwithstanding the foregoing, multiplying the transpose of (44) by (44), we have \(\mathbf {Q}^{\intercal }\mathbf {Q}=\mathbf {I}\).

Employing the matrices above, it is easy to test numerically the expressions \(\mathbf {H}=\mathbf {T}\left (\mathbf {J}_{[2]}-c\mathbf {I}\right )^{2}\mathbf {T }^{-1}\), J − cI = QR, and J[2] − cI = RQ according to the statements of Propositions 4, 5 and 6 respectively. It is also possible to check that using the numerical expression (42), and alternatively the expression (45), we recover

according to Proposition 4.

Finally, Proposition 7 can be numerically tested from (43), (45) and (46).

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Alfaro, M., Marcellán, F., Rezola, M.L., Ronveaux, A.: On orthogonal polynomials of Sobolev type: algebraic properties and zeros. SIAM J. Math. Anal. 23(3), 737–757 (1992)

Bueno, M.I., Marcellán, F.: Darboux transformation and perturbation of linear functionals. Linear Algebra Appl. 384, 215–242 (2004)

Bueno, M.I., Marcellán, F.: Polynomial perturbations of bilinear functionals and Hessenberg matrices. Linear Algebra Appl. 414, 64–83 (2006)

Buhmann, M.D., Iserles, A.: On orthogonal polynomials transformed by the QR algorithm. J. Comput. Appl. Math. 43(1–2), 117–134 (1992)

Chihara, T.S.: An introduction to orthogonal polynomials. Mathematics and Its Applications Series. Gordon and Breach, New York (1978)

Derevyagin, M., Marcellán, F.: A note on the Geronimus transformation and Sobolev orthogonal polynomials. Numer. Algorithms 67(2), 271–287 (2014)

Derevyagin, M., García-Ardila, J.C., Marcellán, F.: Multiple Geronimus transformations. Linear Algebra Appl. 454, 158–183 (2014)

Durán, A.J.: A generalization of Favard’s theorem for polynomials satisfying a recurrence relation. J. Approx. Theory 74(1), 83–109 (1993)

Durán, A.J., Van Assche, W.: Orthogonal matrix polynomials and higher-order recurrence relations. Linear Algebra Appl. 219, 261–280 (1995)

Evans, W.D., Littlejohn, L.L., Marcellán, F., Markett, C., Ronveaux, A.: On recurrence relations for Sobolev orthogonal polynomials. SIAM J. Math. Anal. 26(2), 446–467 (1995)

García-Ardila, J.C., Marcellán, F., Villamil-Hernández, P.H.: Associated orthogonal polynomials of the first kind and Darboux transformations. J. Math. Anal. Appl. 508, 125883, 26 (2022)

Gautschi, W.: The interplay between classical analysis and (numerical) linear algebra-A tribute to Gene Golub. ETNA. 13, 119–147 (2002)

Gautschi, W.: Orthogonal polynomials: computation and approximation. Numer. Math. Sci. Comput. Oxford University Press Oxford (2004)

Huertas, E.J., Marcellán, F., Pérez-Valero, M.F., Quintana, Y.: Asymptotics for Laguerre-Sobolev type orthogonal polynomials modified within their oscillatory regime. Appl. Math. Comput. 236, 260–272 (2014)

Huertas, E.J.: Analytic properties of Krall-type and Sobolev-type orthogonal polynomials. Doctoral Dissertation, Universidad Carlos III de Madrid (2012)

Kautský, J., Golub, G.H.: On the calculation of Jacobi matrices. Linear Algebra Appl. 52/53, 439–455 (1983)

Koekoek, R.: Generalizations of Laguerre polynomials. J. Math. Anal. Appl. 153(2), 576–590 (1990)

Koekoek, J., Koekoek, R., Bavinck, H.: On differential equations for Sobolev-type Laguerre polynomials. Trans. Amer. Math. Soc. 350(1), 347–393 (1998)

Koekoek, R., Meijer, H.G.: A generalization of Laguerre polynomials. SIAM J. Math. Anal. 24(3), 768–782 (1993)

López, G., Marcellán, F., Van Assche, W.: Relative asymptotics for polynomials orthogonal with respect to a discrete Sobolev inner product. Constr. Approx. 11(1), 107–137 (1995)

Marcellán, F., Moreno Balcázar, J.J.: Asymptotics and zeros of Sobolev orthogonal polynomials on unbounded supports. Acta Appl. Math. 94(2), 163–192 (2006)

Marcellán, F., Van Assche, W.: Relative asymptotics for orthogonal polynomials with a Sobolev inner product. J. Approx. Theory 72(2), 193–209 (1993)

Marcellán, F., Xu, Y.: On Sobolev orthogonal polynomials. Expo. Math. 33(3), 308–352 (2015)

Marcellán, F., Zejnullahu, R.X.H., Fejzullahu, B.X.H., Huertas, E.J.: On orthogonal polynomials with respect to certain discrete Sobolev inner product. Pacific J. Math. 257(1), 167–188 (2012)

Markett, C.: On the differential equation for the Laguerre-Sobolev polynomials. J. Approx. Theory 247, 48–67 (2019)

Markett, C.: Symmetric differential operators for Sobolev orthogonal polynomials of Laguerre- and Jacobi-type. Integral Transforms Spec. Funct. 32(5–8), 568–587 (2021)

Szegő, G.: Orthogonal Polynomials. Amer. Math. Soc. Colloq. Publ., vol. 23, 4th edn. Amer. Math. Soc., Providence, RI (1975)

Yoon, G.J.: Darboux transforms and orthogonal polynomials. Bull. Korean Math. Soc. 39, 359–376 (2002)

Zhedanov, A.: Rational spectral transformations and orthogonal polynomials. J. Comput. Appl. Math. 85, 67–83 (1997)

Acknowledgements

We thank the anonymous referees for their careful reading of the manuscript and for their constructive comments, which helped us to improve its presentation.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. The work of CH, EJH and AL is supported by Dirección General de Investigación e Innovación, Consejería de Educación e Investigación of the Comunidad de Madrid (Spain), and Universidad de Alcalá under grants CM/JIN/2019-010 and CM/JIN/2021-014, Proyectos de I+D para Jóvenes Investigadores de la Universidad de Alcalá 2019 and 2021, respectively. The work of FM has been supported by FEDER/Ministerio de Ciencia e Innovación-Agencia Estatal de Investigación of Spain, grant PGC2018-096504-B-C33, and the Madrid Government (Comunidad de Madrid-Spain) under the Multiannual Agreement with UC3M in the line of Excellence of University Professors, grant EPUC3M23 in the context of the V PRICIT (Regional Programme of Research and Technological Innovation).

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hermoso, C., Huertas, E.J., Lastra, A. et al. Higher-order recurrence relations, Sobolev-type inner products and matrix factorizations. Numer Algor 92, 665–692 (2023). https://doi.org/10.1007/s11075-022-01402-y

DOI: https://doi.org/10.1007/s11075-022-01402-y

Keywords

- Orthogonal polynomials

- Sobolev-type orthogonal polynomials

- Jacobi matrices

- Five diagonal matrices

- Recurrence relations

- Laguerre polynomials