Abstract

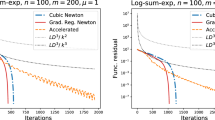

In this paper, we present a version of the cubic regularization of Newton’s method for unconstrained nonconvex optimization, in which the Hessian matrices are approximated by forward finite difference Hessians. The regularization parameter of the cubic models and the accuracy of the Hessian approximations are jointly adjusted using a nonmonotone line search criterion. Assuming that the Hessian of the objective function is globally Lipschitz continuous, we show that the proposed method needs at most \(\mathcal{O}\left(n\epsilon^{-3/2}\right)\) function and gradient evaluations to generate an \(\epsilon\)-approximate stationary point, where \(n\) is the dimension of the domain of the objective function. Preliminary numerical results corroborate our theoretical findings.
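As a rough illustration of the forward finite difference Hessians mentioned above, the following sketch builds an approximation column by column from gradient differences. It is not the authors' implementation: the function name `fd_hessian`, the fixed step `h`, and the final symmetrization are assumptions made for this example, and the paper adjusts the accuracy of the approximation adaptively rather than fixing it.

```python
import numpy as np


def fd_hessian(grad, x, h=1e-6):
    """Forward finite-difference Hessian approximation (illustrative sketch).

    grad : callable returning the gradient of the objective at a point
    x    : point at which the Hessian is approximated
    h    : difference step (hypothetical fixed value, assumed for this sketch)
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    g0 = np.asarray(grad(x), dtype=float)  # one gradient evaluation at x
    A = np.empty((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        # Column j: (grad(x + h e_j) - grad(x)) / h, one extra gradient each.
        A[:, j] = (np.asarray(grad(x + e), dtype=float) - g0) / h
    # Symmetrize, since the raw difference matrix is not symmetric in general.
    return 0.5 * (A + A.T)


# Usage on a quadratic f(x) = 0.5 x^T Q x, whose gradient is Q x.
Q = np.array([[2.0, 1.0], [1.0, 3.0]])
H = fd_hessian(lambda x: Q @ x, np.array([1.0, -2.0]))
```

Each approximation costs \(n\) gradient evaluations beyond the one at the current point, which is consistent with the factor \(n\) in the evaluation bound stated in the abstract.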

Notes

Throughout this paper, by call of the oracle we mean one function evaluation or one gradient evaluation.

The choice of 10 iterations was made on the basis of a few preliminary numerical tests. Running the method in [8] for this number of iterations often provided a very good initial point for the BFGS method.

This dataset is freely available in the UCI Machine Learning Repository (https://archive.ics.uci.edu/ml).
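The oracle-call convention in the first note above (one function evaluation or one gradient evaluation counts as one call) can be made concrete with a small counting wrapper. This is only a sketch: the class `CountingOracle` and the helper `demo_calls` are hypothetical names introduced for this example, and the test function is an arbitrary quadratic.

```python
class CountingOracle:
    """Wraps an objective and its gradient and counts every evaluation."""

    def __init__(self, f, grad):
        self._f = f
        self._grad = grad
        self.calls = 0  # total function + gradient evaluations

    def f(self, x):
        self.calls += 1
        return self._f(x)

    def grad(self, x):
        self.calls += 1
        return self._grad(x)


def demo_calls(n=3, h=1e-6):
    """Count oracle calls for one forward-difference Hessian in dimension n."""
    oracle = CountingOracle(
        f=lambda x: sum(v * v for v in x),
        grad=lambda x: [2.0 * v for v in x],
    )
    x = [1.0] * n
    oracle.grad(x)  # gradient at the current point
    for j in range(n):  # one shifted gradient per coordinate direction
        shifted = list(x)
        shifted[j] += h
        oracle.grad(shifted)
    return oracle.calls
```

Under this convention, the gradients needed for one forward finite difference Hessian in dimension \(n\) account for \(n + 1\) oracle calls.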

References

Bellavia, S., Gurioli, G., Morini, B.: Adaptive cubic regularization methods with dynamic inexact Hessian information and applications to finite-sum minimization. IMA J. Numer. Anal. 41, 764–799 (2021)

Bergou, E., Diouane, Y., Gratton, S.: A line-search algorithm inspired by the adaptive cubic regularization framework with a worst-case complexity \(\mathcal{O}(\epsilon^{-3/2})\). Optimization Online (2017)

Bianconcini, T., Sciandrone, M.: A cubic regularization algorithm for unconstrained optimization using line search and nonmonotone techniques. Optim. Methods Softw. 31, 1008–1035 (2016)

Birgin, E.G., Martínez, J.M.: The use of quadratic regularization with cubic descent condition for unconstrained optimization. SIAM J. Optim. 27, 1049–1074 (2017)

Birgin, E.G., Gardenghi, J.L., Martínez, J.M., Santos, S.A., Toint, P.L.: Worst-case evaluation complexity for unconstrained nonlinear optimization using high-order regularized models. Math. Program. 163, 359–368 (2017)

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Lower bounds for finding stationary points I. Math. Program. 184, 71–120 (2020)

Cartis, C., Gould, N.I.M., Toint, P.L.: On the complexity of steepest descent, Newton’s and regularized Newton’s methods for nonconvex unconstrained optimization problems. SIAM J. Optim. 20, 2833–2852 (2010)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularization methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. 127, 245–295 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularization methods for unconstrained optimization. Part II: worst-case function- and derivative-evaluation complexity. Math. Program. 130, 295–319 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: On the oracle complexity of first-order and derivative-free algorithms for smooth nonconvex minimization. SIAM J. Optim. 22, 66–86 (2012)

Curtis, F.E., Robinson, D.P., Samadi, M.: A trust-region algorithm with a worst-case complexity of \(\mathcal{O}\left(\epsilon^{-3/2}\right)\) for nonconvex optimization. Math. Program. 162, 1–32 (2017)

Demmel, J.: Applied numerical linear algebra. SIAM, Philadelphia (1997)

Dussault, J.-P.: ARCq: a new adaptive regularization by cubics variant. Optim. Methods Softw. https://doi.org/10.1080/10556788.2017.1322080 (2017)

Grapiglia, G.N., Nesterov, Yu.: Regularized Newton methods for minimizing functions with Hölder continuous Hessians. SIAM J. Optim. 27, 478–506 (2017)

Grapiglia, G.N., Sachs, E.W.: On the worst-case evaluation complexity of non-monotone line search algorithms. Comput. Optim. Appl. 68, 555–577 (2017)

Griewank, A.: The Modification of Newton’s Method for Unconstrained Optimization by Bounding Cubic Terms. Technical Report NA/12, Department of Applied Mathematics and Theoretical Physics, University of Cambridge, Cambridge (1981)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Kohler, J.M., Lucchi, A.: Subsampled cubic regularization for non-convex optimization. 34th Int Conf Machine Learn ICML 2017(4), 2988–3011 (2017)

Martínez, J.M., Raydan, M.: Cubic-regularization counterpart of a variable-norm trust-region method for unconstrained minimization. J. Glob. Optim. 68, 367–385 (2017)

Melicher, V., Haber, T., Vanroose, W.: Fast derivatives of likelihood functionals for ODE based models using adjoint-state method. Comput. Stat. 32, 1621–1643 (2017)

Moré, J.J., Garbow, B.S., Hillstrom, K.E.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7, 17–41 (1981)

Nesterov, Y., Polyak, B.: Cubic regularization of Newton method and its global performance. Math. Program. 108, 177–205 (2006)

Niri, T.D., Heydari, M., Hosseini, M.M.: An improvement of adaptive cubic regularization method for unconstrained optimization problems. Int. J. Comput. Math. 98, 271–287 (2021)

Stapor, P., Fröhlich, F., Hasenauer, J.: Optimization and profile calculation of ODE models using second order adjoint sensitivity analysis. Bioinformatics 34, i151–i159 (2018)

Street, W.N., Wolberg, W.H., Mangasarian, O.L.: Nuclear Feature Extraction for Breast Tumor Diagnosis. IS&T/SPIE 1993 International Symposium on Electronic Imaging: Science and Technology, vol. 1905, pp. 861–870. San Jose, CA (1993)

Wang, Z., Zhou, Y., Liang, Y., Lan, G.: Stochastic Variance-Reduced Cubic Regularization for Nonconvex Optimization. arXiv:1802.07372 (2018)

Wang, Z., Zhou, Y., Liang, Y., Lan, G.: A note on inexact gradient and Hessian conditions for cubic regularized Newton’s method. Oper. Res. Lett. 47, 146–149 (2019)

Weyl, H.: Das asymptotische Verteilungsgesetz der Eigenwerte linearer partieller Differentialgleichungen. Math. Ann. 71, 441–479 (1912)

Zhang, H.C., Hager, W.W.: A nonmonotone line search technique for unconstrained optimization. SIAM J. Optim. 14, 1043–1056 (2004)

Zheng, Y., Zheng, B.: A modified adaptive cubic regularization method for large-scale unconstrained optimization problem. arXiv:1904.07440 [math.OC] (2019)

Acknowledgments

We are very grateful to the two anonymous referees, whose comments helped to improve the paper.

Funding

G.N. Grapiglia was partially supported by CNPq - Brazil grant 312777/2020-5. M.L.N. Gonçalves was partially supported by CNPq - Brazil grant 408123/2018-4.

Cite this article

Grapiglia, G.N., Gonçalves, M.L.N. & Silva, G.N. A cubic regularization of Newton’s method with finite difference Hessian approximations. Numer Algor 90, 607–630 (2022). https://doi.org/10.1007/s11075-021-01200-y

DOI: https://doi.org/10.1007/s11075-021-01200-y