Abstract

Estimating a nonlinear model from experimental measurements of a vibrating structure remains a challenge, despite huge progress in recent years. A major issue is that the dynamical behaviour of a nonlinear structure strongly depends on the magnitude of the displacement response. Thus, the validity of an identified model is generally limited to a certain range of motion. Moreover, outside this range, the stability of the solutions predicted by the model is not guaranteed. This raises the question of how a nonlinear model derived from relatively low-amplitude excitation data can be used to predict the dynamical behaviour under higher-amplitude excitation. This paper focuses on this problem, investigating the extrapolation capabilities of data-driven nonlinear state-space models based on a subspace approach. The experimental vibrating structure consists of a cantilever beam in which magnets are used to generate strong geometric nonlinearity. The beam is driven by an electrodynamic shaker using several levels of broadband random noise. Acceleration data from the beam tip are used to derive nonlinear state-space models of the structure. It is shown that model prediction errors generally tend to increase when extrapolating towards higher excitation levels. Furthermore, the validity of the estimated nonlinear models becomes poor for very strong nonlinear behaviour. Linearised models are also estimated to provide a complete view of the performance of each candidate model at each level of excitation.

1 Introduction

System identification is an essential tool for understanding and modelling the behaviour of engineering structures, facilitating the mathematical description of real-life structures based on measured data. Historically, this process has been used to estimate linear models, taking advantage of linear theory based on the superposition principle [1]. However, many engineering structures are only linear to a first approximation, and some do not behave linearly at all. The development of system identification methods for such structures has gained more attention in recent years, driven by the need to capture inherent nonlinear effects [2, 3]. Such methods can be used either to estimate a linearised model for a specific working condition or to estimate a model that captures the full nonlinear characteristics of the structure. The first case generally aims to determine an equivalent linear model [4, 5], sometimes referred to as the best linear approximation (BLA) [3, 6]. This kind of model is generally reliable only in the case of weak nonlinearity and under the conditions of the training dataset, because the dynamical behaviour of a nonlinear structure depends significantly on the amplitude of vibration. A nonlinear model, however, has a wider region of validity and can provide greater understanding of the system behaviour, including potential bifurcations in the response.

Nonlinear system identification is still a challenging task, even though several methods have been developed by the research community [7]. The identification process generally involves three steps: nonlinearity detection, characterisation, and parameter estimation. The first two steps can be addressed using ad hoc methods or prior knowledge of the system [2], while the last step involves the estimation of the model parameters from experimental data. Except in the theoretical case of a perfect match between the actual structure and the identified model, the outcome of this step depends on the range of motion (vibration level) covered by the data. The reason is twofold: (i) even if the model structure is estimated successfully, the true nonlinear phenomena might change outside the range of the training dataset; (ii) the estimated nonlinear model itself can behave differently when extrapolated, for instance in the case of polynomial representations. This is why extrapolation from nonlinear models is generally not recommended [8]. Ideally, nonlinear system identification should be performed using training data that cover the operational range of motion of the structure under test. This is not always possible in real-life scenarios, so knowing the limitations of an identified model is crucially important. This topic was first investigated in [9] for nonlinear models estimated using a polynomial nonlinear state-space representation [10].

This paper further investigates this subject by considering the extrapolation of data-driven state-space models estimated using a subspace approach [11, 12]. State-space formulations are widely used in engineering applications, and the nonlinear problem addressed in this paper derives directly from the standard linear one. This makes the use of a subspace approach to estimate the system matrices quite convenient, since the essential definitions of classical subspace methods remain valid. A cantilever beam with geometric nonlinearity caused by the addition of magnets is excited using an electrodynamic shaker with several levels of random excitation, producing a response that ranges from quasi-linear to strongly nonlinear. Linearised and nonlinear models are estimated from the measured data, and extrapolation (and interpolation) issues are discussed in both cases. In particular, linearised models give acceptable results only for weakly nonlinear behaviour and around the selected working point, while they fail badly in the case of stronger nonlinearity or when used to interpolate or extrapolate. Nonlinear models generally outperform linear ones, but extrapolation remains an important limitation. To the best of the authors' knowledge, an experimental investigation of this topic comprising several excitation levels and a strong nonlinearity is not yet available in the scientific literature. The investigation described in this paper offers a useful perspective on both experimental and methodological aspects. The intention is to provide comprehensive insight into the importance of accurately selecting an appropriate range of motion when designing the experimental setup. The results provide useful insight into the reliability and the limits of the estimated models, both for interpolation and for extrapolation.

The paper is organised as follows. Section 2 presents the methods adopted in this paper and describes the experimental test rig. Section 3 presents the results obtained considering first linearised models and then nonlinear models. Finally, the conclusions of the present work are summarised in Sect. 4. Appendix A provides a numerical demonstration of the proposed methodology.

2 Methods

2.1 Nonlinear state-space modelling using subspace identification

The nonlinear state-space approach is used in this paper to model the dynamic behaviour of a nonlinear structure. Different formulations have been proposed in the literature, such as [10,11,12,13]. In particular, the formulation in [11] is the cornerstone of the Nonlinear Subspace Identification (NSI) technique and is adopted in this paper. NSI has previously been used to estimate nonlinear models from experimental measurements with discrete [14,15,16] and distributed [17] nonlinearities, as well as double-well systems [18, 19]. NSI treats the nonlinear restoring force of the system as a feedback force acting on the underlying-linear system (ULS). For a discrete mechanical system with \(N\) degrees of freedom, the equation of motion reads

$$\mathbf{M}\ddot{\mathbf{y}}\left(t\right)+{\mathbf{C}}_{\mathrm{v}}\dot{\mathbf{y}}\left(t\right)+\mathbf{K}\mathbf{y}\left(t\right)+{\mathbf{f}}^{\mathrm{nl}}\left(\mathbf{y}, \dot{\mathbf{y}}\right)=\mathbf{f}\left(t\right)$$(1)

where \(\mathbf{M}\), \({\mathbf{C}}_{\mathrm{v}}\) and \(\mathbf{K}\) \(\in {\mathbb{R}}^{N\times N}\) are the mass, viscous damping, and stiffness matrices respectively, while \(\mathbf{y}\left(t\right)\) and \(\mathbf{f}(t)\) \(\in {\mathbb{R}}^{N}\) are the generalised displacement and external force vectors respectively. The nonlinear part of the equation is described by the term \({\mathbf{f}}^{\mathrm{nl}}\left(\mathbf{y}, \dot{\mathbf{y}}\right)\in {\mathbb{R}}^{N}\), which generally depends on displacements and/or velocities. It is assumed that \({\mathbf{f}}^{\mathrm{nl}}\) can be decomposed into \(J\) distinct nonlinear contributions using a linear-in-the-parameters model, yielding

$${\mathbf{f}}^{\mathrm{nl}}\left(\mathbf{y}, \dot{\mathbf{y}}\right)=\sum_{j=1}^{J}{\upmu }_{j}{\mathbf{L}}_{j}{\mathrm{g}}_{j}\left(\mathbf{y}, \dot{\mathbf{y}}\right)$$(2)

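Each term of the summation is defined by a coefficient \({\upmu }_{j}\), a nonlinear basis function \({\mathrm{g}}_{j}\left(\mathbf{y}, \dot{\mathbf{y}}\right)\) and a location vector \({\mathbf{L}}_{j}\in {\mathbb{R}}^{N}\). The elements of \({\mathbf{L}}_{j}\) define the position of the \({j}^{th}\) nonlinearity and can take the values \(-1\), \(1\) or \(0\). Introducing the extended-input vector \({\mathbf{f}}^{\mathrm{e}}\) as

$${\mathbf{f}}^{\mathrm{e}}\left(t\right)={\left[{\mathbf{f}}^{\mathrm{T}}\left(t\right), -{\mathrm{g}}_{1}\left(\mathbf{y}, \dot{\mathbf{y}}\right), \dots , -{\mathrm{g}}_{J}\left(\mathbf{y}, \dot{\mathbf{y}}\right)\right]}^{\mathrm{T}}$$(3)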

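and the state vector \(\mathbf{x}={\left[{\mathbf{y}}^{\mathrm{T}},{\dot{\mathbf{y}}}^{\mathrm{T}}\right]}^{\mathrm{T}}\), a discrete-time state-space formulation can be written as

$$\left\{\begin{array}{l}\mathbf{x}\left(\uptau +1\right)=\mathbf{A}\mathbf{x}\left(\uptau \right)+{\mathbf{B}}^{\mathrm{e}}{\mathbf{f}}^{\mathrm{e}}\left(\uptau \right)\\ \mathbf{y}\left(\uptau \right)=\mathbf{C}\mathbf{x}\left(\uptau \right)+{\mathbf{D}}^{\mathrm{e}}{\mathbf{f}}^{\mathrm{e}}\left(\uptau \right)\end{array}\right.$$(4)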

where \(\uptau \) is the discrete time instant and matrices \(\mathbf{A},{\mathbf{B}}^{\mathrm{e}}, \mathbf{C}, {\mathbf{D}}^{\mathrm{e}}\) are the state, extended input, output and extended direct feedthrough matrices, respectively. These matrices can be estimated using a subspace approach, assuming knowledge of the nonlinear basis functions \({\mathrm{g}}_{j}\) and the location vectors \({\mathbf{L}}_{j}\) of the nonlinear terms. The reader is referred to [11, 20] for extensive information on this process. Once the state-space matrices are estimated, the model described by Eq. (4) can be used to estimate both the underlying-linear and nonlinear features of the system. The first step involves:

- Estimating the modal parameters of the ULS by eigenvalue decomposition of the matrix \(\mathbf{A}\).
- Estimating the FRFs of the ULS by first defining the extended FRF \({\mathbf{G}}^{\mathrm{e}}\left(\omega \right)\)

$${\mathbf{G}}^{\mathrm{e}}\left(\omega \right)={\mathbf{D}}^{\mathrm{e}}+\mathbf{C}{\left(z{\mathbf{I}}_{2N}-\mathbf{A}\right)}^{-1}{\mathbf{B}}^{\mathrm{e}},\quad z={e}^{i\omega \Delta t},$$(5)

where \({\mathbf{I}}_{2N}\) is the identity matrix of size \(2N\), \(\Delta t\) is the sampling interval and \(i\) is the imaginary unit. The matrix \({\mathbf{G}}^{\mathrm{e}}\left(\omega \right)\) has the same block structure as the extended-input vector \({\mathbf{f}}^{\mathrm{e}}\), so that its first block \(\mathbf{G}\left(\omega \right)\) is the FRF matrix of the underlying-linear system:

$${\mathbf{G}}^{\mathrm{e}}\left(\omega \right)=\left[\mathbf{G}\left(\omega \right), \mathbf{G}\left(\omega \right){\upmu }_{1}{\mathbf{L}}_{1}, \dots , \mathbf{G}\left(\omega \right){{\upmu }_{J}\mathbf{L}}_{J}\right]$$(6)

Note that this step provides full knowledge of the underlying-linear dynamics of the system without the need for a linear measurement.
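The two operations listed above can be sketched in Python with NumPy. This is a minimal illustration, not the NSI implementation of [11, 20]: it assumes the identified matrices are available as NumPy arrays and that the poles of \(\mathbf{A}\) come in complex-conjugate pairs (underdamped modes), and it maps each discrete-time eigenvalue \(\lambda\) to a continuous-time pole \(s=\ln(\lambda)/\Delta t\). The function names are hypothetical.

```python
import numpy as np


def modal_parameters(A, dt):
    """Natural frequencies (Hz) and damping ratios of the ULS from the state matrix A.

    Discrete-time eigenvalues lam are mapped to continuous-time poles
    s = ln(lam) / dt; only poles with positive imaginary part are kept,
    i.e. one pole per complex-conjugate pair (underdamped modes assumed).
    """
    lam = np.linalg.eigvals(A).astype(complex)
    s = np.log(lam) / dt
    s = s[s.imag > 0]
    wn = np.abs(s)                      # natural circular frequency (rad/s)
    zeta = -s.real / wn                 # viscous damping ratio
    order = np.argsort(wn)
    return wn[order] / (2.0 * np.pi), zeta[order]


def extended_frf(A, Be, C, De, freqs_hz, dt):
    """Evaluate Eq. (5): G^e(w) = D^e + C (z I - A)^(-1) B^e, with z = exp(i w dt).

    Returns an array of shape (n_freq, n_outputs, n_extended_inputs); the first
    N columns of each slice form the ULS FRF matrix G(w), as in Eq. (6).
    """
    n = A.shape[0]
    out = []
    for f in freqs_hz:
        z = np.exp(1j * 2.0 * np.pi * f * dt)
        out.append(De + C @ np.linalg.solve(z * np.eye(n) - A, Be))
    return np.array(out)
```

The ULS FRF of Eq. (6) is then simply the slice `Ge[:, :, :N]` of the returned array.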

The next step involves the determination of the coefficients \({\upmu }_{j}\) of the nonlinear terms. Their estimates are frequency-dependent and complex-valued, and are obtained from the remaining blocks of \({\mathbf{G}}^{\mathrm{e}}\). However, the true coefficients are real numbers with no dependence on frequency, and the estimates match this only in the complete absence of noise and nonlinear modelling errors. With real measurements, the estimates generally have non-zero imaginary parts, which are typically much smaller than the corresponding real parts. The reader is referred to [17, 19, 20] for detailed information on this step.
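A simple way to extract the coefficients from the blocks of Eq. (6) is a per-frequency least-squares fit of \(\mathbf{G}(\omega){\upmu }_{j}{\mathbf{L}}_{j}\) to the corresponding column of \({\mathbf{G}}^{\mathrm{e}}(\omega)\), followed by averaging the real part over frequency. The sketch below assumes this scheme, assumes all \(N\) displacements are outputs, and uses hypothetical names; the averaging band and any weighting adopted in [17, 19, 20] may differ. The mean ratio between imaginary and real parts serves as a quick quality indicator.

```python
import numpy as np


def nonlinear_coefficients(Ge, L, N):
    """Estimate mu_j from the blocks of G^e (Eq. (6)).

    Ge : (n_freq, N, N + J) extended FRF samples
    L  : (N, J) location vectors stacked column-wise
    Returns the real, frequency-averaged coefficients, the complex
    frequency-dependent estimates and the mean |Im| / |Re| ratio per term.
    """
    n_freq, J = Ge.shape[0], L.shape[1]
    mu_w = np.empty((n_freq, J), dtype=complex)
    for k in range(n_freq):
        G = Ge[k, :, :N]                     # ULS FRF matrix at this frequency
        for j in range(J):
            v = G @ L[:, j]                  # block j is modelled as v * mu_j
            b = Ge[k, :, N + j]              # identified block j
            mu_w[k, j] = np.vdot(v, b) / np.vdot(v, v)   # scalar least squares
    mu = mu_w.real.mean(axis=0)
    ratio = np.abs(mu_w.imag).mean(axis=0) / np.abs(mu)
    return mu, mu_w, ratio
```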

Finally, the estimated state-space model of Eq. (4) can be used to predict the response of the system to a given input vector \(\mathbf{f}(t)\) or initial state vector. This is done by propagating Eq. (4) in time, recursively at each time step, since the extended input depends on the current response through the nonlinear basis functions. If the input vector \(\mathbf{f}(t)\) belongs to a validation set, the simulated outputs \({\mathbf{y}}^{\mathrm{sim}}\) should replicate the measurements \({\mathbf{y}}^{\mathrm{val}}\). The deviation between simulated and measured outputs can generally be attributed to noise and/or nonlinear modelling errors. The output simulation percentage error \({\epsilon }_{n}\) can be defined for each output \(n\) as

$${\epsilon }_{n}=100\,\frac{\mathrm{RMS}\left({\mathrm{y}}_{n}^{\mathrm{val}}-{\mathrm{y}}_{n}^{\mathrm{sim}}\right)}{\mathrm{RMS}\left({\mathrm{y}}_{n}^{\mathrm{val}}\right)}$$(7)

which represents the capability of the estimated model in predicting the system dynamics.
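The recursive propagation of Eq. (4) and the percentage error can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the coefficients \({\upmu }_{j}\) are assumed to be embedded in \({\mathbf{B}}^{\mathrm{e}}\), the extended input is assumed to be assembled as \([{\mathbf{f}}^{\mathrm{T}}, -{\mathrm{g}}_{1}, \dots, -{\mathrm{g}}_{J}]^{\mathrm{T}}\) (the sign convention of the NSI formulation in [11]), the model output is assumed to be the displacement vector on which the basis functions are evaluated, and the columns of \({\mathbf{D}}^{\mathrm{e}}\) acting on the nonlinear inputs are assumed to be zero so that the recursion is explicit. The error is assumed to be the RMS of the residual normalised by the RMS of the measured output, in percent. A non-finite or very large response is reported as divergence (`None`), corresponding to the non-converging cases discussed in Sect. 3.4.

```python
import numpy as np


def simulate_nsi(A, Be, C, De, f, basis, N, y_limit=1e6):
    """Propagate x(t+1) = A x(t) + B^e f^e(t), y(t) = C x(t) + D^e f^e(t).

    f     : (n_steps, N) external force samples
    basis : list of callables g_j(y) returning a scalar (hypothetical interface)
    Returns the (n_steps, n_outputs) simulated output, or None if it diverges.
    """
    n_steps = f.shape[0]
    x = np.zeros(A.shape[0])
    D = De[:, :N]                        # feedthrough of the external force only
    y_sim = np.empty((n_steps, C.shape[0]))
    for t in range(n_steps):
        y = C @ x + D @ f[t]
        if not np.all(np.isfinite(y)) or np.max(np.abs(y)) > y_limit:
            return None                  # treated as a diverging simulation
        g = np.array([-gj(y) for gj in basis], dtype=float)  # assumed sign convention
        fe = np.concatenate([f[t], g])   # extended input at time t
        y_sim[t] = y
        x = A @ x + Be @ fe
    return y_sim


def output_error(y_val, y_sim):
    """Percentage error per output: 100 * RMS(y_val - y_sim) / RMS(y_val)."""
    rms = lambda v: np.sqrt(np.mean(np.asarray(v) ** 2, axis=0))
    return 100.0 * rms(y_val - y_sim) / rms(y_val)
```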

This work focuses on the last point: understanding the limitations of this process caused by the nonlinearity. A numerical example is presented in Appendix A to validate the methodology in a case where both the true underlying-linear FRF and the true nonlinear restoring force are known. The numerical example consists of a two-degrees-of-freedom system with a non-smooth asymmetric nonlinearity. Monte Carlo simulations have been performed to account for stochastic variations and model uncertainties.

2.2 Experimental test rig

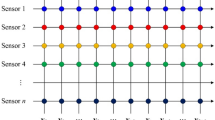

The test rig consists of a cantilever beam with a total length of 400 mm and a rectangular cross-section (width 25 mm, thickness 5 mm). Three small neodymium magnets are located at the tip, as depicted in Fig. 1. Different nonlinear effects, such as hardening, softening, multi-stability or quasi-zero stiffness, can be obtained by changing the polarisation of the magnets [21,22,23]. A repelling configuration was considered in this work, generating a predominantly hardening nonlinearity. The system was excited using an electrodynamic shaker (Modal Shop, model K2007E01) driven with brown noise, whose power spectral density decreases with frequency; this concentrates the excitation at low frequencies so that the analysis can focus on the first mode. Six levels of excitation, from 0.08 NRMS to 1.16 NRMS in geometric progression, were considered, as listed in Table 1; the excitation level was increased by roughly 70% from one level to the next. An accelerometer A1 (model PCB 352A24) was used to measure the response of the beam at the tip; the sampling frequency was 2000 Hz and each acquisition lasted 120 s. All signals were recorded using an NI USB-4431 acquisition board from National Instruments. The last 20 s of each record are used as the validation set in all the identification steps of the following sections, while the remaining 100 s are used as the training set.
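The excitation design can be checked with a few lines of Python. The sketch computes the common ratio of the geometric progression from the first and last levels (about 1.71, i.e. roughly a 70% increase per step), splits a 120 s record sampled at 2000 Hz into 100 s of training data and 20 s of validation data, and generates a brown-noise signal as integrated white noise scaled to a target RMS. The noise generator and all function names are hypothetical stand-ins: the actual signal generation used in the tests is not described.

```python
import numpy as np

FS = 2000.0      # sampling frequency (Hz), as stated in the text
T_TOTAL = 120.0  # duration of each acquisition (s)
T_VAL = 20.0     # final segment kept for validation (s)


def level_ratio(first, last, n_levels):
    """Common ratio of a geometric progression with n_levels terms."""
    return (last / first) ** (1.0 / (n_levels - 1))


def brown_noise(n, rms, rng):
    """Hypothetical brown-noise generator: integrated white noise (PSD ~ 1/f^2).

    A linear trend is removed to limit the random-walk drift, then the signal is
    scaled to the requested RMS value.
    """
    t = np.arange(n)
    x = np.cumsum(rng.standard_normal(n))
    x -= np.polyval(np.polyfit(t, x, 1), t)
    return rms * x / np.sqrt(np.mean(x ** 2))


def split_train_val(signal, fs=FS, t_val=T_VAL):
    """Training part and last t_val seconds (validation part) of a record."""
    n_val = int(round(t_val * fs))
    return signal[:-n_val], signal[-n_val:]
```

With these values, `level_ratio(0.08, 1.16, 6)` is about 1.707, and a full record splits into 200000 training samples and 40000 validation samples.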

The measured accelerations in the time domain are depicted in Fig. 2a for each level of excitation. The corresponding displacements, obtained by double integration of the accelerations, are depicted in Fig. 2b. Note that overdots denote differentiation with respect to time.
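The integration procedure is not specified in the text. One common choice, assumed here purely for illustration, is frequency-domain double integration: each Fourier coefficient is divided by \((i\omega)^2\), and components below a cut-off frequency are discarded to suppress low-frequency drift. The cut-off `f_low` is a hypothetical parameter that would need to lie well below the first resonance.

```python
import numpy as np


def integrate_twice(acc, fs, f_low=2.0):
    """Displacement from acceleration by frequency-domain double integration.

    Each Fourier coefficient is divided by (i*w)^2 = -w^2; bins below f_low (Hz),
    including DC, are set to zero to suppress drift (f_low is a hypothetical choice).
    """
    n = len(acc)
    spec = np.fft.rfft(acc)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    w = 2.0 * np.pi * freqs
    disp_spec = np.zeros_like(spec)
    keep = freqs >= f_low
    disp_spec[keep] = -spec[keep] / w[keep] ** 2
    return np.fft.irfft(disp_spec, n=n)
```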

The experimental FRFs obtained using the H1 estimator and the corresponding coherence functions are depicted in Fig. 3 for each level. A clear shift of the resonance peak towards higher frequencies can be observed as the excitation level increases, which is a sign of strong nonlinear hardening behaviour. Note also the decrease in coherence as the excitation level increases, which is an additional indication of the presence of a strong nonlinearity.
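The H1 estimator divides the averaged force-response cross-spectrum by the averaged force auto-spectrum, \(H_1 = S_{fy}/S_{ff}\), and the ordinary coherence is \(\gamma^2 = |S_{fy}|^2/(S_{ff}S_{yy})\). The NumPy sketch below uses Welch averaging with a Hann window and 50% overlap; the segment length is a hypothetical choice, and the constant scaling factors of the spectra are omitted because they cancel in both ratios. Note that coherence is only informative when several segments are averaged.

```python
import numpy as np


def h1_and_coherence(force, resp, fs, nperseg=4096):
    """Welch-averaged H1 FRF estimate and ordinary coherence (Hann window, 50% overlap)."""
    if len(force) < nperseg:
        raise ValueError("record shorter than one segment")
    win = np.hanning(nperseg)
    step = nperseg // 2
    s_ff = s_yy = 0.0
    s_fy = 0.0j
    for start in range(0, len(force) - nperseg + 1, step):
        F = np.fft.rfft(win * force[start:start + nperseg])
        Y = np.fft.rfft(win * resp[start:start + nperseg])
        s_ff = s_ff + np.abs(F) ** 2          # force auto-spectrum
        s_yy = s_yy + np.abs(Y) ** 2          # response auto-spectrum
        s_fy = s_fy + np.conj(F) * Y          # force-response cross-spectrum
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    H1 = s_fy / s_ff
    coh = np.abs(s_fy) ** 2 / (s_ff * s_yy)
    return freqs, H1, coh
```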

3 Results

3.1 Best linear approximation (BLA) via subspace identification

A linear state-space model is estimated for each level of excitation using the linear subspace identification technique [24] with a model order of 2. To ensure that the estimated model is the best possible linear candidate, an a-posteriori optimisation is carried out using the validation set. This serves to estimate the BLA of the selected data, and is used in the extrapolation process of Sect. 3.4 as a comparative measure.

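To this end, the system output is generated using the estimated linear state-space matrices (A, B, C, D) and the measured forcing input. A least-squares minimisation problem is defined to reduce the residual between the measured output \({\mathrm{y}}_{1}^{\mathrm{val}}\) and the simulated output \({\mathrm{y}}_{1}^{\mathrm{sim}}\) by optimising the state-space matrices. The minimisation problem is defined by

$$\widehat{{\varvec{\uptheta}}}=\underset{{\varvec{\uptheta}}}{\mathrm{arg\,min}}\sum_{\uptau }{\left({\mathrm{y}}_{1}^{\mathrm{val}}\left(\uptau \right)-{\mathrm{y}}_{1}^{\mathrm{sim}}\left(\uptau ,{\varvec{\uptheta}}\right)\right)}^{2}$$(8)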

where \( {\varvec{\uptheta}} = \mathrm{vec}\left(\left[\mathbf{A}\quad \mathbf{B}\quad \mathbf{C}\quad \mathbf{D}\right]\right) \) and the operator \(\mathrm{vec}\left(\cdot \right)\) stacks the columns of a matrix on top of one another. The optimisation starts from the matrices obtained with the subspace approach, and the Levenberg–Marquardt algorithm is adopted with a step tolerance of \({10}^{-8}\).
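The a posteriori refinement can be sketched with SciPy's `least_squares` using `method="lm"` (a MINPACK Levenberg–Marquardt implementation) and `xtol=1e-8` as the step tolerance. The sketch assumes a single-input single-output model with B and C stored as 1-D arrays and D as a scalar, packs θ column-wise in the spirit of the vec operator, and uses an explicit Python loop for the simulation, which is clear but slow for long records. The helper names and the parameter ordering are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares


def simulate_linear(A, B, C, D, u):
    """SISO discrete-time simulation: x(t+1) = A x + B u, y = C x + D u, x(0) = 0."""
    x = np.zeros(A.shape[0])
    y = np.empty(len(u))
    for t, ut in enumerate(u):
        y[t] = C @ x + D * ut
        x = A @ x + B * ut
    return y


def pack(A, B, C, D):
    """theta: column-wise vec of A followed by B, C and D (hypothetical ordering)."""
    return np.concatenate([A.ravel(order="F"), B, C, [D]])


def unpack(theta, n):
    A = theta[:n * n].reshape((n, n), order="F")
    B = theta[n * n:n * n + n]
    C = theta[n * n + n:n * n + 2 * n]
    return A, B, C, theta[-1]


def refine_bla(A0, B0, C0, D0, u_val, y_val):
    """Levenberg-Marquardt refinement of (A, B, C, D) starting from the subspace estimate."""
    n = A0.shape[0]

    def residual(theta):
        return simulate_linear(*unpack(theta, n), u_val) - y_val

    sol = least_squares(residual, pack(A0, B0, C0, D0), method="lm", xtol=1e-8)
    return unpack(sol.x, n)
```

Because a state-space model is only defined up to a similarity transformation, θ is over-parameterised; the damping in Levenberg–Marquardt copes with the resulting rank-deficient Jacobian, but the refined matrices are not unique, only the simulated output is.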

The system FRFs for the different excitation levels of Fig. 3 are now depicted in Fig. 4 together with the corresponding BLAs. The estimated natural frequencies, damping ratios and simulation errors are listed in Table 2; the output simulation errors are computed according to Eq. (7). An increase in the excitation level is clearly associated with a decrease in BLA performance. The output simulation errors become extremely high from level 3 onwards (light green line in the figure), denoting the inability of a linear model to capture the system dynamics in the case of severe nonlinear behaviour.

Since the BLA is a linearisation for a specific working range, it is generally not advisable to use it away from the training data set when the system behaves nonlinearly [3]. This is confirmed by the error matrix shown in Fig. 5, obtained from the output simulation error \({\epsilon }_{1}\) when extrapolating towards higher levels and interpolating towards lower levels. The values on the diagonal correspond to those reported in Table 2. The off-diagonal values increase dramatically when moving away from the BLA training level, both in the case of interpolation (blue background) and extrapolation (red background). This result is expected for extrapolation, but it is less obvious for interpolation. The reason can be seen by close examination of Table 2 and Fig. 4: the estimated natural frequencies and damping ratios of the linearised models change with the excitation level, both when moving towards higher levels (i.e. extrapolating) and towards lower ones (i.e. interpolating). As an example, the error of model BLA3 at excitation level 3 is 25.5% (row 3, column 3). The same model gives an error of 51.5% when the excitation is reduced to level 2 (row 3, column 2), and an error of 64.4% when it is increased to level 4 (row 3, column 4).

Fig. 5 Error matrix on the validation set, BLA. The values are the percentage errors defined in Eq. (7). The darkness of each cell is proportional to the error level. Blue background (lower-triangular part) indicates simulations with interpolation; red background (upper-triangular part) indicates simulations with extrapolation; bold type indicates same-level simulations.

Consequently, the minimum errors for each identification level are those on the diagonal, corresponding to the excitation level at which the BLA is calculated. A surface plot of the error matrix is depicted in Fig. 6 to highlight this behaviour.
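The error matrices can be assembled with a double loop: row i holds the model identified at level i and column j the validation data of level j, so that the lower triangle (j < i) collects the "interpolation" cases and the upper triangle (j > i) the extrapolation cases, following the convention used in this paper. The `simulate` and `error` callables are hypothetical placeholders (for instance, a linear or NSI simulation and the error of Eq. (7)); a simulation returning `None` is stored as NaN, which would correspond to a non-converging cell.

```python
import numpy as np


def error_matrix(models, datasets, simulate, error):
    """E[i, j]: error of the model identified at level i on validation data of level j.

    models   : one identified model per level (any object accepted by `simulate`)
    datasets : list of (u_val, y_val) pairs, one per level
    simulate : callable(model, u_val) -> y_sim, or None if the simulation diverges
    error    : callable(y_val, y_sim) -> scalar percentage error
    """
    E = np.full((len(models), len(datasets)), np.nan)
    for i, model in enumerate(models):
        for j, (u_val, y_val) in enumerate(datasets):
            y_sim = simulate(model, u_val)
            if y_sim is not None:          # NaN marks a diverging cell
                E[i, j] = error(y_val, y_sim)
    return E


def region_masks(n):
    """Boolean masks for 'interpolation' (j < i), same level (j == i) and extrapolation (j > i)."""
    i, j = np.indices((n, n))
    return j < i, j == i, j > i
```

Taking the minimum of each row of `E` then shows directly whether the best performance of every model is reached on its own training level, as observed on the diagonal of Fig. 5.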

3.2 Qualitative nonlinear characterisation

The nonlinear behaviour of the system is first investigated using the Acceleration Surface Method (ASM) [25] to obtain a qualitative, data-based visualisation of the system restoring force. Since the sensor is located close to the source of the nonlinearity (i.e., the magnets) and quite far from the excitation point, its acceleration surface can give a rough visualisation of the system characteristic. In particular, a qualitative representation of the restoring force can be obtained by slicing the acceleration surface in the low-velocity region. The points of the restoring force are therefore those that satisfy the condition

where the tolerance \({\varepsilon }_{v}\) is set to \({10}^{-3}\). The result is shown in Fig. 7. The system exhibits a hardening restoring force, and the results are consistent across the different excitation levels.
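The slicing step can be sketched as selecting the samples whose velocity magnitude falls below the tolerance and plotting the negative acceleration against displacement at those samples, which approximates the restoring force per unit mass when the excitation acts far from the sensor. The sketch assumes the velocity is normalised by its maximum absolute value before applying \({\varepsilon }_{v}={10}^{-3}\), since the exact form of the condition is not reproduced here; the function name is hypothetical.

```python
import numpy as np


def asm_slice(y, ydot, yddot, eps_v=1e-3):
    """Zero-velocity slice of the acceleration surface.

    Keeps samples where |ydot| / max|ydot| < eps_v (normalisation assumed) and
    returns displacement and -acceleration, a qualitative proxy for the restoring
    force per unit mass when the excitation acts far from the sensor.
    """
    v = ydot / np.max(np.abs(ydot))
    mask = np.abs(v) < eps_v
    order = np.argsort(y[mask])           # sort by displacement for plotting
    return y[mask][order], -yddot[mask][order]
```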

A polynomial representation of the nonlinear restoring force is therefore used to apply the NSI technique in the following section, considering the nonlinear basis functions

$${\mathrm{g}}_{j}\left({\mathrm{y}}_{1}\right)={\mathrm{y}}_{1}^{\,j+1},\quad j=1,\dots ,J$$(10)

in which the maximum exponent \(P=J+1\) is selected independently for each excitation level to minimise the output simulation error given in Eq. (7).

3.3 Estimation of the nonlinear model parameters

A nonlinear state-space model of order 2 is estimated for each level of excitation using the nonlinear subspace identification technique. The following information is estimated for each level:

1. The modal parameters and FRFs of the ULS. These are compared with the results at the lowest level of excitation (0.08 NRMS), which is the closest to linear behaviour. The FRFs of the ULS are depicted in Fig. 8a, and the natural frequencies and damping ratios are listed in Table 3.
2. The coefficients \({\upmu }_{j}\) of the nonlinear basis functions \({\mathrm{g}}_{j}\) and the nonlinear restoring force of the structure. The final shape of the estimated nonlinear restoring force is depicted in Fig. 8b for each level of excitation.
3. The output simulation error \({\epsilon }_{1}\) defined in Eq. (7). To this end, the last 20 s of each test are again used as the validation set, and the estimated state-space model is used to generate the outputs given the measured forcing input.

The identification is repeated for each level considering different sets of candidate basis functions, with the maximum exponent \(P\) in Eq. (10) ranging from 3 to 7. The best set is then selected as the one that minimises the error \({\epsilon }_{1}\). The selected maximum exponents are listed in Table 3 for each test.
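The model-order sweep amounts to a small search over \(P\). The sketch assumes pure powers \({\mathrm{y}}^{2}, \dots, {\mathrm{y}}^{P}\) of the tip displacement as the candidate basis (consistent with \(P=J+1\)), and `identify_and_score` is a hypothetical callable standing in for the NSI identification followed by the evaluation of \({\epsilon }_{1}\) on the validation set.

```python
def polynomial_basis(P):
    """Assumed basis: g_j(y) = y**(j + 1), j = 1..J, with P = J + 1."""
    return [lambda y, p=p: y ** p for p in range(2, P + 1)]


def select_max_exponent(identify_and_score, p_values=range(3, 8)):
    """Return the P in p_values with the lowest validation error, plus all scores.

    identify_and_score(basis) is a hypothetical callable that runs the NSI
    identification with the given basis and returns epsilon_1 of Eq. (7)
    (e.g. float('inf') if the simulation diverges).
    """
    scores = {P: identify_and_score(polynomial_basis(P)) for P in p_values}
    best = min(scores, key=scores.get)
    return best, scores
```

The default argument `p=p` in the lambda binds each exponent at creation time; without it, every basis function would use the last value of `p`.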

The results of the identification show significant consistency up to the fifth level (0.64 NRMS), for both the underlying-linear parameters and the nonlinear estimation. Residuals and errors increase noticeably at level 6 (0.81 NRMS), suggesting that the selected nonlinear basis functions are not appropriate for describing the nonlinear behaviour of the system at high response levels. This is likely associated with the occurrence of new nonlinear phenomena, such as geometric nonlinearity caused by large-amplitude displacements [17], which are not well captured by the polynomial functions used. The model estimated from level 6 is therefore considered unreliable and is marked with the symbol \({\cdot }^{*}\) in Table 3.

Interestingly, the output simulation error at the lowest excitation level (level 1) is higher than at the subsequent ones. A possible explanation is that the vibration amplitude is so low in this case that noise and/or effects of the boundary connection affect the result. A similar behaviour is observed and discussed in Sect. 3.4.

3.4 Extrapolation, interpolation and model stability

The estimated state-space models have been used above to replicate the outputs of the system on a validation set belonging to the same excitation level as the training set. It is, however, interesting to analyse the output simulation errors when extrapolating towards higher levels and interpolating towards lower levels. The results are illustrated in the error matrix of Fig. 9. The best linear approximation (BLA) is also reported for each level as a comparative measure, using the diagonal values of Fig. 5.

Fig. 9 Error matrix on the validation set. The values are the percentage errors defined in Eq. (7). The darkness of each cell is proportional to the error level. Blue background (lower-triangular part) indicates simulations with interpolation; red background (upper-triangular part) indicates simulations with extrapolation; bold type indicates same-level simulations.

The linearised model shows performance similar to that of nonlinear models 1–5 at the lowest excitation level (first column), confirming the quasi-linear behaviour in this range of motion. Interestingly, it outperforms nonlinear model 6 (marked with \({\cdot }^{*}\)), which proved to be poor, as indicated in Table 3. For the nonlinear models, the errors tend to increase when extrapolating towards higher excitation levels (red background). This confirms that extrapolation from nonlinear models is generally not advisable, especially in the case of polynomial expansions. Cells with a cross correspond to cases in which the simulation does not converge, so that extrapolation is not possible at all. Assuming that the underlying-linear state-space model is stable, this may occur for two reasons: (i) the extrapolated nonlinear restoring force becomes too large for the simulation to converge at a certain time step; (ii) the extrapolated nonlinear restoring force exhibits unstable behaviour, thus making the nonlinear model unstable. The latter case can be observed in Fig. 10, which shows the case of extrapolating from level 3 towards level 5. This behaviour originates from the high-order polynomial terms of the selected nonlinear basis functions and must be carefully investigated when performing similar data-based nonlinear identifications.
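One simple diagnostic for case (ii) is to check whether the identified polynomial restoring force keeps a positive tangent stiffness beyond the displacement range of the training data: if the highest-order coefficient has the "wrong" sign, the tangent stiffness \(k + \mathrm{d}f^{\mathrm{nl}}/\mathrm{d}y\) eventually becomes negative, and trajectories reaching that region can escape. The sketch below assumes a single-degree-of-freedom force \(k y + \sum_j {\upmu }_{j} y^{j+1}\) and scans a displacement grid; the function name is hypothetical.

```python
import numpy as np


def first_stiffness_loss(k_lin, mu, y_max, n=10001):
    """Smallest |y| <= y_max where the tangent stiffness becomes non-positive.

    Assumed SDOF force: k_lin * y + sum_j mu[j-1] * y**(j+1), j = 1..J.
    Returns None if the tangent stiffness stays positive on [-y_max, y_max].
    """
    y = np.linspace(-y_max, y_max, n)
    k_t = np.full_like(y, k_lin) + sum((j + 2) * m * y ** (j + 1) for j, m in enumerate(mu))
    lost = np.abs(y[k_t <= 0.0])
    return float(lost.min()) if lost.size else None
```

For instance, with the hypothetical, dimensionless values \(k = 1\) and \(\upmu = (0, 1, 0, -1)\), i.e. \(f^{\mathrm{nl}} = y^3 - y^5\), the tangent stiffness \(1 + 3y^2 - 5y^4\) vanishes at \(|y| \approx 0.916\), so a model fitted on smaller amplitudes would look hardening inside the training range and become unstable beyond it.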

It is worth highlighting that the estimated nonlinear models outperform the linear models in all other cases, especially when no interpolation or extrapolation is involved (diagonal values). An interesting result can be observed in the first column (lowest excitation level): the errors are generally higher than in the subsequent columns for all the valid nonlinear models (NSI1–NSI5). As mentioned in the previous section, this is possibly caused by a low signal-to-noise ratio, or by low-amplitude nonlinear effects related to the boundary connection of the beam that are not included in the model.

4 Conclusions

The extrapolation and interpolation behaviour of data-driven state-space models of a nonlinear structure has been investigated in this paper. The analysis was carried out on a bench-top experimental test rig consisting of a cantilever beam in which magnets were used to generate strong geometric nonlinearity. The beam was driven by an electrodynamic shaker using several levels of broadband random noise. Its behaviour ranged from quasi-linear to strongly nonlinear, and the collected data were used both as training and validation sets for subspace-based identification techniques (linear and nonlinear). The limitations of the identification process have been evaluated by comparing model predictions and measurements across the different excitation levels. This was done by first defining an error measure based on the RMS deviation between the predicted and measured validation time histories. An error matrix was then assembled, which presents interpolation and extrapolation issues in a single view and gives a clear picture of the limits of the estimated models when they are used to simulate the behaviour of the structure outside the range of motion of the training data set. The results obtained with nonlinear models were also compared with those of linearised models to give a complete view of the performance of each candidate model at each level of excitation. It is shown that nonlinear model prediction errors generally tend to increase when extrapolating towards higher excitation levels. The results are in accordance with expectations and provide useful insight into the importance of accurately selecting an appropriate range of motion when designing an experimental setup. Furthermore, the validity of the estimated nonlinear models becomes poor for very strong nonlinear behaviour. This is clear from the identification performed at the highest excitation level, and can be related to the occurrence of new nonlinear phenomena not included in the model.
Linearised models, on the other hand, can be a valid modelling tool provided that the nonlinear behaviour is weak and the operational range of motion is covered by the validation set; they are, however, inadequate for both interpolation and extrapolation.

Data availability

The datasets generated during and/or analysed during the current study are not publicly available but are available from the corresponding author on reasonable request.

References

Ewins, D.J.: Modal Testing: Theory, Practice and Application. Wiley (2000)

Kerschen, G., Worden, K., Vakakis, A.F., Golinval, J.-C.: Past, present and future of nonlinear system identification in structural dynamics. Mech. Syst. Signal Process. 20, 505–592 (2006). https://doi.org/10.1016/j.ymssp.2005.04.008

Schoukens, J., Vaes, M., Pintelon, R.: Linear system identification in a nonlinear setting: nonparametric analysis of the nonlinear distortions and their impact on the best linear approximation. IEEE Control. Syst. 36, 38–69 (2016). https://doi.org/10.1109/MCS.2016.2535918

Dobrowiecki, T., Schoukens, J.: Measuring a linear approximation to weakly nonlinear MIMO systems. Automatica 43, 1737–1751 (2007). https://doi.org/10.1016/j.automatica.2007.03.007

Friis, T., Tarpø, M., Katsanos, E.I., Brincker, R.: Equivalent linear systems of nonlinear systems. J. Sound Vib. (2020). https://doi.org/10.1016/j.jsv.2019.115126

Friis, T., Tarpø, M., Katsanos, E.I., Brincker, R.: Best linear approximation of nonlinear and nonstationary systems using operational modal analysis. Mech. Syst. Signal Process. (2021). https://doi.org/10.1016/j.ymssp.2020.107395

Noël, J.P., Kerschen, G.: Nonlinear system identification in structural dynamics: 10 more years of progress. Mech. Syst. Signal Process. 83, 2–35 (2017). https://doi.org/10.1016/j.ymssp.2016.07.020

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning. Springer, New York (2009). https://doi.org/10.1007/978-0-387-84858-7

Schüssler, M., Nelles, O.: Extrapolation behavior comparison of nonlinear state space models. IFAC-PapersOnLine. 54, 487–492 (2021). https://doi.org/10.1016/j.ifacol.2021.08.407

Paduart, J., Lauwers, L., Swevers, J., Smolders, K., Schoukens, J., Pintelon, R.: Identification of nonlinear systems using Polynomial Nonlinear State Space models. Automatica 46, 647–656 (2010). https://doi.org/10.1016/j.automatica.2010.01.001

Marchesiello, S., Garibaldi, L.: A time domain approach for identifying nonlinear vibrating structures by subspace methods. Mech. Syst. Signal Process. 22, 81–101 (2008). https://doi.org/10.1016/j.ymssp.2007.04.002

Noël, J.P., Kerschen, G.: Frequency-domain subspace identification for nonlinear mechanical systems. Mech. Syst. Signal Process. 40, 701–717 (2013). https://doi.org/10.1016/j.ymssp.2013.06.034

Sadeqi, A., Moradi, S., Shirazi, K.H.: Nonlinear subspace system identification based on output-only measurements. J. Franklin Inst. 357, 12904–12937 (2020). https://doi.org/10.1016/j.jfranklin.2020.08.008

Noël, J.P., Marchesiello, S., Kerschen, G.: Subspace-based identification of a nonlinear spacecraft in the time and frequency domains. Mech. Syst. Signal Process. 43, 217–236 (2014). https://doi.org/10.1016/j.ymssp.2013.10.016

Anastasio, D., Marchesiello, S.: Free-decay nonlinear system identification via mass-change scheme. Shock and Vib. (2019). https://doi.org/10.1155/2019/1759198

Anastasio, D., Marchesiello, S.: Nonlinear frequency response curves estimation and stability analysis of randomly excited systems in the subspace framework. Nonlinear Dyn. (2023). https://doi.org/10.1007/s11071-023-08280-6

Anastasio, D., Marchesiello, S., Kerschen, G., Noël, J.P.: Experimental identification of distributed nonlinearities in the modal domain. J. Sound Vib. 458, 426–444 (2019). https://doi.org/10.1016/j.jsv.2019.07.005

Zhu, R., Marchesiello, S., Anastasio, D., Jiang, D., Fei, Q.: Nonlinear system identification of a double-well Duffing oscillator with position-dependent friction. Nonlinear Dyn. 108, 2993–3008 (2022). https://doi.org/10.1007/s11071-022-07346-1

Anastasio, D., Fasana, A., Garibaldi, L., Marchesiello, S.: Nonlinear dynamics of a duffing-like negative stiffness oscillator: modeling and experimental characterization. Shock and Vib. (2020). https://doi.org/10.1155/2020/3593018

Marchesiello, S., Fasana, A., Garibaldi, L.: Modal contributions and effects of spurious poles in nonlinear subspace identification. Mech. Syst. Signal Process. 74, 111–132 (2016). https://doi.org/10.1016/j.ymssp.2015.05.008

Ramlan, R., Brennan, M.J., Mace, B.R., Kovacic, I.: Potential benefits of a non-linear stiffness in an energy harvesting device. Nonlinear Dyn. 59, 545–558 (2010). https://doi.org/10.1007/s11071-009-9561-5

Shaw, A.D., Gatti, G., Gonçalves, P.J.P., Tang, B., Brennan, M.J.: Design and test of an adjustable quasi-zero stiffness device and its use to suspend masses on a multi-modal structure. Mech. Syst. Signal Process. (2021). https://doi.org/10.1016/j.ymssp.2020.107354

Gatti, G., Shaw, A.D., Gonçalves, P.J.P., Brennan, M.J.: On the detailed design of a quasi-zero stiffness device to assist in the realisation of a translational Lanchester damper. Mech. Syst. Signal Process. (2022). https://doi.org/10.1016/j.ymssp.2021.108258

Van Overschee, P., De Moor, B.: Subspace Identification for Linear Systems: Theory, Implementation, Applications. Springer US (1996). https://doi.org/10.1007/978-1-4613-0465-4

Dossogne, T., Masset, L., Peeters, B., Noël, J.P.: Nonlinear dynamic model upgrading and updating using sine-sweep vibration data. Proc. R. Soc. A: Math. Phys. Eng. Sci. 475, 20190166 (2019). https://doi.org/10.1098/rspa.2019.0166

Funding

Open access funding provided by Politecnico di Torino within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript. The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Numerical demonstration


The system considered for the numerical simulations is depicted in Fig. 11. It has two degrees of freedom and a mechanical stop positioned 2 mm away from the second mass. This creates a strong, non-smooth, asymmetric nonlinearity of contact type when the gap is closed.

The theoretical nonlinear restoring force is therefore given by the following relationship:

The numerical example and the system parameters are taken from [16], except for the gap value \(d=2\mathrm{ mm}\), which in this paper is assumed to be known with a stochastic uncertainty, as described later. The system is excited at DOF 1 by a zero-mean Gaussian random input at 10 equally spaced levels between 0.5 N RMS and 5 N RMS. The outputs are corrupted by 3% Gaussian noise, and 100 Monte Carlo simulations are conducted for each level of excitation. For each simulation, the equations of motion are integrated numerically with the Newmark method, using a sampling frequency of 4096 Hz and a time span of 60 s. The first 40 s of each record are used to estimate the state-space model with NSI, and the last 20 s are used as the validation set. The uncertainty in the gap value is accounted for in the selection of the nonlinear basis functions of NSI, which read:

where \(\xi \) is sampled from a zero-mean Gaussian distribution with \(3\sigma =0.2 \mathrm{mm}\). Therefore, the adopted gap value \({d}^{*}\) differs between Monte Carlo simulations and lies within \(\pm 0.2 \mathrm{mm}\) of the nominal value with a probability of 99.7%.
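The data-generation settings above can be sketched as follows. This is a minimal illustration, not the authors' code: it only builds the excitation levels, a Gaussian input scaled to a target RMS, the 40 s / 20 s split, output-noise corruption and the perturbed gap values. Two points are assumptions: "3% Gaussian noise" is read as noise with a standard deviation equal to 3% of the signal RMS, and the perturbed gap is taken as \({d}^{*}=d+\xi \) because the basis-function equation is not reproduced here. The Newmark integration itself is omitted because the system parameters of [16] are not listed in this appendix.

```python
import numpy as np

FS = 4096                 # sampling frequency [Hz], as stated in the text
T_TOTAL = 60.0            # record length [s]
T_TRAIN = 40.0            # first 40 s for estimation, last 20 s for validation
N_MC = 100                # Monte Carlo runs per excitation level
D_NOMINAL = 2.0e-3        # nominal gap d [m]
SIGMA_GAP = 0.2e-3 / 3.0  # 3*sigma = 0.2 mm, so sigma is about 0.0667 mm

# Ten equally spaced RMS force levels between 0.5 N and 5 N (step 0.5 N)
levels = np.linspace(0.5, 5.0, 10)

def gaussian_input(rms, n, rng):
    """Zero-mean Gaussian force rescaled so that its sample RMS equals `rms`."""
    u = rng.standard_normal(n)
    return rms * u / np.sqrt(np.mean(u ** 2))

def add_output_noise(y, ratio, rng):
    """Add Gaussian noise with std = ratio * RMS(y) (assumed meaning of '3% noise')."""
    y_rms = np.sqrt(np.mean(y ** 2))
    return y + ratio * y_rms * rng.standard_normal(y.shape)

def split_record(x, fs=FS, t_train=T_TRAIN):
    """Split a record into estimation (first t_train s) and validation parts."""
    n_train = int(round(t_train * fs))
    return x[:n_train], x[n_train:]

def sample_gaps(n, rng, d=D_NOMINAL, sigma=SIGMA_GAP):
    """Perturbed gaps d* = d + xi with xi ~ N(0, sigma^2) (additive form assumed)."""
    return d + sigma * rng.standard_normal(n)

rng = np.random.default_rng(0)
n = int(FS * T_TOTAL)
u = gaussian_input(levels[0], n, rng)   # lowest level, 0.5 N RMS
u_train, u_val = split_record(u)
gaps = sample_gaps(N_MC, rng)           # one gap value per Monte Carlo run
```

With \(3\sigma =0.2 \mathrm{mm}\), roughly 99.7% of the sampled gaps fall within \(\pm 0.2 \mathrm{mm}\) of 2 mm, which is the bound quoted in the text.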

The errors on the estimated modal parameters and nonlinear coefficients are listed in Table 4 for each level of excitation. Results are averaged over the Monte Carlo simulations, with standard deviations given in brackets. The errors on the modal parameters of the ULS remain low across all excitation levels and are generally higher for the first mode of the system, because the nonlinearity mainly affects the first mode. This is clear from Fig. 12, which shows the H1 estimator for several excitation levels. As for the nonlinear coefficients \({k}_{1}\) and \({k}_{3}\), errors and standard deviations are very high at the lowest excitation levels and tend to stabilize at higher levels. The first level (0.5 N) does not provide any estimate of the nonlinear coefficients because the output displacement \({y}_{2}\) is not large enough to close the gap \(d\); therefore, the nonlinearity is not activated. Moreover, the errors on \({k}_{1}\) are generally higher than those on \({k}_{3}\). This can be attributed to the uncertainty in the gap value, which has a greater impact on the estimation of the stiffness \({k}_{1}\), especially at low levels.
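The H1 estimator mentioned above divides the averaged input-output cross-spectrum by the averaged input auto-spectrum. A minimal NumPy sketch with Welch-style averaging (Hann window, 50% overlap) follows; the segment length of 1024 samples is an assumption, not the setting used for Fig. 12. The helper `contact_fraction` is hypothetical and only illustrates why the 0.5 N level yields no nonlinear estimate: it counts the samples in which \({y}_{2}\) exceeds the gap, assuming contact occurs for \({y}_{2}>d\).

```python
import numpy as np

def h1_estimate(u, y, fs, nperseg=1024):
    """H1 FRF estimate: averaged cross-spectrum S_uy over averaged auto-spectrum S_uu.

    Hann-windowed segments with 50% overlap; scaling factors cancel in the ratio.
    """
    u = np.asarray(u, dtype=float)
    y = np.asarray(y, dtype=float)
    step = nperseg // 2
    window = np.hanning(nperseg)
    s_uy = np.zeros(nperseg // 2 + 1, dtype=complex)
    s_uu = np.zeros(nperseg // 2 + 1)
    n_seg = 0
    for start in range(0, len(u) - nperseg + 1, step):
        U = np.fft.rfft(window * u[start:start + nperseg])
        Y = np.fft.rfft(window * y[start:start + nperseg])
        s_uy += np.conj(U) * Y      # accumulate cross-spectrum
        s_uu += np.abs(U) ** 2      # accumulate input auto-spectrum
        n_seg += 1
    if n_seg == 0:
        raise ValueError("signal is shorter than one segment")
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, s_uy / s_uu

def contact_fraction(y2, gap):
    """Hypothetical helper: fraction of samples with y2 > gap (stop engaged)."""
    return float(np.mean(np.asarray(y2) > gap))
```

If SciPy is available, the same ratio can be formed from `scipy.signal.csd(u, y)` and `scipy.signal.welch(u)` with matching segment settings. A contact fraction of zero at the lowest level would be consistent with the nonlinear terms being unidentifiable there.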

The estimated nonlinear state-space model of each Monte Carlo simulation is used to simulate the outputs of the system at all excitation levels. The output simulation errors \({\epsilon }_{1}\) and \({\epsilon }_{2}\) (Eq. 7) associated with DOFs 1 and 2 are then evaluated and averaged across the simulations. Since the errors on DOF 2 are always higher than those on DOF 1 for the considered system, only the error matrix of \({\epsilon }_{2}\) is depicted in Fig. 13. The values on the diagonal correspond to same-level identification and, as expected, generally give the lowest error for each model. Errors tend to increase when extrapolating (red background) or interpolating (blue background), with the exception of the first excitation level (first column). The reason is that the system behaves linearly at this level, so a good estimate of the ULS is enough to achieve acceptable errors. For the same reason, the model NSI1 generates huge errors when extrapolated to every other excitation level.

Fig. 13 Error matrix on the validation set of DOF 2 averaged over MC simulations. The values refer to the percentage errors defined in Eq. (7). The darkness of the cells is proportional to the error level. Blue background (lower-triangular matrix) indicates simulations with interpolation; red background (upper-triangular matrix) indicates simulations with extrapolation; bold type indicates same-level simulations.
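The layout of the error matrix can be sketched as below. Rows are taken as the model identified at level \(i\) and columns as the validation data at level \(j\), which is the reading implied by the caption (extrapolation above the diagonal, interpolation below). Eq. (7) is not reproduced in this appendix, so `percentage_error` uses a normalized mean-squared error in percent purely as an assumed stand-in. `simulate(i, u)` is a hypothetical callable returning the output of model \(i\) for input \(u\).

```python
import numpy as np

def percentage_error(y_true, y_sim):
    """Assumed stand-in for Eq. (7): normalized mean-squared error in percent."""
    y_true = np.asarray(y_true, dtype=float)
    y_sim = np.asarray(y_sim, dtype=float)
    return 100.0 * np.mean((y_true - y_sim) ** 2) / np.var(y_true)

def error_matrix(simulate, validation_sets):
    """E[i, j]: error of the model identified at level i on validation level j."""
    n = len(validation_sets)
    E = np.empty((n, n))
    for i in range(n):
        for j, (u_val, y_val) in enumerate(validation_sets):
            E[i, j] = percentage_error(y_val, simulate(i, u_val))
    return E

def cell_type(i, j):
    """Classify a cell: diagonal, above the diagonal, or below it."""
    if i == j:
        return "same-level"
    return "extrapolation" if j > i else "interpolation"
```

Averaging over Monte Carlo runs would simply mean stacking one such matrix per run and taking the mean along the first axis.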

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Anastasio, D., Marchesiello, S., Gatti, G. et al. An investigation into model extrapolation and stability in the system identification of a nonlinear structure. Nonlinear Dyn 111, 17653–17665 (2023). https://doi.org/10.1007/s11071-023-08770-7


DOI: https://doi.org/10.1007/s11071-023-08770-7