Abstract

Considering the influence of environmental capacity and forgetting on rumor spreading, we improve the traditional SIR (susceptible–infected–removed) rumor propagation model and give two dynamic models of rumor propagation, one in a heterogeneous environment and one in a homogeneous environment. The main purpose of this paper is to carry out a dynamic analysis of these rumor propagation models. In the spatially heterogeneous environment, we analyze the uniform persistence of the rumor propagation model and the asymptotic behavior of the positive equilibrium point when the diffusion rate of the rumor–susceptible tends to zero. In the spatially homogeneous environment, we discuss the stability of the rumor propagation model. Further, the optimal control and the necessary optimality conditions are obtained by using the maximum principle. Finally, we study the Hopf bifurcation phenomenon by introducing a time delay into the reaction–diffusion model. In addition, the existence of the Hopf bifurcation is verified and the influence of the diffusion coefficients is studied by numerical simulations.


1 Introduction

A rumor is a statement that arouses public interest and spreads without any official basis to support it. Nowadays, with the development of science and technology, online social networks such as Instagram, WeChat, Twitter and Facebook keep emerging and make it more convenient for people to share information. At the same time, rumors spread faster and in more diverse ways. Because the information carried by a rumor is untrue or even harmful, rumors often lead to economic loss, social unrest and other adverse effects. In major events in particular, deliberately spread rumors often make the situation worse. For example, during the spread of the atypical pneumonia caused by a novel coronavirus (COVID-19) in China, many rumors caused public panic and even led to a rush to buy shuanghuanglian, which hindered the government from controlling the disease as soon as possible. Thus, understanding the mechanism of rumor spreading and then controlling it has become a concern of scholars.

Because there are many similarities between the spread of rumors and the spread of infectious diseases, many epidemic models have been applied to the analysis of rumor spreading. In 1965, the DK model, the first classic model of rumor spreading, was put forward by Daley and Kendall [1]. In the DK model, the population is divided into three groups: ignorants (people who do not notice the rumor), spreaders (people who notice and spread the rumor) and stiflers (people who notice but do not spread the rumor). On this basis, Maki and Thompson assumed that when a spreader contacts another spreader, the spreader stops spreading the rumor, and proposed the MK model [2]. Since then, many researchers have applied or improved classical propagation models, such as SI (susceptible–infected) and SIR, in the study of rumor spreading [3,4,5,6,7,8,9,10]. In Ref. [4], Qian improved the traditional SIR model with the concept of independent spreaders in complex networks. In view of the fact that rumors spread in multilingual environments, Wang established an SIR model with a cross-transmission mechanism [5]. Zhao changed the SIR model to the SIHR (susceptible–infected–hibernator–removed) model by adding a connection between ignorants and stiflers, and discussed the influence of the forgetting rate and the average degree on the rumor-spreading scale [6]. Yao improved the SIR model to the SDILR (susceptible–dangerous–infective–latent–recovered) model by considering the filtering function of social media for rumors [10]. In addition, many researchers have established new models based on specific situations in real life [8, 11,12,13,14]. For example, Tian assumed that ignorants had three different attitudes when facing rumors and thus established a new SDILR model [8]. In Ref. [13], Liu divided the population into ignorants, lurkers, spreaders and removed individuals and established the ILSR model.

With the deepening of research and the rapid development of network media, researchers have found that traditional models based on ordinary differential equations cannot reflect the influence of space on rumor spreading. Therefore, many scholars have begun to use partial differential equations to study the spread of rumors [15,16,17,18]. Considering the effect of space-time diffusion, Zhu proposed a rumor propagation model with uncertainty [15]. Guo discussed a kind of reaction–diffusion model with nonlocal delay effect and nonlinear boundary conditions [16]. Similarly, in the field of infectious diseases, more in-depth research is continuing [19,20,21,22,23,24]. However, the above discussions are all carried out in a homogeneous environment; that is to say, the parameters are the same at every point in space. Obviously, such a model cannot fully reflect the actual situation of rumor propagation. Therefore, many researchers have tried to extend it to a spatially heterogeneous environment. In the field of infectious diseases, there have been some achievements [25,26,27,28]. In Ref. [25], Lei extended the SIR ordinary differential equation model studied in Ref. [26] to a partial differential equation model in heterogeneous space and discussed the asymptotic behavior of the positive equilibrium point as the diffusion rate tends to zero. Zhang put forward a reaction–diffusion SVIR (susceptible–vaccinated–infected–removed) epidemic model in a heterogeneous environment and found that the diffusion rate of the disease in heterogeneous space was higher than that in homogeneous space [27]. At present, spatiotemporal propagation models of rumors in heterogeneous environments have rarely been considered.

In addition to the dynamic behavior of the model, the optimal control of the model is also an enduring topic. Scholars in various fields have studied the optimal control problem for different models [29,30,31,32,33]. Gashirai applied optimal control theory to the FMD transmission model and analyzed how to control the disease effectively [31]. Based on the MK rumor model, Kandhway discussed how to spread as much information as possible with limited funding [32]. In Ref. [33], Huo proved the existence of the optimal control of a rumor propagation model with media reports by using Pontryagin’s maximum principle. It can be seen that the study of optimal control has high practical value, in particular for spatiotemporal propagation models.

In the past, the population growth rate was often taken as a constant when building rumor propagation or epidemic models. However, some researchers suggest that the capacity of a rumor-spreading system is not infinite, owing to technological restrictions and the limited carrying capacity of the real world. Therefore, it is more realistic to describe population growth with the logistic equation, which is governed by the intrinsic growth rate and a carrying capacity [34,35,36]. At the same time, several practical factors call for a time delay in the rumor-spreading system [12]: people think and judge on the basis of their own experience and knowledge when they first encounter a rumor, and they do not necessarily read the news as soon as they receive it. Similarly, there is usually a time interval between people’s exposure to a rumor and their spreading it. Based on these two aspects, we consider the influence of time delay and the logistic equation when establishing the rumor propagation model.

In this paper, we will improve the traditional SIR rumor propagation model to the following form

where S(t, x) denotes the rumor–susceptible who have not been exposed to rumors and may become a rumor–infector, I(t, x) denotes the rumor–infector who believe and spread rumors with a certain probability, and R(t, x) denotes the removal who already know the rumor is false and will not pay any more attention to it. We assume that the network exit rates of all three groups are \(\mu _1(x), \mu _2(x)\) and \(\mu _3(x)\). The diffusion coefficients \(\mathrm{d}_S\), \(\mathrm{d}_I\) and \(\mathrm{d}_R\) represent the migration rate of rumor–susceptible individuals, the migration rate of rumor–infector individuals and the rate of movement of removal, respectively. r(x) represents the internal growth rate of population, and K(x) represents the maximum capacity of network environment. The probability that a rumor–susceptible becomes a rumor–infector due to contact with rumor is expressed as \(\beta (x)\). \(\gamma (x)\) and \(\delta (x)\) indicate the probability that the rumor–infector becomes a rumor–susceptible due to forgetting and a remover due to identifying the false information, respectively. The habitat \(\Omega \subset {\mathbb {R}}^N\) \((N\ge 1)\) is a bounded area, and its boundary \(\partial \Omega \) is smooth. The Neumann boundary condition means that there is no population flow on the boundary \(\partial \Omega \). We assume that \(\mu _1(x)\), \(\mu _2(x)\), \(\mu _3(x)\), r(x), K(x), \(\beta (x)\), \(\gamma (x)\) and \(\delta (x)\) are positive and Hölder continuous functions on \({\overline{\Omega }}\).
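As a reading aid, a reaction–diffusion system of the following shape is consistent with the parameter descriptions above and with the equilibria \(E_0\) and \(E_*\) stated in Sect. 3. The arrangement of terms is inferred from the text and should be read as an assumption, not as the exact statement of (1.1):

$$\begin{aligned} \left\{ \begin{array}{lll} \dfrac{\partial S}{\partial t}-\mathrm{d}_S\Delta S=r(x)S\bigg (1-\dfrac{S}{K(x)}\bigg )-\beta (x)SI+\gamma (x)I-\mu _1(x)S,&{}x\in \Omega ,\ t>0,\\ \dfrac{\partial I}{\partial t}-\mathrm{d}_I\Delta I=\beta (x)SI-\gamma (x)I-\delta (x)I-\mu _2(x)I,&{}x\in \Omega ,\ t>0,\\ \dfrac{\partial R}{\partial t}-\mathrm{d}_R\Delta R=\delta (x)I-\mu _3(x)R,&{}x\in \Omega ,\ t>0,\\ \dfrac{\partial S}{\partial \nu }=\dfrac{\partial I}{\partial \nu }=\dfrac{\partial R}{\partial \nu }=0,&{}x\in \partial \Omega ,\ t>0, \end{array}\right. \end{aligned}$$

together with nonnegative continuous initial data. Under this reading, R does not appear in the equations for S and I, and the logistic term satisfies \(r(x)S(1-S/K(x))\le r(x)K(x)/4\) for all \(S\ge 0\), which is the kind of bound that a constant such as \(r^*K^*/(4(\mu _1)_*)\) would come from.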

Since the first two equations of system (1.1) are independent of R(t, x), we can simplify it to

In the following, we will study the dynamic behavior of system (1.2). Obviously, according to Ref. [37], by applying the strong maximum principle and Hopf lemma for the elliptic equations corresponding to system (1.2), we know that \(S(x)\ge 0\) and \(I(x)\ge 0\) for all \(x\in {\bar{\Omega }}\).

The innovation of this paper includes the following three points. Firstly, considering the difference of location parameters, we establish a heterogeneous spatial model, which is different from the traditional rumor-spreading process in a homogeneous environment [17]. Secondly, we study the spatial nonhomogeneous bifurcation caused by time delay. To some extent, our results have improved the phenomenon of space homogeneous bifurcation [18]. Finally, through numerical simulations, our work describes the propagation process from the perspective of heterogeneous space and finds out the influence of the difference of spatial diffusion ability on information propagation in heterogeneous environment. The numerical results confirm the necessity of the study of spatial propagation.

The paper is organized as follows. In Sect. 2, we establish a spatially heterogeneous model with logistic growth and discuss its uniform persistence and the asymptotic behavior of the equilibrium point. In Sect. 3, we reduce the model to a spatially homogeneous model and study its local and global stability. In addition, we use the maximum principle to study the optimal control of the model. In Sect. 4, considering the effect of time delay in real life, we study the Hopf bifurcation of our model with time delay [38]. In order to verify our conclusions and discuss the effect of the diffusion coefficients, we give some simulation results in Sect. 5. A brief conclusion is given in Sect. 6.

2 Spatially heterogeneous model

In this section, we always assume that \(r(x)>\mu _1(x)\) in \( {\overline{\Omega }}\). For any given nonnegative and continuous initial value \((S_0,I_0)\), system (1.2) has a unique classical solution (S(t, x), I(t, x)) for all \(t>0\) and \(x\in {\overline{\Omega }}\) by the standard theory for parabolic equations. In addition, \((S(t,x),I(t,x))>(0,0)\) for \(t>0\), \(x\in {\overline{\Omega }}\) provided that \(I_0(x)\ge , \not \equiv 0\).

2.1 Uniform persistence

In this subsection, we will study the uniform persistence of the solution for system (1.2). For convenience, we define

where \(f(x)=\mu _1(x), \mu _2(x), \mu _3(x), r(x), K(x), \beta (x), \gamma (x)\; \text{ and }\; \delta (x)\) for \(x\in \Omega \).

Proposition 2.1

There exists a positive constant \({\hat{C}}\) depending on initial data such that the solution (S, I) of (1.2) satisfies

Furthermore, there exists a positive constant \({\tilde{C}}\) independent of initial data such that

for some large time \(T>0\).

Proof

By the first equation of (1.2), it can be calculated directly that

for all \(x \in \Omega , t>0\).

Denote \(M_1=\max \bigg \{\dfrac{ \gamma ^*}{ \beta _*},\dfrac{r^* K^*}{4(\mu _1)_*}\bigg \}\), then the following parabolic problem

has a unique solution w. We apply the comparison principle to conclude that

It is easy to see that \(v(t)=M_1+\max \limits _{x\in {\overline{\Omega }}}e^{-(\mu _1)_*t}\) is the supersolution of (2.3). Thus, we have

Therefore, we obtain

We can find a large \(T_1>0\) such that

Set \(\mu =\min \{(\mu _1)_*,(\mu _2)_*+\delta _*\}\), we define

then

Thus, we have

That is,

In view of (2.4) and the last two equations of system (1.1), we use Theorem 1.1 of [39] (or Lemma 2.1 in [40]) to deduce that there exists a positive constant \(M_2\) depending on the initial data such that

By (2.6), we have

We apply Lemma 2.1 of [40] to conclude that there exists a constant \(M_3>0\) independent of initial data such that

for some \(T_2>T_1\).

Set \({\hat{C}}=\max \{\max \{M_1,\max \limits _{{\overline{\Omega }}}S_0(x)\},M_2\}\) and \({\tilde{C}}=\max \{2M_1,M_3\}\). Due to (2.4) and (2.7), we obtain (2.1). It follows from (2.5) and (2.8) that (2.2) holds. \(\square \)

Define \(R_0\) as follows

where \({\tilde{S}}\) is the unique positive solution of

Lemma 2.1

The following properties of \(R_0\) hold.

(i) \(R_0\) is a monotone decreasing function of \(\mathrm{d}_I\), with \(R_0\rightarrow \max \limits _{x\in {\overline{\Omega }}}\frac{\beta (x){\tilde{S}}(x)}{\delta (x)+\mu _2(x)+\gamma (x)}\) as \(\mathrm{d}_I\rightarrow 0\) and \(R_0\rightarrow \frac{\int _{\Omega }\beta (x){\tilde{S}}(x)\mathrm{d}x}{\int _{\Omega }[\delta (x)+\mu _2(x)+\gamma (x)]\mathrm{d}x}\) as \(\mathrm{d}_I\rightarrow \infty \);

(ii) \(1-R_0\) has the same sign as \(\lambda _1\), where \(\lambda _1\) is the principal eigenvalue of the following eigenvalue problem

$$\begin{aligned} \left\{ \begin{array}{lll} \mathrm{d}_{I}\Delta \Phi +[\beta (x){\tilde{S}}(x)-(\delta (x)+\mu _2(x)+\gamma (x))]\Phi +\lambda \Phi =0,&{}x\in \Omega ,\\ \dfrac{\partial \Phi }{\partial \nu }=0, &{}x\in \partial \Omega . \end{array}\right. \end{aligned}$$(2.10)
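A variational characterization of the following standard type, familiar from reaction–diffusion epidemic models, is consistent with both limits in (i) and with the sign relation in (ii). It is given here as an assumption about the form of \(R_0\), not as a quotation of the definition used in this paper:

$$\begin{aligned} R_0=\sup _{\varphi \in H^1(\Omega ),\ \varphi \ne 0}\frac{\int _{\Omega }\beta (x){\tilde{S}}(x)\varphi ^2\mathrm{d}x}{\int _{\Omega }\big [\mathrm{d}_I|\nabla \varphi |^2+(\delta (x)+\mu _2(x)+\gamma (x))\varphi ^2\big ]\mathrm{d}x}. \end{aligned}$$

With a formula of this form, the test function \(\varphi \equiv 1\) shows that \(R_0\) is bounded below by the ratio of integrals in (i) for every \(\mathrm{d}_I>0\). Increasing \(\mathrm{d}_I\) only enlarges the denominator, so the supremum cannot increase, which is the monotonicity claimed in (i).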

Theorem 2.1

For the given initial data \((S_0,I_0)\), let (S, I) be the unique solution of (1.2). If \(R_0>1\), then system (1.2) is uniformly persistent: There exists a constant \(\epsilon >0\) such that

In particular, this implies that system (1.2) admits at least one positive equilibrium solution.

Proof

Let

and \(\partial \mathbf {X^0_+}:=\mathbf {X_+} \backslash \mathbf {X^0_+}=\{\varsigma \in \mathbf {X_+}:v\equiv 0\}\).

For a given \(\varsigma \in \mathbf {X_+}\), system (1.2) generates a semiflow, denoted by \(\Psi (t)\), and

where \((S(t,x,\varsigma ),I(t,x,\varsigma ))\) is the unique solution to system (1.2) with \((S_0,I_0)=\varsigma \).

Next, we will claim that \(({\tilde{S}},0)\) attracts \(\varsigma =(S_0,0)\in \partial \mathbf {X^0_+}\). As \(I_0\equiv 0\), the unique solution \((S(t,x,\varsigma ),I(t,x,\varsigma ))\) satisfies \(I(t,x,\varsigma )\equiv 0\) for all \(t\ge 0\) and S solves

It can be easily proved that

This proves our claim.

As \(R_0>1\), it follows from Lemma 2.1 that \(\lambda _1<0\). Then, we can conclude that there exists a constant \(0<\epsilon _0<-\lambda _1\) such that

for any \(\varsigma \in \mathbf {X^0_+}\) and \(\varsigma _0=({\tilde{S}},0)\).

Suppose that \(\limsup _{t\rightarrow \infty }||\Psi (t)\varsigma -\varsigma _0||< \epsilon _0\) for some \(\varsigma \in \mathbf {X^0_+}\). For the given \(\epsilon _0\), we can find \(T>0\) such that

for \(t\ge T\) and \(x\in {\overline{\Omega }}\). Then, we have

We apply the comparison principle to deduce that I is an upper solution of the following parabolic problem

Denote by \(\Phi _1\) the eigenfunction corresponding to \(\lambda _1\) which is positive on \({\overline{\Omega }}\). Then, we can find a small positive constant \(\sigma \) such that \(\sigma e^{-(\lambda _1+\epsilon _0)T}\Phi _1(x)\le I(T,x)\) on \({\overline{\Omega }}\). In view of the comparison principle, we obtain that \(\sigma e^{-(\lambda _1+\epsilon _0)t}\Phi _1(x)\) is a lower solution of (2.13). Thus,

This contradicts Proposition 2.1.

Similar to [41], we can apply [42, 61] to find that there is a real number \(\delta _0>0\), which is independent of initial data \((S_0, I_0,R_0)\), satisfying

In other words, there exists a large \(T_3>0\) such that

for all \(\varsigma \in \mathbf {X^0_+}\).

By (2.5) and (2.8), there is a sufficiently large \(T_4>\max \{T_1, T_2, T_3\}\),

for \(t> T_4\), \(x\in {\overline{\Omega }}.\) By the comparison principle, we can get

Let \(\epsilon =\min \{\delta _0,\delta _1\}\). By (2.14) and (2.15), we obtain the assertion (2.11). In addition, this implies that system (1.2) admits at least one positive equilibrium solution E(S(x), I(x)). \(\square \)

Remark 2.1

The uniform persistence of system (1.2) means that rumors persist for a long time. In addition, under the condition of \(S_0(x)>0\) and \(I_0(x)>0\), the rumor continues uniformly for \(t\ge 0\) and \(x\in {\overline{\Omega }}\).

2.2 Asymptotic behavior of E

In this subsection, we will discuss the asymptotic behavior of the positive equilibrium E based on Refs. [25, 43, 44].

Corresponding to (1.2), the equilibrium problem satisfies the following elliptic system:

If (S, I) is a solution of (2.16) with \(S\ge 0\) and \(I\ge , \not \equiv 0\), then we use the strong maximum principle and the Hopf lemma for system (2.16) to conclude that \(S(x)>0\) and \(I(x)>0\) for all \(x\in {\bar{\Omega }}\).

Now, we recall some known facts, see, for instance, [45] and [46].

Lemma 2.2

Assume that \(\omega \in C^2({\overline{\Omega }})\) and \(\partial _\nu \omega =0\) on \(\partial \Omega \), then the following assertions hold.

(1) If \(\omega \) has a local maximum at \(x_1\in {\overline{\Omega }}\), then \(\nabla \omega (x_1)=0\) and \(\Delta \omega (x_1)\le 0\).

(2) If \(\omega \) has a local minimum at \(x_2\in {\overline{\Omega }}\), then \(\nabla \omega (x_2)=0\) and \(\Delta \omega (x_2)\ge 0\).

Lemma 2.3

Let \(\omega \in C^2(\Omega )\cap C^1({\overline{\Omega }})\) be a positive solution to the elliptic equation

where \(c\in C({\overline{\Omega }})\). Then, there exists a positive constant M which depends only on C where \(||c||_{\infty }\le C\) such that

First, we will study the dynamic behavior of system (1.2) as \(\mathrm{d}_S\rightarrow 0\). Let \(\mathrm{d}_S\rightarrow 0\); we apply ([47], Lemma 3.2) to deduce that the unique solution \({\tilde{S}}\) of (2.9) satisfies

then the principal eigenvalue \(\lambda _1\) of problem (2.10) converges to the principal eigenvalue \(\lambda _1^0\) of the following eigenvalue problem

Theorem 2.2

For fixed \(\mathrm{d}_I>0\), assume that \(\lambda _1^0<0\) and let \(\mathrm{d}_S \rightarrow 0\). Then, any positive solution (S, I) of (2.16) satisfies (up to a subsequence of \(\mathrm{d}_S \rightarrow 0\)) \((S,I)\rightarrow (S^0,I^0)\) uniformly on \({\overline{\Omega }}\), where

and \(I^0\) is a positive solution of

Proof

Step 1: There exists a constant \(C>0\), independent of \(0<\mathrm{d}_S\le 1\), such that

Let \(S(x_1)=\max \limits _{x\in {\overline{\Omega }}} S(x)\) for some \(x_1\in {\overline{\Omega }}\). By Lemma 2.2, we have \(\Delta S(x_1)\le 0\). From the first equation of system (2.16), we obtain

It follows that

If \(\beta (x_1)S(x_1)-\gamma (x_1)<0\), we obtain

If \(\beta (x_1)S(x_1)-\gamma (x_1)\ge 0\), we obtain

Therefore, we can get

Define \(W=\mathrm{d}_S S+\mathrm{d}_I I\), then we obtain

Let \(W(x_2)=\max \limits _{x\in {\overline{\Omega }}} W(x)\) for some \(x_2\in {\overline{\Omega }}\). By Lemma 2.2, we get \(\Delta W(x_2)\le 0\). Thus,

for \(\mu =\min \{(\mu _1)_*,(\mu _2)_*+\delta _*\}\). By the further calculation, we can get

Furthermore,

Then,

That is,

Now, we will give the positive lower bound for the component I. In view of (2.20), we use the Harnack inequality (Lemma 2.3)

to obtain

where the constant \(M>0\) independent of \(\mathrm{d}_S\).

Suppose that I has no positive lower bound, we can find a sequence \(\{\mathrm{d}_{S_n}\}\) satisfying \(\mathrm{d}_{S_n}\rightarrow 0\) as \(n\rightarrow \infty \) and the corresponding positive solution sequence \((S_n, I_n)\) of (2.16) with \(\mathrm{d}_S=\mathrm{d}_{S_n}\), such that \(\min _{x\in {\overline{\Omega }}}I_n(x)\rightarrow 0\) as \(n\rightarrow \infty \). In view of (2.22), we deduce that

By an analogous consideration (Lemma 3.2 [47]), we have

On the other hand, let \(\lambda _1^n\) (for \(n\ge 1\)) be the principal eigenvalue of

By (2.21), we have \(\lambda _1^n=0\). Since the sequence \(\{\lambda _1^n\}\) converges to \(\lambda _1^0\), this gives \(\lambda _1^0=0\), which contradicts the assumption \(\lambda _1^0<0\). So we conclude that I has a positive lower bound \(C_3\), which does not depend on \(0<\mathrm{d}_S\le 1\).

Set \(S(x_3)= \min \limits _{x\in {\overline{\Omega }}} S(x)\) for \(x_3\in {\overline{\Omega }}\), then \(\Delta S(x_3)\ge 0\) by Lemma 2.2. We use the first equation of (2.16) to get

that is to say,

It is not difficult to find

In conclusion, we have proved that (2.19) holds.

Step 2: We study the convergence of I as \(\mathrm{d}_S \rightarrow 0\). I is the solution of the following system

In view of (2.19) and the standard \(L^p\) theory ([48]), we obtain that

Then, we apply the Sobolev embedding theorem (see, e.g., Refs. [25, 43, 44]) to obtain

Hence, there exist a subsequence of \(\mathrm{d}_S\rightarrow 0\), denoted by \(\mathrm{d}_n:=\mathrm{d}_{S_n}\) with \(\mathrm{d}_n\rightarrow 0\) as \(n\rightarrow \infty \), and corresponding positive solutions \((S_n,I_n)\) of (2.16) with \(\mathrm{d}_S=\mathrm{d}_{S_n}\) such that

where \(I^0>0\).

Step 3: We will prove the convergence of S. For any \(\epsilon >0\), we use (2.26) to find a constant \(N>0\) such that

for \(n\ge N\).

For fixed \(n\ge N\), we know that \(S_n\) is the supersolution of

and a subsolution of

where

and

By the proof of [25, 43], we can conclude that system (2.27) and system (2.28) have a unique positive solution \(U_n\) and \(V_n\), respectively. Then, we use the proof of Lemma 2.4 (in Ref. [49]) to conclude that

In fact, \(S_n\) is a supersolution for the problem (2.27) and is a subsolution for the problem (2.28), and we have \( U_n\le S_n\le V_n\) on \({\overline{\Omega }}\) for \(n>N\). Thus,

As \(\epsilon >0\) can be chosen arbitrarily small, it is easy to show that

where

This completes the proof. \(\square \)

Theorem 2.3

For fixed \(\mathrm{d}_S>0\), assume that \(\bigg \{x\in {\overline{\Omega }}\big |\beta (x){\tilde{S}}(x)>\delta (x)+\mu _2(x)+\gamma (x)\bigg \}\) is nonempty. Let \(\mathrm{d}_I \rightarrow 0\). Then, any positive solution (S, I) of (2.16) satisfies (up to a subsequence of \(\mathrm{d}_I \rightarrow 0\)) that \(S\rightarrow S^*\) in \(L^1(\Omega )\) with \(||S^*||_{L^1(\Omega )}\) positive, and \(\int _{\Omega }I\mathrm{d}x\rightarrow I^*\), where \(I^*\) is a positive constant.

3 Spatially homogeneous model

In this section, we assume that all the parameters \(\mathrm{d}_S, \mathrm{d}_I, r, K, \delta , \mu _1, \mu _2, \beta , \gamma \) in system (1.2) are positive constants.

3.1 The existence of equilibrium points

Firstly, we study the existence of rumor-eliminating equilibrium point \(E_0\) and rumor-spreading equilibrium point \(E_*\). Through a simple calculation, we can get \(E_0=(S_0, 0)=\bigg (K(1-\dfrac{\mu _1}{r}),0\bigg )\). Apparently, when \(\mu _1<r\), rumor-eliminating equilibrium point \(E_0\) exists.

Next, we use the next generation matrix to calculate the basic reproduction number \(R_0\) [50] and try to find the relationship between \(R_0\) and the existence of rumor-spreading equilibrium point \(E_*\).

Theorem 3.1

If \(R_0>1\), system (1.2) has the rumor-spreading equilibrium point \(E_*=(S_*,I_*)= \bigg (\dfrac{\delta +\mu _2+\gamma }{\beta }, \dfrac{r(\delta +\mu _2+\gamma )^2}{\beta ^2(\delta +\mu _2)K}(R_0-1)\bigg )\).

Proof

Setting the right-hand sides of the equations of system (1.2) equal to zero, we get

According to system (1.2), we can obtain the following two matrices

and

Through a direct calculation, we can get that

and \(E_*=(S_*,I_*)=\bigg (\dfrac{\delta +\mu _2+\gamma }{\beta }, \dfrac{r(\delta +\mu _2+\gamma )^2}{\beta ^2(\delta +\mu _2)K} (R_0-1)\bigg )\). So we can get the conclusion that there exists \(E_*\) when \(R_0>1\). \(\square \)
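The closed forms of \(E_0\) and \(E_*\) can be checked numerically. The sketch below assumes that the reaction terms of the homogeneous system are \(rS(1-S/K)-\beta SI+\gamma I-\mu _1S\) and \(\beta SI-(\gamma +\delta +\mu _2)I\), and that \(R_0=\beta S_0/(\delta +\mu _2+\gamma )\). Both are inferred from the stated equilibria rather than quoted from the paper, and the names `Params`, `reaction` and `equilibria` as well as the parameter values are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Params:
    r: float      # internal growth rate
    K: float      # maximum capacity of the network environment
    beta: float   # contact (infection) rate
    gamma: float  # forgetting rate
    delta: float  # rate of identifying the rumor as false
    mu1: float    # exit rate of rumor-susceptibles
    mu2: float    # exit rate of rumor-infectors


def reaction(p, S, I):
    """Assumed reaction terms of the (S, I) subsystem without diffusion."""
    dS = p.r * S * (1 - S / p.K) - p.beta * S * I + p.gamma * I - p.mu1 * S
    dI = p.beta * S * I - (p.gamma + p.delta + p.mu2) * I
    return dS, dI


def equilibria(p):
    """Return (R0, E0, E_star) using the formulas of Sect. 3.1."""
    c = p.delta + p.mu2 + p.gamma
    S0 = p.K * (1 - p.mu1 / p.r)
    R0 = p.beta * S0 / c          # assumed form of the basic reproduction number
    S_star = c / p.beta
    I_star = p.r * c**2 / (p.beta**2 * (p.delta + p.mu2) * p.K) * (R0 - 1)
    return R0, (S0, 0.0), (S_star, I_star)


# Illustrative parameter values (not taken from the paper).
p = Params(r=1.0, K=10.0, beta=0.5, gamma=0.1, delta=0.2, mu1=0.2, mu2=0.1)
R0, E0, E_star = equilibria(p)
print("R0 =", R0)
print("residual at E0:", reaction(p, *E0))
print("residual at E*:", reaction(p, *E_star))
```

Both residuals should vanish up to rounding whenever \(R_0>1\), which gives a quick sanity check on the expression for \(I_*\) in Theorem 3.1 under the assumed reaction terms.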

Since the stability of the equilibrium point is directly related to the spread and control of rumors, we first discuss the local stability and global stability of the equilibrium points \(E_0\) and \(E_*\) through the linearization technique, Hurwitz theorem and Lyapunov function.

3.2 The local and global stability analysis of \(E_0\) and \(E_*\)

In this section, we will analyze the local stability and global stability of \(E_0\) and \(E_*\).

Theorem 3.2

If \(0<R_0<1\) holds, the rumor-eliminating equilibrium point \(E_0\) is locally asymptotically stable.

Proof

The Jacobian matrix of system (1.2) at \(E_0\) is

and the characteristic equation becomes

Clearly,

Assume that \(0<R_0<1\); then \(\lambda _1<0\) and \(\lambda _2<0\). Therefore, \(E_0\) is locally asymptotically stable. \(\square \)
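The sign argument can be made explicit mode by mode. The following is a sketch under two assumptions that are not quoted from the paper: the reaction terms are \(rS(1-S/K)-\beta SI+\gamma I-\mu _1S\) and \(\beta SI-(\delta +\mu _2+\gamma )I\) with \(R_0=\beta S_0/(\delta +\mu _2+\gamma )\), and \(0=\kappa _0<\kappa _1\le \kappa _2\le \cdots \) denotes the eigenvalues of \(-\Delta \) on \(\Omega \) with the Neumann boundary condition (a notation introduced only for this illustration). Using \(r-\mu _1=rS_0/K\), the linearization at \(E_0\) restricted to the k-th mode is

$$\begin{aligned} J_k(E_0)=\left( \begin{array}{cc} -\mathrm{d}_S\kappa _k-(r-\mu _1) &{} \gamma -\beta S_0\\ 0 &{} -\mathrm{d}_I\kappa _k+(\delta +\mu _2+\gamma )(R_0-1) \end{array}\right) . \end{aligned}$$

The matrix is upper triangular, so its eigenvalues are the diagonal entries. The first is negative because \(r>\mu _1\), and the second is negative for every \(k\ge 0\) exactly when \(R_0<1\), since the least favorable mode is \(\kappa _0=0\).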

Theorem 3.3

If \(2>R_0>1\) holds, the rumor-spreading equilibrium point \(E_*\) is locally asymptotically stable.

Proof

The Jacobian matrix of system (1.2) at \(E_*\) is

and the characteristic equation is

Assume \(\lambda _1\) and \(\lambda _2\) are the roots of the characteristic equation, then

Thus, if \(2>R_0>1\), we obtain \(\lambda _1+\lambda _2<0\) and \(\lambda _1\lambda _2>0\) for all \(k=0,1,2...\), and hence \(E_*\) is locally asymptotically stable. \(\square \)
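One way to see where the upper bound \(R_0<2\) enters is the following sketch. It assumes the reaction terms \(rS(1-S/K)-\beta SI+\gamma I-\mu _1S\) and \(\beta SI-(\delta +\mu _2+\gamma )I\), the relation \(S_0=R_0S_*\), and the notation \(0=\kappa _0<\kappa _1\le \cdots \) for the Neumann eigenvalues of \(-\Delta \); none of these is quoted from the paper. With \(\beta S_*=\delta +\mu _2+\gamma \) and \(r-\mu _1=rS_0/K\), the entries of the Jacobian at \(E_*\) are

$$\begin{aligned} a_{11}=\dfrac{rS_*}{K}(R_0-2)-\beta I_*,\quad a_{12}=-(\delta +\mu _2),\quad a_{21}=\beta I_*,\quad a_{22}=0, \end{aligned}$$

so that for the k-th mode

$$\begin{aligned} \lambda _1+\lambda _2=a_{11}-(\mathrm{d}_S+\mathrm{d}_I)\kappa _k,\qquad \lambda _1\lambda _2=\mathrm{d}_I\kappa _k(\mathrm{d}_S\kappa _k-a_{11})+(\delta +\mu _2)\beta I_*. \end{aligned}$$

If \(1<R_0<2\), then \(I_*>0\) and \(a_{11}<0\), hence the sum is negative and the product is positive for every \(k\ge 0\). Under these assumptions, \(R_0<2\) is a convenient sufficient condition for \(a_{11}<0\) rather than a sharp threshold.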

Theorem 3.4

If \(0<R_0<1\), then the rumor-eliminating equilibrium point \(E_0\) is globally asymptotically stable under the condition of \(\mathrm{d}_S=\mathrm{d}_I\).

Proof

Define the Lyapunov function

where

Then,

Firstly, according to \(\mu _1=r(1-\dfrac{S_0}{K})\), we can get

and

Therefore, we obtain

According to the condition of \(\mathrm{d}_S=\mathrm{d}_I\) and the main method of definite integral calculation, we obtain

where \( \mathrm{d}_{SI}=\mathrm{d}_S=\mathrm{d}_I.\)

In other words, if \(0<R_0<1\), then \(\dfrac{\partial {\mathbb {V}}}{\partial t}\le 0\). Further, \(\dfrac{\partial {\mathbb {V}}}{\partial t}=0\) if and only if \(S=S_0\) and \(I=0\). According to the LaSalle invariance principle, the rumor-eliminating equilibrium point \(E_0\) is globally asymptotically stable. \(\square \)
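The statement can be illustrated with a small simulation. The sketch below is not the numerical scheme of the paper: it assumes the reaction terms \(rS(1-S/K)-\beta SI+\gamma I-\mu _1S\) and \(\beta SI-(\gamma +\delta +\mu _2)I\), a one-dimensional domain \([0,1]\), equal diffusion rates, and illustrative parameter values chosen so that \(R_0=\beta S_0/(\delta +\mu _2+\gamma )=0.8<1\). It uses explicit finite differences with reflecting ghost points for the Neumann condition; the function name `simulate` and the initial data are hypothetical.

```python
import math


def simulate(p, d, length=1.0, n=21, dt=0.01, t_end=100.0):
    """Explicit finite differences for the assumed (S, I) system on [0, length]."""
    dx = length / (n - 1)
    # Stability restriction of the explicit scheme for the diffusion part.
    assert d * dt / dx**2 <= 0.5, "explicit scheme needs d*dt/dx^2 <= 1/2"
    # Arbitrary positive initial data, not equal to the equilibrium.
    S = [5.0 + math.cos(2.0 * math.pi * i * dx / length) for i in range(n)]
    I = [0.5 * (1.0 + math.cos(math.pi * i * dx / length)) for i in range(n)]
    c = p["gamma"] + p["delta"] + p["mu2"]

    def laplacian(u, i):
        # Reflecting ghost points enforce the zero-flux (Neumann) condition.
        left = u[i - 1] if i > 0 else u[1]
        right = u[i + 1] if i < n - 1 else u[n - 2]
        return (left - 2.0 * u[i] + right) / dx**2

    for _ in range(int(round(t_end / dt))):
        new_S, new_I = [], []
        for i in range(n):
            s, inf = S[i], I[i]
            f_S = (p["r"] * s * (1 - s / p["K"]) - p["beta"] * s * inf
                   + p["gamma"] * inf - p["mu1"] * s)
            f_I = p["beta"] * s * inf - c * inf
            new_S.append(s + dt * (d * laplacian(S, i) + f_S))
            new_I.append(inf + dt * (d * laplacian(I, i) + f_I))
        S, I = new_S, new_I
    return S, I


# Illustrative values: S_0 = K(1 - mu1/r) = 8 and R_0 = 0.04 * 8 / 0.4 = 0.8.
params = {"r": 1.0, "K": 10.0, "beta": 0.04, "gamma": 0.1,
          "delta": 0.2, "mu1": 0.2, "mu2": 0.1}
S, I = simulate(params, d=0.1)
print("max I:", max(I), " S range:", min(S), max(S))
```

With these values the computed I decays toward zero and S settles near \(S_0=8\) across the whole interval, which is the behavior Theorem 3.4 predicts for \(\mathrm{d}_S=\mathrm{d}_I\) and \(R_0<1\).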

3.3 Optimal control

In the following subsection, we will discuss the optimal control problem for the spatial homogeneous system (1.1) based on Refs. [51,52,53,54,55,56,57,58].

Firstly, we introduce a control variable u(t, x), which represents the rumor clarification rate at time t and location x. Therefore, system (1.1) can be rewritten as follows:

with the homogeneous Neumann boundary conditions

and the corresponding initial conditions

Record the admissible control set as

Our aim is to maximize the density of R and minimize the density of I on \(\Omega \) through the minimal control cost. In other words, we want to minimize the following objective function

Take the Hilbert space \(H=L^2(\Omega )^3\) as the working space in this part and let \(y=(S,I,R)\) denote the solution of system (3.11) with the initial value \(y^0=(S^0,I^0,R^0)\). Further, define the linear operator \(A:D(A)\subset H\rightarrow H\), \(Ay=(\mathrm{d}_S\Delta S, \mathrm{d}_I\Delta I, \mathrm{d}_R\Delta R)\) where \(y\in D(A)=\bigg \{y=(S,I,R)\in (H^2(\Omega ))^3,\dfrac{\partial S}{\partial \nu } =\dfrac{\partial I}{\partial \nu }=\dfrac{\partial R}{\partial \nu }=0\bigg \}\). Then, system (3.11) can be rewritten as

where

For some large enough positive integer N, denote

It is easy to prove that \(f^N\) is Lipschitz continuous in y uniformly with respect to \(t\in [0,T].\) Thus, the existence of the solution for system (3.11) can be obtained in the following lemma based on Refs. [53, 54, 56].

Lemma 3.1

Suppose that \(y_0\) satisfies condition

Then, system (3.11) admits a unique strong positive solution \(y=(S, I, R)\in W^{1,2}(0,T;H)\) satisfying \(S(t,x),\;I(t,x)\) and \( R(t,x)\in L^2(0,T;H^2(\Omega ))\cap L^{\infty }(0,T;H^1(\Omega ))\cap L^{\infty }(Q).\) Moreover, there exists a constant \(cc>0\) independent of u and y satisfying

and

Theorem 3.5

If \(Q_1\) holds, then there exists an optimal state \(y_\star =(S_\star , I_\star , R_\star )\) for system (3.11) with the corresponding optimal control \(u^*\).

Proof

The proof follows a standard method, so we only sketch it [54,55,56]. It is not difficult to find sequences \(u_n\in {\mathcal {U}}\) and \(y_n=(S_n, I_n, R_n)\), where \(y_n\) is the solution of the following system

with the homogeneous Neumann boundary condition

and the initial conditions

In addition,

By the Arzelà–Ascoli theorem [59], there exists a subsequence (still denoted by \(S_n, I_n, R_n\)) such that \(S_n(t)\rightarrow S_\star , I_n(t)\rightarrow I_\star , R_n(t)\rightarrow R_\star \) as \(n\rightarrow \infty .\) Further, by the estimates for \(S_n\), \(I_n\), \(R_n\) according to Lemma 3.1, and \(u_n\in {\mathcal {U}}\), we obtain that

and

A further calculation gives

Thus, according to the above conclusion, we have

and

Letting \(n \rightarrow \infty \), we conclude that \((u^*, S_\star , I_\star , R_\star )\) is an optimal solution of system (3.11). \(\square \)

Next, we will apply the standardized method to look for the first order necessary condition of the optimal control (3.11). Define \(z_1^{\varepsilon }=(S^{\varepsilon }-S_\star )/{\varepsilon }\), \(z_2^{\varepsilon }=(I^{\varepsilon }-I_\star )/{\varepsilon }\), \(z_3^{\varepsilon }=(R^{\varepsilon }-R_\star )/{\varepsilon }\), where \((S_\star ,I_\star ,R_\star )\) is the optimal state with the optimal control \(u^*\), and \((S^{\varepsilon }, I^{\varepsilon }, R^{\varepsilon })\) is the solution for system (3.11) with \(u^{\varepsilon }=u^*+\varepsilon u_0 \in {\mathcal {U}}\), \(u_0\in L^2(0, T)\) and \(\varepsilon >0\). Then, system (3.11) can be rewritten as

a.e on Q, with the homogeneous Neumann boundary condition

and the initial condition

Similar to Theorem 3.5, it is easy to prove that \(\lim \limits _{\varepsilon \rightarrow 0}S^{\varepsilon }=S_\star \), \(\lim \limits _{\varepsilon \rightarrow 0}I^{\varepsilon }=I_\star \), \(\lim \limits _{\varepsilon \rightarrow 0}R^{\varepsilon }=R_\star \) and \(\lim \limits _{\varepsilon \rightarrow 0}z_i^{\varepsilon }=z_i\), \((i=1,2,3)\) in \(L^2(Q)\), where \(z=(z_1, z_2, z_3)\) satisfies

a.e on Q, with the homogeneous Neumann boundary condition

and the initial condition

In the following, we will establish the adjoint system corresponding to system (3.11). Let \(p=(p_1, p_2, p_3)\) be the adjoint variable, the adjoint system can be written as

a.e on Q, with the homogeneous Neumann boundary condition

and the initial condition

where \((S_\star ,I_\star ,R_\star )\) is the optimal state with the optimal control \(u^*\). Similar to Theorem 3.5, it is not difficult to obtain the existence of a strong solution for system (3.23).

Theorem 3.6

Suppose that \((S_\star , I_\star , R_\star , u^*)\) is an optimal solution of problem (3.11)–(3.12), then the optimal control function \(u^*\) can be written as

where \(p_2\) and \(p_3\) satisfy system (3.23).

Proof

According to \(\Phi (I_\star , R_\star , u^*)\) \(\le \) \(\Phi (I^\varepsilon , R^\varepsilon , u^\varepsilon )\), we have

where \(\varepsilon \rightarrow 0\). Multiplying both sides of the equations in (3.23) and (3.22) by \(z_1, z_2, z_3, p_1, p_2, p_3\), respectively, and through a simple calculation, we obtain

By integrating both sides of Eq. (3.27) at the same time and combining with Green’s formula, a straightforward calculation can be made that

Thus,

Let \(u_0={\tilde{u}}-u^*\) with arbitrary \({\tilde{u}}\in {\mathcal {U}}\), then

Clearly, when \(u^*\) is chosen in the form (3.26), the above inequality is satisfied. This completes the proof. \(\square \)

4 Spatially homogeneous model with time delay

In Sect. 1, we have mentioned that in order to better reflect the reality, it is necessary to add time delay to the model [38]. Considering that the growth of rumor–susceptible is delayed due to the influence of network environment, we establish the following spatially homogeneous model with time delay \(\tau \)

Similarly, system (4.1) can be simplified as follows

In the following, we will study the stability and Hopf bifurcation of the rumor-spreading equilibrium point \(E_*\) for system (4.2). Denote \(U(t)=(u_1(t), u_2(t))^{\top }=(S(t,\cdot ), I(t,\cdot ))^{\top },\) and we can rewrite system (4.2) as the following abstract differential equation in the phase space \({\mathcal {C}}=C([-\tau ,0],X)\)

where \({\mathbf {D}}=diag\{\mathrm{d}_S, \mathrm{d}_I\}\), \(U_t(\theta )=U(t+\theta )\), \(-\tau \le \theta \le 0\), \({\mathbf {L}}:{\mathcal {C}}\rightarrow X\), and \({\mathbf {G}}:{\mathcal {C}}\rightarrow X\) are given, respectively, by

and

for \(\psi (\theta )=U_t(\theta )\), \(\psi =(\psi _1, \psi _2)^{\top }\). The characteristic equation for the linearized system of (4.3) at (0, 0) is

where \(y\in dom(\Delta )\) and \(y\ne 0\), \(dom(\Delta )\subset X\).

The operator \(\Delta \) on X has the eigenvalues \(-\dfrac{k^2\pi ^2}{L^2}\), \(k=0,1,2,\ldots \), and the corresponding eigenfunctions are

where \(\zeta _k=\cos \bigg (\dfrac{k\pi }{L}x\bigg ).\) Obviously, \((\alpha _k^1, \alpha _k^2)_0^{\infty }\) forms a basis of the phase space X. Thus, any element y in X can be expanded as follows

Then, we have

Thus, Eq. (4.6) can be written as

where

Therefore, the eigenvalue equation is

Theorem 3.3 covers the case \(\tau =0\), so next we only discuss the case \(\tau >0\).

Theorem 4.1

Assume that \((H_{1})\;\dfrac{\mathrm{d}_S}{\mathrm{d}_I}>\dfrac{(\beta I_*+\mu _1-r)^2}{4\beta I_*(\delta +\mu _2)}\) holds. Then the following conclusions hold for \(\tau >0\).

(1) If \(\Delta _0=[2C_kD_k+(A_k^2-B_k^2)+\mathrm{d}_I^2\dfrac{k^4\pi ^4}{L^4}]^2-4[(A_k(E_k+F_k)+C_kD_k)^2-B_k^2(E_k+F_k)^2]<0\) holds for all \(k=0,1,2,\ldots \), then the rumor-spreading equilibrium point \(E_*\) is locally asymptotically stable for all \(\tau >0\).

(2) If \(\Delta _0>0\) and \((H_{2})\;2C_0D_0+(A_0^2-B_0^2)<0\) hold, then the rumor-spreading equilibrium point \(E_*\) is locally asymptotically stable when \(\tau \in (0,\tau ^*)\) and unstable when \(\tau \in (\tau ^*,\tau ^*+\varepsilon )\) for some \(\varepsilon >0\). That is to say, system (4.2) undergoes a Hopf bifurcation at the rumor-spreading equilibrium point \(E_*\) when \(\tau =\tau ^{*} \) for \(k\in {\mathbb {T}}\), where \({\mathbb {T}}\) is defined below.

Proof

Let \(\lambda =i\omega \) be a solution of Eq. (4.11). Separating the real and imaginary parts, we obtain

which leads to

Letting \(z=\omega ^2\), we obtain

Denote

By a direct calculation, we obtain

and

Since \(A_k<0, B_k<0, C_k<0\) and \(D_k>0\), we obtain \(A_k\mathrm{d}_I\dfrac{k^2\pi ^2}{L^2}+C_kD_k+B_k\mathrm{d}_I\dfrac{k^2\pi ^2}{L^2}<0\). Therefore, if \(A_k\mathrm{d}_I\dfrac{k^2\pi ^2}{L^2}+C_kD_k-B_k\mathrm{d}_I\dfrac{k^2\pi ^2}{L^2}<0\), which is equivalent to

we can get \(A_k^2(E_k+F_k)^2+C_k^2D_k^2-2A_kC_kD_k(E_k+F_k)-B_k^2(E_k+F_k)^2>0\). When \(k \rightarrow \infty \),

Thus, if \((H_{1})\) and \((H_{2})\;2C_0D_0+(A_0^2-B_0^2)<0\) hold, there exists a constant \(k_0\in \{0,1,2,\ldots \}\) such that \(2C_kD_k+(A_k^2-B_k^2)+\mathrm{d}_I^2\dfrac{k^4\pi ^4}{L^4}\le 0\) for \(0\le k\le k_0\) and \(2C_kD_k+(A_k^2-B_k^2)+\mathrm{d}_I^2\dfrac{k^4\pi ^4}{L^4}>0\) for \(k>k_0\). In the case of \(0\le k\le k_0\), if

then Eq. (4.14) has no positive root. If \(\Delta _0\ge 0,\) the roots of Eq. (4.14) are

which leads to roots of (4.13)

According to (4.12), we obtain

[Figure caption: The asymptotic behavior of the solution of system (1.2) when \(\overline{R_0}>1\)]

Let

and

where

Differentiating the eigenvalue equation with respect to \(\tau \), we can get

Equation (4.23) implies that \(\mathrm{Re}\bigg (\dfrac{\mathrm{d}\lambda }{\mathrm{d}\tau }\bigg )^{-1}\bigg |_{\lambda =i\omega _{k}^{+}}>0\) when \(\Delta _0>0\). Then, we can come to a conclusion that system (4.2) undergoes a Hopf bifurcation at the rumor-spreading equilibrium point \(E_*\) when \(\tau =\tau ^*=\min \{\tau _{k0}^{+}\}\). In other words, for some small enough \(\varepsilon >0\), \(E_*\) is locally asymptotically stable when \(\tau \in [0,\tau ^*)\) and unstable when \(\tau \in (\tau ^*, \tau ^*+\varepsilon )\). \(\square \)
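The root-counting step of the proof can be sketched numerically. The sketch below assumes that Eq. (4.14) is the quadratic \(z^2+pz+q=0\) whose discriminant is the \(\Delta _0\) of Theorem 4.1, that is, \(p=2C_kD_k+(A_k^2-B_k^2)+\mathrm{d}_I^2\dfrac{k^4\pi ^4}{L^4}\) and \(q=(A_k(E_k+F_k)+C_kD_k)^2-B_k^2(E_k+F_k)^2\). This form is inferred from the expression of \(\Delta _0\) and is not restated here, and the function names are illustrative only.

```python
import math

def positive_roots(p, q):
    """Positive real roots z of z**2 + p*z + q = 0, in ascending order."""
    disc = p * p - 4.0 * q          # plays the role of Delta_0 (assumed form)
    if disc < 0:
        return []                   # no real root, hence no purely imaginary eigenvalue
    s = math.sqrt(disc)
    roots = sorted({(-p - s) / 2.0, (-p + s) / 2.0})
    return [z for z in roots if z > 0]

def critical_frequencies(p, q):
    """Candidate frequencies omega = sqrt(z) for lambda = i*omega."""
    return [math.sqrt(z) for z in positive_roots(p, q)]

# Values reported for k = 0 in Sect. 5.5: p0 = 2*C0*D0 + (A0**2 - B0**2) and Delta_0.
p0 = -0.0189
delta0 = 2.315e-4
q0 = (p0 * p0 - delta0) / 4.0       # recovered from Delta_0 = p0**2 - 4*q0 (assumption)
omegas = critical_frequencies(p0, q0)
print(omegas)
```

With \(p<0\), \(q>0\) and a positive discriminant the quadratic has two positive roots, which is the situation of case (2); a negative discriminant leaves no candidate frequency, which is the situation of case (1).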

5 Numerical simulation

5.1 Asymptotic behavior of \(E^{*}\)

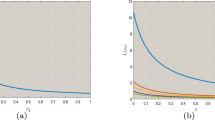

In the heterogeneous environment, we let parameters \(r=0.3, K=3.5, \mu _1=0.1, \mu _2=0.1, \delta =0.2, \gamma =0.3\) and \(\beta =0.5+0.05\sin x\). According to Ref. [60], we can estimate the basic reproduction number \(\overline{R_0}\) and get \(\overline{R_0}\ge \frac{\min \limits _{x\in {\overline{\Omega }}}\beta (x) K (r-\mu _1)}{r(\delta +\mu _2+\gamma )}=1.75>1\). In order to satisfy the condition of \(\mathrm{d}_S\rightarrow 0, \mathrm{d}_I>0\), we take diffusion coefficients as \(\mathrm{d}_S=0.0001\) and \(\mathrm{d}_I=0.1\). Figure 1 shows the change of rumor–susceptible and rumor–infector over time and their projections on the \(t-x\) plane. It indicates that, as time goes on, the amplitude of variation of the solution curves gradually decreases. That is to say, system (1.2) eventually tends to a stable state. Biologically speaking, it means that for any given location in the habitat, the number of rumor–susceptible and rumor–infector eventually converges to a positive constant.
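The lower bound quoted above can be reproduced with a few lines of Python. This is only a sketch: the function name `r0_lower_bound` is illustrative, and the minimum of \(\beta (x)=0.5+0.05\sin x\) is taken as 0.45, which assumes that \(\sin x\) attains \(-1\) on \({\overline{\Omega }}\) (otherwise 0.45 still bounds \(\min \beta \) from below, so the estimate remains valid).

```python
def r0_lower_bound(beta_min, K, r, mu1, mu2, delta, gamma):
    """Lower bound beta_min*K*(r - mu1) / (r*(delta + mu2 + gamma)) from Sect. 5.1."""
    return beta_min * K * (r - mu1) / (r * (delta + mu2 + gamma))

# Parameters of Sect. 5.1; beta_min = 0.5 - 0.05 is an assumption (sin x = -1).
bound = r0_lower_bound(beta_min=0.45, K=3.5, r=0.3,
                       mu1=0.1, mu2=0.1, delta=0.2, gamma=0.3)
print(round(bound, 2))  # 1.75
```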

5.2 The effect of diffusion coefficients

In order to explain the influence of diffusion coefficients, we set the parameters \(r=0.3, K=3.5, \mu _1=0.1, \mu _2=0.1, \delta =0.2, \gamma =0.1, \beta =0.5+0.05\sin x\) and take different diffusion coefficients \(\mathrm{d}_S=\mathrm{d}_I=0.0001, 1, 5\), respectively. It can be seen from Fig. 2 that the fluctuation of S(t, x) and I(t, x) decreases gradually with the increase in diffusion coefficients in the current parameter environment. This means that the gap between the number of rumor–susceptible and rumor–infector in different locations will gradually narrow with the increase in diffusion coefficient.

5.3 Global stability

When all the parameters \(\mathrm{d}_S, \mathrm{d}_I, \mathrm{d}_R, r, K, \beta , \gamma , \delta , \mu _1, \mu _2\) in system (1.1) are positive constants, we let \(r=0.7, K=3, \beta =0.4, \gamma =0.6, \delta =0.2, \mu _1=0.3, \mu _2=0.3\) and \(\mathrm{d}_S=\mathrm{d}_I=\mathrm{d}_R=0.01\), and obtain \(R_0=0.6234<1\) and \(E_0=(1.7143, 0, 0)\), which means that the parameters satisfy the conditions of Theorem 3.4. By choosing the initial value (2.1, 0.4, 0.5), we obtain Fig. 3a–c, which depicts the trend of S, I, R over time and space. Obviously, system (1.1) is stable at the rumor-eliminating equilibrium point \(E_0\), which indicates that the number of rumor–susceptible tends to a positive constant and the number of rumor–infector tends to zero. Changing the initial value by perturbation, we obtain Fig. 3d. It can be seen that, for different initial data, the solution still converges to the rumor-eliminating equilibrium point \(E_0\), which is consistent with the conclusion of Theorem 3.4.
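The reported values of \(R_0\) and \(E_0\) can be checked with a short computation. The sketch below assumes that, for constant coefficients, \(R_0=\dfrac{\beta K(r-\mu _1)}{r(\delta +\mu _2+\gamma )}\) (the constant-coefficient analogue of the bound used in Sect. 5.1) and that the first component of \(E_0\) is \(K(r-\mu _1)/r\). Neither closed form is restated in this section, so both are assumptions, although they reproduce the numbers given above. The function names are illustrative.

```python
def basic_reproduction_number(beta, K, r, mu1, mu2, delta, gamma):
    """Assumed closed form of R0 for spatially constant coefficients."""
    return beta * K * (r - mu1) / (r * (delta + mu2 + gamma))

def rumor_free_susceptible(K, r, mu1):
    """Assumed first component of E0: positive root of r*S*(1 - S/K) = mu1*S."""
    return K * (r - mu1) / r

# Parameters of Sect. 5.3.
params = dict(beta=0.4, K=3.0, r=0.7, mu1=0.3, mu2=0.3, delta=0.2, gamma=0.6)
R0 = basic_reproduction_number(**params)
S0 = rumor_free_susceptible(params["K"], params["r"], params["mu1"])
print(f"R0 = {R0:.4f}, E0 = ({S0:.4f}, 0, 0)")  # R0 = 0.6234, E0 = (1.7143, 0, 0)
```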

5.4 Optimal control

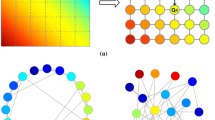

The parameters are given by \(r=0.4, L=0.9, \beta =0.2, \mu _1=0.2, \mu _2=0.2, \gamma =0.3, \delta =0.3\) and \(\mathrm{d}_S=0.003, \mathrm{d}_I=0.002, \mathrm{d}_R=0.002\) in system (3.11). The corresponding initial conditions are

The variation curves of solutions (S, I, R) and \((p_1, p_2, p_3)\) are calculated over the entire domain \(Q=[0,1]\times [0,1]\), as shown in Figs. 4, 5 and 6. It is obvious from Fig. 7 that the optimal control \(u^*\) of the interaction process is of bang-bang form.

5.5 Local stability and Hopf bifurcation

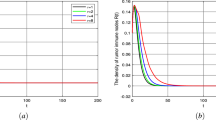

Considering the parameters \(r=0.2, K=5, \beta =0.4, \mu _1=0.1, \mu _2=0.1, \gamma =0.8, \delta =0.04\) in system (4.2), we have \(R_0=1.0638>1\) and the rumor-spreading equilibrium point \(E_*=(2.35, 0.1007)\). In addition, we can calculate that \(\frac{(\beta I_*+\mu _1-r)^2}{4\beta I_*(\delta +\mu _2)}=0.7295\), \(2C_0D_0+(A_0^2-B_0^2)=-0.0189<0\), \(\Delta _0=2.315\times 10^{-4}>0\) and \(\tau ^*=14.8769\). Therefore, we choose the diffusion coefficients \(\mathrm{d}_S=0.01\) and \(\mathrm{d}_I=0.01\), which meet the condition \(\frac{\mathrm{d}_S}{\mathrm{d}_I}=1>0.7295\). According to Theorem 4.1(2), system (4.2) undergoes a Hopf bifurcation at the rumor-spreading equilibrium point \(E_*\) as \(\tau \) crosses \(\tau ^*\). As shown in Figs. 8 and 9, the rumor-spreading equilibrium point \(E_*\) is locally asymptotically stable when \(\tau =14<\tau ^*\) and unstable when \(\tau =16>\tau ^*\). In other words, \(\tau ^*\) is the threshold that determines whether \(E_*\) is stable, which is consistent with our conclusion.
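As a quick consistency check of the numbers in this subsection, the following sketch recomputes \(R_0\) and the first component of \(E_*\), and verifies that the chosen delays bracket \(\tau ^*\). It assumes \(R_0=\dfrac{\beta K(r-\mu _1)}{r(\delta +\mu _2+\gamma )}\) and \(S_*=\dfrac{\delta +\mu _2+\gamma }{\beta }\); these closed forms are not restated in this section and are treated as assumptions that happen to reproduce the reported values. The quantities 0.7295 and \(\tau ^*=14.8769\) are taken from the text, not recomputed.

```python
# Parameters of Sect. 5.5.
beta, K, r = 0.4, 5.0, 0.2
mu1, mu2, delta, gamma = 0.1, 0.1, 0.04, 0.8
d_S, d_I = 0.01, 0.01

removal = delta + mu2 + gamma                 # total outflow rate from the infector class
R0 = beta * K * (r - mu1) / (r * removal)     # assumed closed form of R0
S_star = removal / beta                       # assumed first component of E_*

h1_threshold = 0.7295                         # reported value of the right-hand side of (H1)
tau_star = 14.8769                            # reported critical delay
h1_holds = d_S / d_I > h1_threshold

print(f"R0 = {R0:.4f}, S* = {S_star:.2f}, (H1) holds: {h1_holds}")
for tau in (14, 16):
    regime = "stable" if tau < tau_star else "unstable"
    print(f"tau = {tau}: E* expected to be {regime}")
```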

6 Conclusions

In this work, we have established a new SIR rumor propagation model with logistic growth and spatial diffusion in heterogeneous environment. The discussion about the uniform persistence of the system and the asymptotic behavior of \(E^*\) when \(\mathrm{d}_S\rightarrow 0\) is given. In addition, we also find by numerical simulations that when \(\mathrm{d}_S=\mathrm{d}_I\), the fluctuation of S(t, x) and I(t, x) decreases with the increase in diffusion coefficients. Then, in order to further study the dynamic behavior of the system, we make the parameters of each location x the same and discuss the existence of equilibrium point, local stability and global stability. We find that the rumor-eliminating equilibrium point \(E_0\) exists when \(\mu _1<r\) and the rumor-spreading equilibrium point \(E_*\) exists when \(R_0>1\). Further, we obtain the conclusion that when \(0<R_0<1\), \(E_0\) is globally stable. In order to make our work more practical, we have analyzed the optimal control problem for the rumor propagation model. In particular, we find through numerical simulation that the optimal control is of bang-bang form. Finally, in order to make our model more realistic, we consider adding time delay to the model and study the stability and Hopf bifurcation of the delay model. Further, we verify our conclusion by several numerical simulations. It is undeniable that there are still many imperfections in our work. We will continue to conduct in-depth research and strive for more valuable results.

References

Daley, D.J., Kendall, D.G.: Stochastic rumours. IMA J. Appl. Math. 1, 42–55 (1965)

Maki, D.P., Thompson, M.: Mathematical Models and Applications. Prentice-Hall, Englewood Cliffs (1973)

Zhu, L.H., Liu, W.S., Zhang, Z.D.: Delay differential equations modeling of rumor propagation in both homogeneous and heterogeneous networks with a forced silence function. Appl. Math. Comput. 370, 124925 (2020)

Qian, Z., Tang, S.T., Zhang, X., Zheng, Z.M.: The independent spreaders involved SIR Rumor model in complex networks. Phys. A 429, 95–102 (2015)

Wang, J.L., Jiang, H.J., Ma, T.L., Hu, C.: Global dynamics of the multi-lingual SIR rumor spreading model with cross-transmitted mechanism. Chaos Solitons Fractals 126, 148–157 (2019)

Zhao, L.J., Wang, J.J., Chen, Y.C., Wang, Q., Cheng, J.J., Cui, H.X.: SIHR rumor spreading model in social networks. Phys. A 391, 2444–2453 (2012)

Zhao, Z.J., Liu, Y.M., Wang, K.X.: An analysis of rumor propagation based on propagation force. Phys. A 443, 263–271 (2016)

Tian, Y., Ding, X.J.: Rumor spreading model with considering debunking behavior in emergencies. Appl. Math. Comput. 363, 124599 (2019)

Li, D.D., Du, J.G., Sun, M., Han, D.: How conformity psychology and benefits affect individuals’ green behaviours from the perspective of a complex network. J. Cleaner Prod. 248, 119215 (2020)

Yao, Y., Xiao, X., Zhang, C.P., Dou, C.S., Xia, S.T.: Stability analysis of an SDILR model based on rumor recurrence on social media. Phys. A 535, 122236 (2019)

Gao, X.Y., Tian, L.X., Li, W.Y.: Coupling interaction impairs knowledge and green behavior diffusion in complex networks. J. Clean. Prod. 249, 119419 (2020)

Zhu, L.H., Guan, G.: Dynamical analysis of a rumor spreading model with self-discrimination and time delay in complex network. Phys. A 533, 121953 (2019)

Liu, Q.M., Li, T., Sun, M.C.: The analysis of an SEIR rumor propagation model on heterogeneous network. Phys. A 469, 372–380 (2017)

Li, W.Y., Tian, L.X., Gao, X.Y., Pan, B.R.: Impacts of information diffusion on green behavior spreading in multiplex networks. J. Clean. Prod. 222, 488–498 (2019)

Zhu, L., Wang, Y.G.: Rumor diffusion model with spatio-temporal diffusion and uncertainty of behavior decision in complex social networks. Phys. A 502, 29–39 (2018)

Guo, S.J., Li, S.Z.: On the stability of reaction-diffusion models with nonlocal delay effect and nonlinear boundary condition. Appl. Math. Lett. 103, 106197 (2020)

Zhu, L.H., Zhao, H.Y., Wang, H.Y.: Partial differential equation modeling of rumor propagation in complex networks with higher order of organization. Chaos 29, 053106 (2019)

Zhu, L.H., Zhao, H.Y., Wang, H.Y.: Stability and spatial patterns of an epidemic-like rumor propagation model with diffusions. Phys. Scr. 94, 085007 (2019)

Rahman, G., Shah, K., Haq, F., Ahmad, N.: Host vector dynamics of pine wilt disease model with convex incidence rate. Chaos Solitons Fractals 113, 31–39 (2018)

Gul, H., Alrabaiah, H., Ali, S., Shah, K., Muhammad, S.: Computation of solution to fractional order partial reaction diffusion equations. J. Adv. Res. 25, 31–38 (2020)

Shah, K., Alqudah, M.A., Jarad, F., Abdeljawad, T.: Semi-analytical study of Pine Wilt disease model with convex rate under Caputo–Fabrizio fractional order derivative. Chaos Solitons Fractals 135, 109754 (2020)

Shah, K., Abdeljawad, T., Mahariq, I., Jarad, F.: Qualitative analysis of a mathematical model in the time of COVID-19. Biomed. Res. Int. 2, 1–11 (2020)

Yousaf, M., Zahir, S., Riaz, M., Hussain, S.M., Shah, K.: Statistical analysis of forecasting COVID-19 for upcoming month in Pakistan. Chaos Solitons Fractals 138, 109926 (2020)

Rahman, M., Arfan, M., Shah, K., Aguilar, J.F.: Investigating a nonlinear dynamical model of COVID-19 disease under fuzzy caputo, random and ABC fractional order derivative. Chaos Solitons Fractals 140, 110232 (2020)

Lei, C.X., Fu, F.J., Liu, J.: Theoretical analysis on a diffusive SIR epidemic model with nonlinear incidence in a heterogeneous environment. Discrete Contin. Dyn. Syst.-B 23(10), 4499–4517 (2018)

Korobeinikov, A., Maini, P.K.: A Lyapunov function and global properties for SIR and SEIR epidemiological models with nonlinear incidence. Math. Biosci. Eng. 1, 57–60 (2004)

Zhang, C., Gao, J.G., Sun, H.Q., Wang, J.L.: Dynamics of a reaction-diffusion SVIR model in a spatial heterogeneous environment. Phys. A 533, 122049 (2019)

Wang, X.Y., Zhao, X.Q., Wang, J.: A cholera epidemic model in a spatiotemporally. J. Math. Anal. Appl. 468, 893–912 (2018)

Zhang, F.X., Hua, J., Li, Y.M.: Indirect adaptive fuzzy control of SISO nonlinear systems with input-output nonlinear relationship. IEEE Trans. Fuzzy Syst. 26, 2699–2708 (2018)

Hua, J., An, L.X., Li, Y.M.: Bionic fuzzy sliding mode control and robustness analysis. Appl. Math. Model. 39, 4482–4493 (2015)

Tinashe, B.G., Musekwa-Hove, S.D., Lolika, P.O., Mushayabasa, S.: Global stability and optimal control analysis of a foot-and-mouth disease model with vaccine failure and environment transmission. Chaos Solitons Fractals 132, 109568 (2020)

Kandhway, K., Kuri, J.: Optimal control of information epidemics modeled as Maki Thompson rumors. Commun. Nonlinear Sci. Numer. Simul. 19, 4135–4147 (2014)

Huo, L.A., Wang, L., Zhao, X.M.: Stability analysis and optimal control of a rumor spreading model with media report. Phys. A 517, 551–562 (2019)

Zhu, L.H., Wang, X.W., Zhang, H.H., Shen, S.L., Li, Y.M., Zhou, Y.D.: Dynamics analysis and optimal control strategy for a SIRS epidemic model with two discrete time delays. Phys. Scr. 95, 035213 (2020)

Zhu, L.H., Guan, G., Li, Y.M.: Nonlinear dynamical analysis and control strategies of a network-based SIS epidemic model with time delay. Appl. Math. Model. 70, 512–531 (2019)

Xu, R., Ma, Z.E.: Global stability of a SIR epidemic model with incidence rate and time delay. Nonlinear Anal. Real World Appl. 10(5), 3175–3189 (2009)

Li, H., Peng, R., Wang, F.B.: Varying total population enhances disease persistence: qualitative analysis on a diffusive SIS epidemic model. J. Differ. Equ. 262, 885–913 (2016)

Rao, F., Castillo-Chavez, C., Kang, Y.: Dynamics of a diffusion reaction prey-predator model with delay in prey: Effects of delay and spatial components. J. Math. Anal. Appl. 461, 1177–1214 (2018)

Alikakos, N.D.: An application of the invariance principle to reaction-diffusion equations. J. Differ. Equ. 33, 201–225 (1979)

Du, Z., Peng, R.: A priori \(L^\infty \) estimates for solutions of a class of reaction-diffusion systems. J. Math. Biol. 72, 1429–1439 (2016)

Hsu, S.B., Wang, F.B., Zhao, X.Q.: Global dynamics of zooplankton and harmful algae in flowing habitats. J. Differ. Equ. 255, 265–297 (2013)

Smith, H.L., Zhao, X.Q.: Robust persistence for semidynamical systems. Nonlinear Anal. 47, 6169–6179 (2001)

Lei, C.X., Xiong, J., Zhou, X.H.: Qualitative analysis on an epidemic reaction-diffusion model with mass action infection mechanism and spontaneous infection in a heterogeneous environment. Discrete Contin. Dyn. Syst.-B 25, 81–98 (2020)

Li, H., Peng, R., Wang, Z.: On a diffusive susceptible-infected-susceptible epidemic model with mass action mechanism and birth-death effect: analysis, simulations, and comparison with other mechanisms. SIAM J. Appl. Math. 78, 2129–2153 (2018)

Lou, Y., Ni, W.M.: Diffusion, self-diffusion and cross-diffusion. J. Differ. Equ. 131, 79–131 (1996)

Peng, R., Shi, J., Wang, M.: On stationary patterns of a reaction-diffusion model with autocatalysis and saturation law. Nonlinearity 211, 1471–1488 (2008)

Peng, R., Shi, J., Wang, M.: On stationary patterns of a reaction-diffusion model with autocatalysis and saturation law. Nonlinearity 21, 1471–1488 (2008)

Gilbarg, D., Trudinger, N.: Elliptic Partial Differential Equations of Second Order. Springer, Berlin (2001)

Du, Y., Peng, R., Wang, M.: Effect of a protection zone in the diffusive Leslie predator-prey model. J. Differ. Equ. 246, 3932–3956 (2009)

Liang, X., Zhang, L., Zhao, X.Q.: Basic reproduction ratios for periodic abstract functional differential equations (with application to a spatial model for lyme disease). J. Dyn. Diff. Equ. 31, 1247–1278 (2018)

Huo, J.W., Li, Y.M., Hua, J.: Global dynamics of SIRS model with no full immunity on semidirected networks. Math. Probl. Eng. 2019, 8792497 (2019)

Cai, S., Zhou, P., Liu, Z.: Synchronization analysis of hybrid-coupled delayed dynamical networks with impulsive effects: A unified synchronization criterion. J. Franklin Inst. 352, 2065–2089 (2015)

Vrabie, I.: \(C_0\)-Semigroups and Applications. Elsevier, North-Holland (2003)

Zhou, M., Xiang, H.L., Li, Z.X.: Optimal control strategies for a reaction-diffusion epidemic system. Nonlinear Anal. Real World Appl. 46, 446–464 (2019)

Apreutesei, N.C.: Necessary optimality conditions for three species reaction-diffusion system. Appl. Math. Lett. 24, 293–297 (2011)

Apreutesei, N.C.: An optimal control problem for a pest, predator, and plant system. Nonlinear Anal. Real World Appl. 13, 1391–1400 (2012)

Laaroussi, A., Rachik, M., Elhia, M.: An optimal control problem for a spatiotemporal SIR model. Int. J. Dyn. Control 6, 384–397 (2018)

Xian, H.L., Liu, B., Fang, Z.: Optimal control strategies for a new ecosystem governed by reaction-diffusion equations. J. Math. Anal. Appl. 467, 270–291 (2018)

Brezis, H., Ciarlet, P.G., Lions, J.L.: Analyse Fonctionnelle: Theorie et Applications. Dunod, Paris (1999)

Zhu, M., Xu, Y.: A time-periodic dengue fever model in a heterogeneous environment. Math. Comput. Simul. 155, 115–129 (2019)

Zhao, X.Q.: Uniform persistence and periodic coexistence states in infinite-dimensional periodic semiflows with applications. Can. Appl. Math. Q. 3, 473–495 (1995)

Acknowledgements

We would like to thank Chengxia Lei (Jiangsu Normal University) for her helpful suggestion during the revision of our work. This research is partly supported by National Natural Science Foundation of China (Grant Nos.12002135, 11872189), China Postdoctoral Science Foundation (Grant No. 2019M661732), Natural Science Foundation of Jiangsu Province (CN) (Grant No. BK20190836), Natural Science Research of Jiangsu Higher Education Institutions of China (Grant No. 19KJB110001), Jiangsu Province Postdoctoral Science Foundation (Grant No. 2021K383C) and Young Science and Technology Talents Lifting Project of Jiangsu Association for Science and Technology.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhu, L., Wang, X., Zhang, Z. et al. Spatial dynamics and optimization method for a rumor propagation model in both homogeneous and heterogeneous environment. Nonlinear Dyn 105, 3791–3817 (2021). https://doi.org/10.1007/s11071-021-06782-9

DOI: https://doi.org/10.1007/s11071-021-06782-9