Abstract

Thunderstorms, the main generator of lightning on earth, are characterized by the presence of extreme atmospheric conditions (turbulence, hail, heavy rain, wind shear, etc.). Consequently, the atmospheric conditions associated with this kind of phenomenon (in particular the strike itself) can be dangerous for aviation. This study focuses on the estimation of the lightning strike risk induced by thunderstorms over the sea, in a short-range forecast, from 0 to 24 h. In this framework, three methods have been developed and compared. The first method is based on the use of thresholds and weighting functions; the second method is based on a neural network approach, and the third method is based on the use of belief functions. Each method has been applied to the same dataset comprising predictors defined from numerical weather prediction model outputs. In order to assess the different methods, a “ground truth” dataset based on lightning stroke locations supplied by the World Wide Lightning Location Network (WWLLN) has been used. The choice of one method over the others will depend on the compromise that the user is willing to accept between false alarms, missed detections, and runtimes. The first method has a very low missed detection rate but a high false alarm rate, while the other two methods have much lower false alarm rates, but at the cost of a non-negligible missed detection rate. Finally, the third method is much faster than the other two methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mature thunderstorms are usually characterized by the presence of strong winds, severe turbulence, heavy rain, hail, and lightning flashes, which are quite challenging flying conditions for aircraft (Stolzenburg et al. 1998, 2008; Houze 1993). Even if aircrafts are certified for these environments, thunderstorms can generate aircraft damage and passenger discomfort.

Lightning hazard is one of the risks to aviation when aircraft flies in the vicinity of, or inside, storm cells. The in-flight lightning experiments performed in the 1980s with CONVAIR and TRANSALL (Laroche et al. 2012) showed that there are two processes that lead to a lightning strike on aircraft. The first process is associated with the interception by the aircraft of a natural lightning flash. It accounts for 10% to 20% of lightning strikes. In the second process, the most frequent (80% to 90%), it was the aircraft itself that triggered the lightning strike (Yoshikawa and Ushio 2019; Laroche et al. 2012; Mazur et al. 2016). By flying inside or in the vicinity of the convective cloud, the aircraft enhances the atmospheric electric field produced by the storm. Measurements have shown that the atmospheric electric field required to trigger lightning from an aircraft is weaker than that needed for a natural lightning flash (McGorman and Rust 1998; Mazur 1984, 1989, 2016; Pavan et al. 2019). In commercial aviation, a lightning strike occurs on an aircraft once a year on average. This risk is taken into account in the design and certification process of an aircraft in order to ensure a safe flight even if a lightning strike occurs. Even if the flight is safe, a lightning strike on an aircraft always leads to a maintenance operation and, consequently, delays. From 2016 to 2020, the IATA (IATA 2020) indicated that 37% of threats to aircraft safety were from meteorological phenomena and 16% from thunderstorms.

Before a flight, pilots receive a meteorological briefing that allows them to prepare their flight plan, avoid flying inside thunderstorms, and follow regulatory advice (FAA’s publication 2008; FAA AC 00–24C 2013; document of EASA 2018; NATS 2010; Gultepe et al. 2019). In this framework, the forecast of lightning strike risk is relevant for flight plan preparation. We base our definition of lightning strike risk on the risks of flying near to or inside convective cells, which are able to produce an atmospheric electric field strong enough to trigger natural flashes or lightning flashes from aircraft. This atmospheric electric field is not directly forecast, but is inferred qualitatively from the meteorological conditions, such as the lifted index, the ice water content, the altitude of the cloud base and top, etc.

A lightning strike risk forecast implies evaluating the risk from several hours to several days before the flight. It is also more challenging than nowcasting (at a mesoscale within a time range from several minutes to 2 h), which can use near real-time direct observations (satellite images, radar, lightning detection networks) and mesoscale meteorological models. As mentioned above, thunderstorms are one of the most important phenomena inducing high electrical atmospheric fields, allowing favorable conditions for lightning strikes. The lifetime of an isolated thunderstorm is very short: around 30 min. Thunderstorms can grow and die in less than one hour with a cell diameter reaching 10 km (Houze 1993; Wilson et al. 1998; Malardel 2009). These characteristics make the estimation of lightning strike risk in a short-range forecast difficult. One of the main limitations for the lightning risk forecast will be the available dataset and timescale characteristics.

Over many years, numerous methods have been developed for the nowcasting and forecasting of thunderstorm risks. Most of these methods have been based on the use of one type of observation source, such as a rawinsonde (Haklander and Van Delden 2003; Senesi and Thenepier 1997), a ground lightning network (Betz et al. 2008; Pedeboy et al. 2016), radar (Dixon and Wiener 1993; Kober and Tafferner 2009; Lang 2001; Wapler et al. 2012), satellite (Mecikalski, et al. 2013), or output from numerical weather prediction models (Wesoleck 2012; Bright et al. 2005; Bouttier and Marchal 2020; Hill et al. 2020; Yair et al. 2010). For short- and long-range forecasts (from several hours to several days), the main source of information used as input for diagnostic tools is the outputs of numerical weather prediction (NWP) models. This kind of model provides an estimate of the state of the atmosphere in the future. Most of the existing short-range forecasting tools are based on the definition of predictors (i.e., meteorological parameters) related to convective systems, derived from NWP models, and developed for a specific area, mostly over land (Bright et al. 2005; Burrows et al. 2005; Wesoleck 2012). The main drawback of using model outputs is the error associated with the prediction of convective events, chiefly with regard to the timing and location (Osinki and Bouttier 2018). In a global model, isolated convective systems are difficult to predict because the model does not explicitly resolve this phenomenon. For these models, moist convection is simulated using physical-based parametrization (Bechtold et al. 2014; Tiedke 1993). To go further into the exploitation of numerical weather prediction, an ensemble prediction method based on a mesoscale model has been used to estimate probabilistic thunderstorm forecasting (Bouttier and Marchal 2020).

In the present article, we investigate methods enabling forecasts of the lightning strike risks for aircraft from one to several days. The North Atlantic Ocean has been selected for this study. This is a large area, relevant with the scale of the NWP model, and not covered by ground radars. The flat topography will not produce bias on the lightning density. Thunderstorms over oceans are less studied than thunderstorms over land. At low latitude, the convective cloud top levels are higher (18 km near the equator) than the cruise level of aircraft. They cannot avoid convective cells by flying over them as they can do at higher latitudes. In this study, three methods, using NWP model outputs, have been tested and compared to perform a lightning strike risks for aircraft: a novel method based on the use of thresholds and weighting functions, a neural network method, and a statistical method based on belief functions.

The neural network method has already been applied in environmental science. Since the 1990s, machine learning methods have become an important tool in many applications. For several years, machine learning methods such as neural networks (NN) have been used in environmental science (Collins and Tissot 2016; Mostajabi et al. 2019; Hsieh and Tang 1998; Sher and Messori 2018). Among the large set of applications, we can cite the forecasting of cell trajectories (Stenso 2018; Kordmahalleh et al. 2015), large oscillations such as El Niño (Hsieh and Tang 1998), or severe weather such as a tornado (Marzban and Stumpf 1996), the estimation of the amount of precipitation (Poornima and Pushpalatha 2019; Ravuri et al. 2021), the estimation of cloud cover based on satellite images (Bankert 1994; Berthomier et al., 2020), and climate applications (Passini et al. 2020). Hsieh and Tang (1998) recall that NN methods are difficult to apply to meteorology and oceanography science due to the nonlinear instability of phenomena, large spatial data fields, and the understanding and interpretation of nonlinear NN results.

As explained in Dezert et al (2021b), there are different theories based on distinct representations and models of uncertainty to deal with uncertain information to conduct information fusion, such as probability theory, fuzzy set theory, possibility theory, and belief function theory. The belief function is a mathematical method making it possible to assign a mass of belief to a disjunction of risk categories rather than a single category, managing the conflicting sources of information efficiently in a multi-criteria decision-making context. The use of belief functions in environmental science is less common, even though several studies have been carried out in hydrology (Abdallah et al. 2014).

This paper is organized as follows: In a first part, a description of the dataset used as input for each method is given. In a second part, each approach is presented, and the resulting forecasts are compared with cloud-to-ground lightning observations recorded by the World Wide Lightning Location Network (WWLLN), from the University of Washington (Jacobson et al. 2006). The last part of this paper contains a discussion and conclusions based on the results of this comparative analysis.

2 Datasets used to forecast of lightning strike risk and for the evaluation.

2.1 The input dataset of the methodologies

The input dataset used for the forecasting of lightning strike risk must be based on relevant meteorological parameters for detecting the development of convective cells, which are able to generate an atmospheric electric field of at least several kV/m. The necessary meteorological conditions that can lead to a lightning strike risk are:

-

Instability of air mass.

-

The presence of humidity and lifting (Houze 1993).

-

The presence of hydrometeors (supercooled liquid water, ice crystal, and graupel particle) distributed in the convective cloud. The most significant electrification processes are due to the noninduced charge process in which graupel particles and ice crystals are important keys (Saunders 1994; Takadashi 1978). In convective clouds, these two kinds of hydrometeor may exist simultaneously between the isotherm 0 °C and −40 °C.

-

The presence of an updraft in the cloud core with wind convergence below the base of the cloud and wind divergence near the cloud top.

The dataset used in this work comes from the output of the NWP model Action de Recherche Petite Grande Echelle (ARPEGE) developed by Météo-France in collaboration with the European Centre for Medium-Range Weather Forecasts (ECMWF) (Courtier et al. 1991). ARPEGE is a stretched global and spectral general circulation model with a horizontal resolution range from 7.5 km over Europe to 36 km at the opposite side of the globe (near 60°S; 180°E) in its 2015 version (Pailleux et al. 2015). The model supplies, as output, an estimate of the future state of the atmosphere.

A relevant list of twelve meteorological parameters has been compiled to help characterize the state of the atmosphere from the standpoint of lightning strike risk. In the following sections, these parameters are called ‘predictors.’ For each predictor, the meteorological process tested by this parameter is shown in Table 1.

Each of these twelve predictors provides information on the dynamical, thermodynamical, or electrical state of the atmosphere:

-

NEBCON (expressed in %) indicates the percentage convective cloud in a mesh. This parameter reflects the presence of convective clouds in a mesh. Consequently, the lightning strike risk increases with higher convective nebulosity value.

-

PCONV (expressed in kg/m2) is the estimate of 3-h accumulated precipitation induced by a convective process. The higher the accumulation of convective precipitation, the greater the lightning strike risk (James et al. 2018).

-

TWC (expressed in kg/m2) is the sum of the water content of all layers of the atmosphere in a given mesh of the model. A positive value indicates the presence of liquid water in the column. As mentioned previously, supercooled liquid water is essential for the electrification process. This provides no information in itself, but can become relevant when coupled with another parameter.

-

TIWC (expressed in kg/m2) is the sum of the ice content of all layers of the atmosphere in a given mesh of the model. A positive value indicates the presence of ice water in the column. As for the total water content, this is a key parameter in the electrification process and provides useful information when coupled with another parameter.

-

WISO (expressed in m/s) provides information on the dynamic component inside the cloud. Updraft inside the cloud is associated with positive vertical velocity. For the lightning strike risk, only the positive case of vertical velocity is relevant. The risk increases with high vertical velocity values (Holton 2004).

-

Max of RH is the maximum of relative humidity in the atmospheric column at a given location (expressed in %). Since the relative humidity can be difficult to forecast in a meteorological model, instead of using a threshold of 100% characterizing the potential presence of cloud in the mesh, a lower threshold is chosen as in McDonough et al. (2004).

-

LI (expressed in degrees Kelvin), developed by Galway (1956), characterizes the instability of the atmosphere. A negative value means that the atmosphere is potentially unstable, meaning that convective cloud can develop. The more negative the LI, the higher the potential for thunderstorm occurrence.

-

CAPE (expressed in J/kg) is the potential energy available to an air parcel to lift up from the free convection level (Holton 2004).

-

CTT (expressed in degrees Kelvin) provides information on the height of the cloud and indirectly on its vertical extension (and thus on the presence of hydrometeor in ice phase) (Price and Rind 1992). The presence of graupel and supercooled liquid water (Saunders 1994) in the same area between 0° C and −20 °C is necessary to generate a cloud electrification process. Consequently, CTT has been chosen as a key parameter. The lower the CTT, the higher the probability of electrical activity.

-

the difference between the altitude of the bottom of the convective cloud and the altitude of the 0 °C isotherm (ATLB; expressed in meters) will add information on the vertical extension of convective cloud and the probability of having hydrometeor in liquid or ice phase. The risk of thunderstorm is linked to a negative difference only (i.e., bottom of the cloud lower than the 0 °C isotherm).

-

DIV_B and DIV_T (expressed in m/s) provide an indication of the dynamic and the development of convection inside the cloud. Convective cloud areas are associated with convergence motion below the bottom of the cloud and divergence near the top. Consequently, a strong development of a convective cell and thus a lightning strike risk is associated with divergence near the top of a convective cloud. Risk increases with positive and higher divergence values near the top and with negative and lower divergence values near the bottom of the cloud.

Many of these parameters are often used as predictors for forecasting tools, such as CAPE or LI, which provide information on the stability of the atmosphere and the convective potential for the development of a thunderstorm (Ukkonen et al. 2017; Wesoleck 2012).

Our study has focused on lightning strike risk over the sea and mainly over the North Atlantic Ocean. In order to distinguish land and sea grid elements, a land-sea mask has been developed based on Global Multi-resolution Terrain Elevation Data, 2010 version (GMTED2010; Danielson and Gesh 2011).

2.2 The dataset used for the evaluation: lightning detection network

In evaluating the methodologies, it is impossible to compare the lightning strike forecasts with direct observations of lightning strikes to aircraft, because there are so few that they are not statistically relevant. The evaluation of the performance of the forecasting methods represented in this work is based on natural cloud-to-ground flash observations. If there are cloud-to-ground lightning flashes, it directly implies that there is a risk of lightning strike for aircraft. If there are risky areas on the forecast with no natural cloud-to-ground lightning, it does not mean that there is no risk. In such areas, the atmospheric electric field might not be strong enough to generate natural lightning flashes, but still strong enough that aircraft would trigger lightning.

Since 1950, many methods and sensors have been developed to locate and detect lightning strokes on earth. For example, we can cite the lightning ground networks operating in the low- and very low-frequency band, which have been installed either in a specific region (LINET, Betz et al. 2008; METEORAGE, Pedeboy et al. 2016; NLDN, Cummins et al. 1998) or expanded worldwide (GLD360, Mallick et al. 2014; WWLLN, Jacobson et al. 2006; ATDNET, Poelman et al. 2014).

The area of interest of our study is the North Atlantic Ocean. Only worldwide networks can provide information on the electrical state over the whole area. The WWLLN has been chosen as “ground truth” to compare the information on the state of the atmosphere, the thunderstorm hazard, and the detection of lightning flashes.

The WWLLN is a passive remote sensing network detecting lightning strokes. The WWLLN is composed of around 70 sensors from all around the world (Jacobson et al. 2006, http://wwlln.net/). Each sensor detects the energy released by a stroke in the VLF band [3–30 kHz] (Rodger 2004). Discharges associated with a lightning flash generate a strong electromagnetic signal over a very wide frequency band. At very low frequency, the lightning’s radiation can propagate in a waveguide between the earth and the low ionosphere. The WWLLN uses time of group arrival (TOGA algorithm) information from at least five sensors to estimate lightning stroke location (Dowden et al. 2002). The biggest advantage of this system is the weak attenuation during the propagation of the signal over a thousand kilometers.

The performance of a lightning ground network is based on two criteria: the efficiency of detection and the precision of location. The detection efficiency (DE) is the ratio of the number of detected events to the number of actual events. For networks such as the WWLLN, the detection efficiency varies from location to location around the world and with time (Holzworth 2019). The performance of the WWLLN network has been improved over many years, with an increasing number of stations (Hutchkins et al. 2012). At present, strokes are detected in a radius of 5 km compared to a radius of 10 km in 2010 (Abarca et al. 2010). The WWLLN DE is better over the oceans than over land by a factor of 2 to 3 (Holzworth et al. 2019). For the North Atlantic Ocean, on the one hand, a large number of WWLLN stations are located on the east coast of the USA, giving good location performance in the western part of the North Atlantic Ocean. On the other hand, the performance is poorer near longitude 40°W, in the middle of the ocean (far from ground stations for correct detection).

In this work, the locations and dates of the lightning strokes constitute the WWLLN information used. For this purpose, stroke data are aggregated into a flash using three items of information: the distance between the first return stroke and all the return strokes of the same lightning flash (noted \(\Delta r\)), the time between the first and last strokes (noted \(\Delta t),\) and the time between two following return strokes in the same lightning flash (noted \(\Delta {t}_{inter}\)). This method of aggregation is often applied (Poelman et al. 2013, 2014; Cummins et al. 1998; Betz et al. 2008; Schultz et al. 2015; Lay 2004; Soula et al. 2016; Goodman 2012, Goodman et al. 2013; Anderson and Klugmann 2014). The final threshold applied to each parameter (\(\Delta r,\Delta t,\Delta t_{{{\text{i}} {\text{nter}}}}\)) is deduced from the characteristics of the lightning network and the physics of lightning. In almost all methods, the distance \(\Delta r\) is chosen between 10 and 20 km, the time \(\Delta t\) is 1 s, and \(\Delta {t}_{\mathrm{inter}}\) is between 0.33 s and 0.5 s (Cummins et al. 1998; Poelman 2014; Lotte de vos 2015; Goodman et al. 2013; Said 2016; Anderson and Klugmann 2014). In this study, we consider that a stroke belongs to a flash if Δr is less than 15 km, Δt is less than 1 s, and Δtinter is less than 0.5 s. Finally, we make an assumption that the final location of the lightning flash is the location of the first return stroke composing it, following the method of Poelman et al. (2014) for the case of cloud-to-ground flashes (CG).

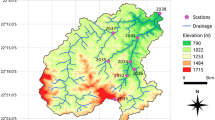

2.3 Atmospheric electrical activity over the North Atlantic Ocean

An illustration of the results obtained with the WWLLN network is given in Fig. 1 for the area studied, for the month of May 2016. In this figure is plotted the number of flashes per km2, for each mesh of 0.1° × 0.1°, deduced from the location of flashes detected by the WWLLN system during the month of May 2016. The size of the domain studied ranges from 101°W to 10°E in longitude and from 1°S to 71°N in latitude. Each colored point is associated with a number of lightning flashes per km2 between 0 and 0.1 flash/km2 (with 0 outside the interval). Lowest densities are colored blue and highest densities red.

As illustrated in Fig. 1 significant areas of lightning density are observed near the west coast of Africa and the East coast of America. An area of higher density spreads from Africa to America, corresponding to the Intertropical Convergence Zone (ITCZ). Moreover, we note strong flash density along the east coast of America with maxima in the Gulf of Mexico, the Caribbean, and along the Gulf Stream. An area of high flash density is also observed between latitude 30°N and 45°N, corresponding to the mid-latitude low-pressure systems. These areas are in agreement with studies based on LIS and OTD sensors and WWLLN data (Virts et al. 2013, 2015; Hobbs 1987).

3 Description of the three methods used for the estimation of lightning strike hazard

All methods use the outputs of the NWP model ARPEGE as their inputs. The dataset used as input, for the three methods, contains the estimate of the twelve predictors (described in Sect. 2) from the production time of 00:00 UTC in May 2016, with forecast time from 03:00 UTC to 24:00 UTC, with a time step of 3 h.

3.1 The LISHAE method

The first diagnostic tool, developed by ONERA (The French Aerospace Lab) and described hereafter, is called LISHAE (LIghtning Strike HAzard Estimation). This method is based on the use of thresholds and weighting functions for the fusion step. The LISHAE method (cf. Figure 2) consists of three steps.

In step 1, each predictor (noted p) listed in Table 1 is compared with a reference value (noted pref). Only the predictors satisfying the comparison (see the third column of Table 2) are kept in the subsequent steps. The reference values are either known physical thresholds or based on statistical analysis results.

For most meteorological parameters, such as CAPE or NEBCON, the reference values used come from statistical analyses performed for data over the North Atlantic Ocean for a period of 5 years, from 2012 to 2016. In the ARPEGE model, the North Atlantic Ocean covers a large area discretized into a mesh of size 0.5° × 0.5°. The statistical computations are performed for each grid element, each 3-h time step (called T) and each month. The values of the meteorological predictors have been saved and sorted into two groups according to the detection or not of flash in the mesh by the WWLLN network, in the time interval of [T—1h30; T + 1h30]. For both data groups (with or without lightning), quantiles have been calculated.

All statistics have been calculated per month and per time step in order to take into account the monthly variability of lightning and the diurnal variation. This test can be used to check the discriminatory power of one parameter between both cases (with or without lightning activity) (Sénési 1997). An example of the quantiles obtained is shown in Fig. 3 in the form of a box plot chart, for LI.

The left side of the box plot of Fig. 3 indicates the number of elements with and without flash detection. The box plot chart is on the right side. When a flash is detected, the quantiles of LI have negative values (Q3 = −4.5 K and Q1 = −6.5 K). These values are in agreement with values found in Wesoleck (2012) and with common knowledge in atmospheric science. As mentioned in Sect. 2, a negative LI value indicates the presence of instability in the atmosphere and the possibility that convective phenomena and, potentially, thunderstorms can occur.

For most of the parameters, the reference values have been inferred from this statistical analysis, whereas for CTT and ATLB, physical values are used. For CTT, we have chosen to compare the input value with the thresholds of −40 °C in order to select clouds with a cold top and allow for the possibility of the simultaneous existence of ice crystals, graupel particle, and super-cooled liquid water. The choice of reference value for each parameter and how a predictor is chosen throughout the risk estimation process are set out in Table 2.

In step 2 of the LISHAE method (Fig. 2), a weighting (w) is assigned to each parameter to allow for its relevance to the lightning strike risk probability. Four levels of importance have been chosen: very low importance, low importance, important, and very important. For each level, an arbitrary base 10 weighting has been chosen, to avoid the possibility that a combination of low importance parameters could reach the risk level of one or two parameters considered important or very important. The higher weightings are assigned to ALTB and CTT because two types of information are present in these predictors: the presence of convection in the mesh and additional information on the presence of liquid and ice particles. Note that the presence of graupel and supercooled liquid water is essential for the development of the cloud electrification process. Less importance is given to DIVB and DIVT because, compared to the other parameters, these predictors provide little information on the presence of a hydrometeor or a convective cell. No weighting is assigned to the maximum of the relative humidity because the result of the comparison for this parameter is merged with other parameters. In Table 2, the test applied to determine whether a predictor is selected for use in the risk estimation process is specified for each predictor. In addition, the reference value used for the test, the importance of the predictor, and its associated weighting are indicated.

The combination of all the predictors makes it possible to distinguish the environmental conditions in which convection can develop (and electrical activity can occur) and the conditions in which convection will probably be inhibited. Consequently, the estimate of lightning strike risk is calculated from the results obtained at steps 1 and 2 using a weighted multiplication approach as expressed by the following formula:

where w is the weighting (indicated in Table 2) and RISKi is an integer value between 1 and 10 indicating the level of agreement between the predictor magnitude and the reference threshold. The multiplication is applied only to predictors that are selected as output of step 1. Once the decimal logarithm of (RISKTOT) is obtained, a test is carried out to associate the magnitude of risk to a scale of four risk levels.

In step 3 of the LISHAE method, a risk level is assigned to a mesh based on the level of risk of the mesh itself and also on the risk level of the neighboring meshes. The risk level ranges from 0 (representing a zero risk) to 4 (high risk) (see Table 3). At level 3 or 4, the risk that a convective cell associated with electrical activity occurs is significant. Note that this risk scale is not linked to a quantitative probability of lightning strike.

3.2 The NN method

The second diagnostic tool applied in the present study is the NN method, developed by ONERA (Cherrier et al. 2017) and adapted to the lightning strike problem. With this method, we try to extract patterns linked to lightning strike risk from a dataset. For this study, information on lightning strike risk is obtained under a dichotomous form (possible risk or no risk), not a level of risk as in the other methods.

In 1990, Barnes and Frankel began to investigate the prediction of lightning strikes using NN based on instrumental information such as field mill data, meteorological tower data, or rawinsonde data. More recently, instead of measurements being used as input to NN methods, the estimates of the state of the atmosphere supplied by the outputs of the NWP model have been used to predict thunderstorm risk. Ukkonen et al. (2017) have already applied this principle with ERA-40 re-analysis as dataset. The main advantage of using NWP model outputs is the amount of information available to construct the training base and the test base. Nevertheless, it is important to recall that despite the fact that the NWP model improves steadily with more and more accurate forecasts, perfect forecasting is not possible. Sher and Messori (2018) highlight the risk that noise inside the initial dataset in all parameters can induce information not relevant to the problem of interest.

The NN method used in this study comprises two steps. The first is the training phase based on a dataset where information on the state of the atmosphere is associated with a lightning strike. During this phase, the NN learns and the NN’s parameters are tuned in order to minimize a cost function. The second step is the test phase where the score and the confusion matrix (cf. Fig. 4) are calculated.

More specifically, for these experiments, the NN is composed of two residual blocks (He et al. 2016) of size 3 plus two fully connected layers, all with Leaky Relu or Relu activation. We do not use batch normalization since we are dealing with physical predictors. All internal layers contain 256 neurons. Training is performed in the conventional manner, with cross-entropy loss, stochastic gradient descent (SGD), and momentum. We found that a low learning rate was required for convergence. The neural network inputs, however, are predictors and not raw values from a sensor. This can be an explanation for the observed conditions for ensuring convergence of the training. Finally, a simple multilayer perceptron of 8 layers can also converge on these data, but provides significantly lower results than the proposed one. It also seems that better performance is even possible using convolutional layers, taking predictors from neighboring cells as input. However, this convolutional neural network (CNN) would not be comparable with other methods.

The training base was constructed using the value of the meteorological predictors (listed in Table 1), in both cases: when a flash has been detected by the WWLLN in a mesh, or not. Datasets were created by collecting three years of data, from 2013 to 2015, over the North Atlantic Ocean. Concerning the statistics used in the LISHAE method: Different datasets were calculated for each time step of one day (every 3 h to be consistent with the time step of the NWP model) and each month (to take into account the monthly variability of the lightning activity over the area). The year 2016 was chosen as the test year to estimate the score, which helps to provide information on the relevance of the method. Sensitivity tests were then run to identify the best calibration of the network.

At each run of the NN method, a confusion matrix is calculated as illustrated in Fig. 4. To build the matrix, WWLLN information on the atmospheric electrical activity is used as reference. The matrix provides four items of information: the number of mesh with no flash detected by WWLLN and classified as no risk by a method (OK); the number of mesh where flashes have been detected by WWLLN and classified as risky (BD); the number of mesh where flashes have been detected by WWLLN but classified as no risk by a method (MISS) and the number of mesh with no flash detected by WWLLN and classified as risky by a method (i.e., false alarms, noted FA).

The first step before applying the NN method to different cases is to define the best calibration of this method. When fitting the training data, all network weightings are optimized, but there is a specific weighting that should not be optimized like the others. It is the threshold, which is compared to the likelihood of deciding whether the network predicts a flash or not (i.e., a smaller threshold means more risky cells). This single parameter can have a critical impact (even if it does not modify the likelihood, but only the interpretation of it). Specifically, on a balanced problem, 0 is expected to be a good threshold. Flash prediction, however, is not balanced. Thus, in this case, the literature suggests considering multiple values of it. For example, precision-recall and ROC curves are based on this idea of multiple threshold values. That is why we will optimize and evaluate the impact of this threshold, referred to as the NN parameter hereafter. In Fig. 5, the number of missed flashes (MISS on the y-axis) is plotted as a function of the number of false alarms (FA on the x-axis) for different threshold values. Consequently, when the number of false alarms increases, more meshes are classified with a nonzero risk, which involves a decrease in the number of misses. In Fig. 5, one colored point is associated with one NN parameter value, varying from -3 to 6. The data used for this test are the estimate of the 12 predictors, extracted from the ARPEGE model outputs for the 05/01/2016 at the timescale 03:00 UTC, from the production time 00:00 UTC. Figure 5 highlights the decrease in the number of missed flashes with the increase in the number of false alarms as a function of the NN parameter, which is intuitively an expected pattern. The best solution is the acceptable balance between a number of false alarms that is not too high and few missed flashes. The final choice of NN parameter will be fine-tuned using a lightning strike risk map (see Sect. 3).

Sensitivity test of the NN method as a function of the NN parameter. Test on May 1, 2016, at 03:00 UTC. On the x-axis, the number of false alarm (FA) points is plotted. On the y-axis, the number of miss cases (MISS) is plotted. Each colored point is associated with one value of the NN parameter, varying from -3 to 6 (value indicated in the caption). The dashed line links the different colored points

3.3 The SET method

The third diagnostic tool is based on the belief function (Shafer 1976) coupled with the Soft ELECTRI TRI (SET) outranking method (Dezert and Tacnet 2012a). In the mathematical theory of evidence developed by Shafer, we assume that the solution of the problem under consideration belongs to a given frame of discernment (FoD), e.g.,\(\Theta =\{{\theta }_{1},{\theta }_{2 }, \dots , {\theta }_{n}\}\). The elements θi (i = 1 … n) are mutually exclusive and \(\Theta\) constitutes the exhaustive list of potential solutions. The power set of the FoD \(\Theta\) (i.e., the set of all subsets of \(\Theta\), including the empty set \(\varnothing\) and \(\Theta\) itself) is denoted by \(2^{\theta }\), and its cardinality by \(2^{\left| \theta \right|}\). A source of evidence about the problem under consideration is represented by a basic belief assignment (BBA) function \(m\left(.\right) : {2}^{\Theta }\to \left[0, 1\right]\) such that \(m\left(\varnothing \right)=0\) (i.e., the impossible solution has no mass of belief), and \({\sum }_{X\in {2}^{\Theta }}m\left(X\right)=1\) (i.e., the BBA is normalized to one). From any BBA \(m(.)\) we define the belief function \(Bel(.)\) and the plausibility function \(Pl(.)\) by

We always have \(0\le Bel(X)\le Pl(X)\le 1\), see (Shafer 1976). Note that \(Bel\left(\varnothing \right)=Pl\left(\varnothing \right)=0\) and \(Bel\left(\Theta \right)=Pl\left(\Theta \right)=1.\) \(Bel(X)\) and \(Pl(X)\) are interpreted as the lower and upper bounds of unknown probability \(P(X)\) of X, i.e., \(Bel(X)\le P(X)\le Pl(X)\).

In our application, a source of evidence is a chosen meteorological parameter (i.e., a criterion) from which a BBA can be established about the categories of level of lightning risk at any given location. As an example, if we consider only three categories of risk level, we can consider \(\Theta =\{{\theta }_{1}=low risk,{\theta }_{2}= moderate risk,{\theta }_{3}=high risk\}\) where each category is defined by some predefined lower and upper bounds about the values that the meteorological parameter can take depending on the natural hazard context. When there are several different meteorological parameters to consider, we need to fuse (combine) several BBAs to obtain a global fusion result from which a decision can be made about the most pertinent solution to choose (i.e., the choice of best category of risk level) based on the available information. The difficulty of the fusion step is to deal efficiently with conflicting information when two (or more) sources of evidence disagree on their assessment of the level of risk. In this work we have tested the simple weighted averaging rule of combination and the more advanced Proportional Conflict Redistribution rule number 6 (PCR6), which is given for the combination of two BBAs \({m}_{1}(.)\) and \({m}_{2}(.)\) by \({m}_{\mathrm{1,2}}^{PCR6}(\varnothing\)) = 0, and for all \(A\in {2}^{\Theta }\backslash \{\varnothing \}\).

The basic idea of the PCR6 rule of combination is to use the conjunctive rule at first (which corresponds to the first sum in the PCR6 formula) and to redistribute the product of conflicting masses only on elements that are involved in the conflict and proportionally to their mass values (which corresponds to the second sum in the PCR6 formula). For instance, if \(X\cap Y=\varnothing\) and \({m}_{1}\left(X\right){m}_{2}\left(Y\right)>0,\) then this product value (committed to the conflict \(X\cap Y=\varnothing\)) is redistributed back to X and to Y only, and proportionally to \({m}_{1}\left(X\right)\) and \({m}_{2}\left(Y\right)\). See Smarandache and Dezert (2009) for more details and for a general formula of the PCR6 rule for more than two BBAs.

The interest of the SET outranking method is its ability to establish BBAs based on the concordance and discordance of each meteorological parameter and category bounds and to combine them with an effective rule of combination. The SET method also provides soft (probabilized) decision making that does not require ad hoc thresholding techniques such as those used in classical Electre Tri methods. Clearly, our storm risk prediction problem can be seen as a multi-criteria decision-making (MCDM) problem, which is summarized in Fig. 6. Each row corresponds to the range of a meteorological parameter (or criterion), and the profile bounds (the green plots of Fig. 6) delimit the category bounds for each criterion. An alternative is a particular set of values of different meteorological parameters represented by the red plot in this figure. The SET methodology commits an alternative (a red plot) in the best category thanks to belief functions, and it calculates the probability of good decision (commitment) even in difficult cases where information is conflicting, i.e., when a red plot makes zigzags in different risk categories, as shown in Fig. 6.

In this context, each category Ch corresponds to a risk level. For the LISHAE method, five levels of risk (h = 5) have been chosen, with C1 associated with no risk, C2 a low risk, C3 a moderate risk, C4 a high risk, and C5 a severe risk. Each category is delimited by a profile: a lower and an upper bound denoted bh and bh + 1, respectively. A profile bh is composed of the nG values of the criterion. For our problem, the Gi criteria are the 12 meteorological predictors, listed in Table 1 (nG = 12). For the LISHAE method, the SET method allows for an importance weighting between criteria. For each parameter, a preference ordering and an arbitrary importance weighting have been defined (see Table 4).

For each criterion (Gj), bounds (Gj(bh)) have been defined for each category of risk. For instance, for the parameter LI, the bounds b1, b2, b3, and b4 are 0 K, -3 K, -5.5 K, and -6.5 K, respectively. Consequently, if the value of LI is positive, the risk is zero; if the value of LI is in the interval [-3 K; 0 K], the risk level is 1; if the value of LI is in the interval [-6.5 K; -5.5 K], the risk level is 2, and so on. Each bound has been deduced from the quantiles obtained after the creation of a database containing the values of meteorological predictors in lightning conditions (the same database described in step 1 of the LISHAE method). These bounds defined for the North Atlantic Ocean area take the temporal variation into account.

Then, from the test dataset (year 2016 of the ARPEGE model data), in a given mesh and at a given time, an alternative profile called “a” (red line in Fig. 6) is created, consisting of the 12 predictors. This profile constitutes the input for the SET method that will try to assign a category of risk to this alternative profile.

The principle of the SET method, described in Dezert and Tacnet (2012a) and Dezert et al. (2021a) , requires four steps:

-

Step 1: the calculation of local concordance and discordance indices between the alternative ai and a profile bh (cf. Figure 6) using a sigmoidal model (Dezert et al., 2012b). These partial indices are then encapsulated in a Basic Belief Assignment (BBA), defined on the frame of discernment θ ≜ \(\left\{ {c,\overline{c}} \right\}\), where c means that the alternative is in agreement with the assertion “ai is at least as good as profile bh” and \(\overline{c }\) means that the alternative is discordant (opposed to the assumption);

-

Step 2: the calculation of global concordance and credibility indices by the fusion of local indices, calculated in step 1. To consider the importance weighting of each criterion Gj, two fusion methods have been tested: the weighting averaging (WA) fusion rule, which is a simple rule, taking important weightings into account, and the proportional conflict redistribution rule no. 6 (PCR6) fusion rule with importance discounting. Based on the BBA mass, the belief (Bel) and plausibility (Pl) functions of the outranking proposition \(X="{a}_{i}> {b}_{h}"\) and \(Y="{b}_{h}> {a}_{i}"\) are calculated. We deduced from these functions the probabilities P(X) defined by \(P\left(X\right)\in [Bel\left(X\right), Pl\left(X\right)]\) and P(Y) defined by \(P\left(Y\right)\in [Bel\left(Y\right), Pl\left(Y\right)]\);

-

Step 3: This step aims to solve the outranking problem. The choice must be made if, by analyzing, X dominates Y (X is the valid outranking) or vice versa. A soft outranking solution is possible by computing the probability that X dominates Y assuming uniform distribution of unknown probabilities between the lower and upper bounds. More details are given in Dezert et al. (2012a);

-

Step 4: The final assignment of ai into a category Ch (\({P}_{ih}=P({a}_{i}\to {C}_{h}\))) is obtained by the combinatorics of all the possible outranking sequences, taking the probabilities Pih into account.

It is important to mention that, for some parameters, data are missing, and a -9999 value is assigned. These missing values mainly concern the temperature and the divergence at the top of convective clouds and the altitude and divergence near the bottom of convective clouds. The SET method has been adapted to allow for these “gaps” in the dataset.

Moreover, the variability of ARPEGE data in each cell has been studied by calculating the level of randomness, characterized by Shannon’s entropy. For each cell of the North Atlantic Ocean, the probability of risk has been estimated by counting the number of criteria (predictors) associated with the category Ch and dividing by ng. The normalized Shannon entropy is given by the following formula:

The map of the normalized Shannon entropy is shown in Fig. 7. Results are obtained using the output of the ARPEGE model for the time production 05/09/2016 at 00:00 UTC and the time step 05/09/2016 at 09:00 UTC. If the entropy is equal to 0 in a mesh, all parameters give the same category of risk. If the entropy is equal to 1, there is a huge uncertainty on the choice of risk level. Figure 7 shows that the entropy is above 0.5 for a lot of elements, indicating a disagreement between predictors for the determination of the risk level. This map of the normalized Shannon entropy highlights the need to use a sophisticated MCDM method able to deal with conflicting sources of information.

In this study, two fusion methods were tested via the SET approach: PCR6 fusion and averaging fusion. For the PCR6 fusion method, different options were chosen to improve the results. Among the decision methods based on belief function (Dezert et al. 2016), we can cite methods based on the maximum of credibility or the maximum of plausibility (Smets and Kennes 1994; Dezert and Smarandache 2008). The main drawback of these methods is that the decision is constructed with incomplete information due to the use of the measure of probability. Two different BBA masses may be linked to the same probability. Consequently, for our study, we have chosen to use a metric of distance between two BBAs, named dBI (Han and Yang 2014, 2018), based on the distance between belief intervals. Here, the distance is calculated between the BBA mass vector obtained in step 1 and the focalized mass for each category with the Wasserstein distance. The best risk category is the one associated with the minimum distance. Moreover, as the PCR6 fusion is based on a conjunctive fusion endowed with a proportional conflict redistribution mechanism, we have chosen the importance discounting of type 1 as weighted factor between two BBAs. Moreover, the method has been fined-tuned using optimistic decision making, meaning that when an ambiguity appears between two categories of risk, the method will choose the category with the lower risk.

4 Comparison of the lightning strike hazard given by the three methods for one case

This section presents the lightning strike risk results obtained using the three methods (LISHAE, NN-based, and Soft ELECTRE Tri (SET)). To maintain a uniform basis of comparison, each method was applied to the same dataset. The dataset, containing the 12 predictors, was constructed based on the outputs of the ARPEGE model, for a given time step, from the time production of May 9, 2016, at 00:00 UTC. In the first instance, results obtained for one date and one time step (at 09:00 UTC on May 9, 2016) are presented. Then, secondly, the results are generalized to all time steps in the month of May 2016.

4.1 The LISHAE method

To help to analyze the risk map obtained by the LISHAE method, maps of some predictors over the studied area are indicated in Fig. 8.

Map of different meteorological parameters at 09:00 UTC on May 9, 2016, from the forecast of May 9, 2016, at 00:00 UTC derived from the ARPEGE model. Red dots: flashes detected by the WWLLN. a map of LI (Unit: K). In blue, negative temperature of LI and from white to green positive value (0 included). A contour has been plotted for the values (−20, −10, −4, −2, 0, 2, 4, 10, and 20 K). b Convective CTT (Unit: K). c: map of CAPE (Unit: J/Kg). A contour has been plotted for the values (10, 100, 250, 500, 1000, 1500, 2000, and 5000 J/Kg). d: Map of PCONV (Unit: kg/m2). A contour has been plotted for the values (0.1, 0.5, 1, 5, 7, and 10 kg/m2)

On each map of predictors, the locations of the lightning flashes detected by the WWLLN in the time interval [07:30–10:30] have been plotted in red dots. The overlay of both pieces of information makes it possible to highlight and check the validity of the method. On Fig. 8, the flash locations appear in an area with nonzero convective precipitation, with high CAPE and negative LI. We can also note that some flash locations are in areas such as the Caribbean, where no convective CTT have been calculated. One of the disadvantages of the LISHAE method is that if some parameters are not calculated by the NWP model, derived parameters cannot be calculated. In this case, the noncalculation of the convective CTT leads to the fact that DIV T is not calculated either. This point decreases the level of performance of the method.

Based on the data presented in Fig. 8, the lightning strike risk over the North Atlantic Area has been calculated using the LISHAE method and is shown in Fig. 9. Details of the confusion matrix associated with the case shown in Fig. 9 are given in Table 5 in sect. 4.4.

As in Fig. 8, the locations of flashes detected by the WWLLN are indicated by red dots in Fig. 9. For this LISHAE method, areas with a high level of lightning strike risk (risk level of 3 or higher, plotted in the darkest shade in Fig. 9) match with areas of low pressure:

-

A low at the west of Europe centered at (50°N; 15°W).

-

A low in the south of Greenland centered at (60°N; 50°W).

-

The intertropical convergence zone (ITCZ).

-

A low-pressure axis between longitude 70°W and 55°W.

The areas with a significant level of risk are in agreement with the map of predictors. For these areas, we can notice the presence of PCONV (nonzero value), a CAPE with a value above 500 J/Kg, a negative LI. Moreover, among the areas classified as ‘at risk,’ we found the ITCZ, the Gulf of Mexico, and the European coast as identified on the map of lightning density for May 2016 (cf. Fig. 1).

4.2 The NN method

The results obtained for May 9, 2016, at 09:00 UTC with the NN method are shown in Fig. 10 for three values of the NN parameter (−1, 0, 1). As mentioned before, the NN method provides binary information. When a mesh is colored gray, a lightning strike risk exists. In each picture, the locations of flashes detected by the WWLLN are indicated by red dots. The three pictures illustrate the variation in terms of false alarm as a function of the choice of NN parameter. The lower this parameter (negative value of the NN parameter), the higher the number of meshes with a possibility of lightning strike. Based on Figs. 5 and 10, the NN parameter has been set to 0 for the results presented in the article (corresponding to picture 10b).

The area delimited by elements colored in gray in Fig. 10b can be compared to the area with a risk level above 2 in Fig. 9. The comparison highlights the fact that similar areas of lightning strike risk are identified by both methods below 40°N. The main difference appears above the latitude of 40°N.

To understand the reason for these differences, the lightning strike hazard was estimated using a reduced dataset. This new dataset comprises six predictors: Max of RH, PCONV (accumulated over 3 h), TWC, TIWC, WISO, and NEBCON. The maps of these parameters highlight meteorological conditions that favor the occurrence of a thunderstorm for areas above the latitude 40°N and not previously identified (Fig. 11).

Maps of predictors for May 9, 2016, at 09:00 UTC from the ARPEGE model. Maps of the WISO (a – unit: m/s). Isocontours are plotted for the values (−1.10–1; −5.10–2; −1.10–2; −1.10–3; 0; 1.10–3; 1.10–2; 5.10–2; 1.10−1 m/s); TWC (b – unit: kg/m2). Isocontours are plotted for the values (1.10–4; 4.10–4; 6.10–4 8.10–4;1.10–3; 5.10–3; 1.10–1; 5.10–1 kg/m2); TIWC (c – unit: kg/m2). Isocontours are plotted for the values (1.10–4; 4.10–4; 6.10–4 8.10–4;1.10–3; 5.10–3; 1.10–1; 5.10–1 kg/m2) and NEBCON (d – unit: %). Isocontours are plotted for the values (10; 25; 50; 75; 99%). In each picture, the red dots indicate the WWLLN data

The NN method was applied to a reduced dataset. The results are present in Fig. 12 for the NN parameter 1 (better suited to this new configuration).

If we compare Figs. 12 with 10, we again find meshes classified as risky for latitude above 40°N (Fig. 12), in contrast with Fig. 10. If we focus on this specific area for the different maps of predictors (Fig. 8 and Fig. 11), we see that only a few parameters, such as NEBCON, TIWC, and TWC, detect information in this area. Only predictors detecting a signal in areas above 40°N are used in the second dataset. Consequently, new areas classified as risky appear with this second dataset compared to Fig. 10b. This test highlights the fact that the method classifies an area as risky if most predictors share the information, even if their weighting in the convective development is lower than some parameter, such as LI, for which the information at 40°N does not appear.

This highlights the good results obtained by the NN method in terms of estimation of lightning strike risk. The main disadvantage of the NN method is that it focuses on the most noteworthy features even if they are of less importance, which is a known phenomenon.

4.3 The SET method

Maps of lightning strike hazard, estimated for May 9, 2016, at 09:00 UTC, using the SET tools with different fusion methods, are shown in Fig. 13. In each picture, the flashes detected by the WWLLN in the time interval [07:30; 10:30] are indicated by red dots. Figure 13a shows the map of lightning strike risk estimated using the averaging fusion rule, and Fig. 13b shows the map using the PCR6 fusion rule. We observe an important difference between the results of the two fusion methods. The results obtained with averaging fusion show a large number of meshes with a risk level of 2 and no risk beyond latitude 60°N, unlike the results based on the PCR6 method. With the PCR6 method (Fig. 13b), the number of meshes classified as risky decreases. Restricted areas with a higher risk level appear at the location of the depression, located near the coast of Portugal between 40°N and 60°N, the ITCZ and along longitude 70°W, similar to the areas classified as risky using the LISHAE method (Fig. 9). Consequently, good agreement is found between flashes detected by the WWLLN and meshes classified as risky by the method based on the PCR6 rule.

Results with the SET method for May 9, 2016, at 09:00 UTC using the forecast of May 9, 2016, at 00:00 UTC. Red dots represent the flashes detected by the WWLLN. Top: level of lightning strike risk. Bottom: confidence in the decision of the classification of each mesh. On the right, results for SET based on the PCR6 fusion rule and on the left, results for SET based on the averaging fusion rule

Nevertheless, it is important to mention that the SET method with the averaging merge fusion rule has been applied to the dataset of five meteorological parameters (PCONV, LI, CAPE, DIV_B, DIV_T). The results are presented in Dezert et al. (2021a) . For the configuration with five parameters, better results have been found, similar to those obtained using PCR6 with five parameters. This means an improved rate of correct detection and a slightly reduced rate of false alarms.

One of the main advantages of the SET method is the possibility of obtaining a decision confidence map representing the probability associated with the risk level determined in a mesh. The confidence level has been plotted for both fusion methods in Figs. 13c (for the average rule) and 13d (for the PCR6 rule). The confidence level is between 0.8 and 1 for the PCR6 method (Fig. 13d) and ranges from 0 to 1 for the other method. As we observe in Fig. 13c and d, the range of confidence in the decisions made with SET based on the PCR6 rule and the range of confidence in the decisions made with the averaging rule are clearly different. The quality (confidence) in a decision based on the PCR6 rule is globally much higher than with the averaging fusion rule. This result is perfectly normal (and expected) because the PCR6 rule deals efficiently with conflicting information, unlike the averaging combination rule. Using PCR6 we significantly increase the quality of the decision made, and this is what Fig. 13d reveals versus Fig. 13c. The comparison of Fig. 13a and Fig. 13b highlights an important difference for meshes located above 40°N in latitude and between 70°W and 30°W in longitude. For both methods, less confidence is given to this area, with a level of confidence below 0.3 for the fusion rule and below 0.84 for the PCR6 rule. For this area, the predictors supply conflicting information, because only half of them predict the presence of convective cells. For example, for these areas, Fig. 8 shows a positive LI and no convective cloud (no value of CTT) but a nonzero value of CAPE and PCONV. A conflict between predictors implies a difference in terms of risk level between all the methods. This area is classified as no risk by the averaging method and as a risk level of 5 by the PCR6 method. This uncertainty for this area is also found with the other methods. NN assigns no risk based on a dataset of 12 parameters and a nonzero risk with a dataset of six parameters. The LISHAE method assigns a risk level of 3, expressing a significant risk.

4.4 Comparison

To supplement the maps of lightning strike risk presented previously for the three methods, statistical results on the risk level have been calculated for 05/09/2016 at 09:00 UTC. The results are summarized in Table 5. In the ARPEGE model, the North Atlantic Ocean from 1°S to 70°N latitude and from 100°W to 1°E longitude is composed of 21,592 grid elements. On the May 9, 2016, in the time interval [07:30; 10:30], flashes were detected in 317 meshes over the area. For this date, the LISHAE method, the NN method, and the SET PCR6 method give a rate of correct detection above 90%. The LISHAE method gives no missed lightning flashes at the cost of a false alarm rate of 69%. The NN method and SET PCR6 give false alarm rates around 30%.

For the LISHAE method, the distribution of the risk values of all the meshes classified as risky is indicated in Table 6. For the meshes where a flash was detected (317 meshes), 100% are classified as correct detection and 83% are classified with a risk equal to or above level 3 (Table 7).

For the NN method, out of a total of 317 flashes, nine flashes were missed and 308 correctly predicted (97% correct detection) for the case of 12 predictors (and NN parameter = 0). For the configuration with six predictors (and NN parameter equal to 1) as input, 56 flashes were missed, leading to a correct detection rate of 82%, with a similar FA rate: 26% with six predictors, compared to 28% with 12 predictors.

For the SET method, a 92% rate of correct detection of the flashes is obtained using PCR6, compared to 63% using the averaging rule. This improvement in performance of the SET-PCR6-based method with respect to the SET-averaging-based method is due to better (more effective) management of conflicting information in the combination process of the sophisticated PCR6 rule. The price to pay for this improvement in performance is the complexity of PCR6, which imposes a higher computational burden than the simple (weighted) averaging rule. Our results clearly show the need for advanced methods of conflicting information fusion in this application. Table 8 indicates the distribution of the category of risk, with the PCR6 method, in two cases: on the 294 meshes classified as correct detection (first line in Table 8) and on all the meshes (the 21,592 meshes of the domain). The statistics shown in Table 8 indicate that in 50% of cases the level of risk is above 4 when a mesh is classified as risky and a flash is detected therein. This fact is in agreement with the significance of the risk level; significant risk is associated with levels 4 and 5.

To illustrate the variability of the statistics according to the time step, results for the 03:00 and 06:00 forecasts of May 9, 2016, are given in Table 9. These results can be compared to those presented in Table 5. For all time steps, the three methods reach a correct detection rate above 83%, with false alarm rates varying from 30% for the SET and NN method to 70% for the LISHAE method. These results illustrate the stability of the scores for different time steps.

The results of the confusion matrix were used to plot the receiver operating characteristics (ROC) curve (Fig. 14). The ROC curve helps to summarize the performance of the different methods and to highlight the ability of each method to discern the presence of a lightning strike risk (the level of risk is not taken into account in the method). This curve is plotted using the information on recall and specificity. Recall is the ratio of the correct detection rate to the sum of meshes classified as misses or correct detections. Specificity is the ratio of the number of correct rejections to the sum of meshes classified as false alarms or correct rejections. On the ROC curve, recall is plotted as a function of 1 minus the specificity.

Receiver operating characteristics (ROC) curve for May 9, 2016, at 09:00 UTC. On the x-axis, 1- specificity is plotted. On the y-axis, the recall is plotted. Each color point is associated with one value of the NN parameter, varying from −3 to 6 (value indicated in the caption). The dashed line linking the different colored points corresponds to results from the NN method. The square indicates results from the SET-PCR6 method. The star indicates results from the LISHAE method

On the ROC curve, the dashed line associated with the colored dots represents the NN method (with 12 parameters). Each colored dot corresponds to a given NN parameter (varying from -3 to 6). As seen before, this plot confirms that the best compromise between false alarms and missed flashes is obtained when the NN parameter is around 0. The results for the LISHAE method (star marker) and the SET-PCR6 method (square marker) are overlaid on the NN curve. The point associated with the LISHAE method has a recall of 1 because no flash was missed on this date with this method. On the ROC curve, the point associated with the SET PCR6 method is below the NN curve, meaning that NN may be more interesting from an alert point of view.

5 Discussion and conclusions

In order to estimate the potential in terms of lightning strike risk of the three methods described in Sect. 3, each method was applied to one month of datasets. For each day of May 2016 and the eight time steps (03:00, 06:00, 09:00, 12:00, 15:00, 18:00, 21:00, 24:00 UTC), a dataset composed of the 12 predictors was constructed based on the ARPEGE simulation runs at 00:00 UTC and 12:00 UTC of each day. For the time steps 03:00, 06:00, 09:00, and 12:00, the time production of 00:00 UTC was chosen with the lead time varying from 3 to 12 h, whereas for the time step of 15:00, 18:00, 21:00, 24:00, the time production of 12:00 UTC was chosen with the lead time varying from 3 to 12 h. These choices allow short lead times to be used in order to have the associated smaller errors instead of longer lead times, where errors can be significant. For each mesh k, we estimate the lightning strike risk using the three methods and compare it to the flashes detected with the WWLLN (as done before). All the meshes between latitude 1°S to 71°N and longitude 101°W to 10°E with a resolution of 0.5° × 0.0.5° have been considered to calculate the confusion matrix value (cf. Figure 4). In order to allow for temporal variability (seasonal and diurnal), thresholds (for the LISHAE method) and a learning dataset (for the NN method) have been adapted for each time step. Statistical results for the month of May 2016 are shown in Table 10.

The results, for one month, indicate that more than 89% of meshes where flashes were detected by the WWLLN are correctly classified by the LISHAE method, the NN method, and the SET PCR6 method. We note a large fluctuation in the false alarm rate from one method to another, from 71% with the LISHAE method to 28% with the NN method. Nevertheless, a high false alarm rate must be considered with caution, because the dataset used to establish the confusion matrix does not allow access to the total lightning activity. One of the disadvantages of the WWLLN is the poor detection of IC flashes. This type of lightning flash radiates in very high frequencies (VHF) and does not propagate over the same distances as very low frequencies (the range in which the WWLLN works). Consequently, an area classed as a false alarm by our verification method should be weighted by the possibility that the atmospheric conditions are close to a lightning strike risk. Thus, if an aircraft flies in these environmental conditions, it may trigger a flash or intercept an inter- or intra-cloud flash (very few of these types of flash are detected by the WWLLN). However, as mentioned before, to cover the area under investigation, and since one aim of the method is to cover a wide area above the ocean, the WWLLN is one of the main usable networks. New sensors embedded in satellites, such as the GLM (Global Lightning Mapper) in the latest generation of GOES satellites, detecting both intra-cloud (IC) and CG flashes, will help to adjust the confusion matrix and test the different methods for future specific areas. The GLM sensor (Goodman 2013) is an optical sensor, detecting total lightning activity. Bürgesser (2017) has shown that WWLLN is good enough to detect the main features of tropical lightning despite low detection efficiency in some areas. Moreover, he indicates that optical detection systems such as GLM can also miss a significant number of lightning events, particularly CG flashes in the lower part of a thunderstorm. In conclusion, the best way to ensure global coverage of all types of lightning will be to use the different types of sensor (ground sensors such as WWLLN, and sensors embedded in satellites, such as GLM).

To supplement the statistics shown in Table 10, statistics on the category of risk obtained for the LISHAE method (Table 11) and the SET-PCR6 method (Table 12) have been calculated. For the LISHAE method, we observe that about 30% of all the meshes of the domain are classified in category 0 (no risk), 1, or 2 (low risk). When flashes are detected by the WWLLN in a mesh classified as risky, the main risk category assigned to this mesh is classified as level 3 or above, indicating a significant risk.

For the SET PCR6 method, 67% of meshes are classified as no risk, compared to the LISHAE method with 27% of meshes and 25% of meshes classified with a risk equal to 2 or 3 (associated with a low or moderate risk). When flashes have been detected by the WWLLN in a mesh classified as risky, the categories of risk are around 25% for categories with important risk (categories 4 and 5), corresponding to a total of 50% of meshes, similar to the LISHAE method.

To conclude, the estimation of lightning strike hazard is essential for mission planning in order to avoid extreme weather along aircraft flight trajectories. Knowledge of this parameter will help to alert and to anticipate the potential damage incurred by UAVs or aircraft during their flights, as well as improving passenger comfort. The information on lightning strike risks can be used at different timescales: at near real time to raise an alert on a specific area, or at short- and long-range times for air traffic management and flight scheduling. Our study relates to the second issue. The short-range estimation of lightning strike risk currently relies solely on the use of NWP model outputs. In this study, three methods have been developed or adapted and used as diagnostic tools with the same dataset as input. All the methods chosen can be easily adaptable to a specific area. Importantly, few changes are needed for the LISHAE and SET methods (only the adaptation of the thresholds), while a new learning base is required for the NN method. Although this would not be difficult to generate, it still constitutes a slight disadvantage of the NN method. Comparison of the results has highlighted the advantages and drawbacks of each method. To summarize, the main characteristics of each method are shown in Table 13. The execution time, the complexity, and the amount of information have been added to the advantages and drawbacks to compare the methods. All the methods run in less than 5 min for one time step and 21,592 meshes (without counting the preparation time: training time for the NN method, or statistical computation for the thresholds used in the LISHAE or SET methods).

The first method, LISHAE, is based on the use of weightings for data fusion and comparison of the meteorological predictors with thresholds. These thresholds are physical values or statistical values. Good results are obtained for this method when the estimate with risk level is compared with flashes detected by a ground location system (WWLLN in this study). The main drawback is the high number of false alarms.

The second method relies on the use of a neural network method. The problem of lightning strike risk is a good framework for the application of NN, thanks to a large amount of data to create the database for the learning and test phases. The method provides binary information as output. The method has shown a good ability to estimate the lightning strike risk. The main drawback is that the risk area relies on the features highlighted by all parameters. Some areas where few meteorological parameters highlight the risk (even though they are the most relevant in view of the issue under consideration) are not classified as risky. This behavior is well known with the use of NN methods and would require more adjustment and development.

The third method, SET, is based on the use of belief functions. This method has demonstrated good performance for the estimation of lightning strike risk. One advantage is the presence of a map of confidence as output, which provides additional information that helps to analyze a lightning strike hazard map in depth. Although the rate of missed flashes for this method is 10%, it demonstrates high rates of correct detection and correct rejection, with a moderate rate of false alarms.

All the methods provide relevant information on lightning strike risk. Each method has its advantages and drawbacks. The choice of one method over another will depend on the application targeted by the user. If we desire warning information, independently of the risk-level indication, the NN method is interesting, with high rates of correct detection and correct rejection, while demonstrating a low rate of missed flashes (4.4% for the tested month) and a moderate number of false alarms. In cases where more accurate information on the risk level is needed, the use of the LISHAE or SET methods is preferred. The choice between the two methods will depend on the trade-off that the user is willing to accept, between a very low rate of missed flashes (0.95%) obtained with LISHAE, at the cost of a high false alarm rate, and a moderate false alarm rate (30%) at the cost of a higher rate of missed flashes (10%) for the SET method. The first case could be favored in instances where a high level of safety is required, and the second case could be favored for economic reasons, for instance.

Moreover, the study of lightning strike risk at short-range forecast times could be improved with the estimation of a probability and not just a risk threshold. One of the shortcomings of these methods is the need for some applications to know a probability and a confidence level, and not just a risk level. Among the three methods tested in this work, only the SET method provides probabilistic information. On the other hand, the estimation of electrical activity probability could be improved by considering observations such as lightning location using fusion methods (e.g., fuzzy logic scheme) (James et al. 2018; Köhler et al. 2017). Some preliminary work is being conducted by the team to explore this avenue of research.

Change history

18 January 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11069-022-05684-2

References

Abarca S, Corbosiero K, Galarneau T (2010) An evaluation of the Worldwide Lightning Location Network (WWLLN) using the National Lightning Detection Network (NLDN) as ground truth. J Geop Res 115:11p

Abdallah NB, Monhous-Voyneau N, Denoeux T (2014) Combining statistical and expert evidence using belief functions: application to centennial sea level estimation taken into account climate change. Int J Approx Reason 55:341–354. https://doi.org/10.1016/j.ijar.2013.03.008

Anderson G, Klugmann D (2014) A European lightning density analysis using 5 years of ATDnet data. Nat Hazard Earth Sys Sci 14:815–829. https://doi.org/10.5194/nhessd-1-6877-2013

Bankert R (1994) Cloud classification of AVHRR imagery in maritime regions using a probabilistic neural network. J App Meteor 33:909–918. https://doi.org/10.1175/1520-0450(1994)033%3c0909:CCOAII%3e2.0.CO;2

Barnes AA, Frankel DS (1990) the investigation of the prediction of lightning strikes using neural networks. AIAA 90:484. https://doi.org/10.2514/6.1990-484

Bechtold P, Semane N, Lopez P, Chaboureau J-P, Beljaars A, Bormann N (2014) Representing equilibrium and non-equilibrium convection in large-scale models. J Atmos Sci 71:734–753. https://doi.org/10.1175/JAS-D-13-0163.1

Berthomier L, Pradel B, Perez L (2020) Cloud cover nowcasting with deep learning. In: 10th International conference on image processing theory, tools and application, Paris, France

Betz HD, Schmidt K, Oettinger WP, Montag B (2008) Cell tracking with lightning data from LINET. Adv Geosci 17:55–61. https://doi.org/10.5194/adgeo-17-55-2008

Bouttier F, Marchal H (2020) Probabilistic thunderstorm forecasting by blending multiple ensemble. Tellus A Dyn Meteorol Oceanogr 72:1–20. https://doi.org/10.1080/16000870.2019.1696142

Bright D, Wandishim MS, Jewell RE, Weiss SJ (2005) A physically based parameter for lightning prediction and its calibration in ensemble forecasts, conference on meteorological applications of lightning data

Bürgesser RE (2017) Assessment of the World Wide Lightning Location Network (WWLLN) detection efficiency by comparison to the Lightning Imaging Sensor (LIS). Quater J Royal Meteorol Soc 143:2809–2817

Burrows WR, Price W, Wilson LJ (2005) Warm season lightning probability prediction for Canada and the Northern United States. Weather Forecast 20:971–988. https://doi.org/10.1002/qj.3129

Cherrier N, Castaings T, Boulch A (2017) Deep sequence-to-sequence neural networks for ionospheric activity map prediction. In: International Conference on Neural Information Processing, Springer, Cham

Collins WG, Tissot P (2016) Thunderstorm predictions using artificial neural networks. In: Artificial neural networks models and applications. InTech, London doi:https://doi.org/10.5772/63542.

Cummins KL, Philip KE, Malone MD (1998) The U.S. national lightning detection network and applications of cloud-to-ground lightning by electric power utilities. IEEE Trans Electromagn Compatibil 40:465–480. https://doi.org/10.1109/15.736207

Courtier P, Freydier C. Geleyn J.-F., Rabier F. Rochas M. (1991) The ARPEGE project at Météo-France, Proceedings of 1991 ECMWG Seminar on Numerical Methods in Atmospheric Models, ECMWF Reading, UK, pp. 193–231

Danielson JJ, Gesh DB (2011) Global Multi-resolution terrain elevation data 2010 (GMTED2010), Technical report, US. Geological Survey

Dezert J, Smarandache F (2008) A new probabilistic transformation of belief mass assignment. In: Proceedings of Fusion, Cologne, Germany

Dezert J., Tacnet J.-M. (2012a) Soft ELECTRI TRI outranking method based on belief functions. In: Proceedings of Fusion 2012a, Conference, Singapore

Dezert J, Tacnet J.M. (2012b) Sigmoidal model for belief function-based ELECTRE TRI method. In: Proceedings of Belief 2012b, Compiègne, France

Dezert J, Han D, Tacnet J-M, Carladous S, Yang Y (2016) Decision-making with belief interval distance. In: Proceedings of belief 2016, International Conference Prague, CZ, pp. 21–23 September 2016

Dezert J., Bouchard A., Buguet M. (2021a) Multi-criteria information fusion for storm prediction based on belief functions. In: Fusion 2021a Conference, South Africa, November 2021a

Dezert T, Dezert J, Smarandache F (2021) Improvement of proportional conflict redistribution rules of combination of basic belief assignments. J Adv Inf Fusion 16(1):48–74

Dixon M, Wiener G (1993) TITAN: thunderstorm identification, tracking, analysis and nowcasting—a radar based methodology. J Atmos Oceanic Tech 10:757–797. https://doi.org/10.1175/1520-0426(1993)010%3c0785:TTITAA%3e2.0.CO;2

Dowden RL, Brundell JB, Rodger CJ (2002) VLF lightning location by time of group (TOGA) at multiple sites. J Atmos Solar-Terres Phys 64:817–830. https://doi.org/10.1016/S1364-6826(02)00085-8

EASA document (2018) Weather Information to Pilots Strategy Paper, an outcome of the all weather Operations Project, Air Traffic Management Air Navigation Services Development, p 45

FAA publication (2008) Thunderstorms – don’t flirt … skirt ‘em, 2008, FAA P-8740-12

FAA AC 00-24C (2013) Advisory circular, thunderstorms, US department of Transportation, Federal Aviation Administration

Galway JG (1956) The lifted index as a predictor of latent instability. Bull Amer Meteor Soc. https://doi.org/10.1175/1520-0477-37.10.528

Goodman S (2012) GLM lightning cluster filter algorithm. NOAA/NESDIS, p 73