Abstract

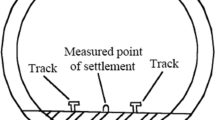

Predicting soil settlement has become an important research area. The theory of predicting soil settlement under static load is comparatively mature, whereas methods for predicting soil settlement under dynamic loading are still at an exploratory stage. This paper aimed to find a suitable model for predicting the long-term settlement of subway tunnels, taking the settlement monitoring data of Shanghai Subway Line 1 as a case study. Current nonlinear methods for settlement prediction were summarized. A fitting method was introduced and applied to the settlement data of the Shanghai subway tunnel; the correlation coefficient r of the fitting results remained high in most cases, which supports the validity of segmented simulation. Two prediction methods and their application procedures were introduced: the Grey Model GM (1, 1) and the Auto-Regressive Moving Average model ARMA (n, m). The settlement trend of Shanghai Subway Line 1 was predicted with both the GM (1, 1) and the ARMA (n, m) model. The results show that the ARMA (n, m) model is more accurate than GM (1, 1). As a new method in the field of settlement prediction, the ARMA (n, m) model is promising for future use.


References

Cheng P, Huang T, Li GH (2010) Prediction and analysis of structure settlement of the metro tunnel based on TGM-ARMA model. Geotech Invest Surv 12:66–69 (in Chinese)

Deng JL (1982) Control problems of grey systems. Syst Control Lett 1(5):288–294

Deng JL (2002) The basis of grey theory. Press of Huazhong University of Science & Technology, Wuhan (in Chinese)

George E, Gwilym M, Gregory C (1997) Time series analysis: forecasting and control. China Statistics Press, Beijing (in Chinese)

Li WX, Liu L, Dai LF (2010) Fuzzy probability measures (FPM) based non-symmetric membership function: engineering examples of ground subsidence due to underground mining. Eng Appl Artif Intell 23:420–431

Li GF, Huang T, Xi GY et al (2011) Review of researches on settlement monitoring of metro tunnels in soft soil during operation period. J Hohai Univ (Natl Sci) 39(3):277–284 (in Chinese)

Li WX, Li JF, Wang Q et al (2012) SMT-GP method of prediction for ground subsidence due to tunneling in mountainous areas. Tunn Undergr Space Technol 32:198–211

Liu XZ, Chen GX (2008) Advances in researches on mechanical behavior of subgrade soils under repeated-load of high-speed track vehicles. J Disaster Prev Mitig Eng 28(2):248–255 (in Chinese)

Liu SF, Dang YG, Fang ZG et al (2010) Grey system theory and its application. Science Press, Beijing (in Chinese)

Monismith CL, Ogawa N, Freeme CR (1975) Permanent deformation characteristics of subgrade soils due to repeated loading. Transportation Research Board, Washington, DC

Santos OJ, Celestino TB (2008) Artificial neural networks analysis of Sao Paulo subway tunnel settlement data. Tunn Undergr Space Technol 23(5):481–491

Tang YQ, Cui ZD, Wang JX et al (2008) Application of grey theory-based model to prediction of land subsidence due to engineering environment in Shanghai. Environ Geol 55(3):583–593

Wang Y, Tang Y, Li R (2010) Gray prediction and analysis on tunnel soil settlement under metro load. Subgrade Eng 05:4–7 (in Chinese)

Wang W, Su JY, Hou BW et al (2012) Dynamic prediction of building subsidence deformation with data-based mechanistic self-memory model. Chin Sci Bull 57(26):3430–3435

Xu JC, Xu YW (2011) Grey correlation-hierarchical analysis for metro-caused settlement. Environ Earth Sci 64:1249–1256

Zheng YL, Han WX, Tong QH et al (2005) Study on longitudinal crack of shield tunnel segment joint due to asymmetric settlement in soft soil. Chin J Rock Mech Eng 24(24):4552–4558 (in Chinese)

Acknowledgments

The work presented in this paper was supported by the National Natural Science Foundation of China (Grant No. 51208503) and the Natural Science Foundation of Jiangsu Province, China (Grant No. BK2012133).


Appendices

Appendix 1: GM (1, 1)

1.1 Definition of grey model

Grey theory, originally developed by Deng (1982), addresses model uncertainty and insufficient information in analyzing and understanding systems through conditional analysis, prediction and decision-making. A system whose structure, parameters and characteristics are partially unknown is called a grey system. In information research, light and deep colors represent information that is clear and ambiguous, respectively. Black indicates that the researchers have no knowledge at all of the system structure, parameters and characteristics, whereas white indicates that the information is completely clear. Colors between black and white indicate systems that are only partially clear, i.e., grey systems. A grey model describes a grey system mathematically. In GM (p, q), p denotes the number of times the accumulating generating operator (AGO) is applied, and q denotes the number of unknowns. Therefore, GM (1, 1) is a grey model built on one accumulating generating operation (1-AGO) with one unknown, and GM (2, 1) is a grey model built on two accumulating generating operations (2-AGO) with one unknown.

1.2 Model building

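Assume that the raw data sequence is

\[ x^{(0)} = \left( x^{(0)}(1), x^{(0)}(2), \ldots, x^{(0)}(n) \right) \tag{1} \]

After one accumulating generating operation (1-AGO), a new sequence is obtained:

\[ x^{(1)} = \left( x^{(1)}(1), x^{(1)}(2), \ldots, x^{(1)}(n) \right) \tag{2} \]

where \(x^{(1)}(i) = \sum_{j=1}^{i} x^{(0)}(j)\), \(i = 1, 2, \ldots, n\).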

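The background value sequence is constructed from \(x^{(1)}\):

\[ z^{(1)} = \left( z^{(1)}(2), z^{(1)}(3), \ldots, z^{(1)}(n) \right) \tag{3} \]

where \(z^{(1)}(k) = \alpha x^{(1)}(k-1) + (1-\alpha) x^{(1)}(k)\), \(k = 2, 3, \ldots, n\).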
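The 1-AGO and the background values are simple running computations. The following Python sketch assumes the raw data are held in a plain list. The function names `ago` and `background` and the sample values are hypothetical and are not data from the paper.

```python
from itertools import accumulate


def ago(x0):
    """1-AGO: x1(i) = x0(1) + x0(2) + ... + x0(i)."""
    return list(accumulate(x0))


def background(x1, alpha=0.5):
    """Background values z1(k) = alpha * x1(k-1) + (1 - alpha) * x1(k), k = 2..n.

    The returned list starts at k = 2, so it has one element fewer than x1.
    """
    return [alpha * x1[k - 1] + (1 - alpha) * x1[k] for k in range(1, len(x1))]


# Hypothetical raw sequence (not data from the paper)
x0 = [2.0, 3.0, 4.0, 5.0]
x1 = ago(x0)         # [2.0, 5.0, 9.0, 14.0]
z1 = background(x1)  # [3.5, 7.0, 11.5]
print(x1, z1)
```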

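Usually α = 0.5 is adopted, and the shadow equation is built:

\[ \frac{\mathrm{d}x^{(1)}}{\mathrm{d}t} + a x^{(1)} = b \tag{4} \]

This is the original form of GM (1, 1). After discretization, a new equation is obtained:

\[ x^{(0)}(k) + a z^{(1)}(k) = b, \quad k = 2, 3, \ldots, n \tag{5} \]

where a and b are undetermined coefficients.

This is the basic form of GM (1, 1).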

1.3 Solving the parameters

Using the least-squares technique, the parameters are estimated as

\[ \hat{\mathbf{a}} = \left[ a, b \right]^{T} = \left( B^{T} B \right)^{-1} B^{T} Y_{n} \tag{6} \]

where \(B = \begin{bmatrix} -z^{(1)}(2) & 1 \\ -z^{(1)}(3) & 1 \\ \vdots & \vdots \\ -z^{(1)}(n) & 1 \end{bmatrix}\), \(Y_{n} = \begin{bmatrix} x^{(0)}(2) \\ x^{(0)}(3) \\ \vdots \\ x^{(0)}(n) \end{bmatrix}\).
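The least-squares step can be written out without a matrix library, because \(B^{T}B\) is only 2 × 2. Below is a minimal sketch. It assumes the background values defined above, with α = 0.5 by default. The helper name `gm11_params` is hypothetical.

```python
from itertools import accumulate


def gm11_params(x0, alpha=0.5):
    """Least-squares estimate of (a, b) in x0(k) + a * z1(k) = b, k = 2..n.

    Solves the 2x2 normal equations (B^T B)[a, b]^T = B^T Y_n directly,
    where the rows of B are (-z1(k), 1) and Y_n = (x0(2), ..., x0(n)).
    """
    if len(x0) < 3:
        raise ValueError("need at least 3 observations")
    x1 = list(accumulate(x0))
    z = [alpha * x1[k - 1] + (1 - alpha) * x1[k] for k in range(1, len(x1))]
    y = x0[1:]
    m = len(z)
    s_z, s_zz = sum(z), sum(v * v for v in z)
    s_y, s_zy = sum(y), sum(zi * yi for zi, yi in zip(z, y))
    # B^T B = [[s_zz, -s_z], [-s_z, m]],  B^T Y_n = [-s_zy, s_y]
    det = s_zz * m - s_z * s_z
    a = (-m * s_zy + s_z * s_y) / det
    b = (-s_z * s_zy + s_zz * s_y) / det
    return a, b
```

A geometric series gives a quick sanity check. For \(x^{(0)}(k) = r^{k-1}\), the basic form holds exactly with \(a = -2(r-1)/(r+1)\) and \(b = 2/(r+1)\). So `gm11_params([1.0, 1.2, 1.44, 1.728])` returns approximately (-0.1818, 0.9091).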

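(a) Building the prediction function. Solving the shadow equation with the estimated a and b gives the time-response function. The predicted values of the raw sequence are then restored by the inverse accumulating generating operation:

\[ \hat{x}^{(1)}(k+1) = \left( x^{(0)}(1) - \frac{b}{a} \right) e^{-ak} + \frac{b}{a}, \quad k = 0, 1, 2, \ldots \tag{7} \]

\[ \hat{x}^{(0)}(k+1) = \hat{x}^{(1)}(k+1) - \hat{x}^{(1)}(k), \quad k = 1, 2, \ldots \tag{8} \]

with \(\hat{x}^{(0)}(1) = x^{(0)}(1)\).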
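Combining the parameter estimate with the time-response and restoration steps gives a complete GM (1, 1) forecaster. This is a sketch under the same assumptions as the text: α = 0.5 and \(\hat{x}^{(0)}(1) = x^{(0)}(1)\). The name `gm11_forecast` and its `horizon` parameter are hypothetical. The least-squares step is repeated so that the block runs on its own.

```python
import math
from itertools import accumulate


def gm11_forecast(x0, horizon=0, alpha=0.5):
    """Fit GM(1,1) and return (a, b, x0_hat).

    x0_hat holds the fitted values x0_hat(1..n) followed by `horizon`
    out-of-sample predictions.
    """
    x1 = list(accumulate(x0))
    z = [alpha * x1[k - 1] + (1 - alpha) * x1[k] for k in range(1, len(x1))]
    y = x0[1:]
    m = len(z)
    s_z, s_zz = sum(z), sum(v * v for v in z)
    s_y, s_zy = sum(y), sum(zi * yi for zi, yi in zip(z, y))
    det = s_zz * m - s_z * s_z
    a = (-m * s_zy + s_z * s_y) / det
    b = (-s_z * s_zy + s_zz * s_y) / det
    if a == 0:
        raise ValueError("a = 0: the time-response function is undefined")
    # Time response: x1_hat(k+1) = (x0(1) - b/a) * exp(-a k) + b/a, k = 0, 1, ...
    x1_hat = [(x0[0] - b / a) * math.exp(-a * k) + b / a
              for k in range(len(x0) + horizon)]
    # Inverse AGO: x0_hat(1) = x1_hat(1), x0_hat(k+1) = x1_hat(k+1) - x1_hat(k)
    x0_hat = [x1_hat[0]] + [x1_hat[k] - x1_hat[k - 1] for k in range(1, len(x1_hat))]
    return a, b, x0_hat
```

For example, `gm11_forecast([1.0, 1.2, 1.44, 1.728, 2.0736], horizon=2)` returns seven values. The first five track the input to within about 0.5 %, and the last two extend the growth trend.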

(b) Methods of model accuracy checking

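Determine the residual error \(\Delta_k\) and the relative error \(\varepsilon(k)\):

\[ \Delta_k = x^{(0)}(k) - \hat{x}^{(0)}(k) \tag{9} \]

\[ \varepsilon(k) = \frac{\left| \Delta_k \right|}{x^{(0)}(k)} \times 100\% \tag{10} \]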

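Determine the mean of the raw data and the mean of the residual errors:

\[ \bar{x} = \frac{1}{n} \sum_{k=1}^{n} x^{(0)}(k) \tag{11} \]

\[ \bar{\Delta} = \frac{1}{n} \sum_{k=1}^{n} \Delta_k \tag{12} \]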

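Determine the variance of the raw data \(s_1^2\) and the variance of the residual errors \(s_2^2\). Then define the variance ratio C and the small error probability p:

\[ s_1^2 = \frac{1}{n} \sum_{k=1}^{n} \left( x^{(0)}(k) - \bar{x} \right)^2 \tag{13} \]

\[ s_2^2 = \frac{1}{n} \sum_{k=1}^{n} \left( \Delta_k - \bar{\Delta} \right)^2 \tag{14} \]

\[ C = \frac{s_2}{s_1} \tag{15} \]

\[ p = P\left\{ \left| \Delta_k - \bar{\Delta} \right| < 0.6745\, s_1 \right\} \tag{16} \]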

The prediction accuracy indexes of the model are summarized in Table 2.
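The accuracy indexes can be computed directly from the raw and fitted sequences. Below is a minimal sketch. It assumes that relative errors are taken in absolute value, and that p is estimated as the fraction of residuals meeting the usual small-error condition \(|\Delta_k - \bar{\Delta}| < 0.6745\, s_1\). The name `posterior_check` is hypothetical. The grading thresholds of Table 2 are not reproduced here.

```python
import math


def posterior_check(x0, x0_hat):
    """Residual-based accuracy indexes of a fitted series.

    Returns (relative_errors_in_percent, C, p), with C = s2 / s1 and p the
    share of residuals satisfying |Delta_k - mean(Delta)| < 0.6745 * s1.
    """
    n = len(x0)
    delta = [x - xh for x, xh in zip(x0, x0_hat)]             # residual errors
    rel = [abs(d) / x * 100.0 for d, x in zip(delta, x0)]     # relative errors (%)
    x_bar = sum(x0) / n
    d_bar = sum(delta) / n
    s1 = math.sqrt(sum((x - x_bar) ** 2 for x in x0) / n)     # std. dev. of raw data
    s2 = math.sqrt(sum((d - d_bar) ** 2 for d in delta) / n)  # std. dev. of residuals
    c = s2 / s1                                                # variance ratio C
    p = sum(abs(d - d_bar) < 0.6745 * s1 for d in delta) / n   # small error probability
    return rel, c, p
```

A smaller C and a larger p indicate a better fit. A perfect fit gives C = 0 and p = 1.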

GM (2, 1) differs from GM (1, 1) in formula (2), which is modified as follows:

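Assume that the raw data sequence is

\[ x^{(0)} = \left( x^{(0)}(1), x^{(0)}(2), \ldots, x^{(0)}(n) \right) \tag{17} \]

After two accumulating generating operations (2-AGO), a new sequence is obtained:

\[ x^{(2)} = \left( x^{(2)}(1), x^{(2)}(2), \ldots, x^{(2)}(n) \right) \tag{18} \]

where \(x^{(2)}(i) = \sum_{j=1}^{i} x^{(1)}(j)\) and \(x^{(1)}(i) = \sum_{j=1}^{i} x^{(0)}(j)\), \(i = 1, 2, \ldots, n\).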

When solving for the parameters, formulae (6), (7) and (8) are applied twice. The first pass yields \(\hat{x}^{(1)}(i)\), and the second yields \(\hat{x}^{(0)}(i)\).
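Under the 2-AGO definition used in this appendix, the forward operation is the running sum applied twice. Restoring \(\hat{x}^{(0)}\) from a fitted \(\hat{x}^{(2)}\) means undoing it twice by first-order differencing. Below is a small sketch. The helper names `ago` and `inverse_ago` are hypothetical.

```python
from itertools import accumulate


def ago(seq, times=1):
    """Apply the accumulating generating operation `times` times."""
    out = list(seq)
    for _ in range(times):
        out = list(accumulate(out))
    return out


def inverse_ago(seq, times=1):
    """Undo `times` AGO passes by first-order differencing, keeping the first term."""
    out = list(seq)
    for _ in range(times):
        out = out[:1] + [out[k] - out[k - 1] for k in range(1, len(out))]
    return out


x0 = [1.0, 2.0, 3.0]
x2 = ago(x0, times=2)               # x1 = [1, 3, 6], x2 = [1, 4, 10]
assert inverse_ago(x2, times=2) == x0
```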

Appendix 2: ARMA (n, m) model

2.1 Definition of ARMA (n, m) model

The ARMA (n, m) model combines an auto-regressive model of order n, AR (n), and a moving average model of order m, MA (m).

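The basic AR (n) model is:

\[ x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_n x_{t-n} + \varepsilon_t \tag{19} \]

where \(\phi_i\) (i = 1, 2, …, n) are the parameters to be determined once the order n of the AR model is fixed, and \(\varepsilon_t\) is the error term.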

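The basic MA (m) model is:

\[ x_t = \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \cdots - \theta_m \varepsilon_{t-m} \tag{20} \]

where \(\theta_i\) (i = 1, 2, …, m) are the parameters to be determined once the order m of the MA model is fixed.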

The AR model is derived from regression analysis. The prediction target \(x_t\) is influenced by its own past values, so the next \(x_t\) can be predicted from a weighted sum of the previous n terms. Because \(\varepsilon_t\) in formula (19) is random and unpredictable, only the previous m error terms can be used to fit the error term. This gives the MA model.

Because it is based on n historical values and m previous prediction errors, the ARMA model relies largely on previous data. The basic form of the ARMA (n, m) model is (George et al. 1997)

\[ x_t - \phi_1 x_{t-1} - \phi_2 x_{t-2} - \cdots - \phi_n x_{t-n} = \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \cdots - \theta_m \varepsilon_{t-m} \tag{21} \]

where \(\{x_t\}\) is the raw data series. \(\phi_1, \phi_2, \ldots, \phi_n\) are the auto-regressive parameters, \(\theta_1, \theta_2, \ldots, \theta_m\) are the moving average parameters, and \(\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_t\) is the white noise series. n is the number of historical values related to \(x_t\), and m is the number of previous prediction errors related to \(\varepsilon_t\); order selection is illustrated below. By this definition, ARMA (1, 1) is a model whose result depends on one historical value and one previous prediction error. The modeling steps are as follows.
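Once past values and past errors are known, the ARMA recursion becomes a one-step predictor: the unknown current error \(\varepsilon_t\) is replaced by its mean, zero. The sketch below assumes the Box-Jenkins sign convention (minus signs on the \(\theta_i\)) and a zero-mean series. The name `arma_predict` is hypothetical. In practice, the past errors would be the residuals of earlier one-step predictions.

```python
def arma_predict(phi, theta, past_x, past_eps):
    """One-step prediction of x_t from an ARMA(n, m) model written as
    x_t = phi_1 x_{t-1} + ... + phi_n x_{t-n} + eps_t - theta_1 eps_{t-1} - ... - theta_m eps_{t-m}.

    past_x and past_eps are ordered oldest -> newest; eps_t itself is
    replaced by its expected value, 0.
    """
    if len(past_x) < len(phi) or len(past_eps) < len(theta):
        raise ValueError("not enough history for the requested orders")
    # phi_1 multiplies the most recent value, phi_2 the one before it, and so on
    ar_part = sum(p * x for p, x in zip(phi, reversed(past_x)))
    ma_part = sum(t * e for t, e in zip(theta, reversed(past_eps)))
    return ar_part - ma_part
```

Take ARMA (1, 1) with \(\phi_1 = 0.5\) and \(\theta_1 = 0.3\). If the last value is 2.0 and the last error is 1.0, the prediction is 0.5 × 2.0 − 0.3 × 1.0 = 0.7.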

2.2 Series stationarity analysis

Stationarity of the time series is the prerequisite for ARMA modeling. In this modeling, the runs test was used with a significance level α = 0.05. In practice, however, many time series are not stationary and must first be transformed mathematically; the most common transformation is differencing. The series to be modeled should also be non-white noise, since a white noise series has no autocorrelation structure that could be used for prediction.
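The sketch below assumes a common variant of the runs test. It counts runs above and below the series mean and uses the normal approximation, so |Z| ≤ 1.96 corresponds to α = 0.05. A series that fails is differenced and tested again. The function names are hypothetical.

```python
import math


def runs_test_z(series):
    """Runs test about the mean: returns the normal-approximation Z statistic.

    Values equal to the mean are dropped. |Z| <= 1.96 gives no evidence
    against stationarity at the 0.05 level.
    """
    mean = sum(series) / len(series)
    above = [v > mean for v in series if v != mean]
    n1 = sum(above)
    n2 = len(above) - n1
    if n1 == 0 or n2 == 0:
        raise ValueError("all values lie on one side of the mean")
    n = n1 + n2
    runs = 1 + sum(1 for u, v in zip(above, above[1:]) if u != v)
    mu = 2 * n1 * n2 / n + 1
    var = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1))
    if var <= 0:
        raise ValueError("series too short for the runs test")
    return (runs - mu) / math.sqrt(var)


def difference(series, lag=1):
    """Differencing y_t = x_t - x_{t-lag} (first-order, lag 1 by default)."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]
```

A steadily increasing settlement record produces very few runs and a large negative Z. Differencing it once is the usual first remedy.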

2.3 Model identification and order selection

After the stationarity analysis, the model must be identified and its orders selected. Several methods can determine the parameters n and m; two of them are described here. The first uses the autocorrelation function (ACF) and the partial autocorrelation function (PACF) calculated with the software EViews. The lag after which the PACF cuts off gives the parameter n, and the lag after which the ACF cuts off gives the parameter m. The second is the Akaike information criterion (AIC). Assume that the orders (n and m) of the ARMA model are a and b, where a and b are natural numbers. The AIC value is obtained from the following function:

where \(\hat{\sigma}_{a,b}^{2}\) is the estimated variance of the ARMA (a, b) model and N is the number of samples.
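In the standard Box-Jenkins procedure, the AR order is read from the lag after which the PACF cuts off, and the MA order from the lag after which the ACF cuts off. Coefficients inside roughly ±1.96/√N are treated as zero. The sketch below computes both functions in plain Python as a stand-in for the EViews correlogram, with the PACF obtained by the Durbin-Levinson recursion. The names are hypothetical, and reading a cut-off from a short series remains a judgment call.

```python
import math


def acf(x, max_lag):
    """Sample autocorrelations r_1..r_max_lag (biased estimator, divisor N)."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d) / n
    return [sum(d[t] * d[t + k] for t in range(n - k)) / n / c0
            for k in range(1, max_lag + 1)]


def pacf(x, max_lag):
    """Sample partial autocorrelations phi_11..phi_kk via the Durbin-Levinson recursion."""
    rho = [1.0] + acf(x, max_lag)
    phi_prev, out = [], []
    for k in range(1, max_lag + 1):
        num = rho[k] - sum(phi_prev[j] * rho[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * rho[j + 1] for j in range(k - 1))
        phi_kk = num / den
        # Update phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, then append phi_kk
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        out.append(phi_kk)
    return out


def cutoff_lag(values, n_obs):
    """Last lag whose coefficient lies outside the approximate +/-1.96/sqrt(N) band."""
    band = 1.96 / math.sqrt(n_obs)
    return max((k + 1 for k, v in enumerate(values) if abs(v) > band), default=0)
```

For a stationary series `x`, `cutoff_lag(pacf(x, 10), len(x))` suggests n, and `cutoff_lag(acf(x, 10), len(x))` suggests m.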

The AIC values are compared, and the pair with the minimum value is taken as the suitable pair of n and m. Some special cases should be noted. If the suitable orders are n = 0 and m > 0, the model reduces to an MA (m) model. Similarly, if the suitable orders are n > 0 and m = 0, the model reduces to an AR (n) model. Other common criteria include the standard error, the log-likelihood function value and the Schwarz Bayesian criterion (SBC). Applying the AIC order selection method gave suitable orders of n = 1 and m = 1 for the settlement prediction models, so the ARMA (1, 1) model was chosen as the prediction model.
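Order selection by AIC reduces to evaluating the criterion for each candidate (a, b) and keeping the minimum. The sketch assumes the common form \(\mathrm{AIC}(a, b) = N \ln \hat{\sigma}_{a,b}^{2} + 2(a + b)\). Variants that add a constant or divide by N pick the same order. The residual variances in the example are hypothetical placeholders, not values from the paper, and the helper names are illustrative.

```python
import math


def aic(sigma2, a, b, n_obs):
    """Assumed AIC form for ARMA(a, b): N * ln(sigma2) + 2 * (a + b)."""
    return n_obs * math.log(sigma2) + 2 * (a + b)


def select_order(sigma2_by_order, n_obs):
    """Return the (a, b) pair with the smallest AIC.

    sigma2_by_order maps (a, b) -> estimated residual variance of ARMA(a, b).
    """
    return min(sigma2_by_order,
               key=lambda ab: aic(sigma2_by_order[ab], ab[0], ab[1], n_obs))


def model_name(a, b):
    """Name the selected model, covering the special cases n = 0 and m = 0."""
    if a == 0 and b > 0:
        return f"MA({b})"
    if a > 0 and b == 0:
        return f"AR({a})"
    return f"ARMA({a}, {b})"


# Hypothetical residual variances for three candidate orders, N = 50
candidates = {(1, 0): 1.00, (1, 1): 0.80, (2, 2): 0.79}
best = select_order(candidates, n_obs=50)
print(best, model_name(*best))  # (1, 1) ARMA(1, 1)
```

In the example, ARMA (2, 2) has a slightly smaller variance than ARMA (1, 1). The penalty on its two extra parameters outweighs that gain, so (1, 1) wins.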

2.4 Parameters estimation of the model

Given the selected model and its orders, the parameters \(\phi_i\) and \(\theta_i\) in formula (21) are estimated. Common methods of parameter estimation are moment estimation, maximum likelihood estimation and least-squares estimation.
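Of the estimation methods listed, least squares is the simplest to sketch. For ARMA (1, 1), the conditional sum of squares can be minimized by a coarse grid search over (φ, θ) in (−1, 1) × (−1, 1). This is a crude illustration; `css` and `fit_arma11` are hypothetical names. It assumes a zero-mean series and takes the error before the first observation as zero. A real analysis would use a numerical optimizer or a statistics package instead.

```python
def css(x, phi, theta):
    """Conditional sum of squares of ARMA(1,1) errors,
    e_t = x_t - phi * x_{t-1} + theta * e_{t-1}, with the first error taken as 0."""
    e_prev, total = 0.0, 0.0
    for t in range(1, len(x)):
        e = x[t] - phi * x[t - 1] + theta * e_prev
        total += e * e
        e_prev = e
    return total


def fit_arma11(x, resolution=100):
    """Grid-search least-squares estimate of (phi, theta) over (-1, 1) x (-1, 1).

    x is assumed to be a zero-mean (e.g. mean-removed or differenced) series.
    """
    grid = [k / resolution for k in range(-resolution + 1, resolution)]
    return min(((p, q) for p in grid for q in grid),
               key=lambda pq: css(x, pq[0], pq[1]))
```

The default resolution of 100 evaluates 199 × 199 grid points. That is acceptable for series of a few hundred values.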

2.5 Model test

The last step is to test and analyze the residual errors. When the residual errors form a white noise series, the fitted model is effective. The autocorrelation coefficients \(\rho_1, \rho_2, \ldots, \rho_k\) of the residuals up to lag k are calculated, and the \(\chi^2\) statistic \(F_k\) is constructed as follows. \(F_k\) follows a \(\chi^2\) distribution with k degrees of freedom.

where n is the number of residuals.
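The sketch below assumes the Box-Pierce form \(F_k = n \sum_{i=1}^{k} \rho_i^2\). The Ljung-Box statistic \(n(n+2)\sum_{i=1}^{k} \rho_i^2/(n-i)\) is a common small-sample alternative. The chi-square critical value is passed in rather than computed, which keeps the block free of dependencies. When the residuals come from a fitted ARMA model, Box and Jenkins reduce the degrees of freedom by the number of estimated ARMA parameters. The function names are hypothetical.

```python
def box_pierce(residuals, k):
    """Box-Pierce statistic n * (rho_1^2 + ... + rho_k^2) of a residual series."""
    n = len(residuals)
    mean = sum(residuals) / n
    d = [v - mean for v in residuals]
    c0 = sum(v * v for v in d) / n
    rho = [sum(d[t] * d[t + lag] for t in range(n - lag)) / n / c0
           for lag in range(1, k + 1)]
    return n * sum(r * r for r in rho)


def is_white_noise(residuals, k, chi2_critical):
    """Accept the white-noise hypothesis when the statistic is below the chi-square
    critical value supplied by the caller (e.g. about 12.59 for 6 degrees of
    freedom at alpha = 0.05)."""
    return box_pierce(residuals, k) < chi2_critical
```

Strongly alternating residuals such as `[1.0, -1.0] * 10` give \(\rho_1 = -0.95\) and a statistic of about 18.05. That is far above the 5 % critical value of 3.84 for one degree of freedom, so such residuals would be rejected as white noise.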


Cite this article

Cui, ZD., Ren, SX. Prediction of long-term settlements of subway tunnel in the soft soil area. Nat Hazards 74, 1007–1020 (2014). https://doi.org/10.1007/s11069-014-1228-y
