Abstract

Remote sensing is proving very useful for identifying damage and planning support activities after an earthquake has struck. Radar sensors are increasingly showing their value as a tool for damage detection, owing to their sensitivity to shape and their versatility and operability in all weather conditions. Previous work by our research group on 1-m resolution spotlight images produced by COSMO-SkyMed led to the discovery of a link between selected texture measures, computed on radar maps over single blocks of an urban area, and the damage found in these neighbourhoods. The texture-to-damage correlation was used to develop a SAR-based damage assessment method, but significant residual within-class variability sometimes makes the estimates unreliable. Among the possible remedies, injecting physical vulnerability data into the model was suggested. The idea here is to do so while keeping all data sources in the EO domain, by estimating physical vulnerability from high-resolution optical data of the area of interest. Although preliminary results suggest that no significant improvement in classification accuracy can be obtained directly, there appears to be a link between estimated vulnerability and observed damage on which a more refined version of the damage estimator could be built.


1 Introduction

Satellite remote sensing can be a useful tool for providing timely information (Voigt et al. 2007) in the event of a disaster, by mapping damage to buildings and infrastructure through earth observation (EO) (Joyce et al. 2009; Eguchi et al. 2010). EO offers sensors in both the optical and radar domains, and the literature includes a number of papers proposing methods that exploit changes in synthetic aperture radar (SAR) backscattering and phase for earthquake damage mapping. In Aoki et al. (1998), Matsuoka and Yamazaki (2002, 2004) and Stramondo et al. (2006), for example, the authors combine SAR image intensity changes and the related correlation coefficient to generate an index related to the damage level. In Yonezawa and Takeuchi (2001), SAR backscattering changes and signal phase changes are compared with the damage caused by the 1995 earthquake in Kobe, Japan. In Ito et al. (2000), different SAR change indicators derived from L- and C-band sensors are assessed. Interferometric coherence (Hoffmann 2007) and intensity correlation between SAR images (Stramondo et al. 2006; Chini et al. 2009) have also been used for block-scale damage mapping of the 2003 Bam earthquake.

In a disaster context, the literature reports several examples of automatic change-detection algorithms (Linke and McDermid 2011) applied to either optical (Chini et al. 2008) or SAR images, including multitemporal ones (Inglada and Mercier 2007; Gamba et al. 2007). Change detection, however, is simply not applicable when a homogeneous pair of pre- and post-event images is not available. This happens frequently with data from the newest spaceborne VHR radar systems such as COSMO/SkyMed (C/S) and TerraSAR-X, for which highest-resolution data over urban areas are still scarce.

Dell’Acqua et al. (2010) suggest that there may be a connection between a selected subset of texture measures computed on a single post-event VHR radar reflectivity map and the damage aggregated at the scale of a neighbourhood in an urban area. In Dell’Acqua et al. (2009a, b) and Dell’Acqua and Polli (2011), subgroups of the same authors proposed a way to turn this connection into a pre-operational damage assessment method. Finally, in Cossu et al. (2012), another subgroup of authors assesses to what extent the same approach applies to the more widely available ASAR (Advanced Synthetic Aperture Radar) data.

Though promising, damage assessment based only on post-event radar data still has a poor accuracy of around 60 %, and injecting ancillary information is recognized as a possible way to improve the results. The physical vulnerability of buildings is a candidate source of such information; in short, the physical vulnerability of a building is the likelihood that it suffers a given amount of damage for a given amount of ground shaking. This important component of seismic risk is often overlooked in the EO community in favour of seismic hazard.

The idea that categorizing buildings could help to better assess their damage level was expressed by the authors in Dell’Acqua and Polli (2011), where some preliminary connections between the pre-event appearance of buildings and significant deviations from “normal” texture-to-damage correlations were hypothesized.

This paper will describe and discuss a more systematic attempt to determine whether information on the physical vulnerability of buildings can effectively help improve post-event damage assessment based on SAR texture-to-damage correlation. It should be noted at the outset that vulnerability itself is estimated from EO data, which means that this experiment could, in perspective, evolve into an all-EO damage assessment method.

The paper is organized as follows. The next section briefly summarizes the previously proposed method; Sect. 3 discusses vulnerability assessment and how it was introduced into the method, while Sect. 4 presents the resulting changes in the method's performance. Section 5 concludes the paper with some final comments and proposals for future developments.

2 The original method

2.1 Pre-processing of radar data

One post-event Spotlight-2 COSMO/SkyMed image was used for our experiments; it was acquired in ascending orbit at an incidence angle of 19.07° by the third C/S satellite on 7 April 2009 at 4:54:40 a.m.

The image was geocoded using SARSCAPE and the SRTM-V3 (Shuttle Radar Topography Mission) DEM (Digital Elevation Model). It was then converted into radar cross-section density values through radiometric calibration.

2.2 Texture-damage relationship

According to Dell’Acqua et al. (2009), on an earthquake-stricken urban area one may assign an index called DAR (damaged area ratio) to each block j in the GIS layer that defines urban neighbourhoods:

$$\mathrm{DAR}_j = \frac{\sum_i d_{ij}\,A^{B}_{ij}}{A^{P}_j}$$

where $\mathrm{DAR}_j$ is the DAR value on the j-th GIS polygon; $d_{ij}$ is the “damage flag” (0 or 1) indicating whether building i in polygon j was damaged by the earthquake; $A^{B}_{ij}$ is the footprint area of the i-th building in the j-th polygon; and $A^{P}_j$ is the total area of the j-th polygon.

DAR expresses the ratio of the area covered by damaged buildings to the total area of a city block. Here, a “damaged” building means EMS-98 grade 4 or 5 (“severely damaged” and “collapsed”, respectively) (European Seismological Commission 1998), as seen by a nadir-looking optical sensor. The “damage flag” was set based on expert interpretation of high-resolution post-event aerial images, as explained in Dell’Acqua et al. (2009). In the case of L’Aquila, DAR values ranged from zero to 26.68 %, with an overall average of 1.67 %.
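As a minimal sketch of the DAR definition above, the Python function below computes the index for one polygon from (damage flag, footprint area) pairs and multiplies it by 100 so that it matches the percentages quoted for L’Aquila. The function name `damaged_area_ratio` and the sample block are hypothetical, for illustration only.

```python
from typing import Sequence, Tuple

def damaged_area_ratio(buildings: Sequence[Tuple[int, float]], block_area: float) -> float:
    """Return DAR_j, in percent, for one GIS polygon.

    buildings:  (d_ij, A^B_ij) pairs; d_ij is 1 if building i was flagged as
                damaged (EMS-98 grade 4 or 5) and 0 otherwise, A^B_ij is its
                footprint area in m^2.
    block_area: A^P_j, the total polygon area in m^2.
    """
    if block_area <= 0:
        raise ValueError("polygon area must be positive")
    damaged_area = sum(flag * area for flag, area in buildings)
    return 100.0 * damaged_area / block_area

# Hypothetical block of 20,000 m^2 with one damaged building out of three.
block = [(1, 400.0), (0, 250.0), (0, 350.0)]
print(damaged_area_ratio(block, 20000.0))  # 2.0
```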

Series of DAR values and of different block-averaged grey level co-occurrence matrix (GLCM) texture values (Cossu et al. 2012), computed from SAR reflectivity data on the corresponding blocks, were systematically compared. This revealed that homogeneity and entropy textures generally show peak correlations with damage of 0.4–0.6 in absolute value, with better correlations on subsets at higher damage levels. Hence, a texture-threshold-based method was proposed in Dell’Acqua et al. (2009) to classify urban blocks into three damage levels, with an overall accuracy of around 60–70 % depending on the choice of classification parameters. In the following, we attempt to leverage physical vulnerability to boost the classification accuracy.
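For readers unfamiliar with the two textures, the sketch below builds a symmetric, normalised GLCM for a single pixel offset on a small quantised patch and derives a common form of homogeneity, $\sum_{i,j} P(i,j)/(1+(i-j)^2)$, and entropy, $-\sum_{i,j} P(i,j)\ln P(i,j)$. The window size, number of grey levels, offset and logarithm base used in the cited work are not stated here, so all of them are assumptions; in the block-based method, per-pixel texture values would then be averaged over each block.

```python
import math
from typing import List

Matrix = List[List[float]]

def glcm(patch: List[List[int]], levels: int, dx: int = 1, dy: int = 0) -> Matrix:
    """Symmetric, normalised GLCM of a quantised patch for one (dx, dy) offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(patch), len(patch[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = patch[r][c], patch[r2][c2]
                counts[i][j] += 1.0
                counts[j][i] += 1.0  # also count the reverse pair (symmetry)
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the patch")
    return [[v / total for v in row] for row in counts]

def homogeneity(p: Matrix) -> float:
    """Largest when co-occurrences concentrate near the diagonal (similar grey levels)."""
    n = len(p)
    return sum(p[i][j] / (1.0 + (i - j) ** 2) for i in range(n) for j in range(n))

def entropy(p: Matrix) -> float:
    """Natural-log entropy of the GLCM; zero entries contribute nothing."""
    return -sum(v * math.log(v) for row in p for v in row if v > 0)

checker = [[0, 1], [1, 0]]  # maximally alternating 2-level patch
p = glcm(checker, levels=2)
print(homogeneity(p), entropy(p))  # 0.5 and ln 2 (about 0.693)
```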

3 Estimating and introducing the physical vulnerability of buildings

To make the experiment as general as possible, we refrained from using non-EO data on buildings (e.g., cadastral data), as such data are generally difficult to obtain operationally for an arbitrary location on the globe where an earthquake may strike. A large project, the Global Earthquake Model or GEM (2012), is in progress that aims at systematically mapping the physical vulnerability of buildings across the Earth; however, it is still ongoing, and its product will be a mapping method rather than a global map. We therefore chose to use readily available Google Earth (2012) data to estimate vulnerability in any targeted area.

3.1 Physical vulnerability estimation

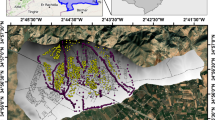

The vulnerability assessment was performed on a region that includes the historical centre and the neighbouring districts of the urban area of L’Aquila, Italy (Fig. 1). The evaluation is based on a set of parameters, called vulnerability indices, estimated from satellite/aerial images. For this purpose, the free Google Earth (2012) resource was used, which over L’Aquila offers pan-sharpened GeoEye data with a spatial resolution of 50 cm on the brightness component. This was deemed sufficient to confidently estimate all of the indices reported in the following by visual interpretation. It is difficult to determine to what extent a lower resolution would impair the interpreter’s ability to estimate the index values. Building age is probably the most demanding index in terms of spatial detail, while footprint, neighbourhood and height are more robust to the lack of sub-metre detail.

Fig. 1 The considered area around downtown L’Aquila, Italy. The coloured polygons represent the neighbourhoods considered as statistical units, and each colour is associated with one of the three “vulnerability classes” defined in the text. Underlying image © Google Earth and respective data providers

The first step of the procedure was to subdivide the region of interest, that is, downtown L’Aquila and its surroundings, into smaller, apparently homogeneous subregions, with the vulnerability indices described below in mind.

These subregions, defined in Google Earth by means of polygons, are meant to cluster buildings that are adjacent or close to each other and that show apparently similar vulnerability levels. A subsequent, closer inspection of the Google Earth data may reveal inhomogeneities, in which case blocks can be further split. The whole vulnerability-based block partition was carried out blind to the earlier block partition and damage estimation described in Sect. 2.

The physical vulnerability of a building is influenced by several well-known characteristics such as structural typology, regularity in plan and elevation, plan distribution of resisting elements, position of the building in the aggregate, foundation typology, presence of ribbon windows, etc., as widely reported in the literature (Dolce and Martinelli 2005a, b; Formisano and Mazzolani 2010; Lantada et al. 2004; Otani 2002; Feriche et al. 2009; Vicente et al. 2008). However, only some of these parameters can be estimated from satellite/aerial images, as already pointed out in Polli et al. (2009). In most cases, it is very difficult to determine even the structural system typology (reinforced or unreinforced masonry, reinforced concrete, precast, timber), which clearly has a strong influence on the seismic performance of a building. On the other hand, some other parameters, for example the height or the period of construction, can be estimated with a certain degree of uncertainty. Finally, some parameters, such as plan irregularity and the presence of adjacent buildings, can be identified very well, even more easily than with an in situ investigation. In a very general sense, remote sensing has already been shown to be a useful tool for vulnerability estimation (Taubenböck et al. 2007), but the problem addressed here is more specific.

It is clear to the authors that only a limited amount of information relevant to physical vulnerability can be extracted from EO data. The purpose here is to evaluate whether that specific amount of information is sufficient to make any difference in an EO-based damage estimation framework.

Four vulnerability indices were considered; for each of them, a vulnerability score $S_i$ ranging from 0 to 10 is assigned to every sub-region. This requires care on two main aspects. First, the buildings within a sub-region must be similar enough in terms of assessed vulnerability, in particular for each vulnerability index. Second, residual discrepancies among buildings are averaged out by summing the scores of the individual buildings and dividing by their number (for some aggregates, defining the number of “units” is not straightforward). Each index score is then multiplied by an “importance factor” $I_i$ (basically a weight), which accounts for the relevance of that index to the overall vulnerability. Finally, the weighted indices are summed, $S = \sum_i I_i S_i$; since the importance factors are shares of the total vulnerability and add up to 1, the single vulnerability score $S$ also ranges from 0 to 10.
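The aggregation just described, averaging each index over the buildings of a sub-region and then forming $S = \sum_i I_i S_i$, can be sketched as follows. Only the age (40 %) and height (5 %) weights are given in the text; the split of the remaining 55 % between footprint regularity and neighbourhood is a hypothetical placeholder, as are the index keys and the sample scores.

```python
from statistics import mean
from typing import Dict, List

# Importance factors I_i. Age and height follow the text; the footprint and
# neighbourhood values are HYPOTHETICAL placeholders for the entries of Table 1.
WEIGHTS: Dict[str, float] = {
    "age": 0.40, "footprint": 0.30, "neighbourhood": 0.25, "height": 0.05,
}

def subregion_score(buildings: List[Dict[str, float]],
                    weights: Dict[str, float] = WEIGHTS) -> float:
    """Average each index score S_i (0-10) over the buildings of a sub-region,
    then combine the averages as S = sum_i I_i * S_i."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1 so that S stays in [0, 10]")
    return sum(w * mean(b[name] for b in buildings) for name, w in weights.items())

# Two hypothetical buildings scored by an interpreter.
buildings = [
    {"age": 9, "footprint": 6, "neighbourhood": 8, "height": 4},
    {"age": 7, "footprint": 4, "neighbourhood": 8, "height": 2},
]
print(round(subregion_score(buildings), 2))  # 6.85
```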

The indices chosen for the classification are explained in the following.

3.1.1 Footprint regularity

According to the most recent seismic codes [Eurocode 8 (UNI EN 1998 part 1)], a building can be considered regular in plan if the footprint aspect ratio a/b is smaller than 4 (where a is the longer footprint side and b the shorter one) and any wall recess is smaller than 20 % of the corresponding footprint length; a regular building receives a lower vulnerability index score (Formisano and Mazzolani 2010; Feriche et al. 2009; Vicente et al. 2008). Higher scores are instead assigned to buildings that do not fulfil these requirements; in particular, buildings that are likely to develop a significant torsional response and, owing to their plan morphology, undesired in-plan deformations (e.g., an L-shaped plan with extended legs) receive a high vulnerability score from the regularity point of view.
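The two plan-regularity criteria quoted above can be encoded as a simple check, sketched here under the assumption that the interpreter has already measured the footprint sides and the depth of each recess (together with the footprint length it belongs to) on the imagery; the function name is hypothetical. The check only separates regular from irregular plans: the score given to an irregular footprint remains an expert judgement.

```python
from typing import Iterable, Tuple

def is_plan_regular(side_a: float, side_b: float,
                    recesses: Iterable[Tuple[float, float]] = ()) -> bool:
    """Check the two plan-regularity criteria quoted in the text.

    side_a, side_b: footprint side lengths in metres (any order).
    recesses: (recess depth, corresponding footprint length) pairs in metres;
              how these are measured on the imagery is left to the interpreter.
    """
    a, b = max(side_a, side_b), min(side_a, side_b)
    if b <= 0:
        raise ValueError("footprint lengths must be positive")
    if a / b >= 4.0:  # aspect ratio a/b must be smaller than 4
        return False
    # every recess must be smaller than 20 % of the corresponding length
    return all(depth < 0.20 * length for depth, length in recesses)

print(is_plan_regular(20.0, 12.0))                 # True
print(is_plan_regular(50.0, 10.0))                 # False: a/b = 5
print(is_plan_regular(20.0, 12.0, [(6.0, 20.0)]))  # False: 6 m is not below 4 m
```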

A precise mathematical relationship between the plan area, perimeter, wall recesses, etc., and the regularity index has not been defined yet, because a wide variety of plan configurations exists and, in addition, the correlation between plan morphology and the presumed location of the elements resisting horizontal loads (stairwells, walls, columns) is highly uncertain and deserves a dedicated study. It is worth recalling that footprint regularity is considered here to be directly related to torsional effects. The centre of mass of each storey, that is, the point where the resultant of the inertial forces is applied, can be estimated with good accuracy. On the other hand, the structural system may include elements (e.g., walls) located in particular positions that reduce (or increase) the eccentricity between the centre of mass and the centre of rigidity, thus limiting (or magnifying) the undesirable torsional effects. The position of such structural elements cannot be inferred from aerial images; hence, a dedicated study of the statistical correspondence between plan irregularity and the actual torsional effects in existing buildings should be carried out in order to define a direct relationship with the considered vulnerability index. Figures 2 and 3 show examples of regular, moderately irregular and highly irregular footprints, which were assigned regularity index values of 0, 4.5 and 10, respectively.
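If storey mass is assumed to be uniformly distributed over the footprint (an assumption not stated in the text), the centre of mass reduces to the area centroid of the footprint polygon, which the shoelace-based sketch below computes. For the hypothetical L-shaped footprint in the usage example, the centroid falls outside the built area itself. The centre of rigidity, by contrast, depends on the position of the resisting elements and cannot be obtained this way.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def polygon_centroid(vertices: List[Point]) -> Point:
    """Area centroid of a simple polygon (vertices in order, first not repeated)."""
    area2 = cx = cy = 0.0  # area2 accumulates twice the signed area
    n = len(vertices)
    for k in range(n):
        x0, y0 = vertices[k]
        x1, y1 = vertices[(k + 1) % n]
        cross = x0 * y1 - x1 * y0
        area2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if area2 == 0:
        raise ValueError("degenerate polygon")
    # C = (1 / 6A) * sum(...), with 6A = 3 * area2; the sign cancels for either orientation
    return cx / (3.0 * area2), cy / (3.0 * area2)

# Hypothetical L-shaped footprint (metres): a 20 x 5 leg and a 5 x 15 leg.
l_shape = [(0, 0), (20, 0), (20, 5), (5, 5), (5, 20), (0, 20)]
print(polygon_centroid(l_shape))  # both coordinates about 6.79 m
```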

3.1.2 Neighbourhood

This index distinguishes isolated buildings (low score) from irregular aggregates of adjacent buildings (high score); heterogeneous adjacent buildings can result in an undesired seismic response (e.g., pounding between buildings of different heights or with slabs at different levels) (Formisano and Mazzolani 2010; Lantada et al. 2004; Vicente et al. 2008). As for the footprint regularity index, a precise mathematical relationship between aggregate morphology and the vulnerability index has not been defined at this stage.

3.1.3 Age of building/retrofit

The age of the building (or the period of its last structural retrofit) is strongly related to its seismic vulnerability, owing to the significant evolution of seismic codes and construction techniques, particularly over the past 50 years. On the other hand, this parameter is difficult to estimate (even with an in situ inspection), and its relationship with vulnerability is not straightforward. However, because of its strong influence, it was considered here as one of the most important factors.

Some indications of age can be inferred from the appearance of roofs (e.g., aged and damaged tiles) and from historical-centre aggregates, which are generally easy to recognize. Building age can also be estimated from multitemporal sequences of EO data.

In general, a recent or retrofitted building receives a lower vulnerability score (Lantada et al. 2004; Otani 2002; Vicente et al. 2008); compared with the previous indices, this parameter is certainly affected by higher uncertainty.

It is worth noting that the relationship between age and vulnerability must be defined according to the context. The case study was therefore related to the evolution of Italian building codes and construction techniques; to clarify the index definition, some of the major advances in design and construction practice in Italy are briefly reported hereafter.

Until the 1950s–1960s, most buildings were built with unreinforced masonry or with reinforced concrete with smooth rebars and generally poor detailing, and design considered vertical loads only (vulnerability score of 8–10). In the late 1970s, some structural improvements were achieved, particularly with the introduction of ribbed rebars (score of 7–8). In the mid-1990s, the semi-probabilistic limit-state method was introduced into design procedures, together with various provisions accounting for seismic loads (score of 4–7). In 2003, the Italian seismic regulations were substantially revised with the introduction of the strength hierarchy concept, the performance-based design approach, etc. (score of 1–4).
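The periods above can be turned into a lookup from construction (or last retrofit) year to the admissible score range, sketched below. The score ranges follow the text; the cut-off years are hypothetical readings of "late 1970s", "mid-1990s" and "2003", and the text does not explicitly cover the 1960s to the late 1970s, which this sketch assigns to the oldest band. The interpreter would still pick a value inside the returned range.

```python
from typing import List, Tuple

ScoreRange = Tuple[float, float]

# Score ranges follow the text; the cut-off years are HYPOTHETICAL readings,
# and buildings from the 1960s to the late 1970s fall into the oldest band here.
AGE_BANDS: List[Tuple[int, ScoreRange]] = [
    (1978, (8.0, 10.0)),  # smooth rebars, poor detailing, vertical loads only
    (1995, (7.0, 8.0)),   # ribbed rebars
    (2003, (4.0, 7.0)),   # limit-state design with seismic provisions
]
RECENT_RANGE: ScoreRange = (1.0, 4.0)  # 2003 revision and later

def age_score_range(year: int) -> ScoreRange:
    """(min, max) age-index score for a construction or last-retrofit year."""
    for upper_year, score_range in AGE_BANDS:
        if year < upper_year:
            return score_range
    return RECENT_RANGE

print(age_score_range(1985))  # (7.0, 8.0)
```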

3.1.4 Estimated height

The vulnerability score is assumed here to increase with building height. In particular, buildings that are not properly designed for seismic loads have a vulnerability component roughly proportional to their height (Otani 2002). This is due to the progressively increasing bending moment required at the base of the structure to balance the overturning moment, which grows as the horizontal inertial force multiplied by the height.

Experimentally, vulnerability appears to increase at a higher rate up to three storeys. For this reason, a piecewise linear relationship between the number of storeys and the vulnerability index was assumed, as detailed in Fig. 4.
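A piecewise linear mapping of this kind can be sketched as a small interpolation routine. Since the breakpoints of Fig. 4 are not reproduced in the text, the ones below are hypothetical: they only mimic the stated shape (a steeper rise up to three storeys, then a gentler slope) and saturate at 10.

```python
from typing import List, Tuple

# HYPOTHETICAL breakpoints (storeys, score): steeper up to 3 storeys, as stated
# in the text, then a gentler slope reaching 10 at 11 storeys.
BREAKPOINTS: List[Tuple[float, float]] = [(0.0, 0.0), (3.0, 6.0), (11.0, 10.0)]

def height_score(storeys: float,
                 points: List[Tuple[float, float]] = BREAKPOINTS) -> float:
    """Piecewise-linear interpolation of the height index; saturates at both ends."""
    if storeys <= points[0][0]:
        return points[0][1]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if storeys <= x1:
            return y0 + (y1 - y0) * (storeys - x0) / (x1 - x0)
    return points[-1][1]  # beyond the last breakpoint (outside the study's scope)

print(height_score(2), height_score(7))  # 4.0 8.0
```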

This proportionality generally holds up to a certain height (approximately 10–15 storeys), as can be observed in studies such as Lynch et al. (2011); this range includes all the buildings considered here. Above this limit, other effects become important, such as the decoupling between the structure and the ground motion, the increasing contribution of higher modes to the global response of the structure due to differences in frequency properties, etc. These aspects are beyond the scope of the present study and are not treated here.

Precise measurement of building height can be made using LIDAR (LIght Detection And Ranging) scanning (Mallet and Bretar 2009), but no such data were available for the case study at hand. Less precise but also less expensive height estimation can be made using Interferometric SAR (InSAR) data (Schmitt et al. 2011; Gamba et al. 2006). In the case at hand, though, a visual estimation was deemed sufficient as the relevant datum was the number of floors rather than precise height.

Height is the last estimated parameter, after which all the indices are to be fused together to produce the final vulnerability estimate.

As already mentioned, the scores are then combined through a weighted sum into a single vulnerability index S. The weights I (Table 1) were defined according to the expected influence of each index on the global vulnerability. Age is considered one of the most important factors, for the reasons discussed above; hence, 40 % of the total vulnerability derives from this index. Footprint regularity and the presence of adjacent buildings also play an important role in the total vulnerability, while height was considered a less influential parameter; thus, only a 5 % contribution to the total vulnerability was assigned to this index.

The vulnerability indices, their score range and the weights I are shown in Table 1.

Using Table 1, each of the considered sub-regions was assigned a vulnerability score computed as a weighted sum of the considered components, averaged over the entire sub-region. Three vulnerability classes were then defined as in Table 2.
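Binning the score S into the three classes can be sketched with the standard-library `bisect` module. The class limits below are hypothetical placeholders, since the actual ranges are those listed in Table 2; a score equal to a limit is assigned to the upper class.

```python
import bisect
from typing import List

# HYPOTHETICAL limits on S; the actual class ranges are those of Table 2.
CLASS_LIMITS: List[float] = [3.5, 6.5]
CLASS_NAMES: List[str] = ["low", "medium", "high"]

def vulnerability_class(score: float) -> str:
    """Map S in [0, 10] to a class; a score equal to a limit goes to the upper class."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("S must lie in [0, 10]")
    return CLASS_NAMES[bisect.bisect_right(CLASS_LIMITS, score)]

print([vulnerability_class(s) for s in (2.0, 5.0, 6.85)])  # ['low', 'medium', 'high']
```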

The score assigned to each vulnerability index should be tied as closely as possible to objective parameters, in order to improve the repeatability of the method and reduce the randomness of subjective judgement. This is easily done for the height index, for example, which can be computed as a single-valued function of the estimated parameter. For footprint irregularity, however, defining a strict rule is considerably more complicated, as explained above.

The colour-coded polygons in Fig. 1, which show the results of the class assignment (Fig. 5) according to Table 2, compare well, within their overlapping area, with a similar vulnerability assessment produced by experts after a ground survey (Tertulliani et al. 2011). The latter can be taken as a reference because an in situ inspection yields far more information on any single building than any EO-based estimation.

Fig. 5 Example of vulnerability class assignment. The building at the top right is analysed for footprint regularity, height and other features and assigned a vulnerability score. Its score corresponds to the “medium” vulnerability class, which is represented by an orange polygon in the GIS layer. Underlying images © Google Earth and respective data providers

The weights I, as well as the value ranges and the number of vulnerability classes, were defined at this stage without considering the ground reference data on seismic damage. The following step (Sect. 3.2) involved checking the correlation of the vulnerability estimates with the actual post-event damage reported (i.e., the DAR values computed as in Sect. 2.2). This was done to support the criteria used for estimating vulnerability, by checking that buildings deemed vulnerable a priori are, on average, found damaged more frequently than buildings deemed less vulnerable a priori. The weights, as well as the vulnerability class ranges, could be refined in this phase, but only once a wider database of experimental data, covering more seismic events and locations, has been processed.

Once the correlation of the vulnerability score S with vulnerability based on real data has been proven and possibly refined, this information can be combined with seismological data (e.g., expected local peak ground acceleration or PGA, return period, expected site effects) to obtain a risk map. Consequently, in the event of an earthquake, it will be possible to obtain a quick estimate of the expected damage and its distribution. This is, however, beyond the scope of the present paper, which only needs a rough assessment of vulnerability in order to test its usefulness in refining spaceborne damage estimation.

3.2 Analysis of vulnerability results versus previous reference data

After the operations described in the previous subsection, the resulting set of polygons turned out to be very different from the one presented in previous papers (Dell’Acqua et al. 2009). The new partition, with 124 polygons of about 21,000 m² on average, differs markedly from the older one, with 58 polygons of about 117,680 m² on average. Vulnerability analysis was far more time-consuming than simple manual partitioning into town blocks, so a total of 2.5 km² was covered, as opposed to the previous 6.8 km². Moreover, the partition does not simply follow the main roads and geometrical boundaries of the city, but is instead based on a vulnerability study of building clusters. The final GIS layer with colour-coded vulnerability classes is shown in Fig. 6.

For consistency with the previous representation of damage by the DAR index, as explained in Sect. 2, the DAR values were recomputed for the new block partition; the results are summarized in Fig. 7. Even qualitatively, a certain degree of agreement between the a priori estimated vulnerability and the reported damage level can be appreciated. This is expressed more quantitatively in the next section.

4 The analysis

As a first analysis step, we considered the possibility of using the vulnerability information alone, estimated as discussed above, as a “first proxy” for damage. This makes sense because, roughly speaking, for any given ground shaking, more extensive damage can be expected on more vulnerable buildings. A confusion matrix reporting vulnerability class versus ground-truth damage class was therefore built (Table 3). It revealed that low vulnerability almost always corresponds to low or no damage and medium vulnerability to low or moderate damage, while in areas with high vulnerability damage shows the widest variability. The very low overall accuracy of 36 % (only slightly better than the roughly 33 % expected by chance with three classes) does not convey the fact that the confusion matrix is actually nearly upper triangular. This means that the estimated vulnerability does at least redistribute the likelihood of the damage levels, although it will probably not help in directly assessing damage.
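The gap between a low overall accuracy and a useful, nearly triangular confusion matrix can be made concrete with two small measures: overall accuracy only counts the diagonal, while the share of samples below the diagonal shows how rarely damage exceeds the vulnerability class. The counts below are illustrative only, not the values of Table 3, and the orientation (rows: damage, columns: vulnerability) is an assumption chosen so that the pattern described in the text appears as an upper-triangular matrix.

```python
from typing import List

Matrix = List[List[int]]

def overall_accuracy(cm: Matrix) -> float:
    """Fraction of samples on the main diagonal."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total

def below_diagonal_fraction(cm: Matrix) -> float:
    """Fraction of samples below the main diagonal (0 for an upper-triangular matrix)."""
    total = sum(sum(row) for row in cm)
    below = sum(cm[r][c] for r in range(len(cm)) for c in range(r))
    return below / total

# ILLUSTRATIVE counts only, not the values of Table 3.
# Rows: ground-truth damage class; columns: vulnerability class (low, medium, high).
cm = [[19, 10, 8],
      [1, 4, 22],
      [0, 0, 12]]
print(round(overall_accuracy(cm), 2), round(below_diagonal_fraction(cm), 3))  # 0.46 0.013
```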

A more precise analysis involves the correlation between DAR and the vulnerability index. Overall, the Pearson correlation between DAR and the vulnerability index S reaches 0.506, indicating a significant link, although intra-class correlations may be as low as 0.06 for the highest vulnerability class. This is probably related to the complex patterns of buildings downtown, which make precise vulnerability estimation difficult and may also produce unexpected seismic responses.
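The sketch below computes Pearson's r for all blocks pooled and within each vulnerability class. The toy values are not data from this study; they show one generic statistical way in which a pooled correlation can be high while within-class correlations are near zero, namely when the classes differ mainly in their mean DAR. This is offered as an illustration only, not as the explanation given above.

```python
import math
from typing import Dict, List, Sequence

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient (raises ZeroDivisionError for constant input)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def per_class_pearson(scores: Sequence[float], dars: Sequence[float],
                      classes: Sequence[str]) -> Dict[str, float]:
    """Pearson r computed separately for the blocks of each vulnerability class."""
    groups: Dict[str, List[int]] = {}
    for k, c in enumerate(classes):
        groups.setdefault(c, []).append(k)
    return {c: pearson([scores[k] for k in idx], [dars[k] for k in idx])
            for c, idx in groups.items() if len(idx) >= 2}

# Toy values: vulnerability score S, DAR (%) and class for six hypothetical blocks.
scores = [1, 2, 3, 7, 8, 9]
dars = [0, 1, 0, 5, 6, 5]
classes = ["low"] * 3 + ["high"] * 3
print(round(pearson(scores, dars), 3))           # about 0.948
print(per_class_pearson(scores, dars, classes))  # within-class r is 0.0 for both
```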

The second, and perhaps more important, analysis concerned the possible use of vulnerability information to improve damage estimation. As recalled in Sect. 2.2, a correlation has been found between local averages of texture measures and the local level of seismic damage. The step forward here is to assess whether different, and more reliable, relationships between texture measures and damage could be defined for each vulnerability class, instead of the single, one-size-fits-all relationship defined so far. Preliminary results in Table 4, with averages slowly drifting up (for entropy) and down (for homogeneity) across classes, seem to suggest, once more, a link between vulnerability and damage signatures.

Unfortunately, however, no significant improvement in correlation was found when the signature-to-damage correlation analysis was split among the defined vulnerability classes, as shown in Table 5.

A final classification was produced using the method in Dell’Acqua et al. (2009) on the new set of blocks defined as in Figs. 4, 5, and the result is shown in Fig. 8, which corresponds to an overall accuracy level of 56.45 %.

A practical way to apply these findings and improve classification results is to force all the lowest-vulnerability blocks to “undamaged” status, which is the correct result in 95 % of cases within that subset of blocks. Injecting information from the other vulnerability class assignments into the classification results would be more difficult, although it is probably possible to adjust the thresholds defined in Dell’Acqua et al. (2009) to account for the different a priori damage probabilities. To give some figures, for the high vulnerability class the probabilities of low, medium and high damage would be 17, 49 and 34 %, respectively, whereas for the medium vulnerability class they would change to 83, 15 and 2 %; this would strongly affect the behaviour of a statistical classifier.
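One way to use the class-specific priors quoted above is a Bayes-style rule: multiply each damage level's prior for the block's vulnerability class by a likelihood of the observed texture given that damage level, and pick the largest product. This is a sketch under assumptions, not the texture-threshold method of Dell’Acqua et al. (2009); the likelihood values are invented, and the early return for low-vulnerability blocks implements the forcing rule described in the text, with "low" damage standing for "undamaged". With a flat prior, the same likelihoods would favour "high" damage for every block.

```python
from typing import Dict

DAMAGE_LEVELS = ("low", "medium", "high")

# A priori damage probabilities per vulnerability class, as quoted in the text.
PRIORS: Dict[str, Dict[str, float]] = {
    "medium": {"low": 0.83, "medium": 0.15, "high": 0.02},
    "high": {"low": 0.17, "medium": 0.49, "high": 0.34},
}

def classify_block(vulnerability: str, likelihood: Dict[str, float]) -> str:
    """Return the damage level maximising prior x likelihood.

    likelihood: p(observed texture | damage level), assumed to come from some
    texture model (HYPOTHETICAL; not specified in the text).
    """
    if vulnerability == "low":
        return "low"  # forcing rule: lowest-vulnerability blocks -> "undamaged"
    prior = PRIORS[vulnerability]
    posterior = {d: prior[d] * likelihood[d] for d in DAMAGE_LEVELS}
    return max(posterior, key=posterior.__getitem__)

texture_likelihood = {"low": 0.2, "medium": 0.3, "high": 0.5}  # invented values
for v in ("low", "medium", "high"):
    print(v, classify_block(v, texture_likelihood))
# prints: low low, medium low, high high
```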

We need, however, to be very careful in drawing conclusions from these preliminary experiments, as:

- the most important vulnerability score component is also the most uncertain one (structural features of the buildings);
- the highest vulnerability classes include most of the historical centre of the town, where very different interaction phenomena may take place;
- all in all, the statistical sample is limited, and more case studies should be considered.

It is the authors’ opinion that further investigation is needed, especially on the inter-class DAR-to-texture scattergrams, to enable a deeper exploitation of the vulnerability information for damage assessment.

5 Conclusions

This paper builds on a method for seismic damage assessment based on spaceborne VHR radar data to analyse whether ancillary information on physical vulnerability may help improve EO-based damage estimation. A preliminary investigation is presented of the changes that can be expected in the boundary conditions for damage estimation if building clusters are split into three vulnerability classes assessed using EO-visible proxies.

Although a deeper investigation is still needed, the first results seem to highlight two main facts:

- three different estimated vulnerability classes do not translate into three different and more accurate relationships between damage signatures and damage level; yet
- the proposed method for rough vulnerability estimation yields vulnerability classes with significantly different damage status distributions (i.e., estimated vulnerability is reflected in different likelihoods of observed damage).

This means that intra-class texture-to-damage correlation may not improve, but the classifier’s knowledge of the a priori damage distribution will; as mentioned in Sect. 4, the a priori probabilities of the damage levels change significantly between classes. Work is in progress to incorporate this information into a statistical classifier model capable of exploiting the estimated vulnerability information.

As already mentioned, all these results are very preliminary and need to be further investigated on a wider statistical basis before more definite conclusions can be drawn. Nevertheless, even a very rough vulnerability estimate seems to have a significant impact on the expected damage distribution, which is a strong point in favour of EO-based vulnerability estimation as a supporting component of EO-based seismic damage assessment.

This paper also intends to raise the issue of integrating physical vulnerability and exposure into geohazard-related operations such as the GEO (2012) Supersites initiative (2012), as an important additional piece of information that the Global Earthquake Model (2012) will make publicly available at some point in the future.

References

Aoki H, Matsuoka M, Yamazaki F (1998) Characteristics of satellite SAR images in the damaged areas due to the Hyogoken-Nanbu earthquake. In: Proceedings of the 19th Asian conference on remote sensing, vol 7, pp 1–6

Chini M, Pacifici F, Emery WJ, Pierdicca N, Del Frate F (2008) Comparing statistical and neural network methods applied to very high resolution satellite images showing changes in man-made structures at Rocky Flats. IEEE Trans Geosci Remote Sens 46(6):1812–1821

Chini M, Pierdicca N, Emery WJ (2009) Exploiting SAR and VHR optical images to quantify damage caused by the 2003 Bam earthquake. IEEE Trans Geosci Remote Sens 47(1):145–152

Cossu R, Dell’Acqua F, Polli DA, Rogolino G (2012) SAR-based seismic damage assessment in urban areas: scaling down resolution, scaling up computational performance. IEEE J Sel Topics Appl Earth Obs Remote Sens 5(4):1110–1117. doi:10.1109/JSTARS.2012.2185926

Dell’Acqua F, Polli D (2011a) Post-event only VHR radar satellite data for automated damage assessment: a study on COSMO/SkyMed and the 2010 Haiti earthquake. Photogramm Eng Remote Sens 77:1037–1104

Dell’Acqua F, Gamba P, Lisini G, Polli D (2009b) Satellite remote sensing as a tool for management of emergencies from natural disasters: a case study on L’Aquila, 6th April 2009 earthquake. Progettazione Sismica, year 1, no 3, special issue in English, pp 85–94, ISSN 1973-7432

Dell’Acqua F, Polli D (2011) Post-event spaceborne VHR radar for seismic damage assessment: integrating pre-event optical data? In: ESA-JRC-EUSC 2011: Image information mining: geospatial intelligence from earth observation, JRC, Ispra, Varese, 31 March–2 April 2011

Dell’Acqua F, Bignami C, Chini M, Lisini G, Polli DA, Stramondo S (2009a) Earthquake damages rapid mapping by satellite remote sensing data: L’Aquila April 6th, 2009 event. IEEE J Sel Topics Appl Earth Obs Remote Sens. doi:10.1109/JSTARS.2011.2162721

Dell’Acqua F, Gamba P, Polli D (2010) Mapping earthquake damage in VHR radar images of human settlements: preliminary results on the 6th April 2009, Italy case. In: 2010 IEEE international geoscience and remote sensing symposium (IGARSS), 25–30 July 2010, pp 1347–1350. doi:10.1109/IGARSS.2010.5653973

Dolce M, Martinelli A (eds) (2005a) Inventario e vulnerabilità degli edifici pubblici e strategici dell’Italia centro-meridionale, Vol. I: Caratteristiche tipologiche degli edifici per l’Istruzione e la Sanità [Inventory and vulnerability of public and strategic buildings in central-southern Italy, Vol. I: Typological characteristics of buildings for education and health care]. INGV/GNDT, Istituto Nazionale di Geofisica e Vulcanologia/Gruppo Nazionale per la Difesa dai Terremoti, L’Aquila

Dolce M, Martinelli A (eds) (2005b) Inventario e vulnerabilità degli edifici pubblici e strategici dell’Italia centro-meridionale, Vol. II: Analisi di vulnerabilità e rischio sismico [Inventory and vulnerability of public and strategic buildings in central-southern Italy, Vol. II: Vulnerability and seismic risk analysis]. INGV/GNDT, Istituto Nazionale di Geofisica e Vulcanologia/Gruppo Nazionale per la Difesa dai Terremoti, L’Aquila

Eguchi RT, Huyck CK, Ghosh S, Adams BJ, McMillan A (2010) Utilizing new technologies in managing hazards and disasters. In: Geospatial techniques in urban hazard and disaster analysis. Springer Netherlands, pp 295–323. ISBN 978-90-481-2237-0 (print), 978-90-481-2238-7 (online). doi:10.1007/978-90-481-2238-7_15

European Seismological Commission (1998) In: Grünthal G (ed) European Macroseismic Scale 1998 (EMS-98). Cahiers du Centre Européen de Géodynamique et de Séismologie 15. Centre Européen de Géodynamique et de Séismologie, Luxembourg, p 99

Feriche M, Vida F, Garcia R, Navarro M, Vidal MD, Montilla P, Pinero L (2009) Earthquake damage scenarios in Vélez-Málaga urban area (Southern Spain) applicable to local emergency planning. In: Proceedings of the 8th international workshop on seismic microzoning and risk reduction, Almeria, Spain, 15–18 March 2009

Formisano A, Mazzolani FM (2010) A quick methodology for seismic vulnerability assessment of historical masonry aggregates. In: Proceedings of the COST C26 final conference, Naples, 16–18 September 2010, CRC Press, London

Gamba P, Dell’Acqua F, Lisini G, Cisotta F (2006) Improving building footprints in InSAR data by comparison with a Lidar DSM. Photogramm Eng Remote Sens 72(1):63–70

Gamba P, Dell’Acqua F, Trianni G (2007) Rapid damage detection in the Bam area using multitemporal SAR and exploiting ancillary data. IEEE Trans Geosci Remote Sens 45(6):1582–1589

Google Earth (2012) [online]. Available: earth.google.com/. Latest access 15 Mar 2012

Hoffmann J (2007) Mapping damage during the Bam (Iran) earthquake using interferometric coherence. Int J Remote Sens 28(3):1199–1216

Inglada J, Mercier G (2007) A new statistical similarity measure for change detection in multitemporal SAR images and its extension to multiscale change analysis. IEEE Trans Geosci Remote Sens 45(5):1432–1445

Ito Y, Hosokawa M, Lee H, Liu JG (2000) Extraction of damaged regions using SAR data and neural networks. In: Proceedings of the 19th ISPRS Congress, Amsterdam, The Netherlands, July 16–22, 2000, vol 33, pp 156–163

Joyce KE, Belliss SE, Samsonov SV, McNeill SJ, Glassey PJ (2009) A review of the status of satellite remote sensing and image processing techniques for mapping natural hazards and disasters. Prog Phys Geogr 33(2):183–207

Lantada N, Pujades LG, Barbat AH (2004) Risk scenarios for Barcelona, Spain. In: Proceedings of the 13th world conference on earthquake engineering, Vancouver, BC, Canada, 1–6 August 2004

Linke J, McDermid GJ (2011) A conceptual model for multi-temporal landscape monitoring in an object-based environment. IEEE J Sel Topics Appl Earth Obs Remote Sens 4(2):265–271

Lynch KP, Rowe KL, Liel AB (2011) Seismic performance of reinforced concrete frame buildings in southern California. Earthq Spectra 27(2):399–418

Mallet C, Bretar F (2009) Full-waveform topographic lidar: state-of-the-art. ISPRS J Photogramm Remote Sens 64(1):1–16. doi:10.1016/j.isprsjprs.2008.09.007 ISSN 0924-2716

Matsuoka M, Yamazaki F (2002) Application of the damage detection method using SAR intensity images to recent earthquakes. In: Proceedings of the IGARSS 2002, Toronto, Canada, June 24–28, vol 4, pp 2042–2044

Matsuoka M, Yamazaki F (2004) Use of satellite SAR intensity imagery for detecting building areas damaged due to earthquakes. Earthq Spectra 20(3):975–994

Otani S (2002) Seismic vulnerability assessment methods for buildings in Japan. Earthq Eng Eng Seismol 2(2):44–56

Polli D, Dell’Acqua F, Gamba P (2009) First steps towards a framework for earth observation (EO)-based seismic vulnerability evaluation. Environ Semeiot 2(1):16–30. doi:10.3383/es.2.1.2 ISSN 1971-3460 (online)

Schmitt M, Magnard C, Brehm T, Stilla U (2011) Towards airborne single pass decimeter resolution SAR interferometry over urban areas. In: Photogrammetric image analysis. Lecture Notes in Computer Science, vol 6952. Springer, Berlin, pp 197–208. doi:10.1007/978-3-642-24393-6_17

Stramondo S, Bignami C, Chini M, Pierdicca N, Tertulliani A (2006) The radar and optical remote sensing for damage detection: results from different case studies. Int J Remote Sens 27:4433–4447

Taubenböck H, Roth A, Dech S (2007) Vulnerability assessment using remote sensing: the earthquake prone mega-city Istanbul, Turkey. In: Proceedings of the international symposium on remote sensing of environment (ISRSE 2007), San Jose, Costa Rica

Tertulliani A, Arcoraci L, Berardi M, Bernardini F, Camassi R, Castellano C, Del Mese S, Ercolani E, Graziani L, Leschiutta I, Rossi A, Vecchi M (2011) An application of EMS98 in a medium-sized city: the case of L’Aquila (Central Italy) after the April 6, 2009 Mw 6.3 earthquake. Bull Earthquake Eng 9:67–80. doi:10.1007/s10518-010-9188-4

The Global Earthquake Model (2012) [online]. Available: http://www.globalquakemodel.org/. Latest access 15 Mar 2012

The Group on Earth Observations (2012) [online]. Available: http://www.earthobservations.org/. Latest access 15 Mar 2012

Vicente R, Parodi S, Lagomarsino S, Varun H, Mendes da Silva JAR (2008) Seismic vulnerability assessment, damage scenarios and loss estimation. Case Study of the Old City Centre of Coimbra, Portugal. In: Proceedings of the 14th world conference on earthquake engineering, Beijing, China, 12–17 October 2008

Voigt S, Kemper T, Riedlinger T, Kiefl R, Scholte K, Mehl H (2007) Satellite image analysis for disaster and crisis-management support. IEEE Trans Geosci Remote Sens 45(6):1520–1528

The Supersites Initiative (2012) [online]. Available: http://supersites.earthobservations.org/sendai.php

Yonezawa C, Takeuchi S (2001) Decorrelation of SAR data by urban damage caused by the 1995 Hyogoken-Nanbu earthquake. Int J Remote Sens 22(8):1585–1600

Acknowledgments

The open access option charges were covered by the Italian Civil Protection Department in the framework of the “Progetto Esecutivo” as an action in line with its own policy of increasing dissemination of research results.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.


About this article

Cite this article

Dell’Acqua, F., Lanese, I. & Polli, D.A. Integration of EO-based vulnerability estimation into EO-based seismic damage assessment: a case study on L’Aquila, Italy, 2009 earthquake. Nat Hazards 68, 165–180 (2013). https://doi.org/10.1007/s11069-012-0490-0


DOI: https://doi.org/10.1007/s11069-012-0490-0