Abstract

The ability to accurately forecast potential hazards posed to coastal communities by tsunamis generated seismically in both the near and far field requires knowledge of so-called source coefficients, from which the strength of a tsunami can be deduced. Seismic information alone can be used to set the source coefficients, but the values so derived reflect the dynamics of movement at or below the seabed and hence might not accurately describe how this motion is manifested in the overlaying water column. We describe here a method for refining source coefficient estimates based on seismic information by making use of data from Deep-ocean Assessment and Reporting of Tsunamis (DART\(^{\circledR}\)) buoys (tsunameters). The method involves using these data to adjust precomputed models via an inversion algorithm so that residuals between the adjusted models and the DART\(^{\circledR}\) data are as small as possible in a least squares sense. The inversion algorithm is statistically based and hence has the ability to assess uncertainty in the estimated source coefficients. We describe this inversion algorithm in detail and apply it to the November 2006 Kuril Islands event as a case study.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tsunamis have been recognized as a potential hazard to United States coastal communities since the mid-twentieth century when multiple destructive tsunamis caused damage to the states of Hawaii, Alaska, California, Oregon, and Washington. The National Oceanic and Atmospheric Administration (NOAA) responded to these disasters with the establishment of two Tsunami Warning Centers responsible for providing warnings to the United States and her territories (these centers also provide warnings internationally to participating nations in the Pacific, Atlantic, and Indian Oceans, as well as the Caribbean Sea Region). In addition, the agency assumed the leadership role in the area of tsunami observations and research and has been measuring tsunamis in the deep ocean for several decades. The scale of destruction and unprecedented loss of life following the December 2004 Sumatra tsunami prompted a strengthening of efforts to address the threats posed by tsunamis, and, on 20 December 2006, the United States Congress passed the “Tsunami Warning and Education Act” (Public Law 109–424, 109th Congress). Central to the goal of protecting United States coastlines is a “tsunami forecasting capability based on models and measurements, including tsunami inundation models and maps \(\ldots\).” To meet this congressionally mandated forecasting capability, the NOAA Center for Tsunami Research has developed the Short-term Inundation Forecast for Tsunamis (SIFT) application (Gica et al. 2008; Titov 2009). This application is designed to rapidly and efficiently forecast tsunami heights at specific coastal communities.

At each community, estimates of tsunami wave arrival time and amplitude are provided by combining real-time tsunami event data with numerical models. Several key components are integrated within SIFT: deep-ocean observations of tsunamis collected near the tsunami sources, a basin-wide precomputed propagation database of water level and flow velocities based on potential seismic unit sources, an inversion algorithm to estimate coefficients associated with unit sources based upon the deep-ocean observations, and high-resolution tsunami forecast models developed for specific at-risk coastal communities. As a tsunami wave propagates across the open ocean, Deep-ocean Assessment and Reporting of Tsunamis (DART\(^{\circledR}\)) buoys observe the passage of the wave and relay data related to its arrival time and amplitude in real or near real time for use with SIFT (these buoys are one form of a specialized instrument known as a tsunameter). The SIFT application uses the reported observations to refine an initial assessment of the magnitude of the tsunami that is based purely on seismic information. The refinement is done by comparing observations from the DART\(^{\circledR}\) buoys to models in the precomputed propagation database via an inversion algorithm.

In this article, we focus on the inversion algorithm, which combines data from DART\(^{\circledR}\) buoys with precomputed models to yield refined estimates of the source coefficients. We begin with an overview of the SIFT application (Sect. 2), after which we describe the data collected by the DART\(^{\circledR}\) buoys (Sect. 3). We then discuss the precomputed time series of model tsunamis for the DART\(^{\circledR}\) buoy locations in Sect. 4 in preparation for a detailed description of the inversion algorithm in Sect. 5. Here we also illustrate use of the algorithm on the November 15, 2006 Kuril Islands event (this provides a good example since this event was observed by eleven buoys in the North Pacific Ocean along the Aleutian Islands and a considerable portion of the North American coastline). The inversion algorithm is based upon a statistical model, and hence, we are able to assess the uncertainty in the resulting source coefficient estimates. We end the main body of the paper with a discussion of potential extensions and refinements to the SIFT application in Sect. 6 and with our conclusions in Sect. 7. (We gives some technical details in Appendix 1 on assessing the uncertainty in the estimated source coefficients. In Appendix 2, we use the coefficient estimates and their associated uncertainties to come up with a measure of the strength of the tsunami-generating event that is of interest to compare with the seismically determined moment magnitude.)

2 Short-term inundation forecast for tsunamis (SIFT)

The SIFT application exploits the fact that the ocean acts as a low-pass filter, allowing long-period phenomenon such as tsunamis to be detected by measurement of pressure at a fixed point on the seafloor (Meinig et al. 2005). The strategy behind SIFT is to assess the potential effect of a tsunami by combining pressure measurements collected in real time with models, thus refining an initial assessment based purely on seismic data available soon after an earthquake. SIFT is intended to be an operational system that provides its assessments in a timely manner. Given that computations concerning wave generation, propagation, and inundation must be done under time constraints, SIFT makes use of a precomputed propagation database containing water elevations and flow velocities that are generated by standardized earthquakes located within “unit sources,” which are strategically placed near ocean basin subduction zones (Gica et al. 2008). Within SIFT, model time series are extracted from the numerical solution to the propagation of tsunami waves throughout the ocean basin as generated at the unit sources. Dynamics of these tsunami waves in the open ocean allow them to be linearly combined to mimic observed data. The SIFT application is designed primarily to predict trans-oceanic tsunamis rather than smaller regional events because of operational considerations and has certain other limitations in its current implementation (e.g., the unit sources all have the same fixed dimensions).

An inversion algorithm is used to estimate source coefficients that adjust the amplitudes of the precomputed models from each unit source using deep-ocean measurements collected by DART\(^{\circledR}\) buoys. These coefficients, once estimated by the inversion algorithm, provide the boundary conditions under which nonlinear inundation models are run to provide forecasts of incoming tsunami waves at threatened coastal communities. These models are run independent of one another in real time while a tsunami is propagating across the open ocean. The models provide an estimate of wave arrival time, wave height, and inundation following tsunami generation. Each inundation model has been designed and tested against historical events to perform under very stringent time constraints, given that time is generally the single limiting factor in saving lives and property. A total of seventy-five community inundation models are scheduled for completion at the end of federal fiscal year 2012.

3 Bottom pressure measurements from DART\(^{\circledR}\) buoys

A DART\(^{\circledR}\) buoy actually consists of two separate units, namely, a surface buoy and a bottom unit with a pressure recorder (NOAA Data Management Committee 2008). These units communicate with each other via acoustic telemetry, and the surface buoy in turn communicates with tsunami warning centers via the Iridium Satellite System. The bottom pressure recorder internally measures water pressure, which, for operational purposes, is converted within the bottom unit to equivalent sea surface elevation using the factor 670.0 mm/psi, i.e., 0.09718 mm/Pa (Mofjeld 2009). Some research studies involving bottom pressure might require a more exact conversion factor, an example being the tidal study by Mofjeld et al. (1995). The local water-column averaged product of the in-situ water density and acceleration of gravity is then used to compute the factor.

The water pressure is integrated over nonoverlapping 15-s time windows, so there are \(60\,\times\,4 = 240\) measurements every hour. We associate each window with an integer-valued time index n. For simplicity, we adopt the convention that \(n\,=\,0\) corresponds to the 15-s time window during which a particular tsunami-generating earthquake of interest commenced. The actual time associated with the nth time window is \(t_n = a + n \Updelta\) (in hours), where a is a fixed offset and \(\Updelta\,=\,1/240\,\doteq\,0.004167\) h. In what follows, it is convenient to set \(a\,=\,0\) so that t n is the elapsed time from the 15-s window containing the earthquake event. We denote the internal measurements by x n , where \(n\,<\,0\) (or \(n\,>\,0\)) is the index for a measurement recorded before (or after) the earthquake.

The internally recorded x n measurements only become fully available when the bottom unit is lifted to the surface for servicing (about once every two years). Normally, the buoy operates in a monitoring mode in which the bottom unit packages together one measurement every 15 minutes (a 60 fold reduction in data) over a 6-h block for transmission up to the surface buoy once every 6 h. We refer to measurements from this monitoring mode as coming from the “15-min stream.” Let \(n_l,\,l= -1, -2, \ldots\), represent the indices associated with the portion of the 15-min stream that occurs just prior to the \(n\,=\,0\) measurement (the measurement x 0 itself might or might not be available). Typically, we have \(n_{l} - n_{l-1} = 60\), but this need not be true for all l due to data dropouts. Also note that n −1 itself need not be a multiple of 60 since the earthquake can occur anywhere within the 15-min reporting cycle.

The bottom unit switches out of monitoring mode into a rapid reporting mode either automatically if a seismic event is detected by a DART\(^{\circledR}\) buoy or when forced to do so by an operator at a tsunami warning center sending a signal via satellite to the surface buoy, which then sends an initiating signal to the bottom unit. When in rapid reporting mode, the bottom unit transmits to the surface buoy either a full reporting of the 15-s data (from the “15-s stream”) or a reporting of 1-min averages, i.e., the average of four consecutive x n values (from the “1-min stream”). The index for a 1-min average is the index associated with the most recent 15-s time window used in forming the average:

Let \(n_l,\,l= 0, 1, \ldots\), represent the indices associated with the data that are available after (and possibly including) the \(n\,=\,0\) measurement. Ignoring the occurrence of dropouts, we have \(n_{l} - n_{l-1} = 1\) when dealing with just the 15-s stream; by contrast, if both \(\bar x_{n_{l-1}}\) and \(\bar x_{n_l}\) are from the 1-min stream, then \(n_{l} - n_{l-1} = 4\). Currently, the inversion algorithm uses time series extracted from the 1-min stream primarily, but it can make use of additional measurements from the 15-s or 15-min streams when available and as needed.

Figure 1 shows examples of time series from the 15-min and 1-min data streams as recorded by DART\(^{\circledR}\) buoy 21414 before and after the November 15, 2006 Kuril Islands earthquake. Note that there is a gap between the two time series. This gap is due to dropouts in the 15-min stream, which disappeared temporarily more than an hour before the earthquake and did not reappear again until more than 12 h later (well after the tsunami had passed by this buoy). Even if portions of the 15-min stream had not been lost, the time series available for use with the inversion algorithm during the critical time period following the earthquake might well have been limited to what is shown in the figure due to the fact that data from the 15-min stream are transmitted in 6-h blocks once every 6 h. Thus, assuming that the last value shown in the figure from the 15-min stream was in a 6-h block transmitted soon after it was recorded, the portion of the 15-min stream that would have filled in the gap would not have been scheduled for transmission until almost 5 h after the earthquake.

Data from DART\(^{\circledR}\) buoy 21414 recorded before and after November 15, 2006 Kuril Islands earthquake. The black dots show a time series extracted from the 15-min stream, while the black curve is from the 1-min stream. Time is in hours from the earthquake (a negative/positive time is before/after the start of the earthquake)

The time series in Fig. 1 have a prominent tidal component that must be removed prior to use of the inversion algorithm described in Sect. 5. Detiding must be done nearly in real time and is not a simple matter. We have explored approaches based on harmonic models, Kalman filtering/smoothing, empirical orthogonal functions and low-order polynomial fits (Percival et al. 2011). In what follows, we assume that data x n from the 15-s or 15-min streams or data \(\bar{x}_n\) from the 1-min stream have been suitably detided. We denote the detided data by d n and \(\bar{d}_n\). (We detided the data using a Kalman filter/smoother in all the examples presented below.)

4 Models for DART\(^{\circledR}\) buoy data

The purpose of the inversion algorithm is to use models to estimate the tsunami source strength and associated confidence limits from observed DART\(^{\circledR}\) data. Formulation of these models is discussed in detail in Titov et al. (1999) and Gica et al. (2008), from which the following overview is extracted. Seventeen tsunami source regions are defined along portions of the Pacific and Indian Oceans from which earthquake-generated tsunamis are likely to occur (there are also source regions defined for the Atlantic Ocean and Caribbean Sea). Each source region is divided up into a number of “unit sources.” For example, the Aleutian-Alaska-Canada-Cascadia source region consists of 130 unit sources, each of which has an area of \(100\,\times\,50\,\hbox{km}^2\) (see Fig. 3 below). A database has been constructed containing precomputed adjustable models that predict what would be observed at a given DART\(^{\circledR}\) buoy from the beginning of an earthquake event and onwards. This prediction is based on the assumption that the earthquake was located in a particular unit source and was of moment magnitude \(M_W\,=\,7.5\) from a reverse thrust of appropriate strike, dip, and depth (this corresponds to a co-seismic slip of 1 m along the fault in the down-dip direction with a rigidity of \(4.0\,\times\,10^{11}\,\hbox{dynes/cm}^2\); Sect. 5.2 has more details about the unit sources). The fault movement is assumed to be instantaneous and results in a vertical ground displacement, as computed by an elastic model developed independently by Gusiakov (1978) and Okada (1985), that generates the tsunami for the unit source. The database thus has a precomputed model for each pairing of a particular buoy and particular unit source.

Adjustable model for DART\(^{\circledR}\) buoy 21414 from an earthquake presumed to have originated in unit source a12 in the Kamchatka–Kuril–Japan source region. The black dots indicate the model values stored in the database at 1-min intervals. The black curve is a cubic spline interpolation of the model outside of the values in the database. The circles show the spline-interpolated values at the times associated with the 1-min stream transmitted from the buoy during the November 15, 2006 Kuril Islands tsunami event. Time is in hours since the earthquake

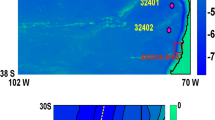

Locations (upper panel) of five unit sources (a12, a13, a14, z13, and z14) and eleven DART\(^{\circledR}\) buoys (21414, 46413, \(\ldots\), 46412), along with detided data from these buoys (gray circles, lower panel) and fitted models (solid curves) for the Kuril Islands event of November 15, 2006. The unit sources a13, a14, z13, and z14 were selected using seismic information only, while a12, a13, and a14 (used to determine the fitted models) came from a trial-and-error process involving examination of residuals from models fitted to various combinations of unit sources. The estimated coefficients \(\hat{\alpha}_1,\,\hat{\alpha}_2,\) and \(\hat{\alpha}_3\) (corresponding to a12, a13, and a14) used data from the buoys 1, 2, 3, and 4. Because the data for buoys 8 and 9 arrived at approximately the same time, the data and models for these have been displaced up and down by 5 cm

Each adjustable model was constructed with a 15-s time step, but, to save space in the database, was subsampled down to a discrete grid of times with a 1-min spacing. In general, the times used in a precomputed model might or might not correspond to the times at which the DART\(^{\circledR}\) buoy data were actually collected relative to the start of the earthquake. To facilitate matching the observed data with an adjustable model, we use cubic splines to interpolate the model. Let g(t) represent the spline-interpolated model at an arbitrary time t for a particular unit source and DART\(^{\circledR}\) buoy. The adjustable model value corresponding to a measurement \(x_{n_l}\) from that buoy over a 15-s time window associated with the elapsed time \(t_{n_l}\) is just \(g(t_{n_l})\). A 1-min average \(\bar{x}_{n_l}\) consists of an average of \(x_{n_l-3},\,x_{n_l-2},\,x_{n_l-1}\) and \( x_{n_l}\), so its associated adjustable model is an average of \(g(t_{n_l-3}),\,g(t_{n_l-2}),\,g(t_{n_l-1})\) and \(g(t_{n_l})\).

Figure 2 shows an example of a spline-interpolated adjustable model g(t) (black curve), which is based upon values precomputed at 1-min intervals and stored in the database (black dots). This model is for DART\(^{\circledR}\) buoy 21414 for an earthquake originating from unit source a12, which is in the Kamchatka–Kuril–Japan source region (see Fig. 3 below). During the November 15, 2006 Kuril Islands event, this buoy transmitted a 1-min stream \(\bar{x}_{n_l}\) at times \(t_{n_l}\). These times did not coincide exactly with those of the precomputed model. The circles in the plot show the spline-interpolated values \(g(t_{n_l})\) versus \(t_{n_l}\), each of which would be the adjustable prediction for a single (unavailable) 15-s average \(x_{n_l}\) (the corresponding prediction for the available \(\bar{x}_{n_l}\) would be the average of \(g(t_{n_l})\) and three values associated with times occurring 15, 30, and 45 s earlier). As the example shows, the cubic spline interpolation provides accurate estimates of the model values at the times of the DART\(^{\circledR}\) observations.

5 Inversion algorithm for extracting source coefficients

The purpose of the inversion algorithm is to use data collected by DART\(^{\circledR}\) buoys (after appropriate detiding) to produce an estimate of the initial fluid conditions or source of an earthquake-generated tsunami. As noted in the previous section, the inversion algorithm depends upon a database of precomputed models to produce synthetic boundary conditions of water elevation to initiate the forecast model computation. These models are scaled to unit sources that are based upon a moment magnitude \(M_W\,=\,7.5\) inverse-thrust fault earthquake with the assumed parameters, each corresponding to a segment of a subduction zone. There is a model in the database for every pairing of a particular unit source with a particular buoy. This model predicts what would be observed at the buoy if an earthquake was to originate from a selected unit source. The inversion algorithm adjusts the amplitude of the precomputed model to match the time series observed at the DART\(^{\circledR}\) buoys. The adjustment takes the form of a multiplicative factor, which we denote by α and refer to as the source coefficient. This coefficient in effect defines the tsunami source or fluid origin of a tsunami as the initial sea-surface deformation resulting from the transfer of energy released by geophysical processes to the fluid body. A value for α that is greater (less) than unity means that the modeled initial sea-surface deformation is greater (less) than what would arise from the standard \(M_W\,=\,7.5\) earthquake scenario.

The inversion algorithm estimates α by matching the precomputed model and the detided data from the buoy via a least squares procedure. As discussed below, the algorithm takes into account the possibilities that the earthquake might be attributable to more than just a single unit source (so that the adjustments take the form of a vector \(\varvec{\alpha}\) of multiplicative factors) and that more than one buoy might have collected data relevant to a particular event. In Sect. 5.1, we present the inversion algorithm under the simplifying assumptions that we know (1) the appropriate unit sources associated with the earthquake and (2) the portions of the detided buoy data that are relevant for assessing the tsunami event (we pay particular attention to assessing the effect of sampling variability on our estimates of \(\varvec{\alpha}\)). Proper selection of the unit sources and of the relevant data is vital for getting good results from the inversion algorithm. We discuss source selection in Sect. 5.2 and data selection in Sect. 5.3. (For earlier related work on inversion algorithms, see Johnson et al. 1996; Wei et al. 2003.)

5.1 Estimation and confidence limits for \(\varvec{\alpha}\)

Suppose that we have selected one or more unit sources to explain the tsunami event along with relevant subsets of the detided DART\(^{\circledR}\) data. Let \(J\,\geq\,1\) represent the number of buoys whose data are to be used in the inversion algorithm, and let \(K\,\geq\,1\) be the number of unit sources. Let d j be a column vector of length N j that contains the detided data from the jth buoy, where \(j=1,\ldots,J\) (this can consist of an arbitrary mixture of data from the 15-min, 1-min, and 15-s streams). Let \({\bf g}_{j,k},\,k=1,\ldots,K\), be a vector of length N j containing the adjustable model that predicts how the tsunami from a moment magnitude \(M_W\,=\,7.5\) earthquake from the kth unit source would be recorded at the jth buoy. The overall model for the data from the jth buoy is taken to be a linear combination of the models associated with the K unit sources; i.e., we write

where α k is the source coefficient for the kth unit source, and e j is a vector of N j error terms that accounts for the mismatch between the idealized overall model and the observed data. We can rewrite the above as

where G j is an N j × K matrix whose kth column is g j,k, while \(\varvec{\alpha}\) is a column vector of length K containing \(\alpha_1, \ldots, \alpha_K\). The models for the data from the individual buoys can be stacked together to form a model for the data from all the buoys, namely,

where \({\bf d} \equiv \left[{\bf d}^T_1, \ldots, {\bf d}^T_J\right]^T\) is a column vector of length \(N \equiv N_1 +\cdots+N_J\) formed by stacking the individual d j on top of one another (here and elsewhere, the superscript “T” denotes the transpose of a vector or a matrix); \(G\equiv \left[G^T_1,\ldots, G^T_J\right]^T\) is an \(N\,\times\,K\) matrix formed in a similar manner by stacking the G j together; and e is an N-dimensional column vector of errors whose nth element is e n .

While the data d and their models G are known, the K source coefficients in \(\varvec{\alpha}\) are not, so we need some way of determining them. Under scenarios that the SIFT application is intended to handle, the amount of data N from all the buoys is much greater than the number of unit sources K. Since there are more equations N in (2) than unknowns K, we must resort to some additional criterion to find an appropriate \(\varvec{\alpha}\). One reasonable—and time-honored—criterion is to find the vector such that the sum of squares of the error terms is as small as possible; i.e., we want \(\varvec{\alpha}\) to be such that

where \(\| {\bf e} \|\) is the Euclidean norm of the vector e:

This least squares estimator, say \(\hat{\varvec{\alpha}}\), is the solution to the so-called normal equations:

There are K equations and K unknowns in the above, so we can determine a unique estimator \(\hat{\varvec{\alpha}}\) for \(\varvec{\alpha}\) as long as G T G can be inverted. Although G T G is typically invertible, there is no guarantee that it is such, and numerical problems might prevent a routine that banks upon invertibility from coming up with a stable solution. Because of these considerations, we solve (4) using a singular value decomposition, which, when G T G is invertible, yields a numerically stable \(\hat{\varvec{\alpha}}\) and, when G T G has a rank lower than K, leads to a solution corresponding to the application of the so-called Moore–Penrose generalized inverse.

A potential complication with the solution to (4) is that the estimated \(\hat \alpha_k\) in \(\hat{\varvec{\alpha}}\) might be a mixture of positive and negative values. This introduces the possibility that prominent random fluctuations in the data that cannot be handled by a model from a single unit source are being matched by a combination of models with \(\hat{\alpha}_k\)’s that essentially cancel one another out, even though each \(|\hat{\alpha}_k|\) might be large. A mixture of positive and negative values for \(\hat{\alpha}_k\) is difficult to reconcile with the physics of earthquake generation. To prevent such a mixture, we can alter the least squares criterion such that we seek \(\varvec{\alpha}\) such that

i.e., \(\alpha_k\,\geq\,0\) for \(k\,=\,1,\ldots,K\). This minimization problem is a special case of Problem 10.1.1 of Fletcher (1987), and the method we use to solve it is a variation of Algorithm 10.3.4 in that same reference. Nonnegativity constraints are appropriate for the great majority of tsunamigenic earthquakes in subduction zones, which are the primary source of major trans-ocean tsunamis; however, exceptions do occur, as discussed in Sect. 6.

The constrained least squares procedure can result in some \(\hat{\alpha}_k\) being set to zero, which in effect removes the corresponding unit sources from our model for the data. If we were to entertain a reduced model made up of just the unit sources in our original model for which \(\hat{\alpha}_k\,>\,0\), the unconstrained least squares estimate for each unit source in the reduced model will be identical to the corresponding constrained least squares estimate in the original model. Accordingly, if need be, we redefine G by eliminating any unit sources for which \(\hat{\alpha}_k\,=\,0\) originally and redefine K to be the number of remaining unit sources. The end result of the constrained least squares procedure is thus a model that can be fit using unconstrained least squares. The corresponding fitted model is

where \(\hat{\varvec{\alpha}}>0\), and r contains the residuals, i.e., the observed errors \({\bf r} = {\bf d} - G\hat{\varvec{\alpha}}\). Conditional upon the selected model, these residuals can be examined to assess the sampling variability in the estimates \(\hat{\varvec{\alpha}}\) using statistical theory, the details of which are given in Appendix 1. Since \(\varvec{\alpha}\) is of length K, this assessment takes the form of a \(K\,\times\,K\) covariance matrix \(\Upsigma\) for \(\hat{\varvec{\alpha}}\). The kth diagonal element of \(\Upsigma\) gives us the variance of \(\hat{\alpha}_k\), while the (k, l)th off-diagonal element is the covariance between \(\hat{\alpha}_k\) and \(\hat{\alpha}_l\).

As an example, we consider the Kuril Islands event of November 15, 2006 (see Horrollo et al. 2008; Kowalik et al. 2008 for additional analyses of this event). Portions of the data received from \(J\,=\,11\) buoys during the event are shown (after detiding) as gray circles in the bottom panel of Fig. 3. The locations of the buoys are shown in the upper panel. Although the earthquake emanated from the Kamchatka–Kuril–Japan (KKJ) source region, ten of the eleven DART\(^{\circledR}\) buoys were positioned close to the Aleutian–Alaska–Canada–Cascadia source region. Additional buoys have been deployed since 2006, some now close to the KKJ source region (the reader can go to http://www.ndbc.noaa.gov/dart.shtml to see where buoys are currently deployed). The displayed data for four of the buoys (21414, 46413, 46408, and 46402) were fit to a model involving \(K\,=\,3\) unit sources (denoted as a12, a13, and a14—the rectangles representing their locations are shaded in dark gray in the insert in the upper panel). The curves in the bottom panel depict the fitted models at all eleven buoys. The fitted models and data are in reasonably good agreement, which demonstrates the efficacy of the procedure in modeling this event over a rather large oceanic area. The estimated source coefficients and their covariance matrix are

The square roots of the diagonal elements of \(\Upsigma\) give the standard errors of the corresponding elements of \(\hat{\varvec{\alpha}}\). We can form approximate 95% confidence intervals (CIs) for the true unknown source coefficients \(\varvec{\alpha}\) by multiplying the standard errors by 1.96 and then adding and subtracting the resulting products from the estimates \(\hat{\varvec{\alpha}}\). This procedure yields 95% CIs of [5.03, 6.73] for α1 (source a12), [3.25, 5.22] for α2 (a13), and [0.78, 3.81] for α3 (a14). Note that none of these CIs traps zero. Had the kth such interval done so, we would be unable to reject the null hypothesis that the unknown α k is equal to zero at the 5% level of significance. Since the CIs indicate that none of the unknown α k ’s are likely to be zero, we can deem all three source coefficients to be significantly different from zero with level of significance of 0.05.

The results shown in Fig. 3 are based upon using data from the first four DART\(^{\circledR}\) buoys to observe the Kuril Islands event. Figure 4 shows the effect on the estimated source coefficients caused by using differing numbers of buoys. We start by using data from the first buoy to see the tsunami event (21414) and then add in one buoy at a time in the order dictated by the arrival times of the tsunami event. The estimated α k is fairly stable across time, with the width of the 95% CIs decreasing markedly upon addition of the next two buoys (46413 and 46408) and then gradually after that, up until the addition of the last four buoys (46419, 46405, 46411, and 46412). The fact that the CIs increase upon adding these final buoys can be traced to a misalignment in time between the models and observed data, as is evident in the bottom panel of Fig. 3. For a variety of reasons (including inadequate bottom depth (bathymetry) information, assumed wave dynamics, limited spatial resolution in the model, and issues related to finite difference approximations to the equations of motion), any mismatch in propagation time between actual and modeled tsunamis will tend to increase with distance from the unit source. The recent deployment of addition DART\(^{\circledR}\) buoys ensures that most earthquake-generated tsunamis will be observable with near-field buoys. This allows reservation of far-field buoys more for confirmatory use rather than actual determination of the source coefficients during a tsunami event, as we have done in this example. Figure 4 also suggests that, in this example, use of two or three near-field buoys suffices to get good α k estimates (this also proved to be true for events studied by Tang et al. 2008 and Wei et al. 2008). Because adding more buoys does not lead to a marked improvement in the statistical properties of \(\hat{\alpha}_k\), there is fortunately no operational need to wait for additional data to arrive before proceeding with use of the estimated source coefficients to drive inundation models for coastal communities.

Estimated source coefficients \(\hat{\varvec{\alpha}}\) and associated 95% confidence intervals (CIs) versus number of buoys used in the least squares fit for the Kuril Islands event depicted in Fig. 3. The source coefficients \(\hat{\alpha}_1,\,\hat{\alpha}_2,\) and \(\hat \alpha_3\) (corresponding to unit sources a12, a13 and a14) are depicted by, respectively, open circles, solid circles, and open squares

Figure 5 shows estimated source coefficients based upon pairs of buoys for the set-up depicted in Fig. 5. Since we have eleven buoys in all, there are 55 two-buoy combinations in all. The upper three plots depict, from top on down, \(\hat{\alpha}_1,\,\hat{\alpha}_2\) and \(\hat{\alpha}_3\) for these 55 combinations as solid and open circles. A study of the trans-oceanic propagation (beam) pattern for this tsunami suggests classifying the buoys labeled as 1, 2, 3, and 4 in Fig. 5 as being in the near-field. The remaining seven buoys are in the far field, but four (8–11) are still in the path of the main part of the beam, while the remaining three (5–7) are outside the main beam. Six of the 55 pairs involve the near-field buoys exclusively. These are the ones with \(\hat{\alpha}_k\)’s indicated by solid circles. The figure indicates that, when sampling variability is taken into account, using data from pairs of near-field buoys yields \(\hat{\alpha}_k\)’s that are relatively consistent in the sense of being generally—but not always—within each others 95% CIs. By contrast, some of the most discordant \(\hat{\alpha}_k\)’s involve the three far-field buoys outside the main beam. Our finding that data from two or three buoys suffices for stable α k estimation must be tempered by the fact that the locations of the buoys play an important role also.

Estimated source coefficients \(\hat{\alpha}_1,\,\hat{\alpha}_2,\) and \(\hat{\alpha}_3\) using pairs of buoys in the constrained least squares fit (circles in top three plots) and their sum \(\hat{\alpha}_1 + \hat{\alpha}_2 +\hat{\alpha}_3\) (bottom plot), along with associated 95% confidence intervals. The buoys are numbered as in Fig. 3, which depicts the Kuril Islands event under consideration here. Starting from the left-hand side, the first ten circles (both solid and open) indicate \(\hat{\alpha}_k\)’s based upon data from buoy 1 in combination with, respectively, buoys 2, 3, \(\ldots\), 11; the next nine, buoy 2 paired with buoys 3, 4, \(\ldots\), 11; and so forth, with the right-most circle in each plot showing \(\hat{\alpha}_k\)’s based upon data from buoys 10 and 11. Six of the pairs involve just buoys 1, 2, 3, and 4. The values for these pairs are indicated by solid rather than open circles

The sum of the estimated α k ’s is also of interest since this measure of overall source strength provides initial conditions for models that predict coastal inundation. The bottom plot of Fig. 5 shows this sum for each of the 55 pairs, along with associated 95% CIs (standard statistical theory says that the standard error for this sum is given by the square root of the sum of all the elements in \(\Upsigma\)). Taking sampling variability into account, this sum is remarkably consistent among pairs with at least one buoy in the main beam. There are three pairs that do not fit this description, namely, (5,6), (5,7), and (6,7). These are associated with the three \(\sum \hat{\alpha}_k\)’s that are markedly smaller than the other 52. Thus, for this particular event, 52 of the 55 pairs would have produced similar initial conditions for the inundation models, but more cases need to be studied to ascertain whether we can expect this degree of consistency to occur routinely. Note that, even though the overall sum is relatively stable, the partitioning of the sum among its three \(\hat{\alpha}_k\)’s differs a fair amount across the 52 pairs; however, in the far field, well away from the source, the ambiguity in partitioning of source strength among the three unit sources should not markedly influence any inundation forecasts since the sources are so close together relative to the distance separating them from the region to be forecast.

5.2 Selection of sources

Selection of the unit sources to be used in the inversion algorithm is an iterative process commencing with preliminary estimates of the epicenter and moment magnitude M W for an earthquake with the potential for generating a tsunami. These estimates are provided by seismic networks and are available shortly after the occurrence of an earthquake and prior to the arrival of any relevant DART\(^{\circledR}\) data. We use these estimates to predetermine unit sources and their associated source coefficients α k as follows. First, we select K based upon the size of M W as indicated by Table 1, which is based on equations from Papazachos et al. (2004). Second, we select K unit sources using an algorithm that picks sources close to the epicenter, but in a pattern suggested by studies of past events. Third, we equate expressions for the seismic moment M 0 (which depends upon the unitless M W through an empirical relationships established by Hanks and Kanamori 1979) and an inversion-derived tsunami magnitude T M (which depends upon the coseismic slip S k , measured in cm):

where μ is the earth’s rigidity (taken to be \(4.0\,\times\,10^{11}\) dynes/\(\hbox{cm}^2\)); L and W are the length and width of each unit source measured in cm (the unit sources represent an area of \(100\,\times\,50\,\hbox{km}^2\), so \(L\,=\,10^7\) cm and \(W\,=\,L/2\)); and both M 0 and T M have units of \(\hbox{dynes}\cdot\hbox{cm}\). If we assume \(S_1=S_2=\cdots=S_K\) and equate T M with M 0, we obtain

Finally, we set the dimensionless α k by dividing S k by a reference value S 0:

For the unit source dimensions and rigidity chosen above, a reference value of \(S_0\,=\,100\) cm corresponds to \(M_W\,=\,7.5\).

As an example of this procedure, suppose we take the epicenter for the Kuril Islands event to be 46.592°N and 153.266°E and M W to be 8.3, as is currently listed on a USGS Web page (USGS 2010). Table 1 says to set \(K\,=\,4\), and the rule \(\alpha_k = S_k/S_0\) with \(S_0\,=\,100\) cm in conjunction with Eq. (9) yields \(\alpha_k\,\doteq\,3.95\) for \(k\,=\,1,2,3\), and 4. The epicenter of the earthquake is in unit source a13, and the algorithm picks z13, a14 and z14 in addition to a13 as the four unit sources (see Fig. 3, where the epicenter is indicated by an asterisk in the rectangle representing a13, with the rectangles for z13 and z14 being shaded in light gray). The solid curve in Fig. 6(a) shows the resulting model for what would be observed at buoy 21414 (Fig. 3 shows the location of this buoy). This model at time t takes the form

where g 1,k (t) is the spline-interpolated model for the kth unit source and buoy 21414 (indexed as j = 1). In principle, this model would have been available soon after the earthquake and prior to the arrival of the tsunami at any of the DART\(^{\circledR}\) buoys. The actual data recorded at buoy 21414 are indicated by circles and asterisks.

Three models for November 15, 2006 Kuril Islands tsunami at the location for buoy 21414 (solid curves), along with actual observations (gray circles and asterisks). In plot (a), the source coefficients α k for the model are based on Eqs. (9) and (10), which require knowledge of the epicenter and the moment magnitude of the earthquake; in plot (b), the same unit sources are used as in (a), namely, a13, z13, a14, and z14, but the α k ’s are now determined by a least squares fit with nonnegativity constraints to the portion of the data observed at buoy 21414 indicated by the circles; and in plot (c), a different set of unit sources is used, namely, a12, a13, and a14, with the α k ’s set in the same manner as for plot (b). The asterisks in each plot indicate data observed at the buoy, but not used in the least squares fits

Once sufficient DART\(^{\circledR}\) data become available, we can use the inversion algorithm with the initial selection of unit sources to obtain estimates \(\hat{\varvec{\alpha}}\) of the source coefficients—these estimates are refinements of the initial determination based on seismic information alone. Because of the nonnegativity constraints, it is possible that some, say K′, of the source coefficients will be set to zero, so that only K − K′ unit sources are retained in the model. An examination of the CIs for the remaining coefficients might recommend dropping additional unit sources whose corresponding \(\hat{\alpha}_k\)’s are not significantly different from zero. The solid curve in Fig. 6(b) is the model that results from using the subset of data from buoy 21414 (indicated by the gray circles in the bottom panel of Fig. 3) to obtain the least squares estimates \(\hat{\alpha}_k\). Here, \(K'\,=\,3\) of the coefficients were set to zero, thus eliminating unit sources z13, a14, and z14 from the model, while retaining only a13; however, the 95% CI for the α k corresponding to a13 indicates that its estimated coefficient is not significantly different from zero. The match between the models and the observed data is poor in both Fig. 6a, b—in fact the match in (a) based on seismic information alone is arguably more appealing visually than the one in (b) that makes use of the buoy data! By comparison, Fig. 6c shows that we can get a much better match with a different set of unit sources, namely, a12, a13, and a14 (as used in Figs. 3 and 4). This set was selected by trial and error from among all the units sources close to the epicenter. When source coefficients are subsequently used to provide initial conditions for models that forecast inundation, the contrast between Fig. 6a, b points out the possibility of actually degrading a seismically based forecast due to an inappropriate selection of unit sources in the inversion algorithm; on the other hand, the contrast between Fig. 6b, c suggests that an appropriate choice of unit sources can offer an improvement over the seismically based forecast. The interface for the SIFT application is designed to make it easy for an operator to add or remove unit sources, hence facilitating experimentation with various models.

5.3 Selection of data from buoys

Once we have an appropriate selection of unit sources, the inversion algorithm estimates \(\varvec{\alpha}\) based upon whatever selection of DART\(^{\circledR}\) buoy data we hand to it. It might seem obvious we would want to use as much data as possible since statistical theory would seem to suggest that, as the amount of data increases, the variability in \(\hat{\varvec{\alpha}}\) should tend to decrease, leading to a better estimate of \(\varvec{\alpha}\). There are, however, at least two reasons for entertaining smaller amounts of data. First, warnings to coastal communities must be provided in a timely manner—there is no luxury during a tsunami event of waiting for all possible relevant data to arrive from a DART\(^{\circledR}\) buoy. Second, empirical evidence suggests that models and data are not equally well matched across time. The quality of the match is time-dependent, suggesting that we focus on particular segments of the data for purposes of fitting the model. Here, we illustrate these points by considering the effect of data selection on the estimation of \(\varvec{\alpha}\) for the 15 November 2006 Kuril Islands tsunami.

Figure 7a shows the detided data (as circles and asterisks) obtained from buoy 21414 during the Kuril Islands tsunami event. The (subjectively determined) beginning of the event as observed at this buoy is indicated by the left-hand vertical dotted line. The data increase monotonically for a while, but then start to decrease. The right-hand line marks the time just following the crest of the first full tsunami wave (i.e., just after the so-called quarter-wave point). The two vertical lines delineate eleven data values. The left-most portions of the curves in Fig. 7b, c show results obtained by using the inversion algorithm with these eleven values to fit a model based upon unit sources a12, a13, and a14, while the remaining parts of the curves show what happens when we increase the amount of data going into the algorithm one value at a time. The solid curve in Fig. 7b indicates the estimate \(\hat{\alpha}_1\) for a12, whereas the dotted and dashed curves show, respectively, the estimates \(\hat{\alpha}_2\) for a13 and \(\hat{\alpha}_3\) for a14. The curve in Fig. 7c shows the so-called R 2 statistic, which is the percentage of the sample variance of the data explained by the model (this is the squared correlation—expressed as a percentage—between the observed data and the fitted model). As we keep giving the algorithm one more data value to work with, the change in \(\hat{\varvec{\alpha}}\) caused by addition of a new value tends to become smaller, indicating that, after a certain point in time, adding more data does not drastically change \(\hat{\varvec{\alpha}}\). The amount of variance explained by the model is relatively stable at the beginning, after which it starts to decrease markedly. The vertical dotted lines in Fig. 7b, c indicate the values for a fit involving the entire first full wave, as determined subjectively from an examination of the data (the circles in Fig. 7a denote this first full wave). Use of data from the first full wave gives us estimates of \(\varvec{\alpha}\) that do not differ markedly from those obtained with more data, with an associated R 2 statistic that is close to the maximum value.

Effect of estimating \(\varvec{\alpha}\) using different segments of data. Plot (a) shows detided data from buoy 21414 observed during the November 15, 2006 Kuril Islands event (circles and asterisks, with the former indicating data comprising the first full wave), along with a fitted model (solid curve) involving unit sources a12, a13, and a14 and the data indicated by the circles. The curves in plot (b) show the coefficient estimates \(\hat{\varvec{\alpha}}\) as we increase the amount of data that the estimates are based upon by one data value at a time (the estimates for a12, a13, and a14 are given by, respectively, the solid, dotted, and dashed curves). The two vertical dotted lines in (a) indicate the smallest segment of data used to estimate \(\varvec{\alpha}\) (eleven data values in all)—these estimates are indicated by the left-most portions of the curves in (b). The curve in plot (c) shows the R 2 statistic, which is the percentage of the sample variance of the data explained by the model. The vertical dotted lines in plots (b) and (c) indicate the \(\hat{\varvec{\alpha}}\) and R 2 values associated with the fit involving the first full wave

This Kuril Islands tsunami event is one in which the very first wave is the largest when observed at buoy 21414. This is because there is an unobstructed path for the tsunami to propagate from the source of the event to this buoy (see Fig. 3). For this case, we are thus better off just using the data up to the first complete wave to estimate \(\varvec{\alpha}\) since the data and the model disagree substantially beyond that point; i.e., the explanatory power of the model decreases beyond the first complete wave observed at the buoy. There are other situations in which later waves can be larger, in which case it would be desirable to use a longer stretch of the data for estimating the coefficients \(\varvec{\alpha}\). The interface for the SIFT application makes it easy for an operator to select the data to be used in the inversion algorithm.

6 Discussion

While the current version of the SIFT application is fully functional, here we discuss some possible extensions to the software that might impact upcoming versions.

The SIFT application is capable of estimating tsunami source coefficients in near real time, but there is a need to provide operators with help in its use during a tsunami event. As discussed above, two critical elements in successful use of the SIFT application are choosing a set of appropriate unit sources and selecting appropriate subsets of DART\(^{\circledR}\) buoy data. Currently, these choices depend upon experienced operators, but, for operators with limited experience (and as potential guidance for experienced operators under time constraints during a tsunami event), it is desirable to look for ways to automate the selection procedures. The problem of selecting unit sources is closely related to the topic of variable selection in linear regression analysis, for which there is a considerable literature we can draw upon for ideas. Complicating factors are the dynamic nature in which the data arrive, the potential desire to have spatially coherent unit sources, the correlated nature of the errors, and the possible interplay between selecting unit sources and subsetting the DART\(^{\circledR}\) data. The problem of selecting appropriate subsets of DART\(^{\circledR}\) buoy data is related to the topic of isolating transients, for which wavelets and other techniques for extracting a signal from a time series with nonstationary behavior can be looked to for guidance. How best to automatically select unit sources and to subset the DART\(^{\circledR}\) data are subjects of ongoing research.

A complicating factor we have not discussed is contamination of the DART\(^{\circledR}\) buoy data from seismic noise. While the November 2006 Kuril Islands tsunami event and many others are relatively free from such noise, there are cases where seismic noise is co-located in time with the tsunami event itself in the DART\(^{\circledR}\) data (see Uslu et al. 2011 for one example). How best to eliminate this noise is also the subject of ongoing research.

Another complication is that a tsunami can arise from an earthquake whose epicenter falls outside the set of all predefined unit sources. This happened with the 29 September 2009 Samoa event, which—after the event—prompted the addition of new unit sources to the database. In such a case, the current strategy within SIFT is to pick unit sources whose distance from the epicenter is as small as possible. For the Samoa event, it was possible to get good fits to data from individual buoys using this strategy, but not to data from a combination of buoys. This occurrence points out the need for a fail-safe option within the SIFT application for an operator to be able to set up new unit sources on the fly. Currently, implementation of this option faces substantial technical challenges due to the amount of time needed to compute the models.

As discussed in Sect. 5.1, there is a need to impose nonnegativity constraints on the estimated source coefficients as per Eq. (5). These constraints prevent a mixture of positive and negative estimates, which would be difficult to interpret physically. The assumption behind these constraints is a reverse thrust mechanism for the earthquake, which is the most common occurrence for major subduction zones. An earthquake can, however, be caused by a normal, or thrust, mechanism, for which we would then want to entertain nonpositivity constraints; i.e., in contrast to Eq. (5), we now seek \(\varvec{\alpha}\) such that

The SIFT application currently gives the operator the option of imposing nonnegativity, nonpositivity, or no constraints, with nonnegativity constraints being the default. It might be possible to provide guidance in selecting between nonnegativity and nonpositivity constraints from an analysis of the initial seismic waves from an earthquake, but the feasibility of doing so needs further research.

The primary use for the source coefficients that the inversion algorithm produces is to provide initial conditions for models that forecast inundation in particular coastal regions. Currently, the forecasts of wave heights and run-up in areas likely to be impacted by a tsunami do not take into account the uncertainty in the source coefficient estimates. Research is needed to determine how this uncertainty impacts these forecasts and how best to present this uncertainty to managers in charge of issuing warnings to coastal communities.

A secondary use for the source coefficients is to check that the size of the tsunami event is in keeping with the seismically determined moment magnitude M W . The fact that we can assess the sampling variability in the estimated source coefficients allows us to say whether the strength of the generating event as determined by the inversion algorithm is significantly different statistically from M W . Details are provided in Appendix 2, where we show that, for the Kuril Islands event, the two ways of assessing the strength give comparable results when sampling variability is taken into account.

The automated system in the current version of the SIFT application is designed to work for events with moment magnitudes M W at or below 9.3 (implying an initial selection of up to 24 unit source as per Table 1). An operator at a tsunami warning center can manually match a set of sources to the DART\(^{\circledR}\) data that extend over large distances along a fault zone if the automated system is deemed to have picked an inappropriate distribution of sources. A possible avenue for future development would be to enhance the automated system for handling larger events, but there are a number of technical issues to overcome (e.g., the timing of the contribution from the sources might need to be adjusted when sources are spread out widely because the assumption of simultaneity might be violated).

Finally, we note that all computations and graphics in this article were done in the R language (Ihaka and Gentleman 1996; R Development Core Team 2010). Portions of the computational R code were translated into Java, which, with augmentations, is the basis for the SIFT application.

7 Summary and conclusions

The SIFT application is a tool developed at the NOAA Center for Tsunami Research to estimate source coefficients during an on-going tsunami event. These source coefficients are needed in a timely manner as input to inundation models that can forecast tsunamis at coastal communities. While the source coefficients can be set initially based solely on seismic information, experience has shown that these initial settings can be improved upon substantially by estimating the coefficients based upon DART\(^{\circledR}\) buoy data collected during the on-going event. The SIFT application is designed to compute these refined estimates soon after the DART\(^{\circledR}\) data become available by making use of a database of precomputed models. These geophysically based models predict what would be observed at each buoy given a standardized earthquake emanating from a set of unit sources. The refined estimates of the source coefficients are computed within SIFT via an inversion algorithm, which relates the data to the geophysically based models via a linear regression model. With suitable nonnegativity or nonpositivity constraints, this statistical model allows for physically interpretable source coefficient estimates, along with an assessment of their sampling variability. The model is formulated in a manner flexible enough to allow for arbitrary combinations of the different types of data reported by the DART\(^{\circledR}\) buoys (either pressure measurements integrated over 15-s time windows or averages of four such measurements, i.e., 1-min averages).

We demonstrated the efficacy of the inversion algorithm by applying it to data from the November 15, 2006 Kuril Islands event. This example shows that, assuming an appropriate choice of unit sources, estimates of the source coefficients based upon data from a single buoy produce a much better match to the observed DART\(^{\circledR}\) buoy data than what is provided by coefficients set using just seismic information (see Fig. 6). These refined estimates in principle would have been available no more than 2.5 h after the occurrence of the earthquake generating the tsunami. Use of data from an additional one to three buoys (available within 3–4 h after the earthquake) produces estimates of the source coefficients with sampling variabilities that are not substantially improved upon by using data from distantly located buoys (see Fig. 4). Operationally, this finding suggests that there is no need to wait for the tsunami to pass by more than two or three buoys in the hope of getting better estimates of the source coefficients (see Fig. 5 also). Models for the data fit using four buoys were able to predict quite well the pattern—but not the exact timing—of the tsunami as it passed by distantly located buoys, demonstrating the ability to model tsunami events on ocean-wide scales based on just three freely adjustable source coefficients (see Fig. 3).

While work is in progress to add more functionality to the SIFT application, it has already proven to be a valuable tool for assessing the potential hazards of tsunamis to coastal communities, in part due to the inversion algorithm that is the focus of this article. Pending successful completion of the ongoing research described in Sect. 6, future versions of the SIFT application will have features that should make it easier for operators to specify the input required for successful use of the inversion algorithm.

References

Bernard EN, Mofjeld HO, Titov VV, Synolakis CE, González FI (2006) Tsunami: scientific frontiers, mitigation, forecasting, and policy implications. Proc Roy Soc Lond A 364(1845):1989–2007. doi:10.1098/rsta.2006.1809

Bernard EN, Titov VV (2007) Improving tsunami forecast skill using deep ocean observations. Mar Technol Soc J 40(4):23–26

Draper NR, Smith H (1998) Applied regression analysis. 3rd edn. Wiley, New York

Durbin J, Koopman SJ (2001) Time series analysis by state space methods. Oxford University Press, Oxford

Fletcher R (1987) Practical methods of optimization. 2nd edn. Wiley, Chichester and New York

Gica E, Spillane MC, Titov VV, Chamberlin CD, Newman JC (2008) Development of the forecast propagation database for NOAA’s Short-term Inundation Forecast for Tsunamis (SIFT). NOAA Technical Memorandum OAR PMEL–139, 89 p. http://nctr.pmel.noaa.gov/pubs.html

Gusiakov VK (1978) Static displacement on the surface of an elastic space. In Ill-posed problems of mathematical physics and interpretation of geophysical data, publication of the Computing Center of Soviet Academy of Sciences (VC SOAN SSSR), Novosibirsk, Russia (Soviet Union), pp 23–51 (in Russian)

Hanks TC, Kanamori H (1979) A moment magnitude scale. J Geophys Res 84(B5):2348–2350

Horrillo J, Knight W, Kowalik Z (2008) Kuril Islands tsunami of November 2006: 2. Impact at Crescent City by local enhancement. J Geophys Res 113:C01021. doi:10.1029/2007JC004404

Ihaka R, Gentleman R (1996) R: a language for data analysis and graphics. J Comput Graph Stat 5:299–314

Johnson JM, Satake K, Holdahl SR, Sauber J (1996) The 1964 Prince William earthquake: joint inversion of tsunami and geodetic data. J Geophys Res 101(B1):523–532

Jones RH (1980) Maximum likelihood fitting of ARMA models to time series with missing observations. Technometrics 22(3):389–395

Kanamori H (1972) Mechanism of tsunami earthquakes. Phys Earth Planet Inter 6:346–359

Kowalik Z, Horrillo J, Knight W, Logan T (2008) Kuril Islands tsunami of November 2006: 1. Impact at Crescent City by distant scattering. J Geophys Res 113:C01020. doi:10.1029/2007JC004402

Meinig C, Stalin SE, Nakamura AI, González FI, Milburn HB (2005) Technology developments in real-time tsunami measuring, monitoring and forecasting. In: Oceans 2005 MTS/IEEE, 19–23 September 2005, Washington, DC

Mofjeld HO (2009) Tsunami measurements. In: Bernard EN, Robinson AR (eds) The sea, vol 15. Harvard University Press, Cambridge, pp 201–235

Mofjeld HO, González FI, Elbé MC, Newman JC (1995) Ocean tides in the continental margin off the Pacific Northwest Shelf. J Geophys Res 100(C6):10789–10800

NOAA Data Management Committee (2008) Tsunami data management: an initial report on the management of environmental data required to minimize the impact of tsunamis in the United States (Version 1.0), 87 pp. Available at http://www.ngdc.noaa.gov/noaa_pubs/index.shtml

Okada Y (1985) Surface deformation due to shear and tensile faults in a half-space. Bull Seismol Soc Am 75:1135–1154

Okal EA (2009) Excitation of tsunamis by earthquakes. In: Bernard EN, Robinson AR (eds) The sea, vol 15. Harvard University Press, Cambridge, pp 137–177

Papazachos CB, Scordilis EM, Panagiotopoulos DG, Karakaisis GF (2004) Global relations between seismic fault parameters and moment magnitude of earthquakes. Bull Geol Soc Greece 25:1482–1489

Percival DB, Arcas D, Denbo DW, Elbé MC, Gica E, Mofjeld HO, Spillane MC, Tang L, Titov VV (2009) Estimating tsunami source parameters via inversion of DART® buoy data. NOAA Technical Memorandum OAR PMEL–144, 22 p. http://nctr.pmel.noaa.gov/pubs.html

Percival DB, Denbo DW, Elbé MC, Gica E, Mofjeld HO, Spillane MC, Titov VV, Tolkova EI (2011) Near real-time methods for detiding DART® buoy data, to be submitted

R Development Core Team (2010) http://www.r-project.org/

Tang L, Titov VV, Wei Y, Mofjeld HO, Spillane M, Arcas D, Bernard EN, Chamberlin C, Gica E, Newman J (2008) Tsunami forecast analysis for the May 2006 Tonga tsunami. J Geophys Res 113:C12015. doi:10.1029/2008JC004922

Tang L, Titov VV, Chamberlin CD (2009) Development, testing, and applications of site-specific tsunami inundation models for real-time forecasting. J Geophys Res 6. doi:10.1029/2009JC005476

Titov VV (2009) Tsunami forecasting. In: Bernard EN, Robinson AR (eds) The sea, vol 15. Harvard University Press, Cambridge, pp 371–400

Titov VV, Mofjeld HO, González FI, Newman JC (1999) Offshore forecasting of Hawaiian tsunamis generated in Alaska–Aleutian Subduction Zone. NOAA Technical Memorandum ERL PMEL–114, 22 p. http://nctr.pmel.noaa.gov/pubs.html

Uslu B, Power W, Greenslade DJM, Titov VV, Elbé MC (2011) The July 15, 2009 Fiordland, New Zealand tsunami: real-time assessment. Pure Appl Geophys (in press)

Wei Y, Bernard EN, Tang L, Weiss R, Titov VV, Moore C, Spillane M, Hopkins M, Kânoğlu U (2008) Real-time experimental forecast of the Peruvian tsunami of August 2007 for U.S. coastlines. Geophys Res Lett 35:L04609. doi:10.1029/2007GL032250

Wei Y, Cheung KF, Curtis GD, McCreery CS (2003) Inverse algorithm for tsunami forecasts. J Waterw Port Coast Ocean Eng 129(3):60–69

USGS (2010) http://earthquake.usgs.gov/earthquakes/eqarchives/significant/sig_2006.php

Acknowledgments

This work was funded by the Joint Institute for the Study of the Atmosphere and Ocean (JISAO) under NOAA Cooperative Agreement No. NA17RJ1232 and is JISAO Contribution No. 1783. This work is also Contribution No. 3557 from NOAA/Pacific Marine Environmental Laboratory. The authors would like to thank Bradley Bell for computer code and discussions on constrained least squares.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1

Here, we assess the statistical properties of the least squares estimator for \(\varvec{\alpha}\). To do so, let us reconsider the model (1) for the data from a single buoy. The vector d j potentially consists of a mixture of 15-s and 1-min measurements. To deal with this possibility, we can create a vector \(\tilde{\bf d}_j\) by conceptually replacing each 1-min measurement with the four unobserved 15-s values that were averaged to form it. For example, if \({\bf d}_j = [x_{15},\bar{x}_{20}, \bar{x}_{24}]^T\), then \(\tilde{\bf d}_j = [x_{15},x_{17}, x_{18}, x_{19}, x_{20}, x_{21}, x_{22}, x_{23}, x_{24}]^T\), and d j and \(\tilde {\bf d}_j\) are related by \({\bf d}_j =\Upgamma_j\tilde {\bf d}_j\), where

Let \(\widetilde{N}_j\) be the length of the vector \(\tilde{\bf d}_j\). In a similar manner, augment G j to obtain \(\widetilde G_j\), and consider the model

where \(\tilde{\bf e}_j\) is a vector of random variables (RVs) that obeys a multivariate normal (MVN) distribution with zero mean and a covariance matrix given by, say, V j . Under the assumption that the variance for all the error terms is the same, say, σ 2 j , we can express V j as \(\sigma^2_j\Upphi_j\), where \(\Upphi_j\) is an \(\widetilde N_j \times \widetilde N_j\) matrix whose diagonal elements are all unity. Then we have

where \({\bf e}_j = \Upgamma_j \tilde {\bf e}_j\) is MVN with zero mean and a covariance matrix given by \(\Upgamma_j \Upphi_j\Upgamma^T_j\sigma^2_j\) (cf. Eq. (1)). Standard least squares theory (Draper and Smith 1998) says that the least squares estimator for \(\varvec{\alpha}\) in the model above has a covariance matrix given by

By stacking together the models for \(\tilde{\bf d}_j\) for \(j=1,\ldots, J\), we obtain

Here, \(\tilde{\bf d}\) is related to d of Eq. (2) via \({\bf d} = \Upgamma\tilde{\bf d}\), where

(here and elsewhere, the zeros need to be interpreted as matrices of appropriate dimensions, all of whose elements are zero). Pending more research, we make the simplifying assumption that the errors associated with two different buoys are uncorrelated so that the covariance matrix for \(\tilde{\bf e}\) is given by

Then we have

where \({\bf e} = \Upgamma \tilde{\bf e}\) is MVN with zero mean and a covariance matrix given by \(\Upgamma V \Upgamma^T\) (cf. Eq. (2)). The least squares estimator \(\hat{\varvec{\alpha}}\) for \(\varvec{\alpha}\) in the model above has a covariance matrix given by

Since G and \(\Upgamma\) are known, we need only specify V to be able to compute the desired \(\Upsigma\); i.e., we need to set \(V_j =\sigma^2_j \Upphi_j\) for each j. To do so, we regard the RVs in \(\tilde{\bf e}_j\) as being extracted from a stationary first-order autoregressive (AR(1)) process with variance σ 2 j and AR(1) parameter ϕ j satisfying |ϕ j | < 1. If we take the indices associated with \(\tilde{\bf d}_j\) to be \(\tilde n_1, \tilde n_2,\ldots, \tilde n_{\widetilde N_j}\), then the (p, q)th element of \(\Upphi_j\) is \(\phi_j^{|\tilde n_p - \tilde n_q |}\). In practice we can estimate σ 2 j and ϕ j based upon the residuals \({\bf r}_j = {\bf d}_j - G_j \hat{\varvec{\alpha}}\) from the least squares fit. One approach for doing so is to make the simplifying assumption that r j obeys the same multivariate normal distribution as \(\Upgamma \tilde{\bf e}_j\) and to estimate σ 2 j and ϕ j using the maximum likelihood (ML) method. The likelihood function for a given σ 2 j and ϕ j can be evaluated using a state-space formulation (Jones 1980; Durbin and Koopman 2001), in which the state equation is dictated by an AR(1) process, while the observation equation handles the underlying AR(1) process and what is observable from a mixture of 15-s and 1-min measurements. The ML estimators are obtained by embedding the evaluation of the likelihood function in a nonlinear optimization procedure.

The above approach for determining the covariance matrix for the least squares estimator \(\hat{\varvec{\alpha}}\) works for an estimator based on an arbitrary mixture of 15-s and 1-min measurements, but it can be simplified considerably if we assume that each N j -dimensional vector d j consists of stretch of 1-min averages with no missing values, as is the case occurring most often in practice (Percival et al. 2009). With this additional assumption, we can dispense with the \(\Upgamma_j\) matrices and formulate a statistical model directly in terms of the model \({\bf d} = G {\varvec{\alpha}} + {\bf e}\). The least squares estimator \(\hat{\varvec{\alpha}}\) for \(\varvec{\alpha}\) now has a covariance matrix given by

where V has a structure analogous to Eq. (11), but with the (p, q)th element of the \(N_j\,\times\,N_j\) matrix \(\Upphi_j\) being given by ϕ |p--q| j . We can then estimate ϕ j via

where r j,(f) consists of all of r j except for its first element, and r j,(l) has everything but the last element. An approximately unbiased estimator of σ 2 j is given by

We use this simplified approach to obtain the statistical properties of \(\hat{\varvec{\alpha}}\) reported in this paper.

Appendix 2

The primary use for the estimated source coefficients \(\hat{\varvec{\alpha}}\) is to provide boundary conditions for inundation models for coastal regions (Bernard et al. 2006; Bernard and Titov 2007; Tang et al. 2009). The \(\hat{\varvec{\alpha}}\) can also be used for other purposes, one of which is to compare the magnitude of the tsunami event based on inverting the tsunami data with the seismically determined moment magnitude. As noted below, such a comparison can provide insight into the nature of the earthquake. We make the comparison with the seismically determined M W , by backing out an estimate of the moment magnitude from \(\hat{\varvec{\alpha}}\) per the following procedure.

In view of Eqs. (8) and (10), a natural estimator of the inversion-derived tsunami magnitude T M is

(in the above, we presume that μ is free of error and that the modeling assumptions concerning the unit sources are correct). Equating the seismic moment M 0 with this tsunami magnitude in turn leads to an estimator of the moment magnitude M W based on \(\widehat T_M\):

Standard least squares theory (Draper and Smith 1998) says that the variance of \(\widehat T_M\) is given by

where 1 is a vector of length K, all of whose elements are unity, while, as before, \(\Upsigma\) is the K × K covariance matrix for \(\hat{\varvec{\alpha}}\). A Taylor series expansion of \(\log_{10}( \widehat T_M )\) about the true T M leads to the approximation

Substitution of \(\widehat T^2_M\) for T 2 M in the denominator gives us a means of assessing the sampling variability in \(\widehat T_M\), which is needed to determine if any observed difference between \(\widehat M_W\) and M W is statistically significant, given the sampling variability in \(\widehat M_W\). Assuming a normal distribution, we can express this variability in terms of an approximate 95% confidence interval:

As an example, let us reconsider the November 15, 2006 Kuril Islands tsunami, for which \(M_W\,=\,8.3\). Using \(\hat{\varvec{\alpha}}\) and \(\Upsigma\) as given in Eq. (7), we obtain \(\widehat M_W\,\doteq\,8.23\), while the square root of \(\hbox{var}\{ \widehat M_W \}\) is 0.034. An approximate 95% CI is thus [8.16, 8.3], which just barely traps the value \(M_W\,=\,8.3\); however, if we also take into account potential rounding error in the latter, there is little evidence that \(\widehat M_W\) is significantly different from M W .

While the inversion-derived \(\widehat M_W\) and the seismically determined M W are consistent in this example, in other cases \(\widehat M_W\) could be significantly smaller or larger than M W . There are at least three explanations for the case \(\widehat M_W >M_W\). First, the initial estimate of M W might have been low because it was based on too short a set of seismic waves (e.g., slower—but more energetic—waves arrived after M W had been determined). Second, a slowly rupturing earthquake can produce less energetic seismic waves for the same vertical ground displacement that generates the tsunami; i.e., the strain release is much slower than expected, resulting in a larger tsunami than M W would suggest. This leads to a so-called tsunami earthquake, as defined by Kanamori (1972; see Okal 2009). Third, a co-seismic landslide can occur that generates an additional tsunami near the earthquake. On the other hand, if \(\widehat M_W < M_W\), a rare possibility is that M W was overestimated from a short set of seismic waves, but a more likely explanation is that the earthquake mechanism (e.g., strike-slip) is different from the one assumed in SIFT (a reverse thrust fault event), producing a smaller vertical ground displacement and hence a smaller tsunami. This case is of practical importance because of the potential need to cancel an initial warning that was issued based on the seismically determined M W .

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Percival, D.B., Denbo, D.W., Eblé, M.C. et al. Extraction of tsunami source coefficients via inversion of DART\(^{\circledR}\) buoy data. Nat Hazards 58, 567–590 (2011). https://doi.org/10.1007/s11069-010-9688-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11069-010-9688-1