Abstract

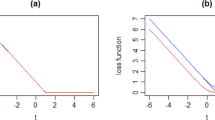

In this letter, we address model selection for support vector classifiers through in-sample methods, which are particularly appealing in the small-sample regime. Specifically, we describe how a trimmed hinge loss function can be applied to the in-sample approaches based on Rademacher complexity and maximal discrepancy. In classifying microarray data, the selected classifiers outperform those obtained with other in-sample model selection techniques that exploit a soft loss function.
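As a rough illustration of the loss named above, the sketch below compares the standard hinge loss with a trimmed version that is clipped at a fixed ceiling. The function names and the ceiling value `cap=2.0` are assumptions made for this example and are not taken from the letter, which may define or scale the loss differently.

```python
def hinge_loss(margin):
    """Standard hinge loss of a margin y * f(x); grows without bound as the margin decreases."""
    return max(0.0, 1.0 - margin)


def trimmed_hinge_loss(margin, cap=2.0):
    """Hinge loss clipped at `cap`, so its value always lies in [0, cap].

    `cap` is a hypothetical parameter chosen for illustration only.
    """
    return min(cap, hinge_loss(margin))


# Margins y * f(x): negative values are misclassified points,
# values of 1 or more are classified correctly with full margin.
margins = [-3.0, -1.0, 0.0, 0.5, 1.0, 2.0]
for m in margins:
    print(f"margin={m:+.1f}  hinge={hinge_loss(m):.2f}  trimmed={trimmed_hinge_loss(m):.2f}")
```

The two losses agree for margins at or above `1 - cap` and differ only for badly misclassified points, where the trimmed loss stays bounded. A bounded loss is what makes it usable inside complexity-based bounds of the kind mentioned in the abstract.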

About this article

Cite this article

Anguita, D., Ghio, A., Oneto, L. et al. In-sample Model Selection for Trimmed Hinge Loss Support Vector Machine. Neural Process Lett 36, 275–283 (2012). https://doi.org/10.1007/s11063-012-9235-z

DOI: https://doi.org/10.1007/s11063-012-9235-z