Abstract

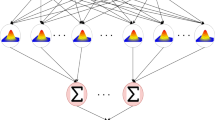

This contribution proposes novel approaches for improving the performance of Probabilistic Neural Networks and of the recently proposed Evolutionary Probabilistic Neural Networks. The matrix of spread parameters of the Evolutionary Probabilistic Neural Network is allowed to take different values in each class of neurons, which yields a more flexible model that fits the data better. Particle Swarm Optimization is also employed to estimate the prior probability of each class in the Probabilistic Neural Network. Moreover, bagging is used to create an ensemble of Evolutionary Probabilistic Neural Networks in order to further improve the model's performance. The approaches have been applied to several well-known and widely used benchmark problems with promising results.
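As an illustration only, the ideas in the abstract can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a Gaussian kernel with a diagonal spread matrix per class, takes the spreads and priors as given inputs (the abstract estimates them with Particle Swarm Optimization, which is omitted here), and combines the bagged models by majority vote. The function names `pnn_predict` and `bagged_pnn_predict` and all parameter names are hypothetical.

```python
import numpy as np

def pnn_predict(X_train, y_train, X_test, sigmas, priors):
    """Classify X_test with a Gaussian-kernel PNN (illustrative sketch).

    sigmas[k]: per-feature spreads for class k (assumed diagonal spread matrix).
    priors[k]: prior probability of class k (assumed given, not estimated here).
    """
    classes = np.unique(y_train)
    scores = np.empty((X_test.shape[0], classes.size))
    for j, k in enumerate(classes):
        Xk = X_train[y_train == k]
        s = np.asarray(sigmas[k], dtype=float)
        # Scaled differences between every test point and every class-k pattern.
        diff = (X_test[:, None, :] - Xk[None, :, :]) / s
        # Kernel density estimate; the (2*pi)^(-p/2) factor is common to all
        # classes and is dropped, but 1/prod(s) differs per class and is kept.
        kernel = np.exp(-0.5 * np.sum(diff ** 2, axis=2)) / np.prod(s)
        scores[:, j] = priors[k] * kernel.mean(axis=1)
    return classes[np.argmax(scores, axis=1)]

def bagged_pnn_predict(X_train, y_train, X_test, sigmas, priors,
                       n_models=11, seed=0):
    """Majority vote over PNNs built on bootstrap samples of the training set."""
    rng = np.random.default_rng(seed)
    n = X_train.shape[0]
    votes = []
    for _ in range(n_models):
        idx = rng.integers(0, n, size=n)  # sample n patterns with replacement
        votes.append(pnn_predict(X_train[idx], y_train[idx], X_test,
                                 sigmas, priors))
    votes = np.stack(votes)               # shape: (n_models, n_test)
    classes = np.unique(y_train)
    counts = np.stack([(votes == k).sum(axis=0) for k in classes], axis=1)
    return classes[np.argmax(counts, axis=1)]

# Toy usage: two well-separated classes with different spreads per class.
X_train = np.array([[0.0, 0.0], [0.5, 0.2], [0.1, 0.4],
                    [5.0, 5.0], [5.2, 4.8], [4.9, 5.3]])
y_train = np.array([0, 0, 0, 1, 1, 1])
X_test = np.array([[0.2, 0.1], [5.1, 5.0]])
sigmas = {0: [0.5, 0.5], 1: [1.0, 1.0]}
priors = {0: 0.5, 1: 0.5}
single = pnn_predict(X_train, y_train, X_test, sigmas, priors)
bagged = bagged_pnn_predict(X_train, y_train, X_test, sigmas, priors)
```

In a fuller version, the entries of `sigmas` and `priors` would be the decision variables of an optimizer such as Particle Swarm Optimization, with a classification-accuracy estimate on the training data as one possible objective; that objective is an assumption here, since the abstract does not specify it.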

Cite this article

Georgiou, V.L., Alevizos, P.D. & Vrahatis, M.N. Novel Approaches to Probabilistic Neural Networks Through Bagging and Evolutionary Estimating of Prior Probabilities. Neural Process Lett 27, 153–162 (2008). https://doi.org/10.1007/s11063-007-9066-5

DOI: https://doi.org/10.1007/s11063-007-9066-5