Abstract

The novel coronavirus disease (COVID-19), which mainly affects the respiratory system and spreads through airborne transmission, has disrupted livelihoods worldwide. The disease has reached almost every nation and remains widespread. Early and reliable diagnosis is essential to limit the spread of this high-risk disease, and computer-aided diagnostic models help medical practitioners obtain a quick and accurate diagnosis. To address the limitations of existing approaches, this study develops an optimized Xception convolutional neural network, called "XCovNet," for recognizing COVID-19 from point-of-care ultrasound (POCUS) images. The model employs a stack of modules, each containing several feature extractors that enable it to learn richer representations with fewer parameters. It identifies the presence of COVID-19 by classifying POCUS images into COVID-19, viral pneumonia, and healthy samples. We compare and evaluate the proposed network against state-of-the-art (SOTA) deep learning models such as VGG, DenseNet, Inception-V3, ResNet, and Xception. XCovNet addresses the shortcomings of previous studies, achieving 99.76% accuracy, 99.89% specificity, 99.87% sensitivity, and a 99.75% F1-score. To understand the underlying behavior of the proposed network, tests are performed on different shuffle patterns. The proposed XCovNet can therefore help radiologists detect COVID-19 patients from ultrasound images, particularly in regions where test kits are limited.

1 Introduction

Coronavirus disease (COVID-19) was first identified in Wuhan City (Hubei Province, China) in December 2019. The COVID-19 pandemic has challenged global public health systems because of its high infection and mortality rates [1, 2]. It has severely damaged the global economy and strained medical systems, in part because of a shortage of intensive care units (ICUs). The main problem, however, is the uncontrolled and undetected spread of the disease [3]. As the epidemic spreads, there is an urgent need for fast and accurate techniques to assist diagnosis and decision-making [4]. Lab tests and diagnostic tools are critical for containing the pandemic effectively, and precise diagnostic procedures are required to detect COVID-19 in asymptomatic individuals. To carry out these procedures, samples are usually taken from each patient and examined in a lab or at a point-of-care testing facility [5]. This method is labor- and time-intensive, which makes it unsuitable for rapid diagnosis during a pandemic [6]. Although the rapid test may identify IgG and IgM antibodies in human blood and provide results in 15 minutes, the human body may take more than a few days to develop these antibodies, which increases the risk of virus transmission before diagnosis. The rate of false-negative results is therefore very high. As an alternative, there is a need for automated diagnostic methods that are sensitive, specific to COVID-19, and fast [7]. This is where medical imaging comes into play: computed tomography (CT) is very common and allows a more thorough examination [8]. Studies show that CT can be sensitive even when a PCR test is inconclusive [9]. However, CT has significant drawbacks: it exposes patients to a high radiation dose, is costly, and the equipment is difficult to sterilize. These disadvantages limit the widespread use of CT scans for diagnosis. X-rays were considered as an alternative, but their predictive power was found to be inferior [4].

Ultrasound imaging is a more accessible, affordable, and safer imaging technique that has recently become increasingly popular. Lung ultrasound (LUS) is increasingly used at the point of care for the diagnosis and treatment of acute respiratory infections [10]. Compared with CT and X-ray procedures, ultrasound involves no ionizing radiation, is less expensive, has higher diagnostic accuracy, and is widely available [11, 12]. Several investigations [13] have demonstrated the utility of LUS imaging in the diagnosis and follow-up of community-acquired pneumonia, particularly bronchiolitis. The AI community has paid far less attention to ultrasound in the context of COVID-19 than to CT and X-rays, although many voices in the medical field have called for ultrasound to play a bigger part in the present pandemic. LUS imaging may even help reduce infections among patients and medical personnel [14].

1.1 Motivation

The emergence of the novel coronavirus (COVID-19) and its rapid airborne spread have sparked a global crisis. This highly contagious disease has disrupted communities and economies across the world, making rapid and accurate diagnostic solutions an urgent need. As long as the virus continues to spread, curtailing its transmission remains imperative, and these pressing challenges call for innovative approaches to diagnosis. A quick and cost-effective method is needed to identify COVID-19 cases promptly, enabling timely intervention and containment measures. In this context, deep learning-enhanced computer-assisted diagnostic techniques are being developed. The core of this research is an optimized Xception convolutional neural network (XCovNet) for COVID-19 classification based on point-of-care ultrasound (POCUS) images. This study aims to overcome current limitations and improve diagnostic accuracy, especially in resource-constrained areas where traditional testing methods may be scarce.

An automated diagnostic tool that makes rapid and specific predictions for COVID-19 is therefore needed. Our main contributions are summarized below:

- We propose an optimized Xception convolutional neural network (XCovNet) for COVID-19 detection from POCUS images.
- Depth-wise spatial convolution layers are used to accelerate convolution computation in the XCovNet model, which outperforms other models on COVID-19 classification from POCUS images.
- The experimental results demonstrate that the proposed technique achieves the best performance among recent deep learning studies on POCUS imaging.
- POCUS is a viable option for developing software-based COVID-19 screening systems built on medical imaging in settings where CT or X-ray screening is unavailable.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 describes the materials and methods. Section 4 presents the proposed approach, hyperparameter tuning, and evaluation metrics. Section 5 reports experimental findings and ablation studies. Section 6 concludes with a brief discussion.

2 Related Work

This section summarizes recent artificial intelligence (AI) techniques for point-of-care ultrasound (POCUS) or LUS imaging in COVID-19 diagnosis. AI technologies have attracted considerable interest in the healthcare industry as a potent tool for making predictions and assisting with interpretability [15]. Applications of AI in healthcare include disease identification, therapy selection, patient monitoring, and drug development [15]. Deep neural networks (DNNs), a subset of AI methods, have quickly come to dominate medical imaging applications [16]. Only recently, however, have a few researchers developed AI techniques for detecting and classifying COVID-19 features in POCUS or LUS images and videos. Although several research groups have argued for the use of deep neural networks to diagnose COVID-19 based on findings observed in CT scans and X-rays, further studies are still needed to validate the use of deep learning for diagnosing COVID-19 with LUS [17].

Pre-trained convolutional neural networks for swine models were proposed in [18,19,20]; these models concentrate on identifying lung sliding and measuring the A-lines and B-lines in the image. Subhankar Roy et al. [21] developed a deep neural network for the classification and localization of COVID-19 markers in POCUS images; the model predicted frame-based scores with an average accuracy of 71.40%. Born et al. [22] presented a LUS dataset for COVID-19, opening the door to computer-aided diagnosis of COVID-19 using ultrasound. The images were extracted from 179 videos and 53 still images and were made public via a database. Julia Diaz et al. [23] reported a pre-trained deep learning algorithm for COVID-19 detection in LUS images; evaluated with the POCOVID-Net model, it achieved an accuracy of 91.50%, a precision of 94.10%, a recall of 87.9%, and an F1-score of 90.70%. Barros et al. [24] presented a combined CNN and LSTM model for LUS video classification that attained 93% accuracy and 97% sensitivity. Dastider et al. [25] presented a CNN-based architecture built on DenseNet-201, trained on the Italian LUS database, which achieved 79.10% accuracy and an F1-score of 78.60%. Awasthi et al. [26] used LUS images to construct a compact, mobile-friendly, and efficient deep learning method for COVID-19 identification; the model was reported to have an accuracy of 83.2% for detecting COVID-19 within 24 minutes. Hu et al. [27] created a classification deep neural network, using three datasets from four Chinese medical institutes, for the fully automatic analysis of lung involvement in COVID-19 patients; this model had an accuracy of 94.39%. Umair Khan et al. [28] developed a four-level scoring approach to assess lung health and classify COVID-19 patients in Pavia, validated using LUS recordings from COVID-19 patients; the model had a prognostic value of 82.35%. For COVID-19 classification, Xing et al. [29] proposed a switching deep learning model and an automated LUS scoring method, which achieved a 96.1% F1-score, 96.3% sensitivity, 98.8% specificity, and 96.1% accuracy on LUS images. Jing Wang et al. [30] conducted a comprehensive survey of advanced machine learning methods for LUS images in COVID-19 diagnosis, drawing on academic databases (PubMed and Google Scholar) and preprints on arXiv or TechRxiv. Lingyi Zhao et al. [31] presented a review of deep learning models for COVID-19 identification in lung ultrasound images, together with a description of the ultrasound equipment used for data collection, related datasets, and performance comparisons. Given these challenges, the goal of this study was to create an optimized Xception neural network (XCovNet) model for diagnosing COVID-19 using POCUS datasets.

A recent study by Ding et al. [32] demonstrated the use of fog and cloud computing to build collaborative federated learning frameworks for segmenting COVID-19 infection lesions from multi-institutional medical image databases. Song et al. [33] described an interpretable prototype network with few-shot learning that can detect COVID-19 from very few ultrasound images; the model achieved an overall accuracy of 99.55%, a recall of 99.93%, and a cure rate of 99.83% in COVID-positive cases when trained with just 5 shots. Vasquez et al. [34] presented an automatic deep learning model that detects lung ultrasound artifacts, such as pleural effusion and A-lines, in ultrasound images of suspected lung lesions; the model achieved 89% accuracy when predicting the occurrence of A-lines. Shea et al. [35] presented a deep learning-based video classification method for lung ultrasound that targets three key ultrasound features associated with lung pathologies (pleural effusion, B-lines, and lung consolidation) and achieved an AUC of 90%.

Within AI applications in healthcare, a significant amount of research has used deep learning to diagnose COVID-19 from medical imaging modalities such as CT scans and X-rays, but there is a noticeable gap in studies that validate deep learning specifically for COVID-19 diagnosis from lung ultrasound (LUS) images. Because most previous research has focused on other imaging modalities, more thorough investigations are needed to establish the accuracy and reliability of deep learning approaches for diagnosing COVID-19 from LUS images and videos. Although several studies have used LUS datasets to diagnose COVID-19, these datasets still lack diversity and size. Many existing datasets are small and may not adequately represent the variety of COVID-19 presentations, which may limit the generalizability of AI models trained on them. In conclusion, while the related works discussed above have made substantial contributions to AI-based COVID-19 diagnosis using LUS images, these research gaps indicate areas that require additional exploration. Addressing them could lead to more reliable, interpretable, and clinically useful AI models for COVID-19 diagnosis, benefiting both healthcare professionals and patients.

3 Materials and Methods

3.1 Dataset Collection

A total of 2149 point-of-care ultrasound (POCUS) images were obtained from various open-source repositories, as shown in Fig. 1. This study uses the POCUS dataset created by Born et al. [4], which consists of 202 videos and 59 still images recorded with either convex or linear probes from 216 patients, covering viral pneumonia, healthy, and COVID-19 cases. The dataset is publicly available through the authors' GitHub repository [36]. It was assembled from 41 different sources, including LUS recordings published in scholarly journals, community platforms, open medical repositories, and healthcare technology companies. These sources also include clinical information openly provided by hospitals and by instructors of academic ultrasound courses [4]. Furthermore, two medical experts assessed and approved each sample in the POCUS database.

After video sampling and image preprocessing, our dataset contained 2149 images, of which 524 are labeled COVID-19, 463 viral pneumonia, and 1162 healthy. Figure 2 shows sample images obtained from LUS videos after preprocessing, covering people infected with COVID-19, healthy people, and people with viral pneumonia, to give a visual impression of their morphological properties. Before being fed to the proposed XCovNet network, POCUS images are isotropically resized to 224 × 224 pixels with three channels (RGB). To ensure that all three classes are represented in the same proportions in the training and test sets, stratified random selection was used, which reduced the likelihood of class imbalance. Table 1 lists the number of POCUS samples used for training and testing in the proposed model, with test splits ranging from 10% to 50%. To increase the reliability of the findings, we used k-fold cross-validation [37].
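The stratified selection described above can be illustrated with a minimal sketch. It assumes only the class counts reported in this section; the function name `stratified_split`, the label strings, and the seed are hypothetical choices made for illustration, and the sketch does not reproduce the authors' actual preprocessing pipeline.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction, seed=0):
    """Split sample indices so every class keeps roughly the same test share."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # random selection within each class
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Class counts taken from Section 3.1; the label names are placeholders.
labels = ["covid"] * 524 + ["pneumonia"] * 463 + ["healthy"] * 1162
train_idx, test_idx = stratified_split(labels, test_fraction=0.2)
```

Because the split is made per class, the COVID-19, viral pneumonia, and healthy shares of the test set match their shares of the full dataset up to rounding.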

4 Proposed Optimized Xception Model

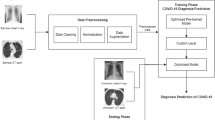

This section describes XCovNet, an optimized variant of the Xception neural network [38], designed to improve network performance for COVID-19 classification in ultrasound images. Xception, often termed "Extreme Inception," was first introduced in [39] and was motivated by the Inception models. Inception networks compute convolutions with several filter sizes and pooling layers in parallel and learn which combination of convolution and pooling layers to use. The proposed XCovNet is composed of three blocks: (1) a convolution block, (2) a depth-wise separable convolution block, and (3) a fully connected layer block, as illustrated in Fig. 3. Table 2 describes the notations and symbols used in this article.

4.1 Convolutional Block

The proposed architecture (XCovNet) employs convolutional layers, each of which applies convolutional kernels to the output of the preceding layer to construct multiple feature maps that represent the features of the input data. When building a feature map, each convolution kernel is shared across all regions of the input. Different convolution kernels produce different feature maps. Mathematically, the feature value at location (i, j) on the lth feature map of the kth layer, \({y}_{i,j,l}^k\), is computed as:
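A form of Eq. (1) consistent with the symbols defined in this section, assuming the usual definition of a convolutional feature value as a weighted sum over an input patch plus a bias, is:

\[ {y}_{i,j,l}^k = \left(W\ast {v}_l^k\right)^{T} {C}_{i,j}^k + b\ast {v}_l^k \tag{1} \]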

In Eq. (1), \(W\ast {v}_l^k\) is the weight vector and \(b\ast {v}_l^k\) is the bias value of the lth filter of the kth layer, and \({C}_{i,j}^k\) is the input patch centered at position (i, j) of the kth layer. Note that the kernel \(W\ast {v}_l^k\) that generates the feature map \({y}_{i,j,l}^k\) is shared. This weight-sharing mechanism has several advantages: it reduces model complexity and makes the network easier to train. After the convolution layers, an activation function and a max pooling layer are applied to the feature maps. The parametric rectified linear unit (PReLU) [40] is a generalized rectified activation function with a learnable negative slope, defined formally as follows:
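Assuming the standard PReLU definition, and writing the activation as \(f\) (a name chosen here for illustration), Eq. (2) takes the form:

\[ f(x_i) = \max(0, x_i) + \beta_i \min(0, x_i) \tag{2} \]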

In Eq. (2), \(x_i\) is the input on the ith channel of the network layer and \(\beta_i\) is the negative slope, which is a learnable parameter. This activation function is used in the CNN block (see Fig. 3) to learn nonlinear features, which leads to quick convergence and better predictions with less overfitting, while the max-pooling layer reduces the size of the feature map. As shown in Fig. 3, POCUS images of shape 224×224×3 are first fed into a convolution block. This block consists of two convolution layers that extract a set of local features of COVID-19, viral pneumonia, and healthy regions. These two convolution layers are followed by a PReLU activation and a 2×2 max pooling layer. The activation maps of each layer are visualized to understand the learning capacity of the individual layers.

4.2 Depth-Wise Separable Convolution Block

Depthwise separable convolution is a type of convolution that handles the depth (channel) dimension as well as the spatial dimensions. It splits the convolution operation into two simpler steps: (i) depthwise convolution and (ii) pointwise convolution [38]. Deep learning architectures commonly use it because, unlike spatially separable convolution, it also works when a kernel cannot be factored into two smaller kernels.

4.2.1 Illustration of 2D Convolution

Assume the input layer has dimensions of 28 x 28 x 3 (height x width x channels) and the filter has dimensions of 3 x 3 x 3. Let's start with a standard 2D convolution as a baseline for comparison. A 2D convolution with a single filter gives a 26 x 26 x 1 output plane with one channel. Now assume 64 filters. The 2D convolution then produces 64 output planes of size 26 x 26 x 1, and stacking them yields an output of 26 x 26 x 64. The spatial dimensions (height and width) shrink from 28 x 28 to 26 x 26, while the depth grows from 3 to 64 channels. Let us calculate the number of multiplications required by the traditional method. There are 64 filters of size 3 x 3 x 3, each moving over 26 x 26 positions. Multiplying the filter sizes by the number of positions gives 64 x 3 x 3 x 3 x 26 x 26 = 1,168,128 multiplications. Now consider how depthwise separable convolution produces comparable results.
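The arithmetic of this example can be checked with a short sketch. It assumes stride 1 and no padding, which is what the 28 to 26 reduction implies; the function names are illustrative and are not part of the XCovNet code.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def standard_conv_multiplications(in_h, in_w, in_channels, kernel, num_filters):
    """Multiplications for a standard 2D convolution (stride 1, no padding)."""
    out_h = conv_output_size(in_h, kernel)
    out_w = conv_output_size(in_w, kernel)
    # Every filter spans all input channels and visits every output position.
    return num_filters * kernel * kernel * in_channels * out_h * out_w


# 28 x 28 x 3 input, 3 x 3 kernels, 64 filters, as in the example above.
output_side = conv_output_size(28, 3)
standard_cost = standard_conv_multiplications(28, 28, 3, 3, 64)
```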

4.2.2 Depthwise convolution

Let's start with the depthwise convolution. Instead of the single 3 x 3 x 3 kernel used in conventional 2D convolution, we employ three independent 3 x 3 x 1 kernels. Each kernel convolves with exactly one channel of the input layer, so each of the three convolutions produces a feature map with dimensions 26 x 26 x 1. Stacking these maps gives a 26 x 26 x 3 feature map. The spatial dimensions have shrunk, but the depth is unchanged.
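A minimal pure-Python sketch of the depthwise step follows. It stores the input channel-first (channels x height x width) for convenience, uses all-ones data and kernels as hypothetical placeholders, and applies no padding and stride 1; it is meant only to show that each kernel touches a single channel.

```python
def conv2d_single_channel(channel, kernel):
    """Valid (no padding, stride 1) 2D convolution of one channel with one kernel."""
    k = len(kernel)
    out_h = len(channel) - k + 1
    out_w = len(channel[0]) - k + 1
    return [
        [
            sum(
                channel[i + di][j + dj] * kernel[di][dj]
                for di in range(k)
                for dj in range(k)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def depthwise_conv(channels, kernels):
    """Apply kernels[c] to channels[c] only, so channels are never mixed."""
    return [conv2d_single_channel(ch, k) for ch, k in zip(channels, kernels)]


# Hypothetical data: three 28 x 28 channels and three 3 x 3 kernels, all ones.
image = [[[1.0] * 28 for _ in range(28)] for _ in range(3)]
kernels = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
feature_maps = depthwise_conv(image, kernels)
```

The result holds three 26 x 26 maps, one per input channel, matching the 26 x 26 x 3 feature map described above.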

4.2.3 Pointwise convolution

Pointwise convolution employs a 1 x 1 kernel, that is, a kernel that iterates over every single point. The depth of this kernel equals the number of channels of its input. Applying one 1 x 1 x 3 kernel to the 26 x 26 x 3 input produces a 26 x 26 x 1 output layer, as in the single-filter case above. Using 64 such 1 x 1 x 3 kernels, we obtain a 26 x 26 x 64 output, the same size as with the classic technique in Section 4.2.1. Efficiency of depthwise separable convolution: in the depthwise step, three 3 x 3 x 1 kernels move over 26 x 26 positions, for a total of 3 x 3 x 3 x 1 x 26 x 26 = 18,252 multiplications. In the pointwise step, 64 kernels of size 1 x 1 x 3 move over 26 x 26 positions, for a total of 64 x 1 x 1 x 3 x 26 x 26 = 129,792 multiplications. The overall cost is 18,252 + 129,792 = 148,044 multiplications, only about 12.7% of the cost of the regular 2D convolution.
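The cost comparison can be reproduced with the sketch below, which reuses the dimensions of the running example. The function name is illustrative, and the counts cover multiplications only, not additions or bias terms.

```python
def depthwise_separable_multiplications(out_h, out_w, in_channels, kernel, num_filters):
    """Return (depthwise, pointwise) multiplication counts for one separable layer."""
    # Depthwise step: one kernel x kernel filter per input channel.
    depthwise = kernel * kernel * in_channels * out_h * out_w
    # Pointwise step: num_filters kernels of size 1 x 1 x in_channels.
    pointwise = num_filters * in_channels * out_h * out_w
    return depthwise, pointwise


depthwise, pointwise = depthwise_separable_multiplications(26, 26, 3, 3, 64)
separable_cost = depthwise + pointwise
standard_cost = 64 * 3 * 3 * 3 * 26 * 26  # standard 2D convolution, Section 4.2.1
ratio = separable_cost / standard_cost
```

In general the ratio equals 1/F + 1/k² for F filters and a k x k kernel, which is about 0.127 for F = 64 and k = 3.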

4.3 Proposed Network

XCovNet is built from twelve convolution layers, two regular convolution layers and ten depth-wise separable convolution layers, which form the backbone of the network. Xception [38], developed at Google, was designed to speed up processing. It was adapted from InceptionV3 and replaces the original Inception module with depth-wise separable convolution, which divides a regular convolution into a spatial (depth-wise) convolution and a pointwise convolution. These works motivated us to develop a deep neural network called 'XCovNet' for COVID-19 classification in ultrasound images. To compute convolutions more quickly, the model first applies a depth-wise spatial convolution and then uses a pointwise convolution to combine the resulting output channels of POCUS images. The XCovNet model consists of two stacked convolutional layers, followed by ten depth-wise separable convolutional layers in a separable convolutional block and a fully connected layer. In addition, we use batch normalization to reduce the complexity of network training.

Here, batch normalization mitigates the problem of local minima by normalizing the PReLU activations to zero mean and unit variance, which allows larger gradient steps and thus faster convergence [41]. Dropout layers with a rate of 0.2 are added after the sixth separable convolution layer [42]. The final module employs a fully connected layer and the categorical cross-entropy loss function, and generates probabilities for each POCUS image across the three classes of this multi-class classification problem.
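As an illustration of how class probabilities and the categorical cross-entropy loss relate, the following sketch implements both in plain Python. The logits and the one-hot target are made-up values, and the class order (COVID-19, viral pneumonia, healthy) is an assumption for the example.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to one."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for stability
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot_target, probabilities, eps=1e-12):
    """Negative log-probability assigned to the true class."""
    return -sum(t * math.log(p + eps) for t, p in zip(one_hot_target, probabilities))


# Hypothetical scores for one image; assumed class order: COVID-19, pneumonia, healthy.
logits = [2.0, 0.5, -1.0]
probs = softmax(logits)
loss = categorical_cross_entropy([1, 0, 0], probs)
```

The loss shrinks toward zero as the probability assigned to the true class approaches one.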

This method consists of the following steps:

- Step 1: Point-of-care ultrasound (POCUS) images are collected from different sources and categorized according to our research objective.
- Step 2: An input of 224×224×3 POCUS images is fed into a convolution block. In this block, two convolution layers extract local features of COVID-19, viral pneumonia, and healthy regions. They are followed by PReLU activation and 2×2 max pooling.
- Step 3: The convolutional block is composed of two separate Conv2D layers with 32 filters, PReLU activation, and padding. Each separable block includes two depth-wise separable convolutional layers, as well as PReLU and batch normalization. Batch normalization of the activations improves convergence. MaxPool2D layers of size (2, 2) further reduce the spatial dimensions. After some blocks, dropout layers with a rate of 0.2 are included for regularization.
- Step 4: In the Flatten layer, the 3D tensor is reshaped into a 1D vector. Dense layers with PReLU activations follow, with decreasing numbers of units (512, 128, 64, 32). Dropout layers with varying rates (0.7, 0.5, 0.3) prevent overfitting.
- Step 5: The final dense layer has three units, one for each ultrasound image class (COVID-19, healthy, viral pneumonia). A softmax activation function in this layer converts the outputs into class probabilities.

This architecture uses separable convolutions consisting of depth-wise and point-wise convolutions. This structure allows the model to capture relevant features while maintaining computational efficiency.
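The efficiency argument also applies to the number of trainable weights. The sketch below compares a regular and a depth-wise separable layer for a hypothetical configuration (3 x 3 kernels, 32 input channels, 64 filters); these sizes are illustrative and are not taken from the XCovNet layer table, and bias terms are ignored.

```python
def regular_conv_weights(kernel, in_channels, num_filters):
    """Weights of a regular convolution: one kernel x kernel x C filter per output."""
    return kernel * kernel * in_channels * num_filters


def separable_conv_weights(kernel, in_channels, num_filters):
    """Weights of a depth-wise separable convolution (depthwise plus pointwise)."""
    depthwise = kernel * kernel * in_channels  # one spatial kernel per channel
    pointwise = in_channels * num_filters      # 1 x 1 kernels mixing the channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
regular = regular_conv_weights(3, 32, 64)
separable = separable_conv_weights(3, 32, 64)
```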

4.4 Parameter Optimization

The proposed XCovNet architecture is shown in Fig. 3. The weights of the XCovNet model are updated at each training step to minimize the loss, and the hyperparameters are tuned accordingly. Categorical cross-entropy was employed as the loss function (see Table 2); it is computed from the predicted probabilities over the n classes for each POCUS image in multi-class classification. The main advantage of adaptive moment estimation (Adam) [43] is that it combines two important techniques: adaptive learning rates and momentum. It converges quickly and can navigate complex loss landscapes, increasing the chances of finding a high-quality minimum. This study uses Adam's adaptive learning rates to fine-tune the parameters. Equations (3) and (4) show how Adam maintains both a decaying average of past squared gradients, similar to Adagrad, and a decaying average of past gradients.
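In the standard Adam formulation, written with the decay rates \(\alpha_1\) and \(\alpha_2\) used in this section and with \(g_t\) denoting the gradient of the loss at step \(t\) (a symbol assumed here), Eqs. (3) and (4) read:

\[ m_t = \alpha_1 m_{t-1} + (1 - \alpha_1)\, g_t \tag{3} \]

\[ v_t = \alpha_2 v_{t-1} + (1 - \alpha_2)\, g_t^2 \tag{4} \]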

Here \(m_t\) and \(v_t\) are the moving averages. Because they are initialized to zero, they are biased towards zero during the first updates. The bias-corrected estimates \(\hat{m}_t\) and \(\hat{v}_t\) are therefore calculated as shown in Eqs. (5) and (6).
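Assuming the standard Adam bias correction, Eqs. (5) and (6) divide each moving average by one minus its decay rate raised to the step count \(t\):

\[ \hat{m}_t = \frac{m_t}{1 - \alpha_1^{t}} \tag{5} \]

\[ \hat{v}_t = \frac{v_t}{1 - \alpha_2^{t}} \tag{6} \]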

The Adam update rule is shown in Eq. (7). The default values proposed for Adam are \(\alpha_1 = 0.9\), \(\alpha_2 = 0.999\), and \(\epsilon = 10^{-8}\); \(\eta\) is the step size.
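Assuming the standard Adam update, with \(\theta_t\) denoting the parameters at step \(t\) (a symbol assumed here), Eq. (7) reads:

\[ \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon}\, \hat{m}_t \tag{7} \]

The following minimal sketch applies this update to a single scalar parameter. The function `adam_minimize`, the quadratic test objective, and the step size of 0.1 are illustrative assumptions and not the training configuration of XCovNet.

```python
import math


def adam_minimize(grad_fn, theta, steps, eta=0.001, alpha1=0.9, alpha2=0.999, eps=1e-8):
    """Run Adam on one scalar parameter and return its final value."""
    m, v = 0.0, 0.0  # moving averages start at zero
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = alpha1 * m + (1 - alpha1) * g      # decaying average of gradients
        v = alpha2 * v + (1 - alpha2) * g * g  # decaying average of squared gradients
        m_hat = m / (1 - alpha1 ** t)          # bias-corrected first moment
        v_hat = v / (1 - alpha2 ** t)          # bias-corrected second moment
        theta -= eta * m_hat / (math.sqrt(v_hat) + eps)
    return theta


# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3); its minimum is at x = 3.
final_theta = adam_minimize(lambda x: 2.0 * (x - 3.0), theta=0.0, steps=500, eta=0.1)
```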

In this research, we observed that the most suitable number of separable convolution layers is 10, whereas the ideal number of regular convolution layers is 2. In deep learning models, the choice of optimization algorithm plays a key role in the speed of convergence, the training time, and the overall efficiency of the learning process. Recent years have seen a variety of optimization techniques emerge, each addressing different challenges associated with training. Adam (adaptive moment estimation) has received a lot of attention because it adapts the learning rate and momentum based on past gradient information. However, it is important to understand how Adam compares with other established optimization algorithms in terms of training time and efficiency. In this network, the Adam optimizer with weight decay is used for 100 epochs. Pre-trained weights are employed to extract features. The extracted features are passed to a stack of fully connected layers with 64, 32, and 3 neurons. Softmax activates the final layer, while PReLU non-linearity activates the preceding layers. The experiments are implemented in the Python programming language. Table 3 presents experimental results on the impact of different optimizers and network variations on the POCUS image dataset and provides information on the construction of the XCovNet model.

In Figs. 4 and 5, we compare ADAM's performance with several well-known optimization algorithms, including stochastic gradient descent (SGD), Adagrad, and AdaDelta. We are primarily interested in understanding how these algorithms interact and influence training.

5 Experimental Results

In this section, we use a variety of evaluation criteria to show how well the proposed network performs under various conditions. To evaluate the final prediction quality of the XCovNet model, we used five classification metrics: precision, accuracy, recall, F1-score, and area under the receiver operating characteristic curve (AUC) [44].

5.1 Performance metrics

Below, we briefly review the different types of evaluation criteria used in the studies reviewed in this article. In addition, we clarify some key acronyms that are commonly used to define evaluation criteria.

5.1.1 Accuracy

Accuracy is the ratio of correct predictions to the total number of predictions. It is calculated using Eq. (8):

\[ \text{Accuracy} = \frac{T_{pos} + T_{neg}}{T_{pos} + T_{neg} + F_{pos} + F_{neg}} \tag{8} \]

Where,

- True Positive (Tpos): a class is predicted to be true and is true in reality (patients infected with COVID-19 and diagnosed with COVID-19).
- True Negative (Tneg): a class is predicted to be false and is false in reality (patients who are healthy and diagnosed as non-COVID-19).
- False Positive (Fpos): a class is predicted to be true but is false in reality (patients who are healthy but diagnosed with COVID-19).
- False Negative (Fneg): a class is predicted to be false but is true in reality (patients infected with COVID-19 but diagnosed as non-COVID-19).

5.1.2 Sensitivity

Sensitivity, also known as recall, is the ratio of correctly predicted positive samples to all actual positive samples. It is defined as follows:

\[ \text{Sensitivity} = \frac{T_{pos}}{T_{pos} + F_{neg}} \tag{9} \]

5.1.3 Specificity

Specificity complements sensitivity: it is the ratio of correctly predicted negative samples to all actual negative samples. It is defined as follows:

\[ \text{Specificity} = \frac{T_{neg}}{T_{neg} + F_{pos}} \tag{10} \]

5.1.4 F1-Score

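The F1-score is the harmonic mean of precision and recall. In general, this metric is more informative than accuracy, particularly when the classes are unevenly distributed. It is defined as follows:

\[ \text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Precision} = \frac{T_{pos}}{T_{pos} + F_{pos}} \tag{11} \]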
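The metrics of Sections 5.1.1 to 5.1.4 can be computed from the four counts as in the following sketch. It treats one class as positive and the remaining classes as negative (one-vs-rest); the function names and the small label lists are hypothetical, and the code assumes that no denominator is zero.

```python
def one_vs_rest_counts(y_true, y_pred, positive):
    """Count Tpos, Tneg, Fpos, Fneg with `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    t_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    t_neg = sum(1 for t, p in pairs if t != positive and p != positive)
    f_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    f_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    return t_pos, t_neg, f_pos, f_neg


def classification_metrics(t_pos, t_neg, f_pos, f_neg):
    """Accuracy, sensitivity, specificity, precision, and F1 (non-zero denominators assumed)."""
    sensitivity = t_pos / (t_pos + f_neg)
    precision = t_pos / (t_pos + f_pos)
    return {
        "accuracy": (t_pos + t_neg) / (t_pos + t_neg + f_pos + f_neg),
        "sensitivity": sensitivity,
        "specificity": t_neg / (t_neg + f_pos),
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Hypothetical labels for six images.
y_true = ["covid", "covid", "healthy", "pneumonia", "healthy", "covid"]
y_pred = ["covid", "healthy", "healthy", "pneumonia", "covid", "covid"]
counts = one_vs_rest_counts(y_true, y_pred, positive="covid")
metrics = classification_metrics(*counts)
```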

5.1.5 Area under the Receiver Operating Characteristic Curve (AUC)

This statistic is crucial for assessing classification models. ROC curves show how well a classification model performs at different classification thresholds.
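One way to compute the AUC without drawing the curve is the pairwise-ranking formulation: the AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below uses made-up binary labels and scores; for the three-class problem it would be applied once per class in a one-vs-rest manner, which is an assumption about usage rather than a description of the authors' evaluation code.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive-negative pairs that are ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = positive class) and predicted scores.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
```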

The proposed XCovNet is first compared with current state-of-the-art classification architectures on the POCUS image dataset. The proposed model is evaluated with test splits ranging from 10% to 50% of the POCUS images, as presented in Table 4.

The XCovNet network performed exceptionally well with the 20% test split under a 20-fold cross-validation scheme, which included numerous splits. Table 3 reports standard classification metrics for the model, such as training and test accuracy and model complexity, for the individual splits. For the sake of comparability, however, only the 20% split is considered in Table 5.

The model achieved an accuracy of 99.30% while evaluating 20% of the test samples under one of the shuffling constraints.

The learning curves over 100 epochs are shown in Fig. 6; they indicate that 100 epochs of training and validation were sufficient for our experiment.

XCovNet with PReLU surpassed the accuracy of the conventional Xception model, producing a validation accuracy of 99.30% with a validation loss of 0.0432%. The performance of our model is compared with that of state-of-the-art pre-trained models using an 80%–20% split for training and testing samples on POCUS images. Table 5 reports the performance metrics (precision, recall, and F1-score) and compares the proposed method with other pre-trained models in terms of sensitivity, specificity, and F1-score. To generate the non-COVID-19 class for this investigation, POCUS images from the viral pneumonia and healthy classes were chosen at random. Researchers from all over the world have proposed a variety of COVID-19 detection methods, so an extensive study was undertaken to compare the proposed network with other current models published in the literature; Table 6 compares the performance of nine different models to that of the proposed XCovNet model. XCovNet facilitates training by attaining faster convergence and requiring fewer computations (iterations). As shown in Table 6, the best model was XCovNet, which is built on a pre-trained Xception model with parameters optimized on POCUS datasets.

The Receiver Operating Characteristic (ROC) curves of the three classes (COVID-19, healthy, and viral pneumonia) are depicted in Fig. 7. The ROC curve plots the true positive rate (TPR) against the false positive rate (FPR). It represents the model's diagnostic capacity by assessing the degree of separability between classes. The greater the area under the curve (AUC), the better the model distinguishes between classes. An ideal model has an AUC of 1.0, whereas an AUC of 0.5 indicates that the model performs no better than random guessing. The normal class has an AUC of 1.00, the COVID-19 class has an AUC of 1.0, and the viral pneumonia class has an AUC of 1.00, while the micro-average AUC is 1.0 and the macro-average AUC is 1.00. The AUC of the normal and viral pneumonia classes is reported as 1.00 because the model predicted three false positives and one false negative for normal patients; for COVID-19 there are no false positives or false negatives, hence its AUC of 1.0.
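To make the AUC interpretation concrete, the sketch below computes a one-vs-rest AUC per class using the rank-based definition: the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. It is an illustrative sketch only; the function names, labels, and probability values are hypothetical and do not come from the paper, and only the macro average is shown.

```python
from typing import List, Sequence


def binary_auc(scores: Sequence[float], is_positive: Sequence[bool]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(probs: List[List[float]], y_true: List[int], n_classes: int) -> List[float]:
    """Treat each class in turn as positive and all other classes as negative."""
    return [
        binary_auc([p[c] for p in probs], [y == c for y in y_true])
        for c in range(n_classes)
    ]


# Hypothetical predictions: 0 = COVID-19, 1 = healthy, 2 = viral pneumonia.
y_true = [0, 0, 1, 1, 2, 2]
probs = [
    [0.90, 0.05, 0.05],
    [0.80, 0.10, 0.10],
    [0.10, 0.80, 0.10],
    [0.20, 0.70, 0.10],
    [0.05, 0.05, 0.90],
    [0.10, 0.20, 0.70],
]
per_class = one_vs_rest_auc(probs, y_true, n_classes=3)
macro_auc = sum(per_class) / len(per_class)
print(per_class, macro_auc)
```

When every positive sample of a class outscores every negative sample, as in this toy input, the class AUC is exactly 1; a classifier that assigns the same score to all samples yields 0.5.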

5.2 Complexity Analysis

In this section, we present a complexity analysis of the proposed XCovNet model described in Section 4. Each convolutional layer consists of a set of convolutional operations on a given input feature map. The time complexity of a standard convolution can therefore be expressed as $O(k^2 \cdot H \cdot W \cdot C \cdot F)$, where $k$ is the kernel size, $H$ and $W$ are the height and width of the input feature maps, $C$ is the number of input channels, and $F$ is the number of filters. Each dense layer performs a matrix multiplication, followed by bias addition and activation function computation. The time complexity of a dense layer can be expressed as $O(n \cdot m)$, where $n$ is the number of input units and $m$ is the number of output units. Taking these factors into account, the overall time complexity of the model is the sum of the complexities of the individual layers, weighted by the number of times they are applied.

Separable convolutions are computationally more efficient than standard convolutions, especially for relatively large kernel sizes. Let us analyze the time complexity of a single separable convolution operation. Because the depthwise convolution filters each input channel independently, its time complexity can be expressed as $O(k^2 \cdot H \cdot W \cdot C)$, and the time complexity of the point-wise convolution can be expressed as $O(H \cdot W \cdot C \cdot F)$. The total time complexity of the XCovNet model is therefore given by Eq. (12).
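The saving from a separable convolution can be illustrated by counting multiply-accumulate operations (MACs) for a single layer. The sketch below assumes stride 1 and padding that keeps the output at $H \times W$, and it assumes the usual formulation in which the depthwise step applies one $k \times k$ filter per input channel, so its cost does not depend on $F$. The function names and the layer sizes are hypothetical and are not taken from the XCovNet architecture.

```python
def standard_conv_macs(k: int, h: int, w: int, c: int, f: int) -> int:
    """MACs for a standard k x k convolution with C input channels and F filters."""
    return k * k * h * w * c * f


def depthwise_macs(k: int, h: int, w: int, c: int) -> int:
    """MACs for the depthwise step: one k x k filter applied to each input channel."""
    return k * k * h * w * c


def pointwise_macs(h: int, w: int, c: int, f: int) -> int:
    """MACs for the 1 x 1 point-wise step that mixes C channels into F outputs."""
    return h * w * c * f


def separable_conv_macs(k: int, h: int, w: int, c: int, f: int) -> int:
    """Total MACs for a depthwise separable convolution (depthwise + point-wise)."""
    return depthwise_macs(k, h, w, c) + pointwise_macs(h, w, c, f)


# Hypothetical layer sizes, chosen only to illustrate the ratio.
k, h, w, c, f = 3, 8, 8, 16, 32
standard = standard_conv_macs(k, h, w, c, f)
separable = separable_conv_macs(k, h, w, c, f)
ratio = separable / standard
print(standard, separable, round(ratio, 4))
```

Under these assumptions the ratio of separable to standard cost simplifies to $1/F + 1/k^2$, which is why the relative saving grows with both the number of filters and the kernel size.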

6 Conclusions

In conclusion, this study introduces a novel deep learning approach, XCovNet, designed to help radiologists improve the accuracy of COVID-19 detection from ultrasound images. By using depth-wise separable convolutional layers within the Xception architecture, the proposed model exhibits strong diagnostic performance, paving the way for improved patient care and effective disease control strategies. With appropriate parameter tuning, the XCovNet model is shown to be highly effective in distinguishing COVID-19, healthy, and viral pneumonia images. The study also finds that the choice of parameters changes the performance of the proposed network. In tests on ultrasound images for COVID-19 classification, the proposed network outperformed standard deep learning algorithms, achieving 98.33% accuracy in a 20% test and 99.30% accuracy in a 50% test. Because it outperforms numerous models and is versatile, the model appears robust, and the experimental results are therefore considered positive. It is anticipated that, as ultrasound datasets expand, more reliable deep learning models can be constructed to diagnose and monitor COVID-19 and viral pneumonia more efficiently, thus reducing the massive burden placed on the global public health system.

This study focuses mainly on ultrasound imaging as a means of diagnosing COVID-19 and viral pneumonia. The limitations and implications of applying the XCovNet model to other imaging modalities and disease states have not been discussed. Although the study shows strong classification results in a controlled setting, the lack of clinical validation leaves open questions about the model's performance in real-world medical settings.

Data Availability

The data that support the findings of this study are openly available in the GitHub repository "Database POCUS" at https://github.com/jannisborn/covid19_pocus_ultrasound (Ref. [36]).

References

Li Q, Guan X, Wu P, Wang X, Zhou L, Tong Y, Ren R, Leung KSM, Lau EHY, Wong JY et al (2020) Early Transmission Dynamics in Wuhan, China, of Novel Coronavirus–Infected Pneumonia. N Engl J Med 382:1199–1207

World Health Organization. Coronavirus disease (COVID-19) pandemic. [cited 2021 July 8]. Available from: https://www.who.int/emergencies/diseases/novel-coronavirus-2019

Kundu R, Basak H, Singh PK et al (2021) Fuzzy rank-based fusion of CNN models using Gompertz function for screening COVID-19 CT-scans. Sci Rep 11:14133. https://doi.org/10.1038/s41598-021-93658-y

Born J, Brändle G, Cossio M, Disdier M, Goulet J, Roulin J et al (2020a) Pocovid-net: automatic detection of covid-19 from a new lung ultrasound imaging dataset (pocus). Preprint: arXiv:2004.12084

Bai Y, Yao L, Wei T, Tian F, Jin DY et al (2020) Presumed asymptomatic carrier transmission of COVID-19. JAMA 323(14):1406–1407

Madhu G, Lalith Bharadwaj B, Boddeda R, Vardhan S, Sandeep Kautish K et al (2022) Deep stacked ensemble learning model for covid-19 classification. Comput Mater Continua 70(3):5467–5469

Kundu R, Singh PK, Ferrara M, Ahmadian A, Sarkar R (2022) ET-NET: an ensemble of transfer learning models for prediction of COVID-19 infection through chest CT-scan images. Multimed Tools Appl 81(1):31–50

Masood A, Sheng B, Li P, Hou X, Wei X, Qin J, Feng D (2018) Computer-assisted decision support system in pulmonary cancer detection and stage classification on CT images. J Biomed Inform 79:117–128

Ai T et al (2020) Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology: 200642

Mojoli F, Bouhemad B, Mongodi S, Lichtenstein D (2019) Lung ultrasound for critically ill patients. Amer J Respiratory Crit Care Med 199:701–714

Xirouchaki N, Magkanas E, Vaporidi K et al (2011) Lung ultrasound in critically ill patients: comparison with bedside chest radiography. Intensive Care Med 37(9):1488–1493

Yang Y, Huang Y, Gao F, Yuan L, Wang Z (2020) Lung ultrasonography versus chest CT in COVID-19 pneumonia: a two-centered retrospective comparison study from China. Intensive Care Med 46(9):1761–1763

Berce V, Tomazin M, Gorenjak M, Berce T, Lovrenčič B (2019) The usefulness of lung ultrasound for the aetiological diagnosis of community-acquired pneumonia in children. Sci Rep 9:17957. https://doi.org/10.1038/s41598-019-54499-y

Buonsenso D, Pata D, Chiaretti A (2020) COVID-19 outbreak: less stethoscope, more ultrasound. The Lancet Respiratory Medicine. 8(5)

Yu K-H, Beam AL, Kohane IS (2018) Artificial intelligence in healthcare. Nature Biomed Eng 2(10):719–731

Shen D, Wu G, Suk H-I (2017) Deep learning in medical image analysis. Annu Rev Biomed Eng 19(1):221–248

Ulhaq A, Born J, Khan A, Gomes DPS, Chakraborty S, Paul M (2020) COVID-19 control by computer vision approaches: A survey. IEEE Access 8:179437–179456

Kulhare S, Zheng X, Mehanian C, Gregory C, Zhu M, Gregory K et al (2018) Ultrasound-Based Detection of Lung Abnormalities Using Single Shot Detection Convolutional Neural Networks. In: Simulation, Image Processing, and Ultrasound Systems for Assisted Diagnosis and Navigation. Springer International Publishing; 65–73. https://doi.org/10.1007/978-3-030-01045-4_8

Lindsey T, Lee R, Grisell R, Vega S, Veazey S (2018) Automated pneumothorax diagnosis using deep neural networks. In: Iberoamerican Congress on Pattern Recognition. Springer, pp 723–731. https://doi.org/10.1007/978-3-030-13469-3_84

Mehanian C, Kulhare S, Millin R, Zheng X, Gregory C, Zhu M et al (2019) Deep Learning-Based Pneumothorax Detection in Ultrasound Videos. In: Smart Ultrasound Imaging and Perinatal, Preterm and Paediatric Image Analysis. Springer International Publishing 74–82. https://doi.org/10.1007/978-3-030-32875-7_9

Roy S, Menapace W, Oei S, Luijten B, Fini E, Saltori C, Huijben I et al (2020) Deep learning for classification and localization of COVID-19 markers in point-of-care lung ultrasound. IEEE Trans Med Imaging 39(8):2676–2687

Born J, Wiedemann N, Cossio M et al (2021) Accelerating detection of lung pathologies with explainable ultrasound image analysis. Appl Sci 11(2):672

Diaz-Escobar J, Ordóñez-Guillén NE, Villarreal-Reyes S, Galaviz-Mosqueda A, Kober V, Rivera-Rodriguez R, Lozano Rizk JE (2021) Deep-learning based detection of COVID-19 using lung ultrasound imagery. PLoS One 16(8):e0255886

Barros B, Lacerda P, Albuquerque C, Conci A (2021) Pulmonary COVID-19: Learning spatiotemporal features combining CNN and LSTM networks for lung ultrasound video classification. Sensors. 21:5486. https://doi.org/10.3390/s21165486

Dastider AG, Sadik F, Fattah SA (2021) An integrated autoencoder-based hybrid CNN-LSTM model for COVID-19 severity prediction from lung ultrasound. Comput Biol Med 132:104296. https://doi.org/10.1016/j.compbiomed.2021.104296

Awasthi N, Dayal A, Cenkeramaddi LR, Yalavarthy PK (2021) Mini-COVIDNet: efficient lightweight deep neural network for ultrasound-based point-of-care detection of COVID-19. IEEE Trans Ultrason Ferroelectr Freq Control 68(6):2023–2037

Hu Z, Liu Z, Dong Y et al (2021) Evaluation of lung involvement in COVID-19 pneumonia based on ultrasound images. Biomed Eng Online 20(1):1–15

Khan U et al (2022) Deep Learning-Based Classification of Reduced Lung Ultrasound Data From COVID-19 Patients. IEEE Trans Ultrason Ferroelectr Freq Control 69(5):1661–1669

Xing W, He C, Li J, Qin W, Yang M, Li G, Li Q et al (2022) Automated lung ultrasound scoring for evaluation of coronavirus disease 2019 pneumonia using two-stage cascaded deep learning model. Biomed Signal Process Cont 75:103561

Wang J, Yang X, Zhou B, Sohn JJ, Zhou J, Jacob JT, Higgins KA, Bradley JD, Liu T (2022) Review of Machine Learning in Lung Ultrasound in COVID-19 Pandemic. J Imaging 8(3):65

Zhao L, Lediju Bell MA (2022) A review of deep learning applications in lung ultrasound imaging of COVID-19 patients. BME Frontiers, vol. 2022

Ding W, Abdel-Basset M, Hawash H, Pratama M, Pedrycz W (2023) Generalizable Segmentation of COVID-19 Infection From Multi-Site Tomography Scans: A Federated Learning Framework. IEEE Transactions on Emerging Topics in Computational Intelligence, pp 1–14

Song J, Ebadi A, Florea A, Xi P, Tremblay S, Wong A (2023) COVID-Net USPro: An Explainable Few-Shot Deep Prototypical Network for COVID-19 Screening Using Point-of-Care Ultrasound. Sensors 23(5):2621

Vasquez C, Romero SE, Zapana J, Paucar J, Marini TJ, Castaneda B (2023) Automatic detection of lung ultrasound artifacts using a deep neural networks approach. In 18th International Symposium on Medical Information Processing and Analysis, vol. 12567, pp. 336-345. SPIE

Shea DE, Kulhare S, Millin R, Laverriere Z, Mehanian C, Delahunt CB, Banik D et al (2023) Deep Learning Video Classification of Lung Ultrasound Features Associated With Pneumonia. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 3102-3111

Born J, Brändle G, Cossio M, Disdier M, Goulet J, Roulin J et al (2020) Automatic Detection of COVID-19 from Ultrasound Data. [cited 2022 July 30]. Database POCUS [Internet]. Available from: https://github.com/jannisborn/covid19_pocus_ultrasound

Berrar D (2019) Cross-validation. Encyc Bioinform Comput Biol 1:542–545

Chollet F (2017) Xception: Deep learning with depthwise separable convolutions. In: IEEE conference on computer vision and pattern recognition. IEEE; 1251–1258

Maier A, Syben C, Lasser T, Riess C (2019) A gentle introduction to deep learning in medical image processing. Z Med Phys 29:86–101

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision, 1026-1034

Ioffe S, Szegedy C (2015) Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning, pp. 448-456. PMLR

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Hossin M, Sulaiman MN (2015) A review on evaluation metrics for data classification evaluations. Int J Data Min Knowl Manag Process 5(5):1–11

Che H, Radbel J, Sunderram J, Nosher JL, Patel VM, Hacihaliloglu I (2021) Multi-feature multi-scale CNN-derived COVID-19 classification from lung ultrasound data. In 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), pp 2618-2621. IEEE

Dastider AG, Sadik F, Fattah SA (2021) An integrated autoencoder-based hybrid CNN-LSTM model for COVID-19 severity prediction from lung ultrasound. Comput Biol Med 132:104296

Arntfield R, VanBerlo B, Alaifan T, Phelps N, White M, Chaudhary R, Ho J, Derek W (2021) Development of a convolutional neural network to differentiate among the etiology of similar appearing pathological B lines on lung ultrasound: a deep learning study. BMJ Open 11(3):e045120

Zheng W, Yan L, Gou C, Zhang Z-C, Zhang JJ, Hu M, Wang F-Y (2021) Pay attention to doctor-patient dialogues: Multi-modal knowledge graph attention image-text embedding for COVID-19 diagnosis. Inf Fusion 75:168–185

Saif AFM, Imtiaz T, Rifat S, Shahnaz C, Zhu W-P, Ahmad MO (2021) CapsCovNet: A modified capsule network to diagnose Covid-19 from multimodal medical imaging. IEEE Trans Artificial Intell 2(6):608–617

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Ethics declarations

Conflict of Interest

The authors have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Madhu, G., Kautish, S., Gupta, Y. et al. XCovNet: An optimized xception convolutional neural network for classification of COVID-19 from point-of-care lung ultrasound images. Multimed Tools Appl 83, 33653–33674 (2024). https://doi.org/10.1007/s11042-023-16944-z

DOI: https://doi.org/10.1007/s11042-023-16944-z