Abstract

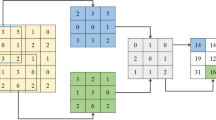

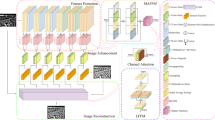

This study proposes a novel fusion framework for infrared and visible images based on a fully convolutional network (FCN) in the local non-subsampled shearlet transform (LNSST) domain. First, the LNSST is used as a multi-scale analysis tool to decompose each source image into low-frequency and high-frequency sub-images. Second, the coefficients of the high-frequency sub-images are fed into the FCN to obtain a weight map, and the high-frequency sub-images are then fused with the average gradient (AVG) as the fusion rule, while the low-frequency coefficients are fused with a local energy strategy. Finally, the inverse LNSST is applied to obtain the fused image. In the experiments, the proposed framework outperformed other typical fusion methods in both visual quality and objective assessment.
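The two hand-crafted quantities named in the abstract, average gradient and local energy, can be illustrated with a minimal sketch. The abstract does not give the exact formulas or window sizes, so everything below is an assumption: the average gradient uses the common forward-difference definition, the low-frequency rule is a simple choose-max on windowed energy, and the function names (`average_gradient`, `local_energy`, `fuse_low_frequency`) and the `radius` parameter are hypothetical. The LNSST decomposition and the FCN weight map are not reproduced here.

```python
from math import sqrt


def average_gradient(img):
    """Average gradient of a 2-D list of numbers (assumed definition):
    the mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    rows, cols = len(img), len(img[0])
    if rows < 2 or cols < 2:
        raise ValueError("image must be at least 2x2")
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]  # horizontal difference
            dy = img[i + 1][j] - img[i][j]  # vertical difference
            total += sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))


def local_energy(img, i, j, radius=1):
    """Sum of squared coefficients in a (2*radius+1)^2 window centred on
    (i, j); the window is clipped at the image border."""
    rows, cols = len(img), len(img[0])
    energy = 0.0
    for y in range(max(0, i - radius), min(rows, i + radius + 1)):
        for x in range(max(0, j - radius), min(cols, j + radius + 1)):
            energy += img[y][x] ** 2
    return energy


def fuse_low_frequency(low_a, low_b, radius=1):
    """Choose-max rule (an assumption, not necessarily the paper's rule):
    at each position keep the coefficient whose local energy is larger."""
    rows, cols = len(low_a), len(low_a[0])
    fused = []
    for i in range(rows):
        row = []
        for j in range(cols):
            e_a = local_energy(low_a, i, j, radius)
            e_b = local_energy(low_b, i, j, radius)
            row.append(low_a[i][j] if e_a >= e_b else low_b[i][j])
        fused.append(row)
    return fused
```

For example, `fuse_low_frequency([[5, 0], [0, 0]], [[1, 1], [1, 1]], radius=0)` compares single-coefficient energies and keeps the 5 from the first input and the 1s from the second. A larger `radius` makes the selection smoother, because each decision then depends on a neighbourhood rather than on one coefficient.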

Cite this article

Feng, Y., Lu, H., Bai, J. et al. Fully convolutional network-based infrared and visible image fusion. Multimed Tools Appl 79, 15001–15014 (2020). https://doi.org/10.1007/s11042-019-08579-w

DOI: https://doi.org/10.1007/s11042-019-08579-w