Abstract

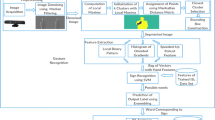

Sign language is the only means of communication for speech and hearing impaired people. Using machine translation, Sign Language Recognition (SLR) systems provide a medium of communication between speech and hearing impaired people and others who have difficulty understanding such languages. However, most SLR systems require the signer to sign directly in front of the capturing device or sensor. Such systems fail to recognize some gestures when the signer's position relative to the sensor changes or when parts of the body are occluded because of that change in position. In this paper, we present a robust position invariant SLR framework. A depth-sensor device (Kinect) is used to obtain the signer’s skeleton information. The framework is capable of recognizing occluded sign gestures and has been tested on a dataset of 2700 gestures. Recognition is performed using a Hidden Markov Model (HMM), and the results show the efficiency of the proposed framework, with an accuracy of 83.77% on occluded gestures.
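To illustrate what position invariance can mean for skeleton data, the following is a minimal sketch and not the framework's actual method, which the abstract does not detail. It assumes each frame is a dictionary of 3D joint coordinates, that translating all joints to a torso joint removes the signer's position, and that rotating about the vertical axis until the shoulder line is parallel to the x-axis removes the signer's orientation. The function name `normalize_skeleton` and the joint names `spine`, `shoulder_left`, and `shoulder_right` are hypothetical.

```python
import math


def normalize_skeleton(joints, origin="spine",
                       left="shoulder_left", right="shoulder_right"):
    """Return joints expressed in a signer-centred coordinate frame.

    joints: dict mapping a joint name to an (x, y, z) tuple in sensor space.
    The joint names used as defaults are hypothetical placeholders.
    """
    # Step 1 (assumption): remove position by moving the origin joint to (0, 0, 0).
    ox, oy, oz = joints[origin]
    centered = {name: (x - ox, y - oy, z - oz)
                for name, (x, y, z) in joints.items()}

    # Step 2 (assumption): measure the yaw of the shoulder line in the
    # horizontal x-z plane.
    lx, _, lz = centered[left]
    rx, _, rz = centered[right]
    angle = math.atan2(rz - lz, rx - lx)
    c, s = math.cos(angle), math.sin(angle)

    # Step 3: rotate every joint by -angle about the vertical (y) axis so the
    # shoulder line ends up parallel to the x-axis.
    return {name: (x * c + z * s, y, -x * s + z * c)
            for name, (x, y, z) in centered.items()}
```

Under these assumptions, the same pose captured from a different standing position or facing direction yields the same normalized coordinates, which could then be turned into feature sequences for a sequence model such as an HMM.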

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive comments and suggestions to improve the quality of the paper. We are also thankful to our signers, who are students of the intermediate school ‘Anushruti’ at IIT Roorkee, India.

About this article

Cite this article

Kumar, P., Saini, R., Roy, P.P. et al. A position and rotation invariant framework for sign language recognition (SLR) using Kinect. Multimed Tools Appl 77, 8823–8846 (2018). https://doi.org/10.1007/s11042-017-4776-9

DOI: https://doi.org/10.1007/s11042-017-4776-9