Abstract

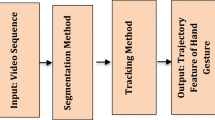

With the growing number of innovations in vision-based hand gesture interaction systems, researchers are developing new techniques and algorithms. However, less attention has been paid to resolving the problems of hand tracking itself. There are also few publicly available databases designed as benchmark data to standardize research in the hand tracking area. To this end, we develop a versatile hand gesture tracking database. It consists of 60 video sequences containing a total of 15,554 RGB color images. The tracking sequences are captured in different situations, ranging from easy indoor scenes to extremely challenging outdoor scenes. Complete with annotated ground truth data, the database is available on the web to help researchers in related fields test and evaluate their algorithms against standard benchmark data.
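The abstract says the database ships with annotated ground truth for testing and evaluating tracking algorithms, but this section does not describe the annotation file format or the evaluation protocol. The sketch below is a hypothetical illustration only. It assumes each frame is annotated with a single hand bounding box `(x, y, width, height)` in pixels, and it scores a tracker with two measures common in the tracking literature: center location error and bounding-box overlap (intersection over union). The names `center_error`, `overlap`, `evaluate` and the 20-pixel threshold are assumptions, not part of the IBGHT database or the authors' method.

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical annotation format (assumption): (x, y, width, height) in pixels,
# with (x, y) the top-left corner of the hand bounding box.
Box = Tuple[float, float, float, float]


def center(box: Box) -> Tuple[float, float]:
    """Center point of a box."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def center_error(pred: Box, gt: Box) -> float:
    """Euclidean distance (pixels) between predicted and ground-truth centers."""
    (px, py), (gx, gy) = center(pred), center(gt)
    return math.hypot(px - gx, py - gy)


def overlap(pred: Box, gt: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 if disjoint)."""
    ix = max(0.0, min(pred[0] + pred[2], gt[0] + gt[2]) - max(pred[0], gt[0]))
    iy = max(0.0, min(pred[1] + pred[3], gt[1] + gt[3]) - max(pred[1], gt[1]))
    inter = ix * iy
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


def evaluate(preds: Sequence[Box], gts: Sequence[Box],
             px_threshold: float = 20.0) -> Dict[str, float]:
    """Summarize one sequence: mean center error, mean overlap, and the
    fraction of frames whose center error is within px_threshold pixels."""
    if len(preds) != len(gts) or not gts:
        raise ValueError("need exactly one prediction per annotated frame")
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    overlaps = [overlap(p, g) for p, g in zip(preds, gts)]
    n = len(gts)
    return {
        "mean_center_error": sum(errors) / n,
        "mean_overlap": sum(overlaps) / n,
        "precision": sum(e <= px_threshold for e in errors) / n,
    }
```

For example, with two annotated frames `[(10, 10, 40, 40), (12, 10, 40, 40)]` and tracker output `[(10, 10, 40, 40), (42, 50, 40, 40)]`, the tracker is exact on the first frame and 50 pixels off on the second, so `evaluate` reports a mean center error of 25.0 pixels, a mean overlap of 0.5, and a precision of 0.5. Reporting per-sequence figures like these would let results from easy indoor and challenging outdoor sequences be compared separately.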

About this article

Cite this article

Asaari, M.S.M., Rosdi, B.A. & Suandi, S.A. Intelligent Biometric Group Hand Tracking (IBGHT) database for visual hand tracking research and development. Multimed Tools Appl 70, 1869–1898 (2014). https://doi.org/10.1007/s11042-012-1212-z

DOI: https://doi.org/10.1007/s11042-012-1212-z