Abstract

Reward cues have been found to increase the investment of effort in tasks even when cues are presented suboptimally (i.e. very briefly), making them hard to consciously detect. Such effort responses to suboptimal reward cues are assumed to rely mainly on the mesolimbic dopamine system, including the ventral striatum. To provide further support for this assumption, we performed two studies investigating whether these effort responses vary with individual differences in markers of striatal dopaminergic functioning. Study 1 investigated the relation between physical effort responses and resting state eye-blink rate. Study 2 examined cognitive effort responses in relation to individually averaged error-related negativity. In both studies effort responses correlated with the markers only for suboptimal, but not for optimal reward cues. These findings provide further support for the idea that effort responses to suboptimal reward cues are mainly linked to the mesolimbic dopamine system, while responses to optimal reward cues also depend on higher-level cortical functions.

Introduction

People generally invest more effort in demanding tasks if the anticipated rewards are more valuable (Brehm and Self 1989; Gendolla et al. 2012). As such, cues that indicate that valuable rewards are at stake increase effort expenditure during a wide variety of physical and cognitive tasks. These effort responses are generally considered to result from a deliberate decision making process, in which people weigh the pros and cons of potential effortful actions. In other words, people are thought to weigh the value of anticipated rewards (the benefits) against their respective effort requirements (the costs). Challenging this traditional perspective on human motivation, a recent series of studies has shown that reward cues can instigate effortful behavior even when these cues are presented suboptimally (i.e. very briefly), which makes conscious processing less likely (see for reviews Bijleveld et al. 2012b; Custers and Aarts 2010). So, the expenditure of effort may be initiated without deliberate decisions.

At this point, the neurobiological mechanisms that underlie this intriguing phenomenon are still subject to debate. In the present research, we explore the idea that effort responses to suboptimal reward cues are dependent on dopaminergic activity in the ventral striatum. Specifically, we use two physiological correlates of such activity—resting state eye-blink rate (EBR, Study 1) and error-related negativity (ERN, Study 2)—to examine the relation between effort responses and dopaminergic functioning. In doing so, we hope to further our understanding of how reward cues influence effort expenditure in the absence of deliberate decisions.

Effort responses to suboptimal reward cues

Pessiglione et al. (2007) first demonstrated that suboptimal reward cues can affect the expenditure of effort even when people cannot consciously discriminate between high and low reward cues. In their experiment, participants could earn money by squeezing forcefully into a handgrip. At the beginning of each trial of their experiment, a coin was presented that represented the amount of money that was at stake. This coin was either one British pound (high reward) or one penny (low reward). The harder participants squeezed the handgrip, the higher the proportion of the presented reward they received. Remarkably, participants not only squeezed harder on high versus low reward trials when the coins were presented long enough to be clearly visible, but also when they were presented too briefly to allow conscious discrimination. For optimal reward cues, functional MRI data showed significant brain activation for high (vs. low) reward cues (see also Van Hell et al. 2010), especially in the ventral striatum and ventral pallidum, which are output channels of the striatal dopamine system (Heimer and Van Hoesen 2006). However, although there was a significant increase in the effort people invested in the task for high versus low reward cues, no significant differences in brain activation were found for suboptimal reward cues. Hence, the neurobiological basis of the behavioral consequences of suboptimal reward cues is still unclear.

Using behavioral paradigms, though, a considerable number of studies have found reliable effects of suboptimal reward cues on effortful behavior (see for an overview Capa and Custers 2013). Although the effects on task performance are often similar to those generated by optimal reward cues (e.g., Bijleveld et al. 2009), recently researchers have started to study the circumstances under which the effects of optimal and suboptimal reward cues diverge. Bijleveld et al. (2010), for instance, looked at speed-accuracy trade-offs in a math task in which the percentage of the cued reward that was earned for correct answers declined with time. Suboptimal high (vs. low) reward cues were found to increase speed without affecting accuracy. Optimal reward cues caused a slowdown, but an increase in accuracy. Whereas optimal reward cues clearly altered behavior through strategic processes (i.e. slowing down and thus sacrificing a little bit of the reward in order to be more accurate), suboptimal reward cues seemed to only boost the effort people exerted on the trial. Such dissociations have been found in various other studies (e.g., Zedelius et al. 2011, 2012), suggesting that optimal and suboptimal reward cues affect the investment of effort through processes that are (at least partly) distinct.

To account for these findings, Bijleveld et al. (2012b) have proposed that responses to reward cues can be understood by distinguishing two phases in the processing of reward cues. Initially, rewards are valuated and processed by rudimentary brain structures, which may occur without conscious awareness. As a consequence of such initial processing, effort is increased (but only if the task demands it, Bijleveld et al. 2009), which in turn facilitates performance. This initial reward processing is thought to rely on the mesolimbic dopamine system, including the ventral striatum, which supports effort responses to rewards in animals that lack the cortical sophistication that is characteristic of humans (e.g., Phillips et al. 2007). If a reward cue is presented long enough, however, the reward is thought to undergo full reward processing which enables deliberate decision making. In this case, information carried by the reward cue becomes available to higher-order brain functions, presumably located in the cortex. Associated with conscious deliberation, these higher-order functions enable strategic reward-related decision-making processes and enable people to reflect on the reward that is at stake. Such higher-order functions can affect performance beyond the mere expense of effort. For example, they change tradeoffs in speed versus accuracy (Bijleveld et al. 2010), change the way attention is deployed to task stimuli (Bijleveld et al. 2011), and induce people to disengage from tasks altogether when the payoff is deemed too small (Bijleveld et al. 2012a).

Based on the theoretical framework laid out above, it can be hypothesized that activity in the ventral striatum is especially predictive of performance when reward cues are presented suboptimally and only processed initially (unless there is less room for strategic behavior, e.g., Pessiglione et al. 2007). After all, we suggest that initially processed rewards directly boost the expenditure of effort in demanding tasks, making use of only this subcortical infrastructure (and not of strategic functions located in the cortex). In the present paper, we test this idea. Specifically, we investigate whether individual differences in striatal dopaminergic functioning predict the performance effects of initially processed reward cues.

Striatal dopaminergic functioning

The ventral striatum has been identified as a key structure in the processing of reward cues by studies showing that activity in the striatum was correlated with the value of the rewards at stake (Bjork and Hommer 2007; Knutson and Greer 2008; Schultz et al. 1997). The striatum connects to various other structures, such as the pallidum, that are implicated in goal-directed behavior (Aston-Jones and Cohen 2005; Knutson et al. 2008) and has been linked to reward prediction during learning tasks (O’Doherty et al. 2004; Pessiglione et al. 2007). These findings are in line with research on rodents, demonstrating that pallidal and striatal neurons encode for rewarding properties of environmental stimuli (Tindell et al. 2006).

Key to the activity in these reward centers of the brain is the neurotransmitter dopamine (Björklund and Lindvall 1984). Stimuli signaling potential rewards as well as the presence of rewards have been found to trigger dopamine release in the striatum, which respectively increases effort directed at reward attainment and learning of stimulus-reward contingencies (Schultz 2006). Although the way in which dopamine influences reward-directed effort is not yet fully understood (Braver et al. 2014), it appears that general levels of dopamine in the striatum have to be taken into account to predict effects on motivation. That is, apart from phasic shifts in dopamine, tonic dopamine levels seem to affect the overall vigor with which rewards are pursued. These levels are affected by recently encountered rewards, general motivational states such as thirst and hunger, but also individual differences in baseline dopamine levels (Niv et al. 2007). In rats, striatal dopamine depletion (i.e. low baseline level) has been found to be associated with a lack of reward pursuit, especially when pursuit is effortful (Phillips et al. 2007). Baseline striatal dopamine levels, then, may be related to general dopaminergic functioning and moderate the effect of reward cues on effort responses. Therefore, if effort responses to suboptimal reward cues are dependent on initial processing in the striatum, these effort responses to these reward cues are likely to be correlated with striatal dopaminergic functioning.

To test this hypothesis, we present two studies in which we investigate whether effort responses to reward cues are related to two different markers of striatal dopamine functioning: EBR and ERN. In Study 1, we investigated if resting state EBR is related to physical effort responses in reaction to suboptimal reward cues. In Study 2, we used another marker of striatal dopamine functioning, individually averaged ERN, and investigated whether this measure is related to mental effort.

Study 1

Resting state EBR is strongly linked to activity in the striatal dopamine system (Karson 1983). Investigating patients with Parkinson’s disease, who are characterized by low EBRs and low levels of striatal dopamine, Karson showed that patients receiving levodopa medication (a dopamine precursor) exhibited twice the mean EBR of other Parkinson patients. Furthermore, the more symptomatic patients of the non-levodopa group showed significantly lower blink rates. Other clinical observations show elevated EBR in patients with increased levels of dopamine in the striatum, including symptomatic schizophrenic patients (Howes and Kapur 2009; Kegeles et al. 2010).

Importantly, EBR has been found to be correlated with personality traits such as impulsivity, novelty seeking, and positive emotionality, which in turn are associated with reward sensitivity (Dagher and Robbins 2009; Depue et al. 1994; Huang et al. 1994; Martin and Potts 2004). For instance, impulsive individuals tend to prefer immediate rewards, and choosing immediate rewards is associated with greater activity in areas innervated by the mesolimbic dopamine system, including the ventral striatum (Hariri et al. 2006; McClure et al. 2004). If effort responses to suboptimal reward cues indeed rely on striatal dopaminergic functioning, these responses should increase with EBR.

To examine the effects of reward cues on physical effort, we relied on a task that was used by Bijleveld et al. (2012a). In line with the research of Pessiglione et al. (2007), participants in this task are on each trial presented with either a high or low value coin that is displayed either for a brief or a long time interval. After the presentation of the reward cue, participants have to repeatedly press a button within a specific time limit in order to obtain the reward. We expected to find a reward effect (i.e. participants expending more effort in the high reward, 10-cent trials, than in the low reward, 1-cent trials) for both optimal and suboptimal reward cues, but that the occurrence of this effect would correlate positively with individual differences in EBR only for suboptimal cues.

Methods

Participants

Forty-one (33 female) healthy undergraduate and graduate students (M age = 21.24; SD = 1.61) participated in the study for a financial reward. Several selection criteria were applied (Colzato et al. 2009): All participants were healthy volunteers, and reported no psychiatric or neurologic disorders, nor brain trauma. Furthermore, participants declared not to use drugs or psychoactive medication, and did not smoke. In addition, per request participants did not consume any beverages on the day of the study that contained caffeine.

EBR measurement

EBR was recorded using infrared videography (Tobii X120 Eye Tracker, 120 Hz sampling rate). Participants were asked to sit in a chair, in upright position, and look straight ahead for 5 min at a white fixation cross (35 by 35 mm) on a black screen (60 Hz LCD monitor with a resolution of 1,024 by 768, at a distance of 60 cm). An infrared eye tracker underneath the screen registered the participants’ eye blinks. After the actual thirty-second calibration, participants were told calibration of the eye tracker would continue for another 5 min, during which blinks were measured. A blink was defined as a period of missing data from both eyes lasting between 100 and 500 ms (Aarts et al. 2012). After the removal of three outliers (>2 SD above the mean, with an unrealistically high EBR above 55 blinks/min suggesting artifacts from the equipment) and two participants suffering from hay fever, mean EBR per minute in our sample was 18.92 (SD = 10.38).
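The blink criterion described above can be illustrated with a minimal sketch. It assumes that each eye's gaze signal is available as a list in which missing samples are coded as `None`; that coding, and the function names `count_blinks` and `blinks_per_minute`, are illustrative assumptions and not part of the original analysis pipeline.

```python
from typing import List, Optional

SAMPLE_RATE_HZ = 120                    # eye tracker sampling rate reported above
MIN_BLINK_MS, MAX_BLINK_MS = 100, 500   # blink duration criterion reported above


def count_blinks(left: List[Optional[float]], right: List[Optional[float]]) -> int:
    """Count runs in which BOTH eyes have missing data for 100-500 ms."""
    ms_per_sample = 1000.0 / SAMPLE_RATE_HZ
    blinks = 0
    run = 0  # length (in samples) of the current run missing in both eyes
    # A trailing valid sample acts as a sentinel so a final run is evaluated.
    for l, r in list(zip(left, right)) + [(0.0, 0.0)]:
        if l is None and r is None:
            run += 1
        else:
            if MIN_BLINK_MS <= run * ms_per_sample <= MAX_BLINK_MS:
                blinks += 1
            run = 0
    return blinks


def blinks_per_minute(left: List[Optional[float]], right: List[Optional[float]]) -> float:
    """Blink rate, assuming the lists cover the whole recording without gaps in time."""
    minutes = len(left) / SAMPLE_RATE_HZ / 60.0
    return count_blinks(left, right) / minutes
```

Runs shorter than 100 ms (e.g., brief tracking loss) or longer than 500 ms (e.g., eye closure or looking away) are not counted under this criterion.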

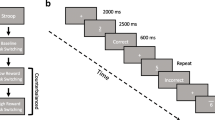

Experimental task

To assess the reward priming effect we used a ‘finger-tapping task’ in which effort expenditure was measured (Bijleveld et al. 2012a; see also Treadway et al. 2009). This task was started directly after the EBR measurement. On each trial, participants needed to execute a specific number of button presses (25) within a set time (3.5 s) in order to obtain a monetary reward. Finishing the button presses outside of the time limit was allowed, but did not lead to obtaining the reward. Each button press filled an empty circle in a bar on the screen, so that the bar filled up with each press and thereby provided feedback. Participants received money when they successfully filled the bar within the time limit. Each trial started with a fixation cross displayed on the screen for 1,000 ms, followed by a 300 ms pre-mask, and then either the high or the low value stimulus, displayed for a long (300 ms) or a brief (17 ms) interval. The duration of the post-mask was 200 ms after long presentations and 483 ms after brief presentations, to keep the total duration of the stimulus presentation constant. After a 1,000 ms fixation cross the response screen was visible. Trials ended with a feedback screen displaying whether the participant was fast enough to obtain the reward. This reward was equal to the value of the coin that was presented at the beginning of the trial. To prevent participants from using two hands, each trial was started by holding a key with the non-dominant hand while the dominant hand remained free to execute the button presses.
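The trial timeline and the reward rule can be summarized in a short sketch. The 500 ms constant is inferred from the reported durations (17 + 483 = 300 + 200); the function names and the event labels are hypothetical and serve illustration only.

```python
from typing import List, Tuple

CUE_PLUS_POST_MASK_MS = 500  # inferred from the text: 17 + 483 = 300 + 200


def trial_schedule(cue_ms: int) -> List[Tuple[str, int]]:
    """Return the (event, duration in ms) sequence preceding the response screen."""
    if cue_ms not in (17, 300):
        raise ValueError("cue duration must be 17 (suboptimal) or 300 (optimal) ms")
    return [
        ("fixation", 1000),
        ("pre_mask", 300),
        ("reward_cue", cue_ms),
        ("post_mask", CUE_PLUS_POST_MASK_MS - cue_ms),  # keeps total duration constant
        ("fixation", 1000),
    ]


def reward_earned(coin_cents: int, presses: int, elapsed_s: float,
                  required_presses: int = 25, time_limit_s: float = 3.5) -> int:
    """The coin is earned only if all presses are completed within the time limit."""
    if presses >= required_presses and elapsed_s <= time_limit_s:
        return coin_cents
    return 0
```

Under this sketch, both presentation conditions yield the same total pre-response duration, so the only difference between them is how long the coin itself is on screen.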

The study used a 2 (reward value: 1 vs. 10 cents) × 2 (reward presentation: suboptimal vs. optimal) within-subjects design. Participants started with 16 practice trials in order to get familiar with the task demands, and these were identical to the actual task but participants did not receive the money earned. The actual task thereafter consisted of 64 trials. Trials were presented in random order.

Reward cues

Following the exact same stimuli and procedure for reward presentation as Bijleveld et al. (2012a), the picture of a 10 Eurocent coin was taken as a high reward cue and the picture of a 1 Eurocent coin as a low reward cue. The pictures depicting the coin were 120 × 120 pixels in size and were presented for a brief (17 ms) or long (300 ms) time interval between masks. The suboptimal presentation time in combination with the masking proved too fast to allow conscious discrimination above chance level in a standard signal detection task in previous research (Bijleveld et al. 2012a).

Results and discussion

Reward effect

The median tapping times (i.e. the time it took to press 25 times) were subjected to analysis of variance, with reward value (low vs. high) and reward presentation (suboptimal vs. optimal) as within-subjects variables. This analysis revealed a significant main effect of reward value, F(1, 35) = 24.21, p < .001, ηp² = .41; reward presentation, F(1, 35) = 10.19, p = .003, ηp² = .23; and the interaction effect, F(1, 35) = 21.25, p < .001, ηp² = .38. The reward effect was stronger for optimal, t(36) = 4.78, p < .001 (one-tailed; M low = 3,775, SD = 837; M high = 3,102, SD = 281) than for suboptimal cues, t(36) = 2.03, p = .02 (one-tailed; M low = 3,222, SD = 337; M high = 3,198, SD = 315), although for both presentation times, high reward cues yielded significantly faster tapping than low reward cues. This difference seems to be mainly driven by participants refraining from investing effort on optimal low reward trials (cf., Bijleveld et al. 2010).

Eye blink rate

A Pearson correlation was computed to test for a link between EBR and the reward effect, which was calculated by subtracting the tapping times of the high reward value trials from those on the low reward value trials so that higher values indicate a speed up for higher rewards. The test revealed a positive correlation for suboptimal reward cues, r(36) = .37, p = .02 (one-tailed), but not for optimal reward cues r(36) = −.04, p = .81 (see Fig. 1). The difference between these two correlations proved significant, with a Steiger’s Z score of 1.73, p = .02 (one-tailed; Steiger 1980).
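The analysis steps above can be sketched as follows. The sketch assumes per-participant lists of tapping times; the function names are hypothetical. The comparison of the two dependent correlations follows the commonly used form of Steiger's (1980) Z for correlations that share one variable (here EBR). That test also requires the correlation between the two reward effects (`r23`) and the sample size, neither of which is reported here, so any values passed in are assumptions.

```python
import math
from statistics import median
from typing import Sequence


def reward_effect(low_times: Sequence[float], high_times: Sequence[float]) -> float:
    """Median low-reward time minus median high-reward time (positive = speed-up)."""
    return median(low_times) - median(high_times)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def steiger_z(r12: float, r13: float, r23: float, n: int) -> float:
    """Z for the difference between dependent correlations r12 and r13.

    r12: EBR with the suboptimal reward effect
    r13: EBR with the optimal reward effect
    r23: correlation between the two reward effects (not reported; an assumption)
    """
    rbar_sq = ((r12 + r13) / 2.0) ** 2
    psi = r23 * (1 - 2 * rbar_sq) - 0.5 * rbar_sq * (1 - 2 * rbar_sq - r23 ** 2)
    c = psi / (1 - rbar_sq) ** 2
    z_diff = math.atanh(r12) - math.atanh(r13)  # Fisher r-to-z transform
    return z_diff * math.sqrt(n - 3) / math.sqrt(2 - 2 * c)
```

A call such as `steiger_z(0.37, -0.04, r23, n)` would reproduce the reported test only if the unreported `r23` and the exact `n` were known.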

These results first of all show that participants indeed exerted significantly more effort when presented with the high value coin, regardless of whether this presentation was optimal or suboptimal. Secondly, a significant correlation between participants’ EBR and their reward effect on performance was found for suboptimal, but not for optimal reward cues. In conclusion, assuming EBR is a representative marker, the results of this first study suggest a connection between striatal dopaminergic functioning and people’s effort responses to reward cues, but only when these are presented suboptimally.

Study 2

To increase convergent validity, we opted for a different marker of individual differences in striatal dopamine activity in Study 2. An important potential drawback of EBR is that it cannot be measured at the actual time of the experimental task. To overcome this drawback, Study 2 used ERN instead of EBR, a neurophysiological measure that can be measured simultaneously with task performance. Moreover, we used a cognitive task instead of a physical task, where response accuracy and speed served as indicators of performance. In previous research, cognitive tasks have successfully been used to discern between the effects of optimal and suboptimal reward cues, both on the behavioral and the brain level (e.g., Bijleveld et al. 2010; Capa et al. 2012).

Electroencephalography was used to obtain event-related potentials (ERP) during the task. In the last decade, studies have identified a neural response to errors that has been termed the ERN or error negativity. Observed at fronto-central recording sites, ERN consists of a large negative shift in the response- or feedback-locked ERP occurring 50–100 ms after subjects have made an erroneous response (Holroyd and Coles 2002). Localization with dipole localization algorithms has led most researchers to conclude that ERN originates in the anterior cingulate cortex, a structure directly connected to the ventral striatum.

There are several indications that the amplitude of the ERN can be interpreted as a correlate of functioning of the dopamine system. For example, previous studies show that administering drugs that increase striatal dopaminergic activity, such as amphetamine or caffeine, also increases ERN amplitude. Moreover, patients with schizophrenia, obsessive–compulsive disorder and Parkinson’s disease, all characterized by disturbances in dopaminergic activity, show abnormal ERNs (Holroyd et al. 2002; Olvet and Hajcak 2008). Similarly, ERN is observed to decline together with weakened dopaminergic activity accompanying old age (Nieuwenhuis et al. 2010). This leads us to suggest that the average ERN over all incorrect trials reflects dopaminergic functioning in participants, and thus yields a meaningful individual difference measurement.

Methods

Participants

Thirty participants were recruited (M age = 21.53, SD = 2.40, 25 females) via flyers distributed at university buildings. Participants were screened through an online questionnaire to ensure they met the same inclusion criteria as in the previous study. One participant declared after the study to have been diagnosed with ADHD, and was therefore left out of the analysis. Another participant was left out due to equipment failure, bringing the final sample to twenty-eight (M age = 21.64, SD = 2.45, 23 females). Participants were compensated with the money they earned during the study, which was on average €12.92.

The study used a 2 (reward value: 1 vs. 50 cents) × 2 (reward presentation: brief vs. long) within-subjects design, with EEG measurements to test for between-subjects effects. After 20 practice trials, participants completed 160 trials in total, 40 repetitions per condition. Fifty-cent coins were used instead of 10 cent, in an attempt to maximize reward effects on effort as well as on ERN (see Bijleveld et al. 2010, for use of the same coins and masks).

Procedure

Upon entering the lab participants signed informed consent and received verbal instructions, after which the facial and EEG electrodes were applied. Participants were seated in front of a 60 Hz CRT monitor with a resolution of 1,024 by 768 at a viewing distance of 60 cm, which was measured at the beginning of each study. Participants were told that on each trial in the study they would see a coin (1 or 50 cents), which they could earn by correctly solving the subsequent task.

Experimental task

The task was analogous to the one used in the first study. Again, each trial started with a fixation cross visible for 1,000 ms. Then, participants saw a coin, presented between masks for 17 (suboptimal) or 300 ms (optimal), where the combined presentation time of masks and stimuli was kept constant. After the presentation of the coin, another fixation cross appeared for a random duration between 1 and 3 s. The subsequent task consisted of a field of squares and triangles displayed in a 4 by 4 grid, and participants were to assess whether there was an even or odd number of triangles (actual numbers ranging from 3 to 8). After an answer was provided using either of two keys on the keyboard, a feedback screen appeared indicating whether their answer was right or wrong. The amount of money they received for each correct trial was contingent on their response latency: the faster they were, the more money they earned. The amount was computed as M = V − (V × (T/A)), where M is the amount of money earned, V is the value of the coin that was presented (in cents), T is the response latency, and A is a participant-specific ability parameter that was computed based on participants’ performance during the practice trials. This parameter was computed so that it would yield a roughly equal number of errors per participant. It generated a response window within which the reward declined to zero. This window was so brief that participants had to make an educated guess, rather than engage in actual counting, to still be able to earn a percentage of the reward. Under this time pressure, we expected to find reward effects mainly on accuracy. With reaction times >0, the earned amount was always less than the value of the coin presented at the beginning of the trial. When the response was incorrect, the participant received no money. The specific amount earned per trial, together with the overall cumulative earnings, was shown on the final screen of each trial. Then, a new trial started.
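The payoff rule M = V − (V × (T/A)) can be written out as a small sketch. The function name is hypothetical, T and A are assumed to be expressed in the same time unit, and clamping the amount at zero for latencies beyond A is an assumption based on the statement that the reward declined to zero within the response window.

```python
def payoff_cents(coin_value: float, latency: float, ability: float, correct: bool) -> float:
    """Money earned on one trial: M = V - V * (T / A), and 0 for incorrect answers.

    coin_value (V): value of the presented coin in cents (1 or 50 in Study 2)
    latency (T):    response latency
    ability (A):    participant-specific parameter from the practice trials
    """
    if not correct:
        return 0.0
    earned = coin_value - coin_value * (latency / ability)
    # Assumption: responses slower than A earn nothing rather than a negative amount.
    return max(0.0, earned)
```

For example, with V = 50 cents and a latency equal to half of A, the rule yields 25 cents; an immediate response (T = 0) would yield the full coin value.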

Coin visibility

In order to verify whether participants could accurately discriminate between high- and low-value coins when presented for 17 ms, a signal detection task was run after the experimental task on the same participants. They were exposed to the same coin stimuli as in the task (i.e. 1 vs. 50 cents, presented for 17 vs. 300 ms), and they were subsequently asked to indicate the identity of the stimulus using two keys representing the high and low value coin. The task consisted of 120 trials, with 30 trials per condition.

EEG recording

EEG was recorded from 32 scalp locations according to the International 10–20 EEG System with Ag–AgCl-tipped electrodes. Electro-oculogram (EOG) was recorded from bipolar montages above and below the right eye and the outer canthi of the eyes. Raw EEG recordings were made with the ActiveTwo system (BioSemi, Amsterdam, The Netherlands) relative to the common mode sense (CMS). All data were recorded with a sampling rate of 2,048 Hz, and data were stored for offline analysis.

ERPs

EEG data recorded during the task were re-referenced offline to the averaged signal of all scalp channels, and subsequently filtered with a 1 Hz high-pass filter with a slope of 24 dB/oct and a 10 Hz low-pass filter with a slope of 24 dB/oct. Data were segmented into 2,500 ms windows with a 100 ms baseline correction with respect to the feedback stimulus onset. Ocular artifacts were corrected using the Gratton and Coles algorithm (Gratton et al. 1983) in addition to a visual inspection, and segments containing artifacts were removed (difference criterion between two subsequent data points of 50 μV; difference criterion within segment of 100 μV; absolute amplitude criterion of 50 μV). For each participant the segments containing trials with an erroneous response were combined for the calculation of an average feedback-locked ERN. The average was determined on the Fz electrode within a window of 0–300 ms after the feedback, where the lowest and subsequent highest amplitudes were averaged over miss-trials per participant. These amplitudes were then subtracted, highest minus lowest, to obtain an overall measurement of individual ERN. Taking the difference between these two peaks acted as an additional baseline correction.
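The peak-to-peak quantification described above can be illustrated with a minimal sketch. It assumes that preprocessed, feedback-locked error-trial segments at Fz are available as equally long lists of amplitudes, and that the trough and the subsequent peak are located on the averaged error-trial waveform; the text leaves open whether peaks were instead identified per trial, so this ordering, like the function names, is an assumption.

```python
from typing import List, Sequence, Tuple


def average_waveform(trials: Sequence[Sequence[float]]) -> List[float]:
    """Point-by-point mean over error-trial segments of equal length."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]


def ern_peak_to_peak(times_ms: Sequence[float], amplitudes_uv: Sequence[float],
                     window: Tuple[float, float] = (0.0, 300.0)) -> float:
    """Highest amplitude following the trough, minus the trough, within the window."""
    idx = [i for i, t in enumerate(times_ms) if window[0] <= t <= window[1]]
    if not idx:
        raise ValueError("no samples fall inside the analysis window")
    trough = min(idx, key=lambda i: amplitudes_uv[i])   # most negative point
    later = [i for i in idx if i >= trough]             # only samples after the trough
    peak = max(later, key=lambda i: amplitudes_uv[i])
    return amplitudes_uv[peak] - amplitudes_uv[trough]
```

Because the measure is a difference between two points of the same waveform, a constant offset in the signal cancels out, which is the sense in which it acts as an additional baseline correction.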

Results and discussion

Coin visibility

To assess whether participants could consciously discriminate between high and low rewards for suboptimal cue trials, forced-choice signal detection test data were analyzed using a binomial test. This revealed an accuracy of 54.41 % (SD = 10.49), slightly but significantly above chance with z = 2.60, p < .01. Accuracy was significantly lower, though, than for optimally presented cues (M optimal = 96.07 %, SD = 18.52 %), t(28) = −10.87, p < .001.

Counting task

The mean accuracy scores were subjected to an analysis of variance, with reward value (low vs. high) and cue presentation (suboptimal vs. optimal) as within-participants variables. This analysis revealed a significant main effect of reward value, F(1, 27) = 13.89, p < .001, ηp² = .34, with no effect for cue presentation, nor an interaction effect (both F’s < 1). The effect of reward value was significant for suboptimal cues (M high = 85.71 %, SD = 9.47 %; M low = 81.43 %, SD = 7.31 %), t(28) = 2.52, p = .01 (one-tailed), but not for optimal cues (M high = 85.89 %, SD = 7.79 %; M low = 82.68 %, SD = 10.43 %), t(28) = 1.67, p = .06 (one-tailed). In addition, the mean response times were also subjected to a similar analysis of variance. This analysis revealed no significant main effect of reward value, F < 1; but did show an effect for cue presentation, F(1, 27) = 5.10, p = .03, ηp² = .16. Although the interaction was not significant, F(1, 27) = 3.85, p = .06, ηp² = .13, reward effects were explored for suboptimal and optimal reward cues separately. Participants did not respond faster on high reward compared to low reward trials (M high = 2,060 ms, SD = 385; M low = 2,044 ms, SD = 361) for suboptimal cues, t < 1, but did so for optimal cue trials (M high = 2,002 ms, SD = 339; M low = 2,049 ms, SD = 346), t(28) = 1.97, p = .03 (one-tailed).

With our signal detection scores slightly above chance, we investigated the relation between coin visibility and the reward effect on accuracy for brief presentations using the method recommended by Greenwald et al. (1995). The reward effect was regressed on the coin visibility variable, which was recoded so zero represented chance level detection. It was found that the slope of the regression line was not significant, b = .15, t(26) = 0.81, p = .43, indicating no relation between conscious detection of the reward cue and the reward effect. Then we tested whether the intercept was significantly above zero, b = .04, t(26) = 2.02, p = .03 (one-tailed), which it was. Hence, the regression model suggests a reward effect even when reward detection is at chance level. Together, this suggests that increases in the reward effect for suboptimal reward cues are not dependent on conscious awareness.
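The logic of this regression approach can be sketched with ordinary least squares. The sketch assumes that visibility and the reward effect are both expressed as proportions per participant and that chance level is .5; the function name and the returned dictionary are hypothetical. The intercept estimates the reward effect at chance-level detection, because the predictor is recoded so that zero represents chance.

```python
import math
from typing import Dict, Sequence


def greenwald_regression(visibility: Sequence[float], effect: Sequence[float],
                         chance: float = 0.5) -> Dict[str, float]:
    """Regress the reward effect on visibility recoded so that 0 = chance level."""
    x = [v - chance for v in visibility]
    n = len(x)
    mx, my = sum(x) / n, sum(effect) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, effect))
    slope = sxy / sxx
    intercept = my - slope * mx
    # Residual variance with n - 2 degrees of freedom
    sse = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, effect))
    s2 = sse / (n - 2)
    se_slope = math.sqrt(s2 / sxx)
    se_intercept = math.sqrt(s2 * (1.0 / n + mx ** 2 / sxx))

    def t_value(est: float, se: float) -> float:
        # Guard against a perfect fit, where the standard error is zero
        return est / se if se > 0 else math.copysign(math.inf, est)

    return {
        "slope": slope,
        "intercept": intercept,
        "t_slope": t_value(slope, se_slope),
        "t_intercept": t_value(intercept, se_intercept),
        "df": n - 2,
    }
```

A non-significant slope together with an intercept above zero is the pattern interpreted here as a reward effect that does not depend on conscious detection.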

ERN

To test the degree to which the effects of optimal and suboptimal reward cues were modulated by dopaminergic functioning, we computed individual ERN size by averaging ERN size over all incorrect trials. First a Pearson correlation was computed between averaged ERN and the reward effect on accuracy (accuracy for low reward cues subtracted from that for high reward cues). As expected, ERN size correlated positively with the reward effect for suboptimal reward cues, r(28) = .40, p = .02 (one-tailed), but not with that for optimal reward cues, r(28) = −.08, p = .68 (see Fig. 2). These two correlations are significantly different from each other, with a Steiger’s Z score of 1.80, p = .02 (one-tailed; Steiger 1980). ERN size did not significantly correlate with the reward effect on response times for either suboptimal or optimal reward cues, p’s > .05. To control for potential tradeoffs between speed and accuracy, we partialed out the effects of reaction times on accuracy (see Custers and Aarts 2003 for a similar analysis). ERN size remained significantly correlated with the reward effect for brief reward cues, r(25) = .39, p = .02 (one-tailed).
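The control analysis can be illustrated with the standard first-order partial correlation formula. The sketch assumes a single covariate (the reward effect on reaction times); which reaction time measure was actually partialed out is not specified in detail, and the function names are hypothetical.

```python
import math


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """Correlation between x and y after partialing z out of both.

    x: averaged ERN size
    y: reward effect on accuracy
    z: reward effect on reaction times (assumed covariate)
    """
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_corr_df(n: int, n_covariates: int = 1) -> int:
    """Degrees of freedom for testing a partial correlation."""
    return n - 2 - n_covariates
```

With 28 participants and one covariate this gives 25 degrees of freedom, which matches the r(25) reported for the partial correlation.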

Discussion

Looking at cognitive effort responses, we replicated our earlier findings and demonstrated that participants performed better when presented with a reward cue of higher value. Although there was a main effect of reward value on accuracy, there was no significant difference in this effect for the different cue presentations. The analyses of the reaction times suggest that this may be due to a shift in emphasis on reaction times on trials with optimal reward cues. Similar strategic shifts were observed by Bijleveld et al. (2010), although in that particular paradigm participants slowed down their responses in order to increase accuracy. The fact that we observe a speedup here with no significant effect on accuracy for optimal trials is probably due to the short time window in this task, which forces participants to make an educated guess. Under these circumstances, slowing down would probably not lead to a reliable increase in accuracy.

Overall, higher accuracy was linked to increased striatal dopaminergic functioning, as indicated by averaged ERN amplitude, but only for suboptimal reward cue trials. In addition, this effect was still present when controlling for speed, which shows that the accuracy effect is a genuine effect on performance and not caused by a speed-accuracy trade-off.

According to the signal detection task, participants were able to discriminate the briefly presented cues slightly above chance level. However, there are several reasons to assume that effort responses on the brief reward cue trials do not reflect responses that are the result of deliberate reward processing. First of all, there seemed to be a difference in the effects on reaction times for suboptimal and optimal reward cues. Although the interaction was not significant, participants were faster for high than for low optimal reward cues, which may reflect strategic behavior as faster reaction times were rewarded. No such effect was observed for brief reward cues. Second, in line with our predictions, ERN only correlated with the reward effect for brief reward cues, which suggests that for these cues, the effect was not overruled or overshadowed by strategic reactions or reflections on reward value. As such, we strongly suspect that despite reward detection being slightly above chance for brief reward cues, responses to these cues could still be regarded as reflecting initial reward processing.

One could argue that, at first sight, measuring ERN on the same trials that serve as the performance measure presents a confound. That is, an increase in accuracy (fewer errors) would cause ERNs to be calculated over fewer trials, possibly increasing the resulting mean ERN as errors would be rarer. However, if this had caused the effect, one would expect performance to be correlated with ERN on trials with optimal reward cues as well, as they would contribute to overall accuracy as much as suboptimal reward cue trials would. Although investigating the relation between ERN and accuracy in each cell of the design would be informative in many respects, the low number of error trials contributing to the ERN average prevented us from running such analyses.

General discussion

The present research aimed to examine the role of the mesolimbic dopamine system in producing rudimentary effort responses. First of all, our studies replicate earlier findings by demonstrating that effort responses can result from reward cues that are presented suboptimally, which makes the contribution of conscious, deliberative processes unlikely. Second, reward responses were found to be correlated with both resting-state EBR and averaged ERN amplitude, two markers of striatal dopaminergic functioning, but only for suboptimal reward cues. As such, the current findings provide support for the involvement of the mesolimbic dopamine system in effort responses to suboptimal reward cues.

The results presented here support the model of reward pursuit put forward by Bijleveld et al. (2012b), in which effort responses to optimal and suboptimal reward cues are assumed to rely, at least partly, on different anatomical structures in the brain. Whereas processing of suboptimal reward cues is thought to require no awareness and to rely on subcortical brain structures—most notably the striatum—optimal reward cues enjoy full reward processing associated with conscious awareness, which allows for a host of strategic and reflective processes that are supported by higher-level cortical areas. The observation that a correlation between effort responses and striatal dopaminergic functioning was obtained only for suboptimal reward cues fits the notion that for optimal reward cues, initial effort responses produced by rudimentary reward processing can be overruled or overshadowed by these strategic processes.

The finding that individual differences in striatal dopaminergic activity were linked to effort responses solely for suboptimal cues suggests that effects of optimal reward cues rely more on the interaction between these subcortical areas and higher cortical processes (Cools 2011). In situations where the task constrains strategic responding, or performance does not benefit from it, initial and full reward processing may produce the same outcomes (see e.g., Bijleveld et al. 2009; cf., Pessiglione et al. 2007). As noted earlier, in previous work we found that people deliberately sacrificed speed for accuracy when a valuable reward was at stake, causing full reward processing to diverge from the initial course (Bijleveld et al. 2010). Such responses to fully processed reward cues may in this case very well be correlated with individual differences that affect strategic responding independently of striatal dopamine functioning.

This is not to say that differences in performance between suboptimal and optimal reward cues necessarily reflect a difference in effort expenditure. As previous research has pointed out (Gendolla et al. 2012), the relation between effort and performance is not always a direct one. Strategic processes on trials with optimal reward cues could either change the relation between effort and performance, or affect the process of effort expenditure by themselves. In any case, because of the observed correlation between striatal dopamine functioning and performance for suboptimal reward cues, we assume that performance measures are a better indicator of effort expenditure in the case of suboptimal than optimal reward cues.

Although Pessiglione et al. (2007) ruled out strategic responding as suboptimal reward cues could not be consciously detected above chance level, the effect on behavior under these conditions was found to be unrelated to striatal activity. A possible reason for this may be that the activity occurs in a quicker and more transient way compared to optimal reward cues. Such an explanation is in line with the idea that conscious awareness of a stimulus keeps information carried by the stimulus active over a sustained period of time (Dehaene and Naccache 2001). If true, this accounts for why a direct link between suboptimal reward cues and striatal activation was not established, as it may be the case that ventral striatum activation occurred too quickly and too transiently to be detected with fMRI. The current research is not hindered by these methodological constraints as it relied on individual differences in striatal dopaminergic functioning in relation to overall task performance.

Although our results are consistent with our theoretical predictions, the correlational nature of our findings may allow for alternative accounts. Most notably, previous studies have demonstrated relationships between activation in the ventral striatum and individual differences in learning performance (Santesso et al. 2008; Schonberg et al. 2007; Vink et al. 2013), and learning effects might explain performance in our task without having to assume direct involvement of the dopamine system in producing the effort response. That is, experimental tasks designed to study the effects of optimal versus suboptimal reward cues involve feedback about the obtained reward. This feedback, in turn, provides a clear opportunity for basic stimulus–response learning. So, it could be argued that learning takes place only on trials with optimal, consciously processed reward cues. Effort responses to suboptimal reward cues, then, could just be learned, a-motivational responses. The present pattern of findings, however, seems incompatible with this account, as the correlations between markers of dopaminergic functioning and performance proved only to exist for suboptimal rewards. So, if anything, it seems that the dopaminergic system is involved in shaping performance especially on these trials. Nevertheless, direct evidence for this involvement is rather scarce (but see Pessiglione et al. 2008, 2011). More research is thus needed to delineate the role of the subcortical reward center in the processing of suboptimal reward cues.

The present research is in line with previous research (Bijleveld et al. 2012b), suggesting that people are able to pursue rewards that are perceived without awareness, and that reward pursuit in this case is guided by a subcortical reward system that is different from the one that dominates conscious reward pursuit. The fact that such a difference was observed in our second study even though brief reward cues were not fully shielded from conscious detection suggests that differences in conscious awareness may be associated with the operation of these different types of reward processing, but that conscious awareness itself is perhaps not the crucial factor that distinguishes them.

The general observation that different mechanisms may underlie the processing of optimal and suboptimal reward cues has larger implications for many aspects of cognition and behavior. The rudimentary reward system has been shown to play a role in processes ranging from goal pursuit (Custers and Aarts 2010), the experience of agency (Aarts et al. 2009), and financial decision-making (Knutson and Greer 2008), to the experience of intrinsic enjoyment during task performance (Murayama et al. 2010). In addition, variations in dopaminergic activity have been linked to various neurological and psychiatric disorders, including Parkinson’s disease, schizophrenia, depression, and drug addiction (Salamone et al. 2005). Exploring the functional processes affected by these conditions can help in understanding the scope of the dysfunction, and how far its symptoms reach in day-to-day functioning. Taken together, by distinguishing between two different mechanisms for reward processing, the present approach to studying motivated action may in the future prove fruitful for enhancing our understanding of human behavior across a wide variety of domains.

References

Aarts, H., Bijleveld, E., Custers, R., Dogge, M., Deelder, M., Schutter, D., et al. (2012). Positive priming and intentional binding: Eye-blink rate predicts reward information effects on the sense of agency. Social Neuroscience, 7, 105–112. doi:10.1080/17470919.2011.590602.

Aarts, H., Custers, R., & Marien, H. (2009). Priming and authorship ascription: When nonconscious goals turn into conscious experiences of self-agency. Journal of Personality and Social Psychology, 96, 967–979. doi:10.1037/a0015000.

Aston-Jones, G., & Cohen, J. D. (2005). An integrative theory of locus coeruleus-norepinephrine function: Adaptive gain and optimal performance. Annual Review of Neuroscience, 28, 403–450. doi:10.1146/annurev.neuro.28.061604.135709.

Bijleveld, E., Custers, R., & Aarts, H. (2009). The unconscious eye-opener: Pupil size reveals strategic recruitment of resources upon presentation of subliminal reward cues. Psychological Science, 20, 1313–1315. doi:10.1111/j.1467-9280.2009.02443.x.

Bijleveld, E., Custers, R., & Aarts, H. (2010). Unconscious reward cues increase invested effort, but do not change speed-accuracy tradeoffs. Cognition, 115, 330–335. doi:10.1016/j.cognition.2009.12.012.

Bijleveld, E., Custers, R., & Aarts, H. (2011). Once the money is in sight: Distinctive effects of conscious and unconscious rewards on task performance. Journal of Experimental Social Psychology, 47, 865–869. doi:10.1016/j.jesp.2011.03.002.

Bijleveld, E., Custers, R., & Aarts, H. (2012a). Adaptive reward pursuit: How effort requirements affect unconscious reward responses and conscious reward decisions. Journal of Experimental Psychology: General, 141, 728–742. doi:10.1037/a0027615.

Bijleveld, E., Custers, R., & Aarts, H. (2012b). Human reward pursuit: From rudimentary to higher-level functions. Current Directions in Psychological Science, 21, 194–199. doi:10.1177/0963721412438463.

Bjork, J. M., & Hommer, D. W. (2007). Anticipating instrumentally obtained and passively-received rewards: A factorial fMRI investigation. Behavioural Brain Research, 177, 165–170. doi:10.1016/j.bbr.2006.10.034.

Björklund, A., & Lindvall, O. (1984). Dopamine containing systems in the CNS. In A. Björklund & T. Hokfelt (Eds.), Handbook of chemical neuroanatomy, classical transmitters in the CNS, part 1 (pp. 55–122). London: Elsevier.

Braver, T. S., Krug, M. K., Chiew, K. S., Kool, W., Clement, N. J., Adcock, R. A., et al. (2014). Mechanisms of motivation–cognition interaction: Challenges and opportunities. Cognitive, Affective, & Behavioral Neuroscience, 14, 443–472. doi:10.3758/s13415-014-0300-0.

Brehm, J. W., & Self, E. A. (1989). The intensity of motivation. Annual Review of Psychology, 40, 109–131. doi:10.1146/annurev.ps.40.020189.000545.

Capa, R. L., Bouquet, C. A., Dreher, J. C., & Dufour, A. (2012). Long-lasting effects of performance-contingent unconscious and conscious reward incentives during cued task-switching. Cortex, 1–12. doi:10.1016/j.cortex.2012.05.018.

Capa, R. L., & Custers, R. (2013). Conscious and unconscious influences of money: Two sides of the same coin? In E. Bijleveld & H. Aarts (Eds.), The psychological science of money. New York: Springer.

Colzato, L. S., Wildenberg, W. P. M., Wouwe, N. C., Pannebakker, M. M., & Hommel, B. (2009). Dopamine and inhibitory action control: Evidence from spontaneous eye blink rates. Experimental Brain Research, 196, 467–474. doi:10.1007/s00221-009-1862-x.

Cools, R. (2011). Dopaminergic control of the striatum for high-level cognition. Current Opinion in Neurobiology, 3, 402–407. doi:10.1016/j.conb.2011.04.002.

Custers, R., & Aarts, H. (2003). On the role of processing goals in evaluative judgments of environments: Effects on memory–judgment relations. Journal of Environmental Psychology, 23, 289–299. doi:10.1016/S0272-4944(02)00076-2.

Custers, R., & Aarts, H. (2010). The unconscious will: How the pursuit of goals operates outside of conscious awareness. Science, 329, 47–50. doi:10.1126/science.1188595.

Dagher, A., & Robbins, T. W. (2009). Personality, addiction, dopamine: Insights from Parkinson’s disease. Neuron, 61, 502–510. doi:10.1016/j.neuron.2009.01.031.

Dehaene, S., & Naccache, L. (2001). Towards a cognitive neuroscience of consciousness: Basic evidence and a workspace framework. Cognition, 79, 1–37. doi:10.1016/S0010-0277(00)00123-2.

Depue, R. A., Luciana, M., Arbisi, P., Collins, P., & Leon, A. (1994). Dopamine and the structure of personality: Relation of agonist-induced dopamine activity to positive emotionality. Journal of Personality and Social Psychology, 67, 485–498. doi:10.1037/0022-3514.67.3.485.

Gendolla, G. H. E., Wright, R. A., & Richter, M. (2012). Effort intensity: Some insights from the cardiovascular system. In R. Ryan (Ed.), The Oxford handbook of human motivation. New York: Oxford University Press.

Gratton, G., Coles, M. G. H., & Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology, 55, 468–484. doi:10.1016/0013-4694(83)90135-9.

Greenwald, A. G., Klinger, M. R., & Schuh, E. S. (1995). Activation by marginally perceptible (“subliminal”) stimuli: Dissociation of unconscious from conscious cognition. Journal of Experimental Psychology: General, 124, 22–42. doi:10.1037/0096-3445.124.1.22.

Hariri, A. R., Brown, S. M., Williamson, D. E., Flory, D., De Wit, H., & Manuck, S. B. (2006). Preference for immediate over delayed rewards is associated with magnitude of ventral striatal activity. Journal of Neuroscience, 26, 13213–13217. doi:10.1523/JNEUROSCI.3446-06.2006.

Heimer, L., & Van Hoesen, G. W. (2006). The limbic lobe and its output channels: Implications for emotional functions and adaptive behavior. Neuroscience and Biobehavioral Reviews, 30, 126–147. doi:10.1016/j.neubiorev.2005.06.006.

Holroyd, C. B., & Coles, M. G. H. (2002). The neural basis of human error processing: Reinforcement learning, dopamine, and the error-related negativity. Psychological Review, 109, 679–709. doi:10.1037//0033-295X.109.4.679.

Holroyd, C. B., Praamstra, P., Plat, E., & Coles, M. G. (2002). Spared error-related potentials in mild to moderate Parkinson’s disease. Neuropsychologia, 40, 2116–2124.

Howes, O. D., & Kapur, S. (2009). The dopamine hypothesis of schizophrenia: Version III—The final common pathway. Schizophrenia Bulletin, 6, 1–14. doi:10.1093/schbul/sbp006.

Huang, Z., Stanford, M. S., & Barratt, E. S. (1994). Blink rate related to impulsiveness and task demands during performance of event-related potential tasks. Personality and Individual Differences, 16, 645–648. doi:10.1016/0191-8869(94)90192-9.

Karson, C. N. (1983). Spontaneous eye-blink rates and dopaminergic systems. Brain, 106, 643–653. doi:10.1093/brain/106.3.643.

Kegeles, L. S., Abi-Dargham, A., Frankle, G., Gil, R., & Cooper, T. (2010). Increased synaptic dopamine function in associative regions of the striatum in schizophrenia. Archives of General Psychiatry, 3, 231–239. doi:10.1001/archgenpsychiatry.2010.10.

Knutson, B., Delgado, M. R., & Phillips, P. E. M. (2008). Representation of subjective value in the striatum. In P. W. Glimcher, C. F. Camerer, E. Fehr, & R. A. Poldrack (Eds.), Neuroeconomics: Decision making and the brain (pp. 389–406). Oxford: Oxford University Press.

Knutson, B., & Greer, S. M. (2008). Anticipatory affect: Neural correlates and consequences for choice. Philosophical Transactions of the Royal Society B: Biological Sciences, 363, 3771–3786. doi:10.1098/rstb.2008.0155.

Martin, L. E., & Potts, G. F. (2004). Reward sensitivity in impulsivity. Cognitive Neuroscience and Neuropsychology, 15, 1519–1522. doi:10.1097/01.wnr.0000132920.12990.b9.

McClure, S. M., Laibson, D. I., Loewenstein, G., & Cohen, J. D. (2004). Separate neural systems value immediate and delayed monetary rewards. Science, 306, 503–507. doi:10.1126/science.1100907.

Murayama, K., Matsumoto, M., Izuma, K., & Matsumoto, K. (2010). Neural basis of the undermining effect of monetary reward on intrinsic motivation. Proceedings of the National Academy of Sciences, 107, 20911–20916. doi:10.1073/pnas.1013305107.

Nieuwenhuis, S., Ridderinkhof, K., Talsma, D., Coles, M. G. H., Holroyd, C. B., Kok, A., et al. (2010). A computational account of altered error processing in older age: Dopamine and the error-related negativity. Cognitive, Affective, & Behavioral Neuroscience, 6, 18–23. doi:10.3758/CABN.2.1.19.

Niv, Y., Daw, N. D., Joel, D., & Dayan, P. (2007). Tonic dopamine: Opportunity costs and the control of response vigor. Psychopharmacology (Berlin), 191, 507–520. doi:10.1007/s00213-006-0502-4.

O’Doherty, J., Hornack, J., Bramham, J., Rolls, E., Morris, G., & Bullock, P. (2004). Reward-related reversal learning after surgical excisions in orbito-frontal or dorsolateral prefrontal cortex in humans. Journal of Cognitive Neuroscience, 29, 463–478.

Olvet, D. M., & Hajcak, G. (2008). The error-related negativity (ERN) and psychopathology: Toward an endophenotype. Clinical Psychology Review, 28, 1343–1354. doi:10.1016/j.cpr.2008.07.003.

Pessiglione, M., Petrovic, P., Daunizeau, D., Palminteri, S., Dolan, R. J., & Frith, C. D. (2008). Subliminal instrumental conditioning demonstrated in the human brain. Neuron, 59, 561–567. doi:10.1016/j.neuron.2008.07.005.

Pessiglione, M., Schmidt, L., Draganski, B., Kalisch, R., Lau, H., Dolan, R. J., et al. (2007). How the brain translates money into force: A neuroimaging study of motivation. Science, 316, 904–906. doi:10.1126/science.1140459.

Pessiglione, M., Schmidt, L., Palminteri, S., & Frith, C. D. (2011). Reward processing and conscious awareness. In M. R. Delgado, E. A. Phelps, & T. W. Robbins (Eds.), Decision making, affect, and learning (pp. 329–348). New York, NY: Oxford University Press.

Phillips, P. E., Walton, M. E., & Jhou, T. J. (2007). Calculating utility: Preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacology (Berlin), 191, 483–495. doi:10.1007/s00213-00600626-6.

Salamone, J., Correa, M., Mingote, S., & Weber, S. (2005). Beyond the reward hypothesis: Alternative functions of nucleus accumbens dopamine. Current Opinion in Pharmacology, 5, 34–41. doi:10.1016/j.coph.2004.09.004.

Santesso, D. L., Dillon, D. G., Birk, J. L., Holmes, A. J., Goetz, E., Bogdan, R., et al. (2008). Individual differences in reinforcement learning: Behavioral, electrophysiological, and neuroimaging correlates. Neuroimage, 42, 807–816. doi:10.1016/j.jpsychires.2008.03.001.

Schonberg, T., Daw, N. D., Joel, D., & O’Doherty, J. P. (2007). Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. Journal of Neuroscience, 27, 12860–12867. doi:10.1523/JNEUROSCI.2496-07.2007.

Schultz, W. (2006). Behavioral theories and the neurophysiology of reward. Annual Review of Psychology, 57, 87–115. doi:10.1146/annurev.psych.56.091103.070229.

Schultz, W., Dayan, P., & Montague, P. R. (1997). A neural substrate of prediction and reward. Science, 275, 1593–1599. doi:10.1126/science.275.5306.1593.

Steiger, J. H. (1980). Tests for comparing elements of a correlation matrix. Psychological Bulletin, 87, 245. doi:10.1037/0033-2909.87.2.245.

Tindell, A. J., Smith, K. S., Pecina, S., Berridge, K. C., & Aldridge, J. W. (2006). Ventral pallidum firing codes hedonic reward: When a bad taste turns good. Journal of Neurophysiology, 96, 2399–2409. doi:10.1152/jn.00576.2006.

Treadway, M. T., Buckholtz, J. W., Schwartzman, A. N., Lambert, W. E., & Zald, D. H. (2009). Worth the ‘EEfRT’? The effort expenditure for rewards task as an objective measure of motivation and anhedonia. PLoS One, 4, e6598. doi:10.1371/journal.pone.0006598.

Van Hell, H., Vink, M., Ossewaarde, L., Jager, G., Kahn, R. S., & Ramsey, N. F. (2010). Chronic effects of cannabis use on the human reward system: An fMRI study. European Neuropsychopharmacology, 20, 153–163. doi:10.1016/j.euroneuro.2009.11.010.

Vink, M., Pas, P., Bijleveld, E., Custers, R., & Gladwin, T. E. (2013). Ventral striatum is related to within-subject learning performance. Neuroscience, 250, 408–416. doi:10.1016/j.neuroscience.2013.07.034.

Zedelius, C. M., Veling, H., & Aarts, H. (2011). Boosting or choking—How conscious and unconscious reward processing modulate the active maintenance of goal-relevant information. Consciousness and Cognition, 20, 355–362. doi:10.1016/j.concog.2010.05.001.

Zedelius, C. M., Veling, H., & Aarts, H. (2012). When unconscious rewards boost cognitive task performance inefficiently: The role of consciousness in integrating value and attainability information. Frontiers in Human Neuroscience, 6, 219. doi:10.3389/fnhum.2012.00219.

Acknowledgments

The work in this paper was supported by a Neuroscience & Cognition Utrecht grant to Ruud Custers and Matthijs Vink.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Pas, P., Custers, R., Bijleveld, E. et al. Effort responses to suboptimal reward cues are related to striatal dopaminergic functioning. Motiv Emot 38, 759–770 (2014). https://doi.org/10.1007/s11031-014-9434-1

DOI: https://doi.org/10.1007/s11031-014-9434-1