Abstract

Many clinical studies require the follow-up of patients over time. This is challenging: apart from frequently observed drop-out, there are often also organizational and financial challenges, which can lead to reduced data collection and, in turn, can complicate subsequent analyses. In contrast, there is often plenty of baseline data available of patients with similar characteristics and background information, e.g., from patients that fall outside the study time window. In this article, we investigate whether we can benefit from the inclusion of such unlabeled data instances to predict accurate survival times. In other words, we introduce a third level of supervision in the context of survival analysis, apart from fully observed and censored instances, we also include unlabeled instances. We propose three approaches to deal with this novel setting and provide an empirical comparison over fifteen real-life clinical and gene expression survival datasets. Our results demonstrate that all approaches are able to increase the predictive performance over independent test data. We also show that integrating the partial supervision provided by censored data in a semi-supervised wrapper approach generally provides the best results, often achieving high improvements, compared to not using unlabeled data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many clinical studies require following subjects over time and measuring the time until a certain event is experienced (e.g., death, progression, hospital discharge, etc). The resulting collected datasets are typically analyzed with survival analysis techniques. Survival analysis is a branch of statistics that analyzes the expected duration until an event of interest occurs (Kleinbaum & Klein, 2010). Censoring is an essential concept in survival analysis which makes it challenging compared to other analytical methods. Censoring can occur due to various reasons, such as drop-out, and means that the observed time is different from the actual event time. In the case of right censoring, for instance, we know that the actual event time is greater than the observed time (Hosmer et al., 2011).

Traditional survival analysis methods include the Cox Proportional Hazards model (CPH) (Cox, 1992). CPH is basically a linear regression model that predicts simultaneously the effect of several risk factors on survival time. However, these standard survival models encounter some challenges when it comes to real-world datasets. For instance, they cannot easily capture nonlinear relationships between the covariates. In addition, in many applications, the presence of high-dimensional data is quite common, e.g., gene expression data; however, these traditional methods are not able to efficiently deal with such high-dimensional data. As a result, machine learning-based techniques have become increasingly popular in the survival analysis context over recent years (Wang et al., 2019). Applying machine learning methods directly to censored data is challenging since the value of a measurement or observation is only partially known. Several studies have successfully modified machine learning algorithms to make use of censored information in survival analysis, e.g., decision trees (Gordon & Olshen, 1985), artificial neural networks (ANN) (Faraggi & Simon, 1995), and support vector machines (SVM) (Khan & Zubek, 2008) to name a few. Popular ensemble-based frameworks include bagging survival trees (Hothorn et al., 2004) and random survival forests (Ishwaran et al., 2008). Also, more advanced learning tasks such as active learning (Vinzamuri et al., 2014) and transfer learning (Li et al., 2016) have been extended toward survival analysis.

Long-term follow-up of patients is often expensive, both time- and effort-wise and financially. As a result, the number of subjects that are included in a study and followed in time is often limited. However, many more subjects may exist (e.g., through retrospective data collection) that meet the inclusion/exclusion criteria of the follow-up study. If the study aims to predict outcomes based on variables collected at baseline, then we hypothesize that these extra (unlabeled) data points might actually boost the predictive performance of the resulting model, if used wisely. This corresponds to a semi-supervised learning set-up (Chapelle et al., 2009), which deals with scenarios where only a small part of the instances in the training data have an outcome label attached, but the rest is unlabeled. To our knowledge, such a semi-supervised learning set-up has never been investigated in the context of survival analysis, and with this article, we aim to fill this gap.

Including unlabeled instances in a survival analysis task leads to three distinct subsets of data, that differ in the amount of supervised information they contain: a set of (1) fully observed, (2) partially observed (censored), and (3) unobserved data points. Our goal is to look at these three subsets of data altogether. In particular, we address two research questions: (1) can the predictive performance over an independent test set be increased by including unlabeled instances (i.e., does the semi-supervised learning setting carry over to the survival analysis context)?, and (2) what is the best approach to integrate the 3 subsets of data in the analysis? To address this second question, we propose and compare three different approaches. The first approach is to treat the unlabeled instances as censored with the censoring time equal to zero and apply a machine learning-based survival analysis technique. For the second approach, we apply a standard semi-supervised learning approach. In particular, we use the widely used self-training wrapper technique (Yarowsky, 1995; Li & Zhou, 2005). This technique first builds a classifier over the labeled (in our case, observed and censored) data points and iteratively augments the labeled set with highly confident predictions over the unlabeled dataset. In the third approach, we propose an adaptation of the second one, in which we initially add the censored instances to the unlabeled set, and exploit the censored information in the data augmentation process, to decide how many instances to add to the labeled set in each iteration. In all three approaches, we use random survival forests as base learner (Ishwaran et al., 2008). In order to answer the research questions, we apply and compare the approaches using fifteen real-life datasets from the healthcare domain.

The remainder of this article is organized as follows. Section 2 introduces the background and reviews some concepts of the employed models including random survival forest and self-training approaches. Section 3 describes related work. In section 4, three proposed approaches are introduced, two of which are a self-training-based framework that copes with the survival data. Section 5 presents the experimental set-up, including dataset description, unlabeled data generation, performance evaluation, and comparison methods and parameter instantiation. Results are presented in section 6. Conclusions are drawn in section 7.

2 Background

In this section, we first review some concepts of using machine learning methods for survival analysis. Afterward, we explain the self-training technique and how one can apply it to a survival analysis problem.

2.1 Random survival forest

Random survival forests are well-known ensemble-based learning models that have been widely used in many survival applications and have been shown to be superior to traditional survival models (Miao et al., 2015). Random survival forest (RSF) (Ishwaran et al., 2008) is quite close to the original Random Forest by Breiman (2001). The random forest algorithm makes a prediction based on tree-structured models. Similar to the random forest, RSF combines bootstrapping, tree building, and prediction aggregating. However, in the splitting criterion to grow a tree and in the predictions returned in the leaf nodes, RSF explicitly considers survival time and censoring information. RSF has three main steps. As the first step, it draws B bootstrap samples from the original data. In the second step, for each bootstrap sample, a survival tree is grown. At each node of a tree, p candidate variables are randomly selected, where p is a parameter, often defined as a proportion of the original number of variables. The task is to split the node into two child nodes using the best candidate variable and split point, as determined by the log-rank test (Segal, 1988). The best split is the one that maximizes survival differences between the two child nodes. Growing the obtained tree structure is continued until a stop criterion holds (e.g., until the number of observed instances in the terminal nodes drops below a specified value). In the last step, the cumulative hazard function (CHF) associated with each terminal node in a tree is calculated by the Nelson-Aalen estimator, which is a non-parametric estimator of the CHF (Kaplan & Meier, 1958). All cases within the same terminal node have the same CHF. The ensemble CHF is constructed as the average over the CHF of the B survival trees.

Noteworthy, the survival function and cumulative hazard function as linked as follows (Miller, 1981):

where H(t) and S(t) denote the cumulative hazard function and the survival function, respectively.

2.2 Self-training method

The semi-supervised learning (SSL) paradigm is a combination of supervised and unsupervised learning and has been widely used in many applications such as healthcare (Madani et al., 2018; Rogers et al., 2019),[22]. The primary goal of SSL methods is to take advantage of the unlabeled data in addition to the labeled data, in order to obtain a better prediction model. The acquisition of labeled data is usually expensive, time-consuming, and often difficult, specifically when it comes to healthcare and follow-up data. Hence, achieving good performance with supervised techniques is challenging, since the number of labeled instances is often too small. Over the years, many SSL techniques have been proposed (Zhu, 2005; VanEngelen & Hoos, 2020). In this article, we will focus on self-training (sometimes also called self-learning) (Yarowsky, 1995), one of the most widely used algorithms for SSL. Self-training has been used in different approaches like deep neural networks (Collobert & Weston, 2008), face recognition (Roli & Marcialis, 2006), and parsing (McClosky et al., 2006). This framework overcomes the issue of insufficient labeled data by augmenting the training set with unlabeled instances. It starts with training a model using a base learner on the labeled set and then augments this set with the predictions for the unlabeled instances that the model is most confident in (see Figure 1). This procedure is repeated until a certain stopping criterion is met. This stopping criterion, the number of instances to augment in each iteration, and the definition of confidence are instantiated according to the problem at hand.

3 Related work

Semi-supervised learning (SSL) methods have been applied in many different domains (Zhu, 2005; VanEngelen & Hoos, 2020). However, few efforts have been made in order to generalize SSL algorithms to be suitable for survival analysis.

Bair and Tibshirani (2004) combine supervised and unsupervised learning to predict survival times for cancer patients. They first employ a supervised approach to select a subset of genes from a gene expression dataset that correlates with survival. Then, unsupervised clustering is applied to these gene subsets to identify cancer subtypes. Once such subtypes are identified, they apply again supervised learning techniques to classify future patients into the appropriate subgroup or to predict their survival. Although the authors call the resulting approach semi-supervised, their setting is clearly different from ours.

There has also been some work that models a survival analysis task as a semi-supervised learning problem by employing a self-training strategy to predict event times from observed and censored data points. Both (Shi & Zhang, 2011; Hassanzadeh et al., 2016) treat the censored data points as unlabeled, thereby ignoring the time-to-event information that they contain. Liang et al. (2016) do use some information from the censored times, in the sense that they disregard data points for which the model predicts a value lower than the right-censored time points. They combine Cox proportional hazard (Cox) and accelerated failure time (AFT) model in a semi-supervised set-up to predict the treatment risk and the survival time of cancer patients. Regularization is used for gene selection, which is an essential task in cancer survival analysis. The authors found that many censored data points always violate the constraint that the predicted survival time should be higher than the censored time, restricting the full exploitation of the censored data. Therefore, in follow-up work (Chai et al., 2017), they embedded a self-paced learning mechanism in their framework to gradually introduce more complex data samples in the training process, leading to a more accurate estimation for the censored samples. An important dierence between our work and the discussed studies is that we consider situations where apart from fully observed and censored instances, we also have a third category, namely extra data points that are unlabeled. To our knowledge, this is the rst study to investigate the use of unlabeled instances in the survival context.

4 Methodology

In order to predict event times in the presence of observed, censored, and unlabeled instances, we propose three approaches.

The first approach is a straightforward application of a survival analysis method (in our case, RSF), in which we add the unlabeled set as censored instances, with the corresponding event time set to zero. We call the first approach random survival forest with unlabeled data (RSF+UD). Figure 2 depicts the block diagram of the first proposed pipeline.

In the second approach, we apply a semi-supervised learning approach called self-trained random survival forest (ST-RSF). In particular, we use the widely used self-training wrapper technique (Nigam & Ghani, 2000). Figure 3 shows the learning process in our self-training algorithm. This technique first builds an initial model using RSF over the labeled (in our case, observed and censored) data points and then iteratively augments the labeled set with the most confident predictions of survival time for the unlabeled dataset. In order to predict the survival time for each individual, we calculate the expected future lifetime (\(T_p\)) which at a given time \(t_0\) is the time remaining until the event, given that the event did not occur until \(t_0\) (Miller, 1981):

where S(t) is the survival function predicted by RSF.

The aim is to boost the performance of the model using unlabeled data through an iterative process. As mentioned in Section 2, the adoption of a self-training approach requires the instantiation of three aspects. First, in order to define the confidence in a prediction, we use the variance of predictions across trees. The lower this variance, the more the trees agree, and thus, the more confidence we have in the predicted value. Second, we set the number of instances to be added to the labeled set in each iteration to 10% of the size of the unlabeled set. We set the status of these newly added instances to observed and add their predicted value as their survival time. Finally, we need to define a global stopping criterion, to terminate the iterative procedure. For this purpose, in the first iteration, we take the first quartile of the variance values and use it as the maximally allowed variance in the whole procedure. Thus, we only augment unlabeled instances if their prediction variance is smaller than this value. If no instances can be added, the algorithm stops.

The details of this approach (ST-RSF) are described in Algorithm 1.

The third approach is an adaptation of the second one, where we exploit the information contained in the censored instances to replace the arbitrarily set stopping criterion of the second approach. In particular, we use the self-training wrapper technique as before, but build the initial model over only the observed data points and iteratively augment the training set with high confidence predictions from the censored and unlabeled dataset. In other words, we treat the censored examples as unlabeled, and the observed examples as labeled, and cast the problem as a pure semi-supervised learning problem. However, in this scenario, the censored instances are not totally unlabeled, since we know that their event time is greater than the censoring time (assuming right-censored instances). As a result, we aim to exploit this information of censored instances to introduce a smarter stopping criterion in the data augmentation process.

We denote this approach as a self-trained random survival forest corrected with censored times (ST-RSF+CCT). Figure 4 shows the learning process in this self-training algorithm.

Tolerance interval corresponding to two times the standard deviation. Figures a, b, and c represent situations where the condition \(T_{c}\le T_{p}+2\sigma\) is fulfilled, where \(\sigma\) is the standard deviation of the individual tree predictions, and hence, these situations are accepted by our method. In Figure d, the condition is violated

When deciding which unlabeled (including censored) instances to add to the augmentation process, similarly to the previous approach, we assess the confidence of the ensemble predictions based on the variance of the individual tree predictions. We sort the predictions based on minimum variance (note that the resulting list contains instances both from the censored and unlabeled dataset), but instead of picking the top 10%, we use the information in the censored instances to decide when to stop adding instances. More precisely, we know that the true event time must be greater than the censoring time for those instances. As a result, whenever we encounter a censored instance with a predicted time \(T_p\) smaller than its censoring time \(T_c\), we stop the augmentation for the current iteration. When an iteration yields zero augmented instances, the whole procedure is terminated. Preliminary experiments showed that the condition \(T_c \le T_p\) is often too strict and results in premature termination. This happens when the prediction variances are high, and thus adding or removing some trees from the forest could result in a substantially different \(T_p\) value and thus a different condition outcome. For this reason, we calculate the \(95\%\) tolerance interval around \(T_p\) and require \(T_c\) to be smaller than or inside the tolerance interval. In other words, we allow \(T_c\) to be larger than \(T_p\), but only if it is within its 95% tolerance interval (see Figure 5). For the instances (censored or unlabeled) that meet the criterion to be added to the training set, we set the status to observed with the survival time equal to \(T_{p}\). Note that this removes the need to use a machine learning method that is able to work with censored instances as the base learner. In this article, in order to be consistent with and provide a fair comparison to the previous approaches, we still use RSF in this approach. The details of this approach are described in Algorithm 2.

5 Experimental set-up

In this section, first, we start with a dataset description and then explain the process of creating an unlabeled dataset. In Section 5.3, we discuss the metric of evaluation, and finally, in Section 5.4, we explain the comparison methods and parameter instantiation.

5.1 Dataset description

We investigate the performance of our proposed approaches on real-life datasets from the survival package (Therneau, 2020) in R as well as high-dimensional datasets from [35, 36], and some from the R/Bioconductor package. To assess the effectiveness of the proposed approaches in high-dimensional scenarios (\(p \gg n\)), we used ten different gene expression datasets. These datasets typically contain the expression levels of thousands of genes across a small number of samples (\(< 300\)), giving information about demographic features, disease type, survival time, etc. For convenience, in datasets with more than 10000 gene expression features, we reduced the total number of features to the top 10000 features with the largest variance across all samples. Table 1 shows the description and characteristics of the used datasets. The prediction task for all datasets is survival time (time to death).

5.2 Unlabeled data generation

Since we are not aware of survival datasets that include unlabeled instances, we artificially remove the label of a subset of instances as follows (see Figure 6). First, we take the original data and construct five folds for cross-validation, in order to have a fair evaluation of our approach. Then, for each training set in the cross-validation (i.e., for each combination of four folds), we construct the unlabeled category. We split the training data into two sets called labeled data (Ldata) and unlabeled data (Udata). To have a fair and accurate evaluation, we make sure to have the same distribution for both sets relative to the status (being censored or observed). Then, we take Udata and make the instances unlabeled by removing their time and status values.

To improve the stability of the results, we repeat the cross-validation process 10 times and report the average results. We also vary the percentage of unlabeled instances from 5% to 75% of the original training set.

5.3 Performance evaluation

In survival analysis, instead of measuring the absolute survival time for each instance, a popular way to assess a model is to estimate the relative risk of an event occurring for different instances. The Harrell’s concordance index (C-index) (Harrell et al., 1982) is a common way to evaluate a model in survival analysis (Schmid et al., 2016). C-index can be interpreted as the fraction of all pairs of subjects whose predicted survival times are correctly ordered among all subjects that can actually be ordered. In other words, it is the probability of concordance between the predicted and the observed survival time. Two subjects’ survival times can be ordered not only if (1) both of them are observed but also if (2) the observed time of one is smaller than the censored survival time of the other (Steck et al., 2008). Consider a set of observation and prediction values for two different instances, \((y_{1},\hat{y} _{1})\) and \((y_{2},\hat{y} _{2})\), where \(y_{i}\) and \(\hat{y} _{i}\) represent the actual survival time and the predicted value, respectively. The concordance probability between these two instances can be computed as \(c= Pr(\hat{y} _{1}> \hat{y} _{2}|y_{1}>y_{2})\). In this paper, we compute the C-index for each test fold in the cross-validation process and return the average value over the 5 test folds.

5.4 Comparison methods and parameter instantiation

We applied five different methods: the three methods presented in this article, namely RSF+UD, ST-RSF, ST-RSF+CCT, and standard RSF and Lasso-Cox trained on the Ldata set only. The goal to perform RSF was to address the first research question (see Section 1), i.e., to investigate if adding an unlabeled set to the training phase would increase the performance of the model. The comparison of the three proposed approaches addresses the second research question. To avoid falling into a slightly biased random survival forest comparison, we have reported results of Cox regression with LASSO regularization (Lasso-Cox) as a baseline model. Lasso-Cox introduces the L1 norm penalty in the Cox log-likelihood function (Tibshirani, 1997). Since the majority of our used datasets are high-dimensional (\(p \gg n\)), we have employed Lasso-Cox due to its capability of handling high-dimensional datasets.

In order to estimate the generalization capacity of the models, we performed a 5-fold cross-validation on each dataset and estimate test accuracy, and repeated it 10 times to achieve reliable results. It is worthwhile to mention that the optimal tuning parameter (\(\lambda\)) in Lasso-Cox is chosen by nested cross-validation while no hyperparameter tuning has been employed for the other approaches. For RSF-based methods, the number of trees was set to 500, and the number of candidate variables considered in each tree node was set to p/3, where p is the number of variables.

6 Results and discussion

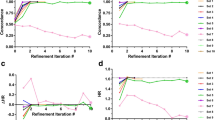

Figures 7 and 8 show the performance of the methods, for different percentages of labeled instances for twelve datasets from the fifteen. For each figure, we show six different curves. The blue and dark green curves represent the performance of RSF and Lasso-Cox using only labeled data (Ldata), respectively. The orange line (maximum) shows the performance of RSF using the complete training set as labeled data and is included as a reference to see how much performance we could gain by having access to all (observed or censored) information. The other three curves represent the proposed approaches.

The figures show that the performance of RSF can indeed be improved by adding unlabeled data to the training set. There are often big performance gains, especially with a lower percentage of labeled instances; however, this improvement does not hold for all datasets and all approaches.

From the figures, we can see that ST-RSF+CCT is the best approach overall, although it often starts in the second or even third position with very few labeled examples. This could be due to a lack of sufficient censored data to guide the augmentation process. Although in three datasets, it starts at a performance lower than RSF, on datasets with a very small number of samples (e.g., Veer, LungBeer, and GSE14764 all with less than 100 observations), ST-RSF+CCT is immediately much better than RSF. Note that 25% of labeled instances can be as low as 15 labeled examples for the Veer dataset, where ST-RSF+CCT outperforms RSF in the first part of the graph, reaching a C-index level of around 69%.

In addition, in several datasets with a high percentage of censored instances (e.g., DBCD, GSE14764, EMTAB, NHANES I, BRCA, and Veer, all with higher than 43% censoring rate), ST-RSF+CCT is performing as the best method in almost all percentages of labeled instances.

When comparing the curves for ST-RSF and ST-RSF+CCT, we see in the majority of datasets that either ST-RSF+CCT is on the winning hand over the entire curve, or ST-RSF is better in only some parts. In addition, in most cases, C-index values for ST-RSF fluctuate when changing the number of labeled instances; however, ST-RSF+CCT shows more steady behavior by feeding more labeled instances. Moreover, when comparing the range of C-indices (difference between min and max), ST-RSF varies more dramatically in most experiments; but overall, ST-RSF+CCT acts robustly. This could be due to the fact that for censored instances, ST-RSF+CCT compares the predicted survival time with the censoring time, which results in achieving more confident predictions.

While one would expect the largest gain from using unlabeled data in settings where very few labeled data are available, we see that also considerable improvements can be obtained at the other extreme, where most training instances are labeled and only a small portion, say 5 or 10%, of unlabeled instances are added. Especially the self-training approaches seem to achieve good results compared to RSF there, although the variability is high. This raises the question of how these techniques would compare to RSF in regular survival analysis tasks (i.e., without an unlabeled set) and can be an interesting direction for future work.

A related observation is that the proposed approaches (especially the semi-supervised ones) are able to beat the ‘maximum’ performance on several occasions. This demonstrates that they are able to select the most reliable instances and leave instances that can harm predictive performance (e.g., noisy instances) out of the training set.

When looking at the RSF+UD curve, we see that it often closely follows the RSF curve for a substantial part of the graph (e.g. for the datasets PBC, GSE32062, DBCD, NHANES I, and BRCA). This is due to the fact that the resulting ensembles are very similar. In fact, the trees generated by RSF are contained in the trees generated by RSF+UD, since the addition of censored data points with event time set to zero does not influence the log-rank splitting criterion, but only alters the size of the trees.

Since the visual inspection of the figures makes it difficult to draw strong conclusions, we also conducted a more aggregated comparison by comparing the areas under the plotted curves. Table 2 shows the means and standard deviations of the AUC rate on the datasets, as well as the average accuracy of each algorithm. As can be seen in Table 2, all our proposed methods provide better results than RSF for all datasets. More specifically, the third approach (ST-RSF+CCT) outperforms RSF, ST-RSF, and Lasso-Cox and manages to be statistically significantly better according to the Friedman-Nemenyi test (Figure 9) (Demšar, 2006)Footnote 1. The second best method, on average, is the RSF+UD variant, which also statistically significantly outperforms RSF and Lasso-Cox, and has a slight, non-significant, margin over ST-RSF. Furthermore, based on the reported results in Table 2, in all high-dimensional datasets, either ST-RSF+CCT or ST-RSF are the winning algorithms, meaning that both proposed algorithms are performing better in high-dimensional settings.

The use of self-training approaches may raise concerns related to overfitting. Since our algorithms have no tunable hyperparameters, they are not prone to the kind of overfitting that results from the hyperparameter tuning process in other algorithms. Moreover, random forests overall are known to be robust to overfitting (Breiman, 2001), due to the fact that by increasing the number of trees, the variance of the error gets reduced. Nevertheless, we have investigated the learning curve of the ST-RSF and ST-RSF+CCT algorithms on two datasets with 55% labeled instances (see Figure 10). We have chosen NSBCD and Veteran, as they have different censoring rates (67% versus 6% censoring rate). In Figure 10, the numbers indicated on the training curves show the number of augmented instances in each step. Due to the difference in the augmentation process, in each iteration, ST-RSF+CCT augments fewer instances compared to ST-RSF. For instance, for Veteran, from 42 unlabeled instances, after six iterations (when the stopping conditions hold), ST-RSF has augmented 29 instances, in comparison to 11 for ST-RSF+CCT. The difference in the number of augmentations for ST-RSF and ST-RSF+CCT confirms that the third approach is more conservative and hence leads to less overfitting.

Our findings demonstrate, first, that adding unlabeled data to the training set enhances the performance of the algorithm (cfr. our first research question), and second, that from the approaches that we have proposed, the self-training technique that uses the information in the censored data points to guide the data augmentation process performs best (cfr. our second research question). The concept of the idea that we proposed could be applied using other base learners and semi-supervised learning strategies, but it remains to be investigated whether the results carry over to other learners.

7 Conclusion

In this article, we have investigated the inclusion of unlabeled data points in a survival analysis task. More precisely, we have considered learning from data with three degrees of supervision: fully observed, partially observed (censored), and unobserved (unlabeled) data points. To our knowledge, this is a setting that has not been considered before. We have proposed three different approaches for this task. The first approach treats the unlabeled points as censored and applies a standard survival analysis technique. The second one applies a standard semi-supervised wrapper approach on top of a survival analysis task. The third one is an adaptation of the second, which treats the censored instances as unlabeled but manages to exploit the censored information to guide the semi-supervised approach. We have evaluated and compared the proposed approaches on fifteen real-world survival analysis datasets, including clinical and high-dimensional ones. Our results have shown that, first, adding unlabeled instances to the training set improves the predictive performance on an independent test set. Second, the third proposed approach generally outperforms the others due to its ability to integrate partial supervision information inside a semi-supervised learning approach.

Our findings can be quite helpful, especially in the healthcare area, where studies often require long-term follow-up of patients, which is costly and challenging. For instance, for the prediction of long-term outcomes after hospitalization, our results suggest that the study data could be complemented by additional routinely collected baseline data available in the hospital database management system, from patients matching the inclusion and exclusion criteria, but not included in the follow-up study. Moreover, based on the results, our proposed algorithms (ST-RSF+CCT and ST-RSF) perform better in high-dimensional settings (gene-expression datasets) which is a common dataset type in the healthcare area.

A limitation of our study is that our experiments assume that the unlabeled set is a random subset of the labeled dataset where the labels have been removed, leading to no trend or bias in the unlabeled set. When employed in a clinical setting, the unlabeled set should be carefully provided to not incorporate a biased set so that the procedure does not introduce noise through these additive iterations in the algorithm.

Availability of data and material

All of the datasets used in this article are publicly available and have been referenced

Code availability

The source code will be publicly available

Notes

In a critical distance diagram, those algorithms that are not joined by a line (i.e., their rankings differ more than a critical distance (CD)) can be regarded as statistically significantly different (Demšar, 2006).

References

Bair, E., & Tibshirani, R. (2004). Semi-supervised methods to predict patient survival from gene expression data. PLoS Biol, 2(4), e108.

Ballinger, B., Hsieh, J., Singh, A., Sohoni, N., Wang, J., Tison, G. H., et al. Deepheart: semi-supervised sequence learning for cardiovascular risk prediction. Thirty-Second AAAI Conference on Artificial Intelligence.

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32.

Chai, H., Li, Z.-N., Meng, D.-Y., Xia, L.-Y., & Liang, Y. (2017). A new semi-supervised learning model combined with cox and sp-aft models in cancer survival analysis. Scientific Reports, 7(1), 1–12.

Chapelle, O., Scholkopf, B., & Zien, A. (2009). Semi-supervised learning (chapelle, o. et al., eds.; 2006)[book reviews], IEEE Transactions on Neural Networks 20(3) 542–542.

Collobert, R., & Weston, J. (2008). A unified architecture for natural language processing: Deep neural networks with multitask learning, In Proceedings of the 25th international conference on Machine learning, pp. 160–167.

Cox, D.R. (1992). Regression models and life-tables. breakthroughs in statistics.

Demšar, J. (2006). Statistical comparisons of classifiers over multiple data sets, The. Journal of Machine Learning Research, 7, 1–30.

Faraggi, D., & Simon, R. (1995). A neural network model for survival data. Statistics in Medicine, 14(1), 73–82.

N.C. for Health Statistics, webpage, https://wwwn.cdc.gov/nchs/nhanes/nhanes1/.

Gordon, L., & Olshen, R. A. (1985). Tree-structured survival analysis. Cancer treatment reports, 69(10), 1065–1069.

Harrell, F. E., Califf, R. M., Pryor, D. B., Lee, K. L., & Rosati, R. A. (1982). Evaluating the yield of medical tests. Jama, 247(18), 2543–2546.

Hassanzadeh, H.R., Phan, J.H., & Wang, M.D. (2016). A multi-modal graph-based semi-supervised pipeline for predicting cancer survival, In 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), IEEE, pp. 184–189.

Hosmer Jr, D.W., Lemeshow, S., & May, S. (2011). Applied survival analysis: Regression modeling of time-to-event data, Vol. 618, John Wiley & Sons.

Hothorn, T., Lausen, B., Benner, A., & Radespiel-Tröger, M. (2004). Bagging survival trees. Statistics in Medicine, 23(1), 77–91.

Ishwaran, H., Kogalur, U. B., Blackstone, E. H., Lauer, M. S., et al. (2008). Random survival forests. The annals of applied statistics, 2(3), 841–860.

Kaplan, E. L., & Meier, P. (1958). Nonparametric estimation from incomplete observations. Journal of the American Statistical Association, 53(282), 457–481.

Khan, F.M., & Zubek, V.B. (2008). Support vector regression for censored data (svrc): a novel tool for survival analysis, In 2008 Eighth IEEE International Conference on Data Mining, IEEE, pp. 863–868.

Kleinbaum, D.G., & Klein, M. (2010). Survival analysis, Springer.

Li, Y., Wang, L., Wang, J., Ye, J., & Reddy, C.K. (2016). Transfer learning for survival analysis via efficient l2, 1-norm regularized cox regression, In 2016 IEEE 16th International Conference on Data Mining (ICDM), IEEE, pp. 231–240.

Li, M., & Zhou, Z.-H. (2005). Setred: Self-training with editing, In Pacific-Asia Conference on Knowledge Discovery and Data Mining, Springer, pp. 611–621.

Liang, Y., Chai, H., Liu, X.-Y., Xu, Z.-B., Zhang, H., & Leung, K.-S. (2016). Cancer survival analysis using semi-supervised learning method based on cox and aft models with l 1/2 regularization. BMC medical genomics, 9(1), 1–11.

Madani, A., Moradi, M., Karargyris, A., & Syeda-Mahmood, T. (2018). Semi-supervised learning with generative adversarial networks for chest x-ray classification with ability of data domain adaptation, In IEEE 15th International symposium on biomedical imaging (ISBI 2018). IEEE,2018, 1038–1042.

McClosky, D., Charniak, E., & Johnson, M. (2006). Effective self-training for parsing, In Proceedings of the Human Language Technology Conference of the NAACL, Main Conference, pp. 152–159.

Miao, F., Cai, Y.-P., Zhang, Y.-T., & Li, C.-Y. (2015). Is random survival forest an alternative to cox proportional model on predicting cardiovascular disease?, In 6TH European conference of the international federation for medical and biological engineering, Springer, pp. 740–743.

Miller, R.G. (1981). Survival Analysis, Wiley-Blackwell.

Nigam, K., & Ghani, R. (2000). Analyzing the effectiveness and applicability of co-training, In Proceedings of the ninth international conference on Information and knowledge management, pp. 86–93.

Rogers, T., Worden, K., Fuentes, R., Dervilis, N., Tygesen, U., & Cross, E. (2019). A bayesian non-parametric clustering approach for semi-supervised structural health monitoring. Mechanical Systems and Signal Processing, 119, 100–119.

Roli, F., & Marcialis, G.L. (2006). Semi-supervised pca-based face recognition using self-training, In Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR), Springer, pp. 560–568.

Schmid, M., Wright, M. N., & Ziegler, A. (2016). On the use of harrell’s c for clinical risk prediction via random survival forests. Expert Systems with Applications, 63, 450–459.

Segal, M.R. (1988). Regression trees for censored data, Biometrics pp. 35–47.

Shi, M., & Zhang, B. (2011). Semi-supervised learning improves gene expression-based prediction of cancer recurrence. Bioinformatics, 27(21), 3017–3023.

Steck, H., Krishnapuram, B., Dehing-Oberije, C., Lambin, P., & Raykar, V.C. (2008). On ranking in survival analysis: Bounds on the concordance index, In Advances in neural information processing systems, pp. 1209–1216.

Survlab, webpage, http://user.it.uu.se/kripe367/survlab/download.html (2010 Retrived December 7, 2014).

Therneau, T.M. (2020). A Package for Survival Analysis in R, r package version 3.2-7. https://CRAN.R-project.org/package=survival

Tibshirani, R. (1997). The lasso method for variable selection in the cox model. Statistics in Medicine, 16(4), 385–395.

VanEngelen, J. E., & Hoos, H. H. (2020). A survey on semi-supervised learning. Machine Learning, 109(2), 373–440.

Vinzamuri, B., Li, Y., & Reddy, C.K. (2014). Active learning based survival regression for censored data, In Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, pp. 241–250.

Wang, P., Li, Y., & Reddy, C. K. (2019). Machine learning for survival analysis: A survey. ACM Computing Surveys (CSUR), 51(6), 1–36.

Yarowsky, D. (1995). Unsupervised word sense disambiguation rivaling supervised methods, In 33rd annual meeting of the association for computational linguistics, pp. 189–196.

Zhu, X. J. (2005). Semi-supervised learning literature survey. Tech. rep.: University of Wisconsin-Madison Department of Computer Sciences.

Acknowledgements

This work was supported by KU Leuven Internal Funds (grant 3M180314). The authors also acknowledge the Flemish Government (AI Research Program).

Funding

No funding was received to assist with the preparation of this manuscript

Author information

Authors and Affiliations

Contributions

Fateme Nateghi Haredasht: Investigation, Methodology, Software, Writing, Original draft. Celine Vens: Supervision, Conceptualization, Methodology, Writing, Review and editing

Corresponding author

Ethics declarations

Conflicts of interest/Competing interests

The authors have no conflicts of interest to declare that are relevant to the content of this article

Ethics approval

Not applicable

Consent to participate

No tests, measurements, or experiments were performed on humans as part of this work

Consent for publication

The authors have agreed to submit it in its current form for consideration for publication in Journal

Additional information

Editor: Joao Gama.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nateghi Haredasht, F., Vens, C. Predicting Survival Outcomes in the Presence of Unlabeled Data. Mach Learn 111, 4139–4157 (2022). https://doi.org/10.1007/s10994-022-06257-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-022-06257-x