Abstract

For the regression task in a non-parametric setting, designing the objective function to be minimized by the learner is a critical task. In this paper we propose a principled method for constructing and minimizing robust losses, which are resilient to errant observations even under small samples. Existing proposals typically utilize very strong estimates of the true risk, but in doing so require a priori information that is not available in practice. As we abandon direct approximation of the risk, this lets us enjoy substantial gains in stability at a tolerable price in terms of bias, all while circumventing the computational issues of existing procedures. We analyze existence and convergence conditions, provide practical computational routines, and also show empirically that the proposed method realizes superior robustness over wide data classes with no prior knowledge assumptions.

1 Introduction

Accurate prediction of response \(y \in \mathbb {R}\) from novel pattern \(\varvec{x}\in \mathbb {R}^{d}\), based on an observed sample sequence of pattern-response pairs \((\varvec{z}_{1},\ldots ,\varvec{z}_{n}),\,\varvec{z}:=(\varvec{x},y)\), is one of the most fundamental of statistical estimation tasks. Under particular assumptions such as bounded losses or sub-Gaussian residuals, a rich theory has developed in recent decades (Kearns and Schapire 1994; Bartlett et al. 1996, 2012; Alon et al. 1997; Bartlett and Mendelson 2006; Srebro et al. 2010), with variants of empirical risk minimization (ERM) routines playing a central role. The principle underlying such procedures is the use of the sample mean to approximate the risk (expected loss), which in turn functions as a location parameter of the unknown loss distribution. When the loss is concentrated around this value, this approximation is accurate, and ERM procedures perform well with appealing optimality properties (Shalev-Shwartz et al. 2010).

Unfortunately, these assumptions are stringent, and in general, without a priori evidence of the contrary, our data cannot reasonably be expected to satisfy them. The fundamental problem manifests itself clearly in the simple setting of heavy-tailed real observations, in which the sub-optimality of the empirical mean is well-known (Catoni 2012). A simple solution when using ERM is to leverage slower-growing loss functions (e.g., \(\ell _{1}\) instead of \(\ell _{2}\)), but making this decision is inherently ad hoc and requires substantial prior information. Another option is model regularization (Tibshirani 1996; Bartlett et al. 2012; Hsu et al. 2014), potentially combined with quantile regression (Koenker and Bassett 1978; Takeuchi et al. 2006), though both methods introduce new parameters and we are faced with a difficult model selection problem (Cucker and Smale 2002), whose optimal solution is in practice often very sensitive to the empirical distribution. Put simply, in a non-parametric setting, one incurs a major risk of bias in the form of minimizing an impractical location parameter (e.g., the median under asymmetric losses), in order to ensure estimates are stable.

Considering these issues, it would be desirable to design an objective function which achieves the desired stability, but pays a smaller price in terms of bias, and therefore has minimal a priori requirements (Fig. 1). It is the objective of this paper to derive a regression algorithm which utilizes such a mechanism at tolerable computational cost. In Sect. 2 we review the technical literature, giving our contributions against this backdrop. Section 3 introduces the core routine and important ideas underlying its construction in an intuitive manner, with formal justification and convergence analysis following in Sect. 4. Numerical performance tests are given in Sect. 5, with key takeaways summarized in Sect. 6.

Fig. 1 A one-dimensional regression example (source in supplementary code). When additive noise is heavy-tailed (only the right half), estimating \({{\mathrm{\mathbf {E}}}}(y;\varvec{x})\) via least squares is difficult under small samples. On the other hand, estimating \({{\mathrm{med}}}(y;\varvec{x})\) often introduces an unacceptable bias. In this paper we investigate “robust objectives” which act as all-purpose parameters to be estimated under varied settings

2 Background and contributions

In this section we review the technical literature which is closely related to our work, and then within this context establish the main contributions made in this paper.

Related work Many tasks involve minimizing a function, say \(L(\cdot )\), as a function of candidate \(h \in \mathcal {H}\), which depends on the underlying distribution and is thus unknown. One line of work explicitly looks at refining the approximate objective function used. A key theme is to down-weight errant observations automatically, and to construct a new estimate \(\widehat{L}(h) \approx L(h)\) of the risk, re-coding the algorithm as \(\widehat{h}:={{\mathrm{arg\,min}}}_{h \in \mathcal {H}} \widehat{L}(h)\). The now-classic work of Rousseeuw and Yohai (1984) on S-estimators highlights important concepts in our work. They use the M-estimator of scale of the residual \(h(\varvec{x})-y\), written \(\widehat{s}(h)\), directly as objective function, setting \(\widehat{L}(h)=\widehat{s}(h)\). The idea is appealing and has (classical) robustness properties, though serious issues of stability and computational cost have been raised (Huber and Ronchetti 2009), and indeed even the fast modern routines are designed only for the rather special parametric setting where errant data can be discarded (Salibian-Barrera and Yohai 2006), which severely limits utility in our setting.

Re-weighting of extreme observations using M-estimators of the mean has been recently revisited by Catoni (2009), later revised and published as Catoni (2012). A multi-dimensional extension of this theory appears in Audibert and Catoni (2011), where they propose a function of the form

where \(\lambda > 0\) is a user-set parameter, \(l(h;\varvec{z})\) is a penalty assigned to h on the event of observing \(\varvec{z}\), and \(\psi _{C}\) is a sigmoidal truncation function

The refined loss is then \(\widehat{L}(h) = \sup \{d(h,h^{\prime }){:}\,h^{\prime } \in \mathcal {H}\}\), and is effectively a robust proxy of the “ridge risk” \({{\mathrm{\mathbf {E}}}}l(h;\varvec{z}) + \lambda \Vert h\Vert ^{2}\). Many novel results are given, but it is not established whether an algorithm realizing the desired performance actually exists or not. More precisely, they show that one requires \(\widehat{L}(\widehat{h}) = \inf _{h \in \mathcal {H}}\widehat{L}(h)+O(d/n)\) where d is model dimension. Unfortunately, construction of such a \(\widehat{h}\) is left as future work, though a sophisticated iterative attempt is proposed by the authors. Another natural extension is given by Brownlees et al. (2015), who directly apply these foundational results by using the Catoni class of M-estimators of risk, generalizing \(\psi _{C}\) above, to build \(\widehat{L}\), which amounts to minimizing the root of the sample mean of \(\{\psi _{C}(l(h;\varvec{z}_{i})-\theta )\}_{i=1}^{n}\) in \(\theta \). Novel bounds on excess risk are given, but this depends on an “optimal” scaling procedure which requires knowledge of the true variance. In addition, as this “robust loss” is defined implicitly, actually minimizing it is a non-trivial and expensive computational task.

Another interesting line of recent work revisits the merits of ensembles. A general approach is to take k subsets of the original data, \(D=\cup _{j=1}^{k} D_{j}\), constructing a candidate \(\widehat{h}_{(j)}\) on each, and finally merging \(\{\widehat{h}_{(1)},\ldots ,\widehat{h}_{(k)}\}\) to produce the final output. Well-known implementations of this strategy are bagging and boosting (Breiman 1996; Freund and Schapire 1997), which construct weak learners using bootstrap samples and average their outputs. One problem that this poses, however, is that when the data is contaminated or has errant observations, a non-trivial fraction of the weak learners may be quite poor, and the average learner will not behave as desired. To deal with such issues, robust aggregation techniques have been proposed in recent years, which randomly partition the data D (the \(D_{j}\) are disjoint) and aggregate in such a way as to ignore or down-weight errant learners. One lucid example is the work of Minsker (2015), who uses

\[\widehat{h} :={{\mathrm{arg\,min}}}_{h \in \mathcal {H}} \sum _{i=1}^{k}\Vert h-\widehat{h}_{(i)}\Vert ,\]

namely the geometric median of the candidates (in norm \(\Vert \cdot \Vert \)), where each \(\widehat{h}_{(i)}\) is the ERM estimate on the ith partition. The key notion here is that as long as most of the candidates are not overly poor, the aggregate will be strong. This same notion was explored by Lerasle and Oliveira (2011), where the “not overly poor” notion was made concrete with margin type conditions (section 5.1, p. 14). As well, the work of Hsu and Sabato (2014, 2016) generalizes the formulation of these two works, casting the aggregation task as a “robust distance approximation,” which is highly intuitive, is suggestive of algorithm design techniques, and yields tools applicable to many other problems (Devroye et al. 2015; Lugosi and Mendelson 2016). Once again comparing this with boosting, since bootstrapped samples lead to weak learners which are not independent, even a robust method of aggregating the weak learners would not imply the same (theoretical) guarantees that the robust aggregation methods above enjoy. Whether this distinction manifests itself in practice is an empirical question of interest. For our purposes, the major issue with robust aggregation is that when sample sizes are small, very few sub-samples can be taken. The key concern then is that when samples are large enough that k can be taken large, a less sophisticated method might already perform equally well on the full sample.
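As a rough illustration of the aggregation step just described, the following sketch computes a geometric median of a few candidate parameter vectors. It uses the classical Weiszfeld iteration, which is a swapped-in textbook routine and not necessarily the procedure used in the cited works; the candidate vectors and the function name `geometric_median` are hypothetical.

```python
import math

def geometric_median(points, max_iter=1000, tol=1e-12, eps=1e-15):
    """Weiszfeld iteration: repeatedly take an inverse-distance-weighted average."""
    dim = len(points[0])
    # Start from the coordinate-wise mean of the candidates.
    m = [sum(p[k] for p in points) / len(points) for k in range(dim)]
    for _ in range(max_iter):
        # eps guards against division by zero if m lands on a candidate.
        wts = [1.0 / max(math.dist(m, p), eps) for p in points]
        total = sum(wts)
        new = [sum(w * p[k] for w, p in zip(wts, points)) / total
               for k in range(dim)]
        shift = math.dist(new, m)
        m = new
        if shift < tol:
            break
    return m

# Hypothetical candidates: four reasonable weight vectors and one poor one.
candidates = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (100.0, 100.0)]
mean = [sum(p[k] for p in candidates) / len(candidates) for k in range(2)]
med = geometric_median(candidates)
print("mean:", mean, "geometric median:", med)
```

In this toy configuration the plain average is dragged far from the four clustered candidates, while the geometric median stays among them, which is the behaviour the "most candidates are not overly poor" argument relies on.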

Our contributions In this work, the key idea is to use an approximate minimization technique to efficiently make use of powerful but computationally unwieldy robust losses. We propose a novel routine which is rooted in theoretical principles, but makes enough concessions to be useful in practice. Our main contributions can be summarized as follows:

- A fast minimizer of robust losses for general regression tasks, which is easily implemented, inexpensive, and requires no knowledge of higher-order moments of the data.
- Analysis of conditions for existence and convergence of the core routine.
- Comprehensive empirical performance testing, illustrating dominant robustness in both simulated settings and on real-world benchmark data sets.

Taken together, the theoretical and empirical insights suggest that we have a routine which behaves as we would expect statistically, converges quickly in practice, and which achieves a superior balance between cost and performance in the non-parametric setting standard to machine learning problems.

Fig. 2 a Schematic of two estimators of \(L_{\mu }\) (their density in n-sample space), one unbiased but with high variance (turquoise), another biased but concentrated (purple). b Points along the black line are observations \(x_{1},\ldots ,x_{n} \in \mathbb {R}\) sampled from a heavy-tailed distribution (\(n=7\)). The three vertical rules are: true mean (thick grey), sample mean (turquoise), and the M-estimate of location (purple). Vertical ranges associated with each point denote weight sizes, computed by 1/n (pale turquoise) and \(\rho ^{\prime }(x_{i}-\gamma )/(x_{i}-\gamma )\) (pale purple). Down-weighting errant observations has a clear positive impact on estimates (Color figure online)

3 Fast minimization of robust objectives

In this section, we introduce the learning task of interest and give an intuitive derivation of our proposed algorithm. More formal analysis of the convergence properties of this procedure, from both statistical and computational viewpoints, is carried out in Sect. 4.

A general learning task Given “candidate” \(h \in \mathcal {H}\), member of a class of vectors or functions, and particular input/output instance \(\varvec{z}=(\varvec{x},y)\), we assign a penalty, \(l(h;\varvec{z}) \ge 0\) via loss function l—smaller is better—and evaluate the quality of h. Assuredly, doing this for a single observation \(\varvec{z}\) is insufficient; as this is a learning task, given incomplete prior information, we must choose h such that when we draw \(\varvec{z}\) randomly from an unknown probability distribution \(\mu \), representing unknown physical or social processes in our system of interest, the (random) quantity \(l(h;\varvec{z})\) is small. If the expected value \(L_{\mu }(h) :={{\mathrm{\mathbf {E}}}}_{\mu } l(h;\varvec{z})\), also called the risk, is small, then we expect the penalty \(l(h;\varvec{z})\) to be small on average. As such, a natural strategy is to choose a “best” candidate by the following program:

\[\min _{h \in \mathcal {H}} L_{\mu }(h).\]

At this point, we run into a problem: \(\mu \) is unknown, and thus \(L_{\mu }\) is unknown. All we have access to is n independent draws of \(\varvec{z}\), namely the sample \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\), and from this we must approximate the true objective, and then minimize this approximation as a proxy of \(L_{\mu }\).

Example 1

(Typical formulations) The pattern recognition problem has generic input space \(\mathcal {X}\) and discrete labels, namely \(\varvec{x}\in \mathcal {X}\) and \(y \in \{1,\ldots ,C\}\). Here the “zero-one” loss \(l(h;\varvec{z})=I\{h(\varvec{x}) \ne y\}\) makes for a natural penalty to classifier h. More generally, the regression task has response \(y \in \mathbb {R}\), and the classic metric for evaluating the quality of predictor \(h{:}\,\mathcal {X}\rightarrow \mathbb {R}\) is the quadratic loss \(l(h;\varvec{z})=(y-h(\varvec{x}))^{2}\).

Issues to overcome Intuitively, if our approximation, say \(\widehat{L}\), of \(L_{\mu }\), is not very accurate, then any minima of \(\widehat{L}\) will likely be useless. Thus the first item to deal with is making sure the approximation \(\widehat{L}\approx L_{\mu }\) is sharp. Perhaps the most typical approach is to set \(\widehat{L}(h)\) to the sample mean, \(\sum _{i=1}^{n}l(h;\varvec{z}_{i})/n\). In this case, the estimate is “unbiased” as \({{\mathrm{\mathbf {E}}}}\widehat{L}(h) = L_{\mu }(h)\), but unfortunately the variance can be highly undesirable (Catoni 2009, 2012). There is no need to constrain ourselves to unbiased estimators, as Fig. 2a illustrates; paying a small cost in terms of bias [allowing \({{\mathrm{\mathbf {E}}}}\widehat{L}(h) \ne L_{\mu }(h)\)] for much more stable output (large reduction in variance of \(\widehat{L}\)) is an appealing route.

One strategy to do this is as follows. Consider a “re-weighted” average approximation, namely \(\widehat{L}(h;\varvec{\alpha })\) given as

\[\widehat{L}(h;\varvec{\alpha }) :=\sum _{i=1}^{n}\alpha _{i}\,l(h;\varvec{z}_{i}),\]

where \(\varvec{\alpha }=(\alpha _{1},\ldots ,\alpha _{n})\) with \(0 \le \alpha _{i} \le 1\) are our weights. In the sample mean case, \(\alpha _{i}=1/n\) for all observation points. However, since n is finite, one often runs into “errant” points which, when given the same amount of weight as all other points, do not accurately reflect the true underlying distribution. Thus, down-weighting these errant points by assigning them small weights (\(\alpha _{i}\) near 0), and subsequently treating all the “typical” points as equals, should in principle allow us to overcome this issue. A mechanism which effectively does this for us is to use the M-estimate of location (Huber 1964); that is, to set

\[\widehat{L}(h;\rho ,s) \in {{\mathrm{arg\,min}}}_{\theta \in \mathbb {R}} \sum _{i=1}^{n}\rho \left( \frac{l(h;\varvec{z}_{i})-\theta }{s}\right) \qquad (1)\]

for each \(h \in \mathcal {H}\). Here \(\rho \) is a convex function which is effectively quadratic around the origin, but grows much more slowly (Fig. 3), and \(s>0\) is a scaling parameter. The re-weighting is implicit here, enacted via a “soft” truncation of errant points. Data points which are fairly close to the bulk of the sample are taken as-is (in the region where \(\rho \) is quadratic), while the impact of outlying points is attenuated (in the region where \(\rho \) is linear). We remark that such an estimator is assuredly biased in the sense that \({{\mathrm{\mathbf {E}}}}\widehat{L}(h;\rho ,s) \ne L_{\mu }(h)\) in most cases, but the desired impact is readily confirmed via simple tests, as in Fig. 2b.
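To make the implicit re-weighting concrete, here is a minimal sketch that computes an M-estimate of location for a small set of loss values and then recovers the weights it implicitly assigns. It assumes \(\rho (u)=\log \cosh (u)\) (so \(\rho ^{\prime }=\tanh \)), a fixed hypothetical scale `s`, and a simple fixed-point update started at the sample mean; these are illustrative choices and need not coincide with the exact routine used in the paper.

```python
import math

def psi(u):
    """Derivative of rho(u) = log(cosh(u)); bounded by 1 in absolute value."""
    return math.tanh(u)

def m_location(values, s, max_iter=500, tol=1e-12):
    """Fixed-point iteration for a root of sum_i psi((v_i - theta) / s) = 0."""
    n = len(values)
    theta = sum(values) / n  # start from the sample mean
    for _ in range(max_iter):
        step = (s / n) * sum(psi((v - theta) / s) for v in values)
        theta += step
        if abs(step) < tol:
            break
    return theta

def implied_weights(values, theta, s):
    """Weights psi(r)/r with r = (v - theta)/s, set to 1 when r is exactly 0."""
    weights = []
    for v in values:
        r = (v - theta) / s
        weights.append(1.0 if r == 0.0 else psi(r) / r)
    return weights

losses = [1.0, 2.0, 3.0, 4.0, 100.0]   # one errant value
s = 2.0                                 # hypothetical fixed scale
theta = m_location(losses, s)
alpha = implied_weights(losses, theta, s)
# At a stationary point, theta is the alpha-weighted average of the losses.
reweighted = sum(a * v for a, v in zip(alpha, losses)) / sum(alpha)
print("sample mean:", sum(losses) / len(losses))
print("M-estimate:", theta, "re-weighted average:", reweighted)
print("implied weights:", alpha)
```

The errant value receives a weight close to zero while the remaining points keep weights of comparable size, so the estimate sits near the bulk of the sample instead of being pulled towards the sample mean.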

Fig. 3 From left to right, the panels show the graphs of \(\rho (u), \rho ^{\prime }(u)\), and \(\rho (u)/u\) respectively. Colours denote different choices for \(\rho \), namely the \(\ell _{2}\) loss (turquoise), the \(\ell _{1}\) loss (green), and the Gudermannian function (purple) from Example 3 (Color figure online)

Following such a strategy, the algorithm to run is

\[\widehat{h} :={{\mathrm{arg\,min}}}_{h \in \mathcal {H}} \widehat{L}(h;\rho ,s).\]

Given knowledge of the true variance, the utility of this approach from a statistical perspective has been elegantly analyzed by Brownlees et al. (2015). That we do not know the true variance is one issue; another critical issue is that this new “robust loss” \(\widehat{L}(h;\rho ,s)\) is defined implicitly, and is thus computationally quite uncongenial. Derivatives are not available in closed form, and every call to \(\widehat{L}(h;\rho ,s)\) requires an iterative sub-routine, a major potential roadblock. In what follows, we propose a principled, practical solution to these problems.

Deriving a fast minimizer Here we pursue an efficient routine for approximately minimizing the robust loss \(\widehat{L}(h;\rho ,s)\), in the context of the general regression task (\(\varvec{z}=(\varvec{x},y)\), with \(y \in \mathbb {R}\)). A useful heuristic strategy follows from noting that given any candidate \(h \in \mathcal {H}\), and computing a central tendency metric \(\gamma \) (e.g., the median or average of \(\{l(h;\varvec{z}_{i})\}_{i=1}^{n}\)), since \(l \ge 0\), in order for \(\widehat{L}(h;\rho ,s)\) to be small, it is necessary that the deviations \(|l(h;\varvec{z}_{i})-\gamma |\) be small for most i. To see this, note that if most deviations are say larger than A, then there must be some points where \(\widehat{L}\) is far to the right, that is i where

With this condition in hand, note that the quantity

in fact directly measures these deviations. If most points are far away from \(\gamma \), then q(h) will be large; if most points are close to \(\gamma \), then q(h) will be small.

Our new task, then, is to minimize \(q(\cdot )\) in h. Fortunately, this can be done efficiently, using the re-weighting idea [see \(\widehat{L}(h;\varvec{\alpha })\)] discussed earlier. More precisely, let us set the weights to

For proper \(\rho \) (see Sect. 4), we can ensure \(0 \le \alpha _{i} \le 1\), and intuitively \(\alpha _{i}\) will be very small when \(l(h;\varvec{z}_{i})\) is inordinately far away from \(\gamma \). Solving a re-weighted least squares problem, namely

can typically be done very quickly, as Example 2 illustrates. What does this re-weighted least squares solution have to do with minimizing \(q(\cdot )\)? Fortunately, fixing any h, if we set update F as

then using classic results from the robust statistics literature (Huber 1981, Ch. 7), we have that

meaning the update from h to F(h) is guaranteed to move us “in the right direction.” That said, as our motivating condition was necessary, but not sufficient, the simplest approach is to check if this update actually monotonically improves the objective \(\widehat{L}(\cdot ;\rho ,s)\), namely:

The merits that this technique offers are clear: if we limit the number of iterations to T, then over \(t=1,2,\ldots ,T\) we need only compute \(\widehat{L}\) once per iteration, meaning that the sub-routine for acquiring \(\widehat{L}\) will only be called upon at most T times total. Initializing some \(h_{(0)}\) and following the procedure just given, with re-centred (via the term \(\gamma \)) and re-scaled (via the factor s) observations at each step, we get Algorithm 1 below.
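The overall loop can be sketched for the simplest possible case, a one-parameter linear model \(h(x)=wx\) under the quadratic loss. This is a simplified reading and not the authors' Algorithm 1: the weight formula \(\psi (r)/r\), the choice \(\rho =\log \cosh \), the root-mean-square scale, and the acceptance test evaluated at the current scale are all assumptions made for illustration, and the function names are hypothetical.

```python
import math

def psi(u):
    return math.tanh(u)  # derivative of rho(u) = log cosh(u), an assumed choice

def m_location(values, s, max_iter=500, tol=1e-12):
    """Robust loss estimate: root of sum_i psi((v_i - theta) / s) = 0."""
    n = len(values)
    theta = sum(values) / n
    for _ in range(max_iter):
        step = (s / n) * sum(psi((v - theta) / s) for v in values)
        theta += step
        if abs(step) < tol:
            break
    return theta

def sq_losses(w, xs, ys):
    return [(y - w * x) ** 2 for x, y in zip(xs, ys)]

def robust_slope(xs, ys, max_iter=50):
    # Initialise at the l2 empirical risk minimiser for h(x) = w * x.
    w = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    for _ in range(max_iter):
        losses = sq_losses(w, xs, ys)
        n = len(losses)
        gamma = sum(losses) / n                                    # pivot: mean loss
        s = math.sqrt(sum((l - gamma) ** 2 for l in losses) / n)   # assumed scale
        if s == 0.0:
            break
        # Weights psi(r)/r shrink towards 0 for losses far from the pivot.
        u = []
        for l in losses:
            r = (l - gamma) / s
            u.append(1.0 if r == 0.0 else psi(r) / r)
        # Weighted least squares on the current residuals (one-dimensional case).
        num = sum(ui * x * (y - w * x) for ui, x, y in zip(u, xs, ys))
        den = sum(ui * x * x for ui, x in zip(u, xs))
        candidate = w + num / den
        # Accept only if the robust loss (at the current scale) decreases.
        if m_location(sq_losses(candidate, xs, ys), s) < m_location(losses, s):
            w = candidate
        else:
            break
    return w

xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]
ys[-1] += 50.0                      # a single errant response
w_ols = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
w_rob = robust_slope(xs, ys)
print("least squares slope:", w_ols, "robust slope:", w_rob)
```

Each pass calls the robust loss sub-routine only for the acceptance check, which is the source of the computational saving discussed above; the re-weighted step itself is a closed-form computation.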

Example 2

(Update under linear model) In the special case of a linear model where \(h(\varvec{x}) = \varvec{w}^{T}\varvec{x}\) for some vector \(\varvec{w}\in \mathbb {R}^{d}\), inverting a \(d \times d\) matrix followed by some matrix multiplication is all that is required. Writing \(X = [\varvec{x}_{1},\ldots ,\varvec{x}_{n}]^{T}\) for the \(n \times d\) design matrix, \(\varvec{y}= (y_{1},\ldots ,y_{n})\), \(h(X)=(h(\varvec{x}_{1}),\ldots ,h(\varvec{x}_{n}))\), and \(U={{\mathrm{diag}}}(u_{1},\ldots ,u_{n})\), the solution is \((X^{T}UX)^{\dagger }X^{T}U(\varvec{y}-h(X))\), where \((\cdot )^{\dagger }\) denotes the Moore–Penrose inverse.\(\square \)
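The closed form in Example 2 can be read as the solution of weighted normal equations. The short derivation below is a sketch under the assumption that the re-weighted problem is a weighted least squares fit of a linear increment \(\varvec{v}\) to the current residuals \(\varvec{r} = \varvec{y}-h(X)\); the symbols \(\varvec{v}\) and \(\varvec{r}\) are introduced here purely for illustration.

```latex
\begin{align*}
\sum_{i=1}^{n} u_{i}\,\bigl(r_{i} - \boldsymbol{v}^{T}\boldsymbol{x}_{i}\bigr)^{2}
  &= (\boldsymbol{r} - X\boldsymbol{v})^{T}\, U\, (\boldsymbol{r} - X\boldsymbol{v}), \\
\nabla_{\boldsymbol{v}}
  &= -2\, X^{T} U (\boldsymbol{r} - X\boldsymbol{v}) = 0
  \;\Longrightarrow\; X^{T} U X\, \boldsymbol{v} = X^{T} U\, \boldsymbol{r}, \\
\boldsymbol{v}
  &= (X^{T} U X)^{\dagger} X^{T} U\, \boldsymbol{r}
   = (X^{T} U X)^{\dagger} X^{T} U\, (\boldsymbol{y} - h(X)).
\end{align*}
```

When \(X^{T}UX\) is singular, the Moore–Penrose inverse selects the minimum-norm solution of the normal equations.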

Example 3

(Choice of \(\rho \) function) Extreme examples of \(\rho \), the convex function used in (1), are the \(\ell _{2}\) and \(\ell _{1}\) losses, namely \(\rho (u)=u^{2}\) and \(\rho (u)=|u|\). These result in estimates of the sample mean and median respectively. A more balanced choice might be \(\rho (u)=\log \cosh (u)\). We can also define \(\rho \) in terms of its derivative; for example, one useful choice is

where \(\psi \) here is the function of Gudermann (Abramowitz and Stegun 1964, Ch. 4), though there are numerous alternatives (see Appendix A).\(\square \)
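The effect of the choice of \(\rho \) on the implied weights \(\psi (u)/u\) can be checked numerically. The sketch below uses the standard closed form of the Gudermannian function, \(2\arctan (\tanh (u/2))\), for \(\psi \); whether this matches the authors' exact parametrization is an assumption, and the function names are hypothetical.

```python
import math

def psi_l2(u):
    return 2.0 * u                                # rho(u) = u^2

def psi_logcosh(u):
    return math.tanh(u)                           # rho(u) = log cosh(u)

def psi_gud(u):
    return 2.0 * math.atan(math.tanh(u / 2.0))    # Gudermannian function

# Ratio psi(u)/u: constant for l2, decaying for the bounded-psi choices.
for u in (0.1, 1.0, 5.0, 50.0):
    print(u, psi_l2(u) / u, psi_logcosh(u) / u, psi_gud(u) / u)
```

For the quadratic loss the ratio never decays, so errant points keep full influence; for the two bounded choices the ratio is close to one near the origin and shrinks as \(|u|\) grows, which is the soft truncation used throughout.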

Actual computation of key quantities Here we discuss precisely how we carry out the various sub-routines required in Algorithm 1, namely the tasks of initialization, re-centring, re-scaling, and finding robust loss estimates. Initialization is the first and the easiest: \(h_{(0)}\) is initialized to the \(\ell _{2}\) empirical risk minimizer. When this value is optimal, it should be difficult to improve \(\widehat{L}\), and thus the algorithm should finish quickly; when it is highly sub-optimal, this should result in a large value for \(\widehat{L}(h_{(0)};\rho ,s)\), upon which subsequent steps of the algorithm seek to improve.

The “pivot” term \(\gamma \) is computed given a set of losses \(D=\{l(h;\varvec{z}_{i})\}_{i=1}^{n}\) evaluated at some h; in particular, the losses are computed for \(h_{(t-1)}\) at iteration t of Algorithm 1. This \(\gamma (D)\) is used to centre the data; terms \(l(h;\varvec{z}_{i})\) which are inordinately far away from \(\gamma (D)\), either above or below, are treated as errant. One natural choice that requires sorting the data is the median of D. A rough but fast choice is the arithmetic mean of D, which we have used throughout our tests.

As with \(\gamma \), we carry out the re-scaling of our observations using D, denoting a set of losses. While there exist theoretically optimal scaling strategies (Catoni 2012), these require knowledge of \({{\mathrm{var}}}_{\mu }l(h;\varvec{z})\) and setting of an additional confidence parameter. Since estimating second-order moments in order to estimate first-order moments is highly inefficient, we take the natural approach of using \(\gamma \) to centre the data, seeking a measure of how dispersed these losses are about this pivot, which will be our scale estimate. More concretely, for D induced by \(h \in \mathcal {H}\), we seek any s satisfying

\[\sum _{i=1}^{n}\chi \left( \frac{l(h;\varvec{z}_{i})-\gamma (D)}{s}\right) =0 \qquad (2)\]

as our choice for s(D). Here \(\chi \) is an even function, assumed to satisfy \(\chi (0)<0\) and \(\chi (u)>0\) as \(u\rightarrow \pm \infty \), ensuring that the scale is neither too big nor too small when compared with the deviations; see Fig. 4 and Hampel et al. (1986) for both theory and applications of this technique.

Fig. 4 In the left plot, we have the graphs of three \(\chi \) choices with common value \(\chi (0)\). From light to dark brown, \(\chi \) is respectively the absolute Geman-type, quadratic Geman-type, and Tukey function (see Example 4). In the right plot, we have randomly generated data D, and solved (2) using the three \(\chi \) functions in the left plot (colours correspond), with \(\gamma (D)\) as the sample mean (turquoise rule). Coloured horizontal rules in ± direction from \(\gamma (D)\) represent s(D) for each choice of \(\chi \) (Color figure online)

Our definition of s(D) in (2) is implicit, as indeed is the robust loss computation \(\widehat{L}\) in (1). We thus require iterative procedures to acquire sufficiently good approximations to these desired quantities. Updates taking a fixed-point form are typical for this sort of exercise, and we use the following two routines. Starting with the location estimate for h and given \(s>0\), we run

noting that this has the desired fixed point, namely a stationary point of the function in (1) to be minimized in \(\theta \). For the scale updates, centred by \(\gamma \in \mathbb {R}\), we run

which has a fixed point at the desired root sought in (2).

Intuitively, for h and D, we expect that as \(k \rightarrow \infty \)

\(\widehat{\theta }_{(k)} \rightarrow \widehat{L}(h;\rho ,s)\) and \(s_{(k)} \rightarrow s(D)\),

and indeed such properties can be both formally and empirically established (see Sect. 4.4).

Example 4

(Role of scale, choice of \(\chi \)) Take the simple choice of \(\chi (u) :=u^{2}-\beta \) for any fixed \(\beta >0\). If we have data set D with \(|D|=n\), and let \(\gamma (D)\) be the sample mean, then it immediately follows from (2) that \(s(D)^{2}=(n-1){{\mathrm{sd}}}(D)^{2}/(n\beta )\), that is \(s(D)={{\mathrm{sd}}}(D)\sqrt{(n-1)/(n\beta )}\), namely a re-scaled sample standard deviation. Countless alternatives exist; one simple and useful choice is the Geman type function

which originates in widely-cited image processing literature (Geman and Geman 1984; Geman and Reynolds 1992) and also appears in machine learning work (Yu et al. 2012). More classical choices include the bi-weight antiderivative of Tukey (see tuk in Appendix A), which has seen much use in robust statistics over the past half-century (Hampel et al. 1986, Section 2.6). \(\square \)
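The role of \(\chi \) in fixing the scale can be illustrated numerically. The sketch below finds \(s>0\) such that the sum of \(\chi ((a-\gamma )/s)\) over the data vanishes, using plain bisection on a log scale rather than a fixed-point update (a swapped-in technique chosen because its correctness is easy to check). For \(\chi (u)=u^{2}-\beta \) the root has the closed form \(\sqrt{\sum _{a \in D}(a-\gamma )^{2}/(n\beta )}\), which serves as a sanity check. The bounded function `chi_geman` is an assumed quadratic Geman-type form, and \(\beta =0.5\) is an arbitrary illustrative value.

```python
import math

def solve_scale(values, gamma, chi, lo=1e-8, hi=1e8, iters=200):
    """Bisection for s > 0 with sum_i chi((v_i - gamma) / s) = 0.

    Assumes chi is even and non-decreasing in |u|, so the sum is
    non-increasing in s, positive at s = lo and negative at s = hi.
    """
    def total(s):
        return sum(chi((v - gamma) / s) for v in values)

    for _ in range(iters):
        mid = math.sqrt(lo * hi)    # bisect on a log scale
        if total(mid) > 0.0:
            lo = mid                # scale too small relative to deviations
        else:
            hi = mid
    return math.sqrt(lo * hi)

beta = 0.5

def chi_quad(u):
    return u * u - beta

def chi_geman(u):
    return u * u / (1.0 + u * u) - beta   # assumed quadratic Geman-type form

data = [1.0, 2.0, 3.0, 4.0, 100.0]
gamma = sum(data) / len(data)
s_quad = solve_scale(data, gamma, chi_quad)
s_geman = solve_scale(data, gamma, chi_geman)
closed_form = math.sqrt(sum((v - gamma) ** 2 for v in data) / (len(data) * beta))
print("quadratic chi:", s_quad, "closed form:", closed_form)
print("Geman-type chi:", s_geman)
```

Because the bounded \(\chi \) caps the contribution of the errant point, the resulting scale is considerably smaller than the re-scaled standard deviation on this sample.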

Summary of fRLM algorithm To recapitulate, we have put forward a procedure for minimizing the robust loss \(\widehat{L}(h;\rho ,s)\) in h, by using a fast re-weighted least squares technique that is guaranteed to improve a quantity (q above) very closely related to the actual unwieldy objective \(\widehat{L}\). Using the iterative nature of this routine, we can perform the re-scaling and location estimates sequentially (rather than simultaneously), making for simple and fast updates. All together, this allows us to leverage the ability of \(\rho \) to truncate errant observations, while utilizing the fast approximate minimization program to alleviate issues with \(\widehat{L}\) being implicit, all without using moment oracles for scaling as in the analysis of Catoni (2012) and Brownlees et al. (2015), which are notable merits of our proposed approach.

This algorithm makes use of statistical quantities that are defined as the minimizer of a class of estimators. As discussed in our literature review of Sect. 2, the properties of learning algorithms that leverage these statistics have been analyzed by Brownlees et al. (2015). This does not, however, capture the properties of the resulting estimator itself: how does it behave as a function of sample size? Does it converge to a readily-interpreted parameter? We address these questions in the following section.

4 Analysis of convergence

After giving some additional notation in Sect. 4.1, we provide some fundamental existence results in Sect. 4.2, and then show that robust loss minimizers converge in a manner analogous to classical M-estimators in Sect. 4.3, using computationally congenial sub-routines examined in Sect. 4.4. All proofs are relegated to Appendix B.

4.1 Preliminaries

In addition to the notation of h, l, \(\varvec{z}\), and \(L_{\mu }\) from the previous sections, we specify that \(\mu \) is a probability on \(\mathbb {R}^{d+1}\), equipped with some appropriate \(\sigma \)-field, say the Borel sets \(\mathcal {B}_{d+1}\). Let \(\mu _{n}\) denote the empirical measure supported on the sample, namely \(\mu _{n}(B) :=n^{-1}\sum _{i=1}^{n}I\{\varvec{z}_{i} \in B\}\), \(B \in \mathcal {B}_{d+1}\). Expectation of vectors is naturally element-wise, namely \({{\mathrm{\mathbf {E}}}}_{\mu }(\varvec{x},y) = ({{\mathrm{\mathbf {E}}}}_{\mu }x_{1},\ldots ,{{\mathrm{\mathbf {E}}}}_{\mu }x_{d}, {{\mathrm{\mathbf {E}}}}_{\mu }y)\), and we shall use \({{\mathrm{var}}}_{\mu }\varvec{z}\) to denote the \((d+1) \times (d+1)\) covariance matrix of \(\varvec{z}\), and so forth. \({{\mathrm{\mathbf {P}}}}\) will be used to denote a generic probability measure, though in almost all cases it will be over the n-sized data sample, and thus correspond to the product measure \(\mu ^{n}\). Let \([k] :=\{1,\ldots ,k\}\) for integer k. We shall frequently use \(\widehat{h}\) to denote the output of an algorithm, typically as \(\widehat{h}_{n}(\varvec{x}) :=\widehat{h}(\varvec{x};\varvec{z}_{1},\ldots ,\varvec{z}_{n})\), a process which takes the n-sized data sample and returns a function \(\widehat{h}_{n} \in \mathcal {H}\) to be used for prediction. Since the underlying distribution \(\mu \) is unknown, the risk \(L_{\mu }\) can either be estimated formally, using inequalities that provide high-probability confidence intervals for this error over the random draw of the sample, or via controlled simulations where the performance metrics are computed over many independent trials.

Example 5

As a concrete case, the classical linear regression model with quadratic risk has \(\varvec{z}=(\varvec{x},y)\) with \(h(\varvec{x})=\varvec{w}^{T}\varvec{x}\) for some \(\varvec{w}\in \mathbb {R}^{d}\), and \(l(h;\varvec{z})=(y-\varvec{w}^{T}\varvec{x})^{2}\). When the model is correctly specified, i.e., when we have \(y = \varvec{w}_{0}^{T}\varvec{x}+\epsilon \) for an unknown \(\varvec{w}_{0} \in \mathbb {R}^{d}\), and noise \({{\mathrm{\mathbf {E}}}}_{\mu }\epsilon =0\), the loss takes on a convenient form, making additional results easy to obtain, though our general approach does not require such assumptions.

4.2 Existence of valid estimates

Generalization performance is completely captured by the distribution of \(l(h;\varvec{z})\). Unfortunately, inferring this distribution from a finite sample is exceedingly difficult, and so we estimate parameters of this distribution to gain insight into performance; the expected value \(L_{\mu }(h)\) is a case in point. In pursuit of a routine for estimating the risk, with low variance and controllable bias, the basic ideas in Sect. 3 seem intuitively promising. Here we show that following the strategy outlined, one can create a procedure which is valid in a statistical sense, under very weak assumptions.

Our starting point is to introduce new parameters, distinct from the risk, which have controllable bias, and can be approximated more reliably than the expected value, using a finite sample. The following definition specifies such a parameter class.

Definition 6

(General target parameters) For \(\rho {:}\,\mathbb {R}\rightarrow [0,\infty )\) and scale \(s>0\), define

where s may depend on h. We require that \(\rho \) be symmetric about 0, with \(\rho (0)=0\), and further that

For clean notation, normalize such that \(K=1/2\). If \(\rho \) is differentiable, denote \(\psi :=\rho ^{\prime }\). If twice-differentiable and \(\psi ^{\prime } > 0\), say that \(\rho \) specifies a robust objective, namely \(\theta ^{*}(\cdot )\).

Remark 7

The logic here is as follows: the mean \(L_{\mu }(h)\) can be considered a good target if the data are approximately symmetric, or if (regardless of symmetry) they are tightly concentrated about the mean. In both of these cases, we have \(\theta ^{*}(h) \approx L_{\mu }(h)\). To see this, if \(l(h;\varvec{z})\) is symmetric about some \(l_{0}\), that is to say for all \(\varepsilon > 0\),

it is sufficient to minimize

on \([l_{0},\infty )\), where \(\theta =l_{0}=L_{\mu }(h)\) is a solution. Thus in the symmetric case, we end up with \(\theta ^{*}(h) = L_{\mu }(h)\), irrespective of scaling and truncating mechanisms. Here “tightly concentrated” is relative, in the sense that

with high probability. Since we have required \(\rho (u) \sim u^{2}\), tight concentration would imply \(\theta ^{*}(h) \approx L_{\mu }(h)\). As for the linear growth requirement, \(\rho (u)=o(u^{2})\) as \(u \rightarrow \pm \infty \) is necessary if we are to reduce dependence on the tails, but making the much stronger requirement of \(\rho (u) = O(u)\) is very useful as it implies that \(\psi \) is bounded. Note that of the functions \(\rho \) given in Example 3, the \(\ell _{p}\) choices do not meet our criteria, but the Gudermannian and \(\log \cosh \) choices both satisfy all conditions. \(\square \)
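Both cases discussed in this remark can be checked on toy data. The sketch below computes the empirical M-estimate of location with \(\rho =\log \cosh \) for a sample that is symmetric about 5 and for a skewed sample, over several hypothetical scales `s`. The fixed-point routine and the data are illustrative assumptions.

```python
import math

def m_location(values, s, max_iter=500, tol=1e-12):
    """Root of sum_i tanh((v_i - theta) / s) = 0 via fixed-point iteration."""
    n = len(values)
    theta = sum(values) / n  # start from the sample mean
    for _ in range(max_iter):
        step = (s / n) * sum(math.tanh((v - theta) / s) for v in values)
        theta += step
        if abs(step) < tol:
            break
    return theta

sym = [2.0, 4.0, 5.0, 6.0, 8.0]        # symmetric about 5
skew = [1.0, 2.0, 3.0, 4.0, 100.0]     # sample mean is 22

# Symmetric case: the estimate equals the mean regardless of the scale.
sym_estimates = [m_location(sym, s) for s in (0.5, 2.0, 10.0)]

# Skewed case: a small scale truncates the errant point, while a scale that
# is large relative to the deviations recovers (approximately) the mean.
skew_small_scale = m_location(skew, 2.0)
skew_large_scale = m_location(skew, 1000.0)
print(sym_estimates, skew_small_scale, skew_large_scale)
```

With a scale much larger than all deviations, every point falls in the region where \(\rho \) is effectively quadratic, which is the sense in which "tightly concentrated" is relative to \(s\).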

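This \(\theta ^{*}(\cdot )\), a new parameter of the loss \(l(\cdot ;\varvec{z})\), can be readily interpreted as an alternative performance metric to the risk \(L_{\mu }(\cdot )\). Denote optimal performance in this metric on \(\mathcal {H}\) by

\[\theta ^{*}(\mathcal {H}) :=\inf _{h \in \mathcal {H}} \theta ^{*}(h),\]

and the empirical estimate of these parameters by

\[\widehat{\theta }(h) \in {{\mathrm{arg\,min}}}_{\theta \in \mathbb {R}} \, \frac{1}{n}\sum _{i=1}^{n} \rho \left( \frac{l(h;\varvec{z}_{i})-\theta }{s}\right) . \qquad (7)\]

Note that we call this the empirical estimate as we have simply replaced \(\mu \) by \(\mu _{n}\) in the definition of \(\theta ^{*}\) to derive \(\widehat{\theta }\). The procedure of Algorithm 1 outputs an approximation of

\[\widehat{h}_{n} \in {{\mathrm{arg\,min}}}_{h \in \mathcal {H}} \, \widehat{\theta }(h), \qquad (8)\]

which is none other than a minimizer of the robust loss \(\widehat{\theta }\), an empirical estimate of the alternative performance metric \(\theta ^{*}\).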

First, we show that these new “objectives” are indeed well-defined objective functions, which is important since our algorithm seeks to minimize them.

Lemma 8

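(Existence of parameter and its estimate) Let \(\rho \) specify a robust objective \(\theta ^{*}(h)\). This function is well-defined in h, in that for each \(h \in \mathcal {H}\), the value of \(\theta ^{*}(h)\) is uniquely determined, characterized by

\[{{\mathrm{\mathbf {E}}}}_{\mu } \, \psi \left( \frac{l(h;\varvec{z})-\theta ^{*}(h)}{s}\right) = 0.\]

Analogously, the empirical estimate is uniquely defined, and almost surely given by

\[{{\mathrm{\mathbf {E}}}}_{\mu _{n}} \psi \left( \frac{l(h;\varvec{z})-\widehat{\theta }(h)}{s}\right) = \frac{1}{n}\sum _{i=1}^{n} \psi \left( \frac{l(h;\varvec{z}_{i})-\widehat{\theta }(h)}{s}\right) = 0.\]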

With a well-defined objective function, next we consider the existence of the minimizer of this new objective. While measurability is by no means our chief concern here, for completeness we include a technical result useful for proving the existence of a valid minimizer of the proxy objective.

Lemma 9

Let \(\rho \) be even and continuously differentiable with \(\rho ^{\prime }\) non-decreasing on \(\mathbb {R}\). Let \(s_{h}{:}\,\mathbb {R}^{d+1} \rightarrow \mathbb {R}_{+}\) be measurable for all \(h \in \mathcal {H}\). For any \(n \in \mathbb {N}\), denote sequence space \(\mathcal {Z}:=(\mathbb {R}^{d+1})^{n}\). Then defining

we have that \(\widehat{\theta }\) is measurable as a function on \(\mathcal {H}\times \mathcal {Z}\).

This gives us a formal definition of \(\widehat{\theta }(h)\) which has the desired property specified by (7). It simply remains to show that we can always minimize this objective in h.

Theorem 10

(Existence of minimizer) Let \(h \mapsto s_{h}\) be continuous and \(s_{h} > 0\), \(h \in \mathcal {H}\). Using \(\widehat{\theta }\) from Lemma 9, define

For any \(\rho \) specifying a robust objective (Definition 6), and any sample \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\),

and there exists a random variable \(\widehat{h}_{n}\) such that \({{\mathrm{\mathbf {P}}}}\{\widehat{\theta }(\widehat{h}_{n}) = \widehat{\theta }(\mathcal {H})\} = 1\).

There are many potential methods for carrying out the scaling in practice. Here we verify that the simple method proposed in Sect. 3 does not disrupt the assurances above. First a definition.

Definition 11

(General-purpose scale) For random variable \(x \sim \nu \), introduce even function \(\chi {:}\,\mathbb {R}\rightarrow \mathbb {R}\), non-decreasing on \(\mathbb {R}_{+}\), which satisfies

Let \(\beta \ge 0\) be the value such that \(\chi (0)=-\beta \). With the help of \(\chi \) and pivot term \(\gamma _{\nu }\) which may depend on \(\nu \), define

With this definition in place, substituting \(\nu = \mu _{n}\) yields an empirical scale estimate

with \(\sum _{i=1}^{n}l(h;\varvec{z}_{i})/n\) a natural pivot value, though we certainly have more freedom in constructing \(\gamma _{\mu _{n}}(h)\), as the following result shows.

Proposition 12

(Validity of scaling mechanism) If \(\gamma _{\mu _{n}}(h) < \infty \) almost surely for all \(h \in \mathcal {H}\), and \(\chi \) (Definition 11) is increasing on \(\mathbb {R}_{+}\), then the minimizer \(\widehat{h}_{n}\) (8) as constructed in Theorem 10 satisfies

almost surely when scaling with \(s=s_{h}\) as in (14).

Note that \(\gamma _{\mu _{n}}(h)\) here corresponds directly to \(\gamma (D)\) in Algorithm 1, where \(D=\{l(h;\varvec{z}_{i})\}_{i=1}^{n}\). With basic facts related to existence and measurability in place, we proceed to look at some convergence properties of the estimators and computational procedures in Sects. 4.3 and 4.4.
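To make the scale mechanism concrete, here is a small sketch of an empirical scale estimate obtained as a root in \(s\) of \(\sum _{i}\chi ((l_{i}-\gamma )/s)=0\), with the sample mean as pivot. Everything specific here is an assumption for illustration: the choice \(\chi (u)=\tanh ^{2}(u)-\beta \) with \(\beta =1/2\) (even, increasing on \(\mathbb {R}_{+}\), with \(\chi (0)=-\beta \)), the name `m_scale`, and the use of bisection on \(\log s\) rather than the paper's update (4).

```python
import math
import random

BETA = 0.5  # hypothetical choice; chi(0) = -BETA


def chi(u):
    # Illustrative chi: even, increasing in |u|, bounded.
    return math.tanh(u) ** 2 - BETA


def m_scale(losses, pivot, n_steps=200):
    """Root in s > 0 of sum_i chi((l_i - pivot) / s) = 0, by bisection on log(s)."""
    resid = [l - pivot for l in losses]

    def g(log_s):
        s = math.exp(log_s)
        return sum(chi(r / s) for r in resid)

    lo, hi = -30.0, 30.0  # bracket in log(s); g(lo) > 0 > g(hi) for non-degenerate data
    for _ in range(n_steps):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:  # g decreases in s, so the root is at a larger scale
            lo = mid
        else:
            hi = mid
    return math.exp(0.5 * (lo + hi))


random.seed(2)
sample = [random.gauss(5.0, 2.0) for _ in range(200)]
pivot = sum(sample) / len(sample)  # sample mean as the pivot value
s_hat = m_scale(sample, pivot)
print(s_hat)
```

A quick sanity check on any such estimate is scale equivariance: multiplying the data and the pivot by a constant should multiply the estimate by the same constant.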

4.3 Statistical convergence

For some context, we start with a well-known consistency property of M-estimators, adapted to our setting.

Theorem 13

(Pointwise consistency under known scale) For any \(\rho \) specifying a robust objective, and any fixed \(h \in \mathcal {H}\) and \(s>0\), we have

Note that this strong consistency result is “pointwise” in the sense that the event of probability 1 is dependent on the choice of \(h \in \mathcal {H}\). Were we to take a different \(h^{\prime } \in \mathcal {H}\), while the probability would still be one, the events certainly need not coincide. This becomes troublesome since \(\widehat{h}_{n}\) will in all likelihood take a different h value for distinct samples \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\). Intuitively, we do expect that as n grows, the estimate \(\widehat{h}_{n}\) should get progressively better and in the limit we should have

Here we show that such a property does indeed hold, focusing on the case where \(\mathcal {H}\) is a linear model, though the assumptions on \(\varvec{x}\) and y are still completely general (agnostic). More precisely, we assume that \(\mathcal {H}\) is defined by a collection of real-valued functions \(\varphi _{1},\ldots ,\varphi _{k}\) on \(\mathbb {R}^{d}\), and a bounded parameter space \(\mathcal {W}\subset \mathbb {R}^{k}\). The model is thus of the form

\[\mathcal {H}:=\left\{ h(\varvec{x}) = \sum _{j=1}^{k} w_{j}\varphi _{j}(\varvec{x}) {:}\, \varvec{w}\in \mathcal {W}\right\} . \qquad (15)\]

Under this model, the class of parameters given in Definition 6 and the corresponding estimators (7) are such that convenient uniform convergence results are available using standard combinatorial arguments. First a general lemma of a technical nature.

Lemma 14

(Uniform strong convergence) Let \(\mathcal {H}\) satisfy (15), and \(\rho \) specify a robust objective (Definition 6). Denoting \(\varLambda :=\mathcal {H}\times \mathbb {R}\times \mathbb {R}_{+}, \lambda :=(h,u,s) \in \varLambda \), and

we have that

almost surely.

A corollary of this general result will be particularly useful.

Corollary 15

The robust objective minimizer \(\widehat{h}_{n}\) defined in (8), equipped with any scaling mechanism s depending on \(\widehat{h}_{n}\) (and thus potentially random), satisfies

almost surely.

These facts are sufficient for showing that a very natural analogue of the strong pointwise consistency of M-estimators (Theorem 13) holds in a uniform fashion for our robust objective minimizer \(\widehat{h}_{n}\).

Theorem 16

(Consistency analogue) Let \(\widehat{h}_{n}\) be determined by (8) equipped with any fixed scaling mechanism \(s_{h}{:}\,\mathbb {R}^{d+1} \rightarrow \mathbb {R}_{+}\). Let \(\rho \) specify a robust objective, with \(\rho ^{\prime }\) concave on \(\mathbb {R}_{+}\). If there exist constants \(s_{1},s_{2}, \epsilon \) such that

then it follows that

That is, \(\widehat{\theta }(\widehat{h}_{n})\) is a strongly consistent estimator of the optimal value \(\theta ^{*}(\mathcal {H})\).

With these rather natural statistical properties understood, we shift our focus to the behaviour of the computational routines used.

4.4 Computational convergence

As regards computational convergence, since Algorithm 1 is meant to be a fast approximation to a minimizer of \(\widehat{L}(\cdot )\) on \(\mathcal {H}\), we should not expect the \(\widehat{h}\) produced after \(t \rightarrow \infty \) iterations to actually converge to the true \(\widehat{h}_{n}\) in (8). What we should expect, however, is that the sub-routines (3) and (4), used to compute \(\widehat{L}_{(t)}\) and \(s(D_{(t)})\) for each t, should converge to the true values specified by (1) and (2) respectively. We show that this convergence holds.

Proposition 17

(Convergence of updates) Let \(\rho \) specify a robust objective (Definition 6). Fixing \(s>0\), and any initial value \(\widehat{\theta }_{(0)}\), the iterative update \((\widehat{\theta }_{(k)})\) specified in (3) satisfies

recalling that \(\widehat{\theta }(h) = \widehat{L}(h;\rho ,s)\) from Sect. 3. Similarly, for \(\chi \) as specified by Definition 11, under some additional regularity conditions on \(\chi \), (see proof) we have that for any initialization \(s_{(0)}>0\), the update \((s_{(k)})\) in (4) satisfies

Using \(\rho \) as in Definition 6 and \(\chi \) as in Proposition 12, note that the above convergence guarantees will not be ambiguous, since the location and scale estimates are uniquely determined.
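The uniqueness of the limit can be seen in a short experiment. The sketch below assumes a standard fixed-point update for the location, \(\theta \leftarrow \theta + (s/n)\sum _{i}\psi ((l_{i}-\theta )/s)\) with \(\psi = \tanh \); this form is an assumption made for illustration and may differ in detail from update (3). The helper names are hypothetical.

```python
import math
import random


def location_update(theta, losses, s):
    # One fixed-point step: theta + (s / n) * sum_i tanh((l_i - theta) / s).
    n = len(losses)
    return theta + (s / n) * sum(math.tanh((l - theta) / s) for l in losses)


def run_location(losses, s, theta0, max_iter=1000, tol=1e-12):
    """Iterate the update until successive values differ by at most tol."""
    theta = theta0
    for k in range(1, max_iter + 1):
        new = location_update(theta, losses, s)
        if abs(new - theta) <= tol:
            return new, k
        theta = new
    return theta, max_iter


random.seed(1)
# Skewed, positive "losses" (log-Normal), with the scale held fixed at s = 1.
losses = [math.exp(random.gauss(0.0, 1.0)) for _ in range(200)]
s = 1.0

# Very different initial values should all reach the same limit.
limits = [run_location(losses, s, theta0)[0] for theta0 in (-50.0, 0.0, 50.0)]
print(limits)
```

Since \(|\psi | \le 1\), each step moves the iterate by at most \(s\), so far-away initial values approach the solution at a steady pace before the contraction near the root takes over.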

Efficiency of iterative sub-routines As a complement to the formal convergence properties just examined, we conduct numerical tests in which we run (3) and (4) until they respectively compute the true \(\widehat{\theta }\) and s values up to a specified degree of precision. It is of practical importance to answer the following questions: Do the iterative routines reliably converge to the correct optimal value? How many iterations does this take on average? How does this depend on factors such as the data distribution, sample size, and our choice of \(\rho \) and \(\chi \)?

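To investigate these points, we carry out the following procedure. Generating \(x_{1},\ldots ,x_{n} \in \mathbb {R}\) from some distribution, denote

\[f_{1}(u) :=\frac{1}{n}\sum _{i=1}^{n}\rho \left( \frac{x_{i}-u}{s}\right) , \qquad f_{2}(s) :=\frac{1}{n}\sum _{i=1}^{n}\chi \left( \frac{x_{i}-\gamma }{s}\right) .\]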

The location task is to minimize \(f_{1}\) on \(\mathbb {R}\), and the scale task is to seek a root of \(f_{2}\) on \(\mathbb {R}_{+}\). Two choices of distribution were used. First is \(x \sim N(0,3)\), i.e., centred Normal random variables with variance of nine. The second is asymmetric and heavy-tailed, generated as \(\exp (x)\) where x is again N(0, 3); this is the log-Normal distribution. For \(f_{1}\), the s value is a parameter; this is set to the standard deviation of the \(x_{i}\). For \(f_{2}\), the \(\gamma \) value is a parameter; this is set to the sample mean of the \(x_{i}\). As for \(\rho \) and \(\chi \), we examine five choices of each, all defined in Appendix A. Initial values are \(\widehat{\theta }_{(0)}=n^{-1}\sum _{i=1}^{n}x_{i}\) and \(s_{(0)}={{\mathrm{sd}}}\{x_{i}\}_{i=1}^{n}\).

Fig. 5 Iterations required to reach \(\varepsilon \)-accurate estimates given a sample of size n. Left plots (blue bars) give average \(K_{\varepsilon }(\widehat{\theta })\), while the right plots (green bars) give average \(K_{\varepsilon }(s)\). Top row: Normal data. Bottom row: log-Normal data (Color figure online)

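In Fig. 5, we show the average iterations to converge, as a function of sample size n, computed as follows. The terminating iteration for these tasks, at accuracy level \(\varepsilon \), is defined

\[K_{\varepsilon }(\widehat{\theta }) :=\min \left\{ k {:}\, |\widehat{\theta }_{(k)} - \widehat{\theta }_{OR}| \le \varepsilon \right\} , \qquad K_{\varepsilon }(s) :=\min \left\{ k {:}\, |s_{(k)} - s_{OR}| \le \varepsilon \right\} ,\]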

where \(\widehat{\theta }_{OR}\) and \(s_{OR}\) are “oracle” values of the minimum/root of \(f_{1}/f_{2}\). These are obtained via uniroot in R (R Core Team 2016), an implementation of Brent’s univariate root finder (Brent 1973), recalling the \(\rho \) minimization can be cast as a root-finding problem (Lemma 8). These \(K_{\varepsilon }\) values are thus the number of iterations required; 100 independent trials are carried out, and the arithmetic mean of these values is taken. Updates \(\widehat{\theta }_{(k)}\) and \(s_{(k)}\) are precisely as in (3) and (4). Accuracy level is \(\varepsilon = 10^{-4}\) for all trials.
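The counting procedure just described can be sketched for the location task as follows. This is an illustration under stated assumptions, not the authors' code: \(\rho = \log \cosh \), a fixed-point update of the form \(\theta \leftarrow \theta + (s/n)\sum _{i}\tanh ((x_{i}-\theta )/s)\) standing in for (3), plain bisection standing in for Brent's method as the oracle, and \(n=50\). All function names are hypothetical.

```python
import math
import random
import statistics


def location_update(theta, xs, s):
    # Assumed fixed-point step with psi = tanh.
    return theta + (s / len(xs)) * sum(math.tanh((x - theta) / s) for x in xs)


def oracle_location(xs, s, n_steps=200):
    """Oracle root of the score equation, by bisection (stand-in for Brent)."""
    lo, hi = min(xs), max(xs)
    for _ in range(n_steps):
        mid = 0.5 * (lo + hi)
        if sum(math.tanh((x - mid) / s) for x in xs) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def iterations_to_accuracy(xs, s, theta0, oracle, eps=1e-4, max_iter=10000):
    """Smallest k with |theta_(k) - oracle| <= eps (capped at max_iter)."""
    theta, k = theta0, 0
    while abs(theta - oracle) > eps and k < max_iter:
        theta = location_update(theta, xs, s)
        k += 1
    return k


def average_k(sampler, n, trials=100, seed=0):
    rng = random.Random(seed)
    counts = []
    for _ in range(trials):
        xs = [sampler(rng) for _ in range(n)]
        s = statistics.stdev(xs)        # scale parameter: sample standard deviation
        theta0 = statistics.fmean(xs)   # initial value: sample mean
        counts.append(iterations_to_accuracy(xs, s, theta0, oracle_location(xs, s)))
    return statistics.fmean(counts)


# N(0, 3) means standard deviation 3 (variance nine); log-Normal is exp of the same.
k_normal = average_k(lambda rng: rng.gauss(0.0, 3.0), n=50)
k_lognormal = average_k(lambda rng: math.exp(rng.gauss(0.0, 3.0)), n=50)
print(k_normal, k_lognormal)
```

The printed averages depend on the assumed update and on the random seed, so they should not be read as a reproduction of Fig. 5.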

We have convergence at a high level of precision, requiring very few iterations, and this holds uniformly across the conditions observed. As such, the convergence of the routines is just as expected (Proposition 17), and the speed is encouraging. In general, convergence tends to speed up for larger n, and the relative difference in speed is very minor across distinct \(\rho \) choices, though slightly more pronounced in the case of \(\chi \), but even the slowest choice seems tolerable. Finally, location estimation is slightly slower in the Normal case than in the log-Normal case, while the opposite holds for scale estimation.

5 Numerical performance tests

We derived a new algorithm in Sect. 3, formally investigated statistical properties in Sects. 4.2 and 4.3, and computational properties in Sect. 4.4. Here we evaluate the actual performance of this algorithm against standard competitive algorithms in a variety of situations, including both tightly controlled numerical simulations and real-world benchmark data sets. We seek to answer the following questions.

1. How well does fRLM (Algorithm 1) generalize off-sample?

2. Fixing \(\rho \), can we still succeed under both light- and heavy-tailed noise?

3. How does performance depend on n and d?

Our experimental setup and competing algorithms used are described in Sects. 5.1 and 5.2, and results follow in Sects. 5.3 and 5.4 where we give concrete responses to all the questions posed above. All experimental parameters, as well as source code for all methods used, are included in the supplementary source code (see Note 1).

5.1 Experimental setup

Every experimental condition and trial has us generating n training observations, of the form \(y_{i} = \varvec{w}_{0}^{T}\varvec{x}_{i}+ \epsilon _{i}, i \in [n]\). Distinct experimental conditions are specified by the setting of (n, d) and \(\mu \). Inputs \(\varvec{x}\) are assumed to follow a d-dimensional isotropic Gaussian distribution, and thus to determine \(\mu \) is to specify the distribution of noise \(\epsilon \). In particular, we look at several families of distributions, and within each family look at 15 distinct noise levels. Each noise level is simply a particular parameter setting, designed such that \({{\mathrm{sd}}}_{\mu }(\epsilon )\) monotonically increases over the range 0.3–20.0, approximately linearly over the levels.

To ensure a wide range of signal/noise ratios is spanned, for each trial, \(\varvec{w}_{0} \in \mathbb {R}^{d}\) is randomly generated as follows. Defining the sequence \(w_{k} :=\pi /4 + (-1)^{k-1}(k-1)\pi /8, k=1,2,\ldots \) and uniformly sampling \(i_{1},\ldots ,i_{d} \in [d_{0}]\) with \(d_{0}=500\), we set \(\varvec{w}_{0} = (w_{i_{1}},\ldots ,w_{i_{d}})\). As such, given our control of noise standard deviation, and noting that the signal to noise ratio in this setting is computed as \(\text {SN}_{\mu } = \Vert \varvec{w}_{0}\Vert _{2}^{2}/{{\mathrm{var}}}_{\mu }(\epsilon )\), the ratio ranges between \(0.2 \le \text {SN}_{\mu } \le 1460.6\).
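The construction of \(\varvec{w}_{0}\) and the signal-to-noise ratio can be written out directly from the formulas above. In this sketch the function names are hypothetical, and sampling the indices with replacement is an assumption, since the text only says they are drawn uniformly from \([d_{0}]\).

```python
import math
import random


def w_seq(k):
    # w_k = pi/4 + (-1)^(k-1) * (k-1) * pi/8, for k = 1, 2, ...
    return math.pi / 4 + (-1) ** (k - 1) * (k - 1) * math.pi / 8


def draw_w0(d, d0=500, rng=random):
    # Indices i_1, ..., i_d uniform on {1, ..., d0}; with replacement (assumption).
    idx = [rng.randint(1, d0) for _ in range(d)]
    return [w_seq(i) for i in idx]


def signal_to_noise(w0, noise_var):
    # SN = ||w0||_2^2 / var(eps)
    return sum(w * w for w in w0) / noise_var


rng = random.Random(0)
w0 = draw_w0(d=5, rng=rng)
print(w0, signal_to_noise(w0, noise_var=0.3 ** 2))
```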

Regarding the noise distribution families, the tests described above were run for 27 different families, but as space is limited, here we provide results for four representative families: log-Normal (denoted lnorm in figures), Normal (norm), Pareto (pareto), and Weibull (weibull). Even with just these four, we capture both symmetric and asymmetric families, sub-Gaussian families, as well as heavy-tailed families both with and without finite higher-order moments.

Our chief performance indicator is prediction error, computed as follows. For each condition and each trial, an independent test set of m observations is generated identically to the corresponding n-sized training set. All competing methods use common sample sets for training and are evaluated on the same test data, for all conditions/trials. For each method, in the kth trial, some estimate \(\widehat{\varvec{w}}\) is determined. To approximate the \(\ell _{2}\)-risk, compute root mean squared error \(e_{k}(\widehat{\varvec{w}}) :=(m^{-1}\sum _{i=1}^{m}(\widehat{\varvec{w}}^{T}\varvec{x}_{k,i}-y_{k,i})^{2})^{1/2}\), and output prediction error as the average of normalized errors \(e_{k}(\widehat{\varvec{w}}(k)) - e_{k}(\varvec{w}_{0}(k))\) taken over all trials. While n and d values vary, in all experiments the number of trials is fixed at 250, and test size \(m=1000\).
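The performance indicator above amounts to an average of excess root mean squared errors. A minimal sketch follows, using plain lists in place of arrays; the function names and the tiny two-point test set are hypothetical.

```python
import math


def rmse(w, X, y):
    # Root mean squared error of the linear predictor w on test pairs (X, y).
    sq = sum((sum(wj * xj for wj, xj in zip(w, x)) - yi) ** 2 for x, yi in zip(X, y))
    return math.sqrt(sq / len(y))


def prediction_error(trials):
    """trials: list of (w_hat, w0, X_test, y_test); returns the average excess RMSE."""
    excess = [rmse(w_hat, X, y) - rmse(w0, X, y) for w_hat, w0, X, y in trials]
    return sum(excess) / len(excess)


# Toy check: noiseless responses generated by w0, and an estimate that misses one weight.
X_test = [[1.0, 0.0], [0.0, 1.0]]
w_true = [1.0, 2.0]
y_test = [1.0, 2.0]
w_est = [1.0, 0.0]
err = prediction_error([(w_est, w_true, X_test, y_test)])
print(err)
```

Subtracting \(e_{k}(\varvec{w}_{0}(k))\) removes the part of the test error due to noise alone, so values near zero indicate an estimate that predicts about as well as the true weights.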

5.2 Competing methods

Benchmark routines used in these experiments are as follows. Ordinary least squares, denoted ols, and least absolute deviations, denoted lad, represent classic methods. In addition, we look at three very modern alternatives, namely three routines taken directly from the reference papers of Minsker (2015) (geomed), Brownlees et al. (2015) (bjl), and Hsu and Sabato (2016) (hs). The hs routine used here is a faithful R translation of the MATLAB code published by the authors. Our implementation of geomed uses the geometric median algorithm of Vardi and Zhang (2000, Eqn. 2.6), and all partitioning conditions as given in the original paper are satisfied. Regarding bjl, scaling is done using a sample-based estimate of the true variance bound used in their analysis, with optimization carried out using the Nelder–Mead gradient-free method implemented in the R function optim.

For our fRLM (Algorithm 1, Sect. 3), we tried several different choices of \(\rho \) and \(\chi \), including those in Appendix A, and overall trends were almost identical. Thus as a representative, we use the Gudermannian for \(\rho \) and \(\chi (u)={{\mathrm{sign}}}(|u|-1)\) as a particularly simple and illustrative example implementation. Estimates of location and scale were carried out by (3) and (4).

5.3 Test results: simulation

Here we assemble the results of distinct experiments which highlight different facets of the statistical procedures being evaluated.

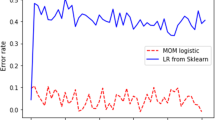

Performance over noise levels Figure 6 shows how predictive performance deteriorates as the noise magnitude (described in Sect. 5.1) grows larger, under a fixed (n, d) setting. We see that our method closely follows the performance of ols when the latter is strong (the Normal case), but critically remains stable under settings in which ols deteriorates rapidly (all other cases). Our method, much like the other robust methods, incurs a bias by designing objective functions using estimators for target parameters other than the true risk. It is clear, however, that the bias in the case of our method is orders of magnitude smaller than that of competing routines, suggesting that the proposed procedure for minimizing a robust loss is effective. Note that bjl needs an off-the-shelf non-linear optimizer and directly requires variance estimates; our routine circumvents these steps, and is seen to be better for it.

Impact of sample size (n grows, d fixed) In Fig. 7 we look at prediction error, at the middle noise level, for different settings of n under a fixed d. We have fixed \(d=5\) and the sample size ranges between 12 and 122. Once again we see that in the Normal case where ols is optimal, our routine closely mimics its behaviour and converges in the same way. On the other hand, for the heavier-tailed settings, we find that the performance is once again extremely strong, with far better performance under small sample sizes, and a uniformly dominant rate of convergence as n gets large.

Impact of dimension The role played by model dimension is also of interest, and can highlight weaknesses in optimization routines that do not appear when only a few parameters are being determined. Such issues are captured most effectively by keeping the d / n ratio fixed and increasing the model dimension.

Prediction error results are given in Fig. 8, at the middle noise level, for different model dimensions ranging over \(5 \le d \le 140\). The sample size is determined such that \(d/n = 1/6\) holds; this is a rather generous size, and thus where we observe deterioration in performance, we infer a lack of utility in more complex models, even when a sample of sufficient size is available. We see clearly that most procedures considered see a performance drop as model dimension grows, whereas our routine performs exactly the same, regardless of dimension size. This is a particularly appealing result illustrating the scalability of our fRLM in “bigger” tasks.

5.4 Test results: real-world data

We have seen extremely strong performance in the simulated situation; let us see how this extends to a number of real-world domains. The algorithms run are precisely the same as in the simulated cases; only the data is new. We have selected four data sets from a database of benchmark data sets for testing regression algorithms (see Note 2). Our choices were such that the data come from a wide class of domains. For reference, the response variable in bpres is blood pressure, in psych is psychiatric assessment scores, in rent is cost to rent land, and in oct is petrol octane rating. All the data sets used here are included with a description in the online code repository referred to at the start of this section. The dimensionality and sample size naturally differ across data sets. Our protocol for evaluation is as follows. If the full data set is \(\{\varvec{z}_{i}\}_{i=1}^{N}\), then we take \(n = \lceil 0.3N \rceil \) for training, and \(m=N-n\) observations for testing. We carry out 100 trials, each time randomly choosing the train/test indices, and averaging over these trials to get prediction error.
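The evaluation protocol above can be sketched as a small harness. The names `split_indices` and `evaluate`, and the callables `fit` and `error`, are hypothetical placeholders for whichever learner and error measure are being tested; the toy usage at the end is not drawn from the paper's data.

```python
import random


def split_indices(N, rng):
    """Random split with n = ceil(0.3 * N) training and N - n test indices."""
    n = -(-3 * N // 10)  # ceil(0.3 * N) in exact integer arithmetic
    perm = list(range(N))
    rng.shuffle(perm)
    return perm[:n], perm[n:]


def evaluate(data, fit, error, trials=100, seed=0):
    """Average test error over repeated random train/test splits."""
    rng = random.Random(seed)
    errs = []
    for _ in range(trials):
        train_idx, test_idx = split_indices(len(data), rng)
        model = fit([data[i] for i in train_idx])
        errs.append(error(model, [data[i] for i in test_idx]))
    return sum(errs) / len(errs)


# Toy usage: "fit" a mean on the training part, report the gap to the test mean.
toy = [float(v) for v in range(20)]
avg_err = evaluate(
    toy,
    fit=lambda train: sum(train) / len(train),
    error=lambda model, test: abs(model - sum(test) / len(test)),
)
print(avg_err)
```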

Results are given in Fig. 9. While the data sets come from wildly varying domains (economics, manufacturing of petroleum products, human physiology and psychology), it is apparent that the results here very closely parallel those of our simulations, which again is the kind of performance that the theoretical exposition of Sects. 3 and 4 would have us expect. Strong performance is achieved with no a priori information, and with no fine-tuning whatsoever. Exactly the same routine is deployed in all problems. Of particular importance is that we are able to beat or match the bjl routine under all settings here as well. Both of these routines attempt to minimize similar robust losses (defined implicitly); however, our routine does so at a fraction of the cost, since we have no need to appeal to general-purpose non-linear optimizers. This is a very promising result.

6 Concluding remarks

In this work, we have introduced and explored a novel approach to the regression problem, using robust loss estimates and an efficient routine for minimizing these estimates without requiring prior knowledge of the underlying distribution. In addition to theoretical analysis of the fundamental properties of the algorithm being used, we showed through comprehensive empirical testing that the proposed technique indeed has extremely desirable robustness properties. In a wide variety of problem settings, our routine was shown to uniformly outperform well-known competitors both classical and modern, with cost requirements that are tolerable, suggesting a strong general approach for regression in the non-parametric setting.

Looking ahead, there are a number of interesting lines of work to be taken up. Extending this work to unsupervised learning problems is an immediate goal. Beyond this, a more careful look at the optimality of different algorithms from a cost/performance standpoint would assuredly be of interest. When is it more profitable (under some metric) to use “balanced” methods such as those of Minsker (2015), Brownlees et al. (2015) and Hsu and Sabato (2016), or ours, rather than committing to one of two extremes, say OLS or LAD? The former perform very well, but require extra computation. Characterizing such situations in terms of the underlying data distribution is both technically and conceptually interesting. Clear tradeoffs between formal assurances and extra computational cost could shed new light on precisely where traditional ERM algorithms and close variants fail to be economical.

Notes

1. All materials available at https://github.com/feedbackward/rtm_code.

2. Compiled online by J. Burkardt at http://people.sc.fsu.edu/~jburkardt/.

References

Abramowitz, M., & Stegun, I. A. (1964). Handbook of mathematical functions with formulas, graphs, and mathematical tables, National Bureau of Standards Applied Mathematics Series (Vol. 55). US National Bureau of Standards.

Alon, N., Ben-David, S., Cesa-Bianchi, N., & Haussler, D. (1997). Scale-sensitive dimensions, uniform convergence, and learnability. Journal of the ACM, 44(4), 615–631.

Ash, R. B., & Doléans-Dade, C. A. (2000). Probability and measure theory (2nd ed.). New York: Academic Press.

Audibert, J. Y., & Catoni, O. (2011). Robust linear least squares regression. Annals of Statistics, 39(5), 2766–2794.

Bartlett, P. L., Long, P. M., & Williamson, R. C. (1996). Fat-shattering and the learnability of real-valued functions. Journal of Computer and System Sciences, 52(3), 434–452.

Bartlett, P. L., & Mendelson, S. (2006). Empirical minimization. Probability Theory and Related Fields, 135(3), 311–334.

Bartlett, P. L., Mendelson, S., & Neeman, J. (2012). \(\ell _{1}\)-regularized linear regression: Persistence and oracle inequalities. Probability Theory and Related Fields, 154(1–2), 193–224.

Breiman, L. (1968). Probability. Reading, MA: Addison-Wesley.

Breiman, L. (1996). Bagging predictors. Machine Learning, 24(2), 123–140.

Brent, R. P. (1973). Algorithms for minimization without derivatives. Englewood Cliffs, NJ: Prentice-Hall.

Brownlees, C., Joly, E., & Lugosi, G. (2015). Empirical risk minimization for heavy-tailed losses. Annals of Statistics, 43(6), 2507–2536.

Catoni, O. (2009). High confidence estimates of the mean of heavy-tailed real random variables. arXiv preprint arXiv:0909.5366.

Catoni, O. (2012). Challenging the empirical mean and empirical variance: A deviation study. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, 48(4), 1148–1185.

Cucker, F., & Smale, S. (2002). On the mathematical foundations of learning. Bulletin (New Series) of the American Mathematical Society, 39(1), 1–49.

Dellacherie, C., & Meyer, P. A. (1978). Probabilities and potential, North-Holland Mathematics Studies (Vol. 29). Amsterdam: North-Holland.

Devroye, L., Lerasle, M., Lugosi, G., & Oliveira, R. I. (2015). Sub-Gaussian mean estimators. arXiv preprint arXiv:1509.05845.

Dudley, R. M. (1978). Central limit theorems for empirical measures. Annals of Probability, 6(6), 899–929.

Dudley, R. M. (2014). Uniform central limit theorems (2nd ed.). Cambridge, MA: Cambridge University Press.

Freund, Y., & Schapire, R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences, 55(1), 119–139.

Geman, D., & Reynolds, G. (1992). Constrained restoration and the recovery of discontinuities. IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(3), 367–383.

Geman, S., & Geman, D. (1984). Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 6, 721–741.

Hampel, F. R., Ronchetti, E. M., Rousseeuw, P. J., & Stahel, W. A. (1986). Robust statistics: The approach based on influence functions. New York: Wiley.

Hsu, D., & Sabato, S. (2014). Heavy-tailed regression with a generalized median-of-means. In Proceedings of the 31st international conference on machine learning (ICML2014) (pp. 37–45).

Hsu, D., & Sabato, S. (2016). Loss minimization and parameter estimation with heavy tails. Journal of Machine Learning Research, 17(18), 1–40.

Hsu, D., Kakade, S. M., & Zhang, T. (2014). Random design analysis of ridge regression. Foundations of Computational Mathematics, 14(3), 569–600.

Huber, P. J. (1964). Robust estimation of a location parameter. Annals of Mathematical Statistics, 35(1), 73–101.

Huber, P. J. (1981). Robust statistics (1st ed.). New York: Wiley.

Huber, P. J., & Ronchetti, E. M. (2009). Robust statistics (2nd ed.). New York: Wiley.

Kearns, M. J., & Schapire, R. E. (1994). Efficient distribution-free learning of probabilistic concepts. Journal of Computer and System Sciences, 48, 464–497.

Koenker, R., & Bassett, G. (1978). Regression quantiles. Econometrica, 46(1), 33–50.

Lerasle, M., & Oliveira, R. I. (2011). Robust empirical mean estimators. arXiv preprint arXiv:1112.3914.

Lugosi, G., & Mendelson, S. (2016). Risk minimization by median-of-means tournaments. arXiv preprint arXiv:1608.00757.

Minsker, S. (2015). Geometric median and robust estimation in Banach spaces. Bernoulli, 21(4), 2308–2335.

Pollard, D. (1981). Limit theorems for empirical processes. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete, 57(2), 181–195.

Pollard, D. (1984). Convergence of stochastic processes. Berlin: Springer.

R Core Team. (2016). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. https://www.R-project.org/

Rousseeuw, P., & Yohai, V. (1984). Robust regression by means of S-estimators. In Robust and nonlinear time series analysis, Lecture Notes in Statistics (Vol. 26, pp. 256–272). Berlin: Springer.

Salibian-Barrera, M., & Yohai, V. J. (2006). A fast algorithm for S-regression estimates. Journal of Computational and Graphical Statistics, 15(2), 1–14.

Shalev-Shwartz, S., Shamir, O., Srebro, N., & Sridharan, K. (2010). Learnability, stability and uniform convergence. Journal of Machine Learning Research, 11, 2635–2670.

Srebro, N., Sridharan, K., & Tewari, A. (2010). Smoothness, low noise and fast rates. In J. D. Lafferty, C. K. I. Williams, J. Shawe-Taylor, R. S. Zemel, & A. Culotta (Eds.), Advances in neural information processing systems (Vol. 23, pp. 2199–2207).

Steele, J. M. (1975). Combinatorial entropy and uniform limit laws, Ph.D thesis. Stanford University.

Takeuchi, I., Le, Q. V., Sears, T. D., & Smola, A. J. (2006). Nonparametric quantile estimation. Journal of Machine Learning Research, 7, 1231–1264.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B (Methodological), 58(1), 267–288.

Vapnik, V. N., & Chervonenkis, A. Y. (1971). On the uniform convergence of relative frequencies of events to their probabilities. Theory of Probability & Its Applications, 16(2), 264–280.

Vardi, Y., & Zhang, C. H. (2000). The multivariate \(L_{1}\)-median and associated data depth. Proceedings of the National Academy of Sciences, 97(4), 1423–1426.

Yu, Y., Aslan, Ö., & Schuurmans, D. (2012). A polynomial-time form of robust regression. Advances in Neural Information Processing Systems, 25, 2483–2491.

Acknowledgements

The authors would like to thank the anonymous reviewers for their constructive comments, which resulted in substantial improvements to the manuscript.

Additional information

Matthew J. Holland was supported by the Grant-in-Aid for JSPS Research Fellows.

Editors: Kurt Driessens, Dragi Kocev, Marko Robnik-Šikonja, Myra Spiliopoulou.


Appendices

A Helper functions for M-estimation

Here we define the \(\rho \) and \(\chi \) functions referred to throughout the text. Starting with the \(\rho \) functions, we have referred to

where the text in parentheses (e.g., al) refers to the short-form used in Fig. 5. As discussed in Example 3, the Gudermannian (gud) \(\rho \) function is defined implicitly by the \(\psi \) given here.

Next are \(\chi \) functions for scaling:

Here ga and gq refer to settings \(p=1\) and \(p=2\) respectively, and the \(\psi =\rho ^{\prime }\) here is for any choice of \(\rho \) according to Definition 6. For these two \(\chi \) in our experiments, we have used lch as the \(\rho \) specifying them.

B Proofs of results in the main text

Proof of Lemma 8

For notational simplicity, given any \(h \in \mathcal {H}\), write \(x_{i} = l(h;\varvec{z}_{i}), i \in [n]\). Taking \(u \in [\min _{i}\{x_{i}\},\max _{i}\{x_{i}\}]\), clearly the right-hand side of (7) is non-empty, i.e., an M-estimate exists. Since \(\rho \) is differentiable and strongly convex on \(\mathbb {R}\), the minimum is uniquely determined, and is characterized by the \({{\mathrm{\mathbf {E}}}}_{\mu _{n}}\psi \) condition in the Lemma statement. Noting that \(\psi \) is monotone increasing on its domain, we have that \(\widehat{\theta }(h)\) is well-defined.

Regarding \(\theta ^{*}(h)\), writing \(x=l(h;\varvec{z})\), since \(|\rho (u)| \le c|u|\) for some \(c>0\), integrability follows by monotonicity of the Lebesgue integral, that is for any \(u \in \mathbb {R}\), we have by \(x \in \mathcal {L}_{2}(\mu )\) that

Since \(\rho ^{\prime } = \psi \) is bounded, again for any u we have that

holds (Ash and Doléans-Dade 2000, Ch. 1.6). Existence of the minimum, given as a root of the right-hand side of this equation, is now immediate. Uniqueness follows from the strong convexity of \(\rho \), noting for any functions u and v of \(\varvec{z}\),

for any \(\alpha \in (0,1)\). \(\square \)
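The finite-sample part of Lemma 8 can be checked numerically. The following is a minimal sketch, not the routine used in the paper: it assumes \(\psi = \arctan \) (bounded and strictly increasing, chosen here only for illustration), a fixed scale \(s=1\), and plain bisection on \([\min _{i}\{x_{i}\},\max _{i}\{x_{i}\}]\). The names `psi` and `m_estimate` are hypothetical.

```python
import math

def psi(u):
    # Assumed influence function for illustration: bounded, odd, strictly increasing.
    return math.atan(u)

def m_estimate(losses, s=1.0, tol=1e-12, max_iter=200):
    """Root in u of sum_i psi((x_i - u) / s) = 0, located by bisection."""
    lo, hi = min(losses), max(losses)

    def f(u):
        return sum(psi((x - u) / s) for x in losses)

    # f is strictly decreasing in u, with f(lo) >= 0 >= f(hi),
    # so a unique root lies in [lo, hi].
    for _ in range(max_iter):
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

losses = [0.2, 0.5, 0.4, 0.3, 25.0]  # one errant observation
theta_hat = m_estimate(losses)
sample_mean = sum(losses) / len(losses)
print(theta_hat, sample_mean)
```

Because the sum is monotone in \(u\) and changes sign between the smallest and largest loss, bisection cannot miss the root; with a bounded \(\psi \), the single large loss moves the estimate far less than it moves the sample mean.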

Proof of Lemma 9

Fix arbitrary values \(l_{1},\ldots ,l_{n} \in \mathbb {R}_{+}\) and \(s_{1},\ldots ,s_{n}>0\). To compactly denote these variables, write \(\varvec{a}=(l_{1},\ldots ,l_{n},s_{1},\ldots ,s_{n})\). Denote \(\mathcal {B}_{0} :=\mathcal {B}(\mathbb {R}^{2n})\) here, and define

Let \(\widehat{u}:=\inf {{\mathrm{arg\,min}}}_{u} F(u,\varvec{a})\), a map from \(\mathbb {R}^{2n}\) to \(\mathbb {R}\). If \(\rho \) specifies a robust penalty, then from Lemma 8 the minimizer is unique and thus the infimum is superfluous. More generally, even when the minimizer is not unique, the infimum \(\widehat{u}\) will be a valid minimizer. To see this, denoting \(\rho _{0} :=\min _{u} F(u,\varvec{a})\), say we have \(F(\widehat{u},\varvec{a}) > \rho _{0}\). By continuity and monotonicity, there exists \(u_{1} > \widehat{u}\) such that \(\rho _{0}< F(u_{1},\varvec{a}) < F(\widehat{u},\varvec{a})\), and thus \(u_{1}\) lower bounds the set \({{\mathrm{arg\,min}}}_{u} F(u,\varvec{a})\), contradicting \(\widehat{u}\) being the greatest lower bound. Thus \(F(\widehat{u},\varvec{a}) = \rho _{0}\). It follows that \(\widehat{u}\) is also a root of \(f(\cdot ,\varvec{a})\).

For arbitrary \(\alpha \in \mathbb {R}\), define events

Indexing over the rationals is to make the union countable. First note that as \(f(u,\cdot )\) is continuous, it is measurable for every u, and equivalently

As such, every set indexed in \(\varvec{A}^{\prime }\) is measurable. As \(\varvec{A}^{\prime }\) is a countable intersection of a countable union of measurable sets, \(\varvec{A}^{\prime }\) itself is measurable. First, say \(\varvec{a} \in \varvec{A}^{\prime }\). In this case, for each integer \(k>0\), there exists a rational \(u \le \alpha \) such that the objective \(f(\cdot ,\varvec{a})\) falls within \(\pm \, k^{-1}\) of zero. Now assume \(\widehat{u}(\varvec{a}) > \alpha \) for this \(\varvec{a}\). By definition \(f(\widehat{u}(\varvec{a}),\varvec{a})=0\). As f depends monotonically on u, and \(\widehat{u}\) is infimal, we have for some \(\epsilon > 0\) that

Taking \(k \in \mathbb {N}\) large enough (so that \(1/k < \epsilon \)), we can necessarily secure a rational \(q \le \alpha \) such that \(|f(q,\varvec{a})| < \epsilon \). However as \(q < u_{1}\), this means that

which is a contradiction. Thus \(\widehat{u}(\varvec{a}) \le \alpha \). The \(\varvec{a}\) choice was arbitrary, so \(\varvec{A}^{\prime } \subseteq \varvec{A}_{\alpha }\).

The converse is even simpler. Let \(\varvec{a} \in \varvec{A}_{\alpha }\). We can always take a sequence \((q_{m})\) of \(q_{m} \in \mathbb {Q}\) where \(q_{m} \uparrow \widehat{u}(\varvec{a})\). For any \(k \in \mathbb {N}\), there exists \(m_{0} < \infty \) where

which in turn implies \(|f(q_{m},\varvec{a})| < 1/k\), that is \(\varvec{a} \in \varvec{A}^{\prime }\). We have \(\varvec{A}_{\alpha } \subseteq \varvec{A}^{\prime }\) and thus \(\varvec{A}_{\alpha } = \varvec{A}^{\prime }\), concluding that \(\varvec{A}_{\alpha } \in \mathcal {B}_{0}\) for any choice of \(\alpha \), and any \(w \in \mathcal {W}\). Note \(\varvec{A}_{\alpha }\) is just \(\widehat{u}^{-1}(-\infty ,\alpha ]\), the inverse image of this segment induced by \(\widehat{u}\). Denoting these intervals as \(\mathcal {D}= \{(-\infty ,\alpha ]{:}\,\alpha \in \mathbb {R}\}\), the \(\sigma \)-field generated by this class is \(\sigma (\mathcal {D})=\mathcal {B}(\mathbb {R})\), and the class \(\mathcal {D}^{\prime } = \{B \in \mathcal {B}{:}\,\widehat{u}^{-1}(B) \in \mathcal {B}_{0}\}\) is a \(\sigma \)-field (Breiman 1968, Ch. 2.7). We proved above that \(\mathcal {D}\subseteq \mathcal {D}^{\prime }\), and by minimality of the generated field, \(\mathcal {D}^{\prime } = \mathcal {B}^{1}\). We conclude \(\widehat{u}^{-1}(B) \in \mathcal {B}_{0}\) for all \(B \in \mathcal {B}(\mathbb {R})\). With this, and the measurability of \(l(\cdot ;\cdot )\) and \(s_{h}\), the Lemma follows; the specific requirement is \(\mathcal {B}(\mathcal {H}) \times \mathcal {B}_{d+1}\) measurability of l and either \(\mathcal {B}(\mathcal {H}) \times \mathcal {B}_{d+1}^{n}\) or \(\mathcal {B}(\mathcal {H}) \times \mathcal {B}_{d+1}\) measurability of \(s_{h}\), depending on whether it is determined by \(\mu _{n}\) or individual instances. \(\square \)

Proof of Theorem 10

Use \(\widehat{\theta }(h)\) as in the statement of Lemma 9. Fix an arbitrary set of instances \(\varvec{Z} :=(\varvec{z}_{1},\ldots ,\varvec{z}_{n}) \in \mathcal {Z}\), and

Construct a sequence \((\theta _{m})\) of \(\theta _{m} \in \{\widehat{\theta }(h){:}\,h \in \mathcal {H}\}\) such that \(\theta _{m} \downarrow \widehat{\theta }(\mathcal {H})\). To each \(\theta _{m}\), there is an accompanying \(h_{m} \in \mathcal {H}\) such that \(f(\theta _{m},h_{m},\varvec{Z})=0\). As \(\sup _{m}\Vert h_{m}\Vert < \infty \), there exists a convergent subsequence \((h_{k})\). Denote \(\widehat{h}:=\lim \limits _{k \rightarrow \infty } h_{k}\). The corresponding subsequence \((\theta _{k})\) converges to \(\widehat{\theta }(\mathcal {H})\). Continuity of L and s implies \(f(\cdot ,\cdot ,\varvec{Z})\) is continuous, and thus

which by uniqueness of the root of \(f(\cdot ,\widehat{h},\varvec{Z})\) (Lemma 8) implies that

That is, for any set of observations \(\varvec{Z}\), we can find such an \(\widehat{h}\) minimizing the new objective function.

From this point, measurability is a purely technical endeavour. Useful references are Dudley (2014, Ch. 5), Pollard (1984, Appendix C), and Dellacherie and Meyer (1978, Ch. 1–3). We assume \(\mathcal {H}\) is separable; the special case of \(\mathcal {H}\subset \mathbb {R}^{d}\) is an archetypal example. Index and assemble all possible (random) values of our objective in \(\varvec{\varTheta } :=\{\widehat{\theta }(h){:}\,h \in \mathcal {H}\}\), with \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\) left free to vary randomly. As \(\widehat{\theta }(h)\) has been shown to be \(\mathcal {H}\times \mathcal {Z}\)-measurable (Lemma 9), under an innocuous regularity condition (Pollard 1984, Appendix C, 1(ii)), the class \(\varvec{\varTheta }\) is sufficiently regular, called “permissible.” It is readily verified that \(\widehat{\theta }(\mathcal {H})\) is \(\mathcal {B}(\mathcal {Z})\)-measurable. Next, define the set

where we have written \(\widetilde{\theta }(\varvec{Z},h) :=(\widehat{\theta }(h) - \widehat{\theta }(\mathcal {H}))\). We have already verified the measurability of the two terms being subtracted, thus \(\widetilde{\theta }\) is \(\mathcal {B}(\mathcal {H}) \times \mathcal {B}(\mathcal {Z})\) measurable. Looking at the second equality, we have that \(\varvec{A}_{3}\) is an analytic subset of \(\mathcal {Z}\times \mathcal {H}\). Take the projection \(\pi \) of \(\varvec{A}_{3}\) onto the observation space, namely

and note that by our existence result (16), \({{\mathrm{\mathbf {P}}}}\pi (\varvec{A}_{3}) = 1\). From Pollard (1984, Appendix C(d)), it follows that there exists a random variable \(\widehat{h}(\varvec{Z})\) such that \((\varvec{Z},\widehat{h}(\varvec{Z})) \in \varvec{A}_{3}\) for almost all \(\varvec{Z} \in \pi (\varvec{A}_{3})\). Since the latter set has \({{\mathrm{\mathbf {P}}}}\)-measure 1, we conclude that this \(\widehat{h}\) realizes the properties sought in the statement of Theorem 10, concluding the argument. \(\square \)

Proof of Proposition 12

Consider any sample \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\). Write \(\gamma (h)=\gamma _{\mu _{n}}(h)\) for simplicity. Fix any \(\varepsilon > 0\). By continuity of L, there exists \(\delta > 0\) where \(\Vert h - h^{\prime }\Vert \le \delta \) implies

Denote \(s :=s_{h}\) and \(s^{\prime } :=s_{h^{\prime }}\). Now assume \(|s - s^{\prime }| > \varepsilon \), say for concreteness that \(s + \varepsilon < s^{\prime }\). This implies that for any \(\widetilde{s}\) taken such that \(s + \varepsilon< \widetilde{s} < s^{\prime }\), we have

and by the weak monotonicity of \(\chi \), and the definitions of the two roots s and \(s^{\prime }\),

and thus the middle sum is in fact zero. This implies

but since \(\widetilde{s} < s^{\prime }\), this is a contradiction of \(s^{\prime }\) as the infimum of this set. An identical argument holds for the other case of \(s^{\prime } + \varepsilon < s\), and so \(|s - s^{\prime }| \le \varepsilon \). We conclude for any \(\varepsilon > 0\), there exists \(\delta > 0\) such that \(\Vert h - h^{\prime }\Vert \le \delta \) implies \(|s_{h}-s_{h^{\prime }}| \le \varepsilon \). \(\square \)

Proof of Theorem 13

For \(h \in \mathcal {H}\), write \(x=l(h;\varvec{z})\) and \(\widehat{\theta }=\widehat{\theta }(h)\), \(\theta ^{*}=\theta ^{*}(h)\) for simplicity. Let s be either a fixed positive constant, or be generated on a per-observation basis, i.e., \(s_{1},\ldots ,s_{n}\) are independent positive random variables, where say \(s = s(\varvec{z})\) for \(\varvec{z}\sim \mu \). The existence of \(\widehat{\theta }\) and \(\theta ^{*}\) is given by Lemma 8. For convenience denote \(\psi _{u} :=\psi ((x-u)/s)\) and note that

for any choice of u. Use the usual set-theoretic definition of \(\liminf \), which is to say for any given sequence of sets \(A_{m}\), let \(\liminf _{m} A_{m} :=\bigcup _{m=1}^{\infty }\bigcap _{k \ge m}A_{k}\). For arbitrary fixed \(\varepsilon > 0\), we have

The final equality holds via the strong law of large numbers, which is where we require \({{\mathrm{\mathbf {E}}}}_{\mu }x^2 < \infty \) (Breiman 1968, Theorem 3.27). The inequality prior to that holds since \({{\mathrm{\mathbf {E}}}}_{\mu } \psi _{\theta ^{*}+\varepsilon } < 0\), and the remaining equalities by \(\liminf \) definition and (17). An identical argument can be used to show \({{\mathrm{\mathbf {P}}}}\{ \lim \limits _{n}\widehat{\theta }> \theta ^{*}- \varepsilon \} = 1\), which implies

This holds for any choice of \(\varepsilon > 0\), and thus \(|\widehat{\theta }-\theta ^{*}| \rightarrow 0\) almost surely, yielding strong consistency. \(\square \)
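The consistency statement of Theorem 13 can be illustrated with a small simulation. This is a sketch under stated assumptions rather than a reproduction of the paper's experiments: losses are drawn from a Normal distribution centred at a hypothetical value `theta_star`, the scale is fixed at \(s=1\), and \(\psi = \arctan \) is assumed. Since this \(\psi \) is odd and the distribution is symmetric, \({{\mathrm{\mathbf {E}}}}_{\mu }\psi ((x-\theta ^{*})/s)=0\) holds at the centre, so \(\theta ^{*}\) is known exactly and the error can be measured.

```python
import math
import random

def m_estimate(xs, s=1.0, iters=60):
    """Bisection for the root in u of sum_i atan((x_i - u) / s) = 0."""
    lo, hi = min(xs), max(xs)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if sum(math.atan((x - mid) / s) for x in xs) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def consistency_errors(theta_star=1.0, sizes=(100, 1000, 10000), seed=0):
    """Absolute error |theta_hat - theta_star| for each sample size n."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    errors = {}
    for n in sizes:
        xs = [rng.gauss(theta_star, 1.0) for _ in range(n)]
        errors[n] = abs(m_estimate(xs) - theta_star)
    return errors

errors = consistency_errors()
for n, err in errors.items():
    print(n, err)
```

A single run only suggests the almost-sure convergence proved above; it is not a substitute for the argument, and the errors need not decrease monotonically in \(n\) for any one seed.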

Proof of Lemma 14

If \(\rho \) specifies a robust objective, then \(\psi \) is a bounded measurable function, and can be uniformly approximated by a sequence of weighted indicators as follows. For concreteness, say \(|\psi | \le M < \infty \). Let sequence \(\varepsilon _{m} \downarrow 0\), and for each \(m \in \mathbb {N}\) partition the range \([-M,M]\) into \(k_{m} :=2M/\varepsilon _{m}\) segments \(A_{j} :=\{t{:}\,a_{j-1} \le \psi (t) < a_{j}\}\) defined by

The approximating function \(s_{m}\) is then defined as

By strong convexity, there is no \(u \in \mathbb {R}\) where \(|\psi (u)|=M\), and thus the uniform approximation is immediate. That is, \(|s_{m}(u)-\psi (u)| \le \varepsilon _{m}\) holds uniformly in u. Note that each \(A_{j}\) can be given as an interval. Defining \(b_{j}\) to be the unique real number where \(\psi (b_{j})=a_{j}\), the marginal sets are \(A_{1} = (-\infty ,b_{1})\) and \(A_{k_{m}} = [b_{k_{m}-1},\infty )\) respectively, and the remainder are half-closed real intervals \(A_{j}=[b_{j-1},b_{j})\).
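The uniform step-function approximation can be sketched numerically. The construction below is an assumption made for illustration: it takes \(\psi = \arctan \) with \(M=\pi /2\), grid points \(a_{j} = -M + j\varepsilon \), and lets the step function take the value \(a_{j-1}\) on \(A_{j}\); the exact weights used in the paper may differ. The function names are hypothetical, and the sketch does not require \(2M/\varepsilon \) to be an integer.

```python
import math

M = math.pi / 2  # supremum of |atan|, never attained

def psi(u):
    # Assumed bounded, strictly increasing influence function.
    return math.atan(u)

def step_approx(u, eps):
    """Step function value at u: psi(u) rounded down to the grid a_j = -M + j*eps."""
    j = math.floor((psi(u) + M) / eps)  # index with a_j <= psi(u) < a_{j+1}
    return -M + j * eps

def max_gap(eps, grid):
    """Largest |step_approx - psi| observed over the evaluation grid."""
    return max(abs(step_approx(u, eps) - psi(u)) for u in grid)

grid = [-50 + 0.01 * i for i in range(10001)]  # u ranging over [-50, 50]
for eps in (0.5, 0.1, 0.01):
    print(eps, max_gap(eps, grid))
```

Since every value of \(\psi \) falls in exactly one cell of width \(\varepsilon \), the gap is bounded by \(\varepsilon \) at every \(u\), which is the uniformity used in the proof.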

Denote \({{\mathrm{\mathbf {P}}}}_{n} = {{\mathrm{\mathbf {E}}}}_{\mu _{n}}\) and \({{\mathrm{\mathbf {E}}}}= {{\mathrm{\mathbf {E}}}}_{\mu }\) for clean notation. Our interest is with the quantity

where \(s>0\), \(h \in \mathcal {H}\), and \(u \in \mathbb {R}\) when taking the supremum. For any observation \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\) an application of the triangle inequality yields

where the \(\Vert \cdot \Vert \) terms on the right-hand side denote taking the exact same suprema as on the left-hand side. The first and third terms are readily dealt with. Note for example that

whenever we set index \(m = m(n) \rightarrow \infty \) as \(n \rightarrow \infty \). An identical argument holds for the first term. This convergence is deterministic, in the sense that it holds for arbitrary observations, and thus also holds almost surely.

The second term in (18) is slightly more involved, but the approach is rather standard. To get started, denote for convenience the events

with the understanding that for the index \(j=1\) the interval is \((-\infty ,sb_{1}+u)\) and \(j=k_{m}\) it is \([sb_{k_{m}-1}+u,\infty )\). The obvious but important fact is that each event \(E_{j}\), specified by s, h, u, and the \(b_{j}\) values, is naturally captured by a larger class of sets \(\mathcal {C}\)

Note we are assuming \(\mathcal {H}\) is specified by elements of d-dimensional Euclidean space. Since each \(E_{j} \in \mathcal {C}\), we have that

where \(\Vert \cdot \Vert _{\mathcal {C}}\) denotes taking the supremum over \(C \in \mathcal {C}\). We will frequently use \(I_{C}\) to denote \(I_{C}(\cdot )\), with domain \(\mathbb {R}^{d+1}\). It remains to show the strong convergence to zero of the supremal factor in (19), with convergence rates to deal with the increasing \(k_{m}\) sequence.

A typical symmetrization inequality is of use next (Vapnik and Chervonenkis 1971, Lemma 2). Take an artificial sample \(\varvec{z}_{1}^{\prime },\ldots ,\varvec{z}_{n}^{\prime }\), independent from \(\varvec{z}_{1},\ldots ,\varvec{z}_{n}\), but identically distributed. For any \(\varepsilon >0\), whenever \(n>2/\varepsilon ^{2}\), we have