Abstract

In this paper, we present a dynamic non-diagonal regularization for interior point methods. The non-diagonal aspect of this regularization is implicit, since all the off-diagonal elements of the regularization matrices are cancelled out by those elements present in the Newton system, which do not contribute important information in the computation of the Newton direction. Such a regularization has multiple goals. The obvious one is to improve the spectral properties of the Newton system solved at each iteration of the interior point method. On the other hand, the regularization matrices introduce sparsity to the aforementioned linear system, allowing for more efficient factorizations. We also propose a rule for tuning the regularization dynamically based on the properties of the problem, such that sufficiently large eigenvalues of the non-regularized system are perturbed insignificantly. This alleviates the need of finding specific regularization values through experimentation, which is the most common approach in the literature. We provide perturbation bounds for the eigenvalues of the non-regularized system matrix and then discuss the spectral properties of the regularized matrix. Finally, we demonstrate the efficiency of the method applied to solve standard small- and medium-scale linear and convex quadratic programming test problems.

1 Introduction

In this paper, we are concerned with finding the solution of linear and convex quadratic programming problems, using an infeasible primal–dual interior point method. Such methods are called infeasible because they allow intermediate iterates, produced by the algorithm, to be infeasible for the problem under consideration. They are called primal–dual because they operate on both the primal and the dual space. Interior point methods (IPMs) deal with the inequality constraints of the problem by introducing logarithmic barriers in the objective, which penalize iterates for which any of the inequality constraints is close to being violated. At each iteration, the optimality conditions of the barrier problems are formed and one (or a few) steps of Newton's method are applied to them. There is a vast literature on interior point methods, and we refer the interested reader to [1] for an extended literature review.

Most implementations transform the Newton system into a symmetric indefinite system of linear equations, which when solved determines the Newton direction. The latter constitutes the main computational effort and challenge for IPMs. At every iteration of the method, the system matrix as well as the right-hand side changes. There are three main reasons why solving such a system can be challenging. The most obvious one is that the dimension of such systems can be very large, which makes the task of solving them expensive in terms of processing time and memory requirements. A second important challenge, inherent in interior point methods, is that as the algorithm approaches optimality, the systems that we have to solve become increasingly ill-conditioned. Finally, a rank-deficient constraint matrix can result in a singular Newton system matrix. It is well known that the latter two difficulties can be addressed by the use of some regularization technique, at the expense of solving a perturbed problem [2].

Such regularization techniques, embedded in the interior point framework for solving linear and convex quadratic programming problems, have been previously proposed in the literature. For example, in [3], a dynamic primal–dual regularization for interior point methods was derived. The authors solve a slightly altered symmetric indefinite system, to which a diagonal perturbation (regularization) has been introduced. This perturbation transforms the symmetric indefinite matrix into a quasi-definite one. It is proved in [4] that such matrices are strongly factorizable. Hence, the regularized system can be factorized efficiently. The authors interpreted these regularization matrices as adding proximal terms to the primal and dual objective functions. The values of these perturbations are chosen dynamically during the factorization of the system matrix, where potentially unstable pivots are regularized stronger (using some pre-specified “large” regularization value), while safer ones are almost not regularized at all. In [5], based on this proximal point interpretation given in [3], the authors proposed a primal–dual pair of regularized models, where the duality correspondence arises by setting the regularization variables as proximal terms. They observed that for specific parameter values, this primal–dual regularized model is exact, that is, it yields an optimal solution which is also an optimal solution of the respective non-regularized primal–dual pair. There, the authors introduced two uniform diagonal regularization matrices whose values were tuned experimentally over a variety of problems. A similar regularization was also used in [6]. It is worth mentioning that similar ideas have also been applied in IPMs suitable for general nonlinear optimization problems (see [7, 8]).

In this paper, we take a different approach. We observe that when an IPM progresses and approaches optimality, a significant part of the primal–dual variables approaches zero fast and hence becomes negligible. Yet it is not straightforward how the algorithm might exploit this feature. The proposed method attempts to do so. The method dynamically chooses a suitable regularization for the symmetric indefinite system and effectively “annihilates” the effects of those parts of it which do not contribute important information to the computation of the Newton direction. The proposed technique involves non-diagonal regularization matrices. However, their non-diagonal terms are only implicit; they do not need to be computed because they are immediately cancelled by other terms present in the linear system. Hence, the effect of adding such non-diagonal regularization is to make the Newton system sparser and therefore easier to solve. In contrast to other previously developed approaches, this regularization is dynamically tuned based on the problem properties. We develop an approach which attempts to capture the needs of an arbitrary problem and regularize its system matrix accordingly. This alleviates the problem of finding specific regularization values that work well over a variety of problems. In general, the proposed approach is very conservative and regularizes the system as little as possible, while ensuring numerical stability.

The rest of the paper is organized as follows. In Sect. 2, we summarize our notation and present the adopted model, based on which we define our regularization matrices, firstly for linear and then for convex quadratic programming problems. For both cases, we provide arguments indicating why the proposed dynamic tuning of the regularization matrices is expected to introduce a controlled perturbation to the problem. In Sect. 3, we provide a spectral analysis, which shows the effect of the proposed regularization and gives specific bounds for the eigenvalues of the regularized system matrix. In Sect. 4, we provide the algorithmic scheme along with some implementation details and numerical results, and finally, in Sect. 5 we derive our conclusions.

2 Exact Primal–Dual Regularization

2.1 Notation

Given an arbitrary symmetric square matrix Q, we denote positive semi-definiteness (positive definiteness) by \(Q \succeq 0\) (\(Q \succ 0\)). We denote the Euclidean norm (2-norm) as \(\Vert \cdot \Vert \). Any other norm will be specified by a subscript. For example, the \(\infty \)-norm is denoted as \(\Vert \cdot \Vert _{\infty }\). We denote by e the column vector of ones of appropriate dimension. Given a set of indices, say \(\mathcal {B}\), \(e_{\mathcal {B}}\) denotes the vector of ones with dimension equal to the cardinality of \(\mathcal {B}\), that is, \(e_{\mathcal {B}} \in \mathbb {R}^{|\mathcal {B}|}\). For an arbitrary matrix, say A, \(A_{\mathcal {B}}\) denotes the submatrix whose columns and rows are indicated from the set of indices \(\mathcal {B}\). Similarly, \(A_{\mathcal {B}\mathcal {N}}\) contains rows of A that belong in \(\mathcal {B}\) and columns of A that belong in \(\mathcal {N}\). Iterates of the algorithm are denoted as \(w_k = (x_k,r_k,s_k,y_k,z_k)\), where \(k \in \mathbb {N}\) is the iteration counter. An optimal solution of the problem is denoted as \(w^* = (x^*,r^*,s^*,y^*,z^*)\). Given a vector \(x \in \mathbb {R}^n\), we denote by \(X \in \mathbb {R}^{n \times n}\) the diagonal matrix that contains x in its diagonal. To simplify the notation, if a matrix, say \(X_k\), depends on the iteration k, we will omit the subscript and state the dependence whenever it is not obvious. When an arbitrary function, say f, depends on some parameter, say \(\eta \), we denote this relation as: \(f_{\eta }(\cdot )\). Given an arbitrary square matrix B, \(\text {off}(B)\) denotes the square matrix that has the same off-diagonal elements as B and has zeros in its diagonal. Similarly, \(\text {diag}(B) = B - \text {off}(B)\). The jth diagonal element of a square matrix B will be denoted as: \((B)_{jj}\). \(B^H\) denotes the conjugate (Hermitian) transpose of matrix B. 
We denote the smallest (largest) eigenvalue of an arbitrary matrix B, by \(\lambda _{\min }(B)\) (\(\lambda _{\max }(B)\)). Similarly, the smallest (largest) singular value of an arbitrary matrix B is denoted by \(\sigma _{\min }(B)\) (\(\sigma _{\max }(B)\)). Finally, the set of all eigenvalues (spectrum) of an arbitrary matrix B is denoted as \(\lambda (B)\).

2.2 Problem Formulation

We consider the following primal–dual pair of convex quadratic programming problems in the standard form:

where \(c,\ x,\ z \in \mathbb {R}^n\), \(b,\ y \in \mathbb {R}^m\), \(A \in \mathbb {R}^{m\times n}\), \(Q \succeq 0 \in \mathbb {R}^{n \times n}\). Without loss of generality, we assume that \(m \le n\). Note that if \(Q = 0\), (P)–(D) is a primal–dual pair of linear programming problems. If the problems under consideration are feasible, it can easily be verified that there exists an optimal primal–dual triple (x, y, z) satisfying the Karush–Kuhn–Tucker (KKT) optimality conditions for this primal–dual pair (see, for example, Prop. 2.3.4 in [9]).
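For reference, a standard-form pair consistent with the dimensions stated above can be written as follows. This is a sketch under the usual convention that the dual slack \(z\) enters the dual constraint with a plus sign; the labels (P) and (D) are those used in the text:

\[
\text{(P)}\quad \min_{x}\ c^\mathrm{T}x + \tfrac{1}{2}x^\mathrm{T}Qx \quad \text{s.t.}\ \ Ax = b,\ x \ge 0, \qquad
\text{(D)}\quad \max_{x,y,z}\ b^\mathrm{T}y - \tfrac{1}{2}x^\mathrm{T}Qx \quad \text{s.t.}\ \ -Qx + A^\mathrm{T}y + z = c,\ z \ge 0.
\]

Setting \(Q = 0\) in this form gives the familiar linear programming pair.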

Our model is based on the developments in [3, 5] and [6]. More specifically, by applying a generalized primal–dual proximal point method on (P), as in ([5, 8]), one can get the following pair of primal–dual regularized problems:

where \(s \in \mathbb {R}^n\), \(r \in \mathbb {R}^m\) are auxiliary variables introduced from the primal–dual application of the proximal point method and \(\ R_p \succeq 0 \in \mathbb {R}^{n \times n}\), \(R_d \succeq 0\in \mathbb {R}^{m \times m}\) are the primal and dual regularization matrices, respectively, that will be specified later. The duality correspondence follows after taking \(r = y-\tilde{y}\) and \(s = x - \tilde{x}\), where \(\tilde{y}\) and \(\tilde{x}\) are estimates of the dual and primal solutions \(y^*,\ x^*\), respectively. Of course, \(R_p = 0,\ R_d = 0\) recovers the initial pair (P)–(D). In [5], the authors observe that this pair of regularized problems is exact under some conditions on the estimates \(\tilde{x},\ \tilde{y}\). In such a case, an optimal solution of (\(P_{r}\))–(\(D_{r}\)) is also an optimal solution of (P)–(D). For more information about exactness of regularization, we refer the interested reader to [10].

In [5, 7, 8], models similar to (\(P_{r}\))–(\(D_{r}\)) are used, restricted, however, to the case where \(R_p = \rho I\) and \(R_d = \delta I\), for some positive values \(\delta ,\ \rho \). It is a well-known fact, proved for the first time in [11], that these regularization schemes can be interpreted as the primal–dual application of the standard proximal point method. However, our model does not specify the structure of the regularization matrices \(R_p,\ R_d\). The only requirement is that these matrices are positive definite. As we commented previously, this model can be interpreted as the application of a generalized primal–dual proximal point method. Such methods, instead of adding the typical 2-norm in the objective function, make use of the so-called D-functions. In fact, one could easily verify that any elliptic norm (defined by an arbitrary positive definite matrix) satisfies the conditions, given in [12, 13], for being a D-function. In other words, our algorithm adds an elliptic norm in the objective, instead of the typical 2-norm. The focus of the paper, however, prevents us from going deeper into these matters. For more about proximal point methods, we refer the reader to [11,12,13,14,15], and the references therein.

2.3 The Newton System

In order to solve the problems presented in the previous subsection, using interior point methods, we proceed by replacing the non-negativity constraints with logarithmic barriers in the objective. In view of the previous, we obtain the following primal–dual regularized barrier problems:

in which non-negativity constraints \(x>0\) and \(z>0\) are implicit.

Forming the Lagrangian of the primal barrier problem, we get:

Now, we can form the first-order optimality conditions of the problems by taking the gradient of (3) and equating it to zero, giving us the following block equations:

By looking at the optimality conditions of the dual barrier problem, we see that the final two conditions are:

We write the optimality conditions in the form of a function \(F_{\tilde{x},\tilde{y},\mu }(w)\ :\ \mathbb {R}^{3n+ 2m} \rightarrow \mathbb {R}^{3n+2m}\), and we want to solve:

at each IPM iteration, where \(w = (x,r,s,y,z)\), \(\mu >0\) is the barrier parameter and \(\sigma \in \ ]0,1[\) is a centring parameter (which determines how fast \(\mu \) is forced to decrease). We want to force \(\mu \rightarrow 0\), since then the solution of this system leads to the solution of (\(P_{r}\))–(\(D_{r}\)). Notice that (\(P_{r}\))–(\(D_{r}\)) is parametrized by the estimates \(\tilde{x}\) and \(\tilde{y}\). As observed in [5], if these estimates are close enough to some optimal solution of (P)–(D), then an optimal solution of (\(P_{r}\))–(\(D_{r}\)) is also an optimal solution of (P)–(D). At the beginning of the kth iteration of the IPM, we have available the iterate \(w_k = (x_k,r_k,s_k,y_k,z_k)\), the barrier parameter \(\mu _k = \frac{x_k^\mathrm{T}z_k}{n}\) and we choose a value for the centring parameter \(\sigma _{k} \in \ ]0,1[\). Following the developments in [3, 5, 7, 8], for proximal point methods, we update the estimates of \(x^*,\ y^*\) as \(\tilde{x} = x_k,\ \tilde{y} = y_k\). Next, Newton's method is applied to the mildly nonlinear system (4). After evaluating the Jacobian of \(F_{\tilde{x},\tilde{y},\mu }(w)\), the Newton direction is determined at each IPM iteration by solving a system of the following form:

Notice that the matrices \(X, Z, R_p\) and \(R_d\) all depend on the iteration k of the algorithm. Once the Newton direction \(\varDelta w = (\varDelta x,\varDelta r,\varDelta s,\varDelta y,\varDelta z)\) is computed, the algorithm chooses a step length \(a_k \in \ ]0,1]\) and sets the new iterate to \(w_{k+1} = w_k + a_k \varDelta w\). In order to compute the Newton direction efficiently, we want to eliminate some variables of (5). Since we set \(\tilde{y} = y_k\), the second block equation of (5) gives:

and if \(R_d \succ 0\), we get the following relation:

Similarly, by looking at the third block equation of (5) and substituting \(\tilde{x} = x_{k}\), we get:

and if \(R_p \succ 0\), we have that:

Note that we always use either \(R_d \succ 0\) or \(R_d = 0\), and similarly, either \(R_p \succ 0\) or \(R_p = 0\). Hence, the previous two relations are either well defined or absent. Using (6) and (7) to eliminate \(\varDelta r\) and \(\varDelta s\), we can reduce (5) to the following system:

Next, we proceed by eliminating \(\varDelta z\). For that purpose, we have from the third row of (8) that:

Substituting (9) into the first row of (8), we get the following reduced symmetric system (so-called Augmented System):

where \(\varTheta = X Z^{-1}\). In the case of linear programming (\(Q = 0\)) or when solving quadratic separable problems (in which case Q is diagonal), it may be beneficial to further eliminate \(\varDelta x\) from (10), which leads to the so-called normal equations. However, one should note that this is not a good idea when it comes to general convex quadratic programming problems, since pivoting on the (1,1) block of (10) could result in a dense system, even in cases where both A and Q are sparse. Having said that, we can eliminate \(\varDelta x\) by looking at the first block equation of (10), which gives:

and by substituting (11) into the second row of (10), we get the normal equations:

where

in which the system matrix is symmetric and positive definite.
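The elimination that leads from the augmented system to the normal equations can be checked numerically. The following is a minimal sketch rather than the authors' implementation. It assumes that the augmented matrix has the block form \([-H,\ A^\mathrm{T};\ A,\ R_d]\) with \(H = Q + \varTheta ^{-1} + R_p\), it uses generic placeholder right-hand sides `f` and `g` (hypothetical names), and it takes a hypothetical linear programming instance (\(Q = 0\), \(R_p = 0\)) with a single constraint, so that the normal equations reduce to one scalar equation.

```python
# Assumed form of the augmented system (generic right-hand sides f, g):
#   [ -H   A^T ] [dx]   [f]
#   [  A   R_d ] [dy] = [g],   with H = Q + Theta^{-1} + R_p (diagonal here).
# Hypothetical data: m = 1 constraint, n = 3 variables, Q = 0, R_p = 0.
a = [1.0, 2.0, -1.0]        # the single row of A
theta = [10.0, 0.5, 1e-3]   # diagonal of Theta = X Z^{-1}
r_d = 1e-2                  # dual regularization (R_d is 1x1 here)
f = [0.3, -0.2, 0.1]        # placeholder right-hand side, first block
g = 0.7                     # placeholder right-hand side, second block

h = [1.0 / t for t in theta]  # diagonal of H = Theta^{-1}

# Normal equations: (A H^{-1} A^T + R_d) dy = g + A H^{-1} f.
normal_matrix = sum(ai * ai / hi for ai, hi in zip(a, h)) + r_d
normal_rhs = g + sum(ai * fi / hi for ai, fi, hi in zip(a, f, h))
dy = normal_rhs / normal_matrix

# Back-substitution from the first block row: dx = H^{-1} (A^T dy - f).
dx = [(ai * dy - fi) / hi for ai, fi, hi in zip(a, f, h)]

# Residuals of the two block rows of the augmented system.
res1 = [-hi * dxi + ai * dy - fi for hi, dxi, ai, fi in zip(h, dx, a, f)]
res2 = sum(ai * dxi for ai, dxi in zip(a, dx)) + r_d * dy - g
print(normal_matrix, max(abs(r) for r in res1), abs(res2))
```

Both residuals vanish up to rounding, and the scalar `normal_matrix` is positive, in line with the statement that the normal equations matrix is symmetric and positive definite.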

The proposed model differs from the one derived in [5] in that it allows the use of general positive definite regularization matrices. For example, if \(R_p,\ R_d\) are non-diagonal matrices, then this would amount to the primal and dual application of a generalized proximal point method that adds an elliptic norm in the objective, instead of the typical 2-norm that is employed in standard proximal point methods. Notice that at every iteration of the algorithm, \(R_p,\ R_d,\ \tilde{x}\) and \(\tilde{y}\) are updated. In other words, (\(P_{r}\))–(\(D_{r}\)) represents a sequence of subproblems. At every such subproblem, we apply a single iteration of the interior point method. How \(R_p\) and \(R_d\) are updated will be presented in the following subsection.

2.4 The Regularization Matrices

As the IPM approaches optimality, the diagonal matrix \(\varTheta \) contains elements that converge to zero and others that diverge to infinity. This is because \(\mu _k \rightarrow 0\), and we force the complementarity conditions to be approximately satisfied (\(XZe \approx \sigma _{k} \mu _k e\)). As a consequence, the matrices in (10) and (12) become extremely ill-conditioned. On top of that, it is often the case due to modelling choices that the constraint matrix A is not of full row rank, which makes the system matrices singular. It is well known, as shown by Armand and Benoist [2], that both these problems can be addressed with the use of regularization. The most common approach in the literature is the addition of two diagonal regularization matrices, say \(R_p,\ R_d\), whose values are tuned experimentally over a variety of problems ([2, 3, 5, 6]).
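The behaviour of \(\varTheta \) described above can be illustrated with a small sketch. The two-variable iterate below is hypothetical: it assumes one variable tending to 1, one tending to 0, and complementarity products held exactly at \(x_j z_j = \mu \). The function name `theta_diagonal` is introduced only for this illustration.

```python
def theta_diagonal(mu):
    """Diagonal of Theta = X Z^{-1} for a hypothetical 2-variable iterate."""
    x = [1.0, mu]                   # assumed primal iterate: x_1 -> 1, x_2 -> 0
    z = [mu / xj for xj in x]       # z_j chosen so that x_j * z_j = mu
    return [xj / zj for xj, zj in zip(x, z)]

for mu in (1e-1, 1e-4, 1e-8):
    theta = theta_diagonal(mu)
    # The ratio of largest to smallest entry grows like 1 / mu^2.
    print(mu, theta, max(theta) / min(theta))
```

In this toy setting one entry of \(\varTheta \) grows like \(1/\mu \) while the other shrinks like \(\mu \), which is the source of the ill-conditioning mentioned in the text.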

Roughly speaking, the goals of a regularization method for IPMs are ([2, 3, 6,7,8, 16]):

1. to improve the spectral properties of the matrices in (10) and (12),

2. without significantly perturbing the previous systems,

3. while preserving the sparsity of the problem and the computational efficiency of the method.

To the best of our knowledge, most of the regularization methods in the literature manage to achieve the first and the third regularization goals, failing, however, to achieve the second goal with certainty. This is the case since these regularization methods are tuned experimentally. Hence, they do not rely on the properties of the problem itself, and as a consequence, such regularization values can only be good for some problems and poor for others. The proposed method takes a different approach, by introducing two non-diagonal regularization matrices \(R_p\) and \(R_d\), which are tuned based on the properties of the problem. Of course, one could argue that this may disturb the sparsity and as a consequence the computational efficiency of the method; however, these non-diagonal matrices are created implicitly. As we will show later, not only is the sparsity preserved, but it is in fact improved.

As we already mentioned, as IPM approaches optimality, the matrix \(\varTheta \) contains some very large and some very small elements. The proposed regularization exploits this inherent feature of the method and splits the columns of the problem matrix in two sets, say \(\mathcal {N}\) and \(\mathcal {B}\) such that:

where \(|\mathcal {N}| = n_1\) and \(| \mathcal {B}| = n_2\), with \(n_1 + n_2 = n\). Notice that the previous splitting captures all the columns only if the method converges to a strictly complementary solution (that is, the limit point satisfies: \(\hat{x}^\mathrm{T}\hat{z} = 0\) and \(\hat{x}_j + \hat{z}_j > 0,\ \forall \ j\)). In the quadratic programming case, a strictly complementary solution may not exist. Hence, there might exist some indices \(j \in \{1,\ldots ,n\}\) for which: \(x_j \rightarrow 0\) and \(z_j \rightarrow 0\). In such a case, it is unknown whether the value of \(\varTheta _{jj}\) will be small or large. We can assume, without loss of generality, that any such indices will be classified as elements of \(\mathcal {B}\) (although in practice this would depend on the value of \(\varTheta _{jj}\), as we will show later). Of course for the case of linear programming (\(Q = 0\)), it is a well-known fact (see, for example, [17]) that a strictly complementary solution always exists, if the problems are feasible. Moreover, as shown in [18, 19], primal–dual IPMs converge to such an optimal solution. If a strictly complementary solution exists for the quadratic programming case, it is shown in [20] that an infeasible primal–dual IPM which reduces the constraint violation at the same rate as \(\mu \) is reduced produces iterates that converge to a strictly complementary solution.

In what follows, we present the construction of the regularization for the case of linear programming, and then, we suggest an extension for convex quadratic programming.

2.4.1 Linear Programming

For the case of linear programming, we employ a dual regularization, that is, in (5) we set \(R_p = 0\) and only use \(R_d \succ 0\) to improve the spectral properties of the problem. Given this set-up and by permuting the columns so that the first \(n_1\) of them correspond to indices in \(\mathcal {N}\) while the remaining ones correspond to indices in \(\mathcal {B}\), the augmented system in (10) takes the form:

where \(A_{\mathcal {N}} \in \mathbb {R}^{m \times n_1}\) and \(A_{\mathcal {B}} \in \mathbb {R}^{m\times n_2}\). Pivoting on the first \(n_1\) columns of (13) gives the partially reduced augmented system:

Since we know that \(\varTheta _{\mathcal {N}} \rightarrow 0\), we expect that the magnitude of \(\Vert A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\Vert \) will be small when the method approaches optimality. Intuitively, our goal is to create a regularization matrix that will implicitly absorb the off-diagonal elements of \(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\) (promoting sparsity) and regularize the system with values of slightly larger magnitude than that of the elements which were absorbed. For this class of problems, we will focus on solving the normal equations. Given (14), we can form the normal equations by eliminating \(\varDelta x_{\mathcal {B}}\), which gives the following system:

We choose the following dual regularization matrix:

where \(\varDelta _d\) is a diagonal matrix chosen such that \(R_d \succ 0\) and diagonally dominant, that is:

For computational efficiency and numerical stability, we choose \(\varDelta _d = \delta _{d,k} I_m\), with:

Observe that the regularization matrix given in (15) strongly depends on the properties of the problem as well as on the iteration k of the IPM. In order to control which elements enter the set \(\mathcal {N}\), at every iteration k, we enforce the following condition:

where \(\text {reg}_{thr,k}\) is set to 1 at the beginning of the optimization (\(k = 0\)) and is decreased at the same rate as \(\mu _k\) (i.e. \(\text {reg}_{thr,k} = O(\mu _k)\)). Once \(\text {reg}_{thr,K}\) becomes smaller than a predefined value, say \(\epsilon > 0\), for some large \(K \ge 1\), we fix it to this value (\(\text {reg}_{thr,k} = \epsilon ,\ \forall \ k \ge K\)). The choice of \(\epsilon \) will be specified later. Note that (17) ensures that \(\delta _{d,k} < \text {reg}_{thr,k}\), at every iteration. In order to show that sparsity is improved, we form again the normal equations’ matrix using the definition of \(R_d\) to get:

From the previous, one can easily observe that the sparsity of the normal equations is improved, since some off-diagonal elements of the matrix have been absorbed by the regularization.
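The construction can be traced on a tiny example. The sketch below is illustrative only and uses hypothetical data. It takes condition (17) and the value (16) in the form restated later in this section, namely \(\varTheta _{jj}\Vert AA^\mathrm{T}\Vert _{\infty } \le \text {reg}_{thr}\) for \(j \in \mathcal {N}\) and \(\delta _d = \max _j (\varTheta _{\mathcal {N}})_{jj} \Vert A_{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\Vert _{\infty }\), and it assumes \(R_d = \delta _d I - \text {off}(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})\). The helper names `inf_norm`, `weighted_gram` and `offdiag_nonzeros` are introduced for this illustration.

```python
def inf_norm(M):
    """Infinity norm (maximum absolute row sum) of a matrix stored as nested lists."""
    return max(sum(abs(v) for v in row) for row in M)

def weighted_gram(A, w, cols):
    """Return the sum over j in cols of w[j] * A[:, j] * A[:, j]^T as nested lists."""
    m = len(A)
    return [[sum(A[i][j] * w[j] * A[k][j] for j in cols) for k in range(m)]
            for i in range(m)]

def offdiag_nonzeros(M):
    """Count the nonzero off-diagonal entries of a square matrix."""
    return sum(1 for i in range(len(M)) for k in range(len(M))
               if i != k and M[i][k] != 0.0)

# Hypothetical data: 3 constraints, 4 variables; the last Theta entry is tiny.
A = [[1.0, 1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 1.0],
     [0.0, 0.0, 1.0, 1.0]]
theta = [5.0, 2.0, 3.0, 1e-4]
reg_thr = 1e-2
m, n = len(A), len(theta)
ones = [1.0] * n

# Condition (17): column j enters N when Theta_jj * ||A A^T||_inf <= reg_thr.
norm_AAt = inf_norm(weighted_gram(A, ones, range(n)))
N = [j for j in range(n) if theta[j] * norm_AAt <= reg_thr]
B = [j for j in range(n) if j not in N]

# Value (16): delta_d = max_j (Theta_N)_jj * ||A_N A_N^T||_inf (0 if N is empty).
delta_d = max(theta[j] for j in N) * inf_norm(weighted_gram(A, ones, N)) if N else 0.0

S_B = weighted_gram(A, theta, B)   # A_B Theta_B A_B^T
S_N = weighted_gram(A, theta, N)   # A_N Theta_N A_N^T

# Unregularized normal matrix, and the regularized one obtained with the
# assumed R_d = delta_d * I - off(S_N): only diag(S_N) + delta_d * I survives.
full = [[S_B[i][k] + S_N[i][k] for k in range(m)] for i in range(m)]
regularized = [[S_B[i][k] + (S_N[i][k] + delta_d if i == k else 0.0)
                for k in range(m)] for i in range(m)]

print(N, delta_d, offdiag_nonzeros(full), offdiag_nonzeros(regularized))
```

In this instance only the last column enters \(\mathcal {N}\), \(\delta _d\) stays below \(\text {reg}_{thr}\), and the coupling between the second and third rows, which is present in the unregularized matrix solely through \(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\), disappears from the regularized one.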

Since \(\text {reg}_{thr,k}\) is not allowed to go to zero as \(\mu _k \rightarrow 0\), we would like to know how much we perturb the Newton system, by having it fixed to some value \(\epsilon > 0\), when the method is close to optimality. In the rest of this subsection, we compute some perturbation bounds, which depend on the value of \(\text {reg}_{thr}\).

Motivation

Now that we have defined the regularization matrix for the case of linear programming problems, let us provide a motivation for this choice. Firstly, note that the proposed regularization has multiple objectives. On the one hand, we want to find a good criterion for tuning a uniform dual regularization matrix \(\delta _{d,k} I\) based on the properties of the problem, such that the non-regularized problem matrix is not perturbed significantly while its spectral properties are improved. On the other hand, we use this uniform dual regularization value as a cut-off point, for dropping the smallest off-diagonal elements in the normal equations matrix, improving the computational efficiency of the method. In what follows, we will provide an analysis indicating why the uniform dual regularization that we introduce is expected not to perturb the problem significantly. Then, we will show that further dropping the off-diagonal elements introduces a controlled perturbation.

Based on the previous, let us assume for now that \(R_d = \delta _{d,k} I\), where \(\delta _{d,k}\) is defined as in (16). For simplicity of notation, we omit the iteration subscript in \(\delta _d\) and we let:

We want to analyse the difference in the eigenvalues of the matrices M and \(M+E\). For the rest of this subsection, let \(\lambda _i\) denote the ith smallest eigenvalue of M, \(\tilde{\lambda }_i\) the ith smallest eigenvalue of \(M+E\) and \(\lambda _i(t)\) the ith smallest eigenvalue of \(M+tE\), with \(t \in [0,1]\). The smallest eigenvalues of M (in the absolute value sense) will be increased after the addition of E, and this is of course desirable, since this was the main motivation for introducing the regularization. The following analysis provides perturbation bounds only for eigenvalues of M that satisfy \(|\lambda _i |> 2\Vert E\Vert \). We will assume also that the eigenvalues that we analyse are simple (i.e. their algebraic multiplicity is 1). The analysis can be extended to multiple eigenvalues; however, it gets unnecessarily complicated. Such an analysis is derived in the appendix of [21]. Let us now state a lemma derived in [22].

Lemma 2.1

Let M, E be square Hermitian matrices. Denote by \(\lambda _i(t)\) the ith smallest eigenvalue of \(M+tE\), and consider the eigenvector function x(t) such that: \((M+tE)x(t) = \lambda _i(t)x(t)\), with \(\Vert x(t)\Vert = 1\), for some \(t \in [0,1]\). If \(\lambda _i(t)\) is simple, then:

As observed in [21], if the eigenvector x(t) has small components in the positions corresponding to the dominant elements of E, then \(\frac{\partial \lambda _i(t)}{\partial t}\) is expected to be small. Let us now provide the following lemma, based on the developments in [23].

Lemma 2.2

Let \(\lambda _i \ne 0\) be an eigenvalue of M and \(Mx = \lambda _i x\), with \(\Vert x\Vert = 1\). Partitioning \(x = [x_1^H\ x_2^H]^H\), we have:

Proof

The proof follows exactly the developments in [23], but we provide it here for completeness. From the second block equation of \(M x = \lambda _i x\), we have:

where the latter is well defined since we have assumed that \(\lambda _i \ne 0\). By taking norms on both sides in the previous equation, we get:

But \(\Vert x\Vert = 1 \Rightarrow \Vert x_1\Vert = \sqrt{1 - \Vert x_2\Vert ^2}\). Hence, we have:

By solving the previous inequality, we get:

which completes the proof. \(\square \)
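The chain of inequalities used in this proof can be sketched as follows. The sketch assumes that the second block row of M is \([A\ \ 0]\), which is consistent with the quantity \(\phi _i\) appearing in Theorem 2.1:

\[
A x_1 = \lambda _i x_2 \ \Longrightarrow \ \Vert x_2\Vert \le \frac{\Vert A\Vert \, \Vert x_1\Vert }{|\lambda _i|} = \frac{\Vert A\Vert \sqrt{1 - \Vert x_2\Vert ^2}}{|\lambda _i|} \ \Longrightarrow \ \Vert x_2\Vert \le \frac{\Vert A\Vert }{\sqrt{\lambda _i^2 + \Vert A\Vert ^2}}.
\]

The last implication follows by squaring both sides and collecting the terms in \(\Vert x_2\Vert ^2\).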

The following lemma will be a useful tool for the analysis. We omit its trivial proof.

Lemma 2.3

Let \(f(x) = \frac{x}{\sqrt{a+x^2}}\), where \(a > 0\). Then, f(x) is a monotone increasing function for \(x > 0\).

Let us now bound the second block of the eigenvector function \(x_2(t)\) based on the developments in [23].

Lemma 2.4

Assume that \(\lambda _i \ne 0\) is the ith smallest eigenvalue of M. Consider the eigenvector function x(t) such that: \((M+ tE)x(t) = \lambda _i(t)x(t)\), with \(\Vert x(t)\Vert = 1\), \(\forall \ t \in [0,1]\). Partitioning \(x(t) = [x_1(t)^H\ x_2(t)^H]^H\) and assuming that \(|\lambda _i| > 2\Vert E\Vert \), we have that:

Proof

We omit the proof which follows from Lemma 2.3 combined with the previous developments. The interested reader can view [23], Lemma 2.8, for a detailed derivation which can directly be applied in our context. \(\square \)

Let us now derive the following theorem which bounds the difference between the ith smallest eigenvalues of the matrices M and \(M+E\), respectively.

Theorem 2.1

Let \(\lambda _i\) and \(\tilde{\lambda }_i\) be the respective ith smallest eigenvalues of M and \(M+E\) and define \(\phi _i = \frac{\Vert A\Vert }{\sqrt{(|\lambda _i| - 2\Vert E\Vert )^2 + \Vert A\Vert ^2}}\). For every i such that \(|\lambda _i| > 2\Vert E\Vert \), we have that:

Proof

From Lemma 2.1 and Lemma 2.4, it follows that:

The proof is complete. \(\square \)

Note that, since \(\phi _i < 1\), the latter is a tighter bound than the general bound provided by Weyl’s inequality, given that the eigenvalue under consideration is larger than \(2\Vert E\Vert \). From the previous results, we can draw several useful observations. As we already stated, the smaller the components of \(x_2(t)\) are, the smaller \(\frac{\partial \lambda _i(t)}{\partial t}\) is expected to be. But \(x_2(t)\) is bounded by \(\phi _i\). Hence, the smaller \(\phi _i\) is, the more insensitive the eigenvalue \(\lambda _i\) is to the perturbation \(\Vert E\Vert = \delta _d\). In fact, in the previous theorem we proved that the error in the eigenvalue is bounded by \(\Vert E\Vert \phi _i^2\).
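The bound \(\Vert E\Vert \phi _i^2\) can be compared with Weyl's bound \(\Vert E\Vert \) on the smallest possible instance. The sketch below is illustrative only: it assumes \(M = [-\varTheta ^{-1},\ A^\mathrm{T};\ A,\ 0]\) and \(E = [0,\ 0;\ 0,\ \delta _d I]\), takes \(n = m = 1\) with hypothetical scalar data, and uses the closed-form eigenvalues of a symmetric \(2 \times 2\) matrix (the helper `sym2_eigenvalues` is introduced for this purpose).

```python
import math

def sym2_eigenvalues(p, a, q):
    """Eigenvalues (ascending) of the symmetric 2x2 matrix [[p, a], [a, q]]."""
    mean = 0.5 * (p + q)
    radius = math.hypot(0.5 * (p - q), a)
    return [mean - radius, mean + radius]

theta, a, delta_d = 1.0, 0.5, 1e-2   # hypothetical scalar data (n = m = 1)

lam = sym2_eigenvalues(-1.0 / theta, a, 0.0)            # spectrum of M
lam_tilde = sym2_eigenvalues(-1.0 / theta, a, delta_d)  # spectrum of M + E

norm_E, norm_A = delta_d, abs(a)
report = []
for l, lt in zip(lam, lam_tilde):
    if abs(l) > 2 * norm_E:  # the bound only covers these eigenvalues
        phi = norm_A / math.sqrt((abs(l) - 2 * norm_E) ** 2 + norm_A ** 2)
        # (actual shift, bound ||E|| * phi_i^2, Weyl bound ||E||)
        report.append((abs(lt - l), norm_E * phi ** 2, norm_E))

for row in report:
    print(row)
```

For this data both eigenvalues of M exceed \(2\Vert E\Vert \) in absolute value, and the eigenvalue of larger magnitude moves far less than \(\Vert E\Vert \), as the discussion of \(\phi _i\) suggests. For larger matrices a symmetric eigenvalue routine would replace the closed form.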

Let us now examine the nature of \(\phi _i\). Firstly, one can see that it depends on the norm of the constraint matrix A, and from Lemma 2.3 we can observe that it is monotone increasing with respect to the norm of A. What this tells us is that the smaller the norm of the constraint matrix A is, the more insensitive the eigenvalues of matrix M are to the perturbation E. Of course, the latter holds only for eigenvalues that are sufficiently larger than \(2\Vert E\Vert \). On the other hand, from the definition of \(\phi _i\), we can see that it is beneficial to have a small \(\Vert E\Vert \), since then most of the eigenvalues of M are expected to satisfy: \(|\lambda _i| > 2\Vert E\Vert \).

We now shift our attention to the proposed tuning of the regularization parameters. From (17), the set of indices \(\mathcal {N}\) is such that: \(\max _j (\varTheta _{\mathcal {N}})_{jj} \Vert AA^\mathrm{T}\Vert _{\infty } \le \text {reg}_{thr}\). Also, from (16), we have that \(\delta _d = \max _j (\varTheta _{\mathcal {N}})_{jj} \Vert A_{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\Vert _{\infty }\). By combining the previous, we get:

Observe that if \(\Vert AA^\mathrm{T}\Vert _{\infty }\) is large, we allow few columns to enter the partition \(\mathcal {N}\). In this case, \(\phi _i\) is expected to be close to 1 for most of the eigenvalues \(\lambda (M)\). On the other hand, \(|\mathcal {N}|\) is increased if the infinity norm of \(AA^\mathrm{T}\) is small, and in such a case, \(\phi _i\) is expected to be small for many eigenvalues of the system matrix M. A more sophisticated choice for the regularization value based on the derived bounds is possible; however, the proposed regularization has two goals, namely not to perturb the system significantly and to introduce sparsity to the problem, and the chosen definition of \(\delta _d\) is computationally advantageous for both. Note that the previously presented bounds indicate that the sufficiently large (in the absolute value sense) eigenvalues of the system matrix (\(\gg 2\delta _d\)) will be perturbed only insignificantly. If some eigenvalues of the matrix are very small, the previous arguments break down. We will derive lower bounds for these eigenvalues in the next section.

Having introduced the diagonal uniform regularization \(\delta _dI\), let us examine the effect of further dropping the off-diagonal elements \(\text {off}(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})\) from the normal equations (12). For that, we define \(K = A\varTheta A^\mathrm{T} + \delta _d I\) and \(R = \text {off}(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})\) and consider the following generalized eigenvalue problem:

The previous is well defined since \(K \succ 0\). We will analyse the eigenvalues of \(K^{-\frac{1}{2}}RK^{-\frac{1}{2}}\), which is similar to \(K^{-1}R\). Now assume by contradiction that \(\lambda _{\max }(K^{-\frac{1}{2}}RK^{-\frac{1}{2}}) \ge 1\). Then from (18) and for some eigenvector u corresponding to the maximum eigenvalue, we would have:

By adding \(u^\mathrm{T} \text {diag}(A_{\mathcal {N}} \varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})u\) to both sides of the previous inequality, we get:

which is a contradiction. Hence, \(\lambda _{\max }(K^{-\frac{1}{2}}RK^{-\frac{1}{2}}) < 1\). On the other hand, if we assume by contradiction that \(\lambda _{\min }(K^{-\frac{1}{2}}RK^{-\frac{1}{2}} )\le -1\), from (18) and for an eigenvector u corresponding to the minimum eigenvalue, we would get:

However, using (16), we get \(\delta _d I + R \succ 0\); hence, \(-\delta _d u^\mathrm{T} u < u^\mathrm{T}Ru\), which contradicts the previous inequality. Hence, \(\lambda _{\min }(K^{-\frac{1}{2}}RK^{-\frac{1}{2}} )> -1\). Now, one can easily observe that:

where \(\rho (\cdot )\) is the spectral radius, and hence, the eigenvalues of \(K^{-1}(K-R)\) are clustered around 1. This supports the claim that further dropping the off-diagonal elements of the part of the normal equations corresponding to indices in \(\mathcal {N}\), after adding a uniform dual regularization, introduces a controlled perturbation.
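The clustering claim can be spelled out in one line, using only the two bounds derived above. Since \(K^{-1}R\) is similar to the symmetric matrix \(K^{-\frac{1}{2}}RK^{-\frac{1}{2}}\), its eigenvalues are real and lie in \((-1,1)\). Every eigenvalue \(\lambda \) of \(K^{-1}R\) corresponds to the eigenvalue \(1-\lambda \) of \(K^{-1}(K-R) = I - K^{-1}R\), so that:

$$\begin{aligned} \lambda \in \lambda (K^{-1}R) \subset (-1,1) \quad \Longrightarrow \quad 1 - \lambda \in \lambda \big (K^{-1}(K-R)\big ) \subset (0,2). \end{aligned}$$

In particular, \(K - R = K^{\frac{1}{2}}\big (I - K^{-\frac{1}{2}}RK^{-\frac{1}{2}}\big )K^{\frac{1}{2}}\) remains positive definite after the off-diagonal elements are dropped.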

2.4.2 Quadratic Programming

Unlike the case of linear programming, for the case of quadratic programming we employ a primal–dual regularization, that is, we use both \(R_p \succ 0\) and \(R_d \succ 0\), as shown in (5), to improve the spectral properties of the problem. For this case, we modify the condition for allowing a column to enter the set \(\mathcal {N}\), and at each iteration k, in place of (17), we require:

where \(\text {reg}_{thr,k}\) is updated as indicated in the linear programming case (Sect. 2.4.1). As before, by permuting the columns so that the first \(n_1\) correspond to indices in \(\mathcal {N}\) while the remaining ones correspond to indices in \(\mathcal {B}\), the augmented system in (10) takes the form:

where

and the permuted matrix Q is:

with \(Q_{\mathcal {N}} \in \mathbb {R}^{n_1 \times n_1}\), \(Q_{\mathcal {B}\mathcal {N}} \in \mathbb {R}^{n_2 \times n_1}\) and \(Q_{\mathcal {B}} \in \mathbb {R}^{n_2 \times n_2}\) being the respective blocks of the matrix Q, while \(R_{p\mathcal {N}} \in \mathbb {R}^{n_1 \times n_1}\) and \(R_{p\mathcal {B}} \in \mathbb {R}^{n_2 \times n_2}\) are the only two nonzero blocks of the block diagonal primal regularization matrix \(R_p\). As we mentioned earlier, when we solve general convex quadratic programming problems, it is dangerous to eliminate the (1,1) block of (10) and solve the problem using (12), since the latter system may become dense. However, in the linear programming case, our regularization matrix was tuned based on the properties of the normal equations. In order to overcome this problem, we introduce a primal regularization that can absorb the non-diagonal elements of the (1,1) block of the permuted augmented system (20). This allows us to safely (from the sparsity and computational point of view) pivot on this block and perform the analysis in a similar manner as in the linear programming case. Hence, we define:

with

where \(\varDelta _{p\mathcal {N}} \in \mathbb {R}^{n_1 \times n_1}\) is a uniform diagonal matrix, which ensures that \(R_{p\mathcal {N}}\) is positive definite and diagonally dominant. Although \(\varDelta _{p\mathcal {N}}\) can have sizeable values, (19) ensures that the respective elements in \(\varTheta _{\mathcal {N}}^{-1}\) have significantly larger values, making this perturbation acceptable. Using (21), the (1,1) block of (20) becomes:

where \(D_{p\mathcal {N}} = \text {diag}(Q_{\mathcal {N}}) + \varDelta _{p\mathcal {N}}\) is a diagonal matrix. For simplicity of notation, let

Pivoting on the (1,1) block of (20) results in the following partially reduced augmented system:

where

Using a similar reasoning as before, we will tune the matrix \(R_{p\mathcal {B}}\) so that sparsity is promoted. By looking at the (1,1) block of (23), one can see that an obvious choice for this matrix would be:

with

where \(\varDelta _{p\mathcal {B}} \in \mathbb {R}^{n_2 \times n_2}\) is a uniform diagonal matrix, which ensures that \(R_{p\mathcal {B}}\) is positive definite and diagonally dominant. Finally, by looking at the (2,2) block of (23), we can define \(R_d\) in a similar manner as in the linear programming case as:

with

where again \(\varDelta _d \in \mathbb {R}^{m \times m}\) is a uniform diagonal matrix, which ensures that \(R_d\) is positive definite and diagonally dominant. Note that condition (19), which defines the columns qualified to enter \(\mathcal {N}\), ensures that the positive elements of the diagonal matrices \(\varDelta _{p\mathcal {B}},\ \varDelta _d\) will be strictly less than \(\text {reg}_{thr,k}\), at every iteration k of the algorithm.

Motivation

As in the linear programming case, let us provide the motivation for the previously presented regularization scheme. We will derive some useful bounds that extend those provided in the motivation paragraph for the linear programming regularization. All the bounds stated here are direct applications of the results obtained in [23] and for simplicity are given without proofs. Let:

and denote by \(\lambda _i\) and \(\tilde{\lambda }_i\) the ith smallest eigenvalues of M and \(M +E\), respectively. Note that \(\varDelta _p\) is a permuted \(n\times n\) diagonal matrix, comprised of the two uniform primal regularization matrices \(\delta _{p\mathcal {N}}I_{n_1},\ \delta _{p\mathcal {B}}I_{n_2}\), with \(n_1 + n_2 = n\). Let \(\zeta _i = \min _{\mu \in \lambda (-Q-\varTheta ^{-1})} |\lambda _i - \mu |\), where \(\lambda _i \in \lambda (M)\), \(\lambda _i \not \in \lambda (-Q-\varTheta ^{-1})\) and \(\lambda _i \ne 0\). Let also \(Mx = \lambda _i x\), with \(\Vert x\Vert =1\). Partitioning \(x = [x_1^H\ x_2^H]^H\), it can be proved as before that:

A counterpart of Lemma 2.4 for this case follows from [23] and states that if \(|\lambda _i| > \delta _d + \Vert E\Vert \), then, \(\forall \ t \in [0,1]\):

Similarly, if \(\zeta _i > \Vert \varDelta _p\Vert + \Vert E\Vert \), then \(\forall \ t \in [0,1]\) we have:

where \(x(t) = [x_1(t)^H\ x_2(t)^H]^H\) solves the problem \((M+tE)x(t) = \lambda _i(t) x(t)\), for some \(t \in [0,1]\). For a detailed derivation of the previous results, the interested reader can look at [23], Lemmas 2.8, 2.9. Finally, the counterpart of Theorem 2.1 for this case states that for each i such that: \(|\lambda _i| > \delta _d + \Vert E\Vert \) and \(\zeta _i > \Vert \varDelta _p\Vert + \Vert E\Vert \), we have:

These bounds are slightly less intuitive than the ones provided for the linear programming case; however, similar arguments to those used in the linear programming case can be employed here, supporting the claim that the uniform regularization that we introduce does not perturb the sufficiently large (in the absolute value sense) eigenvalues of the non-regularized system significantly. We provide these bounds mainly for completeness. We could proceed by showing, as in the linear programming case, that further dropping \(\text {off}(Q_{\mathcal {N}}),\ \text {off}(Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})\) and \(\text {off}(A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}A_{\mathcal {N}}^\mathrm{T})\) (as the proposed non-diagonal regularization suggests) alters the eigenvalues of the diagonally regularized system in a controlled way, but for ease of presentation we defer this to a future study.

Rank-Deficient Matrices and the Value of \(\epsilon \)

Notice that both in the linear and in the quadratic programming cases, during some iterations of the IPM, no columns will satisfy the respective conditions for entering \(\mathcal {N}\). In order to ensure that rank deficiency will not get in the way of the proposed method, at every such iteration k, we apply a uniform dual regularization \(R_d = \text {reg}_{thr,k} I_m\), where \(\text {reg}_{thr,k}\) is updated as stated in Sect. 2.4.1. In the quadratic programming case, we also include a uniform primal regularization \(R_p = \text {reg}_{thr,k}I_n\). We expect that sufficiently large (in the absolute value sense) eigenvalues (\(\gg 2 \cdot \text {reg}_{thr,k}\)) of the system are perturbed insignificantly by using such a uniform regularization. Once at least one column enters \(\mathcal {N}\), we drop this uniform regularization and start using the regularization matrices presented in this paper.

Notice that \(\text {reg}_{thr}\) is not allowed to decrease below a pre-specified value \(\epsilon > 0\). We set this to: \(\epsilon = \max \{\frac{0.1 \cdot \text {tol}}{\Vert A\Vert ^2},10^{-13}\}\), where \(\text {tol}\) is the error tolerance for successful termination of the algorithm and is usually set to \(10^{-6}\) or \(10^{-8}\). This value is based on the bounds derived in the motivation paragraphs presented both for the linear and for the quadratic programming cases, so that \(\epsilon \phi _i^2\) is small.
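As a small worked illustration of this floor, the Python sketch below evaluates \(\epsilon \) for two hypothetical problems. The function name `regularization_floor` and the sample norms are assumptions made only for this example; the norm of A is taken as given rather than computed.

```python
def regularization_floor(tol, norm_A):
    """epsilon = max(0.1 * tol / ||A||^2, 1e-13), as stated in the text."""
    return max(0.1 * tol / norm_A ** 2, 1e-13)


# tol = 1e-6 and ||A|| = 1e2: the problem-dependent term is about 1e-11 and dominates.
eps_moderate = regularization_floor(1e-6, 1e2)

# tol = 1e-8 and ||A|| = 1e4: the problem-dependent term is about 1e-17,
# so the absolute floor 1e-13 takes over.
eps_large_norm = regularization_floor(1e-8, 1e4)
```

The absolute floor \(10^{-13}\) only becomes active for tight tolerances combined with constraint matrices of large norm.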

3 Spectral Analysis

This section focuses on analysing the spectral properties of the regularized systems provided in the previous section. As before, the analysis is split into linear and quadratic programming, respectively. For each of these cases, we will provide the spectral properties of the respective augmented and partially reduced augmented system, showing the effectiveness of the proposed regularization method.

3.1 Linear Programming

For linear programming problems, we employ only dual regularization, that is, we set \(R_p = 0\) and use only \(R_d \succ 0\). In Sect. 2.4.1, it was noted that \(\varDelta _{d}\) is chosen such that \(R_d \succ 0\) and diagonally dominant. This is very easy to see, by looking at the definition of (16) combined with (15). Since \(R_d\) is diagonally dominant, we are able to invoke the Gershgorin Circle Theorem, which states that if:

then any eigenvalue of \(R_d\) is positive and lies in one of the following discs:

where \(\delta _d\) is defined in (16), \(i = 1,\ldots ,m\). This yields: \(0 < \lambda _i \le \delta _d + r_i, \ \forall \ i = 1,\ldots ,m\), where \(\lambda _i\) represents the ith eigenvalue of \(R_d\). On the other hand, by construction, we know that \(\delta _d \ge r_i + \min _{j: (A_{\mathcal {N}}\varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})_{jj} > 0} ( (A_{\mathcal {N}}\varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})_{jj})\), \(\forall \ i = 1,\ldots ,m\) and hence,

Let us now analyse the spectral properties of the matrix in (13). For that we provide the following theorem, which gives bounds for the eigenvalues of the system. The proof is based on the developments in [24] and [25].

Theorem 3.1

For all \((x,z) > 0\) and \(R_d\) as defined in (15), the coefficient matrix of (13) has exactly n negative and m positive eigenvalues. Order and denote them as:

These eigenvalues satisfy the following bounds:

In case \(\text {rank} (A) < m\), the eigenspace of the eigenvalues originating only from \(R_d\) is \(\{0\}\times \text {Null}(A^\mathrm{T})\) and there are \(m - \text {rank}(A)\) such eigenvalues.

Proof

Firstly, from Sylvester’s law of inertia we know that since \(\varTheta \) and \(A \varTheta A^\mathrm{T} + R_d\) are positive definite, the regularized augmented system matrix of (13) possesses precisely n negative and m positive eigenvalues. If \(\mu \) is an eigenvalue of the linear system matrix of (13), then there are vectors \(u \in \mathbb {R}^n\) and \(p \in \mathbb {R}^m\) that cannot both be zero, using which the eigenvalue problem can be written in the following form:

As observed in [5], if \(\text {rank}(A) < m\), there are some eigenvalues of the matrix in (13) that satisfy: \(R_d p = \mu p\). The associated eigenspace is \(\{0\}\times \text {Null}(A^\mathrm{T})\).

If \(\mu < 0\), then \(u \ne 0\) since otherwise \(p = 0\) because \(R_d \succ 0\). On the other hand, if \(\mu > 0\), then \(p \ne 0\) since otherwise \(u = 0\) because \(\varTheta ^{-1} \succ 0\). Taking the inner product of the first equation of (29) with u and the second equation with p and subtracting the former from the latter give:

Using the fact that \(\varTheta ^{-1} \succ 0\), along with \(R_d \succ 0\), and assuming that \(\mu < 0\) (i.e. \(u \ne 0\)):

where the inequality follows because the left-hand side is as small as possible and we dropped the positive term \(p^\mathrm{T} R_d p\). But since \(\mu <0 \) in this case, we know that \(-\min _j(\varTheta ^{-1})_{jj} > \mu =\mu _{-1} \). Furthermore, if \(\mu < 0\), then we know that \(R_d-\mu I \succ 0\). Hence, it is invertible and we can solve the second equation of (29) with respect to p, substitute the result in the first equation and take the inner product with u to get:

Hence,

where we observed that the left-hand side has negative terms, took the most negative possible values for these terms and divided by \(u^\mathrm{T}u\). Note that for the second term of the left-hand side, we used the fact that for two positive definite matrices \(A,\ B\), we have that \(\lambda _{\min }(A+B) \ge \lambda _{\min }(A) + \lambda _{\min }(B)\). Solving the previous inequality with respect to \(\mu \) (and using the roots of the second-order equation), we get that:

Now, for the case where \(\mu > 0\) (where we know that \(p \ne 0\)), we solve the first equation of (29) with respect to u, substitute the result in the second one and take the inner product with p, to get:

Observe that \(\lambda _{\max } ((\frac{1}{\mu } \varTheta ^{-1} + I)^{-1}) \le 1\). Given that all the terms on the left-hand side are positive, we can take upper bounds for every term, multiply everything by \(\mu \) (since \(\mu >0\)) and divide both sides by \(p^\mathrm{T}p\). This gives us the following second-order inequality with respect to \(\mu \):

Solving the previous quadratic inequality gives:

where we used the rightmost upper bound given in (28). Working similarly using the same equation but slightly altered, that is:

and by taking lower bounds on each term of the left-hand side and rearranging them, we get the following inequality:

Solving the previous gives us the last bound:

which completes the proof. \(\square \)

Below we provide an analogous theorem applied to the matrix of (14). Again, we use the definition of \(R_d\) that is given in (15). With this in mind, we know that the (2,2) block of (14) is comprised of two diagonal matrices, i.e.:

where \(\varDelta _d\) is defined in (16). The proof is similar to that of the previous theorem, and hence, it is not provided here.

Theorem 3.2

For all \((x,z) > 0\) and \(R_d\) as defined in (15), the coefficient matrix of (14) has exactly \(n_2\) negative and m positive eigenvalues. Order and denote them as:

These eigenvalues satisfy the following bounds:

In case \(\text {rank} (A_{\mathcal {B}}) < m\), the eigenspace of the eigenvalues originating only from \(D^*\) is \(\{0\}\times \text {Null}(A_{\mathcal {B}}^\mathrm{T})\) and there are \(m - \text {rank}(A_{\mathcal {B}})\) such eigenvalues.

Now we can compare the bounds given in Theorems 3.1 and 3.2 and observe clear advantages of using the partially reduced augmented system (14) over the full augmented system (13). Firstly, one can easily note that \(-\min _j(\varTheta _{\mathcal {B}}^{-1})_{jj} = -\min _j(\varTheta ^{-1})_{jj}\); hence, the bound for the largest negative eigenvalue is identical for both systems. However, there are two main differences:

1. We have that \(\max _j(\varTheta _{\mathcal {B}}^{-1})_{jj} \le \max _j(\varTheta ^{-1})_{jj} \) (and usually \(\max _j(\varTheta _{\mathcal {B}}^{-1})_{jj} \ll \max _j(\varTheta ^{-1})_{jj} \)). As a consequence, the bound on the most negative eigenvalue of (13) will be larger (in the absolute value sense) than the bound on the respective eigenvalue of (14).

2. Our guaranteed lower bound for the minimum eigenvalue of \(R_d\) is smaller than the respective lower bound for the minimum eigenvalue of \(D^*\). In fact,

   $$\begin{aligned}&\min _i D^*_{ii} \ge \delta _d ,\\&\lambda _{\min }(R_d) \ge \min _{j: (A_{\mathcal {N}}\varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})_{jj} > 0} ((A_{\mathcal {N}}\varTheta _{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T})_{jj}), \end{aligned}$$

   where \(\delta _d\) is defined in (16) and the second lower bound is given in (28). By construction, the first bound is better. As a consequence, the smallest positive eigenvalue of (14) is guaranteed to be at least as large as \(\delta _d\).

3.2 Quadratic Programming

For quadratic programming problems, we employ a primal–dual regularization. In Sect. 2.4.2, it was noted that \(\varDelta _{d}\) is chosen such that \(R_d\) is positive definite and diagonally dominant, while \(\varDelta _{p\mathcal {B}}\) is chosen such that \(R_{p\mathcal {B}}\) is positive definite and diagonally dominant. This can be seen by looking at (27) combined with (26) and (25) combined with (24), respectively. Similarly, positive definiteness and diagonal dominance of \(R_{p\mathcal {N}}\) follow immediately by construction, i.e. by looking at equations (21) and (22). For notational convenience, we define:

- For \(R_d\), we are able to invoke the Gershgorin circle theorem as in the linear programming case, stating that if:

  $$\begin{aligned} r_i = \sum _{j=1,j\ne i}^{m} | (A_{\mathcal {N}} \bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{ij} |, \end{aligned}$$

  then any eigenvalue of \(R_d\) is positive and lies in one of the following discs:

  $$\begin{aligned} \{\lambda : |\lambda - \delta _d| \le r_i \}, \end{aligned}$$

  where \(\delta _d = \max _{j}(\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert A_{\mathcal {N}} A_{\mathcal {N}}^\mathrm{T}\Vert _{\infty } \), \(i = 1,\ldots ,m\). This yields: \(0 < \lambda _{i} \le \delta _d + r_i, \ \forall \ i = 1,\ldots ,m\), where \(\lambda _i\) is the ith eigenvalue of \(R_d\). On the other hand, by construction we know that \(\delta _d \ge r_i + \min _{j: (A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj} > 0} ((A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj})\), \(\forall \ i = 1,\ldots ,m\), and hence,

  $$\begin{aligned} \min _{j:(A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj} > 0} ((A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj}) \le \lambda _{i} \le \delta _d + r_i < 2 \delta _d. \end{aligned}\tag{30}$$

- For \(R_{p\mathcal {B}}\), we apply the same theorem; however, in this case we have:

  $$\begin{aligned} r_i = \sum _{j=1,j\ne i}^{n_2} | (Q_{\mathcal {B}\mathcal {N}} \bar{Q}_{\mathcal {N}}^{-1} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{ij} |, \end{aligned}$$

  and any eigenvalue of \(R_{p\mathcal {B}}\) is positive and lies in one of the following discs:

  $$\begin{aligned} \{\lambda : |\lambda - \delta _{p\mathcal {B}}| \le r_i \}, \end{aligned}$$

  where \(\delta _{p\mathcal {B}} = \max _{j}(\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert Q_{\mathcal {B}\mathcal {N}} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}\Vert _{\infty } \), \(i = 1,\ldots ,n_2\). As before, we know that:

  $$\begin{aligned} \min _{j:(Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj} > 0} ((Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj}) \le \lambda _{i} \le \delta _{p\mathcal {B}} + r_i < 2 \delta _{p\mathcal {B}},\ \ \forall \ i =1,\ldots ,n_2=|\mathcal {B}|, \end{aligned}\tag{31}$$

  where \(\lambda _i\) is the ith eigenvalue of \(R_{p\mathcal {B}}\).

- Finally, we can work similarly to examine the spectral properties of \(R_{p\mathcal {N}}\). Again, by letting:

  $$\begin{aligned} r_i = \sum _{j=1,j\ne i}^{n_1} | (Q_{\mathcal {N}})_{ij} |, \end{aligned}$$

  any eigenvalue of \(R_{p\mathcal {N}}\) is positive and lies in one of the following discs:

  $$\begin{aligned} \{\lambda :|\lambda -\delta _{p\mathcal {N}}| \le r_i \}, \end{aligned}$$

  where \(\delta _{p\mathcal {N}} = \Vert Q_{\mathcal {N}}\Vert _{\infty }\), \(i = 1,\ldots ,n_1\). This yields: \(0 < \lambda _i \le \delta _{p\mathcal {N}} + r_i\), \(\forall \ i =1,\ldots ,n_1\), where \(\lambda _i\) is the ith eigenvalue of \(R_{p\mathcal {N}}\). But since \(Q_{\mathcal {N}} \succeq 0\) as a principal submatrix of \(Q \succeq 0\), we know that if a diagonal element of \(Q_{\mathcal {N}}\) is zero, then its respective column and row are also zero. Hence, this implies tighter final bounds, that is:

  $$\begin{aligned} \min _{j:(Q_{\mathcal {N}})_{jj} > 0} ( (Q_{\mathcal {N}})_{jj})< \lambda _i \le \delta _{p\mathcal {N}} + r_i < 2\delta _{p\mathcal {N}},\ \ \forall \ i =1,\ldots ,n_1=|\mathcal {N}|. \end{aligned}\tag{32}$$
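The disc computations above can be checked numerically on a toy instance. The Python sketch below is only an illustration under stated assumptions: the regularization matrix is taken to have constant diagonal \(\delta \) and off-diagonal entries inherited from a weighted Gram matrix \(S = A_{\mathcal {N}} W A_{\mathcal {N}}^\mathrm{T}\), where the diagonal weights W stand for \(\bar{Q}_{\mathcal {N}}^{-1}\) (or \(\varTheta _{\mathcal {N}}\) in the linear programming case). The data and the helper names are hypothetical.

```python
def weighted_gram(A, w):
    """S = A diag(w) A^T for a dense matrix A given as a list of rows."""
    m = len(A)
    return [[sum(A[i][k] * w[k] * A[j][k] for k in range(len(w)))
             for j in range(m)] for i in range(m)]


def inf_norm(M):
    """Infinity norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in M)


def gershgorin_bounds(S, delta):
    """Intervals [delta - r_i, delta + r_i], with r_i = sum_{j != i} |S_ij|."""
    m = len(S)
    radii = [sum(abs(S[i][j]) for j in range(m) if j != i) for i in range(m)]
    return [(delta - r, delta + r) for r in radii]


# Toy data: three constraints, two columns in N.
A_N = [[1.0, 2.0],
       [0.0, 1.0],
       [1.0, 0.0]]
weights = [0.01, 0.02]

S = weighted_gram(A_N, weights)
# delta = max_j w_j * ||A_N A_N^T||_inf, mirroring the definition of delta_d.
delta_d = max(weights) * inf_norm(weighted_gram(A_N, [1.0, 1.0]))
discs = gershgorin_bounds(S, delta_d)
min_pos_diag = min(S[i][i] for i in range(len(S)) if S[i][i] > 0)
```

For this instance, every interval lies between the smallest positive diagonal entry of S and \(2\delta _d\), in agreement with the shape of the bounds (30)–(32).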

Let us now analyse the spectral properties of (20). For that, we provide the following theorem, which is the extension of Theorem 3.1 for the QP case. The proof is almost identical, and hence, it is not provided here. For notational convenience, let:

Theorem 3.3

For all \((x,z) > 0\) and \(R_d,\ R_{p\mathcal {B}},\ R_{p\mathcal {N}}\) as defined in (26), (24) and (21), respectively, the coefficient matrix of (20) has exactly n negative and m positive eigenvalues. Order and denote them as:

These eigenvalues satisfy the following bounds:

In case \(\text {rank} (A) < m\), the eigenspace of the eigenvalues originating only from \(R_d\) is \(\{0\}\times \text {Null}(A^\mathrm{T})\) and there are \(m - \text {rank}(A)\) such eigenvalues.

Below we provide a similar theorem, applied to (23). For that, we will use \(R_d,\ R_{p\mathcal {B}},\ R_{p\mathcal {N}}\) as defined in Sect. 2.4.2 as well as the respective eigenvalue bounds given in (30), (31) and (32). Using the definitions of the regularization matrices, we know that the matrix in the (1,1) block of (23) takes the form:

while the (2,2) block of (23) becomes:

Theorem 3.4

For all \((x,z) > 0\) and \(R_d,\ R_{p\mathcal {B}},\ R_{p\mathcal {N}}\) as defined in (26), (24) and (21), respectively, the coefficient matrix of (23) has exactly \(n_2\) negative and m positive eigenvalues. Order and denote them as:

These eigenvalues satisfy the following bounds:

In case \(\text {rank} (A_{\mathcal {B}}-A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}) < m\), the eigenspace of the eigenvalues originating only from \(D^*\) is \(\{0\} \times \text {Null} (A_{\mathcal {B}}^\mathrm{T}-Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}A_{\mathcal {N}}^\mathrm{T})\) and there are \(m - \text {rank} (A_{\mathcal {B}}-A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})\) such eigenvalues.

Let us compare the bounds given in Theorems 3.3 and 3.4 to observe once again the advantages of using the partially reduced augmented system (23) over the full augmented system (20). There are three significant differences in the eigenvalue bounds of these two systems:

1. For the bound on the largest negative eigenvalue of the two systems, we know that:

   $$\begin{aligned} \lambda _{\min }(H) \ge \min _j (\varTheta ^{-1})_{jj} + \lambda _{\min }(R_p), \end{aligned}$$

   where

   $$\begin{aligned} \lambda _{\min }(R_p) \ge \min \bigg \{ \min _{j: (Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj}> 0} ( (Q_{\mathcal {B}\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1}Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj}),\ \min _{j: (Q_{\mathcal {N}})_{jj} > 0} ( (Q_{\mathcal {N}})_{jj}) \bigg \}, \end{aligned}$$

   from (31) and (32), respectively. However, since \(\min _j (\varTheta _{\mathcal {B}}^{-1})_{jj} \ll \min _j (\varTheta _{\mathcal {N}}^{-1})_{jj}\), we can conclude that:

   $$\begin{aligned} \lambda _{\min }(H) \ge \min _j (\varTheta _{\mathcal {B}}^{-1})_{jj} + \lambda _{\min }(R_p), \end{aligned}$$

   while

   $$\begin{aligned} \lambda _{\min }(\bar{H}) \ge \min _j (\varTheta _{\mathcal {B}}^{-1})_{jj} +\max _{j}(\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert Q_{\mathcal {B}\mathcal {N}} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}\Vert _{\infty } - \max _j (Q_{\mathcal {B}\mathcal {N}} \bar{Q}_{\mathcal {N}}^{-1} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj}, \end{aligned}$$

   where we used (25) as the definition of \(\varDelta _{p\mathcal {B}}\). We observe that the difference:

   $$\begin{aligned} \max _{j}(\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert Q_{\mathcal {B}\mathcal {N}} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}\Vert _{\infty } - \max _j (Q_{\mathcal {B}\mathcal {N}} \bar{Q}_{\mathcal {N}}^{-1} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T})_{jj} \end{aligned}$$

   increases as more elements enter the set \(\mathcal {N}\). On the other hand, \(\lambda _{\min }(R_p)\) is expected to decrease at every iteration of the interior point method. Hence, the bound on \(\bar{\mu }_{-1}\) is expected to be better than that on \(\mu _{-1}\), as more elements enter the partition \(\mathcal {N}\).

2. For the bound on the most negative eigenvalue of the two systems, we know that:

   $$\begin{aligned} \lambda _{\max }(H) \le \lambda _{\max }(Q) +\max _j (\varTheta ^{-1})_{jj} + \lambda _{\max }(R_p), \end{aligned}$$

   where \(\lambda _{\max }(R_p) \le 2\max \{\delta _{p\mathcal {N}},\delta _{p\mathcal {B}}\}\). However, since \(\max _j (\varTheta _{\mathcal {N}}^{-1})_{jj} \ge \max _j (\varTheta _{\mathcal {B}}^{-1})_{jj}\), we observe that:

   $$\begin{aligned} \lambda _{\max }(H) \le \lambda _{\max }(Q) + \max _j (\varTheta _{\mathcal {N}}^{-1})_{jj} + \lambda _{\max }(R_p), \end{aligned}$$

   where we used the definition of \(\varDelta _{p\mathcal {N}}\) given in (22). On the other hand,

   $$\begin{aligned} \lambda _{\max }(\bar{H})\le \lambda _{\max }(Q_{\mathcal {B}}) + \max _j (\varTheta _{\mathcal {B}}^{-1})_{jj} + (\varDelta _{p\mathcal {B}})_{ii},\quad \forall \ i \in \{1,\ldots ,n_2\}, \end{aligned}$$

   where, from (25), we know that \((\varDelta _{p\mathcal {B}})_{ii} = \max _j (\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert Q_{\mathcal {B}\mathcal {N}} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}\Vert _{\infty },\ \forall \ i \in \{1,\ldots ,n_2\}\). Clearly the bound on \(\lambda _{\max }(\bar{H})\) is significantly smaller than that on \(\lambda _{\max }(H)\), since it is usually the case that \(\max _j (\varTheta _{\mathcal {N}}^{-1})_{jj} \gg \max _j (\varTheta _{\mathcal {B}}^{-1})_{jj}\), while \(\lambda _{\max }(R_p) > \max _j (\bar{Q}_{\mathcal {N}}^{-1})_{jj} \Vert Q_{\mathcal {B}\mathcal {N}} Q_{\mathcal {B}\mathcal {N}}^\mathrm{T}\Vert _{\infty }\). Hence, the most negative eigenvalue of (23) is expected to have a significantly smaller magnitude than that of (20).

3. As in the LP case, our guaranteed lower bound for the minimum eigenvalue of \(R_d\) is smaller than the respective lower bound for the minimum eigenvalue of \(D^*\). In fact,

   $$\begin{aligned}&\min _i D^*_{ii} \ge \delta _d ,\\&\lambda _{\min }(R_d) \ge \min _{j: (A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj} > 0} ((A_{\mathcal {N}}\bar{Q}_{\mathcal {N}}^{-1} A_{\mathcal {N}}^\mathrm{T})_{jj}), \end{aligned}$$

   where we use \(\delta _d\) as defined in (27), while the last inequality follows from (30). By construction, the first bound is better. As a consequence, the smallest positive eigenvalue of (23) is guaranteed to be at least as large as \(\delta _d\).

4 Implementation and Numerical Results

4.1 The Algorithmic Framework

We now provide a generic algorithm (IPM-NDR), which summarizes the infeasible primal–dual IPM with non-diagonal regularization. At each iteration, the algorithm solves the Newton system arising from the optimality conditions of (1)–(2) using a direct method. Note that this is just a general outline and does not contain the actual details of the implemented method; these are presented in the next subsection. In the algorithm, we distinguish between linear and quadratic programming problems by using the logical variables LP and QP, respectively.

4.2 Implementation Details

We implemented the algorithm in MATLAB. Our implementation solves linear and convex quadratic programming problems in the standard form. However, all the free variables are treated as variables bounded by some box constraints. We set some initial bounds,

for all the free variables. If the method pushes some of these variables to take values outside of this box, then the respective bounds are increased to give the variables room to grow. Note that, for a few problems, this heuristic requires extra iterations to converge, since every time the box constraints are changed, the method loses primal feasibility.

Regularization

We set \(\text {reg}_{thr,0} = 1\), and we decrease it at the same rate as \(\mu _k\) decreases, until it becomes smaller than \(\epsilon = \max \big \{\frac{\text {tol}\cdot 10^{-1}}{\Vert A\Vert _2^2},10^{-13} \big \}\). From then on, it is fixed at \(\epsilon \) for the rest of the optimization process. As before, \(\text {tol}\) is the error tolerance specified by the user. At every iteration, we enable columns to enter the set \(\mathcal {N}\) only if: \(\max _{j \in \mathcal {N}} (\varTheta )_{jj} \max \big \{\Vert AA^\mathrm{T}\Vert _{\infty },\Vert QQ^\mathrm{T}\Vert _{\infty }\big \} \le \text {reg}_{thr,k}\). This ensures that \((\varDelta _{d})_{ii}\), as defined in (16) and (27) for linear and convex quadratic problems, respectively, is smaller than \(\text {reg}_{thr,k}, \forall i \in \{1,\ldots ,m\},\ \forall \ k \ge 0\). The latter also holds for \((\varDelta _{p\mathcal {B}})_{ii}\) as in (25), \(\forall i \in \{1,\ldots ,n_2\}\), which is only defined for quadratic programming problems. Of course, for linear programming problems we have \(R_p = 0\). Note that during the first iterations of the method, \(\mathcal {N}\) is usually empty. In order to avoid instability, we then include a uniform dual regularization \(R_d = \text {reg}_{thr,k} I_m\). For the quadratic programming case, we also include a uniform primal regularization, that is: \(R_p = \text {reg}_{thr,k} I_n\). This uniform regularization is dropped when \(\mathcal {N}\) is non-empty. As an extra safeguard, when the factorization of the system fails, we increase \(\text {reg}_{thr}\) by a factor of 10 and repeat the factorization. If this process is repeated six consecutive times, we stop the method. All other implementation details concerning the regularization follow from Sect. 2.
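The threshold schedule and the factorization safeguard described above could be organized as in the following Python sketch. This is not the authors' MATLAB code: the callback `try_factorize` (returning `None` on failure) and both function names are hypothetical, and the reading that "six consecutive times" means six successive increases before stopping is an assumption.

```python
def update_threshold(reg_thr, mu_prev, mu_new, eps):
    """Shrink reg_thr at the same rate as mu; once it reaches eps it stays there."""
    if reg_thr <= eps:
        return eps
    return max(reg_thr * (mu_new / mu_prev), eps)


def factorize_with_safeguard(try_factorize, reg_thr, max_retries=6):
    """Retry a failed factorization with reg_thr increased by a factor of 10.

    try_factorize : hypothetical callback, returns factors or None on failure
    Returns (factors, reg_thr_used); raises RuntimeError after max_retries
    consecutive increases without success.
    """
    retries = 0
    while True:
        factors = try_factorize(reg_thr)
        if factors is not None:
            return factors, reg_thr
        if retries == max_retries:
            raise RuntimeError("factorization failed after repeated "
                               "regularization increases")
        reg_thr *= 10.0
        retries += 1


# Toy usage: a stand-in factorization that only succeeds for reg_thr > 5e-4.
def fake_factorize(reg_thr):
    return "factors" if reg_thr > 5e-4 else None


result, used_thr = factorize_with_safeguard(fake_factorize, 1e-6)
```

Keeping the retry logic separate from the threshold schedule makes it easy to change either heuristic without touching the other.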

Newton Step Computation

For general convex quadratic problems, the Newton direction is calculated from system (23), after computing its symmetric \(LDL^\mathrm{T}\) decomposition, where L is a lower triangular matrix and D is diagonal. For that, we use the built-in MATLAB symmetric decomposition (i.e. ldl). We know that such a decomposition always exists, with D diagonal, for the aforementioned system, since after introducing the regularization, the matrix of (23) is guaranteed to be quasi-definite, a class of matrices known to be strongly factorizable [4]. For that reason, we change the default pivot threshold of ldl to \(10^{-14}\). We use such a small pivot threshold in order to avoid any \(2 \times 2\) pivots during the factorization routine. For linear programming problems, we solve the system (12) (with \(Q = 0\)), using the built-in Cholesky decomposition of MATLAB (i.e. chol). \(\varDelta x\) is then recovered from (11). In the quadratic programming case, \(\varDelta s\) is recovered from (7). In both cases, \(\varDelta z\) is recovered from (9) and \(\varDelta r\) from (6).
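The role of quasi-definiteness can be illustrated with a textbook dense \(LDL^\mathrm{T}\) factorization without pivoting. The Python sketch below is not the MATLAB ldl routine used in the implementation. It assumes a matrix of the form \([-H,\ B^\mathrm{T};\ B,\ D]\) with H and D positive definite (the sign convention is an assumption), and it uses Sylvester's law of inertia to read the number of negative and positive eigenvalues from the signs of the pivots. The function names and the data are hypothetical.

```python
def ldl_no_pivot(M):
    """LDL^T of a symmetric matrix without pivoting (1x1 pivots only).

    Returns (L, d) with L unit lower triangular and d the diagonal of D.
    Assumes every pivot is nonzero, which holds for quasi-definite matrices.
    """
    n = len(M)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for j in range(n):
        d[j] = M[j][j] - sum(L[j][k] ** 2 * d[k] for k in range(j))
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] * d[k] for k in range(j))
            L[i][j] = (M[i][j] - s) / d[j]
    return L, d


def inertia_from_pivots(d):
    """(number of negative pivots, number of positive pivots)."""
    return sum(1 for v in d if v < 0), sum(1 for v in d if v > 0)


# Toy quasi-definite matrix with n = 2 (negative block) and m = 1 (positive block).
M = [[-2.0, 0.0, 1.0],
     [0.0, -1.0, 1.0],
     [1.0, 1.0, 0.5]]
L, d = ldl_no_pivot(M)
n_neg, n_pos = inertia_from_pivots(d)
```

In this instance, the pivots have two negative signs and one positive sign, matching the block sizes, which is the inertia pattern that the theorems of Sect. 3 state for the regularized systems.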

Starting Point

We have already mentioned that the method is infeasible, and hence, the starting point does not need to be primal and dual feasible. The only requirement is that the initial values of the variables \(x,\ z\) are strictly positive. We use a starting point that was proposed in [26]. Here we will only state it for completeness. To construct this point, we try to solve the pair of problems (P), (D), but we ignore the non-negativity constraints. Such relaxed problems have closed form solutions:

Then, in order to guarantee positivity and sufficient magnitude of x, z, we compute the expressions \(\delta _x = \text {max}(-1.5 \text {min}\{\tilde{x}_i\},0)\) and \(\delta _z = \text {max}(-1.5 \text {min}\{\tilde{z}_i\},0)\) and we obtain:

where e is the vector of ones of appropriate dimension. Finally, we define the starting point by setting:

Centring Parameter

As minimum and maximum centring parameters, we fix \(\sigma _{\min } = 0.05\) and \(\sigma _{\max } = 0.95\). In the first iteration, we use \(\sigma _0 = 0.5\). Then, at each iteration k, in order to determine the centring parameter \(\sigma _k\), we perform the following operations:

where \(a_x^{k-1}, a_z^{k-1}\) are the step lengths in directions \(\varDelta x,\ \varDelta z\) of the previous iteration, respectively. Then, we assign:

and finally

The latter is a heuristic which performs well in infeasible IPM implementations.

Step Length Computation

In order to calculate the step length, we apply the fraction to the boundary rule, that is, we compute the largest step lengths to the boundary of the non-negative orthant, i.e.:

and we set:

where \(\tau \in \ ]0,1[\) is set to \(\tau = 0.995\). The constant \(\tau \) acts as a safeguard against bad directions. Taking a full step towards a direction can potentially push the iterates of the algorithm close to the boundary. This in turn can significantly slow down the convergence of the method. The primal variables x, r are updated using the step length \(a_x\) while the dual variables \(y,\ s,\ z\) are updated using the step length \(a_z\).
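A common form of the fraction-to-the-boundary rule is sketched below in Python. It assumes that the largest step to the boundary is capped at 1 and then multiplied by \(\tau \); the function names are hypothetical, and the expressions used in the implementation may differ in detail.

```python
def max_step_to_boundary(v, dv):
    """Largest alpha in [0, 1] such that v + alpha * dv >= 0 componentwise.

    Assumes v > 0. Only components with a negative direction limit the step.
    """
    alpha = 1.0
    for vi, dvi in zip(v, dv):
        if dvi < 0.0:
            alpha = min(alpha, -vi / dvi)
    return alpha


def step_lengths(x, dx, z, dz, tau=0.995):
    """Primal and dual step lengths, damped by the safeguard tau."""
    a_x = tau * max_step_to_boundary(x, dx)
    a_z = tau * max_step_to_boundary(z, dz)
    return a_x, a_z
```

Because \(\tau < 1\), the damped step keeps every component of x and z strictly positive, even when the undamped step would land exactly on the boundary.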

Termination Criteria

Finally, the algorithm is terminated either when the maximum number of iterations specified by the user is reached or when all the following three conditions are satisfied:

and

where \(\text {tol}\) is the tolerance specified by the user.

4.3 Numerical Results

We have made a particular effort to keep the implementation as simple as possible, so that the regularization effects can easily be seen and analysed. For that reason, we applied scaling only to problems which required it to converge, and this was needed only for 5 out of the 218 problems solved. Similarly, no predictor-corrector technique was included. We tested our method on problems coming from the Netlib collection [27] as well as on a set of convex quadratic programming problems given in [28]. We present the numerical results, firstly for linear programming problems and then for quadratic programming ones. In order to demonstrate the effects of the proposed regularization method, we will compare it with an interior point method that uses a uniform regularization. This uniform regularization scheme can be interpreted as the application of a standard proximal point method, in contrast to the proposed method, which can be interpreted as the application of a generalized proximal point method. The experiments were conducted on a PC with a 2.2 GHz Intel Core i5 processor (dual core) and 4 GB RAM, running a Linux operating system. The MATLAB version used was R2018a.

Linear Programming Problems

As we have already stated, for linear programming problems we use only dual regularization, that is, we set \(R_p = 0\) and \(s = 0\) in (\(P_{r}\))–(\(D_{r}\)). For that reason, we will compare our method with an algorithm that uses a uniform dual regularization, \(R_d = \text {reg}_{thr,k} I_m,\ \forall k \ge 0\), where \(\text {reg}_{thr,k}\) is updated as indicated in the previously presented Regularization paragraph. If \(\mathcal {N} = \emptyset \), the two methods are exactly the same. Hence, the difference between the methods arises when some columns of the constraint matrix have entered the set \(\mathcal {N}\). The tolerance used in the experiments for the linear programming problems was \(\text {tol}= 10^{-6}\). We will not use a smaller tolerance because our method does not have primal regularization. As a consequence, if some elements of \(\varTheta _{\mathcal {B}}\) become very large, this can create numerical instability if there is no primal regularization to keep such entries manageable in terms of machine precision. As an extra safeguard, when the factorization fails, we increase the uniform regularization value by a factor of 10 until the factorization is completed successfully. Finally, we set the maximum iterations of the method to be \(\text {maxit} = 200\). If this number is reached, the algorithm stops, indicating that the optimal solution was not found. To conclude, we use:

The statistics of runs of the proposed IPM with non-diagonal regularization and of the previously mentioned IPM with uniform regularization, over the Netlib test set, are collected in Table 1. Notice that Table 1 contains only a subset of the 96 problems of the Netlib collection. All problems for which the set \(\mathcal {N}\) stayed empty throughout the whole optimization process have been excluded. In this case, the two methods are completely equivalent.

Both the IPM with non-diagonal regularization and the IPM with uniform regularization solved all 96 problems of the Netlib collection. The former did so in 146.6 s and a total of 3322 IPM iterations. The latter needed 165.7 s and a total of 3442 iterations. In other words, the IPM using the proposed regularization solved the whole set in 11.5% less time, requiring 3% fewer iterations. The computational benefits of the non-diagonal regularization become obvious in the larger instances of the Netlib collection. See, for example, problems DFL001, QAP15 in Table 1.

We also include Table 2, in which the factorization times are compared when using non-diagonal and uniform regularization, respectively, over the last four iterations of problems DFL001 and GREENBEA. The size of the respective constraint matrices also includes columns which were added to transform the problems to the standard form. Extra information, concerning the cardinality of the partition \(\mathcal {N}\), the iteration count as well as the time needed to compute the Cholesky factorization of the system matrix at the respective iteration, is collected in Table 2.

Analysing the results reported in Tables 1 and 2, one can observe that while the proposed non-diagonal regularization matrix does not affect the convergence of the method, it can accelerate the factorization significantly through the sparsity that it introduces in the system matrix. Notice that for both DFL001 and GREENBEA, almost half of their columns lie in the partition \(\mathcal {N}\) and this does not prevent the algorithm from converging.

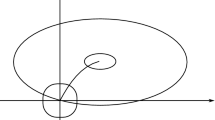

Finally, in order to present the importance of regularization, as well as the overall comparison of the two different regularization schemes, we also include Fig. 1, which contains the performance profiles, over the whole Netlib set, of three different methods. The green triangles correspond to the IPM with non-diagonal regularization. The red stars correspond to the IPM with uniform regularization, and finally, the blue crosses correspond to an IPM without regularization. In Fig. 1a, we present the performance profiles with respect to the total time to convergence, while in Fig. 1b the performance profiles with respect to the total number of iterations. The horizontal axis (in logarithmic scale) represents the performance ratio with respect to the best performance achieved by one of the three methods for each problem. For example, 2 in the horizontal axis is interpreted as: “what percentage of problems was solved by each method, in at most 2 times the best achieved time for each problem”. The vertical axis shows the percentage of problems solved by each method for different values of the performance ratio. Efficiency is measured by the rate at which each of the lines increases, as the ratio increases. Robustness is measured by the maximum percentage achieved by each of the methods. For more information about performance profiles, we refer the reader to [29], where this benchmarking scheme was proposed.
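The construction of a performance profile, as described above, can be sketched in Python as follows. The function name and the data layout are assumptions made for illustration; a failed run is encoded as an infinite cost.

```python
import math


def performance_profile(costs, ratios):
    """Fraction of problems each solver handles within a ratio of the best.

    costs[s][p] is the cost (time or iterations) of solver s on problem p,
    with math.inf marking a failure. For each solver, the result holds one
    fraction per entry of `ratios`.
    """
    n_problems = len(costs[0])
    # Best cost achieved by any solver on each problem.
    best = [min(c[p] for c in costs) for p in range(n_problems)]
    profile = []
    for c in costs:
        row = []
        for r in ratios:
            solved = sum(
                1 for p in range(n_problems)
                if math.isfinite(c[p]) and c[p] <= r * best[p]
            )
            row.append(solved / n_problems)
        profile.append(row)
    return profile


# Hypothetical costs of two solvers on four problems (not measured data).
costs = [[1.0, 4.0, 2.0, 3.0],
         [2.0, 2.0, math.inf, 3.0]]
profile = performance_profile(costs, ratios=[1.0, 2.0, 4.0])
```

The value reached at the largest ratio corresponds to the robustness of a solver, while the values at small ratios reflect its efficiency.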

By looking at Fig. 1, one can observe the importance of regularization in terms of robustness of the method. The IPM scheme that does not employ any regularization fails to solve 18.75% of the problems in the Netlib collection. On the other hand, the IPM with non-diagonal regularization is more efficient in terms of time to convergence, when compared to the other two methods. Notice that this is not the case for the IPM using uniform regularization, which is less efficient than the other two methods for 70% of the problems. As expected, the IPM that does not use regularization converges in fewer iterations for most of the problems that it successfully solves, since the regularized schemes perturb the Newton system. This perturbation is benign, in the sense that it allows us to significantly improve the robustness of the method.

Convex Quadratic Programming Problems

For this class of problems, we employ a primal–dual dynamic regularization. Hence, we will compare our method with an algorithm that uses a uniform primal–dual regularization. Such a method adds two uniform diagonal matrices \(R_p = \text {reg}_{thr,k} I\) and \(R_d = \text {reg}_{thr,k} I\) to the (1,1) and (2,2) blocks of the augmented system, respectively. This scheme can be interpreted as the primal and dual application of the standard proximal point method, in contrast to the proposed regularization scheme, which is the primal and dual application of a generalized proximal point method. As an extra safeguard, when the factorization fails, we increase the uniform regularization value by a factor of 10 until the factorization is completed successfully. The tolerance used in the experiments for this class of problems was \(\text {tol}= 10^{-8}\). As in the linear programming case, we set the maximum iterations of the method to be \(\text {maxit} = 200\). To conclude, we use:

For this problem set, the algorithm did not employ any scaling in the problem matrices. The computational results, obtained with the proposed non-diagonally regularized IPM and with the previously mentioned uniformly regularized IPM, over the Maros and Mészáros repository of convex quadratic programming problems, are presented in Table 3. As before, Table 3 contains only a subset of the 122 problems of the collection. All problems for which the set \(\mathcal {N}\) stayed empty throughout the whole optimization process have been excluded.

In contrast to the linear programming case, the results collected in Table 3 do not demonstrate any significant advantage in terms of sparsity of linear systems achievable by the new regularization technique. This is a consequence of the fact that the problems under consideration are of small to medium size, while the overhead of setting up the partially reduced augmented system (23) is time-consuming in MATLAB, where manipulating a permuted matrix is costly, due to MATLAB’s default mechanism of storing matrices by columns. Nevertheless, both the IPM with non-diagonal regularization and the IPM with uniform regularization solved all 122 problems. The former required 386.1 s and a total of 4162 IPM iterations. The latter required 400.2 s and a total of 4170 iterations. In other words, the non-diagonal scheme required 3% less time and a similar number of iterations, as compared to the uniform scheme, for this test set.

As before, in order to illustrate the effect of the non-diagonal regularization in terms of factorization performance, we provide Table 4, in which the factorization times obtained when using non-diagonal and uniform regularization, respectively, are compared, over the last four iterations of problems LISWET1, FORPLAN and SHELL. The size of the constraint matrix in each case also includes columns which were added to transform the problem to the standard form. Information concerning the cardinality of the partition \(\mathcal {N}\), the iteration count as well as the time needed to compute the \(LDL^\mathrm{T}\) factorization of the system matrix at the respective iteration is gathered in Table 4.

The examples presented in Table 4 confirm the previous observations drawn from the linear programming examples. In particular, we can observe the benefits of the proposed non-diagonal regularization, in terms of factorization performance. On the other hand, the convergence of the method does not seem to be affected when a large part of the columns of the constraint matrix lies in partition \(\mathcal {N}\).

Following the linear programming case, we include Fig. 2, which contains the performance profiles, over the whole Maros–Mészáros repository of convex quadratic programming problems, of three methods: the proposed IPM with non-diagonal regularization, the IPM with uniform regularization (which was previously presented) and the same IPM but without regularization. In Fig. 2a, a comparison of the total time to convergence is presented, while Fig. 2b contains the comparison of the total number of iterations.