Abstract

Customizing curriculum has been shown to improve science instruction when teachers are guided effectively. We explore how teachers use student data to customize a web-based science unit on plate tectonics, and we study the implications for teacher learning along with the impact on student self-directed learning. During a professional development workshop, four 7th grade teachers reviewed logs of their students’ explanations and revisions. They planned their customizations with a curriculum visualization tool that revealed the pedagogy behind the unit. To promote self-directed learning, the teachers decided to customize the guidance for explanation revision by giving students a choice among guidance options. They took advantage of the web-based unit to randomly assign students (N = 479) to either a guidance Choice or a no-choice condition. We analyzed logged student explanation revisions on embedded and pre-test/post-test assessments, along with teacher and student written reflections and interviews. Students in the guidance Choice condition reported that the guidance was more useful than did those in the no-choice condition, and they made more progress on their revisions. Teachers valued the opportunity to review student work, to use the visualization tool to align their customizations with the knowledge integration pedagogy, and to investigate the choice option empirically. These findings suggest that the teachers’ decision to offer choice among guidance options promoted aspects of self-directed learning.

Teachers continually customize the curriculum based on their students’ needs, classroom logistics, new standards, and their memories of learning or teaching the topic in the past (Drake & Sherin, 2006; Remillard, 2005). Successful customizations benefit from dedicated time to review student progress through the curriculum (Davis et al., 2017; Kerr et al., 2006). When teachers draw on their expertise to strengthen researcher-designed materials, their customizations can enhance the materials as well as support teacher learning (Gerard et al., 2010; Davis & Krajcik, 2005; Debarger et al., 2017; Littenberg-Tobias et al., 2016; Matuk et al., 2015; Penuel, 2017; Ye et al., 2015). Research teams vary in their support for customization, with some primarily concerned about fidelity of implementation (Harn et al., 2013). Customizing engages teachers in reflecting on specific instructional practices in light of evidence of student learning. This can help teachers link pedagogical moves within the curriculum to student engagement or scientific thinking (Gerard et al., 2011; Gess-Newsome et al., 2019; Matuk et al., 2015).

To investigate teachers’ curriculum customization for self-directed learning (SDL), a key aspect of lifelong learning (Bolhuis, 2003), we designed a 2-day workshop. It supported teachers to customize a unit by jointly analyzing logged student explanations and revisions from their teaching of the unit the prior year and by applying a pedagogical framework. We designed the workshop using the knowledge integration (KI) pedagogical framework, which also governed the design of the unit and the assessment (Kali, 2006; Linn & Eylon, 2011), and research on professional development (Lewis et al., 2016; Penuel & Gallagher, 2009). Participating middle school teachers from two schools started with a research-tested web-based curriculum unit they had used the year prior and reviewed their students’ explanations and revisions to formulate their customization goals. We made the KI pedagogical framework underlying the curriculum design visible to support teachers in jointly planning pedagogically robust customization designs. We report on how the teachers developed curriculum customizations to promote SDL, planned an empirical study of guidance Choice in explanation revision, and implemented the study in their classrooms. We describe the impact of the customized unit on students’ learning.

Rationale

Knowledge Integration, Self-Directed Learning, and Explanation Revision

The KI framework guided the design of the activities in the workshop, the web-based curriculum unit that the teachers used and customized in this study, and the assessments of their students’ explanations. Building on constructivism (Inhelder & Piaget, 1958), the KI framework capitalizes on the variation in learners’ ideas about a topic. Longitudinal and experimental investigations carried out over 25 years revealed how learners refine the reasoning strategies they use to build their own ideas (Linn & Hsi, 2000), while also developing strategies for discovering alternative ideas and reconciling these ideas into a coherent, evidence-based understanding (Linn & Eylon, 2011). Four key processes involved in developing integrated science understanding emerged from these investigations: (1) eliciting learners’ current ideas, including encouraging learners to generate the reasoning behind their ideas (Gunstone & Champagne, 1990); (2) discovering new ideas, for example, from scientific models or peers (Sampson & Clark, 2009; White & Frederiksen, 1998); (3) distinguishing among current ideas and new discoveries (Clark, 2006; Wen et al., 2020); and (4) reflecting on this repertoire of ideas and connecting and sorting them into a coherent view (Liu et al., 2008; Winne, 2018).

These KI processes are central to revision of scientific explanations in science classes. Students’ first approach to forming an explanation elicits their ideas and the links they make among them. Revision then involves students in analyzing their understanding as reflected in their explanation, distinguishing among their own and new ideas as they discern gaps or overlaps in their ideas, discovering new ideas as they seek evidence to resolve inconsistencies or gaps in their explanation, and ultimately forming new connections among their ideas to generate a more coherent and robust revised explanation (Berland et al., 2016; Duncan et al., 2018; Rivard, 1994). Revision calls for self-directed learning (Hmelo-Silver, 2004; Zimmerman, 2002). To revise, students need to proactively evaluate the ideas in their explanation, select and seek evidence to fill gaps or distinguish among alternatives, and grapple with how new ideas link to their initial expression (Berland et al., 2016; Freedman et al., 2016; Hmelo-Silver, 2004; Zhu et al., 2020; Zimmerman, 2002). Promoting students’ SDL in explanation revision therefore involves supporting students to take ownership of their ideas and of their use of KI processes to deliberately refine their understanding.

Challenges of Guiding SDL in the Science Classroom

In most middle school classrooms, support for students to self-direct their learning, particularly in the context of scientific explanation, is rare (Bolhuis, 2003; Freedman et al., 2016). Rather than encouraging students to take ownership of refining their knowledge, classroom culture often encourages students to express a new or different idea that they think is correct without connecting or contrasting this idea with their initial explanation (Chinn & Malhotra, 2002; Jimenez-Aleixandre et al., 2000). In science classrooms, for example, research suggests that students often make minimal or superfluous revisions to their written explanations (Crawford et al., 2008; Sun et al., 2016). Further, when students encounter conflicting information without support to integrate their ideas, they often hold onto both views simultaneously or defend and strengthen their own idea, rather than reformulate their perspective (Gerard & Linn, 2022; Mercier & Sperber, 2011; Nickerson, 1998). Reinforcing the accumulation of ideas rather than their integration can lead to a fragmented perspective in which students may revert to their initial views and miss the opportunity to take ownership of refining their understanding (diSessa, 1988).

Designing Professional Development for Teacher Customization of Curriculum

The professional development in this study drew on a research practice partnership model to support teachers in curriculum customization of a research-based Web-Based Inquiry Science Environment (WISE) unit. We draw on previous studies that guided science teachers in curriculum customization within research practice partnerships and have demonstrated positive impacts both for teacher learning and instructional design (Gerard et al., 2010; Brown & Livstrom, 2020; Forbes & Davis, 2010; Lewis et al., 2016; Leary et al., 2016; Penuel, 2017; Penuel & Gallagher, 2009). We focus on empowering teachers to customize curriculum because teacher expertise can strengthen the effectiveness of the curriculum materials, and engaging in the customization process may augment teachers’ knowledge of specific instructional practices for SDL in science.

One line of work demonstrates the advantages of starting the curriculum customization process with research-based curriculum materials and supporting teachers to adapt them, rather than guiding teachers to create new materials from scratch (Forbes & Davis, 2010; Penuel & Gallagher, 2009). Other studies demonstrate that engaging teachers in analyzing the knowledge and research underlying activities within research-based curriculum materials can support teachers to develop customizations and future lesson plans that incorporate the research-based pedagogical strategies (Roseman et al., 2017; Tekkumru-Kisa et al., 2020).

Research has further documented the success of professional development programs that support teachers to engage in cycles of planning and teaching a lesson, reflecting on student work, customizing the lesson, and teaching the refined version again (Fallik et al., 2008; Voogt et al., 2015). The use of student work to support teachers in distinguishing which curriculum customizations to pursue is a common factor in programs that resulted in positive impacts on teacher learning (Gerard et al., 2011; Burton, 2013; Lewis et al., 2016; Gess-Newsome et al., 2019).

The Present Study: Workshop for Curriculum Customization

Based on the research reviewed in the Rationale, we designed a 2-day workshop to support teachers to collaboratively customize web-based science units, with a focus on enhancing support for students to engage in SDL. The workshop focused on customization of the WISE Plate Tectonics unit because the participating teachers had taught this unit during the previous school year and planned to teach it again the following spring.

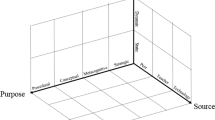

The workshop design implements the KI processes to help teachers design customizations to promote SDL, as shown in Table 1. First, the workshop elicited teachers’ ideas to develop a shared definition of SDL. Next, the workshop engaged teachers in reviewing their student work from the prior year and identifying their goals for customization. Teachers distinguished between aspects of the curriculum that supported SDL and those that needed improvement. They generated customizations that aligned with the definition of SDL they had formulated. Finally, the workshop made the KI framework guiding the design of the focal unit visible using the curriculum visualizer, as shown in Fig. 1, to help teachers distinguish how different customization designs fit in the unit sequence for promoting student-directed engagement in KI processes.

Fig. 1 A digitized version of the curriculum visualizer notecard and post-its for one lesson in Plate Tectonics. A white notecard represented the lesson in the unit and described the target standard. Post-it notes, color coded by their KI pedagogical role, represented each activity within the lesson [pink = elicit ideas; orange = discover new ideas; green = distinguish ideas; blue = connect or reflect on ideas].

By making the KI framework visible, the workshop sought to encourage teachers to consider how their customization design would engage students in self-directing their KI processes. For example, while outlining a customization design on color-coded post-its, teachers might ask themselves when selecting which color to use: Is the goal of this step in my customization to help students take ownership of discovering new ideas, or of distinguishing among their current set of ideas?

Research Questions

In this study, we investigate two questions: (1) How did teachers in the workshop develop customizations to a web-based curriculum unit to improve support for SDL in revising scientific explanations? (2) What was the impact of the teachers’ customization design, guidance Choice, on their students’ learning outcomes?

Methods

Teacher and Student Participants

Four 7th grade teachers from two public middle schools participated in this study. Teachers were selected because they (a) had taught the WISE Plate Tectonics unit the year prior as part of the research practice partnership, (b) planned to implement the customized unit in the spring after the workshop, and (c) worked in public schools typical of the state in that they serve diverse student populations. All of the teachers were relatively new to WISE and to the customization process (Table 2). This was the first WISE workshop and introduction to the customization process for two of the four teachers and the second workshop for the other two (their prior workshop focused on a different unit).

Students of the three teachers from school A who implemented the unit participated in this study (N = 479; teacher 1 = 155 students, teacher 2 = 170, teacher 3 = 154). Students of the teacher in school B were excluded from the study because that teacher unexpectedly needed to have a substitute teacher implement the customized unit in the spring.

Web-Based Science Curriculum

This research used a WISE unit “Plate Tectonics: What Causes Mountains, Earthquakes and Volcanoes?” as the focus of teacher customization in the workshop. The Plate Tectonics unit has been tested and refined in prior research (Gerard et al., 2010, 2019). In Plate Tectonics, students investigate why there are more mountains, earthquakes, and volcanoes on the West Coast (where this study takes place) than on the East Coast of the USA. Activities are designed to engage students in KI processes, as shown in Appendix, Table 8.

Explanation Revision in WISE Plate Tectonics

The Plate Tectonics unit incorporates automated guidance for two embedded explanation prompts to support students in refining their understanding. Both prompts call for students to link key ideas from the unit to form a robust response. For example, the lava lamp item prompts students: Use your ideas about heat and density to explain how a lava lamp works [video of lava lamp given]. WISE uses the natural language processing tool c-raterML™, developed by the Educational Testing Service (ETS), to automatically score students’ written explanations to these prompts on a 5-point KI rubric. The rubric rewards students for using evidence to make links among scientifically normative ideas, as shown in Table 3. After a student’s explanation is scored, WISE instantly assigns KI guidance based on the score level.
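The score-then-guide step can be sketched as a lookup from score level to guidance. This is a minimal illustration, not the WISE implementation: it assumes the scorer returns an integer KI level from 1 to 5, and the helper `assign_ki_guidance` and its placeholder messages are hypothetical (the actual guidance text and the c-raterML™ interface are not described here).

```python
# Hypothetical mapping from automated KI score level to a guidance message.
# Assumes the scorer returns an integer level 1-5; the messages are placeholders,
# not the actual WISE guidance text.
KI_GUIDANCE = {
    level: f"[placeholder guidance for KI level {level}]" for level in range(1, 6)
}


def assign_ki_guidance(ki_score: int) -> str:
    """Return the guidance message for a scored explanation (hypothetical helper)."""
    if ki_score not in KI_GUIDANCE:
        raise ValueError(f"expected a KI score in 1-5, got {ki_score!r}")
    return KI_GUIDANCE[ki_score]


# Example: an explanation the scorer places at level 3.
print(assign_ki_guidance(3))  # [placeholder guidance for KI level 3]
```

Keying guidance to the score level is what makes the guidance adaptive: two students who write different explanations receive different prompts without teacher intervention.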

Testing the Teachers’ Curriculum Customization: Guidance Choice

The teachers developed a curriculum customization in the workshop, guidance Choice, to strengthen student engagement in SDL. This grew out of teachers’ definition of SDL in the middle school science classroom, jointly formulated on day 1 of the workshop (see Table 1). This definition was: “When a learner is motivated to: ask questions, reason towards evidence-based conclusions, select strategies that work best for them, determine when and from where to seek guidance and feedback, communicate their understanding, evaluate and modify their strategies to meet their learning goals.” Drawing on this definition, the teachers identified guidance Choice and revision of scientific explanations as illustrative of their SDL definition. Prompting students to select guidance from a set of options and use that guidance to revise would call for students to assess their knowledge of plate tectonics, identify gaps in their reasoning, determine what type of support would help them revise, and utilize available resources in the unit to improve their understanding.

To test the teachers’ conjecture that giving students guidance options to choose among would enhance SDL, the teacher-researcher team designed a comparison study with two guidance conditions: guidance Choice and guidance Assign.

The guidance Choice and guidance Assign conditions were implemented in the teachers’ customized version of the WISE Plate Tectonics unit as follows (see Fig. 2): All students engaged in the two embedded explanation revision activities in the Plate Tectonics unit, mountain and lava lamp. For both mountain and lava lamp, all students began by writing their explanation to the prompt. After writing their explanation, students were randomly assigned to a condition, guidance Choice or guidance Assign, based on their unique WISE ID.
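The text states only that assignment was random and keyed to each student’s unique WISE ID; it does not describe the mechanism. One common way to obtain an assignment that is effectively random across students yet stable for any given student is to hash the ID. The sketch below assumes that approach; `assign_condition`, the SHA-256 hashing, and the example IDs are illustrative choices, not WISE’s actual implementation.

```python
import hashlib

CONDITIONS = ("choice", "assign")


def assign_condition(wise_id: str) -> str:
    """Map a student ID to a guidance condition (hypothetical scheme).

    SHA-256 output is effectively uniform, so IDs split roughly evenly
    between conditions, and the same ID always yields the same condition.
    """
    digest = hashlib.sha256(wise_id.encode("utf-8")).digest()
    return CONDITIONS[digest[0] % len(CONDITIONS)]


# Hypothetical student IDs.
for student_id in ["10421", "10422", "10423"]:
    print(student_id, assign_condition(student_id))
```

Because the mapping is deterministic, a student who returns to the unit in a later session would be routed to the same condition without any stored assignment table.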

In the Choice condition, the student was prompted to “Review your explanation. Choose which guidance would best help you to improve your explanation: (a) Clarify the connections among my ideas by helping a peer to revise, or, (b) Gather new ideas by analyzing graphs of density and movement” (lava lamp item prompt). Students were then branched based on their selection. Students who chose to help a peer revise used the annotator (see Fig. 3). Students who chose to analyze evidence were guided to explore a resource selected by the teachers: an interactive animation of plate boundaries (mountain) or graphs of a blob’s density and movement (lava lamp). In the Assign condition, the computer assigned the student to one of these two guidance pathways: the annotator on the mountain item and the graphs on the lava lamp item.
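The branching described above can be summarized as a small routing function. This is a sketch of the logic as stated in the text, not WISE code; the function `guidance_pathway`, the item keys, and the pathway labels `"peer"` and `"evidence"` are hypothetical names introduced for illustration.

```python
from typing import Optional

ANNOTATOR = "annotator: help a peer revise"
# Teacher-selected evidence resource for each embedded item.
EVIDENCE_RESOURCE = {
    "mountain": "interactive animation of plate boundaries",
    "lava_lamp": "graphs of a blob's density and movement",
}
# Pathway the computer gives students in the Assign condition.
ASSIGNED_PATHWAY = {"mountain": "peer", "lava_lamp": "evidence"}


def guidance_pathway(condition: str, item: str, student_choice: Optional[str] = None) -> str:
    """Return the first-round guidance a student receives on an item."""
    if item not in EVIDENCE_RESOURCE:
        raise ValueError(f"unknown item: {item!r}")
    if condition == "choice":
        # The student picks between helping a peer and analyzing evidence.
        if student_choice not in ("peer", "evidence"):
            raise ValueError("Choice students must select 'peer' or 'evidence'")
        pathway = student_choice
    elif condition == "assign":
        # The pathway is fixed by item rather than chosen by the student.
        pathway = ASSIGNED_PATHWAY[item]
    else:
        raise ValueError(f"unknown condition: {condition!r}")
    return ANNOTATOR if pathway == "peer" else EVIDENCE_RESOURCE[item]


print(guidance_pathway("assign", "lava_lamp"))
print(guidance_pathway("choice", "mountain", student_choice="evidence"))
```

Both conditions draw on the same two resources; the only difference is whether the student or the item determines which one is shown, which is the variable the comparison study manipulates.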

Next, all students were prompted to use what they learned from the guidance to revise their explanation. They then submitted the revision, received one round of automated guidance, and revised again.

Finally, all students, in both guidance conditions, responded to a teacher-designed prompt asking students to reflect on the value of the guidance they used in helping them revise. Students reported if they felt the guidance was helpful or not, and why.

Data Sources

Teacher Data

To analyze how teachers developed knowledge of SDL in the workshop, we collected detailed field notes from two researchers, individual teacher written reflections at three time points, audio recordings from the workshop, teacher-generated artifacts from the workshop, and individual teacher interviews conducted after teachers implemented the customized unit.

We examined teachers’ individual written responses to open-ended prompts in a pre-workshop survey administered via Google (completed one week before the workshop) and in end-of-day questions embedded in a WISE Workshop unit on days 1 and 2. At the end of each workshop day, teachers were asked to spend 20–30 min writing responses to the prompts. For analysis, we selected the prompts that elicited comparable ideas about SDL at each time point.

We transcribed the audio recording of the teachers’ discussion as they analyzed their students’ logged explanations and revisions from the prior year to formulate a rubric of SDL behavior and then began to generate unit customizations. We selected this recording for analysis because it had the clearest focus on the teachers’ conceptualization of SDL in the Plate Tectonics unit and because each teacher’s voice could be heard most clearly. A second audio file, recorded as one pair of teachers used the post-its and notecards to design a customization, was used to supplement the field notes and the teachers’ digitized notecard/post-it design, because the teachers’ voices were inaudible in some sections.

Student Data: Learning and Reflections

To examine student learning and reflection, we collected [in this order] a pre-test, the lava lamp embedded assessment, an embedded assessment of student reflection on using guidance to revise, and a post-test. We selected the lava lamp embedded assessment and students’ reflections following the lava lamp item for analysis, on the assumption that students would make a more informed guidance choice after having experienced the guidance options once before (on the mountain explanation revision activity).

Lava Lamp Embedded Assessment

The lava lamp embedded assessment occurred three-quarters of the way through the unit (approx. day 6 of 8). All students generated their initial explanations in response to the lava lamp prompt and had two opportunities to revise. In the Choice condition, students wrote their initial work, selected guidance, and then revised. Students in the Assign condition followed the same sequence, except that instead of choosing their first round of guidance, the guidance was assigned by the computer. Students in both conditions received one additional round of automated KI guidance on their revised response (after choosing or being assigned guidance and revising). The KI guidance was assigned adaptively, based on the automated score of their revised response. We used the students’ second opportunity to revise as the measure of SDL. The first opportunity to revise varied the guidance (choice/assign), with the choice condition hypothesized to build student ability to use SDL in revision. Thus, the student response to the second round of guidance provided an equal comparison across conditions for students to demonstrate SDL in revision, thereby capturing the impact of choice on SDL in explanation revision.

Reflection on Self-directed Learning When Using Guidance to Revise

After students received two rounds of guidance on the lava lamp embedded assessment and had two opportunities to revise, they were asked to reflect on the value of the guidance that they chose, or that they were assigned, in helping them to revise. Students in the choice condition were asked: “Did the guidance you chose help you in the process of revising your explanation? [select among: helpful, sort-of helpful, not really helpful]” and “Explain why.” We chose these three options by envisioning how students might respond if we asked them this question in person. We also limited the options to three levels to ensure that students would be able to discriminate among them. This question was slightly modified for students in the assign condition, to ask about the guidance they were assigned by the computer.

Mt. Hood Pre-test/post-test Item

Students in each class completed the pre-test 1 day before starting the unit and the post-test 1 day after completing it. The item was designed to measure students’ KI by asking them to explain how Mt. Hood was formed, given a photograph and its location. The item calls for students to integrate ideas about plate density, plate interaction, and convection processes into a coherent written response, as shown in Appendix Table 9.

Data Analysis

Teacher Data

Two researchers used emergent coding to develop understanding of how teachers supported by a workshop developed knowledge of SDL in the science classroom, and how they used this knowledge to customize a web-based unit to strengthen opportunities for students’ SDL. To examine how teachers developed knowledge of SDL, the researchers jointly selected questions from the pre-workshop survey and WISE workshop unit that were designed to elicit teachers’ conceptions of SDL and what was illustrative of SDL in their science classroom. We dropped two of the questions after the initial round of coding, because the responses were too different at each time point to make comparisons. This resulted in a data corpus including individual teacher written responses to a total of 5 questions across 3 timepoints: 2 questions at pre-workshop, 2 questions at workshop day 1, and 1 question at workshop day 2 (questions in Appendix, Table 10). Because we wanted to examine changes in teachers’ conceptions of SDL from before to during the workshop, we used the teacher as the unit of analysis. This meant that when a teacher gave two responses at a single time point, in response to different prompts for instance, we coded the two responses holistically to characterize the teacher’s views about SDL in science.

First, the two researchers reviewed all of the data together using an initial draft of an emergent coding rubric, which one of the researchers had developed and refined in meetings with the larger research team. The researchers used this rubric to jointly code the data from one teacher across all three time points, refining the rubric as they did so. This resulted in the rubric shown in Table 4. Next, the researchers used the refined rubric to code the data independently. Each researcher gave each teacher response at least two codes: one for “who is mostly directing the behavior” (teacher/computer or student) and one or more for “each type of behavior” they identified as illustrative of self-directed learning. This yielded a total of 22 coded data cells: two cells for each of four teachers at each of three time points (24), less the two cells for one teacher who did not respond to the pre-survey. The researchers compared their coding and agreed on the codes for 19 of the 22 data cells, an agreement level of 86%. Each of the 3 cells on which the researchers disagreed involved the categorization of one of multiple SDL behaviors reported by a teacher; the researchers discussed these 3 cells to reach consensus.
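The cell count and agreement figures are simple arithmetic; the sketch below reproduces them as a check. The helper `percent_agreement` is an illustrative name, and it computes raw percent agreement, the statistic the text reports.

```python
def percent_agreement(agreed: int, total: int) -> float:
    """Raw percent agreement between two coders (not chance-corrected)."""
    if total <= 0 or not 0 <= agreed <= total:
        raise ValueError("need total > 0 and 0 <= agreed <= total")
    return 100 * agreed / total


# Data cells: 2 codes x 4 teachers x 3 time points, minus 2 missing pre-survey cells.
total_cells = 2 * 4 * 3 - 2
print(total_cells)                                # 22
print(round(percent_agreement(19, total_cells)))  # 86
print(round(percent_agreement(5, 6)))             # 83, transcript episodes
```

The same calculation gives the 83% reported for the transcript episodes. Percent agreement does not adjust for agreement expected by chance; Cohen’s kappa is a common chance-corrected alternative, although the study reports percent agreement.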

After coding the written workshop responses, the two researchers separately applied the same coding rubric to the transcript of the teachers’ discussion as they jointly analyzed their students’ logged explanation revisions from the prior year to generate an SDL rubric and curriculum customization ideas (see Table 1). Specifically, the researchers coded the transcript to identify how teachers distinguished their criteria for what counts as an SDL behavior from more teacher- or computer-directed behavior. Using the rubric, each researcher separately identified episodes in the transcript that showed teachers distinguishing a self-directed learning behavior and coded each episode with the behaviors teachers were distinguishing between (e.g., student following directions versus student selecting a pathway). The researchers also used the teacher-generated rubric to inform interpretations of how teachers were distinguishing characteristics of SDL in the transcript. The researchers then compared their coding. Agreement was 100% for identifying the episodes that gave evidence of distinguishing SDL behaviors (6 episodes) and 83% for coding which SDL behaviors teachers were distinguishing between within those episodes (agreement on five of the six episodes). The researchers discussed the one disagreement to reach consensus and elaborated the reasoning behind the coding of all episodes. Last, the researchers jointly examined the post-implementation teacher interviews to gain deeper insight into the teachers’ perceptions of the customization design and its application in their classrooms.

Student Data: Student Reflections on Guidance Used to Revise

To analyze how students perceived the value of the guidance they selected or were assigned, we coded students’ logged reports of whether the guidance supported them to revise as helpful (coded 1) or as sort-of helpful or not really helpful (both coded 0). We coded “sort-of helpful” as 0 because the majority of the corresponding written reflections suggested uncertainty about the utility of the guidance (e.g., “I am not sure because it gave me no helpful feedback; I wasn’t sure on how the feedback related to my answer; I am not very sure because there wasn’t much explanation on what to improve on the feedback given”). A chi-square test of independence was then used to examine whether the frequency of students reporting that the guidance was helpful for revising was related to guidance condition (choice/assigned).
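The recoding and test described above can be sketched in plain Python for a 2×2 table (condition × helpful/not helpful). This is an illustration, not the authors’ analysis code, and the toy records below are invented for demonstration, not study data. The text does not say whether a continuity correction was applied; this sketch computes the uncorrected Pearson statistic (note that SciPy’s `scipy.stats.chi2_contingency` applies Yates’ correction to 2×2 tables by default).

```python
import math
from collections import Counter

# Recoding described in the text: only "helpful" counts as 1.
RECODE = {"helpful": 1, "sort-of helpful": 0, "not really helpful": 0}
CONDITIONS = ("choice", "assign")


def contingency(records):
    """Build a 2x2 table: rows = condition, columns = (helpful, not helpful)."""
    counts = Counter((condition, RECODE[response]) for condition, response in records)
    return [[counts[(c, 1)], counts[(c, 0)]] for c in CONDITIONS]


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With df = 1, chi-square is a squared standard normal, so p = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Toy records for illustration only (not study data).
toy = (
    [("choice", "helpful")] * 30 + [("choice", "sort-of helpful")] * 10
    + [("assign", "helpful")] * 18 + [("assign", "not really helpful")] * 22
)
table = contingency(toy)
print(table)  # [[30, 10], [18, 22]]
print(chi_square_2x2(table))
```

Collapsing the three response options into two before testing is what makes the 2×2 form (and the df = 1 p-value shortcut) applicable.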

Student Data: Impact of Guidance Choice on Student Learning

Students’ initial and final explanations for the lava lamp embedded assessment and their pre-test and post-test explanations for the Mt. Hood item were scored using KI rubrics [Table 3, Appendix Table 9]. The rubrics measure the links students make among normative science ideas (Liu et al., 2008). For both items, the data were first scored by the computer using c-raterML™. The data were then sorted into score-based categories, and a researcher checked the scores, correcting them when inaccurate. If questions arose, the researcher discussed the score with a second researcher until reaching consensus.

To examine the effects of guidance condition and time (initial to revised; pre-test to post-test), we ran repeated measures ANOVAs on students’ initial and revised scores on the embedded item and on their pre-test and post-test scores on the Mt. Hood item. In both analyses, the score at each time point was the within-subjects repeated measure and guidance condition (assigned or choice) was the between-subjects factor. Analyses were conducted in STATA 13.1.
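With only two time points, the condition × time interaction in this kind of mixed design has a convenient equivalent: its F statistic equals the F from a one-way ANOVA comparing gain scores (second score minus first) across conditions. The sketch below uses that equivalence to show what the interaction test compares. It is not the authors’ STATA analysis, it omits the main effects of time and condition, and the function names and toy scores are hypothetical.

```python
from statistics import mean


def gain_scores(pairs):
    """Change from the first to the second score for each student."""
    return [second - first for first, second in pairs]


def condition_by_time_f(choice_pairs, assign_pairs):
    """Condition x time interaction F for a 2 (time) x 2 (condition) mixed design.

    With two time points, this equals a one-way ANOVA F on gain scores.
    """
    groups = [gain_scores(choice_pairs), gain_scores(assign_pairs)]
    group_means = [mean(group) for group in groups]
    all_gains = [g for group in groups for g in group]
    grand_mean = mean(all_gains)
    ss_between = sum(len(group) * (m - grand_mean) ** 2 for group, m in zip(groups, group_means))
    ss_within = sum((g - m) ** 2 for group, m in zip(groups, group_means) for g in group)
    if ss_within == 0:
        raise ValueError("gain scores have no within-group variance")
    df_between = len(groups) - 1
    df_within = len(all_gains) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy (initial, revised) KI scores for illustration only, not study data.
choice = [(1, 2), (1, 3), (1, 4)]
assign = [(2, 2), (2, 3), (2, 4)]
print(condition_by_time_f(choice, assign))  # (1.5, 1, 4)
```

Framing the interaction as a comparison of gains makes explicit that the test asks whether students improved more from initial to revised (or pre to post) in one guidance condition than in the other, regardless of where each group started.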

Results

How Did Teachers Develop Knowledge of SDL in a Workshop?

Teachers’ Conceptualizations of Self-Directed Learning in the Classroom

The analysis of the teachers’ written responses at pre-workshop and on workshop days 1 and 2 indicates a shift in the teachers’ conception of SDL in the middle school science classroom. As shown in Table 5, the teachers’ initial characterizations emphasized students demonstrating on-task behavior or following directions (e.g., correcting answers, following a computer-directed pathway), as well as engaging in teacher-directed tasks aligned with science learning, such as analyzing provided data or summarizing information.

By the end of workshop day 2, their conception had shifted to emphasize student ownership of the science learning process. This shift appeared in the behaviors they articulated, such as students making choices about the next step in their investigation or selecting what guidance would help them progress. It also appeared in their rationale for these behaviors: they described students as driven by their own motivation and engagement in learning the science topic. The teachers still recognized science learning behaviors similar to those they had articulated in the pre-workshop survey, such as analyzing evidence and refining understanding. However, they reframed these behaviors to place the reflection and decision making on the student rather than the teacher or computer.

Distinguishing Student SDL Behaviors: Analyzing Student Work

Teachers distinguished the types of behaviors illustrative of student-directed learning, versus “doing school,” in their science classrooms as they analyzed their students’ logged explanation revisions from the prior year to generate an SDL rubric. As illustrated in Table 6, teachers distinguished specific examples of how students did or did not grapple with integrating their existing and new ideas when revising their explanations.

Teachers distinguished among student behaviors to characterize each level of the rubric. They distinguished examples of students motivated to refine their understanding from examples of students motivated by a score or by completion. This led them to define their students’ SDL as absent when “They [students] review their explanation and decide it is OK, they don’t see any gaps. They may add a sentence for example just to move forward.”

Teachers distinguished between students using guidance to explore a new idea or integrate it into their explanation and students simply adding an idea they were told to add. They characterized this level of SDL behavior as “Emergent”: “[Students] answer the question in the automated guidance but they don’t fluidly connect to what they said in their first response.” One teacher expressed that at the “emergent” level, students “show directed learning. They answer the question in the feedback, there is no synthesis of the new ideas.”

The teachers contrasted emergent SDL behaviors with what they described as evidence of students “Developing SDL.” This was evidenced by students who “add a partial idea about the mechanism” when revising their explanation, or who “incorporated evidence or some kind of data.” Teachers defined the highest level of SDL as students engaging in self-assessment and spontaneously seeking evidence, as distinct from following directions to examine certain information. Coupling these SDL criteria with technology affordances in the WISE platform, the teachers began rapidly generating ideas for customizing the computer-based guidance in the Plate Tectonics unit.

Linking SDL Criteria to Customization: Generating the Guidance Choice Design

In their first design ideas, teachers suggested adapting teaching strategies they had used in the classroom (e.g., sentence frames; claim, evidence, reasoning) to create guidance that responded to common writing challenges they observed in their students’ work. These designs took into account a challenge the teachers had observed in students’ logged revisions while developing the rubric: many students were unlikely to engage in self-directed revision spontaneously. The designs therefore offered students more structure and guidance, and consequently less opportunity for student decision making, than the teachers’ conceptualizations of SDL had expressed. For example, as one teacher suggested, “I am thinking we can use these explanation writing tools…So before they write their explanation, they have a step by step activity to fill out, to refresh their memory. What boundary? What mechanism? Like a guided writing.”

Teachers then shifted to the idea of offering students guidance Choices, as they recognized the many different types of guidance students may benefit from. Rather than having the computer assign guidance, guidance Choice, the teachers conjectured, would enable students to reflect on their initial explanation and select the type of guidance (from a set of given options) that would best help them improve. The WISE technology could support this choice through branching, allowing each student to select the type of guidance they would use to revise.

Teachers then used the color-coded KI post-its to outline their customization ideas, distinguishing in the process which KI process each guidance approach targeted (Figs. 1 and 3). They framed a writing guidance option as a way to help students distinguish among the ideas in their explanation, determining which are supported by evidence and which need reformulation, and ultimately modify their initial explanation to incorporate new ideas when revising. They framed a content guidance option as a way to help students discover new ideas to incorporate by examining relevant evidence. This design reframed guidance Choice as a way to help each student determine where in the KI process they needed the most support: distinguishing among their existing ideas, or discovering new ideas.

The digitized version of the teachers’ guidance Choice customization, drafted using the color-coded post-its that make the KI pedagogy and opportunities for self-directed learning visible [pink = elicit ideas; orange = discover ideas; green = distinguish ideas; blue = connect or reflect on ideas; smiley face = self-directed learning opportunity]

Researchers and teachers discussed possible technology tools to use, reviewing examples of tools embedded within other web-based units. To scaffold students in distinguishing among their ideas, teachers selected the annotator. The annotator guides students to help a fictional peer revise an explanation written in response to the same prompt, modeling how to distinguish which ideas in an explanation to modify (Fig. 3; Gerard et al., 2015). The teachers chose the annotator because it also aligned with one of their criteria for guiding SDL: encourage students to take advantage of their peers’ ideas (Fig. 4).

The annotator prompts a student to critique a fictional peer’s explanation written in response to the same prompt they responded to. Pre-authored comments are provided in the label boxes at the bottom. The student drags and drops each comment onto the part of the peer’s explanation where they think the peer should consider revising to incorporate the new idea suggested by the comment. Prior research demonstrated that the annotator helped clarify for students how to revise a written explanation in science (Gerard & Linn, 2022).

For the “discovering ideas” guidance Choice, the teachers identified the resource they viewed as most promising for addressing the scientific gaps they had observed in their students’ explanations. They reviewed multiple open-education resources, looking particularly for web-based animations. They evaluated each animation based on its scientific accuracy, alignment with instruction, novelty to students, and grade-level reading requirements. Using these criteria, they selected one from the PBS website.

Last, to encourage students to reflect on how they choose guidance that addresses their needs when revising, teachers also designed an additional step for students to complete after they revised. A prompt would ask students to reflect on how the guidance they chose supported them in improving their explanation.

Teachers Explore Guidance Choice Impact on Students’ Learning

The teachers hypothesized that giving students the opportunity to select between these two guidance Choices would enable them to take greater ownership of revising their scientific explanations and lead to greater knowledge integration in their revised responses. We examine how guidance Choice, versus having the computer assign guidance, influenced which guidance students used, their reflections on the value of the guidance, and the impact of the guidance on their learning.

Students’ Guidance Selections

On the lava lamp explanation, students in the guidance Choice condition were more likely to select guidance to “gather new ideas by reviewing graphs” (n = 154, 64%) than to “clarify ideas by reviewing a peer’s explanation” (n = 87, 36%). Students reported that they believed graphs would better help them elaborate their explanation with additional information (e.g., “I chose to review graphs to make my explanation better with more research and facts”). Students also reported choosing graphs because they viewed graphs as a more objective, and hence more useful, resource, whereas they framed peers as less reliable. For example, one student reflected: “I chose this because I think charts tell the right information rather than a peer telling their own personal option.”

Student Reflections on the Value of the Guidance

Students in the guidance Choice condition were significantly more likely to report that the guidance helped them revise (n = 240, 65%) than students in the assign condition (54%) [χ²(1) = 5.48, p = .02]. In written reflections, students in the choice condition reported that they selected guidance that aligned with their needs. A student who chose to review evidence explained why this was more helpful than reviewing a peer’s explanation: “When I look at images, or animations, I can …actually see and connect how the density of the blob and the position of the blob over time is connected. The way someone else describes it may not be the way I would describe it myself so it would be harder to understand.” Another student who chose to annotate a peer’s work reflected, “Yes [the guidance was helpful]. I realized I had enough ideas, I just needed to express more.”
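For readers who want to see how a test like the one reported above works, the following is a minimal Python sketch of a Pearson chi-square test of independence on a 2 × 2 table (condition × whether the student rated the guidance helpful). It assumes no continuity correction, which the analysis above does not specify, and the counts in the usage line are hypothetical, not the study’s data.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2 x 2 table of counts.

    table[i][j] is the count for condition i (e.g., 0 = choice, 1 = assign)
    and response j (e.g., 0 = guidance helpful, 1 = not helpful).
    No Yates continuity correction is applied (an assumption).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count under independence of condition and response.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts (not study data): rows = condition, cols = helpful / not.
chi2, p = chi_square_2x2([[30, 20], [20, 30]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.3f}")  # chi2(1) = 4.00, p = 0.046
```

The closed-form p-value relies on the fact that a chi-square variable with one degree of freedom is the square of a standard normal variable, so no statistics library is needed for this case.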

Student Learning Gains on the Lava Lamp Embedded Assessment

Across all participants in both guidance conditions, there was no significant difference in learning gains between the two types of guidance (reviewing evidence or clarifying writing). Thus, students were able to learn from either type of guidance.

For the lava lamp explanation, a repeated measures ANOVA indicated that students in both the choice and assign guidance conditions used the guidance to significantly improve their explanations from initial to revised score [F(1, 471) = 185.46, p < .001, d = 1.26; see Table 7 for scores]. Further, there was a significant interaction between guidance condition (choice/assign) and time (initial vs. revised KI score) [F(1, 470) = 4.41, p = .036, d = .19]. A Cohen’s d of 0.19 is a medium effect size based on a distribution of 1,942 effect sizes from educational interventions (< 0.05 = small; 0.05 to < 0.20 = medium; ≥ 0.20 = large; Kraft, 2020).
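Because each student contributes exactly two KI scores (initial and revised), the condition × time interaction in a 2 (between) × 2 (within) repeated measures ANOVA is equivalent to comparing gain scores between conditions: the interaction F(1, N − 2) equals the square of a pooled-variance independent-samples t statistic on the gains. The following minimal Python sketch illustrates that identity; the function name is hypothetical and the gain scores are made up, not study data.

```python
import math
from statistics import mean, variance


def interaction_f_from_gains(gains_a, gains_b):
    """Condition x time interaction F for a 2 (between) x 2 (within) design.

    Each list holds one gain score (revised KI score minus initial KI score)
    per student in one condition. For this design, the interaction
    F(1, n_a + n_b - 2) equals the square of the pooled-variance
    independent-samples t statistic computed on the gain scores.
    """
    n_a, n_b = len(gains_a), len(gains_b)
    df = n_a + n_b - 2
    # statistics.variance is the sample variance (divides by n - 1).
    pooled_var = ((n_a - 1) * variance(gains_a)
                  + (n_b - 1) * variance(gains_b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(gains_b) - mean(gains_a)) / se
    return t ** 2, df


# Hypothetical gain scores for two small groups (not study data).
assign_gains = [0, 1, 0, 1]
choice_gains = [1, 2, 1, 2]
f_stat, df = interaction_f_from_gains(assign_gains, choice_gains)
print(f"F(1, {df}) = {f_stat:.2f}")  # F(1, 6) = 6.00
```

The sketch returns only the test statistic and degrees of freedom; a p-value would additionally require the F (or t) distribution, for example from a statistics package.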

As shown in Fig. 5, both guidance formats enabled students to improve their explanations. Students’ initial explanations displayed partial understanding, meaning they expressed a normative but isolated idea (KI score of approximately 3.5). In both conditions, students used the guidance to move to an integrated understanding, meaning they linked two normative ideas in their revised explanation (KI score of 4).

As shown in Fig. 6, offering a choice of guidance options increased the quality of students’ explanation revisions. In the assign condition, gain scores are more concentrated at zero, meaning students revised but did not move up a level on the KI rubric. In the choice condition, gains are spread more widely above zero, meaning more students were able to use the guidance to integrate a new idea. In sum, adaptive guidance appears to support student progress overall, and a choice of guidance options can strengthen student learning in the revision process. This may be because students take more ownership of revision when they can decide what type of guidance to pursue, as suggested by the analysis of student reflections on the impact of guidance.

To examine whether students’ initial level of KI moderated the effect of the guidance conditions, as in previous studies on automated guidance (e.g., Beal et al., 2010; Razzaq & Heffernan, 2009; Roll et al., 2014), we split students into two prior KI groups: those who initially displayed low KI on their lava lamp explanation (initial score 1–2, meaning they expressed only inaccurate or overly vague ideas about convection) and those who initially demonstrated partial or full KI (initial score 3–5, meaning they displayed at least one accurate and relevant idea). Among students who displayed low KI initially, the revision gains of those in the choice condition were substantially larger than those of students in the assign condition [assign, n = 41, M = 0.59, SD = 0.84; choice, n = 44, M = 0.91, SD = 1.05]. In contrast, for students who initially demonstrated partial or full KI, the revision gains in the guidance Choice and assign conditions were more comparable [assign, n = 190, M = 0.36, SD = 0.67; choice, n = 197, M = 0.46, SD = 0.70]. This suggests that guidance Choice may be most beneficial for students who express incorrect or vague ideas in their initial explanation. Replication is needed to further test this finding.
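The subgroup analysis above can be sketched in a few lines of Python: assign each student to a prior-KI band from the initial score, compute gains, and express a between-condition difference in gains as Cohen’s d from summary statistics, labeled with the Kraft (2020) benchmarks quoted earlier. This is an illustrative sketch under stated assumptions (pooled-SD Cohen’s d; hypothetical function names and student records), not the analysis code used in the study.

```python
import math


def prior_ki_band(initial_score):
    """Assign an initial KI score (1-5) to one of the two prior-KI groups."""
    if initial_score in (1, 2):
        return "low"  # only inaccurate or overly vague ideas
    if initial_score in (3, 4, 5):
        return "partial_or_full"  # at least one accurate, relevant idea
    raise ValueError(f"KI score out of range: {initial_score}")


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d (group 2 minus group 1) from summary statistics, pooled SD."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2)
                          / (n1 + n2 - 2))
    return (m2 - m1) / pooled_sd


def kraft_label(d):
    """Kraft (2020) benchmarks, applied to the magnitude of d."""
    size = abs(d)
    if size < 0.05:
        return "small"
    if size < 0.20:
        return "medium"
    return "large"


# Hypothetical students: (condition, initial KI, revised KI); not study data.
students = [("assign", 2, 3), ("choice", 1, 3), ("choice", 4, 4)]
bands = [(cond, prior_ki_band(initial), revised - initial)
         for cond, initial, revised in students]
print(bands)
# [('assign', 'low', 1), ('choice', 'low', 2), ('choice', 'partial_or_full', 0)]
```

The same cohens_d function could be applied to the subgroup means, SDs, and ns reported above; note that a descriptive effect size of this kind is not a significance test.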

Student Learning Gains on the Mt. Hood Pre-Test/Post-Test Assessment

A repeated measures ANOVA indicated that students in both conditions improved significantly from pre-test to post-test [F(1, 465) = 1393.06, p < .001; see Appendix Table 11 for scores]. There was no effect of guidance condition.

Teacher Reflections on Impact of Guidance Choice Customization

In interviews after implementing guidance Choice, teachers reported their belief that student choice strengthened students’ engagement in explanation revision. One teacher remarked: “Students felt empowered and excited by the choice. They eagerly engaged in their chosen activity.” Teachers also observed limitations of the guidance Choice design. All teachers, for example, reported that when students chose the guidance option to “clarify the connections among their ideas by helping a peer revise,” they were disappointed to be helping a fictional student rather than an actual classmate. Teachers identified ways to improve the guidance Choices for the next use. One suggestion focused on making the descriptions of the choices more accessible; some students did not know what “clarify connections” meant. Both teachers suggested making the annotator guidance adaptive, so that a student would be given a fictional peer’s explanation to review that included ideas different from, or more sophisticated than, their own. Teachers also suggested adding a third option that would adaptively assign students to work directly with a classmate.

Limitations

Excluding student data from school B, where the teacher was unable to implement the customized unit, limited the diversity of the student population with whom the unit was tested. To increase generalizability, the results of this study would benefit from replication with additional teachers and schools.

Discussion and Implications

Customization of web-based materials to strengthen self-directed learning (SDL) enabled both teacher and student learning. When teachers used evidence of student work and a research-based instructional framework, they formulated customizations that responded to their students’ challenges in revising scientific explanations. Further, they were empowered to design a guidance comparison study, and they gained insight into ways to support student engagement in directing their revision process to promote SDL.

Promoting SDL during classroom instruction has challenged educators, researchers, and curriculum designers (Bolhuis, 2003; Fallik et al., 2008; Hmelo-Silver, 2004). This study shows the benefit of enabling students to choose among guidance options designed by their teachers based on evidence of student work and KI pedagogy. Students were more likely to judge the guidance as helpful when they made a choice rather than having guidance assigned. Further, students who made a choice made significantly more integrated revisions to their lava lamp explanation than those assigned guidance.

This work identified professional development workshop activities that advance teachers’ ability to customize web-based curriculum for SDL. First, collaboratively developing a definition of SDL by drawing on their own contexts, building on each teacher’s analysis of their students’ prior work, and incorporating KI pedagogy led to a set of shared criteria for SDL. These criteria enabled the teachers to develop a rubric for scoring student SDL in the context of revision. Second, applying the KI pedagogy underlying the curriculum design to rearticulate and refine their customization designs reinforced the teachers’ pedagogical content knowledge. This finding aligns with studies documenting the positive impacts of principled teacher customization on teaching practices and lesson plan design (Debarger et al., 2017; Penuel & Gallagher, 2009).

This study extends prior research by demonstrating the benefits of customization for both teacher and student learning. It demonstrates how teachers advance their knowledge during customization within a research-practice partnership as they link evidence of student learning with specific goals for SDL and with pedagogical choices. It also shows how teachers’ customization designs impacted student science learning.

Teachers in partnership with researchers implemented a comparison study to investigate the impact of their guidance Choice customization. Using a comparison study to test the pedagogical conjecture instantiated in the teachers’ curriculum design provides a springboard for teachers’ continued learning from the customization process. When teachers engage in cycles of curriculum customization and testing, they have the potential to develop what Bereiter described as “principled practical knowledge” (Bereiter, 2013; Janssen et al., 2015). By designing customizations that instantiate their conjectures about instruction, testing them in their classrooms, and reflecting on the results, teachers may form new insights and become empowered to continue testing new ideas to improve instruction in their classrooms.

Previous research has identified the benefits of instruction that promotes SDL, as well as the challenges of facilitating this approach in science classrooms (Fallik et al., 2008; Hmelo-Silver, 2004). This study suggests that teachers can take advantage of advances in web-based authoring tools, coupled with the growing repertoire of research-tested, web-based science activities, to make opportunities for student choice in classrooms more feasible. Empowering students to be self-directed in the activities they pursue and the way they communicate may motivate them to take responsibility for developing knowledge (Cordova & Lepper, 1996; Kamii, 1991). In this study, teachers’ implementation of choice in the revision process led to greater student learning gains on the embedded assessment, consistent with teacher and student reflections that choice increased student engagement or motivation. On the pre-test/post-test assessment, student gains were significant overall and did not differ across guidance conditions. This may reflect teachers’ attention to student thinking during customization and subsequently when teaching the unit, suggesting that the way teachers adapted their instruction when teaching the customized unit had a greater impact on these outcomes than the guidance conditions did. Prior research supports the conjecture that engaging in the customization process shapes teaching strategies when implementing the customized unit (Gerard et al., 2010). We will further explore this impact, using observations and interviews during implementation to disentangle the impacts of the curriculum and of teaching strategies. This will inform whether and how future workshops should allocate time to instantiating curriculum customizations with the authoring tools versus planning and negotiating the design.

Future research is needed to investigate how refining the guidance Choice options and increasing opportunities for students to choose their pathway could strengthen learning outcomes. This includes refining the peer review guidance Choice, building on teacher input and prior research. For example, assigning students a peer’s explanation that expresses a view different from their own (as compared to a similar view) has been shown to positively impact students’ perceptions of learning from others (Matuk & Linn, 2018). We will explore advances in NLP modeling to detect science ideas within an explanation, so that each student can be assigned a peer explanation for review that has a similar KI score (to discourage copying) but expresses a different idea. This may highlight for students the role of collaboration in science learning, encouraging them to sort out which ideas are supported by valid evidence and how ideas that appear conflicting or idiosyncratic may relate (Chi et al., 2018; Raviv et al., 2019; Vogel et al., 2017).

Further research will pursue designs for web-based tools to support the teacher customization process, including reflecting on student work, making a research-based instructional framework visible, and leveraging open-education resources. This would amplify support for teachers to develop pedagogical conjectures and test them through curriculum customization. We will explore how engaging in this curriculum customization process over multiple years in a research-practice partnership impacts teachers’ instructional decisions when implementing the unit, particularly their support for self-directed science learning. Our prior work found that as teachers engaged in repeated cycles of customization over 2 years, they increased and refined their guidance strategies at specific points during instruction because they anticipated the student ideas likely to emerge (Gerard et al., 2011).

Data Availability

Not applicable.

Code Availability

All curriculum materials featured in this research are open-source.

Notes

The c-rater-ML models used to automatically score the student explanations in the plate tectonics unit have demonstrated adequate quadratic-weighted kappas (κQW) [Mt. Hood κQW = .861, Mountain κQW = .75, Lava Lamp κQW = .81], indicating sufficient agreement between computer-assigned and human-assigned scores (Author et al., 2016a).
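For readers unfamiliar with the agreement statistic, the following minimal Python sketch shows how a quadratic-weighted kappa is typically computed for integer rubric scores, assuming scores on the 1–5 KI scale. It is a generic formulation of the statistic, not the scoring engine’s own evaluation code; the function name and the example scores are hypothetical.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """Quadratic-weighted kappa between two raters' integer scores."""
    if len(rater_a) != len(rater_b):
        raise ValueError("raters must score the same responses")
    k = max_score - min_score + 1
    if k < 2:
        raise ValueError("need at least two score categories")
    n = len(rater_a)
    # Observed agreement matrix: rows = rater A's score, cols = rater B's.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(col) for col in zip(*observed)]
    numerator = denominator = 0.0
    for i in range(k):
        for j in range(k):
            # Disagreement weight grows with the squared score distance.
            weight = (i - j) ** 2 / (k - 1) ** 2
            # Expected count if the two raters scored independently.
            expected = hist_a[i] * hist_b[j] / n
            numerator += weight * observed[i][j]
            denominator += weight * expected
    if denominator == 0:
        raise ValueError("kappa undefined: no expected disagreement")
    return 1.0 - numerator / denominator


# Hypothetical KI scores (1-5) from a human rater and a model; not study data.
human = [2, 3, 3, 4, 5, 3]
model = [2, 3, 4, 4, 5, 3]
print(round(quadratic_weighted_kappa(human, model, 1, 5), 3))
```

Quadratic weights penalize a two-level disagreement four times as heavily as a one-level disagreement, which suits ordinal rubrics such as KI, where adjacent scores are closer in meaning than distant ones.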

References

Beal, C. R., Arroyo, I., Cohen, P. R., & Woolf, B. P. (2010). Evaluation of Animalwatch: An intelligent tutoring system for arithmetic and fractions. Journal of Interactive Online Learning, 9(1), 64–77.

Bereiter, C. (2013). Principled practical knowledge: Not a bridge but a ladder. Journal of the Learning Sciences, 23(1), 4–17.

Berland, L. K., Schwarz, C. V., Krist, C., Kenyon, L., Lo, A. S., & Reiser, B. J. (2016). Epistemologies in practice: Making scientific practices meaningful for students. Journal of Research in Science Teaching, 53(7). https://doi.org/10.1002/tea.21257

Bolhuis, S. (2003). Towards process-oriented teaching for self-directed lifelong learning: A multidimensional perspective. Learning and Instruction, 13, 327–347.

Brown, J., & Livstrom, I. (2020). Secondary science teachers’ pedagogical design capacities for multicultural curriculum design. Journal of Science Teacher Education, 31(8), 821–840. https://doi.org/10.1080/1046560X.2020.1756588

Burton, E. P. (2013). Student work products as a teaching tool for nature of science pedagogical knowledge: A professional development project with in-service secondary science teachers. Teaching and Teacher Education, 29, 156–166.

Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C., Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon, K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian, D. L. (2018). Translating the ICAP theory of cognitive engagement into practice. Cognitive Science, 42(6), 1777–1832.

Chinn, C. A., & Malhotra, B. A. (2002). Epistemologically authentic inquiry in schools: A theoretical framework for evaluating inquiry tasks. Science Education, 86(2), 175–218.

Clark, D. (2006). Longitudinal conceptual change in students’ understanding of thermal equilibrium: An examination of the process of conceptual restructuring. Cognition and Instruction, 24(4), 467–563. https://doi.org/10.1207/s1532690xci2404_3

Cordova, D. I., & Lepper, M. R. (1996). Intrinsic motivation and the process of learning: Beneficial effects of contextualization, personalization, and choice. Journal of Educational Psychology, 88(4), 715–730.

Crawford, L., Lloyd, S., & Knoth, K. (2008). Analysis of student revisions on a state writing test. Assessment for Effective Intervention, 33(2), 108–119.

Davis, E. A., & Krajcik, J. S. (2005). Designing educative curriculum materials to promote teacher learning. Educational Researcher, 34(3), 3–14. https://doi.org/10.3102/0013189x034003003

Davis, E. A., Palincsar, A. S., Smith, P. S., Arias, A. M., & Kademian, S. M. (2017). Educative curriculum materials: Uptake, impact, and implications for research and design. Educational Researcher, 46(6), 293–304. https://doi.org/10.3102/0013189X17727502

Debarger, A. H., Penuel, W. R., Moorthy, S., Beauvineau, Y., Kennedy, C. A., & Boscardin, C. K. (2017). Investigating purposeful science curriculum adaptation as a strategy to improve teaching and learning. Science Education, 101(1), 66–98. https://doi.org/10.1002/sce.21249

diSessa, A. A. (1988). Knowledge in pieces. In G. Forman & P. Pufall (Eds.), Constructivism in the computer age (pp. 49–70). Lawrence Erlbaum Associates.

Drake, C., & Sherin, M. G. (2006). Practicing change: Curriculum adaptation and teacher narrative in the context of mathematics education reform. Curriculum Inquiry, 36(2), 153–187. https://doi.org/10.1111/j.1467-873X.2006.00351.x

Duncan, R. G., Chinn, C. A., & Barzilai, S. (2018). Grasp of evidence: Problematizing and expanding the next generation science standards’ conceptualization of evidence. Journal of Research in Science Teaching, 55, 907–937. https://doi.org/10.1002/tea.21468

Fallik, O., Eylon, B.-S., & Rosenfeld, S. (2008). Motivating teachers to enact free choice project-based learning in science and technology: Effects of a professional development model. Journal of Science Teacher Education, 19(6), 565–591.

Forbes, C. T., & Davis, E. A. (2010). Curriculum design for inquiry: Preservice elementary teachers’ mobilization and adaptation of science curriculum materials. Journal of Research in Science Teaching, 47, 820–839. https://doi.org/10.1002/tea.20379

Freedman, S., Hull, G., Higgs, J., & Booten, K. (2016). Teaching writing in a digital and global age: Toward access, learning, and development for all. In Handbook of Research on Teaching (5th ed., pp. 1389–1449). American Educational Research Association.

Gerard, L., Kidron, A., & Linn, M. C. (2019). Teacher guidance for collaborative revision of science explanations. International Journal of Computer-Supported Collaborative Learning, 14(3), 291–324.

Gerard, L., & Linn, M. C. (2016). Using automated scores of student essays to support teacher guidance in classroom inquiry. Journal of Science Teacher Education, 27(1), 111–129.

Gerard, L., & Linn, M. C. (2022). Computer-based guidance to support students’ revision of their science explanations. Computers & Education, 176. https://doi.org/10.1016/j.compedu.2021.104351

Gerard, L., Ryoo, K., McElhaney, K., Liu, L., Rafferty, A., & Linn, M. C. (2015). Automated guidance for student inquiry. Journal of Educational Psychology, 108(1), 60–81.

Gerard, L. F., Spitulnik, M., & Linn, M. C. (2010). Teacher use of evidence to customize inquiry science instruction. Journal of Research in Science Teaching, 47(9), 1037–1063.

Gerard, L. F., Varma, K., Corliss, S. C., & Linn, M. C. (2011). A review of the literature on professional development in technology-enhanced inquiry science. Review of Educational Research, 81(3), 408–448.

Gess-Newsome, J., Taylor, J. A., Carlson, J., Gardner, A. L., Wilson, C. D., & Stuhlsatz, M. A. M. (2019). Teacher pedagogical content knowledge, practice, and student achievement. International Journal of Science Education, 41(7), 944–963.

Gunstone, R. F., & Champagne, A. B. (1990). Promoting conceptual change in the laboratory. In E. Hegarty-Hazel (Ed.), The student laboratory and the science curriculum. New York: Routledge.

Harn, B., Parisi, D., & Stoolmiller, M. (2013). Balancing fidelity with flexibility and fit: What do we really know about fidelity of implementation in schools? Exceptional Children, 79(2), 181–193.

Hmelo-Silver, C. (2004). Problem-based learning: What and how do students learn? Educational Psychology Review, 16(3), 235–266.

Inhelder, B., & Piaget, J. (1958/1972). The growth of logical thinking from childhood to adolescence, an essay on the construction of formal operational structures. New York: Basic Books.

Janssen, F., Westbroek, H., & Doyle, W. (2015). Practicality studies: How to move from what works in principle to what works in practice. Journal of the Learning Sciences, 24(1), 176–186. https://doi.org/10.1080/10508406.2014.954751

Jimenez-Aleixandre, M. P., Rodriguez, A. B., & Duschl, R. A. (2000). “Doing the lesson” or “doing science”: Argument in high school genetics. Science Education, 84(3), 757–792.

Kali, Y. (2006). Collaborative knowledge-building using the design principles database. International Journal of Computer-Supported Collaborative Learning, 1(2), 187–201. http://link.springer.com/article/10.1007%2Fs11412-006-8993-x

Kamii, C. (1991). Toward autonomy: The importance of critical thinking and choice making. School Psychology Review, 20(3), 382–388.

Kerr, K. A., Marsh, J. A., Ikemoto, G. S., Darilek, H., & Barney, H. (2006). Strategies to promote data use for instructional improvement: Actions, outcomes, and lessons from three urban districts. American Journal of Education, 112, 496–520. https://doi.org/10.1086/505057

Kraft, M. A. (2020). Interpreting effect sizes of education interventions. Educational Researcher, 49(4), 241–253.

Leary, H., Severance, S., Penuel, W., Quigley, D., Sumner, T., & Devaul, H. (2016). Designing a deeply digital science curriculum: Supporting teacher learning and implementation with organizing technologies. Journal of Science Teacher Education, 27(1), 61–77. https://doi.org/10.1007/s10972-016-9452-9

Lewis, C., Perry, R., Friedkin, S., & Roth, J. (2016). How does lesson study improve mathematics instruction? Theory and Practice of Lesson Study in Mathematics, 48(4), 541–554.

Linn, M., & Eylon, B.-S. (2011). Science learning and instruction: Taking advantage of technology to promote knowledge integration. New York: Routledge.

Linn, M. C., & Hsi, S. (2000). Computers, teachers, and peers: Science learning partners. Mahwah, NJ: Erlbaum.

Littenberg-Tobias, J., Beheshti, E., & Staudt, C. (2016). To customize or not to customize? Exploring science teacher customization in an online lesson portal. Journal of Research in Science Teaching, 53(3), 349–367. https://doi.org/10.1002/tea.21300

Liu, O. L., Lee, H.-S., Hofstetter, C., & Linn, M. C. (2008). Assessing knowledge integration in science: Construct, measures and evidence. Educational Assessment, 13(1), 33–55. https://doi.org/10.1080/10627190801968224

Matuk, C., & Linn, M. C. (2018). Why and how do middle school students exchange ideas during science inquiry? International Journal of Computer-Supported Collaborative Learning, 13(3), 263–299.

Matuk, C. F., Linn, M. C., & Eylon, B.-S. (2015). Technology to support teachers using evidence from student work to customize technology-enhanced inquiry units. Instructional Science, 43, 229–257.

Mercier, H., & Sperber, D. (2011). Why do humans reason? Arguments for an argumentative theory. Behavioral and Brain Sciences, 34, 57–111. https://doi.org/10.1017/S0140525X1000096

Nickerson, R. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175–220. https://doi.org/10.1037/1089-2680.2.2.175

Penuel, W. R. (2017). Research–practice partnerships as a strategy for promoting equitable science teaching and learning through leveraging everyday science. Science Education, 101(4), 520–525.

Penuel, W. R., & Gallagher, L. P. (2009). Preparing teachers to design instruction for deep understanding in middle school earth science. Journal of the Learning Sciences. https://doi.org/10.1080/10508400903191904

Raviv, A., Cohen, S., & Aflalo, E. (2019). How should students learn in the school science laboratory? The benefits of cooperative learning. Research in Science Education, 49(2), 331–345.

Razzaq, L., & Heffernan, N. (2009). To tutor or not to tutor: That is the question. In V. Dimitrova, R. Mizoguchi, B. du Boulay, & A. C. Graesser (Eds.), Artificial Intelligence in Education: Building Learning Systems that Care: From Knowledge Representation to Affective Modelling, Proceedings of the 14th International Conference on Artificial Intelligence in Education, AIED 2009 (pp. 457–464). IOS Press.

Remillard, J. T. (2005). Examining key concepts in research on teachers’ use of mathematics curricula. Review of Educational Research, 75(2), 211–246.

Rivard, L. P. (1994). A review of writing to learn in science: Implications for practice and research. Journal of Research in Science Teaching, 31(9), 969–983.

Roll, I., Baker, R. S. D., Aleven, V., & Koedinger, K. R. (2014). On the benefits of seeking (and avoiding) help in online problem-solving environments. Journal of the Learning Sciences, 23(4), 537–560. https://doi.org/10.1080/10508406.2014.88397

Roseman, J., Herrmann-Abell, C., & Koppal, M. (2017). Designing for the next generation science standards: Educative curriculum materials and measures of teacher knowledge. Journal of Science Teacher Education, 28(1), 111–141. https://doi.org/10.1080/1046560X.2016.1277598

Sampson, V., & Clark, D. (2009). The impact of collaboration on the outcomes of scientific argumentation. Science Education, 93, 448–484. https://doi.org/10.1002/sce.20306

Sun, D., Looi, C.-K., & Xie, W. (2016). Learning with collaborative inquiry: A science learning environment for secondary students. Technology, Pedagogy and Education, 26(3).

Tekkumru-Kisa, M., Stein, M. K., & Doyle, W. (2020). Theory and research on tasks revisited: Task as a context for students’ thinking in the era of ambitious reforms in mathematics and science. Educational Researcher.

Vogel, F., Wecker, C., Kollar, I., et al. (2017). Socio-cognitive scaffolding with computer-supported collaboration scripts: A meta-analysis. Educational Psychology Review, 29, 477–511.

Voogt, J., Laferriere, T., Breuleux, A., Itow, R. C., Hickey, D. T., & McKenney, S. (2015). Collaborative design as a form of professional development. Instructional Science, 43(2), 259–282. https://doi.org/10.1007/s11251-014-9340-7

Wen, C.-T., Liu, C.-C., Chang, H.-Y., Chang, C.-J., Chang, M.-H., Fan Chiang, S.-H., Yang, C.-W., & Hwang, F.-K. (2020). Students’ guided inquiry with simulation and its relation to school science achievement and scientific literacy. Computers & Education. https://doi.org/10.1016/j.compedu.2020.103830

White, B., & Frederiksen, J. (1998). Inquiry, modeling, and metacognition: Making science accessible to all students. Cognition and Instruction, 16, 3–118. https://doi.org/10.1207/s1532690xci1601_2

Winne, P. H. (2018). Cognition and metacognition within self-regulated learning. In D. H. Schunk & J. A. Greene (Eds.), Educational psychology handbook series. Handbook of self-regulation of learning and performance (pp. 36–48). Routledge/Taylor & Francis Group.

Ye, L., Recker, M., Walker, A., Leary, H., & Yuan, M. (2015). Expanding approaches for understanding impact: Integrating technology, curriculum, and open educational resources in science education. Educational Technology Research and Development, 63(3), 355–380.

Zhu, M., Liu, O. L., & Lee, H. S. (2020). The effect of automated feedback on revision behavior and learning gains in formative assessment of scientific argument writing. Computers & Education. https://doi.org/10.1016/j.compedu.2019.103668

Zimmerman, B. J. (2002). Becoming a self-regulated learner: An overview. Theory into Practice, 41(2), 64–70.

Funding

We received funding from the William and Flora Hewlett Foundation under POWERED: Personalized Open Web-based Educational Resources-Evaluator & Designer (Grant 2019–9759). This work is also based upon work supported by the National Science Foundation under STRIDES: Supporting Teachers in Responsive Instruction for Developing Expertise in Science (NSF project 1813713). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation or the Hewlett Foundation.

Ethics declarations

Ethics Approval and Consent to Participate

The research involved human participants and informed consent. The Committee for Protection of Human Subjects (CPHS) of [name of institution withheld for blinding] has reviewed and approved this research.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gerard, L., Bradford, A. & Linn, M.C. Supporting Teachers to Customize Curriculum for Self-Directed Learning. J Sci Educ Technol 31, 660–679 (2022). https://doi.org/10.1007/s10956-022-09985-w

DOI: https://doi.org/10.1007/s10956-022-09985-w