Abstract

Large linear reservoirs, while not necessarily of practical utility, might provide insight to large nonlinear reservoirs. Our study of large linear reservoirs in the context of improving predictive capabilities suggests that: one desires to be near the edge of instability; and random matrix theory guarantees that the performance of large linear random matrices is only dependent on how weights in the weight matrix are chosen and not the individual weights. It also seems as though dynamic and static weights are quite different in performance. We comment on how these lessons may or may not apply to the large nonlinear reservoirs that are typically used for prediction applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Time series prediction is of importance for evolved and engineered systems. There are reasons to believe that evolved biological systems wish to predict their input [1,2,3,4], and there are a number of reasons to design an engineered system to predict its input. For example, one might wish to predict the weather or climate, written language, or biological signals. Time series prediction is related to time series modeling, in that a model can often be turned into a predictor, but the two are not quite the same [5].

Discrete-time prediction is a well-worn problem. The common solutions are certain recurrent neural networks (RNNs), and other techniques can be mapped onto RNNs [6]. These networks are designed so that they implicitly have memory of past input that they use to predict future input. To train RNNs, backpropagation through time (BPTT) [7, 8] is used; the network is unrolled so that it looks feedforward with static weights. Training these networks used to be quite difficult, in that gradients either vanished or exploded [9], but recent advances have allowed for amelioration of these difficulties [10]. Both gated recurrent units [11] and long short-term memory units [12] are easier to train and have longer memory of the input.

Reservoir computers (RCs) are a special case of RNNs, in which recurrent weights are usually completely fixed and only readout weights (a small fraction of total weights) are trained. Universal approximation guarantees allow a random reservoir to do as well as a fully-trained network, given enough nodes and a sufficiently rich readout function [13]. This makes training faster, as opposed to an RNN trained from scratch.

The advantages of reservoir computing become more pronounced in continuous-time prediction tasks. One can still use discrete-time RNNs to approach these problems if the input data is discretized. Then, BPTT requires larger and larger unrolled networks as the time discretization becomes smaller and smaller, hence becoming more and more computationally expensive. This large expense limits the applications for which discrete-time RNNs can be utilized. Continuous-time RCs have no such issues, as the training of the readout weights still usually requires no more than regularized linear regression. As such, RCs are a competitive alternative to discrete-time RNNs for continuous-time prediction tasks.

In this manuscript, we study a theoretically tractable version of RCs– linear RCs– in the hopes that the analysis gives insight into the performance of nonlinear RCs, as studying linear feedforward networks gave insight into nonlinear feedforward networks [14]. We aim to discover how, in the linear limit, the chosen weight matrices matter for predictive performance. First we find that the performance of the linear RC is surprisingly insensitive to the actual weight matrix, and that dynamic RCs cannot be used to analyze static RCs (reservoirs in which weights are fixed). Next, we find that there is a local maximum in predictive capability when a node is at the edge of instability, answering an age-old question of whether or not criticality in biology [15], though see [16, 17], is useful for computation for reasons that do not relate to maximization of susceptibility [18]. Previous researchers have shown that being at this edge allows for increased memory [19, 20], although see Ref. [21]. These studies are newer versions of an old debate about computation at the edge of chaos [22,23,24]. No one has shown that being at the edge of instability allows for greater predictive capability, as prediction and memory are not always aligned [25].

The article proceeds as follows. In Sect. 2, we introduce Wiener filters and then RNNs, RCs, and linear RCs. In Sect. 3, we first show insensitivity of predictive performance to the actual weight matrix, and then outline a short proof of the benefits of near-instability for prediction. Finally, we discuss implications for engineered and evolved predictive systems in Sect. 4. Altogether, we provide theoretical justification for two best practices in nonlinear RC design.

2 Background

In Sect. 2.1, we describe the setup of the linear RC. In Sect. 2.2, we describe the filter to which we compare the linear RC– the Wiener filter.

2.1 Recurrent Neural Networks

Let \(h\in \Re ^d\) be the state of the system, or the sensor, and let \(s\in \Re \) be a time-varying input. We study continuous-time systems of the form

where \(f_{\theta }(\cdot ,\cdot )\) is some function of both sensor state and input with parameters \(\theta \). These parameters are essentially weights that govern how h and s affect future sensor states. This is a continuous-time RNN, also known as an input-dependent dynamical system. One can choose any form of f that one wishes, and different RNN architectures result from different choices of f. In discrete-time applications, Long Short-Term Memory units and Gated Recurrent Units are often used to optimize prediction of input. Continuous-time RNNs are less well-studied.

In general, RNNs are hard to train [9, 26], and continuous-time RNNs are much harder to train than discrete-time RNNs, though one could approximate them as discrete-time RNNs and unroll to large depths. RCs were invented to address this issue. RCs involve two components [27]: a reservoir, which is some input-dependent dynamical system with high dimensionality d as in Eq. 1; and a readout layer, which is usually a linear function of h, so that the readout \(\hat{u}\) is

Note that \(\hat{u}\) is often an estimate of a future value of the input. The parameters a and b are then chosen by linear regression, potentially with regularization, so that \(\hat{u}\) mimics whatever aspect of the input signal or other signal one wishes. We have mean-zeroed both the input and the relevant variable so that \(b=0\). Sometimes, nonlinear readouts are considered; we do not consider nonlinear readouts in this paper. Because only linear regression is required to train most RCs, RCs are incredibly easy to train.

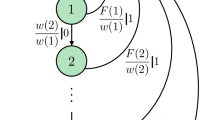

Linear RCs are a limited subset of RCs in which f takes a linear form, so that

We call W the weight matrix, and in this manuscript, it is allowed to vary in time for certain subsections of the results. The vector v governs how the signal s is sent to each of the nodes, and remains static throughout this manuscript. Again, one trains the linear RC to minimize the mean-squared error between \(\hat{u}\) and some signal u. There are closed-form expressions for a and b that we will not use in this paper [25].

2.2 Optimal Linear Filters

In trying to predict u(t) optimally from \(\overleftarrow{s_t}\), the values of s prior to time t, using a linear filter, we have the best linear prediction

The exact equation for \(K^*(t)\) is given in Ref. [28]. Note that \(u^*\) is often an optimal linear estimate of a future value of the input. We assume ergodic input and output. For ease in the appendix, we denote the cross correlation between our input and the signal

and the autocorrelation function

One can show using calculus of variations that to minimize the mean-squared error between u(t) and \(u^*(t)\), it must be the case that

In Sect. 3.2, we assume that \(R_{su}(t)\) and \(R_{ss}(t)\) are everywhere nonnegative, although weaker conditions could be employed. To actually find the optimal linear filter, one uses Wiener-Hopf factorization, but we will not need the explicit solution for this work.

3 Results

In what follows, we study large linear reservoirs. Linearity may seem like a limitation, as the most effective reservoirs are typically mostly nonlinear, though see Ref. [6]. But if we approximate the activation function as having a linear regime and saturation regime(s) in which how the node value changes is roughly constant, it seems plausible that each node is equipped with an automatic forget gate(s), in that once saturation is reached, past information about the input is forgotten. Hence, the memory trace is more of a step function than the exponential decay you see with linear RCs. Once out of the saturation regime, the saturation level and the linearized dynamics determine (in a mean-field manner) the future state of the node, until the next saturation regime is reached. In discrete-time, we can partition nodes into those nodes who have reached saturation 1 timestep ago, 2 timesteps ago, and so on. In continuous-time, to implement an automatic forget gate, we rely on the node staying in the saturation region for some time and thus effectively forgetting past information.

This view of reservoir computers is, to the best of our knowledge, new. This picture is likely only approximate.

Even if this viewpoint is only approximately correct, the study of large linear recurrent networks aids the study of nonlinear ones in that it helps us understand the linearized dynamics of some nodes in a nonlinear recurrent network.

This all leads us to study large linear reservoirs as a guide to the performance of large nonlinear reservoirs. Furthermore, time cells in the brain– which essentially take an approximate inverse Laplace transform of an approximate Laplace transform of input to the hippocampus– can be thought of as linear reservoirs [3, 29], and so a study of linear reservoirs may provide insight into the prediction in the hippocampus.

3.1 Large Randomly-Weighted Linear Reservoirs

First, we study large linear networks with random weights. First, in Sect. 3.1.1, we explain a concentration of measure phenomenon. Next, in Sect. 3.1.2, we showcase the difference between dynamic and static weights, i.e. the difference between when the weights change with time rapidly and when they are static. Although the theoretical study of the difference between static red and dynamic weights is only done in the limit that the input process is a Gaussian process and that the weights of the reservoir are i.i.d., numerical results show that the input process can be non-Gaussian, and a qualitative conclusion still seems to hold.

3.1.1 Concentration of Measure for Static Weights

As remarked upon in Ref. [3], the linear networks that we use here are equivalent to “simple networks” in performance in that one can do a linear transformation and recover nodes that all evolve independently. Precisely,

upon diagonalization of \(W=PDP^{-1}\) becomes

so that the original network is really a network of nodes who independently evolve with timescales based on the eigenvalues of W. Random matrix theory guarantees that different W, if generated by the same prescription, will have similar eigenvalues [30]. Hence, we should expect to see that the performances of large linear networks are governed only by aspects of the prescription for the weights in the weight matrix.

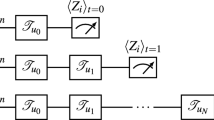

We can see this numerically from the following example. The x-trajectory of the nonlinear Lorenz equations stimulates a linear reservoir that is designed to predict what happens a time \(\tau =0.05\) from the present with N nodes, where N can be 5, 50, or 500. We see not only better performance when there are more nodes, but we also see a concentration of measure phenomenon in Fig. 1 in which the squared correlation coefficients (or prediction function \(m(\tau )\)) of the reservoir state and the future Lorenz input are highly concentrated around 0.9999 for \(N=500\).

Linear reservoirs of sizes \(N=5,~50,~500\) are impinged upon by input form the x-coordinate of the Lorenz attractor and asked to predict what will happen a time 0.05 from now. Shown are squared correlation coefficients between reservoir states (black circles are static weights, blue crosses are dynamic weights) for 15 different reservoirs with weight matrices generated as described below a time 0.05 previous to the x-coordinate of the Lorenz attractor, also known as the prediction function at \(\tau =0.05\), \(m(\tau )\) [25]. The weight matrices are generated so that every entry is drawn i.i.d. from a standard normal distribution, and then the entire matrix is shifted so that the matrix is on the edge of instability. A concentration of measure phenomenon is observed not at all at \(N=5\), already at \(N=50\), and strongly observed at \(N=500\); and dynamic weight reservoirs perform worse than static weight reservoirs

In other words, results in random matrix theory guarantee that the performance of linear RCs with the same weight matrix prescription will be nearly identical as long as the linear RCs are large. Arguments of this type may well apply to nonlinear RCs.

3.1.2 Dynamic Weights Versus Static Weights

There are two options for these weights: they can either change with time, or remain static. If they change with time rapidly, we say that the weights are “dynamic”. If they are static, we say that the weights are “static”. Assuming that weights are dynamic makes mathematical analysis of networks simpler, as in Ref. [31], but we will show here that dynamic weights result in worse performance.

Suppose that the weights are dynamic, so that they change randomly and quite quickly. Furthermore, let us assume that s(t) is generated by a Gaussian process. We will show that the nodes sample independently from an identical Gaussian distribution, leaving only two pieces of information from N nodes.

More precisely, \(h_i\) independently samples from a normal distribution with mean \(\mu (\overleftarrow{s}^t)\) and variance \(\sigma ^2(\overleftarrow{s}^t)\) where \(\overleftarrow{s}^t\) is the past of the input up to time t. As this is a linear system with Gaussian noise, it is easy to see that the statistics of \(\textbf{h}\) are Gaussian. We have to assume that elements of a column in W are uncorrelated and if \(\sum _k \langle W_{ik} W_{jk}\rangle = 0\), conditions that are easily satisfied by weights that are chosen i.i.d. from a mean-zero normal distribution, a standard prescription.

In effect, the features that the linear reservoir is then using to predict are entirely based on the mean and variance of the Gaussian distribution sampled by a node \(h_i\). The crux of the argument is an argument that \(\sum _j W_{ij} h_j\) acts like Gaussian noise, and that the injected noise into each node is uncorrelated. See appendix.

This analysis does not hold for RCs with static weights, as numerics not shown here confirm that there are many nonzero eigenvalues of the covariance matrix instead of just two. In other words, the difference between dynamic and static weights, theoretically, is stark. Dynamic weights allow one to store much less information about the past of the input in the network; nodes are used redundantly. With static, random weights, each node carries information on several timescales in a distributed way.

This leads to better predictive performance for the static system. See Fig. 1. Note that this better performance holds even though the Lorenz attractor does not produce a Gaussian process.

3.2 Local Maximum in Predictive Capability at the Edge of Instability

While in applications RCs have random weight matrices, one could imagine tuning some of the eigenvalues of the weight matrix so as to send RCs to the edge of instability. In this subsection, we show a surprising result: that if all eigenvalues are tuned to the edge of instability, that the RC is locally (but not likely globally) maximizing its predictive power. In other words, the mean is a powerful statistic for prediction. This is shown to be true only when there are positive correlations for \(R_{ss},~R_{su}\).

Our first step will be to view linear RCs as linear filters of the input \(\overleftarrow{s}_t\). It has been shown that these linear RCs are equivalent in predictive capacity to simple versions in which

where \(h_i\) is the \(i^{th}\) node of the reservoir. Hence, we consider these simple versions only. With this simplification, it is straightforward to show that

so that

Our goal is to compare \(\hat{u}(t)\) to some signal, and minimize the average squared difference between them. We allow for the possibility that all eigenvalues are different. Optimizing these parameters \(\lambda _i\) can be difficult, and in general, depends on the statistics of the input process. (This can be seen by applying a scaling argument with a time change to the stochastic process and rescaling the \(\lambda _i\) accordingly.)

There are two steps to this process. First, we choose coefficients \(A_i\) so as to minimize the average squared difference; next, we ask what weights \(\lambda _i\) result in minimal mean-squared error. If the chosen \(\lambda _i\) are near 0 but negative, we can say that prediction is maximized near instability.

The signal that we compare \(\hat{u}\) to can be anything. For instance, we could compare \(\hat{u}(t)\) to \(s(t+\tau )\), where \(\tau >0\); this would imply that we are trying to predict the input signal wholesale, removed by a time of \(\tau \). We will try to be as general as possible. We imagine that there is some signal u(t) that we are trying to predict. We make one change in concept to the RC program and minimize the mean-squared error between our signal \(\hat{u}(t)\) and the optimal linear filtered estimated \(u^*(t)\) that uses \(\overleftarrow{s}_t\) to predict u(t),

Thus, we try to choose \(A_i\) and \(\lambda _i\) so as to minimize

where the key quantity here is the difference between the optimal linear filter and our constrained linear filter,

Some straightforward algebra reveals that

where R(t) is the autocorrelation function of the input. As the optimal linear filter is best expressed as an inverse Laplace transform, we do the same to \(\Delta K(t)\) and write

where \(C_1\) is a line Ti in the complex plane such that all singularities of \(\tilde{K}(s)\) are to the left of the line, so that

If we define a new function

one can show that

using Cauchy’s residue theorem. If we look for the coefficients \(A_i\)– essentially the weights of the readout layer– that lead to minimal MSE, we demand that

and if we define a vector \(g_i = \int _{C_1} w(s,-\lambda _i) K^*(s) ds\) and matrix \(\overleftrightarrow {w}_{i,j} = w(-\lambda _i,-\lambda _j)\) we find that

With this substitution, we find that

Details are contained in the appendix.

The first term is not something we can change by varying the reservoir, but we can vary the reservoir and minimize the second term. In other words, our strategy will be to first choose a \(\textbf{g}\) such that the second term is minimized, and then choose \(\overleftrightarrow {w}\) such that both remaining terms are minimized. This is allowable because for any choice of \(\overleftrightarrow {w}\), we can improve our MSE by choosing \(\textbf{g}\) to minimize just the second term. Even though \(\overleftrightarrow {w}\) and \(\textbf{g}\) vary together as we change the reservoir parameters, we can imagine that for any fixed matrix \(\overleftrightarrow {w}\), MSE is minimized when \(\textbf{g}\) is the eigenvector of \(\overleftrightarrow {w}\) corresponding to the maximal eigenvalue of that matrix. This gives us a lower bound on the error–it follows from Rayleigh’s quotient that \(\textbf{g}^{\top } \overleftrightarrow {w}^{-1}\textbf{g} \ge \sigma _{max}^{-1} \textbf{g}^{\top } \textbf{g}\) where \(\sigma _{max}\) is the maximum eigenvalue of \(\overleftrightarrow {w}\). We can saturate this inequality in the limit that all of the \(\lambda _i\)’s are the same \(\lambda \), although interestingly, for the argument to hold, they cannot be exactly the same \(\lambda \). We show this by writing that \(\overleftrightarrow {w}\) is a perturbed rank 1 matrix \(\overleftrightarrow {w} = w(-\lambda ^*,-\lambda ^*) \textbf{1}\textbf{1}^{\top } + \delta \overleftrightarrow {w}\) where \(\textbf{1}\) is the length-N vector of all 1’s. See appendix. In this case,

where N is the number of nodes. We conjecture that the entries of \(\textbf{g}\) and \(\overleftrightarrow {w}\) are all continuous functions of the eigenvalues and so the achievable lower bound on MSE likewise has a nonsingular limit. In the appendix, we show that \(\frac{dMSE}{d\lambda }|_{0}>0\). Hence, one wishes to at least locally set \(\lambda \) to as close to 0 as possible.

This is not a global maximum of prediction; it is typically only a local maximum. Unless the signal is memoryless, one benefits from having nodes pick up on information besides the mean. For example, a better RC might involve combining the node at the edge of instability that picks up the mean with the nodes that result from the filters of the previous subsection.

4 Conclusion

Although large linear RCs may seem impotent, some arguments suggest that they may be good at prediction [6] and may provide insight into prediction in the brain [3]. Additionally, we asserted in the Results foreword that typical nonlinear reservoirs could be viewed as having automatic forget gates with large numbers of nodes containing information since the last m timesteps. We therefore studied large linear reservoirs in the hopes of discovering which weight matrices led to best predictive performance.

First, we found a concentration of measure result for large randomly-weighted linear reservoirs in which only the eigenvalues of the weight matrix in this picture seems to matter, which means that different weight matrices that are generated from the same prescription or recipe will have identical performance.

Second, we found that dynamic and static reservoirs have very different performance. Untying the reservoir appears to wash out information so that N features turn into only 2 independent features. For nonlinear dynamic reservoirs, we might expect 2m independent features– 2 features per group of nodes. As some people have analyzed the performance of neural networks by assuming that they are dynamic, this result may be of theoretical interest to the mathematical arm of the machine learning community [31].

Finally, we have shown that sitting at the edge of instability results in a local, but not necessarily global, maximum in prediction of a large class of time series. At this edge of instability, a nudge in one direction leads the reservoir state sans input to increase in magnitude without bound, while a nudge in the other direction leads the reservoir sans input to decay to a stable fixed point at the origin. This is not criticality in the sense that autocorrelation functions are power law, and so it would be interesting to see if predictive capabilities are maximized at the edge of criticality for more typical notions of criticality.

One could imagine that a biological system would poise itself near instability if information about the input were hidden, since the locations of the global maxima change depending on input and poising oneself near instability is a local maxima no matter what. In other words, if the environment is adversarial, one might expect the biological system to poise itself near criticality, as it will be nearly ready to predict whatever is thrown at it. This contrasts with evidence that certain algorithms operate best when data is at a critical point between order and disorder [32], as it asks of the properties of the algorithm itself and not the data fed into it.

Future biophysical experiments could focus on subjecting organisms to adversarial, artificial environments to see if they move towards criticality. These results, if found, could be explained by this work. This would require fine-tuning, but may happen nonetheless; indeed, this requires less fine-tuning than matching the timescales of the fluctuating environments seen by the organism.

In conclusion, the study of large linear reservoirs would say that we apparently need only care about eigenvalues of weight matrices and whether or not the weight matrix is at the edge of instability. Surprisingly, this agrees nicely with current best practices for reservoir computing. Best practices for reservoirs assert that we desire a uniform distribution of eigenvalues in the complex plane in which the maximal eigenvalue is placed at the edge of instability. The study of large linear reservoirs has therefore provided theoretical justification for some best practices of nonlinear reservoirs.

Data Availability

Data sharing not applicable—no data was generated during this study.

References

Palmer, S.E., Marre, O., Berry, M.J., Bialek, W.: Predictive information in a sensory population. Proc Natl Acad Sci USA 112(22), 6908–6913 (2015)

Singer, Y., Teramoto, Y., Willmore, B.D.B., Schnupp, J.W.H., King, A.J., Harper, N.S.: Sensory cortex is optimized for prediction of future input. Elife 7, e31557 (2018)

Hsu, A., Marzen, S.E.: Time cells might be optimized for predictive capacity, not redundancy reduction or memory capacity. Phys Rev E 102(6), 062404 (2020)

Rao, R.P.N., Ballard, D.H.: Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 2(1), 79–87 (1999)

Ruebeck, J.B., James, R.G., Mahoney, J.R., Crutchfield, J.P.: Prediction and generation of binary Markov processes: can a finite-state fox catch a Markov mouse? Chaos 28(1), 013109 (2018)

Bollt, E.: On explaining the surprising success of reservoir computing forecaster of chaos: the universal machine learning dynamical system with contrast to var and dmd. Chaos 31(1), 013108 (2021)

Rumelhart, D.E., Hinton, G.E., Williams, R.J.: Learning internal representations by error propagation. Technical report, California Univ San Diego La Jolla Inst for Cognitive Science (1985)

Werbos, P.J., et al.: Backpropagation through time: what it does and how to do it. Proc. IEEE 78(10), 1550–1560 (1990)

Pascanu, R., Mikolov, T., Bengio, Y.: On the difficulty of training recurrent neural networks. In: International Conference on Machine Learning, pp 1310–1318. PMLR (2013)

Kanai, S., Fujiwara, Y., Iwamura, S.: Preventing gradient explosions in gated recurrent units. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 435–444 (2017)

Chung, J., Gulcehre, C., Cho, K.H., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555 (2014)

Schmidhuber, J., Hochreiter, S.: Long short-term memory. Neural Comput 9(8), 1735–1780 (1997)

Maass, W., Markram, H.: On the computational power of circuits of spiking neurons. J. Comput. Syst. Sci. 69(4), 593–616 (2004)

Saxe, A.M., McClelland, J.L., Ganguli, S.: Exact solutions to the nonlinear dynamics of learning in deep linear neural networks. arXiv preprint arXiv:1312.6120 (2013)

Mora, T., Bialek, W.: Are biological systems poised at criticality? J. Stat. Phys. 144(2), 268–302 (2011)

Saremi, S., Sejnowski, T.J.: On criticality in high-dimensional data. Neural Comput. 26(7), 1329–1339 (2014)

Mastromatteo, I., Marsili, M.: On the criticality of inferred models. J. Stat. Mech. 2011(10), P10012 (2011)

Kardar, M.: Statistical Physics of Particles. Cambridge University Press, Cambridge (2007)

Schrauwen, B., Buesing, L., Legenstein, R.: On computational power and the order-chaos phase transition in reservoir computing. In: 22nd Annual conference on Neural Information Processing Systems (NIPS 2008), vol 21, pp 1425–1432. NIPS Foundation (2009)

Toyoizumi, T., Abbott, L.F.: Beyond the edge of chaos: amplification and temporal integration by recurrent networks in the chaotic regime. Phys. Rev. E 84(5), 051908 (2011)

Carroll, T.L.: Do reservoir computers work best at the edge of chaos? Chaos 30(12), 121109 (2020)

Packard, N.H.: Adaptation toward the edge of chaos. Dyn. Patterns Complex Syst. 212, 293–301 (1988)

Langton, C.G.: Computation at the edge of chaos: phase transitions and emergent computation. Physica D 42(1–3), 12–37 (1990)

Mitchell, M., Hraber, P., Crutchfield, J.P.: Revisiting the edge of chaos: evolving cellular automata to perform computations. arXiv preprint arXiv:adap-org/9303003 (1993)

Marzen, S.: Difference between memory and prediction in linear recurrent networks. Phys. Rev. E 96(3), 032308 (2017)

Bengio, Y., Simard, P., Frasconi, P.: Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5(2), 157–166 (1994)

Jaeger, H.: Echo state network. scholarpedia 2(9), 2330 (2007)

Wiener, N., Wiener, N., Mathematician, C., Wiener, N., Wiener, N., Mathématicien, C.: Extrapolation, Interpolation, and Smoothing of Stationary Time Series: With Engineering Applications, vol. 113. MIT Press, Cambridge (1949)

Howard, M.W., MacDonald, C.J., Tiganj, Z., Shankar, K.H., Du, Q., Hasselmo, M.E., Eichenbaum, H.: A unified mathematical framework for coding time, space, and sequences in the hippocampal region. J. Neurosci. 34(13), 4692–4707 (2014)

Tao, T., Van, V., Krishnapur, M.: Random Matrices: Universality of ESDS and the Circular Law. Ann. Probab. 38(5), 2023–2065 (2010)

Ngiam, J., Chen, Z., Chia, D., Koh, P., Le, Q., Ng, A.: Tiled convolutional neural networks. Adv. Neural Inf. Process. Syst. 23 (2010)

Ngampruetikorn, V., Sachdeva, V., Torrence, J., Humplik, J., Schwab, D.J., Palmer, S.E.: Inferring couplings in networks across order-disorder phase transitions. Phys. Rev. Res. 4(2), 023240 (2022)

Acknowledgements

This study was supported by the Air Force Office of Scientific Research, Award FA9550-19-1-0411.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Thierry Mora.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proving Dynamic Weights Lead to Just Two Predictive Features Represented

We are now arguing that the nodes contain only two pieces of information about the input: a mean, and a standard deviation, because the nodes are all drawn i.i.d. from the same Gaussian distribution.

The reasoning is as follows: \(\eta _i := \sum _j W_{ij} h_j\) according to the Central Limit Theorem acts like Gaussian noise, independently injected into each node. We suppose that \(h_i \sim \mathcal {N}(\mu (\overleftarrow{s}^t),\sigma ^2(\overleftarrow{s}^t))\) and confirm that the nodes remain independent under this assumption. We assume that the weights are dynamic, so that there are no correlations between \(W_{ij}\) and \(h_k\):

for \(i\ne j\). If row sums are uncorrelated and if \(\sum _k \langle W_{ik} W_{jk}\rangle = 0\), then the noise into the \(i^{th}\) node is independent of the noise into the \(j^{th}\) node. The noise injected into the \(i^{th}\) node has a mean

and a variance of

As long as each row sum has the same variance and the row sum of the square of W is identical across rows, all noise injections are equivalent. Hence, under these somewhat stringent conditions, all nodes act as independent draws from an identical normal distribution. Note that this analysis holds for both large discrete-time and continuous-time linear reservoirs.

Appendix B: Proving that there is a Local Maximum in Predictive Capability

In this appendix, we first describe the manipulations using Cauchy’s residue theorem that allow us to find the form in Eq. 21. We have

Using Cauchy’s residue theorem, we have

When we take derivatives with respect to \(A_i\) and set them equal to 0, we have

which is solved by Eq. 23 in the main text. We then find Eq. 24.

We now show that there is a local maximum when all the \(\lambda _i\) are essentially the same \(\lambda \). In that limit, we have

where \(\delta w_{ij}\) is small relative to \(w(-\lambda ^*,-\lambda ^*)\). From the Sherman-Morrison formula, we have

We also have

where \(\delta g_i\) is small compared to \(g_i(-\lambda ^*)\). We then have

We Taylor expand to lowest nonzero order in \(\delta w_{ij}\) and \(\delta g_i\) and find

We have that \(\delta \overleftrightarrow {w}_{ij}\le 0\) for \(\lambda ^*=0\) and that \(\delta \overleftrightarrow {w}_{ij}=\delta \overleftrightarrow {w}_{ji}\) from the definition of \(w_{ij}\) and using the condition that the autocorrelation function is everywhere nonnegative. Therefore all eigenvalues of \(\delta \overleftrightarrow {w}\) are negative and small, since as each \(\lambda _i\) approaches \(\lambda ^*\), the entries of \(\delta w\) increase to 0 continuously. Using Rayleigh’s quotient,

The other term is easier to understand. We have that

and so \(g_i \le g_i(-\lambda ^*)\). Hence, \(\delta g_i \le 0\) and \(1^{\top }\delta \textbf{g} \le 0\), and the third term is always positive. Put together, we have

The second term is dwarfed by the third term in the large N limit, as \(\lambda _{max}\) at worst scales as N by the Gershgorin Circle Theorem, and so this is a local max in prediction as long as the reservoir is large.

Finally, we wish to show that the derivative of MSE with respect to \(\lambda ^*\) at \(\lambda ^*=0\) is positive.

The multiplying factor is always positive, as the autocorrelation function integrated is equivalent to the total amount of power by Parseval’s theorem, which is positive; and the numerator is the square of a real number. The factor in the bracket on the right hand side is positive, as the denominator is positive by aforementioned logic and we have assumed that \(R_{ss}(t)\) is everywhere positive. Then we have

and

so that the ratio is

As discussed in Sect. 2, we have that

This could be negative or positive, depending on \(u(t+t')\)’s relation to \(s(t')\). Then

Thus, we find that

Whenever the ratio of integrals on the right hand side is well-defined and positive, \(\frac{\partial MSE}{\partial \lambda }|_{\lambda =0}\) is positive, meaning that criticality results in a local maximum for prediction. For example, this would hold whenever the cross correlation function \(R_{su}(t)\) is everywhere positive and when both numerator and denominator are integrable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hsu, A., Marzen, S.E. Strange Properties of Linear Reservoirs in the Infinitely Large Limit for Prediction of Continuous-Time Signals. J Stat Phys 190, 32 (2023). https://doi.org/10.1007/s10955-022-03040-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-022-03040-z