Abstract

This paper considers three classes of interacting particle systems on \({{\mathbb {Z}}}\): independent random walks, the exclusion process, and the inclusion process. Particles are allowed to switch their jump rate (the rate identifies the type of particle) between 1 (fast particles) and \(\epsilon \in [0,1]\) (slow particles). The switch between the two jump rates happens at rate \(\gamma \in (0,\infty )\). In the exclusion process, the interaction is such that each site can be occupied by at most one particle of each type. In the inclusion process, the interaction takes place between particles of the same type at different sites and between particles of different type at the same site. We derive the macroscopic limit equations for the three systems, obtained after scaling space by \(N^{-1}\), time by \(N^2\), the switching rate by \(N^{-2}\), and letting \(N\rightarrow \infty \). The limit equations for the macroscopic densities associated with the fast and slow particles form the well-studied double diffusivity model. This system of reaction-diffusion equations was introduced to model polycrystal diffusion and dislocation pipe diffusion, with the goal of overcoming the limitations imposed by Fick’s law. In order to investigate the microscopic out-of-equilibrium properties, we analyse the system on \([N]=\{1,\ldots ,N\}\), adding boundary reservoirs of fast and slow particles at sites 1 and N. Inside [N] particles move as before, but now particles are injected and absorbed at sites 1 and N with prescribed rates that depend on the particle type. We compute the steady-state density profile and the steady-state current. It turns out that uphill diffusion is possible, i.e., the total flow can be in the direction of increasing total density. This phenomenon, which cannot occur in a single-type particle system, is a violation of Fick’s law made possible by the switching between types. 
We rescale the microscopic steady-state density profile and steady-state current and obtain the steady-state solution of a boundary-value problem for the double diffusivity model.



1 Introduction

Section 1.1 provides the background and the motivation for the paper. Section 1.2 defines the model. Section 1.3 identifies the dual and the stationary measures. Section 1.4 gives a brief outline of the remainder of the paper.

1.1 Background and Motivation

Interacting particle systems are used to model and analyse properties of non-equilibrium systems, such as macroscopic profiles, long-range correlations and macroscopic large deviations. Some models have additional structure, such as duality or integrability properties, which allow for a study of the fine details of non-equilibrium steady states, such as microscopic profiles and correlations. Examples include zero-range processes, exclusion processes, and models that fit into the algebraic approach to duality, such as inclusion processes and related diffusion processes, or models of heat conduction, such as the Kipnis-Marchioro-Presutti model [9, 17, 22, 30, 37]. Most of these models have indistinguishable particles of which the total number is conserved, and so the relevant macroscopic quantity is the density of particles.

Turning to more complex models of non-equilibrium, various exclusion processes with multi-type particles have been studied [24, 25, 41], as well as reaction-diffusion processes [7, 8, 18,19,20], where non-linear reaction-diffusion equations are obtained in the hydrodynamic limit, and large deviations around such equations have been analysed. In the present paper, we focus on a reaction-diffusion model that on the one hand is simple enough that via duality a complete microscopic analysis of the non-equilibrium profiles can be carried out, but on the other hand exhibits interesting phenomena, such as uphill diffusion and boundary-layer effects. In our model we have two types of particles, fast and slow, that jump at rate 1 and \(\epsilon \in [0,1]\), respectively. Particles of identical type are allowed to interact via exclusion or inclusion. There is no interaction between particles of different type that are at different sites. Each particle can change type at a rate that is adapted to the particle interaction (exclusion or inclusion), and therefore interacts with particles of the other type at the same site. An alternative and equivalent view is to consider two layers of particles, where the layer determines the jump rate (rate 1 for the bottom layer, rate \(\epsilon \) for the top layer) and where on each layer the particles move according to exclusion or inclusion, and to let particles change layer at a rate that is appropriately chosen in accordance with the interaction. In the limit as \(\epsilon \downarrow 0\), particles are immobile on the top layer.

We show that the hydrodynamic limit of all three dynamics is a linear reaction-diffusion system known under the name of double diffusivity model, namely,

$$\begin{aligned} \partial _t \rho _0&= \Delta \rho _0 + \Upsilon (\rho _1-\rho _0),\\ \partial _t \rho _1&= \epsilon \Delta \rho _1 + \Upsilon (\rho _0-\rho _1), \end{aligned}$$

where \(\rho _i\), \(i\in \{0,1\}\), are the macroscopic densities of the two types of particles, and \(\Upsilon \in (0,\infty )\) is the scaled switching rate. The above system was introduced in [1] to model polycrystal diffusion (more generally, diffusion in inhomogeneous porous media) and dislocation pipe diffusion, with the goal of overcoming the restrictions imposed by Fick’s law. Non-Fick behaviour is immediate from the fact that the total density \(\rho =\rho _0+\rho _1\) does not satisfy the classical diffusion equation.
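To get a feel for the double diffusivity model, the following sketch integrates it with an explicit finite-difference scheme. The periodic grid, the step sizes and the helper names `laplacian` and `evolve` are illustrative assumptions, not taken from the paper; the sketch only makes visible two facts used later in the text: the reaction terms move mass between \(\rho _0\) and \(\rho _1\) without changing the total, and for \(\epsilon =1\) the total density solves the heat equation.

```python
import math


def laplacian(r, dx):
    """Periodic second difference (r[k+1] + r[k-1] - 2 r[k]) / dx**2."""
    n = len(r)
    return [(r[(k + 1) % n] + r[k - 1] - 2.0 * r[k]) / dx**2 for k in range(n)]


def evolve(rho0, rho1, eps, ups, dx, dt, steps):
    """Explicit Euler steps for the double diffusivity model on a periodic grid:
        d_t rho0 = Lap rho0 + ups (rho1 - rho0),
        d_t rho1 = eps Lap rho1 + ups (rho0 - rho1).
    A sufficient stability condition is dt * (4 / dx**2 + 2 * ups) <= 2."""
    for _ in range(steps):
        lap0, lap1 = laplacian(rho0, dx), laplacian(rho1, dx)
        # Both new lists are built from the old (rho0, rho1) before rebinding.
        rho0, rho1 = (
            [a + dt * (d2 + ups * (b - a)) for a, b, d2 in zip(rho0, rho1, lap0)],
            [b + dt * (eps * d2 + ups * (a - b)) for a, b, d2 in zip(rho0, rho1, lap1)],
        )
    return rho0, rho1


# Usage: fast particles start with a sinusoidal profile, the slow layer is empty.
n = 50
dx = 1.0 / n
xs = [k * dx for k in range(n)]
init0 = [1.0 + math.sin(2 * math.pi * x) for x in xs]
init1 = [0.0] * n
r0, r1 = evolve(init0, init1, eps=0.1, ups=1.0, dx=dx, dt=1e-4, steps=200)
print(sum(r0) * dx, sum(r1) * dx)  # mass flows from layer 0 into layer 1
```

The explicit scheme is chosen for transparency only; an implicit or spectral method would allow much larger time steps.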

The double diffusivity model was studied extensively in the PDE literature [2, 33, 35], while its discrete counterpart was analysed in terms of a single random walk switching between two layers [34]. The same macroscopic model was studied independently in the mathematical finance literature in the context of switching diffusion processes [49]. Thus, we have a family of interacting particle systems whose macroscopic limit is relevant in several distinct contexts. Our three dynamics also fit into the class of interacting active random walks with an internal state that changes randomly (e.g. activity, internal energy) and that determines their diffusion rate and/or drift [3, 16, 28, 32, 38, 39, 44, 46].

An additional motivation to study two-layer models comes from population genetics. Individuals live in colonies, carry different genetic types, and can be either active or dormant. While active, individuals resample by adopting the type of a randomly sampled individual in the same colony, and migrate between colonies by hopping around. Active individuals can become dormant, after which they suspend resampling and migration, until they become active again. Dormant individuals reside in what is called a seed bank. The overall effect of dormancy is that extinction of types is slowed down, and so genetic diversity is enhanced by the presence of the seed bank. A wealth of phenomena can occur, depending on the parameters that control the rates of resampling, migration, falling asleep and waking up [6, 31]. Dormancy not only affects the long-term behaviour of the population quantitatively, it may also lead to qualitatively different equilibria and time scales of convergence. For a panoramic view of the role of dormancy in the life sciences, we refer the reader to [42].

From the point of view of non-equilibrium systems driven by boundary reservoirs, switching interacting particle systems have not been studied so far. On the one hand, such systems have both reaction and diffusion and therefore exhibit a richer non-equilibrium behaviour. On the other hand, the macroscopic equations are linear and exactly solvable in one dimension, so these systems are simple enough to allow a detailed microscopic analysis. As explained above, the system can be viewed as an interacting particle system on two layers. Duality properties are therefore available, and they allow a detailed analysis of the system coupled to reservoirs through its dual, which is an absorbing system. In one dimension the analysis of the microscopic density profile reduces to the computation of the absorption probabilities of a simple random walk on a two-layer system absorbed at the left and right boundaries. From the analytic solution we can identify both the density profile and the current in the system. This leads to two interesting phenomena. The first is uphill diffusion (see e.g. [12,13,14, 21, 40]), i.e., in a well-defined parameter regime the current can go against the particle density gradient: when the total density of particles at the left end is higher than at the right end, the current can still go from right to left. The second is boundary-layer behaviour: in the limit as \(\epsilon \downarrow 0\), the densities in the top and bottom layer of the macroscopic stationary profile are equal, which for unequal boundary conditions in the top and bottom layer results in a discontinuity in the stationary profile. Corresponding to this jump in the macroscopic system, we identify a boundary layer of size \(\sqrt{\epsilon }\,\log (1/\epsilon )\) in the microscopic system, where the densities are unequal. 
The quantification of the size of this boundary layer is an interesting corollary of the exact macroscopic stationary profile that we obtain from the microscopic system via duality.

1.2 Three Models

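For \(\sigma \in \{-1,0,1\}\) we introduce an interacting particle system on \({{\mathbb {Z}}}\) where the particles randomly switch their jump rate between two possible values, 1 and \(\epsilon \), with \(\epsilon \in [0,1]\). For \(\sigma =-1\) the particles are subject to the exclusion interaction, for \(\sigma =0\) the particles are independent, while for \(\sigma =1\) the particles are subject to the inclusion interaction. Let

$$\begin{aligned} S:=\left\{ \begin{array}{ll} \{0,1\}, & \text {if } \sigma =-1,\\ {{\mathbb {N}}}_0, & \text {if } \sigma \in \{0,1\}. \end{array}\right. \end{aligned}$$

The configuration of the system is

$$\begin{aligned} \eta :=\{\eta (x)\}_{x\in {{\mathbb {Z}}}}\in {\mathcal {X}}:=(S\times S)^{{{\mathbb {Z}}}}, \end{aligned}$$

where

$$\begin{aligned} \eta (x):=(\eta _0(x),\eta _1(x)),\qquad x\in {{\mathbb {Z}}}, \end{aligned}$$

with \(\eta _0(x)\) and \(\eta _1(x)\) the number of fast and slow particles at site x.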
We call \(\eta _0=\{\eta _0(x)\}_{x\in {{\mathbb {Z}}}}\) and \(\eta _1=\{\eta _1(x)\}_{x\in {{\mathbb {Z}}}}\) the configurations of fast particles, respectively, slow particles. When \(\epsilon = 0\) we speak of dormant particles (see Fig. 1).

Definition 1.1

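(Switching interacting particle systems) For \(\epsilon \in [0,1]\) and \(\gamma \in (0,\infty )\), let \(L_{\epsilon ,\gamma }\) be the generator acting on bounded cylindrical functions \(f:{\mathcal {X}}\rightarrow {{\mathbb {R}}}\) as

$$\begin{aligned} L_{\epsilon ,\gamma }f(\eta )&=\sum _{\begin{array}{c} x,y\in {{\mathbb {Z}}}\\ |x-y|=1 \end{array}}\Big \{\eta _0(x)\,(1+\sigma \eta _0(y))\,\big [f(\eta -\delta _{(x,0)}+\delta _{(y,0)})-f(\eta )\big ]\\ &\qquad \qquad +\epsilon \,\eta _1(x)\,(1+\sigma \eta _1(y))\,\big [f(\eta -\delta _{(x,1)}+\delta _{(y,1)})-f(\eta )\big ]\Big \}\\ &\quad +\gamma \sum _{x\in {{\mathbb {Z}}}}\Big \{\eta _0(x)\,(1+\sigma \eta _1(x))\,\big [f(\eta -\delta _{(x,0)}+\delta _{(x,1)})-f(\eta )\big ]\\ &\qquad \qquad +\eta _1(x)\,(1+\sigma \eta _0(x))\,\big [f(\eta -\delta _{(x,1)}+\delta _{(x,0)})-f(\eta )\big ]\Big \}, \end{aligned}$$(1.2)

where \(\delta _{(x,i)}\) denotes the configuration consisting of a single particle at site x in layer i. The Markov process \(\{\eta (t):\,t\ge 0\}\) on state space \({\mathcal {X}}\) with generator \(L_{\epsilon ,\gamma }\), hopping rates \(1,\epsilon \) and switching rate \(\gamma \) is called switching exclusion process for \(\sigma =-1\), switching random walks for \(\sigma =0\) (see Fig. 1), and switching inclusion process for \(\sigma =1\).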

\(\square \)
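As a reading aid for the generator in (1.2), the hypothetical helper below lists the transitions out of a given configuration on a small ring, which stands in for \({{\mathbb {Z}}}\). We assume that a particle in layer i at x jumps to a neighbour y at rate \(D_i\,\eta _i(x)(1+\sigma \eta _i(y))\), with \(D_0=1\) and \(D_1=\epsilon \), and switches layer at rate \(\gamma \,\eta _i(x)(1+\sigma \eta _{1-i}(x))\); this is our reading of the dynamics described above. For \(\sigma =-1\) the factor \(1+\sigma \eta \) vanishes on occupied targets, which enforces at most one particle of each type per site.

```python
def transition_rates(eta, sigma, eps, gamma):
    """Outgoing transitions of a switching particle system on a ring.

    eta   -- pair (eta0, eta1) of equal-length tuples: occupation numbers of the
             fast (layer 0) and slow (layer 1) particles; the ring (length >= 3)
             is a hypothetical finite stand-in for Z.
    sigma -- -1 (exclusion), 0 (independent), 1 (inclusion).
    Returns a list of (new_configuration, rate) pairs with positive rate.
    """
    D = (1.0, eps)                      # horizontal jump rates per layer
    size = len(eta[0])
    moves = []

    def moved(i_from, x_from, i_to, x_to):
        # Configuration after one particle moves from (x_from, i_from) to (x_to, i_to).
        new = [list(eta[0]), list(eta[1])]
        new[i_from][x_from] -= 1
        new[i_to][x_to] += 1
        return (tuple(new[0]), tuple(new[1]))

    for i in (0, 1):
        for x in range(size):
            n = eta[i][x]
            if n == 0:
                continue
            # Horizontal jump x -> y in layer i: rate D_i * n * (1 + sigma * eta_i(y)).
            for y in ((x - 1) % size, (x + 1) % size):
                rate = D[i] * n * (1 + sigma * eta[i][y])
                if rate > 0:
                    moves.append((moved(i, x, i, y), rate))
            # Layer switch at site x: rate gamma * n * (1 + sigma * eta_{1-i}(x)).
            rate = gamma * n * (1 + sigma * eta[1 - i][x])
            if rate > 0:
                moves.append((moved(i, x, 1 - i, x), rate))
    return moves


# Usage: exclusion on a ring of length 4 with two fast particles and one slow particle.
for config, rate in transition_rates(((1, 1, 0, 0), (1, 0, 0, 0)), sigma=-1, eps=0.5, gamma=2.0):
    print(config, rate)
```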

1.3 Duality and Stationary Measures

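The systems defined in (1.2) can be equivalently formulated as jump processes on the graph (see Fig. 1) with vertex set \(\{(x,i) \in {{\mathbb {Z}}} \times I\}\), with \(I = \{0,1\}\) labelling the two layers, and edge set given by the nearest-neighbour relation

$$\begin{aligned} (x,i)\sim (y,j)\iff \big (i=j \text { and } |x-y|=1\big )\ \text {or}\ \big (x=y \text { and } i\ne j\big ). \end{aligned}$$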
In this formulation the particle configuration is

and the generator L is given by

Thus, a single particle (when no other particles are present) is subject to two movements:

(i) Horizontal movement: in layer \(i=0\) and \(i=1\) the particle performs a nearest-neighbour random walk on \({{\mathbb {Z}}}\) at rate 1, respectively, \(\epsilon \).

(ii) Vertical movement: the particle switches layer at the same site at rate \(\gamma \).
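A single particle subject to these two movements can be simulated exactly with the Gillespie algorithm. The sketch below (the function name and interface are our own) draws an exponential waiting time with total rate \(2D_i+\gamma \), where \(D_0=1\) and \(D_1=\epsilon \), and then picks a left jump, a right jump or a layer switch; for \(\epsilon =0\) a particle in layer 1 can only switch back.

```python
import random


def simulate_walker(t_max, eps, gamma, x0=0, layer0=0, rng=None):
    """Gillespie simulation of one particle on Z x {0, 1}.

    In layer i the particle jumps to each nearest neighbour at rate D_i
    (D_0 = 1, D_1 = eps) and switches layer at rate gamma > 0.
    Returns (position, layer) at time t_max.
    """
    rng = rng or random.Random()
    D = (1.0, eps)
    x, i, t = x0, layer0, 0.0
    while True:
        total = 2.0 * D[i] + gamma          # left jump + right jump + switch
        t += rng.expovariate(total)         # waiting time until the next event
        if t > t_max:
            return x, i
        u = rng.random() * total            # choose which event fires
        if u < gamma:
            i = 1 - i
        elif u < gamma + D[i]:
            x -= 1
        else:
            x += 1


# Usage: empirical second moment of the position for eps = 1.
rng = random.Random(42)
samples = [simulate_walker(2.0, eps=1.0, gamma=1.0, rng=rng)[0] for _ in range(20000)]
print(sum(x * x for x in samples) / len(samples))  # theory: E[x^2] = 2 * t_max = 4 when eps = 1
```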

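It is well known (see e.g. [47]) that for these systems there exists a one-parameter family of reversible product measures

$$\begin{aligned} \nu _\theta =\bigotimes _{(x,i)\in {{\mathbb {Z}}}\times I}\nu _{\theta ,(x,i)},\qquad \theta \in \Theta , \end{aligned}$$

with \(\Theta =[0,1]\) if \(\sigma =-1\) and \(\Theta =[0,\infty )\) if \(\sigma \in \{0,1\}\), and with marginals given by

$$\begin{aligned} \nu _{\theta ,(x,i)}(\eta _i(x)=n)=\left\{ \begin{array}{ll} \theta ^n(1-\theta )^{1-n},\quad n\in \{0,1\}, & \text {if } \sigma =-1,\\ \mathrm{e}^{-\theta }\,\dfrac{\theta ^n}{n!},\quad n\in {{\mathbb {N}}}_0, & \text {if } \sigma =0,\\ \dfrac{1}{1+\theta }\left( \dfrac{\theta }{1+\theta }\right) ^n,\quad n\in {{\mathbb {N}}}_0, & \text {if } \sigma =1. \end{array}\right. \end{aligned}$$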

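Moreover, the classical self-duality relation holds, i.e., for all configurations \(\eta , \, \xi \in {\mathcal {X}}\) and for all times \(t\ge 0\),

$$\begin{aligned} {{\mathbb {E}}}_\eta \left[ D(\xi ,\eta (t))\right] ={{\mathbb {E}}}_\xi \left[ D(\xi (t),\eta )\right] , \end{aligned}$$

with \(\{\xi (t): \ t\ge 0\}\) and \(\{\eta (t):\ t\ge 0\}\) two copies of the process with generator given in (1.2) and self-duality function \(D:\,{\mathcal {X}} \times {\mathcal {X}} \rightarrow {{\mathbb {R}}}\) given by

$$\begin{aligned} D(\xi ,\eta ):=\prod _{(x,i)\in {{\mathbb {Z}}}\times I} d(\xi _i(x),\eta _i(x)), \end{aligned}$$(1.5)

with

$$\begin{aligned} d(k,n):=\frac{n!}{(n-k)!}\,\frac{1}{w(k)}\,{\mathbf {1}}_{\{k\le n\}}, \end{aligned}$$

and

$$\begin{aligned} w(k):=\left\{ \begin{array}{ll} 1, & \text {if } \sigma \in \{-1,0\},\\ k!, & \text {if } \sigma =1. \end{array}\right. \end{aligned}$$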

Remark 1.2

(Possible extensions) Note that we could allow for more than two layers, for inhomogeneous rates and for non-nearest neighbour jumps as well, and the same duality relation would still hold (see e.g. [26] for an inhomogeneous version of the exclusion process). More precisely, let \(\{\omega _i(\{x,y\})\}_{x,y\in {{\mathbb {Z}}}}\) and \(\{\alpha _i(x)\}_{x\in {{\mathbb {Z}}}}\) be collections of bounded weights for \(i\in I_M=\{0,1,\ldots , M\}\) with \(M<\infty \). Then the interacting particle systems with generator

with \(\eta =(\eta _i(x))_{(x,i)\in {{\mathbb {Z}}}\times I_M}\), \(\{D_i\}_{i\in I_M}\) a bounded decreasing collection of weights in [0, 1] and \(\gamma _{\{i,i+1\}}\in (0,\infty )\), are still self-dual with duality function as in (1.5), but with I replaced by \(I_M\) and single-site duality functions given by \(d_{(x,i)}(k,n)=\frac{n!}{(n-k)!}\frac{1}{w_{(x,i)}(k)}\,{\mathbf {1}}_{\{k\le n\}}\) with

In the present paper we prefer to stick to the two-layer homogeneous setting in order not to introduce extra notations. However, it is straightforward to extend many of our results to the inhomogeneous multi-layer model.\(\square \)
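The reversibility claim can be checked site by site. The sketch below assumes the marginals stated in Sect. 1.3, namely Bernoulli (\(\sigma =-1\)), Poisson (\(\sigma =0\)) and geometric (\(\sigma =1\)) with mean \(\theta \), and verifies detailed balance for one particle moving from a site holding n particles to a site holding m particles of the receiving type, at rate \(n(1+\sigma m)\). The prefactors 1, \(\epsilon \) and \(\gamma \) are the same in both directions and cancel, so one check covers horizontal jumps in either layer as well as layer switches at a single site. The helper names are our own.

```python
import math


def marginal_pmf(n, theta, sigma):
    """Single-site marginal with mean theta (assumed form):
    Bernoulli (sigma=-1), Poisson (sigma=0), geometric (sigma=1)."""
    if n < 0:
        return 0.0
    if sigma == -1:
        return {0: 1.0 - theta, 1: theta}.get(n, 0.0)
    if sigma == 0:
        return math.exp(-theta) * theta**n / math.factorial(n)
    if sigma == 1:
        q = theta / (1.0 + theta)
        return (1.0 - q) * q**n
    raise ValueError("sigma must be -1, 0 or 1")


def balance_gap(n, m, theta, sigma):
    """Detailed-balance defect nu(n) nu(m) r(n, m) - nu(n-1) nu(m+1) r(m+1, n-1),
    where r(a, b) = a (1 + sigma b) is the rate for one of a particles to move
    onto a site holding b particles of the receiving type."""
    forward = marginal_pmf(n, theta, sigma) * marginal_pmf(m, theta, sigma) * n * (1 + sigma * m)
    backward = (marginal_pmf(n - 1, theta, sigma) * marginal_pmf(m + 1, theta, sigma)
                * (m + 1) * (1 + sigma * (n - 1)))
    return forward - backward


# Usage: largest defect for the inclusion interaction on small occupation numbers.
print(max(abs(balance_gap(n, m, 0.8, 1)) for n in range(10) for m in range(10)))
```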

Duality is a key tool in the study of detailed properties of interacting particle systems, since it allows for explicit computations. It has been used widely in the literature (see, e.g., [20, 43]). In the next section, self-duality (which implies microscopic closure of the evolution equation for the empirical density field) will be used to derive the hydrodynamic limit of the switching interacting particle systems described above. More precisely, we will use self-duality with one and two dual particles to compute the expectation of the evolution of the occupation variables and of the two-point correlations. These are needed, respectively, to control the expectation and the variance of the density field.

1.4 Outline

Section 2 identifies and analyses the hydrodynamic limit of the system in Definition 1.1 after scaling space, time and switching rate diffusively. In doing so, we exhibit a class of interacting particle systems whose microscopic dynamics scales to a macroscopic dynamics called the double diffusivity model. We discuss the solutions of this model, thereby connecting the mathematical literature on materials science with that on financial mathematics. Section 3 looks at what happens, both microscopically and macroscopically, when boundary reservoirs are added, resulting in a non-equilibrium flow. Here uphill diffusion becomes possible, a phenomenon that is absent in single-layer systems: the two layers interact in a way that allows for a violation of Fick’s law. We characterise the parameter regime for uphill diffusion. We show that, in the limit as \(\epsilon \downarrow 0\), the macroscopic stationary profile of the type-1 particles adapts to the macroscopic stationary profile of the type-0 particles, resulting in a discontinuity at the boundary for the case of unequal boundary conditions on the top layer and the bottom layer. Appendix A provides the inverse of a certain boundary-layer matrix.

2 The Hydrodynamic Limit

In this section we scale space, time and switching diffusively, so as to obtain a hydrodynamic limit. In Sect. 2.1 we scale space by 1/N, time by \(N^2\), the switching rate by \(1/N^2\), introduce scaled microscopic empirical distributions, and let \(N\rightarrow \infty \) to obtain a system of macroscopic equations. In Sect. 2.2 we recall some known results for this system, namely, there exists a unique solution that can be represented in terms of an underlying diffusion equation or, alternatively, via a Feynman-Kac formula involving the switching diffusion process.

2.1 From Microscopic to Macroscopic

Let \(N \in {{\mathbb {N}}}\), and consider the scaled generator \(L_{\epsilon ,\gamma _N}\) (recall (1.2)) with \(\gamma _N = \Upsilon /N^2\) for some \(\Upsilon \in (0,\infty )\), i.e., the reaction term is slowed down by a factor \(N^2\) in anticipation of the diffusive scaling we are going to consider.

In order to study the collective behaviour of the particles after scaling of space and time, we introduce the following empirical density fields, which are Radon measure-valued càdlàg (i.e., right-continuous with left limits) processes:

$$\begin{aligned} {\mathsf {X}}^N_i(t):=\frac{1}{N}\sum _{x\in {{\mathbb {Z}}}}\eta _i(x,N^2t)\,\delta _{x/N},\qquad i\in \{0,1\}, \end{aligned}$$

where \(\delta _y\) stands for the Dirac measure at \(y\in {{\mathbb {R}}}\).

In order to derive the hydrodynamic limit for the switching interacting particle systems, we need the following set of assumptions. In the following we denote by \(C^\infty _c({{\mathbb {R}}})\) the space of infinitely differentiable functions with values in \({{\mathbb {R}}}\) and compact support, by \(C_b({{\mathbb {R}}};\sigma )\) the space of bounded and continuous functions with values in \({{\mathbb {R}}}_+\) for \(\sigma \in \{0,1\}\) and with values in [0, 1] for \(\sigma =-1\), by \(C_0({{\mathbb {R}}})\) the space of continuous functions vanishing at infinity, by \(C^2_0({{\mathbb {R}}})\) the space of twice differentiable functions vanishing at infinity and by M the space of Radon measures on \({{\mathbb {R}}}\).

Assumption 2.1

(Compatible initial conditions) Let \( {\bar{\rho }}_i\in C_b({{\mathbb {R}}};\sigma )\) for \(i\in \{0,1\}\) be two given functions, called initial macroscopic profiles. We say that a sequence \((\mu _N)_{N\in {{\mathbb {N}}}}\) of measures on \({\mathcal {X}}\) is a sequence of compatible initial conditions when:

(i) For any \(i\in \{0,1\}\), \(g\in C^\infty _c({{\mathbb {R}}})\) and \(\delta >0,\)

$$\begin{aligned} \lim _{N\rightarrow \infty }\mu _N\left( \left| \langle {\mathsf {X}}^{N}_i(0),g\rangle - \int _{{\mathbb {R}}}\mathrm{d}x\ {\bar{\rho }}_i(x)g(x) \right| >\delta \right) =0. \end{aligned}$$

(ii) There exists a constant \(C<\infty \) such that

$$\begin{aligned} \sup _{(x,i) \in {{\mathbb {Z}}}\times I} {{\mathbb {E}}}_{\mu _N}[\eta _i(x)^2] \le C. \end{aligned}$$(2.1)

\(\square \)

Note that Assumption 2.1(ii) is the same as employed in [11, Theorem 1, Assumption (b)] and is trivial for the exclusion process.

Theorem 2.2

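(Hydrodynamic scaling) Let \({\bar{\rho }}_0,{\bar{\rho }}_1 \in C_b({{\mathbb {R}}};\sigma )\) be two initial macroscopic profiles, and let \((\mu _N)_{N\in {{\mathbb {N}}}}\) be a sequence of compatible initial conditions. Let \({{\mathbb {P}}}_{\mu _N}\) be the law of the measure-valued process

$$\begin{aligned} \left\{ \left( {\mathsf {X}}^N_0(t),{\mathsf {X}}^N_1(t)\right) :\ t\ge 0\right\} \end{aligned}$$

induced by the initial measure \(\mu _N\). Then, for any \(T, \delta >0\), \(i\in \{0,1\}\) and \(g\in C^\infty _c({{\mathbb {R}}})\),

$$\begin{aligned} \lim _{N\rightarrow \infty }{{\mathbb {P}}}_{\mu _N}\left( \sup _{t\in [0,T]}\left| \langle {\mathsf {X}}^{N}_i(t),g\rangle - \int _{{\mathbb {R}}}\mathrm{d}x\ \rho _i(x,t)g(x) \right| >\delta \right) =0, \end{aligned}$$

where \(\rho _0\) and \(\rho _1\) are the unique continuous and bounded strong solutions of the system

$$\begin{aligned} \partial _t \rho _0&= \Delta \rho _0 + \Upsilon (\rho _1-\rho _0),\\ \partial _t \rho _1&= \epsilon \Delta \rho _1 + \Upsilon (\rho _0-\rho _1), \end{aligned}$$(2.2)

with initial conditions

$$\begin{aligned} \rho _0(x,0)={\bar{\rho }}_0(x),\qquad \rho _1(x,0)={\bar{\rho }}_1(x),\qquad x\in {{\mathbb {R}}}. \end{aligned}$$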

Proof

The proof follows the standard route presented in [48, Section 8] (see also [11, 20]). We nevertheless explain the main steps, because the two-layer setup is not standard. First, note that the macroscopic equation (2.2) can be identified directly by computing the action of the rescaled generator \(L^N = L_{\epsilon ,\Upsilon /N^2}\) on the cylindrical functions \(f_i(\eta ):=\eta _i(x), \ i\in \{0,1\}\), namely,

and hence, for any \(g\in C^{\infty }_c({{\mathbb {R}}})\),

where we moved the generator of the simple random walk to the test function by using reversibility w.r.t. the counting measure. By the regularity of g, we thus have

which is the discrete counterpart of the weak formulation of the right-hand side of (2.2), i.e., \(D_i\int _0^t\mathrm{d}s\int _{{\mathbb {R}}}\mathrm{d}x\, \rho _{i}(x,s)\Delta g(x) + \Upsilon \int _0^t\mathrm{d}s\int _{{\mathbb {R}}}\mathrm{d}x\, [\rho _{1-i}(x,s)-\rho _i(x,s)]\,g(x)\), with \(D_0=1\) and \(D_1=\epsilon \). Thus, as a first step, we show that

In order to prove the above convergence we use Dynkin’s martingale formula for Markov processes (see, e.g., [48, Theorem 4.8]), which states that the process defined as

is a martingale w.r.t. the natural filtration generated by the process \(\{\eta _t\}_{t\ge 0}\) and with predictable quadratic variation expressed in terms of the carré du champ, i.e.,

with

We then have, by Chebyshev’s inequality and Doob’s martingale inequality (see, e.g., [36, Section 1.3]),

where in the last equality we explicitly computed the carré du champ. Let \(k\in {{\mathbb {N}}}\) be such that the support of g is in \([-k,k]\). Then, by the regularity of g, (2.4) is bounded by

We now show that, as a consequence of (2.1), for any \((x,i),\ (y,j)\in {{\mathbb {Z}}}\times I,\)

from which we obtain

and the desired convergence follows. In order to prove (2.7), first of all note that, by the Cauchy-Schwarz inequality, it follows from (2.1) that, for any \((x,i),\ (y,j)\in {{\mathbb {Z}}}\times I\),

Moreover, recalling the duality functions given in (1.5) and defining the configuration \(\xi =\delta _{(x,i)}+\delta _{(y,j)}\) for \((x,i)\ne (y,j)\), we have that \(D(\xi ,\eta _t)=\eta _i(x,t)\eta _j(y,t)\) and thus, using the classical self-duality relation,

Labeling the particles in the dual configuration as \((X_t,i_t)\) and \((Y_t,j_t)\) with initial conditions \((X_0,i_0)=(x,i)\) and \((Y_0,j_0)=(y,j)\), we obtain

where we used (2.9) in the last inequality. Similarly, for \(\xi =\delta _{(x,i)}\) and \((X_t,i_t)\) the dual particle with initial condition \((X_0,i_0)=(x,i)\), we have that \({{\mathbb {E}}}_{\mu _N}\left[ \eta _i(x,t) \right] \le {{\mathbb {E}}}_{\mu _N}\left[ D(\xi ,\eta _t )\right] ={{\mathbb {E}}}_\xi [ {{\mathbb {E}}}_{\mu _N} [\eta _{i_t}(X_t) ] ]\). Using that \(\eta _i(x)\le \eta _i(x)^2\) for any \((x,i)\in {{\mathbb {Z}}}\times I\) and using (2.1), we obtain (2.7). The proof is concluded after showing the following:

(i) Tightness holds for the sequence of distributions of the processes \(\{{\mathsf {X}}^N_i\}_{N\in {{\mathbb {N}}}}\), denoted by \(\{Q_N\}_{N\in {{\mathbb {N}}}}\).

(ii) All limit points coincide and are concentrated on the unique path \({\mathsf {X}} _i(t, \mathrm{d}x)=\rho _i(x,t)\,\mathrm{d}x\), with \(\rho _i\) the unique weak (and in particular strong) bounded and continuous solution of (2.2).

While for (i) we provide an explanation, we skip the proof of (ii) because it is standard and is based on PDE arguments, namely, the existence and the uniqueness of the solutions in the class of continuous-time functions with values in \(C_b({{\mathbb {R}}},\sigma )\) (we refer to [48, Lemmas 8.6 and 8.7] for further details), and the fact that Assumption 2.1(i) ensures that the initial condition of (2.2) is also matched.

Tightness of the sequence \(\{Q_N\}_{N\in {{\mathbb {N}}}}\) follows from the compact containment condition on the one hand, i.e., for any \(\delta >0\) and \(t>0\) there exists a compact set \(K\subset M\) such that \({{\mathbb {P}}}_{\mu _N}({\mathsf {X}}^N_i(t)\in K)>1-\delta \), and the equi-continuity condition on the other hand, i.e., for every \({\mathfrak {e}}>0\) there exists \(\delta >0\) such that \(\limsup _{N\rightarrow \infty }{{\mathbb {P}}}_{\mu _N}( \omega ({\mathsf {X}}_i^N,\delta ,T)\ge {\mathfrak {e}})\le {\mathfrak {e}}\), where \(\omega (\alpha ,\delta ,T):=\sup \{ d_M(\alpha (s),\alpha (t)): s,t\in [0,T], |s-t|\le \delta \}\) and \(d_M\) is the metric on Radon measures defined as

for an appropriately chosen sequence of functions \(( \phi _j )_{j\in {{\mathbb {N}}}}\) in \(C^{\infty }_c({{\mathbb {R}}})\). We refer to [48, Section A.10] for details on the above metric and to the proof of [48, Lemma 8.5] for the equi-continuity condition. We conclude by proving the compact containment condition. Define

with \(A>0\) such that \(\frac{C\pi }{6A}<\delta \). By [48, Proposition A.25], we have that K is a pre-compact subset of M. Moreover, by the Markov inequality and Assumption 2.1(ii), it follows that

from which it follows that \(Q_N({{\bar{K}}})>1-\delta \) for any N. \(\square \)
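The step "by the regularity of g" in the proof rests on the fact that the rescaled lattice Laplacian \(N^2[g(x+1/N)+g(x-1/N)-2g(x)]\) converges to \(g''(x)\) uniformly, with an error of order \(N^{-2}\) (the next Taylor term is \(g''''(x)/(12N^2)\)). A quick numerical check, using the Gaussian \(\mathrm{e}^{-x^2}\) as a smooth stand-in for a compactly supported test function (an assumption made only to have a closed-form \(g''\)); the helper names are our own:

```python
import math


def scaled_discrete_laplacian(g, x, N):
    """N^2 [g(x + 1/N) + g(x - 1/N) - 2 g(x)]: lattice Laplacian after diffusive scaling."""
    return N**2 * (g(x + 1.0 / N) + g(x - 1.0 / N) - 2.0 * g(x))


def g(x):
    """Smooth, rapidly decaying stand-in for a test function in C_c^infinity."""
    return math.exp(-x * x)


def g_second(x):
    """Exact second derivative of g."""
    return (4.0 * x * x - 2.0) * math.exp(-x * x)


def max_error(N):
    """Sup-norm error of the scaled lattice Laplacian on a grid of [-3, 3]."""
    grid = [k / 100.0 for k in range(-300, 301)]
    return max(abs(scaled_discrete_laplacian(g, x, N) - g_second(x)) for x in grid)


for N in (25, 50, 100, 200):
    print(N, max_error(N))  # the error should shrink by roughly a factor 4 per doubling of N
```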

Remark 2.3

(Total density)

(i) If \(\rho _0,\rho _1\) are smooth enough and satisfy (2.2), then by taking extra derivatives we see that the total density \(\rho :=\rho _0+\rho _1\) satisfies the thermal telegrapher equation

$$\begin{aligned} \partial _t \left( \partial _t \rho + 2\Upsilon \rho \right) = - \epsilon \Delta (\Delta \rho ) + (1+\epsilon )\Delta \left( \partial _t \rho + \Upsilon \rho \right) , \end{aligned}$$(2.11)

which is second order in \(\partial _t\) and fourth order in \(\partial _x\) (see [2, 33] for a derivation). Indeed, (2.2) can be written as \((\partial _t-\Delta +\Upsilon )\rho _0=\Upsilon \rho _1\) and \((\partial _t-\epsilon \Delta +\Upsilon )\rho _1=\Upsilon \rho _0\); applying \(\partial _t-\epsilon \Delta +\Upsilon \) to the first identity gives \([(\partial _t-\epsilon \Delta +\Upsilon )(\partial _t-\Delta +\Upsilon )-\Upsilon ^2]\rho _0=0\), the same identity holds for \(\rho _1\), and expanding the operator yields (2.11). Note that (2.11) shows that the total density does not satisfy the usual diffusion equation. This fact will be investigated in detail in the next section, where we analyse the non-Fick property of \(\rho \).

(ii) If \(\epsilon =1\), then the total density \(\rho \) satisfies the heat equation \(\partial _t\rho = \Delta \rho \).

(iii) If \(\epsilon =0\), then (2.11) reads

$$\begin{aligned} \partial _t\left( \partial _t \rho + 2\Upsilon \rho \right) = \Delta \left( \partial _t \rho + \Upsilon \rho \right) , \end{aligned}$$

which is known as the strongly damped wave equation. The term \(\partial _t(2\Upsilon \rho )\) is referred to as frictional damping, the term \(\Delta (\partial _t \rho )\) as Kelvin-Voigt damping (see [10]).

\(\square \)
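Equation (2.11) can also be checked mode by mode. Substituting \(\rho _i=c_i\,\mathrm{e}^{\lambda t+\mathrm{i}kx}\) into (2.2) turns \(\Delta \) into \(-k^2\) and yields the symmetric matrix \(\left( {\begin{smallmatrix} -k^2-\Upsilon & \Upsilon \\ \Upsilon & -\epsilon k^2-\Upsilon \end{smallmatrix}} \right) \), whose eigenvalues \(\lambda \) are the decay rates of the two Fourier modes; each of them must make the symbol of (2.11) vanish. The sketch below (names are illustrative) checks this numerically and, for \(\epsilon =1\), recovers the heat-equation rate \(\lambda =-k^2\) of item (ii).

```python
import math


def fourier_rates(k, eps, ups):
    """Eigenvalues of the Fourier symbol [[-k^2 - ups, ups], [ups, -eps k^2 - ups]]
    of system (2.2) at wavenumber k (symmetric matrix, so both are real)."""
    a, d = -k**2 - ups, -eps * k**2 - ups
    mean = (a + d) / 2.0
    half_gap = math.sqrt(((a - d) / 2.0) ** 2 + ups**2)
    return mean + half_gap, mean - half_gap


def telegrapher_residual(lam, k, eps, ups):
    """Symbol of (2.11) with d/dt -> lam and Delta -> -k^2, moved to one side:
    lam (lam + 2 ups) + eps k^4 + (1 + eps) k^2 (lam + ups)."""
    return lam * (lam + 2 * ups) + eps * k**4 + (1 + eps) * k**2 * (lam + ups)


# Usage: the two decay rates for dormant slow particles (eps = 0).
for lam in fourier_rates(k=1.0, eps=0.0, ups=1.0):
    print(lam, telegrapher_residual(lam, 1.0, 0.0, 1.0))
```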

Remark 2.4

(Literature) We mention in passing that in [38] hydrodynamic scaling of interacting particles with internal states has been considered in a different setting and with a different methodology. \(\square \)

2.2 Existence, Uniqueness and Representation of the Solution

The existence and uniqueness of a continuous-time solution \((\rho _0(t),\, \rho _1(t))\) with values in \(C_b({{\mathbb {R}}},\sigma )\) of the system in (2.2) can be proved by standard Fourier analysis. Below we recall some known results that have a more probabilistic interpretation.

Stochastic representation of the solution

The system in (2.2) fits in the realm of switching diffusions (see e.g. [49]), which are widely studied in the mathematical finance literature. Indeed, let \(\{i_t:\,t \ge 0\}\) be the pure jump process on state space \(I=\{0,1\}\) that switches at rate \(\Upsilon \), whose generator acting on bounded functions \(g:\,I \rightarrow {{\mathbb {R}}}\) is

$$\begin{aligned} Ag(i)=\Upsilon \,[g(1-i)-g(i)],\qquad i\in I. \end{aligned}$$

Let \(\{X_t:\,t \ge 0\}\) be the stochastic process on \({{\mathbb {R}}}\) solving the stochastic differential equation

$$\begin{aligned} \mathrm{d}X_t=\sqrt{\psi (i_t)}\,\mathrm{d}W_t, \end{aligned}$$

where \(W_t=B_{2t}\) with \(\{B_t:\, t \ge 0\}\) standard Brownian motion, and \(\psi :\,I\rightarrow \{D_0,D_1\}\) is given by

$$\begin{aligned} \psi (i)=D_i,\qquad i\in I, \end{aligned}$$

with \(D_0=1\) and \(D_1=\epsilon \) in our setting. Let \({\mathcal {L}} = {\mathcal {L}}_{\epsilon ,\Upsilon }\) be the generator defined by

$$\begin{aligned} {\mathcal {L}}f(x,i)=\psi (i)\,\Delta f(x,i)+\Upsilon \,[f(x,1-i)-f(x,i)] \end{aligned}$$

for \(f:\,{{\mathbb {R}}}\times I \rightarrow {{\mathbb {R}}}\) such that \(f(\cdot ,i)\in C^2_0({{\mathbb {R}}})\), where \(\Delta \) acts on the first variable. Then, via a standard computation (see e.g. [29, Eq. (4.4)]), \({\mathcal {L}}\) is the generator of the switching diffusion \(\{(X_t,i_t):\,t\ge 0\}\).

We therefore have the following result, which corresponds to [29, Chapter 5, Section 4, Theorem 4.1] (see also [49, Theorem 5.2]).

Theorem 2.5

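(Stochastic representation of the solution) Suppose that \({\bar{\rho }}_i:\,{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) for \(i\in I\) are continuous and bounded. Then (2.2) has a unique solution given by

$$\begin{aligned} \rho _i(x,t)={{\mathbb {E}}}_{(x,i)}\left[ {\bar{\rho }}_{i_t}(X_t)\right] ,\qquad (x,i)\in {{\mathbb {R}}}\times I,\ t\ge 0, \end{aligned}$$

where \({{\mathbb {E}}}_{(x,i)}\) denotes expectation for the switching diffusion \(\{(X_t,i_t):\,t\ge 0\}\) started at \((X_0,i_0)=(x,i)\).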
Note that if there is only one particle in the system (1.2), then we are left with a single random walk, say \(\{Y_t:\,t \ge 0\}\), whose generator, denoted by \({\mathsf {A}}\), acts on bounded functions \(f:\,{{\mathbb {Z}}}\times I\rightarrow {{\mathbb {R}}}\) as

After we apply the generator to the function \(f(y,i)=y\), we get

i.e., the position of the random walk is a martingale. Computing the quadratic variation via the carré du champ, we find

Hence the predictable quadratic variation is given by

Note that for \(\epsilon =0\) the latter equals the total amount of time the random walk is not dormant up to time t.
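For the nearest-neighbour walk that jumps to each neighbour at rate \(\psi (i)\), the carré du champ of \(f(y,i)=y\) equals \(2\psi (i)\), so the predictable quadratic variation is driven by the occupation integral \(\int _0^t\psi (i_s)\,\mathrm{d}s\) of the layer process. Its mean has a closed form, because the layer process started at \(i_0\) is in the same layer at time s with probability \((1+\mathrm{e}^{-2\gamma s})/2\). The sketch below compares this closed form with a direct simulation of the layer flips; the function names are our own.

```python
import math
import random


def expected_occupation(t, eps, gamma, i0):
    """E[ int_0^t psi(i_s) ds | i_0 ] for the two-state layer process flipping at
    rate gamma, with psi(0) = 1 and psi(1) = eps (closed form)."""
    stationary_part = 0.5 * (1.0 + eps) * t
    transient_part = (1.0 - eps) * (1.0 - math.exp(-2.0 * gamma * t)) / (4.0 * gamma)
    return stationary_part + transient_part if i0 == 0 else stationary_part - transient_part


def sample_occupation(t, eps, gamma, i0, rng):
    """One sample of int_0^t psi(i_s) ds, simulating the flips exactly."""
    psi = (1.0, eps)
    s, i, total = 0.0, i0, 0.0
    while True:
        hold = rng.expovariate(gamma)       # time until the next layer switch
        if s + hold >= t:
            return total + psi[i] * (t - s)
        total += psi[i] * hold
        s += hold
        i = 1 - i


# Usage: exact mean versus a Monte Carlo average, starting in the fast layer.
rng = random.Random(0)
mc = sum(sample_occupation(2.0, 0.2, 0.7, 0, rng) for _ in range(10000)) / 10000
print(expected_occupation(2.0, 0.2, 0.7, 0), mc)
```

Averaging over a uniformly chosen initial layer removes the transient part, which gives the effective growth rate \((1+\epsilon )/2\) of the occupation integral.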

When we diffusively scale the system (scaling the reaction term was done at the beginning of Sect. 2), the quadratic variation becomes

As a consequence, we have the following invariance principle:

Given the path of the process \(\{i_t:\, t\ge 0\}\),

$$\begin{aligned} \lim _{N\rightarrow \infty } \frac{Y_{N^2t}}{N} = W_{\int _0^{t} \mathrm{d}r\,\psi (i_r)} \quad \text {in distribution}, \end{aligned}$$

where \(W_t=B_{2t}\) with \(\{B_t:\, t\ge 0\}\) standard Brownian motion.

Thus, if we knew the path of the process \(\{i_r:\, r\ge 0\}\), then we could express the solution of the system in (2.2) in terms of a time-changed Brownian motion. However, even though \(\{i_r:\, r\ge 0\}\) is a simple flipping process, we cannot say much explicitly about the random time \(\int _0^{t} \mathrm{d}r\,\psi (i_r)\). We therefore look for a simpler formula, in which the relation to Brownian motions with different diffusion coefficients is more explicit. We achieve this by looking at the resolvent of the generator \({\mathcal {L}}\). In the following, we denote by \(\{S_t, \, t\ge 0\}\) the semigroup on \(C_b({{\mathbb {R}}})\) of \(\{W_{t}:\,t\ge 0\}\).
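Theorem 2.5, read as \(\rho _i(x,t)={{\mathbb {E}}}_{(x,i)}[{\bar{\rho }}_{i_t}(X_t)]\), also suggests a simple Monte Carlo scheme: simulate the switching diffusion from (x, i) and average \({\bar{\rho }}_{i_t}(X_t)\). The sketch below uses an Euler discretisation, which is our simplification: Brownian increments of variance \(2\psi (i)\,\mathrm{d}t\) (since \(W_t=B_{2t}\)) and a layer flip with probability \(\Upsilon \,\mathrm{d}t\) per step. It is meant as an illustration of the representation, not as an efficient solver; the function name is our own.

```python
import math
import random


def mc_solution(x, i, t, rho_bar, eps, ups, n_paths, n_steps, rng):
    """Monte Carlo estimate of rho_i(x, t) = E_{(x,i)}[ rho_bar[i_t](X_t) ].

    X is advanced with Euler steps dX = sqrt(psi(i)) dW, where W_t = B_{2t}
    has increments of variance 2 dt; the layer flips with probability ups*dt
    per step (a first-order approximation of the rate-ups switching)."""
    dt = t / n_steps
    psi = (1.0, eps)
    acc = 0.0
    for _ in range(n_paths):
        X, layer = x, i
        for _ in range(n_steps):
            X += math.sqrt(2.0 * psi[layer] * dt) * rng.gauss(0.0, 1.0)
            if rng.random() < ups * dt:
                layer = 1 - layer
        acc += rho_bar[layer](X)
    return acc / n_paths


# Usage: fast particles initially in a Gaussian bump, slow layer initially empty.
rng = random.Random(1)
profiles = (lambda y: math.exp(-y * y), lambda y: 0.0)
print(mc_solution(0.0, 1, 0.5, profiles, eps=0.1, ups=2.0, n_paths=5000, n_steps=50, rng=rng))
```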

Proposition 2.6

(Resolvent) Let \(f:\,{{\mathbb {R}}}\times I\rightarrow {{\mathbb {R}}}\) be a bounded and smooth function. Then, for \(\lambda >0\), \(\epsilon \in (0,1]\) and \(i\in I\),

where \( c_\epsilon (\Upsilon ,\lambda )= \sqrt{\left( \tfrac{1-\epsilon }{\epsilon }\right) ^2\ell (\Upsilon ,\lambda )^2+\frac{\Upsilon ^2}{\epsilon }}\) and \(\ell (\Upsilon ,\lambda )=\frac{\Upsilon +\lambda }{2}\), while for \(\epsilon =0\),

Proof

The proof is split into two parts.

\(\underline{{\mathrm{Case}}\,\,\epsilon >0.}\) We can split the generator \({\mathcal {L}}\) as

i.e., we decouple \(X_t\) and \(i_t\) in the action of the generator. We can now use the Feynman-Kac formula to express the resolvent of the operator \({\mathcal {L}}\) in terms of the operator \(\tilde{{\mathcal {L}}}\). Denoting by \({{\tilde{{{\mathbb {E}}}}}}\) the expectation of the process with generator \(\tilde{{\mathcal {L}}}\), we have, for \(\lambda \in {{\mathbb {R}}}\),

and by the decoupling of \(X_t\) and \(i_t\) under \(\tilde{{\mathcal {L}}}\), we get

Defining

and using again the Feynman-Kac formula, we have

with \(K_{\epsilon }(t,\lambda ) = \mathrm{e}^{t\psi _\epsilon ^{-1}(-\lambda {\varvec{I}} +A)}\psi _\epsilon ^{-1}\).

Using the explicit formula for the exponential of a \(2\times 2\) matrix (see e.g. [4, Corollary 2.4]), we obtain

with \(c_\epsilon (\Upsilon ,\lambda )\) and \(\ell (\Upsilon ,\lambda )\) as in Proposition 2.6, from which we obtain (2.12).

\(\underline{{\mathrm{Case}}\,\,\epsilon =0.}\) We derive \(K_0(t,\lambda )\) by taking the limit \(\epsilon \downarrow 0\) in the previous expression, i.e., \(K_0(t,\lambda )=\lim _{\epsilon \downarrow 0}\) \(K_\epsilon (t,\lambda )\). We thus have that \(K_0(t,\lambda )\) is equal to

from which (2.12) follows. \(\square \)
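The closed form used in the proof is the standard one for \(2\times 2\) matrices: writing \(M=sI+(M-sI)\) with \(s=\mathrm{tr}\,M/2\), the traceless part squares to \(q^2I\) with \(q^2=((a-d)/2)^2+bc\), hence \(\mathrm{e}^M=\mathrm{e}^s[\cosh (q)I+(\sinh (q)/q)(M-sI)]\). For \(M=\psi _\epsilon ^{-1}(-\lambda I+A)\), assuming \(\psi _\epsilon =\mathrm{diag}(1,\epsilon )\) and \(A=\left( {\begin{smallmatrix} -\Upsilon & \Upsilon \\ \Upsilon & -\Upsilon \end{smallmatrix}} \right) \) is the generator matrix of the layer process, one finds \(q=c_\epsilon (\Upsilon ,\lambda )\), which is where \(c_\epsilon \) comes from. The sketch below checks the formula against a truncated Taylor series and checks the identity for q; the function names are our own.

```python
import math


def c_eps(ups, lam, eps):
    """c_eps(Upsilon, lambda) as in Proposition 2.6."""
    ell = (ups + lam) / 2.0
    return math.sqrt(((1 - eps) / eps) ** 2 * ell**2 + ups**2 / eps)


def expm2_closed_form(M):
    """exp(M) for a real 2x2 matrix with real eigenvalues:
    e^s [cosh(q) I + sinh(q)/q (M - s I)], s = tr(M)/2, q^2 = ((a-d)/2)^2 + bc."""
    (a, b), (c, d) = M
    s = (a + d) / 2.0
    q = math.sqrt(((a - d) / 2.0) ** 2 + b * c)   # assumes q^2 >= 0
    ch = math.cosh(q)
    sh = math.sinh(q) / q if q > 0 else 1.0
    e = math.exp(s)
    return [[e * (ch + sh * (a - s)), e * sh * b],
            [e * sh * c, e * (ch + sh * (d - s))]]


def expm2_series(M, terms=60):
    """Truncated Taylor series sum_k M^k / k!, used only as a cross-check."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    term = [[1.0, 0.0], [0.0, 1.0]]
    for k in range(1, terms):
        term = [[(term[r][0] * M[0][col] + term[r][1] * M[1][col]) / k for col in range(2)]
                for r in range(2)]
        result = [[result[r][col] + term[r][col] for col in range(2)] for r in range(2)]
    return result


def kernel(t, lam, eps, ups):
    """K_eps(t, lam) = exp(t psi^{-1}(-lam I + A)) psi^{-1}, psi = diag(1, eps)."""
    M = [[t * (-lam - ups), t * ups], [t * ups / eps, t * (-lam - ups) / eps]]
    E = expm2_closed_form(M)
    return [[E[0][0], E[0][1] / eps], [E[1][0], E[1][1] / eps]]


print(kernel(0.7, 0.5, 0.4, 1.3))
```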

Remark 2.7

(Symmetric layers) Note that for \(\epsilon =1\) we have

\(\square \)

We conclude this section by noting that the system in (2.2) was studied in detail in [2, 33]. By taking Fourier and Laplace transforms and inverting them, the solution can be obtained explicitly in terms of solutions of the classical heat equation. More precisely, using [33, Eq. (2.2)], we have that

and

where \(\upsilon (s)=\frac{2\Upsilon }{1-\epsilon }((t-s)(s-\epsilon t))^{1/2}\), and \(I_0(\cdot )\) and \(I_1(\cdot )\) are the modified Bessel functions of the first kind of order 0 and 1, respectively.

3 The System with Boundary Reservoirs

In this section we consider a finite version of the switching interacting particle systems introduced in Definition 1.1 to which boundary reservoirs are added. Section 3.1 defines the model. Section 3.2 identifies the dual and the stationary measures. Section 3.3 derives the non-equilibrium density profile, both for the microscopic system and the macroscopic system, and offers various simulations. In Sect. 3.4 we compute the stationary horizontal current of slow and fast particles both for the microscopic system and the macroscopic system. Section 3.5 shows that in the macroscopic system, for certain choices of the rates, there can be a flow of particles uphill, i.e., against the gradient imposed by the reservoirs. Thus, as a consequence of the competing driving mechanisms of slow and fast particles, we can have a flow of particles from the side with lower density to the side with higher density.

3.1 Model

We consider the same system as in Definition 1.1, but restricted to \(V:=\{1,\ldots ,N\}\subset {{\mathbb {Z}}}\). In addition, we set \({\hat{V}} := V\cup \{L,R\}\) and attach a left-reservoir to L and a right-reservoir to R, both for fast and slow particles. More precisely, there are four reservoirs (see Fig. 2):

(i) For the fast particles, a left-reservoir at L injects fast particles at \(x=1\) at rate \(\rho _{L,0}(1+\sigma \eta _0(1,t))\) and a right-reservoir at R injects fast particles at \(x=N\) at rate \(\rho _{R,0}(1+\sigma \eta _0(N,t))\). The left-reservoir absorbs fast particles at rate \(1+\sigma \rho _{L,0}\), while the right-reservoir does so at rate \(1+\sigma \rho _{R,0}\).

(ii) For the slow particles, a left-reservoir at L injects slow particles at \(x=1\) at rate \(\rho _{L,1}(1+\sigma \eta _1(1,t))\) and a right-reservoir at R injects slow particles at \(x=N\) at rate \(\rho _{R,1}(1+\sigma \eta _1(N,t))\). The left-reservoir absorbs slow particles at rate \(1+\sigma \rho _{L,1}\), while the right-reservoir does so at rate \(1+\sigma \rho _{R,1}\).

Inside V, the particles move as before.
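Here is a quick sanity check of the reservoir rates in (i)–(ii). The σ-dependent parts cancel in the expected net inflow, so on average a reservoir behaves like a neighbouring site held at density ρ, whatever the value of σ. This is a minimal sketch. It assumes (our reading) that the absorption rate \(1+\sigma \rho \) applies to each particle present at the boundary site. The function name is ours:

```python
def boundary_net_inflow(rho, eta, sigma):
    """Expected net inflow of one particle type at a boundary site (sketch).

    rho   : reservoir parameter, e.g. rho_{L,0}
    eta   : number of particles of that type at the boundary site
    sigma : -1 (exclusion), 0 (independent walks), +1 (inclusion)
    Assumption: the absorption rate 1 + sigma*rho is per particle.
    """
    injection = rho * (1 + sigma * eta)     # reservoir -> boundary site
    absorption = eta * (1 + sigma * rho)    # boundary site -> reservoir
    return injection - absorption           # algebraically equal to rho - eta


for sigma in (-1, 0, 1):
    print(sigma, [boundary_net_inflow(0.4, eta, sigma) for eta in (0, 1)])
```

For every σ the printed values equal \(\rho -\eta \) up to floating-point rounding. This is consistent with the stationary one-point profiles below not involving σ.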

For \(i\in I\), \(x \in V\) and \(t\ge 0\), let \(\eta _{i}(x,t)\) denote the number of particles in layer i at site x at time t. For \(\sigma \in \{-1,0,1\}\), the Markov process \(\{\eta (t):\,t \ge 0\}\) with

has state space

and generator

with

acting on bounded cylindrical functions \(f:\,{\mathcal {X}}\rightarrow {{\mathbb {R}}}\) as

and

acting as

3.2 Duality

In [9] it was shown that the partial exclusion process, a system of independent random walks and the symmetric inclusion process on a finite set V, coupled with proper left and right reservoirs, are dual to the same particle system, but with the reservoirs replaced by absorbing sites. As remarked in [27], the same result holds for more general geometries, with inhomogeneous (site- and edge-dependent) rates, and for several proper reservoirs. Our model is a particular instance of the case treated in [27, Remark 2.2], because we can think of the rates as conductances attached to the edges.

More precisely, we consider the system where particles jump on two copies of

and follow the same dynamics as before in V, but with the reservoirs at L and R absorbing. We denote by \(\xi \) the configuration

where \(\xi _i(x)\) denotes the number of particles at site x in layer i. The state space is \(\hat{{\mathcal {X}}} = {{\mathbb {N}}}_0^{{{\hat{V}}}}\times {{\mathbb {N}}}_0^{{{\hat{V}}}}\), and the generator is

with

acting on cylindrical functions \(f:\,\hat{{\mathcal {X}}} \rightarrow {{\mathbb {R}}}\) as

and

acting as

Proposition 3.1

(Duality) [9, Theorem 4.1] and [27, Proposition 2.3] The Markov processes

with generators L in (3.1) and \({{\hat{L}}}\) in (3.4) are dual. Namely, for all configurations \(\eta \in {\mathcal {X}}\), \(\xi \in \hat{{\mathcal {X}}}\) and times \(t\ge 0\),

where the duality function is given by

where, for \(k,n\in {{\mathbb {N}}}\) and \(i\in I\), \(d(\cdot , \cdot )\) is given in (1.6) and

The proof boils down to checking that the relation

holds for any \(\eta \in {\mathcal {X}}\) and \(\xi \in \hat{{\mathcal {X}}}\), which follows by adapting the proof of [9, Theorem 4.1].

Remark 3.2

(Choice of reservoir rates)

(i) Note that we have chosen the reservoir rates to be 1 both for fast and slow particles. We did this because we view the reservoirs as an external mechanism that injects and absorbs neutral particles, while the particles assume their type as soon as they enter the bulk of the system. In other words, in the present context we view the change of the rate in the two layers as a change of the viscosity properties of the medium in which the particles evolve, rather than as a property of the particles themselves.

(ii) If we were to tune the reservoir rate of the slow particles to be \(\epsilon \), then the duality relation mentioned above would still hold, with the difference that the dual system would have \(\epsilon \) as the rate of absorption for the slow particles. This change of the reservoir rates affects neither our results on the non-Fick properties of the model (see Sect. 3.5 below) nor those on the size of the boundary layer (see Sect. 3.6 below). Indeed, the limiting macroscopic properties we get by changing the rate of the reservoir of the slow particles are the same as the ones we derive later (i.e., the macroscopic boundary-value problem is the same for any choice of reservoir rate). Note that we do not rescale the reservoir rate when we pass from the microscopic to the macroscopic system, which implies that our macroscopic equation has a Dirichlet boundary condition (see (3.44) below).

\(\square \)

Duality is also an essential tool for explicit computations in boundary-driven systems. We refer to [37] and [9], where duality for boundary-driven systems was used to compute the stationary profile by looking at the absorption probabilities of the dual. This is the approach we follow in the next section. We remark that, for the inclusion process and for generalizations of the exclusion process, duality is the only available tool to characterize properties of the non-equilibrium steady state (such as the stationary profile), since more direct methods (such as the matrix formulation in, e.g., [17]) are not applicable in this setting.

3.3 Non-equilibrium Stationary Profile

The existence and uniqueness of the non-equilibrium steady state have also been established in [27, Theorem 3.3] for general geometries, and the argument in that paper can be easily adapted to our setting.

Theorem 3.3

(Stationary measure) [27, Theorem 3.3(a)] For \(\sigma \in \{-1,0,1\}\) there exists a unique stationary measure \(\mu _{stat}\) for \(\{\eta (t):\,t \ge 0\}\). Moreover, for \(\sigma =0\) and for any values of \(\{\rho _{L,0}, \ \rho _{L,1}, \ \rho _{R,0}, \ \rho _{R,1}\}\),

while, for \(\sigma \in \{-1,1\}\), \(\mu _{stat}\) is in general not in product form, unless \(\rho _{L,0}= \rho _{L,1}=\rho _{R,0}= \rho _{R,1}\), for which

where \(\nu _{(x,i), \theta }\) is given in (1.4).

Proof

For \(\sigma =-1\), the existence and uniqueness of the stationary measure follow from the irreducibility of the process and the finiteness of its state space. For \(\sigma \in \{0,1\}\), recall from [27, Appendix A] that a probability measure \(\mu \) on \({\mathcal {X}}\) is said to be tempered if it is characterized by the integrals \(\big \{{{\mathbb {E}}}_\mu [D(\xi ,\eta )]\,:\,\ \xi \in \hat{{\mathcal {X}}} \big \}\) and there exists a \(\theta \in [0,\infty )\) such that \({{\mathbb {E}}}_\mu [ D(\xi ,\eta )]\le \theta ^{|\xi |}\) for any \(\xi \in \hat{{\mathcal {X}}}\). By means of duality we have that, for any \(\eta \in {\mathcal {X}}\) and \(\xi \in \hat{{\mathcal {X}}}\),

from which we conclude that \(\lim _{t\rightarrow \infty }{{\mathbb {E}}}_\eta [D(\xi ,\eta _t)]\le \max \{\rho _{L,0}, \rho _{R,0}, \rho _{L,1}, \rho _{R,1} \}^{|\xi |}\). Let \(\mu _{stat}\) be the unique tempered probability measure such that, for any \(\xi \in \hat{{\mathcal {X}}}\), \({{\mathbb {E}}}_{\mu _{stat}}[D(\xi ,\eta )]\) coincides with (3.7). From the convergence of the marginal moments in (3.7) we conclude that, for any bounded \(f:{\mathcal {X}}\rightarrow {{\mathbb {R}}}\) and any \(\eta \in {\mathcal {X}}\),

Thus, a dominated convergence argument yields that for any probability measure \(\mu \) on \({\mathcal {X}}\),

giving that \(\mu _{stat}\) is the unique stationary measure. The explicit expressions in (3.5) and (3.6) follow from computations similar to those in [9], while, arguing by contradiction as in the proof of [27, Theorem 3.3], we can show that the two-point truncated correlations are non-zero for \(\sigma \in \{-1,1\}\) whenever at least two reservoir parameters are different. \(\square \)

3.3.1 Stationary Microscopic Profile and Absorption Probability

In this section we provide an explicit expression for the stationary microscopic density of each type of particle. To this end, let \(\mu _{stat}\) be the unique non-equilibrium stationary measure of the process

and let \(\{\theta _0({x}),\theta _1({x})\}_{x\in V}\) be the stationary microscopic profile, i.e., for \(x\in V\) and \(i\in I\),

Write \({{\mathbb {P}}}_\xi \) (and \({{\mathbb {E}}}_\xi \)) to denote the law (and the expectation) of the dual Markov process

starting from \(\xi =\{\xi _{0}(x),\xi _{1}(x)\}_{x\in {{\hat{V}}}}\). For \(x\in V\), set

where

and let

Note that \({{\hat{p}}}(\delta _{(x,i)}, \cdot )\) gives the probabilities that the dual process, started from a single particle at site x in layer \(i\in I\), is absorbed at each of the four reservoirs. Using Proposition 3.1 and Theorem 3.3, we obtain the following.

Corollary 3.4

(Dual representation of stationary profile) For \(x\in V\), the microscopic stationary profile is given by

where \(\vec {p}_x,\vec {q}_x\) and \(\vec {\rho }\) are as in (3.10)–(3.12).

We next compute the absorption probabilities associated to the dual process in order to obtain a more explicit expression for the stationary microscopic profile \(\{\theta _0({x}),\theta _1({x})\}_{x\in V}\). The absorption probabilities \({\hat{p}}(\cdot \,,\cdot )\) of the dual process satisfy

where \({{\hat{L}}}\) is the dual generator defined in (3.4), i.e., they are harmonic functions for the generator \({\hat{L}}\).
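For moderate N the harmonic problem for \({\hat{p}}\) can also be solved numerically, which is convenient for checking the closed-form expressions below. This is a sketch, assuming the single-particle dual dynamics implied by the model:

- A dual particle in layer 0 (resp. 1) jumps to each neighbour in V at rate 1 (resp. \(\epsilon \)).
- It switches layer at rate \(\gamma \).
- From site 1 (resp. N) it is absorbed into the reservoir (L, i) (resp. (R, i)) of its current layer i at rate 1 (Remark 3.2).

The matrix assembly and function names are ours, not the matrices in (3.14):

```python
import numpy as np


def absorption_probabilities(N, eps, gamma, reservoir_rate=1.0):
    """Absorption probabilities of a single dual particle (sketch).

    Returns P with P[i, x-1, r] = probability that a dual particle started at
    site x in layer i is absorbed in reservoir r, where
    r = 0: (L,0), 1: (L,1), 2: (R,0), 3: (R,1).
    """
    psi = (1.0, eps)                 # horizontal jump rates of layers 0 and 1
    A = np.zeros((2 * N, 2 * N))     # (total exit rate) minus (internal jump rates)
    B = np.zeros((2 * N, 4))         # rates into the four absorbing reservoirs

    def idx(i, x):                   # row/column index of site x in layer i
        return i * N + (x - 1)

    for i in (0, 1):
        for x in range(1, N + 1):
            s = idx(i, x)
            for y in (x - 1, x + 1):             # jumps inside V
                if 1 <= y <= N:
                    A[s, idx(i, y)] -= psi[i]
                    A[s, s] += psi[i]
            A[s, idx(1 - i, x)] -= gamma         # layer switch
            A[s, s] += gamma
            if x == 1:                           # absorption into (L, i)
                B[s, i] += reservoir_rate
                A[s, s] += reservoir_rate
            if x == N:                           # absorption into (R, i)
                B[s, 2 + i] += reservoir_rate
                A[s, s] += reservoir_rate
    # Harmonicity of each absorption probability: A h = B[:, r].
    H = np.linalg.solve(A, B)
    return H.reshape(2, N, 4)


def stationary_profile(N, eps, gamma, rho_L0, rho_L1, rho_R0, rho_R1):
    """theta[i, x-1] = sum_r P[i, x-1, r] * rho_r (dual representation)."""
    rho = np.array([rho_L0, rho_L1, rho_R0, rho_R1])
    return absorption_probabilities(N, eps, gamma) @ rho


theta = stationary_profile(20, 0.5, 0.05, 2.0, 6.0, 4.0, 2.0)
print(theta[0][:3], theta[1][:3])
```

For each start, the probabilities over the four reservoirs sum to 1. A constant choice of the four reservoir densities therefore yields a constant profile.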

In matrix form, the above translates into the following systems of equations:

where

We divide the analysis of the absorption probabilities into two cases: \(\epsilon =0\) and \(\epsilon >0\).

Case \(\epsilon =0\).

Proposition 3.5

(Absorption probability for \(\epsilon =0\)) Consider the dual process

with generator \({\hat{L}}_{\epsilon ,\gamma ,N}\) (see (3.4)) with \(\epsilon =0\). Then for the dual process, starting from a single particle, the absorption probabilities \({\hat{p}}(\cdot ,\cdot )\) (see (3.11)) are given by

and

and

Proof

Note that, for \(\epsilon =0\), from the linear system in (3.14) we get

Thus, if we set \(\vec {c}=\vec {p}_{2}-\vec {p}_{1}\), then it suffices to solve the following four linear equations in the four unknowns \(\vec {p}_1,\vec {c},\vec {q}_1\), \(\vec {q}_N\):

Solving the above equations we get the desired result. \(\square \)

As a consequence, we obtain the stationary microscopic profile for the original process \(\{\eta (t):\,t \ge 0\}, \ \eta (t) = \{\eta _0(x,t),\eta _{1}(x,t)\}_{x \in V}\) when \(\epsilon =0\).

Theorem 3.6

(Stationary microscopic profile for \(\epsilon =0\)) The stationary microscopic profile \(\{\theta _0(x),\theta _1(x)\}_{x\in V}\) (see (3.9)) for the process \(\{\eta (t):\,t \ge 0\}\) with \(\eta (t) = \{\eta _0(x,t),\eta _{1}(x,t)\}_{x \in V}\) with generator \(L_{\epsilon ,\gamma ,N}\) (see (3.1)) and \(\epsilon =0\) is given by

and

Proof

The proof directly follows from Corollary 3.4 and Proposition 3.5. \(\square \)

Case \(\epsilon >0\).

We next compute the absorption probabilities for the dual process and the stationary microscopic profile for the original process when \(\epsilon >0\).

Proposition 3.7

(Absorption probability for \(\epsilon >0\)) Consider the dual process

with generator \({\hat{L}}_{\epsilon ,\gamma ,N}\) (see (3.4)) with \(\epsilon >0\). Let \({\hat{p}}(\cdot ,\cdot )\) (see (3.11)) be the absorption probabilities of the dual process starting from a single particle, and let \((\vec {p}_x,\vec {q}_x)_{x\in V}\) be as defined in (3.10). Then

where \(\alpha _1,\alpha _2\) are the two roots of the equation

and \(\vec {c}_1,\vec {c}_2,\vec {c}_3,\vec {c}_4\) are vectors that depend on the parameters \(N,\epsilon ,\alpha _1,\alpha _2\) (see (A.4) for explicit expressions).

Proof

Applying the transformation

we see that the system in (3.14) decouples in the bulk (i.e., the interior of V), and

The solution of the above system of recursion equations takes the form

where \(\alpha _1,\alpha _2\) are the two roots of the equation

Rewriting the four boundary conditions in (3.14) in terms of the transformed variables, we get

where \(M_\epsilon \) is given by

Since \(\vec {p}_x = \frac{1}{1+\epsilon }(\vec {\tau }_x+\epsilon \vec {s}_x)\) and \(\vec {q}_x = \frac{1}{1+\epsilon }(\vec {\tau }_x-\vec {s}_x)\), by setting

we get the desired identities. \(\square \)
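The decoupling in the proof can be made explicit. Write \(\tau _x=p_x+\epsilon q_x\) and \(s_x=p_x-q_x\), the inverse of the relations used at the end of the proof. The bulk harmonicity equations are \(p_{x+1}+p_{x-1}-2p_x+\gamma (q_x-p_x)=0\) and \(\epsilon (q_{x+1}+q_{x-1}-2q_x)+\gamma (p_x-q_x)=0\). They give \(\tau _{x+1}+\tau _{x-1}-2\tau _x=0\) and \(s_{x+1}+s_{x-1}-2s_x=\gamma (1+\tfrac{1}{\epsilon })s_x\). Hence \(\tau \) is affine in x, and \(s_x\) is a combination of \(\alpha _1^x\) and \(\alpha _2^x\), where \(\alpha ^2-(2+\gamma (1+\tfrac{1}{\epsilon }))\alpha +1=0\).

This is our own derivation from the single-particle dual dynamics (jump rates 1 and \(\epsilon \), switching rate \(\gamma \)). We expect it to agree with (3.24), which is not reproduced here. A short numerical check follows; the function names are ours:

```python
import math


def bulk_roots(eps, gamma):
    """Roots of alpha**2 - (2 + gamma*(1 + 1/eps))*alpha + 1 = 0, larger first."""
    b = 2.0 + gamma * (1.0 + 1.0 / eps)
    disc = math.sqrt(b * b - 4.0)      # b > 2, so the roots are real and distinct
    return (b + disc) / 2.0, (b - disc) / 2.0


def recursion_residual(eps, gamma, alpha, x=3):
    """Residual of s_{x+1} + s_{x-1} - 2 s_x - gamma(1+1/eps) s_x for s_x = alpha**x."""
    def s(k):
        return alpha ** k
    return s(x + 1) + s(x - 1) - 2.0 * s(x) - gamma * (1.0 + 1.0 / eps) * s(x)


a1, a2 = bulk_roots(eps=0.5, gamma=0.2)
print(a1, a2, a1 * a2)                 # the two roots are reciprocal
print(recursion_residual(0.5, 0.2, a1), recursion_residual(0.5, 0.2, a2))
```

For all \(\epsilon ,\gamma >0\) the roots satisfy \(\alpha _1\alpha _2=1\) and \(\alpha _1>1>\alpha _2>0\). The reason is that their sum exceeds 2 while their product equals 1.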

Without loss of generality, from here onwards we fix the labelling of the roots \(\alpha _1\) and \(\alpha _2\) of the quadratic equation in (3.24) as

Note that, for any \(\epsilon , \gamma >0\), we have

As a corollary, we get the expression for the stationary microscopic profile of the original process.

Theorem 3.8

(Stationary microscopic profile for \(\epsilon >0\)) The stationary microscopic profile \(\{\theta _0(x),\theta _1(x)\}_{x\in V}\) (see (3.9)) for the process \(\{\eta (t):\,t \ge 0\}\) and \(\eta (t) = \{\eta _0(x,t),\eta _{1}(x,t)\}_{x \in V}\) with generator \(L_{\epsilon ,\gamma ,N}\) (see (3.1)) with \(\epsilon >0\) is given by

where \((\vec {c}_i)_{1\le i \le 4}\) are as in (A.4), and

Proof

The proof follows directly from Corollary 3.4 and Proposition 3.7. \(\square \)

Remark 3.9

(Symmetric layers) For \(\epsilon =1,\) the inverse of the matrix \(M_\epsilon \) in the proof of Proposition 3.7 takes a simpler form. This is because for \(\epsilon =1\) the system is fully symmetric. In this case, the explicit expression of the stationary microscopic profile is given by

and

However, note that

which is linear in x only when \(\epsilon =1\), and

which is purely exponential in x.\(\square \)

3.3.2 Stationary Macroscopic Profile and Boundary-Value Problem

In this section we rescale the finite-volume system with boundary reservoirs in the same way as was done for the infinite-volume system in Sect. 2 when we derived the hydrodynamic limit (i.e., space is scaled by 1/N and the switching rate \(\gamma _N\) is scaled such that \(\gamma _N N^2\rightarrow \Upsilon >0\)), and study the validity of Fick’s law at stationarity on the macroscopic scale. Before we do that, we justify below that this scaling of the parameters is indeed the proper choice. It is proper in the sense that we obtain non-trivial pointwise limits (macroscopic stationary profiles) of the microscopic stationary profiles found in the previous sections. Moreover, the resulting limits (when \(\epsilon > 0\)) satisfy the stationary boundary-value problem given in (2.2) with boundary conditions \( \rho ^{\text {stat}}_0(0) = \rho _{L,0}, \ \rho ^{\text {stat}}_0(1) = \rho _{R,0},\ \rho ^{\text {stat}}_1(0) = \rho _{L,1}\) and \(\rho ^{\text {stat}}_1(1) = \rho _{R,1}\).

We say that the macroscopic stationary profiles are given by functions \(\rho ^{\text {stat}}_i: (0,1)\rightarrow {{\mathbb {R}}}\) for \(i\in I\) if, for any \(y\in (0,1)\),

Theorem 3.10

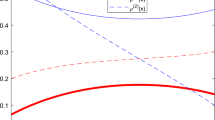

(Stationary macroscopic profile) Let \((\theta _0^{(N)}(x),\theta _1^{(N)}(x))_{x\in V}\) be the stationary microscopic profile (see (3.9)) for the process \(\{\eta (t):\,t \ge 0\}, \ \eta (t) = \{\eta _0(x,t),\eta _{1}(x,t)\}_{x \in V}\) with generator \(L_{\epsilon ,\gamma _N,N}\) (see (3.1)), where \(\gamma _N\) is such that \(\gamma _NN^2\rightarrow \Upsilon \) as \(N\rightarrow \infty \) for some \(\Upsilon >0\). Then, for each \(y\in (0,1)\), the pointwise limits (see Fig. 3)

exist and are given by

when \(\epsilon =0\), while

when \(\epsilon >0\), where \(B_{\epsilon ,\Upsilon }:=\sqrt{\Upsilon (1+\tfrac{1}{\epsilon })}\). Moreover, when \(\epsilon >0\), the two limits in (3.37) are uniform in (0, 1).

Proof

For \(\epsilon =0\), it follows easily from (3.21), together with the facts that \(\gamma _N N^2\rightarrow \Upsilon >0\) and \(\tfrac{\lceil yN\rceil }{N}\rightarrow y\) uniformly in (0, 1) as \(N\rightarrow \infty \), that

and since \(\theta _1(x) = \theta _0(x)\) for all \(x\in \{2,\ldots ,N-1\}\), for fixed \(y\in (0,1)\), we have

When \(\epsilon >0,\) since \(\gamma _N N^2\rightarrow \Upsilon >0\) as \(N\rightarrow \infty ,\) we note the following:

Consequently, from the expressions of \((\vec {c}_i)_{1\le i \le 4}\) defined in (A.4), we also have

Combining the above equations with (3.33), and the fact that \(\tfrac{\lceil yN\rceil }{N}\rightarrow y\) uniformly in (0, 1) as \(N\rightarrow \infty \), we get the desired result. \(\square \)
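The limits noted in the proof rest on how the characteristic roots behave when \(\gamma _N N^2\rightarrow \Upsilon \). Assume that the difference \(s_x=p_x-q_x\) obeys the bulk recursion \(s_{x+1}+s_{x-1}-2s_x=\gamma _N(1+\tfrac{1}{\epsilon })s_x\). This is our own derivation from the single-particle dual dynamics, not a display reproduced from the paper. Then the larger root is \(\alpha _1=e^{\kappa _N}\) with \(\cosh \kappa _N = 1+\tfrac{1}{2}\gamma _N(1+\tfrac{1}{\epsilon })\), and \(N\kappa _N\rightarrow B_{\epsilon ,\Upsilon }\), so that \(\alpha _1^{\lceil yN\rceil }\rightarrow e^{B_{\epsilon ,\Upsilon }y}\). Below is a numerical sketch with \(\gamma _N=\Upsilon /N^2\) exactly; the function names are ours:

```python
import math


def scaled_exponent(N, eps, Upsilon):
    """N * log(alpha_1) when gamma_N = Upsilon / N**2 (sketch).

    alpha_1 = exp(kappa) with cosh(kappa) = 1 + c/2, c = gamma_N (1 + 1/eps).
    Uses sinh(kappa/2) = sqrt(c)/2 to avoid cancellation for large N.
    """
    c = (Upsilon / N**2) * (1.0 + 1.0 / eps)
    kappa = 2.0 * math.asinh(math.sqrt(c) / 2.0)
    return N * kappa


def B(eps, Upsilon):
    """B_{eps,Upsilon} = sqrt(Upsilon (1 + 1/eps))."""
    return math.sqrt(Upsilon * (1.0 + 1.0 / eps))


for N in (10, 100, 1000):
    print(N, scaled_exponent(N, 0.5, 1.0), B(0.5, 1.0))
```

The gap between the two printed columns shrinks like \(B_{\epsilon ,\Upsilon }^3/(24N^2)\), from the Taylor expansion of asinh.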

Remark 3.11

(Non-uniform convergence) Note that for \(\epsilon >0\) both stationary macroscopic profiles, when extended continuously to the closed interval [0, 1], match the prescribed boundary conditions. This is different from what happens for \(\epsilon =0\). There, the continuous extension of \({\rho _1^{\mathrm{{stat}}}}\) to the closed interval [0, 1] equals \({\rho _0^{\mathrm{{stat}}}}(y)= \rho _{L,0}+(\rho _{R,0}-\rho _{L,0})y\), which does not match the prescribed boundary conditions for the slow particles unless \(\rho _{(L,1)}=\rho _{(L,0)}\) and \( \rho _{(R,1)}=\rho _{(R,0)}\). Moreover, as can be seen from the proof above, for \(\epsilon >0\) the convergence of \(\theta _i\) to \(\rho _i\) is uniform in [0, 1], i.e.,

while for \(\epsilon =0\), the convergence of \(\theta _1\) to \(\rho _1\) is not uniform in [0, 1] when either \(\rho _{(L,0)}\ne \rho _{(L,1)}\) or \(\rho _{(R,0)}\ne \rho _{(R,1)}\).

Also, if \(\rho _i^{\text {stat}, \epsilon }(\cdot )\) denotes the macroscopic profile defined in (3.39)–(3.40) for \(\epsilon >0\), then, for \(i\in \{0,1\}\), we have

for fixed \(y\in (0,1)\), where \(\rho _i^{\text {stat}, 0}(\cdot )\) is the corresponding macroscopic profile in (3.38) for \(\epsilon =0\). However, this convergence is also not uniform for \(i=1\) when \(\rho _{(L,0)}\ne \rho _{(L,1)}\) or \(\rho _{(R,0)}\ne \rho _{(R,1)}\). \(\square \)
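Assume the stationary problem reads \(\rho _0''+\Upsilon (\rho _1-\rho _0)=0\) and \(\epsilon \rho _1''+\Upsilon (\rho _0-\rho _1)=0\) on (0, 1), with the four Dirichlet values. This is our reading of the stationary version of (2.2), consistent with \(B_{\epsilon ,\Upsilon }\). Under it, the \(\epsilon >0\) profiles can be written in closed form: \(\rho _0+\epsilon \rho _1\) is affine, and \(d=\rho _0-\rho _1\) solves \(d''=B_{\epsilon ,\Upsilon }^2 d\).

The sketch below is our own derivation, intended to agree with (3.39)–(3.40) but not copied from them. Evaluating it for small \(\epsilon \) shows the boundary behaviour of \(\rho _1\) behind the non-uniform convergence discussed above. The names are ours:

```python
import numpy as np


def macro_profile(y, eps, Upsilon, rho_L0, rho_L1, rho_R0, rho_R1):
    """Closed-form stationary macroscopic profiles for eps > 0 (sketch).

    s = rho_0 + eps*rho_1 is affine in y; d = rho_0 - rho_1 solves
    d'' = B**2 d with B = sqrt(Upsilon (1 + 1/eps)); then
    rho_0 = (s + eps*d)/(1 + eps) and rho_1 = (s - d)/(1 + eps).
    """
    y = np.asarray(y, dtype=float)
    B = np.sqrt(Upsilon * (1.0 + 1.0 / eps))
    s_left = rho_L0 + eps * rho_L1
    s_right = rho_R0 + eps * rho_R1
    s = s_left + (s_right - s_left) * y
    d = ((rho_L0 - rho_L1) * np.sinh(B * (1.0 - y))
         + (rho_R0 - rho_R1) * np.sinh(B * y)) / np.sinh(B)
    rho0 = (s + eps * d) / (1.0 + eps)
    rho1 = (s - d) / (1.0 + eps)
    return rho0, rho1


# Boundary data with equal total densities; small eps shows the boundary bumps.
grid = np.linspace(0.0, 1.0, 11)
r0, r1 = macro_profile(grid, 1e-3, 1.0, 2.0, 4.0, 4.0, 2.0)
print(np.round(r0, 3))
print(np.round(r1, 3))
```

For \(\epsilon =10^{-4}\) the value of \(\rho _1\) at \(y=0.5\) is already very close to the linear fast profile. Meanwhile, \(\rho _1(0)=\rho _{L,1}\) and \(\rho _1(1)=\rho _{R,1}\) still hold, which is the effect described in Remark 3.11.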

In view of the considerations in Remark 3.11, we next concentrate on the case \(\epsilon >0.\) The following result tells us that for \(\epsilon >0\) the stationary macroscopic profiles satisfy a stationary PDE with fixed boundary conditions and also admit a stochastic representation in terms of an absorbing switching diffusion process.

Theorem 3.12

(Stationary boundary value problem) Consider the boundary value problem

with boundary conditions

where \(\epsilon ,\Upsilon >0\), and the four boundary parameters \(\rho _{(L,0)},\, \rho _{(L,1)},\,\rho _{(R,0)},\,\rho _{(R,1)}\) are also positive. Then the PDE admits a unique strong solution given by

where \(({\rho _0^{\mathrm{{stat}}}}(\cdot ),{\rho _1^{\mathrm{{stat}}}}(\cdot ))\) are as defined in (3.37). Furthermore, \(({\rho _0^{\mathrm{{stat}}}}(\cdot ),{\rho _1^{\mathrm{{stat}}}}(\cdot ))\) has the stochastic representation

where \(\{i_t:\,t \ge 0\}\) is the pure jump process on state space \(I=\{0,1\}\) that switches at rate \(\Upsilon \), the functions \(\Phi _0,\Phi _1:\,I\rightarrow {{\mathbb {R}}}_+\) are defined as

\(\{X_t:\,t \ge 0\}\) is the stochastic process on [0, 1] that satisfies the SDE

with \(W_t=B_{2t}\) and \(\{B_t:\, t \ge 0\}\) a standard Brownian motion, the switching diffusion process \(\{(X_t,i_t):\,t\ge 0\}\) is killed at the stopping time

and \(\psi :\,I\rightarrow \{1,\epsilon \}\) is given by \(\psi := {\varvec{1}}_{\{0\}} + \epsilon \,{\varvec{1}}_{\{1\}}\).

Proof

It is straightforward to verify that, for \(\epsilon >0\), the macroscopic profiles \({\rho _0^{\mathrm{{stat}}}},{\rho _1^{\mathrm{{stat}}}}\) defined in (3.39)–(3.40) are uniformly continuous in (0, 1). They can thus be uniquely extended continuously to [0, 1], namely, by setting \({\rho _i^{\mathrm{{stat}}}}(0)=\rho _{(L,i)},\, {\rho _i^{\mathrm{{stat}}}}(1)=\rho _{(R,i)}\) for \(i\in I\). Moreover, \({\rho _i^{\mathrm{{stat}}}}\in C^\infty ([0,1])\) for \(i\in I\), and the pair satisfies the stationary PDE (3.44) with the boundary conditions specified in (3.45).

The stochastic representation of a solution of the system in (3.44) follows from [29, p. 385, Eq. (4.7)]. For the sake of completeness, we give the proof of uniqueness of the solution of (3.44). Let \(u=(u_0,u_1)\) and \(v=(v_0,v_1)\) be two solutions of the stationary reaction-diffusion equation with the boundary conditions specified in (3.45). Then \((w_0,w_1) :=(u_0-v_0,u_1-v_1)\) satisfies

with boundary conditions

Multiplying the two equations in (3.48) by \(w_0\) and \(w_1\), respectively, and using the identity

we get

Integrating both equations by parts over [0, 1], we get

Adding the above two equations and using the zero boundary conditions in (3.49), we have

Since both \(w_0\) and \(w_1\) are continuous and \(\epsilon>0,\Upsilon >0\), it follows that

and so \(w_0=w_1\equiv 0\). \(\square \)
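The stochastic representation can be probed by simulation. This is a crude Euler-scheme sketch. The displays defining \(\Phi _0,\Phi _1\) and the representation are not reproduced here, so we assume the following: a path started at (y, i) and killed on leaving (0, 1) scores \(\rho _{(L,j)}\) or \(\rho _{(R,j)}\), according to the exit side and its layer j at the killing time, and \({\rho _i^{\mathrm{{stat}}}}(y)\) is the expected score. Time discretisation and discrete monitoring of the exit introduce a small bias. The function name and defaults are ours:

```python
import numpy as np


def mc_stationary_density(y0, i0, eps, Upsilon, rho_L, rho_R,
                          n_paths=4000, dt=2e-4, seed=0):
    """Monte Carlo estimate of rho_{i0}^stat(y0) (sketch, Euler scheme).

    rho_L = (rho_{L,0}, rho_{L,1}), rho_R = (rho_{R,0}, rho_{R,1}).
    In layer i, X moves as sqrt(psi(i)) dW with W_t = B_{2t}, i.e. each step
    adds a N(0, 2 psi(i) dt) increment, psi = (1, eps); the layer flips with
    probability Upsilon*dt per step. A path stops when it leaves (0, 1) and
    scores rho_L[i] or rho_R[i] for the exit side and its current layer i.
    """
    rng = np.random.default_rng(seed)
    psi = np.array([1.0, eps])
    rho_L = np.asarray(rho_L, dtype=float)
    rho_R = np.asarray(rho_R, dtype=float)
    x = np.full(n_paths, float(y0))
    layer = np.full(n_paths, int(i0))
    score = np.zeros(n_paths)
    alive = np.arange(n_paths)                  # indices of running paths
    while alive.size > 0:
        noise = rng.standard_normal(alive.size)
        x[alive] += np.sqrt(2.0 * psi[layer[alive]] * dt) * noise
        flip = alive[rng.random(alive.size) < Upsilon * dt]
        layer[flip] = 1 - layer[flip]
        left = alive[x[alive] <= 0.0]
        right = alive[x[alive] >= 1.0]
        score[left] = rho_L[layer[left]]
        score[right] = rho_R[layer[right]]
        alive = alive[(x[alive] > 0.0) & (x[alive] < 1.0)]
    return score.mean()


est = mc_stationary_density(0.3, 0, eps=0.5, Upsilon=1.0,
                            rho_L=(2.0, 6.0), rho_R=(4.0, 2.0))
print(est)
```

With these defaults the estimate should agree with the closed-form value of \({\rho _0^{\mathrm{{stat}}}}(0.3)\) to within roughly 0.1. Decreasing dt reduces the bias, and increasing n_paths reduces the statistical noise.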

Note that, as a result of Theorem 3.12, the four absorption probabilities of the switching diffusion process \(\{(X_t,i_t)\,:\,t\ge 0\}\) starting from \((y,i)\in [0,1]\times I\) are indeed the respective coefficients of \(\rho _{(L,0)},\rho _{(L,1)},\rho _{(R,0)}\), \(\rho _{(R,1)}\) appearing in the expression of \({\rho _i^{\mathrm{{stat}}}}(y)\). Furthermore note that, as a consequence of Theorem 3.12 and the results in [33, Section 3], the time-dependent boundary-value problem

with initial conditions

and boundary conditions

admits a unique solution given by

where

\(\upsilon (s)=\frac{2\Upsilon }{1-\epsilon }((t-s)(s-\epsilon t))^{1/2}\), \(I_0(\cdot )\) and \(I_1(\cdot )\) are the modified Bessel functions of the first kind, and \(h_0(x,t)\), \(h_1(x,t)\) are the solutions of

and \({\rho _0^{\mathrm{{stat}}}}(x)\), \({\rho _1^{\mathrm{{stat}}}}(x)\) are given in (3.40).

We conclude this section by proving that the solution of the time-dependent boundary-value problem in (3.54) converges to the stationary profile in (3.40).

Proposition 3.13

(Convergence to stationary profile) Let \( {\rho _0^{\mathrm{{hom}}}}(x,t)\) and \({\rho _1^{\mathrm{{hom}}}}(x,t)\) be as in (3.58) and (3.59), respectively, i.e., the solutions of the boundary-value problem (3.54) with zero boundary conditions and initial conditions given by \({\rho _0^{\mathrm{{hom}}}}(x,0)= {\bar{\rho }}_0(x)-{\rho _0^{\mathrm{{stat}}}}(x)\) and \({\rho _1^{\mathrm{{hom}}}}(x,0)= {\bar{\rho }}_1(x) -{\rho _1^{\mathrm{{stat}}}}(x)\). Then, for any \(k\in {{\mathbb {N}}}\),

Proof

We start by showing that

Multiply the first equation of (3.54) by \(\rho _0\) and the second equation by \(\rho _1\). Integration by parts yields

Summing the two equations and defining \(E(t):= \int _0^1 \mathrm{d}x\,(\rho _0(x,t)^2 +\rho _1(x,t)^2)\), we obtain

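By the Poincaré inequality (i.e., \(\int _0^1 \mathrm{d}x\,|\partial _x \rho _i(x,t)|^2 \ge C_p \int _0^1 \mathrm{d}x\ |\rho _i(x,t)|^2\), with \(C_p>0\)), and since \(\epsilon \le 1\), we have \(\partial _tE(t)\le -\epsilon C_p E(t)\), from which we obtain. The inequality applies because \(\rho _i(\cdot ,t)\) vanishes at \(x=0\) and \(x=1\), and its optimal constant is \(C_p=\pi ^2\).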

and hence (3.61).

From [45, Theorem 2.1] it follows that

with domain \(D(A)=H^2(0,1)\cap H_0^1(0,1)\), generates a semigroup \(\{{\mathcal {S}}_t:\, t\ge 0\}\). If we set \(\vec {\rho }(t)={\mathcal {S}}_t (\vec {\bar{\rho }}^{\text {hom}})\), with \(\vec {\bar{\rho }}^{\text {hom}}=\vec {\bar{\rho }}-\vec {\rho }^{\text {stat}}\), then by the semigroup property we have

and hence \(A^k\vec {\rho }(t)={\mathcal {S}}_{t-1}(A{\mathcal {S}}_{1/k})^k(\vec {\bar{\rho }}^{\text {hom}})\). If we set \(\vec {p}:=(A{\mathcal {S}}_{1/k})^k(\vec {\bar{\rho }}^{\text {hom}})\), then we obtain, by [45, Theorem 5.2(d)],

where \(\lim _{t\rightarrow \infty }\Vert {\mathcal {S}}_{t-1}\vec {p}\Vert _{L^2(0,1)}=0\) by the first part of the proof. The compact embedding

concludes the proof. \(\square \)
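The energy estimate in the first part of the proof can be observed on a discretisation. This sketch assumes the homogeneous problem reads \(\partial _t\rho _0=\partial _x^2\rho _0+\Upsilon (\rho _1-\rho _0)\), \(\partial _t\rho _1=\epsilon \partial _x^2\rho _1+\Upsilon (\rho _0-\rho _1)\) with zero Dirichlet data, which is our reading of (3.54) with zero boundary values. It uses central differences in space and implicit Euler in time; grid, step size and initial datum are arbitrary choices of ours. The discrete energy is compared with the bound \(E(0)e^{-\epsilon \pi ^2 t}\), i.e., with \(C_p=\pi ^2\):

```python
import numpy as np


def energy_decay(eps, Upsilon, M=100, dt=1e-3, T=0.5):
    """Energy E(t) = int (rho_0^2 + rho_1^2) dx of the discretised problem (sketch).

    M interior grid points, zero Dirichlet values, implicit Euler in time.
    Returns the time grid and the Riemann-sum approximation of E(t).
    """
    h = 1.0 / (M + 1)
    x = np.linspace(h, 1.0 - h, M)
    lap = (np.diag(-2.0 * np.ones(M)) + np.diag(np.ones(M - 1), 1)
           + np.diag(np.ones(M - 1), -1)) / h**2
    I = np.eye(M)
    A = np.block([[lap - Upsilon * I, Upsilon * I],
                  [Upsilon * I, eps * lap - Upsilon * I]])
    step = np.linalg.inv(np.eye(2 * M) - dt * A)    # one implicit Euler step
    u = np.concatenate([np.sin(np.pi * x) + x * (1 - x),
                        2.0 * np.sin(2 * np.pi * x)])
    times, energy = [0.0], [h * u @ u]
    for n in range(1, int(round(T / dt)) + 1):
        u = step @ u
        times.append(n * dt)
        energy.append(h * u @ u)
    return np.array(times), np.array(energy)


t, E = energy_decay(eps=0.5, Upsilon=1.0)
print(E[-1], E[0] * np.exp(-0.5 * np.pi**2 * t[-1]))
```

Implicit Euler is used because the system is symmetric and negative definite. Each implicit step therefore contracts the discrete \(L^2\) norm, so the monotone decay is not an artefact of the time step.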

3.4 The Stationary Current

In this section we compute the expected current in the non-equilibrium steady state that is induced by different densities at the boundaries. We treat the microscopic and the macroscopic system in turn.

Microscopic system

We start by defining the notion of current. The microscopic currents are associated with the edges of the underlying two-layer graph. In our setting, we denote by \({{{\mathcal {J}}}}^0_{x,x+1}(t)\) and \({{{\mathcal {J}}}}^1_{x,x+1}(t)\) the instantaneous current at time t through the horizontal edge \((x,x+1)\), \(x\in V\), of the bottom layer and of the top layer, respectively. Obviously,

We are interested in the stationary currents \({J}^0_{x,x+1}\) and \({J}^1_{x,x+1}\), which are obtained as

where \({{\mathbb {E}}}_{stat}\) denotes expectation w.r.t. the unique invariant probability measure of the microscopic system \(\{\eta (t):\, t \ge 0\}\) with \(\eta (t) = \{\eta _{0}(x,t),\eta _{1}(x,t)\}_{x\in V}\). In other words, \(J^0_{x,x+1}\) and \(J^1_{x,x+1}\) give the average flux of particles of type 0 and type 1 across the bond \((x,x+1)\) due to diffusion.

Of course, the average number of particles at each site also varies in time as a consequence of the reaction term:

Summing these equations, we see that there is no contribution of the reaction part to the variation of the average number of particles at site x:

The sum

with \(J^0_{x,x+1}\) and \(J^1_{x,x+1}\) defined in (3.64), will be called the stationary current between sites x and \(x+1\), \(x\in V\). It is responsible for the variation of the total average number of particles at each site, regardless of their type.

Proposition 3.14

(Stationary microscopic current) For \(x \in \{2,\ldots ,N-1\}\) the stationary currents defined in (3.64) are given by

when \(\epsilon =0\) and by

when \(\epsilon >0,\) where \(\vec {c}_1, \vec {c}_3, \vec {c}_4\) are the vectors defined in (A.4) of Appendix A, and \(\alpha _1,\alpha _2\) are defined in (3.31). As a consequence, the current \(J_{x,x+1}= J^0_{x,x+1} + J^1_{x,x+1}\) is independent of x and is given by

when \(\epsilon = 0\) and

when \(\epsilon >0\), where

Proof

From (3.64) we have

where \(\theta _0(\cdot ),\theta _1(\cdot )\) are the stationary microscopic profiles. Thus, when \(\epsilon =0\), the expressions of \(J^0_{x,x+1},J^1_{x,x+1}\) and consequently \(J_{x,x+1}\) follow directly from (3.21).

For \(\epsilon >0\), using the expressions of \(\theta _0(\cdot ),\theta _1(\cdot )\) in (3.33), we see that

where \(\vec {c}_1, \vec {c}_3, \vec {c}_4\) are the vectors defined in (A.4) of Appendix A, and \(\alpha _1,\alpha _2\) are defined in (3.31). Adding the two equations, we also have

where \(C_1,C_2\) are as in (3.70). \(\square \)
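The x-independence of the total current can also be checked without the absorption-probability formulas, by solving the closed linear equations for the stationary means directly. This sketch rests on the following assumptions:

- The σ-dependent parts of the jump, switching and reservoir rates cancel in expectation, as they do for rates of the form \(\eta (x)(1+\sigma \eta (y))\). The mean equations therefore coincide for all three values of σ.
- The reservoir rate is 1 in both layers (Remark 3.2).
- The edge currents are \(J^0_{x,x+1}=\theta _0(x)-\theta _0(x+1)\) and \(J^1_{x,x+1}=\epsilon (\theta _1(x)-\theta _1(x+1))\).

Function names are ours:

```python
import numpy as np


def micro_profile(N, eps, gamma, rho_L, rho_R):
    """Stationary means theta_0, theta_1 on {1, ..., N} (sketch).

    rho_L = (rho_{L,0}, rho_{L,1}), rho_R = (rho_{R,0}, rho_{R,1}).
    Each row is the balance equation d/dt E[eta_i(x)] = 0.
    """
    psi = (1.0, eps)
    A = np.zeros((2 * N, 2 * N))
    b = np.zeros(2 * N)

    def k(i, x):                        # row/column index of site x in layer i
        return i * N + (x - 1)

    for i in (0, 1):
        for x in range(1, N + 1):
            r = k(i, x)
            for y in (x - 1, x + 1):    # horizontal jumps inside V
                if 1 <= y <= N:
                    A[r, k(i, y)] += psi[i]
                    A[r, r] -= psi[i]
            A[r, k(1 - i, x)] += gamma  # switching between the two layers
            A[r, r] -= gamma
            if x == 1:                  # left reservoir: + (rho_L[i] - theta)
                A[r, r] -= 1.0
                b[r] -= rho_L[i]
            if x == N:                  # right reservoir: + (rho_R[i] - theta)
                A[r, r] -= 1.0
                b[r] -= rho_R[i]
    theta = np.linalg.solve(A, b).reshape(2, N)
    return theta[0], theta[1]


def micro_currents(theta0, theta1, eps):
    """J^0, J^1 and J = J^0 + J^1 on the edges (x, x+1), x = 1, ..., N-1."""
    J0 = theta0[:-1] - theta0[1:]
    J1 = eps * (theta1[:-1] - theta1[1:])
    return J0, J1, J0 + J1


t0, t1 = micro_profile(50, 0.3, 0.01, (2.0, 6.0), (4.0, 2.0))
J0, J1, J = micro_currents(t0, t1, 0.3)
print(J.min(), J.max())
```

Individually, \(J^0_{x,x+1}\) and \(J^1_{x,x+1}\) vary with x because of the exchange term \(\gamma (\theta _1(x)-\theta _0(x))\). Only their sum is constant.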

Macroscopic system

The microscopic currents are of order 1/N. Accordingly, the currents associated with the two layers in the macroscopic system are obtained from the microscopic currents by defining

Below we justify the existence of the two limits and thereby provide explicit expressions for the macroscopic currents.

Proposition 3.15

(Stationary macroscopic current) For \(y \in (0,1)\) the stationary currents defined in (3.74) are given by

when \(\epsilon =0\) and by

and

when \(\epsilon > 0\). As a consequence, the total current \(J(y)= J^0(y) + J^1(y)\) is constant and is given by

Proof

For \(\epsilon =0\) the claim easily follows from the expressions of \(J^0_{x,x+1}, J^1_{x,x+1}\) given in (3.66) and the fact that \(\gamma _N\rightarrow 0\) as \(N\rightarrow \infty \).

When \(\epsilon >0\), we first note the following:

Consequently, from the expressions for \((\vec {c}_i)_{1\le i \le 4}\) defined in (A.4), we also have

Combining the above equations with (3.67), we have

and, similarly,

Adding \(J^0(y)\) and \(J^1(y)\), we obtain the total current

which is indeed independent of y. \(\square \)
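Because the total current is constant in y, it can be computed from the boundary data alone. Assume \(J^0=-\partial _y\rho _0\) and \(J^1=-\epsilon \partial _y\rho _1\), the macroscopic counterparts of the edge currents. Integrating \(J=-\partial _y(\rho _0+\epsilon \rho _1)\) over [0, 1] then gives \(J=-[(\rho _{R,0}-\rho _{L,0})+\epsilon (\rho _{R,1}-\rho _{L,1})]\). A sketch (function name ours):

```python
def total_current(eps, rho_L0, rho_L1, rho_R0, rho_R1):
    """Stationary macroscopic total current (sketch).

    J = -(rho_0 + eps*rho_1)' is constant on [0, 1]; integrating over [0, 1]
    and using the Dirichlet data gives J from the boundary values alone.
    """
    return -((rho_R0 - rho_L0) + eps * (rho_R1 - rho_L1))


# Boundary data with equal total densities rho_L = rho_R = 6.
for eps in (1.0, 0.001):
    print(eps, total_current(eps, rho_L0=2.0, rho_L1=4.0, rho_R0=4.0, rho_R1=2.0))
```

Take the boundary data with \(\rho _L=\rho _R=6\) used in the discussion of Fick’s law below. This gives J = 0 for \(\epsilon =1\) and J = −1.998 for \(\epsilon =0.001\), up to floating-point rounding.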

Remark 3.16

(Currents) Combining the expressions for the density profiles and the current, we see that

\(\square \)

3.5 Discussion: Fick’s Law and Uphill Diffusion

In this section we discuss the behaviour of the boundary-driven system as the parameter \(\epsilon \) is varied. For simplicity we restrict our discussion to the macroscopic setting, although similar comments hold for the microscopic system as well.

In view of the previous results, we can rewrite the equations for the densities \(\rho _0(y,t),\rho _1(y,t)\) as

which are complemented with the boundary values (for \(\epsilon >0\))

We will be concerned with the total density \(\rho = \rho _0 +\rho _1\), whose evolution equation does not contain the reaction part, and is given by

with boundary values

Non-validity of Fick’s law

From (3.84) we immediately see that Fick’s law of mass transport is satisfied if and only if \(\epsilon =1\). When we allow diffusion and reaction of slow and fast particles, i.e., \(0 \le \epsilon <1\), Fick’s law breaks down, since the current associated to the total mass is not proportional to the gradient of the total mass. Rather, the current J is the sum of two contributions: \(J^0\), due to the diffusion of fast particles of type 0 (at rate 1), and \(J^1\), due to the diffusion of slow particles of type 1 (at rate \(\epsilon \)). This violation of Fick’s law opens up the possibility of several phenomena, which we discuss next.

Equal boundary densities with non-zero current

In a system with diffusion and reaction of slow and fast particles, we may observe a non-zero current even when the total density has the same value at the two boundaries. This is different from what is observed in standard diffusive systems driven by boundary reservoirs. There, a stationary current requires the reservoirs to have different chemical potentials, and therefore different densities at the boundaries.

Let us, for instance, consider the specific case \(\rho _{L,0} =\rho _{R,1} = 2\) and \(\rho _{L,1} = \rho _{R,0} = 4\), which implies equal total densities \(\rho _{L} =\rho _{R} = 6\) at the boundaries. Fig. 3 displays the density profiles and currents for two values of \(\epsilon \): the Fick-regime \(\epsilon = 1\) (left panels) and the non-Fick-regime with very slow particles \(\epsilon = 0.001\) (right panels).

On the one hand, in the Fick-regime the profiles of both types of particles interpolate between the boundary values, with a slightly non-linear shape that has been quantified precisely in (3.39)–(3.40). Furthermore, in the same regime \(\epsilon =1\), the total density profile is flat and the total current J vanishes because \(J^0(y) = -J^1(y)\) for all \(y\in [0,1]\).

On the other hand, in the non-Fick-regime with \(\epsilon = 0.001\), the stationary macroscopic profile for the fast particles interpolates between the boundary values almost linearly (see (3.43)). The profile for the slow particles, by contrast, is non-monotone: it has two bumps at the boundaries and in the bulk closely follows the profile of the fast particles. This non-monotonicity in the profile of the slow particles is due to the non-uniform convergence in the limit \(\epsilon \downarrow 0\), as pointed out in the last part of Remark 3.11. As a consequence, the total density profile is not flat and has two bumps at the boundaries. Most strikingly, the total current is \(J\approx -2\), since now the current \(J^0\) of the bottom layer dominates, while the current \(J^1\) of the top layer is small (of order \(\epsilon \)).

Fig. 3 Macroscopic profiles of the densities for slow and fast particles (top panels), macroscopic profile of the total density (central panels), and the currents (bottom panels). Here, \(\rho _{(L,0)}=2,\,\rho _{(L,1)}=4,\,\rho _{(R,0)}=4\) and \(\rho _{(R,1)}=2, \Upsilon =1\). For the panels in the left column, \(\epsilon =1\) and for the panels in the right column, \(\epsilon =0.001\).

Unequal boundary densities with uphill diffusion

As argued earlier, the system does not always obey Fick’s law. By tuning the parameters \(\rho _{(L,0)}\), \(\rho _{(L,1)}\), \(\rho _{(R,0)}\), \(\rho _{(R,1)}\) and \(\epsilon \), we can therefore push the system into a regime where the total current satisfies \(J<0\) while the total boundary densities satisfy \(\rho _R < \rho _L \), where \(\rho _R = \rho _{(R,0)}+\rho _{(R,1)}\) and \(\rho _L = \rho _{(L,0)}+\rho _{(L,1)}\). In this regime the current goes uphill: the total density of particles is lower at the right than at the left, yet the average current is negative, i.e., directed from right to left.

Fig. 4 Macroscopic profiles of the densities for slow and fast particles (top panels), macroscopic profile of the total density (central panels), and the currents (bottom panels). Here, \(\rho _{(L,0)}=2,\,\rho _{(L,1)}=6,\,\rho _{(R,0)}=4\) and \(\rho _{(R,1)}=2, \Upsilon =1\). For the panels in the left column, \(\epsilon =1\) and for the panels in the right column, \(\epsilon =0.001\).

Macroscopic profiles of the densities for slow and fast particles (top panels), macroscopic profile of the total density (central panels), and the currents (bottom panels) in the “mild” downhill and the “mild” uphill regime. Here, \(\rho _{(L,0)}=2,\,\rho _{(L,1)}=6,\,\rho _{(R,0)}=4,\,\rho _{(R,1)}=2\) and \(\Upsilon =1\). For the panels in the left column \(\epsilon =0.75\), and for the panels in the right column \(\epsilon =0.25\).

As an illustration, consider the case \(\rho _{(L,1)} =6\), \(\rho _{(R,0)}=4\) and \(\rho _{(L,0)} =\rho _{(R,1)} = 2\), which gives \(\rho _{L} = 8\) and \(\rho _{R} = 6\), so that \(\rho _{R} < \rho _{L}\). Fig. 4 shows the density profiles and currents for two values of \(\epsilon \), comparing the Fick-regime \(\epsilon = 1\) (left panels) with the non-Fick-regime with very slow particles \(\epsilon = 0.001\) (right panels). As can be seen in the figure, when \(\epsilon =1\) the system obeys Fick’s law: the total density linearly interpolates between the two total boundary densities 8 and 6, and the total stationary current is positive, as predicted by Fick’s law. In the uphill regime \(\epsilon =0.001\), however, the total density profile is non-linear and its gradient is not proportional to the total current, in violation of Fick’s law. The total current is negative and is effectively dominated by the current of the fast particles. It will be shown later that the transition into the uphill regime happens at the critical value \(\epsilon = \tfrac{|\rho _{(R,0)}-\rho _{(L,0)}|}{|\rho _{(R,1)}-\rho _{(L,1)}|} = \tfrac{1}{2}\). In the limit \(\epsilon \downarrow 0\), the total density profile and the total current are always dominated in the bulk by the profile and the current of the fast particles, respectively. When \(\epsilon <\tfrac{1}{2}\), even though the slow particles make the total boundary densities satisfy \(\rho _R < \rho _L\), their contribution is not strong enough to overcome the dominance of the fast particles in the bulk, and so the total current goes in the same direction as the current of the fast particles, producing an uphill current.
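The numbers in this example can be checked with a few lines of arithmetic. The sketch below assumes that the stationary total current is \(J=-(a_0+\epsilon a_1)\) with \(a_0=\rho _{(R,0)}-\rho _{(L,0)}\) and \(a_1=\rho _{(R,1)}-\rho _{(L,1)}\), which is consistent with the sign criterion used in the proof of Proposition 3.18 but is stated here as an assumption; the helper names `total_current`, `is_uphill` and `critical_eps` are hypothetical.

```python
def total_current(rho_L0, rho_L1, rho_R0, rho_R1, eps):
    """Stationary total current, ASSUMING J = -(a0 + eps * a1)."""
    a0 = rho_R0 - rho_L0  # boundary difference of the fast (bottom-layer) particles
    a1 = rho_R1 - rho_L1  # boundary difference of the slow (top-layer) particles
    return -(a0 + eps * a1)


def is_uphill(rho_L0, rho_L1, rho_R0, rho_R1, eps):
    """Definition 3.17: J and rho_R - rho_L have the same (nonzero) sign."""
    J = total_current(rho_L0, rho_L1, rho_R0, rho_R1, eps)
    delta_rho = (rho_R0 + rho_R1) - (rho_L0 + rho_L1)
    return J * delta_rho > 0


def critical_eps(rho_L0, rho_L1, rho_R0, rho_R1):
    """Value of eps at which the assumed J changes sign: eps* = -a0 / a1."""
    return -(rho_R0 - rho_L0) / (rho_R1 - rho_L1)


# Reservoir values of the example: rho_(L,0)=2, rho_(L,1)=6, rho_(R,0)=4, rho_(R,1)=2.
example = dict(rho_L0=2, rho_L1=6, rho_R0=4, rho_R1=2)
print("critical eps:", critical_eps(**example))
for eps in (1.0, 0.75, 0.25, 0.001):
    J = total_current(**example, eps=eps)
    print(f"eps={eps}: J={J:+.3f}, uphill={is_uphill(**example, eps=eps)}")
```

Under this assumption the current is positive (downhill) for \(\epsilon =1\) and \(\epsilon =0.75\), negative (uphill) for \(\epsilon =0.25\) and \(\epsilon =0.001\), and the sign change occurs at \(\epsilon =\tfrac{1}{2}\).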

The transition between downhill and uphill. For the choice of reservoir parameters \(\rho _{(L,1)} =6\), \(\rho _{(R,0)}=4\) and \(\rho _{(L,0)} =\rho _{(R,1)} = 2\), the change from downhill to uphill diffusion occurs at \(\epsilon = \tfrac{|\rho _{(R,0)}-\rho _{(L,0)}|}{|\rho _{(R,1)}-\rho _{(L,1)}|} = \tfrac{1}{2}\). Fig. 5 shows the density profiles and currents for two additional values of \(\epsilon \): one in the “mild” downhill regime \(J>0\) for \(\epsilon = 0.75\) (left panels), the other in the “mild” uphill regime \(J<0\) for \(\epsilon = 0.25\) (right panels). In the uphill regime (right panels), i.e., when \(\epsilon =0.25\), the “mild” non-linearity of the total density profile is already visible, indicating the violation of Fick’s law.

Identification of the uphill regime. We define the notion of uphill current below and identify the parameter ranges for which uphill diffusion occurs.

Definition 3.17

(Uphill diffusion) For parameters \(\rho _{(L,0)},\rho _{(L,1)},\rho _{(R,0)},\rho _{(R,1)}\) and \(\epsilon >0,\) we say that the system has an uphill current in stationarity if the total current J and the difference \(\rho _R - \rho _L\) between the total densities at the right and at the left boundary have the same sign, where \(\rho _R = \rho _{(R,0)}+\rho _{(R,1)}\) and \(\rho _L = \rho _{(L,0)}+\rho _{(L,1)}\).

\(\square \)

Proposition 3.18

(Uphill regime) Let \(a_0 := \rho _{(R,0)}-\rho _{(L,0)}\) and \(a_1:= \rho _{(R,1)}-\rho _{(L,1)}\). Then the macroscopic system admits an uphill current in stationarity if and only if

$$\begin{aligned} (a_0+a_1)(a_0+\epsilon a_1)<0. \end{aligned}$$

If, furthermore, \(\epsilon \in [0,1]\), then this is the case if and only if both of the following conditions hold:

(i) either

$$\begin{aligned} a_0+a_1> 0 \text { with } a_0<0,\,a_1> 0 \end{aligned}$$or

$$\begin{aligned} a_0+a_1< 0 \text { with } a_0 > 0,\,a_1 < 0; \end{aligned}$$

(ii) \(\epsilon \in \big [0,-\tfrac{a_0}{a_1}\big )\).

Proof

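Note that, by (3.78), there is an uphill current if and only if \(a_0 + a_1\) and \(a_0 + \epsilon a_1\) have opposite signs. In other words, this happens if and only if

$$\begin{aligned} a_0+a_1>0 \text { and } a_0+\epsilon a_1<0, \quad \text {or} \quad a_0+a_1<0 \text { and } a_0+\epsilon a_1>0. \end{aligned}$$

Since \(\epsilon \ge 0\), this constraint forces \(a_0a_1<0\): if \(a_0\) and \(a_1\) had the same sign, or if one of them vanished, then \(a_0+a_1\) and \(a_0+\epsilon a_1\) could not have opposite signs. Further simplification reduces the parameter regime to the following four cases: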

- \(a_0+a_1 > 0\) with \(a_0<0,\,a_1> 0\) and \(\epsilon <-\tfrac{a_0}{a_1}\),
- \(a_0+a_1 < 0\) with \(a_0>0,\,a_1< 0\) and \(\epsilon <-\tfrac{a_0}{a_1}\),
- \(a_0+a_1 > 0\) with \(a_0>0,\,a_1< 0\) and \(\epsilon >-\tfrac{a_0}{a_1}\),
- \(a_0+a_1 < 0\) with \(a_0<0,\,a_1> 0\) and \(\epsilon >-\tfrac{a_0}{a_1}\).

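Under the assumption \(\epsilon \in [0,1]\), only the first two of the above four cases survive: in the last two cases \(|a_0|>|a_1|\), so that \(-\tfrac{a_0}{a_1}>1\) and the condition \(\epsilon >-\tfrac{a_0}{a_1}\) cannot hold. \(\square \)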
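The equivalence in Proposition 3.18 can be spot-checked numerically. The sketch below compares Definition 3.17, evaluated with the assumed current \(J=-(a_0+\epsilon a_1)\), with conditions (i) and (ii) on random integer boundary differences and random \(\epsilon \in [0,1)\); the function names are hypothetical, and a random test of this kind is a sanity check, not a substitute for the proof.

```python
import random


def uphill_by_definition(a0, a1, eps):
    """Definition 3.17 with the assumed current J = -(a0 + eps * a1)."""
    J = -(a0 + eps * a1)
    return J * (a0 + a1) > 0  # rho_R - rho_L = a0 + a1


def uphill_by_proposition(a0, a1, eps):
    """Conditions (i) and (ii) of Proposition 3.18, for eps in [0, 1]."""
    cond_i = (a0 + a1 > 0 and a0 < 0 < a1) or (a0 + a1 < 0 and a1 < 0 < a0)
    # When cond_i is False, `and` short-circuits, so -a0 / a1 is only
    # evaluated when a1 != 0.
    return cond_i and 0 <= eps < -a0 / a1


rng = random.Random(0)  # fixed seed for reproducibility
mismatches = 0
for _ in range(100_000):
    a0, a1 = rng.randint(-5, 5), rng.randint(-5, 5)  # integer boundary differences
    eps = rng.random()                               # eps in [0, 1)
    if uphill_by_definition(a0, a1, eps) != uphill_by_proposition(a0, a1, eps):
        mismatches += 1
print("mismatches:", mismatches)
```

The boundary value \(\epsilon =-\tfrac{a_0}{a_1}\) gives \(J=0\) and hence no uphill current, which is why (ii) uses a half-open interval.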

3.6 The Width of the Boundary Layer

We have seen that for \(\epsilon =0\) the microscopic density profile \(\theta _0(x)\) of the fast particles linearly interpolates between \(\rho _{(L,0)}\) and \(\rho _{(R,0)}\), whereas the density profile of the slow particles satisfies \(\theta _1(x) = \theta _0(x)\) for all \(x\in \{2,\ldots ,N-1\}\). In the macroscopic setting this produces a continuous profile \({\rho _0^{\mathrm{{stat}}}}(y) = \rho _{(L,0)} + (\rho _{(R,0)}-\rho _{(L,0)})y\) for the bottom layer, while the top-layer profile develops a discontinuity at the left boundary when \(\rho _{(L,0)}\ne \rho _{(L,1)}\) and at the right boundary when \(\rho _{(R,0)}\ne \rho _{(R,1)}\). In particular,

$$\begin{aligned} {\rho _1^{\mathrm{{stat}}}}(y) = {\left\{ \begin{array}{ll} \rho _{(L,1)} & \text {if } y=0,\\ {\rho _0^{\mathrm{{stat}}}}(y) & \text {if } y\in (0,1),\\ \rho _{(R,1)} & \text {if } y=1, \end{array}\right. } \end{aligned}$$

for \(y\in [0,1]\). For small but positive \(\epsilon ,\) the profile is smooth and each discontinuity turns into a boundary layer. In this section we investigate the widths of the left and the right boundary layers as \(\epsilon \downarrow 0\). To this end, let us define

Note that the profile \(\rho _1\) develops a left boundary layer if and only if \(W_L > 0\) and, similarly, a right boundary layer if and only if \(W_R > 0\).

Definition 3.19

We say that the left boundary layer is of size \(f_L(\epsilon )\) if there exists \(C>0\) such that, for any \(c>0,\)

where \(R_L(\epsilon , c)=\sup \left\{ y\in \big (0,\tfrac{1}{2}\big ): \left| \frac{d^2}{d y^2}{\rho _1^{\mathrm{{stat}}}}(y)\right| \ge c\right\} \). Analogously, we say that the right boundary layer is of size \(f_R(\epsilon )\) if there exists \(C>0\) such that, for any \(c>0,\)

where \(R_R(\epsilon , c)=\inf \left\{ y\in \big (\tfrac{1}{2},1\big ): \left| \frac{d^2}{d y^2}{\rho _1^{\mathrm{{stat}}}}(y)\right| \ge c\right\} \).

The widths of the two boundary layers essentially measure the deviation of the top-layer density profile (and therefore also the total density profile) from the bulk linear profile corresponding to the case \(\epsilon = 0\). In the following proposition we estimate the sizes of the two boundary layers.

Proposition 3.20

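(Width of boundary layers) The widths of the two boundary layers are given by

$$\begin{aligned} f_L(\epsilon ) = f_R(\epsilon ) = \sqrt{\epsilon }\,\log (1/\epsilon ), \end{aligned}$$

where \(f_L(\epsilon ),f_R(\epsilon )\) are defined as in Definition 3.19.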

Proof

To compute \(f_L(\epsilon )\), it suffices to keep \(W_L>0\) fixed and put \(W_R = 0\), where \(W_L,W_R\) are as in (3.87). Let \({\overline{y}}(\epsilon , c)\in (0,\tfrac{1}{2})\) be such that, for some constant \(c>0\),

or equivalently, since \(\epsilon \Delta \rho _1 =\Upsilon (\rho _1-\rho _0)\),

Recalling the expressions of \({\rho _0^{\mathrm{{stat}}}}(\cdot )\) and \({\rho _1^{\mathrm{{stat}}}}(\cdot )\) for positive \(\epsilon \) given in (3.39)–(3.40), we get

Using (3.87) plus the fact that \(W_R = 0,\) and setting \(B_{\epsilon ,\Upsilon }:=\sqrt{\Upsilon \left( 1+\tfrac{1}{\epsilon }\right) }\), we see that

Because \(\sinh (\cdot )\) is strictly increasing, (3.92) holds if and only if

Thus, for small \(\epsilon >0\) we have

where \(R_L(\epsilon , c)\) is defined as in Definition 3.19. Since \(\sinh ^{-1} x = \log (x+\sqrt{x^2+1})\) for \(x\in {{\mathbb {R}}}\), we obtain

where \(N_{\epsilon ,\Upsilon }:=\sinh \Big (\tfrac{B_{\epsilon ,\Upsilon }}{2}\Big ), C:=\tfrac{c}{\Upsilon W_L}\), and the error term is

Since \(\epsilon N_{\epsilon ,\Upsilon }\rightarrow \infty \) as \(\epsilon \downarrow 0\), we have

Hence, combining (3.95)–(3.96), we get

and so, by Definition 3.19, \(f_L(\epsilon ) = \sqrt{\epsilon }\log (1/\epsilon )\).

Similarly, to compute \(f_R(\epsilon )\), we first fix \(W_L = 0, W_R>0\) and note that, for some \(c>0\), we have, by using (3.91),

Hence, by appealing to the strict monotonicity of \(\sinh (\cdot ),\) we obtain

Finally, by similar computations as in (3.95)–(3.97), we see that

and hence \(f_R(\epsilon )=\sqrt{\epsilon }\log (1/\epsilon )\). \(\square \)
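The scaling \(\sqrt{\epsilon }\log (1/\epsilon )\) can be illustrated numerically. The closed forms (3.39)–(3.40) are not reproduced in this section, so the sketch below rests on assumptions: that the stationary profiles solve \(\Delta \rho _0=-\Upsilon (\rho _1-\rho _0)\) and \(\epsilon \Delta \rho _1 =\Upsilon (\rho _1-\rho _0)\), so that \(v:=\rho _1-\rho _0\) satisfies \(v''=B_{\epsilon ,\Upsilon }^2 v\), and (a hypothetical reading of (3.87)) that \(W_L=|\rho _{(L,1)}-\rho _{(L,0)}|\). With \(W_R=0\) this gives \(|\tfrac{d^2}{dy^2}\rho _1(y)|=\tfrac{\Upsilon W_L}{\epsilon }\,\sinh (B_{\epsilon ,\Upsilon }(1-y))/\sinh (B_{\epsilon ,\Upsilon })\), and the hypothetical helper `left_layer_edge` evaluates \(R_L(\epsilon ,c)\) from this formula, with \(C=\tfrac{c}{\Upsilon W_L}\) as in the proof.

```python
import math


def left_layer_edge(eps, c, upsilon=1.0, w_left=1.0):
    """Hypothetical helper: R_L(eps, c) of Definition 3.19 when W_R = 0.

    ASSUMES |rho_1''(y)| = (upsilon * w_left / eps) * sinh(B (1 - y)) / sinh(B)
    with B = sqrt(upsilon * (1 + 1/eps)); this curvature decreases in y.
    """
    B = math.sqrt(upsilon * (1.0 + 1.0 / eps))
    C = c / (upsilon * w_left)
    # |rho_1''(y)| >= c  <=>  sinh(B (1 - y)) >= C * eps * sinh(B), since sinh is
    # strictly increasing; the largest such y is the edge computed here.
    # math.sinh(B) overflows for B above roughly 710, i.e. for eps below ~2e-6.
    edge = 1.0 - math.asinh(C * eps * math.sinh(B)) / B
    if not 0.0 < edge < 0.5:
        raise ValueError("layer edge not in (0, 1/2); take eps smaller")
    return edge


for eps in (1e-3, 1e-4, 1e-5):
    r = left_layer_edge(eps, c=1.0)
    ratio = r / (math.sqrt(eps) * math.log(1.0 / eps))
    print(f"eps={eps:.0e}  R_L={r:.6f}  R_L/(sqrt(eps) log(1/eps))={ratio:.6f}")
```

With \(c=\Upsilon =W_L=1\) the printed ratio equals \(1/\sqrt{1+\epsilon }\) up to exponentially small terms, so it tends to a positive constant (\(1/\sqrt{\Upsilon }\) in general), in line with \(f_L(\epsilon )=\sqrt{\epsilon }\log (1/\epsilon )\). For other values of \(c\) the ratio converges more slowly, with a correction of order \(\log (1/C)/\log (1/\epsilon )\).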
