Abstract

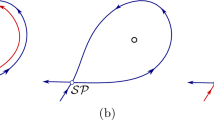

We study the mean-field limit and stationary distributions of a pulse-coupled network modeling the dynamics of large neuronal assemblies. Our model explicitly takes into account the intrinsic randomness of firing times, in contrast with the classical integrate-and-fire model. We investigate the ergodicity properties of the Markov process associated with finite networks. We derive the large network size limit of the distribution of the state of a neuron, and characterize its invariant distributions as well as their stability properties. We show that the system undergoes transitions as a function of the averaged connectivity parameter. It can support trivial states, where the network activity dies out (in some cases this is also the unique stationary state of finite networks), and self-sustained activity when the connectivity level is sufficiently large, both being possibly stable.


References

Asmussen, S.: Applied Probability and Queues. Wiley, Chichester (1987)

Brunel, N.: Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208 (2000)

Brunel, N.: Dynamics of networks of randomly connected excitatory and inhibitory spiking neurons. J. Physiol. Paris 94(5–6), 445–463 (2000)

Burkitt, A.N.: A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol. Cybern. 95(1), 1–19 (2006)

Burkitt, A.N.: A review of the integrate-and-fire neuron model: II. Inhomogeneous synaptic input and network properties. Biol. Cybern. 95(2), 97–112 (2006)

Cáceres, M.J., Perthame, B.: Beyond blow-up in excitatory integrate and fire neuronal networks: refractory period and spontaneous activity. J. Theor. Biol. 350, 81–89 (2014)

Cáceres, M., Carrillo, J., Perthame, B.: Analysis of nonlinear noisy integrate & fire neuron models: blow-up and steady states. J. Math. Neurosci. 1, 1–33 (2011)

Chichilnisky, E.J.: A simple white noise analysis of neuronal light responses. Netw. Comput. Neural Syst. 12(2), 199–213 (2001)

Corless, R.M., Gonnet, G.H., Hare, D.E.G., Jeffrey, D.J., Knuth, D.E.: On the Lambert W function. Adv. Comput. Math. 5(4), 329–359 (1996)

Dawson, D.A.: Measure-valued Markov processes, École d’Été de Probabilités de Saint-Flour XXI—1991, Lecture Notes in Math., vol. 1541, pp. 1–260. Springer, Berlin (1993)

De Masi, A., Galves, A., Löcherbach, E., Presutti, E.: Hydrodynamic limit for interacting neurons. J. Stat. Phys. 158, 866–902. doi:10.1007/s10955-014-1145-1

Delarue, F., Inglis, J., Rubenthaler, S., Tanré, E.: Global solvability of a networked integrate-and-fire model of McKean–Vlasov type. Ann. Appl. Probab. 25(4), 2096–2133 (2015)

Fournier, N., Löcherbach, E.: On a toy model of interacting neurons. J. Stat. Phys. 1–37

Gerstein, G.L., Mandelbrot, B.: Random walk models for the spike activity of a single neuron. Biophys. J. 4, 41–68 (1964)

Has’minskii, R.Z.: Stochastic stability of differential equations. Kluwer Academic Publishers, Norwell (1980)

Kingman, J.F.C.: Poisson Processes. Oxford Studies in Probability. Oxford University Press, New York (1993)

Knight, B.W.: Dynamics of encoding in a population of neurons. J. Gen. Physiol. 59, 734–766 (1972)

Lapicque, L.: Recherches quantitatives sur l’excitation des nerfs traitée comme une polarisation. J. Physiol. Paris 9, 620–635 (1907)

Levin, D.A., Peres, Y., Wilmer, E.L.: Markov Chains and Mixing Times. American Mathematical Society, Providence (2009)

Loynes, R.M.: The stability of queues with non independent inter-arrival and service times. Proc. Cambridge Philos. Soc. 58, 497–520 (1962)

Nummelin, E.: General Irreducible Markov Chains and Nonnegative Operators. Cambridge University Press, Cambridge (1984)

Pakdaman, K., Perthame, B., Salort, D.: Dynamics of a structured neuron population. Nonlinearity 23(1), 55 (2010)

Pakdaman, K., Perthame, B., Salort, D., et al.: Adaptation and fatigue model for neuron networks and large time asymptotics in a nonlinear fragmentation equation. J. Math. Neurosci. 4(14) (2014). doi:10.1186/2190-8567-4-14

Pakdaman, K., Perthame, B., Salort, D.: Relaxation and self-sustained oscillations in the time elapsed neuron network model. SIAM J. Appl. Math. 73(3), 1260–1279 (2013)

Pillow, J.W., Paninski, L., Uzzell, V.J., Simoncelli, E.P., Chichilnisky, E.J.: Prediction and decoding of retinal ganglion cell responses with a probabilistic spiking model. J. Neurosci. 25(47), 11003–11013 (2005)

Pillow, J.W., Shlens, J., Paninski, L., Sher, A., Litke, A.M., Chichilnisky, E.J., Simoncelli, E.P.: Spatio-temporal correlations and visual signalling in a complete neuronal population. Nature 454(7207), 995–999 (2008)

Robert, P.: Stochastic Networks and Queues, Stochastic Modelling and Applied Probability Series, vol. 52. Springer, New York (2003)

Rogers, L.C.G., Williams, D.: Diffusions, Markov Processes, and Martingales. Vol. 2: Itô calculus. Wiley, New York (1987)

Rolls, E.T., Deco, G.: The Noisy Brain: Stochastic Dynamics as a Principle of Brain Function. Oxford University Press, New York (2010)

Scheutzow, M.: Periodic behavior of the stochastic brusselator in the mean-field limit. Probab. Theory Relat. Fields 72, 425–462 (1986)

Stein, R.B.: A theoretical analysis of neuronal variability. Biophys. J. 5, 173–194 (1965)

Sznitman, A.S.: Topics in propagation of chaos, École d’Été de Probabilités de Saint-Flour XIX—1989, Lecture Notes in Math., vol. 1464, pp. 167–243. Springer, Berlin (1989)

Touboul, J.: Mean-field equations for stochastic firing-rate neural fields with delays: derivation and noise-induced transitions. Phys. D Nonlinear Phenom. 241(15), 1223–1244 (2012)

Touboul, J.: Spatially extended networks with singular multi-scale connectivity patterns. J. Stat. Phys. 156(3), 546–573 (2014)

Touboul, J.: The propagation of chaos in neural fields. Ann. Appl. Probab. 24(3), 1298–1328 (2014)

Touboul, J., Hermann, G., Faugeras, O.: Noise-induced behaviors in neural mean field dynamics. SIAM J. Appl. Dynam. Syst. 11(1), 49–81 (2012)

Acknowledgments

The authors acknowledge N. Fournier for his comments on a preliminary version.

Appendix

1.1 Some Technical Results

Lemma 8

We assume that there exist \(\gamma >0\) and \(c>0\) such that b satisfies

Then, for any \(\varepsilon >0\) and \(p\in [1,3+\varepsilon ]\), there exist constants \(\gamma _1<(3+2\varepsilon )\gamma \) and \(c_1 >0\), and a value \(\eta _b>0\), such that for any \(a\in (0,\eta _b)\) and \(x\ge 0\),

Proof

Let us start by noting that the inequality is trivial when b is bounded. We therefore assume in the rest of the proof that b diverges at infinity. We also remark that, for any \(1\le p<3+\varepsilon \), the map \(b^p\) satisfies an inequality of type (44) in which \(\gamma \) is multiplied by p. Indeed, for any \(\delta >0\), we can find \(c_{\delta }>0\) such that:

We therefore prove the lemma for \(p=1\) without loss of generality, i.e., we control the modulus of continuity of b under assumption (44). For an arbitrary \(x_0>0\) and any \(x\ge x_0\), we have:

with \(\tilde{\gamma }=\gamma + {c}/{b(x_0)}\). We conclude that for \(x\ge x_0\),

The map \(a\mapsto (e^{a\tilde{\gamma }}-1)/a\) is smooth and non-decreasing, and tends to \(\tilde{\gamma }\) as \(a\rightarrow 0\). Moreover, \(\tilde{\gamma }\) can be made arbitrarily close to \(\gamma \) by taking \(x_0\) sufficiently large, since b is unbounded. Therefore, there exist \(x_0>0\) and \(\eta >0\) such that for any \(x\ge x_0\) and \(a\in [0,\eta ]\),

Denoting by \(c_1\) the Lipschitz constant of b on the interval \([0,x_0+\eta ]\), we readily obtain (45) with \(\gamma _1=\gamma (1+\varepsilon )\). \(\square \)
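The two omitted displays in this proof can be sketched as follows. This is our reading of the argument, not a reproduction of the authors' equations. We assume that (44) is the bound \(b'(x)\le \gamma \,b(x)+c\) invoked in the proof of Lemma 9, that b is non-negative and non-decreasing, and that \(x_0\) is large enough for \(b(x_0)>0\).

```latex
% (a) b^p inherits (44): for p >= 1 and delta > 0, the constant
%     c_delta := sup_{y >= 0} ( p c y^{p-1} - delta y^p ) is finite, so
(b^p)'(x) = p\,b^{p-1}(x)\,b'(x)
  \le p\gamma\,b^p(x) + p c\,b^{p-1}(x)
  \le (p\gamma+\delta)\,b^p(x) + c_\delta .

% (b) Modulus of continuity for p = 1: for y >= x >= x_0 we have b(y) >= b(x_0), hence
b'(y) \le \gamma\,b(y) + c \le \Big(\gamma + \frac{c}{b(x_0)}\Big)\,b(y) = \tilde\gamma\,b(y),
% and Gronwall's lemma on [x, x+a] gives
0 \le b(x+a) - b(x) \le \big(e^{a\tilde\gamma}-1\big)\,b(x)
  = a\,\frac{e^{a\tilde\gamma}-1}{a}\,b(x).
```

Step (b) shows why the ratio \((e^{a\tilde{\gamma }}-1)/a\) appears. Its limit \(\tilde{\gamma }=\gamma +c/b(x_0)\) approaches \(\gamma \) as \(x_0\) grows.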

Another elementary property that is useful in our developments is the following:

Proposition 12

If x(t) is a non-negative càdlàg function on \(\mathbb {R}_+\) and there exist \(\kappa>\delta >0\) and A, \(B\in \mathbb {R}\) such that

holds for any \(0\le s\le t\), then x(t) is uniformly bounded on every bounded time interval.

Moreover, if (x(t)) is \(C^1\) on \(\mathbb {R}_+\) and \(B=0\), we have a uniform bound for all times:

where \(C_0=x(0)\vee A^{1/(\kappa -\delta )}=\max (x(0),A^{1/(\kappa -\delta )})\).

The proof is elementary once one notes that the map \(x\mapsto -x^\kappa + A x^\delta \) is bounded above by a finite value \(M>0\) and is strictly negative for any \(x>A^{1/(\kappa -\delta )}\). The upper bound readily implies that \(x(t)\le x(0)+B+ M \,t\). For x continuously differentiable and \(B=0\), the negativity of the integrand for \(x>A^{1/(\kappa -\delta )}\) ensures that no trajectory exceeds \(C_0=x(0) \vee A^{1/(\kappa -\delta )}\).
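The following script is a quick numerical sanity check of the two properties of \(g(x)=-x^\kappa +Ax^\delta \) used in this proof, for \(A>0\). Factoring \(g(x)=x^\delta (A-x^{\kappa -\delta })\) shows that g is negative exactly for \(x>A^{1/(\kappa -\delta )}\). Solving \(g'=0\) gives the maximiser \(x^*=(A\delta /\kappa )^{1/(\kappa -\delta )}\) and the bound \(M=A(1-\delta /\kappa )(x^*)^\delta \). The parameter values are arbitrary, and the script only illustrates these closed forms against a grid search.

```python
import numpy as np


def g(x, A, kappa, delta):
    """The integrand x -> -x^kappa + A x^delta from Proposition 12."""
    return -x**kappa + A * x**delta


def closed_form_constants(A, kappa, delta):
    """Maximiser x*, maximum M and sign-change threshold of g (assumes A > 0, kappa > delta > 0)."""
    x_star = (A * delta / kappa) ** (1.0 / (kappa - delta))
    M = A * (1.0 - delta / kappa) * x_star**delta
    threshold = A ** (1.0 / (kappa - delta))
    return x_star, M, threshold


A, kappa, delta = 3.0, 2.0, 0.5          # illustrative values only
x_star, M, threshold = closed_form_constants(A, kappa, delta)

grid = np.linspace(0.0, 10.0, 200_001)   # fine grid on [0, 10]
values = g(grid, A, kappa, delta)
print("closed-form M:", M, "grid maximum:", values.max())
print("g < 0 beyond threshold:", bool((values[grid > threshold + 1e-6] < 0).all()))
```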

1.2 Poisson Processes

The third elementary technical result we use concerns the martingales associated with marked Poisson processes.

Proposition 13

If \(\mathcal{N}\) is a Poisson process on \(\mathbb {R}_+^3\) with intensity measure \(\mathrm {d}u\otimes V(\mathrm {d}z)\otimes \mathrm {d}t\), f is a continuous function on \(\mathbb {R}_+^3\), and \(Y(t)=(Y_1(t),Y_2(t))\) is a càdlàg adapted process, then the process (M(t)) defined by

is a local martingale whose previsible increasing process is given by

See Rogers and Williams [28] and Appendix B of Robert [27] for example.
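Below is a Monte Carlo illustration of the simplest instance of this result. The setup is ours: the exact integrand of Proposition 13 is not reproduced here. V is taken to be a probability distribution, here Exp(1), an arbitrary choice. The adapted process is frozen, so that only points with \(u\le r\) are counted for a constant level r, as is typical for state-dependent thinning. We also take \(f(z)=z\). Under these assumptions the compensated sum \(M(t)=\sum f(z)-t\,r\,E[f(Z)]\) should have mean zero and variance \(t\,r\,E[f(Z)^2]\), the value of its increasing process at time t. The helper `thinned_mark_sum` and the truncation level `u_max` are hypothetical.

```python
import numpy as np


def thinned_mark_sum(rng, t, rate, f, sample_marks, u_max):
    """Sum of f(z) over the points (u, z, s) of N with s <= t and u <= rate.

    N is a Poisson process on R_+^3 with intensity du (x) V(dz) (x) ds, where V is
    a probability distribution sampled by sample_marks. Only the slab u <= u_max
    is simulated, which suffices because rate <= u_max.
    """
    assert 0.0 <= rate <= u_max
    n_points = rng.poisson(u_max * t)            # mass of [0, u_max] x R_+ x [0, t]
    u = rng.uniform(0.0, u_max, size=n_points)   # first coordinate, uniform on the slab
    z = sample_marks(rng, n_points)              # marks drawn from V
    return float(np.sum(f(z[u <= rate])))        # keep points below the thinning level


rng = np.random.default_rng(0)
t, rate, u_max = 3.0, 2.0, 5.0
f = lambda z: z
sample_marks = lambda rng, n: rng.exponential(1.0, size=n)  # E f(Z) = 1, E f(Z)^2 = 2

sums = np.array([thinned_mark_sum(rng, t, rate, f, sample_marks, u_max)
                 for _ in range(20_000)])
M_t = sums - t * rate * 1.0          # compensated integral at time t
print("mean of M(t):", M_t.mean())
print("variance of M(t):", M_t.var(), "vs t*rate*E[f(Z)^2] =", t * rate * 2.0)
```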

1.3 Uniqueness

In the main text, we have shown tightness of the sequence of empirical measures, ensuring that the sequence is relatively compact. Moreover, we showed that the possible limits are time-dependent measures \(\Lambda (t)\) that satisfy, for all \(f\in C^{1}(\mathbb {R})\), Eq. (36) that we write here as:

In the above notation, x is a generic symbolic variable used for notational simplicity, with the convention \(\left\langle \Lambda (t),\, f(x)\right\rangle = \left\langle \Lambda (t),\, f\right\rangle \). We know that the law of the solution of the mean-field McKean–Vlasov Eq. (4) satisfies this system. We aim to show that there is a unique positive Radon measure such that the nonlinear Eq. (46) holds. We first remark that the differential equation conserves the total mass: \(\left\langle \Lambda (t),\, 1\right\rangle =\left\langle \Lambda (0),\, 1\right\rangle \). We are therefore looking for a probability measure \(\Lambda \) satisfying the nonlinear Eq. (46). The proof of uniqueness uses the following properties:

Lemma 9

For any initial probability measure \(\Lambda (0)\) on \(\mathbb {R}_+\) with bounded support, if \(\Lambda (t)\) is a solution of Eq. (46), there exist C and K such that

(1) \(\displaystyle \sup _{t\ge 0} \left\langle \Lambda (t),\, b\right\rangle \le C < \infty \);

(2) \(\Lambda (t)\) has its support in [0, K] for all \(t\ge 0\).

Proof

The proof of (1) is similar to that of the analogous property established for solutions of the McKean–Vlasov equation. Denoting \(B(t)=\left\langle \Lambda (t),\, b\right\rangle \) and using the inequality \(b'(x)< \gamma b(x)+c\), we have:

and we conclude using Proposition 12.

We now prove that any solution \(\Lambda (t)\) of Eq. (46) has uniformly bounded support. Assume that the support of \(\Lambda (0)\) is contained in the interval \([0,K_0]\), and pick a continuously differentiable, non-decreasing function f such that

with \(K=\max (K_0, \;C\,E(V))\), where C is an upper bound of \(\sup _{t\ge 0} \left\langle \Lambda (t),\, b\right\rangle \) given by (1). Applying Eq. (46) to f and using the facts that \(\left\langle \Lambda (t),\, b\right\rangle \le C\), \(fb\ge 0\) and \(f'\ge 0\), we obtain the inequality:

hence \(\left\langle \Lambda (t),\, f\right\rangle =0\) for all \(t\ge 0\), implying that the support of \(\Lambda (t)\) is contained within the compact set [0, K]. \(\square \)
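Here is a heuristic reading of why \(K=\max (K_0, C\,E(V))\) is the right level. It rests on an interpretation that is not stated in this appendix: in the mean-field limit, a neuron's state drifts as \(\dot{x}=-x+E(V)\left\langle \Lambda (t),\, b\right\rangle \) between spikes and is reset to 0 when it fires, with \(E(V)\ge 0\).

```latex
\dot x(t) = -x(t) + E(V)\,\langle \Lambda(t), b\rangle \le -x(t) + C\,E(V),
\qquad\text{so}\qquad
\dot x(t) \le 0 \ \text{ whenever } x(t) \ge K = \max\big(K_0,\, C\,E(V)\big).
% A trajectory started in [0, K_0] therefore cannot cross the level K,
% and resets to 0 keep it inside [0, K].
```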

With these a priori estimates on \(\Lambda \) in hand, we can now show the uniqueness of possible solutions to the mean-field equation. For two probability measures \(\lambda _1\) and \(\lambda _2\), we define the distance:

with

and note that the subset of functions of \(\mathcal {S}\) with bounded support is dense in the set of continuous functions with bounded support.

Proposition 14

Let \(\Lambda (0)\) be a probability measure with bounded support. Then Eq. (46) has a unique solution with initial condition \(\Lambda (0)\).

Proof

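Let \(\Lambda _1(t)\) and \(\Lambda _2(t)\) be two solutions of Eq. (46) with the same initial condition \(\Lambda (0)\). We show that \(\Vert \Lambda _1(t)-\Lambda _2(t)\Vert _\mathcal{S} =0\) for all times. Indeed, for any \(f\in \mathcal {S}\), we have, denoting \(\Delta (t)=\Lambda _1(t)-\Lambda _2(t)\),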

and therefore, since \(\Delta (t)\) is supported in the compact set [0, K] by Lemma 9, we have:

with

We therefore have for all \(f\in \mathcal {S}\) the inequality:

Taking the supremum over \(f\in \mathcal {S}\), the same inequality holds for the norm of \(\Delta \):

We conclude, by immediate recursion, that:

hence \(\Vert \Delta (t)\Vert _{\mathcal {S}}=0\) for all \(t\ge 0\). \(\square \)
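The "immediate recursion" can be sketched as follows. We assume that the omitted inequality has the Grönwall form \(\Vert \Delta (t)\Vert _{\mathcal {S}}\le D\int _0^t\Vert \Delta (s)\Vert _{\mathcal {S}}\,\mathrm {d}s\) for some constant D depending on K and C, and that \(\Vert \Delta (s)\Vert _{\mathcal {S}}\le M_\Delta \) on [0, t]. Both D and \(M_\Delta \) are our placeholders, not the authors' notation.

```latex
\|\Delta(t)\|_{\mathcal S}
  \le D\int_0^t \|\Delta(s_1)\|_{\mathcal S}\,ds_1
  \le D^2\int_0^t\!\!\int_0^{s_1} \|\Delta(s_2)\|_{\mathcal S}\,ds_2\,ds_1
  \le \cdots
  \le M_\Delta\,\frac{(Dt)^n}{n!}
  \xrightarrow[n\to\infty]{} 0 .
```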

1.4 Simulation Algorithms

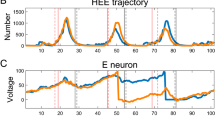

This appendix describes the simulation algorithms used to obtain our plots for linear and quadratic rate functions in Sect. 7. We used two distinct algorithms: an exact algorithm to compute the extinction time and, for the sake of computational efficiency, an approximate algorithm for large networks.

The algorithm we used to simulate large networks efficiently advances the process over discrete times \(t_k=k \delta t\), with \(\delta t\) a small time step. In each time interval, we compute the probability that a spike occurs within the interval, draw a Bernoulli random variable with this probability, and update the network state accordingly.
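A minimal sketch of such a time-stepping scheme follows. It rests on modelling assumptions that are ours and are not spelled out in this appendix: a unit-rate leak \(\dot{X}_i=-X_i\) between spikes, the linear map \(b(x)=\lambda x\), reset to 0 after a spike, and a rule where each spike of neuron j adds an independent copy of V divided by N to every other neuron. The per-step spike probability \(1-e^{-b(X_i)\delta t}\) freezes the rate over the step. The function name `simulate_time_stepped` is hypothetical.

```python
import numpy as np


def simulate_time_stepped(x0, lam, dt, n_steps, sample_v, rng):
    """Approximate (time-discretised) network simulation under illustrative assumptions.

    Assumed model: leak dX/dt = -X between spikes, firing map b(x) = lam * x,
    reset to 0 after a spike, and each spike of neuron j adds V_ij / N to every
    other neuron i, with V_ij drawn i.i.d. by sample_v(rng, shape).
    Returns the final state and the mean state after each step.
    """
    x = np.array(x0, dtype=float)
    n = x.size
    mean_state = np.empty(n_steps + 1)
    mean_state[0] = x.mean()
    decay = np.exp(-dt)
    for k in range(n_steps):
        # Probability that neuron i spikes in [t_k, t_k + dt), rate frozen at b(X_i(t_k)).
        p_spike = 1.0 - np.exp(-lam * x * dt)
        spikes = rng.random(n) < p_spike              # one Bernoulli draw per neuron
        x *= decay                                    # deterministic leak over the step
        spiking = np.flatnonzero(spikes)
        if spiking.size:
            kicks = sample_v(rng, (n, spiking.size)) / n
            kicks[spiking, np.arange(spiking.size)] = 0.0  # no self-excitation
            x += kicks.sum(axis=1)
            x[spiking] = 0.0                          # reset the neurons that fired
        mean_state[k + 1] = x.mean()
    return x, mean_state
```

Replacing the frozen-rate probability by \(b(X_i)\,\delta t\) gives the first-order variant. The two agree up to \(O(\delta t^2)\) per step.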

This approximate dynamics allows fast simulations and therefore very large network sizes. Computing the extinction time of the network, however, is much more delicate. To this end, we performed exact simulations of the jump process for small network sizes, in the case of the linear firing function \(b(x)=\lambda \,x\). In the specific model treated here, the particularly simple form of the dynamics of the variables \(X_i(t)\) between spikes and the simplicity of the firing map b allow one to derive the cumulative distribution function of the firing time of neuron i:

given that \(X_i(0)=X_i\). From this expression, one obtains the probability \(p_i=\exp (-\lambda X_i)\) that neuron i stops firing, as well as the distribution of its firing time conditional on the neuron firing. Therefore, although we deal with state-dependent Poisson processes, these formulae allow us to simulate exactly both the process and the extinction time, which is reached when all neurons have stopped firing.
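A minimal event-driven sketch of this exact scheme follows. The assumptions are ours, for illustration. Between spikes each state decays as \(X_i(t)=X_i e^{-t}\); with \(b(x)=\lambda x\) this gives \(P(T_i>t)=\exp (-\lambda X_i(1-e^{-t}))\) and hence the stopping probability \(p_i=\exp (-\lambda X_i)\). A firing neuron is reset to 0, and every other neuron receives an independent copy of V divided by N. The extinction time is recorded as the time of the last spike. Function names are hypothetical. Because intensities are deterministic between events, each neuron's candidate firing time can be drawn by inverting its distribution function, and the earliest candidate is the next network event.

```python
import numpy as np


def sample_first_firing_times(x, lam, rng):
    """Exact next firing time of each neuron, given its current state x.

    Assumes b(x) = lam * x and X(t) = x * exp(-t) until the next event, so that
    P(T > t) = exp(-lam * x * (1 - exp(-t))). T = inf means the neuron never fires.
    """
    x = np.asarray(x, dtype=float)
    u = rng.random(x.shape)
    mass = lam * x                                  # total remaining hazard lam * x
    times = np.full(x.shape, np.inf)
    fires = (mass > 0) & (u > np.exp(-mass))        # fires with probability 1 - exp(-lam * x)
    # Invert P(T > t) = u:  t = -log(1 + log(u) / (lam * x)).
    times[fires] = -np.log1p(np.log(u[fires]) / mass[fires])
    return times


def simulate_until_extinction(x0, lam, sample_v, rng, max_spikes=1_000_000):
    """Event-driven simulation; returns (time of last spike, number of spikes).

    Hypothetical interaction rule: when neuron j fires it is reset to 0 and every
    other neuron i receives V_ij / N, with V_ij drawn by sample_v(rng, N).
    """
    x = np.array(x0, dtype=float)
    n = x.size
    t, last_spike, n_spikes = 0.0, 0.0, 0
    while n_spikes < max_spikes:
        # Candidate times are independent given the current state; the earliest one wins.
        times = sample_first_firing_times(x, lam, rng)
        j = int(np.argmin(times))
        if np.isinf(times[j]):
            return last_spike, n_spikes             # no neuron will ever fire again
        t += times[j]
        x *= np.exp(-times[j])                      # leak up to the spike time
        kicks = sample_v(rng, n) / n
        kicks[j] = 0.0
        x += kicks
        x[j] = 0.0                                  # reset the neuron that fired
        last_spike, n_spikes = t, n_spikes + 1
    raise RuntimeError("max_spikes reached before extinction")
```

For example, `simulate_until_extinction(np.full(20, 0.5), 1.0, lambda r, n: r.exponential(0.5, size=n), np.random.default_rng(0))` returns one sample of the last-spike time and the spike count. Repeating the call over seeds gives an empirical extinction-time distribution.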

Cite this article

Robert, P., Touboul, J. On the Dynamics of Random Neuronal Networks. J Stat Phys 165, 545–584 (2016). https://doi.org/10.1007/s10955-016-1622-9
