Abstract

Objectives

We provide a critical review of empirical research on the deterrent effect of capital punishment that makes use of state-level and, in some instances, county-level panel data.

Methods

We present the underlying behavioral model that presumably informs the specification of panel data regressions, outline the typical model specification employed, discuss current norms regarding “best-practice” in the analysis of panel data, and engage in a critical review.

Results

The connection between the theoretical reasoning underlying general deterrence and the regression models typically specified in this literature is tenuous. Many of the papers purporting to find strong effects of the death penalty on state-level murder rates suffer from basic methodological problems: weak instruments, questionable exclusion restrictions, failure to control for obvious factors, and incorrect calculation of standard errors, which in turn has led to faulty statistical inference. The lack of variation in the key underlying explanatory variables and the heavy influence exerted by a few observations in state panel data regressions are fundamental problems for all panel data studies of this question, leading to overwhelming model uncertainty.

Conclusions

We find the recent panel literature on whether there is a deterrent effect of the death penalty to be inconclusive as a whole, and in many cases uninformative. Moreover, we do not see additional methodological tools that are likely to overcome the multiple challenges that face researchers in this domain, including the weak informativeness of the data, a lack of theory on the mechanisms involved, and the likely presence of unobserved confounders.

Introduction

In 1976, the Supreme Court ruled that a carefully crafted death penalty statute that meets specific criteria defined by the Court does not violate the Eighth Amendment prohibition against cruel and unusual punishment.Footnote 1 The decision effectively ended a 4-year death penalty moratorium created by an earlier 1972 Supreme Court ruling. Since the reinstatement of the death penalty, 7,773 inmates have been sentenced to death in the United States. Of these inmates, roughly 15% have been executed, 39% have been removed from death row due to vacated sentences or convictions, commutation, or a death sentence being struck down on appeal, and 5% have died from other causes (Snell 2010).

During this time, the legality of the death penalty and the frequency of death penalty sentences and executions have varied considerably across U.S. states and, to a lesser degree, for individual states over time. A small number of states contribute disproportionately to both the total number of executions and the number of inmates held under a death sentence. For example, between 1977 and 2008, a total of 1,185 inmates were executed. Eight states accounted for 80% of these executions, with Texas alone accounting for 38%.Footnote 2 Meanwhile, 16 states did not execute a single individual. Similarly, as of December 31, 2008, 3,159 state prison inmates were being held under a sentence of death. Six states (Alabama, California, Florida, Ohio, Pennsylvania, and Texas) accounted for 64% of these inmates (Snell 2010), while 15 states did not have a single inmate on death row.

Certainly, some of this variation is driven by the states that do not impose the death penalty: as of 2009, 36 states authorized its use in criminal sentencing. However, even among those that do, there is considerable heterogeneity in actual outcomes. For example, of the 927 inmates sentenced to death in California between 1977 and 2009, 13 (1%) have been executed, 73 (8%) have died of other causes, and 157 (17%) have had their sentences commuted or overturned. The remaining inmates (684, 74%) are still awaiting execution. By contrast, of the 1,040 inmates sentenced to death in Texas over the same period, 447 (43%) have been executed, 38 (4%) have died of other causes, 224 (22%) have had their sentences overturned or commuted, and 331 (32%) remain on death row (Snell 2010, Table 20). Clearly, being sentenced to death in Texas means something different than being sentenced to death in California.
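The shares quoted for California and Texas can be checked directly from the counts in the text (Snell 2010, Table 20). The short Python sketch below recomputes each disposition as a percentage of all death sentences in each state. The dictionary keys are labels chosen only for this illustration.

```python
# Death-sentence dispositions, 1977-2009, as reported in the text (Snell 2010, Table 20).
dispositions = {
    "California": {"executed": 13, "died_other_causes": 73,
                   "commuted_or_overturned": 157, "on_death_row": 684},
    "Texas": {"executed": 447, "died_other_causes": 38,
              "commuted_or_overturned": 224, "on_death_row": 331},
}


def shares(counts):
    """Return the total and each disposition's share of all death sentences (in %)."""
    total = sum(counts.values())
    return total, {outcome: 100.0 * n / total for outcome, n in counts.items()}


for state, counts in dispositions.items():
    total, pct = shares(counts)
    summary = ", ".join(f"{outcome} {p:.1f}%" for outcome, p in pct.items())
    print(f"{state} (n={total}): {summary}")
```

The counts sum to the 927 and 1,040 sentences cited above. The execution share is about 1.4% in California versus roughly 43% in Texas, which is the contrast the paragraph draws.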

Over the last decade, empirical research has focused on the differential experiences of states with a death penalty regime to study whether capital punishment deters murder among the public at large. In particular, these studies have exploited the fact that, in addition to cross-state differences in actual sentencing policy, there is also variation over time within individual states in the official sentencing regime, in the propensity to seek the death penalty in practice, and in the application of the ultimate punishment. With variation in de facto policy occurring both within states over time and between states, researchers have used state-level panel data sets to control for geographic and time fixed effects that might otherwise confound inference regarding the relationship between capital punishment and murder rates.

While some researchers pursuing this path conclude that there is strong evidence of a deterrent effect of capital punishment on murder (e.g., Dezhbakhsh et al. 2003; Dezhbakhsh and Shepherd 2006; Mocan and Gittings 2003; Zimmerman 2004), there are also several forceful critiques of these findings (e.g., Berk 2005; Donohue and Wolfers 2005, 2009; Kovandzic et al. 2009). In turn, the original authors have responded to those critiques (Dezhbakhsh and Rubin 2010; Zimmerman 2009; Mocan and Gittings 2010). Across these and additional research papers, the varying results and the wide-ranging discussion of these results have led to uncertainty regarding the degree to which this body of research is substantively informative for policy makers.

In this paper, we provide a critical review of empirical research on the deterrent effect of capital punishment that makes use of state-level and, in some instances, county-level panel data. We begin with a conceptual discussion of the underlying behavioral model that presumably informs the specification of panel data regressions and outline the typical model specification employed in many of these studies. This is followed by a brief discussion of current norms regarding “best-practice” in the analysis of panel data and with regard to robust statistical inference. Finally, we engage in a critical review of much of the recent panel data research on the deterrent effect of capital punishment.

Regarding the conceptual discussion, we are of the opinion that the connection between the theoretical reasoning underlying general deterrence and the regression models typically specified in this literature is tenuous. Most studies estimate the empirical relationship between murder rates and measures of death penalty enforcement, including the number of death sentences relative to murder convictions and the number of executions relative to varying lags of past death sentences. Variation in these explanatory variables may be driven by changes in de jure policy, changes in de facto policy, variation in crime rates, changes in the composition of crimes that occur within a stable policy environment, or some combination of these. Presumably, a rational offender would only be deterred by changes in policy or practice that alter the risk of actually being put to death and the expected time to execution, conditional on being caught and convicted. It is not clear to us that the typical specification of the murder “cost function” as a linear function of these explanatory variables accurately gauges actual or perceived variation in such risks. This point takes on particular importance because variants of the basic specification lead to quite different results, and we do not find strong theoretical reasoning justifying one specification over another.

Aside from the specification of key explanatory variables, these studies face a number of econometric or statistical challenges that lie at the center of the dispute regarding the interpretation of the results. The first challenge concerns identification of a causal effect of the death penalty using observational data as opposed to data from a randomized experiment. Identifying the deterrent effect of capital punishment requires exogenous variation in the application of the death penalty, whether at the sentencing or the execution phase. To the extent that discrete changes in policy are correlated with unobservable time-varying factors (for example, other changes in the sentencing regime or underlying crime trends) or are themselves caused by homicide trends (reverse causality), inference from regression analysis will be compromised. The extant body of evidence addresses identification by controlling for observables, employing time- and place-specific fixed effects, and using instrumental variables. The effectiveness of these identification strategies will be a key area of focus in this review.

The second modeling issue concerns drawing statistical inferences that are robust to non-spherical disturbances. We argue that there is strong consensus regarding the need to address this issue in panel data settings and, while there are many possible fixes, there are several standard approaches that guard against faulty inference.

The third modeling issue concerns the robustness of the results to variants in model specification. On this point, we consider issues with the underlying distribution of the key measures of deterrence that lead to unstable estimation and discuss the implications for interpreting deterrence findings as average effects.

In our assessment, we find the panel data evidence offered regarding a deterrent effect of capital punishment to be inconclusive. There is a weak connection between theory and model specification, and subsequent research has demonstrated that results are sensitive to changes in specification. There are many threats to the internal validity of the empirical specifications presented in these papers, and the identification strategies proposed to deal with them rest on questionable exclusion restrictions. Perhaps the easiest issue to address is the calculation of standard errors that are robust to within-state serial correlation and cross-state heteroskedasticity. Most, though not all, of the papers finding deterrent effects do not adequately address this issue. Moreover, it has been demonstrated that the statistically significant deterrent effects found in several of the papers disappear when estimator variance is calculated correctly. Finally, in many cases, the results reported in this literature are driven entirely by a small and highly selected group of states or, in some cases, by a small number of state-years. This suggests that it is inappropriate to interpret model results as national average effects, and it casts additional doubt on whether the estimated effects can be attributed to capital punishment.
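A simple way to check whether an estimate is driven by a handful of states is to re-estimate it with each state dropped in turn. The Python sketch below applies this idea to a pooled bivariate regression on hypothetical data. The state labels and values are invented purely for illustration. A real analysis would re-estimate the full fixed-effects specification rather than a pooled slope.

```python
def ols_slope(xs, ys):
    """Slope from a bivariate OLS regression with an intercept."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    return sxy / sxx


def leave_one_state_out(rows):
    """Re-estimate the slope once per state, each time dropping that state's rows."""
    estimates = {}
    for dropped in sorted({state for state, _, _ in rows}):
        kept = [(x, y) for state, x, y in rows if state != dropped]
        xs, ys = zip(*kept)
        estimates[dropped] = ols_slope(xs, ys)
    return estimates


# Hypothetical (state, deterrence measure, murder rate) observations.
rows = [
    ("A", 0.0, 15.0), ("A", 1.0, 15.0),
    ("B", 0.0, 16.0), ("B", 1.0, 16.0),
    ("C", 0.0, 14.0), ("C", 1.0, 14.0),
    ("D", 0.0, 15.0), ("D", 10.0, 5.0),
]

full = ols_slope([x for _, x, _ in rows], [y for _, _, y in rows])
print("all states:", round(full, 3))
for state, slope in leave_one_state_out(rows).items():
    print(f"without {state}:", round(slope, 3))
```

In this toy panel the pooled slope is about -1.02. Dropping any one of states A, B, or C leaves it near -1. Dropping state D alone drives it to zero, because D supplies the only observation with a large value of the explanatory variable.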

On the whole, we find the current research literature that uses panel data to test for a deterrent effect of capital punishment to be uninformative for a policy or judicial audience. This is to a small degree due to minor but important issues of appropriate model estimation and to a large degree due to unconvincing identification of causal effects and unconvincing theoretical justification for the model specifications employed. Given the difficulty in specifying a theoretically correct relationship between execution risk and murder rates, we argue that future research at the state level should focus on a discrete change in policy (for example, an execution moratorium or the abolition or reinstatement of the death penalty) and employ recent econometric advances in counterfactual estimation and robust inference. Absent an identifiable policy change, it is nearly impossible to assess whether variation in executions is driven by crime trends, overall shifts in composition of a state’s judiciary, or changes in de facto policy regarding capital cases and hence whether the variation is relevant to future execution risk. We are of the opinion that additional studies based on state panel data models are unlikely to shed much light on this question as such policy changes are too rare to provide robust evidence.

Model Specification

Most panel data models relating murder rates to death penalty policy measures are motivated by a straightforward theoretical paradigm. The “rational offender” model as described in Becker (1968) and Ehrlich (1975) posits that offenders or potential offenders weigh the expected costs and benefits when deciding whether or not to commit a crime, and that the likelihood of offending will depend inversely on the expected value of the costs. Stated simply, to the extent that the death penalty increases the “costs” of committing capital murder, capital punishment may reduce murder rates through general deterrence. An alternative theoretical approach places weight on the demonstration value of a relatively rare event (that is, an execution). Specifically, to the extent that potential offenders are not particularly good at forming accurate expectations regarding the likelihood of being executed, the occasional demonstration that executions occur may reinforce the notion that a completed death sentence is a real possibility and, in the process, perhaps deter murder. In such a framework, the details of the executions themselves (how long they take, whether they are botched, or the extent of media coverage) may also affect behavior. Thus, there are two strands of theory here. The rational offender model posits that potential murderers weigh the ‘costs’ and ‘benefits’ of committing homicide. The second posits that individuals place weight on specific signals generated by the sanctioning regime, such as an actual execution or a departure from the historical execution rate, that provide salient proxies for the likelihood of the sanction.

Much of the empirical panel data research is informed by the rational offender approach and estimates a model of the form

\[ \text{murder}_{it} = f(Z_{it})\gamma + X_{it}\delta + \alpha_{i} + \beta_{t} + \varepsilon_{it}, \]

where \( \text{murder}_{it} \) is the number of murders per 100,000 residents in state \( i \) in year \( t \), \( f(Z_{it}) \) is an expected cost function of committing a capital homicide that depends on the vector of policy and personal preference variables \( Z_{it} \) with corresponding parameter \( \gamma \), \( X_{it} \) is a vector of control variables with the corresponding parameter vector \( \delta \), \( \varepsilon_{it} \) is a mean-zero disturbance term, \( \alpha_{i} \) is a state-specific fixed effect, and \( \beta_{t} \) is a year-specific fixed effect.

There are several features of this model specification that bear mention. First, the inclusion of state fixed effects means that the effect of the expected costs of committing murder on state murder rates is estimated using only the variation in murder rates and expected costs that occurs within states over time (i.e., between-state variation in average costs and average murder rates does not contribute to the estimate). Restricting identification to within-state variation ensures that the models do not attribute changes in the murder rate to the death penalty when they are, in fact, due to state-level differences in other dimensions. We note, however, that this restriction considerably reduces the variation available in the capital punishment variables. Second, the inclusion of year fixed effects removes year-to-year variation in the data that is common to all states in the panel, ensuring that the models do not attribute changes in the murder rate to the death penalty when they are, in fact, driven by national-level shocks.
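For a balanced panel with a single regressor and no other controls, including state and year fixed effects is numerically equivalent to regressing two-way demeaned outcomes on two-way demeaned regressors. Each value has its state mean and its year mean subtracted, and the grand mean added back. The Python sketch below uses hypothetical data with an assumed true coefficient of -0.8. It shows the point made above: adding an arbitrary state-specific constant to the cost measure changes only between-state variation and leaves the estimate unchanged.

```python
def two_way_demean(panel):
    """Two-way within transformation for a balanced panel keyed by (state, year)."""
    states = sorted({s for s, _ in panel})
    years = sorted({t for _, t in panel})
    state_mean = {s: sum(panel[(s, t)] for t in years) / len(years) for s in states}
    year_mean = {t: sum(panel[(s, t)] for s in states) / len(states) for t in years}
    grand_mean = sum(panel.values()) / len(panel)
    return {
        (s, t): panel[(s, t)] - state_mean[s] - year_mean[t] + grand_mean
        for s in states
        for t in years
    }


def twfe_slope(y, x):
    """Slope on x from a regression of y on x with state and year fixed effects."""
    yd, xd = two_way_demean(y), two_way_demean(x)
    return sum(xd[k] * yd[k] for k in xd) / sum(xd[k] ** 2 for k in xd)


# Hypothetical balanced panel: 3 states observed over 4 years.
years = [2000, 2001, 2002, 2003]
x_path = {"A": [0.0, 1.0, 3.0, 2.0], "B": [2.0, 2.0, 0.0, 1.0], "C": [1.0, 4.0, 1.0, 0.0]}
alpha = {"A": 10.0, "B": 4.0, "C": 7.0}                # state fixed effects
beta = {2000: 0.0, 2001: 0.5, 2002: -0.3, 2003: 1.0}  # year fixed effects
gamma = -0.8                                          # assumed true effect

x = {(s, t): x_path[s][j] for s in x_path for j, t in enumerate(years)}
y = {(s, t): alpha[s] + beta[t] + gamma * x[(s, t)] for (s, t) in x}

# Shift each state's cost measure by a constant: pure between-state variation.
shift = {"A": 5.0, "B": -2.0, "C": 0.0}
x_shifted = {(s, t): v + shift[s] for (s, t), v in x.items()}

print(round(twfe_slope(y, x), 6), round(twfe_slope(y, x_shifted), 6))
```

Both estimates equal the assumed -0.8 up to floating-point rounding. The state constants are absorbed by the state fixed effects, so only within-state movements in the cost measure identify the coefficient.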

Finally, we have defined a general relationship between the expected costs of committing murder and the vector of determinants of costs given in Z via the function f(.) and, for simplicity, a linear relationship between murder rates and the expected cost function. Of course, expected costs of committing capital murder may impact the murder rate non-linearly.

To operationalize this empirical strategy, one needs to choose the functional form of f(.) explicitly and articulate the set of variables to be included in the vector of cost determinants. Functional form issues aside, expected utility theory suggests a number of candidate variables that could be included in Z. First, the perceived likelihood of being apprehended and convicted should contribute positively to the cost of committing murder. Moreover, the perceived probability distribution pertaining to the adjudication of one’s case should distinguish between the likelihood of being sentenced to death and the likelihood of alternative sanctions. Beyond these probabilities, the effect of a specific sentencing outcome on an individual’s per-period utility flow, as well as its effect on the expected remaining length of one’s life, should certainly matter. Finally, an individual’s time preferences (i.e., the extent to which the potential offender discounts the future) will affect expected costs.

The effect of capital punishment on this cost function can occur through several channels. First, to the extent that receiving a death sentence shortens one’s expected time until death, the presence of a death penalty statute will increase the expected costs of capital murder. In addition, death row inmates serve time under different conditions than those sentenced to life without parole. The physical and social isolation of death row inmates, as well as having to exist under a cloud of uncertainty regarding whether and when one will be executed, likely diminishes welfare while incarcerated and thus increases costs. Because the death penalty is only a small part of the sanction regime for murder, the incremental effect of the death penalty on the cost of being convicted will also depend on the alternative sanctions should one not be sentenced to death. For example, life without parole in a designated high-security facility may be more welfare-diminishing than a sentence of 20 years to life with no mandatory-minimum security placement. Hence, a complete specification of the cost function would require the discounted present value of one’s future welfare stream under a death sentence, the comparable present value under the most likely alternative non-capital sanctions, and some assessment of the conditional probability of each. At this specification stage, it is often additionally assumed that, on average, the perceived risk of these sanction costs equals the actual risk. While the complications of estimating these actual risks are discussed in detail below, we note here that there is no research on the sanction risk perceptions of potential murderers on which to base this assumption and, by definition, no mechanism for learning over time through experience with the death penalty that would enforce it.
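The ingredients listed above can be combined into a toy expected-cost calculation: a probability of conviction, a conditional probability of a death sentence, and discounted welfare streams under a death sentence and under the alternative sanction. The Python sketch below is a minimal illustration under loudly hypothetical assumptions. The 40-year horizon, the 14 years to execution, the per-year welfare losses (with the loss of life after execution crudely represented as a constant annual loss), and the probabilities are all invented. The sketch shows only that the incremental cost of a death-penalty option shrinks as the discount rate rises, because much of the extra cost arrives far in the future.

```python
def present_value(losses, rate):
    """Present value of annual welfare losses; losses[k] is incurred k + 1 years ahead."""
    return sum(loss / (1.0 + rate) ** (k + 1) for k, loss in enumerate(losses))


def expected_cost(p_convict, p_death, pv_death, pv_alt):
    """Expected sanction cost: conviction risk times a mixture over sentencing outcomes."""
    return p_convict * (p_death * pv_death + (1.0 - p_death) * pv_alt)


# Hypothetical welfare-loss streams over a 40-year horizon (illustrative assumptions).
HORIZON = 40
YEARS_TO_EXECUTION = 14
death_stream = [1.2] * YEARS_TO_EXECUTION + [2.0] * (HORIZON - YEARS_TO_EXECUTION)
alt_stream = [1.0] * HORIZON  # e.g., life without parole


def incremental_cost(rate, p_convict=0.5, p_death=0.02):
    """Change in expected cost from adding a death-penalty option to the regime."""
    pv_d = present_value(death_stream, rate)
    pv_a = present_value(alt_stream, rate)
    with_dp = expected_cost(p_convict, p_death, pv_d, pv_a)
    without_dp = expected_cost(p_convict, 0.0, pv_d, pv_a)
    return with_dp - without_dp


for rate in (0.03, 0.15):
    print(rate, round(incremental_cost(rate), 4))
```

Because every yearly loss under the hypothetical death stream exceeds the corresponding loss under the alternative, the incremental cost is positive. It falls as the discount rate rises, which is the time-preference channel described in the text.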

The existing body of panel data studies often specifies the function \( f(Z_{it}) \) either as an indicator variable for the presence of a death-penalty statute or as a linearly additive combination of (1) a gauge of murder arrest rates, (2) a gauge of capital convictions relative to murder arrests, and (3) a variable measuring executions in the state-year relative to capital convictions, measured either contemporaneously or lagged 6 or 7 years.Footnote 3 The first specification effectively punts on the question of how the death penalty specifically affects the expected costs of committing murder. In the second specification, measures (2) and (3) are intended to capture within-state variation over time in the application of the death penalty.
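The second specification's three measures are simple ratios built from a state-year table. The Python sketch below constructs them for one state. It assumes hypothetical field names (`murders`, `murder_arrests`, `death_sentences`, `executions`) and invented counts, and it uses the 6-year lag for the execution measure described in the text. A measure is left as `None` when its denominator is missing or zero.

```python
def deterrence_measures(rows, lag=6):
    """Compute (arrest rate, death sentences per arrest, executions per lagged death sentence).

    rows maps year -> counts for a single state. Field names are illustrative.
    A measure is None when its denominator is missing or zero.
    """
    out = {}
    for year in sorted(rows):
        r = rows[year]
        arrest_rate = r["murder_arrests"] / r["murders"] if r["murders"] else None
        sentence_rate = (
            r["death_sentences"] / r["murder_arrests"] if r["murder_arrests"] else None
        )
        past = rows.get(year - lag)
        if past is None or past["death_sentences"] == 0:
            execution_rate = None
        else:
            execution_rate = r["executions"] / past["death_sentences"]
        out[year] = (arrest_rate, sentence_rate, execution_rate)
    return out


# Hypothetical counts for one state, 1990-1997.
rows = {
    year: {
        "murders": 500 + 10 * k,
        "murder_arrests": 300 + 5 * k,
        "death_sentences": 10 + k % 3,
        "executions": k % 2,
    }
    for k, year in enumerate(range(1990, 1998))
}

for year, measures in deterrence_measures(rows).items():
    print(year, measures)
```

With a 6-year lag, the execution measure is undefined for the first six years of the panel. In this toy example it rests on zero or one execution per year, which hints at how noisy the ratio can be in practice.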

Prior to evaluating specific studies, we believe that there are three primary issues that require some general discussion. First, to what extent do the two general approaches outlined above correctly specify the expected cost function \( f(Z_{it}) \)? Second, is it possible to identify exogenous variation occurring within states over time in the elements of \( Z_{it} \) that pertain to the death penalty? Finally, what steps should be taken to ensure that statistical inferences are robust to the non-spherical disturbances that are common in panel data applications?

Regarding the issue of specifying the cost function, the two specifications that we have identified clearly employ incomplete and indirect gauges of the effect of capital punishment on expected costs. The first specification (whether or not the state has a death penalty statute) makes no effort to characterize variation across or within states over time in the zeal with which the death penalty is applied. Moreover, there is relatively little variation in this variable as few states have switched death penalty regimes since the key 1976 Supreme Court decision. On the plus side, if one were able to identify exogenous variation in the presence of a death penalty statute through either instrumental variables or appropriate control-function methods, the interpretation of the coefficient is quite straightforward. In particular, assuming identification of a causal effect is achieved, the coefficient on an indicator variable for the presence of a death penalty statute provides an estimate of the (local) average treatment effect of having such a law on the books that operates through unknown mechanisms. While ultimately mediated through a black box with unknown determinants and an unspecified functional form, one can clearly connect policy to outcomes in such a research design.

We find the second approach (controlling for murder arrest rates, capital convictions relative to murders, and executions relative to lagged convictions) problematic due to the incomplete specification of avenues through which the death penalty affects relative expected costs and the arbitrary specification choices commonly made. To be fair, one could situate each of these measures within the expected cost function that we have specified. Specifically, the murder arrest rate provides a rough measure of the likelihood of capture, while the capital conviction rate provides a gauge of the likelihood of receiving a death sentence. Both of these variables may change over time with changes in de facto enforcement and sentencing policy. However, within-state variation over time in both of these variables is also likely to reflect changes in the volume or nature of crime, in addition to changes in policy with regard to resource allocation towards murder investigation and the willingness to pursue capital convictions. Short-term changes in factors such as the volume or nature of crime are unlikely to be predictive of the probability of arrest or capital conviction in future periods and hence would not be appropriate measures of expected risk. Given that policy is only one determinant of these outcomes, and especially given that much of the state variation over time in these variables occurs within states with stable de jure death penalty regimes, it becomes difficult to interpret the meaning of the regression coefficients.

The most problematic variable in this specification is the commonly included measure of executions relative to lagged death sentences, meant to convey information about the probability of execution conditional on receiving a death sentence. In several studies, executions are measured relative to capital convictions lagged 6 or 7 years, a choice usually justified by the statement that the typical time to execution among those executed is 6 years. Perhaps the thinking here is that if, on average, it takes 6 years to be executed, then a rational offender should monitor this ratio in deciding whether or not to commit murder. We find this specification choice to be puzzling and arbitrary for a number of reasons.

First, basing the lag length of the denominator of this variable on the average time to death for those who are actually executed ignores the fact that only 15% of those sentenced to death since 1977 have actually been executed. If one wanted to normalize by the expected time to death for those sentenced to death, one would need to account for the 85% of death row inmates with right-censored survival times. As of December 31, 2009, the average death row inmate had been held under sentence of death for approximately 13 years (Snell 2010). Taking these censored spells into account, along with the fact that the roughly 40% of inmates removed from death row for reasons other than execution have a life expectancy closer to that of inmates sentenced to life, suggests that the time to death of the typical inmate with a capital sentence is much greater than 6 years.
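Right-censoring of this kind is what survival estimators such as Kaplan-Meier are designed to handle. The Python sketch below applies a minimal Kaplan-Meier estimator to a hypothetical cohort of eight death sentences. Three inmates die at 5, 6, and 7 years, and five remain on death row, censored at 10 to 20 years. These numbers are invented for illustration. The average among those who died is 6 years, yet the estimated probability of surviving past year 7 is still 0.625.

```python
def kaplan_meier(durations, events):
    """Kaplan-Meier survival estimates.

    durations: years from death sentence to death or censoring.
    events: 1 if death was observed, 0 if the spell is right-censored.
    Returns [(time, S(time))] at each distinct observed death time.
    """
    data = sorted(zip(durations, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group all spells ending at time t; spells censored at t still count as at risk at t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            survival *= 1.0 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= removed
    return curve


# Hypothetical cohort: 3 observed deaths, 5 inmates still on death row (censored).
durations = [5, 6, 7, 10, 12, 13, 15, 20]
events = [1, 1, 1, 0, 0, 0, 0, 0]

naive_mean = sum(d for d, e in zip(durations, events) if e) / sum(events)
print("mean among observed deaths:", naive_mean)
print("Kaplan-Meier curve:", kaplan_meier(durations, events))
```

Averaging only over completed spells answers a different question, namely how long the executed waited, than the expected time to death for someone just sentenced. The estimator keeps censored inmates in the risk set for as long as they are observed.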

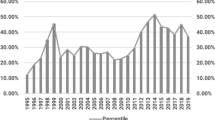

Second, the commonly stated stylized fact used to justify the lag-length choice (that the average time to execution is 6 years among the executed) is incorrect for most years analyzed in the typical panel data analysis. Snell (2010, Table 12) presents estimates of the average elapsed time between sentencing and execution for each year from 1984 to 2009. For the 21 people executed in 1984, the average elapsed time was approximately 6.2 years. Since then, this statistic has increased dramatically. For example, the average for the 52 individuals executed in 2009 stood at approximately 14.1 years. The lowest value for this statistic since 2000 occurred in 2002 (10.6 years).

Third, aside from the stylized fact being incorrect for the United States as a whole, the actual time to execution almost certainly varies across states that have a death penalty statute. As the statistics cited in the introduction suggest, California has sentenced many inmates to death (approximately 1,000) but has executed relatively few of them (roughly 1.4%). Texas, on the other hand, has sentenced slightly more than 1,000 inmates to death since 1977 and has executed roughly 43% of them. Clearly, the expected time to death for an inmate on California’s death row differs from the comparable figure for Texas. To the extent that the same lag is used for each state, the execution rate variable will be measured with error that is most likely non-classical, biasing its estimated coefficient in a direction that is difficult to sign. In addition, because the models presume that annually updated, state-specific changes in murder arrest rates, death penalty conviction rates, and numbers of executions are relevant for deterrence, it seems inconsistent to assume that a fixed national average lag from early in the time period is the relevant lag.

Perhaps the most important problem concerns the tenuous theoretical connection between this measure of “execution risk” and the expected perceived cost of committing murder. If we define the variable \( T \) as the duration between the date of the death sentence and the actual date of death, with cumulative distribution function \( G(T) \), the execution risk variable as commonly measured seeks to estimate \( G(7) - G(6) \). Putting aside the fact that this discrete change in the cumulative distribution function is measured using synthetic rather than actual cohorts (none of the papers measure actual executions of those particular offenders sentenced to death 6 years earlier, and our best guess is that with longitudinal data this variable would equal zero in most state-years), there is no theoretical reason to believe that a rational offender considering committing capital murder would pay particular attention to this specific point in the cumulative distribution function. By contrast, summary measures of the distribution of \( T \), such as \( E(T) \) or \( \operatorname{var}(T) \), would have a stronger theoretical justification for inclusion in the cost function.
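In the notation just introduced, the contrast can be written out explicitly. The left-hand quantity is the single one-year increment of \( G \) that the lagged execution measure is meant to target. On the right are the summary measures of the whole distribution, using the standard identity for the mean of a nonnegative random variable:

\[
G(7) - G(6) = \Pr(6 < T \le 7)
\qquad \text{versus} \qquad
E(T) = \int_{0}^{\infty} \bigl[1 - G(t)\bigr]\,dt, \quad
\operatorname{var}(T) = E(T^{2}) - \bigl[E(T)\bigr]^{2}.
\]

The first quantity depends on \( G \) only over a single interval. Two distributions with very different means and variances can therefore share the same value of \( G(7) - G(6) \), whereas \( E(T) \) and \( \operatorname{var}(T) \) reflect the entire distribution of time to death.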

Finally, it is not immediately obvious that variation in this variable reflects contemporaneous changes in policy that a rational offender should properly take into account when deciding whether or not to commit capital murder. As with the murder arrest rate and the capital conviction rate, one can certainly envision year-to-year variation in the execution risk variable occurring within a perfectly stable policy environment insofar as the underlying societal determinants of crime vary from year to year. In such an environment, the fact that there are more executions this year than last year does not necessarily convey information regarding a change in the expected costs of murder. This problem is exacerbated by the fact that potential murderers would be interested in the costs of murder as they would be manifest several or many years into the future, when they would potentially be prosecuted for the murder they are considering committing. It is particularly unclear whether year-to-year variation in executions or execution rates is relevant to the risk of execution 5, 10, or 15 years in the future.
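A deliberately simple, hypothetical stock-flow model of one state's death row makes the stable-policy point concrete. In the model, policy never changes: each year a fixed share of the inmates on death row at the start of the year is executed (5%, an assumed value), and other exits are ignored. Only the number of new death sentences varies, as it might with the volume of crime. The Python sketch below computes the commonly used ratio of executions to death sentences lagged six years under these assumptions.

```python
def lagged_execution_ratios(sentences, hazard=0.05, lag=6):
    """Executions in year t divided by death sentences in year t - lag.

    Hypothetical stock-flow model under a fixed policy: each year a constant share
    `hazard` of the start-of-year death row population is executed; other exits
    (commutations, deaths from other causes) are ignored for simplicity.
    """
    stock = 0.0
    executions = []
    for new_sentences in sentences:
        executed = hazard * stock  # drawn from the start-of-year stock
        stock = stock - executed + new_sentences
        executions.append(executed)
    return [executions[t] / sentences[t - lag] for t in range(lag, len(sentences))]


# Hypothetical annual death sentences that vary with the volume of murder.
sentences = [100, 120, 90, 150, 80, 110, 130, 95, 140, 100, 85, 125]
ratios = lagged_execution_ratios(sentences)
print([round(r, 3) for r in ratios])
```

The ratio ranges from under 0.3 to over 0.5 even though the per-inmate execution hazard never changes. The ratio mixes the size of the accumulated death row with the size of a single earlier sentencing cohort, so it moves with past sentencing volume rather than with policy.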

Besides measurement and functional form issues, these common specifications of the murder cost function are certainly incomplete since none of the studies that we review attempt to articulate, measure, and control for the time-varying severity of the considerably more common alternative sanctions for convicted murderers who do not receive a death sentence. This may be a particularly important shortcoming of this research to the extent that sentencing severity positively co-varies with capital sentencing practices. This brings us to the more general issue of whether changes in current execution rates or capital conviction rates are either correlated with contemporaneous changes in other sanctions that influence crime rates or are correlated with past changes in sentencing policy that exert current effects on crime.Footnote 4 For example, a current increase in executions may reflect a toughening of sentencing policy across the board that exerts a general deterrence and incapacitation effect on murder independently of any general deterrent effect of capital punishment. Similarly, given the long lag between conviction and execution in the United States, a spate of executions today may reflect past increases in enforcement that, in addition to increasing capital convictions, increased convictions of all sorts. Such past enforcement efforts may have long lasting impacts on murder rates to the extent that those apprehended and convicted in the past for other crimes are still incarcerated and are candidate capital offenders. It is also important to note that such factors are likely to be time varying within state and hence not eliminated by the inclusion of year and state fixed effects. With this in mind, the list of covariates as well as the instrumental variables employed to identify causal effects deserve particular attention.

The final issue that is a particular point of contention in this literature pertains to the correct measurement of standard errors. One of the key papers in this debate uses county-level panel data to estimate the effects of various gauges of capital punishment policy measured at the state-year level. Because the key explanatory variable is measured at a higher level of aggregation than the outcome, standard errors computed under conventional OLS assumptions will be substantially biased downward to the extent that the error terms are correlated across counties within the same state.Footnote 5 A second critique leveled at several of the other papers in this literature concerns adjusting standard errors for potential serial correlation within cross-sectional units and heteroskedasticity across units. Bertrand et al. (2004) demonstrate the tendency for unadjusted panel data difference-in-difference estimators to substantially over-reject the null hypothesis in the presence of serial correlation within cross-sectional clusters. This problem seems to persist both when computing standard errors under the assumption of spherical disturbances and when employing parametric corrections for serial correlation in the residuals.

Several recent textbooks devoted to the practice of applied econometrics place great emphasis on the importance of taking measures to guard against incorrect inference due to the misspecification of the variance–covariance matrix of the error terms in panel regression models. For example, in their discussion of serial correlation in microdata panels, Cameron and Trivedi (2005) note “the importance of correcting standard errors for serial correlation in errors at the individual level cannot be overemphasized” (p. 708). Likewise, in their treatise on econometric practice, Angrist and Pischke (2009) advocate for calculating conventional standard errors and cluster robust standard errors, and making inference based on the larger of the two. In reviewing the studies below, we will return to these prescriptions.

Existing Panel Data Studies

There are several alternative frameworks that one could use to organize a review of panel data research studies on the death penalty. For example, we could group studies based on methodological approach (OLS, IV), particular specifications of the expected cost function (execution risk measures, overall execution levels, competing risks for those sentenced to death including commutation or being murdered while incarcerated), or general findings (either supporting or failing to support deterrent effects). Alternatively, one could segment the small set of panel data studies by loosely defined research teams. Our reading of this literature is that there are several “research groups” that have contributed multiple papers to the extant evidence and that one can discuss each team’s output to illustrate the evolution of how underlying models have been specified. Moreover, there have been several major challenges to the findings of these teams and written responses to the challenges.

In what follows, we organize our review by research group. We begin with the research from teams that draw the strongest conclusions regarding the deterrent effects of capital punishment and then proceed to researchers whose findings and interpretation of the findings are more tentative. Throughout, we interweave discussion of the challenges raised for each paper. In the following section, we discuss published critiques of this research and the responses of these research teams to the published criticisms of their work.

Dezhbakhsh, Rubin and Shepherd

A series of papers written by various combinations of Hashem Dezhbakhsh, Joanna Shepherd, and Paul Rubin is commonly offered as providing the strongest evidence of a deterrent effect of the death penalty. We discuss the two initial papers (Dezhbakhsh and Shepherd 2006; Dezhbakhsh et al. 2003) in this section. A response to critiques of these papers, published in 2010, is discussed later in this paper, after the critiques are summarized.

Dezhbakhsh and Shepherd (2006) exploit variation in state-level death penalty statutes, some of which is driven by the 1972 and 1976 Supreme Court decisions. The authors conduct a number of tests for deterrent effects. First, the study presents an analysis of national time series for the period 1960–2000 and for the sub-periods 1960–1976 and 1977–2000. Second, the authors calculate, for each state, the percentage change in murder rates before and after the introduction of a death-penalty moratorium, as well as the corresponding pre-post percentage changes surrounding the lifting of a moratorium. They then analyze whether the distribution of these changes (mean, median, proportion of states with a specific sign) differs when moratoria are imposed relative to when they are lifted. Finally, the authors estimate state-level panel regressions for the period 1960–2000 where the dependent variable is the state murder rate and the key explanatory variables are either the number of executions in the state or the number of lagged executions, along with an indicator variable for a state moratorium.

To summarize their findings, the national-level time series regressions show significant negative correlations between the national murder rate and the number of executions (both contemporaneous and lagged one period), as well as a positive association with the years of the national death-penalty moratorium (1972–1976). With respect to the pre-post moratorium analysis, the authors find an increase in murder rates following the introduction of a moratorium in 33 of the 45 states. They also find that in state-years surrounding the lifting of a death-penalty moratorium, murder rates, on average, decline. Finally, the state-level panel regressions find significant positive effects of death-penalty moratoria on homicide rates and negative coefficients on both contemporaneous and lagged executions.

While the authors interpret this collage of empirical results as strong evidence of a deterrent effect of the death penalty, there are several problems with each component of the empirical analysis that we believe preclude such a conclusion. We distinguish here between two categories of problems. The first and more important is plausible identification of an effect of capital punishment, and the second is appropriate specification of the uncertainty of the estimates. Beginning with the national time series evidence, the main challenge to identification of an effect of capital punishment is the possible confounding effect of national trends in murder that are unrelated to capital punishment. On this point, we note that the stated positive effect of the national moratoria is driven by relatively high murder rates in the years between the 1972 Furman and 1976 Gregg decisions. A visual inspection of the national murder rate time series reveals that the rate began to climb in the early 1960s, was already at a historic high by 1970, and increased a bit further in the years surrounding the Furman decision. The high murder rate in the aftermath of the national moratorium clearly reflects the end-stages of a secular increase in the U.S. murder rate, and the starting year for this transition long predates 1972. This pre-existing trend by itself poses a serious challenge to identifying an effect of the moratorium.

Aside from the analysis of the effects of the national moratorium, the authors also draw conclusions from the apparent negative relationship between murder rates and execution totals. However, there is reason to believe that the national level murder rate time series is non-stationary, calling into question any inference that one might draw from a simple national-level regression. In Table 1, we present results from various specifications of the augmented Dickey-Fuller unit root test using the two alternative data series for national murder rates that are used in this literature: the series constructed from vital statistics and the series based on FBI Uniform Crime Reporting data. In all specifications, we cannot reject the null that the homicide rate follows a random walk. As is well known, hypothesis tests arising from regressions with non-stationary dependent variables are prone to over-rejecting the null hypothesis.Footnote 6 Hence, we place little weight on these national-level results.
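The concern about non-stationarity can be illustrated with the classic spurious-regression experiment. The sketch assumes two independent Gaussian random walks of 41 annual observations and counts how often a conventional t test on the slope rejects at roughly the 5% level. The length of 41 observations matches 1960–2000 as an illustrative choice. The sketch does not reproduce the augmented Dickey-Fuller tests in Table 1; those are available in standard packages, for example `adfuller` in statsmodels.

```python
import numpy as np


def spurious_rejection_rate(n_reps=2000, n_obs=41, difference=False, seed=2):
    """Share of replications in which an OLS t test finds a 'significant' slope
    between two independent random walks (or their first differences)."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(n_reps):
        y = np.cumsum(rng.normal(size=n_obs))   # random walk, unrelated to x
        x = np.cumsum(rng.normal(size=n_obs))   # random walk, unrelated to y
        if difference:
            y, x = np.diff(y), np.diff(x)       # first differences are stationary
        n = y.size
        X = np.column_stack([np.ones(n), x])
        coef, rss, *_ = np.linalg.lstsq(X, y, rcond=None)
        se = np.sqrt(rss[0] / (n - 2) * np.linalg.inv(X.T @ X)[1, 1])
        rejections += abs(coef[1] / se) > 2.02  # approx. 5% two-sided t critical value
    return rejections / n_reps


levels_rate = spurious_rejection_rate()
differences_rate = spurious_rejection_rate(difference=True)
```

In levels, the unrelated series appear significantly related in a large share of replications. After first-differencing, the rejection rate falls back toward the nominal level.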

Turning to the analysis of pre-post moratoria changes in state-level homicide rates, the strongest evidence of a deterrent effect comes from the increases in homicide associated with the introduction of a moratorium. However, we believe that this pattern is less supportive of a deterrent effect than the authors argue due to identification concerns. Most of the state-years where a moratorium is introduced correspond in time to the 1972 Furman decision. As we have already noted, the national time series clearly reveals homicide rates that are trending upward. One plausible interpretation of this evidence is that the authors have documented the continuation of an existing trend in homicide rates occurring both at the state and national level. Indeed, this point is made quite forcefully in Donohue and Wolfers (2005). In a reanalysis of these data, the authors first reproduce the state-level distribution of the percent change in homicide associated with moratoria reported in Dezhbakhsh and Shepherd (2006). However, they go one step further and show that contemporaneous changes in murder rates in states not experiencing a policy change parallel those that do. Specifically, in their reanalysis, the authors construct comparison distributions for the percentage change in state murder rates for states that do not experience the introduction of a moratorium. Donohue and Wolfers find changes in murder rates for these “control” states that are nearly identical to those introducing a moratorium. They also find similar results when they reanalyze the effects of death-penalty reinstatements. Notably, Dezhbakhsh and Shepherd (2006) make no effort to construct a comparison group that would permit some assessment of the counterfactual distribution of homicide changes under no moratorium.
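Synthetic data can show why a comparison group matters. The sketch assumes, purely for illustration, that every state shares the same upward trend in log murder rates from 1965 to 1979. It further assumes that a moratorium introduced in 1972 in 40 of 50 states has no effect at all. It computes each state's percentage change between the three years before and the three years after 1972, as in a pre-post tabulation. It then compares moratorium states with the remaining states, in the spirit of the Donohue and Wolfers reanalysis.

```python
import numpy as np

rng = np.random.default_rng(3)
years = np.arange(1965, 1980)
n_states = 50

# Hypothetical log murder rates: state level + common national trend + small noise.
common_trend = 0.05 * (years - years[0])
state_level = rng.normal(1.5, 0.4, size=n_states)
log_rate = (state_level[:, None] + common_trend[None, :]
            + rng.normal(0, 0.03, size=(n_states, years.size)))
rate = np.exp(log_rate)

# Hypothetical assignment: the first 40 states "introduce a moratorium" in 1972.
moratorium = np.arange(n_states) < 40
pre = (years >= 1969) & (years <= 1971)
post = (years >= 1972) & (years <= 1974)
pct_change = 100 * (rate[:, post].mean(axis=1) / rate[:, pre].mean(axis=1) - 1)

share_up_moratorium = (pct_change[moratorium] > 0).mean()
share_up_comparison = (pct_change[~moratorium] > 0).mean()
diff_in_mean_change = pct_change[moratorium].mean() - pct_change[~moratorium].mean()
```

Nearly every moratorium state shows an increase, which a pre-post tabulation alone might read as evidence of deterrence. Yet the comparison states rise by almost exactly the same amount.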

Finally, the authors estimate panel data regression models, weighted by state population, in which the murder rate is specified as a function of the number of executions (both contemporaneous and once lagged), an indicator variable that captures whether a state has a death penalty regime, and a host of other covariates.Footnote 7 These panel regression estimates suffer from a number of methodological problems that again limit the strength of this evidence. First, the authors intimate that their panel data regressions adjust for year fixed effects, yet in replicating their results Donohue and Wolfers discovered that the authors have instead included decade fixed effects. The result is that national-level murder shocks within the 1970s, the decade in which the national murder rate peaked, are uncontrolled in the regression. Second, there are concerns regarding whether the standard errors in their panel data regression are correctly estimated. The authors note adjusting for possible “clustering effects” but do not indicate the manner in which they cluster the standard errors. Donohue and Wolfers find that clustering on the state to address correlation over time within state yields standard errors that are nearly three times the size of those reported in an earlier working paper draft by Dezhbakhsh et al. (2003).Footnote 8

A final issue concerns the manner in which the authors specify the expected cost function discussed in the previous section. The authors enter the count of executions on the right hand side of the regression model. This is certainly a poor choice if the intention is to gauge the relative frequency with which convicted murderers are put to death in a specific state. One possible justification for such a specification would be if executions have a “demonstration effect” whereby the execution or news coverage of it temporarily deters homicidal activity. This justification further requires that the temporary deterrence is not offset by a later increase and that the timing of executions within the year does not confound the estimates using an annual unit of analysis. An implication of this, however, is that a single execution in a very large state would deter more murders than an execution in a small state, because one execution shifts the per-capita murder rate by the same amount in every state. Functionally, such a specification would also require that potential offenders in large states draw the same inference that potential offenders in small states would draw from a single execution regarding the likelihood that they will be punished similarly. This seems like a particularly restrictive functional form to impose absent a strong theoretical reason for doing so.Footnote 9 Moreover, experimentation with various normalizations of the number of executions in Donohue and Wolfers leads to drastically different results. The specification of the cost function in this paper is also clearly incomplete. It does not include any information on state-year changes in other, much more frequent, sanctions for murder, such as the likelihood of life without parole or the average length of prison sentences. This incomplete and restrictive specification of the cost function raises strong concerns about identification and related model uncertainty. Taken together, we are of the opinion that these concerns limit what one can infer from the results of this particular analysis.

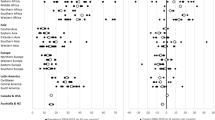

Given the apparent sensitivity of the results to small changes in specification, we performed further exploration of the underlying data used in this paper with an eye towards identifying the source of this model specification sensitivity. In particular, we explored whether there are issues related to the distribution of the data underlying the model that could explain the swings in coefficients of interest under changes in specification. Figure 1 presents a concise summary of this analysis. The figure presents a partial regression plot of residual annual state murder rates against residual annual number of state executions after accounting for state fixed effects, year fixed effects, and a commonly-employed set of control variables. As can be seen, a large degree of variation in annual state homicide rates (the variable displayed on the vertical axis) remains after these adjustments, although more than 80% of the variation in this outcome can be attributed to state fixed effects. However, there is little remaining variation in executions, with almost all data points clustered closely around zero on the horizontal axis. The coefficient estimated by Dezhbakhsh and Shepherd (2006) is, roughly speaking, the simple linear regression coefficient between these two sets of residuals.
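The partial regression plot rests on the Frisch-Waugh-Lovell theorem. The coefficient on executions in a regression that also includes state dummies, year dummies, and controls equals the slope from regressing the residualized murder rate on residualized executions. The sketch below checks this numerically on synthetic, unweighted data; the variable names and data-generating values are assumptions. The original analysis used population weights, which is one reason the correspondence in the text is described as rough.

```python
import numpy as np

rng = np.random.default_rng(4)
n_states, n_years = 50, 20
g = np.repeat(np.arange(n_states), n_years)
t = np.tile(np.arange(n_years), n_states)
n = g.size

# Hypothetical synthetic panel (unweighted).
control = rng.normal(size=n)
execs = rng.poisson(0.3, size=n).astype(float)
murder = rng.normal(size=n_states)[g] + 0.5 * control - 0.1 * execs + rng.normal(size=n)

# Nuisance regressors: all state dummies, year dummies (first year dropped), one control.
D = np.column_stack([np.eye(n_states)[g], np.eye(n_years)[t][:, 1:], control])


def residualize(v, Z):
    """Residuals from an OLS regression of v on the columns of Z."""
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef


res_murder = residualize(murder, D)   # vertical axis of a partial regression plot
res_execs = residualize(execs, D)     # horizontal axis
fwl_slope = (res_execs @ res_murder) / (res_execs @ res_execs)

# Same coefficient from the full regression with executions plus all nuisance terms.
full_coef, *_ = np.linalg.lstsq(np.column_stack([execs, D]), murder, rcond=None)
```

Because the two numbers agree, whatever drives the slope in the residual scatter also drives the coefficient reported in the full regression.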

Figure 1 demonstrates that this coefficient estimate is heavily influenced by the rare data points that fall outside this data cloud. In fact, a simple lowess fit (dashed line) suggests no relationship in the bulk of the data and a decreasing trend in the sparse regions outside the data cloud. That trend is determined entirely by the handful of points designated by triangles. These data points are all observations from Texas, with the particularly influential data point in the lower right being Texas in 1997. With the removal of this single data point, the slope remains negative, albeit with much reduced statistical significance (when, in parallel to the original analysis, no adjustment is made to the standard errors). The removal of all Texas data points yields a slope that is very close to zero and clearly non-significant.
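A leave-one-out diagnostic makes the influence of a few points explicit. The synthetic example below assumes a dense cloud of 3,000 residual pairs with no relationship, plus four hypothetical high-leverage points that lie along a negative line. This loosely mimics the pattern described for Fig. 1, but none of the numbers come from the actual data. The example reports the slope with and without those points and, as a DFBETA-style measure, the change in the slope from dropping each observation in turn.

```python
import numpy as np

rng = np.random.default_rng(5)
# Hypothetical residuals: a dense cloud with no relationship...
x_cloud = rng.normal(0, 0.3, size=3000)
y_cloud = rng.normal(0, 1.0, size=3000)
# ...plus four high-leverage points (loosely, one heavily executing state).
x_out = np.array([4.0, 6.0, 8.0, 20.0])
y_out = np.array([-2.0, -3.0, -4.0, -8.0])
x = np.concatenate([x_cloud, x_out])
y = np.concatenate([y_cloud, y_out])
is_outlier = np.concatenate([np.zeros(3000, dtype=bool), np.ones(4, dtype=bool)])


def ols_slope(x, y):
    """Simple-regression OLS slope with an intercept."""
    xc = x - x.mean()
    return (xc @ (y - y.mean())) / (xc @ xc)


def leave_one_out_changes(x, y):
    """Change in the OLS slope when each observation is dropped in turn."""
    full = ols_slope(x, y)
    keep = np.ones(x.size, dtype=bool)
    changes = np.empty(x.size)
    for i in range(x.size):
        keep[i] = False
        changes[i] = ols_slope(x[keep], y[keep]) - full
        keep[i] = True
    return changes


slope_all = ols_slope(x, y)
slope_cloud_only = ols_slope(x[~is_outlier], y[~is_outlier])
changes = leave_one_out_changes(x, y)
most_influential = int(np.argmax(np.abs(changes)))
```

The full-sample slope is clearly negative, while the slope within the cloud alone is near zero. The observation whose removal moves the slope most is one of the four outlying points.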

Thus, while the panel model suggests that the execution effect estimated is an average effect over many states and years, it is in fact entirely determined by a small handful of data points, all from one state. This carries two implications for the main findings in the paper under review. First, the estimated effect of executions is not an average effect but a Texas effect; it generalizes to other states only by assumption, not by empirical evidence. Second, this reanalysis raises additional concerns about identification in this case: were there other factors affecting homicide rates in Texas, particularly in 1997? It also carries implications more broadly for model sensitivity. With the vast majority of the data in a vertical cloud with zero slope, the coefficient of interest will be determined by whatever small set of data points falls outside of this cloud. Which data points have this characteristic is likely to vary across specifications, leading to wildly differing estimates. In contrast, any set of models that keeps the set of points outside the data cloud relatively fixed will appear to be robust. This exploration suggests that, by using a linear specification in which the explanatory variable of interest has little variation except for a handful of outliers, many of the models employed in this literature may be over-fitting the data.

The second oft-cited paper by this research team is that of Dezhbakhsh et al. (2003).Footnote 10 This study analyzes a county-level panel data set and provides a more careful specification of the expected cost of committing murder. Specifically, the authors assume that the expected cost function is an additive linear function of three components: the risk of arrest, the risk of receiving a death sentence conditional on arrest, and a measure described as the risk of being executed conditional on receiving a death sentence. The measure of execution risk is operationalized as the number of executions in the current year normalized by the number of death sentences 6 years prior. This specification is copied with some variation in several other papers in this literature. The key innovation of the paper is to estimate the model using two-stage least squares. State-level police payroll, judicial expenditures, Republican vote shares in presidential elections, and prison admissions are used as instruments for the three variables that capture the expected costs of committing murder. The parameter of primary interest is the effect of the state execution risk measure on county-level homicide rates.
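The construction of this execution risk measure can be sketched for a single state's annual series, together with the contemporaneous variant used by Zimmerman (2004) and discussed below. The function name, the example counts, and the choice to return NaN when the denominator is zero or unavailable are assumptions for illustration. The original papers handle such cells in their own ways.

```python
import numpy as np


def execution_risk(executions, death_sentences, lag=6):
    """Hypothetical helper: executions in year t divided by death sentences in year t - lag.

    lag=6 mirrors the Dezhbakhsh et al. (2003) definition; lag=0 gives the
    contemporaneous ratio used by Zimmerman (2004). Years without a lagged
    denominator, or with zero death sentences in that year, are returned as NaN.
    """
    executions = np.asarray(executions, dtype=float)
    death_sentences = np.asarray(death_sentences, dtype=float)
    risk = np.full(executions.size, np.nan)
    for t in range(lag, executions.size):
        denom = death_sentences[t - lag]
        if denom > 0:
            risk[t] = executions[t] / denom
    return risk


# Illustrative (made-up) annual counts for one state over ten years.
executions = [0, 0, 0, 0, 0, 0, 1, 2, 0, 3]
death_sentences = [2, 4, 0, 5, 1, 3, 2, 1, 0, 4]
risk_lag6 = execution_risk(executions, death_sentences, lag=6)
risk_contemporaneous = execution_risk(executions, death_sentences, lag=0)
```

The contemporaneous ratio can exceed one; here it reaches 2.0 in the eighth year, with two executions against one new death sentence. This underscores that these measures are not probabilities in any literal sense.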

While there are a number of issues regarding the manner in which the authors characterize the execution risk (a factor that we discussed at length in “Model Specification”), there are several fundamental problems with this analysis that make further discussion of that issue unnecessary. First, some researchers have raised concerns about the quality of county-level crime data from the FBI’s Uniform Crime Reports. In particular, Maltz and Targonski (2002) find that these data have major gaps and rely on imputation algorithms that are inadequate, inconsistent, and prone to either double counting or undercounting of crimes depending upon the jurisdiction.Footnote 11

Second, the dependent variable in this paper is measured at the county level while the key explanatory variable (execution risk) varies by state-year. At first blush, this might appear to be an advantage, with over 3,000 counties in the United States and only 50 cross-sectional units in the typical state-level panel. However, given the unit of variation of the key explanatory variable, the authors have fewer effective degrees of freedom than the number of counties multiplied by the number of years would suggest. The authors make no attempt to adjust their standard errors to reflect the higher level of aggregation of their death penalty variables, despite the well-known result that the OLS standard errors are, in this case, biased downward (Moulton 1990).
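Moulton's approximation, in the form given by Angrist and Pischke (2009), gauges the size of this downward bias. The ratio of the true sampling variance of an OLS slope to the conventional estimate is approximately 1 + [Var(n_g)/mean(n_g) + mean(n_g) - 1] * rho_x * rho_e. Here n_g is the number of observations in group g, rho_x is the within-group correlation of the regressor (equal to one when it varies only at the state level), and rho_e is the within-group correlation of the errors. The sketch below evaluates the formula; the 62 counties per state and the error correlation of 0.05 are hypothetical values chosen only for illustration.

```python
import numpy as np


def moulton_factor(group_sizes, rho_x, rho_e):
    """Approximate ratio of true to conventional OLS variance for a slope:
        1 + (Var(n_g) / mean(n_g) + mean(n_g) - 1) * rho_x * rho_e
    group_sizes: observations per group (e.g., counties per state)
    rho_x: within-group correlation of the regressor (1 if constant within group)
    rho_e: within-group correlation of the error term
    """
    n_g = np.asarray(group_sizes, dtype=float)
    n_bar = n_g.mean()
    return 1.0 + (n_g.var() / n_bar + n_bar - 1.0) * rho_x * rho_e


# Hypothetical: 50 states with 62 counties each, a state-level regressor (rho_x = 1),
# and a modest within-state error correlation.
factor = moulton_factor([62] * 50, rho_x=1.0, rho_e=0.05)
se_inflation = np.sqrt(factor)   # multiply conventional standard errors by this
```

Under these assumptions, even a small error correlation of 0.05 implies that conventional standard errors should be roughly doubled.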

Finally, a more fundamental problem concerns their choice of instruments used to identify the effect of execution risk on murder rates. These instruments are police expenditures, judicial expenditures, Republican vote share, and prison admissions.Footnote 12 For these instruments to be valid, they must affect murder rates only indirectly, that is, only through their effects on the mean risk of being executed. Certainly, policing, judicial expenditures, and prison admissions (either directly or through their correlation with lagged values of prison admissions) may affect murder rates through many alternative channels (incapacitation of the criminally active, deterrence, greater social order, a smoother functioning court system, etc.). Prison admissions, through incapacitating criminally active people, will affect crime directly beyond the indirect effects operating through murder arrest rates, conviction rates, and execution rates.

The multitude of alternative paths through which the instruments may be affecting homicide, and crime in general, renders the results of this analysis highly suspect. As this entire analysis rests on the validity of the instrumental variables, again we find this analysis to be thoroughly unconvincing.Footnote 13 Donohue and Wolfers (2005) suggest a falsification test to check the validity of the exclusion restriction underlying the analysis of Dezhbakhsh, Rubin, and Shepherd. Specifically, they restrict the sample to include only those states that did not have an operational death penalty statute. They re-specify the models employed by Dezhbakhsh, Rubin, and Shepherd to estimate “the effect of exogenously executing prisoners in states that have no death penalty” and find significant deterrence effects in five of six specifications. As the authors note, the most obvious interpretation of these data is that the instruments have a direct effect on the homicide rate independent of their effect through changes in the number of executions.
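A simulated just-identified IV model illustrates the logic of the exclusion restriction and of the Donohue and Wolfers falsification test. The sketch assumes a hypothetical instrument that shifts execution risk but also lowers murder directly, and it sets the true effect of execution risk to zero. Under these assumptions, 2SLS converges not to zero but to the direct effect divided by the first-stage coefficient. An apparently significant deterrent effect can therefore arise from the instrument alone.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 5000

# Hypothetical data-generating process (not estimated from any data set).
z = rng.normal(size=n)                        # instrument, e.g. a spending measure
u = rng.normal(size=n)                        # unobserved confounder
execution_risk = 0.5 * z + 0.5 * u + rng.normal(size=n)
true_effect = 0.0                             # execution risk has no effect on murder
direct_effect = -0.3                          # exclusion restriction violated
murder = true_effect * execution_risk + direct_effect * z + u + rng.normal(size=n)


def iv_slope(y, x, z):
    """Just-identified IV (2SLS) slope with an intercept: Cov(z, y) / Cov(z, x)."""
    zc = z - z.mean()
    return (zc @ (y - y.mean())) / (zc @ (x - x.mean()))


beta_iv = iv_slope(murder, execution_risk, z)
plim_iv = true_effect + direct_effect / 0.5   # direct effect / first-stage coefficient = -0.6
```

The IV estimate settles near -0.6 even though execution risk has no effect in this simulated world.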

Zimmerman

Paul R. Zimmerman has authored three articles that reach mixed conclusions regarding the deterrent effect of capital punishment. The first two articles are research papers on different but related questions surrounding capital punishment while the third article was written in response to the critique by Donohue and Wolfers (2005) of the earlier research papers. In this section, we discuss the first two papers.

Zimmerman (2004) estimates state panel data regressions covering the period 1977–1998 with a specification of the expected cost function that is somewhat similar to that in Dezhbakhsh et al. (2003). Specifically, Zimmerman models state murder rates as a function of three variables: the murder arrest rate (murder arrests divided by total murders), the likelihood of receiving a death sentence conditional on being arrested for murder (death sentences divided by murder arrests), and a measure described as the probability of execution conditional on conviction. A departure from Dezhbakhsh et al. (2003) is that instead of normalizing executions by death sentences lagged 6 years, Zimmerman defines the execution rate as the number of contemporaneous executions divided by the number of contemporaneous death sentences. Zimmerman then estimates model specifications with the three cost-function variables measured in the same time period as the murder rate and models where the three cost-function variables are lagged one period. The models employ a fairly standard set of socio-demographic and crime-related, time-varying covariatesFootnote 14 and are weighted by state population.

The author presents two sets of results: estimation results employing ordinary least squares and 2SLS results specifying instruments for each of the three key explanatory variables. With three endogenous variables, the author needs at least three instruments, and specifies a set of eight, resulting in an overidentified model. The instruments include (1) the proportion of a state’s murders in which the assailant and victim are strangers, measured contemporaneously and once-lagged, (2) the proportion of a state’s murders that are non-felony, measured contemporaneously and once-lagged, (3) the proportion of murders by non-white offenders, measured contemporaneously and once-lagged, (4) a one-period lag of an indicator variable that captures whether there were any releases from death row due to a vacated sentence, and (5) a one-period lag of an indicator variable that captures whether there was a botched execution.

According to the text of this article and the written specifications of the first-stage regressions, the proportion of murders that are committed by strangers and the lag of that variable are used as an instrument for murder arrest only. The variables measuring the proportion non-felony and the proportion committed by non-white offenders are used as instruments for death sentences per murder arrest, while the lagged indicator variables for releases from death row and botched executions are used as instruments for the execution rate. However, the printed equations indicate that the murder rate (the dependent variable) is included as an explanatory variable in each of the first-stage models. We presumed this to be a typographical error and decided to seek additional information from the underlying computer code to see the exact specification of the first stage.Footnote 15 Indeed the dependent variable was not entered on the right hand side of the first-stage regressions. However, in contrast to the text, all eight instrumental variables are included in each first-stage model.

Both the OLS and 2SLS regressions include complete sets of state and year fixed effects as well as state-specific linear time trends. No effort is made to adjust the standard errors for possible serial correlation of the error term within state, although in a follow-up paper that we will discuss later, Zimmerman (2009) re-estimates these models with parametric adjustments for auto-correlation.

The OLS regression yields no evidence of an impact of the execution risk on murder rates though the murder arrest rate is consistently statistically significant with a sizeable negative coefficient. The 2SLS results yield statistically significant and much larger negative effects of the murder arrest rate and a significant negative coefficient on the execution risk variable. Zimmerman also experiments with a log–log specification for the first stage model and reports finding no evidence of a significant effect of the execution risk or arrest risk variables using this specification.

The 2SLS results supportive of a deterrent effect suffer from many of the same problems that have been raised in response to the work of Dezhbakhsh et al. (2003). We identify five such problems and then discuss each in turn. First, the key execution risk variable is constructed in a manner that is difficult to interpret. It does not clearly relate to the actual risk of execution for an individual convicted at a given point in time or to the expected amount of time until execution. We note, however, although the author does not, that because little is known about how potential murderers perceive sanction risk, risk estimates based upon recent information on death sentences may be just as plausible as the other specifications presented. Second, a key coefficient of interest in one of the first stage regressions, the effect of a botched execution on execution rates in the following year, is of the opposite sign to what Zimmerman hypothesizes it should be, yet this is reported nowhere in the published paper. Third, the first stage relationship between the endogenous regressors and the instruments is very weak and does not pass standard econometric tests of instrument relevance. This relationship was reported in a prior working paper version but, contrary to current standard practice, not in the final version of the paper. Fourth, mechanical relationships between each of the types of murder (felony versus non-felony, murders committed by white versus non-white offenders) potentially threaten the validity of the instruments in these models. Finally, the significance of the 2SLS results is not robust to standard adjustments for auto-correlated disturbances.

Beginning with the key explanatory variable, it is not clear how a change in the ratio of executions to new death sentences would affect the expected cost of committing capital murder. One could multiply this ratio by the inverse of the proportion of the state population on death row. The resulting quantity could then be interpreted as the ratio of the transition probability off death row through execution to the transition probability onto death row from the general population. However, even this ratio would not have a meaningful interpretation. Absent a clear articulation of the theoretical reasoning that would justify measuring the execution risk in this manner, it is difficult to interpret what the 2SLS result is telling us.

Regarding the instruments, an inspection of the actual first stage results reveals several patterns that would lead one to question the exclusion restrictions that the author is imposing. In the first-stage model for the execution risk variable, the instrument with the largest t statistic (over 3.5 in both 2SLS models estimated in the paper) is the lagged variable indicating a botched execution. The lag of the variable indicating commutations or vacated death sentences is never significant. The author states that the botched execution indicator is one of the key variables identifying execution risk, and argues that a botched execution last period should reduce future execution risk either through judicial action or reluctance on the part of key policy makers to risk another botched execution. To the contrary, the variable measuring botched execution exerts a significant positive effect on the execution risk. Given that the observed effect is opposite to the author’s initial hypothesis, the underlying quasi-experiment driving the key finding in this paper is certainly not what the author suggests.

A related problem is that of instrument relevance. As Zimmerman notes, in order for the instruments employed to be valid they must be both excludable from the structural equation and relevant. In this context, relevance refers to the explanatory power of the instruments in the first stage regression. The F-statistics on the excluded instruments reported in an earlier version of the paper were very small (never greater than 5). With three endogenous regressors and eight excluded instruments, the corresponding Stock-Yogo critical value for this F test is 15.18 (Stock and Yogo 2005). Thus, the instruments employed in this analysis should be viewed as weak. In 2SLS estimation, two issues arise in the presence of weak instruments. First, in the event that the instruments are even weakly correlated with the errors in the structural equation, 2SLS estimators perform especially poorly since the bias of 2SLS is scaled by the inverse of the explanatory power of the instrument in the first stage regression (Bound et al. 1995).Footnote 16 Second, even if the exclusion restriction is satisfied, weak instruments typically lead to over-rejection of hypothesis tests in the second stage regression.
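Instrument relevance is usually checked with the F statistic for the excluded instruments in the first stage. The sketch below computes the conventional (homoskedastic) version for a single endogenous regressor on synthetic data with eight hypothetical instruments. With several endogenous regressors, as in Zimmerman (2004), the Stock-Yogo comparison is instead based on the Cragg-Donald statistic, which this sketch does not implement. Cluster-robust versions would also differ.

```python
import numpy as np


def first_stage_f(x_endog, Z_excluded, W_exog):
    """Homoskedastic F statistic for the joint significance of the excluded
    instruments in the first-stage regression of one endogenous regressor."""
    n = x_endog.size

    def rss(X):
        coef, *_ = np.linalg.lstsq(X, x_endog, rcond=None)
        r = x_endog - X @ coef
        return r @ r

    X_full = np.column_stack([W_exog, Z_excluded])
    q = Z_excluded.shape[1]                      # number of excluded instruments
    k = X_full.shape[1]                          # regressors in the unrestricted model
    rss_restricted, rss_unrestricted = rss(W_exog), rss(X_full)
    return ((rss_restricted - rss_unrestricted) / q) / (rss_unrestricted / (n - k))


rng = np.random.default_rng(7)
n = 1000
W = np.ones((n, 1))                  # included exogenous regressors: intercept only
Z = rng.normal(size=(n, 8))          # eight hypothetical excluded instruments
x_weak = 0.05 * Z[:, 0] + rng.normal(size=n)
x_strong = 0.5 * Z[:, 0] + rng.normal(size=n)
f_weak = first_stage_f(x_weak, Z, W)
f_strong = first_stage_f(x_strong, Z, W)
```

The weak design produces an F statistic far below the rule-of-thumb value of 10 often used for a single endogenous regressor, while the strong design clears it easily.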

A third problem with the 2SLS models in Zimmerman (2004) involves the validity of the remainder of the instruments. The remainder of the instruments can be described as the proportion or share of murders of different types: those committed by strangers, those committed by non-whites, and non-felony murders. There are two concerns with this set of instruments. The first is that if some of these categories of murder are more variable than others, then the share of murders of that type will be directly correlated with the total homicide rate. For instance, if the rate of murders (to population) committed by strangers and non-whites is fairly stable but the non-felony murder rate varies considerably over time, then variations in the homicide rate will largely be due to variations in the non-felony murder rate and increases in the share of murders that are non-felony will be directly correlated with increases in the murder rate. The second concern is that variation in the sanction risk probabilities due to shifts in the share of each of these types of murder doesn’t affect an individual potential offender’s risk of sanctions. An individual potential offender either is a stranger to their potential victim or not, either is non-white or not, and either potentially commits a felony or nonfelony murder. The sanction risk associated with each of these states is not varying, just the proportion of murders of these types, and thus not the sanction risk for an individual offender. Consequently, these variables only operate as successful instruments if potential offenders adjust their perception of sanction risks in response to the portion of variation in total sanction probability that is due to changes in the composition of murders but without knowing that this is the source of those variations. If the source of the variations is known to potential offenders then it should not change their perception of their sanction risk.
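The first concern, a mechanical link between a category's share of murders and the total murder rate, can be demonstrated directly. For simplicity, the sketch assumes two mutually exclusive categories rather than the overlapping categories used in the paper: a stable felony murder rate and a volatile non-felony murder rate, with illustrative parameter values. No sanction variable appears anywhere, yet the non-felony share is strongly correlated with the total murder rate.

```python
import numpy as np

rng = np.random.default_rng(8)
n_obs = 500

# Hypothetical rates per 100,000: one stable and one volatile category of murder.
felony_rate = 2.0 + rng.normal(0, 0.05, n_obs)
nonfelony_rate = np.clip(3.0 + rng.normal(0, 0.8, n_obs), 0.1, None)  # keep rates positive
total_rate = felony_rate + nonfelony_rate

nonfelony_share = nonfelony_rate / total_rate
share_total_corr = np.corrcoef(nonfelony_share, total_rate)[0, 1]
```

Used as an instrument, such a share would be correlated with the outcome by construction, which is the exclusion-restriction problem described above.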

A final concern raised by Donohue and Wolfers (2005) and readdressed in Zimmerman (2009) pertains to the robustness of the statistical inference. In his later analysis of the same data, Zimmerman (2009) tests for autocorrelation in the murder rate error terms within states and finds evidence, as one might expect, that the errors are not independent of one another. As we discussed above, auto-correlated errors in panel data models tend to bias standard error estimates tabulated under the assumption of homoskedastic, independent and identically distributed error terms towards zero. In replicating Zimmerman’s results, Donohue and Wolfers (2005) find that clustering the standard errors in the 2SLS model by state leads to an appreciable increase in standard errors and a resulting insignificant coefficient on the execution risk variable.Footnote 17

As with the prior sets of papers, we find the evidence for deterrence from this paper to be uninformative. Again, this is due in small part to model estimation issues such as adjustment of standard errors and in large part to substantial identification challenges arising from unconvincing instruments and problematic specification of capital punishment variables.

Zimmerman (2006) uses a framework similar to the OLS specification implemented in Zimmerman (2004) but replaces the execution risk variable with a vector of variables giving the risk of being executed by each execution method. These are specified with two lag structures: the numerator is the number of executions by a specific method carried out in the current year, and the denominator is the number of death sentences in either the current or the previous year. Further specifications vary the denominator, using all murders instead of death sentences, and fill in missing values of the deterrence variables as described in Zimmerman (2004) (values are missing when there are zero death sentences in the year used for the denominator of the execution risk variables). The once-lagged murder rate is included as a covariate to account for autocorrelation in the error term over time within state. The author reports that only executions by electrocution are associated with reductions in the homicide rate.
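A minimal sketch of how the method-specific risk variables described above could be computed for a single state-year. The function name and data layout are hypothetical. The `death_sentences` argument stands for death sentences in year t (concurrent version) or t − 1 (once-lagged version), and a zero denominator is returned as missing rather than filled in, since Zimmerman’s imputation is not reproduced here.

```python
from typing import Dict, Optional


def method_execution_risk(
    executions_by_method: Dict[str, int], death_sentences: int
) -> Dict[str, Optional[float]]:
    """Executions by each method in year t divided by death sentences in the
    denominator year (t for the concurrent version, t - 1 for the lagged one).

    Returns None for every method when the denominator is zero, i.e. the
    missing-value case; no imputation is performed here.
    """
    if death_sentences == 0:
        return {method: None for method in executions_by_method}
    return {
        method: count / death_sentences
        for method, count in executions_by_method.items()
    }


# Hypothetical state-year: 2 lethal injections, 1 electrocution, 4 death sentences.
print(method_execution_risk({"lethal_injection": 2, "electrocution": 1}, 4))
# {'lethal_injection': 0.5, 'electrocution': 0.25}
```

When a method is used only a handful of times in the whole panel, its risk variable is zero in nearly every state-year, which is the source of the identification concern discussed next.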

We note that, consistent with Zimmerman (2004), the most common method of execution, lethal injection, is not found to have a significant relationship with homicide under the same concurrent lag specification. The rarity of the other execution methods, including the one found to have a significant deterrent effect in these models, raises identification concerns beyond those given in our discussion of the more general model with the same specification, and would exacerbate the issues demonstrated in Fig. 1.

Mocan and Gittings

There are two papers by Mocan and Gittings (2003, 2010) that estimate a more extensive variant of the model specified in Dezhbakhsh et al. (2003) but without instruments. Specifically, the authors estimate state panel data regressions where the dependent variable is the state murder rate and the key independent variables are the murder arrest rates, the death penalty conviction rates, and measures described as execution risk and the risk of being removed from death row for reasons other than execution. There are several key departures from the papers that we have reviewed thus far. First, Mocan and Gittings cluster the standard errors by state in all models, addressing a key weakness that pervades much of the extant literature. Second, this team makes an effort to specify some other components of the expected cost function that may confound variation in the execution risk. Third, the authors add a more extensive set of time varying control variables to the specification.Footnote 18 Finally, the authors estimate associations not only of executions but also of commutations and removals from death row with homicide rates.

The specification of the key cost-function variables follows a somewhat complex schema. The murder arrest rate is measured as the ratio of the number of murder arrests to the total number of murders in a given year (with the ratio lagged one period in all specifications in order to minimize endogeneity bias). The ratio of prisoners per violent crime is also included and is meant to further control for the arrest and conviction risk. To capture changes in the expected cost of a murder that arise under a capital punishment regime, the authors employ three additional deterrence variables. First, the authors include a variable measuring the ratio of death sentences in year t − 1 to murder arrests in year t − 3. The 2-year lag is justified by the average observed empirical lag between arrest and sentencing in capital cases. Second, the authors include the ratio of the number of executions in year t − 1 to death sentences in year t − 7.Footnote 19 They justify this lag length on the grounds that the average time to execution among the executed is 6 years. Third, the authors include the ratio of the number of death sentence commutations in year t − 1 to death sentences in year t − 6. In some specifications, the authors substitute overall removals from death row through avenues other than execution for commutations in the numerator of this variable. The shorter time lag for the removal risk (relative to the definition of the execution risk variable) is justified by the shorter average time to removal relative to time to execution. As in the prior papers reviewed here, no measures of the risks associated with other, more common sanctions for murder are included.
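The lag structure just described can be summarized in code. This is a hypothetical sketch (the dictionary layout and the name `mocan_gittings_ratios` are ours), assuming annual counts for one state keyed by year; each ratio is returned as None when its denominator is zero, and the prisoners-per-violent-crime control is omitted.

```python
from typing import Dict, Optional


def mocan_gittings_ratios(
    counts: Dict[str, Dict[int, int]], t: int
) -> Dict[str, Optional[float]]:
    """Deterrence ratios for year t following the lag structure described above.

    counts maps a variable name to {year: count} for one state (hypothetical layout).
    """

    def ratio(num: int, den: int) -> Optional[float]:
        return None if den == 0 else num / den

    return {
        # Murder arrests / murders, both lagged one year.
        "arrest_rate": ratio(counts["arrests"][t - 1], counts["murders"][t - 1]),
        # Death sentences in t-1 over murder arrests in t-3 (2-year gap).
        "death_sentence_risk": ratio(counts["death_sentences"][t - 1], counts["arrests"][t - 3]),
        # Executions in t-1 over death sentences in t-7 (6-year gap).
        "execution_risk": ratio(counts["executions"][t - 1], counts["death_sentences"][t - 7]),
        # Commutations in t-1 over death sentences in t-6 (5-year gap).
        "commutation_risk": ratio(counts["commutations"][t - 1], counts["death_sentences"][t - 6]),
    }


# Hypothetical counts for one state, evaluated for t = 1990.
counts = {
    "murders": {1989: 100},
    "arrests": {1989: 70, 1987: 80},
    "death_sentences": {1989: 4, 1984: 10, 1983: 5},
    "executions": {1989: 1},
    "commutations": {1989: 2},
}
print(mocan_gittings_ratios(counts, 1990))
```

Note that each ratio combines counts from different years, so a single death sentence handed down in t − 7 enters the execution risk for t, while the arrest rate uses only t − 1 data.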

Similar to the earlier papers, the authors estimate panel data regressions by weighted least squares, weighting each observation by the state’s share of the U.S. population. The authors find significant negative associations between the execution risk variable and murder rates, and significant positive associations between the removal risk and murder rates. In summarizing the numerical magnitude of the results, the authors report that each additional execution is associated with five fewer homicides, that each additional commutation is associated with five more homicides, and that each additional removal from death row is associated with one additional homicide. In regression models that also include an indicator variable for the existence of a capital punishment statute, they find that a death penalty law has an independent negative effect on murder, above and beyond the effect of an execution.Footnote 20 Finally, as a robustness check, the authors demonstrate that the execution, commutation and removal rates are not associated with subsequent rates of robbery, burglary, assault and motor vehicle theft, which they interpret as evidence that these rates can be treated as exogenous in the homicide regressions.
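For readers less familiar with population-weighted fixed-effects regressions, the following sketch shows the mechanics under simplifying assumptions: a single hypothetical deterrence regressor, state and year effects entered as dummy variables, and weights applied by scaling each row by the square root of its population share. It is not the authors’ code, and the simulated panel is noise-free so that the true slope is recovered exactly.

```python
import numpy as np


def twfe_wls_slope(y, x, state, year, pop_share):
    """Slope on x from a WLS regression of y on x with state and year fixed effects.

    Fixed effects enter as dummies (first state and first year omitted); WLS is
    implemented by multiplying each row by the square root of its weight.
    """
    states, years = np.unique(state), np.unique(year)
    state_dummies = (state[:, None] == states[None, 1:]).astype(float)
    year_dummies = (year[:, None] == years[None, 1:]).astype(float)
    X = np.column_stack([np.ones(len(y)), x, state_dummies, year_dummies])
    w = np.sqrt(pop_share)
    coef, *_ = np.linalg.lstsq(X * w[:, None], y * w, rcond=None)
    return coef[1]


# Hypothetical noise-free panel: 5 states x 8 years, true slope -0.5.
rng = np.random.default_rng(1)
state = np.repeat(np.arange(5), 8)
year = np.tile(np.arange(1990, 1998), 5)
x = rng.normal(size=40)
y = 2.0 * state + 0.1 * (year - 1990) - 0.5 * x
pop_share = np.repeat(rng.dirichlet(np.ones(5)), 8)
print(round(twfe_wls_slope(y, x, state, year, pop_share), 6))
```

Weighting by population share means that a few large states dominate the fit, which matters when, as here, the key regressor varies in only a small number of state-years.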

Mocan and Gittings (2003) are consistently careful in their model estimation and present clear descriptions of their regression models. That said, the lack of any measures of the risk of other sanctions for murder is a glaring omission that threatens the identification of effects of the death penalty. In addition, in “Model Specification” we raised a number of issues with specifying the execution risk in this manner that generate further substantial concerns about the appropriate interpretation of the related coefficient, even if one were willing to assume that variation in this measure is exogenous. As Donohue and Wolfers (2005) note, perhaps the most obvious critique of the models employed by Mocan and Gittings concerns the complex temporal structure of the explanatory variables of interest: the probabilities of an execution, a commutation and a removal are estimated as ratios to death sentences issued 5 or 6 years earlier. The authors argue that these ratios are the most accurate measures of risk, state their belief that potential murderers will be not only rational in their decision making but also accurate in their risk perceptions, and hence propose that these measures are good proxies for the risk perceptions of potential murderers. The authors state that the choice of 6 years is made for two reasons: (1) because the average time from a death sentence until execution is reported by Bedau (1997) to be 6 years for prisoners who are actually executed and (2) in order to maintain consistency with prior research by Dezhbakhsh, Rubin and Shepherd.

In response, Donohue and Wolfers suggest that a better measure of the deterrence probability would use a 1-year lag, under the supposition of Zimmerman (2004) that offenders are likely to use the most recent information available to inform their behavior. Given the lack of information on the risk perceptions of potential murderers, we find no greater theoretical justification for a 1-year lag than for a 6-year lag, but we note that the results are sensitive to the lag employed. When the model presented in Mocan and Gittings (2003) is re-specified using 1-year lags, the coefficients on executions and removals become insignificant.Footnote 21

Mocan and Gittings (2010) provide a multi-faceted and detailed defense of their choice of lags, arguing that the once-lagged versions of the deterrence variables suggested by Zimmerman are uninterpretable, since individuals sentenced to death are almost never executed within 1 year. Among the robustness checks included in this follow-up paper, the authors normalize executions by death sentences lagged either 4 or 5 years and find that doing so does not alter their conclusions. We discuss their 2010 response in greater detail in “Responses to These Challenges”; here we focus on this particular set of robustness checks.

Given that the average time to execution for those receiving a death sentence is clearly closer to 6 years than to 1 year, there is some theoretical justification for employing a longer lag, although we again note that it is unknown how potential murderers construct their perceptions of sanction risks. However, the choice of a 6-year lag remains problematic for several reasons. First, as we have pointed out, the chosen lag length is based on a statistic calculated on a select sample of death row inmates, i.e., the roughly 15% who have actually been executed. This grossly underestimates the average time until death or release for those sentenced to death, given the large number of inmates who have sat on death row for a decade or more. Second, as noted earlier, the 6-year figure is an incorrect measure of the national average time until execution for those executed during the period studied. According to the figures presented in Snell (2010), the raw (unweighted) average of the annual mean times to execution for executions occurring between 1984 and 2009 is 10.06 years. Calculating this average using the number of executions in each year as weights yields the higher value of 10.86 years. The comparable figures for the sub-period 1984–1997 (corresponding roughly to the period analyzed by Mocan and Gittings) are 8.6 and 9.4 years, respectively. We replicated the results of Mocan and Gittings using 7-, 8-, 9- and 10-year lags of the sentencing variable to normalize state execution totals. With a 7-year lag, the coefficient on executions remains negative and significant (P < 0.02). However, with 8-, 9- and 10-year lags, the coefficient is not significantly different from zero.Footnote 22 Having raised this concern over the lag length, both theoretically and empirically, as well as the incomplete specification of the cost function more generally, we defer further discussion of the degree to which the Mocan and Gittings results are informative to the critiques, and the responses to those critiques, that follow.
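The gap between the raw and weighted averages reported above is simple arithmetic. The sketch below uses invented numbers (not Snell’s figures) and a hypothetical function name to show why weighting each year’s mean time to execution by that year’s number of executions raises the average when years with more executions also have longer waits.

```python
from typing import Sequence, Tuple


def raw_and_weighted_mean(
    annual_means: Sequence[float], executions: Sequence[int]
) -> Tuple[float, float]:
    """Unweighted mean of annual mean times, and the execution-weighted mean."""
    raw = sum(annual_means) / len(annual_means)
    weighted = sum(m * n for m, n in zip(annual_means, executions)) / sum(executions)
    return raw, weighted


# Hypothetical: mean years to execution rise over time, as do execution counts.
print(raw_and_weighted_mean([6.0, 8.0, 10.0, 12.0], [1, 2, 3, 4]))
# (9.0, 10.0)
```

The weighted figure is the average wait of the typical executed inmate, which is the relevant quantity if the lag is meant to match the sentences that produce a given year’s executions.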

Katz, Levitt, and Shustorovich

In an analysis of state-level panel data for the period 1950–1990, Katz et al. (2003) estimate the impacts of overall prison death rates and deaths by execution on murder rates, violent crime rates, and property crime rates. The authors argue from the outset that it is difficult to believe that the additional risk through execution during the post-1977 period is sufficient to have a measurable deterrent effect on a group of generally highly present-oriented people. They base this argument on the relative rarity of executions, the small fraction of convicted murderers who are punished by execution, and the relatively high mortality rate from other causes faced by those who are perhaps the most likely to commit criminal homicide in the United States.

Their principal model estimates involve fixed-effects panel regressions of crime rates on inmate deaths from all causes normalized by the state prison population and on executions, also normalized by the prison population, the latter being a key difference in specification relative to the papers we have reviewed thus far. The models include state and year fixed effects. All models are estimated by weighted least squares with state population shares as weights, and the authors report standard errors clustered at the state-decade level.
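The two specification choices that distinguish this paper, the prison-population denominator and the state-decade clustering unit, can be sketched as follows. The function names and counts are hypothetical, and the rates are left as plain per-prisoner ratios because the scaling used by the authors is not stated here.

```python
from typing import Tuple


def katz_style_rates(
    prison_deaths: int, executions: int, prison_population: int
) -> Tuple[float, float]:
    """Prison death rate and execution rate, both normalized by the prison population."""
    return prison_deaths / prison_population, executions / prison_population


def state_decade_cluster(state: str, year: int) -> str:
    """Cluster label grouping all years of a state within the same decade."""
    return f"{state}-{year // 10 * 10}s"


# Hypothetical state-year: 30 inmate deaths (all causes), 2 executions, 20,000 prisoners.
print(katz_style_rates(30, 2, 20000))
print(state_decade_cluster("TX", 1987))  # TX-1980s
```

Because the denominator is the whole prison population rather than the death row population or death sentences, the execution rate here is an order of magnitude smaller and moves with incarceration trends, which is part of the criticism discussed below.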

The authors find fairly stable parameter estimates for the prison death rate’s association with homicide but unstable estimates, sometimes positive and sometimes negative, of the execution rate’s association with homicide. The evidence with regard to violent crime, however, yields more consistent results: the effect of prison death rates is negative and statistically significant in most models, while the execution rate remains unstable across specifications. Similar findings are observed for property crime. Finally, the authors explore models that allow for several lags of the prison death rate and the prison execution rate; these results parallel the findings from the models without additional lags.

Donohue and Wolfers (2005) reproduce the original results in Katz, Levitt and Shustorovich and provide additional point estimates with the data extended to cover 1950 to 2000, as well as with an even larger data set covering the period from 1934 to 2000. Their reproduction of the original model specifications similarly shows execution rate effects that are highly unstable across specifications (with significant negative effects of the execution rate in three of the eight model specifications estimated and insignificant effects in the remainder). When the data are extended through 2000, none of the execution effects are significant, while model estimates for the longer period from 1934 to 2000 yield several coefficients indicative of a significant positive effect of the execution rate on murder rates.

The specification of the execution risk in this paper has been criticized by Mocan and Gittings (2010) as not accurately reflecting the risk of execution for an individual who is considering committing murder. Certainly, as the overwhelming majority of inmates in prison are not on death row, an execution risk normalized by the overall prison population does not provide a gauge of the execution risk faced by the average prison inmate. Again, we note that it is unknown how potential murderers perceive execution risks. We defer a more complete discussion of alternative normalizations until later in this paper.

Challenges to Initial Studies