Abstract

Smiles are universal but nuanced facial expressions that are most frequently used in face-to-face communications, typically indicating amusement but sometimes conveying negative emotions such as embarrassment and pain. Although previous studies have suggested that spatial and temporal properties could differ among these various types of smiles, no study has thoroughly analyzed these properties. This study aimed to clarify the spatiotemporal properties of smiles conveying amusement, embarrassment, and pain using a spontaneous facial behavior database. The results regarding spatial patterns revealed that pained smiles showed less eye constriction and more overall facial tension than amused smiles; no spatial differences were identified between embarrassed and amused smiles. Regarding temporal properties, embarrassed and pained smiles remained in a state of higher facial tension than amused smiles. Moreover, embarrassed smiles showed a more gradual change from tension states to the smile state than amused smiles, and pained smiles had lower probabilities of staying in or transitioning to the smile state compared to amused smiles. By comparing the spatiotemporal properties of these three smile types, this study revealed that the probability of transitioning between discrete states could help distinguish amused, embarrassed, and pained smiles.



Among all possible combinations of facial components, the smile is the most pervasive facial pattern in daily life, and many researchers interested in emotional communication have focused on this expression (e.g., Martin et al., 2017; Miles, 2009; Perusquía-Hernández et al., 2019). Although smiles tend to correspond to positive experiences such as amusement, joy, and happiness (e.g., Ekman, 2003; Reisenzein et al., 2013), they have been observed in a variety of contexts, including unpleasant situations. For example, people smile when experiencing embarrassment (Keltner, 1995), pain (Kunz et al., 2013), discomfort (LeResche et al., 1990), and distress (Ansfield, 2007). They also smile to facilitate social interactions (e.g., Kraut & Johnston, 1979), to mask negative emotions (Ekman et al., 1988), or to signal affiliation, dominance (Martin et al., 2017, 2021; Orlowska et al., 2018; Rychlowska et al., 2017), and trustworthiness (Krumhuber et al., 2007). In addition, people use smiles to achieve many ends in conversations (Bavelas & Chovil, 2018; Chovil, 1991).

Despite the variety of contexts in which smiles occur, the morphological (anatomical or spatial) differences among smiles associated with different internal states, including emotional ones, remain elusive. Ekman (1985) proposed that anatomical differences characterize several smiles (e.g., dampened and miserable smiles), but Bavelas and Chovil (2018) argued that the criteria applied were not convincing. Several researchers have noted that Ekman’s criteria do not seem to be based on data from actual emotional situations (for details, see Leys, 2017; Mandal & Awasthi, 2015), and the reliance of Ekman’s work on emotion labeling has been criticized (Barrett, 2017; Barrett et al., 2019). In the field of art, smiles with different morphological properties based on Ekman’s work have been described in terms of facial movement levels (Faigin, 2012; Mascaró et al., 2021), but empirical evidence is lacking. Given the lack of research on the spatial patterns of different smiles, it is important to obtain more facial data corresponding to different internal states.

What are the spatial properties that distinguish various smiles? The Duchenne marker is the most common spatial marker used to identify smiles driven by genuine positive feelings (Duchenne, 1862/1990; Ekman et al., 1990). This facial movement can be described as eye constriction, which creates crow’s feet around the eyes and slightly lowers the outer eyebrow (Ekman et al., 2002). However, many studies have shown that a smile with eye constriction, termed the Duchenne smile, does not necessarily correspond to positive emotional states (Crivelli et al., 2015; Krumhuber & Manstead, 2009; Namba et al., 2017b). In a recent and notable study, Girard et al. (2021) investigated several types of smiles (amusement, embarrassment, fear, and physical pain) and concluded that the view that eye constriction in smiles is associated only with positive experiences lacks support. In other words, even when people were not experiencing positive emotions (e.g., during physical pain), they showed Duchenne smiles. Thus far, it has been difficult to map specific internal states to facial patterns on an anatomical basis. Although we acknowledge that facial patterns are associated with emotional contexts in various ways (Fridlund, 1994, 2017; Scarantino, 2017), collecting data on commonly observed facial expression patterns that correlate with distinct emotions is important for a better understanding of facial expressions.

In addition to spatial patterns such as the Duchenne marker, the temporal properties of emotional expressions are also likely to be statistically correlated with internal states. For example, Namba et al. (2021) used cross-correlation analysis to show that genuine surprise differs from deliberate displays of surprise, and that deliberate displays also differ from one another in their temporal properties. Temporal indicators that can distinguish between spontaneous and posed smiles, such as duration (Schmidt et al., 2006), synergies (Perusquía-Hernández et al., 2021), and sequences (Namba et al., 2017b), have been reported. Recent studies have emphasized the importance of the dynamic aspects of facial expressions (Jack & Schyns, 2017; Krumhuber et al., 2013; Sato et al., 2019b), as dynamic facial expressions can capture more attention (Caudek et al., 2017), induce more mimicry behavior (Philip et al., 2018), and activate visual and social-related areas of the brain (Sato et al., 2015). Thus, when investigating the relationship between internal states and emotional expression, it is important to consider not only spatial but also temporal properties.

When studying the spatiotemporal properties of facial expressions, the ecological validity of the expressions is an important issue. With recent technological advances, research on dynamic facial expressions has employed a data-driven approach rooted in observers’ perceptual emotion categorizations (Jack et al., 2014; Jack & Schyns, 2017). However, whether the randomly generated facial expressions used in such a data-driven approach actually occur in daily life remains unresolved. In fact, Ambadar et al. (2009) indicated that the meanings of particular smile characteristics perceived by decoders did not necessarily agree with the messages intended by encoders. Many studies have also investigated acted or deliberate facial expressions of emotion to describe the correspondence between facial components and inner states or emotion perceptions (Carroll & Russell, 1997; Cordaro et al., 2018; Gosselin et al., 2010; Le Mau et al., 2021). Posed data (facial expressions made by actors) may include noise due to “expressive controls” (Kunzmann et al., 2005), and it is difficult to determine what constitutes a sincere expression. Therefore, this study focused on natural facial expressions, i.e., spontaneous responses to emotion-eliciting stimuli.

In summary, the spatial and temporal properties of the multiple types of smiles remain unclear. To clarify whether distinct spatiotemporal dynamics exist among different types of smiles, the present study used spontaneous facial expressions to ensure ecological validity. For this purpose, we used an expanded version of the BP4D+ database (termed EB+ below; Ertugrul et al., 2019a; Zhang et al., 2016). This database comprises spontaneous behaviors recorded in response to a series of emotion inductions by an experimenter. Specifically, participants engaged in tasks that evoked multiple emotions, such as amusement, embarrassment, and pain, and their facial responses were manually coded, frame by frame, using the Facial Action Coding System (FACS), which is the most objective and comprehensive system available for describing facial movements (Ekman et al., 2002). FACS can describe all facial movements as combinations of facial components termed action units (AUs). Each facial movement in a video clip was coded by two or more members of a team of five expert FACS coders. This database has generally been used to develop algorithms for automated AU detection (e.g., Ertugrul et al., 2020; Ertugrul et al., 2019b; Yang et al., 2019). To investigate the spatiotemporal features of different smiles, we analyzed annotation data indicating whether each AU occurred and applied a hidden Markov model, which can estimate transitions from one latent discrete state to another. Because such transitions between states reflect the spatiotemporal dynamics of facial expressions, we expected the transition matrix for each smile type to include features that distinguish it from the other types.

To our knowledge, this study constitutes the first exploration of the spatiotemporal properties of three types of smiles: amused, embarrassed, and pained. Although no specific hypothesis is proposed, some predictions can be made based on previous related studies. Several studies have reported that smiles in depressed or embarrassed states include more tense facial movements, such as the dimpler, lip corner depressor, and lip press (Girard et al., 2013; Keltner, 1995). Moreover, Girard et al. (2021) showed that Duchenne smiles were less frequent during pain. Prkachin (1992) suggested that the prototypical pain expression includes lowering of the brows, constriction of the eyes, and raising of the upper lip. Therefore, compared with amused smiles, embarrassed and pained smiles are expected to be more tense, and pained smiles are also expected to show activation of the prototypical pain-related facial muscles (Prkachin, 1992) instead of the Duchenne smile. Regarding temporal properties, the exploratory nature of the study did not permit directional hypotheses.

Method

Data

To clarify the spatiotemporal properties of different smiles, this study used a spontaneous facial behavior database, the EB+ (Ertugrul et al., 2019a; Zhang et al., 2016). Participants engaged in 14 emotion-elicitation tasks in a laboratory environment, and their facial reactions were recorded. Because the availability of facial data with sufficient manual FACS annotations was limited, we analyzed facial responses to three tasks: listening to an amusing joke (amusement condition), improvising a “silly” song (embarrassment condition), and submerging a hand in ice water (physical pain condition). The EB+ study was approved by the governing institutional review board, and all participants consented to having their data used in further research and their images published in scientific journals. The EB+ database does not include age information, but a previous study using the BP4D+ with 140 participants (Zhang et al., 2016) suggests that the age range was approximately 18–30 years (Girard et al., 2021).

The EB+ was manually annotated, frame by frame, by teams of highly qualified, certified FACS coders (Ertugrul et al., 2019a). Each AU was assigned one of three values: 0 indicated absence of the target AU, 1 indicated its presence, and 9 indicated missing data. Because the current study focused on the major components of facial behavior, the following 23 AUs were analyzed: 1, 2, 4, 5, 6, 7, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 22, 23, 24, 27, and 28 (Fig. 1). According to Zhang et al. (2016), the average reliability score (S) for AUs 1, 2, 4, 6, 7, 10, 11, 12, 14, 15, 16, 17, 20, and 23 was 0.79. All available manually coded data were used in the current study. The number of participants with data differed among conditions: amusement = 193 (113 females, 80 males), embarrassment = 186 (112 females, 74 males), physical pain = 194 (113 females, 81 males). These numbers differed because of unexpected errors (e.g., human error and data corruption) during the annotation phase. All of the following results are based on these data.

Fig. 1 Action units (AUs) analyzed in this study. Various facial configurations were created using FaceGen (Singular Inversions, Inc., Toronto, ON, CA) and FACSGen (Krumhuber et al., 2012), with the AUs set to maximum intensity. Two AUs were not included in these applications: AU19 (“tongue show”) and AU28 (“lip suck”).
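To make the 0/1/9 coding scheme concrete, the sketch below computes, for one condition, the proportion of frames in which each AU is present, the kind of summary used in the complementary AU12 comparison reported in the Results. The function name and array layout are hypothetical, and excluding frames coded 9 (rather than treating them as absent) is our assumption; the text does not state how missing codes were handled.

```python
import numpy as np

MISSING = 9  # EB+ code for missing data; 0 = absent, 1 = present

def au_presence_rate(codes):
    """Proportion of frames with each AU present, excluding missing frames.

    codes: array of shape (n_frames, n_aus) holding 0, 1, or 9.
    Returns one proportion per AU column (NaN if an AU is missing everywhere).
    Assumption: frames coded 9 are dropped per AU rather than treated as 0.
    """
    codes = np.asarray(codes)
    valid = codes != MISSING
    present = (codes == 1) & valid
    with np.errstate(invalid="ignore", divide="ignore"):
        return present.sum(axis=0) / valid.sum(axis=0)

# Toy example: 4 frames x 2 AUs (hypothetical values, not EB+ data).
toy = np.array([[1, 0],
                [1, 9],
                [0, 0],
                [9, 1]])
rates = au_presence_rate(toy)  # column 0: 2 of 3 valid frames; column 1: 1 of 3
```

Dropping missing frames per AU keeps the denominator honest when coders skipped only some AUs in a frame; treating 9 as 0 would instead bias presence rates downward.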

Statistical Analysis

Frame-by-frame AU codes constitute high-dimensional data (in our case, 23 dimensions). It is therefore helpful to apply a dimensionality reduction technique to obtain interpretable features in a low-dimensional space (e.g., Nguyen & Holmes, 2019). Indeed, dimensionality reduction algorithms based on nonnegative matrix factorization have been used successfully to describe dynamic facial expression patterns that reliably and distinctly correspond to synergies in facial movements (Delis et al., 2016). Perusquía-Hernández et al. (2021) characterized facial movement synergies as groups of AUs moving together, demonstrating the advantage of nonnegative matrix factorization as a tool for identifying discrete patterns in streams of smiles. To perform dimension reduction for binary data, we used Bayesian mean-parameterized nonnegative binary matrix factorization (NBMF; Lumbreras et al., 2020). This model can provide factors comparable to those of previous approaches, such as logistic principal component analysis (e.g., Landgraf & Lee, 2020), and the number of latent dimensions used (i.e., AU spatial patterns) can be determined automatically from the observed data. To reveal the spatial properties of different smiles, we estimated the number of AU patterns that reliably describe the observed facial dynamics using the Beta–Dirichlet model with a collapsed Gibbs sampler and the default settings (\(\alpha = \beta = \gamma = 1\), \(K = 10\), 1000 iterations). This NBMF analysis yielded the number and properties of the AU patterns that efficiently describe the observed dynamic facial movements.
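To illustrate the mean-parameterized model that NBMF fits, the sketch below factorizes a binary AU-by-frame matrix \(V \approx WH\), with \(W\) constrained to \([0, 1]\) and each column of \(H\) summing to 1, so that every entry of \(WH\) is a valid Bernoulli mean. It is a swapped-in, simpler technique: maximum-likelihood estimation with majorization-minimization (EM-style) updates for a fixed number of patterns \(K\), not the Bayesian Beta–Dirichlet model with collapsed Gibbs sampling used in this study, which also infers how many patterns are needed. The function name `nbmf_mm` and its defaults are hypothetical.

```python
import numpy as np

def nbmf_mm(V, K, n_iter=200, seed=0, eps=1e-10):
    """Maximum-likelihood mean-parameterized NBMF via MM (EM-style) updates.

    Model: V[f, n] ~ Bernoulli((W @ H)[f, n]), with
      V: (F, N) binary matrix (e.g., F AUs x N frames),
      W: (F, K), entries in [0, 1]      -> AU activation patterns,
      H: (K, N), each column sums to 1  -> per-frame pattern loadings.
    K is fixed by the caller (the Bayesian version infers it instead).
    Returns W, H, and the log-likelihood after every iteration.
    """
    rng = np.random.default_rng(seed)
    F, N = V.shape
    W = rng.uniform(0.1, 0.9, size=(F, K))
    H = rng.dirichlet(np.ones(K), size=N).T  # columns on the simplex

    def ratios(W, H):
        P = np.clip(W @ H, eps, 1 - eps)
        return V / P, (1 - V) / (1 - P)

    loglik = []
    for _ in range(n_iter):
        # H step: multiplicative update; in exact arithmetic columns still sum to 1.
        R1, R0 = ratios(W, H)
        H = H * (W.T @ R1 + (1 - W).T @ R0) / F
        H /= H.sum(axis=0, keepdims=True)  # guard against floating-point drift
        # W step: closed-form ratio that keeps every entry inside [0, 1].
        R1, R0 = ratios(W, H)
        A = W * (R1 @ H.T)
        B = (1 - W) * (R0 @ H.T)
        W = A / (A + B)
        P = np.clip(W @ H, eps, 1 - eps)
        loglik.append(np.sum(V * np.log(P) + (1 - V) * np.log(1 - P)))
    return W, H, np.array(loglik)
```

Each update maximizes a Jensen lower bound on the Bernoulli log-likelihood, so the returned trace should be non-decreasing; this makes it easy to check convergence before interpreting the columns of `W` as AU patterns.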

To compare the effects of the three conditions (amusement, embarrassment, and physical pain) on the AU patterns computed by NBMF, we further explored differences in spatial properties among the conditions using a hierarchical linear model that controlled for differences between expressers. In this model, the dependent variables are the loadings of the AU patterns. Given that previous studies have often emphasized the correspondence between smiles and positive states such as amusement, rather than embarrassment and pain (e.g., Reisenzein et al., 2013), the current study used the amusement condition as the reference category (intercept). To control for Type I errors, all p-values were adjusted for the number of conditions using the Bonferroni procedure (0.05/3 ≈ 0.0167).
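The sketch below illustrates two ingredients of this comparison under simplifying assumptions: treatment (dummy) coding, which makes the amusement condition the intercept so that each remaining coefficient is a contrast against amusement, and the Bonferroni adjustment for three comparisons. The random intercept per expresser that the hierarchical model adds (fitted in the study with lmerTest in R) is omitted here, and the function names are hypothetical.

```python
import numpy as np

CONDITIONS = ("amusement", "embarrassment", "pain")
ALPHA_PER_TEST = 0.05 / 3  # Bonferroni threshold for three comparisons, about 0.0167

def treatment_design(conditions, reference="amusement"):
    """Fixed-effects design matrix: intercept plus one dummy per non-reference level.

    Rows from the reference condition are [1, 0, 0], so the intercept estimates
    the reference mean and each dummy coefficient estimates a difference from it.
    """
    levels = [c for c in CONDITIONS if c != reference]
    dummies = [np.array([c == lvl for c in conditions], dtype=float) for lvl in levels]
    X = np.column_stack([np.ones(len(conditions))] + dummies)
    return X, ["(Intercept)"] + levels

def bonferroni(p_values, m=3):
    """Bonferroni-adjusted p-values for m comparisons, capped at 1."""
    return np.minimum(np.asarray(p_values, dtype=float) * m, 1.0)

X, names = treatment_design(["amusement", "pain", "embarrassment"])
```

Under this coding, an adjusted p-value below 0.05 is equivalent to a raw p-value below 0.05/3, which is why the threshold and the adjusted p-values reported in the Results express the same criterion.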

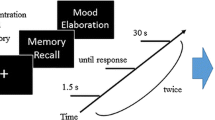

To investigate temporal properties, a hidden Markov model was applied to classify latent discrete states based on the NBMF values. Hidden Markov models infer latent discrete states from time-series data, and this approach has been used to describe the structure of dynamics in animal behavior (e.g., Anderson & Perona, 2014; Tao et al., 2019; Wiltschko et al., 2015). In general, hidden Markov models generate a sequence of latent states (Eddy, 2004); they can be used to calculate the transition probabilities for a person’s facial expression sequence (i.e., the Markov chain distribution), as shown in Fig. 2. We derived the underlying Markov chain distribution to describe the transitions from one discrete state to another (\({p}_{staying}\) in Fig. 2: the probability of staying in a given state; \({p}_{transition}\) in Fig. 2: the probabilities of transitioning to each of the other states). To clarify the probability of transitioning between states for the different types of smiles, we again used a hierarchical linear model that controlled for differences between expressers.
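The step from a decoded state sequence to \({p}_{staying}\) and \({p}_{transition}\) can be sketched as follows, assuming each expresser's frames have already been assigned one latent state (e.g., the most likely HMM state path). Transitions between consecutive frames are counted and each row is normalized, which gives the maximum-likelihood estimate of a first-order Markov chain; the diagonal holds \({p}_{staying}\) and the off-diagonal entries hold \({p}_{transition}\). The function name and toy sequence are hypothetical, states are 0-indexed (State 1 in the text is 0 here), and this is not the study's own code.

```python
import numpy as np

def transition_matrix(states, n_states):
    """Row-normalized first-order transition counts for one decoded sequence.

    states: 1-D sequence of integer labels in 0 .. n_states - 1, one per frame.
    Entry [i, j] estimates P(state at t+1 = j | state at t = i).
    Rows for states that are never left are NaN (no data to estimate them).
    """
    s = np.asarray(states, dtype=int)
    counts = np.zeros((n_states, n_states))
    np.add.at(counts, (s[:-1], s[1:]), 1)  # count every i -> j transition
    totals = counts.sum(axis=1, keepdims=True)
    with np.errstate(invalid="ignore", divide="ignore"):
        return counts / totals

# Hypothetical decoded sequence for one expresser (5 states, 0-indexed).
seq = [3, 3, 4, 4, 0, 0, 0, 4, 3]
P = transition_matrix(seq, 5)
p_staying = np.diag(P)  # probability of remaining in each state
```

Per-expresser matrices like `P` can then serve as dependent variables in a hierarchical model; NaN rows mark states an expresser never occupied and would need to be treated as missing rather than zero.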

All analyses except the hidden Markov model were performed using R statistical software (version 4.0.3; https://www.r-project.org/) with the “data.table” (Dowle & Srinivasan, 2021), “tidyverse” (Wickham et al., 2019), “rMMLEDirBer” (Lumbreras et al., 2020), “markovchain” (Spedicato, 2017), and “lmerTest” packages (Kuznetsova et al., 2017). The hidden Markov model was fitted using the Python module “hmmlearn” (https://hmmlearn.readthedocs.io/en/latest/index.html). The code used has been made available online as Supplementary Material (https://osf.io/2n7tv/?view_only=66ec64a61e1847e18b8f9e020a9f1a11).

Results

Spatial Properties

To perform dimension reduction of the binary data, we used Bayesian mean-parameterized NBMF (Lumbreras et al., 2020). The results revealed six AU patterns for amused, embarrassed, and pained smiles (Fig. 3, Supplemental Fig. 1). Analysis of the relative contribution of each AU showed that AU patterns 1, 3, 4, 5, and 6 exhibited AU6 (cheek raiser), AU7 (lid tightener), AU10 (upper lip raiser), and AU12 (lip corner puller). These AUs can produce the Duchenne smile, i.e., a smile with eye constriction. AU patterns 1, 4, 5, and 6 also showed AU11 (nasolabial deepener). While AU pattern 5 exhibited a tensed smile that included AU15 (lip corner depressor) and AU23 (lip tightener), AU patterns 1, 4, and 6 also exhibited AU16 (lower lip depressor), which can be considered to correspond to opening the mouth in this database. AU patterns 1, 4, and 6 had very similar AU combinations, but AU pattern 6 had a slightly smaller AU11, while AU pattern 4 had a larger horizontal movement (AU20: lip stretcher). Thus, the results revealed five variations of the Duchenne smile. Only AU pattern 2 exhibited a distinct expression, comprising AU4 (brow lowerer), AU7 (lid tightener), AU10 (upper lip raiser), AU14 (dimpler), AU15 (lip corner depressor), AU17 (chin raiser), AU23 (lip tightener), and AU24 (lip pressor). We defined this spatial pattern as facial tension, because all of these movements induce tension in the face. As a preliminary analysis, we examined gender differences in the AU patterns using a 2 (gender: female, male) × 3 (condition: amusement, embarrassment, and pain) mixed-design analysis of variance. Female participants tended to smile more than male participants did [for AU patterns 1 and 3–6: Fs > 3.85, ps < 0.05; AU pattern 2: F (1, 181) = 0.57, p = 0.45], consistent with previous studies (e.g., LaFrance et al., 2003; McDuff et al., 2017). However, there was no interaction between condition and gender (Fs < 2.32, ps > 0.10), so subsequent analyses are reported with data pooled across gender.

The average value of each AU pattern was calculated and analyzed by a hierarchical model, to assess differences among the conditions (Fig. 4). Hierarchical models with Bonferroni correction showed that all intercepts were significantly greater than zero (t > 46.29, p < 0.001). The amusement condition elicited expressions corresponding to all facial patterns among the six AU patterns. No difference between the amusement and embarrassment conditions was observed among the AU patterns (t < 1.39, p > 0.16). However, all AU patterns showed a significant difference between pain and amusement conditions. The pain condition was associated with significantly greater facial tension in AU pattern 2 (t = 6.78, p < 0.001); all other AU patterns showed significantly higher values in the amusement condition (t > 8.84, p < 0.001).

In summary, the pain condition was associated with a lower rate of Duchenne smiles and increased facial tension, whereas there was no spatial difference between the amusement and embarrassment conditions. As a complementary analysis, we compared the proportion of frames in which AU12 (lip corner puller) appeared and found similar results (amused: 78%; embarrassed: 78%; pained: 32%).

Temporal Properties

To assess the temporal properties of the different smiles, latent discrete states were estimated by fitting the hidden Markov model to the NBMF values for all data. To determine the number of latent states, we plotted the changes in the Bayesian information criterion (BIC) (Fig. 5). The number of latent states was set to five because the BIC differed little between four and six states, which can be interpreted as reflecting the evidence lower bound (ELBO).
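The BIC trades model fit against complexity, \(\mathrm{BIC} = -2\log\hat{L} + p\ln n\), where \(\hat{L}\) is the maximized likelihood, \(p\) the number of free parameters, and \(n\) the number of observations (frames). The parameter count depends on the emission model, which the text does not specify; the sketch below assumes Gaussian emissions with diagonal covariances over \(D\)-dimensional NBMF loadings, giving \(p = (K - 1) + K(K - 1) + 2KD\) for \(K\) states. The function names are hypothetical.

```python
import math

def hmm_n_params(n_states, n_dims):
    """Free parameters of an HMM with diagonal-covariance Gaussian emissions (assumed)."""
    k, d = n_states, n_dims
    start_probs = k - 1        # initial distribution sums to 1
    trans_probs = k * (k - 1)  # each row of the transition matrix sums to 1
    means = k * d              # one mean vector per state
    variances = k * d          # one diagonal variance vector per state
    return start_probs + trans_probs + means + variances

def hmm_bic(log_likelihood, n_states, n_dims, n_obs):
    """BIC = -2 log L + p ln(n); lower values indicate a better trade-off."""
    p = hmm_n_params(n_states, n_dims)
    return -2.0 * log_likelihood + p * math.log(n_obs)
```

In practice, one would fit the HMM for each candidate number of states, compute its BIC from the maximized log-likelihood and the total number of frames, and look for the point beyond which adding states no longer improves the criterion appreciably.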

Figure 6 shows each discrete state computed by the hidden Markov model. Visual inspection of Fig. 6 indicates that State 1 was characterized by three AU patterns (1, 4, and 6: all strong open-mouth Duchenne smiles) and can be interpreted as a strong smile state. State 2 was characterized by two AU patterns (5 and 2: tensed smile and facial tension, respectively) and may be regarded as a nervous smile (tensed smile state). State 3 was clearly dominated by AU pattern 2 and can be interpreted as facial tension (facial tension state). State 4 is characterized by little expression compared with the other states (relatively neutral state). State 5 is characterized by a weak smile (weak smile state).

Table 1 shows the mean values of the transition matrix for each condition, and Supplemental Fig. 2 visually compares the amused condition to the other two conditions. A hierarchical model with Bonferroni correction allowed the three conditions to be compared in terms of the probability of staying in a given state; the probabilities of transition to each state were also compared.

Probability of Staying in Each State

For all states, the probability of staying in that state was significantly greater than zero (intercept: t > 16.32, p < 0.001). For States 1, 2, 4, and 5, the probabilities of staying in the pain condition differed significantly from those in the amusement condition (t > 7.51, ps < 0.001), whereas there were no significant differences between the embarrassment and amusement conditions (t < 1.83, p > 0.20). More specifically, the probabilities of staying in States 1, 2, and 5 (related to smiles) were lower only in the pain condition, whereas the probability of staying in State 4 (relatively neutral expression) was higher in the pain condition. For State 3, the probabilities of staying in the embarrassment and pain conditions were significantly higher than that in the amusement condition (t > 2.66, ps < 0.03).

Taken together, these findings indicate that, in the amusement condition, staying in a smile-related state (States 1, 2, and 5) was likely, whereas in the pain condition there was a high probability of staying in a relatively neutral state (State 4) or the facial tension state (State 3). The embarrassment condition resembled the amusement condition in its tendency to stay in smile-related states and resembled the pain condition in its tendency to stay in the facial tension state (State 3).

Probability of Transition

Given that the analysis of transition probabilities produced many comparisons, we report only the main comparisons between conditions (amusement vs. embarrassment/pain) to avoid redundancy. Detailed statistical results can be found in Supplemental Table 1. The probability of transitioning from State 2 (tensed smile) to State 1 (strong smile) was reduced only under the pain condition (t = 4.42, p < 0.001). From State 3 (facial tension), the embarrassment condition had a significantly higher probability of transitioning to States 2 (tensed smile) and 5 (weak smile) than the amusement condition (t > 2.55, p < 0.04). Also, the probability of transitioning to State 4 (relatively neutral) was increased only under pain (t = 2.64, p = 0.03). The probability of transitioning from State 4 (relatively neutral) to State 5 (weak smile) was increased only by embarrassment (t = 2.65, p = 0.03). From State 5 (weak smile), the pain condition showed a low probability of transitioning to State 1 (t = 3.77, p < 0.001) and a high probability of transitioning to State 4 (t = 3.34, p < 0.003). No other transition probabilities differed significantly (t < 2.03, p > 0.12).

Compared with an amused smile, an embarrassed smile tended to transition from the facial tension and relatively neutral states to the weak smile state. The embarrassed smile also tended to change from facial tension to the tensed smile state. For the pained smile, the probability of transitioning from a tensed or weak smile state to the strong smile state was low, whereas a weak smile readily changed to a relatively neutral state.

Discussion

This study aimed to clarify the spatiotemporal properties of amused, embarrassed, and pained smiles via analysis of spontaneous facial behaviors. The results revealed the key spatiotemporal properties of the different smiles. First, NBMF identified six facial patterns. One of these was a combination of AUs that could be interpreted as facial tension: AU4 (brow lowerer), AU7 (lid tightener), AU10 (upper lip raiser), AU14 (dimpler), AU15 (lip corner depressor), AU17 (chin raiser), AU23 (lip tightener), and AU24 (lip pressor). The other five combinations comprised several types of Duchenne smiles, with various degrees of nasolabial deepener (AU11), dimpler (AU14), mouth stretching (AU20), and mouth opening (AU16). Second, while there was no spatial difference between amused and embarrassed smiles, the pained smile showed more tension than the amused one. Finally, a hidden Markov model revealed the probabilities of transitioning from one specific state to another. The embarrassed and pained smiles had high probabilities of remaining in a state of facial tension, and the pained smile had a low probability of staying in a state related to smiling. Furthermore, compared with the amused smile, the embarrassed smile was more inclined to transition from facial tension to the tensed smile state, and from the facial tension and relatively neutral states to the weak smile state. Compared with the amused smile, the pained smile was less likely to change from a tensed or weak smile state to the strong smile state and more likely to change from a weak smile state to a relatively neutral state.

NBMF identified, in a data-driven way, five smile patterns and one facial tension pattern. All five smile patterns exhibited the Duchenne marker (AU6) and eye constriction (AU7), consistent with previous studies (Crivelli et al., 2015; Girard et al., 2021; Krumhuber & Manstead, 2009; Namba et al., 2017b). The Duchenne marker is important for discerning meaning (Ambadar et al., 2009; Malek et al., 2019); however, our study provides further evidence that genuine positive emotional states and the Duchenne marker do not always correspond. In terms of facial tension, some of the facial action units observed in the pain condition were shared with the pain “prototype” (e.g., AU4, 7, and 10: Prkachin, 1992). However, the facial tension observed in this study and Prkachin’s prototypical pain expression do not fully agree, particularly for the lower part of the face: AU14, 15, 17, 23, and 24. Namba et al. (2017a) proposed that these lower facial actions might serve to suppress negative experiences in spontaneous facial behavior. Consistent with this notion, when a hand is submerged in ice water, facial tension associated with enduring the pain may well occur instead of the prototypical pain expression. Further research using an “elicitation-based approach” is needed to better understand spontaneous facial behavior (Zloteanu & Krumhuber, 2021).

Interestingly, there was no clear difference in spatial properties between amused and embarrassed smiles. This raises the possibility that the same smile was produced during embarrassment and amusement. Although recent research encoding aspects of facial expressions has relied on static facial images (e.g., Le Mau et al., 2021; Sato et al., 2019a), the present study suggests that it is challenging to identify differences among emotion categories based only on static morphological properties, particularly for smiles. With regard to morphological differences between amused and embarrassed behaviors, Keltner et al. (2019) emphasized the multimodality of nonverbal emotional behavior. Keltner (1995) indicated that gaze direction was an important marker of embarrassment. Therefore, it may be useful to include gaze direction and facial patterns in future studies of nonverbal expressions of emotions.

In contrast to static spatial properties, the temporal properties revealed by the hidden Markov model (i.e., the probabilities of transitioning between discrete states) differed depending on smile type. High probabilities of remaining in smile-related states (State 1: strong smile state; State 2: tensed smile state; State 5: weak smile state) were seen for both amused and embarrassed smiles, but not for the pained smile. As people often laugh under conditions of amusement and embarrassment, this result is not surprising. On the other hand, the probability of staying in State 3 (facial tension state) was similarly high for embarrassed and pained smiles, and higher for both of these smile types than for the amused smile. To our knowledge, this is the first study showing that amused smiles release facial tension relatively easily, whereas embarrassed and pained smiles do not. The inevitable pain of cold water may elicit persistent facial tension, whereas embarrassment may “stiffen” the face (e.g., when improvising a silly song). Analysis of the probability of remaining in a given state can thus help differentiate among the three smile types.

The analysis of transition probabilities between states revealed some interesting results. Compared with amused smiles, embarrassed smiles tended to transition from facial tension and relatively neutral states to weak and tensed smile states. Thus, embarrassed smiles tend to emerge from a tense state via a weak or slightly tensed smile, suggesting a more gradual change from tension to a smile state. This supports earlier evidence of dynamic differences between spontaneously occurring embarrassed and amused smiles. The duration of spontaneous expressions differs depending on the nature of the task. In addition, multiple “peaks” can coexist in spontaneous expressions (e.g., Ekman & Rosenberg, 2005; Komori & Onishi, 2021). By comparing the probabilities of transitioning between discrete states, this study provides a useful framework for analyzing the spatiotemporal dynamics of natural facial expressions.

For pained smiles, transitions from a tensed or weak smile state to the strong smile state were infrequent in this study, whereas transitions from a weak smile state to a relatively neutral state were common. This finding is consistent with the spatial properties of pained smiles. The difficulty of transitioning to a strong smile state when experiencing pain may depend on the reason for smiling. Amused smiles reflect a desire to convey a positive emotional state and to increase rapport with another person (Martin et al., 2017), whereas pained smiles may be expressed as a form of social appeasement (Singh & Manjaly, 2021) or facial feedback (which shows intraindividual differences) (Coles et al., 2019; Kraft & Pressman, 2012; Pressman et al., 2020). The current study corroborates evidence that differences in the communicative gestures elicited by a given emotion can result in idiosyncratic state transitions.

The current study provided insight into the mechanisms of spontaneous amused, embarrassed, and pained smiles. However, the study also had several limitations. First, the types of smiles considered only represent a small proportion of all smile types. People may smile to signal affiliation or dominance (Martin et al., 2017, 2021; Orlowska et al., 2018; Rychlowska et al., 2017), to indicate no pain (Wong & Baker, 2001), to convey the “syntax” of a conversation (Bavelas & Chovil, 2018), or to mask negative affect (Ekman & Friesen, 1982; Gunnery et al., 2013). Clarifying the spatiotemporal properties of these types of smiles is important to improve understanding of facial expressions. Second, it was not clear as to what emotions the expressers actually experienced as their spontaneous facial expressions unfolded, as this study used an elicitation-based approach. For example, singing a silly song may have induced not only embarrassment but also amusement. To address these issues, it is important to obtain first-person accounts of emotional experiences. Given that emotional experience itself can never be empirically measured (e.g., Crivelli & Fridlund, 2019), emotion researchers should find an alternative method of measuring emotion. In this study, we analyzed about 600 spontaneous facial behaviors and aimed to reveal the spatiotemporal properties of three smiles using an elicitation-based approach. With respect to the elicitation situation, mouth movements such as speaking might have influenced observable facial movements. Indeed, visual inspection of the facial database used in the current study indicated that expressers’ mouths substantially moved when singing a song under the embarrassment condition; participants often said something before the punch line under the amusement condition. 
To further enhance ecological validity when investigating spontaneous actions, it would be useful to analyze spontaneous facial behaviors during conversation (e.g., the Sayette Group Formation Task Spontaneous Facial Expression Database, Girard et al., 2017; the Stanford Emotional Narratives Dataset, Ong et al., 2019). Finally, reliance on a single database limits the generalizability of the findings. It will be useful to investigate whether the observed spatiotemporal features of amused, embarrassed, and pained smiles also occur under different elicitation conditions, which calls for the development of additional facial expression databases. Moreover, EB+ provides only binary data indicating the presence or absence of each coded AU, which captures neither asymmetry nor intensity. The pain expressions identified by the data-driven approach of Chen et al. (2018, Fig. 2) may include asymmetric patterns of upper lip raiser movement. AU intensity scores would also be important, because the strength of an expression is assumed to covary with the strength of the experience (Tourangeau & Ellsworth, 1979). Although BP4D+ (unlike EB+) provides AU intensity scores, they are available only for a limited set of AUs (AU6, 10, 12, 14, and 17). Future research would therefore benefit from facial databases that include asymmetry and intensity information for multiple AUs.

In conclusion, this study provides data on the spatiotemporal properties of amused, embarrassed, and pained smiles. Embarrassed and amused smiles did not differ in their static spatial properties, whereas the pain condition was associated with a lower rate of Duchenne smiles and greater facial tension than the amusement condition. Moreover, embarrassed and pained smiles were more likely than amused smiles to remain in a state of facial tension. Embarrassed smiles showed a more gradual change from tension to smile states than amused smiles, and pained smiles were less likely than amused smiles to transition to other smiling states. Together, these findings offer the first empirical data on the spatiotemporal patterns that differentiate amused, embarrassed, and pained smiles.

References

Ambadar, Z., Cohn, J. F., & Reed, L. I. (2009). All smiles are not created equal: Morphology and timing of smiles perceived as amused, polite, and embarrassed/nervous. Journal of Nonverbal Behavior, 33(1), 17–34.

Anderson, D. J., & Perona, P. (2014). Toward a science of computational ethology. Neuron, 84(1), 18–31.

Ansfield, M. E. (2007). Smiling when distressed: When a smile is a frown turned upside down. Personality and Social Psychology Bulletin, 33(6), 763–775.

Barrett, L. F. (2017). How emotions are made: The secret life of the brain. Houghton Mifflin Harcourt.

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68.

Bavelas, J., & Chovil, N. (2018). Some pragmatic functions of conversational facial gestures. Gesture, 17(1), 98–127.

Carroll, J. M., & Russell, J. A. (1997). Facial expressions in Hollywood’s portrayal of emotion. Journal of Personality and Social Psychology, 72(1), 164–176.

Caudek, C., Ceccarini, F., & Sica, C. (2017). Facial expression movement enhances the measurement of temporal dynamics of attentional bias in the dot-probe task. Behaviour Research and Therapy, 95, 58–70.

Chen, C., Crivelli, C., Garrod, O. G., Schyns, P. G., Fernández-Dols, J. M., & Jack, R. E. (2018). Distinct facial expressions represent pain and pleasure across cultures. Proceedings of the National Academy of Sciences, 115(43), E10013–E10021.

Chovil, N. (1991). Discourse-oriented facial displays in conversation. Research on Language & Social Interaction, 25(1–4), 163–194.

Coles, N. A., Larsen, J. T., & Lench, H. C. (2019). A meta-analysis of the facial feedback literature: Effects of facial feedback on emotional experience are small and variable. Psychological Bulletin, 145(6), 610–651.

Cordaro, D. T., Sun, R., Keltner, D., Kamble, S., Huddar, N., & McNeil, G. (2018). Universals and cultural variations in 22 emotional expressions across five cultures. Emotion, 18(1), 75–93.

Crivelli, C., Carrera, P., & Fernández-Dols, J. M. (2015). Are smiles a sign of happiness? Spontaneous expressions of judo winners. Evolution and Human Behavior, 36(1), 52–58.

Crivelli, C., & Fridlund, A. J. (2019). Inside-out: From basic emotions theory to the behavioral ecology view. Journal of Nonverbal Behavior, 43(2), 161–194.

Delis, I., Chen, C., Jack, R. E., Garrod, O. G., Panzeri, S., & Schyns, P. G. (2016). Space-by-time manifold representation of dynamic facial expressions for emotion categorization. Journal of Vision, 16(8), 14.

Dowle, M., & Srinivasan, A. (2021). data.table: Extension of ‘data.frame’. R package version 1.14.0. Retrieved from https://CRAN.R-project.org/package=data.table

Duchenne, B. (1862/1990). The mechanism of human facial expression or an electro-physiological analysis of the expression of the emotions (A. Cuthbertson, Trans.). Cambridge University Press.

Eddy, S. R. (2004). What is a hidden Markov model? Nature Biotechnology, 22(10), 1315–1316.

Ekman, P. (1985). Telling lies. Berkley Books.

Ekman, P. (2003). Emotions revealed: Recognizing faces and feelings to improve communication and emotional life. Times Books/Henry Holt.

Ekman, P., Davidson, R. J., & Friesen, W. V. (1990). The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology, 58(2), 342–353.

Ekman, P., & Friesen, W. V. (1982). Felt, false, and miserable smiles. Journal of Nonverbal Behavior, 6, 238–252.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system (2nd ed.). Research Nexus eBook.

Ekman, P., Friesen, W. V., & O’Sullivan, M. (1988). Smiles when lying. Journal of Personality and Social Psychology, 54, 414–420.

Ekman, P., & Rosenberg, E. L. (2005). What the face reveals: Basic and applied studies of spontaneous expression using the Facial Action Coding System. Oxford University Press.

Ertugrul, I. O., Cohn, J. F., Jeni, L. A., Zhang, Z., Yin, L., & Ji, Q. (2019a). Cross-domain AU detection: Domains, learning approaches, and measures. 2019 14th IEEE international conference on automatic face & gesture recognition (FG 2019) (pp. 1–8). IEEE.

Ertugrul, I. O., Cohn, J. F., Jeni, L. A., Zhang, Z., Yin, L., & Ji, Q. (2020). Crossing domains for AU coding: Perspectives, approaches, and measures. IEEE Transactions on Biometrics, Behavior, and Identity Science, 2(2), 158–171.

Ertugrul, I. O., Jeni, L. A., Ding, W., & Cohn, J. F. (2019b). AFAR: A deep learning based tool for automated facial affect recognition. 2019 14th IEEE international conference on automatic face & gesture recognition (FG 2019). IEEE.

Faigin, G. (2012). The artist’s complete guide to facial expression. Watson-Guptill.

Fridlund, A. J. (1994). Human facial expression: An evolutionary view. Academic Press.

Fridlund, A. J. (2017). The behavioral ecology view of facial displays, 25 years later. In The science of facial expression (pp. 77–92). Oxford University Press.

Girard, J. M., Chu, W. S., Jeni, L. A., & Cohn, J. F. (2017). Sayette group formation task (GFT) spontaneous facial expression database. 2017 12th IEEE international conference on automatic face & gesture recognition (FG 2017) (pp. 581–588). IEEE.

Girard, J. M., Cohn, J. F., Mahoor, M. H., Mavadati, S., & Rosenwald, D. P. (2013). Social risk and depression: Evidence from manual and automatic facial expression analysis. 2013 10th IEEE international conference and workshops on automatic face and gesture recognition (FG) (pp. 1–8). IEEE.

Girard, J. M., Cohn, J. F., Yin, L., & Morency, L. P. (2021). Reconsidering the Duchenne smile: Formalizing and testing hypotheses about eye constriction and positive emotion. Affective Science, 2(1), 32–47.

Gosselin, P., Perron, M., & Beaupré, M. (2010). The voluntary control of facial action units in adults. Emotion, 10(2), 266–271.

Gunnery, S. D., Hall, J. A., & Ruben, M. A. (2013). The deliberate Duchenne smile: Individual differences in expressive control. Journal of Nonverbal Behavior, 37(1), 29–41.

Jack, R. E., Garrod, O. G., & Schyns, P. G. (2014). Dynamic facial expressions of emotion transmit an evolving hierarchy of signals over time. Current Biology, 24(2), 187–192.

Jack, R. E., & Schyns, P. G. (2017). Toward a social psychophysics of face communication. Annual Review of Psychology, 68, 269–297.

Keltner, D. (1995). Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. Journal of Personality and Social Psychology, 68(3), 441–454.

Keltner, D., Sauter, D., Tracy, J., & Cowen, A. (2019). Emotional expression: Advances in basic emotion theory. Journal of Nonverbal Behavior, 43, 133–160.

Komori, M., & Onishi, Y. (2021). Investigating spatio-temporal features of dynamic facial expressions. Emotion Studies, 6(1), 77–83.

Kraft, T. L., & Pressman, S. D. (2012). Grin and bear it: The influence of manipulated facial expression on the stress response. Psychological Science, 23(11), 1372–1378.

Kraut, R. E., & Johnston, R. E. (1979). Social and emotional messages of smiling: An ethological approach. Journal of Personality and Social Psychology, 37(9), 1539–1553.

Krumhuber, E. G., Kappas, A., & Manstead, A. S. (2013). Effects of dynamic aspects of facial expressions: A review. Emotion Review, 5(1), 41–46.

Krumhuber, E. G., & Manstead, A. S. (2009). Can Duchenne smiles be feigned? New evidence on felt and false smiles. Emotion, 9(6), 807–820.

Krumhuber, E., Manstead, A. S., Cosker, D., Marshall, D., Rosin, P. L., & Kappas, A. (2007). Facial dynamics as indicators of trustworthiness and cooperative behavior. Emotion, 7(4), 730–735.

Krumhuber, E. G., Tamarit, L., Roesch, E. B., & Scherer, K. R. (2012). FACSGen 2.0 animation software: generating three-dimensional FACS-valid facial expressions for emotion research. Emotion, 12(2), 351–363.

Kunz, M., Prkachin, K., & Lautenbacher, S. (2013). Smiling in pain: Explorations of its social motives. Pain Research and Treatment, 2013, 1–8.

Kunzmann, U., Kupperbusch, C. S., & Levenson, R. W. (2005). Behavioral inhibition and amplification during emotional arousal: A comparison of two age groups. Psychology and Aging, 20(1), 144–158.

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. (2017). lmerTest package: Tests in linear mixed effects models. Journal of Statistical Software, 82(1), 1–26.

LaFrance, M., Hecht, M. A., & Paluck, E. L. (2003). The contingent smile: A meta-analysis of sex differences in smiling. Psychological Bulletin, 129(2), 305–334.

Landgraf, A. J., & Lee, Y. (2020). Dimensionality reduction for binary data through the projection of natural parameters. Journal of Multivariate Analysis, 180, 104668.

Le Mau, T., Hoemann, K., Lyons, S. H., Fugate, J., Brown, E. N., Gendron, M., & Barrett, L. F. (2021). Professional actors demonstrate variability, not stereotypical expressions, when portraying emotional states in photographs. Nature Communications, 12(1), 1–13.

LeResche, L., Ehrlich, K. J., & Dworkin, S. F. (1990). Facial expressions of pain and masking smiles: Is “grin and bear it” a pain behavior? Pain, 41, S286.

Leys, R. (2017). The ascent of affect. University of Chicago Press.

Lumbreras, A., Filstroff, L., & Févotte, C. (2020). Bayesian mean-parameterized nonnegative binary matrix factorization. Data Mining and Knowledge Discovery, 34(6), 1898–1935.

Malek, N., Messinger, D., Gao, A. Y. L., Krumhuber, E., Mattson, W., Joober, R., ... Martinez-Trujillo, J. C. (2019). Generalizing Duchenne to sad expressions with binocular rivalry and perception ratings. Emotion, 19(2), 234–241.

Mandal, M. K., & Awasthi, A. (2015). Understanding facial expressions in communication: Cross-cultural and multidisciplinary perspectives. Springer.

Martin, J. D., Wood, A., Cox, W. T., Sievert, S., Nowak, R., Gilboa-Schechtman, E., ... Niedenthal, P. M. (2021). Evidence for distinct facial signals of reward, affiliation, and dominance from both perception and production tasks. Affective Science, 2(1), 14–30.

Martin, J., Rychlowska, M., Wood, A., & Niedenthal, P. (2017). Smiles as multipurpose social signals. Trends in Cognitive Sciences, 21(11), 864–877.

Mascaró, M., Serón, F. J., Perales, F. J., Varona, J., & Mas, R. (2021). Laughter and smiling facial expression modelling for the generation of virtual affective behavior. PLoS ONE, 16(5), e0251057.

McDuff, D., Girard, J. M., & El Kaliouby, R. (2017). Large-scale observational evidence of cross-cultural differences in facial behavior. Journal of Nonverbal Behavior, 41(1), 1–19.

Miles, L. K. (2009). Who is approachable? Journal of Experimental Social Psychology, 45(1), 262–266.

Namba, S., Kagamihara, T., Miyatani, M., & Nakao, T. (2017a). Spontaneous facial expressions reveal new action units for the sad experiences. Journal of Nonverbal Behavior, 41(3), 203–220.

Namba, S., Makihara, S., Kabir, R. S., Miyatani, M., & Nakao, T. (2017b). Spontaneous facial expressions are different from posed facial expressions: Morphological properties and dynamic sequences. Current Psychology, 36(3), 593–605.

Namba, S., Matsui, H., & Zloteanu, M. (2021). Distinct temporal features of genuine and deliberate facial expressions of surprise. Scientific Reports, 11(1), 1–10.

Nguyen, L. H., & Holmes, S. (2019). Ten quick tips for effective dimensionality reduction. PLoS Computational Biology, 15(6), e1006907.

Ong, D. C., Wu, Z., Tan, Z. X., Reddan, M., Kahhale, I., Mattek, A., & Zaki, J. (2019). Modeling emotion in complex stories: The Stanford Emotional Narratives Dataset. IEEE Transactions on Affective Computing, 12(3), 579–594.

Orlowska, A. B., Krumhuber, E. G., Rychlowska, M., & Szarota, P. (2018). Dynamics matter: Recognition of reward, affiliative, and dominance smiles from dynamic vs. static displays. Frontiers in Psychology, 9, 938.

Perusquía-Hernández, M., Ayabe-Kanamura, S., & Suzuki, K. (2019). Human perception and biosignal-based identification of posed and spontaneous smiles. PLoS ONE, 14(12), e0226328.

Perusquía-Hernández, M., Dollack, F., Tan, C. K., Namba, S., Ayabe-Kanamura, S., & Suzuki, K. (2021). Smile action unit detection from distal wearable electromyography and computer vision. 2021 IEEE international conference on automatic face and gesture recognition (FG 2021). IEEE.

Philip, L., Martin, J. C., & Clavel, C. (2018). Rapid facial reactions in response to facial expressions of emotion displayed by real versus virtual faces. i-Perception, 9(4), 2041669518786527.

Pressman, S. D., Acevedo, A. M., Hammond, K. V., & Kraft-Feil, T. L. (2020). Smile (or grimace) through the pain? The effects of experimentally manipulated facial expressions on needle-injection responses. Emotion. https://doi.org/10.1037/emo0000913

Prkachin, K. M. (1992). The consistency of facial expressions of pain: A comparison across modalities. Pain, 51, 297–306.

Reisenzein, R., Studtmann, M., & Horstmann, G. (2013). Coherence between emotion and facial expression: Evidence from laboratory experiments. Emotion Review, 5(1), 16–23.

Rychlowska, M., Jack, R. E., Garrod, O. G., Schyns, P. G., Martin, J. D., & Niedenthal, P. M. (2017). Functional smiles: Tools for love, sympathy, and war. Psychological Science, 28(9), 1259–1270.

Sato, W., Hyniewska, S., Minemoto, K., & Yoshikawa, S. (2019a). Facial expressions of basic emotions in Japanese laypeople. Frontiers in Psychology, 10, 259.

Sato, W., Kochiyama, T., & Uono, S. (2015). Spatiotemporal neural network dynamics for the processing of dynamic facial expressions. Scientific Reports, 5(1), 1–13.

Sato, W., Krumhuber, E. G., Jellema, T., & Williams, J. H. (2019b). Dynamic emotional communication. Frontiers in Psychology, 10, 2836.

Scarantino, A. (2017). How to do things with emotional expressions: The theory of affective pragmatics. Psychological Inquiry, 28(2–3), 165–185.

Schmidt, K. L., Ambadar, Z., Cohn, J. F., & Reed, L. I. (2006). Movement differences between deliberate and spontaneous facial expressions: Zygomaticus major action in smiling. Journal of Nonverbal Behavior, 30(1), 37–52.

Singh, A., & Manjaly, J. A. (2021). The distress smile and its cognitive antecedents. Journal of Nonverbal Behavior, 45(1), 11–30.

Spedicato, G. A. (2017). Discrete time Markov chains with R. The R Journal, 9, 84.

Tao, L., Ozarkar, S., Beck, J. M., & Bhandawat, V. (2019). Statistical structure of locomotion and its modulation by odors. eLife, 8, e41235.

Tourangeau, R., & Ellsworth, P. C. (1979). The role of facial response in the experience of emotion. Journal of Personality and Social Psychology, 37(9), 1519–1531.

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L. D. A., François, R., ... Yutani, H. (2019). Welcome to the Tidyverse. Journal of Open Source Software, 4(43), 1686.

Wiltschko, A. B., Johnson, M. J., Iurilli, G., Peterson, R. E., Katon, J. M., Pashkovski, S. L., ... Datta, S. R. (2015). Mapping sub-second structure in mouse behavior. Neuron, 88(6), 1121–1135.

Wong, D. L., & Baker, C. M. (2001). Smiling face as anchor for pain intensity scales. Pain, 89(2), 295–297.

Yang, L., Ertugrul, I. O., Cohn, J. F., Hammal, Z., Jiang, D., & Sahli, H. (2019). FACS3D-Net: 3D convolution based spatiotemporal representation for action unit detection. 2019 8th International conference on affective computing and intelligent interaction (ACII) (pp. 538–544). IEEE.

Zhang, Z., Girard, J. M., Wu, Y., Zhang, X., Liu, P., Ciftci, U., ... Yin, L. (2016). Multimodal spontaneous emotion corpus for human behavior analysis. Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3438–3446).

Zloteanu, M., & Krumhuber, E. G. (2021). Expression authenticity: The role of genuine and deliberate displays in emotion perception. Frontiers in Psychology, 11, 4001.

Funding

This research was supported by JSPS KAKENHI (Grant No. JP20K14256 to SN, JP21J00063 to HM) and Japan Science and Technology Agency-Mirai Program (Grant No. JPMJMI20D7 to WS).



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Namba, S., Sato, W. & Matsui, H. Spatio-Temporal Properties of Amused, Embarrassed, and Pained Smiles. J Nonverbal Behav 46, 467–483 (2022). https://doi.org/10.1007/s10919-022-00404-7


DOI: https://doi.org/10.1007/s10919-022-00404-7