Abstract

Educators, school psychologists, and other professionals must evaluate student progress and decide whether to continue, modify, or terminate instructional programs to ensure student success. For this purpose, progress-monitoring data are often collected, plotted graphically, and visually analyzed. The current study evaluated the impact of three common formats for visual analysis (scatter plots, scatter plots with trend lines, and scatter plots with trend and aim lines) on the decision accuracy of 52 novice analysts. Each participant viewed 18 time-series graphs depicting student growth on a continuous metric (e.g., oral reading fluency) and rated each graph as depicting substantial progress, minimal progress, or no progress. The true slope of each graph was fixed at 3.00 (substantial progress), 0.75 (minimal progress), or 0 (no progress). Inferential analyses estimated the probability that a participant would correctly identify each magnitude of trend under each visual-aid condition (no visual aid, trend line, and trend line with aim line). The odds of correctly identifying trend were influenced by both visual aid (p < .01) and trend magnitude (p < .01). Adding a trend line sharply increased the probability of a correct decision, whereas graphs depicting minimal progress were associated with a lower probability of a correct decision.
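The abstract reports effects on the odds of a correct decision but interprets them as probabilities. The two scales are linked by p = odds / (1 + odds). The Python sketch below shows that conversion and how an odds ratio shifts a baseline probability. The function names are hypothetical, and the baseline probability of 0.50 and odds ratio of 3.0 are purely illustrative values, not estimates from this study.

```python
def probability_to_odds(p: float) -> float:
    """Odds of a correct decision given its probability p (0 <= p < 1)."""
    return p / (1.0 - p)


def odds_to_probability(odds: float) -> float:
    """Probability of a correct decision given its odds."""
    return odds / (1.0 + odds)


def apply_odds_ratio(baseline_p: float, odds_ratio: float) -> float:
    """Probability after multiplying the baseline odds by an odds ratio."""
    return odds_to_probability(probability_to_odds(baseline_p) * odds_ratio)


if __name__ == "__main__":
    # Illustrative numbers only (not results from the study):
    # p = 0.50 -> odds = 1.0; times an odds ratio of 3.0 -> odds = 3.0 -> p = 0.75
    print(apply_odds_ratio(0.50, 3.0))
```

Because the mapping is nonlinear, the same odds ratio moves the probability by different amounts depending on the baseline: applied to a baseline of 0.90, an odds ratio of 3.0 yields 27/28, or about 0.96.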




Appendices

Appendix 1

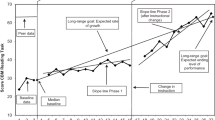

Note. X values represent weeks, and Y values represent words read correct per minute (WRCM).
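Graphs of this kind can be mimicked by adding random noise to a straight line. The Python sketch below is a hypothetical generator, not the study's stimulus-generation procedure: it assumes linear growth plus normally distributed noise, and the starting score (40 WRCM), noise standard deviation (8 WRCM), and number of weeks (10) are illustrative assumptions. The three slopes are the magnitudes named in the abstract, read here as WRCM gained per week given the axes described in the note above.

```python
import random


def simulate_wrcm_series(true_slope, weeks=10, intercept=40.0, noise_sd=8.0, seed=None):
    """Return (week, WRCM) pairs from a hypothetical linear-growth-plus-noise model.

    WRCM_t = intercept + true_slope * t + e_t, with e_t ~ Normal(0, noise_sd).
    All default parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded generator for reproducible series
    return [
        (week, intercept + true_slope * week + rng.gauss(0.0, noise_sd))
        for week in range(1, weeks + 1)
    ]


if __name__ == "__main__":
    # Slope magnitudes named in the abstract (assumed units: WRCM per week).
    for label, slope in [("substantial", 3.00), ("minimal", 0.75), ("none", 0.0)]:
        series = simulate_wrcm_series(slope, seed=1)
        print(label, [round(y) for _, y in series])
```

Increasing `noise_sd` relative to `true_slope` is one way to see why a small slope is harder to judge by eye; this is an illustration only.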

Appendix 2

Context

Mr. Nelson is a grade-school reading teacher. Every week, he has his students read aloud for one minute from a reading passage. He then draws a dot on each student's graph showing how many words the student read correctly that week. By tracking how students perform every week, Mr. Nelson can decide whether to change his instruction (if students are not improving or are improving only slightly) or keep it the same (if students are improving substantially).

The Problem

Mr. Nelson has found it difficult to tell how a student is doing simply by plotting how many words the student reads correctly each week.

Mr. Nelson asked fellow teachers how they decide whether a student is making progress. He received three suggestions: (1) make a decision by looking only at the data points, (2) use a computer program to plot a line of best fit (in other words, a trend line), or (3) plot a trend line along with a line showing how the student should be performing (a goal or aim line). As part of this study, you will decide whether students are making substantial progress, minimal progress, or no progress using each of the suggestions Mr. Nelson received. You will also indicate how confident you are in each decision.
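Suggestions (2) and (3) describe two lines that can be computed directly. The Python sketch below assumes the trend line is an ordinary least-squares line of best fit and that the aim line runs straight from a starting score to a goal score at a target week. The function names and example numbers are hypothetical, and the study's own procedure for drawing aim lines may differ.

```python
from typing import Sequence, Tuple

Line = Tuple[float, float]  # (intercept, slope)


def fit_trend_line(weeks: Sequence[float], scores: Sequence[float]) -> Line:
    """Ordinary least-squares line of best fit through (week, score) points."""
    n = len(weeks)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two paired observations")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("weeks must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def aim_line(start_week: float, start_score: float,
             goal_week: float, goal_score: float) -> Line:
    """Straight line from a starting point to a goal point."""
    slope = (goal_score - start_score) / (goal_week - start_week)
    return start_score - slope * start_week, slope


def value_at(line: Line, week: float) -> float:
    """Height of a line at a given week."""
    intercept, slope = line
    return intercept + slope * week


if __name__ == "__main__":
    weeks = [1, 2, 3, 4, 5]
    wrcm = [40, 43, 45, 49, 51]          # hypothetical weekly scores
    trend = fit_trend_line(weeks, wrcm)   # approximately (37.2, 2.8)
    aim = aim_line(1, 40, 11, 70)         # (37.0, 3.0): +3 WRCM per week
    print(trend, aim, value_at(trend, 11), value_at(aim, 11))
```

Drawing neither line, only the trend line, or both lines over the data points corresponds to the three display formats compared in the study.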


Cite this article

Van Norman, E.R., Nelson, P.M., Shin, JE. et al. An Evaluation of the Effects of Graphic Aids in Improving Decision Accuracy in a Continuous Treatment Design. J Behav Educ 22, 283–301 (2013). https://doi.org/10.1007/s10864-013-9176-2


DOI: https://doi.org/10.1007/s10864-013-9176-2