Abstract

Entropy-regularized optimal transport and its multi-marginal generalization have attracted increasing attention in various applications, in particular due to efficient Sinkhorn-like algorithms for computing optimal transport plans. However, it is often desirable that the marginals of the optimal transport plan do not match the given measures exactly, which led to the introduction of the so-called unbalanced optimal transport. Since unbalanced methods were not examined for the multi-marginal setting so far, we address this topic in the present paper. More precisely, we introduce the unbalanced multi-marginal optimal transport problem and its dual and show that a unique optimal transport plan exists under mild assumptions. Furthermore, we generalize the Sinkhorn algorithm for regularized unbalanced optimal transport to the multi-marginal setting and prove its convergence. For cost functions decoupling according to a tree, the iterates can be computed efficiently. At the end, we discuss three applications of our framework, namely two barycenter problems and a transfer operator approach, where we establish a relation between the barycenter problem and the multi-marginal optimal transport with an appropriate tree-structured cost function.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Over the last decades, optimal transport (OT) has attracted increasing attention in various applications, e.g., barycenter problems [1, 4], image matching [48, 49], and machine learning [3, 29, 37]. As the OT minimization problem is numerically difficult to solve, a common approach is to add an entropy regularization term [18]. This enables us to approximately solve the problem using Sinkhorn iterations [46] by exploiting an explicit relation between the solutions to the corresponding primal and dual problems. These iterations can be implemented in parallel on GPUs, which makes even large-scale problems solvable within reasonable time. Recently, a debiased version of the Sinkhorn divergence was investigated in [25, 41, 44], which has the advantage that it characterizes the weak convergence of measures. However, in many applications, the assumption that the marginal measures are matched exactly appears to be unreasonable. To deal with this issue, unbalanced optimal transport (UOT) [12, 13, 39] was introduced. Here, the hard marginal constraints are replaced by penalizing the \(\varphi \)-divergences between the given measures and the corresponding marginals. By making minimal modifications, we can use Sinkhorn-like iterations and hence the computational complexity and scalability remain the same. For the unbalanced case, the mentioned debiasing was discussed in [47]. For Gaussian distributions, the corresponding divergence even has a closed form [34].

So far, we have commented on OT between two measures. For certain practical tasks such as matching for teams [10], particle tracking [11], and information fusion [19, 24], it is useful to compute transport plans between more than two-marginal measures. This is done in the framework of multi-marginal optimal transport (MOT) [42], where again entropy regularization is possible. The problem was tackled numerically for Coulomb cost in [5] and more general repulsive costs in [17, 31]. Later, an efficient solution for tree-structured costs using Sinkhorn iterations was established in [32]. More recently, these results were extended to even more general cost functions in [2].

In this paper, we want to combine UOT and MOT by investigating unbalanced multi-marginal optimal transport (UMOT). We can build upon the previous papers, but we will see that this has to be done carefully, since various generalizations that seem to be straightforward at the first glance appear to be not. Inspired by the papers [20] and [47], we formulate Sinkhorn iterations for the UMOT problem. Our approach differs from that in [47] as we cannot rely on the 1-Lipschitz continuity of the \((c,\varepsilon )\)-transform, which only holds in the two-marginal case. Instead, we establish uniform boundedness of the iterates and then exploit the compactness of the Sinkhorn operator. As in the two-marginal case, we retain the excellent scalability of the algorithm for tree-structured costs. Furthermore, we prove that these iterations are convergent under mild assumptions.

As one possible application, we discuss the computation of regularized UOT barycenters based on the regularized UMOT problem. The OT barycenter problem was first introduced in [1] and then further studied, e.g., in [4, 16, 21, 40] for the balanced setting. As soon as entropic regularization is applied, we usually obtain a blurred barycenter. One possible solution is to use the debiased Sinkhorn divergence instead [33, 43]. Similarly as in [32] for the balanced case, we observe that solving an UMOT problem instead of minimizing a sum of UOT “distance” reduces the blur considerably. We also validate this observation theoretically. For this purpose, we show that for tree-structured costs UMOT transport plans are already determined by their two-dimensional marginals. A complementary approach for computing barycenters in an unbalanced setting is based on the Hellinger–Kantorovich distance [14, 28].

Furthermore, we provide a numerical UMOT example with a path-structured cost function. We observe that in comparison with a sequence of UOT problems with the same input measures, the UMOT approach has several advantages since the target measure from some optimal UOT plan is not necessarily equal to the source measure of the subsequent UOT plan. For the (single) UMOT plan, this is impossible by construction. Additionally, coupling the problems allows information to be shared between them. Note that there is almost no computational overhead compared to solving the problems sequentially.

Outline of the Paper Section 2 contains the necessary preliminaries. The regularized UMOT problem, in particular the existence and uniqueness of a solution as well as its dual problem, is provided in Sect. 3. In Sect. 4, we derive a Sinkhorn algorithm for solving the regularized UMOT problem and prove its convergence. Then, in Sect. 5, we investigate the barycenter problem with respect to regularized UOT and establish a relation to the regularized UMOT problem with a tree-structured cost function, where the tree is simply star-shaped. Furthermore, we discuss an extension of these considerations to more general tree-structured cost functions. Additionally, we outline an efficient implementation of the Sinkhorn algorithm for tree-structured costs. Numerical examples, which illustrate our theoretical findings, are provided in Sect. 6. Finally, we draw conclusions in Sect. 7.

2 Preliminaries

Throughout this paper, let \({\mathbb {X}}\) be a compact Polish space with associated Borel \(\sigma \)-algebra \({\mathcal {B}}({\mathbb {X}})\). By \({\mathcal {M}}({\mathbb {X}})\), we denote the space of finite signed real-valued Borel measures, which can be identified via Riesz’ representation theorem with the dual space of the continuous functions \(C({\mathbb {X}})\) endowed with the norm \(\Vert f\Vert _{C({\mathbb {X}})}\, {:}{=}\max _{x \in {\mathbb {X}}} |f(x)|\). Denoting the associated dual pairing by \(\langle \mu , f \rangle ~{:}{=}\int _{\mathbb {X}}f \, d\mu \), the space \({\mathcal {M}}({\mathbb {X}})\) can be equipped with the weak*-topology, i.e., a sequence \((\mu _n)_{n \in {\mathbb {N}}} \subset {\mathcal {M}}({\mathbb {X}})\) converges weakly to \(\mu \in {\mathcal {M}}({\mathbb {X}})\), written \(\mu _n \rightharpoonup \mu \), if

The associated dual norm of \(\mu \in {\mathcal {M}}({\mathbb {X}})\), also known as total variation, is given by \(\mathrm {TV} (\mu ) = \sup _{\Vert f\Vert _{C({\mathbb {X}})} \le 1} \langle \mu , f \rangle \). By \({\mathcal {M}}^+({\mathbb {X}})\), we denote the subset of nonnegative measures. The support of \(\mu \in {\mathcal {M}}^+({\mathbb {X}})\) is defined as the closed set

For \(\mu \in \, {\mathcal {M}}^+({\mathbb {X}})\) and \(p \in [1,\infty ]\), let \(L^p({\mathbb {X}},\mu )\) be the Banach space (of equivalence classes) of real-valued measurable functions with norm \(\Vert f\Vert _{L^p({\mathbb {X}},\mu )} < \infty .\)

A measure \(\nu \in {\mathcal {M}}({\mathbb {X}})\) is called absolutely continuous with respect to \(\mu \), and we write \(\nu \ll \mu \), if for every \(A \in {\mathcal {B}}({\mathbb {X}})\) with \(\mu (A) = 0\) we have \(\nu (A) = 0\). For any \(\mu , \nu \in {\mathcal {M}}^+({\mathbb {X}})\) with \(\nu \ll \mu \), the Radon–Nikodym derivative

exists and \(\nu = \sigma _\nu \mu \). Furthermore, \(\mu , \nu \in {\mathcal {M}}({\mathbb {X}})\) are called mutually singular, denoted by \(\mu \perp \nu \), if there exist two disjoint sets \(X_\mu , X_\nu \in {\mathcal {B}}({\mathbb {X}})\) such that \({\mathbb {X}}= X_\mu {\dot{\cup }} X_\nu \) and for every \(A \in {\mathcal {B}}({\mathbb {X}})\) we have \(\mu (A) = \mu (A \cap X_\mu )\) and \(\nu (A) = \nu (A \cap X_\nu )\). Given \(\mu ,\nu \in {\mathcal {M}}^+({\mathbb {X}})\), there exists a unique Lebesgue decomposition \(\mu = \sigma _\mu \nu + \mu ^\perp \), where \(\sigma _\mu \in L^1({\mathbb {X}},\nu )\) and \(\mu ^\perp \perp \nu \). Let \({\mathbb {Y}}\) be another compact Polish space and \(T:{\mathbb {X}}\rightarrow {\mathbb {Y}}\) be a measurable function, i.e., \(T^{-1}(A) \in {\mathcal {B}}({\mathbb {X}})\) for all \(A \in {\mathcal {B}}({\mathbb {Y}})\). Then, the push-forward measure of \(\mu \) by T is defined as \(T_\# \mu ~{:}{=}~\mu \circ T^{-1}\).

Let V be a real Banach space with dual \(V^*\) and dual pairing \(\langle v,x \rangle = v(x)\), \(v \in V^*, x \in V\). For \(F:V \rightarrow (-\infty ,+\infty ]\), the domain of F is given by \( \mathrm {dom} F \,{:}{=}\, \{x \in V: F(x) \in {\mathbb {R}} \}\). If \(\mathrm {dom} F \not = \emptyset \), then F is called proper. By \(\Gamma _0(V)\) we denote the set of proper, convex, lower semi-continuous (lsc) functions mapping from V to \((-\infty ,+\infty ]\). The subdifferential of \(F:V \rightarrow (-\infty ,+\infty ]\) at a point \(x_0 \in \mathrm {dom} F\) is defined as

and \(\partial F(x_0) = \emptyset \) if \(x_0 \not \in \mathrm {dom} F\). For a function \(F:V^n \rightarrow (-\infty ,\infty ]\), \(\partial _i F\) denotes the subdifferential of F with respect to the ith component. The Fenchel conjugate \(F^*:V^* \rightarrow (-\infty ,+\infty ]\) is given by

A nonnegative function \(\varphi \in \Gamma _0({\mathbb {R}})\) satisfying \(\varphi (1) = 0\) and \(\varphi |_{(-\infty ,0)} = \infty \) is called entropy function with recession constant \(\varphi ^\prime _\infty = \lim _{x \rightarrow \infty } \varphi (x)/x\). In this case, \({{\,\mathrm{dom}\,}}(\varphi ^*) = (-\infty , \varphi ^\prime _\infty ]\). For every \(\mu ,\nu \in {\mathcal {M}}^+({\mathbb {X}})\) with Lebesgue decomposition \(\mu = \sigma _\mu \nu + \mu ^\perp \), the \(\varphi \)-divergence \(D_\varphi :{\mathcal {M}}^+({\mathbb {X}}) \times {\mathcal {M}}^+({\mathbb {X}}) \rightarrow [0,\infty ]\) is given in its primal and dual form by

with the convention \(0 \cdot \infty = 0\). The mapping \(D_\varphi \) is jointly convex, weakly lsc and fulfills \(D_\varphi (\mu ,\nu ) \ge 0\), see [39, Cor. 2.9]. Furthermore, we have for \(t > 0\) that \(D_{t\varphi } = t D_{\varphi }\). We will use the following \(\varphi \)-divergences, see also [47].

Example 2.1

-

(i)

Let \(\varphi ~{:}{=}~\iota _{\{1\}}\), where \(\iota _{{\mathcal {S}}}\) denotes the indicator function of the set \({{\mathcal {S}}}\), i.e., \(\iota _{{\mathcal {S}}}(x) = 0\) if \(x \in {{\mathcal {S}}}\), and \(\iota _{{\mathcal {S}}}(x) = +\infty \) otherwise. Then, \(\varphi ^*(q) = q\), \(\varphi '_\infty = \infty \), and

$$\begin{aligned} D_{\varphi }(\mu ,\nu ) = \left\{ \begin{array}{ll} 0 &{}\mathrm {if} \; \mu =\nu ,\\ +\infty &{}\mathrm {otherwise}. \end{array} \right. \end{aligned}$$ -

(ii)

For \(\varphi ~{:}{=}~\iota _{[0,\infty )}\), we get \(\varphi ^*(q) = \iota _{(-\infty ,0]}\), \(\varphi '_\infty = 0\), and \(D_{\varphi }(\mu ,\nu ) = 0\).

-

(iii)

Consider the Shannon entropy with \(\varphi (x)~{:}{=}~x\ln (x) - x +1\) and the agreement \(0 \ln 0 = 0\). Then, we have that \(\varphi ^*(q) = \exp (q) -1\), \(\varphi '_\infty = \infty \), and the \(\varphi \)-divergence is the Kullback–Leibler divergence \(\mathrm {KL}:{{\mathcal {M}}^+}({\mathbb {X}}) \times {{\mathcal {M}}^+}({\mathbb {X}}) \rightarrow \mathbb [0, +\infty ]\). For \(\mu ,\nu \in {{\mathcal {M}}^+}({\mathbb {X}})\), if the Radon–Nikodym derivative \(\sigma _\mu = \frac{\,\mathrm {d}\mu }{\,\mathrm {d}\nu }\) exists, then

$$\begin{aligned} \mathrm {KL} (\mu ,\nu )~{:}{=}~\int _{{\mathbb {X}}} \ln (\sigma _\mu ) \, \,\mathrm {d}\mu + \nu ({\mathbb {X}}) - \mu ({\mathbb {X}}), \end{aligned}$$and otherwise, we set \(\mathrm {KL} (\mu ,\nu )~{:}{=}~+ \infty \). Note that the \(\mathrm {KL}\) divergence is strictly convex with respect to the first variable.

-

(iv)

For \(\varphi (x)~{:}{=}~|x-1|\), we get \(\varphi ^*(q) = \max (-1,q)\) if \( q \le 1\) and \(\varphi ^*(q) = +\infty \) otherwise, \(\varphi '_\infty = 1\), and \( D_{\varphi }(\mu ,\nu ) = \mathrm {TV}(\mu - \nu ) \).

3 Unbalanced Multi-Marginal Optimal Transport

Throughout this paper, we use the following abbreviations. For compact Polish spaces \({\mathbb {X}}_i \ne \emptyset \), \(i=1,\ldots , N\), and measures \(\nu _i\in {\mathcal {M}}^+({\mathbb {X}}_i)\), \(i=1,\ldots , N\), we set \(\nu ~{:}{=}~(\nu _1,\ldots ,\nu _N)\) and

Furthermore, for \(p \in [1,\infty ]\) and \(f_i \in L^p({\mathbb {X}}_i, \nu _i)\), \(i=1,\ldots ,N\), we write \(f~{:}{=}~(f_1,\ldots ,f_N)\) and

where the product space \(L^{p,\times }({\mathbb {X}},\nu )\) is equipped with the \(L^p\) norm of the components. For example, if \({\mathbb {X}}={\mathbb {X}}_1\times {\mathbb {X}}_2\times {\mathbb {X}}_3\), then for \(f=(f_1, f_2, f_3)\in L^{p,\times }({\mathbb {X}},\nu )\) we have

If the domains and measures are clear from the context, we abbreviate the associated norms by \(\Vert \cdot \Vert _p\), \(p \in [1,\infty ]\).

Given \(\mu _i\in {\mathcal {M}}^+({\mathbb {X}}_i)\), \(i=1,\ldots , N\), the measures \(\gamma _i\in {\mathcal {M}}^+({\mathbb {X}}_i)\), \(i=1,\ldots , N\), are called reference measures for \(\mu \) if

Definition 3.1

Let \(\varepsilon >0\). Given a nonnegative cost \(c \in C ({\mathbb {X}})\), fully supported measures \(\mu _i \in {\mathcal {M}}^+({\mathbb {X}}_i)\), \(i=1,\ldots ,N\), with reference measures \(\gamma _i \in {\mathcal {M}}^+({\mathbb {X}}_i)\), and entropy functions \(\varphi _i \in \Gamma _0({\mathbb {R}})\), \(i=1,\ldots ,N\), the associated regularized unbalanced multi-marginal optimal transport problem \(({{\,\mathrm{UMOT}\,}})\) reads

where \(P_{{\mathbb {X}}_{i\#}} \pi \) is the ith marginal of \(\pi \).

Note that the full support condition is no real restriction as we can choose \({\mathbb {X}}_i = \text {supp}(\mu _i)\). Furthermore, we can implicitly incorporate weights for the marginal penalty terms in (2) by rescaling the entropy functions \(\varphi _i\).

Remark 3.2

(Regularization and reference measures) Typical choices for the reference measures \(\gamma _i\) are

-

(i)

\(\gamma _i = \mu _i\) then \({{\,\mathrm{KL}\,}}(\mu ^\otimes , \gamma ^\otimes ) = 0\) and we regularize in \({{\,\mathrm{UMOT}\,}}_\varepsilon \) by \({{\,\mathrm{KL}\,}}(\pi , \mu ^\otimes )\).

-

(ii)

\(\gamma _i\) the Lebesgue measure on \({\mathbb {X}}_i \subset {\mathbb {R}}^{d_i}\). Then \({{\,\mathrm{KL}\,}}(\mu ^\otimes , \gamma ^\otimes ) < \infty \) is equivalent to \(\mu _i\) having a density in a so-called Orlicz space, see [15, 41] for details. Furthermore, the regularizer in \({{\,\mathrm{UMOT}\,}}_\varepsilon \) is the entropy \({{\,\mathrm{KL}\,}}(\pi , \gamma ^\otimes ) = E (\pi )\) for continuous measures.

-

(iii)

\(\gamma _i\) the counting measure if the \({\mathbb {X}}_i\) are finite. Here, \({{\,\mathrm{KL}\,}}(\mu ^\otimes , \gamma ^\otimes ) < \infty \) is equivalent to \(\mu \) being positive. Then, the regularizer is the entropy for discrete measures \({{\,\mathrm{KL}\,}}(\pi , \lambda ^\otimes )= E(\pi )\).

Definition (2) includes the following special cases:

-

If \(\varphi _i = \iota _{\{1\}}\) for all \(i=1,\ldots ,N\), then we have by Example 2.1 i) the regularized multi-marginal optimal transport (\(\mathrm {MOT}_{\varepsilon }\)) with hard constraints for the marginals. For \(\varepsilon =0\), we deal with the plain multi-marginal optimal transport (MOT) formulation.

-

If \(N=2\), then we are concerned with regularized unbalanced optimal transport (\(\mathrm {UOT}_{\varepsilon }\)). If \(\varphi _1 = \varphi _2 = \iota _{\{1\}}\), we get regularized optimal transport (\(\mathrm {OT}_{\varepsilon }\)), and if \(\varepsilon = 0\), we arrive at the usual optimal transport (OT) formulation.

Regarding existence and uniqueness of minimizers for \({{\,\mathrm{UMOT}\,}}_\varepsilon \), we have the following proposition.

Proposition 3.3

The \({{\,\mathrm{UMOT}\,}}_\varepsilon \) problem (2) admits a unique optimal plan.

Proof

The problem is feasible due to \({{\,\mathrm{KL}\,}}(\mu ^\otimes , \gamma ^\otimes ) < \infty \). Existence follows since \({{\,\mathrm{KL}\,}}(\cdot ,\gamma ^\otimes )\) and hence the whole functional (2) is coercive, and since all involved terms are lsc. For the uniqueness note that all terms in (2) are convex in \(\pi \) and that KL is moreover strictly convex in its first argument. \(\square \)

For applications, the dual formulation of \({{\,\mathrm{UMOT}\,}}_\varepsilon \) is important.

Proposition 3.4

The \({{\,\mathrm{UMOT}\,}}_\varepsilon \) problem (2) admits the dual representation

where

The optimal plan \({\hat{\pi }} \in {\mathcal {M}}^+({\mathbb {X}})\) for the primal problem (2) is related to any tuple of optimal dual potentials \({{\hat{f}}} \in L^{\infty ,\times }({\mathbb {X}},\gamma )\) by

Furthermore, any pair of optimal dual potentials \({{\hat{f}}}, {{\hat{g}}} \in L^{\infty ,\times }({\mathbb {X}},\gamma )\) satisfies \({{\hat{f}}}^\oplus = {{\hat{g}}}^\oplus \). Moreover, if \(N-1\) of the \(\varphi _i^*\), \(i =1,\dotsc ,N\), are strictly convex, then it holds \({\hat{f}} = {\hat{g}}\).

Proof

First, we set \(V~{:}{=}~ L^{\infty ,\times }({\mathbb {X}},\gamma )\) and \(W ~{:}{=}~ L^\infty ({\mathbb {X}}, \gamma ^\otimes )\), and define \(A:V \rightarrow W\), \(F\in \Gamma _0(V)\) and \(G\in \Gamma _0(W)\) via

Note that \(V^*\) and \(W^*\) are the respective dual spaces of finitely additive signed measures that are absolutely continuous with respect to \(\gamma \) and \(\gamma ^\otimes \), respectively. From [45, Thm. 4] with the choice \(g:{\mathbb {X}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\),

such that \(g^*(x, q)=c(x)q + \varepsilon (\ln (q)q-q+1)\) if \(q\ge 0\) with the convention \(0\ln 0=0\) and \(g^*(x, q)=\infty \) otherwise, we get that the Fenchel conjugate \(G^* \in \Gamma _0(W^*)\) is given by

if there exists nonnegative \(\sigma _\pi \in L^1({\mathbb {X}},\gamma ^\otimes )\) with \(\langle \pi , f \rangle = \int _{\mathbb {X}}f \sigma _\pi \,\mathrm {d}\gamma ^\otimes \) for all \(f \in W\) and \(G^*(\pi ) = \infty \) for all other \(\pi \in W^*\).

Using the definition of the Fenchel conjugate and (1), the function \(F^* \circ A^*\in \Gamma _0(W^*)\) can be expressed for any such \(\pi \) as

Now, we obtain the assertion by applying the Fenchel–Rockafellar duality relation

see [23, Thm. 4.1, p. 61]. Due to the definition of \(G^*\), it suffices to consider elements from \(W^*\) that can be identified with elements in \(L^1({\mathbb {X}},\gamma ^\otimes )\). Hence, the problem (6) coincides with (2).

The second assertion follows using the optimality conditions. More precisely, let \(\hat{\pi }\) and \({\hat{f}}\) be optimal. By [23, Chap. 3, Prop. 4.1], this yields \(A {{\hat{f}}} \in \partial G^* ({\hat{\pi }})\) which is equivalent to \(\hat{\pi } \in \partial G(A{\hat{f}})\). Since G is Gâteaux-differentiable with \(\nabla G(f) = \exp \bigl (\frac{f - c}{\varepsilon }\bigr ) \gamma ^\otimes \), we obtain

Finally, let \({\hat{f}},{\hat{g}} \in L^{\infty ,\times }({\mathbb {X}},\gamma )\) be two optimal dual potentials. The second summand is concave in f and strictly concave in \({{\hat{f}}}^\oplus \). The first summand in (4) is concave. Moreover, if \(N-1\) of the \(\varphi _i\), \(i=1,\dotsc ,N\), are strictly convex, then (4) is strictly concave. Hence, both claims follow. \(\square \)

4 Sinkhorn Algorithm for Solving the Dual Problem

In this section, we derive an algorithm for solving the dual problem (3). We prove its convergence under the assumption that for all \(i = 1, \ldots , N\), we have \(\ln (\sigma _{\mu _i}) \in L^\infty ({\mathbb {X}}_i,\gamma _i)\), where \(\sigma _{\mu _i}\) is the Radon–Nikodym derivative of \(\mu _i\) with respect to the reference measures \(\gamma _i\), and some mild assumptions on the entropy functions \(\varphi _i\).

First, we introduce two operators that appear in the optimality conditions of the dual problem, namely the \((c,\varepsilon )\)-transform and the anisotropic proximity operator.

Definition 4.1

For \(j = 1,\dotsc ,N\), the j-th \((c,\varepsilon )\)-transform \({{\,\mathrm{F}\,}}^{(c,\varepsilon ,j)}:L^{\infty ,\times }({\mathbb {X}},\gamma )\rightarrow L^\infty ({\mathbb {X}}_j,\gamma _j)\) is given by

This transform was discussed in relation with \({{\,\mathrm{MOT}\,}}_\varepsilon \) in [20], where the following two properties were shown.

Lemma 4.2

Let \(\ln (\sigma _{\mu _i}) \in L^\infty ({\mathbb {X}}_i,\gamma _i)\), \(i = 1,\dotsc ,N\), and \(f \in L^{\infty ,\times }({\mathbb {X}},\gamma )\). Then, the following holds:

-

(i)

For every \(j = 1,\dotsc ,N\) it holds

$$\begin{aligned}&\Vert f^{(c,\varepsilon ,j)} \!+ \lambda _{f,j} \Vert _{\infty } \!\le \! \Vert c \Vert _\infty \!+ \varepsilon \Vert \ln (\sigma _{\mu _j}) \Vert _{\infty }, \end{aligned}$$where

$$\begin{aligned} \lambda _{f,j} ~{:}{=}~ \varepsilon \ln \biggl (\int _{{\mathbb {X}}_{\setminus j}} \exp \biggl (\frac{f_{\setminus j}^\oplus }{\varepsilon }\biggr ) \,\mathrm {d}\gamma _{\setminus j}^\otimes \biggr ). \end{aligned}$$In particular, we get that \(f^{(c,\varepsilon ,j)}\) has bounded oscillation

$$\begin{aligned} \sup _{y \in {\mathbb {X}}_j} f^{(c,\varepsilon ,j)}(y) - \inf _{y \in {\mathbb {X}}_j} f^{(c,\varepsilon ,j)}(y) < \infty . \end{aligned}$$ -

(ii)

The nonlinear and continuous operator \({{\,\mathrm{F}\,}}^{(c,\varepsilon ,j)}:L^{\infty ,\times }({\mathbb {X}},\gamma )\rightarrow L^p({\mathbb {X}}_j,\gamma _j)\) is compact for \(p \in [1, \infty )\), i.e., it maps bounded sets to relatively compact sets.

Definition 4.3

For any entropy function \(\varphi \in \Gamma _0({\mathbb {R}})\) and \(\varepsilon > 0\), the anisotropic proximity operator \(\text {aprox}_{\varphi ^*}^{\varepsilon }:{\mathbb {R}}\rightarrow {\mathbb {R}}\) is given by

Remark 4.4

This operator is indeed well defined. Furthermore, it is 1-Lipschitz and can be given in analytic form for various conjugate entropy functions, see [47].

Example 4.5

Let us have a closer look at the functions from Example 2.1.

-

(i)

For \(\varphi = \iota _{\{1\}}\) it holds \(\text {aprox}_{\varphi ^*}^{\varepsilon }(p) = p\).

-

(ii)

For \(\varphi =\iota _{[0,\infty )}\), we get \(\text {aprox}_{\varphi ^*}^{\varepsilon }(p) = 0\).

-

(iii)

For \(\varphi (x) = t (x \ln (x) -x+1)\) corresponding to the Kullback–Leibler divergence, we have

$$\begin{aligned} \text {aprox}_{\varphi ^*}^{\varepsilon }(p) = \frac{t}{t+\varepsilon } \, p. \end{aligned}$$ -

(iv)

For \(\varphi (x) = t |x-1|\) belonging to the \({{\,\mathrm{TV}\,}}\) distance, it holds

$$\begin{aligned} \text {aprox}_{\varphi ^*}^{\varepsilon }(p) = \left\{ \begin{array}{rl} -t,&{} p < -t,\\ p, &{} p \in [-t,t],\\ t, &{} p > t. \end{array} \right. \end{aligned}$$

Definition 4.6

The \((c,\varepsilon )\)-transform and the anisotropic proximity operator are concatenated to the j-th Sinkhorn mapping

defined as \(S^{(c,\varepsilon ,\varphi ,j)}(f) ~{:}{=}~ f^{(c,\varepsilon ,\varphi ,j)}\) with

where the operator \(\text {aprox}_{\varphi _j^*}^\varepsilon \) is applied pointwise.

Now, we derive the maximizer of \({\mathcal {D}}_\varepsilon ^\varphi \) defined in (4).

Proposition 4.7

Let \(\ln (\sigma _{\mu _i}) \in L^\infty ({\mathbb {X}}_i,\gamma _i)\), \(i=1,\ldots ,N\).

Then, it holds for all \(j = 1,\dotsc ,N\) and \(f \in L^{\infty ,\times }({\mathbb {X}},\gamma )\) that

with equality if and only if \(f_j = f^{(c,\varepsilon ,\varphi ,j)}\). Furthermore, f is a maximizer of \({\mathcal {D}}_\varepsilon ^\varphi \) if and only if \(f_j = f^{(c,\varepsilon ,\varphi ,j)}\) for all \(j=1,\ldots ,N\).

Proof

We fix \(j \in \{1,\dotsc ,N\}\) and rewrite

By rearranging the definition (7) of the \((c,\varepsilon )\)-transform, we get that

Plugging this into the equation above, we get

The integrand on the right-hand side has the form of the functional in (8), so that we obtain

Since the minimization problem (8) admits a unique solution, strict inequality holds if and only if \(f_j \ne f^{(c,\varepsilon ,\varphi ,j)}\).

By the first part of the proof, the relation \(f_j = f^{(c,\varepsilon ,\varphi ,j)}\) is equivalent to \(0 \in \partial _j {\mathcal {D}}_\varepsilon ^\varphi (f)\), and the last statement follows if we show that

Using \(F_i:L^\infty ({\mathbb {X}}_i, \gamma _i) \rightarrow [-\infty ,\infty )\) as well as \(G:L^{\infty ,\times }({\mathbb {X}},\gamma ) \rightarrow {\mathbb {R}}\) given by

respectively, we can decompose \({\mathcal {D}}_\varepsilon ^\varphi \) as \({\mathcal {D}}_\varepsilon ^\varphi = F^\oplus + G\). As G is Gâteaux-differentiable, we obtain that

. By continuity of G and since \(0\in {{\,\mathrm{dom}\,}}\varphi ^*\), it holds \(0\in {{\,\mathrm{dom}\,}}G \cap {{\,\mathrm{dom}\,}}F^\oplus \), such that the subdifferentials are additive by [23, Ch. 1, Prop. 5.6]. Thus, using

. By continuity of G and since \(0\in {{\,\mathrm{dom}\,}}\varphi ^*\), it holds \(0\in {{\,\mathrm{dom}\,}}G \cap {{\,\mathrm{dom}\,}}F^\oplus \), such that the subdifferentials are additive by [23, Ch. 1, Prop. 5.6]. Thus, using  , we obtain

, we obtain

This concludes the proof. \(\square \)

Inspired by the Sinkhorn iterations for \(\mathrm {MOT}_\varepsilon \) in [20] and \(\mathrm {UOT}_\varepsilon \) in [47], we propose Algorithm 1 for solving \(\mathrm {UMOT}_\varepsilon \) in its dual form (3). By Proposition 4.7, every fixed point of the sequence \((f^{(rN)})_{r \in {\mathbb {N}}}\) generated by Algorithm 1 is a solution of (3).

Remark 4.8

It holds \(\varphi ^*(0)=0\). Hence, we can choose \(f^{(0)}=0\) as an initialization with \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) > -\infty \).

Next, we want to show that the sequence converges. Note that in [20, Thm. 4.7] convergence of the (rescaled) Sinkhorn algorithm was shown by exploiting the property \({\mathcal {D}}_\varepsilon ^\varphi (f_1,\dotsc ,f_N) = {\mathcal {D}}_\varepsilon ^\varphi (f_1 + \lambda _1,\dotsc ,f_N + \lambda _N)\) for all \(\lambda _1,\dotsc ,\lambda _N \in {\mathbb {R}}\) with \(\sum _{i=1}^N \lambda _i = 0\), which holds exclusively in the balanced case where \(\varphi _i^*(q) = q\). Hence, significant modifications of the proof are necessary. Albeit taking several ideas from [47], our approach differs as we cannot rely on the 1-Lipschitz continuity of the \((c,\varepsilon )\)-transform, which only holds for \(N=2\). Instead, we exploit the compactness of the Sinkhorn operator as in [20], for which we need to establish uniform boundedness of the iterates. To this end, we need the following two lemmata.

Lemma 4.9

Let \(f^{(n)} \in L^{\infty , \times } ({\mathbb {X}}, \gamma )\), \(n \in {\mathbb {N}}\), satisfy \(\Vert (f^{(n)})^\oplus \Vert _{\infty } \overset{n\rightarrow \infty }{\longrightarrow } \infty \) and have uniformly bounded oscillations (see Lemma 4.2). Then, it holds \({\mathcal {D}}_\varepsilon ^\varphi (f^{(n)}) \rightarrow -\infty \).

Proof

Since the entropy functions \(\varphi _i\), \(i=1,\ldots ,N\), satisfy \(\varphi _i(1) = 0\), we have \(\varphi _i^*(x) \ge x\) for all \(x \in {\mathbb {R}}\). Hence, we can estimate

Since the \(\mu _i\) are absolutely continuous with respect to \(\gamma _i\) with density \(\sigma _{\mu _i}\), we obtain

Clearly, \((f^{(n)})^\oplus \) has uniformly bounded oscillation. Hence, for \(\Vert (f^{(n)})^\oplus \Vert _{\infty } \rightarrow \infty \) the integrand diverges to \(-\infty \) on a set of positive measure, which yields the assertion. \(\square \)

Lemma 4.10

Let \(\ln (\sigma _{\mu _i}) \in L^\infty ({\mathbb {X}}_i,\gamma _i)\), \(i = 1,\dotsc ,N\), and \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) > -\infty \). For the Sinkhorn sequence \((f^{(n)})_{n\in {\mathbb {N}}} \subset L^{\infty ,\times }({\mathbb {X}},\gamma )\) generated by Algorithm 1, there exists a constant \(M>0\) and a sequence \((\lambda ^{(n)})_{n\in {\mathbb {N}}}\), \(\lambda ^{(n)} \in {\mathbb {R}}^N\), with \(\sum _{i=1}^N \lambda _i^{(n)} = 0\) such that

Proof

For \(i = 1,\dotsc ,N-1\), \(j = 0,\dotsc ,N-1\) and \(r \in {\mathbb {N}}\) set

where \(\lambda _{f,i}\) is defined as in Lemma 4.2. Since \(\text {aprox}\) is 1-Lipschitz, by Lemma 4.2 i) and the definition of the iterates, for \(i \le j\) we obtain that

for some \(M_1<\infty \). In the case \(N>i>j\), we have

such that this case works similarly. Thus, it remains to estimate the last component. By Proposition 4.7, it holds for \(n \in {\mathbb {N}}\) that \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) \le {\mathcal {D}}_\varepsilon ^\varphi (f^{(n)})\). Due to Lemma 4.9, this ensures the existence of \(M_2 > 0\) such that \(\Vert \bigoplus _{i=1}^N f^{(n)}_i \Vert _{\infty } \le M_2\) for all \(n \in {\mathbb {N}}\). Thus,

For \(M ~{:}{=}~ M_2 + (N-1)M_1\) the assertion follows. \(\square \)

Now, we can prove convergence of the Sinkhorn iterates under mild additional assumptions on the entropy functions.

Theorem 4.11

Let \(\ln (\sigma _{\mu _i}) \in L^\infty ({\mathbb {X}}_i,\gamma _i)\), \(i = 1,\dotsc ,N\). Assume that \([0, \infty )\subset {{\,\mathrm{dom}\,}}\varphi _i\) for all \(i=1, \dots , N-1\), and that \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) > -\infty \). Then, the sequence \((f^{(n)})_{n \in {\mathbb {N}}}\) induced by Algorithm 1 satisfies \(\Vert (f^{(n)})^\oplus - {\hat{f}}^\oplus \Vert _p \xrightarrow []{n \rightarrow \infty } 0\) for any optimal solution \({{\hat{f}}} \in L^{\infty ,\times }({\mathbb {X}},\gamma )\) of (3) for every \(p \in [1,\infty )\). Moreover, if \(N-1\) of the \(\varphi _i^*\) are strictly convex, then the optimal \({\hat{f}}\) is unique and \(\Vert f^{(n)} - {\hat{f}} \Vert _p \rightarrow 0\) for every \(p \in [1,\infty )\).

Proof

First, we show that the sequence \((f^{(n)})_{n \in {\mathbb {N}}}\) is uniformly bounded. By Lemma 4.10, there exists a sequence \((\lambda ^{(n)})_{n\in {\mathbb {N}}} \subset {\mathbb {R}}^N\), with \(\sum _{i=1}^N \lambda _i^{(n)} = 0\) and \(M > 0\) such that

for all \(i=1,\ldots ,N\), \(n\in {\mathbb {N}}\). Define \(g_i^{(n)} ~{:}{=}~ f_i^{(n)} + \lambda _i^{(n)}\). To obtain uniform boundedness, it suffices to show that \(\max _i |\lambda _i^{(n)}|\) is uniformly bounded in n. We have for any \(q_i \in {{\,\mathrm{dom}\,}}{\varphi _i^*}\) and \(p_i \in \partial \varphi _i^*(q_i)\) by the first-order convexity condition and since elements of \(\partial \varphi _i^*(q_i)\) are nonnegative, that

Consequently, we obtain by (9) that

Setting \(m_i ~{:}{=}~ \mu _i ({\mathbb {X}}_i)\), \(i=1,\ldots ,N\), and using that the \(\lambda ^{(n)}_i\) sum up to zero, we conclude

First, since \({{\,\mathrm{dom}\,}}\varphi _N^* =(-\infty , (\varphi _N)'_\infty )\) with \((\varphi _N)'_\infty \ge 0\), we can fix some \(q_N\in \mathrm {int}({{\,\mathrm{dom}\,}}\varphi _N^*)\) and some \(p_N\in \partial \varphi _N^*(q_N)\ne \emptyset \). Assume that there exists at least one \(i \in \{1,\ldots ,N\}\) such that \({(\lambda _i^{(n)})_{n \in {\mathbb {N}}}}\) is unbounded. Since the \({\lambda _i^{(n)}}\) sum to zero, there then also exists at least one such \(i \in \{1,\ldots ,N-1\}\). For each of these i, we can extract a subsequence \((n_{k})_k\) such that either \(\lambda _i^{(n_k)} \rightarrow -\infty \) or \(\lambda _i^{(n_k)} \rightarrow \infty \). In the first case, choose some \(p_i > 0\) small enough such that \(m_N p_N > m_i p_i\). By assumption, \(p_i\in \mathrm {int}({{\,\mathrm{dom}\,}}\varphi _i)\), such that there exists \(q_i\in \partial \varphi _i(p_i)\). Since \(\varphi \in \Gamma _0({\mathbb {R}})\), it follows that \(p_i\in \partial \varphi _i^*(q_i)\). Moreover, since

and \(p_i\in {{\,\mathrm{dom}\,}}\varphi _i\), we also have \(q_i\in {{\,\mathrm{dom}\,}}(\varphi _i^*)\). Similarly, if \(\lambda _i^{(n_k)} \rightarrow \infty \), choose some \(p_i<\infty \) such that \(m_N p_N < m_i p_i\) and \(q_i\in {{\,\mathrm{dom}\,}}\varphi _i^*\) with \(p_i\in \partial \varphi _i^*(q_i)\). Now, we have by Proposition 4.7 that \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) \le {\mathcal {D}}_\varepsilon ^\varphi (f^{(n_{k})})\). Since \((f^{(n_k)})^\oplus = (g^{(n_k)})^\oplus \) and the \(g_i^{(n_k)}\), \(i=1,\ldots ,N\), are uniformly bounded, the second summand in \({\mathcal {D}}_\varepsilon ^\varphi (f^{(n_k)})\) remains bounded as \(k\rightarrow \infty \), while the first summand in \({\mathcal {D}}_\varepsilon ^\varphi (f^{(n_k)})\) goes to \(-\infty \) by (10) with the above chosen \((q_i, p_i)\). This is a contradiction to \({\mathcal {D}}_\varepsilon ^\varphi (f^{(0)}) > -\infty \). Thus, there is \({\tilde{M}}>0\) such that for \(i \in \{1,\dotsc ,N\}\) and \(n \in {\mathbb {N}}\) it holds

Hence, \((f^{(n)})_n\) is a uniformly bounded sequence. By Lemma 4.2 ii), we know that the operator \(S^{(c,\varepsilon ,\varphi ,j)} :L^{\infty ,\times }({\mathbb {X}},\gamma )\rightarrow L^p({\mathbb {X}}_j,\gamma _j)\) is compact for every j and \(p \in [1,\infty )\). Consequently, we get existence of a converging subsequence \((f^{(n_k)})_{k \in {\mathbb {N}}}\) in \(L^{p,\times }({\mathbb {X}},\gamma )\). As \(f^{(n_k)}\) is uniformly bounded in \(L^{\infty ,\times }({\mathbb {X}},\gamma )\) and since \(\infty \)-norm balls are closed under \(L^p\) convergence, we get that the limit \({{\hat{f}}}\) additionally satisfies \({{\hat{f}}} \in L^{\infty ,\times }({\mathbb {X}},\gamma )\).

Now, we prove optimality of \({\hat{f}}\). Note that there is \(j \in \{0,\dotsc ,N-1\}\) so that \(n_k \equiv j \mod N\) for infinitely many \(k \in {\mathbb {N}}\). Without loss of generality, assume \(j = 0\). Then, we restrict \((n_k)_k\) to \(n_k \equiv 0 \mod N\) for all \(k \in {\mathbb {N}}\). Using the Lipschitz continuity of \(\ln \) and \(\exp \) on compact sets and the 1-Lipschitz continuity of \(\text {aprox}\) together with the uniform boundedness of the sequence \((f^{(n)})_{n \in {\mathbb {N}}}\), we obtain that

for every \(j=1,\ldots ,N\), where C stands for some unspecified, possibly changing constant. In particular, it holds

As all \(\varphi _i^*\) are lsc, the dominated convergence theorem implies that \({\mathcal {D}}_\varepsilon ^\varphi (f^{(n_j)}) \rightarrow {\mathcal {D}}_\varepsilon ^\varphi ({{\tilde{f}}})\) for any a.e. convergent subsequence \((f^{(n_j)})_{j \in {\mathbb {N}}}\) of \((f^{(n)})_{n \in {\mathbb {N}}}\) with limit \({{\tilde{f}}}\). Due to this continuity property and since \(({\mathcal {D}}_\varepsilon ^\varphi (f^{(n)}))_n\) is a convergent sequence, we get

Consequently, Proposition 4.7 implies \({\hat{f}}_1 = {\hat{f}}^{(c,\varepsilon ,\varphi ,1)}\). In the same way, we obtain

Due to \({\hat{f}}_1 = {\hat{f}}^{(c,\varepsilon ,\varphi ,1)}\), this gives \({\hat{f}}_2 = {\hat{f}}^{(c,\varepsilon ,\varphi ,2)}\). Proceeding iteratively this way, we obtain that \({\hat{f}}_i = {\hat{f}}^{(c,\varepsilon ,\varphi ,i)}\) for all \(i=1, \dots , N\). Hence, Proposition 4.7 implies that \({\hat{f}}\) is an optimal dual vector.

If \(N-1\) of the \(\varphi ^*\) are strictly convex, then the maximizer in (3) is unique and \((f^{(n)})_{n \in {\mathbb {N}}}\) converges. Otherwise, we obtain convergence of \(((f^{(n_j)})^\oplus )_{j \in {\mathbb {N}}}\) to \({\hat{f}}^\oplus \) in \(L^p({\mathbb {X}},\gamma ^\otimes )\). Since \({\hat{f}}^\oplus \) is the same for all possible limit points \({\hat{f}}\), convergence of \(((f^{(n)})^\oplus )_{n \in {\mathbb {N}}}\) follows. \(\square \)

Remark 4.12

(Relation to UOT in [47]) For entropy functions satisfying the assumptions of Theorem 4.11, our result can be seen as a generalization of the UOT result in Séjourné et al. [47] to the multi-marginal case and non-Lipschitz costs. Notably, the result by Séjourné et al. covers some additional entropy functions.

Finally, we want to remark that all results of this section also hold true if we do not assume that the spaces \({\mathbb {X}}_i\), \(i=1,\ldots ,N\), are compact as long as the cost function c remains bounded.

5 Barycenters and Tree-Structured Costs

In this section, we are interested in the computation of \(\mathrm {UOT}_\varepsilon \) barycenters and their relation to \(\mathrm {UMOT}_\varepsilon \) with tree-structured costs. An undirected graph \({{\,\mathrm{{\mathcal {G}}}\,}}= ({{\,\mathrm{{\mathcal {V}}}\,}},{{\,\mathrm{{\mathcal {E}}}\,}})\) with N nodes \({{\,\mathrm{{\mathcal {V}}}\,}}= \{1,\ldots ,N\}\) and edges \({{\,\mathrm{{\mathcal {E}}}\,}}\) is a tree if it is acyclic and connected. We write \(e = (j,k)\) if e joins the nodes j and k, where we agree that \(j < k\) in order to count edges only once. Let \(\deg (i)\) denote the number of edges in node \(i \in {\mathcal {V}}\). A node \(i \in {\mathcal {V}}\) is called a leaf if \(\deg (i) = 1\). By \({\mathcal {N}}_j\), we denote the set of neighbors of node j. For a given tree, let \(t = (t_e)_{e \in {{\,\mathrm{{\mathcal {E}}}\,}}}\) with \(t_{e} \in [0,1]\) and \(\sum _{e \in {{\,\mathrm{{\mathcal {E}}}\,}}} t_e = 1\). A cost function \(c_t\) is said to be tree-structured, if it is of the form

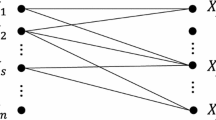

In Sect. 5.1, we consider the case where the tree is star-shaped, i.e., \({{\,\mathrm{{\mathcal {E}}}\,}}=\{ (1, N), \dots , (N-1, N) \}\), see Fig. 1 left. General tree-structured costs as, for example, those in Fig. 1 middle and right are addressed in Sect. 5.2. We restrict our attention to \({\mathbb {X}}_i\), \(i=1,\ldots ,N\), which are either finite or compact subsets of \({\mathbb {R}}^{d_i}\). Moreover, all references measures \(\gamma _i\), \(i=1,\ldots ,N\), are counting measures, respectively, Lebesgue measures, so that we regularize exclusively with the entropy from Rem. 3.2 ii) or iii), respectively.

5.1 Barycenters

For the barycenter problem with respect to \(\mathrm {UOT}_\varepsilon \), we introduce an additional finite set \({\mathbb {Y}}\) (or compact set \({\mathbb {Y}}\subset {\mathbb {R}}^{d_{N+1}}\)) and use for \(\xi \in {\mathcal {M}}^+({\mathbb {Y}})\) the entropy function

from Example 2.1 i). Let \(c_i \in C({\mathbb {X}}_i \times {\mathbb {Y}})\), \(i=1,\ldots ,N\), be nonnegative cost functions. The corresponding tree is star-shaped, i.e., given by \({{\,\mathrm{{\mathcal {V}}}\,}}=\{ 1, \dots , N+1\}\) and \({{\,\mathrm{{\mathcal {E}}}\,}}=\{ (1, N+1), \dots , (N,N+1) \}.\) To emphasize the dependence of \({{\,\mathrm{UOT}\,}}_\varepsilon \) on these functions, we write \(\mathrm {UOT}_\varepsilon ^{(c_i,\varphi _i,\psi )}\). We use an analogous notation for \({{\,\mathrm{UMOT}\,}}_\varepsilon \). Let

be the \((N-1)\)-dimensional probability simplex. For given barycentric coordinates \(t \in \Delta _N\), the barycenter \(\hat{\xi } \in {\mathcal {M}}^+({\mathbb {Y}})\) of \(\mu \) with respect to \(\mathrm {UOT}_{\varepsilon }\) is given by

Note that by the choice of \(\psi \) the barycentric marginal \(\xi \) is exactly matched. By Proposition 3.3, the involved \({{\,\mathrm{UOT}\,}}\) problems have unique solutions. Moreover, it was shown in [12, Sec. 5.2] that a unique barycenter exists due to the regularization. However, these barycenters do not correspond to a shortest path since \({{\,\mathrm{UOT}\,}}_\varepsilon \) is not a metric.

To establish a relation with the multi-marginal setting, we exploit that the optimal plan of the multi-marginal problem with cost function

is readily determined by its marginals. We need the following auxiliary lemma.

Lemma 5.1

Let \(\pi \in {\mathcal {M}}^+({\mathbb {X}}\times {\mathbb {Y}})\) be absolutely continuous with respect to Lebesgue measure, respectively, counting measure, on \({\mathbb {X}}\times {\mathbb {Y}}\) with density \(\sigma _\pi \). If there exists \(a_i \in L^\infty ({\mathbb {X}}_i \times {\mathbb {Y}})\), \(i = 1,\ldots ,N\), such that \(\sigma _{\pi } = \prod _{i=1}^N a_i\), then \(\pi \) is related to its marginals \(\pi _i ~{:}{=}~ {P_{{\mathbb {X}}_i \times {\mathbb {Y}}}}_\# \pi \), \(i = 1,\ldots ,N\), and \(\pi _{N+1} ~{:}{=}~ {P_{{\mathbb {Y}}}}_\# \pi \) via

where \(\sigma _{\pi _i}\) denotes the density of \(\pi _i\) with respect to the Lebesgue, respectively, counting measure, on \({\mathbb {X}}_i \times {\mathbb {Y}}\).

Proof

By abuse of notation, we denote the Lebesgue measure, respectively, counting measure, by \(\lambda \). The underlying space becomes clear from the context. By assumption, the marginal densities of \(\pi \) read

\(j = 1,\dotsc ,N\). Since \(P_{{\mathbb {Y}}\#} \pi = \pi _{N+1}\), we have \(\sigma _{\pi _{N+1}} = \prod _{i = 1}^N \int _{{\mathbb {X}}_i} a_i (x_i, \cdot ) \,\mathrm {d}\lambda (x_i)\), which finally yields

Now, we can draw the aforementioned connection between the barycenter problem (13) and \({{\,\mathrm{UMOT}\,}}_\varepsilon ^{(c_t,t\varphi ,\varphi _{N+1})}\), where

see Example 2.1ii). Due to the special form of this entropy, the \((N+1)\)th input of \({{\,\mathrm{UMOT}\,}}_\varepsilon ^{(c_t,t\varphi ,\varphi _{N+1})}\) has no effect on the functional. To emphasize this, we use the notation

The next theorem establishes the relation between the barycenter problem and this multi-marginal optimal transport problem.

Theorem 5.2

For \(i = 1,\ldots ,N\), let \(\mu _i \in {\mathcal {M}}^+({\mathbb {X}}_i)\) and cost functions \(c_i\in C({\mathbb {X}}_i \times {\mathbb {Y}})\) be given. Define \(c_t \in C({\mathbb {X}}\times {\mathbb {Y}})\) by (14), \(\psi \) by (12) and \(\varphi _{N+1}\) by (15). If \(\hat{\pi }\) is optimal for (16) then \(P_{{\mathbb {Y}}\#} \hat{\pi }\) minimizes the functional \(F(\xi )\) given by

Proof

Again, let \(\lambda \) denote the Lebesgue or counting measure, respectively. Set

Estimating in both directions, we show that

1. Fix \(\xi \in {\mathcal {M}}^+({\mathbb {Y}})\) such that optimal plans \({\hat{\pi }}^{(i)} \in {\mathcal {M}}^+({\mathbb {X}}_i \times {\mathbb {Y}})\) for \({{\,\mathrm{UOT}\,}}_\varepsilon ^{(c_i,\varphi _i,\psi )}(\mu _i,\xi )\), \(i = 1,\dotsc ,N\), exist. Then, we define

which yields \(P_{{\mathbb {X}}_i \times {\mathbb {Y}}\#} \pi = {\hat{\pi }}^{(i)}\) and \(P_{{\mathbb {Y}}\#} \pi = \xi \). Consequently, we get

Using the definition of \(\pi \), we obtain

Incorporating \(t_i D_{\varphi _i} = D_{t_i \varphi _i}\), we obtain

Thus, minimizing the right-hand side over all \(\pi \in {\mathcal {M}}^+({\mathbb {X}}\times {\mathbb {Y}})\), we get

such that minimizing the left-hand side over all \(\xi \in {\mathcal {M}}^+({\mathbb {Y}})\) yields the desired estimate.

2. Next, we show the converse estimate. Let \({\hat{\pi }} \in {\mathcal {M}}^+({\mathbb {X}}\times {\mathbb {Y}})\) be the optimal plan and \(({{\hat{f}}}, {{\hat{g}}}) \in L^{\infty ,\times }({\mathbb {X}}) \times L^\infty ({\mathbb {Y}})\) be optimal dual potentials for \({{\,\mathrm{UMOT}\,}}_\varepsilon ^{ (c_t,t\varphi ,\varphi _{N+1})}\left( \mu ,*\right) \). For \(i = 1,\dotsc ,N\), define \(a_i \in L^\infty ({\mathbb {X}}_i \times {\mathbb {Y}})\) by

The definition of \(c_t\) together with (5) yields

Then, Lemma 5.1 implies

where \(\hat{\pi }_i ~{:}{=}~ {P_{{\mathbb {X}}_i \times {\mathbb {Y}}}}_\# \hat{\pi }\), \(i = 1,\ldots ,N\), and \(\xi ~{:}{=}~ P_{{\mathbb {Y}}\#}{\hat{\pi }}\). Similarly as for the previous considerations, this results in

Minimizing the right-hand side over all \(\xi \in {\mathcal {M}}^+({\mathbb {Y}})\) yields

and thus the desired equality. As a direct consequence, we get \(P_{{\mathbb {Y}}\#} {\hat{\pi }}\) minimizes (17). This concludes the proof. \(\square \)

Remark 5.3

The previous result directly generalizes to \({{\,\mathrm{UMOT}\,}}\) problems that also regularize the marginal on \({\mathbb {Y}}\). More precisely, let \(\mu _{N+1} \in {\mathcal {M}}^+({\mathbb {Y}})\) and \(\varphi _{N+1} \in \Gamma _0({\mathbb {R}})\) be an arbitrary entropy function. If \(\hat{\pi }\) is the optimal plan for \(\mathrm {UMOT}_\varepsilon ^{(c_t,t\varphi ,\varphi _{N+1})}\left( \mu ,\mu _{N+1}\right) \), then \(P_{{\mathbb {Y}}\#} \hat{\pi }\) solves

Remark 5.4

(Comparison of formulations (13) and (16)) The proof of Theorem 5.2 reveals that the barycenter \({\hat{\xi }}\) in (13) is “over-regularized,” since it appears as the marginal measure of \(\pi ^{(i)}\) in each of the N entropy terms \(E(\pi ^{(i)})\). On the other hand, the proposed multi-marginal approach does not involve these \(N-1\) superfluous regularizers. This ensures that the minimizer \({\hat{\xi }}\) is less “blurred” compared to the original \({{\,\mathrm{UOT}\,}}_\varepsilon \) barycenter, which is favorable for most applications. A numerical illustration of this behavior is given in Sect. 6. We will see in Subsect. 5.3 that for tree-structured costs the computation of optimal transport plans for the multi-marginal case has the same complexity per iteration as for the “barycentric” problems.

Furthermore, the computation of barycenters with an additional penalty term as outlined in Rem. 5.3 is possible with the Sinkhorn-type algorithm detailed in Sect. 5.3. In contrast, we are unaware of an efficient algorithm to solve the corresponding pairwise coupled formulation.

On the other hand, we only obtain the equivalence of the “pairwise coupled” formulation (13) and the multi-marginal approach for the choices made in (12) and (15). These enforce that all marginals of the plans in (13) coincide with the barycenter, which is not necessary in general. Although this generalization comes at the cost of a nested optimization problem, the pairwise coupled formulation is thus more flexible than \({{\,\mathrm{UMOT}\,}}\).

Remark 5.5

(Barycenters and MOT) By Theorem 5.2, formula (17) with \(\varepsilon = 0\) and \(\varphi _i = \iota _{\{1\}}\), \(i=1,\ldots ,N\), our MOT formulation with the cost

is equivalent to the OT barycenter problem. There is another reformulation of the OT barycenter problem via the McCann interpolation using the cost function

if a unique minimizer exists, see [10]. To the best of our knowledge, there is no similar reformulation for our setting.

5.2 General Tree-Structured Costs

In the above barycenter problem, we have considered \({{\,\mathrm{UMOT}\,}}_\varepsilon \) with a tree-structured cost function, where the tree was just star-shaped. In the rest of this section, we briefly discuss an extension of the \({{\,\mathrm{UMOT}\,}}_\varepsilon \) problem to costs of the form (11), where \({{\,\mathrm{{\mathcal {G}}}\,}}=({{\,\mathrm{{\mathcal {V}}}\,}}, {{\,\mathrm{{\mathcal {E}}}\,}})\) is a general tree graph with cost functions \(c_{(j,k)} \in C({\mathbb {X}}_j\times {\mathbb {X}}_k)\) for all \((j, k)\in {{\,\mathrm{{\mathcal {E}}}\,}}\). For the balanced case, this topic was addressed in [32, Prop. 4.2].

For a disjoint decomposition

where V contains only leaves, and measures \(\mu _v \in {\mathcal {M}}^+({\mathbb {X}}_v)\), \(v \in V\), we want to find measures \(\mu _u \in {\mathcal {M}}^+({\mathbb {X}}_u)\), \(u \in U\), that solve the problem

Again, we assume that the unknown marginals \(\mu _u\) are exactly matched, i.e., \(\varphi _u = \iota _{\{1\}}\), \(u \in U\).

Example 5.6

For the barycenter problem (13), we have \(V = \{1,\ldots ,N\}\) and the tree is star-shaped, meaning that

see Fig. 1 left. In Fig. 1 middle, we have an H-shaped tree with \(N=7\), edge set \({\mathcal {E}} = \{(1,2),(2,3),(2,4),(4,6), (5,6),(6,7)\}\), and we consider problem (18) with \(V = \{1,3,5,7\}\). Finally, Fig. 1 right shows a line-shaped tree with \(N=4\), edge set \({\mathcal {E}} = \{(1,2),(2,3),(3,4)\}\) and \(V = \{1,4\}\). This graph is related to a so-called multiple barycenter problem, and its solution was discussed for the balanced case, e.g., in [9].

In general, it is unclear how to solve problem (18) using Sinkhorn iterations. Therefore, we propose to solve a related multi-marginal problem

where again \(\varphi _u ~{:}{=}~ \iota _{[0,\infty )}\) for all \(u \in U\) and \(t_v = t_{e}\) if e in \({\mathcal {E}}\) joins v with some other node (indeed well defined for leaves). Then, we can prove in analogy to Lemma 5.1 that the optimal plan \({\hat{\pi }}\) is related to its marginals \({\hat{\pi }}_e\) and \({\hat{\mu }}_u ~{:}{=}~ P_{{\mathbb {X}}_u\#} {\hat{\pi }}\) by

Furthermore, we can show similarly as in the proof of Theorem 5.2 the following corollary.

Corollary 5.7

Under the above assumptions, if \({\hat{\pi }}\) is the optimal plan in (19), then the \({\hat{\mu }}_u= P_{{\mathbb {X}}_u\#} {\hat{\pi }}\), \(u\in U\), solve

5.3 Efficient Sinkhorn Iterations for Tree-Structured Costs

Throughout this section, let \({\mathbb {X}}_1,\dotsc ,{\mathbb {X}}_N\) be finite subsets of \({\mathbb {R}}^d\) of size \(M_i ~{:}{=}~ |{\mathbb {X}}_i |> 0\), \(i = 1,\ldots ,N\). Furthermore, let \(\mu _i \in {\mathcal {M}}^+({\mathbb {X}}_i)\) be positive measures that are identified with vectors in \({\mathbb {R}}_+^{M_i}\). Hence, the reference measures \(\gamma _i\) are chosen as the counting measure. Recall that the Sinkhorn mapping for a cost function \(c \in {\mathbb {R}}_{\ge 0}^{M_1 \times \dotsc \times M_N}\) is the concatenation of the \(\text {aprox}\) operator and the multi-marginal \((c,\varepsilon )\)-transform. As the former is applied pointwise, its computational cost is negligible. Hence, it suffices to discuss the efficient implementation of the multi-marginal \((c,\varepsilon )\)-transform. For vectors and matrices, we denote pointwise multiplication by \(\odot \) and pointwise division by \(\oslash \). Set

For efficiency reasons, we perform computations in the \(\exp \)-domain, i.e., instead of the Sinkhorn iterates \((f^{(n)})_{n \in {\mathbb {N}}}\) in Algorithm 1 we consider

Convergence of Algorithm 1 implies convergence of \(u^{(n)}\) to some \({{\hat{u}}} \in {\mathbb {R}}_+^{M_1 \times \dotsc \times M_N}\). By Proposition 3.4, the optimal plan \(\hat{\pi }\) is given by \(\hat{\pi } = K \odot {{\hat{u}}}\). For \(n \equiv j \mod N\) the Sinkhorn updates in the \(\exp \)-domain can be written as

where division has to be understood componentwise. Note that in this context \(P_{{\mathbb {X}}_j\#}(\cdot )\) corresponds to summing over all but the jth dimension. Although the involved expression \(\exp ( -\frac{1}{\varepsilon } \text {aprox}_{\varphi _i^*}^\varepsilon (-\varepsilon \ln (\cdot )))\) appears to be complicated, it simplifies for all the entropies from Example 4.5, see also [12].

As recently discussed for the balanced case in [32], multi-marginal Sinkhorn iterations can be computed efficiently if the cost function decouples according to a tree, see also [2] for a wider class of cost functions. In this section, we generalize the approach for tree-structured costs to the unbalanced setting. As in the balanced case, computing the projections \(P_{{\mathbb {X}}_j\#}(K \odot u^{(n)})\), \(j = 1,\dotsc ,N\), is the computational bottleneck of the Sinkhorn algorithm. Fortunately, the Sinkhorn iterations reduce to standard matrix-vector multiplications in our particular setting.

Consider a tree \({{\,\mathrm{{\mathcal {G}}}\,}}= ({{\,\mathrm{{\mathcal {V}}}\,}},{{\,\mathrm{{\mathcal {E}}}\,}})\) as in Subsect. 5.2 and corresponding cost functions

Then, it holds \(K_{i_1, \dots , i_N} = \prod _{(j, k)\in {{\,\mathrm{{\mathcal {E}}}\,}}}K^{(j, k)}_{i_j, i_k}\), where

The next result, c.f. [32, Thm. 3.2], is the main ingredient for an efficient implementation of Algorithm 1 for solving \(\mathrm {UMOT}_{\varepsilon }\) with tree-structured cost functions.

Theorem 5.8

The projection onto the jth marginal of \(K \odot u\) is given by

Here, the \(\alpha _{(j,k)}\) are computed recursively for \((j,k) \in {\bar{{{\,\mathrm{{\mathcal {E}}}\,}}}} ~{:}{=}~ \{(v,w)| (v,w) \in {\mathcal {E}} \text { or } (w,v) \in {\mathcal {E}} \}\) starting from the leaves by

with the usual convention that the empty product is 1.

First, we traverse the tree \({{\,\mathrm{{\mathcal {G}}}\,}}\) by a pre-order depth-first search with respect to a root \(v_0\). This results in a strict ordering of the nodes, which is encoded in the list \({{\,\mathrm{{\mathcal {V}}}\,}}_{\rightarrow }\). Every node \(k \in V\) except the root has a unique parent, denoted by p(k). We denote by \({{\,\mathrm{{\mathcal {V}}}\,}}_{\leftarrow }\) the reversed list \({{\,\mathrm{{\mathcal {V}}}\,}}_{\rightarrow }\). Note that the order in which we update the vectors \((\alpha _{(j,k)})_{(j,k) \in \bar{{{\,\mathrm{{\mathcal {E}}}\,}}}}\) and potentials \((u_j)_{j \in {\mathcal {V}}}\) in Algorithm 2 fits to the underlying recursion in (20). Furthermore, the computational complexity of Algorithm 2 is linear in N. More precisely, \(2(N-1)\) matrix-vector multiplications are performed to update every \(u_j\) once, which is in alignment with the two-marginal case. In particular, solving N decoupled problems has the same complexity per iteration with the disadvantage that the marginals of the obtained transport plans do not necessarily fit to each other. Although we discussed Algorithm 2 mainly for computing barycenters, it can also be applied without free marginals, see Sect. 6.3 for a numerical example.

6 Numerical Examples

In this section, we present three numerical examples, where the first two confirm our theoretical findings from Section 5. Part of our Python implementation is built upon the POT toolbox [27].

From now on, all measures are of the form \(\sum _{k=1}^m \mu ^k \delta _{x_k}\) with support points \(x_k\in {\mathbb {R}}^d\), \(k=1,\ldots ,m\). We always use the cost functions \(c(x, y) = \left\| x-y\right\| ^2\) with corresponding cost matrices \(c = c(x_j,x_k)_{j,k=1}^m\). All reference measures are the counting measure, see Remark 3.2iii), i.e., we exclusively deal with entropy regularization.

6.1 Barycenters of 1D Gaussians

We start with computing the barycenter for two simple measures \(\mu _1\), \(\mu _2\), which are produced by sampling truncated normal distributions \({\mathcal {N}}(0.2,0.05)\) and \(2{\mathcal {N}}(0.8,0.08)\) on [0, 1] on a uniform grid. Clearly, this choice makes an unbalanced approach necessary. As before, we denote the discrete spaces and those of the barycenter by \({\mathbb {X}}_1 = {\mathbb {X}}_2 = {\mathbb {Y}}\). To approximate the marginals, we use the Shannon entropy functions \(\varphi = \varphi _1 = \varphi _2\) so that \(D_\varphi = {{\,\mathrm{KL}\,}}\). First, we solve the barycenter problem (13), which reads for \(t_1 = 1 - t\) and \(t_2 = t \in (0,1)\) as

The resulting barycenter for \(\varepsilon =0.005\) is computed using the Sinkhorn iterations described in [12, Sec. 5.2] and is shown in Fig. 2 for different \(t \in (0,1)\) together with the marginals \({\tilde{\mu }}_i ~{:}{=}~ P_{{\mathbb {X}}_{i\#}} {\hat{\pi }}^{(i)}\), \(i=1,2\).

We compare these barycenters with the marginal \(P_{{\mathbb {Y}}\#} {\hat{\pi }}\) of the corresponding optimal plan \({\hat{\pi }}\) for the multi-marginal problem

computed by Algorithm 2. The resulting marginals are provided in Fig. 2. As explained in Remark 5.4, the barycenters \({\hat{\xi }}\) appear smoothed compared to \(P_{{\mathbb {Y}}\#} {\hat{\pi }}\). As already mentioned, we do not have relations with shortest paths due to the missing metric structure.

Comparison between the unbalanced barycenter \({\hat{\xi }}\) of two Gaussians (left) and the marginal \(P_{{\mathbb {Y}}\#} {\hat{\pi }}\) of the optimal transport plan for the corresponding multi-marginal problems (right) with different \(t \in (0,1)\). The input measures \(\mu _i\), \(i=1,2\) and the corresponding marginals \({\tilde{\mu }}_i\), \(i=1,2\), of the respective transport plans are shown as well

6.2 H-tree Shaped Cost Functions

Next, we turn our attention to the “interpolation” of four gray value images of size \(100 \times 100\) considered as probability measures \(\mu _v\), \(v = 1,3,5,7\), along a tree that is H-shaped, see Fig. 1. The images are depicted in the four corners of Fig. 3a. In this example, the measures corresponding to the inner nodes with \(u = 2,4,6\) have to be computed. For this purpose, we choose \(\varepsilon =4\cdot 10^{-4}\) and \(D_{\varphi _v}(\cdot , \mu _v)=0.05{{\,\mathrm{KL}\,}}(\cdot , \mu _v)\).

Comparison with \({{\,\mathrm{MOT}\,}}_\varepsilon \) As the measures have the same mass, we can compare our proposed \({{\,\mathrm{UMOT}\,}}_\varepsilon \) approach with the balanced \({{\,\mathrm{MOT}\,}}_\varepsilon \) method. Equal costs c as well as equal weights are assigned to the edges. Note that the total cost decouples according to the H-shaped tree. The obtained results for \({{\,\mathrm{MOT}\,}}_\varepsilon \) and \({{\,\mathrm{UMOT}\,}}_\varepsilon \) are depicted in Figs. 3a and b, respectively. For \({{\,\mathrm{UMOT}\,}}_\varepsilon \), the corners contain the marginals \(P_v {\hat{\pi }} = P_{{\mathbb {X}}_v\#} {\hat{\pi }}\) instead of the given measures. As the mass in the different image regions is different, mass is transported between them in the \({{\,\mathrm{MOT}\,}}_\varepsilon \) interpolation. In contrast, only a minimal amount of mass is transported between the images regions for \({{\,\mathrm{UMOT}\,}}_\varepsilon \), where the mass difference is compensated by only approximately matching the prescribed marginals. This behavior can be controlled by adjusting the weights in the \(\varphi \)-divergences.

While \({{\,\mathrm{MOT}\,}}\) becomes numerically unstable for smaller \(\varepsilon \) than \(4\cdot 10^{-4}\), the \({{\,\mathrm{UMOT}\,}}\) problem remains solvable for smaller values of \(\varepsilon \). In our numerical experiments, which are not reported here, this led to less blurred images.

Different “interpolations” between the four given images at the corners of Fig. 3a. Top: H-tree structured cost function. Bottom: Star-shaped decomposition approach. A common color coding is used for all images

Comparison with Multiple Star Graph Barycenters Next, we provide a heuristic comparison with an alternative interpolation approach. Instead of computing three interpolating measures simultaneously, we solve three individual barycenter problems based on star-shaped graphs with leaves corresponding to \(\mu _v\), \(v = 1,3,5,7\). This is done both with the \({{\,\mathrm{UOT}\,}}_\varepsilon \) and corresponding \({{\,\mathrm{UMOT}\,}}_\varepsilon \) approach. More precisely, we solve (13) and (16) three times with weights \(1/12\cdot (5, 5, 1, 1)\), \(1/4\cdot (1, 1, 1, 1)\) and \(1/12\cdot (1, 1, 5, 5)\), respectively. These weights have been derived from the solution of the balanced H-graph problem with Dirac measures at the leaves, which is easy to obtain from a linear system corresponding to the first-order optimality conditions. The results are provided in Figs. 3c and d. Noteworthy, both interpolations have an even less pronounced mass transfer between the different image structures. However, the computed images look considerably smoother than their counterparts in Fig. 3b. Again, as expected, we observe that the \({{\,\mathrm{UOT}\,}}_\varepsilon \) results in Fig. 3d are more blurred than the corresponding \({{\,\mathrm{UMOT}\,}}_\varepsilon \) interpolations in Fig. 3c. As they are all marginals of multi-marginal transport plans, the images in Figs. 3a and b have the same mass. In contrast, the images in Figs. 3c and d do not necessarily have the same mass as they are marginals of different transport plans. Hence, depending on the application, one or the other approach might be preferable.

Note that in order to compute the multiplications with the matrices K in Algorithm 2, we exploit the fact that the Gaussian kernel convolution K is separable in the two spatial dimensions and can be performed over the rows and columns of the images one after another, such that we never actually store the matrix \(K\in {\mathbb {R}}^{10000\times 10000}\). Consequently, we cannot use stabilizing absorption iterations as proposed in [12].

6.3 Particle Tracking and Transfer Operators

Top: Clean dataset. Middle upper: Added noise to get the measures \(\mu _i\), \(i=1,\ldots ,5\). Middle lower: Marginals \(P_{{\mathbb {X}}_{i\#}} {\hat{\pi }}\), \(i=1,\ldots ,5\), of optimal transport plan \({\hat{\pi }}\) for \({{\,\mathrm{UMOT}\,}}_\varepsilon (\mu )\). Bottom: Remaining noise and artifacts (marginals – clean)

Zoom into marked patch in Fig. 4. Note that the brightness scale differs from Fig. 4 for better contrast. Left: Ground truth \(\mu _{ \mathrm {clean},5 }\). Middle left: Noisy \(\mu _{ 5 }\). Middle right: Transferred image \(K_{{{\,\mathrm{UMOT}\,}}}^\mathrm {T}\, \mu _{\mathrm {clean},1}\). Right: Transferred image \(K_{{{\,\mathrm{UOT}\,}}}^\mathrm {T}\, \mu _{\mathrm {clean},1}\)

Finally, we investigate whether computing a single joint \({{\,\mathrm{UMOT}\,}}_\varepsilon \) solution can be beneficial compared to computing several \({{\,\mathrm{UOT}\,}}_\varepsilon \) plans sequentially, e.g., for particle tracking. To this end, we create a time series of five images. Each image has size \(100\times 100\) pixels and consists of “dots” by sampling uniform noise, setting all values above a small threshold to zero, and applying a Gaussian filter with small variance. One time step corresponds to shifting the image by two pixels downward filling with the small constant background value from the top, which results in images \(\mu _{\mathrm {clean},i}\), \(i=1, \cdots ,5\). We modify this time series of five images by adding dots randomly for every time step in a similar manner. Consequently, the data consist of a drift component and some random dots popping up and disappearing again. The resulting data \(\mu _i\), \(i=1, \cdots ,5\), are shown in Fig. 4. As we want to extract only the drift component, we apply \({{\,\mathrm{UMOT}\,}}_\varepsilon (\mu )\) for a line-tree-structured cost function with the same costs \(c_i\) along the path, regularization parameter \(\varepsilon = 10^{-4}\), and \(D_{\varphi _i}(\cdot ,\mu _i)=7\cdot 10^{-4} {{\,\mathrm{TV}\,}}(\cdot , \mu _i)\), \(i=1,\cdots ,5\). We expect that the hard thresholding of the corresponding \(\text {aprox}\)-operators for \({{\,\mathrm{TV}\,}}(\cdot , \mu _i)\), \(i=1,\cdots ,5\), is particularly well suited for removing the noise in our example, see also [8, 26]. The resulting marginals of the optimal transport plan \(P_{{\mathbb {X}}_{i\#}} {\hat{\pi }}\) are shown in Fig. 4. Indeed, the method manages to remove most of the noise dots.

Transfer Operators For our next comparison, we use OT-related transfer operators, which have recently been discussed in [36]. We abuse notation in this section by sometimes identifying empirical measures with their vectors or matrices of weights for simplicity. In a nutshell, assuming a discrete, two-marginal setting of probability measures with optimal transport matrix \({\hat{\pi }}\) and left marginal vector \(\mu _\ell = (\mu _\ell ^k)_{k=1}^m\), we can define a row-stochastic transition matrix K by setting

This concept allows us to propagate other measures than \(\mu _\ell \) forward in time by multiplication with \(K^\mathrm {T}\). Note that there is a continuous analog in terms of Frobenius–Perron operators, Markov kernels, and the disintegration theorem; see [6, 7, 30, 35, 38] for details.

Now, we compute the marginal \({\hat{\pi }}_{1,5} ~{:}{=}~ P_{{\mathbb {X}}_1 \times {\mathbb {X}}_5\#} {\hat{\pi }}\) of the optimal \({{\,\mathrm{UMOT}\,}}_\varepsilon (\mu )\) transport plan \({\hat{\pi }}\). Using the marginal \({\tilde{\mu }}_1 ~{:}{=}~ P_{{\mathbb {X}}_{1\#}} {\hat{\pi }}_{1,5} = P_{{\mathbb {X}}_{1\#}} {\hat{\pi }}\), we get the transfer operator

Then, we propagate the first clean image \(\mu _{\mathrm {clean},1}\) by this transfer operator, i.e., we compute \(K_{{{\,\mathrm{UMOT}\,}}}^\mathrm {T}\, \mu _{\mathrm {clean},1}\). The result is shown in Fig. 5.

For comparison, we also compute \(N-1\) successive \({{\,\mathrm{UOT}\,}}_\varepsilon (\mu _i,\mu _{i+1})\) plans \({\hat{\pi }}^{(i)}\), \(i=1, \cdots , 4\). Denoting the marginals by \({\tilde{\mu }}_i ~{:}{=}~ P_{{\mathbb {X}}_{i\#}} {\hat{\pi }}^{(i)}\), \(i=1, \cdots , 4\), we consider the transfer kernel

Then, we transfer the clean first image by this operator, i.e., we compute \(K_{{{\,\mathrm{UOT}\,}}}^\mathrm {T}\, \mu _{\mathrm {clean},1}\). The obtained results are shown in Fig. 5. Note that similarly as described in the previous subsection, the computations can be carried out using separable convolutions without ever storing the large matrix K.

As we wanted to extract the drift behavior using only the noisy images, the propagated images should be compared to \(\mu _{\mathrm {clean},5}\), i.e., the last image of the first row in Fig. 4, which is just a shifted version of the first image. In some sense, we can interpret this image as the propagation using the “true” transfer operator. There are considerably less artifacts visible in the \({{\,\mathrm{UMOT}\,}}_\varepsilon \) propagated image compared to the \({{\,\mathrm{UOT}\,}}_\varepsilon \) version. This is particularly pronounced in the middle left part of the images. As an error measure, we also computed the squared Euclidean distances between the propagated images and the ground truth, which are 2.98 and 6.48 for the \({{\,\mathrm{UMOT}\,}}_\varepsilon \) and \({{\,\mathrm{UOT}\,}}_\varepsilon \) version, respectively.

From an intuitive point of view, the results are not surprising. If we are only provided with a single pair of images from the sequence, it is much harder to judge which points correspond to noise than for a whole sequence of images. Note that a single Sinkhorn iteration for the coupled \({{\,\mathrm{UMOT}\,}}_\varepsilon \) problem has the same computational complexity as for all of the decoupled \({{\,\mathrm{UOT}\,}}_\varepsilon \) problems together. Hence, the \({{\,\mathrm{UMOT}\,}}_\varepsilon \) approach appears to be better suited for this application, as it incorporates more information about the problem without significant additional computational cost.

7 Conclusions

In this paper, we introduced a regularized unbalanced multi-marginal optimal transport framework, abbreviated \({{\,\mathrm{UMOT}\,}}_\varepsilon \), bridging the gap between regularized unbalanced optimal transport and regularized multi-marginal optimal transport. We outlined how the Sinkhorn algorithm can be adapted to solve \({{\,\mathrm{UMOT}\,}}_\varepsilon \) efficiently for tree-structured costs. For this case, we have also shown how \({{\,\mathrm{UMOT}\,}}_\varepsilon \) provides alternative solutions of barycenter-like problems with desirable properties, such as improved sharpness. In the future, we plan to examine \({{\,\mathrm{UMOT}\,}}_\varepsilon \) in connection with particle cluster tracking methods, e.g., following the ideas in [36]. Furthermore, it would be interesting to examine a reformulation of the regularized unbalanced barycenter problem as UMOT problem using a cost function similar to that in Remark 5.5. Additionally, we want to investigate the theoretical relation between the two interpolation approaches from Sect. 6.2. Finally, we are interested in \({{\,\mathrm{UMOT}\,}}_\varepsilon \) for measures having the same moments up to a certain order and for measures living on special manifolds such as, e.g., torus or spheres, see also [22].

References

Agueh, M., Carlier, G.: Barycenters in the Wasserstein space. SIAM J. Math. Anal. 43(2), 904–924 (2011)

Altschuler, J.M., Boix-Adsera, E.: Polynomial-time algorithms for multimarginal optimal transport problems with decomposable structure. arXiv:2008.03006, (2020)

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In Proc. of Machine Learning, volume 70, pages 214–223. PMLR, (2017)

Benamou, J.-D., Carlier, G., Cuturi, M., Nenna, L., Peyré, G.: Iterative Bregman projections for regularized transportation problems. SIAM J. Sci. Comput. 37(2), A1111–A1138 (2015)

Benamou, J.-D., Carlier, G., Nenna, L.: A numerical method to solve multi-marginal optimal transport problems with Coulomb cost. In: Splitting Methods in Communication, Imaging, Science, and Engineering, pp. 577–601. Springer, Cham (2016)

Boyarsky, A., Góra, P.: Laws of Chaos. Probability and its Applications. Birkhäuser, Boston (1997)

Brin, M., Stuck, G.: Introduction to Dynamical Systems. Cambridge University Press, UK (2002)

Caffarelli, L.A., McCann, R.J.: Free boundaries in optimal transport and Monge-Ampère obstacle problems. Ann. of Math. (2),171(2), 673–730 (2010)

Caillaud, C.: Asymptotical estimates for some algorithms for data and image processing: a study of the Sinkhorn algorithm and a numerical analysis of total variation minimization. PhD Thesis, École Polytechnique Paris, (2020)

Carlier, G., Ekeland, I.: Matching for teams. Econ. Theory 42(2), 397–418 (2010)

Chen, Y., Karlsson, J.: State tracking of linear ensembles via optimal mass transport. IEEE Contr. Syst. Lett. 2(2), 260–265 (2018)

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: Scaling algorithms for unbalanced optimal transport problems. Math. Comp. 87(314), 2563–2609 (2018)

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: Unbalanced optimal transport: dynamic and Kantorovich formulations. J. Funct. Anal. 274(11), 3090–3123 (2018)

Chung, N.-P., Phung, M.-N.: Barycenters in the Hellinger–Kantorovich space. Appl. Math. Optim, to appear

Clason, C., Lorenz, D., Mahler, H., Wirth, B.: Entropic regularization of continuous optimal transport problems. J. Math. Anal. Appl. 494(1), 124432 (2021)

Cohen, S., Kumar, K.S.S., Deisenroth, M.P.: Sliced multi-marginal optimal transport. arXiv:2102.07115, (2021)

Colombo, M., De Pascale, L., Di Marino, S.: Multimarginal optimal transport maps for one-dimensional repulsive costs. Canad. J. Math. 67(2), 350–368 (2015)

Cuturi, M.: Sinkhorn distances: Lightspeed computation of optimal transport. In Advances in Neural Information Processing Systems 26, pages 2292–2300. Curran Associates, Inc., (2013)

Cuturi, M., Doucet, A.: Fast computation of Wasserstein barycenters. In Proc. of Machine Learning Research 32(2), 685–693. PMLR, (2014)

Di Marino, S., Gerolin, A.: An optimal transport approach for the Schrödinger bridge problem and convergence of Sinkhorn algorithm. J. Sci. Comput. 85, 27 (2020)

Dvurechenskii, P., Dvinskikh, D., Gasnikov, A., Uribe, C., Nedich, A.: Decentralize and randomize: Faster algorithm for Wasserstein barycenters. In Advances in Neural Information Processing Systems 31, pages 10760–10770. Curran Associates, Inc., (2018)

Ehler, M., Gräf, M., Neumayer, S., Steidl, G.: Curve based approximation of measures on manifolds by discrepancy minimization. Foundations of Computational Mathematics, (2021)

Ekeland, I., Témam, R.: Convex Analysis and Variational Problems. SIAM, Philadelphia (1999)

Elvander, F., Haasler, I., Jakobsson, A., Karlsson, J.: Multi-marginal optimal transport using partial information with applications in robust localization and sensor fusion. Signal Process. 171, 107474 (2020)

Feydy, J., Séjourné, T., Vialard, F.-X., Amari, S., Trouvé, A., Peyré, G.: Interpolating between optimal transport and MMD using Sinkhorn divergences. In Proc. of Machine Learning Research 89, 2681–2690. PMLR, (2019)

Figalli, A.: The optimal partial transport problem. Arch. Ration. Mech. Anal. 195(2), 533–560 (2010)

Flamary, R., Courty, N.: POT Python Optimal Transport library. https://github.com/PythonOT/POT, (2017). Accessed: 03.03.2021

Friesecke, G., Matthes, D., Schmitzer, B.: Barycenters for the Hellinger-Kantorovich distance over \({\mathbb{R} }^d\). SIAM J. Math. Anal. 53(1), 62–110 (2021)

Frogner, C., Zhang, C., Mobahi, H., Araya, M., Poggio, T.A.: Learning with a Wasserstein loss. In Advances in Neural Information Processing Systems 28, pages 2053–2061. Curran Associates, Inc., (2015)

Froyland, G.: An analytic framework for identifying finite-time coherent sets in time-dependent dynamical systems. Phys. D 250, 1–19 (2013)

Gerolin, A., Kausamo, A., Rajala, T.: Multi-marginal entropy-transport with repulsive cost. Calc. Var. Partial Differ. Equ. 59(3), 90 (2020)

Haasler, I., Ringh, A., Chen, Y., Karlsson, J.: Multimarginal optimal transport with a tree-structured cost and the Schrödinger bridge problem. SIAM J. Control and Optimiz. 59(4), 2428–2453 (2021)

Janati, H., Cuturi, M., Gramfort, A.: Debiased Sinkhorn barycenters. In Proc. of Machine Learning Research 119, 4692–4701.PMLR, (2020)

Janati, H., Muzellec, B., Peyré, G., Cuturi, M.: Entropic optimal transport between unbalanced Gaussian measures has a closed form. In Advances in Neural Information Processing Systems 33, pages 10468–10479. Curran Associates, Inc., (2020)

Klus, S., Nüske, F., Koltai, P., Wu, H., Kevrekidis, I., Schütte, C., Noé, F.: Data-driven model reduction and transfer operator approximation. J. Nonlinear Sci. 28(3), 985–1010 (2018)

Koltai, P., von Lindheim, J., Neumayer, S., Steidl, G.: Transfer operators from optimal transport plans for coherent set detection. Phys. D 426, 132980 (2021)

Kusner, M., Sun, Y., Kolkin, N., Weinberger, K.: From word embeddings to document distances. In Proc. of Machine Learning Research 37, 957–966. PMLR, (2015)

Lasota, A., Mackey, M.: Chaos, Fractals, and Noise: Stochastic Aspects of Dynamics. Applied Mathematical Sciences, vol. 97. Springer, New York (1994)

Liero, M., Mielke, A., Savaré, G.: Optimal entropy-transport problems and a new Hellinger-Kantorovich distance between positive measures. Invent. Math. 211(3), 969–1117 (2018)

Luise, G., Salzo, S., Pontil, M., Ciliberto, C.: Sinkhorn barycenters with free support via Frank–Wolfe algorithm. In: Advances in Neural Information Processing Systems 32, pages 9322–9333. Curran Associates, Inc., (2019)

Neumayer, S., Steidl, G.: From optimal transport to discrepancy. To appear in Handbook of Mathematical Models and Algorithms in Computer Vision and Imaging, (2020)

Pass, B.: Multi-marginal optimal transport: theory and applications. ESAIM Math. Model. Numer. Anal. 49(6), 1771–1790 (2015)

Quang, M.H.: Entropic regularization of Wasserstein distance between infinite-dimensional Gaussian measures and Gaussian processes. J. Theor. Probab., (2022)

Ramdas, A., Trillos, N.G., Cuturi, M.: On Wasserstein two-sample testing and related families of nonparametric tests. Entropy, 19(2), (2017)

Rockafellar, R.T.: Integrals which are convex functionals. Pacific J. Math. 24, 525–539 (1968)

Sinkhorn, R.: A relationship between arbitrary positive matrices and doubly stochastic matrices. Ann. Math. Statist. 35(2), 876–879 (1964)

Séjourné, T., Feydy, J., Vialard, F.-X., Trouvé, A., Peyré, G.: Sinkhorn divergences for unbalanced optimal transport. arXiv:1910.12958, (2019)

Wang, W., Slepčev, D., Basu, S., Ozolek, J., Rohde, G.: A linear optimal transportation framework for quantifying and visualizing variations in sets of images. Int. J. Comput. Vis. 101, 254–269 (2013)

Zhu, L., Yang, Y., Haker, S., Tannenbaum, A.: An image morphing technique based on optimal mass preserving mapping. IEEE Trans. Image Process. 16, 1481–95 (2007)

Acknowledgements

The datasets generated during and analyzed during the current study are not publicly available, but are available from the corresponding author on reasonable request. Funding by the DFG under Germany’s Excellence Strategy – The Berlin Mathematics Research Center MATH+ (EXC-2046/1, Projektnummer: 390685689) and by the DFG Research Training Group DAEDALUS (RTG 2433) is acknowledged.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Beier, F., von Lindheim, J., Neumayer, S. et al. Unbalanced Multi-marginal Optimal Transport. J Math Imaging Vis 65, 394–413 (2023). https://doi.org/10.1007/s10851-022-01126-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-022-01126-7

Keywords

- Entropy regularization

- Multi-marginal optimal transport

- Sinkhorn algorithm