Abstract

Today the topic of incremental sheet forming (ISF) is one of the most active areas of sheet metal forming research. ISF can be an essential alternative to conventional sheet forming for prototypes or non-mass products. Single point incremental forming (SPIF) is one of the most innovative and widely used fields in ISF with the potential to form sheet products. The formed components by SPIF lack geometric accuracy, which is one of the obstacles that prevents SPIF from being adopted as a sheet forming process in the industry. Pillow effect and wall displacement are influential contributors to manufacturing defects. Thus, optimal process parameters should be selected to produce a SPIF component with sufficient quality and without defects. In this context, this study presents an insight into the effects of the different materials and shapes of forming tools, tool head diameters, tool corner radiuses, and tool surface roughness (Ra and Rz). The studied factors include the pillow effect and wall diameter of SPIF components of AlMn1Mg1 aluminum alloy blank sheets. In order to produce a well-established study of process parameters, in the scope of this paper different modeling tools were used to predict the outcomes of the process. For that purpose, actual data collected from 108 experimentally formed parts under different process conditions of SPIF were used. Neuron by Neuron (NBN), Gradient Boosting Regression (GBR), CatBoost, and two different structures of Multilayer Perceptron were used and analyzed for studying the effect of parameters on the factors under scrutiny. Different validation metrics were adopted to determine the quality of each model and to predict the impact of the pillow effect and wall diameter. For the calculation of the pillow effect and wall diameter, two equations were developed based on the research parameters. As opposed to the experimental approach, analytical equations help researchers to estimate results values relatively speedily and in a feasible way. Different partitioning weight methods have been used to determine the relative importance (RI) and individual feature importance of SPIF parameters for the expected pillow effect and wall diameter. A close relationship has been identified to exist between the actual and predicted results. For the first time in the field of incremental forming study, through the construction of Catboost models, SHapley Additive exPlanations (SHAP) was used to ascertain the impact of individual parameters on pillow effect and wall diameter predictions. CatBoost was able to predict the wall diameter with R2 values between the range of 0.9714 and 0.8947 in the case of the training and testing dataset, and between the range of 0.6062 and 0.6406 when predicting pillow effect. It was discovered that, depending on different validation metrics, the Levenberg–Marquardt training algorithm performed the most effectively in predicting the wall diameter and pillow effect with R2 values in the range of 0.9645 and 0.9082 for wall diameter and in the range of 0.7506 and 0.7129 in the case of the pillow effect. NBN has no results worthy of mentioning, and GBR yields good prediction only of the wall diameter.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Sheet metal forming technology forms parts through a series of localized incremental plastic deformations called Incremental Sheet Forming (ISF) (Jackson & Allwood, 2009; Kumar & Kumar, 2015). ISF is a moderate, innovative sheet-forming technology without employing classic punch and dies (Najm et al., 2030). The components are formed by way of a predefined strategy called path, which guides a simple tool that performs incremental movements over the clamped sheet to form the desired final shape (Azaouzi & Lebaal, 2012; M. Amala Justus Selvam, R. Velu, & T. Dheerankumar, 1050; Szpunar et al., 2021). The first dieless incremental forming process can be traced back to Leszak (Leszak, 1967). The main two types of ISF are Single Point Incremental Forming (SPIF) and Two Point Incremental Forming (TPIF). The first patent mentioned before can be considered a SPIF, and TPIF was first presented by Matsubara (Matsubara, 1994). SPIF is more appropriate than conventional sheet-forming methods to manufacture prototypes, small production batches, and customized components (Mezher et al., 2018; Paniti et al., 2020; Trzepieciński et al., 2022a). SPIF is flexible and with its help it is very simple to produce complex geometries by following a programmed path strategy using a CNC machine with at least three controlled axes (Trzepieciński et al., 2022b). A very up-to-date paper briefly overviewed recent developments in SPIF of lightweight materials (Trzepieciński et al., 2021a). SPIF is one of the promising technologies in the future of sheet forming for aerospace applications (Trzepieciński et al., 2021b). However, the quality, surface finish, and geometric accuracy of products formed by SPIF are affected by different process parameters. At the same time, improper selection of process parameters can cause deviation and inaccuracy with respect to desired shapes. Still, ISF in general, and SPIF in particular, have disadvantages in terms of geometric accuracy and pillow effect. Due to the elastic–plastic deformation throughout the forming process, which is apt to instability, the formed sheet is prone to be impacted by a springback (Khan et al., 2015; Zhang et al., 2016) and pillow effect (Bai et al., 2019; Najm & Paniti, 2020). Matching the wall profile of the product to the CAD model based on the path strategy is one of the challenges of this process. One of the main factors that affects the accuracy of the components in terms of geometric accuracy is springback (Mezher et al., 2021a, 2021b). Wall displacement due to springback is the difference between the actual wall angle or diameter and the angle or diameter of the CAD model. The springback of component walls is induced mainly by two factors: local springback and global springback. Due to the sheet’s position following the use of the advanced forming tool, the local forming that occurred while the sheet returns to its initial position after the tool has passed causes local springback. On the other hand, global springback occurs as a result of residual stresses, which stem from the unconstrained material in the formed sheet after releasing the sheet from clamping (Edwards et al., 2017). In addition to the two types of springback mentioned above, Gates et al. (2016) noted that another type of springback ensues upon the displacement of the forming tool, which they named continuous local springback. Kiridena et al. (2016) invented innovative tools to increase the geometric accuracy of products formed incrementally. They described that dimensional accuracy is significantly impacted by the lengthening of the tool shank and by the decrease of the tool diameter, while the underside flat part of the donut-shaped tool increases the formed part’s accuracy. The papers (Najm & Paniti, 2018, 2020) stated that, as compared to the hemispherical tool of components formed by SPIF, flat tools led to better formability, increased homogeneity in thickness distribution, and a minimized pillow effect.

The pillow effect—also called bulge—is a concave surface developing on the unformed bottom area in the center of the parts (Nasulea & Oancea, 2021); pillow effect is the influential forming error that negatively impacts the geometric accuracy of SPIF components and limits formed part’s formability (Wei et al., 2020). The pillow effect occurs due to the springback of the wall between the obtained geometry and the CAD model, in which the forming tool generates tension on the unformed surface from the corner, and the middle part remains free to become puffy. The pillow appearing in the form of a bulge on the remaining unformed surface must stay flat during and after the forming process. Researchers have attempted to find a proper method to prevent the formation of pillow effect in products formed using SPIF. Ambrogio et al. (2007) suggest an ANOVA analysis based equation which allows for identifying quadratic model equations for the purpose of predicting geometrical errors between an ideal surface and real surfaces in the case of truncated pyramid shape aluminum alloy AA1050–O sheets. The researchers state that pillow effect is strongly influenced by tool diameter and product height. Micari et al. (2007) indicated that two types of errors in the typologies result in inaccurately formed parts of ISF: the two typologies’ errors are springback and pillow effect. Pillow effect increases forming force, which results in the inaccuracy of parts. Thus, work hardening during multi‐point forming decreases the pillow effect in the case of multi‐point forming (Zhang et al., 2017). Al-Ghamdi and Hussain (2015) have studied the mechanical properties of formed parts on pillow effect. They state that the tensile fracture affecting and controlling formability has an insignificant impact on bulge. However, a decrease in hardening exponent, which controls stretchability, decreases bulge, and this can be considered a significant property that affects the pillow effect. Furthermore, higher forming depth leads to bigger billowing but not in a linear way: certain specified depths relieve the pillow effect because of the property of the hardening exponent. Afzal (2021) sees this differently: he claims that the pillow effect sets in because of two different states in the formed sheet: the unformed base is in an elastic state, while the formed wall is in a plastic state. Isidore et al. (Isidore, 2014; Isidore et al., 2016) found that parts formed with the help of a hemispherical tool caused more oversized pillows because strains and compressive stresses were generated due to the compression of the material. Also, it was observed that smaller pillows resulted when a flat tool was used because tensile stress and strains impacted in transverse directions. Essa and Hartley (2011) discovered various ways to improve geometric accuracy by executing FE in SPIF. They reduced the bending of components via flanking using a support plate, and thus minimized springback by stationing a supporting tool and by eliminating the pillow effect through modifying the last stage of the tool path.

Due to their excellent formability and resistance to corrosion, general-purpose 3xxx’s alloys are used for architectural applications and for the manufacturing of various products. 3xxx’s alloys are non-heat-treatable but exhibit about 20% more strength than 1xxx series alloys. Manganese is the principal alloying element of 3xxx alloys, which is added either of its own or with magnesium. However, magnesium is considerably more effective than manganese as a hardener: about 0.8% Mg is equal to 1.25% Mn (Davis, 2001). In the experiments of single point incremental forming conducted in the scope of this study, an AlMn1Mg1 aluminum alloy blank sheet with an initial thickness of 0.22 mm was used. This alloy belongs to the 3xxx series based on its sequence of elements. Examples of common AlMn1Mg1 aluminum alloy applications include beer & beverage cans (Hirsch, 2006), where good formability is achieved by (Mg) with strengthening effects by (Mn). AlMn1Mg1 aluminum alloy is also used in automotive radiator heat exchangers and as tubing in commercial power plant heat exchangers (Kaufman, 2000), as well as for the following specific purposes: sheet metal work, storage tanks, agricultural applications, building products, containers, electronics, furniture, kitchen equipment, recreation vehicles, trucks and trailers (Davis, 2001).

Recently, various techniques of artificial intelligence have been used in many industries including the metal forming industry. In the last decade, machine learning has been applied using various Artificial Neural networks (ANN) techniques in a number of applications and industries. Furthermore, machine learning has dominated manufacturing with a view to designing the most practical, sufficient and adequate predictive models (Hussaini et al., 2014; Kondayya & Gopala Krishna, 2013; Lela et al., 2009; Li, 2013; Marouani & Aguir, 2012). A recent state-of-the-art review discovered analytical and numerical models of incremental formation (IF). IF-related issues have been solved using artificial intelligence AI-based computational approaches. This research evaluates IF literature. Artificial neural networks, support vector regression, decision trees, fuzzy logic, evolutionary algorithms, and particle swarm optimization solve IF-related issues. Hybrid approaches integrate some of the previous strategies (Nagargoje et al., 2021). Different intelligences with or without controlled manufacturing have been generated or developed as predictive models in end-milling machining, high-speed machining, and powder metallurgy (Amirjan et al., 2013; Ezugwu et al., 2005; Zain et al., 2010). Artificial neural network architecture has generated tool paths directly from a digital component model for ISF components. Multiple training techniques, network topologies, and training sets were examined in a feedforward network structure with backpropagation. They prove neural networks can generate tool routes for sheet metal free forming (Hartmann et al., 2019). Notably with respect to SPIF, different studies have developed ANN, support Victor Regression (SVR), Gradient Boosting Regressions (GBR) models for the prediction of formability (Najm & Paniti, 2021a), surface roughness (Najm & Paniti, 2021b; Trzepieciński et al., 2021c) and hardness (Najm et al., 2021) of components formed using SPIF under various forming conditions. Low et al. (2022) predict this error distribution from input CAD geometry of SPIF components using Convolutional Neural Networks-Forming Prediction (CNN-FP). For the untrained wall angle, the CNN-FP model had an RMSE (Root mean squared error) of 0.381 mm at 50 mm depth. The CNN-FP performance for the untrained complicated geometry was determined to be 0.391 mm at 30 mm depth. However, there was considerable degradation at 50 mm depth of the complicated geometry when the model's prediction had an RMSE of 0.903 mm.

In light of the literature, the above-detailed concerns in connection with SPIF as well as the lacking standardization of SPIF process parameters and the scarcity of referent mathematical models have motivated the authors of this paper to deal with the investigation and prediction of the pillow effect and wall diameter of truncated frustums processed by SPIF. To the authors’ knowledge, such an experimental process has not been tested or described in the literature so far. The researchers consider SPIF process parameters on geometric accuracy (pillow effect and wall diameter) as one of the main significant drawbacks of the SPIF process. Geometric accuracy in the form of components’ wall and pillow effect can be influenced by various factors including tool materials, tool shape and size and the surface finish of the tooltip. Therefore, in the present paper, geometric accuracy with respect to components’ wall accuracy and pillow effect have been investigated experimentally and have been studied in the scope of the above-mentioned parameters. Furthermore, as an aim and novelty in the scope of this paper, various models have been built along different combinations of parameters for both pillow effect and wall diameter datasets, and accordingly prediction equations based on weights and biases have been derived. The combined partitioning weight of the NN was adopted to estimate the relative importance (RI) of SPIF parameters on model output. In addition, and for the first time in ISF, process parameters have been interpreted via SHapley Additive exPlanations (SHAP), which were utilized to establish parameters’ relevance on the pillow effect and wall diameter.

Material properties

In this section material properties of the investigated sheet and properties of the applied tools are presented. In the scope of this study, an initial 0.22 mm thick AlMn1Mg1 aluminum alloy square-shaped blank sheets of 150 mm × 150 mm were used, the tensile of the specimens cut from the blank sheet was tested according to the EN ISO 6892-1:2010 standard and the tensile was conducted using an INSTRON 5582 universal testing machine at room temperature. Based on the rolling direction, the specimens were cut from three directions: 0°, 45°, and 90°, and three samples were produced for each direction. The relative standard deviation did not exceed 3% based on the test procedure. Furthermore, the planar anisotropy values (r10) were established using an Advanced Video Extensometer (AVE). The average values of the mechanical properties are listed in Table 1. The chemical composition of the sheet material was analyzed with the help of a WAS FOUNDRY-MASTER Optical Emission Spectroscopy (OES), and the pertaining data are found in Table 2.

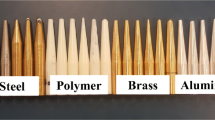

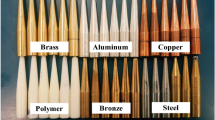

For the formation of the sheet in the experiments of SPIF in the scope of this research design, various forming tools were used as far as tool shapes, tool materials, the tip radius (R), and the corner radius (r) of the tool are concerned. Two different tool designs have been selected: hemispherical with variety in tip radius (R) (see Fig. 1a) and flat tools with changes in corner radius (r) (see Fig. 1b). The (R) values are 1 mm, 2 mm, and 3 mm, while the (r) values are 0.1 mm, 0.3 mm, and 0.5 mm. Each set of tools was created using six different materials: Table 3 details the metal tools and their properties, and Table 4 lists the tools created using polymer provided by STRATASYS. Hardness was experimentally tested with the help of a Wolpert Diatronic 2RC S hardness tester according to ISO 6506-1:2014. Consequently, the corresponding hardness value for each tool was adopted for matching with the ISO code of the tested materials in question. Thus, the mechanical properties were determined based on the ISO code of the forming tool’s material. For the determination of the ISO code, a FOUNDRY-MASTER Pro2 Optical Emission Spectrometer was used to measure each forming tool material.

Experiments

A frustum geometry shape with the dimensions shown in Fig. 2 was created experimentally during the forming process. The failure criterion was defined as the end of the forming process, and an example of a failed part is shown in Fig. 3. Two different tool shapes (spherical and flat), with three different tooltip sizes (three different radii (R) for the hemispherical and three different corner radii (r)) for the flat tool, and six different materials (see Fig. 4)—(Steel (C45), Brass (CuZn39Pb3), Bronze (CuSn8), Copper (E-Cu57), Aluminum (AlMgSi 0.5) and polymer VeroWhitePlus (RGD835))—altogether produce 36 various forming process conditions. For reasons of reliability and correct measurement assurance, three parts were formed for each process condition for the purpose of ensuring the accuracy of the obtained results; the total number of formed components thus totaled 108. The Design of Experiments (DOE) was not applied for minimizing the number of experiments because the resulting data were collected and used as an actual dataset (input and output) for the predictive models. A SIEMENS Topper TMV-510T 4-axis CNC milling machine combined with sinumerik 840-D controller was used in the forming process (see Fig. 5 for the machine and clamping design). The fixed forming process parameters are shown in Table 5. A step size with a value of 0.05 mm in direction Z was used as a constant value for the whole forming path. The application of a smaller step size results in an accurate geometry and better surface finish of final parts formed by SPIF (Lu et al., 2014; Mulay et al., 2018). A strategy of spiral path moves inward to the sheet center, with the center adopted to form the frustum parts incrementally, as shown in Fig. 6. This strategy was developed by Skjoedt et al. (2007) to reach the maximum axial loads in step size and to achieve a better surface of the impacted inner surface with the help of the forming tool. Also, this strategy aids the more successful and precise formation of components (Kumar & Gulati, 2020).

In each part of forming, the surface roughness of the forming tool was measured before and after starting the forming process. The forming tool’s surface roughness measured before the forming process was adopted as an input value of the upcoming formed part. For this value and the upcoming forming process, the surface roughness of the tool was adopted as input and so on. The above-mentioned scenario was applied to all forming tools used in the scope of this study. Nevertheless, a new polymer tool was employed in each forming process because of the wear on the forming tool developed by the polymer. The surface roughness of each polymer tool was measured before the start of the process.

The profiles of all formed parts and their unformed bases (Wall diameter and Pillow effect) were measured using a Mitutoyo Coordinate Machine CV-1000 (MCM), see Fig. 7. The average deviation of the three parts experimentally formed under the same SPIF condition was compared to the CAD model in terms of wall diameter and unformed base, and this was adopted as values of the geometrical accuracy and pillow effect studied in this research.

The MCM instrument was operated in the measurement processes along 49 mm at a speed of 0.5 mm per second together with a pitch size of 0.005 mm. MCM automatically creates a component profile with the possibility of measuring each point, as shown in Fig. 8. This figure shows examples only for 20 points. For each part, more than 9000 points were measured automatically with the help of axis X and Z coordinates. The measured data points were used to build an actual profile so that the CAD model, the measurement of the wall diameter and the pillow effect could be compared with this resulting profile. The pillow effect is the difference between the highest peak and the lowest valley values measured. In an ideal case of forming, the lower bottom unformed surface should be flat between the peak and the lowest valley value.

Artificial neural networks

The notion of the neural network is often traced back to Warren McCulloch and Walter Pitts’s 1940 study. Their basic idea was that neural networks could compute any logical functions or mathematical formulations. Furthermore, the invention of the Perceptron Network at the end of 1950 can be considered as the first practical application of ANN (Hagan et al., 2014). Recently, neural networks have become the main interest for thousands of researchers in different fields of science. As a whole, it can be established that there is no scientific field that does not have any links with neural networks. Scientists in different areas including healthcare, aerospace, defense, arts, filmmaking, music and various industry technologies adopted ANN.

Neuron by Neuron (NBN)

Using Visual Studio 6.0 and C + + language, Hao Yu and Wilamowski (Yu & Wilamowski, 2009a, 2009b) developed a Neuron by Neuron (NBN) trainer. NBN can work with Fully Connected Neurons (FCN) and needs a lower number of neurons than Multilayer Perceptron (MLP). Figure 9 shows a scheme of five inputs fully connected with three neurons as well as one output and its topology. The developed tool supports three different types of neurons. The neurons are bipolar (“mbip”), unipolar (mu), and linear (“mlin”). Both the mbip and mu have outputs not exceeding 1, with negative and positive values for mbip, and only positive values for mu. The equations of the three types of neurons are presented in Eqs. 1, 2, and 3 (Yu & Wilamowski, 2009a, 2009b), respectively. In this study, running time was 100, and iteration was 500 with a maximum error of 0.001. The training tool provides a direct sum squared error (SSE) with the plotting area on the interface, which can indicate the accuracy of the prediction. All other parameters were set as default because they gave the best results. All the neuron types were tried with different numbers of connection neurons. It is worth mentioning that categorical encoding was used because the collected data from the 108 samples contained numerical and categorical datasets (see Categorical Encoding).

where “gain” and “der” are parameters of activation functions.

Gradient boosting regression (GBR)

Gradient Boosting is one of the most common and powerful tree algorithms (Pedregosa, 2011). Gradient boosting is an ML method used as a classifier and regressor. This is a prediction model of collective learning, where each level attempts to correct the errors of a previous level in a formation called decision trees. Gradient Boosting model (Chen et al., 2021) with least-squares loss and 1000 regression trees with a depth of 6 and a minimum samples split of 2 was run to predict the pillow effect and wall diameter as shown in Fig. 10. Least-squares loss tries to locate predictive points and fits them on the already fitted line in an attempt to minimize error. The regression tree depth shows how many splits a tree allows to be created before a prediction is made. The boosting method is about consecutively learning learners’ nodes on the basis of previous ones by way of fitting the data set and analyzing the ensuing errors. In other words, this means that the boosting method works as a cycle consisting of learning nodes, fitting results to the data set, analyzing errors between the actual and predicted values, and re-starting learning the nodes that have been learned before. Moreover, this cycle is repeated until the previously set iteration number is reached.

CatBoost

CatBoost is a high-performance open-source library for gradient boosting of decision trees (Prokhorenkova et al., 2018). A machine learning algorithm called CatBoost was developed by Yandex researchers and engineers. CatBoost has many features. One of the main features allows using categorical data (non-numeric factors), for which pre-processing of data is not needed. In addition, turning such data into numbers by encoding is not necessary, either (Dorogush et al., 2018). Furthermore, CatBoost aptly predicts with default parameters, so parameter tuning is unnecessary (Ibragimov & Gusev, 2019). By default, CatBoost builds 1000 trees, i.e., full symmetric binary trees with six in-depth and two leaves. The learning rate is determined automatically according to the properties of the trained dataset and the number of iterations. The automatically selected learning rate should be close to the optimal one. The number of iterations can be lowered for faster training, but in this case learning rate must be increased.

Multilayer Perceptron MLP

Network elements’ organization or structure, interconnections, inputs, and outputs constitute net topology. The topology of an ANN can be defined according to the number of input and output layers, with the transfer functions between these layers and the number of neurons in each layer (Nabipour & Keshavarz, 2017). ANN structure consists of input and output layers with a minimum of one hidden layer. Each layer of the net contains a number of neurons. Neurons in the input are equal to the number of input variables, and output neurons are equal to the number of outputs associated with each input. Based on the transfer function or so-called activation function (Beale et al., 2013), these neurons in the layers allow for the transfer of weight between the layers backward and forward. The current study adopted the multilayer perceptron (MLP) structure for its ANN model using a backpropagation learning algorithm. The idea of the MLP was initiated by Werbos in 1974, and Rumelhart, McClelland and Hinton in 1986 (Riedmiller). Equation 1 defines the multilayer perceptron as follows:

where \(y\) is the output and \(x\) is input, \({w}_{i}\) are the weights, and \(b\) is the bias (Principe et al., 1997).

Using MATLAB R2020a (Beale et al., 2019), two different MLP structures were created to predict wall diameter and pillow effect. The inputs and targets dataset were obtained from actual measured data from the formed parts by SPIF. The main difference between the two trained structures is the number of prediction outputs: one of the networks deals with one target, and the second deals with two targets. The outputs are wall diameter or pillow effect, or wall diameter together with the pillow effect. However, the inputs have 5 neurons: tool materials, tool shapes, tool radius, and tool surface roughness values Ra and Rz. In the scope of this research, each net structure exhibited one hidden layer with ten neurons connected to the input and output layers, as shown in the illustrated scheme in Fig. 11a and b. The other main parameters selected for the purpose of training in this study were as follows: learning rate 0.01, performance goal 0.001, and 1000 as the number of epochs. It is noteworthy that different training and transfer functions were tried and trained for finding the best model and structure (see Training function and Transfer function).

The training flowchart of the developed model and the checking process using the test data are presented in Fig. 12. Two main conditions make decisions during the running process. The first loop in light blue color saves the model and all variables with low limits of condition. The second loop in light red is activated after the first condition occurs and stops. The second loop finds the training and variables, compares these with the variables saved from the previous training, and continues to do so until 1000 iterations. Shared step loops are displayed as light green arrows.

Training function

In neural networks, dataset training utilizes the optimization technique for tuning and finding a set of network weights for building a good prediction map. There are various optimization algorithms also called training functions. The training function is an algorithm for training the network to identify a specific input and for mapping such input to an output. The training function depends on many characteristics, including the trained dataset, weights and biases, as well as the performance goal. One challenge of building good, fast and really accurate predictions lies in selecting a fitted training function for the network. With this in mind, in the scope of this paper, ten different types of training function “learning algorithms” were executed in both MLP nets for the purpose of mapping outputs associated with inputs. Levenberg–Marquardt (Trainlm) is one of these implemented training functions and is considered the fastest compared to the others. Similarly, the BFGS Quasi-Newton algorithm is quite fast (Beale et al., 2019). The corresponding training functions are listed in Table 6.

Transfer function

In machine learning, the sums of each node are weighted, and the sum is passed through a function known as activation function or transfer function. The summed weights undergo a transfer function, and the transfer function computes the output of each layer through adopting summed weights entering the given layer. Setting proper transfer functions is a challenging task and is based on many factors but mainly on network structure. Usually, in multilayer perceptron (MLP) Log-sigmoid (Logsig) is used, and there are alternative functions like Hyperbolic tangent sigmoid (Tansig), which is generally used for pattern recognition (Beale et al., 2019). In this study, besides the aforementioned two functions, thirteen different transfer functions were performed to improve prediction accuracy. Eventually, the linear (Purelin) transfer function was chosen for the output layer in all cases. Table 7 lists all the transfer function algorithms with related Eqs. 5–18 used for this study (Demuth, 2000).

Dataset distribution

The actual data of SPIF components were used as input data for all the structures and models built and trained in the scope of this study to predict pillow effect and wall diameter as network outputs. Predicting results when forming new parts without executing any new process of forming is both economical and more practical. However, existing data must be separated into various subsets: i.e., training, validation, and testing datasets. In fact, prediction accuracy and training performance are significantly influenced by dividing the dataset into training and testing subsets (Zhang et al., 1998). Inappropriate subsets negatively affect benchmark performance. On the other hand, Shahin (Shahin et al., 2000) claimed there is no apparent association between the splitting ratio of the dataset, but Zhang et al. (Zhang et al., 1998) explain that the splitting ratio is one of the main problems. Yet, no general setting is available as a solution. Based on their surveys, most researchers split the datasets into lines with a different ratio of subsets. The most broadly adopted ratios are 90% vs. 10%, 80% vs. 20%, or 70% vs. 30% for training and testing. As part of the training run in the scope of this paper, optimal prediction was obtained by adopting a dividing ratio of 80% vs. 20% of the actual data (108 samples) concerning training and testing datasets, respectively. Regarding the actual dataset, 108 rows were taken from the SPIF components formed experimentally, and the recorded rows were used as training and testing datasets.

Categorical encoding

There are three typical methods for altering categorical variables to numerical values. One of them represents categorical data sets: this is the One-Hot Encoding variables (Guido, 1997). The other two are Ordinal Encoding and Dummy Encoding. The categorical variables will sparse-binarize and can be integrated into training in different machine learning models. The idea of one-hot-encoding is to replace categorical data with one or more new features. Each parameter takes a new numerical feature, and one of these features is always active through their substitution by 0 and 1 by way of creating one binary for each category. In ordinal encoding, each category is given an Integer value and sequences up to the number of the actual features. The dummy encoding is a slightly improved version of one-hot-encoding. In one-hot-encoding the numerical values are equal to the number of categories and the dummy is equal to the number of categories minus 1. Table 8 shows different ways of encoding the categorical data as part of the current study. In the scope of this research, two categorical sets were encoded. Tool materials and tool shapes were binarized as sparse matrices. However, ordinal encoding was adopted for tool materials because the other methods were in conflict either with feature importance calculation (see Contribution analysis of input variables) or one-hot-encoding concerning the tool shape; in the scope of latter the flat tool is replaced by 0 and the hemispherical tool is represented by 1.

Overfitting

A neural network is a practical tool used in various applications, but it has several drawbacks. One of these drawbacks is underfitting or overfitting. Underfitting happens when a model is too simple for training the selected dataset, and overfitting is when the network gives a larger error for the new data set than concerning the set trained before. In other words, the trained net can memorize the learned data set but is not trained to generalize those new data sets that are not fitted. Boosting algorithms are generally supplied with regularization methods to avert overfitting (Ibragimov & Gusev, 2019) because the number of samples in an ensemble set does not always improve the accuracy but can reduce generalization ability (Mease & Wyner, 2008). There are various ways to improve and handle network generalization, such as: using a large network to provide a good fit, training several nets to guarantee that good generalization is found, averaging the outputs of trained multiple neural networks, separating data randomly, and tuning the complexity of a net through regularization (Bishop, 1995).

In the MLP network created for the purpose of prediction in this study, generalization has been improved by the so-called early stopping method. The early stopping technique is a default method automatically provided for all supervised network creation functions, including backpropagation networks. This method splits the training dataset into three subsets: training, validation, and testing (see Fig. 13). During network training, training subset data will be utilized for calculating the gradient and for updating weights and biases to fit the models. Also, the validation subset estimates prediction error during the training process; and finally, the test subset will be utilized to test the learned network as well as to assess generalization errors and plot them instantly as the training is running. However, if the overfitting of data starts over during the training of the net, the errors will be larger in the validation subset. In addition, if the validation error increases above the minimum at a significantly different iteration number of iterations and, at the same time, becomes larger than the error of the test subset, the training stops, and network weights and biases return to the smallest validation error (Beale et al., 2020).

Investigation of accuracy

There are numerous validation metrics, but using the appropriate validation metric is essential for evaluating a predictive model, and physical observations are also necessary for improving model performance. This study compared and validated different structures and various training and transferring algorithms for assessing and measuring the agreement between actual and predicted values. Picking the suitable validation metric is crucial and challenging for assessing results and for minimizing errors. In the scope of assessing results to test performance, all structures and models trained and tested in this study were compared based on their evaluation by appropriate metrics. Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and the Coefficient of Determination (R2), together with the primary metrics used to extract these above-mentioned metrics, were adopted. An R2 value close to 1 indicates good performance, and RMSE and also MAE near 0 means lower error. In this scenario reliable performance can be ensured; and vice versa: a large deviance between RMSE and MAE values points to significant variations in the distribution of error. However, the limitations of R2 are listed in Misra and He (2020); furthermore, RMSE and MAE have an accurate evaluation compared to other validation metrics because if MAE has more stability, RMSE is more sensitive to error. For our purposes and for prediction values validation, Standard Error Mean (SEM) was used, which is the original Standard Deviation (SD) of the sample size divided by the square root of the sample size. The other metrics like Error (E), Mean Error (ME), and Mean Square Error (MSE) were involved in originating validation equations. The Total Sum of Square (SStot) and the Sum of the Square of Residuals (SSres) were adopted for the derivation of R2 and adj. R2. Analytically, pertaining validation equations are as follows:

Thus

Contribution analysis of input variables

The contribution analysis of input variables on the associated outputs is called feature importance, variable importance, or relative importance. However, this analysis indicates each feature’s relative importance in the model driving a prediction by showing changes in the averages of predictions in the event that the feature value changes. The substitute of the input variables with high relative importance (RI) values significantly affects results as compared to variables that have lower RI values (Nabipour & Keshavarz, 2017; Rezakazemi et al., 2011; Vatankhah et al., 2014). Concerning this, there are various techniques to calculate feature importance and such techniques include Garson (Garson, 1991), Most Squares (Ibrahim, 2013), and Connection Weights (Olden & Jackson, 2002). These methods are established based on the connection weights of neurons and are depicted in Eqs. (36)–(38), respectively. In addition, there is also built-in feature importance in Gradient Boost and CatBoost regression. In the literature, different studies adopted feature importance to calculate and evaluate their impacts on variables (Ding, 2019; Rezakazemi et al., 2011; Shabanzadeh et al., 2015; Vatankhah et al., 2014; Zarei et al., 2020; Zhou et al., 2015), but the first time feature importance was adopted to find relative importance in ISF was in Najm & Paniti, 2021b and later in Najm & Paniti, 2021a; Najm et al., 2021). The equations below (36–38) describe the above as follows:

where nv number of neurons in the input layer, nh number of neurons in the hidden layer, yj absolute value of connection weights between the input and the hidden layers, hOj absolute value of connection weights between the hidden and the output layers, \(\sum_{j=1}^{{n}_{v}}{\left({y}_{vj}^{i}-{y}_{vj}^{f}\right)}^{2}\) sum squared difference between initial connection weights and final connection weights from the input layer to the hidden layer, \(\sum_{j=1}^{{n}_{v}}\sum_{v=1}^{n}{\left({y}_{vj}^{i}-{y}_{vj}^{f}\right)}^{2}\) total of sum squared difference of all inputs. \(\sum_{j=1}^{{n}_{v}}{y}_{vj}{y}_{jo}\) sum of product of final weights of connection from input neuron to hidden neurons with the connection from hidden neurons to output neurons. \(j\) total number of hidden neurons. o output neurons.

Another way to present the input variables is the SHAP value, which shows a features’ importance by quantifying the contributions of each feature and by calculating the contributions of those features that have contributed to the prediction to the greatest extent. SHAP (SHapley Additive exPlanations) presented by Lundberg and Lee (Lundberg & Lee, 2017) is a unified framework for interpreting predictions, and its interpretation has been inspired by several methods (Bach et al., 2015; Ribeiro et al., 2016; Štrumbelj & Kononenko, 2014). The SHAP principal calculation (Lundberg et al., 2018) is shown in Eq. 39.

where: M number of input features. N set of all input features. S set of non-zero feature indices (features observed and not unknown). \({f}_{x}\left(S\right)=E\left[f\left(X\right)\left|{X}_{s}\right.\right]\) is the model’s prediction for input xx, where \(E\left[f\left(X\right)\left|{X}_{s}\right.\right]\) is the expected value of the function conditioned on a subset S of input features.

Results and discussion

Regarding the prediction of pillow effect and wall diameter by way of applying different models and structures, Table 9 depicts values of different validation metrics used for checking performance.

It is imperative to distinguish between training and test errors. Training errors are calculated using the same data as the ones used for training the model, but a stored full dataset unknown to the model is used for calculating test error. It can be concluded that the R2 value of the training dataset implies variance within the trained samples through the model, whereas the R2 value of the testing dataset indicates the predictive quality of the model. From Table 9, it is clear that there is a significant disparity between the different techniques in favor of the developed model as far as the prediction of the pillow effect is concerned. Using the features to predict a pillow and using the same features to predict the wall diameter are two different problems. The features can be readily used to predict the wall diameter. However, not all the prediction models can learn the connections among the data provided and use such information for the prediction of the pillow. A few possible reasons for this are the following: the problem has a stochastic nature, the data set lacks some critical features, the data are insufficient, the model is too simple for the problem, or the combination of any of the preceding causes. All the above-mentioned issues would cause a disparity in results with respect to the estimation of the model’s real predicting capabilities in the case of unseen data. Therefore, upon comparing the R2 of the testing with respect to all models and algorithms, it can be noted that the developed MLP model offered the best performance in the prediction of the pillow effect. The best performance of predicting the pillow effect was achieved by using BFGS Quasi-Newton (BFG)—Trainbfg as a training function and Symmetric Sigmoid (Tansig) as a transfer function. Regarding the prediction of wall diameter, the Gradient Boosting Regression (GBR) has the largest R2 value as a model performance in terms of wall diameter prediction, and the developed MLP model with one output comes in second in terms of R2 (see Fig. 14, which exhibits the techniques of the ANN used for predicting the pillow effect and wall diameter values of SPIF components). Due to the fact that the R2 value of testing using the NBN technique is negative, R2 was converted to zero for illustration purposes in Fig. 14. Since R2 is defined as the percentage of variance explained by the fit, the fit can be worse than the simple application of a horizontal line for this purpose, in which case R2 will be negative. Nevertheless, from a logical point of view, GBR’s R2 value is slightly larger than the R2 value of the developed model, and all other validation metrics indicate that the developed model is better than GBR in terms of performance. The best performance of the developed MLP model to predict wall diameter was obtained by using the Levenberg–Marquardt (LM)—Trainlm training function and Softmax transfer function. It is noteworthy that the two outputs model was not able to record good performance concerning the other techniques. For checking the results of all the training and transfer functions, see Table 11, 12, 13, 14 in the Appendix.

Predictive testing data for both pillow effect and wall diameter using different ANN techniques versus the actual data are presented in Figs. 15a–d and 16a–d. The solid line displays an exact theoretical fit of actual and predicted values, with superimposed data over them. The distribution and deviation situated far away from the solid line are based on the model’s ability to predict the pillow effect or wall diameter values with the lowest errors.

For discovering an alternative approach of predicting pillow effect and wall diameter in an easy, practical and faster way—instead of building, running, and evaluating a new ANN model each time recurringly—analytical equations for the prediction of pillow effect and wall diameter of parts formed by SPIF were extracted from the best model. This gave rise to a new method: by way of substituting only the process parameters, the obtained equations can be directly used for predicting either pillow effect or the wall diameter. Therefore, Eqs. 42 and 45 were formed, which needed constant weights and biases imported from the ANN network with the best performance. The extracted ANN network weights and biases functioned as one set of input weight (IW) and layer weight (LW). The IW is between the inputs and the hidden layer, and the LW is between the hidden and the output layers. The biases for each layer are (b1) and (b2). In the Appendix, Table 15 for pillow effect and Table 16 for wall diameter provide b1, b2, IW, and LW obtained from the best trained ANN model.

With respect to relative importance and weights analysis, considerable significant factors affecting pillow effect and wall diameter are shown in Figs. 17a–d and 18a–d. Different methods based on weights and biases for finding the contributions of the input variables affecting output are pillow effect and wall diameter. Regarding pillow effect (see Fig. 17a–d), all of the methods show that changes in tool materials and tool shapes have a significant effect with insignificant variance on the Garson method (see Fig. 17a). Tooltip roughness (Rz) is listed at the end of the importance list as tooltip roughness has the lowest impact in all cases except for the connection weights method (see Fig. 17b). Concerning wall diameter (see Fig. 18a–d), tooltip roughness (Ra) has the most significant impact. On the other hand, the Catboost method indicates that tool end radius is the most significant impact on wall diameter (see Fig. 18d). Changes in tool shapes are always affected on the second level of the contribution’s impact. Below, tool materials and tool end radius are listed with the exception of the CatBoost method.

In an attempt to do away with this controversy in the variation of the relative importance of input parameters as yielded by the different calculation methods, Fig. 19 was created, which show the average relative importance of the four previously mentioned methods. It has been concluded that tool materials and shapes have the most significant influence on pillow effect. Importantly, the difference in tool end radius recorded the lowest value. As for wall diameter, the surface roughness of the tool (Ra) is the highest effective value, followed by the change in tool shape. The least influential parameter was change of tool materials.

SHAP is a theoretical method that explains prediction by models. It can estimate and explain how each feature contributes and influences the model. This technique estimates each feature’s contribution to each row of the dataset. The summary plots in Fig. 20 for pillow effect and Fig. 21 for wall diameter illustrate the individual feature’s importance with respect to their feature effects. Each point on the summary plot represents a Shapley value for a feature. Axis Y defines the feature’s level, and axis X shows Shapley values. The color of the plots denotes the value of the feature from lower to higher importance. The features are listed based on their importance. Shapley values represent the relative distribution of predictions among the features. It is worth stating that the same values of a certain feature can have different contributions towards an output, as dictated by other feature values for that same row.

Figures 20 and 21 represent every data point in each dataset feature as a single SHAP value on axis X and each feature on axis Y. The color bar indicates the feature’s value: red means high values, and blue indicates low values. Grey points represent categorical inputs. Values on the right have a “positive” effect on the output, and values on the left have a “negative” effect. Positive and negative are merely directional terms and are related to the direction in which the model’s output is affected. This, however, does not indicate the model’s performance. For example, the most distant left point for tool radius is a high value for the tool radius feature in the first raw, therefore it appears in red. This high value for tool radius influenced wall diameter as a model output by approximately − 4. The predictive model without that feature would have predicted a value of 4 or higher. Similarly, the rightmost red point of the surface roughness feature (Ra) with a value of 2 means that the absence of this value leads to the prediction of a wall diameter with a value below − 2. That means that broader extensions of the data point indicate the most effective features.

To understand how sharp value changes during the prediction process, three different examples shown in Table 10 illustrate three different behaviors: one on the left side (negative), one on the right side (positive), and one balanced between the negative and positive sides. The colors used are only for purposes of demonstration.

The prediction models in Table 10 were visualized using a SHAP decision plot, which uses cumulative SHAP values. Each plotted line explains a single model prediction. Figure 22a plots all the pillow effect predictions, and Fig. 22b plots three prediction values as mentioned earlier.

SHAP decision plot a all prediction values, and b three prediction values in Table 10

Each value is represented individually: Fig. 23 shows total positive features values, Fig. 24 total negative features values, and Fig. 25 displays both positive and negative features values. The three figures mentioned earlier are presented on (a) a SHAP decision plot, (b), a SHAP bar plot, and (c and d) on a SHAP force plot. The SHAP force plot shows exactly which features had the most extensive influence on the model’s prediction concerning individual observation values. The difference between (c and d) for all the figures is that (c) presents the value of the features, and (d) presents the feature SHAP value for each feature value.

As attested by the waterfall plots (Figs. 23, 24, 25) of various SPIF components selected in different conditions, the order of the impacts of parameters varied in the different components. This signifies that the estimation of parameters is the result of multiple factors. One single effective parameter that sufficiently affects output (pillow effect or wall diameter) cannot be captured, given that other parameters could affect the outcome of the model. Furthermore, complexity in the formability of parts using SPIF, which consists in stretching, bending, and shearing with cyclic effects, varies according to conditions, which thereby yields varying orders of impacting parameters in the individual components. Hence, this analysis reveals that each process condition is unique and that multiple factors interact and differently impact outcomes in individual parts. Also, forming tools with various materials show different hardness, which generates different surface strains. The same also holds true regarding different tool geometry. However, it is essential to point out that different effects on components occur if two tools made from two different materials but with identical geometry are used. This phenomenon is caused by different surface strains. Differing hardness values result in varying tool tip surface roughness, which thereby affects parts accuracy in terms of pillow effect and wall diameter. Furthermore, elastic deformation at the tooltip will produce dimensional inaccuracies of the formed part, and the plastic deformations will permanently damage the forming tool thereby excessively impacting the accuracy of the components, as explained by Kiridena et al. (2016).

Conclusions

This paper offered and described different algorithms and model structures of machine learning to predict pillow effect and wall diameter concerning the geometric accuracy of SPIF components produced from foil aluminum alloy sheets. The study’s primary goal was to analyze the feature importance of SPIF parameters involved in the forming process with a view to determining the best model and architecture. Consequently, findings were adopted to derive two analytic equations for theoretically calculating pillow effect and wall diameter. The most significant findings of the study are as follows:

-

1.

In the case of the training and testing datasets, with the help of R2 values, CatBoost successfully predicted the wall diameter ranging from 0.9714 to 0.8947, and also successfully predicted pillow effect between the range of 0.6062 and 0.6406. Concerning R2 values between 0.9645 and 0.9082 for wall diameter and between 0.7506 and 0.7129 for pillow effect, respectively, it was shown that the Levenberg–Marquardt training algorithm yielded the best performance as a prediction model based on different validation metrics. NBN offers no notable results, while GBR provides a reliable prediction of the wall diameter.

-

2.

A one-output multilayer perceptron (MLP) solution network showed better results than a network with two outputs.

-

3.

The most promising performance of predicting pillow effect was achieved by way of using BFGS Quasi-Newton (BFG)—Trainbfg as a training function, and Symmetric sigmoid (Tansig) as a transfer function.

-

4.

The best performance of the developed MLP model to predict wall diameter was achieved by way of the Levenberg–Marquardt (LM)—Trainlm training function and softmax transfer function.

-

5.

This research project marks the first time the relative importance (RI) method using SHapley Additive exPlanations (SHAP) was used to assess SPIF factors on outputs.

-

6.

Relative importance (RI) revealed that tool materials and shapes are the most influential factors impacting the pillow effect. Surface roughness of the tool (Ra), followed by changes in tool shapes with the highest effective values on wall diameter.

-

7.

The lowest effective parameter on pillow effect was tool end radius, and the lowest effective parameter for wall diameter was changes in tool materials.

-

8.

Computation of the parameters is achieved by accumulating many factors; in reality, individual parameters are not sufficient enough to affect output (pillow effect or wall diameter). In other words, with respect to other parameter values in the same row, identical values of one parameter may contribute to an outcome in a number of different ways.

Data availability

Not applicable.

References

A. C. M. & Guido, S. (1997). Introduction to with python learning machine.

Afzal, M. J. (2021). Study on the single point incremental sheet forming of AISI 321 variable wall angle geometry. https://doi.org/10.21203/rs.3.rs-836822/v1.

Al-Ghamdi, K., & Hussain, G. (2015). The pillowing tendency of materials in single-point incremental forming: Experimental and finite element analyses. Journal of Engineering Manufacture, 229(5), 744–753. https://doi.org/10.1177/0954405414530906

Amala Justus Selvam, M., Velu, R., & Dheerankumar, T. (2017). Study of the influence of the process variables on formability and strain distribution in incremental sheet metal working of AA 1050 Sheets, pp. 493–505.

Ambrogio, G., Cozza, V., Filice, L., & Micari, F. (2007). An analytical model for improving precision in single point incremental forming. Journal of Materials Processing Technology, 191(1–3), 92–95. https://doi.org/10.1016/j.jmatprotec.2007.03.079

Amirjan, M., Khorsand, H., Siadati, M. H., & Eslami Farsani, R. (2013). Artificial neural network prediction of Cu–Al2O3 composite properties prepared by powder metallurgy method. The Journal of Materials Research and Technology, 2(4), 351–355. https://doi.org/10.1016/j.jmrt.2013.08.001

Azaouzi, M., & Lebaal, N. (2012). Tool path optimization for single point incremental sheet forming using response surface method. Simulation Modelling Practice and Theory, 24, 49–58. https://doi.org/10.1016/j.simpat.2012.01.008

Bach, S., Binder, A., Montavon, G., Klauschen, F., Müller, K.-R., & Samek, W. (2015). On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE, 10(7), e0130140. https://doi.org/10.1371/journal.pone.0130140

Bai, L., Li, Y., Yang, M., Lin, Y., Yuan, Q., & Zhao, R. (2019). Modeling and analysis of single point incremental forming force with static pressure support and ultrasonic vibration. Materials (basel), 12(12), 1899. https://doi.org/10.3390/ma12121899

Beale, M. H., Hagan, M., & Demuth, H. (2019). Deep Learning Toolbox Getting Started Guide. Deep Learn. Toolbox. https://doi.org/10.1016/j.neunet.2005.10.002

Beale, M. H., Hagan, M. T., & Demuth, H. B. (2013). Neural network toolboxTM user’s guide R2013b. Mathworks Inc.

Beale, M. H., Hagan, M. T., & Demuth, H. B. (2020) Deep learning toolboxTM user’s guide how to contact MathWorks.

Bishop, C. M. (1995). Neural networks for pattern recognition. Oxford University Press Inc.

Chen, M., et al. (2021). Task-wise split gradient boosting trees for multi-center diabetes prediction. In Proc. ACM SIGKDD int. conf. knowl. discov. data min., pp. 2663–2673. https://doi.org/10.1145/3447548.3467123.

Davis, J. R. (2001). Aluminum and aluminum alloys. ASM International, 42(351–416), 2001. https://doi.org/10.1136/oem.42.11.746

Demuth, H. (2000). Neural network toolboxTM 6 user’s guide, vol. 9, no. 4.

Ding, H., Luo, W., Yu, Y., & Chen, B. (2019). Construction of a Robust cofactor self-sufficient bienzyme biocatalytic system for dye decolorization and its mathematical modeling. International Journal of Molecular Science, 20(23), 6104. https://doi.org/10.3390/ijms20236104

Dorogush, A. V., Ershov, V., & Gulin, A. (2018). CatBoost: Gradient boosting with categorical features support. pp. 1–7. http://arxiv.org/abs/1810.11363.

Edwards, W. L., Grimm, T. J., Ragai, I., & Roth, J. T. (2017). Optimum process parameters for Springback reduction of single point incrementally formed polycarbonate. Procedia Manufacturing, 10, 329–338. https://doi.org/10.1016/j.promfg.2017.07.002

Essa, K., & Hartley, P. (2011). An assessment of various process strategies for improving precision in single point incremental forming. International Journal of Material Forming, 4(4), 401–412. https://doi.org/10.1007/s12289-010-1004-9

Ezugwu, E. O., Fadare, D. A., Bonney, J., Da Silva, R. B., & Sales, W. F. (2005). Modelling the correlation between cutting and process parameters in high-speed machining of Inconel 718 alloy using an artificial neural network. International Journal of Machine Tools and Manufacture, 45(12–13), 1375–1385. https://doi.org/10.1016/j.ijmachtools.2005.02.004

Garson, G. D. (1991). Interpreting neural-network connection weights. AI Expert, 6(4), 46–51.

Gatea, S., Ou, H., & McCartney, G. (2016). Review on the influence of process parameters in incremental sheet forming. International Journal of Advanced Manufacturing Technology, 87(1–4), 479–499. https://doi.org/10.1007/s00170-016-8426-6

Hagan, M. T., Demuth, H. B., Beale, M. H., & De Jesús, O. (2014). Neural network design. Martin Hagan.

Hartmann, C., Opritescu, D., & Volk, W. (2019). An artificial neural network approach for tool path generation in incremental sheet metal free-forming. Journal of Intelligent Manufacturing, 30(2), 757–770. https://doi.org/10.1007/s10845-016-1279-x

Hirsch, J. (2006). AlMn1Mg1 for beverage cans. Weinheim Wiley-VCH Verlag.

Hussaini, S. M., Singh, S. K., & Gupta, A. K. (2014). Experimental and numerical investigation of formability for austenitic stainless steel 316 at elevated temperatures. J. Mater. Res. Technol., 3(1), 17–24. https://doi.org/10.1016/j.jmrt.2013.10.010

Ibragimov, B., & Gusev, G. (2019). Minimal variance sampling in stochastic gradient boosting. Advances in Neural Information Processing Systems, 32, 15061–15071.

Ibrahim, O. M. (2013). A comparison of methods for assessing the relative importance of input variables in artificial neural networks. Journal of Applied Sciences Research, 9(11), 5692–5700.

Isidore, B. B. L. (2014). Controlling pillow defect in single point incremental forming through varying tool geometry

Isidore, B. B. L., Hussain, G., Shamchi, S. P., & Khan, W. A. (2016). Prediction and control of pillow defect in single point incremental forming using numerical simulations. Journal of Mechanical Science and Technology, 30(5), 2151–2161. https://doi.org/10.1007/s12206-016-0422-0

Jackson, K., & Allwood, J. (2009). The mechanics of incremental sheet forming. Journal of Materials Processing Technology, 209(3), 1158–1174. https://doi.org/10.1016/j.jmatprotec.2008.03.025

Kaufman, J. G. (2000). Applications for aluminum alloys and tempers. Introduction to Aluminum Alloys and Their Tempers, 1100, 242.

Khan, M. S., Coenen, F., Dixon, C., El-Salhi, S., Penalva, M., & Rivero, A. (2015). An intelligent process model: Predicting springback in single point incremental forming. International Journal of Advanced Manufacturing Technology, 76(9–12), 2071–2082. https://doi.org/10.1007/s00170-014-6431-1

Kiridena, V. S., Xia, Z. C., & Ren, F. (2016). High stiffness and high access forming tool for incremental sheet forming, United States, Patent Application Publication [US8021501B2]. US8021501B2, vol. 1, no. 19.

Kondayya, D., & Gopala Krishna, A. (2013). An integrated evolutionary approach for modelling and optimization of laser beam cutting process. International Journal of Advanced Manufacturing Technology, 65(1–4), 259–274. https://doi.org/10.1007/s00170-012-4165-5

Kumar, A., & Gulati, V. (2020). Optimization and investigation of process parameters in single point incremental forming. Indian Journal of Engineering and Materials Science, 27(2), 246–255.

Kumar, Y., & Kumar, S. (2015). Incremental sheet forming (ISF), pp. 29–46.

Lela, B., Bajić, D., & Jozić, S. (2009). Regression analysis, support vector machines, and Bayesian neural network approaches to modeling surface roughness in face milling. International Journal of Advanced Manufacturing Technology, 42(11–12), 1082–1088. https://doi.org/10.1007/s00170-008-1678-z

Leszak, E. (1967). Apparatus and process for incremental dieless forming, [US3342051A].

Li, E. (2013). Reduction of Springback by intelligent sampling-based LSSVR metamodel-based optimization. International Journal of Material Forming, 6(1), 103–114. https://doi.org/10.1007/s12289-011-1076-1

Low, D. W. W., Chaudhari, A., Kumar, D., & Kumar, A. S. (2022). Convolutional neural networks for prediction of geometrical errors in incremental sheet metal forming. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-022-01932-1

Lu, H. B., Le Li, Y., Liu, Z. B., Liu, S., & Meehan, P. A. (2014). Study on step depth for part accuracy improvement in incremental sheet forming process. Advances in Materials Research, 939, 274–280. https://doi.org/10.4028/www.scientific.net/AMR.939.274

Lundberg, S. M., Erion, G. G., & Lee, S.-I. (2018). Consistent individualized feature attribution for tree ensembles, no. 2, 2018. http://arxiv.org/abs/1802.03888.

Lundberg, S. M., & Lee, S.-I. (2017). A unified approach to interpreting model predictions. In Advances in neural information processing systems, vol. 30. https://proceedings.neurips.cc/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf.

Marouani, H., & Aguir, H. (2012). Identification of material parameters of the Gurson–Tvergaard–Needleman damage law by combined experimental, numerical sheet metal blanking techniques and artificial neural networks approach. International Journal of Material Forming, 5(2), 147–155. https://doi.org/10.1007/s12289-011-1035-x

Matsubara, S. (1994). Incremental backward bulge forming of a sheet metal with a hemispherical head tool-a study of a numerical control forming system II. Journal of Japan Society for Technology of Plasticity, 35(406), 1311–1316.

Mease, D., & Wyner, A. (2008). Evidence contrary to the statistical view of boosting. Journal of Machine Learning Research, 9, 131–156.

Mezher, M. T., Barrak, O. S., Nama, S. A., & Shakir, R. A. (2021a). Predication of forming limit diagram and spring-back during SPIF process of AA1050 and DC04 sheet metals. Journal of Mechanical Engineering Research and Developments, 44(1), 337–345.

Mezher, M. T., Khazaal, S. M., Namer, N. S. M., & Shakir, R. A. (2021b). A comparative analysis study of hole flanging by incremental sheet forming process of AA1060 and DC01 sheet metals. Journal of Engineering Science and Technology, 16(6), 4383–4403.

Mezher, M. T., Namer, N. S. M., & Nama, S. A. (2018). Numerical and experimental investigation of using lubricant with nano powder additives in SPIF process. International Journal of Mechanical Engineering and Technology, 9(13), 968–977.

Micari, F., Ambrogio, G., & Filice, L. (2007). Shape and dimensional accuracy in single point incremental forming: State of the art and future trends. Journal of Materials Processing Technology, 191(1–3), 390–395. https://doi.org/10.1016/j.jmatprotec.2007.03.066

Misra, S., & He, J. (2020). Stacked neural network architecture to model the multifrequency conductivity/permittivity responses of subsurface shale formations. In Machine learning for subsurface characterization (pp. 103–127). Elsevier.

Mulay, A., Ben, B. S., Ismail, S., Kocanda, A., & Jasiński, C. (2018). Performance evaluation of high-speed incremental sheet forming technology for AA5754 H22 aluminum and DC04 steel sheets. Arch. Civ. Mech. Eng., 18(4), 1275–1287. https://doi.org/10.1016/j.acme.2018.03.004

Nabipour, M., & Keshavarz, P. (2017). Modeling surface tension of pure refrigerants using feed-forward back-propagation neural networks. International Journal of Refrigeration, 75, 217–227. https://doi.org/10.1016/j.ijrefrig.2016.12.011

Nagargoje, A., Kankar, P. K., Jain, P. K., & Tandon, P. (2021). Application of artificial intelligence techniques in incremental forming: A state-of-the-art review. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-021-01868-y

Najm, S. M., & Paniti, I. (2018). Experimental investigation on the single point incremental forming of AlMn1Mg1 foils using flat end tools. In IOP conf. ser. mater. sci. eng., vol. 448, p. 012032. https://doi.org/10.1088/1757-899X/448/1/012032

Najm, S. M., & Paniti, I. (2020). Study on Effecting Parameters of Flat and Hemispherical end Tools in SPIF of Aluminium Foils. Tehnički vjesnik – Technical Gazette. https://doi.org/10.17559/TV-20190513181910.

Najm, S. M., & Paniti, I. (2021a). Artificial neural network for modeling and investigating the effects of forming tool characteristics on the accuracy and formability of thin aluminum alloy blanks when using SPIF. International Journal of Advanced Manufacturing Technology, 114(9–10), 2591–2615. https://doi.org/10.1007/s00170-021-06712-4

Najm, S. M., & Paniti, I. (2021b). Predict the effects of forming tool characteristics on surface roughness of aluminum foil components formed by SPIF using ANN and SVR. International Journal of Precision Engineering and Manufacturing, 22(1), 13–26. https://doi.org/10.1007/s12541-020-00434-5

Najm, S. M., Paniti, I., Trzepieciński, T., Nama, S. A., Viharos, Z. J., & Jacso, A. (2021). Parametric effects of single point incremental forming on hardness of AA1100 aluminium alloy sheets. Materials (basel), 14(23), 7263. https://doi.org/10.3390/ma14237263

Najm, S. M., Paniti, I., & Viharos, Z. J. (2020). Lubricants and affecting parameters on hardness in SPIF of AA1100 aluminium. In 17th IMEKO TC 10 EUROLAB Virtual Conf. “global trends testing, diagnostics insp. 2030,” pp. 387–392.

Nasulea, D., & Oancea, G. (2021). Achieving accuracy improvements for single-point incremental forming process using a circumferential hammering tool. Metals (basel), 11(3), 482. https://doi.org/10.3390/met11030482

Olden, J. D., & Jackson, D. A. (2002). Illuminating the ‘black box’: A randomization approach for understanding variable contributions in artificial neural networks. Ecol. Modell., 154(1–2), 135–150. https://doi.org/10.1016/S0304-3800(02)00064-9

Paniti, I., Viharos, Z. J., Harangozó, D., & Najm, S. M. (2020). Experimental and numerical investigation of the single-point incremental forming of aluminium alloy foils. Acta IMEKO, 9(1), 25–31.

Pedregosa, F., et al. (2011). Scikit-learn: Machine learning in {P}ython”. The Journal of Machine Learning Research, 12, 2825–2830.

Principe, J., Euliano, N. R., & Lefebvre, W. C. (1997). Neural and adaptive systems: Fundamentals through simulation: multilayer perceptrons. In Neural adapt. syst. fundam. through simulation©, pp. 1–108. https://doi.org/10.1002/ejoc.201200111.

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V., & Gulin, A. (2018). Catboost: Unbiased boosting with categorical features. Advances in Neural Information Processing Systems., 208(4), 6638–6648.

Rezakazemi, M., Razavi, S., Mohammadi, T., & Nazari, A. G. (2011). Simulation and determination of optimum conditions of pervaporative dehydration of isopropanol process using synthesized PVA–APTEOS/TEOS nanocomposite membranes by means of expert systems. Journal of Membrane Science, 379(1–2), 224–232. https://doi.org/10.1016/j.memsci.2011.05.070

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). Why should I trust you?. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp. 1135–1144. https://doi.org/10.1145/2939672.2939778.

Riedmiller, P. M. Machine learning: Multi layer perceptrons. Albert-Ludwigs-University Freibg. AG Maschinelles Lernen. Retrieved from http://ml.informatik.uni-freiburg.de/_media/documents/teaching/ss09/ml/mlps.pdf.

Shabanzadeh, P., Yusof, R., & Shameli, K. (2015). Artificial neural network for modeling the size of silver nanoparticles’ prepared in montmorillonite/starch bionanocomposites. Journal of Industrial and Engineering Chemistry, 24, 42–50. https://doi.org/10.1016/j.jiec.2014.09.007

Shahin, M., Maier, H. R., & Jaksa, M. B. (2000). Evolutionary data division methods for developing artificial neural network models in geotechnical engineering Evolutionary data division methods for developing artificial neural network models in geotechnical engineering by M A Shahin M B Jaksa Departmen.

Skjoedt, M., Hancock, M. H., & Bay, N. (2007). Creating helical tool paths for single point incremental forming. Key Engineering Materials, 344, 583–590. https://doi.org/10.4028/www.scientific.net/KEM.344.583

Štrumbelj, E., & Kononenko, I. (2014). Explaining prediction models and individual predictions with feature contributions. Knowledge and Information Systems, 41(3), 647–665. https://doi.org/10.1007/s10115-013-0679-x

Szpunar, M., Ostrowski, R., Trzepieciński, T., & Kaščák, Ľ. (2021). Central composite design optimisation in single point incremental forming of truncated cones from commercially pure titanium grade 2 sheet metals. Materials (basel), 14(13), 3634. https://doi.org/10.3390/ma14133634

Trzepieciński, T., Kubit, A., Dzierwa, A., Krasowski, B., & Jurczak, W. (2021c). Surface finish analysis in single point incremental sheet forming of rib-stiffened 2024–T3 and 7075–T6 alclad aluminium alloy panels. Materials (basel), 14(7), 1640. https://doi.org/10.3390/ma14071640

Trzepieciński, T., Najm, S. M., Oleksik, V., Vasilca, D., Paniti, I., & Szpunar, M. (2022a). Recent developments and future challenges in incremental sheet forming of aluminium and aluminium alloy sheets. Metals (basel), 12(1), 124. https://doi.org/10.3390/met12010124

Trzepieciński, T., Najm, S. M., Sbayti, M., Belhadjsalah, H., Szpunar, M., & Lemu, H. G. (2021b). New advances and future possibilities in forming technology of hybrid metal-polymer composites used in aerospace applications. Journal of Composites Science, 5(8), 217. https://doi.org/10.3390/jcs5080217

Trzepieciński, T., Oleksik, V., Pepelnjak, T., Najm, S. M., Paniti, I., & Maji, K. (2021a). Emerging trends in single point incremental sheet forming of lightweight metals. Metals (basel), 11(8), 1188. https://doi.org/10.3390/met11081188

Trzepieciński, T., Szpunar, M., & Ostrowski, R. (2022b). Split-plot I-optimal design optimisation of combined oil-based and friction stir rotation-assisted heating in SPIF of Ti-6Al-4V titanium alloy sheet under variable oil pressure. Metals (basel), 12(1), 113. https://doi.org/10.3390/met12010113

Vatankhah, E., Semnani, D., Prabhakaran, M. P., Tadayon, M., Razavi, S., & Ramakrishna, S. (2014). Artificial neural network for modeling the elastic modulus of electrospun polycaprolactone/gelatin scaffolds. Acta Biomaterialia, 10(2), 709–721. https://doi.org/10.1016/j.actbio.2013.09.015

Wei, H., Hussain, G., Shi, X., Isidore, B. B. L., Alkahtani, M., & Abidi, M. H. (2020). Formability of materials with small tools in incremental forming. Chinese Journal of Mechanical Engineeing, 33(1), 55. https://doi.org/10.1186/s10033-020-00474-y

Yu, H., & Wilamowski, B. M. (2009a). C++ implementation of neural networks trainer. In Proc. - 2009a int. conf. intell. eng. syst. INES 2009a, pp. 257–262. https://doi.org/10.1109/INES.2009.4924772.

Yu, H., & Wilamowski, B. M. (2009b) Efficient and reliable training of neural networks. In Proc. - 2009b 2nd conf. hum. syst. interact. HSI ’09, pp. 109–115. https://doi.org/10.1109/HSI.2009.5090963.

Zain, A. M., Haron, H., & Sharif, S. (2010). Prediction of surface roughness in the end milling machining using Artificial Neural Network. Expert Systems with Applications, 37(2), 1755–1768. https://doi.org/10.1016/j.eswa.2009.07.033

Zarei, M. J., Ansari, H. R., Keshavarz, P., & Zerafat, M. M. (2020). Prediction of pool boiling heat transfer coefficient for various nano-refrigerants utilizing artificial neural networks. Journal of Thermal Analysis and Calorimetry, 139(6), 3757–3768. https://doi.org/10.1007/s10973-019-08746-z

Zhang, Z., et al. (2016). Springback reduction by annealing for incremental sheet forming. Procedia Manuf., 5, 696–706. https://doi.org/10.1016/j.promfg.2016.08.057

Zhang, G., Eddy-Patuwo, B., & Hu, M. Y. (1998). Forecasting with artificial neural networks: The state of the art. International Journal of Forecasting, 14(1), 35–62.

Zhang, H., Lu, B., Chen, J., Feng, S., Li, Z., & Long, H. (2017). Thickness control in a new flexible hybrid incremental sheet forming process. Journal of Engineering Manufacture, 231(5), 779–791. https://doi.org/10.1177/0954405417694061

Zhou, B., et al. (2015). Relative importance analysis of a refined multi-parameter phosphorus index employed in a strongly agriculturally influenced watershed. Water, Air, and Soil Pollution, 226(3), 25. https://doi.org/10.1007/s11270-014-2218-0

Acknowledgements

The research reported in this paper was carried out in cooperation with the Budapest University of Technology and Economics (BME) and the Institute for Computer Science and Control (SZTAKI) and received support from the European Union project RRF-2.3.1-21-2022-00004 within the framework of the Artificial Intelligence National Laboratory. In addition, Imre Paniti would like to thank the project ‘Thematic Excellence Programme – National Challenges Subprogramme – Establishment of the Center of Excellence for Autonomous Transport Systems at Széchenyi István University (TKP2020-NKA-14)’ for its support. This research was also supported by Hungary’s TEMPUS Public Foundation and Stipendium Hungaricum Scholarship. Also, the corresponding author expresses his gratitude to research engineers Andor Bálint Viharos and Donát Takács at Budapest University of Technology and Economics, Department of Manufacturing Science and Engineering, for their help in the implementation of the CatBoost code.

Funding

Open access funding provided by Budapest University of Technology and Economics.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest and have no competing interests.

Ethical approval

Not applicable.

Inform consent

Not applicable.

Consent to publish

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix