Abstract

The Poisson process is an often employed model for the activity of neuronal populations. It is known, though, that superpositions of realistic, non-Poisson spike trains are not in general Poisson processes, not even for large numbers of superimposed processes. Here we construct superimposed spike trains from intracellular in vivo recordings from rat neocortex neurons and compare their statistics to specific point process models. The constructed superimposed spike trains reveal strong deviations from the Poisson model. We find that superpositions of model spike trains that take the effective refractoriness of the neurons into account yield a much better description. A minimal model of this kind is the Poisson process with dead-time (PPD). For this process, and for superpositions thereof, we obtain analytical expressions for several second-order statistical quantities, such as the count variability, inter-spike interval (ISI) variability and ISI correlations, and demonstrate the match with the in vivo data. We conclude that effective refractoriness is the key property that shapes the statistical properties of the superposition spike trains. We present new, efficient algorithms to generate superpositions of PPDs and of gamma processes that can be used to provide more realistic background input in simulations of networks of spiking neurons. Using these generators, we show in simulations that neurons which receive superimposed spike trains as input are highly sensitive to the statistical effects induced by neuronal refractoriness.


1 Introduction

Stochastic point processes are widely used in computational neuroscience to model the spiking of single neurons and neuronal populations (van Vreeswijk 2010). In simulation studies of neuronal networks, external spike input is commonly modeled as a Poisson process (Brunel 2000; Gerstner and Kistler 2002). However, neurons recorded in many areas of the brain (Shinomoto et al. 2003; Maimon and Assad 2009) as well as integrate-and-fire model neurons in a stationary background show inter-spike intervals that clearly deviate from the Poisson assumption. Immediately after a neuron produces an action potential, ion channel kinetics prevent the generation of another spike for a couple of milliseconds, which is referred to as the absolute refractoriness of the neuron. But even after the period of absolute refractoriness has passed, eliciting another spike through further input is rather unlikely, because following an action potential the membrane potential is hyperpolarized far below threshold. As the membrane potential gradually depolarizes, firing becomes more probable. This leads to a progressive recovery of firing probability, which takes place on a time scale of tens of milliseconds after each spike, as illustrated in Fig. 1(a). We call this time span the effective refractoriness. From a statistical point of view, the effective refractoriness and the absolute refractoriness contribute to the statistics of a single spike train in a similar way: both suppress short inter-spike intervals.

Effective refractoriness and superposition spike trains. (a) Membrane potential trajectories obtained during an in vivo intracellular recording (neuron 1). Shown are cutouts of the inter-spike intervals of the first 15 s of the recording. In the first 40 ms after each spike hardly any spikes occur, because the membrane is still recovering from reset. Only when the potential is close enough to the spiking threshold can excitatory input trigger a new spike. We call this phenomenon “effective refractoriness”. (b) Superposition of three spike trains. Adapted from Cox and Smith (1954)

A neuron embedded in a cortical network receives incoming spike trains from thousands of presynaptic neurons (Binzegger et al. 2004) (for a recent review on cortical connectivity see Boucsein et al. 2011). In order to model the summed input a neuron receives from its presynaptic partners it is therefore required to study superpositions of spike trains (see Fig. 1(b)). Refractoriness in a single spike train can be described in the framework of renewal processes (Cox 1962). In contrast to the superposition of Poisson processes, however, the superposition of renewal processes with refractoriness is not a Poisson process (Lindner 2006; Câteau and Reyes 2006) nor is it a renewal process (Cox and Smith 1954), complicating the analysis. The gamma process is an often employed renewal process that can model single spike trains with effective refractoriness (Kuffler et al. 1957). Recently Ostojic (2011) demonstrated that spike trains of spiking neurons driven by fluctuating input generally resemble gamma processes. Superpositions of gamma processes, however, are hard to analyze and simulation results only provide limited insights. Thus it is desirable to find a description of the spiking of cortical neurons at an intermediate level of detail between the Poisson process, which neglects all properties of the inter-spike-interval (ISI) distribution except for the mean, and the gamma process, which allows a good fit of the neuronal ISI histograms. Here, we use the Poisson process with dead time (PPD) as such an intermediate model (Johnson 1996). The PPD is a simple extension of the Poisson process, which produces spikes with equal probability at any time, except for a fixed duration of silence after each event. This time span is called the dead-time. The PPD has been used successfully before as a model of the discharges of auditory nerve fibers (Johnson and Swami 1983). 
Note, however, that in the current work the dead-time is used to model the effective refractoriness of a cortical neuron, which is on the order of tens of milliseconds. It is the simplicity of the PPD, compared with the gamma process and other renewal processes, that enables us to obtain the analytical results on the statistics of superimposed processes presented here.

Stochastic point processes can be described by a hazard function, which defines the stochastic intensity, typically conditioned on time and the spike history. Therefore, the hazard function is often also called the conditional intensity of the process. In the special case of a renewal process the hazard function only depends on the time that has passed since the last spike, which is also called the age. Given a hazard function that depends only on the age, an ensemble of processes will tend towards an equilibrium distribution of ages, which is called the stochastic equilibrium of the process. Renewal processes can, however, be generalized to inhomogeneous renewal processes by introducing a time dependence in the hazard function. For instance, in the case of the PPD, the dead-time can be fixed while the rate parameter is made time-dependent to model non-stationary input to a neuron. For such time-dependent input, ensembles of PPDs display stochastic transients, like overshoots of the firing rate in response to rapid changes of the input, which are caused by the dead-time. Due to the changing hazard function this point process operates far from its stochastic equilibrium, but because of its relative simplicity it can still be understood and analyzed quantitatively (Deger et al. 2010). Stochastic transients caused by refractoriness have been found to contribute to the precision of the neuronal response to fluctuating input (Berry and Meister 1998). Effective refractoriness is commonly incorporated, by means of a spike history term in the conditional intensity function, into nonstationary point process models (Kass and Ventura 2001; Meyer and van Vreeswijk 2002) and into generalized linear point process models of neuronal stimulus encoding (Paninski 2004; Pillow et al. 2008). In multivariate point process models, too, the spike history was found to be important for the statistical prediction of spike times (Truccolo et al. 2010); see Truccolo (2010) for an overview.
In the absence of a stimulus, however, the spontaneous neuronal activity can often be well described by stationary point processes. For spontaneous activity, the concept of encoding is not applicable, since it is unclear which quantities are encoded in the neuronal activity. Beyond applications in neuronal coding, point process theory is also instrumental to characterize neuronal spiking, in particular when it comes to comparing real brains with network models. Recurrent networks must be self-consistent: superimposed spike trains constitute the input to a neuron, whose response (output) must be compatible with the properties of its input (Câteau and Reyes 2006).

Here we investigate the statistics of superposition spike trains with stationary rates, both analytically for PPDs and numerically for superimposed spike trains from in vivo recordings. In Section 3.1 we demonstrate how the parameters of the PPD can be chosen by the method of moments (Tuckwell 1988) to accurately reproduce first- and second-order statistics of the spike trains of single neurons recorded in vivo. We investigate second-order statistical properties, in particular the Fano factor, the coefficient of variation of the ISIs, and the serial correlations between subsequent ISIs. These quantities are called second-order statistics since they involve first and second moments of the respective probability distributions. In Section 3.2 we introduce the auto-correlation function of the PPD, and Section 3.3 presents an analytical expression for the Fano factor depending on the counting window. In Section 3.4 we study the pooled spike trains from populations of neurons with effective refractoriness. We compare superpositions of the recorded spike trains with the corresponding superimposed model spike trains and find that the model matches their statistics remarkably well, much in contrast to the Poisson process. In models of recurrent networks, mean field theory can be applied to theoretically estimate the spike rate in the network (Brunel 2000), but it relies on the assumption that individual neurons spike like Poisson processes. In Section 3.5 we show that the firing rate of integrate-and-fire model neurons is in fact sensitive to refractoriness in the single spike train and explain the observed deviation compared to Poisson input.

To date, the superpositions of point processes other than Poisson had to be generated by superimposing numerous realizations of the single point process. If each simulated neuron is to receive independently generated superposition spike trains (corresponding to the Poisson spike trains used, for example, in Brunel (2000)) this generation procedure would slow down the simulation to an unbearable extent. Here we present novel algorithms which efficiently generate superpositions of arbitrary numbers of PPDs (Algorithm 1) and of gamma processes (Algorithm 2) in discretized time. The two generators require on the order of 10 to 100 times the number of computations that a Poisson process generator does. This factor is independent of the number of superimposed processes, which makes it feasible to use superpositions of PPD or gamma processes as population models in contemporary and future simulation studies.
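For reference, the slow baseline mentioned above, superimposing independently generated realizations, can be sketched in a few lines of Python. This is a minimal sketch and not Algorithm 1 or 2; the function names `ppd_train` and `naive_ppd_superposition` are hypothetical, and we assume that each component starts in stochastic equilibrium, with its first spike drawn from the forward recurrence time of the PPD. Each ISI is the dead-time d plus an exponential interval with rate parameter λ.

```python
import random


def ppd_train(lam, d, t_max, rng):
    """One PPD spike train on [0, t_max), started in stochastic equilibrium.

    lam is the rate parameter and d the dead-time of the PPD."""
    mu = d + 1.0 / lam  # mean ISI
    # The first spike follows the forward recurrence time of the equilibrium
    # process, whose density is survivor(t)/mu: uniform on [0, d) with
    # probability d/mu, otherwise d plus an exponential waiting time.
    if rng.random() < d / mu:
        t = rng.uniform(0.0, d)
    else:
        t = d + rng.expovariate(lam)
    spikes = []
    while t < t_max:
        spikes.append(t)
        t += d + rng.expovariate(lam)  # every later ISI: dead-time + exponential
    return spikes


def naive_ppd_superposition(n, lam, d, t_max, seed=0):
    """Pool n independent PPD trains; the cost grows linearly with n."""
    rng = random.Random(seed)
    pooled = []
    for _ in range(n):
        pooled.extend(ppd_train(lam, d, t_max, rng))
    pooled.sort()
    return pooled
```

Calling `naive_ppd_superposition(100, 1 / 0.06, 0.04, 10.0)` should return roughly n·t_max/μ = 10,000 spikes. Because the work grows linearly with n, this approach becomes impractical when every simulated neuron needs its own independent input.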

2 Materials and methods

2.1 In vivo neuron recordings

We use spike train data recorded intracellularly with sharp electrodes from neocortical neurons in the primary somatosensory cortex (S1) of Long–Evans rats in vivo, as published in Nawrot et al. (2007). We estimate the time-dependent spike rate with a Gaussian filter kernel with parameter σ = 2.5 s. From the original dataset consisting of the spike trains of eight neurons, we select the three spike trains which show the lowest rate variability and at least 500 spikes. These three spike trains are labeled neurons 1, 2 and 3 in the following. Table 1 lists the parameters characterizing the spike trains. Neurons 1 and 2 were recorded from female rats, neuron 3 from a male rat.

To check whether the serial interval correlations affect the statistical quantities we compute from the spike trains throughout the manuscript, we also shuffled the original spike trains, which removes serial interval correlations (Nawrot et al. 2007). To shuffle the spike trains, we compute the inter-spike-intervals (ISI), randomly permute them, and consider the cumulative sum of the permuted ISI as the shuffled spike train.
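The shuffling procedure can be sketched as follows. The helper name `shuffle_isis` is hypothetical, and anchoring the surrogate at the first original spike time is our assumption; the text only specifies the cumulative sum of the permuted ISIs.

```python
import random
from itertools import accumulate


def shuffle_isis(spike_times, seed=None):
    """ISI-shuffled surrogate of a sorted spike train.

    The ISIs are randomly permuted and cumulatively summed again, starting
    from the first original spike, so the surrogate keeps the spike count,
    the ISI distribution and the train's start and end, but loses serial
    ISI correlations."""
    if len(spike_times) < 2:
        return list(spike_times)
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    random.Random(seed).shuffle(isis)
    return list(accumulate(isis, initial=spike_times[0]))
```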

2.2 Superposition spike train and surrogate data generation

To construct superposition spike trains of n component processes from the recorded in vivo data, we split the neuron spike trains into n fragments of equal duration. In each of the fragments, the time of the beginning of the fragment is subtracted from each spike time. Then the fragments are superimposed, as depicted in Fig. 1(b). Thereby we consider the fragments of the spike train of the neuron as independent realizations of the same point process in equilibrium. We match the parameters of three different point processes to the recorded spike trains: a PPD as described in Section 3.1, a gamma process as in Appendix A and a Poisson process, which is defined by the rate of spikes only. In Figs. 2, 3 and 4 the error-bars denote the standard deviation from the mean across multiple realizations of the matched processes. Each of these realizations has the same duration as the original, unfragmented recording. Since the recorded spike trains are of finite duration, all statistical quantities we compute for the spike trains are estimates. We quantify their variance due to the finite duration of the recording from the statistics across many realizations of the matched processes of the same length.
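A minimal sketch of this fragmentation step follows, assuming the spike times and the recording interval [t_start, t_stop) are given in the same time unit; the function name `superimpose_fragments` is hypothetical.

```python
def superimpose_fragments(spike_times, n, t_start, t_stop):
    """Split [t_start, t_stop) into n fragments of equal duration, shift each
    fragment so that it starts at time zero and pool all shifted spikes."""
    frag_len = (t_stop - t_start) / n
    pooled = []
    for k in range(n):
        lo = t_start + k * frag_len
        hi = lo + frag_len
        # subtract the fragment onset from every spike time in the fragment
        pooled.extend(t - lo for t in spike_times if lo <= t < hi)
    pooled.sort()
    return pooled, frag_len
```

The returned fragment length is the duration of the superposition spike train, which is needed when rates or Fano factors are computed from it.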

Inter-spike-interval density and auto-correlation function. (a)–(c) Estimated probability density of the inter-spike interval (ISI, kernel density estimation with Gaussian kernel) of a neuron (black), ISI density of the Poisson process, Eq. (1) with rate \(\lambda=1/\hat{\mu}\) and d = 0 (blue), ISI density of the PPD matched to the mean (\(\hat{\mu}\)) and standard deviation (\(\hat{\sigma}\)) of the neural ISI using Eq. (6) (green), ISI density of the gamma process matched to \(\hat{\mu}\) and \(\hat{\sigma}\) (orange, see Appendix A). The inset shows the same data on a logarithmic scale. (d)–(f) Auto-correlation functions of single spike trains. Theoretical result (8) for the PPD (green curve), numerically computed auto-correlation of the gamma process via Eq. (8) (orange curve), in vivo data (black crosses), shuffled in vivo data (black circles), estimate of mean and standard deviation from repeated realizations of PPD and gamma processes of the same duration as the neural recording (error bars, colors as in (a)–(c)). Same parameters and data as in (a)–(c). The left, middle, and right column correspond to neurons 1, 2, and 3, respectively

Spike count variability in dependence of the counting window. Fano factor of neuronal spike trains and associated point processes as a function of the counting window length l. Theoretical result (14) (green curve) and simulated data of the PPD (green dots), neuronal data (black crosses), shuffled neuronal data (black circles), gamma process (orange dots), Poisson process (blue dots). Black arrows mark the points with l = nd, n = 1, 2, 3, .... (a)–(c) Data of neurons 1, 2, and 3, respectively. Same data and parameters as in Fig. 2

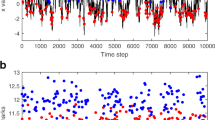

Variability of the ISI and serial correlations in superimposed spike trains. (a)–(c) Coefficient of variation of the ISI depending on the number n of superimposed neural spike trains (black crosses; black circles: shuffled neuronal data) and associated PPDs (green curve), gamma processes (orange error bars) and Poisson processes (blue error bars). Point process realizations have the same duration as the neural recording; error bars show the standard deviation across realizations. (d)–(f) Total serial correlation \(S_{n}\), Eq. (22), of the ISI of n pooled neuronal spike trains (black crosses) and matched processes. Same colors and symbols as in (a)–(c). Theoretical limit Eq. (23) for large numbers of superimposed PPDs (dashed green line). The left, middle, and right column correspond to neurons 1, 2, and 3, respectively. Same data and parameters as in Fig. 2

3 Results

3.1 Inter-spike interval statistics

In this section we match the Poisson process with dead-time (PPD) to recorded neural spiking activity by means of the inter-spike interval (ISI) statistics (Tuckwell 1988). The PPD is a renewal process, which means each ISI is drawn independently from the same distribution. The three recorded spike trains show serial interval correlations, as can be seen in Fig. 4(d)–(f) for n = 1, which has also been reported in Nawrot et al. (2007). Since renewal processes by definition do not have serial interval correlations, they can only be an approximate model of the spike trains’ statistics. We will evaluate this model a posteriori using surrogate methods. In Figs. 2, 3 and 4 results computed from the shuffled spike trains are shown as circles, whereas results computed from the original spike trains are shown as crosses. As can be seen in Fig. 4(d)–(f) for n = 1, ISI shuffling effectively removed the serial correlations in the single spike trains.

We define the PPD by its ISI density

\[ f(t)=\lambda\,e^{-\lambda(t-d)}\,\theta(t-d), \qquad (1) \]

where θ(x) = {1 for x ≥ 0, 0 else} denotes the Heaviside function, λ > 0 is the rate parameter and d ≥ 0 is the dead-time during which no spikes can occur. The mean and the variance of the ISI are

\[ \mu=d+\frac{1}{\lambda}, \qquad (2) \]

\[ \sigma^{2}=\frac{1}{\lambda^{2}}, \qquad (3) \]

where μ denotes the mean and σ² the variance of the ISI. The equilibrium rate of the process is

\[ \nu=\frac{1}{\mu}=\frac{\lambda}{1+\lambda d}, \qquad (4) \]

which is smaller than the rate parameter λ whenever d > 0. The coefficient of variation (CV) of the ISI is

\[ {\rm CV}=\frac{\sigma}{\mu}=\frac{1}{1+\lambda d}=1-\frac{d}{\mu}. \qquad (5) \]

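Since d ≤ μ, it follows that CV ≤ 1, with equality only for d = 0: the PPD is never more irregular than the Poisson process and is strictly more regular whenever d > 0. A PPD can be associated with the stationary spiking of a neuron, given empirical estimates \(\hat{\mu}\) and \(\hat{\sigma}\) of the mean and standard deviation of the neural ISI. By matching the mean and variance of the ISI (Eqs. (2) and (3)), we obtain, provided \(\hat{\sigma}\leq\hat{\mu}\) (i.e. an empirical CV of at most one), the parameters of the PPD as

\[ d=\hat{\mu}-\hat{\sigma},\qquad \lambda=\frac{1}{\hat{\sigma}}. \qquad (6) \]
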
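The moment matching can be checked numerically. The sketch below uses hypothetical helper names; it relies only on μ = d + 1/λ and σ = 1/λ, and on the fact that an ISI of the PPD is the dead-time plus an exponentially distributed interval with rate λ.

```python
import random


def match_ppd(mu_hat, sigma_hat):
    """Dead-time d and rate parameter lam of the PPD whose ISI mean and
    standard deviation equal the empirical estimates mu_hat and sigma_hat."""
    if not 0.0 < sigma_hat <= mu_hat:
        raise ValueError("moment matching needs 0 < sigma_hat <= mu_hat (CV <= 1)")
    return mu_hat - sigma_hat, 1.0 / sigma_hat


def ppd_moments(d, lam):
    """Mean ISI, ISI standard deviation, equilibrium rate nu and CV."""
    mu = d + 1.0 / lam
    sigma = 1.0 / lam
    return mu, sigma, 1.0 / mu, sigma / mu


def sample_ppd_isis(d, lam, size, seed=0):
    """Draw i.i.d. PPD intervals: the dead-time plus an exponential interval."""
    rng = random.Random(seed)
    return [d + rng.expovariate(lam) for _ in range(size)]
```

For example, an empirical mean ISI of 100 ms with a standard deviation of 60 ms maps to d = 40 ms and λ ≈ 16.7 s⁻¹, which gives CV = 0.6 and ν = 10 s⁻¹.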
Figure 2(a)–(c) show the normalized ISI histogram of recorded neural spike trains and the ISI density of the associated point process models, PPD, gamma process and Poisson process. The matched dead-time of the PPD accounts for the effective refractoriness in the spike train. On the level of the ISI density, the approximation by using a PPD instead of the true density consists in a relocation of the probability mass of the neural ISI histogram below the dead-time into the initial exponential decay of the density of the PPD just after the dead-time. Typically, the tail of the density of the matched PPD and of the neuron coincide very well. The gamma process, on the other hand, properly fits the density of the ISI.

3.2 Auto-correlation function

The superposition of PPDs is a simple model for the combined synaptic input a neuron receives in a network. We therefore need to determine the statistics of the PPD and of its superpositions. The auto-correlation function of a single spike train is an experimentally observable feature of neural activity, and it is also required to compute the statistics of superposition spike trains in later sections. Following Gerstein and Kiang (1960), we define the auto-correlation function of a spike train as the firing rate conditional on a spike at t = 0,

\[ \gamma(t)=\lim_{\Delta t\to 0}\frac{1}{\Delta t}\,{\rm Pr}\{\text{spike in }[t,t+\Delta t)\mid\text{spike at }0\}, \qquad (7) \]

which is also called the renewal density (Cox 1962). For any stationary point process, the renewal density is given by

\[ \gamma(t)=\sum_{k=1}^{\infty}f_{k}(t), \qquad (8) \]

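where \(f_{k}(t)\) is the density of the k-th order interval (Holden 1976). In the case of a renewal process, the density of the k-th order interval can be written as \(f_{k}=f^{\ast k}\), where f is the first-order ISI density, here given by Eq. (1), and \(f^{\ast k}\) denotes its k-fold convolution,

\[ f^{\ast 1}=f,\qquad f^{\ast k}(t)=\int_{0}^{t}f(s)\,f^{\ast(k-1)}(t-s)\,ds. \]
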
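It can be shown (see also Picinbono 2009) that for the PPD defined by Eq. (1),

\[ f_{k}(t)=\frac{\lambda^{k}(t-kd)^{k-1}}{(k-1)!}\,e^{-\lambda(t-kd)}\,\theta(t-kd). \qquad (9) \]
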
The terms of Eq. (9) sum to the auto-correlation function (8). Due to the factor θ(t − kd), each term is restricted to t ≥ kd, which explains the distinct domains of the function. For t ∈ (0, d) the auto-correlation is zero, followed by a jump at t = d to the value λ, from which it decays exponentially for t ∈ [d, 2d). In each following interval [kd, (k + 1)d), k ≥ 2, a higher-order term of the type (t − kd)^{k−1} e^{−λ(t−kd)} is added. For large t the effect of the spike at t = 0 becomes negligible, therefore lim_{t→∞} γ(t) = ν. The auto-correlation functions of the recorded neurons and of the associated PPDs are shown in Fig. 2(d)–(f). The discrepancies between this model and the actual shape of the neurons’ auto-correlation are obvious. As in Fig. 2(a)–(c), the PPD captures the fact that neurons are refractory but neglects the details of how the neuron recovers from refractoriness. The auto-correlation functions of the matched gamma processes are very similar to those of the recorded neuronal spike trains. Shuffling the neuronal spike trains removed serial correlations and resulted in minor improvements. The matched Poisson processes are not shown in Fig. 2(d)–(f), since their auto-correlation functions are constant and equal to ν for t > 0 and thus show no refractoriness.
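The renewal density of the PPD, γ(t) = Σ_k λ^k (t − kd)^{k−1} e^{−λ(t−kd)}/(k − 1)! summed over the terms with kd < t, is easy to evaluate because only finitely many terms contribute at any t. The sketch below (hypothetical function name, d > 0 assumed) computes each gamma-shaped term in log space to avoid overflow of λ^k and (k − 1)! for large k.

```python
import math


def ppd_autocorrelation(t, d, lam):
    """Auto-correlation (renewal density) gamma(t) of a PPD.

    Sums the shifted gamma densities of the k-th order intervals; only the
    terms with k*d < t are nonzero, so the result is 0 for t <= d."""
    if d <= 0.0:
        raise ValueError("this sketch assumes a strictly positive dead-time")
    total, k = 0.0, 1
    while k * d < t:
        x = t - k * d
        # log of lam^k x^(k-1) exp(-lam x) / (k-1)!, evaluated in log space
        log_term = k * math.log(lam) + (k - 1) * math.log(x) - lam * x - math.lgamma(k)
        total += math.exp(log_term)
        k += 1
    return total
```

With d = 40 ms and λ = 1/(60 ms), the function returns 0 for t < d, approximately λ just after t = d, and approaches the equilibrium rate ν = 10 s⁻¹ for large t.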

3.3 Count variance and Fano factor

Some statistical measures of spike trains are based on the spike count in a certain time window. For instance the Fano factor, a frequently invoked measure to quantify irregularity of neuronal activity, is defined as the variance over the mean of the spike count. Since electrophysiological recordings are necessarily of limited duration, a limited length of the counting window must be chosen. However, the choice of the counting window can influence the value of the Fano factor. Typically, the dependence of the Fano factor on the counting window cannot easily be computed for an arbitrary point process. Recently Farkhooi et al. (2011) presented a general formula for the Fano factor of stationary point processes in dependence of the counting window and its limit for large windows (Eq. (21)). Here we use a different method where we consider the spike count as a shot noise with a rectangular filter kernel and compute the variance of the count based on Campbell’s theorem (Campbell 1909; Tetzlaff et al. 2008). By this approach we obtain the same result as Farkhooi et al. (2011) for the Fano factor of the spike count. However, our approach can also be used with other kernels and will allow us to compute the variance of the membrane potential of neurons driven by PPDs below. In Nawrot et al. (2008) the Fano factor of gamma processes has been computed by the same method, which in that case required numerical integration. The simplicity of the PPD enables us to analytically compute this dependence here.

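First we compute the mean and variance of the count variable \(X_{l}\) in a counting window of length l for the PPD. Counting events is equivalent to evaluating a shot noise (Papoulis 1991) with a rectangular filter kernel \(h(t)=\mathbf{1}_{t\in[0,l]}\), where \(\mathbf{1}_{Z}\) equals 1 if Z holds and 0 otherwise, driven by the spike train S(t), such that

\[ X_{l}=(S\star h)(l)=\int_{-\infty}^{\infty}h(l-s)\,S(s)\,ds=\int_{0}^{l}S(s)\,ds. \qquad (10) \]
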
The expected bin count is

\[ {\rm E}[X_{l}]=\nu l. \qquad (11) \]

We determine the variance of the count via the auto-covariance function of the counting shot noise, based on the auto-covariance function of the PPD. See Appendix C for the explicit derivation. The variance of the count variable turns out to be

\[ {\rm Var}[X_{l}]=\nu l-\nu^{2}l^{2}+2\nu\sum_{k=1}^{\lfloor l/d\rfloor}\xi_{k}, \qquad (12) \]

with \(\xi_{k}=-(kd+\frac{k}{\lambda}-l)+(kd-l)\,z(k,\lambda(l-kd))+\frac{k}{\lambda}\,z(k+1,\lambda(l-kd))\), where \(z(a,b)=\frac{\Gamma(a,b)}{\Gamma(a)}\) and Γ(a, b) denotes the upper incomplete gamma function. If l < d, the sum is empty and the count variance simplifies to

\[ {\rm Var}[X_{l}]=\nu l-\nu^{2}l^{2}. \qquad (13) \]

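The Fano factor of the PPD, a measure of the irregularity of a spike train, follows from its definition as

\[ {\rm FF}(l)=\frac{{\rm Var}[X_{l}]}{{\rm E}[X_{l}]}=1-\nu l+\frac{2}{l}\sum_{k=1}^{\lfloor l/d\rfloor}\xi_{k} \qquad (14) \]
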
for a counting window of length l. For l → 0, Eq. (13) yields FF₀ = 1. Expression (14) is particularly interesting for small counting windows l < d, since in this case the sum over \(\xi_{k}\) on the right of Eq. (14) is empty. As l increases, more terms \(\xi_{k}\) are added to Eq. (14). For l → ∞ we use the identity (21) and the property of vanishing serial interval correlations valid for any renewal process to obtain

\[ {\rm FF}_{\infty}=\lim_{l\to\infty}{\rm FF}(l)={\rm CV}^{2}=\left(1-\frac{d}{\mu}\right)^{2}, \qquad (15) \]

where CV is given by Eq. (5).
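The Fano factor FF(l) = 1 − νl + (2/l) Σ_k ξ_k can be evaluated with the Python standard library alone, because the first argument of z(a, b) is always an integer here: for integer k, z(k, b) = Γ(k, b)/Γ(k) equals the probability that a Poisson variable with mean b is smaller than k. The sketch below (hypothetical names, d > 0 assumed) uses this identity and sums the Poisson terms in log space.

```python
import math


def upper_reg_gamma_int(k, b):
    """z(k, b) = Gamma(k, b) / Gamma(k) for an integer k >= 1.

    For integer k this equals P[Poisson(b) <= k - 1]; the Poisson terms are
    summed in log space so that large b does not underflow exp(-b)."""
    if b <= 0.0:
        return 1.0
    log_b = math.log(b)
    return sum(math.exp(j * log_b - b - math.lgamma(j + 1)) for j in range(k))


def ppd_fano(l, d, lam):
    """Fano factor FF(l) = 1 - nu*l + (2/l) * sum_k xi_k of the PPD spike count
    in a window of length l (d > 0 assumed); xi_k vanishes for k*d >= l."""
    if d <= 0.0:
        raise ValueError("this sketch assumes a strictly positive dead-time d")
    nu = lam / (1.0 + lam * d)  # equilibrium rate
    total, k = 0.0, 1
    while k * d < l:
        b = lam * (l - k * d)
        total += (-(k * d + k / lam - l)
                  + (k * d - l) * upper_reg_gamma_int(k, b)
                  + (k / lam) * upper_reg_gamma_int(k + 1, b))
        k += 1
    return 1.0 - nu * l + 2.0 * total / l
```

For d = 40 ms and λ = 1/(60 ms), the sketch gives FF = 1 − νl = 0.8 for l = 20 ms (the sum is empty because l < d) and approaches CV² = (1 − d/μ)² = 0.36 for windows of several seconds.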

The dependence of the Fano factor on the length of the counting window for neural spike trains compared to matched PPDs and gamma processes is shown in Fig. 3. Apart from slight deviations around the kink of the curve at l ≈ 1.3d, neurons 1 and 3 and their associated gamma processes closely follow Eq. (14). For neuron 2 the deviations are larger, but Eq. (14) still gives a reasonable estimate. We attribute the larger deviations for neuron 2 to the stronger serial correlations of its inter-spike intervals compared with neurons 1 and 3, as shown in Fig. 4(e) for n = 1, which are incompatible with a renewal process model.

3.4 Superpositions

Superpositions of PPDs are a model of the summed synaptic input of neurons in neuronal networks. From the assumption that each presynaptic neuron spikes according to a PPD, the statistics of the summed input follow. Consider the superposition \(\sum_{i=1}^{n}S_{i}(t)\) of n independent and identically distributed renewal processes S i (t). The variance of the superposition’s count in the window l is by Eq. (10) \({\rm{Var}}[\sum_{i=1}^{n}(S_{i}\star h)]=n{\rm{Var}}[X_{l}]\), and the mean count is nE[X l ]. It follows that the Fano factor of an independent superposition does not depend on n, so it is identical to the Fano factor of the component processes. This, however, does not hold for all statistics of the superimposed spike train.

Here we compute the distribution of inter-spike intervals (ISI) for the superposition of n PPDs, from which we obtain the coefficient of variation of the ISI, enabling us to determine the serial correlations in the superposition as well. The ISI density f of a component process is given by Eq. (1). According to Cox (1962), the ISI density of an n-fold superposition of independent and identically distributed renewal processes in equilibrium is

\[ f_{n}(t)=-\frac{{\rm d}}{{\rm d}t}\left[\mathcal{F}(t)\left(\frac{1}{\mu}\int_{t}^{\infty}\mathcal{F}(s)\,ds\right)^{n-1}\right], \qquad (16) \]

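where \(\mathcal{F}(t)=\int_{t}^{\infty}f(s)\,ds\) is the survivor function of each component process and μ its mean ISI. For superpositions of PPDs we evaluate this formula and obtain

\[ f_{n}(t)=\frac{n-1}{\mu}\left(1-\frac{t}{\mu}\right)^{n-2}\theta(d-t)+n\lambda\,(\lambda\mu)^{1-n}\,e^{-n\lambda(t-d)}\,\theta(t-d). \qquad (17) \]
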
The detailed computations can be found in Appendix B. As one would expect, the mean ISI of the superposition is

\[ \mu_{n}=\frac{\mu}{n}. \qquad (18) \]

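The variance of the ISI of the superposition is

\[ \sigma_{n}^{2}=\frac{\mu^{2}}{n^{2}}\,\frac{n-1+2\left(1-\frac{d}{\mu}\right)^{n+1}}{n+1}. \qquad (19) \]
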
We obtain the coefficient of variation of the superposition as

\[ {\rm CV}_{n}=\sqrt{\frac{n-1+2\left(1-\frac{d}{\mu}\right)^{n+1}}{n+1}}. \qquad (20) \]

Equation (20) shows that the \({\rm CV}_{n}\) of the superposition depends only on the relative refractoriness d/μ and the number of component processes. As is easily seen, in the limit of large numbers of component processes,

\[ \lim_{n\to\infty}{\rm CV}_{n}=1, \]

in accordance with the Palm–Khintchine theorem (Heyman and Sobel 1982). Figure 4(a)–(c) show the CV as a function of the number of superimposed processes for three different single spike train statistics; gamma, PPD and the experimentally measured data. Apparently the CV for the neuron data, the gamma process and the PPD show the same dependence on the number of processes, with only slight deviations in the range of the expected standard deviation. This suggests that Eq. (20) describes a rather universal property of superpositions of independent and identically distributed point processes with refractoriness.
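The closed form \({\rm CV}_{n}^{2}=\frac{n-1+2(1-d/\mu)^{n+1}}{n+1}\) can be cross-checked by brute force. The sketch below (hypothetical names) evaluates it and compares it with a Monte Carlo estimate from n pooled PPD trains, each started in equilibrium; the parameter values in the usage note are illustrative and not those of the recorded neurons.

```python
import math
import random


def cv_superposition(n, d_rel):
    """CV of the pooled ISIs of n i.i.d. PPDs, with d_rel = d/mu:
    CV_n^2 = (n - 1 + 2 (1 - d_rel)^(n+1)) / (n + 1)."""
    return math.sqrt((n - 1 + 2 * (1 - d_rel) ** (n + 1)) / (n + 1))


def simulated_cv(n, mu, d, t_max, seed=1):
    """Monte Carlo estimate: pool n equilibrium PPD trains, return the ISI CV."""
    rng = random.Random(seed)
    lam = 1.0 / (mu - d)  # from mu = d + 1/lam, requires d < mu
    pooled = []
    for _ in range(n):
        # equilibrium start: forward recurrence time of the PPD
        t = rng.uniform(0.0, d) if rng.random() < d / mu else d + rng.expovariate(lam)
        while t < t_max:
            pooled.append(t)
            t += d + rng.expovariate(lam)
    pooled.sort()
    isis = [b - a for a, b in zip(pooled, pooled[1:])]
    m = sum(isis) / len(isis)
    var = sum((x - m) ** 2 for x in isis) / (len(isis) - 1)
    return math.sqrt(var) / m
```

For n = 5 and d/μ = 0.4, the closed form gives CV₅ ≈ 0.826, and `simulated_cv(5, 0.1, 0.04, 2000.0)` should agree to within about 0.01.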

The superposition of n renewal processes is, in general, not a renewal process, because serial correlations of subsequent ISIs occur. We can use the previous results to quantify the magnitude of serial correlations in the superposition spike train: the sum over serial correlations of all orders is accessible through the relation (Cox and Lewis 1966)

\[ {\rm FF}_{\infty}={\rm CV}^{2}\left(1+2\sum_{k=1}^{\infty}\rho_{k}\right), \qquad (21) \]

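where \(\rho_{k}\) is the correlation coefficient of k-th neighbor ISIs. Relation (21) is valid for any stationary point process. With Eqs. (20) and (15), and because the Fano factor of the superposition equals that of a single component process, this yields for the total serial correlation in the superposition of PPDs

\[ S_{n}=\sum_{k=1}^{\infty}\rho_{k}=\frac{1}{2}\left[\frac{(n+1)\left(1-\frac{d}{\mu}\right)^{2}}{n-1+2\left(1-\frac{d}{\mu}\right)^{n+1}}-1\right]. \qquad (22) \]
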
A plot of this quantity in comparison to neuronal data and matched process realizations is shown in Fig. 4(d)–(f). For n = 1 the figures show the total serial correlation of the single neuronal spike train. For neuron 1 this is small and negative but non-zero, neuron 2 has larger negative serial correlations, and neuron 3 has small positive serial correlations (Nawrot et al. 2007). The matched renewal processes have independent subsequent ISIs and hence for these S 1 = 0 by construction. Nonetheless, for n > 1 the data for neuron 1 and the matched gamma process agree with the analytical result for the PPD (22) within two standard errors or better. For neurons 2 and 3 the larger serial correlations of the recorded spike trains introduce systematic deviations, but the data still follow Eq. (22) approximately. In order to investigate to what extent serial interval correlations of the single spike trains cause these deviations we shuffled the intervals of the original spike trains, removing serial interval correlations altogether (Nawrot et al. 2007). For the shuffled spike trains the serial correlations of the superpositions are now closer to the analytical result for the PPD for all three neurons. These results show that the superposition of PPDs is an appropriate model for serial correlations of superpositions of spike trains with small serial correlations. In contrast, for the Poisson process model these serial correlations do not exist, since a superposition thereof is again a Poisson process.

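For large n, which is the case of superpositions of many component processes, we obtain from Eq. (22)

\[ S_{\infty}=\lim_{n\to\infty}S_{n}=\frac{1}{2}\left[\left(1-\frac{d}{\mu}\right)^{2}-1\right], \qquad (23) \]
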
which, in particular, means that there are the bounds \(-\frac{1}{2}\leq S_{\infty}\leq0\) on the total serial correlation of superimposed PPDs. The lower bound of \(S_{\infty}\geq-\frac{1}{2}\) follows immediately from Eq. (21) since \({\rm{FF}}_{\infty},\,{\rm{CV}}^{2}\geq0\). The upper bound might be a specific property of superpositions of PPDs. These bounds are confirmed in Fig. 4(d)–(f), where we observe that the limiting value Eq. (23) is approached quite fast. A neuron in a cortical network receives about n ≈ 5,000 synaptic inputs (Binzegger et al. 2004; Boucsein et al. 2011). The serial correlation magnitude of the superimposed spike trains can be well approximated by Eq. (23) in these cases.
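The total serial correlation follows directly from the closed forms. The sketch below (hypothetical names) evaluates \(S_{n}=\frac{1}{2}\left[(1-d/\mu)^{2}/{\rm CV}_{n}^{2}-1\right]\) with \({\rm CV}_{n}^{2}=\frac{n-1+2(1-d/\mu)^{n+1}}{n+1}\), and its large-n limit \(S_{\infty}=\frac{1}{2}\left[(1-d/\mu)^{2}-1\right]\).

```python
def total_serial_correlation(n, d_rel):
    """S_n = sum_k rho_k of the pooled ISIs of n i.i.d. PPDs, d_rel = d/mu.

    Follows from FF_inf = CV_n^2 (1 + 2 S_n), where pooling leaves
    FF_inf = (1 - d_rel)^2 unchanged and
    CV_n^2 = (n - 1 + 2 (1 - d_rel)^(n+1)) / (n + 1)."""
    ff_inf = (1.0 - d_rel) ** 2
    cv2_n = (n - 1 + 2.0 * (1.0 - d_rel) ** (n + 1)) / (n + 1)
    return 0.5 * (ff_inf / cv2_n - 1.0)


def total_serial_correlation_limit(d_rel):
    """Large-n limit S_inf = ((1 - d_rel)^2 - 1) / 2, always in [-1/2, 0]."""
    return 0.5 * ((1.0 - d_rel) ** 2 - 1.0)
```

For d/μ = 0.4 this gives S₁ = 0 (a single PPD is a renewal process), S₂ ≈ −0.12, and values approaching S_∞ = −0.32 as n grows.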

3.5 Effects on integrate-and-fire neurons

As we have shown, superpositions of PPDs show several statistical properties that are not shared by Poisson processes (nor by superpositions of Poisson processes, because these remain Poisson processes). Nonetheless, in simulations of cortical neuronal networks, external input is typically modeled as a Poisson process (Brunel 2000). Câteau and Reyes (2006) have shown that the statistics of the individual input spike trains influence the neuronal activity. Here we demonstrate that choosing an appropriate superposition of PPDs instead of the Poisson process affects the statistics of the membrane potential and the firing rate of the neurons. Consider the leaky integrate-and-fire (LIF) neuron with exponential post-synaptic potentials (Gerstner and Kistler 2002). The membrane potential obeys the differential equation

\[ \tau\frac{{\rm d}U(t)}{{\rm d}t}=-U(t)+R\,I(t), \qquad (24) \]

with the membrane time constant τ and resistance R. Whenever the membrane potential reaches the threshold \(U_{\theta}\), the neuron elicits a spike and the membrane potential is reset to \(U_{r}=0\). After producing a spike, the neuron cannot receive input for the duration of the absolute refractory period \(\tau_{r}\). The input current I(t) is brought about by excitatory and inhibitory point events. Each input spike elicits a δ-shaped postsynaptic current, which leads to a jump of the membrane potential that relaxes back exponentially. The jump amplitude is w for excitatory input spikes and −gw for inhibitory input spikes,

\[ R\,I(t)=\tau w\sum_{i}\delta(t-t_{i})-\tau g w\sum_{j}\delta(t-t_{j}), \qquad (25) \]

where i and j index the excitatory and inhibitory input spikes that the neuron receives, respectively. The input is scaled by τ to let the membrane potential jump by w or − gw, respectively, upon each input spike.
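Because each input spike causes an instantaneous jump and the potential only decays in between, these LIF dynamics can be integrated exactly event by event: threshold crossings can occur only at input spike times. The sketch below is a hypothetical helper, not the simulator used for the study. It assumes a resting potential of 0 (so that U relaxes towards 0 between inputs), input spike times ≥ 0, and that input arriving during the refractory period is discarded, as stated in the text.

```python
import math


def lif_output_spikes(exc_times, inh_times, tau, w, g, u_theta, tau_ref, u_reset=0.0):
    """Event-driven leaky integrate-and-fire neuron with delta-shaped PSCs.

    Between input events the potential relaxes exactly towards 0 with time
    constant tau; every excitatory (inhibitory) input spike makes it jump by
    w (-g*w). Input arriving during the absolute refractory period tau_ref
    is discarded. Returns the output spike times."""
    events = sorted([(t, w) for t in exc_times] + [(t, -g * w) for t in inh_times])
    u, t_last = 0.0, 0.0
    refractory_until = -math.inf
    out = []
    for t, jump in events:
        if t < refractory_until:
            continue  # the neuron cannot receive input while refractory
        u *= math.exp(-(t - t_last) / tau)  # exact leak since the last update
        u += jump
        t_last = t
        if u >= u_theta:
            out.append(t)
            u = u_reset
            refractory_until = t + tau_ref
            t_last = refractory_until  # potential clamped at u_reset until then
    return out
```

For example, two excitatory inputs of w = 0.6 mV arriving 1 ms apart (τ = 10 ms, \(U_{\theta}\) = 1 mV) sum to about 1.14 mV and trigger an output spike at the second input.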

If we neglect the spiking and the reset for a moment, the membrane potential described by Eq. (24) is a linear system. The trajectory of the potential U(t) in response to the impulse input RI(t) = δ(t) is called the impulse response h(t), which in this case evaluates to

\[ h(t)=\frac{1}{\tau}\,e^{-t/\tau}\,\theta(t). \qquad (26) \]

Equivalently to Eq. (24), the impulse response Eq. (26) fully describes the system, such that

\[U(t)=(h*RI)(t)=\int_{-\infty}^{\infty}h(t-s)\,RI(s)\,ds,\tag{27}\]

which is the convolution of the input with the impulse response of the membrane. In fact, the membrane potential is a shot noise driven by the input spike train, just like the counting process considered earlier. Analogously, the variance of the membrane potential driven by a superposition of n PPDs with mean ISI μ, dead-time d and synaptic amplitude w follows from a calculation similar to the one before (see Appendix D for details),

\[{\rm{Var}}[U]=\frac{1}{2}nw^{2}\frac{\tau}{\mu}\left[1+2\left(\frac{\lambda\tau\,e^{-d/\tau}}{1+\lambda\tau\left(1-e^{-d/\tau}\right)}-\frac{\tau}{\mu}\right)\right],\qquad\lambda=\frac{1}{\mu-d},\tag{28}\]

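which takes its maximum value \(\frac{1}{2}nw^{2}\frac{\tau}{\mu}\) in the case of d = 0. Thus, relative to a membrane potential U′ driven by a superposition of n Poisson processes with the same mean ISI μ, the dead-time in the input processes reduces the variance by a factor of

\[r(d,\mu,\tau)=\frac{{\rm{Var}}[U]}{{\rm{Var}}[U']}=1+2\left(\frac{\lambda\tau\,e^{-d/\tau}}{1+\lambda\tau\left(1-e^{-d/\tau}\right)}-\frac{\tau}{\mu}\right),\qquad\lambda=\frac{1}{\mu-d}.\tag{29}\]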

Note that here we only considered uniform synaptic weights w corresponding to g = 0 in Eq. (25). A mixture of inputs with different synaptic weights is considered below in Eq. (34).

To understand how the variance reduction depends on the rate of the individual components, we consider the limits of Eq. (29) for large and small component rates 1/μ while keeping the relative dead-time \(\bar{d}=d/\mu\in[0,1]\) constant, and obtain

\[\lim_{1/\mu\to\infty}r(d,\mu,\tau)=(1-\bar{d})^{2},\tag{30}\]

\[\lim_{1/\mu\to0}r(d,\mu,\tau)=1.\tag{31}\]

Both limits are independent of the membrane time constant τ. Another interesting limit of Eq. (29) is the completely regular process with d = μ, for which we obtain the relative variance

\[r(\mu,\mu,\tau)=1+\frac{2}{e^{\mu/\tau}-1}-\frac{2\tau}{\mu}.\tag{32}\]

In the following we consider a neuron receiving excitatory and inhibitory synaptic input, as illustrated in Fig. 5(a). For each neuron we used two PPD superposition generators (as described in Algorithm 1) to produce excitatory and inhibitory input, and we simulated two populations (named 1 and 2) of 1,000 LIF neurons each. In population 1 we disabled the spiking mechanism in order to record the free membrane potential. The input rates \(\nu_{\rm{e}}\) and \(\nu_{\rm{i}}\) were chosen to bring the neuron into the fluctuation-driven regime (van Vreeswijk and Sompolinsky 1996); see also Table 2. Given these parameters, for Poisson process inputs and in the absence of a spiking threshold, the free membrane potential has the equilibrium moments \({\rm{E}}[U]=\tau w(\nu_{\rm{e}}-g\nu_{\rm{i}})=10.0\,{\rm{mV}}\) and \({\rm{Var}}[U]=\frac{\tau}{2}w^{2}(\nu_{\rm{e}}+g^{2}\nu_{\rm{i}})=12.5\,{\rm{mV}}^{2}\).
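The two moments above follow from Campbell's theorem (Campbell 1909) applied to the shot noise of Eq. (27). As a sanity check, the sketch below simulates the free membrane potential on a time grid of width dt, applying the exact exponential decay \(e^{-dt/\tau}\) between steps and drawing Poisson spike counts in each step. Table 2 is not reproduced in this section, so the parameters (τ = 10 ms, w = 0.2 mV, g = 4, \(\nu_{\rm{e}}=16{,}500\,{\rm{s}}^{-1}\), \(\nu_{\rm{i}}=2{,}875\,{\rm{s}}^{-1}\)) are hypothetical values chosen only so that the formulas give 10.0 mV and 12.5 mV².

```python
import math

import numpy as np


def free_membrane_moments(tau, w, g, nu_e, nu_i):
    """Stationary mean and variance of the free membrane potential for Poisson input
    (Campbell's theorem with PSP kernels w*exp(-t/tau) and -g*w*exp(-t/tau))."""
    mean = tau * w * (nu_e - g * nu_i)
    var = 0.5 * tau * w**2 * (nu_e + g**2 * nu_i)
    return mean, var


def simulate_free_membrane(tau, w, g, nu_e, nu_i, dt, t_sim, t_burn, seed=0):
    """Free membrane potential (no threshold, no reset) driven by Poisson input."""
    rng = np.random.default_rng(seed)
    steps = int(round(t_sim / dt))
    decay = math.exp(-dt / tau)  # exact relaxation of U over one time step
    # Net jump per step: excitatory counts times w minus inhibitory counts times g*w.
    jumps = (w * (rng.poisson(nu_e * dt, steps) - g * rng.poisson(nu_i * dt, steps))).tolist()
    u = np.empty(steps)
    value = 0.0
    for k, jump in enumerate(jumps):
        value = value * decay + jump
        u[k] = value
    return u[int(round(t_burn / dt)):]  # drop the initial transient


params = dict(tau=0.01, w=0.2, g=4.0, nu_e=16500.0, nu_i=2875.0)  # hypothetical (s, mV, 1/s)
mean_th, var_th = free_membrane_moments(**params)
u = simulate_free_membrane(**params, dt=1e-4, t_sim=50.0, t_burn=1.0)
print(f"theory: E[U]={mean_th:.2f} mV, Var[U]={var_th:.2f} mV^2")
print(f"simul.: E[U]={u.mean():.2f} mV, Var[U]={u.var():.2f} mV^2")
```

The time grid biases the simulated moments upward by roughly dt/(2τ) for the mean and dt/τ for the variance, about 0.5% and 1% with these values, which is well below the sampling error of a 50 s run.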

Effect of refractoriness in the input activity on integrate-and-fire model neurons. (a) Scheme of the simulation. PPD superposition generators produce excitatory and inhibitory input to the neurons. Neurons in population 1 have no spiking mechanism; we record their free membrane potential without threshold and reset. Neurons in population 2 are spiking LIF neurons used to simulate the firing rate and the membrane potential distribution in the presence of the threshold. (b) Estimate of the stationary distribution of the membrane potential of population 2 (kernel density estimation with Gaussian kernel) for \(1/\mu=14\,{\rm{s}}^{-1}\). Colors denote the relative dead-time \(\bar{d}=d/\mu\) of the input component processes (black, blue, green, red, yellow): \(\bar{d}=\)(0, 0.2, 0.4, 0.6, 0.8). The inset shows how the value of the density at the spiking threshold depends on \(\bar{d}\). (c) Firing rate ν of the LIF neurons (population 2) as a function of the relative dead-time \(\bar{d}\) of the component input processes, keeping the mean ISI and the total input rate constant. Simulation results are obtained using PPD superposition generators (diamonds) and gamma process superposition generators (circles). The colors distinguish different rates 1/μ of the input component processes (blue, green, red, yellow, cyan, orange): \(1/\mu=\)(5, 8, 11, 14, 17, 20) \({\rm{s}}^{-1}\). To maintain the same total rate of input spikes for different rates of the component input processes, the number of superimposed processes was adjusted accordingly; see Eq. (33) and text. Error bars denote the standard deviation of the rate across simulated neurons. (d) Reduction r of the variance of the free membrane potential (population 1) relative to the case of Poisson input. Theoretical result Eq. (29) as solid curve. Limits for infinite component rate (dotted line, Eq. (30)) and for fully regular input components (dashed lines, Eq. (32)); color and symbol code as in (c). Remaining parameters are given in Table 2.

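To realize the input rates \(\nu_{\rm{e}}\) and \(\nu_{\rm{i}}\) as superpositions of PPD components, each with rate 1/μ and dead-time d, we choose superpositions of

\[n_{\rm{e}}=\lfloor\nu_{\rm{e}}\mu\rfloor\quad\text{and}\quad n_{\rm{i}}=\lfloor\nu_{\rm{i}}\mu\rfloor\tag{33}\]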

PPDs, respectively, where \(\lfloor\cdot\rfloor\) denotes rounding down to the nearest integer. The remaining rates \(\nu_{\rm{e}}^{\rm{rem.}}=\nu_{\rm{e}}-n_{\rm{e}}/\mu\) and \(\nu_{\rm{i}}^{\rm{rem.}}=\nu_{\rm{i}}-n_{\rm{i}}/\mu\) were additionally injected as Poisson processes, so that the total input rates are the same for all choices of μ and d. In the absence of a spiking mechanism in the receiving neuron (Fig. 5(a), population 1), refractoriness in the input spike trains decreases the variance of the membrane potential. Driving input composed of independent excitatory and inhibitory superpositions of PPDs results in the membrane potential variance

\[{\rm{Var}}[U]={\rm{Var}}[U]_{\rm{e}}+{\rm{Var}}[U]_{\rm{i}},\tag{34}\]

where \({\rm{Var}}\left[U\right]_{\rm{e}}=\frac{1}{2}w^{2}\tau(n_{\rm{e}}r(d,\mu,\tau)/\mu+\nu_{\rm{e}}^{\rm{rem.}})\) and \({\rm{Var}}\left[U\right]_{\rm{i}}=\frac{1}{2}g^{2}w^{2}\tau(n_{\rm{i}}r(d,\mu,\tau)/\mu+\nu_{\rm{i}}^{\rm{rem.}})\). The relative reduction of the variance compared to the case of Poisson input is shown in Fig. 5(d). The analytical results agree very well with direct simulation of the free membrane potential.
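Eqs. (29), (33) and (34) combine into a short computation. The sketch below evaluates the variance reduction from the renewal identity \(r=1+2\,[F/(1-F)-\tau/\mu]\), where \(F=\lambda e^{-d/\tau}/(\lambda+1/\tau)\) is the Laplace transform of the PPD ISI density at s = 1/τ and λ = 1/(μ − d). This is our own re-derivation, and Appendix D may write it differently; it gives r = 1 at d = 0 and reproduces the limits discussed above: \((1-\bar{d})^{2}\) for large component rates and 1 for small ones. Function names and the parameter values in the usage example are hypothetical, not those of Table 2.

```python
import math


def variance_reduction(d, mu, tau):
    """r(d, mu, tau): variance of PPD-driven shot noise relative to Poisson input.

    Uses r = 1 + 2 * (F/(1 - F) - tau/mu), with F the Laplace transform of the
    PPD ISI density at s = 1/tau and lam = 1/(mu - d) the post-dead-time hazard.
    """
    if not 0.0 <= d <= mu:
        raise ValueError("need 0 <= d <= mu")
    if d == mu:  # clock-like limit: F = exp(-mu/tau)
        f = math.exp(-mu / tau)
    else:
        lam_tau = tau / (mu - d)
        f = lam_tau * math.exp(-d / tau) / (lam_tau + 1.0)
    return 1.0 + 2.0 * (f / (1.0 - f) - tau / mu)


def split_rate(nu, mu):
    """Eq. (33): n = floor(nu*mu) PPD components of rate 1/mu plus a Poisson remainder."""
    n = math.floor(nu * mu)
    return n, nu - n / mu


def membrane_variance(tau, w, g, nu_e, nu_i, d, mu):
    """Eq. (34): Var[U] = Var[U]_e + Var[U]_i for PPD superpositions plus Poisson remainders."""
    r = variance_reduction(d, mu, tau)
    n_e, rem_e = split_rate(nu_e, mu)
    n_i, rem_i = split_rate(nu_i, mu)
    var_e = 0.5 * w**2 * tau * (n_e * r / mu + rem_e)
    var_i = 0.5 * g**2 * w**2 * tau * (n_i * r / mu + rem_i)
    return var_e + var_i


if __name__ == "__main__":
    tau, w, g, nu_e, nu_i = 0.01, 0.2, 4.0, 16500.0, 2875.0  # hypothetical values
    mu = 1.0 / 14.0  # component rate 14/s
    var_poisson = membrane_variance(tau, w, g, nu_e, nu_i, 0.0, mu)
    for dbar in (0.0, 0.2, 0.4, 0.6, 0.8, 1.0):
        ratio = membrane_variance(tau, w, g, nu_e, nu_i, dbar * mu, mu) / var_poisson
        print(f"dbar={dbar:.1f}  Var[U]/Var_Poisson={ratio:.3f}")
```

The Poisson remainders are not reduced by r, so the total reduction is slightly weaker than r itself whenever \(\nu\mu\) is not an integer.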

To determine how refractoriness in the input spike trains affects the spiking of neurons, we simulated LIF neurons that emit an action potential when the membrane potential reaches the threshold \(U_{\theta}\) defined above (Fig. 5(a), population 2). Figure 5(c) shows the dependence of the firing rate of the LIF neurons on the relative dead-time d/μ of the input processes, keeping the total input rate and the rate 1/μ of a single process constant. Data are shown for six different values of the component process rate 1/μ, indicated by the colors of the curves. The case d = 0 corresponds to the commonly used Poisson process. With increasing dead-time, the firing rate of the LIF neurons first decreases rapidly, which corresponds to the decrease in variance of the free membrane potential seen in Fig. 5(d). This initial decrease is followed by a slight increase that saturates (for all but the yellow and cyan curves, see below) as the component spike trains become completely clock-like for d → μ.

Figure 5(b) shows the estimated probability density of the membrane potential in simulations with fixed 1/μ for five values of d. The dead-time d changes the shape of the stationary distribution of the membrane potential, most visibly around the peak of the distribution and at the threshold. The distribution of membrane potentials determines the rate and response properties of the neuron (Helias et al. 2010b), ultimately giving rise to the rate dependence shown in Fig. 5(c). The inset in Fig. 5(b) shows that close to the spiking threshold the density of membrane potentials does not go to zero linearly, as a diffusion approximation would predict (Gerstner and Kistler 2002). This also holds for Poisson input with d = 0 and can be explained by the time discretization of the simulation (Helias et al. 2010a) and by the small but non-vanishing synaptic weight (Helias et al. 2010b). Apart from this phenomenon, all curves with d > 0 show a decreased probability density close to threshold. The firing rate of the neuron depends strongly on the values of the density in this range, as we recently illustrated in a focused review article (Helias et al. 2011). The change in the shape of the density close to threshold therefore explains the significant decrease of the firing rates in Fig. 5(c). However, the yellow and cyan curves in Fig. 5(c), where \(1/\mu=14\,{\rm{s}}^{-1}\) and \(17\,{\rm{s}}^{-1}\), deviate from the others: they do not saturate after the initial decrease, but continue to rise. A similar trend is visible in the green (\(8\,{\rm{s}}^{-1}\)), red (\(11\,{\rm{s}}^{-1}\)) and orange (\(20\,{\rm{s}}^{-1}\)) curves, which rise a little at first but ultimately saturate. This effect is related to the auto-covariance function of the input process, cf. Fig. 2(d)–(f), and is discussed in detail based on Fig. 6 below.

Oscillations in the input due to refractoriness, and resonance of integrate-and-fire model neurons. (a) Power spectral density (PSD) of the superposition of excitatory and inhibitory PPDs used as input; theoretical curves from Eq. (46), computed analogously to Eq. (34). Subplots correspond to different relative dead-times \(\bar{d}=d/\mu\) of the input components, as indicated on the left. Colors distinguish the rates of the component input PPDs as in Fig. 5(c). (b) PSD of the membrane potential of population 1 in units of \({\rm{mV}}^{2}\,{\rm{s}}^{-1}\), driven by PPD superpositions. Error bars show the standard deviation of the estimate across LIF neurons. Solid lines show the analytical power spectrum Eq. (47), computed analogously to Eq. (34). Subplots and colors as in (a). (c) Estimated PSD of the output spike trains of population 2 in units of \({\rm{s}}^{-1}\), driven by PPD superpositions. Subplots and colors as in (a). Error bars display the standard deviation of the estimate across neurons. (d) Interval statistics (mean ISI \(\mu_{n}\) and coefficient of variation \({\rm{CV}}_{n}\)) and matched PPD parameters (\(\lambda_{n}\), \(d_{n}\)) of the output spike trains of population 2 for several \(\bar{d}\) (blue, green, red, cyan, orange: 0.0, 0.2, 0.4, 0.6, 0.8) as a function of the rate 1/μ of the component input PPDs. Same parameters and simulation setup as in Fig. 5.

The relative variances shown in Fig. 5(d) can be related to the asymptotics of Eq. (29) derived above. All curves share the same maximum of the variance at d = 0, which also corresponds to the limit of small component rates (Eq. (31)). For small d the curves follow the limiting case of infinite component rate, Eq. (30) (dotted line), but their slopes soon decrease in magnitude and they saturate at their respective limiting values given by Eq. (32) (dashed lines). Note that the PPDs matched to the recorded neurons above have d/μ ≈ 0.6 (see Table 1), a regime that is well described by the limiting case Eq. (32). The parameters of the neuron model and the input processes are listed in Table 2.

In both Fig. 5(c) and (d) we also included the results obtained with a gamma process superposition generator instead of the PPD generator. Here we used gamma processes with integer shape parameter p ranging from 1 to 10. When matched to the ISI mean and variance of a PPD, this corresponds to a relative dead-time of \(\bar{d}=1-p^{-1/2}\), irrespective of the scale parameter of the gamma process. The results for the ten gamma process superposition inputs are displayed as circles and show a very similar trend for both the membrane potential variance and the firing rate of the driven neurons. Because the gamma process has a different auto-correlation function than the PPD, the analytical result Eq. (29) for the reduction of the membrane potential variance does not apply to superpositions of gamma processes; nevertheless, the simulated variance reduction follows a similar trend. The error bars in Fig. 5(c) display the standard deviation of the firing rate estimate across simulated neurons in population 2.
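The correspondence \(\bar{d}=1-p^{-1/2}\) follows from equating the ISI CV of a gamma process, \(p^{-1/2}\) for any scale, with that of a PPD, \(1-d/\mu\), at equal mean ISI. The sketch below (hypothetical function names) tabulates \(\bar{d}\) for p = 1 to 10 and checks the match by sampling both ISI distributions with NumPy.

```python
import numpy as np


def gamma_equivalent_rel_dead_time(p: float) -> float:
    """Relative dead-time of the PPD whose ISI CV matches a gamma process of shape p.

    Gamma ISIs have CV = p**-0.5 for any scale; PPD ISIs have CV = 1 - d/mu.
    """
    return 1.0 - p ** -0.5


def sample_cvs(p, mu, n_isi=200_000, seed=0):
    """Empirical ISI CVs of a gamma process and of its moment-matched PPD (same mean mu)."""
    rng = np.random.default_rng(seed)
    gamma_isi = rng.gamma(shape=p, scale=mu / p, size=n_isi)  # mean mu, CV p**-0.5
    d = gamma_equivalent_rel_dead_time(p) * mu
    ppd_isi = d + rng.exponential(scale=mu - d, size=n_isi)   # mean mu, SD mu - d

    def cv(x):
        return x.std() / x.mean()

    return cv(gamma_isi), cv(ppd_isi)


if __name__ == "__main__":
    for p in range(1, 11):
        cv_g, cv_p = sample_cvs(p, mu=0.1)
        dbar = gamma_equivalent_rel_dead_time(p)
        print(f"p={p:2d}  dbar={dbar:.3f}  CV gamma={cv_g:.3f}  CV PPD={cv_p:.3f}")
```

Only the first two ISI moments agree; the shapes of the ISI densities, and hence the auto-correlation functions, still differ, which is why Eq. (29) does not carry over to the gamma case.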

To better understand the non-monotonic effect of increasing dead-time of the component input processes on the firing rate of LIF neurons (Fig. 5(c)), we investigated the power spectral densities (PSD) of the input, the membrane potential and the neuronal output spike trains for five values of \(\bar{d}=d/\mu\) and the previously chosen input component rates (Fig. 6). The PSDs of the PPD, of independent superpositions of PPDs and of the membrane potential driven by them are known analytically, as described in Appendix D. Figure 6(a) shows the PSD of the superpositions of excitatory and inhibitory PPDs used as input to the simulated LIF neurons. For \(\bar{d}=0\) the PSD is flat, as expected for a Poisson process. As \(\bar{d}\) increases, peaks emerge in the power spectrum at frequencies that are roughly multiples of 1/d. They correspond to the oscillations of the auto-correlation function shown in Fig. 2(d)–(f), since by the Wiener–Khintchine theorem the auto-covariance Eq. (38) and the PSD form a Fourier-transform pair. The colors of the six curves correspond to the component process rates 1/μ as in Fig. 5(c) and (d). Note that the maximum value of the PSD is identical for all rates at fixed \(\bar{d}\). Figure 6(b) displays the PSD of the membrane potential of the non-spiking neurons in population 1. The membrane acts as a low-pass filter whose power gain decays as \(\sim1/f^{2}\) beyond the cutoff frequency \(1/(2\pi\tau)\). Accordingly, the peaks of the input PSD (Fig. 6(a)) are increasingly attenuated at higher frequencies.
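The PSDs in Fig. 6(a) and (b) can be sketched with the standard result for stationary renewal processes (Cox and Lewis 1966), \(S(f)=\nu\,{\rm{Re}}[(1+\tilde{F}(f))/(1-\tilde{F}(f))]\) for f ≠ 0, where \(\tilde{F}\) is the Fourier transform of the ISI density. This is our own sketch and not necessarily the form of Eqs. (46) and (47). For n independent components the PSD is n times larger, and with the input scaling of Eq. (25) the membrane potential PSD for excitatory input is \((\tau w)^{2}|H(f)|^{2}\) times the input PSD, with \(|H(f)|^{2}=1/(1+(2\pi f\tau)^{2})\) for the impulse response Eq. (26). Function names and parameter values are hypothetical.

```python
import numpy as np


def ppd_psd(f, mu, d):
    """PSD (f != 0) of a stationary PPD spike train via the renewal formula.

    S(f) = nu * Re[(1 + F) / (1 - F)], nu = 1/mu, with
    F(f) = lam * exp(-2j*pi*f*d) / (lam + 2j*pi*f) the Fourier transform of the ISI density.
    """
    if not 0.0 <= d < mu:
        raise ValueError("need 0 <= d < mu (d == mu gives a line spectrum)")
    omega = 2.0 * np.pi * np.asarray(f, dtype=float)
    lam = 1.0 / (mu - d)
    F = lam * np.exp(-1j * omega * d) / (lam + 1j * omega)
    return np.real((1.0 + F) / (1.0 - F)) / mu


def membrane_power_gain(f, tau):
    """|H(f)|^2 for h(t) = exp(-t/tau)/tau: a low-pass filter with cutoff 1/(2*pi*tau)."""
    return 1.0 / (1.0 + (2.0 * np.pi * np.asarray(f, dtype=float) * tau) ** 2)


if __name__ == "__main__":
    mu, tau = 1.0 / 14.0, 0.01  # hypothetical: component rate 14/s, tau = 10 ms
    f = np.linspace(0.5, 100.0, 400)
    for dbar in (0.0, 0.2, 0.4, 0.6, 0.8):
        s = ppd_psd(f, mu, dbar * mu)
        k = int(np.argmax(s))
        print(f"dbar={dbar:.1f}  S(0.5 Hz)={s[0]:.2f}/s  max {s[k]:.2f}/s at {f[k]:.1f} Hz")
```

As f → 0 the PPD spectrum tends to \(\nu\,{\rm{CV}}^{2}=\nu(1-\bar{d})^{2}\), which is the suppressed low-frequency power that makes the input resemble high-pass filtered noise for small \(\bar{d}\).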

The output spike trains of the LIF neurons in population 2, however, show a different characteristic, as can be seen from their PSD in Fig. 6(c). For Poisson input (\(\bar{d}=0\)) the PSD of the spike trains is low at small frequencies and gradually approaches the stationary firing rate at high frequencies, which is a sign of the effective refractoriness of these neurons. As the oscillatory components of the input grow with \(\bar{d}\), the peaks of the input PSD (Fig. 6(a)) become visible in the neuronal spike trains (Fig. 6(c)), indicating that the oscillatory input modulates the outgoing firing rate. However, although the input power at the peak frequency is the same for all component rates, the output power at the peak depends on the peak frequency, with maximal transmission at about 15 Hz (red curve in subplot \(\bar{d}=0.6\) and yellow curve in subplot \(\bar{d}=0.8\)). This effect might be at least partly explained by linear response theory of the LIF neuron (Ledoux and Brunel 2011), which has shown that in the regime of sufficiently low membrane potential fluctuations, resonances of the transmission gain appear near the firing rate of the neuron.

The resonance of the LIF neuron, however, coincides with an increase of the mean firing rate (Fig. 5(c)) when the peak of the input PSD comes close to the resonance frequency. Such an increase in mean rate cannot be a linear effect of oscillatory input: to linear order, the oscillatory components leave the mean input, and hence the mean output rate, unchanged. Still, a qualitative explanation can be given by considering a PPD with a time-dependent (sine-modulated) hazard function and dead-time \(d_{n}\) as a simple model of the LIF neuron. In Deger et al. (2010) this model system was analyzed for general periodic inputs, revealing multiplicative couplings of input frequency components in the output rate. In particular, Fig. 3(c) of Deger et al. (2010) shows that the mean rate \(\beta_{0}\) of a PPD with sine-modulated hazard has local maxima at frequencies of about \(0.41/d_{n}\) and \(0.88/d_{n}\). For a more quantitative argument, we next relate the statistics of the modulated PPD to the spiking of the LIF neurons.

Results of the analysis of the inter-spike intervals of the LIF neurons for several \(\bar{d}\) (colors) as a function of the input component rate 1/μ are shown in Fig. 6(d). As the input component rate 1/μ increases, the mean neuronal ISI \(\mu_{n}\) grows. This general trend is due to the continuous decrease of the relative variance of the membrane potential (Eq. (29)) with increasing 1/μ. In the range between 8 and 20 Hz, shown in the inset, \(\mu_{n}\) has local minima for larger \(\bar{d}\), which correspond to the rise of \(\nu=1/\mu_{n}\) with growing \(\bar{d}\) in Fig. 5(c). The second subplot shows the coefficient of variation \({\rm{CV}}_{n}\) of the neurons' ISIs. For the larger values of \(\bar{d}\), 0.6 and 0.8, it changes non-monotonically. For \(1/\mu<10\,{\rm{s}}^{-1}\), \({\rm{CV}}_{n}\) decreases, presumably because the variance of the input is continuously reduced. The mean integration time of the LIF neuron here is \(\mu_{n}\approx0.1\) s, so on average it integrates fewer than one spike of each component input process. For \(1/\mu>10\,{\rm{s}}^{-1}\), however, the integration period \(\mu_{n}\), which also grows slowly, covers an increasing number of spikes of each input PPD on average, which seems to gradually increase \({\rm{CV}}_{n}\).

Matching a PPD to the LIF neurons’ spike trains via Eq. (6) yields the parameters \(\lambda_{n}\) and \(d_{n}\) shown in the bottom two subplots of Fig. 6(d). The matched value \(d_{n}\) thus depends on both \(\bar{d}\) and 1/μ. Local maxima of the output spike rate are expected around frequencies of \(0.41/d_{n}\) and \(0.88/d_{n}\). Given the range of values of \(d_{n}\) matched to the LIF neurons, these resonances of the mean rate are located in the frequency ranges from 8.1 to 17.4 Hz and from 17.3 to 37.4 Hz, respectively. The peaks of the input PSD (Fig. 6(a)) lie well within these ranges. Hence the existence of resonances of the mean firing rate in Fig. 5(c) can be explained by the resonance properties of the non-stationary matched PPD. The same argument extends to the resonance observed in the first harmonic (Fig. 6(c)) and in higher harmonics, which also show local maxima of the transmission gain at certain frequencies in the PPD model (Deger et al. 2010). Further studies that investigate the effects of component dead-time in the input spike trains on the dynamics of LIF neurons in more detail are necessary to explain this phenomenon quantitatively.
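Eq. (6) is not reproduced in this section; the sketch below assumes it is the moment match implied by the PPD's ISI mean \(d+1/\lambda\) and ISI standard deviation \(1/\lambda\), i.e. \(\lambda_{n}=1/\sigma\) and \(d_{n}=\mu_{n}-\sigma\). It then maps a range of \(d_{n}\) to the bands \(0.41/d_{n}\) and \(0.88/d_{n}\). The \(d_{n}\) range in the usage example is back-computed from the quoted 8.1–17.4 Hz band rather than read off Fig. 6(d), and the function names are hypothetical.

```python
import numpy as np


def match_ppd(mean_isi, sd_isi):
    """Moment match (assumed form of Eq. (6)): PPD mean = d + 1/lam, PPD SD = 1/lam."""
    if not 0.0 < sd_isi <= mean_isi:
        raise ValueError("a stationary PPD needs 0 < CV <= 1")
    lam = 1.0 / sd_isi
    d = mean_isi - sd_isi
    return lam, d


def rate_resonance_bands(d_min, d_max, factors=(0.41, 0.88)):
    """Frequency band c/d_n covered by d_n in [d_min, d_max], for each factor c."""
    return [(c / d_max, c / d_min) for c in factors]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    isis = 0.06 + rng.exponential(0.04, 100_000)  # synthetic PPD ISIs: d = 60 ms, 1/lam = 40 ms
    lam, d = match_ppd(isis.mean(), isis.std())
    print(f"recovered lam = {lam:.1f}/s, d = {1e3 * d:.1f} ms")
    d_min, d_max = 0.41 / 17.4, 0.41 / 8.1  # back-computed from the 8.1-17.4 Hz band
    for lo, hi in rate_resonance_bands(d_min, d_max):
        print(f"resonance band: {lo:.1f}-{hi:.1f} Hz")
```

The second band comes out as 17.4 to 37.3 Hz, matching the quoted 17.3 to 37.4 Hz up to rounding of the underlying \(d_{n}\) values.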

4 Discussion

We have demonstrated how a PPD can be associated with a stationary neuronal spike train by matching the mean and variance of the ISI (Tuckwell 1988). The PPD is the simplest possible extension of the Poisson process that captures effective refractoriness. Owing to the simplicity of the PPD, we could derive the functional dependence of the Fano factor (FF) on the length of the counting window. Our analytical result for the PPD is in good agreement with both the gamma process and the neuronal data, which suggests that effective refractoriness is the key to understanding this functional dependence. In contrast to the Poisson process, which has FF = 1, the FF of the PPD, and of independent superpositions of PPDs, is generally smaller than unity. As a model for a population of independently spiking neurons, the independent superposition of PPDs is therefore more accurate in terms of count variability.

Considering the ISI density of a superposition of PPDs, we find that it converges rapidly to the exponential distribution. Correspondingly, the coefficient of variation (CV) of the ISI converges to 1 for large numbers of superimposed processes. This, however, does not mean that the process becomes a Poisson process. The superposition of PPDs still differs from the Poisson process with respect to its FF, its auto-correlation function and its serial interval correlations. For large counting windows, the deviations of the FF can be explained by the serial interval correlations through Eq. (21). Even for small counting windows, however, the FF of PPDs differs from that of the Poisson process (Eq. (14)). Moreover, the analytical dependence of the CV on the number of superimposed processes agrees with the neuronal spike data and the gamma realizations, which again hints that effective refractoriness is the key to understanding the second-order statistics of the process.
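The contrast between ISI statistics and serial correlations is easy to reproduce numerically. The sketch below (hypothetical function names) superimposes n independent PPDs with 10/s components and relative dead-time 0.6, each started 100 mean ISIs before the analysis window so that their phases are approximately stationary, and reports the pooled ISI CV and the lag-1 serial correlation.

```python
import numpy as np


def ppd_spike_train(mu, d, t_max, rng, burn_isis=100):
    """Spike times in [0, t_max) of one PPD (mean ISI mu, dead-time d), started well before 0."""
    t_burn = burn_isis * mu
    n = int(1.2 * (t_max + t_burn) / mu) + 100
    isis = d + rng.exponential(mu - d, n)
    times = rng.uniform(0.0, mu) - t_burn + np.cumsum(isis)
    if times[-1] < t_max:
        raise RuntimeError("not enough intervals drawn")
    return times[(times >= 0.0) & (times < t_max)]


def pooled_isis(n, mu, d, t_max, seed=0):
    """ISIs of the superposition of n independent, identical PPDs."""
    rng = np.random.default_rng(seed)
    trains = [ppd_spike_train(mu, d, t_max, rng) for _ in range(n)]
    return np.diff(np.sort(np.concatenate(trains)))


def cv(x):
    return x.std() / x.mean()


def serial_corr(x, lag=1):
    """Pearson correlation between ISIs that are `lag` intervals apart."""
    return np.corrcoef(x[:-lag], x[lag:])[0, 1]


if __name__ == "__main__":
    mu, d = 0.1, 0.06  # 10/s components with relative dead-time 0.6
    for n, t_max in ((1, 2000.0), (2, 2000.0), (10, 500.0), (100, 200.0)):
        isi = pooled_isis(n, mu, d, t_max)
        print(f"n={n:3d}  CV={cv(isi):.3f}  rho_1={serial_corr(isi):+.3f}")
```

CV rises from 0.4 for a single PPD towards 1 as n grows, while the lag-1 correlation is negative for small n. For large n the negative correlation is spread over many lags, so the lag-1 value alone becomes small even though the total serial correlation does not vanish.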

Finally, the total serial correlation between subsequent ISIs in superpositions of neuronal spike trains is accurately predicted by our analytical result. Serial correlations in neuronal spike trains have been reported frequently (see Farkhooi et al. 2009 for an overview). As shown by Muller et al. (2007) and Schwalger et al. (2010), they can result from spike-frequency adaptation. In superimposed spike trains, however, the total serial correlation is due to a different effect, which can be illustrated by the superposition of two spike trains: a spike of the second neuron divides an ISI of the first neuron into two parts whose lengths add up to that ISI. If one part is long, the other must be short, so the two resulting intervals are negatively correlated. A similar argument holds for a superposition of n spike trains. The detailed mechanisms by which serial correlations and effective refractoriness in the input spike trains affect the membrane potential dynamics of LIF neurons remain to be investigated. Our simulation results show, however, that the variance and shape of the equilibrium distribution of membrane potentials and the stationary firing rate of integrate-and-fire neurons with balanced excitatory and inhibitory input are significantly affected.

We have applied the PPD as a model for the spike trains of three somatosensory cortical neurons with a coefficient of variation CV < 1, i.e. spike trains that are more regular than Poisson processes. Only neurons with this property can be modeled with a stationary PPD. In contrast, neurons in the prefrontal cortex of monkeys typically show CV > 1 (Shinomoto et al. 2003), which cannot be achieved with the stationary PPD according to Eq. (5). It might be possible, though, to capture such increased irregularity with a PPD with a time-dependent rate parameter; see for example Turcott et al. (1994) and Deger et al. (2010). Regularly spiking neurons with CV < 1, to which the presented results apply, are the majority in motor and premotor regions (Shinomoto et al. 2003) and somatosensory regions (Nawrot et al. 2007) of the cortex.

Another vividly debated topic is the ability of spiking neurons to transmit correlations in the input spike trains to output spikes (De la Rocha et al. 2007; Rosenbaum and Josic 2011; Renart et al. 2010). It has been shown that correlation transmission depends on the auto-correlation functions of the input spike trains (Tetzlaff et al. 2008). Generally, the auto-correlation function of a superposition of independent spike trains is the sum of the auto-correlation functions of the single processes. The latter are, as we demonstrated, closely linked to the effective refractoriness of the neurons.

In models of recurrent networks, mean-field theory can be applied to estimate the spike rate of leaky integrate-and-fire (LIF) neurons (Brunel 2000). Here the spike rate of each neuron is assumed to be the same and is obtained as the self-consistent solution of the input-to-output rate mapping of a single neuron. The analytical derivation of the firing rate of a neuron given its input rates rests on several assumptions (Brunel 2000), one of which is that the spike trains of the neurons in the network have Poisson statistics. In fact, it has been shown that the choice of a particular point process as input to a neuron affects the dynamics of its membrane potential (Câteau and Reyes 2006). In Section 3.5 we have shown that the firing rate of LIF neurons is sensitive to the refractoriness of the individual input spike trains, and we have explained the observed deviation from the case of Poisson input. Theoretical estimates of the self-consistent mean-field firing rate of recurrent neuronal networks could thus be improved by taking the refractoriness of the individual neurons into account.

Refractoriness in the input processes of LIF neurons can also be interpreted as a “colored-noise” problem. As can be seen in Fig. 6(a), the PSD of the input to the neurons is not flat (“white”) as it is for Poisson input. For small input dead-time (\(\bar{d}=0.2\)) the PSD is reduced at small frequencies and gradually increases towards 1/d, similar to the PSD of high-pass filtered white noise (also called “green” noise). The complementary case of LIF neurons driven by low-pass filtered white noise (“red” noise) has been dealt with previously (Brunel and Sergi 1998; Lindner 2004; Moreno-Bote and Parga 2010). An extended Fokker–Planck equation that treats “green” noise effectively as “white minus red” noise has been suggested by Câteau and Reyes (2006). For larger input dead-time (\(\bar{d}\geq0.4\)), however, the oscillatory character of the input becomes more influential, and a description based on “green” noise alone does not suffice, since the PSD of “green” noise lacks the pronounced peaks of the input PSD of PPD superpositions (Fig. 6(a)).

We found that the spike trains of LIF neurons driven by superpositions of PPDs show resonances to certain frequency components of the input (Fig. 6(c)). When the input power at the resonance frequency becomes large (for large \(\bar{d}=d/\mu\)), the mean firing rate of the neurons also increases (Fig. 5(c)). This effect cannot be explained by linear response theory of the LIF neuron. Qualitatively, we explained the change of the mean firing rate by regarding the LIF neuron itself effectively as a PPD with a time-dependent hazard function, which transmits signals non-linearly (Deger et al. 2010). However, this effect might only be visible here because the PSD of the input has high power in a narrow frequency band. If the dead-times of the input component processes are heterogeneous, the input PSD is less concentrated and might not provoke this non-linear transmission effect. For neurons in cortical networks, heterogeneous input processes are a more realistic assumption than homogeneous ones, suggesting that the change of the mean firing rate for large \(\bar{d}\) that we see here is a hallmark of a rather extreme scenario.

To summarize, the PPD is a reasonable approximate model for the spike train statistics of stationary single neurons in vivo, and a very good model for the pooled spike trains of homogeneous neuronal populations. This is in contrast to the established Poisson process (without dead-time), which does not account for the correct auto-correlation, count variability, ISI variability, and serial interval correlations. We showed that these properties indeed affect the dynamics of the membrane potential of LIF neurons. For simulations in discrete time, homogeneous superpositions of PPDs and of gamma processes can be efficiently generated by the methods we present in Algorithms 1 and 2. The PPD and gamma superposition generators have been implemented in the Neural Simulation Tool (NEST, Gewaltig and Diesmann 2007), which was used to obtain the simulation results presented in this work.
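Algorithms 1 and 2 are not reproduced in this section. To illustrate why a homogeneous superposition can be generated cheaply in discrete time, the sketch below (our own construction, not necessarily identical to Algorithm 1) tracks only how many of the n components are refractory at each dead-time age, so each time step costs one binomial draw instead of n Bernoulli draws. Function and parameter names are hypothetical; for production simulations, NEST provides the models `ppd_sup_generator` and `gamma_sup_generator`.

```python
import numpy as np


def ppd_superposition_counts(n, rate, dead_time, dt, steps, seed=0):
    """Spike counts per time step of n superimposed, independent discrete-time PPDs.

    A component that spikes at step t cannot spike again before step t + k,
    k = round(dead_time/dt); otherwise it spikes in a step with probability
    p = 1 - exp(-lam*dt), lam = 1/(1/rate - dead_time). The discretization
    shifts the mean ISI by O(dt). All components start non-refractory, so an
    initial transient should be discarded.
    """
    if not 0.0 <= dead_time < 1.0 / rate:
        raise ValueError("need 0 <= dead_time < 1/rate")
    rng = np.random.default_rng(seed)
    lam = 1.0 / (1.0 / rate - dead_time)
    p = -np.expm1(-lam * dt)
    k = int(round(dead_time / dt))
    # Ring buffer: slot j holds the number of components that spiked at the most
    # recent step t with t % k == j; they become free again exactly k steps later.
    refractory = np.zeros(max(k, 1), dtype=np.int64)
    free = n
    counts = np.empty(steps, dtype=np.int64)
    for t in range(steps):
        if k > 0:
            slot = t % k
            free += int(refractory[slot])  # dead-time of these components is over
        spikes = int(rng.binomial(free, p))
        counts[t] = spikes
        if k > 0:
            free -= spikes
            refractory[slot] = spikes  # overwrite: previous occupants were just released
    return counts


if __name__ == "__main__":
    dt = 1e-3
    counts = ppd_superposition_counts(n=1000, rate=10.0, dead_time=0.06, dt=dt, steps=50_000)[5_000:]
    print(f"rate per component: {counts.sum() / (counts.size * dt * 1000):.2f}/s")
    windows = counts[: counts.size // 500 * 500].reshape(-1, 500).sum(axis=1)  # 0.5 s windows
    print(f"Fano factor (0.5 s windows): {windows.var() / windows.mean():.2f}")
```

With a dead-time of 60 ms at 10/s per component, the Fano factor of 0.5 s counts should come out well below 1, as expected for refractory components, while the cost per step does not depend on n.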

References

Berry, M. J., & Meister, M. (1998). Refractoriness and neural precision. Journal of Neuroscience, 18(6), 2200–2211.

Binzegger, T., Douglas, R. J., & Martin, K. A. C. (2004). A quantitative map of the circuit of cat primary visual cortex. Journal of Neuroscience, 24(39), 8441–8453.

Boucsein, C., Nawrot, M. P., Schnepel, P., & Aertsen, A. (2011). Beyond the cortical column: Abundance and physiology of horizontal connections imply a strong role for inputs from the surround. Frontiers in Neuroscience, 5(32), 1–13.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience, 8(3), 183–208.

Brunel, N., & Sergi, S. (1998). Firing frequency of leaky integrate-and-fire neurons with synaptic current dynamics. Journal of Theoretical Biology, 195(1), 87–95.

Campbell, N. (1909). The study of discontinuous phenomena. Proceedings of the Cambridge Philological Society, 15, 117–136.

Câteau, H., & Reyes, A. (2006). Relation between single neuron and population spiking statistics and effects on network activity. Physical Review Letters, 96(5), 058101.

Cox, D. R. (1962). Renewal theory. London: Methuen.

Cox, D. R., & Lewis, P. A. W. (1966). The statistical analysis of series of events. Methuen’s monographs on applied probability and statistics. London: Methuen.

Cox, D. R., & Smith, W. L. (1954). On the superposition of renewal processes. Biometrika, 41(1–2), 91–99.

Deger, M., Helias, M., Cardanobile, S., Atay, F. M., & Rotter, S. (2010). Nonequilibrium dynamics of stochastic point processes with refractoriness. Physical Review E, 82(2), 021129.

De la Rocha, J., Doiron, B., Shea-Brown, E., Josić, K., & Reyes, A. (2007). Correlation between neural spike trains increases with firing rate. Nature, 448(16), 802–807.

Farkhooi, F., Muller, E., & Nawrot, M. P. (2011). Adaptation reduces variability of the neuronal population code. Physical Review E, 83(5), 050905.

Farkhooi, F., Strube-Bloss, M. F., & Nawrot, M. P. (2009). Serial correlation in neural spike trains: Experimental evidence, stochastic modeling, and single neuron variability. Physical Review E, 79(2), 021905.

Gerstein, G. L., & Kiang, N. Y. S. (1960). An approach to the quantitative analysis of electrophysiological data from single neurons. Biophysical Journal, 1(1), 15–28.

Gerstner, W., & Kistler, W. (2002). Spiking neuron models: Single neurons, populations, plasticity. Cambridge: Cambridge University Press.

Gewaltig, M.-O., & Diesmann, M. (2007). NEST (NEural Simulation Tool). Scholarpedia, 2, 1430.

Helias, M., Deger, M., Diesmann, M., & Rotter, S. (2010a). Equilibrium and response properties of the integrate-and-fire neuron in discrete time. Frontiers in Computational Neuroscience, 3(29), 1–17.

Helias, M., Deger, M., Rotter, S., & Diesmann, M. (2010b). Instantaneous non-linear processing by pulse-coupled threshold units. PLoS Computational Biology, 6(9), e1000929.

Helias, M., Deger, M., Rotter, S., & Diesmann, M. (2011). Finite post synaptic potentials cause a fast neuronal response. Frontiers in Neuroscience, 5(19), 1–16.

Heyman, D. P., & Sobel, M. J. (1982). Stochastic models in operations research (Vol. I). New York: McGraw-Hill.

Holden, A. V. (1976). Models of the stochastic activity of neurones. In Lecture notes in biomathematics. Berlin: Springer.

Johnson, D. H. (1996). Point process models of single-neuron discharges. Journal of Computational Neuroscience, 3(4), 275–299.

Johnson, D. H., & Swami, A. (1983). The transmission of signals by auditory-nerve fiber discharge patterns. Journal of the Acoustical Society of America, 74(2), 493–501.

Kass, R., & Ventura, V. (2001). A spike-train probability model. Neural Computation, 13(8), 1713–1720.

Kuffler, S. W., Fitzhugh, R., & Barlow, H. B. (1957). Maintained activity in the cat’s retina in light and darkness. Journal of General Physiology, 40(5), 683–702.

Ledoux, E., & Brunel, N. (2011). Dynamics of networks of excitatory and inhibitory neurons in response to time-dependent inputs. Frontiers in Computational Neuroscience, 5(25), 1–17.

Lindner, B. (2004). Interspike interval statistics of neurons driven by colored noise. Physical Review E, 69(2), 022901.

Lindner, B. (2006). Superposition of many independent spike trains is generally not a Poisson process. Physical Review E, 73(2), 022901.

Maimon, G., & Assad, J. A. (2009). Beyond Poisson: Increased spike-time regularity across primate parietal cortex. Neuron, 62(3), 426–440.

Meyer, C., & van Vreeswijk, C. (2002). Temporal correlations in stochastic networks of spiking neurons. Neural Computation, 14(2), 369–404.

Moreno-Bote, R., & Parga, N. (2010). Response of integrate-and-fire neurons to noisy inputs filtered by synapses with arbitrary timescales: Firing rate and correlations. Neural Computation, 22(6), 1528–1572.

Muller, E., Buesing, L., Schemmel, J., & Meier, K. (2007). Spike-frequency adapting neural assemblies: Beyond mean adaptation and renewal theories. Neural Computation, 19(11), 2958–3010.

Nawrot, M. P., Boucsein, C., Rodriguez Molina, V., Aertsen, A., Grün, S., et al. (2007). Serial interval statistics of spontaneous activity in cortical neurons in vivo and in vitro. Neurocomputing, 70(10–12), 1717–1722.

Nawrot, M. P., Boucsein, C., Rodriguez Molina, V., Riehle, A., Aertsen, A., et al. (2008). Measurement of variability dynamics in cortical spike trains. Journal of Neuroscience Methods, 169(2), 374–390.

Ostojic, S. (2011). Interspike interval distributions of spiking neurons driven by fluctuating inputs. Journal of Neurophysiology, 106(1), 361–373.

Paninski, L. (2004). Maximum likelihood estimation of cascade point-process neural encoding models. Network: Computation in Neural Systems, 15(4), 243–262.

Papoulis, A. (1991). Probability, random variables, and stochastic processes (3rd ed.). New York: McGraw-Hill.

Picinbono, B. (2009). Output dead-time in point processes. Communications in Statistics - Simulation and Computation, 38(10), 2198–2213.

Pillow, J. W., Shlens, J., Paninski, L., Sher, A., Litke, A. M., et al. (2008). Spatio-temporal correlations and visual signalling in a complete neuronal population. Nature, 454(7207), 995–999.

Renart, A., De La Rocha, J., Bartho, P., Hollender, L., Parga, N., et al. (2010). The asynchronous state in cortical circuits. Science, 327(5965), 587–590.

Rosenbaum, R., & Josic, K. (2011). Mechanisms that modulate the transfer of spiking correlations. Neural Computation, 23(5), 1261–1305.

Schwalger, T., Fisch, K., Benda, J., & Lindner, B. (2010). How noisy adaptation of neurons shapes interspike interval histograms and correlations. PLoS Computational Biology, 6(12), e1001026.

Shinomoto, S., Shima, K., & Tanji, J. (2003). Differences in spiking patterns among cortical neurons. Neural Computation, 15(12), 2823–2842.

Tetzlaff, T., Rotter, S., Stark, E., Abeles, M., Aertsen, A., et al. (2008). Dependence of neuronal correlations on filter characteristics and marginal spike-train statistics. Neural Computation, 20(9), 2133–2184.

Truccolo, W. (2010). Stochastic models for multivariate neural point processes: Collective dynamics and neural decoding. In S. Rotter, & S. Grün (Eds.), Analysis of parallel spike trains. Berlin: Springer.

Truccolo, W., Hochberg, L. R., & Donoghue, J. P. (2010). Collective dynamics in human and monkey sensorimotor cortex: Predicting single neuron spikes. Nature Neuroscience, 13(1), 105–113.

Tuckwell, H. C. (1988). Introduction to theoretical neurobiology (Vol. 2). Cambridge: Cambridge University Press.

Turcott, R. G., Lowen, S. B., Li, E., Johnson, D. H., Tsuchitani, C., et al. (1994). A nonstationary Poisson point process describes the sequence of action potentials over long time scales in lateral-superior-olive auditory neurons. Biological Cybernetics, 70(3), 209–217.

van Vreeswijk, C. (2010). Stochastic models of spike trains. In S. Rotter, & S. Grün (Eds.), Analysis of parallel spike trains. Berlin: Springer.

van Vreeswijk, C., & Sompolinsky, H. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science, 274(5293), 1724–1726.

Acknowledgements

We thank Martin Nawrot and Stefano Cardanobile for helpful comments, and two anonymous reviewers for suggesting substantial improvements. Partially funded by BMBF grant 01GQ0420 to BCCN Freiburg, and DFG grant to SFB 780, subproject C4.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Additional information

Action Editor: Nicolas Brunel

M.D. and M.H. contributed equally to this work.

Appendices

Appendix A: Matching of the gamma process

The stationary gamma process is defined by its inter-spike interval (ISI) density

\[ f(t)=\frac{b^{p}}{\Gamma(p)}\,t^{p-1}e^{-bt},\qquad t>0, \]

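with parameters p > 0 (shape) and b > 0 (rate; 1/b is the scale). The mean ISI is \(\mu = p/b\) and the ISI variance is \(\sigma^{2} = p/b^{2}\). Hence the gamma process can be matched to the ISIs of a neuron by solving for p and b and plugging in the empirical estimates \(\hat{\mu}\) and \(\hat{\sigma}\),

\[ \hat{p}=\frac{\hat{\mu}^{2}}{\hat{\sigma}^{2}},\qquad \hat{b}=\frac{\hat{\mu}}{\hat{\sigma}^{2}}. \]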

The auto-correlation function of the gamma process that is shown in Fig. 2(d)–(f) has been computed numerically by truncating the series in Eq. (8) after 25 terms, with f given by Eq. (36).
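The moment matching and the truncated series can be sketched in Python as follows. This is a minimal illustration rather than the authors' code. It assumes that the series in Eq. (8) is the renewal density \(\sum_{k\ge 1} f^{*k}(t)\) built from k-fold convolutions of the ISI density (up to normalization), and it relies on the fact that the k-fold convolution of a gamma density with shape p and rate b is again a gamma density, now with shape kp and rate b. The function names and the choice of the unbiased sample variance are ours.

```python
import math


def match_gamma(isis):
    """Method-of-moments gamma fit to a list of ISIs.

    From mu = p / b and sigma^2 = p / b^2 it follows that the shape is
    p = mu^2 / sigma^2 and the rate (inverse scale) is b = mu / sigma^2.
    """
    n = len(isis)
    mu_hat = sum(isis) / n
    var_hat = sum((x - mu_hat) ** 2 for x in isis) / (n - 1)  # unbiased estimate (our choice)
    return mu_hat ** 2 / var_hat, mu_hat / var_hat


def gamma_pdf(t, p, b):
    """Gamma ISI density b^p t^(p-1) exp(-b t) / Gamma(p), evaluated in log space."""
    if t <= 0.0:
        return 0.0
    return math.exp(p * math.log(b) + (p - 1.0) * math.log(t) - b * t - math.lgamma(p))


def gamma_renewal_density(t, p, b, n_terms=25):
    """Truncated series sum_{k=1}^{n_terms} f^{*k}(t) for a gamma(p, b) ISI density.

    The k-fold convolution of gamma(p, b) is gamma(k p, b), so every term is
    explicit. The sum approaches the firing rate b / p for large t, provided
    n_terms exceeds the number of ISIs that typically fit into t.
    """
    return sum(gamma_pdf(t, k * p, b) for k in range(1, n_terms + 1))
```

For p = 1 the series reduces, up to truncation, to the constant rate b of a Poisson process. This makes a convenient sanity check before the series is applied to fitted parameters.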

Appendix B: ISI density of homogeneous superpositions

Consider a component process at a random point in time. The probability density of the waiting time x to the next spike is then given by the forward recurrence time density (Cox 1962),

with f(t) as defined in Eq. (1). Here \(\mathcal{F}(t)=\int_{t}^{\infty}f(s)\,ds\) denotes the survivor function. In the following we will also need

where we used μ as defined in Eq. (2). We obtain the probability density function of the inter-spike interval of an n-fold superposition from the general formula (Cox 1962)

Plugging in what we computed above, we find

Hence the inter-spike intervals of the superposition of n Poisson processes with dead-time obey the probability density function
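Written out for the PPD, the construction takes the form below. This is our reconstruction, not necessarily the authors' notation. It assumes that Eq. (1) is the PPD density \(f(t)=\lambda e^{-\lambda(t-d)}\) for \(t\ge d\) and zero otherwise, so that \(\mu=d+1/\lambda\). The symbols G, q and \(\mathcal{F}_n\) are ours. \(\mathcal{F}_n(t)\) is the probability that the superposition stays silent for a time t after a spike. The component that just fired must stay silent (factor \(\mathcal{F}\)), and each of the n - 1 other components, observed at a random time, must not fire before t (forward-recurrence survivor G).

```latex
\begin{aligned}
\mathcal{F}(t) &= \int_{t}^{\infty} f(s)\,ds =
  \begin{cases} 1, & 0 \le t < d,\\ e^{-\lambda(t-d)}, & t \ge d, \end{cases}\\[1ex]
G(t) &= \frac{1}{\mu}\int_{t}^{\infty} \mathcal{F}(s)\,ds =
  \begin{cases} 1 - t/\mu, & 0 \le t < d,\\ q\,e^{-\lambda(t-d)}, & t \ge d, \end{cases}
  \qquad q \stackrel{\mathrm{def}}{=} \frac{1}{\lambda\mu} = 1 - \frac{d}{\mu},\\[1ex]
\mathcal{F}_n(t) &= \mathcal{F}(t)\,G(t)^{n-1},
  \qquad f_n(t) = -\frac{\mathrm{d}}{\mathrm{d}t}\,\mathcal{F}_n(t),\\[1ex]
f_n(t) &= \begin{cases}
  \dfrac{n-1}{\mu}\left(1 - \dfrac{t}{\mu}\right)^{n-2}, & 0 \le t < d,\\[1ex]
  n\lambda\,q^{\,n-1}\,e^{-n\lambda(t-d)}, & t \ge d.
  \end{cases}
\end{aligned}
```

At t = d both branches of \(\mathcal{F}_n\) equal \(q^{n-1}\), so the survivor function is continuous. The density, however, jumps from \((n-1)q^{n-2}/\mu\) to \(n\,q^{n-2}/\mu\), because the component that fired last becomes able to fire again.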

Accordingly, the mean ISI of the superposition is

The variance of the ISI of the superposition is
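The mean and variance can be cross-checked numerically with the Python sketch below. It assumes the PPD density \(f(t)=\lambda e^{-\lambda(t-d)}\) for \(t\ge d\), with \(\mu=d+1/\lambda\) and \(q=1/(\lambda\mu)\). The moments follow from \(\mathrm{E}[T]=\int_0^\infty\mathcal{F}_n(t)\,dt\) and \(\mathrm{E}[T^2]=2\int_0^\infty t\,\mathcal{F}_n(t)\,dt\). Here \(\mathcal{F}_n(t)=\mathcal{F}(t)G(t)^{n-1}\) is the survivor function of the superposition ISI, built from the component survivor \(\mathcal{F}\) and the forward-recurrence survivor \(G(t)=\mu^{-1}\int_t^\infty\mathcal{F}(s)\,ds\). The closed forms are our own derivation and should not be read as the paper's exact expressions. The function names are hypothetical.

```python
import math


def ppd_superposition_isi_density(t, n, lam, d):
    """ISI density f_n(t) of n superimposed i.i.d. stationary PPDs.

    Assumes the PPD ISI density lam * exp(-lam * (t - d)) for t >= d.
    """
    mu = d + 1.0 / lam                 # mean ISI of one component
    q = 1.0 / (lam * mu)               # equals 1 - d / mu
    if t < 0.0:
        return 0.0
    if t < d:                          # only the n - 1 other components can fire
        return (n - 1) / mu * (1.0 - t / mu) ** (n - 2) if n > 1 else 0.0
    return n * lam * q ** (n - 1) * math.exp(-n * lam * (t - d))


def ppd_superposition_isi_moments(n, lam, d):
    """Closed-form mean and variance of the superposition ISI.

    Obtained by integrating the piecewise survivor function F_n(t):
    (1 - t/mu)^(n-1) on [0, d) and q^(n-1) exp(-n lam (t - d)) on [d, inf).
    """
    mu = d + 1.0 / lam
    q = 1.0 / (lam * mu)
    mean = mu / n                      # the superposition fires at rate n / mu
    second = (2.0 * mu ** 2 * ((1.0 - q ** n) / n - (1.0 - q ** (n + 1)) / (n + 1))
              + 2.0 * q ** (n - 1) * (d / (n * lam) + 1.0 / (n * lam) ** 2))
    return mean, second - mean ** 2
```

Two limits are easy to check. For n = 1 the variance reduces to \(1/\lambda^2\), the ISI variance of a single PPD. For d = 0 it reduces to \(1/(n\lambda)^2\), the value for a Poisson process of rate \(n\lambda\).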

Appendix C: Variance of the spike count

The auto-correlation function γ(t) of the PPD is given by Eq. (8). Consequently (Holden 1976) its auto-covariance is

The count X in a window of length l is the shot noise X(t) = (S ⋆ h)(t), given the stationary spike train S(t) and the rectangular kernel \(h(t)\stackrel{\mathrm{def}}{=}1_{t\in[0,l]}\) with \(1_{Z}=\{1\text{ if }Z,\;0\text{ else}\}\). In the following we decorate mean-subtracted random variables with a bar, \(\bar{x}\stackrel{\rm{def}}{=} x-\mathrm{E}[x]\). The auto-covariance function of the shot noise X(t) is, analogously to the computations in Nawrot et al. (2008) and Tetzlaff et al. (2008),

where in the third step we identified the auto-covariance function of the PPD \(\int_{-\infty}^{\infty}\bar{S}(s)\bar{S}(t+s-v+u)\, ds=c_{\mathrm{PPD}}(t-v+u)\), and in the last step we introduced the symbol H(x) for the correlation of the kernel with itself. For the rectangular (counting) kernel h(t) this evaluates to

\[ H(x)=\int_{-\infty}^{\infty}h(s)\,h(s+x)\,ds=(l-|x|)\,1_{|x|\le l}. \]

So we obtain

where we used the convention \(\int_{0}^{t}\delta(x)dx=\frac{1}{2}\) for t > 0 and the symmetry of the auto-covariance function, Eq. (38). Inserting \(c_{\mathrm{PPD}}\) yields

We evaluate the \(\chi_{k}\) using Eq. (9)

where we defined

Here we used the regularized upper incomplete gamma function \(z(a,b)\stackrel{\mathrm{def}}{=}\Gamma(a,b)/\Gamma(a)\), where \(\Gamma(a,b)=\int_{b}^{\infty}t^{a-1}e^{-t}dt\) is the upper incomplete gamma function. Finally, the count variance is
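Because the sum over k is finite, the count variance can be evaluated with elementary functions. The following Python sketch is not the authors' implementation. It assumes that Eq. (9) is the PPD density \(f(t)=\lambda e^{-\lambda(t-d)}\) for \(t\ge d\), with dead-time d, free rate \(\lambda\) and stationary rate \(\nu=1/(d+1/\lambda)\). Under that assumption \(f^{*k}\) is a gamma density with shape k and rate \(\lambda\), shifted by kd, and it vanishes for \(t<kd\). The sketch computes \(\mathrm{Var}[X]=\nu l+2\nu\sum_k\int_0^l(l-t)f^{*k}(t)\,dt-\nu^2l^2\). With \(L=l-kd\), each integral reduces to \(L\,[1-z(k,\lambda L)]-(k/\lambda)\,[1-z(k+1,\lambda L)]\). The helper names `z_upper` and `ppd_count_variance` are hypothetical.

```python
import math


def z_upper(a, x):
    """z(a, x) = Gamma(a, x) / Gamma(a) for integer a >= 1 and x > 0.

    For integer a this equals P(N < a) with N ~ Poisson(x); the terms are
    summed in log space so that large x does not overflow.
    """
    log_x = math.log(x)
    return math.fsum(math.exp(-x + j * log_x - math.lgamma(j + 1)) for j in range(a))


def ppd_count_variance(lam, d, window):
    """Spike-count variance of a stationary PPD in a window of length `window`.

    Assumes the PPD ISI density f(t) = lam * exp(-lam * (t - d)) for t >= d.
    """
    nu = 1.0 / (d + 1.0 / lam)                  # stationary firing rate 1/mu
    acc = 0.0                                   # sum_k int_0^l (l - t) f^{*k}(t) dt
    k = 1
    while True:
        L = window - k * d                      # window left after k dead times
        if L <= 0.0:
            break                               # f^{*k} vanishes on [0, window]
        x = lam * L
        if k > x + 12.0 * math.sqrt(x) + 20.0:
            break                               # Poisson tail: remaining terms negligible
        z_k = z_upper(k, x)
        z_k1 = z_k + math.exp(-x + k * math.log(x) - math.lgamma(k + 1))
        # int_0^L (L - s) g_k(s) ds, with g_k the gamma(k, lam) density
        acc += L * (1.0 - z_k) - (k / lam) * (1.0 - z_k1)
        k += 1
    return nu * window + 2.0 * nu * acc - (nu * window) ** 2
```

Three checks follow directly. For a window shorter than d, at most one spike fits, so \(\mathrm{Var}[X]=\nu l(1-\nu l)\). For d = 0 the result is the Poisson value \(\lambda l\). For long windows the Fano factor approaches the squared ISI coefficient of variation, \(1/(1+\lambda d)^2\).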

Appendix D: Variance of the membrane potential

We consider a shot noise U(t) = R(I ⋆ h)(t) with the kernel \(h(t)=\theta(t)\frac{1}{\tau}e^{-\frac{t}{\tau}}\) (Eq. (26)), the membrane’s impulse response, driven by the input process RI(t) = τwS(t). Here S(t) is a superposition of n independent and identically distributed PPDs \(S_{k}(t)\), \(S(t)=\sum_{k=1}^{n}S_{k}(t)\). The auto-covariance function of each \(S_{k}\) is \(c_{\mathrm{PPD}}\), given in Eq. (38). The auto-covariance function of the superposition S then is

where we used the mutual independence of the \(S_{k}\) in the second step. This relation generally holds for independent superpositions of stationary point processes with auto-covariance function \(c_{\mathrm{PPD}}\).

As in Appendix C we obtain the variance of the membrane potential U(t) from its auto-covariance function. We write out Eq. (39) and find

where we used

Using Eqs. (38) and (8) we can write

which, although we used the symbol \(c_{\mathrm{PPD}}\) above, holds for superpositions of any stationary renewal process with ISI density f. We evaluate Eq. (42) at t = 0, using the ISI density of the PPD, Eq. (9), to obtain the variance
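Because the membrane kernel is exponential, this variance can also be evaluated in closed form through a Laplace transform. The Python sketch below is a cross-check under stated assumptions, not the authors' expression. It assumes that Eq. (9) is the PPD density \(f(t)=\lambda e^{-\lambda(t-d)}\) for \(t\ge d\), and that \(c_{\mathrm{PPD}}(t)=\nu\delta(t)+\nu[\gamma(|t|)-\nu]\), with \(\gamma(t)=\sum_k f^{*k}(t)\) and \(\nu=1/(d+1/\lambda)\). It then uses \(\mathrm{Var}[U]=(\tau w)^2 n\int c_{\mathrm{PPD}}(t)\,H(t)\,dt\), where \(H(t)=e^{-|t|/\tau}/(2\tau)\) is the correlation of the membrane kernel with itself, together with the renewal identity \(\int_0^\infty\gamma(t)e^{-st}dt=\hat f(s)/(1-\hat f(s))\) at \(s=1/\tau\). The function names are hypothetical.

```python
import math


def ppd_laplace(s, lam, d):
    """Laplace transform of the assumed PPD ISI density lam*exp(-lam*(t-d)), t >= d."""
    return math.exp(-s * d) * lam / (lam + s)


def membrane_variance_ppd(n, lam, d, tau, w):
    """Variance of U = tau*w*(S * h), h(t) = exp(-t/tau)/tau, S a sum of n i.i.d. PPDs.

    Var U = (tau w)^2 n nu / (2 tau) * [1 + 2 (F / (1 - F) - nu tau)],
    where F is the Laplace transform of the ISI density at s = 1/tau.
    """
    nu = 1.0 / (d + 1.0 / lam)                  # stationary rate of one PPD
    F = ppd_laplace(1.0 / tau, lam, d)
    bracket = 1.0 + 2.0 * (F / (1.0 - F) - nu * tau)
    return (tau * w) ** 2 * n * nu / (2.0 * tau) * bracket
```

For d = 0 we have \(F/(1-F)=\lambda\tau=\nu\tau\), so the bracket equals 1 and the result reduces to Campbell's theorem, \(\mathrm{Var}[U]=n\lambda w^2\tau/2\). For d > 0 the bracket is smaller than 1 in typical parameter ranges, which reflects the reduced input variability caused by refractoriness.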

From Eq. (42) we may also compute the power spectrum of the membrane potential. According to the Wiener–Khintchine theorem it is given by the Fourier transform \(\tilde{c}_{U}(\omega)\) of the auto-covariance function. So we obtain

with the Fourier transform of the membrane kernel

\[ \tilde{h}(\omega)=\int_{-\infty}^{\infty}h(t)\,e^{-i\omega t}\,dt=\frac{1}{1+i\omega\tau}, \]

and the power spectrum of the PPD \(\tilde{c}_{\mathrm{PPD}}(\omega)\). It can be shown (Gerstner and Kistler 2002) that

Thus the power spectrum of the membrane potential Eq. (45) is

We have used this formula to compute the power spectrum displayed in Fig. 5(d), weighting the contributions of excitation, inhibition and the remaining Poisson input analogously to Eq. (34).
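The spectral route can be sketched numerically as well. The Python sketch assumes the PPD density \(f(t)=\lambda e^{-\lambda(t-d)}\) for \(t\ge d\), whose Fourier transform (convention \(\int f(t)e^{-i\omega t}dt\)) is \(\tilde f(\omega)=e^{-i\omega d}\lambda/(\lambda+i\omega)\). For \(\omega\neq0\) it uses the standard renewal-process spectrum \(\nu\,\mathrm{Re}[(1+\tilde f)/(1-\tilde f)]=\nu(1-|\tilde f|^2)/|1-\tilde f|^2\) (cf. Gerstner and Kistler 2002), but we have not verified that this is literally the expression meant in the text. By Parseval's theorem, integrating the membrane spectrum over frequency should return the variance of U. The substitution \(\omega=\tan\theta/\tau\) maps \(|\tilde h|^2d\omega\) to \(d\theta/\tau\), which turns that integral into a finite one. The function names are hypothetical.

```python
import cmath
import math


def ppd_isi_fourier(omega, lam, d):
    """int f(t) exp(-i omega t) dt for the assumed PPD ISI density lam*exp(-lam*(t-d)), t >= d."""
    return cmath.exp(-1j * omega * d) * lam / (lam + 1j * omega)


def ppd_power_spectrum(omega, lam, d):
    """Renewal-process spectrum nu * Re[(1 + F) / (1 - F)] of one PPD, valid for omega != 0."""
    nu = 1.0 / (d + 1.0 / lam)                  # stationary rate 1/mu
    F = ppd_isi_fourier(omega, lam, d)
    return nu * (1.0 - abs(F) ** 2) / abs(1.0 - F) ** 2


def membrane_power_spectrum(omega, n, lam, d, tau, w):
    """(tau w)^2 n |h~(omega)|^2 c~_PPD(omega) with |h~(omega)|^2 = 1 / (1 + (omega tau)^2)."""
    return (tau * w) ** 2 * n * ppd_power_spectrum(omega, lam, d) / (1.0 + (omega * tau) ** 2)


def membrane_variance_from_spectrum(n, lam, d, tau, w, n_points=100000):
    """Var U = (1/pi) int_0^inf c~_U(omega) d omega (Parseval; c~_U is even in omega).

    With omega = tan(theta) / tau the integral runs over theta in (0, pi/2)
    and the membrane filter factor cancels against the Jacobian.
    """
    h = (math.pi / 2.0) / n_points
    total = 0.0
    for i in range(n_points):
        theta = (i + 0.5) * h                   # midpoints avoid theta = 0 and pi/2
        total += ppd_power_spectrum(math.tan(theta) / tau, lam, d)
    return (tau * w) ** 2 * n * total * h / (math.pi * tau)
```

The PPD spectrum tends to \(\nu\,\mathrm{CV}^2=\nu/(1+\lambda d)^2\) as \(\omega\to0\) and to \(\nu\) as \(\omega\to\infty\). Its low-frequency suppression is the spectral signature of refractoriness.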

About this article

Cite this article

Deger, M., Helias, M., Boucsein, C. et al. Statistical properties of superimposed stationary spike trains. J Comput Neurosci 32, 443–463 (2012). https://doi.org/10.1007/s10827-011-0362-8
