Abstract

Purpose: Telemedicine approaches to autism (ASD) assessment have become increasingly common, yet few validated tools exist for this purpose. This study presents results from a clinical trial investigating two approaches to tele-assessment for ASD in toddlers. Methods: 144 children (29% female) between 17 and 36 months of age (mean = 2.5 years, SD = 0.33 years) completed tele-assessment using either the TELE-ASD-PEDS (TAP) or an experimental remote administration of the Screening Tool for Autism in Toddlers (STAT). All children then completed traditional in-person assessment with a blinded clinician, using the Mullen Scales of Early Learning (MSEL), Vineland Adaptive Behavior Scales, 3rd Edition (VABS-3), and Autism Diagnostic Observation Schedule (ADOS-2). Both tele-assessment and in-person assessment included a clinical interview with caregivers. Results: Results indicated diagnostic agreement for 92% of participants. Children diagnosed with ASD following in-person assessment who were missed by tele-assessment (n = 8) had lower scores on tele- and in-person ASD assessment tools. Children inaccurately identified as having ASD by tele-assessment (n = 3) were younger than other children and had higher developmental and adaptive behavior scores than children accurately diagnosed with ASD by tele-assessment. Diagnostic certainty was highest for children correctly identified as having ASD via tele-assessment. Clinicians and caregivers reported satisfaction with tele-assessment procedures. Conclusion: This work provides additional support for the use of tele-assessment for identification of ASD in toddlers, with both clinicians and families reporting broad acceptability. Continued development and refinement of tele-assessment procedures is recommended to optimize this approach for the needs of varying clinicians, families, and circumstances.

Increasingly, telemedicine-based assessment (i.e., tele-assessment) has been adopted as an approach for evaluation and diagnosis of autism spectrum disorder (ASD) in young children (Berger et al., 2021; Stavropoulos et al., 2022; Wagner et al., 2022). Over the past decade, tele-assessment has been described as a promising strategy for increasing access to diagnostic services (Juárez et al., 2018; Reese et al., 2013; Zwaigenbaum & Warren, 2020), particularly in the context of the recognized importance of early identification of ASD (Hyman et al., 2020). The COVID-19 pandemic hastened the uptake of ASD tele-assessment as service systems sought to meet clinical demand and family needs while in-person clinical care was paused (Dahiya et al., 2021; Stavropoulos et al., 2022; Wagner et al., 2020). This recent tele-assessment research has emphasized broad family and clinician satisfaction while also highlighting the need for ongoing studies of diagnostic accuracy, performance of tele-assessment tools, and characteristics of children and families best served by tele-assessment.

Initial research on ASD tele-assessment focused on either (1) caregiver administration of traditional ASD assessment tools (Reese et al., 2013, 2015) or (2) clinic-based tele-assessment that included a remote psychologist as well as a trained, on-site para-professional who completed assessment activities with the child (Juárez et al., 2018). These initial studies demonstrated feasibility and acceptability of tele-assessment, as well as diagnostic accuracy (Juárez et al., 2018) and system-level benefits related to reduced travel burden, decreased wait time, and a shift in referral patterns toward tele-assessment rather than assessment in tertiary care centers (Stainbrook et al., 2019). This early work also recognized barriers to large-scale replication, especially challenges related to involvement of multiple trained professionals or detailed training of caregivers to implement structured activities.

The next phase of tele-assessment investigation focused on the development and deployment of instruments specifically adapted or designed for clinician-guided, caregiver-mediated use (Corona, Wagner, et al., 2021; Corona, Weitlauf, et al., 2021). Prior to the pandemic, our team published interim data from a clinical trial (NCT03847337) on the use of either the TAP (previously TELE-ASD-PEDS; Corona et al., 2020) or remote administration of the prompts of the Screening Tool for Autism in Toddlers and Young Children (STAT; Stone et al., 2000) in a clinical research setting (Corona, Weitlauf, et al., 2021). The remote STAT administration, an unstandardized use of the instrument beyond the scope intended by the authors (hereafter referred to as the TELE-STAT), was employed as an experimental procedure to benchmark accuracy of the TAP. These tools were also chosen for study based on their relatively brief administration time (i.e., approximately 15–20 min), accessibility of materials, use in previous work (Juárez et al., 2018; Stainbrook et al., 2019), and potential for clinicians to guide caregivers to complete activities without need for prior caregiver training or practice. Interim data highlighted caregiver satisfaction with caregiver-mediated tele-assessment procedures and noted diagnostic agreement of 86% when comparing remote clinicians’ diagnostic impressions to participants’ diagnoses from traditional in-person assessment.

At the onset of the COVID-19 pandemic, one of the tools included in this study, the TAP, was deployed in direct-to-home settings (i.e., within families’ homes, as opposed to tele-assessment completed within a medical or clinical research setting) and disseminated broadly across the United States and beyond. Analysis of clinician-reported data both within (Wagner et al., 2020) and external to our institution (Wagner et al., 2022) indicated that clinicians were able to make definitive diagnostic decisions via tele-assessment for a majority of children with a high degree of diagnostic certainty. Additional external studies of tele-assessment using the TAP highlighted caregiver satisfaction with tele-assessment procedures, including logistical convenience, positive interactions with clinicians, and satisfaction with diagnostic feedback and recommendations (Jones, 2022; Stavropoulos et al., 2022). However, the rapid roll-out of the TAP in response to the urgent need for telemedicine tools preceded controlled investigation of the TAP in comparison to traditional, in-person assessment.

To date, limited data are available regarding the psychometric properties of tele-assessment tools, the characteristics of children evaluated via tele-assessment, and the diagnostic agreement between tele- and traditional in-person assessments. This study presents complete data from our original TAP clinical trial (NCT03847337). Specifically, this study evaluates tele-assessment procedures incorporating either the TAP or remote administration of the STAT (TELE-STAT), with a focus on diagnostic accuracy in comparison to traditional in-person assessment, clinical characterization of children identified vs. not identified as having ASD via tele-assessment, and overall clinician and caregiver feedback on tele-assessment procedures. The primary purpose of this study was not to directly compare the performance of the TAP to the TELE-STAT, but rather to compare tele-assessment using clinician-guided, caregiver-led procedures to traditional in-person ASD assessment procedures. Given broad use and uptake of the TAP in recent years, analysis of TAP scoring procedures is also presented, including a comparison of the dichotomous and Likert scoring procedures.

Methods

Participants

Eligible participants were toddlers between 15 and 36 months of age with concerns for ASD or developmental delays, with at least one primary caregiver with sufficient English facility to complete study measures. Exclusion criteria included significant sensory impairments or medical complexity that would preclude use of the study assessment battery.

Participants included 144 children (29% female, 71% male) between 17 and 36 months of age (mean = 2.5 years, SD = 0.33 years) and their caregivers. All children were recruited from a clinical waitlist to which they had been referred by a regional medical or early intervention provider specifically due to concern for possible ASD or other developmental differences. Participating caregivers included 124 mothers, 13 fathers, and seven other caregivers (e.g., grandparents, foster parents, legal guardians). Additional demographic information is presented in Table 1.

Tele-assessment Measures

TAP. The TAP (formerly TELE-ASD-PEDS; Corona et al., 2020) is designed for evaluating characteristics of ASD in toddlers via parent-mediated tele-assessment. The TAP was designed for use with children between the ages of 14–36 months (Wagner et al., 2021). It includes eight activities, including free play, physical play routines, social responding (e.g., name calling, directing attention), and activities that may prompt a child to request (e.g., snack). Immediately following administration, clinicians rate child behavior on seven distinct behavioral anchors (i.e., socially directed speech and sounds; frequent and flexible eye contact; unusual vocalizations; unusual or repetitive play; unusual or repetitive body movements; combines gestures, eye contact, and speech/vocalization; unusual sensory exploration or reaction). Scoring instructions include the use of both dichotomous ratings (presence/absence) as well as Likert scoring (3 = behaviors characteristic of ASD clearly present; 2 = possible atypical behavior; 1 = behaviors characteristic of ASD not present). Total Likert scores of ≥ 11 are considered to indicate increased likelihood of ASD.
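The Likert scoring rule described above can be illustrated with a short sketch. It assumes only what the text states: seven items, each rated 1, 2, or 3, summed to a total (so totals can range from 7 to 21) and compared against the preliminary threshold of 11. The function names and the example ratings are hypothetical and are not part of the published TAP scoring form.

```python
# Hypothetical helper for illustration; not the published TAP scoring form.
TAP_ITEMS = 7        # seven behavioral anchors rated by the clinician
LIKERT_CUTOFF = 11   # preliminary threshold stated in the text


def tap_likert_total(ratings):
    """Sum seven Likert ratings (each 1, 2, or 3) into a total score."""
    if len(ratings) != TAP_ITEMS:
        raise ValueError("expected seven item ratings")
    if any(r not in (1, 2, 3) for r in ratings):
        raise ValueError("each rating must be 1, 2, or 3")
    return sum(ratings)


def increased_likelihood(total, cutoff=LIKERT_CUTOFF):
    """Return True when the total meets or exceeds the cutoff."""
    return total >= cutoff


example_ratings = [3, 2, 1, 2, 1, 2, 1]      # made-up ratings
example_total = tap_likert_total(example_ratings)
print(example_total, increased_likelihood(example_total))
```

The cutoff is passed as a parameter so that an alternative threshold can be examined without changing the scoring function.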

TELE-STAT. This experimental procedure was adapted from the Screening Tool for Autism in Toddlers and Young Children (STAT). The STAT is a Level 2 screening instrument validated for in-person use with children between 14 and 47 months of age (Stone et al., 2000, 2004, 2008). It includes 12 activities assessing a child’s skills related to play, requesting, directing attention, and imitating adult actions. Activities are scored as “pass” (score of 0), indicating that the behavior or skill was observed, or “fail” (score of 0.25, 0.5, or 1 depending on domain), indicating that the behavior or skill was not observed. Total scores range from 0 to 4, with scores of 2 or higher indicating increased likelihood of ASD.

In the TELE-STAT, participating caregivers were asked to complete STAT activities with guidance and instruction from remote clinicians. Remote clinicians scored each activity as it was completed, seeking clarification from caregivers about child behaviors including eye contact and vocalizations as needed. This was considered a non-standardized administration of the STAT, but thought to be a reasonable alternative to use in preliminary evaluation of the TAP, particularly given the dearth of caregiver-led tele-assessments available at the time (i.e., assessments such as the Brief Observation of Symptoms of Autism (BOSA; Dow et al., 2021) were not yet published or broadly available at the onset of this trial).

Clinical interview. Clinicians completed a clinical interview with caregivers to gather information about child social and communication skills, as well as restricted, repetitive behaviors. Clinical interviews were not standardized but were based on DSM-5 criteria for ASD.

Caregiver questionnaire. Following tele-assessment, caregivers completed a questionnaire designed specifically for the current study to assess their perceptions of the tele-assessment process. Questions asked about caregivers’ understanding of clinician-delivered instructions, their comfort during the tele-assessment, and their perceptions of the duration and content of the activities.

Clinician questionnaire. Following tele-assessment, clinicians completed questionnaires documenting their diagnostic impression, diagnostic certainty, and observed and reported characteristics of ASD according to DSM-5 criteria. Clinicians also provided information about any factors that impacted the assessment (e.g., child behavior, parent behavior, technology challenges).

In-person Assessment Measures

Mullen Scales of Early Learning (MSEL). The MSEL (Mullen, 1995) is a standardized, normed developmental assessment for children through age 68 months. It provides an overall index of ability, the Early Learning Composite, as well as subscale scores (Receptive/Expressive Language, Visual Reception, Gross/Fine Motor).

Vineland Adaptive Behavior Scales, Third Edition (VABS-3). The VABS-3 (Sparrow, 2016) is a clinician-administered caregiver interview that assesses adaptive functioning in social, communication, motor, and daily living skills. Norms-based standard scores are provided for birth through adulthood for each domain, as well as an overall Adaptive Behavior Composite score.

Autism Diagnostic Observation Schedule, Second Edition (ADOS-2). The ADOS-2 (Lord, 2012; Luyster et al., 2009) is a semi-structured, play-based interaction and observation designed to assess characteristics of ASD. In response to the ongoing COVID-19 pandemic, aspects of ADOS-2 administration were modified during the latter year of data collection (n = 59 participants). Modifications included use of masks by clinicians and caregivers, omission of the snack activity (substituting toys for food when requesting) and responsive social smile, and substitution of materials to allow for thorough cleaning (i.e., substitution of remote control car for rabbit toy in joint attention activity). Given non-standardized administration and modification, standardized risk scores should be interpreted with caution (Dow et al., 2021). Of note, mean ADOS-2 severity scores were not significantly different (t = 0.19, p > 0.05) for administrations completed prior to COVID (n = 83; m = 7.78, SD = 2.64) and those completed with COVID modifications (n = 59; m = 7.86, SD = 2.24). Diagnostic agreement between tele- and in-person assessment did not differ prior to and following the introduction of COVID-19 precautions (χ² = 2.37; p > 0.05). As recommended, the ADOS-2 was used as part of a broader evaluation, including expert clinical judgment, when making diagnostic determinations (Lord et al., 2000). Specifically, psychometric findings of assessment instruments were combined with the totality of available information (i.e., clinician observations, caregiver report) when determining whether a child met criteria for the diagnosis of autism spectrum disorder, per DSM-5 diagnostic criteria.

Clinical interview. Clinicians completed a clinical interview with caregivers to gather information about child social and communication skills, as well as restricted, repetitive behaviors. Clinical interviews were not standardized but were based on DSM-5 criteria for ASD.

Caregiver questionnaire. Following in-person assessment, caregivers completed a questionnaire designed for the current study. This questionnaire asked them to compare the tele- and in-person assessments, including indicating whether they would prefer to play with their child as part of an assessment, observe a clinician interact with their child, or both. Caregivers were also given the opportunity to provide open-ended feedback on the tele-assessment and in-person assessment.

Clinician questionnaire. Following in-person assessment, clinicians completed questionnaires documenting their diagnostic impression, diagnostic certainty, and observed and reported characteristics of ASD according to DSM-5 criteria.

Procedures

Participants were randomized to receive one of two tele-assessment procedures: the TAP (n = 73) or the TELE-STAT (n = 71). All procedures were approved by the Institutional Review Board. No adverse events or participant withdrawals occurred. Clinical procedures (i.e., both tele-assessments and in-person assessments) were completed by psychological providers (licensed psychologists, licensed senior psychological examiners, supervised postdoctoral psychology fellows) with expertise in the evaluation and diagnosis of ASD in toddlers. All psychological providers had achieved research reliability on the ADOS-2 and completed training on the STAT. All providers participated in regular reliability discussions about TAP and TELE-STAT scoring and procedures.

Tele-assessment took place within a clinical research setting. Upon arriving, participating families were escorted to a tele-assessment room by a research assistant. Research assistants were responsible for orienting families to the assessment room, test materials (e.g., toys, bubbles, snacks), and tele-assessment technology. Research assistants accessed the Zoom platform via wall-mounted monitor and speakers allowing for two-way audiovisual communication and camera control by the remote assessor. The research assistant left the room after ensuring that the family and clinician could see and hear each other.

Remote clinicians (n = 15 psychological providers, as defined above) guided caregivers through structured interactions with their children, following the procedures described above for either the TELE-STAT or TAP. Each measure was coded according to its instructions regarding behaviors that the clinician observed. Clinicians also completed a clinical interview with caregivers focused on autism-related characteristics. Tele-assessments lasted an average of 39 min (SD = 11.12). Remote clinicians documented their diagnostic impressions (ASD vs. no ASD) and diagnostic certainty immediately following tele-assessment. Diagnostic impressions were informed by the totality of tele-assessment information (i.e., TAP or TELE-STAT plus clinical interview). Tele-assessment diagnostic impressions were not shared with families or in-person clinicians. Following tele-assessment, caregivers completed the caregiver questionnaire to share their thoughts on the tele-assessment process.

Immediately after completing tele-assessment, participating families moved into another clinic room to complete traditional in-person evaluation with a different clinician blind to tele-assessment results. Tele-assessment was always completed prior to in-person assessment for several reasons, including the anticipated length of in-person assessments, the receipt of diagnostic feedback following in-person assessment, and the desire for caregivers to provide initial feedback on tele-assessment procedures before completing in-person assessment. In-person evaluation included the MSEL, VABS-3, ADOS-2, and a clinical interview with caregivers. In-person clinicians (n = 8) were a subset of the providers who completed tele-assessments, including licensed psychologists and licensed senior psychological examiners. In-person clinicians shared assessment results and delivered diagnostic feedback following in-person assessment.

Analytic Plan

Diagnostic outcomes of assessments are reported descriptively. Independent-samples t-tests and chi-square tests were used to compare TAP and TELE-STAT groups in terms of diagnostic agreement, diagnostic certainty, and clinician and caregiver perceptions of tele-assessment. To examine assessment scores as a function of diagnostic agreement status, the Brown-Forsythe or Welch test was used to compare total scores on the TELE-STAT, TAP, MSEL, VABS-3, and ADOS-2. The Brown-Forsythe test, rather than traditional one-way ANOVA, was used when the assumptions of normality and homogeneity of variance were met, because this test is robust in the presence of unequal sample sizes (Maxwell & Delaney, 2003). The Welch test was used when the assumption of normality was met but the assumption of homogeneity of variance was violated (Tomarken & Serlin, 1986). The Bonferroni method was used to correct for multiple comparisons.

Analysis of the psychometric properties of the TAP focused on comparing the utility of dichotomous and Likert scoring procedures. Cronbach’s alpha for total scores was calculated to measure the internal consistency of both scoring procedures (Cronbach, 1951). A procedure derived by Feldt (1969, 1980) was used to test the statistical significance of the difference between the internal consistency coefficients of the different response formats of the TAP (Charter & Feldt, 1996).
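For readers unfamiliar with the internal consistency coefficient, the following minimal sketch shows one standard way to compute Cronbach's alpha from item-level ratings, using only the Python standard library. The data are made up for illustration, and the function name `cronbach_alpha` is hypothetical; this is not the cocron-based procedure used in the study and does not implement the Feldt comparison of two coefficients.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of per-child item-score lists.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Assumes every row has the same number of items and totals are not constant.
    """
    k = len(rows[0])
    item_columns = list(zip(*rows))                  # one tuple per item
    item_var_sum = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Made-up ratings: five children, three items rated 1-3.
toy_ratings = [
    [3, 3, 2],
    [1, 1, 1],
    [2, 3, 3],
    [1, 2, 1],
    [3, 2, 3],
]
toy_alpha = cronbach_alpha(toy_ratings)
print(round(toy_alpha, 3))
```

Because a Likert format allows more variance per item than a presence/absence format, the two scoring procedures can yield different coefficients for the same observations, which is the comparison the analysis above addresses.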

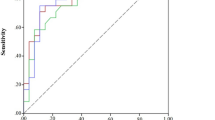

The diagnostic accuracy of the dichotomous and Likert scoring procedures for the TAP was comparatively evaluated using receiver operating characteristic (ROC) curves and the area under the curve (AUC) (Zhou et al., 2011). Confidence intervals for the AUCs were calculated using DeLong’s method for paired ROC curves (DeLong et al., 1988). The AUCs of the dichotomous and Likert scoring procedures for the TAP were compared using the stratified bootstrap test for paired ROC curves.
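As an illustration of what the AUC measures, the sketch below computes it directly as the proportion of (ASD, non-ASD) pairs in which the child with ASD has the higher score, counting ties as one half. This pairwise form is equivalent to the area under the empirical ROC curve. The scores are made up and the function name `auc` is hypothetical; the sketch does not reproduce the DeLong confidence intervals or the bootstrap comparison, which were carried out with pROC.

```python
def auc(pos_scores, neg_scores):
    """Area under the empirical ROC curve via pairwise comparison.

    pos_scores: scores for children with the condition
    neg_scores: scores for children without the condition
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0      # correctly ordered pair
            elif p == n:
                wins += 0.5      # tie counts as half
    return wins / (len(pos_scores) * len(neg_scores))


# Made-up total scores for illustration only.
toy_pos = [12, 15, 18, 11]
toy_neg = [8, 12, 9]
toy_auc = auc(toy_pos, toy_neg)
print(toy_auc)
```

A value of 0.5 corresponds to chance-level ordering and a value of 1.0 to perfect separation of the two groups.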

To determine the optimal cutoff score for the dichotomous and Likert scoring procedures, the following indices were calculated at all possible cutoff points: sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), Cohen’s kappa, and Youden’s Index. Cohen’s kappa is a measure of interrater agreement and in this context, represents the agreement between the TAP and the clinical diagnosis based on in-person assessments. Youden’s index represents the likelihood of a positive test result among individuals with the condition versus individuals without the condition of interest (Zhou et al., 2011). Youden’s index is calculated as the sum of the sensitivity and specificity minus one.
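The indices listed above can all be derived from the 2 × 2 table formed at a given cutoff. The following sketch, under the assumption that a score at or above the cutoff counts as a positive screen, computes each index and selects the cutoff that maximizes Youden's index. The data and the function names (`indices_at_cutoff`, `best_cutoff_by_youden`) are hypothetical; the study itself used the cutpointr package.

```python
def indices_at_cutoff(scores, has_condition, cutoff):
    """Return accuracy indices when score >= cutoff is treated as positive.

    Assumes at least one child with and one child without the condition.
    """
    pairs = list(zip(scores, has_condition))
    tp = sum(1 for s, d in pairs if s >= cutoff and d)
    fp = sum(1 for s, d in pairs if s >= cutoff and not d)
    fn = sum(1 for s, d in pairs if s < cutoff and d)
    tn = sum(1 for s, d in pairs if s < cutoff and not d)
    n = tp + fp + fn + tn

    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    ppv = tp / (tp + fp) if (tp + fp) else None   # undefined if no positives
    npv = tn / (tn + fn) if (tn + fn) else None   # undefined if no negatives

    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_obs = (tp + tn) / n
    p_exp = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (p_obs - p_exp) / (1 - p_exp) if p_exp != 1 else None

    return {"cutoff": cutoff, "sensitivity": sens, "specificity": spec,
            "ppv": ppv, "npv": npv, "kappa": kappa,
            "youden": sens + spec - 1}


def best_cutoff_by_youden(scores, has_condition):
    """Evaluate every observed score as a cutoff; keep the highest Youden."""
    candidates = [indices_at_cutoff(scores, has_condition, c)
                  for c in sorted(set(scores))]
    return max(candidates, key=lambda row: row["youden"])


# Made-up scores and reference diagnoses for illustration only.
toy_scores = [7, 9, 10, 12, 13, 14, 15, 18, 20]
toy_dx = [False, False, False, True, False, True, True, True, True]
toy_best = best_cutoff_by_youden(toy_scores, toy_dx)
print(toy_best["cutoff"], round(toy_best["youden"], 2))
```

In these toy data a lower cutoff gives perfect sensitivity at the cost of specificity, while the Youden-optimal cutoff trades one missed case for no false positives, which illustrates why several indices are inspected together.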

Once optimal cutoff scores were identified, the sensitivity, specificity, positive predictive value, and negative predictive values were calculated and compared. Specifically, differences in sensitivity and specificity between the two scoring procedures for the TAP were analyzed using McNemar’s test, while differences in PPV and NPV were analyzed using the method proposed by Moskowitz & Pepe (2006).
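McNemar's test suits this comparison because both scoring procedures are applied to the same children, so only the discordant pairs carry information. The sketch below shows the exact (binomial) form of the test for a paired comparison of sensitivity. The source does not state which variant of the test was used, so the exact form is an assumption made for illustration, and the counts and the function name `mcnemar_exact` are hypothetical.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant counts.

    b: cases classified positive by procedure A only
    c: cases classified positive by procedure B only
    Under the null hypothesis, b follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0                       # no discordant pairs, no evidence
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Made-up example: among children with ASD, six were detected by one
# scoring procedure only and none by the other procedure only.
toy_p = mcnemar_exact(0, 6)
print(toy_p)
```

To compare sensitivity, the discordant counts are taken among children with the condition; for specificity, the same calculation is applied among children without it.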

All statistical analyses were performed in R (R Core Team, 2020). The cocron package was used to compare the Cronbach’s alpha coefficients of the two scoring procedures for the TAP (Diedenhofen, 2016). The pROC package was used to conduct the ROC curve analyses (Robin et al., 2011). The cutpointr package was used to calculate the indices used to select the optimal cutoff points (Thiele & Hirschfeld, 2021). The DTComPair package was used to compare the sensitivity, specificity, PPV, and NPV of the optimal cutoff points of the TAP with dichotomous ratings and the TAP with Likert scoring (Stock & Hielscher, 2014).

Results

Preliminary Analyses

Following in-person assessment, 92% of toddlers were diagnosed with ASD. Diagnostic outcomes for children not meeting ASD criteria included speech delay (n = 3), global developmental delay (n = 3), unspecified developmental delays or behavioral concerns (n = 4), and typical development (n = 2).

No differences were observed between the TAP and TELE-STAT groups in terms of occurrence of diagnostic discrepancies (i.e., ASD vs. no ASD, χ² = 0.13; p > 0.05), clinician diagnostic certainty following tele-assessment (t = 1.16, p > 0.05), or total time spent on tele-assessment (t = 0.19, p > 0.05). Caregiver satisfaction also did not differ as a function of the tele-assessment tool used (all p-values > 0.05). See Table 2 for detailed caregiver satisfaction questions.

Diagnostic Accuracy of tele-assessment

Comparing clinician diagnostic impressions following tele-assessment and in-person assessment indicated diagnostic agreement for 92% of participants. Remote clinicians correctly identified 124 toddlers as having ASD (ASD-ASD group). Remote clinicians correctly identified nine toddlers as not having ASD (No ASD-No ASD group). Eight children diagnosed with ASD following in-person assessment were missed on tele-assessment (ASD-No ASD group). Three children were inaccurately identified as having ASD following tele-assessment (No ASD-ASD group). Group comparisons were used to investigate differences among these four groups in terms of child age, sex, and race; telehealth clinician diagnostic certainty; and scores on both telehealth and in-person assessments. Results of these comparisons are reported below.
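The four group counts above form a 2 × 2 table, and the reported agreement rate can be checked directly from them. The sketch below uses only the counts stated in the text and treats the in-person diagnosis as the reference standard. Only the 92% agreement figure is reported in the source; the sensitivity, specificity, PPV, and NPV printed here are arithmetic derived from those counts for illustration, not results reported by the authors.

```python
# Group counts as reported in the text (in-person diagnosis as reference).
tp = 124  # ASD-ASD: ASD identified by both assessments
tn = 9    # No ASD-No ASD: ASD ruled out by both assessments
fn = 8    # ASD-No ASD: ASD missed on tele-assessment
fp = 3    # No ASD-ASD: ASD identified on tele-assessment only

total = tp + tn + fn + fp
agreement = (tp + tn) / total      # proportion of matching impressions
sensitivity = tp / (tp + fn)       # derived, not reported in the source
specificity = tn / (tn + fp)       # derived, not reported in the source
ppv = tp / (tp + fp)               # derived, not reported in the source
npv = tn / (tn + fn)               # derived, not reported in the source

print(f"n = {total}, agreement = {agreement:.1%}")
print(f"sensitivity = {sensitivity:.1%}, specificity = {specificity:.1%}")
print(f"PPV = {ppv:.1%}, NPV = {npv:.1%}")
```

Because only 12 children did not receive an ASD diagnosis in person, the specificity and NPV derived this way rest on very small denominators and should be read with corresponding caution.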

Group comparison using the Brown-Forsythe test indicated that child age differed among groups (F*(3, 18.79) = 6.001, p = 0.005). Children in the No ASD-ASD group were significantly younger (m = 1.97, SD = 0.11) than children in all other groups (see Table 3). That is, the three children inaccurately identified as having ASD by tele-assessment were younger than all other children.

Fisher’s exact test was used to determine if there was a relationship between gender and diagnostic agreement status. There was not a significant relationship between gender and the four diagnostic agreement groups (p = 0.892).

Regarding diagnostic certainty, no differences were found for in-person clinicians across the four groups. However, telehealth clinician diagnostic certainty differed as a function of group (F*(3, 10.17) = 11.18, p = 0.001). Telehealth clinicians reported significantly greater diagnostic certainty for children in the ASD-ASD group. As seen in Table 3, telehealth clinicians reported lower diagnostic certainty for all other groups, including in cases of diagnostic disagreement (ASD-No ASD and No ASD-ASD groups), as well as for children for whom ASD was accurately ruled out by tele-assessment (No ASD-No ASD group).

Table 3 contains scores on tele-assessment and in-person assessment measures as a function of diagnostic outcome and diagnostic agreement status. Group comparisons using the Brown-Forsythe or Welch test revealed statistically significant group differences on the TAP (F*(2, 5.073) = 45.543, p < 0.001), TELE-STAT (F*(3, 2.680) = 11.111, p = 0.049), MSEL Early Learning Composite (W(3, 6.888) = 5.360, p = 0.032), VABS-3 Adaptive Behavior Composite (F*(3, 13.673) = 16.295, p < 0.001), and ADOS-2 severity scores (F*(3, 7.593) = 52.165, p < 0.001).

As expected, scores on tele-assessment instruments (i.e., TAP and TELE-STAT) were highest for children in the ASD-ASD group. Bonferroni post hoc analyses of all possible between-group pairwise comparisons (see Table 3) indicated that, as expected, children in the No ASD-No ASD group had statistically significantly lower scores on both the TAP and TELE-STAT, in comparison to the ASD-ASD group. Children diagnosed with ASD following in-person assessment who were not identified on tele-assessment (ASD-No ASD group) also had statistically significantly lower scores on both the TAP and TELE-STAT. Children inaccurately identified as having ASD on tele-assessment (No ASD-ASD group) had TELE-STAT scores that were not significantly different from those of the ASD-ASD group. This comparison (i.e., No ASD-ASD vs. ASD-ASD) could not be calculated for the TAP, as only one child was in the No ASD-ASD group.

Fisher’s exact test was used to determine whether children’s risk classifications, using recommended cut-scores on tele-assessment instruments (i.e., scores ≥ 12 on the TAP or > 2 on the STAT), were associated with diagnostic agreement status. For both tele-assessments, Fisher’s exact test indicated statistically significant differences. For the TAP, all children in the ASD-ASD group had scores ≥ 12. No children in the No ASD-No ASD group had scores ≥ 12. The one child inaccurately identified as having ASD in the TAP condition had a TAP score ≥ 12, and 50% (n = 2) of the children in the ASD-No ASD group (i.e., children whose ASD diagnosis was missed on tele-assessment) had a TAP score ≥ 12. For the STAT, 83% of children in the ASD-ASD group had STAT scores > 2. Half (n = 1) of the children in the No ASD-ASD group (i.e., those inaccurately identified as having ASD) had STAT scores > 2. No children in the No ASD-No ASD group or in the ASD-No ASD group (i.e., those with ASD missed on tele-assessment) had STAT scores exceeding the risk threshold.

Scores on in-person assessment also revealed between group differences on the ADOS-2, the MSEL, and the VABS-3 (see Table 3). Post hoc analysis indicated that the ASD-ASD group had higher scores on the ADOS-2 than all other groups. Children in the ASD-No ASD group had statistically significantly higher ADOS-2 scores than children without ASD (No ASD-No ASD). Children in the No ASD-No ASD group had significantly higher MSEL-ELC scores, compared to both children in the ASD-ASD group and children in the ASD-No ASD group. Children inaccurately identified as having ASD by tele-assessment (No ASD-ASD group) also had higher MSEL-ELC scores, compared to the ASD-ASD group. Regarding adaptive behavior, children in the No ASD-No ASD and No ASD-ASD groups had statistically significantly higher VABS-3 composite scores than children in the ASD-ASD group. Statistically significant differences did not emerge for MSEL composite scores.

Comparison of TAP Scoring Procedures

Cronbach’s alpha was used to measure the internal consistency of both TAP scoring procedures. The Cronbach’s alpha associated with the Likert scoring method was significantly higher than that associated with the dichotomous scoring method (t(71) = 1.921, p = 0.029). The Cronbach’s alpha for the TAP with dichotomous ratings was 0.795 (95% CI: 0.767–0.821). The Cronbach’s alpha for the TAP with Likert scoring was 0.834 (95% CI: 0.811–0.855).

Receiver operating characteristic (ROC) curves were used to examine the diagnostic accuracy of the TAP using dichotomous and Likert scoring procedures (see Fig. 1). The area under the curve (AUC) can range from 0 to 1, with a value of 0.50 indicating that prediction is at chance level. Analyses indicated an AUC of 0.923 (95% CI: 0.801–1.000) for the dichotomous scoring and an AUC of 0.949 (95% CI: 0.860–1.000) for the Likert scoring. Both were significantly different from chance level (p < 0.001). The AUCs were not significantly different (p = 0.175). Using Youden’s Index, the optimal cutoff point was found to be 15 for the dichotomous scoring method and 12 for the Likert scoring method. The sensitivity, specificity, positive predictive value, and negative predictive value associated with these cutoff points are presented in Table 4. The sensitivity of the Likert scoring method was significantly different from the sensitivity of the dichotomous rating method (p = 0.014). The negative predictive value (NPV) of the Likert scoring procedure was also significantly different from the NPV of the dichotomous scoring (p = 0.015).

Discussion

The present study describes outcomes from a clinical trial investigating tele-assessment of autism in toddlers using clinician-guided, caregiver-led procedures. Together, results of this study provide additional evidence supporting the use of tele-assessment in the identification of ASD in young children. Assessments combining caregiver-led play activities (using either the TAP or an experimental remote administration of the STAT) and clinical interviews with caregivers yielded high rates of diagnostic agreement with in-person evaluation, high levels of diagnostic certainty, and satisfaction from both families and clinicians.

Analysis of the TAP, now in broad clinical use (Wagner et al., 2022), found that a slight increase in the cut-off score more accurately identified increased likelihood of ASD. Preliminary use of the scale recommended interpretation of scores ≥ 11 as indicative of increased ASD likelihood. Analysis of the present data indicates that a score of ≥ 12 optimally identified children who went on to receive a diagnosis of ASD. This slightly more conservative interpretation of scores may help to reduce the chances of inaccurately identifying a child as having ASD via telehealth.

Across both groups, clinicians’ diagnostic impressions following tele-assessment agreed with the results of traditional, in-person assessment for 92% of participants. Of these, the vast majority (93%) were diagnosed with ASD. This likely reflects research recruitment pathways that rely heavily upon pre-screening of participants by referring community providers. Additional ongoing work is evaluating TAP functionality in a non-referred community sample.

As expected, participants identified as having ASD following both in-person and tele-assessment had high scores on tele-assessment instruments as well as the ADOS-2. Of note, diagnostic agreement and ADOS-2 scores did not differ as a function of pandemic precautions, including use of face masks, instituted part-way through the present study. Across the course of the study, eight participants were diagnosed with ASD following in-person assessment but not identified by tele-assessment. These participants had significantly lower scores on the TAP, TELE-STAT, and ADOS-2 and higher adaptive behavior skills relative to children with clearly identified ASD. The three participants incorrectly identified as having ASD on tele-assessment had TAP or TELE-STAT scores that approached or exceeded cut-offs for risk classification. These three children were significantly younger than children in all other groups. Though a small sample, this finding may call for additional caution when assessing very young children for ASD via tele-assessment. Additional research, in a larger and broader sample, will allow for ongoing investigation of the characteristics of children for whom tele-assessment does not provide an accurate or definitive diagnosis. Ultimately, it is not unexpected that some children, particularly those with more complex presentations, may be more accurately classified via in-person assessment.
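The overall agreement rate can be checked against the disagreement counts reported above. The sketch below is illustrative only. It assumes that all 144 enrolled children contribute to the denominator and that the eight missed cases and three incorrectly identified cases are the only disagreements. The function name is hypothetical.

```python
def agreement_rate(n_total: int, n_missed: int, n_false_positive: int) -> float:
    """Proportion of children whose tele-assessment and in-person
    diagnostic outcomes agree, given the two kinds of disagreement."""
    n_agree = n_total - n_missed - n_false_positive
    return n_agree / n_total


# Counts taken from this article: 144 children, 8 missed by
# tele-assessment, 3 incorrectly identified as having ASD.
rate = agreement_rate(n_total=144, n_missed=8, n_false_positive=3)
percent_agreement = round(rate * 100)
```

Under these assumptions, 133 of 144 outcomes agree, which rounds to the 92% reported.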

Clinicians’ diagnostic certainty was significantly lower for children without ASD and for children inaccurately identified as having ASD on tele-assessment. That clinicians reported uncertainty in cases of diagnostic disagreement may inform clinical practice, with clinical uncertainty helping to guide recommendations regarding children who may need further or in-person evaluation. This finding is also consistent with prior work from our team (Wagner et al., 2020) and may reflect a referral bias in which our sample is skewed toward children with high levels of concern related to developmental differences, including autism-specific concerns as well as significant developmental delays, attention concerns, or challenging behaviors. Past findings from a broader sample of clinicians, with samples including a higher number of children without ASD, indicate high levels of certainty when ruling out ASD on tele-assessment (Wagner et al., 2022).

Across tele-assessment tools, caregivers and providers reported broad satisfaction with tele-assessment procedures. Consistent with past work (Corona, Weitlauf, et al., 2021), caregivers reported that tele-assessment procedures were easy to understand, lasted the right amount of time, and often elicited the behaviors about which they were most concerned. Most caregivers reported that they would recommend the use of tele-assessment to others. Across this and prior work, a smaller number of caregivers have qualitatively reported challenges related to technology use, managing child behavior while interacting with the clinician, and concerns that clinicians may not have a complete picture of their child following tele-assessment alone (Corona, Weitlauf, et al., 2021). Tele-assessment also presents barriers for families without reliable access to technology or internet connections, many of whom may be from underrepresented groups or from rural communities. Ongoing work is investigating child and family characteristics that predict for whom tele-assessment works well and who may be best served by in-person assessment, as well as focusing on optimizing clinical procedures to address some of these concerns.

Finally, this study provides the most comprehensive opportunity to date for detailed analysis of the psychometric properties of the TAP, including comparison of Likert and dichotomous scoring procedures. This analysis suggests increasing the previous cut-off score of ≥ 11 to a score of ≥ 12 when using Likert scoring procedures. Additionally, these analyses support continued use of Likert scoring rather than dichotomous scoring. Though both scoring procedures performed well in distinguishing children with ASD from those without, Likert scoring procedures afforded significantly higher sensitivity and negative predictive value. In general, the increase in scoring options provided by Likert versus dichotomous procedures affords greater variability in scores, thereby strengthening the psychometric properties of the test (Finn et al., 2015).

Limitations and Future Directions

A primary limitation of the current study is that participants were referred due to high levels of concern for ASD, resulting in a sample heavily weighted toward children who received an autism diagnosis. The vast majority of participants (92%) were diagnosed with ASD following in-person assessment, presenting barriers to detailed analysis of how tele-assessment functions for children without ASD. The current data support clinicians’ ability to readily and confidently identify ASD via tele-assessment when it is present; these data allow for less commentary on how well tele-assessment differentiates children with ASD from children with other concerns. To address this important limitation, ongoing work is intentionally recruiting a broader sample, including children screened within community settings and children referred for a broader range of developmental concerns.

A second limitation of this study is the use of controlled clinical settings and materials. Within this study, families came into a clinical lab space, and tele-assessment was completed using technology and assessment materials provided by the research team. Tele-assessments were completed in a small room, with few distractions, with only the child and one or two caregivers present. At the onset of the trial, direct-to-home tele-assessment was thought to be years away and was not a routine clinical care option. The goal of this trial was to investigate the use of tele-assessment in a controlled way, with a longer-term goal of replication in less controlled home settings. The onset of the pandemic necessarily expedited the move from clinic-based to home-based tele-assessment, prior to investigation in home settings. The current study, then, does not account for the multitude of environmental factors present in home settings, including technology issues (e.g., devices, internet connectivity), people present (e.g., caregivers, other children), and other factors associated with the home setting (e.g., presence or absence of toys, distracting items or events) (Wagner et al., 2020). Ongoing work is studying the use of direct-to-home tele-assessment in comparison to in-person assessment and will provide a useful contrast to the present work, as well as greater generalizability to the current ways in which tele-assessment is used clinically.

Another possible limitation associated with study research procedures is that all families participated in tele-assessment immediately prior to in-person assessment, rather than in a counter-balanced order. This design was meant to reduce the likelihood of child fatigue following in-person procedures, as well as to first probe families’ initial impressions of tele-assessment without comparison to in-person assessment. However, it is possible that both toddlers and caregivers experienced increased fatigue during in-person assessment, which may have impacted toddler scores on direct assessment. All clinicians engaged in dialogue with families to query whether caregivers felt that in-person observations accurately captured their child’s usual behavior, and caregivers had the opportunity to share any behaviors that the clinician did not observe.

Finally, tele- and in-person assessments in this study both included autism-focused clinical interviews that were not standardized or coded across clinicians. Though the present research focused primarily on use and scoring of parent-administered, play-based assessment procedures, ongoing and future work may further probe information gained via clinical interviewing. Caregiver report is a vital part of any autism assessment, but limited work to date has discussed the relative impact of caregiver report and clinician observation in the context of tele-assessments. As clinical use of tele-assessment continues, it will be important to explore ways in which varying tele-assessment procedures are used to meet varying family and clinician needs.

Conclusion

In sum, this clinical trial represents one of the first controlled studies of caregiver-mediated tele-assessment for autism in toddlers. This work supports the claim that trained clinicians with expertise in the diagnosis of ASD can readily identify ASD characteristics via tele-assessment, and that this type of assessment is acceptable to both clinicians and families. This study further demonstrated that multiple, distinct caregiver-mediated assessment tools can facilitate remote ASD identification. In the context of broad uptake and use of tele-assessment, ongoing work is needed to optimize tele-assessment procedures, to ensure equitable access, and to understand for whom and in what situations tele-assessment can be most successful. Continued development and refinement of tele-assessment tools will also expand the reach of telemedicine in meeting the needs of various clinicians, families, and circumstances.

References

Berger, N. I., Wainer, A. L., Kuhn, J., Bearss, K., Attar, S., Carter, A. S., Ibanez, L. V., Ingersoll, B. R., Neiderman, H., Scott, S., & Stone, W. L. (2021). Characterizing available tools for synchronous virtual Assessment of Toddlers with suspected autism spectrum disorder: a brief report. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-021-04911-2.

Charter, R. A., & Feldt, L. S. (1996). Testing the Equality of two alpha coefficients. Perceptual and Motor Skills, 82(3), 763–768. https://doi.org/10.2466/pms.1996.82.3.763.

Corona, L., Hine, J., Nicholson, A., Stone, C., Swanson, A., Wade, J., Wagner, L., Weitlauf, A., & Warren, Z. (2020). TELE-ASD-PEDS: A Telemedicine-based ASD Evaluation Tool for Toddlers and Young Children. Vanderbilt University Medical Center. https://vkc.vumc.org/vkc/triad/tele-asd-peds

Corona, L. L., Wagner, L., Wade, J., Weitlauf, A. S., Hine, J., Nicholson, A., Stone, C., Vehorn, A., & Warren, Z. (2021). Toward Novel Tools for Autism Identification: fusing computational and clinical expertise. Journal of Autism and Developmental Disorders, 51(11), 4003–4012. https://doi.org/10.1007/s10803-020-04857-x.

Corona, L. L., Weitlauf, A. S., Hine, J., Berman, A., Miceli, A., Nicholson, A., Stone, C., Broderick, N., Francis, S., Juárez, A. P., Vehorn, A., Wagner, L., & Warren, Z. (2021). Parent perceptions of caregiver-mediated Telemedicine Tools for assessing autism risk in toddlers. Journal of Autism and Developmental Disorders, 51(2), 476–486. https://doi.org/10.1007/s10803-020-04554-9.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334. https://doi.org/10.1007/bf02310555.

Dahiya, A. V., Delucia, E., McDonnell, C. G., & Scarpa, A. (2021). A systematic review of technological approaches for autism spectrum disorder assessment in children: implications for the COVID-19 pandemic. Research in Developmental Disabilities, 109, 103852. https://doi.org/10.1016/j.ridd.2021.103852.

DeLong, E. R., DeLong, D. M., & Clarke-Pearson, D. L. (1988). Comparing the Areas under two or more correlated receiver operating characteristic curves: a Nonparametric Approach. Biometrics, 44(3). https://doi.org/10.2307/2531595.

Diedenhofen, B. (2016). Cocron: Statistical Comparisons of Two or more Alpha Coefficients (Version 1.0–1). Available from http://comparingcronbachalphas.org

Dow, D., Holbrook, A., Toolan, C., McDonald, N., Sterrett, K., Rosen, N., Kim, S. H., & Lord, C. (2021). The brief Observation of symptoms of Autism (BOSA): development of a New adapted Assessment measure for remote Telehealth Administration through COVID-19 and Beyond. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-021-05395-w.

Feldt, L. S. (1969). A test of the hypothesis that Cronbach’s alpha or Kuder-Richardson coefficient twenty is the same for two tests. Psychometrika, 34(3), 363–373. https://doi.org/10.1007/bf02289364.

Feldt, L. S. (1980). A test of the hypothesis that Cronbach’s alpha reliability coefficient is the same for two tests administered to the same sample. Psychometrika, 45(1), 99–105. https://doi.org/10.1007/bf02293600.

Finn, J. A., Ben-Porath, Y. S., & Tellegen, A. (2015). Dichotomous versus polytomous response options in psychopathology assessment: method or meaningful variance? Psychological Assessment, 27(1). https://doi.org/10.1037/pas0000044.

Jones, M. K., Zellner, M. A., Hobson, A. N., Levin, A., & Roberts, M. Y. (2022). Understanding caregiver satisfaction with a telediagnostic assessment of autism spectrum disorder. American Journal of Speech-Language Pathology, 31(2), 982–990. https://doi.org/10.1044/2021_AJSLP-21-00139.

Juárez, A. P., Weitlauf, A. S., Nicholson, A., Pasternak, A., Broderick, N., Hine, J., Stainbrook, J. A., & Warren, Z. (2018). Early Identification of ASD through Telemedicine: potential value for Underserved populations. Journal of Autism and Developmental Disorders, 48(8), 2601–2610. https://doi.org/10.1007/s10803-018-3524-y.

Lord, C., Risi, S., Lambrecht, L., Cook, E. H. Jr., Leventhal, B. L., Dilavore, P. C., Pickles, A., & Rutter, M. (2000). The Autism Diagnostic Observation schedule - generic: a standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders, 30, 205–223. https://doi.org/10.1023/a:1005592401947.

Lord, C., Rutter, M., DiLavore, P. C., Risi, S., Gotham, K., & Bishop, S. (2012). Autism diagnostic observation schedule, second edition (ADOS-2) manual (part 1): modules 1–4. Western Psychological Services.

Luyster, R., Gotham, K., Guthrie, W., Coffing, M., Petrak, R., Pierce, K., Bishop, S., Esler, A., Hus, V., Oti, R., Richler, J., Risi, S., & Lord, C. (2009). The Autism Diagnostic Observation Schedule—Toddler Module: a New Module of a standardized diagnostic measure for Autism Spectrum Disorders. Journal of Autism and Developmental Disorders, 39(9), 1305–1320. https://doi.org/10.1007/s10803-009-0746-z.

Maxwell, S. E., & Delaney, H. D. (2003). Designing Experiments and Analyzing Data. https://doi.org/10.4324/9781410609243

Moskowitz, C. S., & Pepe, M. S. (2006). Comparing the predictive values of diagnostic tests: sample size and analysis for paired study designs. Clinical Trials, 3(3), 272–279. https://doi.org/10.1191/1740774506cn147oa.

Mullen, E. M. (1995). Mullen Scales of early learning. American Guidance Service.

R Core Team (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. URL https://www.R-project.org/.

Reese, R. M., Jamison, R., Wendland, M., Fleming, K., Braun, M. J., Schuttler, J. O., & Turek, J. (2013). Evaluating interactive videoconferencing for assessing symptoms of Autism. Telemedicine and e-Health, 19(9), 671–677. https://doi.org/10.1089/tmj.2012.0312.

Reese, R. M., Jamison, T. R., Braun, M., Wendland, M., Black, W., Hadorn, M., Nelson, E. L., & Prather, C. (2015). Brief report: use of interactive television in identifying autism in Young Children: Methodology and Preliminary Data. Journal of Autism and Developmental Disorders, 45(5), 1474–1482. https://doi.org/10.1007/s10803-014-2269-5.

Robin, X., Turck, N., Hainard, A., Tiberti, N., Lisacek, F., Sanchez, J. C., & Müller, M. (2011). pROC: An open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinformatics, 12. https://doi.org/10.1186/1471-2105-12-77

Sparrow, S. D., Cicchetti, D. V., & Saulnier, C. A. (2016). Vineland Adaptive Behavior Scales, Third Edition. Pearson.

Stainbrook, J. A., Weitlauf, A. S., Juárez, A. P., Taylor, J. L., Hine, J., Broderick, N., Nicholson, A., & Warren, Z. (2019). Measuring the service system impact of a novel telediagnostic service program for young children with autism spectrum disorder. Autism, 23(4), 1051–1056. https://doi.org/10.1177/1362361318787797.

Stavropoulos, K. K. M., Bolourian, Y., & Blacher, J. (2022). A scoping review of telehealth diagnosis of autism spectrum disorder. Plos One, 17(2), e0263062. https://doi.org/10.1371/journal.pone.0263062.

Stavropoulos, K. K. M., Heyman, M., Salinas, G., Baker, E., & Blacher, J. (2022). Exploring telehealth during COVID for assessing autism spectrum disorder in a diverse sample. Psychology in the Schools. https://doi.org/10.1002/pits.22672.

Stock, C., & Hielscher, T. (2014). DTComPair: comparison of binary diagnostic tests in a paired study design. R package version 1.0.3. URL: http://CRAN.R-project.org/package=DTComPair

Stone, W. L., Coonrod, E. E., & Ousley, O. Y. (2000). Brief report: Screening Tool for Autism in Two-Year-Olds(STAT): Development and Preliminary Data. Journal of Autism and Developmental Disorders, 30(6), 607–612. https://doi.org/10.1023/a:1005647629002.

Stone, W. L., Coonrod, E. E., Turner, L. M., & Pozdol, S. L. (2004). Psychometric Properties of the STAT for early autism screening. Journal of Autism and Developmental Disorders, 34(6), 691–701. https://doi.org/10.1007/s10803-004-5289-8.

Stone, W. L., McMahon, C. R., & Henderson, L. M. (2008). Use of the Screening Tool for Autism in Two-Year-Olds (STAT) for children under 24 months. Autism, 12(5), 557–573. https://doi.org/10.1177/1362361308096403.

Thiele, C., & Hirschfeld, G. (2021). Cutpointr: improved estimation and validation of optimal cutpoints in R. Journal of Statistical Software, 98(11). https://doi.org/10.18637/jss.v098.i11.

Tomarken, A. J., & Serlin, R. C. (1986). Comparison of anova Alternatives under Variance Heterogeneity and specific noncentrality structures. Psychological Bulletin, 99(1). https://doi.org/10.1037/0033-2909.99.1.90.

Wagner, L., Corona, L. L., Weitlauf, A. S., Marsh, K. L., Berman, A. F., Broderick, N. A., Francis, S., Hine, J., Nicholson, A., Stone, C., & Warren, Z. (2020). Use of the TELE-ASD-PEDS for autism evaluations in response to COVID-19: preliminary outcomes and clinician acceptability. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-020-04767-y.

Wagner, L., Stone, C., Wade, J., Corona, L., Hine, J., Nicholson, A., Swanson, A., Vehorn, A., Weitlauf, A., & Warren, J. (2021). TELE-ASD-PEDS user’s Manual. Vanderbilt Kennedy Center Treatment and Research Institute for Autism Spectrum Disorders.

Wagner, L., Weitlauf, A. S., Hine, J., Corona, L. L., Berman, A. F., Nicholson, A., Allen, W., Black, M., & Warren, Z. (2022). Transitioning to Telemedicine during COVID-19: impact on perceptions and use of Telemedicine Procedures for the diagnosis of Autism in Toddlers. Journal of Autism and Developmental Disorders, 52(5), 2247–2257. https://doi.org/10.1007/s10803-021-05112-7.

Zhou, X. H., Obuchowski, N. A., & McClish, D. K. (2011). Measures of diagnostic accuracy. In Statistical Methods in Diagnostic Medicine (pp. 13–55). Wiley. https://doi.org/10.1002/9780470906514

Zwaigenbaum, L., & Warren, Z. (2020). Commentary: embracing innovation is necessary to improve assessment and care for individuals with ASD: a reflection on Kanne and Bishop (2020). Journal of Child Psychology and Psychiatry. https://doi.org/10.1111/jcpp.13271.

Funding

The study was supported by funding from NIH/NIMH (R21MH118539), the Eunice Kennedy Shriver National Institute of Child Health and Human Development (U54 HD08321), and the Vanderbilt Institute for Clinical and Translational Research. The Vanderbilt Institute for Clinical and Translational Research (VICTR) is funded by the National Center for Advancing Translational Sciences (NCATS) Clinical Translational Science Award (CTSA) Program, Award Number 5UL1TR002243-03.

Author information

Contributions

The first draft of the manuscript was written by Laura Corona. Madison Hooper completed substantial data analysis and reviewed multiple drafts of the manuscript. Liliana Wagner and Amy Weitlauf reviewed and revised the manuscript. All authors contributed to study design and/or data collection. All authors reviewed the final manuscript.

Data included in this manuscript can be accessed in the NIMH Data Archive. Dataset identifiers: https://doi.org/10.15154/1528433.

Ethics declarations

Conflict of Interest

Authors Corona, Wagner, Weitlauf, Hine, Nicholson, Stone, and Warren are authors of the TAP. They do not receive compensation related to use of this tool.

Ethics Approval

All procedures performed in this study were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. This research was approved by the Institutional Review Board at Vanderbilt University Medical Center.

About this article

Cite this article

Corona, L.L., Wagner, L., Hooper, M. et al. A Randomized Trial of the Accuracy of Novel Telehealth Instruments for the Assessment of Autism in Toddlers. J Autism Dev Disord 54, 2069–2080 (2024). https://doi.org/10.1007/s10803-023-05908-9

DOI: https://doi.org/10.1007/s10803-023-05908-9