Abstract

Lecture capture tends to polarise the views of academic staff. Some view it as encouraging non-attendance at lectures. Others view it as a valuable adjunct, allowing students to revisit the lecture experience and providing opportunities for clarification and repetition of key points. However, data supporting either of these stances remain scarce. Irrespective of these views, a more pertinent question concerns the impact of lecture attendance and the use of recordings on student achievement. Evidence on this question remains unclear because of methodological issues, inconsistent results, and a lack of differentiation of students by year of study. This paper investigated the impact of attendance and lecture recording use on student attainment across four years of an undergraduate programme. For first year students, attendance and recording use were positive predictors of performance. For weaker students, supplementary recording use was beneficial, but only better students’ use of the recordings helped overcome the impact of low attendance. For second year students, attendance and recording use were positively correlated with, but no longer predictive of, achievement. There was no relationship for honours year students. We found no compelling evidence for a negative effect of recording use, or that attendance and recording use were related. We suggest focusing on improving lecture attendance through monitoring whilst also providing recordings for supplementary use, particularly in first year. Finally, our findings highlight the need to consider third variables such as year of study and first language when conducting and comparing lecture capture research.

Introduction

Traditionally, lecture capture software has been used to deliver content for online courses, but it is now commonplace for campus-based programmes to also record live lectures. Anecdotally, many lecturers express reservations about recording, arguing that it will lower attendance (Scutter et al. 2010); if true, this would be a valid cause for concern. In a meta-analytic review, Credé et al. (2010) found that attendance had a strong relationship with final course grade and was a better predictor of academic performance than factors such as standardised test scores or study skills. However, technological advances mean that lecture capture may provide an alternative to attending the live lecture, and so we argue that the key question from a learner perspective is not whether providing recordings affects attendance but whether the provision of recordings influences student achievement. Previous findings on this question have been mixed, potentially due to methodological problems, and no study has yet addressed whether students in different years of study use lecture recordings differently, or whether the impact of recordings on achievement changes over the course of a degree.

Lecture recording and achievement

Students report numerous benefits of lecture recordings. Scutter et al. (2010) identified four main themes in interviews about the use of lecture podcasts. First, recordings allowed clarification of material presented in lectures. Second, recordings were particularly useful for students with English as a second language. This complements previous research suggesting that students who identify as having a physical or learning disability view recorded lectures as an essential part of their learning, because they can rewind and pause the recording whilst taking notes (Eisen et al. 2015; Gysbers et al. 2011; Williams 2006; Williams et al. 2012). Third, recordings were used for revision, supplementing written materials and supporting comprehension of difficult topics. Finally, the provision of recordings allowed some students to use them as a direct substitute for the live lecture.

Some researchers suggest that recordings have a negative effect on achievement. Varao-Sousa and Kingstone (2015) found that students performed better after attending a live lecture than after viewing similar content via a recording. Inglis et al. (2011) found a negative correlation between the frequency of accessing online recordings and final grade. Their data indicated that weaker students, as assessed through overall exam performance, might access recordings multiple times in an attempt to compensate for difficulty understanding the material. Thus, rather than access to recordings impairing performance, weaker students may simply be more likely to access recordings than stronger students. Drouin (2014) also reported that students who had access to recordings had significantly lower grades. However, when non-participators (students who neither attended lectures nor watched the recordings) were excluded, there were no differences in performance. Drouin argues that whilst the use of recordings does not itself affect performance negatively, it may increase non-participation in certain groups (i.e. students who do not attend lectures because they know they are recorded, but then fail to watch the recordings).

Other evidence suggests a more nuanced view. Williams et al. (2012) found that students who used recordings as a substitute for attending live lectures received lower final grades. However, those students who supplemented live lecture attendance with additional recording use performed better than those who only attended live lectures, a pattern also noted by von Konsky et al. (2009) and Bos et al. (2016). Wieling and Hofman (2010) additionally considered the interaction between attendance and viewing online recordings and found that whilst both attendance and watching recordings were positive predictors of final grade, the effect of viewing lecture recordings was greater for those students who had lower lecture attendance.

Third variable student factors and recording use

A student’s approach to learning can encompass preferences for how material is accessed (visual, auditory, or both) and general approaches to learning (active engagement or reflective processing), and may be indicative of their current stage of learning development (Kolb 1976; Marriott 2002). It has been suggested that learning preferences can change with experience, and that a student’s approach may be influenced by individual characteristics (Marriott 2002). Learning preferences differ across students according to educational maturity (Knowles 1980) and year of study (Marriott 2002). Because these include preferences about the mode in which material is presented, the usefulness of lecture recordings may differ for students at different stages of study.

A proportion of students choose to study abroad, which can require them to study in a non-native language (Rientíes et al. 2016). Studying abroad does not appear to adversely affect academic performance, with some evidence suggesting that non-native students perform as well as, or better than, home students (Rientíes et al. 2016). However, there is some evidence that non-native students use lecture recordings to a greater extent than native students (Pearce and Scutter 2010; Taplin et al. 2014), with stated reasons including the ability to pause a recording to look up words. Revell (2014) suggests that lecture recordings could therefore help students who are struggling with a language barrier to improve their performance when studying in a foreign language. However, there is no evidence of a direct link between recording use and improved performance in non-native students.

Finally, the level of course content and the associated learning requirements may also influence the use of lecture recordings. Phillips et al. (2007) argue that first year students may be less likely to attend lectures or access recordings, perhaps due to reduced educational maturity, the introductory nature of course content, or both. Demetriadis and Pombortsis (2007) also highlight differences in teaching between introductory courses, which centre on knowledge acquisition and so would directly benefit from additional exposure to lecture content, and advanced, final year courses, which are dominated by higher order thinking skills, problem-solving, and applied knowledge, and where lecture recordings may be less important. This suggests that the impact of lecture recording use may vary across year groups, but the relationship between lecture attendance, use of recordings, and achievement according to year of study has not yet been directly assessed.

Methodological concerns

In addition to third variable student and course-based factors, several methodological issues may contribute to the inconsistent findings on lecture recordings. The first concerns how lecture attendance is measured. Some studies administered self-reports at the end of term asking students to estimate how many lectures they had attended (e.g. Franklin et al. 2011; Leadbeater et al. 2013; Williams et al. 2012), whilst Larkin (2010) used headcounts in lectures and therefore could not align individual attendance with recording use. Others have measured attendance using sign-in sheets or ID card swiping (e.g. Inglis et al. 2011; Jensen 2011; Phillips et al. 2011; Traphagan et al. 2010). Methods for collecting recording data are similarly inconsistent: some studies collected self-reports of recording use (e.g. Gorissen et al. 2012; Larkin 2010; Leadbeater et al. 2013), which are subject to the same inaccuracies, whilst others retrieved data from the virtual learning environment (VLE) (e.g. Elliott and Neal 2016; Inglis et al. 2011; Phillips et al. 2011; Traphagan et al. 2010; Williams et al. 2012).

An additional issue affecting several studies concerns the treatment of continuous variables as categorical (Senn 2003). For example, Franklin et al. (2011) asked students to rate whether they used recordings regularly, not regularly, or not at all. Williams et al.’s (2012) self-report measure of attendance asked students whether they had attended 0–6, 7–13, 14–18, 19–21, 22–24, or 25–26 lectures. Given that the impact of recording use on performance appears to be nuanced, these practices may mask small effects and reduce measurement sensitivity. This problem is further compounded by the use of artificial measures of achievement with little capacity to discriminate between outcomes; for example, Varao-Sousa and Kingstone (2015) used a six-item ‘true or false’ questionnaire to measure performance rather than the final course grade. Such a measure differs, both in tone and in substance, from the summative assessments that constitute a student’s final grade, which limits the usefulness of the findings.

These methodological issues do not appear to produce any easily identifiable pattern of results; for example, it does not seem to be the case that self-report methods tend to produce positive results and objective measures negative ones. Yet, given the inconsistent literature, the issue is worth highlighting. The number of third variable factors involved in lecture capture research makes it almost impossible to achieve standardisation and precise control over all variables; however, we should strive to ensure that the factors under our control as researchers are as robust and reliable as possible.

The current project

The current cross-sectional study aimed to address the above issues. First, attendance data were collected in-class rather than as self-reports and treated as a continuous variable. Second, the recording data were collected using the VLE logs that measure recording usage in minutes and seconds and again treated as a continuous variable. To enhance ecological validity and sensitivity, the main outcome measure of achievement was students’ final grade for each course’s exam. Additionally, we included GPA and native speaker status as predictors, given previous findings that these may be related to use of recordings. Finally, to investigate the effect of maturity on the use and effect of recordings, our project is cross-sectional and reports data collected from students in four years of a Scottish undergraduate psychology degree programme for one semester.

We predicted that attendance and recording use would be significant positive predictors of performance. Additionally, we predicted that there would be an interaction between attendance and recording use, with students who had high recording use but low attendance (i.e. using the recordings as a substitute) performing worse than those who used the recordings as a supplement. Given that there were no previous studies comparing the effects for students in different years of study, our predictions were more exploratory. We expected that the effects of attendance would be larger for first year students based on their stage of educational maturity and the introductory nature of the course.

Method

Participants

Prior to teaching across all years of this programme, ethical approval for this study was obtained from the School of Psychology’s Ethics Committee, and all staff and students involved gave informed consent. Across all four years of the undergraduate degree, 347 University of Aberdeen students consented to take part in the study in the first semester of the academic year 2015/2016. The following participants were excluded: nine who did not sit the final exam for medical reasons; three who failed to turn up for the final exam; 14 who left the course before the end of the semester; five who were taking the first year course but were in their second or third year of university (on the basis of previous findings that attendance and recording usage differ with increasing maturity); two who had the same name, because the media server did not provide enough information to differentiate their recording use; one whose GPA could not be calculated from the information held on student records; and one whose attendance data were not collected because of an administrative error. Our final sample size offers a good level of prediction for the number of predictor variables according to the criteria outlined by Knofczynski and Mundfrom (2008) (see Table 1 for demographic information).

Materials and procedure

1st year

The course was composed of three sub-courses with three one-hour lectures per week running for 11 weeks with content from three different fields of psychology, with each sub-course taught by different lecturers. Sub-course 1 did not record live lectures but instead provided recordings of summaries of the learning outcomes for each lecture. Sub-courses 2 and 3 provided full recordings for all lectures. The final exam for the course consisted of 100 multiple-choice questions taken from all three sub-courses. This mark was then converted to award an overall grade on a 22-point Common Grading Scale (CGS).

2nd year

The course was composed of three sub-courses with three one-hour lectures per week running for 11 weeks with content from three different fields of psychology, with each sub-course taught by different lecturers. One lecture in each sub-course was devoted to essay writing techniques and was not included as the content did not relate to the final exam. Two of the sub-courses provided full recordings for all lectures. The third sub-course used a flipped classroom approach and was therefore not included. The final exam for the course consisted of an essay question for each sub-course and students were awarded an overall CGS grade.

3rd and 4th years

At honours level (see footnote 1), each field of psychology (e.g. developmental) has its own self-contained course with a separate exam, in which students typically answer two essay questions on the topic and are awarded an overall CGS grade. In both 3rd and 4th years, two courses were included in the study; however, due to the structure of the degree, not all students take all courses. This paper reports the results from one third year course and one fourth year course, both of which involved a weekly one-hour lecture for 11 weeks. The courses with the largest number of participants were chosen for inclusion. The pattern of results for the additional courses was similar to that for the courses included in the paper and can be viewed online at https://osf.io/39ey8/.

Attendance data

Attendance sheets were distributed in class for each lecture by student research assistants. All students enrolled on the course were listed on the sheets and were informed that they had until week six of teaching to provide their consent. Attendance data from any student who had not provided consent were destroyed after this deadline. For 1st and 2nd years, average attendance was calculated for each sub-course as well as for the course overall.
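The attendance measure can be made concrete with a short sketch. Assuming (hypothetically) that each sheet is stored as the set of consenting students who signed it, a student's attendance for a sub-course is the percentage of lectures with data at which they signed in. Lectures without data are left out of the lists rather than counted as absences; that choice, the data layout, and pooling all lectures for the overall figure are assumptions, not documented details of the study.

```python
from typing import Dict, List, Set


def attendance_percentages(
    sheets: Dict[str, List[Set[str]]], student: str
) -> Dict[str, float]:
    """Percentage of lectures attended, per sub-course and overall.

    `sheets` maps a sub-course name to one set of signed-in student IDs per
    lecture for which attendance data exist (a hypothetical layout).
    """
    result: Dict[str, float] = {}
    attended_total = 0
    lectures_total = 0
    for sub_course, lectures in sheets.items():
        # True counts as 1, so this tallies the lectures the student signed.
        attended = sum(student in signed for signed in lectures)
        result[sub_course] = 100.0 * attended / len(lectures)
        attended_total += attended
        lectures_total += len(lectures)
    # Overall figure pools every lecture (assumption: the study may instead
    # have averaged the sub-course percentages).
    result["overall"] = 100.0 * attended_total / lectures_total
    return result


# Hypothetical sheets: sub-course "sub1" has 4 lectures, "sub2" has 2.
sheets = {
    "sub1": [{"s1", "s2"}, {"s1"}, {"s2"}, {"s1", "s2"}],
    "sub2": [{"s1"}, set()],
}
print(attendance_percentages(sheets, "s1"))
```

When sub-courses contribute different numbers of lectures, pooling and averaging sub-course percentages give different overall values, so the choice is worth stating explicitly.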

Recording data

Recording data for the courses that provided live lecture recordings were downloaded from the Kaltura (Kaltura Inc.) plug-in for Blackboard Learn (Blackboard Inc.), and total recording access in minutes was calculated for each participant. For 1st and 2nd years, recording access was calculated for each lecture sub-course as well as for the course overall.
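Summing a viewing log into per-participant totals is straightforward. The sketch below assumes a hypothetical export in which each row gives a student ID, a sub-course, and seconds viewed; this is not the actual Kaltura or Blackboard export format, which the paper does not describe. Every consenting student starts at 0 min, so students who never opened a recording stay in the data rather than silently dropping out.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical export row: (student_id, sub_course, seconds_viewed).
Row = Tuple[str, str, float]


def recording_minutes(
    rows: Iterable[Row], students: Iterable[str]
) -> Dict[str, Dict[str, float]]:
    """Total minutes of recording access per student, by sub-course and overall."""
    # Initialise every consenting student at zero so non-viewers are kept.
    totals: Dict[str, Dict[str, float]] = {s: {"overall": 0.0} for s in students}
    for student, sub_course, seconds in rows:
        if student not in totals:  # e.g. a student who did not consent
            continue
        minutes = seconds / 60.0
        by_course = totals[student]
        by_course[sub_course] = by_course.get(sub_course, 0.0) + minutes
        by_course["overall"] += minutes
    return totals


rows = [("s1", "sub2", 1800), ("s1", "sub3", 600), ("s2", "sub2", 90), ("x9", "sub2", 300)]
print(recording_minutes(rows, ["s1", "s2", "s3"]))
```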

Demographic and GPA data

All students enrolled on the course were e-mailed a questionnaire that contained the consent form and several demographic questions: age, gender, nationality, native English speaker (yes/no), degree intention (psychology major/other). GPA was calculated using data from the student record system. For first year students, GPA was calculated by taking the average grade for the semester, excluding the grade for the course involved in the study. For 2nd, 3rd, and 4th year students, GPA was calculated by taking the average grade each student obtained for all courses completed since 2013 (the institutional grading system was changed in 2013/2014 and therefore grades from 2012/2013 were on a different scale).
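The two GPA rules can be expressed as a small function. The record layout, the numeric coding of CGS grades, and the reading of "completed" as meaning sessions before the current one for later-year students are assumptions made for illustration; the paper states only the rules themselves. Excluding the study course (first years) or the current session (later years) keeps the predictor from containing the exam grade it is meant to predict.

```python
from statistics import mean
from typing import List, NamedTuple


class CourseResult(NamedTuple):
    course: str          # hypothetical course code
    session_start: int   # e.g. 2015 for the 2015/2016 session
    grade: float         # CGS grade, assumed already coded numerically


def gpa(results: List[CourseResult], study_year: int, study_course: str,
        current_session: int = 2015) -> float:
    """Hypothetical GPA rule following the description in the text."""
    if study_year == 1:
        # First years: grades from the current semester (the only results on
        # record), excluding the course under study.
        grades = [r.grade for r in results
                  if r.session_start == current_session and r.course != study_course]
    else:
        # Later years: completed courses from 2013/2014 onwards (the grading
        # scale changed then); treating the current session as not yet
        # completed is an assumption.
        grades = [r.grade for r in results
                  if 2013 <= r.session_start < current_session]
    return mean(grades)  # raises statistics.StatisticsError if none qualify


first = [CourseResult("PS1", 2015, 15.0), CourseResult("BI1", 2015, 12.0),
         CourseResult("CH1", 2015, 18.0)]
print(gpa(first, study_year=1, study_course="PS1"))
```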

Results

All analyses were conducted using R 3.3.1 (R Core Team 2016) and RStudio 1.0.44 (RStudio Team 2016). For clarity, and because of highly unequal sample sizes and demographic confounds between years, the results are presented separately for each year of study. Because of the smaller sample sizes for 3rd and 4th year students, these data were combined into an honours level analysis. The pattern of results was identical when these groups were analysed separately and can be found online (https://osf.io/39ey8/).

First year results

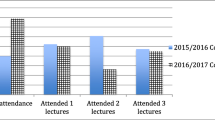

In total, attendance data were collected for 30/33 lectures. Data were not collected for one lecture because consent from the lecturer was not obtained in time, one lecture was cancelled due to AV problems, and data from one lecture were lost due to a corrupted data file. Recording data were obtained for 21/22 of the recorded lectures, the missing lecture again reflecting a failure to obtain consent in time (see Table 2 and Fig. 1 for descriptive information; plots were created using the yarrr package, Phillips 2017). Recording data from one student were excluded: Kaltura logged 30 hours and 54 minutes of access for a single lecture, which we assume was a technical error, as this would equate to watching the same lecture approximately 32 times.
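The exclusion rests on simple arithmetic: 30 h 54 min is 1,854 min, so roughly 32 viewings implies a recording just under an hour long. If such screening were automated, it might look like the sketch below; the data layout, the 58-min recording length, and the cap of 10 full viewings per lecture are hypothetical choices, not a criterion reported in the study.

```python
from typing import Dict, List, Tuple


def to_minutes(hours: int, minutes: int) -> int:
    return hours * 60 + minutes


def flag_implausible(
    access: Dict[Tuple[str, str], float],   # (student, lecture) -> minutes logged
    lecture_minutes: Dict[str, float],      # lecture -> recording length in minutes
    max_viewings: float = 10.0,             # hypothetical screening threshold
) -> List[Tuple[str, str]]:
    """Return (student, lecture) pairs logged as more than max_viewings full viewings."""
    return [
        key for key, minutes in access.items()
        if minutes / lecture_minutes[key[1]] > max_viewings
    ]


logged = to_minutes(30, 54)
print(logged, logged / 32)  # 1854 57.9375
access = {("s1", "L5"): float(logged), ("s2", "L5"): 120.0}
# Hypothetical ~58-min recording length for lecture "L5".
print(flag_implausible(access, {"L5": 58.0}))  # [('s1', 'L5')]
```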

Notably, most first year students made little use of the recordings: the median viewing time was just over 30 min out of approximately 20 h of recorded material. A one-way repeated-measures ANOVA with Greenhouse-Geisser correction found a significant main effect of sub-course on attendance (F (1.66, 240.17) = 23.70, p < .001, η² = .07). Planned comparisons using Bonferroni correction found that attendance in sub-course 1, which did not provide full recordings, was significantly higher than in sub-course 2 (p < .001) and sub-course 3 (p < .001). There was no significant difference in attendance between sub-courses 2 and 3 (p = .29). A paired-samples t test found no significant difference in recording use between sub-course 2 and sub-course 3 (t (152) = .69, p = .49, d = .06).

Welch t tests found that non-native speakers used the recordings significantly more (M = 294.11, SD = 361.50) than native speakers (M = 177.62, SD = 304.89, t (128.72) = 2.12, p = .036, d = .35); however, there was no difference in attendance (M1 = 62.21, SD1 = 18.31, M2 = 59.26, SD2 = 16.24, t (135.2) = −.805, p = .422, d = .13). There was no significant difference in attendance (M1 = 61.08, SD1 = 16.49, M2 = 60.73, SD2 = 18.25, t (124.49) = .12, p = .905, d = .02) or recording use (M1 = 254.21, SD1 = 355.64, M2 = 191.09, SD2 = 300.64, t (144.01) = 1.18, p = .239, d = .19) between those registered on a psychology degree and those taking the course for extra credit.
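Welch's test explains the fractional degrees of freedom reported throughout (e.g. t (128.72)): it does not assume equal group variances, and its degrees of freedom are estimated from the data by the Welch-Satterthwaite formula. The sketch computes both from raw scores, together with Cohen's d. The paper does not say which d formula was used, so the pooled-SD version here is an assumption, and the data are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    vx = variance(x) / len(x)   # squared standard error of mean(x)
    vy = variance(y) / len(y)
    t = (mean(x) - mean(y)) / sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df


def cohens_d(x: Sequence[float], y: Sequence[float]) -> float:
    """Cohen's d using the pooled SD (one common variant; assumed here)."""
    nx, ny = len(x), len(y)
    pooled = sqrt(((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2))
    return (mean(x) - mean(y)) / pooled


x = [1.0, 2.0, 3.0, 4.0, 5.0]    # hypothetical data
y = [2.0, 4.0, 6.0, 8.0, 10.0]
print(welch_t(x, y), cohens_d(x, y))
```

R's `t.test` performs the Welch test by default (`var.equal = FALSE`), and `scipy.stats.ttest_ind(x, y, equal_var=False)` is the Python equivalent that also returns a p-value.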

Multiple regression was conducted on the outcome variable, final exam mark (see footnote 2). For all analyses in the paper, we aimed to construct the simplest possible model using the following procedure. First, a regression model containing native speaker status and GPA as predictors was constructed. For first and second year students, degree intention was also included, as our institutional data have consistently shown that students who are psychology majors do better than those taking the course for extra credit. A second model was then fitted retaining only the significant predictors (hereafter referred to as the demographic model). We then added mean attendance and recording minutes viewed as predictor variables, together with the two- and three-way interaction terms between recording use, attendance, and GPA. The two models (with and without attendance and recording data) were then compared using ANOVA. Regression diagnostics were performed using the gvlma package (Pena and Slate 2014), and q-q plots were produced using the car package (Fox and Weisberg 2011). All continuous variables were centred at the mean.
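Centring and the interaction terms can be illustrated as follows. With all continuous predictors centred, each main-effect coefficient describes the effect of that variable for a student at the mean of the others, which keeps the main effects interpretable alongside the product terms. The sketch assumes 0/1 coding for native speaker status and degree intention and uses hypothetical values. With all three demographic predictors retained, it yields nine predictors, matching the numerator degrees of freedom of the first year full model, F (9, 143).

```python
from statistics import mean
from typing import Dict, List


def center(values: List[float]) -> List[float]:
    """Subtract the sample mean so that 0 represents an average student."""
    m = mean(values)
    return [v - m for v in values]


def design_rows(gpa: List[float], attendance: List[float], recording: List[float],
                native: List[int], psych_major: List[int]) -> List[Dict[str, float]]:
    """Predictors for the full model: centred continuous variables, 0/1
    demographic codes (assumed coding), and every two- and three-way
    product of attendance, recording use and GPA."""
    g, a, r = center(gpa), center(attendance), center(recording)
    rows = []
    for i in range(len(g)):
        rows.append({
            "gpa": g[i], "native": native[i], "psych": psych_major[i],
            "att": a[i], "rec": r[i],
            "att:rec": a[i] * r[i],
            "att:gpa": a[i] * g[i],
            "rec:gpa": r[i] * g[i],
            "att:rec:gpa": a[i] * r[i] * g[i],
        })
    return rows


rows = design_rows([2.0, 4.0], [50.0, 70.0], [0.0, 100.0], [1, 0], [1, 1])
print(len(rows[0]), rows[0]["att:rec:gpa"])  # 9 -500.0
```

In R, which the study used, a formula term such as `gpa_c * att_c * rec_c` expands to the same three main effects and four interaction terms; the variable names here are hypothetical.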

For first year students, higher exam marks were positively correlated with GPA and attendance (see Table 3). There was no correlation between attendance and recording use.

GPA, native speaker status, and degree intention were all significant predictors of achievement (see Table 4). Both models (demographic and demographic + recording and attendance) significantly predicted final exam mark (model 1, F (3, 149) = 46.63, p < .001, adjusted R² = .47; model 2, F (9, 143) = 18.69, p < .001, adjusted R² = .51; significant F change, p = .001). In the final model, non-native speakers achieved higher marks than native speakers, and students registered for a psychology degree achieved higher marks than those taking the course as a second or third subject choice. Both attendance and recording use were positive predictors of exam mark.
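The F change statistic used to compare the nested models can be written directly in terms of R². The sketch below takes unadjusted R² values, which the paper does not report (it reports adjusted R²), so the R² inputs are hypothetical; only the sample size and predictor counts are taken from the first year degrees of freedom, F (3, 149) and F (9, 143), which imply n = 153 and six added terms.

```python
from typing import Tuple


def f_change(r2_reduced: float, r2_full: float,
             k_reduced: int, k_full: int, n: int) -> Tuple[float, int, int]:
    """F test for the increase in R-squared when terms are added to a nested OLS model.

    r2_* are unadjusted R-squared values; k_* count predictors excluding the intercept.
    """
    df1 = k_full - k_reduced        # number of added terms
    df2 = n - k_full - 1            # residual df of the full model
    f = ((r2_full - r2_reduced) / df1) / ((1.0 - r2_full) / df2)
    return f, df1, df2


# Hypothetical R-squared values; n and k mirror the first year models.
f, df1, df2 = f_change(0.40, 0.50, 3, 9, 153)
print(round(f, 3), df1, df2)  # 4.767 6 143
```

A p-value then follows from the F distribution, e.g. `scipy.stats.f.sf(f, df1, df2)`; in R, `anova(model1, model2)` on two nested `lm` fits reports the same test.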

There was also a significant three-way interaction between attendance, recording use, and GPA (see Fig. 2). The greatest benefit of additional recording use was seen in low-GPA students with high attendance, and recording use was also positively related to exam mark in average- and high-GPA students with low attendance. This suggests that recordings are useful for weaker students when used as a supplement (but not as a substitute for live lecture attendance), whilst better students can mitigate the effects of not attending the lecture by watching the recording. The interaction also indicates that high-GPA students who combine high attendance with high recording use do not perform as well.
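Interpreting the three-way interaction amounts to computing the slope of recording use at chosen values of attendance and GPA. The sketch evaluates these conditional (simple) slopes at ±1 SD, a common convention that is assumed here, since the paper does not state which values Fig. 2 uses; the coefficients and SDs are hypothetical, not the fitted estimates.

```python
from typing import Dict


def recording_slope(coef: Dict[str, float], att: float, gpa: float) -> float:
    """Simple slope of (centred) recording use at given centred attendance and GPA.

    For y = ... + b_rec*R + b_ar*A*R + b_rg*R*G + b_arg*A*R*G, the partial
    derivative with respect to R is b_rec + b_ar*A + b_rg*G + b_arg*A*G.
    """
    return (coef["rec"] + coef["att:rec"] * att + coef["rec:gpa"] * gpa
            + coef["att:rec:gpa"] * att * gpa)


# Hypothetical coefficients and SDs, not the study's estimates.
coef = {"rec": 0.01, "att:rec": 0.001, "rec:gpa": 0.002, "att:rec:gpa": -0.0005}
sd_att, sd_gpa = 10.0, 2.0
for g_label, g in [("low GPA", -sd_gpa), ("mean GPA", 0.0), ("high GPA", sd_gpa)]:
    for a_label, a in [("low att", -sd_att), ("high att", sd_att)]:
        print(g_label, a_label, round(recording_slope(coef, a, g), 4))
```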

Second year results

Attendance and recording data were collected for 20/20 lectures. Paired-samples t tests found that attendance was significantly higher for sub-course 2 than for sub-course 1 (t (78) = 4.18, p < .001, d = .47); however, there was no difference in the use of recordings (t (78) = .62, p = .54, d = .07; see Table 5 and Fig. 3). For second year students, higher exam marks were positively correlated with GPA, attendance, and recording use (see Table 6). There was no correlation between attendance and recording use.

Welch t tests found no significant difference in attendance between native (M = 45.58, SD = 25.38) and non-native speakers (M = 47.36, SD = 23.44, t (76.23) = .32, p = .747, d = .07), or in recording use (M1 = 232.18, SD1 = 302.34, M2 = 271.55, SD2 = 302.71, t (74.55) = .58, p = .566, d = .13). There was also no significant difference in attendance between those registered on a psychology degree and those taking the course as curriculum breadth (M1 = 46.08, SD1 = 25.52, M2 = 47.86, SD2 = 18.88, t (24.47) = .30, p = .786, d = .08) or recording use (M1 = 219.34, SD1 = 287.16, M2 = 393.02, SD2 = 334.19, t (17.37) = 1.81, p = .088, d = .557).

In the first regression model, native speaker status was not a significant predictor of final exam grade and was therefore excluded. Both subsequent models (demographic and demographic + recording and attendance) significantly predicted final exam mark, although the second model did not provide a significantly better fit (model 1, F (2, 76) = 21.59, p < .001, adjusted R² = .35; model 2, F (8, 70) = 7.14, p < .001, adjusted R² = .37; F change, p = .10; see Table 7). In the final model, students registered for a psychology degree achieved higher marks, and GPA was again the strongest predictor. There were no main effects of attendance or recording use; however, there was a significant interaction between attendance and recording use. Students with low attendance benefitted the most from increased recording use, whilst for students with the highest attendance, higher recording use was predictive of lower grades, again suggesting that these students may have been struggling with the content. Given that the addition of attendance and recording data did not significantly improve the model, this interaction should be interpreted with caution.

Third and fourth year results

Given the smaller sample sizes and the identical course structures, data from third and fourth year students were combined to perform an honours level analysis. The pattern of results for the separate analyses was identical and is available in the supplementary online material. As not all students took all courses in each year and only one course from each year was included in the analysis, within-subject comparisons of attendance and recording use are not possible; however, descriptive statistics are presented in Table 8.

Welch t tests found that there was no difference in recording use between native (M = 291.58, SD = 254.31) and non-native speakers (M = 261.33, SD = 136.07, t (46.07) = .56, p = .58, d = .15). For honours students, higher exam marks were positively correlated with GPA and negatively correlated with age (see Table 9). There were no significant correlations between exam grade and attendance or recording use, or between attendance and recording use.

For the honours analysis, degree intention was dropped, as only one of the 57 participants was not registered for a psychology degree. The regression diagnostics indicated that the data did not satisfy the assumptions for regression; after examining the q-q plot, we removed three influential cases from the analysis (full analysis available online). In the first regression model, native speaker status was not a significant predictor and was therefore excluded. Although both subsequent models significantly predicted exam mark, the addition of attendance and recording data did not improve the model, and the only significant predictor was GPA (model 1, F (1, 50) = 31.71, p < .001, adjusted R² = .38; model 2, F (7, 44) = 5.37, p < .001, adjusted R² = .37; F change, p = .452; see Table 10). There were no significant main effects of attendance or recording use and no significant higher order interactions.

All students

Although separating the analyses by year of study was the most appropriate approach given the confounds between students in different years, the inconsistencies in the literature make it helpful to see how the conclusions change when the same dataset is collapsed across year of study. Data from all students (N = 290) were combined to investigate general trends and how these compared with the relationships seen at each individual year of study (see Table 11 for descriptive statistics). For first and second years, average attendance and recording use across all sub-courses were used.

Across all years, exam grade was positively correlated with GPA and attendance (see Table 12). Attendance was also positively correlated with GPA. Recording use was not correlated with attendance, GPA, or exam grade (see Fig. 4).

Across all students, there was no difference in recording use between native (M = 173.78, SD = 238.60) and non-native students (M = 199.56, SD = 227.42, t (259.29) = .93, p = .355, d = .11) or in attendance (M1 = 52.12, SD1 = 23.27, M2 = 55.06, SD2 = 23.17, t (254.80) = 1.06, p = .292, d = .126).

For the regression analysis, degree intention was dropped because this variable is confounded with year of study. Both subsequent models (GPA and GPA + recording and attendance) significantly predicted final exam mark, and the second model was a significantly better fit (model 1, F (1, 287) = 156.6, p < .001, adjusted R² = .35; model 2, F (7, 281) = 25.65, p < .001, adjusted R² = .38; significant F change, p = .001; see Table 13). Across all students, GPA, attendance, and recording use were positive predictors of exam mark. There were no significant higher order interactions.

Discussion

At first year, attendance and recording use were positive predictors of performance in the exam. For weaker students, supplementary use of recordings was beneficial but only better students’ use of recordings helped overcome the impact of low attendance. At second year, again attendance and recording use were positively correlated with exam performance although the addition of these variables did not improve the regression model. At honours level, there were no correlations between performance in the exam and either attendance or recording use, the only predictor of performance was GPA. When all years were combined, GPA, attendance, and recording use were positive predictors of exam mark and there were no higher order interactions. In all analyses, recording use had a weaker relationship with achievement than attendance and GPA was by far the strongest predictor. The other predictor of final grade in years 1 and 2 was degree intention (psychology major or not). A high proportion (~ 50% of 1st year) of the students on these courses are not psychology majors and these students’ achievement is historically lower than those committed to psychology as a degree. This may be due to the non-major students having less motivation to work towards higher grades as it is not their main subject of interest.

The results provide a picture of how attendance and recording use affect achievement across the degree programme: increased attendance at lectures has a greater impact in earlier years of study. We found no strong evidence that students who fail to attend the lecture do so because they are using the recording as a substitute. At no year of study did we find a correlation between attendance and recording use. We cannot rule out that the availability of a recording contributes to lower attendance, but in our sample the students who failed to attend lectures were certainly not using the recordings more than those who attended (which is a serious problem for the education of those students, but a very different problem from the one under investigation).

We did find that the non-recorded first year sub-course had significantly higher attendance than the two recorded sub-courses, and one could take this as evidence for the hypothesis that providing recordings affects attendance, even if the effect is only seen in first year. However, there was no correlation between recording use and attendance, and we also found a significant difference in attendance between the two second year sub-courses, both of which were recorded, despite there being no difference in recording use between them. Several factors may explain this pattern. First, the sub-courses covered different fields of psychology, and we know from our course evaluation forms that the sub-courses with higher attendance in both first and second year were also those that students reported enjoying more (for ethical reasons, we cannot identify the fields, as this would identify the lecturers). Second, the first year non-recorded sub-course was delivered at 1 pm, whilst the two recorded sub-courses were delivered at 11 am. Course evaluation comments suggested that this is an issue for younger students, who indicated that they were less likely to attend the two 11 am lectures because it was too early. Finally, attendance was descriptively higher in first year than in later years, whilst the opposite was true for recording usage (although, again, at each year there was no relationship between attendance and recording usage). To conclude firmly that recording affects attendance, the time of delivery should be held constant, and longitudinal data should be collected to determine whether the differences seen in the first year sample are due to maturity or reflect a cohort effect, that is, whether our first year sample differed from the other years for reasons unrelated to maturity, such as changes to entry requirements.
Additionally, there were no negative interactions between attendance and recording use. We might have expected a positive effect of recording use moderated by an interaction showing that high recording use combined with low attendance (i.e. substitutive use) was detrimental, yet this was not present in any of the analyses. We found that poorer first year students did not benefit from substitutive use of recordings, but this did not translate into a disadvantage. At no year of study did we find evidence for a negative effect of providing recordings.

A key strength of the study was that it considered a cross-section of the different years of study. The use of lecture recordings was predictive of achievement only in the early years, and the importance of this resource declines as students enter the honours years. Our results accord with the suggestion of Phillips et al. (2007) that educational maturity may be a key factor in assessing the impact of lecture recordings and attendance. Demetriadis and Pombortsis (2007) discuss how the nature of learning changes over the course of a degree, with earlier years focused on knowledge acquisition and facts, whilst later years require deeper critical thinking skills and the application of knowledge. Lake and Boyd (2015) also show that older students tend to adopt deep learning approaches, whereas younger students tend to focus on surface learning, and our findings can be interpreted in light of such work. In first year, the material on which students are examined is largely taught in the lectures; therefore, familiarity with this specific content is key. By fourth year, to do well in the exams, students must go beyond the material covered in the lectures and critically evaluate the wider literature; indeed, an over-reliance on lecture content will limit achievement. It is therefore unsurprising that we find the strongest effects of attendance and recording use for first year students. It is also possible that our null results at honours level reflect an acculturation into a particular approach to studying (see, e.g. Cilesiz 2015) and/or that additional activities not captured by our data, such as study groups, have a greater influence than attendance and recording use at these levels of study.
Without longitudinal or qualitative data, we are unable to tease apart exactly why year of study interacts with attendance and recording use, but our range of analyses demonstrates the importance of not generalising from studies that investigate these variables in a single year group. Had we only studied our honours level students, our conclusions would have been rather different.

Finally, the results indicate that non-native speakers show a greater tendency to use recordings, and a higher level of academic performance, during year 1. This mirrors previous research examining non-native student performance and recording use (Rientíes et al. 2016; Taplin et al. 2014). Lecture recordings may therefore be of particular use to non-native students in their first year abroad. This utility could be related to language proficiency: features such as a fast speaking rate and complex grammar (Mulligan and Kirkpatrick 2000) may require non-native speakers to listen to a lecture multiple times to fully comprehend the material presented. Alternatively, this use could be related to educational maturity, with students who choose to study abroad showing a high level of academic integration and ability to cope with academic demands (Rientíes et al. 2016), and therefore making use of lecture recordings as part of their study approach. However, this effect was not seen in subsequent years of study, suggesting that the utility of lecture recordings became equivalent across home and international students from year 2 onwards. This may indicate increasing educational maturity on the part of the home students, or that non-native speakers have a higher language proficiency after their first year of study; again, longitudinal data would help to distinguish the effects of maturity from those of cohort.

What our study highlights is the need to consider third-variable factors when conducting and comparing lecture capture research. Our key findings concern year of study, but we also see that, when thinking about lecture capture, we need to consider first language, interest in the course, and, potentially, time of delivery. Most pertinently, the fact that GPA was the strongest predictor of achievement should not be downplayed: the implementation of lecture capture is hugely controversial, yet it appears to have far less impact on achievement than do individual differences. Because these third variables matter so much, drawing conclusions from single papers is extremely difficult. We would urge researchers conducting lecture capture research to consider these factors and design their studies accordingly. We would also urge those reading lecture capture research to take a broad view of the literature and to draw conclusions from review papers such as O’Callaghan et al. (2017) rather than from individual studies whose findings may be difficult to generalise to wider contexts. For this reason, we also believe that the field is much in need of a meta-analytic or systematic review.

In a recent review by Nordmann and McGeorge (2018), we suggested a number of avenues for future research on lecture capture that move away from the binary question of whether recording lectures is good or bad. One such suggestion follows from a weakness of the current study: we were unable to account for when students used the recordings. At the time of data collection, the Blackboard statistics tracking function only provided access data for folders, with no distinction between the different files in a folder, and the Kaltura data logged viewing length but did not provide a timestamp for when the recordings were accessed. These data are now available from lecture capture providers such as Panopto, and it would be useful to examine how recordings are used across the semester and whether any benefits occur only with, for example, distributed practice rather than binging the box set during revision week. The impact of different viewing patterns may also explain some of the variance we see in the literature regarding the relationship between recording use and performance.

Perhaps more importantly, it is becoming clear that lecture capture research needs to be situated within a theoretical framework. Most of the literature to date (including this paper) presents descriptive and/or exploratory work, largely fuelled by the key academic concern surrounding the impact of lecture capture on attendance. Given the pattern of inconsistent findings and the importance of individual differences highlighted above, we propose that the theory of self-regulated learning may provide an ideal framework through which to study the impact of lecture capture. Self-regulated learning is defined as an active and constructive process in which an individual sets their own learning goals and then attempts to actively monitor, control, and regulate their cognition, motivation, and behaviours in order to achieve those goals (Pintrich and Zusho 2007). Zimmerman (2002) conceptualises self-regulated learning as a cyclical process consisting of three basic phases: forethought (including goal setting and outcome expectation), performance (including task strategies and self-recording), and self-reflection (including self-evaluation and self-satisfaction). Effective self-regulated learning has been shown to be a reliable predictor of better academic achievement both in traditional (Dent and Koenka 2016; Richardson et al. 2012) and online educational settings (Broadbent and Poon 2015). Effective self-regulated learning is also seen as critical for success in environments in which the learner may have lower levels of support and guidance (Kizilcec et al. 2017) and the use of recordings as a supplementary material can be viewed in this light. In the majority of cases, students will use recordings as part of their independent studying rather than this material being integrated into the curriculum or structured classroom activities by staff.

It would be disingenuous of us to try to reframe our work in the light of post hoc theoretical considerations, given that our study was not designed, conducted, or analysed to test this framework; however, it is worth highlighting the conceptual overlap with our findings and the potential for future research. For example, it may be that students with poorer attendance have generally poorer effort regulation, a key component of self-regulated learning, and that lecture capture may encourage harmful study habits in these students, in line with the finding of Leadbeater et al. (2013) that different groups of students adopted distinct approaches to lecture attendance and recording use. Additionally, the positive effects of increased recording use could also be interpreted in light of evidence that students who are more likely to engage in help-seeking also tend to have better achievement (Zusho 2017), a factor that may also contribute to non-native speakers being more likely to use the recordings. Crucially, the use of self-regulated learning strategies has been found to be malleable and can be improved by content-independent interventions (e.g. Dörrenbächer and Perels 2016). It would be interesting for future research to determine whether effective use of lecture capture could be encouraged by more general self-regulated learning interventions or whether more specific instruction is needed.

In summary, GPA and attendance at live lectures were the strongest predictors of achievement; however, recording use also had a positive role to play. This relationship is strongest for first and second years and appears to relate differently to attendance depending on GPA. Despite anecdotal fears, we find little evidence to substantiate the claim that providing recordings reduces attendance or negatively impacts achievement; instead, we find positive evidence that the recordings are seen as particularly helpful for non-native speakers in first year as they adjust to a new language environment. We suggest that the best application of our findings would be to focus on increasing live lecture attendance through monitoring, in addition to providing recordings for supplementary use, particularly in the early years of study. Finally, we conclude that situational factors and individual differences have a large role to play and need to be considered when conducting and reading individual lecture capture studies.

Notes

A note on terminology: in the UK, the majority of undergraduate degrees offered are ‘honours’ degrees, and typically grades from the final two years (2nd and 3rd years in England, Wales, and Northern Ireland; 3rd and 4th years in Scotland) count towards a student’s final degree classification. These degrees denote a higher level of academic achievement and can be contrasted with ‘ordinary’ degrees, which are often shorter and less demanding (e.g. for psychology students at the University of Aberdeen, an ordinary degree is three years rather than four and does not require the completion of a final year dissertation project). This use of terminology should not be confused with the Latin honours system used in, for example, the USA.

To be consistent with the second, third, and fourth year results, we used the mapped CGS mark for first year students rather than their exam mark out of 100. When the raw score is used, the pattern of results differs: there were significant main effects of recording use and attendance but no interaction. This analysis is available in full in the online supplementary materials.

References

Bos, N., Groeneveld, C., van Bruggen, J., & Brand-Gruwel, S. (2016). The use of recorded lectures in education and the impact on lecture attendance and exam performance. British Journal of Educational Technology, 47(5), 906–917. https://doi.org/10.1111/bjet.12300.

Broadbent, J., & Poon, W. L. (2015). Self-regulated learning strategies & academic achievement in online higher education learning environments: a systematic review. The Internet and Higher Education, 27, 1–13.

Cilesiz, S. (2015). Undergraduate students’ experiences with recorded lectures: towards a theory of acculturation. Higher Education, 69(3), 471–493.

Credé, M., Roch, S. G., & Kieszczynka, U. M. (2010). Class attendance in college: a meta-analytic review of the relationship of class attendance with grades and student characteristics. Review of Educational Research, 80(2), 272–295. https://doi.org/10.3102/0034654310362998.

Demetriadis, S., & Pombortsis, A. (2007). E-lectures for flexible learning: a study on their learning efficiency. Educational Technology & Society, 10(2), 147–157.

Dent, A. L., & Koenka, A. C. (2016). The relation between self-regulated learning and academic achievement across childhood and adolescence: a meta-analysis. Educational Psychology Review, 28(3), 425–474.

Dörrenbächer, L., & Perels, F. (2016). More is more? Evaluation of interventions to foster self-regulated learning in college. International Journal of Educational Research, 78, 50–65.

Drouin, M. A. (2014). If you record it, some won’t come. Using lecture capture in introductory psychology. Teaching of Psychology, 41(1), 11–19. https://doi.org/10.1177/0098628313514172.

Eisen, D. B., Schupp, C. W., Isseroff, R. R., Ibrahimi, O. A., Ledo, L., & Armstrong, A. W. (2015). Does class attendance matter? Results from a second-year medical school dermatology cohort study. International Journal of Dermatology, 54(7), 807–816. https://doi.org/10.1111/ijd.12816.

Elliott, C., & Neal, D. (2016). Evaluating the use of lecture capture using a revealed preference approach. Active Learning in Higher Education, 17(2), 153–167. https://doi.org/10.1177/1469787416637463.

Fox, J., & Weisberg, S. (2011). An R companion to applied regression (2nd ed.). Thousand Oaks: Sage. http://socserv.socsci.mcmaster.ca/jfox/Books/Companion.

Franklin, D. S., Gibson, J. W., Samuel, J. C., Teeter, W. A., & Clarkson, C. W. (2011). Use of lecture recordings in medical education. Medical Science Educator, 21(1), 21–28.

Gorissen, P., van Bruggen, J., & Jochems, W. (2012). Students and recorded lectures: survey on current use and demands for higher education. Research in Learning Technology, 20(3), 17299. https://doi.org/10.3402/rlt.v20i0.17299.

Gysbers, V., Johnston, J., Hancock, D., & Denyer, G. (2011). Why do students still bother coming to lectures, when everything is available online? International Journal of Innovation in Science and Mathematics Education, 19(2), 20–36. Retrieved from http://ojs-prod.library.usyd.edu.au/index.php/CAL/article/view/4887.

Inglis, M., Palipana, A., Trenholm, S., & Ward, J. (2011). Individual differences in students’ use of optional learning resources. Journal of Computer Assisted Learning, 27(6), 490–502. https://doi.org/10.1111/j.1365-2729.2011.00417.x.

Jensen, S. A. (2011). In-class versus online video lectures. Teaching of Psychology, 38(4), 298–302. https://doi.org/10.1177/0098628311421336.

Kizilcec, R. F., Pérez-Sanagustín, M., & Maldonado, J. J. (2017). Self-regulated learning strategies predict learner behavior and goal attainment in Massive Open Online Courses. Computers & Education, 104, 18–33.

Knofczynski, G. T., & Mundfrom, D. (2008). Sample sizes when using multiple linear regression for prediction. Educational and Psychological Measurement, 68(3), 431–442. https://doi.org/10.1177/0013164407310131.

Knowles, M. (1980). The modern practice of adult education: From pedagogy to andragogy (Revised and updated). Englewood Cliffs: Cambridge Adult Education.

Kolb, D. A. (1976). Management and the learning process. California Management Review, 18(3), 21–31.

Lake, W., & Boyd, W. (2015). Age, maturity and gender, and the propensity towards surface and deep learning approaches amongst university students. Creative Education, 6(22), 2361–2371. https://doi.org/10.4236/ce.2015.622242.

Larkin, H. E. (2010). “But they won’t come to lectures...” The impact of audio recorded lectures on student experience and attendance. Australasian Journal of Educational Technology, 26(2), 238–249.

Leadbeater, W., Shuttleworth, T., Couperthwaite, J., & Nightingale, K. P. (2013). Evaluating the use and impact of lecture recording in undergraduates: evidence for distinct approaches by different groups of students. Computers & Education, 61(1), 185–192. https://doi.org/10.1016/j.compedu.2012.09.011.

Marriott, P. (2002). A longitudinal study of undergraduate accounting students’ learning style preferences at two UK universities. Accounting Education, 11(1), 43–62.

Mulligan, D., & Kirkpatrick, A. (2000). How much do they understand? Lectures, students and comprehension. Higher Education Research and Development, 19(3), 311–335. https://doi.org/10.1080/758484352.

Nordmann, E., & McGeorge, P. (2018). Lecture capture in higher education: time to learn from the learners. Retrieved from psyarxiv.com/ux29v.

O’Callaghan, F. V., Neumann, D. L., Jones, L., & Creed, P. A. (2017). The use of lecture recordings in higher education: a review of institutional, student, and lecturer issues. Education and Information Technologies, 22(1), 399–415.

Pearce, K., & Scutter, S. (2010). Podcasting of health sciences lectures: benefits for students from a non-English speaking background. Australasian Journal of Educational Technology, 26(7), 1028–1041.

Pena, E. A., & Slate, E. H. (2014). gvlma: Global Validation of Linear Models Assumptions. R package version 1.0.0.2. https://CRAN.R-project.org/package=gvlma.

Phillips, N. (2017). yarrr: a companion to the e-book “YaRrr!: the pirate’s guide to R”. R package version 0.1.4. https://CRAN.R-project.org/package=yarrr.

Phillips, R., Gosper, M., McNeill, M., Woo, K., & Preston, G. (2007). Staff and student perspectives on web based lecture technologies: insights into the great divide. Paper presented at ASCILITE, Dec 2–5, Singapore. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.119.6857&rep=rep1&type=pdf.

Phillips, R., Maor, D., Cumming-Potvin, W., Roberts, P., Herrington, J., Preston, G., … Perry, L. (2011). Learning analytics and study behaviour: a pilot study. Paper presented at ASCILITE 2011, Hobart, Tasmania. Retrieved from http://www.academia.edu/4448015/Phillips_R._Maor_D._Cumming-Potvin_W._Roberts_P._Herrington_J._Preston_G._Moore_E._and_Perry_L._2011_Learning_analytics_and_study_behaviour_A_pilot_study._In_ASCILITE_2011_4_-_7_December_2011_Wrest_Point_Hobart_Tasmania.

Pintrich, P. R., & Zusho, A. (2007). Student motivation and self-regulated learning in the college classroom. In The scholarship of teaching and learning in higher education: an evidence-based perspective (pp. 731–810). Dordrecht: Springer.

R Core Team. (2016). R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. https://www.R-project.org/.

Revell, K. D. (2014). A comparison of the usage of tablet PC, lecture capture, and online homework in an introductory chemistry course. Journal of Chemical Education, 91(1), 48–51. https://doi.org/10.1021/ed400372x.

Richardson, M., Abraham, C., & Bond, R. (2012). Psychological correlates of university students’ academic performance: a systematic review and meta-analysis. Psychological Bulletin, 138(2), 353–387.

Rientíes, B., Beausaert, S., Grohnert, T., Niemantsverdriet, S., & Kommers, P. (2016). Understanding academic performance of international students: the role of ethnicity, academic and social integration. Higher Education, 63(6), 685–700.

RStudio Team. (2016). RStudio: integrated development for R. Boston: RStudio, Inc. http://www.rstudio.com/.

Scutter, S., Stupans, I., Sawyer, T., & King, S. (2010). How do students use podcasts to support learning? Australasian Journal of Educational Technology, 26(2), 180–191. https://doi.org/10.14742/ajet.1089.

Senn, S. (2003). Dicing with death: chance, risk and health. Cambridge: Cambridge University Press.

Taplin, R. H., Kerr, R., & Brown, A. M. (2014). Opportunity costs associated with the provision of student services: a case study of web-based lecture technology. Higher Education, 68(1), 15–28. https://doi.org/10.1007/s10734-013-9677-x.

Traphagan, T., Kucsera, J. V., & Kishi, K. (2010). Impact of class lecture webcasting on attendance and learning. Educational Technology Research and Development, 58(1), 19–37. https://doi.org/10.1007/s11423-009-9128-7.

Varao-Sousa, T. L., & Kingstone, A. (2015). Memory for lectures: how lecture format impacts the learning experience. PLoS One, 10(11), 1–11. https://doi.org/10.1371/journal.pone.0141587.

Von Konsky, B. R., Ivins, J., & Gribble, S. J. (2009). Lecture attendance and web based lecture technologies: a comparison of student perceptions and usage patterns. Australasian Journal of Educational Technology, 25(4), 581–595.

Wieling, M. B., & Hofman, W. H. A. (2010). The impact of online video lecture recordings and automated feedback on student performance. Computers & Education, 54(4), 992–998. https://doi.org/10.1016/j.compedu.2009.10.002.

Williams, J. (2006). The Lectopia service and students with disabilities. In Proceedings of the 23rd annual ascilite conference: Who’s learning? Whose technology? Paper presented at ASCILITE 2006, Sydney, Australia. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.520.9016&rep=rep1&type=pdf.

Williams, A., Birch, E., & Hancock, P. (2012). The impact of online lecture recordings on student performance. Australasian Journal of Educational Technology, 28, 199–213.

Zimmerman, B. J. (2002). Becoming a self-regulated learner: an overview. Theory Into Practice, 41(2), 64–70. https://doi.org/10.1207/s15430421tip4102_2.

Zusho, A. (2017). Toward an integrated model of student learning in the college classroom. Educational Psychology Review, 29(2), 301–324.

Ethics declarations

Prior to teaching across all years of this programme, ethical approval for this study was obtained from the School of Psychology’s Ethics Committee and all staff and students involved gave informed consent.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Nordmann, E., Calder, C., Bishop, P. et al. Turn up, tune in, don’t drop out: the relationship between lecture attendance, use of lecture recordings, and achievement at different levels of study. High Educ 77, 1065–1084 (2019). https://doi.org/10.1007/s10734-018-0320-8

DOI: https://doi.org/10.1007/s10734-018-0320-8