Abstract

Pareto local search (PLS) methods are local search algorithms for multi-objective combinatorial optimization problems based on the Pareto dominance criterion. PLS explores the Pareto neighbourhood of a set of non-dominated solutions until it reaches a local optimal Pareto front. In this paper, we discuss and analyse three different Pareto neighbourhood exploration strategies: best, first, and neutral improvement. Furthermore, we introduce a deactivation mechanism that restarts PLS from an archive of solutions rather than from a single solution in order to avoid the exploration of already explored regions. To escape from a local optimal solution set we apply stochastic perturbation strategies, leading to stochastic Pareto local search algorithms (SPLS). We consider two perturbation strategies: mutation and path-guided mutation. While the former is unbiased, the latter is biased towards preserving common substructures between 2 solutions. We apply SPLS on a set of large, correlated bi-objective quadratic assignment problems (bQAPs) and observe that SPLS significantly outperforms multi-start PLS. We investigate the reason of this performance gain by studying the fitness landscape structure of the bQAPs using random walks. The best performing method uses the stochastic perturbation algorithms, the first improvement Pareto neigborhood exploration and the deactivation technique.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Pareto local search algorithms (PLS) (Paquete et al. 2004; Angel et al. 2004; Basseur 2006) are local—or neighbourhood—search techniques for solving multi-objective combinatorial optimization problems. PLS applies an exploration strategy to iteratively move from a set of current solutions to a neighbouring set that improves upon the current solution. The algorithm stops in a Pareto local optimal set: a set of solutions that have no improving solutions in their neighbourhood. Because the local search gets stuck in a local optimal set, the search needs to be restarted from different regions of the search space. Multi-start PLS restarts the search from randomly generated points in the search space. However, this does not exploit information present in previously found solutions.

In the literature some variants of PLS algorithms can be found, each having small differences in their neighbourhood exploration technique. Paquete et al.’s PLS (Paquete et al. 2004) stores only those solutions in the archive that are Pareto optimal. Basseur’s PLS (Basseur 2006) explores all solutions in the archive exactly once, even though they might have become dominated by newly included solutions. Both PLSs explore the entire neighbourhood of solutions in the archive. Thus, these PLSs use a best improvement exploration strategy (Hansen and Mladenović 2006). Angel et al.’s PLS (Angel et al. 2004) uses dynasearch and dynamic programming to explore the neighbourhood of the bi-objective travelling salesman problem. Liefooghe et al. (2011) experimentally compare various instances of PLS algorithms using different parameters and settings on bi-objective instances of travelling salesman and scheduling problems.

Variations of PLS were proposed to improve PLS performance (Hamacher and Ruhe 1994). Two phase PLS (Lust and Teghem 2010; Dubois-Lacoste et al. 2011a) first approximates the Pareto local optimal set with solutions generated with single-objective solvers. In the second phase, the algorithm uses PLS (Paquete et al. 2004; Angel et al. 2004) to generate solutions not found in the first phase. Guided PLS (Alsheddy and Tsang 2009) uses a variant of PLS that prioritizes the objectives of the search space to uniformly spread the solutions over the Pareto local optimal set. Guided PLS is somehow the inverse of the two phase PLS because it first performs PLS and then tries to escape from a Pareto local optimal set by adjusting the weights of different objectives.

Iterated PLS (Drugan and Thierens 2010) uses stochastic perturbation operators to escape from a Pareto local optimal set. Iterated PLS is the multi-objective equivalent of iterated local search (Lourenco et al. 2003) a technique for single objective optimization that restarts LS from solutions generated by mutating the currently found local optima. In addition to mutation SPLS can generate restarting solutions using recombination operators because there are multiple solutions in the Pareto local optimal set that can be used as parent solutions. The Pareto local optimal set can be used directly as the population or alternatively, a separate elitist population can be used to generate new solutions (Lopez-Ibanez et al. 2006).

Main contributions of the paper

We propose several techniques to ameliorate the performance of Pareto local search algorithms. Our variation of PLS uses a technique—called deactivation—that restarts PLS from a newly generated solution and a set of incomparable solutions that were generated with previously restarted PLS runs. In Sect. 2, we show that PLS using deactivation can avoid the repetitive exploration of (non-promising) regions.

PLS algorithms usually explore the entire neighbourhood of a solution (Paquete et al. 2004). We show here though that first improvement neighbourhood exploration strategies can be more efficient. A similar observation has recently been made in (Liefooghe et al. 2011). Pareto neighbourhood exploration strategies—or improvement strategies—are the equivalent of single-objective improvement strategies (Hansen and Mladenović 2006) for multi-objective spaces. We view Pareto improvement as a relationship between an initial solution, a solution from the neighbourhood of the initial solution, and all the other solutions of the current Pareto set. In Sect. 3, we design and analyse three Pareto neighbourhood exploration strategies. We show that these Pareto improvement strategies are well performing. We prove that PLS using our Pareto neighbourhood exploration strategies are proper, sound and complete. We also discuss the relationship between our Pareto improvement strategies and another, often used, technique to search in multi-objective search spaces, namely the hypervolume unary indicator (Zitzler et al. 2003; Bringmann and Friedrich 2010). The (Pareto) improvement strategies are independent of the landscape they are applied on, thus these results can straightforwardly be applied to other combinatorial problems.

To escape from a local optimal solution set we apply stochastic perturbation strategies, leading to stochastic Pareto local search algorithms (SPLS). We consider two perturbation strategies: mutation and path-guided mutation. While the former is unbiased, the latter is biased towards preserving common substructures between two solutions. In Sect. 4 we discuss a SPLS that uses mutation and path-guided mutation as perturbation operators. Since path-guided mutation can be seen as a specific form of recombination we refer to this algorithm as Genetic PLS (GPLS). The structure of the neighbourhood and the perturbation operators depend on the representation and particularities of the problem they are tested on. In Sect. 5, we experimentally investigate the efficiency of the proposed mechanisms (i.e., the Pareto improvement strategies, deactivation, genetic operators) for PLSs on instances of the bi-objective QAP (bQAP) (Knowles and Corne 2003). The solutions of bQAPs are represented by permutations of facilities to different locations.

The landscape analysis in Sect. 6 records the quality of the restarting solutions with random walks (RW). We extend the scope of the RW method to study the relationship between the distance to a Pareto local optimal set and quality measures, like the hypervolume unary indicator, or mechanisms like deactivation. RW is a logical choice for analysing the behaviour of GPLS in relationship with the genetic operators because the solutions generated with these operators are all accepted as restarting points for PLS (Merz and Freisleben 2000). RW reveals correlations between the structure of the search space and the genetic operator used. Section 7 concludes the paper.

2 Stochastic Pareto local search (SPLS)

We define stochastic Pareto local search (SPLS) algorithms as a combination of stochastic perturbation operators and Pareto local search (PLS). In Sect. 2.1, we first introduce a slight generalization of PLS (Paquete et al. 2004; Angel et al. 2004; Basseur 2006) to allow the design of more efficient SPLS algorithms. In Sect. 2.3, we present the genetic PLS algorithms that use genetic operators to restart PLS.

Notation and definitions

Let us consider the multi-objective function f=(f 1,…,f m ), where \(\mathbf{f}: \mathcal{S} \rightarrow \mathcal{O}\). \(\mathcal{S}\) is the set of solutions in a countable solution space. The image of the solution set \(\mathcal{S}\) under f is denoted with the objective space \(\mathcal{O} = \mathbf{f}(\mathcal{S}) = \{y \mid \exists x \in \mathcal{S}: y = \mathbf{f}(x)\}\). We consider the single objective space as a special case of the multi-objective space with m=1. A bi-objective space has m=2.

Let \(a,b \in \mathcal{S}\) be two solutions and \(\mathbf{f}(a), \mathbf{f}(b) \in \mathcal{O}\) be their images in objective space, called objective vectors. To define an optimization problem, we first consider an order binary relationship, ⪯, between any two elements in the objective space \(\mathcal{O}\). Without loss of generality, only minimization is considered. In Table 1, we present the relationships between objective vectors and sets of objective vectors used in this paper (Zitzler et al. 2003).

A Pareto set is a set of non-dominated solutions. The set of Pareto optimal solutions, or Pareto optimal set, \(\mathcal{A} \subset \mathcal{S}\), is \(\mathit{Min}(\mathcal{S},\prec) = \{a \in \mathcal{A} \subset \mathcal{S} \mid \neg \exists x \in \mathcal{S}: \mathbf{f}(x) \prec \mathbf{f}(a) \}\). A Pareto local optimal set is the set of non-dominated solutions \(\mathcal{A}_{alg}\) generated with a search algorithm alg, \(\mathit{Min}(\mathcal{S}_{alg},\prec) = \{a \in \mathcal{A}_{alg} \subset \mathcal{S}_{alg} \subset \mathcal{S} \mid \neg \exists x \in \mathcal{S}_{alg}: \:\: \mathbf{f}(x) \prec \mathbf{f}(a) \}\), where \(\mathcal{S}_{alg} \subset \mathcal{S}\) is the subset of solutions explored with the search algorithm. A maximal Pareto local set is a Pareto local optimal set containing solutions for which the solutions in their neighbourhood are either dominated or in the Pareto local optimal set.

2.1 Pareto local search

Algorithm 1 presents the pseudo-code of our PLS algorithm. For ease of discussion, we consider an archive of unlimited size that can store all the solutions in the Pareto set. For very large Pareto sets, for instance for real coded problems where unlimited size Pareto sets are unrealistic, different archiving methods can be applied (Knowles and Corne 2004; Deb et al. 2005; Lopez-Ibanez et al. 2011).

PLS(\(A, \mathcal{I}\)) has two input parameters. A is a Pareto set containing at least one solution s with visited flag set to false. The Pareto improvement strategy, \(\mathcal{I}(s, A^{\prime}\setminus \{s\}, \mathcal{N}, \preceq, \mathbf{f})\), explores the neighbourhood \(\mathcal{N}\) of a solution s using the Pareto set A′∖{s} and the domination relationship ⪯ over the function f. The output of \(\mathcal{I}(\cdot)\) is a Pareto set, A′′. The neighbourhood function \(\mathcal{N}: \mathcal{S} \rightarrow \mathcal{P}(\mathcal{S})\) has as input a solution from \(\mathcal{S}\) and returns the set of neighbours for that solution. \(\mathcal{N}(s)\) depends on the problem (e.g., multi-objective QAPs) and the solution s it is applied on. \(\mathcal{I}\)’s algorithm does not depend on the landscape; it either exhaustively explores the entire neighbourhood and selects all non-dominated solutions in it, or stops when the first improvement to the initial set of solutions is found.

Each iteration of PLS, a solution s with the visited flag set to false, s.visited=false, is randomly chosen from the current Pareto set (or archive) A. The solutions in the neighbourhood of s are evaluated with \(\mathcal{I}\). The function merge merges M≥2 Pareto sets into a new Pareto set, where

Note that merge also removes the dominated solutions from the input Pareto set. The newly added solutions from the neighbourhood have the visited flag set on “false”. The search continues until there are no unvisited solutions in A′. Thus, the search stops when there are no “new” incomparable or dominating solutions found.

Deactivation technique

We use a Pareto set A to restart PLS in Algorithm 1 as opposed to a single initial solution as Paquete et al.’s PLS does.

Definition 1

Let’s consider the deactivation method from Algorithm 2, Deactiv(s,A), with A a Pareto set and s a solution. The output is an archive A I containing s and the solutions of A that are incomparable with s.

The differences between PLS from Algorithm 1 and Paquete et al.’s PLS are: (i) we use a Pareto set A to restart PLS, and (ii) we propose several instances for the Pareto improvement algorithm \(\mathcal{I}\). PLS restarting from a single solution s and using the best improvement neighbourhood exploration strategy resembles the most Paquete et al.’s PLS. The following proposition shows that deactivation can decrease the number of visited solutions, the extra solutions visited with PLS without deactivation that are dominated by solutions in the deactivation set.

Proposition 1

Let PLS be as in Algorithm 1, with \(\mathcal{I}\) the best Pareto improvement strategy. Consider two initial Pareto sets A 1={s} and A 2={s,q 1,…q k } for PLS. Consider the same sequence \(\pi_{\mathcal{N}(s)}\) of visiting the solutions in \(\mathcal{N}(s)\). Then, the number of solutions from \(\mathcal{N}(s)\) visited with PLS \((A_{1}, \mathcal{I})\) is smaller or equal with the number of solutions \(\mathcal{N}(s)\) visited with PLS \((A_{2}, \mathcal{I})\). The set of solutions in \(\mathcal{N}(s)\) visited only with PLS \((A_{1}, \mathcal{I})\) are dominated by at least one solution from {q 1,…q k }.

Proof

Without loss of generality, let’s assume that k=1. Then, A 2={s,q 1}. Consider the sequence of solutions \(\{s_{1},\ldots,s_{n}\} \in \pi_{\mathcal{N}(s)}\) and these solutions’ relationships with s and q 1. The only case when the two PLSs are behaving differently is when ∃s i that is dominated by q 1 and incomparable with s. With PLS \((A_{1}, \mathcal{I})\), s i is added to A′. With PLS \((A_{2}, \mathcal{I})\), s i is not added to A′. We conclude that there are less solutions from \(\pi_{\mathcal{N}(s)}\) that are evaluated with PLS using A 2 than when A 1 is used, and the extra solutions evaluated with A 1 are dominated by q 1. □

Example 1 shows situations where the deactivation techniques is useful.

Example 1

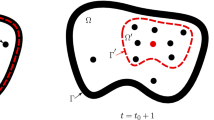

In Fig. 1, a solution is denoted with a point. A circle or a part of a circle denotes the neighbourhood of a solution. A Pareto set is denoted with a curve. In Fig. 1, the initial solution s has in its neighbourhood \(\mathcal{N}(s)\) two incomparable solutions s 1 and s 2, f(s 1)∥f(s 2), f(s)∥f(s 2) and f(s)∥f(s 1), where \(s_{1}, s_{2} \in \mathcal{N}(s)\). Let s 3 be in the neighbourhood of s 1, \(s_{3} \in \mathcal{N}(s_{1})\), such that s 3 dominates s, s 1 and s 2, f(s 3)≺f(s) and f(s 3)≺f(s 1) and f(s 3)≺f(s 2). Let s 4 and s 5 be in the neighbourhood of s 2, \(s_{4}, s_{5} \in \mathcal{N}(s_{2})\), such that s 4 dominates all other solutions, and s 5 is incomparable with s 3 and dominates s 1, s 2 and s.

Let’s consider PLS, PLS(\(A, \mathcal{I}\)), from Algorithm 1 with \(\mathcal{I}\) the best Pareto improvement strategy, and different Pareto sets A.

In Fig. 1(a), we consider A={s} and two equal probable sequences to visit the neighbourhood of s, {s 1,s 2} and {s 2,s 1}. When the neighbours of s are visited in the sequence {s 1,s 2}, the PLS’s output is A′={s 3} because \(s_{3} \in \mathcal{N}(s_{1})\) is dominating s 2 that is deleted from the archive A′. When the neighbours of s are visited in the sequence {s 2,s 1}, the PLS’s output is A′={s 4} because \(s_{3} \in \mathcal{N}(s_{1})\) is added to the archive A′ after adding \(s_{4} \in \mathcal{N}(s_{2})\) and s 4 dominates s 3.

In Fig. 1(b), q and q′ are two solutions that are incomparable with s, and q dominates s 2 and is incomparable with s 1, and q′ dominates s 1 and is incomparable with s 2. When A={s,q}, the PLS’s output is A′={s 3} because s 2 is dominated by q and is deleted from the archive A′. When A={s,q′}, then s 1 is dominated by q′ and is deleted from the archive A′, and the output is A′={s 4}.

Note that both PLSs can delete s 3 whose neighbourhood contains s 4 that dominates all the other solutions. In the first case, the output of PLS depends on the sequence in which solutions in the neighbourhood of s are evaluated. In the second case, the output of PLS depends on the initial Pareto set.

In Algorithms 3 and 4, the input Pareto set A of PLS is equal to the output of the deactivation method.

2.2 The multi-restart PLS algorithm (MPLS)

MPLS is a straightforward algorithm to escape from a Pareto local optimal set. It restarts multiple times from uniform randomly chosen initial solutions. Algorithm 3 presents the pseudo-code for MPLS. The input parameters for MPLS are a Pareto improvement strategy \(\mathcal{I}\) and a stopping criterion \(\mathcal{T}\). The stopping criterion can be, for example, a maximum number of restarted PLSs. The Pareto set A is initialized as the empty set, A←∅. Until \(\mathcal{T}\) is met, a solution s is uniform randomly sampled from the search space. The dominating solutions in A by s are deactivated, A′ ← Deactiv(s,A). The output Pareto set A′ is the input Pareto set for PLS, PLS(A′, \(\mathcal{I}\)). The Pareto set A is updated by merging it with the output of PLS.

The difference between this multi-start PLS and Paquete’s multi-start PLS is: (i) the use of the deactivation technique, and (ii) the use of different Pareto improvement strategies \(\mathcal{I}\). Paquete et al.’s PLS starts to explore the neighborhood of a solution with an empty archive. MLS cannot really profit from an exploration archive, because the restarting solution s is uniform randomly generated, it is improbable that there are solutions in A′ beside s. That is, all the solutions in the Pareto local optimum set A are probably dominating a randomly generated solution s. The deactivation technique will lead to an empty archive. This hypothesis is confirmed in Sect. 6, where MPLS from Algorithm 3 and Paquete’s MPLS have similar performance. Therefore, deactivation is not useful unless fit restarting solutions are generated, for example using genetic operators on solutions from the Pareto local optimum set A. We show that the use of this exploration archive is very useful for GPLS.

2.3 Restarting PLS using genetic operators

To improve upon multi-restart PLS’s performance, stochastic Pareto local search aims to escape from Pareto local optimal sets by stochastic perturbation operators that preserve partial information of the perturbed solutions. In case of genetic PLS (GPLS), the perturbation operators are a combination of mutation and recombination. Algorithm 4 gives the pseudo-code of GPLS.

First, as initialization phase, an MPLS runs in order to construct an initial Pareto set A with diverse solutions from different basins of attraction. The stopping criterion \(\mathcal{T}_{1}\) is the number of times N>0 MPLS is restarted from uniform randomly generated solutions. The type of operator, i.e. mutation or recombination, is selected proportionally with a fixed probability α∈[0,1]. A new individual s′ is generated with mutation, Mutation(s), or with recombination, Recombination(s, s 1), where s 1 is another individual from A, s≠s 1. The solutions in A are deactivated with Deactiv(s′,A) and the resulting Pareto set A′ is the initial Pareto set for PLS. PLS is restarted from s′. The archive A is updated with the output of PLS, A′′. This process is repeated until a stopping criterion, \(\mathcal{T}\), is met. \(\mathcal{T}\) depends on the problem, e.g., mQAPs, and is presented in the next section. The GPLS algorithm returns a Pareto local optimal set.

When α=1 the individuals are generated only with mutation. The advantage of mutation-generated restarts is that the local search is restarted from nearby areas of the landscape. When α=0.5 the individuals are generated 50 % with mutation and 50 % with recombination. Recombination generates solutions at larger distance from the current solution but still aims to preserve partial information of the two parent solutions.

Note that the deactivation method does not have any effect if there are no solutions in the Pareto set A that are incomparable with the solution s. We assume that the individuals generated with mutation or recombination from solutions in the current Pareto set are “good” solutions that are incomparable with some solutions in the Pareto set.

3 Pareto neighbourhood exploration strategies

The performance of LS algorithms depends on the choice of improvement strategy (Hansen and Mladenović 2006; Liefooghe et al. 2011). Until recently, best improvement algorithms were commonly used for the neighbourhood exploration strategies in Pareto local search algorithms. The first non-dominating neighbour (Liefooghe et al. 2011) stops after the first non-dominating solution found. The first dominating neighbour (Aguirre and Tanaka 2005; Liefooghe et al. 2011; Dubois-Lacoste et al. 2011b) stops after the first dominating solution.

In this section, we introduce a new concept for neighbourhood exploration strategies that compares the solutions in a neighbourhood \(\mathcal{N}(s)\) not only to s but also to the solutions from the Pareto set A. In Algorithm 1, A is a Pareto set of solutions incomparable with s that were previously generated in a PLS run. We show that usage of A can: (i) improve the quality of the outputted Pareto set, and (ii) return a smaller Pareto set. We compare our Pareto improvement strategies with another very important method to search through the multi-objective space, the hypervolume unary indicator.

Because we consider one type of neighbourhood function, \(\mathcal{N}\), one type of order relationship ⪯, and one function f, we will often use \(\mathcal{I}(s, A)\) instead of \(\mathcal{I}(s, A, \mathcal{N}, \preceq,\mathbf{f})\) to shorten the notation.

3.1 Best Pareto improvement, \(\mathcal{I}_{B}\)

Like the best improvement for single objective spaces, the best Pareto improvement strategy explores all the solutions in the neighbourhood of the input solution s. The pseudo-code for \(\mathcal{I}_{B}\) is given in Algorithm 5.

Definition 2

The best Pareto improvement strategy from Algorithm 5, \(\mathcal{I}_{B}(s,A)\), evaluates all the individuals in the neighbourhood of s, \(\mathcal{N}(s)\). The outputted set A′ is empty if there are no solutions in \(\mathcal{N}(s)\) that are incomparable or dominating all solutions in {s}∪A. Otherwise, A′ contains solutions from \(\mathcal{N}(s)\) that are dominating or incomparable with {s}∪A and all other solutions from \(\mathcal{N}(s)\). The visited flag of newly added solutions in A′ is set to false.

Note that the output A′ does not contain solutions from the initial set {s}∪A. This initial set is eliminated in the last line of the algorithm \(\mathcal{I}_{B}\), A′←A′∖({s}∪A). In the next proposition, we show that the difference between the outputs of the standard best improvement strategy, \(\mathcal{I}_{B}(s,\emptyset)\), and \(\mathcal{I}_{B}(s,A)\) is the size of the outputted Pareto set A′. The output of \(\mathcal{I}_{B}(s,\emptyset)\) could be larger than \(\mathcal{I}_{B}(s,A)\), where the differences are solutions that are dominated by at least one solution from A. We also show that merging the resulting Pareto set of the two \(\mathcal{I}_{B}\) with A leads to the same result.

Proposition 2

Let \(\mathcal{I}_{B}\) be as in Definition 2. Then, between the Pareto sets \(\mathcal{I}_{B}(s, A)\) and \(\mathcal{I}_{B}(s,\emptyset)\) are the following relationships:

-

1.

\(\mathcal{I}_{B}(s,A) \subseteq \mathcal{I}_{B}(s,\emptyset)\), and ∀s′∈I B (s,∅)∖I B (s,A), ∃s′′∈A, such that f(s′′)≺f(s′)

-

2.

merge(\(\mathcal{I}_{B}(s, \emptyset), A\)) = merge(\(\mathcal{I}_{B}(s, A), A\))

Proof

The first relationship holds because there can be solutions in \(\mathcal{N}(s)\) that are incomparable with s but dominated by a solution from A. Then, the output of \(\mathcal{I}_{B}(s,\emptyset)\) contains such a solution, but \(\mathcal{I}_{B}(s, A)\) does not include it. In all other cases, the output of the two improvement strategies are the same. The second relationship, the equality for the best Pareto improvement, holds because all the solutions in \(\mathcal{N}\) are explored regardless of the initial Pareto set. □

3.2 Neutral Pareto improvement strategy, \(\mathcal{I}_{N}\)

The pseudo-code for the neutral Pareto improvement \(\mathcal{I}_{N}\) is presented in Algorithm 6. \(\mathcal{I}_{N}\) stops the exploration of a neighbourhood \(\mathcal{N}(s)\) when the first solution that is non-dominated by the initial solution s and non-dominated by the Pareto set A is found.

Definition 3

Let \(\mathcal{I}_{N}(s,A)\) be the neutral Pareto improvement strategy from Algorithm 6 and \(\pi_{\mathcal{N}(s)}\) denote a random permutation of \(\mathcal{N}(s)\). \(\mathcal{I}_{N}\) stops after the first solution \(a \in \pi_{\mathcal{N}}\) is found such that a is incomparable with s and incomparable with all solutions from A. If a exists, the outputted Pareto set is A′←{a}, and a.visited←false. Otherwise, A′←∅.

Again, the initial solutions {s}∪A are not contained in the output A′ of \(\mathcal{I}_{N}\). In the following proposition, we show that, even after merging the output of \(\mathcal{I}_{N}\) with A, \(\mathcal{I}_{N}(s,A)\) is at least as good—meaning equal or dominating—with \(\mathcal{I}_{N}(s,\emptyset)\).

Proposition 3

Let \(\mathcal{I}_{N}(s,\emptyset)\) be the neutral Pareto improvement strategy from Definition 3. Then, the outputted Pareto sets of \(\mathcal{I}_{N}(s,A)\) and \(\mathcal{I}_{N}(s,\emptyset)\) are in the following relationships

-

1.

If \(\mathcal{I}_{N}(s,A) = \{a\}\), then \(\mathcal{I}_{N}(s,A) \prec \mathcal{I}_{N}(s,\emptyset)\) or \(\mathcal{I}_{N}(s,A) = \mathcal{I}_{N}(s,\emptyset)\). Otherwise, if \(\mathcal{I}_{N}(s,A) = \emptyset\) and \(\mathcal{I}_{N}(s,\emptyset) = \{s^{\prime}\}\), ∃s′′∈A, such that f(s′′)≺f(s′)

-

2.

merge(\(\mathcal{I}_{N}(s,A)\), A) ⪯ merge(\(\mathcal{I}_{N}(s,\emptyset)\), A)

Proof

Let s′ and \(s^{\prime\prime} \in \pi_{\mathcal{N}}\) be two solutions such that: (i) s′ is the first and s′′ is the second solution in \(\pi_{\mathcal{N}(s)}\) that are incomparable with s, and (ii) s′ is dominated by at least a solution in A, and (iii) s′′ is incomparable or dominating all solutions in A. Then, \(\mathcal{I}_{N}(s,\emptyset)\) stops at s′ and \(\mathcal{I}_{N}(s, A)\) selects s′′. For the rest of the cases, the output of \(\mathcal{I}_{N}(s, A)\) and \(\mathcal{I}_{N}(s, \emptyset)\) are equal. We conclude that the output of \(\mathcal{I}_{N}(s,A)\) is non-dominated by the output of \(\mathcal{I}_{N}(s,\emptyset)\). Otherwise, the output of \(\mathcal{I}_{N}(s,\emptyset)\) is dominated by A. □

This means that there are cases where neutral Pareto improvement is outperforming first non-dominated solution (Liefooghe et al. 2011) but there are no situations where \(\mathcal{I}_{N}\) is outperformed by the first non-dominated solution.

3.3 First Pareto improvement strategy, \(\mathcal{I}_{F}\)

The pseudo-code for \(\mathcal{I}_{F}\) is given in Algorithm 7. In \(\mathcal{I}_{F}\), the exploration of the neighbourhood stops when the first solution that dominates the initial solution is found.

Definition 4

Let \(\mathcal{I}_{F}(s,A)\) be the first Pareto improvement strategy from Algorithm 7 and let \(\pi_{\mathcal{N}(s)}\) denote a random permutation of \(\mathcal{N}(s)\). \(\mathcal{I}_{F}\) stops at the first solution \(a \in \mathcal{N}(s)\) that dominates s. If ∃a, the output A′ contains a and all the solutions from \(\pi_{\mathcal{N}(s)}\) that precede a and that are: (i) incomparable with a and (ii) dominating or are incomparable with all solutions from {s}∪A and all the other solutions from \(\pi_{\mathcal{N}(s)}\) that precede a. Otherwise, if ¬∃a, then A′ contains all the solutions from \(\mathcal{N}(s)\) that are: (i) incomparable with s, (ii) incomparable or dominate all solutions in A, and (iii) incomparable or dominating all other solutions in \(\mathcal{N}(s)\). The visited flag of all solutions included in A′ is set on false.

Like for the other two improvement strategies, from the output of \(\mathcal{I}_{F}\) are deleted the initial set of solutions {s}∪A. Similarly with \(\mathcal{I}_{B}\), the Pareto set of \(\mathcal{I}_{F}(s,A)\) is smaller or equal with the output of \(\mathcal{I}_{F}(s,\emptyset)\), where the extra solutions are dominated by A. After merging the outputs of \(\mathcal{I}_{F}\) with A, the resulting Pareto sets are equal.

Proposition 4

Let \(\mathcal{I}_{F}\) be as in Definition 4. Then, we have

-

1.

\(\mathcal{I}_{F}(s,A) \subseteq \mathcal{I}_{F}(s,\emptyset)\), and ∀s′∈I F (s,∅)∖I F (s,A), ∃s′′∈A, such that f(s′′)≺f(s′)

-

2.

merge(\(\mathcal{I}_{F}(s,\emptyset)\), A) = merge(\(\mathcal{I}_{F}(s, A)\), A)

Proof

Let us assume that \(\exists a \in \mathcal{N}(s)\) such that f(a)≺f(s). Then, a is non-dominated by the currently explored solutions for \(\pi_{\mathcal{N}(s)}\) and all solutions from A. Compared with \(\mathcal{I}_{F}(s, A)\), \(\mathcal{I}_{F}(s,\emptyset)\) returns solutions that are incomparable with a but dominated by a solution from A. Assume that \(\neg \exists a \in \mathcal{N}(s), \mathbf{f}(a) \prec \mathbf{ f}(s)\), then all the solutions in \(\mathcal{N}(s)\) are evaluated regardless of the initial Pareto set. Again, both strategies return the same result. □

The first improvement strategy (Liefooghe et al. 2011) is slightly different from \(\mathcal{I}_{F}(s,\emptyset)\). With the first improvement all non-dominated solutions with s are proposed for integration with A that includes solutions dominated by a or by solutions in A. With \(\mathcal{I}_{F}(s,\emptyset)\), the solutions that are dominated by a are already deleted from the output. This makes a difference in computational time for large neighbourhoods with lots of incomparable solutions.

3.4 Pareto improvement strategies vs searching with the hypervolume unary indicator

The use of the initial Pareto set A allows us to compare the Pareto improvement strategies with a single-objective first improvement exploration strategy using the hypervolume unary indicator, a technique currently often used for multi-objective optimization (Zitzler et al. 2003; Bringmann and Friedrich 2010). This observation is important because some popular algorithms use the hypervolume indicator. For example, Emmerich et al. (2005), Beume et al. (2007), Bader and Zitzler (2011) use the hypervolume to select a subset of solutions from a larger set or to discard a solution from a set.

The hypervolume unary indicator function, \(\mathcal{H}\), measures in the objective space the hypervolume contained between a reference point, r, and the Pareto set A. The hypervolume \(\mathcal{H}(r;A)\) is maximized, meaning that the larger the hypervolume is, the “better” its set A is considered. For a given reference point the hypervolume indicator of A is by design equal or smaller than the hypervolume indicator of ({s}∪A) (Bader 2010). Algorithm 8 gives the pseudo-code for the hypervolume-based first improvement strategy.

Definition 5

Let the hypervolume \(\mathcal{H}(r; A)\) act on the reference point r and a Pareto set A. The hypervolume-based first improvement strategy from Algorithm 8, \(\mathcal{I}_{H}(s,A,\mathcal{N}, \geq,\mathcal{H})\), evaluates the hypervolume indicators of each of the sets merge({s′}, {s}∪A), where \(s^{\prime}\in \pi_{\mathcal{N}}\), a random permutation of \(\mathcal{N}(s)\). \(\mathcal{I}_{H}\) stops after the first solution \(a \in \pi_{\mathcal{N}}\) for which \(\mathcal{H}(r; A) < \mathcal{H}(r; \mbox{\textsf{merge}}(\{a\},A))\). If ∃a, the output is A′←{a}. Otherwise, A′←∅.

We show the equivalence of \(\mathcal{I}_{N}\) with \(\mathcal{I}_{H}\) for any reference point r.

Proposition 5

Let \(\mathcal{I}_{N}\) be the neutral Pareto improvement strategy from Definition 3 and let \(\mathcal{I}_{H}\) be the hypervolume-based first improvement strategy from Definition 5.

Then, \(\mathcal{I}_{N}(s,A,\mathcal{N},\preceq,\mathbf{f}) = \mathcal{I}_{H}(s,A,\mathcal{N},\geq,\mathcal{H})\).

Proof

We split the proof in two parts. First, we show that \(\mathcal{I}_{N} \subseteq \mathcal{I}_{H}\), and then we show that \(\mathcal{I}_{H} \subseteq \mathcal{I}_{N}\). \(\mathcal{I}_{N}\) stops at the first generated solution \(s_{1} \in \mathcal{N}(s)\) for which \(\mathbf{f}(s_{1}) \not \succ \mathbf{f}(s)\), and ¬∃s′∈A, f(s′)≺f(s 1). The new Pareto set is merge({s 1}, {s}∪A) for which the hypervolume indicator of \(\mathcal{H}(r; \mbox{\textsf{merge}}(\{s_{1}\}, \{s\} \cap A))\) is larger than \(\mathcal{H}(r; \{s\} \cap A)\). Then, \(\mathcal{I}_{H}\) selects s 1 and stops. \(\mathcal{I}_{H}\) stops at the first generated solution \(s_{2} \in \mathcal{N}(s)\) such that \(\mathcal{H}(r; \{s\} \cup A) < \mathcal{H}(r; \mbox{\textsf{merge}}(\{s_{2}\}, \{s\} \cup A))\). This means that {s 2} is either: (i) incomparable with s and all other points in A, (ii) s 2 dominates s. In both cases, \(\mathcal{I}_{N}\) selects s 2. We conclude that \(\mathcal{I}_{N}(s,\mathcal{N},A,\preceq,\mathbf{f}) = \mathcal{I}_{H}(s,A,\mathcal{N},\geq,\mathcal{H})\). □

To summarize, the output of local search that uses the hypervolume-based first improvement indicator is equivalent with the output of PLS with the neutral Pareto improvement strategy, but the computational cost of using the hypervolume indicator is much higher than the cost of running PLS with the neutral Pareto neighbourhood exploration.

3.5 Comparing Pareto neighbourhood exploration strategies

The behaviour of the three Pareto neighbourhood exploration strategies—\(\mathcal{I}_{B}\), \(\mathcal{I}_{F}\), and \(\mathcal{I}_{N}\)—are compared by means of an example.

Example 2

In Fig. 2, we denote the solutions with points and crosses and Pareto sets with line segments. Consider five solutions in \(\mathcal{N}(s)\): (i) s 1 and s 2 are dominating s and are incomparable with each other, (ii) s 3 dominates s but is dominated by s 1 and s 2, (iii) s 4 is incomparable with s but dominated by s 1, s 2 and s 3, (iv) s 5 is dominated by all other solutions. In Fig. 2(b), additionally to the solutions from Fig. 2(a), the initial Pareto set is A={p}, where p is incomparable with both s 1 and s 2.

Both \(\mathcal{I}_{B}(s,\emptyset)\) in Fig. 2(a) and \(\mathcal{I}_{B}(s,\{p\})\) in Fig. 2(b) return the dominating solutions s 1 and s 2, A′={s 1,s 2}. \(\mathcal{I}_{F}(s,\emptyset)\) and \(\mathcal{I}_{F}(s,\{p\})\) select one of the solutions that dominates s: s 1, s 2 or s 3. Then, the output is A′={s 1}, or A′={s 2}, or A′={s 3}. \(\mathcal{I}_{N}(s,\emptyset)\) selects one of the four solutions that dominates or is incomparable with s: s 1, s 2, s 3, and s 4. \(\mathcal{I}_{N}(s,\{p\})\) selects one of the three solutions that dominates or is incomparable with {s,p}: s 1, s 2, and s 3. In Fig. 2(b), s 4 is not selected because it is dominated by solutions in A. Then, \(\mathcal{I}_{N}(s,\emptyset)\) is dominated by \(\mathcal{I}_{N}(s,\{p\})\). s 5 is never selected.

To study the differences between the three Pareto neighbourhood exploration strategies \(\mathcal{I}_{B}\), \(\mathcal{I}_{N}\) and \(\mathcal{I}_{F}\), we identify the conditions under which these strategies return the same result. The following statements result directly from their definitions. Let \(\pi_{\mathcal{N}}\) be a permutation of \(\mathcal{N}(s)\) and let \(|\mathcal{I}(s,A)|\) be the number of solutions evaluated in the algorithm \(\mathcal{I}(s,A)\). The output of \(\mathcal{I}_{B}(s,A)\) and \(\mathcal{I}_{F}(s,A)\) are the same Pareto set if and only if one of the following conditions is met:

-

1.

there exists no solution \(s^{\prime}\in \mathcal{N}(s)\) such that s′ dominates s,

-

2.

there exists exactly one solution \(s^{\prime}\in \mathcal{N}(s)\) that: (i) dominates s, (ii) dominates all solutions in \(\mathcal{N}(s) \setminus \{s^{\prime}\}\), and (iii) precedes in \(\pi_{\mathcal{N}(s)}\), if exists, the other solutions that dominate s but are dominated by s′.

The output of \(\mathcal{I}_{N}(s,A)\) is the same as the output of \(\mathcal{I}_{F}(s,A)\) if and only if one of the following conditions hold:

-

3.

there are in \(\mathcal{N}(s)\) only solutions that are dominated by at least a solution in {s}∪A,

-

4.

there exists exactly one solution \(s^{\prime}\in \mathcal{N}(s)\) that: (i) is incomparable or dominating all solutions in {s}∩A, and (ii) all other solutions in \(\mathcal{N}(s) \setminus \{s^{\prime}\}\) are dominated by at least a solution in {s}∩{A}

The output of \(\mathcal{I}_{N}(s,A)\) is the same as the output of \(\mathcal{I}_{B}(s,A)\) if and only if the above conditions 3 and 4 hold. It is interesting to note that the conditions under which the outputs of \(\mathcal{I}_{F}\) and \(\mathcal{I}_{B}\) are equal include the conditions for which the outputs of \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\) are equal, which, at their turn are equal with the conditions for \(\mathcal{I}_{B}\) and \(\mathcal{I}_{N}\) to be equal.

In the following, we compare the number and the quality of the solutions returned when the three Pareto neighbourhood exploration strategies are used.

Proposition 6

Let \(\mathcal{I}_{B}\), \(\mathcal{I}_{N}\) and \(\mathcal{I}_{F}\) be Pareto neighbourhood exploration strategies, and \(\pi_{\mathcal{N}(s)}\) the same permutation of the evaluated neighbourhood as before. Let us consider \(|\mathcal{I}(s,A)|\) the number of solutions evaluated with \(\mathcal{I}\). Then,

Proof

\(\mathcal{I}_{B}\) generates all the neighbours of s thus it generates always at least as many individuals as the other two exploration strategies, \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\). This also means that \(\mathcal{I}_{B}\) always finds the solution, if it exists, that dominates {s}∩A and all the other solutions \(\mathcal{N}(s)\). Thus \(\mathcal{I}_{B}(s,A) \preceq \mathcal{I}_{F}(s,A)\) and \(|\mathcal{I}_{F}(s,A)| \leq |\mathcal{I}_{B}(s,A)|\). We have \(\mathcal{I}_{F}(s,A) \preceq \mathcal{I}_{N}(s,A)\) because \(\mathcal{I}_{F}\) stops in a dominating solution for s, if it exists, whereas \(\mathcal{I}_{N}\) stops in any non-dominated solution for s and A. For a permutation \(\pi_{\mathcal{N}(s)}\), \(|\mathcal{I}_{N}(s,A)| \leq |\mathcal{I}_{F}(s,A)|\) because \(\mathcal{I}_{N}\) is always stopping earlier than \(\mathcal{I}_{F}\) unless one of the conditions 3 or 4 hold in which case \(|\mathcal{I}_{N}(s,A)| = |\mathcal{I}_{F}(s,A)|\). □

According to the above proposition, there is a trade-off between the number of evaluated solutions and the quality of the newly added solutions. The advantage of \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\) is that they evaluate only a part of the neighbourhood, whereas \(\mathcal{I}_{B}\) evaluates the entire neighbourhood. The disadvantage of \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\) is that the solutions selected, in general, are dominated by the solutions selected with \(\mathcal{I}_{B}\). The solution(s) generated with \(\mathcal{I}_{F}\) are non-dominated by the solutions selected with \(\mathcal{I}_{N}\). Note that the time complexity of evaluating a solution with any of the three Pareto neighbourhood exploration strategies presented before is linear with the number of objectives, m, and the number of solutions in the neighbourhood of a solution s, \(\mathcal{N}(s)\), and the number of solutions in the initial Pareto set A.

3.6 Soundness and completeness of PLS using Pareto improvement strategies

Paquete et al. (2007) show that their PLS is always stopping and, it returns a maximal Pareto local optimal set. Our version of PLS from Algorithm 1 differs in certain aspects from Paquete’s PLS: (i) the multiple Pareto neighbourhood exploration strategies , \(\mathcal{I}\), and (ii) the starting Pareto set A. In Paquete’s PLS, the Pareto set A is reduced to a single, randomly generated solution and there is a single improvement strategy, the best (Pareto) improvement strategy. We show that PLS using one of the three Pareto improvement strategies and an initial Pareto set has the local optimality property—that is, it always stops in a Pareto local optimal set. For simplicity of the proof, we assume that the initial Pareto set A contains only solution, s, with the visited flag set on true. This proof follows the proof of Theorems 5.3 and 5.4 from (Paquete et al. 2007), but with different improvement strategies for PLS.

Theorem 1

In Algorithm 1, PLS(A, \(\mathcal{I}_{B}\)) and PLS(A, \(\mathcal{I}_{F}\)) always stop in a maximal Pareto local optimal set. PLS(A, \(\mathcal{I}_{N}\)) stops in a subset of a maximal Pareto local optimal set.

Proof

In the first part of this proof, we show that no matter in which sequence solutions are visited, the output contains always a set of solutions that dominates the other solutions. Let us assume that there are two solutions, s′ and s′′ in A′, such that f(s′)≺f(s′′) and both solutions are evaluated with the same PLS. If s′′ is visited first, it is added to A′. When s′ is visited, s′′ is discarded from A′. If s′ is visited first, it is added to A′. When s′′ is visited, it is not added to the archive because s′′ is dominated by s′. We conclude that s′ is always added to A′ regardless of the succession in which solutions are visited.

Second, we show that PLS using \(\mathcal{I}_{B}\) and \(\mathcal{I}_{F}\) are always stopping in a maximal Pareto local optimal set. Thus, there are no solutions in the neighbourhoods of the solutions from the Pareto local optimal set that are incomparable with all the solutions in this set and are not added to the Pareto set. This property is already proven by Paquete et al. (2007) for best improvement strategies where all the neighbourhoods from all the solutions in A′ are explored entirely. The property also holds for \(\mathcal{I}_{F}\) because, like for \(\mathcal{I}_{B}\), all the incomparable solutions from the neighbourhood of a solution in the Pareto local optimal set are visited.

Consider \(\mathcal{I}_{N}\) and a solution s∈A′. Consider there are two solutions s′ and s′′ in \(\mathcal{N}(s)\) that are incomparable with s and all other solutions in A′ but that are not in the neighbourhood of each other. If s′ is visited before s′′, then s′ is added to A′ but s′′ is discarded and s.visited set to true and thus s′′ is not visited again. We conclude that PLS using \(\mathcal{I}_{N}\) stops in a subset of a maximal Pareto local optimum set.

Third, we show that PLS is always terminating. We show that a solution s eliminated from the current archive A′ because it is dominated by a newly added solution s′, where f(s′)≺f(s), will not be added a second time into the archive A′. Suppose that the same solution s is compared with solutions in A′ a second time. A′ contains solutions that are dominating or incomparable with s′. Thus, A′ contains solutions that are dominating s, and s is not included in this archive. If there are no other solutions that dominate the solutions from A′, the PLS algorithm from Algorithm 1 stops by design. □

Note that all three strategies add the dominating solutions, keep the incomparable solutions and delete the dominated solutions from the current Pareto set A′. The difference between these three Pareto neighbourhood exploration strategies is their stopping criterion. \(\mathcal{I}_{N}\) stops the earliest, since it only needs to find a new incomparable solution. \(\mathcal{I}_{B}\) stops after all solutions are visited, and \(\mathcal{I}_{F}\) stops somewhere in-between \(\mathcal{I}_{N}\) and \(\mathcal{I}_{B}\).

4 Genetic PLS (GPLS) for multi-objective quadratic assignment problems (mQAPs)

In order to design the neighbourhood, \(\mathcal{N}\), the stopping criterion, \(\mathcal{T}\), and genetic operators for GPLS, we need to specify a type of multi-objective combinatorial optimization problem. In this section, we design instances of GPLS for mQAPs. The neighbourhood and the stopping criterion are applicable for all kinds of permutation problems. For an optimal performance, the genetic operators are designed for each problem they are applied on.

Multi-objective QAPs

Single and multi-objective QAPs are NP-hard combinatorial optimization problems that model many real-world problems (i.e., scheduling, vehicle routing, etc.). Intuitively, QAPs can be described as the (optimal) assignment of n facilities to n locations. A distance is specified between each pair of locations, and for each pair of facilities the amount of materials (or flows) transported between these facilities is given. The goal is to find the assignment of facilities to locations that minimizes the sum of the products between distances and flows.

We consider multi-objective QAPs introduced by Knowles and Corne (2003). These mQAPs have for each objective different flow matrices and a single distance matrix. The flow matrices are correlated with some correlation ρ. Let us consider n facilities, a set Π(n) of all permutations of {1,2,…,n} and the n×n distance matrix D=(d ij ), where d ij is the distance between location i and location j. We assume an m objective space, and m flow matrices \(B^{k} = (b^{k}_{ij})\), each with n×n elements, where \(b^{k}_{ij}\) represents the flow from facility i to facility j in the k-th objective. The goal is to minimize for all objectives the set of cost functions \(c_{k}(\pi) = \sum_{i = 1}^{n} \sum_{j=1}^{n} d_{ij} \cdot b^{k}_{\pi(i)\pi(j)}\), where π(⋅) is a permutation from Π(n). It takes quadratic time to evaluate this function.

The neighbourhood \(\mathcal{N}(\cdot)\)

Multi-objective QAPs are permutation problems. A suitable neighbourhood operator for mQAPs is the q-exchange operator that swaps the position of q facilities. For example, the 2-exchange swapping operator swaps the position of two different facilities. This operator is attractive because of its linear time to compute the change in the cost function with the condition that all matrices D and B

k are symmetrical (Paquete and Stützle 2006). We have \(\Delta c_{k}(\pi,i,j) = 2 \cdot \sum_{r = 1, r \neq i,j}^{n} (d_{jk} - d_{ik}) \cdot (b^{k}_{\pi(i)\pi(k)} - b^{k}_{\pi(j)\pi(k)})\). This neighbourhood contains  individuals. The size of the neighbourhood increases quadratically with the number of facilities.

individuals. The size of the neighbourhood increases quadratically with the number of facilities.

Stopping criterion, \(\mathcal{T}\)

The stopping criterion for GPLS is chosen to fairly compare its performance with MPLS. The distance between two solutions is defined as the minimum number of exchanges needed to obtain one solution from the other solution (Schiavinotto and Stützle 2007). The distance between a solution and the solution obtained with q-exchange mutation is q−1. The search in GPLS is halted when the same number of swaps is executed as with MPLS. The difference in cost function, Δc k , for two solutions with distance q is linear in the number of facilities and the number of exchanges. Counting the number of swaps is equivalent to counting the number of fitness evaluations.

4.1 Genetic operators for GPLS on mQAPs

In this section, we present two perturbation operators for mQAPs (Drugan and Thierens 2010). In Algorithm 4, the Mutation algorithm is the parametrized mutation, and the path-guided mutation has the role of Recombination. We use cycles (Schiavinotto and Stützle 2007) to design operators that generate children at a given distance from their parent. These types of operators allow us to analyse the behaviour of GPLS on the tested problem instances.

The parametrized mutation, or the q-exchange mutation, uniform randomly selects, without replacement, q>2 distinct locations in a solution s, {l 1,…,l q }. To generate a new solution, these locations are exchanged from left to right or from right to left with equal probability to not bias the generation of individuals. When positions are exchanged from right to left, a position l i takes the value of its right neighbour l i+1. Then, in this sequence, b←l 1, l 1←l 2 and so on until l q−1←l q and l q ←b, where b is a buffer variable. Note that s and the resulting solution form a cycle of size q that contains the mutated positions {l 1,…,l q } and are q swaps apart. When PLS uses the 2-exchange operator to generate a neighbourhood, the mutation operator should exchange at least 3 facilities to escape from the region of attraction of a Pareto local optimal set.

The path-guided mutation, or the q-exchange path-mutation, uses two solutions, s′ and s′′, uniform randomly selected without replacement from the current Pareto set such that the distance between them is at least q. A child s is generated by copying the first parent s′, and mutate s. The second parent s′′, is used to construct the path between the two solutions. The set of cycles common for the two solutions, s and s′′, are identified. A cycle is a minimal subset of locations such that the set of their facilities is the same in both parents, s′ and s′′. It is possible to switch a subset from one parent to the other one while keeping a valid permutation.

A cycle, c, is randomly chosen. For q−1 times, choose at random a position i in the cycle c from solution s, where s[i]=s′′[j] and i≠j. Exchange the values of s[i] and s[j]. With this swap, the distance between s and its first parent, s′, is increased with 1 and the distance between s and the second parent, s′′, is decreased with 1. If the size of c is smaller or equal than q, a second cycle is chosen. This process of randomly selecting a cycle and swap locations is repeated until the distance between s and s′ is becoming q. Then distance between s and s′′ is ℓ−q+1. If there are no parent solutions at distance larger or equal with q, we generate a solution with parametrized mutation.

The path-guided mutation is respectful: if the two parents have the same facility on a position i then their child will also have the same facility on the i-th position. Consequently, to be able to generate any solution in the search space we need to alternate path-guided mutation and mutation operators. The path-guided mutation operator resembles the path relinking operator (Jaszkiewicz and Zielniewicz 2009) because it generates solutions on the path between two solutions.

Cycle recombination (Poon and Carter 1995) uses cycles to generate children, but, unlike path-guided mutation, it exchanges an entire block of cycles between parents. Partially mapped crossover (PMX) (Goldberg and Lingle 1985) is the most popular recombination operator for the (multi-objective) travelling salesman problem (TSP). Some positions are uniform randomly chosen in the two parents and information is exchanged between them such that the generated children are valid permutations. Unlike path-guided mutation, PMX creates, or connects, cycles rather than respecting them. In Sect. 6, we show that operators that do not respect cycles function worse than the operators proposed here that do respect them.

4.2 The expected number of solutions compared with a Pareto improvement strategy

In this section we derive a formula to compute the expected number of solutions generated with the three Pareto neighbourhood exploration strategies. We show that the number of solutions generated with \(\mathcal{I}_{N}\) and \(\mathcal{I}_{F}\) are in expectation much smaller than with \(\mathcal{I}_{B}\). Let us consider a neighbourhood \(\mathcal{N}(s)\) generated with the 2-exchange operator that has:

-

z—the number of solutions that dominate s, \(z = |\{a \in \mathcal{N}(s) \mid \mathbf{f}(a) \prec \mathbf{f}(s)\}|\), where \(0 \leq z \leq C^{n}_{2}\),

-

v—the number of solutions that are incomparable with s, \(v = |\{a \in \mathcal{N}(s) \mid \mathbf{f}(s) \| \mathbf{f}(a)\}|\), where \(0 \leq v \leq C^{n}_{2}\);

-

\(C^{n}_{2} - z - v\)—the number of solutions that are dominated by s, \(C^{n}_{2} - z - v = |\{a \in \mathcal{N}(s) \mid \mathbf{f}(s) \prec \mathbf{f}(a)\}|\).

The best Pareto improvement strategy evaluates all the solutions in the neighbourhood. The number of solutions evaluated with \(\mathcal{I}_{B}\) is \(|\mathcal{I}_{B}| = C^{n}_{2}\). The probability of evaluating a certain number of solutions with \(\mathcal{I}_{F}\) follows a hyper-geometrical distribution describing the number of successes in a finite sequence where samples are drawn without replacement.

Lemma 1

Let \(\mathcal{I}_{F}\) and \(\mathcal{N}(s)\) be as before. Let z be the number of solutions in \(\mathcal{N}(s)\) that dominate s. The probability to find, after exploring exactly i solutions from \(\mathcal{N}(s)\), the first solution that dominates s is

Proof

\(\mathcal{I}_{F}\) stops at the first improvement in the sequence of solutions generated from \(\mathcal{N}(s)\). In total there are z improvements in this sequence of \(C^{n}_{2}\) solutions. Let successes be associated with improvements. The number of improvements, and thus success, before \(\mathcal{I}_{F}\) stops is 1. Using the definition of the hyper-geometrical distribution, we assume that there are z successes and \(C^{n}_{2} - z\) failures. The probability of finding the first improvement after i trials from the z total improvements is the hyper-geometrical value divided by i,  . The probability \(P_{\mathcal{I}_{F}(s)}(x=i)\) follows directly. □

. The probability \(P_{\mathcal{I}_{F}(s)}(x=i)\) follows directly. □

For the neutral Pareto improvement strategy, the probability to discover in i steps the first solution that dominates or is incomparable with s is

Proposition 7

Let \(\mathcal{I}_{B}\), \(\mathcal{I}_{F}\), \(\mathcal{I}_{N}\) be as before. Let \(\mathcal{N}\) be the neighbourhood generated with 2-exchange operator, and let z be the number of solutions that dominate s, v the number of solutions that are incomparable with s, and \(\pi_{\mathcal{N}(s)}\) a uniform randomly generated permutation of \(\mathcal{N}(s)\) as before.

The expected number of evaluated neighbours with \(\mathcal{I}_{B}\) is

where E{|g(⋅)|} is the expected value of the function g. The expected number of evaluated neighbours with \(\mathcal{I}_{F}\) is

The expected number of evaluated neighbours with \(\mathcal{I}_{N}\) is

Proof

The number of evaluated neighbours is always the same for \(\mathcal{I}_{B}\), \(C^{n}_{2}\). Thus, \(E\{|\mathcal{I}_{B}|\} = C^{n}_{2}\). To calculate the expected evaluated solutions for \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\), we use the definition of the expected value for the function g(⋅), E{g}=∑ x g(x)⋅P(x). Here, the probability P(x=(i)) is, as in Eq. 2, the probability that a Pareto improvement strategy generates i solutions before the first improvement on s is met. The sum is over all \(i = \{1,\ldots, C^{2}_{n} - z\}\), where \(C^{2}_{n} - z\) is the maximum number of solutions that can be evaluated before the first improvement on s is found. The function g(i)=i as usually. Then, the expected number of evaluated solutions for first Pareto improvement strategy is \(E\{|\mathcal{I}_{F}|\} = \sum^{C^{n}_{2}-z}_{i = 1} g(i) \cdot P_{\mathcal{I}_{F}(s)}(x = (i))\). To proof the expected value for \(\mathcal{I}_{N}\), we follow the same line of reasoning. □

Some properties of these expected values are given in the following proposition.

Proposition 8

If n increases, then each of the expected values \(E\{|\mathcal{I}_{N}|\}\), \(E\{|\mathcal{I}_{F}|\}\), \(E\{|\mathcal{I}_{B}|\}\) also increases. If z increases, then \(E\{|\mathcal{I}_{F}|\}\) and \(E\{|\mathcal{I}_{N}|\}\) decrease. If v increases, then \(E\{|\mathcal{I}_{N}|\}\) decreases.

Proof

The first property follows directly from the definition of expected value. If z increases, then the terms \(C^{n}_{2} - z\),  , \(C^{n}_{2} - (z+v)\) and

, \(C^{n}_{2} - (z+v)\) and  , from Eqs. 2 and 1, decrease. Thus, \(E\{|\mathcal{I}_{F}|\}\) and \(E\{|\mathcal{I}_{N}|\}\) decrease. Proving that when v increases, \(E\{|\mathcal{I}_{N}|\}\) decreases follows the same line of reasoning. □

, from Eqs. 2 and 1, decrease. Thus, \(E\{|\mathcal{I}_{F}|\}\) and \(E\{|\mathcal{I}_{N}|\}\) decrease. Proving that when v increases, \(E\{|\mathcal{I}_{N}|\}\) decreases follows the same line of reasoning. □

Figure 3 shows the expected number of evaluated solutions in a neighbourhood with (a) best Pareto improvement and (b) first Pareto improvement for several values of the number of facilities, n, and the number of dominating solutions, z. The expected number of evaluated solutions in a neighbourhood is always largest for best Pareto improvement, \(E\{|\mathcal{I}_{B}|\}\). Even if only one solution in the neighbourhood is dominating the current solution, z=1, \(E\{|\mathcal{I}_{F}|\}\) is half of \(E\{|\mathcal{I}_{B}|\}\). As stated in Proposition 8, the values of \(E\{|\mathcal{I}_{F}|\}\) decrease when z increases, but the expected values are increasing with n.

“Flat” multi-objective landscapes

An interesting, but logical, property is that \(\mathcal{I}_{N}\) and \(\mathcal{I}_{F}\) return less solutions in a flat landscape (i.e. landscape with many incomparable solutions) than \(\mathcal{I}_{B}\). Single objective spaces with many equal values are called flat and they are known as inappropriate for heuristics in general. Multi-objective spaces with many incomparable values are more common than flat single objective spaces. In general, all the subsets of elements \(\{x_{i},\ldots,x_{j}\} \in \mathcal{S}\) such that f 1(x i )⪯⋯⪯f 1(x j ) and f 2(x i )⪰⋯⪰f 2(x j ) form a Pareto set. For “flat” spaces, or spaces with a large “flat” region, when PLS uses \(\mathcal{I}_{B}\), the entire region is explored and added to the current Pareto set. That leads to computational and storage problems for PLS. In this case, a Pareto improvement strategy, like \(\mathcal{I}_{F}\) and \(\mathcal{I}_{N}\), that finds a single or few solutions from the space is less demanding in memory and run-time than \(\mathcal{I}_{B}\).

5 Experimental results

In this section, we experimentally compare six different (stochastic) Pareto local search algorithms. The first three are multi-start PLS algorithms (MPLS) each using a different Pareto neighbourhood exploration strategy: best improvement \(\mathcal{I}_{B}\), first improvement \(\mathcal{I}_{F}\), and neutral improvement \(\mathcal{I}_{N}\). The other three are SPLS algorithms that combine the above three neighbourhood exploration strategies with stochastic perturbation that consists of unbiased mutation and path-guided mutation, each applied with equal probability. All algorithms generate their neighbourhood \(\mathcal{N}\) with the 2-exchange operator. We denote the six algorithms as follows:

-

1.

bMPLS uses MPLS(\(\mathcal{I}_{B}\), \(\mathcal{T}\)), where \(\mathcal{T}\) means that PLS is restarted for M=100 times;

-

2.

fMPLS uses MPLS(\(\mathcal{I}_{F}\), \(\mathcal{T}^{\prime}\)), where \(\mathcal{T}^{\prime}\) means that PLS is restarted until the same number of swaps are generated as with bMPLS;

-

3.

nMPLS uses MPLS(\(\mathcal{I}_{N}\), \(\mathcal{T}^{\prime}\)), with \(\mathcal{T}^{\prime}\) as in fMPLS;

-

4.

bGPLS uses GPLS(\(\mathcal{I}_{B}\), 0.5, \(\mathcal{T}_{1}\), \(\mathcal{T}_{2}\)), where \(\mathcal{T}_{1}\) randomly restarts PLS N=10 times and \(\mathcal{T}_{2}\) means that PLS is restarted using genetic operators until the same number of swaps are generated as with bMPLS;

-

5.

fGPLS uses GPLS(\(\mathcal{I}_{F}\), 0.5, \(\mathcal{T}_{1}\), \(\mathcal{T}_{2}\)), with \(\mathcal{T}_{1}\) and \(\mathcal{T}_{2}\) as in bGPLS;

-

6.

nGPLS uses GPLS(\(\mathcal{I}_{N}\), 0.5, \(\mathcal{T}_{1}\), \(\mathcal{T}_{2}\)), with \(\mathcal{T}_{1}\) and \(\mathcal{T}_{2}\) as in bGPLS.

We generate restarting solutions with both mutation and path-guided mutation with an exchange rate q, uniform randomly selected between 3 and ⌊n/3⌋. These exchange rates are chosen based on the empirical observations from Drugan and Thierens (2010, 2011). Also the landscape analysis in the paper shows that these exchange rates are the most effective. For example, for n=25, we have q={3,…,8}, and for n=100, we have q={3,…,33}. Each algorithm independently runs 50 times.

The tested problems

Experimental tests are performed on a set of bi-objective QAP instances (Knowles and Corne 2003) with positive correlations ρ={0.25,0.50,0.75} and a large number of facilities n={25,50,75,100}. To facilitate comparisons, some of these problems are the unstructured bQAP instances in Paquete’s study (Paquete and Stützle 2006) (http://eden.dei.uc.pt/~paquete/qap/). Three bQAPs, with n=100 and ρ={0.25,0.5,0.75}, are generated with the software from Knowles and Corne (2003). In general, we denote the bQAP with bQAP(n, ρ), with n and ρ as before. For QAPs with a large number of facilities and high positive correlation, ρ=0.75, Paquete et al. reported poor performance of multi-restart PLS.

It should be noted that we have performed preliminary experiments with unstructured and structured with negative and zero correlation bQAPs. As Paquete et al. noticed, on negative unstructured bQAP instances more complicated LS-based heuristics and the multi-restart PLS have approximatively the same performance. The Pareto sets of these bQAPs are very large, for instance for ρ=0 and n=75 the Pareto optimal set typically found has about 2000 solutions. This can be explained by the fact that when there is no correlation, many solutions have a different ordering for the two objectives. Similarly when there is negative correlation (ρ=−0.75), the sizes of the Pareto optimal sets typically found are huge. In this case, the ordering of each solution is opposite in the two objectives. For such large Pareto optimal sets the search with PLS using \(\mathcal{I}_{B}\) therefore becomes too expensive. Knowles and Corne (2004) acknowledge that the structured QAPs are easier than the unstructured bQAPs for local search.

To summarize, we consider that the complexity is highest when there are a large number of attractors containing Pareto optimal solutions, compared to few attractors with a large number of Pareto optimal solutions. Section 5.1 looks at the performance of the six algorithms, Sect. 5.2 discusses their exploration properties, while Sect. 5.3 focuses on their dynamical behaviour.

5.1 Comparing the performance of stochastic PLS algorithms

Pareto sets are typically compared by the hypervolume unary indicator and/or attainment functions (Zitzler et al. 2003). The unary attainment function (Fonseca and Fleming 1996) (EAF) gives the probability of attaining each point (independently) in the objective space. Contour surfaces through certain probabilities can then be drawn. To compute the hypervolume unary indicator, we use the PISA package (Bleuler et al. 2003). For comparison purposes we first normalize the outputs of all six algorithms, and the corresponding reference set, if it exists, from http://eden.dei.uc.pt/~paquete/qap/, A ∗(n,ρ), where n={0.25,0.5,0.75} and ρ as before. For each instance, the reference set A ∗ is obtained with a two phase tabu algorithm that is run much longer than the MPLSs are run. There are algorithms that outperform this tabu algorithm in some cases (Lopez-Ibanez et al. 2004). This normalization script assigns to the smallest point(s) in an objective the value 1 and to the highest point(s) the value 2. All the other points are scaled to a value between 1 and 2 in both objectives. The normalized outputs are the inputs for the hypervolume calculation. The reference point is (2.1,2.1) which has the largest value in both objectives.

Table 2(A) shows the average and the standard deviation of the hypervolume unary indicators of the six SPLSs on twelve testing bQAPs. Table 2(B) compares the six SPLS algorithms: (i) the mean hypervolume for the three values of ρ and four numbers of facilities, n, (ii) the number of wins of fGPLS, bGPLS and fMPLS over the six SPLSs, and (iii) the rank of each SPLS algorithms based on the number of wins. For the same bQAP instance, we measure if the difference between two measurements of Table 2(A) is statistical significant using the Wilcoxon non-parametric two samples test, where p<0.05. Figure 4 presents five EAFs at 2 %, 25 %, 50 %, 75 % and 100% for two algorithms, fMPLS and fGPLS, on two bQAPs, bQAP(75, 0.5) and bQAP(75, 0.75). Overall, fGPLS is the best tested SPLS and nMPLS is the worst algorithm.

Attainment surfaces at 2 %, 25 %, 50 %, 75 % and 100 % (lines from bottom left to top right) of the normalized outputs. The two tested SPLSs are: (i) fMPLS on the left and (ii) fGPLS on the right. The two tested bQAPs are: (i) bQAP(75, 0.5) top and (ii) bQAP(75, 0.75) bottom. These EAFs are compared with the best known Pareto set for the two bQAPs: (i) A ∗(75, 0.5) top and (ii) A ∗(75, 0.75) bottom. The objective values found at 2 % EAFs correspond to the best Pareto set found over 50 runs and those found at 50 % EAFs are the median outcome

All GPLS algorithms -that are fGPLS, nGPLS, bGPLS—outperform all the multi-restart PLS—that are bMPLS, fMPLS and nMPLS—on the twelve tested bQAPs. In Fig. 4, the EAFs contours are closer to the lowest point (1,1), and thus better, for fGPLS than for fMPLS. Furthermore, for fGPLS the best EAF contour at 2 % is better than 1 % attainment surface for A ∗ from 100 runs on both bQAP instances. The objective values of 2 % EAFs are better for A ∗ than for fMPLS. Thus, the two perturbation operators of GPLS increase the efficiency of the SPLS algorithms.

Table 2(B) shows that the SPLS algorithms that use perturbation operators have the highest performance, rank 1, 2, and 3. The difference between GPLS and its corresponding MPLS with the same Pareto neighbourhood exploration strategy increases when the correlation ρ and the number of facilities n increase. For each SPLS, the hypervolume indicator decreases with the increase of ρ and n. The smallest, statistically the same, hypervolume values are registered with the three MPLSs on bQAP(100, 0.75). On the same bQAP instance, the smallest difference between the hypervolume of GPLS and MPLS is 0.41 when \(\mathcal{I}_{B}\) and \(\mathcal{I}_{N}\) are used. The largest hypervolume difference, 0.45, is between fGPLS and fMPLS. Note that, on bQAP(100, 0.75) the hypervolume indicators of GPLSs are approximatively two times larger than the hypervolume indicators of MPLSs. On the contrary, on bQAP(25, 0.25) all SPLS algorithms perform about the same, the exception is nMPLS, which has a lower performance. We conclude that the difference between the performance of GPLS and MPLS increases with increasing problem complexity—that is the increase in n and ρ.

In Fig. 4, on bQAP(75, 0.5), attainment surfaces, EAFs, are clustered together, whereas on bQAP(75, 0.75) they are spread. Attainment surfaces of fGPLS are more spread than the attainment functions of fMPLS. In Table 2, the clustered EAFs correspond to a small variance of hypervolume values whereas the spread EAFs correspond to large variances of the hypervolume indicators. On the other side, on bQAP(75, 0.5), in Fig. 4(top), each attainment surface is reasonably well spread over the two objectives. On bQAP(75, 0.75), in Fig. 4(bottom), the attainment surfaces are rather narrow and have a small amount of points. For large correlations, there are few incomparable solutions and a large difference between the worst and the best objective values. For small correlations, there is a large amount of solutions with different precedence in the two objectives and a smaller difference between the worst and the best objectives.

The SPLS algorithms that use \(\mathcal{I}_{F}\) have a better or equal performance than the corresponding SPLSs that use \(\mathcal{I}_{B}\). In Table 2(B), both the best genetic PLS, rank 1, and the best multi-restart PLS, rank 4, use \(\mathcal{I}_{F}\). The SPLSs that use \(\mathcal{I}_{F}\) or \(\mathcal{I}_{B}\) have a better or equal performance than the corresponding SPLSs that use \(\mathcal{I}_{N}\). For bQAPs with low correlation, ρ=0.25, there are no statistical differences between algorithms using \(\mathcal{I}_{B}\) and \(\mathcal{I}_{F}\). bQAPs with high correlation, ρ=0.75, give the largest difference between SPLSs that use \(\mathcal{I}_{F}\) and \(\mathcal{I}_{B}\). For bQAPs with ρ={0.25,0.5}, SPLSs that use \(\mathcal{I}_{N}\) are the algorithms with the worst performance. The bad performance of SPLSs using \(\mathcal{I}_{N}\) is due to the long walk on the Pareto set of incomparable solutions instead of searching for dominating solutions that greatly increase the hypervolume. For ρ=0.75, SPLSs that use \(\mathcal{I}_{N}\) have similar performance with SPLSs that use \(\mathcal{I}_{B}\). The small number of solutions in the Pareto sets of bQAPs with ρ=0.75, restricts the time \(\mathcal{I}_{N}\) spends in a neighbourhood of a solution.

The overall conclusion is that the SPLS algorithm with the highest performance is fGPLS using the first improvement Pareto neighbourhood exploration \(\mathcal{I}_{F}\) and both perturbation methods, mutation and path-guided mutation. The bQAP, where the difference between fGPLS and the other SPLSs is maximal, has the largest number of facilities and the largest correlation, bQAP(100, 0.75). In the next section, we give more insights on the SPLSs’ behaviours on the tested bQAPs.

5.2 Landscape characteristics of bQAPs explored with SPLSs

Table 3 shows properties of bQAPs when explored with SPLSs. Table 3(A) shows the number of times PLS is restarted in a SPLS run, #PLS, where all the SPLS algorithms have the same run-time, measured in number of swaps. Table 3(B) shows that the number of calls of the Pareto improvement strategy, \(\#\mathcal{I}\) is proportional with n and inverse proportional with ρ. In Table 3(C), the number of solutions in the output Pareto local optimal set of a PLS, |A|, is proportional with n and inverse proportional with ρ.

There is a natural relationship between Table 3(A), (B) and (C). In Sect. 4.2, we showed that the expected number of solutions generated with \(\mathcal{I}_{B}\) is much larger than the expected number of solutions generated with \(\mathcal{I}_{F}\), which, at its turn, is much larger than the solutions generated with \(\mathcal{I}_{N}\). The more often the Pareto improvement strategy \(\mathcal{I}\) is called, the less times SPLS has to restart PLS. Thus, \(\#\mathcal{I}\) and the number of solutions generated with one of the Pareto neighbourhood exploration strategies are inversely correlated with #PLS, while \(\#\mathcal{I}\) is correlated with |A|. Logically, the larger the size of a Pareto local optimal set, the larger the number of \(\mathcal{I}\) calls that are necessary to explore the neighbourhood. For all algorithms, the largest \(\#\mathcal{I}\) is obtained for bQAP(100, 0.25) which has the largest fronts |A|.

The least number of times a SPLS restarts PLS is 100 for bMPLS, closely followed by fMPLS. Similar with bMPLS, Paquete and Stützle (2006) restarts PLS 100 times. bGPLS and fGPLS restart up to 3–3.5 times more often. The largest number of times PLS is restarted, #PLS, is obtained with nGPLS, which can be up to 40 times larger than 100. When \(\mathcal{I}_{B}\) is used, #PLS is independent of n and ρ because the expected number of solutions visited with \(\mathcal{I}_{B}\) is also constant with n. #PLS of SPLSs using \(\mathcal{I}_{N}\) varies greatly with n and ρ because the expected number of solutions evaluated with \(\mathcal{I}_{N}\) varies greatly with n and the number of incomparable and dominating solutions in the neighbourhood. As expected, #PLS when \(\mathcal{I}_{F}\) is used has values between #PLS using \(\mathcal{I}_{B}\) and \(\mathcal{I}_{N}\).

Interesting, for \(\mathcal{I}_{N}\), the smaller ρ is, the larger #PLS is, whereas for \(\mathcal{I}_{F}\), the larger ρ is, the larger #PLS is. We corroborate this observation with the observation that bQAP with lower ρ have more incomparable solutions, thus larger Pareto local optimal sets. The lower the correlation ρ, the lower the number of solutions in the neighbourhood that are visited with the \(\mathcal{I}_{N}\) exploration strategy, and the more often the SPLS using \(\mathcal{I}_{N}\) has to restart. On the other side, the SPLS using the \(\mathcal{I}_{F}\) exploration strategy runs longer for low ρ because of the large number of incomparable solutions in the neighbourhood and the smaller number of dominating solutions that would stop \(\mathcal{I}_{F}\).

\(\#\mathcal{I}\) and |A| depend on the type of SPLS, GPLSs have smaller Pareto local optimal sets and smaller \(\#\mathcal{I}\) than MPLSs. The SPLSs using \(\mathcal{I}_{N}\) have a much smaller |A| than the SPLSs using \(\mathcal{I}_{B}\) and \(\mathcal{I}_{F}\). This is caused by the numerous incomparable solutions in Pareto local optimal sets of bQAPs with low correlation, ρ=0.25. Recall that \(\mathcal{I}_{N}\) stops after adding a single incomparable solution to A, whereas \(\mathcal{I}_{F}\) and \(\mathcal{I}_{B}\) add a set of solutions to A.

To conclude, GPLSs spend less time in reaching a Pareto local optimal set than MPLSs do. SPLSs using \(\mathcal{I}_{N}\) restart most often and they have the worst performance because \(\mathcal{I}_{N}\) prematurely stops the evaluation of incomparable solutions on large Pareto sets. SPLSs using \(\mathcal{I}_{F}\) have the best performance and are restarted more often than SPLSs using \(\mathcal{I}_{B}\).

5.3 Dynamical behaviour of SPLSs on bQAPs

In this section, we empirically analyse the dynamical behaviour of SPLSs using the relationship between Pareto local optimal sets generated at consecutive iterations of PLS. Let us consider any two consecutive iterations of PLS. The Pareto local optimal set of the first PLS is denoted as the parent Pareto local optimal set, or shortly the parent Pareto set. The output of the second PLS is denoted as the offspring Pareto local optimal set, or shortly the offspring Pareto set. The distance in the objective space, Δobj, between any parent and its offspring Pareto set is calculated as the average Euclidean distance between any two solutions, one from the parent and one from its child. The distance in the solution space, Δsol, between a parent and its offspring Pareto set is calculated as the average of the minimum number of swaps between any two solutions of the two Pareto local optimal sets. For this experiment, Pareto local optimal sets of a single random run of SPLSs are considered.

Figure 5(a) and (b) show, on bQAP(75, 0.75), the relationship between Δobj and Δsol. Figure 5(c) and (d) present, on bQAP(75, 0.75), the relationship between the distance in solution space, Δsol and the hypervolume of the offspring Pareto set. Note the resemblance between the figures for the same SPLS. For fMPLS, solutions in parent and offspring Pareto sets are at a large distance in solution space and they are uncorrelated with each other. For fGPLS, solutions in parent and offspring Pareto set are correlated. A small distance in solution space corresponds to a small distance in the objective space and a high hypervolume indicator for the offspring Pareto set. A large distance in solution space corresponds to a large distance in objective space and a lower hypervolume indicator for the offspring.

In Table 4, we calculate: (i) Δobj in Table 4(A), and (ii) Δsol in Table 4(B). As expected, Table 4 generalizes the results from Fig. 5. For MPLSs, the distance in solution space, Δsol, is proportional with n and is independent of ρ and \(\mathcal{I}\). For GPLSs, Δsol is proportional with n and inverse proportional with ρ. Δsol has the smallest values for GPLSs using \(\mathcal{I}_{B}\), and the largest values for GPLSs using \(\mathcal{I}_{N}\). Δsol for GPLSs is smaller than for MPLSs.

Δobj is proportional with n and inverse proportional with ρ. For GPLS, a large distance is objective space Δobj implies also a large distance in solution space Δsol. Δobj has the smallest values for SPLSs using \(\mathcal{I}_{B}\), and the largest values for SPLSs using \(\mathcal{I}_{N}\).

To conclude, genetic PLSs perform better than multi-restart PLSs by exploiting the structure of the bQAP search space, a structure caused by the positive correlation between the objectives. As shown above, the distance in solution space is correlated with the distance in objective space between consecutive Pareto local optimal sets and with the hypervolume indicator.

6 Random walks for landscape analysis of bQAPs using SPLSs

The previous section showed that the best performance was obtained with the SPLS using genetic perturbation operators and the first Pareto improvement neighbourhood exploration strategy. We presented some experimental results where GPLSs exploit the correlation in bQAPs. In this section, we deepen our analysis using random walks to investigate the effects of various mechanisms of stochastic PLSs in isolation. A random walk (RW) is a sequence of steps generated with a random process on the path between two “good” solutions in the search space using a genetic operator.

Section 6.1 shows the usefulness of the genetic operators that use cycles. The efficiency of deactivation for different correlation values of bQAPs is studied in Sect. 6.2. A novel landscape analysis with RW for multi-objective spaces is given in Sect. 6.3. The properties of different Pareto neighbourhood exploration strategies are investigated with RW in Sect. 6.4.

Methodology