Abstract

We define a semantics for conditionals in terms of stochastic graphs which gives a straightforward and simple method of evaluating the probabilities of conditionals. It seems to be a good and useful method in the cases already discussed in the literature, and it can easily be extended to cover more complex situations. In particular, it allows us to describe several possible interpretations of the conditional (the global and the local interpretation, and generalizations of them) and to formalize some intuitively valid but formally incorrect considerations concerning the probabilities of conditionals under these two interpretations. It also yields a powerful method of handling more complex issues (such as nested conditionals). The stochastic graph semantics provides a satisfactory answer to Lewis’s arguments against the PC = CP principle, and defends important intuitions which connect the notion of probability of a conditional with the (standard) notion of conditional probability. It also illustrates the general problem of finding formal explications of philosophically important notions and applying mathematical methods in analyzing philosophical issues.

The problem of estimating the probabilities of conditional sentences is interesting for at least two reasons:

First, it seems that in many cases it is not clear which estimation is “The True Estimation” (as different language users give different values).

Second, providing a coherent and robust method of evaluating these probabilities would give us a better understanding of many other problems connected with conditionals—e.g. the problem of counterfactuals.

In this article, we define a semantics for conditionals in terms of stochastic graphs, which gives a straightforward and simple method of evaluating the probabilities of conditionals.Footnote 1 It seems to be a good and useful method in the cases already discussed in the literature (cf. e.g. Kaufmann (2004)), and it can easily be extended to cover more complex situations. In particular, it allows us to describe several possible interpretations of the conditional (the global and the local interpretation, and generalizations of them), and yields a powerful method of handling more complex issues (such as nested conditionalsFootnote 2). It provides a satisfactory answer to Lewis’s arguments against the PC = CP principle, and defends important intuitions which connect the notion of probability of a conditional with the (standard) notion of conditional probability.

The article has the following structure:

In Part 1 we analyze a simple example, which shows how to define the stochastic graph semantics for conditionals.

In Part 2 we demonstrate how the notions introduced in Part 1 can be used to analyze more complex problems (we examine the case of two urns, which makes the situation non-trivial).

Parts 3 and 4 are devoted to the global and local interpretations of conditionals. They provide a formal framework suitable for their analysis (and we formalize the intuitive computations given by Kaufmann for the global and local interpretations).

In Part 5 we discuss Lewis’s arguments (concerning the PC = CP principle) and show how the problems indicated by Lewis can be resolved within our framework.

A short summary (Part 6) follows, in which we also discuss possible generalizations and indicate some results that we have already obtained (but which lie outside the scope of this article).

The Appendix presents some technical tools which are needed in the text.

A “byproduct” of our results is a contribution to the lively discussion concerning the explanatory (explicatory) role of mathematics. Notions from probability theory (and the theory of Markov processes) have been used in order to give a precise explication of some philosophically important notions. So apart from their interest for the problem of conditionals, our results exemplify the general strategy of finding formal counterparts (explications) of philosophical notions. The notion of probability is a classic example of an explication (the locus classicus is Carnap (1950), cf. e.g. Brun (2016) for a contemporary discussion). In a sense, we widen the scope of an explication and show how to give formal, precise explications of the probability of conditionals, taking different interpretations and variants into account.

1 A Simple One-Urn Example

Very often in discussions concerning conditionals (in particular, when their probabilities are under discussion), problems from natural language are analyzed. For example, suppose we try to estimate the probability that If Reagan worked for the KGB, I’ll never find out (Lewis 1986, 155). Typically our intuitions drive the analysis, but it is difficult (indeed, impossible) to give a concrete value of this probability, and it is often impossible even to determine whether different language users apply the same methods of estimating it. For these reasons, in this article (as in many other works on conditionals, e.g. Kaufmann (2004, 2005, 2009, 2015), Khoo (2016), van Fraassen (1976)) we will investigate examples where the probabilities of the conditional sentences in question can be given concrete values. This precise semantics can later be applied to everyday-life examples.

Let us start with a simple one-urn example. A ball is drawn from an urn containing 10 White, 8 Green and 2 Red balls.Footnote 3 What probability will a rational subject ascribe to the sentence A Green ball has been drawn? It is of course 8/20, which is exactly the probability computed within the classical probability model. It is also equal to the expected value of the game in which the reward is 1 for winning (i.e. drawing a Green ball) and 0 for losing. This is in accord with the intuition that the subjective probability of a sentence is the odds at which you would be willing to place a bet on the outcome, or the price you are willing to pay for the game. So, for the same reasons, the sentence A non-White and Green ball has been drawn has probability 8/20, the sentence A White ball has been drawn has probability 10/20, etc. These are the prices to pay for the respective (fair) games.

A rational subject estimates the probabilities (under the threat of a Dutch Book) so as to conform to the Kolmogorov probability axioms.Footnote 4 In other words, the subject works within the probability space Ξ = (Ω, Μ, P), where Ω = {W, G, R} are elementary events (i.e. drawing a ball of one of the colors), with probabilities respectively: P(W) = r = 10/20; P(G) = p = 8/20; P(R) = q = 2/20. Μ is the σ-field of all events, and P is the probability function defined on M.Footnote 5
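To make the betting reading concrete, here is a minimal Python sketch of the initial space Ξ, assuming the urn composition given above. The helper names `prob` and `fair_price` are hypothetical and serve only this illustration; exact rational arithmetic via `fractions.Fraction` keeps the values identical to the fractions used in the text.

```python
from fractions import Fraction

# Initial space Xi = (Omega, M, P) for the urn with 10 White, 8 Green, 2 Red balls.
P = {"W": Fraction(10, 20), "G": Fraction(8, 20), "R": Fraction(2, 20)}
omega = set(P)


def prob(event):
    """Probability of an event, i.e. of a set of elementary outcomes."""
    return sum((P[w] for w in event), Fraction(0))


def fair_price(event):
    """Expected payoff of a bet paying 1 if the event occurs and 0 otherwise."""
    return sum(P[w] * (1 if w in event else 0) for w in P)


green = {"G"}
non_white_and_green = (omega - {"W"}) & green

print(prob(green), prob(non_white_and_green), prob({"W"}))  # 2/5 2/5 1/2
print(fair_price(green))                                    # 2/5
```

The fair price of a bet that pays 1 on an event coincides with the probability of that event, which is the identification used throughout this section.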

Let us estimate the probability of the sentence If we draw a non-White ball, we draw a Green ball (or, simpler: If the ball is not White, it is Green). We symbolize it as ¬W → G.

As we have observed before, estimating this probability amounts to estimating the price for playing a certain (fair) game, which we might call “The Conditional Game”. The problem is therefore: how much are we willing to bet on the truth of the conditional ¬W → G? Before we bet, we have to define the game conditions in a precise manner, i.e. we have to stipulate in a clear way how the game should be played and in what circumstances we will consider it as settled. In particular, we have to determine the result for all possible draws (i.e. White, Green, Red—W, G, R).

There is no doubt that we win if we draw a Green ball, and we lose if we draw a Red ball. The problem is what we should do when we draw a White ball.

There are a priori four possibilities. After drawing a White ball:

-

(1)

We win the game.

-

(2)

We lose the game.

-

(3)

The game is undecided and stops.

-

(4)

The game is undecided, we put the ball into the urn and draw the ball again.

Choosing option (1) amounts to claiming that we do not really play The Conditional Game ¬W → G, but rather The Material Implication Game: we win whenever the ball is White or Green.Footnote 6 The probability of this event is clearly 18/20. If we choose (2), we lose after drawing a White or a Red ball, and the probability of this event is 12/20. But it is widely agreed that neither of these is the appropriate way of interpreting conditionals (plenty of discussion can be found in the literature, so there is no need to consider the matter further here).

Choosing (3) (the game stops as undecided) has a major drawback: it “annihilates” the problem of counterfactuals. So this is not an attractive solution.Footnote 7 The conclusion therefore is that the only reasonable definition of The Conditional Game ¬W → G is (4): drawing a White ball does not end the game but extends it: we put the ball back into the urn, and draw again. This choice is a natural one: our bet concerns the case when the ball is not White, and in order to resolve the situation, we have to check what happens if the ball is non-White! If we stipulate the rules of our game in this way, then the outcomes are not single draws, but rather sequences of draws, consisting of a (possibly empty) sequence of White balls (which simply have the effect that the game restarts), followed by a Green or Red ball (either of which settles the game).Footnote 8
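Rule (4) can be illustrated by a small Monte Carlo sketch in Python: one round of the game keeps drawing (with replacement) until a non-White ball appears. This is only an illustration under the stated urn composition, not part of the formal construction; the function names, the seed and the number of trials are arbitrary choices. For comparison, the sketch also records the exact winning probabilities under rules (1) and (2), namely 18/20 and 8/20 (the complement of the losing probability 12/20).

```python
import random

# One-urn composition from the text: 10 White, 8 Green, 2 Red.
COLORS = ["W", "G", "R"]
COUNTS = [10, 8, 2]


def play_conditional_game(rng):
    """One round of The Conditional Game for not-W -> G under rule (4).

    Green wins, Red loses; a White ball is put back and we simply draw again.
    """
    while True:
        ball = rng.choices(COLORS, weights=COUNTS)[0]
        if ball == "G":
            return True
        if ball == "R":
            return False


def estimate_win_probability(trials, seed=0):
    rng = random.Random(seed)
    wins = sum(play_conditional_game(rng) for _ in range(trials))
    return wins / trials


# Exact winning probabilities under the rejected rules, for comparison.
p_rule1 = (10 + 8) / 20  # rule (1): White counts as a win (material implication)
p_rule2 = 8 / 20         # rule (2): White counts as a loss, so only Green wins

print(estimate_win_probability(100_000))  # close to 0.8
print(p_rule1, p_rule2)                   # 0.9 0.4
```

With enough trials the estimate should settle near 0.8, i.e. 8/10, the value derived for this game in Section 1.1, and clearly away from the values produced by rules (1) and (2).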

This approach to the problem of computing the probability of a conditional means that we have to define formally a new probability space (different from the simple initial space Ξ = (Ω, Μ, P), but of course intimately connected with it) in which the probability of the outcome of the game can be computed. Observe that in our initial space Ξ = (Ω, Μ, P) there is no event corresponding to the conditional ¬W → G.Footnote 9

1.1 A Stochastic Graph Representation

We can represent the flow of the game in a very natural and convenient way by means of a stochastic (Markov) graph. We will present the general idea of a Markov graph using a simple example (for the reader’s convenience, technical details and references can be found in the Appendix).

Gambler’s ruin. Consider a coin-flipping game. At the beginning of the game, two players each have a number of pennies, say n1 and n2. After each flip of the coin, the loser transfers one penny to the winner and the game restarts on the same terms. The game ends when the first player (“The Gambler”) has either lost all his pennies or collected all the pennies. Assume that the probability of tossing heads is p, and of tossing tails is q (p + q = 1). The Gambler gets one penny if heads shows up.

The simplest non-trivial example is when n1 = 1 and n2 = 2 (when n1 = n2 = 1, the game stops after the first flip). At each moment, the state of the game is the number of The Gambler’s pennies, so the possible states are 0,1,2,3. The game starts in state 1 and the transitions between states occur as a consequence of one of two actions: heads or tails (H,T). The game finishes when the gambler enters either state 0 or 3—both 0 (loss) and 3 (victory) are absorbing states.

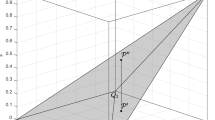

The dynamics of the game are represented in Graph 1 (Fig. 1).

We can naturally track the possible scenarios of the game (i.e. ending either with victory or with loss) as possible paths in the graph. For instance, the path HH (tossing heads twice) leads to victory and the corresponding transitions between the states are 1→2→3.

There are infinitely many paths (scenarios) that lead to victory: HTHH (1→2→1→2→3); HTHTHH (1→2→1→2→1→2→3); HT…HTHH (1→2→…→2→1→2→3), etc.

The possible paths for losing the game are: T (1→0); HTT (1→2→1→0); HT…HTT (1→2→…→2→1→0), etc.

So, the space of all possible paths that settle the game consists of the sequences \( \{ (HT)^{n}HH, (HT)^{n}T : n \in \mathbb{N} \} \), where \( (HT)^{n} \) denotes the sequence HT repeated n times (i.e. HT…HT). They will form the set of elementary events Ω* in a new probability space that describes all the scenarios. It is straightforward to compute their probabilities. If P(H) = p and P(T) = q, then

- \( P^{*}(HH) = pp = p^{2} \)
- \( P^{*}(HTHH) = pqpp = pqp^{2} \)
- \( P^{*}((HT)^{n}HH) = (pq)^{n}pp = (pq)^{n}p^{2} \)
- …
- \( P^{*}(T) = q \)
- \( P^{*}(HTT) = (pq)q \)
- \( P^{*}(HTHTT) = (pq)^{2}q \)
- …
- \( P^{*}((HT)^{n}T) = (pq)^{n}q \)

The probability of winning the game is

$$ \sum\limits_{n = 0}^{\infty } (pq)^{n} p^{2} = \frac{p^{2}}{1 - pq} $$

For p = 1/2 (a fair coin) it is 1/3.
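The path probabilities listed above can be checked numerically. The following Python sketch (the function name `path_sums` is hypothetical) sums the winning paths \( (HT)^{n}HH \) and the losing paths \( (HT)^{n}T \) up to a cutoff, using exact fractions, and compares the winning sum with the closed form \( p^{2}/(1 - pq) \) of the geometric series \( \sum_{n} (pq)^{n} p^{2} \).

```python
from fractions import Fraction


def path_sums(p, n_max):
    """Partial sums over the settling paths of Graph 1 (n1 = 1, n2 = 2).

    Winning paths (HT)^n HH have probability (pq)^n p^2; losing paths
    (HT)^n T have probability (pq)^n q. Sums run over n = 0, ..., n_max.
    """
    q = 1 - p
    win = sum((p * q) ** n * p ** 2 for n in range(n_max + 1))
    loss = sum((p * q) ** n * q for n in range(n_max + 1))
    return win, loss


p = Fraction(1, 2)
win, loss = path_sums(p, 40)
closed_form = p ** 2 / (1 - p * (1 - p))  # sum of the geometric series

print(float(win), float(closed_form))  # both approximately 0.3333
print(float(win + loss))               # approximately 1.0
```

Since 1 − pq = p² + q when q = 1 − p, the winning and losing series together sum to 1, so the game is settled with probability 1.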

The probability can be computed in a more direct way by examining the graph and writing down a simple system of equations. Let P(n) denote the probability of winning the game, which starts in state n. Obviously, P(0) = 0 (if we are in state 0, we have already lost), and P(3) = 1 (if we are in state 3, we have already won). What are the probabilities P(1), P(2)? We reason in an intuitive way: being in state n, we can either:

-

toss heads (with probability p)—then we are transferred to the state n + 1, and our chance of winning is P(n + 1);

-

toss tails (with probability q)—then we are transferred to the state n − 1, and our chance of winning is P(n − 1).

So, P(n) = pP(n + 1) + qP(n − 1). In our example this means that

-

P(0) = 0

-

P(1) = pP(2) + qP(0)

-

P(2) = pP(3) + qP(1)

-

P(3) = 1

The solution is P(1) = \( \frac{{p^{2} }}{1 - pq} \), in agreement with the probability of winning obtained above by summing over paths. As our example is very simple, the difference in computational effort (working within the probability space versus directly solving the equations obtained from the graph) is not really big; however, for even slightly more complex systems, the savings are enormous!Footnote 10
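To illustrate how the equations read off a graph can be solved mechanically, here is a sketch of a generic exact solver in Python. It assumes the graph is given as a dictionary of transition probabilities, treats a state as absorbing when its self-loop has probability 1, assumes absorption is certain, and solves the linear system \( x_s = \sum_t p_{s,t} x_t \) for the transient states by Gauss-Jordan elimination over the rationals. The names `hitting_probabilities` and `gamblers_ruin` are hypothetical.

```python
from fractions import Fraction


def hitting_probabilities(transitions, target):
    """Probability of eventually reaching `target` from each state.

    `transitions[s]` maps successor states to transition probabilities; a state
    is treated as absorbing when its self-loop has probability 1. For the
    transient states we solve x_s = sum_t p(s, t) x_t, with x = 1 at `target`
    and x = 0 at every other absorbing state (assumes absorption is certain).
    """
    absorbing = [s for s, row in transitions.items() if row.get(s) == 1]
    transient = [s for s in transitions if s not in absorbing]
    index = {s: i for i, s in enumerate(transient)}
    n = len(transient)
    # Augmented matrix of (I - Q) x = b, where Q holds transient-to-transient moves.
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]
    for s in transient:
        i = index[s]
        A[i][i] += 1
        for t, w in transitions[s].items():
            if t in index:
                A[i][index[t]] -= w
            elif t == target:
                A[i][n] += w  # one step straight into the target
    # Gauss-Jordan elimination in exact rational arithmetic.
    for col in range(n):
        pivot = next(k for k in range(col, n) if A[k][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        pivot_value = A[col][col]
        A[col] = [x / pivot_value for x in A[col]]
        for k in range(n):
            if k != col and A[k][col] != 0:
                factor = A[k][col]
                A[k] = [a - factor * b for a, b in zip(A[k], A[col])]
    probs = {s: A[index[s]][n] for s in transient}
    probs.update({s: Fraction(int(s == target)) for s in absorbing})
    return probs


def gamblers_ruin(p):
    """Graph 1: The Gambler's pennies 0..3; heads (p) adds one, tails (q) removes one."""
    q = 1 - p
    return {0: {0: Fraction(1)}, 1: {2: p, 0: q}, 2: {3: p, 1: q}, 3: {3: Fraction(1)}}


p = Fraction(1, 2)
probs = hitting_probabilities(gamblers_ruin(p), target=3)
print(probs[1], probs[2])  # 1/3 2/3
```

For a fair coin the solver returns P(1) = 1/3 and P(2) = 2/3, matching the formula above; the same function applies unchanged to any finite graph whose absorbing states are reached with probability 1.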

A crucial feature of the process is its memorylessness (Markov property): the coin does not remember the history of tosses. Moreover, the probabilities of the actions (H, T) are fixed throughout the whole history (homogeneity). Generally speaking, the future depends only on the present state of the process, not on the past. This applies to our ball-drawing game: whenever we restart the game, the probabilities remain the same, since history does not matter. If our Conditional Game concerns the conditional α (e.g. ¬W → G), then Gα denotes the corresponding graph. We might say that the appropriate class of graphs defines the semantics for the conditionals. In general, there are many ways of representing a given stochastic process by Markov graphs; usually we look for the simplest representation.

The dynamics of The Conditional Game ¬W → G are represented in Graph 2 (Fig. 2).

It consists of three states: START, WIN, LOSS. According to our definition, the game proceeds as follows:

We start (obviously) in START. Three moves are possible:

-

If we draw a White ball, we get back to START (and the game restarts);

-

If we draw a Green ball, we go to WIN;

-

If we draw a Red ball, we go to LOSS.

WIN and LOSE are absorbing states: if we get into them, we remain there and the game stops.

The transition probabilities of getting from one state to another are given by (we use the standard notation here)Footnote 11:

- \( p_{\rm START,START} = P(W) = r = 10/20 \)
- \( p_{\rm START,WIN} = P(G) = p = 8/20 \)
- \( p_{\rm START,LOSS} = P(R) = q = 2/20 \)
- \( p_{\rm LOSS,LOSS} = 1 \)
- \( p_{\rm WIN,WIN} = 1 \)

(All the other transition probabilities are equal to 0.)Footnote 12

This is the simplest (and standard) representation of this random process as a Markov graph. The labels for the states indicate the current state of the game (i.e. whether we have won, lost, or perhaps the game (re)starts), and the edges are labeled by the drawn balls (they correspond to the actions: a White/Green/Red ball was drawn from the urn).

A big advantage of such a representation is that it shows the possible scenarios of the game in a straightforward way: we start in START and simply travel through the graph until we get into one of the absorbing (deciding) states WIN, LOSS. We can also compute the probability of winning (losing) the game in a very simple way. The graph representation allows us to understand the dynamics of the game, and this will be particularly important in more complex situations, when we distinguish between the local and global interpretation of conditionals (cf. Kaufmann 2004).

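Let P(START) denote the (as yet unknown) probability of winning the game (i.e. the probability of reaching WIN starting from START). Since P(WIN) = 1 and P(LOSS) = 0, we can write down the respective equation for this simple graphFootnote 13:

$$ {\text{P(START)}} = r \cdot {\text{P(START)}} + p \cdot 1 + q \cdot 0 $$

We solve it, obtaining:

$$ {\text{P(START)}} = \frac{p}{1 - r} $$

So finally (as 1 − r = p + q):

Fact 1.1

$$ {\text{P(START)}} = \frac{p}{p + q} $$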

So the probability P(¬W → G) is \( \frac{p}{p + q} \). In our particular case, it is 8/10 (which is also the intuitive answer).
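Graph 2 can also be explored numerically. The Python sketch below (the names `graph2` and `iterate_win_probability` are hypothetical) stores the transition probabilities listed above, checks that every row is a probability distribution, and iterates the one-step equation P(START) ← r·P(START) + p starting from 0. After k iterations the value is the probability of having reached WIN within k draws, which increases towards the exact solution p/(1 − r) = p/(p + q).

```python
from fractions import Fraction

# Graph 2: transition probabilities for the one-urn game not-W -> G.
r, p, q = Fraction(10, 20), Fraction(8, 20), Fraction(2, 20)
graph2 = {
    "START": {"START": r, "WIN": p, "LOSS": q},
    "WIN": {"WIN": Fraction(1)},
    "LOSS": {"LOSS": Fraction(1)},
}

# Every row of a Markov graph must be a probability distribution.
assert all(sum(row.values()) == 1 for row in graph2.values())


def iterate_win_probability(steps):
    """Probability of having reached WIN from START within `steps` draws,
    obtained by iterating x <- r * x + p * 1 + q * 0 from x = 0."""
    x = Fraction(0)
    for _ in range(steps):
        x = r * x + p * 1 + q * 0
    return x


exact = p / (1 - r)  # fixed point of the iteration
print(exact)                               # 4/5
print(float(iterate_win_probability(30)))  # just below 0.8
```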

1.2 The Probability Space

There is a unique probability space corresponding to the graph Gα (where α = ¬W → G) that describes the possible “travel histories” (or paths) in this graph.Footnote 14 The possible histories are of two kinds: \( W^{n}G \) (we win the game) and \( W^{n}R \) (we lose the game), where \( n \in \mathbb{N} \). These paths will serve as elementary events in the constructed space.

The probability space we construct will therefore satisfy the following conditions:

-

i.

It ascribes a certain probability to every possible course of The Conditional Game ¬W → G which settles the game. In particular, it will be possible to compute the probability of our victory (and loss).

-

ii.

It takes into account the initial probabilities of drawing a White, Green or Red ball from the urn, i.e. its definition reflects the probabilities from the space Ξ = (Ω, Μ, P).

-

iii.

As the aim is to compute the probability of a particular conditional α (e.g. α = ¬W → G), it is important to make our space as convenient as possible for this particular task (in particular, it is a minimal space).Footnote 15

Let Ξ = (Ω, Μ, P) be a probability space, where Ω is the set of elementary events: Ω = {W, G, R} with probabilities respectively P(W) = r, P(G) = p, P(R) = q; Μ is the σ-field of all events, and P is the probability function defined on M.

Definition 1.2 The probability space Ξα corresponding to the conditional α = ¬W → G is the triple Ξα = (Ωα, Μα, Pα), where:

-

1.

Ωα is the set of elementary events corresponding to sequences of events from the space Ξ, which decide The Conditional Game α;

-

2.

Μα is the σ-field of all events;

-

3.

Pα is the probability function defined on Mα.

Formally:

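- \( \Omega_{\upalpha} = \{ W^{n}G, W^{n}R : n \in \mathbb{N} \} \).Footnote 16
- \( {\text{M}}_{\upalpha} = 2^{\Omega_{\upalpha}} \);
- \( {\text{P}}_{\upalpha} (W^{n}G) = r^{n}p \) (for \( n \in \mathbb{N} \));
- \( {\text{P}}_{\upalpha} (W^{n}R) = r^{n}q \) (for \( n \in \mathbb{N} \)).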
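A worked version of the normalization check: assuming r < 1 (i.e. the urn contains at least one non-White ball), the probabilities of the elementary events form two geometric series, and since 1 − r = p + q,

$$ {\text{P}}_{\upalpha} (\Omega_{\upalpha} ) = \sum\limits_{n = 0}^{\infty } r^{n} p + \sum\limits_{n = 0}^{\infty } r^{n} q = \frac{p + q}{1 - r} = \frac{p + q}{p + q} = 1 $$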

Observe that our probability space was defined with respect to a particular conditional α = ¬W → G, which is not expressible in the language corresponding to the initial probability space Ξ. The definition is formally correct; in particular, the condition Pα(Ωα) = 1 is fulfilled (which can be checked by an easy computationFootnote 17). Ωα is a countable set, so (due to σ-additivity) the probability measure Pα extends to Μα, i.e. Pα is defined for any set A ⊆ Ωα:

$$ {\text{P}}_{\upalpha} (A) = \sum\limits_{\omega \in A} {\text{P}}_{\upalpha} (\{ \omega \} ) $$

It should be noted that, from a formal point of view, speaking of the probability of the sentence ¬W → G is a kind of abuse of language, because de facto we compute the probability Pα of the event [¬W → G]α, which is the interpretation of the sentence ¬W → G in the probability space Ξα. But we will use this expression freely, as it does not lead to misunderstanding (and in the literature it is standard to speak of the probability of sentences). But we stress here that there is always an interpretation of the sentence defining the game in an appropriate probability space: this interpretation makes clear the sense in which we talk of the probability and how we defined the game (i.e. how we defined the conditions for our victory). Being meticulous in identifying the set of events in the concrete probability space, which is the interpretation of the sentence ¬W → G, will be of particular importance when the context (i.e. the rules of the game) changes (we will discuss it later). In such situations, the interpretation of the sentence ¬W → G will differ in different probability spaces, corresponding e.g. to different understandings of the conditional (local and global).

We want to compute the probability of the sentence ¬W → G. It has its counterpart WINα in the probability space Ξα = (Ωα, Μα, Pα), consisting of the possible scenarios leading to victory:

-

$$ {\text{WIN}}_{\upalpha} = [\neg W \to G]_{\upalpha} = \left\{ {{\text{W}}^{\rm n} {\text{G}}\!\!:{\text{n}} \in {\mathbb{N}}} \right\}, $$

-

by LOSSα we denote the event leading to our defeat in the game:

-

$$ {\text{LOSS}}_{\upalpha} = \, [\neg W \to R]_{\upalpha} = \{ {\text{W}}^{\rm n} {\text{R}}\!\!:{\text{n}} \in {\mathbb{N}}\} .$$

Fact 1.3

\( {\text{P}}_{\upalpha} ({\text{WIN}}_{\upalpha} ) \, = \mathop \sum \limits_{n = 0}^{\infty } pr^{n} = \frac{p}{1 - r} = \frac{p}{p + q} \)

Fact 1.4

\( {\text{P}}_{\upalpha} ({\text{LOSS}}_{\upalpha} ) \, = \mathop \sum \limits_{n = 0}^{\infty } qr^{n} = \frac{q}{1 - r} = \frac{q}{p + q} \)

Fact 1.5

\( {\text{P}}_{\upalpha} ({\text{WIN}}_{\upalpha} ) + {\text{P}}_{\upalpha} ({\text{LOSS}}_{\upalpha} ) \, = \frac{p}{p + q} + \frac{q}{p + q} = 1 \)

Obviously, the following two facts are true:

Fact 1.6

\( {\text{P}}_{\upalpha} ( {\text{WIN}}_{\upalpha} ) = {\text{P(G|}}\neg {\text{W}}) \)

Fact 1.7

\( {\text{P}}_{\upalpha} ( {\text{LOSS}}_{\upalpha} ) = {\text{P(R|}}\neg {\text{W}}) \)

where P is the probability measure in the initial probability space Ξ = (Ω, Μ, P), which was the basis for our construction of the space (of possible game scenarios) Ξα = (Ωα, Μα, Pα).
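Facts 1.3 to 1.7 can be cross-checked numerically. The Python sketch below (the names `p_alpha`, `win` and `loss` are hypothetical) truncates the countable space Ωα at n = 50, sums Pα over the winning and the losing paths, and compares the results with the conditional probabilities P(G|¬W) and P(R|¬W) computed directly in the initial space Ξ. Since G and R are both contained in ¬W, P(G ∩ ¬W) = P(G) and P(R ∩ ¬W) = P(R).

```python
from fractions import Fraction

# Initial space Xi from the text: P(W) = r, P(G) = p, P(R) = q.
r, p, q = Fraction(10, 20), Fraction(8, 20), Fraction(2, 20)


def p_alpha(path):
    """P_alpha of an elementary event W^n G or W^n R, written as a string like 'WWG'."""
    n = len(path) - 1
    assert path[:n] == "W" * n and path[-1] in "GR", "not an element of Omega_alpha"
    return r ** n * (p if path[-1] == "G" else q)


N = 50  # truncation: only paths with at most N White balls are summed
win = sum(p_alpha("W" * n + "G") for n in range(N + 1))   # approximates P_alpha(WIN_alpha)
loss = sum(p_alpha("W" * n + "R") for n in range(N + 1))  # approximates P_alpha(LOSS_alpha)

# Conditional probabilities computed directly in Xi.
p_not_w = p + q
p_g_given_not_w = p / p_not_w
p_r_given_not_w = q / p_not_w

print(float(win), float(p_g_given_not_w))   # both approximately 0.8
print(float(loss), float(p_r_given_not_w))  # both approximately 0.2
```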

To sum up: we have given a semantics for conditional sentences α (composed of atomic sentences of the initial probability space) which allows us to compute their probabilities in a straightforward way. This semantics is given by an appropriate graph Gα and the corresponding (unique) probability space Ξα. The relation between the probability Pα of the conditional and the conditional probability P in the space Ξ is given by Facts 1.6 and 1.7.Footnote 18

1.3 A Comparison with Kaufmann’s (Stalnaker Bernoulli) Space

A similar approach to the problem of computing the probability of conditionals is presented in Kaufmann (2004, 2005, 2009, 2015). It is based on the Stalnaker Bernoulli model (cf. also van Fraassen (1976); this terminology is used there and in Kaufmann’s papers). Here we indicate the most important similarities and differences between this model and our stochastic graphs semantics.

For the sake of this comparison, consider Kaufmann’s construction of the probability space for the conditional ¬W → G. Using a notation similar to ours, the appropriate space would have the form:

-

Ξ* = (Ω*, Μ*, P*), where

-

Ω* = the set of infinite sequences consisting of the events W, G, R (Kaufmann speaks of worlds being elements of these sequences);

-

Μ* is the σ-field defined over Ω*;

-

P* is a probability measure defined on M* in an appropriate way.

An important feature of the definition of P* is that any particular (infinite) sequence of balls (regarded as a sequence of events from the space Ξ) has null probability, but the appropriate “bunches” of such sequences have non-zero probabilities. For the sentence ¬W → G, such bunches are constructed from sequences starting with a finite (perhaps empty) sequence of White balls, followed by a Green or Red ball, after which an arbitrary infinite sequence of balls follows.

In this way, Kaufmann is able to give a method of computing the probability of victory in The Conditional Game, i.e. P*([¬W → G]*). It corresponds exactly to our intuitions: this computation amounts to estimating the fraction of sequences starting with \( W^{n}G \) (i.e. the winning sequences) among all the sequences settling the game (i.e. starting with \( W^{n}G \) or \( W^{n}R \)).Footnote 19 And of course, this value is exactly the same as the value computed here.

Specific features of Kaufmann’s construction become even more clearly visible when we try to give it a graph-semantical presentation. First, Kaufmann’s method leads to all possible graphs at once: every such graph corresponds to a possible conditional in the language in question. In our graph (and the corresponding probability space) we can only play the game ¬W → G (and, after an obvious relabeling of WIN/LOSS, also the “dual game” ¬W → R). Second, in our approach the game terminates after entering WIN or LOSS (so the sequences that are the elementary events of the probability space Ξα are finite), whereas under Kaufmann’s approach the game (formally speaking) lasts infinitely long. Both approaches lead to the same results. The main difference lies in a certain redundancy in Kaufmann’s model, which might be important when more complicated cases are discussed.

To sum up, the advantages of the stochastic-graph semantics are its intuitiveness (it is connected with the particular conditional in a natural way) and the easy method it gives for computing the probability of the conditional. It is formally correct (there is a corresponding formally defined probability space), and it reveals its full power in more complicated cases, in which Kaufmann’s semantics might face technical complications of a combinatorial character (the probability space might turn out to be very intricate). We suppose that in some cases these technical complications might even make the treatment impossible.Footnote 20

2 Two-Urn Examples: Global and Local Conditional Probabilities

Consider the following example (which is similar to the example analyzed in Kaufmann (2004) and Khoo (2016)):

We have two urns I, II. In urn I there are 2 White, 9 Green and 1 Red ball. In urn II there are 50 White, 1 Green and 9 Red balls. Assume that urn I can be chosen with probability 1/4 and urn II with probability 3/4.Footnote 21

We have chosen these particular values to simplify the comparison of our results with those given in Kaufmann (2004). But as our considerations have a general character, we use the following symbols: λ1, λ2 are the probabilities of choosing urn I and urn II; r1, p1, q1 and r2, p2, q2 are the probabilities of a White/Green/Red ball within urns I and II, respectively.

Again, we want to compute the probability of the sentence If not-White, then Green (i.e. ¬W → G). To do this, we consider The Conditional Game and try to estimate the chance of winning it. This requires a precise description of the rules of the game. An urn must first be chosen and then a ball from this urn has to be drawn. If we draw a Green ball (from any of the urns I, II), we win; if we draw a Red ball (for any urn), we lose. But what happens when we draw a White ball? A priori, the following decisions are possible:

-

(1)

We win the game.

-

(2)

We lose the game.

-

(3)

The game is undecided and stops.

-

(4)

The game is undecided. We put the ball back (into the urn it was drawn from) and restart the game: we choose an urn again and draw a ball from it.

-

(5)

The game is undecided. We put the ball back (into the urn it was drawn from), but remain within the chosen urn (i.e. we keep drawing balls from the same urn until the game is decided).

As in the one-urn model, we can agree that options (1), (2) and (3) are not attractive. This leaves (4) and (5): after drawing a White ball we can either restart the game from the beginning or remain within the chosen urn and continue the game there. A priori neither of these variants is superior, so we have to make a decision. We thus have two possible variants of The Conditional Game, and in particular two possible interpretations of the sentence in question, and the formal construction has to be adjusted to the chosen variant. These two variants correspond to the global and local interpretations of the conditional discussed in the literature (Kaufmann 2004; Khoo 2016). We adopt this terminology: global denotes the conditional defined (interpreted) by (4), and local the conditional defined (interpreted) by (5).Footnote 22
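The difference between rules (4) and (5) can be seen in a small Monte Carlo sketch in Python, assuming the urn compositions and the urn probabilities 1/4 and 3/4 stated above. The function names, seed and trial counts are arbitrary illustrative choices; the simulation only mimics the two rules of the game and is not part of the formal construction.

```python
import random

# Two-urn set-up from the text: urn I holds 2 W, 9 G, 1 R; urn II holds 50 W, 1 G, 9 R.
# Urn I is chosen with probability 1/4 and urn II with probability 3/4.
URN_WEIGHTS = [1, 3]
BALL_COUNTS = {"I": [2, 9, 1], "II": [50, 1, 9]}
COLORS = ["W", "G", "R"]


def choose_urn(rng):
    return rng.choices(["I", "II"], weights=URN_WEIGHTS)[0]


def play(rng, rule):
    """One round of the game for not-W -> G; returns True on a win.

    rule == "global": rule (4), a White ball sends us back to choosing an urn.
    rule == "local":  rule (5), a White ball is replaced and we stay in the same urn.
    """
    urn = choose_urn(rng)
    while True:
        ball = rng.choices(COLORS, weights=BALL_COUNTS[urn])[0]
        if ball != "W":
            return ball == "G"
        if rule == "global":
            urn = choose_urn(rng)


def estimate(rule, trials=50_000, seed=0):
    rng = random.Random(seed)
    return sum(play(rng, rule) for _ in range(trials)) / trials


print(estimate("global"), estimate("local"))
```

With these parameters the global estimate should lie near 3/5 = (λ1p1 + λ2p2)/(λ1(p1 + q1) + λ2(p2 + q2)), and the local one near 3/10 = λ1·p1/(p1 + q1) + λ2·p2/(p2 + q2), i.e. the one-urn value p/(p + q) applied inside each urn. The two readings of the same sentence thus differ by a factor of two.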

When discussing these two possible variants we will see the advantage of the graph semantics—it shows clearly the differences and allows us to compute the corresponding probabilities in a simple (and mathematically correct) way.

3 The Global Interpretation

Consider the game with a global interpretation of the conditional (i.e. given by condition (4)). Several graph representations of this game are possible; the one we give is the simplest.

3.1 A Stochastic Graph Representation

Graph 3 (Fig. 3) illustrates the mechanism of the Global Conditional Game.

The transition probabilities in this graph are given in the following way:

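- \( p_{\rm START,I} = \lambda_{1} = 1/4 \)
- \( p_{\rm START,II} = \lambda_{2} = 3/4 \)
- \( p_{\rm I,START} = r_{1} = 2/12 \)
- \( p_{\rm I,WIN} = p_{1} = 9/12 \)
- \( p_{\rm I,LOSS} = q_{1} = 1/12 \)
- \( p_{\rm II,START} = r_{2} = 50/60 \)
- \( p_{\rm II,WIN} = p_{2} = 1/60 \)
- \( p_{\rm II,LOSS} = q_{2} = 9/60 \)
- \( p_{\rm WIN,WIN} = 1 \)
- \( p_{\rm LOSS,LOSS} = 1 \).Footnote 23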

Let us (as before) denote by P(START) the probability of getting (finally) to the state WIN from the state START. In addition, let P(I), P(II) denote the probabilities of getting (finally) to the state WIN from the states I, II respectively (perhaps after several moves). Just as in the case of the one-urn model, we compute P(START) using the appropriate system of equations for the Markov graph:

-

P(START) = λ1P(I) + λ2P(II)

-

P(I) = p1 + r1P(START)

-

P(II) = p2 + r2P(START)

After solving this system, we obtain the formula:

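Fact 3.1

$$ {\text{P(START)}} = \frac{\lambda_{1} p_{1} + \lambda_{2} p_{2} }{\lambda_{1} (p_{1} + q_{1} ) + \lambda_{2} (p_{2} + q_{2} )} $$

Here we have made use of the facts that λ1 + λ2 = 1, 1 − r1 = p1 + q1 and 1 − r2 = p2 + q2.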
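Fact 3.1 can be checked against a direct solution of the system for Graph 3. The Python sketch below solves the linear system exactly with a small generic solver (the names `hitting_probabilities`, `graph3` and `fact_3_1` are hypothetical) and assumes the urn probabilities λ1 = 1/4 and λ2 = 3/4 from the description of the two urns.

```python
from fractions import Fraction


def hitting_probabilities(transitions, target):
    """Exact probability of eventually reaching `target` from each state.

    A state is absorbing when its self-loop has probability 1; for transient
    states we solve x_s = sum_t p(s, t) x_t by Gauss-Jordan elimination
    (assumes absorption is certain).
    """
    absorbing = [s for s, row in transitions.items() if row.get(s) == 1]
    transient = [s for s in transitions if s not in absorbing]
    index = {s: i for i, s in enumerate(transient)}
    n = len(transient)
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]  # augmented (I - Q | b)
    for s in transient:
        i = index[s]
        A[i][i] += 1
        for t, w in transitions[s].items():
            if t in index:
                A[i][index[t]] -= w
            elif t == target:
                A[i][n] += w
    for col in range(n):
        pivot = next(k for k in range(col, n) if A[k][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        pivot_value = A[col][col]
        A[col] = [x / pivot_value for x in A[col]]
        for k in range(n):
            if k != col and A[k][col] != 0:
                factor = A[k][col]
                A[k] = [a - factor * b for a, b in zip(A[k], A[col])]
    probs = {s: A[index[s]][n] for s in transient}
    probs.update({s: Fraction(int(s == target)) for s in absorbing})
    return probs


l1, l2 = Fraction(1, 4), Fraction(3, 4)
r1, p1, q1 = Fraction(2, 12), Fraction(9, 12), Fraction(1, 12)
r2, p2, q2 = Fraction(50, 60), Fraction(1, 60), Fraction(9, 60)
graph3 = {
    "START": {"I": l1, "II": l2},
    "I": {"START": r1, "WIN": p1, "LOSS": q1},
    "II": {"START": r2, "WIN": p2, "LOSS": q2},
    "WIN": {"WIN": Fraction(1)},
    "LOSS": {"LOSS": Fraction(1)},
}

probs = hitting_probabilities(graph3, "WIN")
fact_3_1 = (l1 * p1 + l2 * p2) / (l1 * (p1 + q1) + l2 * (p2 + q2))
print(probs["START"], fact_3_1)  # 3/5 3/5
```

Besides P(START) = 3/5, the solver also returns P(I) = 17/20 and P(II) = 31/60, which satisfy P(START) = λ1P(I) + λ2P(II).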

3.2 The Probability Space

We will construct a probability space \( \Xi_{\upalpha}^{\rm glob} = (\Omega_{\upalpha}^{\rm glob}, {\text{M}}_{\upalpha}^{\rm glob}, {\text{P}}_{\upalpha}^{\rm glob}) \) for the game defined in this way.

Let W1, W2, G1, G2, R1, R2 denote the drawing of a White, Green or Red ball from urn I or II, respectively. In the case of the global interpretation, the paths in the graph show us which sequences of results (draws) are possible. The set of sequences representing the possible histories of The Global Conditional Game is: {G1, G2, R1, R2, W1G1, W1G2, W2G1, W2G2, W1R1, W1R2, W2R1, W2R2, W1W1G1, W1W1G2, W1W2G1, W1W2G2, …}. Our victories are represented by sequences ending with G1 or G2 (our losses, respectively, by sequences ending with R1 or R2).

The important feature of this case is that after drawing (and replacing) the White ball from urn I, we can in the next step draw any ball from any urn—e.g. Green from urn II. This is because returning the White ball to any urn restarts the whole procedure—again we select an urn and draw a ball from it. This is indicated by the arrows in the graph leading from I and II to START (and again to any urn and any ball). In particular, this means that in the probability space we will construct, there will be “mixed” sequences: first, there are White balls (from any of the urns), then the decisive ball (from any urn).

Consider the probability space ΞU2 = (ΩU2, ΜU2, PU2), where ΩU2 is the set of elementary events ΩU2 = {W1, G1, R1, W2, G2, R2}, with probabilities λ1r1, λ1p1, λ1q1, λ2r2, λ2p2, λ2q2 respectively; ΜU2 is the σ-field of all events; PU2 is the probability measure on ΜU2. We assume that λ1 + λ2 = 1; p1 + q1 + r1 = 1; p2 + q2 + r2 = 1.Footnote 24

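Definition 3.2 The space \( \Xi_{\upalpha}^{\rm glob} \) for the globally understood conditional α = ¬W → G is the triple \( \Xi_{\upalpha}^{\rm glob} = (\Omega_{\upalpha}^{\rm glob}, {\text{M}}_{\upalpha}^{\rm glob}, {\text{P}}_{\upalpha}^{\rm glob}) \), where:

-

1.

\( \Omega_{\upalpha}^{\rm glob} \) is the set of elementary events: these are the sequences of events from the space ΞU2 which decide The Global Conditional Game α;

-

2.

\( {\text{M}}_{\upalpha}^{\rm glob} \) is the σ-field of all events;

-

3.

\( {\text{P}}_{\upalpha}^{\rm glob} \) is a probability measure on \( {\text{M}}_{\upalpha}^{\rm glob} \).

Formally:

\( \Omega_{\upalpha}^{\rm glob} = \{ (W_{1}/W_{2})^{n}(G_{1}/G_{2}), (W_{1}/W_{2})^{n}(R_{1}/R_{2}) : n \in \mathbb{N} \} \), where \( (W_{1}/W_{2})^{n} \) denotes any sequence of n White balls, each coming from either urn; similarly, \( (G_{1}/G_{2}) \) and \( (R_{1}/R_{2}) \) denote any Green (Red) ball.

So these sequences consist of a (possibly empty) series of White balls coming from any urn, followed by a Green or Red ball (from any urn).

\( {\text{M}}_{\upalpha}^{\rm glob} = \mathcal{P}(\Omega_{\upalpha}^{\rm glob}) \) (here \( \mathcal{P} \) denotes the power set).

The probability \( {\text{P}}_{\upalpha}^{\rm glob} \) is defined on elementary events in the following way: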

\( {\text{P}}_{\upalpha}^{\rm glob} (({\text{W}}_{1}/{\text{W}}_{2})^{\text{n}} {\text{G}}_{1}) \, = \, (\lambda_{1} r_{1} )^{\rm k} (\lambda_{2} r_{2} )^{{{\text{n}} - {\text{k}}}} (\lambda_{1} p_{1} ) \)—when k balls in the sequence \( ({\text{W}}_{1}/{\text{W}}_{\text{2}})^{\text{n}} {\text{G}}_{1} \) are of the kind W1, and n − k balls are of the kind W2, for \({\text{n,k}}\!\in\!{\mathbb{N}}\); 0 ≤ k ≤ n

\( {\text{P}}_{\upalpha}^{\rm glob} (({\text{W}}_{1} / {\text{W}}_{\text{2}})^{\text{n}} {\text{G}}_{2} ) \, = \, (\lambda_{1} r_{1} )^{\rm k} (\lambda_{2} r_{2} )^{{{\text{n}} - {\text{k}}}} (\lambda_{2} p_{2} ) \), when k balls in the sequence \( ({\text{W}}_{1} / {\text{W}}_{\text{2}})^{\text{n}} {\text{G}}_{2} \) are of the kind W1 and n − k balls are of the kind W2, for \({\text{n,k}}\!\in\!{\mathbb{N}}\); 0 ≤ k ≤ n

\( {\text{P}}_{\upalpha}^{\text{glob}}(({\text{W}}_{1}/ {\text{W}}_{\text{2}})^{\text{n}} {\text{R}}_{1})\, = \, (\lambda_{1} r_{1} )^{\text{k}} (\lambda_{2} r_{2} )^{{{\text{n}} - {\text{k}}}} (\lambda_{1} q_{1} ) \), when k balls in the sequence \(({\text{W}}_{1}/ {\text{W}}_{\text{2}})^{\text{n}} {\text{R}}_{1}\) are of the kind W1 and n − k balls are of the kind W2, for \({\text{n,k}}\!\in\!{\mathbb{N}}\); 0 ≤ k ≤ n

\( {\text{P}}_{\upalpha}^{\text{glob}} (({\text{W}}_{1}/ {\text{W}}_{\text{2}})^{\text{n}} {\text{R}}_{2} ) \, = \, (\lambda_{1} r_{1} )^{\text{k}} (\lambda_{2} r_{2} )^{{{\text{n}} - {\text{k}}}} (\lambda_{2} q_{2} ) \)—when k balls in the sequence \( ({\text{W}}_{1}/ {\text{W}}_{\text{2}})^{\text{n}} {\text{R}}_{2} \) are of the kind W1, and n–k balls are of the kind W2, for \({\text{n,k}}\!\in\!{\mathbb{N}}\); 0 ≤ k ≤ nFootnote 25

Summing over all \( \binom{n}{k} \) sequences that contain exactly k balls of the kind W1 and applying the binomial theorem, we find that the chance that our game ends at the (n + 1)-st move with G1 as the last ball is:

$$ \sum_{k=0}^{n} \binom{n}{k} (\lambda_{1} r_{1})^{k} (\lambda_{2} r_{2})^{n-k} (\lambda_{1} p_{1}) = (\lambda_{1} r_{1} + \lambda_{2} r_{2})^{n} (\lambda_{1} p_{1}) \quad ({\text{for n}}\!\in\!{\mathbb{N}}) $$

Similarly, the chance that the game lasts (n + 1) moves and the last ball is G2 is \( (\lambda_{1} r_{1} + \lambda_{2} r_{2})^{n} (\lambda_{2} p_{2}) \).

So the chance that the game ends at the (n + 1)-st move and we win is:

$$ (\lambda_{1} r_{1} + \lambda_{2} r_{2})^{n} (\lambda_{1} p_{1} + \lambda_{2} p_{2}). $$
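The binomial step, which collapses the 2^n individual White-ball sequences into the single factor (λ1r1 + λ2r2)^n, can be checked by brute force. The Python sketch below is only an illustration. It assumes the numerical values listed for the two urns in Sect. 4.1 (λ1 = 1/4, r1 = 2/12, p1 = 9/12, λ2 = 3/4, r2 = 50/60, p2 = 1/60). The helper names `brute_force` and `closed_form` are ours.

```python
from fractions import Fraction as F
from itertools import product

# Assumed parameters: the two-urn values listed in Sect. 4.1.
lam = {1: F(1, 4), 2: F(3, 4)}      # probability of selecting urn i
r = {1: F(2, 12), 2: F(50, 60)}     # probability of White in urn i
p = {1: F(9, 12), 2: F(1, 60)}      # probability of Green in urn i


def brute_force(n, last):
    """Sum the probabilities of every sequence (W1/W2)^n G_last, one term per sequence."""
    total = F(0)
    for urns in product((1, 2), repeat=n):
        prob = F(1)
        for i in urns:
            prob *= lam[i] * r[i]            # select urn i, then draw White from it
        total += prob * lam[last] * p[last]  # decisive Green ball from urn `last`
    return total


def closed_form(n, last):
    """(lambda1 r1 + lambda2 r2)^n (lambda_last p_last), via the binomial theorem."""
    return (lam[1] * r[1] + lam[2] * r[2]) ** n * lam[last] * p[last]


for n in range(8):
    for last in (1, 2):
        assert brute_force(n, last) == closed_form(n, last)
```

Exact fractions are used so that the comparison is an equality rather than a floating-point tolerance.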

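We want to compute the probability of the conditional ¬W → G. Its counterpart in the probability space \( \Xi_{\upalpha}^{\text{glob}} = (\Omega_{\upalpha}^{\text{glob}}, \mathrm{M}_{\upalpha}^{\text{glob}}, \mathrm{P}_{\upalpha}^{\text{glob}}) \) is the event \( \mathrm{WIN}_{\upalpha}^{\text{glob}} \). This set consists of all game scenarios leading to victory: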

$$ {\text{WIN}}_{\upalpha}^{\text{glob}} = \, [\neg W \to G]_{\upalpha}^{\text{glob}} = \left\{ {\left( {{\text{W}}_{1} / {\text{W}}_{2} } \right)^{\text{n}} \left( {{\text{G}}_{ 1} / {\text{G}}_{ 2} } \right)\!\!:{\text{ n}} \in {\mathbb{N}}} \right\}$$

The event “we lose” in this game is the set:

$$ {\text{LOSS}}_{\upalpha}^{\text{glob}} = \, [\neg W \to R]_{\upalpha}^{\text{glob}} = \left\{ {\left( {{\text{W}}_{1} / {\text{W}}_{2} } \right)^{\text{n}} \left( {{\text{R}}_{ 1} / {\text{R}}_{ 2} } \right)\!\!:{\text{ n}} \in {\mathbb{N}}} \right\}$$

We compute \( \mathrm{P}_{\upalpha}^{\text{glob}}(\mathrm{WIN}_{\upalpha}^{\text{glob}}) \) as the sum of a geometric series and obtain the (already known) result:

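Fact 3.3

\( {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to G) = \sum_{n \in \mathbb{N}} (\lambda_{1} r_{1} + \lambda_{2} r_{2})^{n} (\lambda_{1} p_{1} + \lambda_{2} p_{2}) = \frac{\lambda_{1} p_{1} + \lambda_{2} p_{2}}{\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2}} \)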

Similarly:

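Fact 3.4

\( {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to R) = \frac{\lambda_{1} q_{1} + \lambda_{2} q_{2}}{\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2}} \)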

Fact 3.5
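The geometric-series computation of the global chances of winning and losing can be sketched numerically. The sketch assumes the parameter values listed in Sect. 4.1. It uses the fact that one "round" (select an urn, draw a ball) ends in White, Green or Red with probabilities λ1r1 + λ2r2, λ1p1 + λ2p2 and λ1q1 + λ2q2. The variable names are ours.

```python
from fractions import Fraction as F

# Assumed parameters: the two-urn values listed in Sect. 4.1.
lam1, lam2 = F(1, 4), F(3, 4)
r1, p1, q1 = F(2, 12), F(9, 12), F(1, 12)
r2, p2, q2 = F(50, 60), F(1, 60), F(9, 60)

w = lam1 * r1 + lam2 * r2    # one round ends in White: the game restarts
g = lam1 * p1 + lam2 * p2    # one round ends in Green: we win
rd = lam1 * q1 + lam2 * q2   # one round ends in Red: we lose
assert w + g + rd == 1

# Geometric series: sum_{n>=0} w^n g = g / (1 - w) = g / (g + rd).
p_win = g / (1 - w)
p_loss = rd / (1 - w)

# Truncated partial sum of the same series, as a numerical cross-check.
partial_win = sum(w ** n * g for n in range(200))

print(p_win, p_loss, float(partial_win))
```

With these assumed values the closed forms give 3/5 for winning and 2/5 for losing.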

3.3 Kaufmann’s Equation (The Global Version)

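In our model, some intuitive considerations concerning the chances of winning can be formalized. Kaufmann (2004, 586) examines an equation which is intuitively clear but formally not quite precise:

$$ P(\neg W \to G) = P(\neg W \to G \mid \mathrm{I})\,P(\mathrm{I} \mid \neg W) + P(\neg W \to G \mid \mathrm{II})\,P(\mathrm{II} \mid \neg W) $$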

Imagine someone who has already observed that the drawn ball is not White (so the game is already decided). What is the chance that it is Green? The knowledge that the ball is not White gives us some indirect information about the urn it (probably) comes from. This information is contained in the expressions P(I∣¬W) and P(II∣¬W). Now, if the ball is from urn I, the chance of the conditional ¬W → G is P(¬W → G∣I); if it is from urn II, the chance is P(¬W → G∣II). This leads to the equation above.

The weakness of this equation is that it is not clear where (i.e. in what probability space) the probability function P is defined. In particular, there should be an event corresponding to the conditional ¬W → G, but Kaufmann does not define an appropriate probability space.Footnote 26 We can give a precise counterpart of this equation in the following way (using the observation that drawing urn I/II can be written as the event \(\left({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1}\right)\), resp. \(\left({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2}\right)\)):

-

Instead of \(P\left({\text{I}}| \neg {\text{W}}\right)\) we take: \({\text{P}}_{\text{U2}} (({\text{W}}_{1} \vee {\text{G}}_{1}\vee {\text{R}}_{1})|\,(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2}))\).

-

Instead of \(P\left({\text{II}}| \neg {\text{W}}\right)\) we take: \({\text{P}}_{\text{U2}} (({\text{W}}_{2} \vee {\text{G}}_{2}\vee {\text{R}}_{2})|\,(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2}))\).

-

Instead of \(P\left(\neg{\text{W}} \to {\text{G}}|{\text{I}}\right)\) we take: \({\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1}\vee {\text{R}}_{1}))\).

-

Instead of \(P\left(\neg{\text{W}} \to {\text{G}}|{\text{II}}\right)\) we take: \({\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2}\vee {\text{R}}_{2}))\).Footnote 27

The conditional probabilities in the (simple) space ΞU2 are given by:

-

$$ {\text{P}}_{\text{U2}} (({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \, = \frac{{\lambda_{1} \left( {p_{1} + q_{1} } \right)}}{{\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} }} = 5/8 $$

-

$$ {\text{P}}_{\text{U2}} (({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \, = \frac{{\lambda_{2} \left( {p_{2} + q_{2} } \right)}}{{\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} }} = 3 /8 $$

-

$$ {\text{P}}_{\text{U2}} ({\text{G}}_{1} |({\text{G}}_{1} \vee {\text{R}}_{1} )) \, = \frac{{p_{1} }}{{(p_{1} + q_{1} )}} = \, 9 /10 $$

-

$$ {\text{P}}_{\text{U2}} ({\text{G}}_{2} |({\text{G}}_{2} \vee {\text{R}}_{2} )) \, = \frac{{p_{2} }}{{(p_{2} + q_{2} )}} = 1 /10 $$

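So, finally:

$$ {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to G) = \frac{9}{10} \cdot \frac{5}{8} + \frac{1}{10} \cdot \frac{3}{8} = \frac{45}{80} + \frac{3}{80} = \frac{3}{5}, $$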

which is formally correct, identical with the results known from the literature (Kaufmann 2004; Khoo 2016) and already established by Fact 3.3. We have here just made the intuitive considerations mathematically precise.
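The precise version of Kaufmann's equation can also be evaluated mechanically. The sketch below assumes the parameter values listed in Sect. 4.1, and its variable names are ours. It computes the posterior urn weights PU2(urn i∣¬W1 ∧ ¬W2) and the within-urn conditionals PU2(Gi∣Gi ∨ Ri). It then checks that their weighted sum coincides with the closed form of the global probability.

```python
from fractions import Fraction as F

# Assumed parameters: the two-urn values listed in Sect. 4.1.
lam = {1: F(1, 4), 2: F(3, 4)}
p = {1: F(9, 12), 2: F(1, 60)}   # Green
q = {1: F(1, 12), 2: F(9, 60)}   # Red

# P_U2(¬W1 ∧ ¬W2): some non-White ball was drawn.
non_white = sum(lam[i] * (p[i] + q[i]) for i in lam)

# P_U2(urn i | ¬W1 ∧ ¬W2): where the non-White ball probably came from.
urn_given_non_white = {i: lam[i] * (p[i] + q[i]) / non_white for i in lam}

# P_U2(G_i | G_i ∨ R_i): the conditional evaluated inside urn i alone.
green_in_urn = {i: p[i] / (p[i] + q[i]) for i in lam}

kaufmann = sum(green_in_urn[i] * urn_given_non_white[i] for i in lam)
closed_form = sum(lam[i] * p[i] for i in lam) / non_white
assert kaufmann == closed_form
```

The intermediate values reproduce 5/8, 3/8, 9/10 and 1/10 from the text, and the result is 3/5.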

Two facts are of particular importance for the ongoing analyses:

Fact 3.6

\( \begin{aligned} {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to G) \, =\, & {\text{P}}_{\text{U2}} ({\text{G}}_{1} |({\text{G}}_{1} \vee {\text{R}}_{1} )){\text{P}}_{\text{U2}} (({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \\ & + {\text{P}}_{\text{U2}} ({\text{G}}_{2} |({\text{G}}_{2} \vee {\text{R}}_{2} )){\text{P}}_{\text{U2}} (({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \\ =\, & \frac{{p_{1} }}{{(p_{1} + q_{1} )}}\frac{{\lambda_{1} \left( {p_{1} + q_{1} } \right)}}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} + \frac{{p_{2} }}{{(p_{2} + q_{2} )}}\frac{{\lambda_{2} \left( {p_{2} + q_{2} } \right)}}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} \\ =\, & \frac{{\lambda_{1} p_{1} + \lambda_{2} p_{2} }}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} \\ \end{aligned} \)

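Fact 3.7

\( \begin{aligned} {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to R) \, =\, & {\text{P}}_{\text{U2}} ({\text{R}}_{1} |({\text{G}}_{1} \vee {\text{R}}_{1} )){\text{P}}_{\text{U2}} (({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \\ & + {\text{P}}_{\text{U2}} ({\text{R}}_{2} |({\text{G}}_{2} \vee {\text{R}}_{2} )){\text{P}}_{\text{U2}} (({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2} )|(\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2} )) \\ =\, & \frac{{\lambda_{1} q_{1} + \lambda_{2} q_{2} }}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} \\ \end{aligned} \)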

The structure of these formulas is very similar to the structure of the formulas for the probabilities of WINα and LOSSα in The (One-Urn) Conditional Game ¬W → G (cf. Facts 1.3 and 1.4). They correspond precisely to the situation where we have just one urn (call it Unew), where the probabilities of drawing a White, Green, or Red ball are respectively:

-

Pnew(W) = λ1r1 + λ2r2

-

Pnew(G) = λ1p1 + λ2p2

-

Pnew(R) = λ1q1 + λ2q2.

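In this urn, exactly as in The (One-Urn) Conditional Game:

\( {\text{P}}_{\text{new}} ({\text{G}}|\neg {\text{W}}) = \frac{{\text{P}}_{\text{new}}({\text{G}})}{{\text{P}}_{\text{new}}({\text{G}}) + {\text{P}}_{\text{new}}({\text{R}})} = \frac{\lambda_{1} p_{1} + \lambda_{2} p_{2}}{\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2}} \)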

Consider the probability space Ξnew = (Ωnew, Μnew, Pnew), where Ωnew is the set of elementary events: W, G, R with probabilities respectively λ1r1 + λ2r2, λ1p1 + λ2p2, λ1q1 + λ2q2; Μnew is the σ-field of all events and Pnew is the probability measure defined on Mnew.

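The following fact holds:

Fact 3.8

\( {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to G) = {\text{P}}_{\text{new}} ({\text{G}}|\neg {\text{W}}) \)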

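It is important to observe that the probability space Ξnew has a universal character: it allows us to compute the probabilities of other conditionals as conditional probabilities. For example, the probability of β = ¬G → W can be computed within Ξnew simply as Pnew(W∣¬G). Of course, the appropriate probability space \( \Xi_{\upbeta}^{\text{glob}} = (\Omega_{\upbeta}^{\text{glob}}, \mathrm{M}_{\upbeta}^{\text{glob}}, \mathrm{P}_{\upbeta}^{\text{glob}}) \) will be different from \( \Xi_{\upalpha}^{\text{glob}} = (\Omega_{\upalpha}^{\text{glob}}, \mathrm{M}_{\upalpha}^{\text{glob}}, \mathrm{P}_{\upalpha}^{\text{glob}}) \), as it will consist of sequences of the form \( ({\text{G}}_{1}/{\text{G}}_{2})^{n} ({\text{W}}_{1}/{\text{W}}_{2}) \) and \( ({\text{G}}_{1}/{\text{G}}_{2})^{n} ({\text{R}}_{1}/{\text{R}}_{2}) \). Still, \( \mathrm{P}_{\upbeta}^{\text{glob}}(\neg G \to W) = \mathrm{P}_{\text{new}}(W \mid \neg G) \). We summarize these observations as: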

Fact 3.9

For any probability space Ξ = (Ω, Μ, P), where Ω = {W,G,R}, there is another probability space Ξnew = (Ωnew, Μnew, Pnew), where Ωnew = {W, G, R} is the set of elementary events with probabilities λ1r1 + λ2r2, λ1p1 + λ2p2, λ1q1 + λ2q2 respectively; Μnew is the σ-field of all events, and Pnew is the probability measure defined on Mnew, such that:

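For any globally interpreted conditional ¬X → Y, where X, Y ∈ {W, G, R} and X ≠ Y, and the space \( \Xi_{\neg X \to Y}^{\text{glob}} = (\Omega_{\neg X \to Y}^{\text{glob}}, \mathrm{M}_{\neg X \to Y}^{\text{glob}}, \mathrm{P}_{\neg X \to Y}^{\text{glob}}) \):

$$ \mathrm{P}_{\neg X \to Y}^{\text{glob}} (\neg X \to Y) = \mathrm{P}_{\text{new}} (Y \mid \neg X) $$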
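Fact 3.9 can be spot-checked numerically for all six conditionals ¬X → Y. The sketch below makes three assumptions: it uses the parameter values of Sect. 4.1, it reads each global game as "select an urn, draw; on X restart, on Y win, otherwise lose", and its helper `global_game` is our own. The helper solves each game by iterating its one-state Markov equation from 0. The result is compared with Pnew(Y∣¬X) computed in the single mixed urn.

```python
from fractions import Fraction as F
from itertools import permutations

# Assumed parameters: the two-urn values listed in Sect. 4.1.
lam = {1: F(1, 4), 2: F(3, 4)}
urn = {
    1: {"W": F(2, 12), "G": F(9, 12), "R": F(1, 12)},
    2: {"W": F(50, 60), "G": F(1, 60), "R": F(9, 60)},
}

# The single urn U_new: mix the colour probabilities with weights lambda_i.
p_new = {c: sum(lam[i] * urn[i][c] for i in lam) for c in "WGR"}


def global_game(x, y, rounds=500):
    """Chance of winning the global game for ¬x -> y: iterate
    v <- sum_i lambda_i * (P_i(x) * v + P_i(y)), starting from v = 0."""
    v = 0.0
    for _ in range(rounds):
        v = sum(float(lam[i]) * (float(urn[i][x]) * v + float(urn[i][y])) for i in lam)
    return v


for x, y in permutations("WGR", 2):
    p_new_y_given_not_x = p_new[y] / (1 - p_new[x])   # P_new(y | ¬x)
    assert abs(global_game(x, y) - float(p_new_y_given_not_x)) < 1e-9, (x, y)
```

The iteration converges because the restart probability Pnew(X) is below 1 for every colour. It is a contraction with factor Pnew(X).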

Let us once again take a look at the formula in Fact 3.6. The terms \( \frac{{p_{1} }}{{(p_{1} + q_{1} )}} \) and \( \frac{{p_{2} }}{{(p_{2} + q_{2} )}} \) are just the probabilities of the conditional ¬W → G computed separately in the two urns I and II (or: the probabilities of winning The Conditional Game within these single urns). The more complicated terms \( \frac{{\lambda_{1} \left( {p_{1} + q_{1} } \right)}}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} \) and \( \frac{{\lambda_{2} \left( {p_{2} + q_{2} } \right)}}{{(\lambda_{1} p_{1} + \lambda_{2} p_{2} + \lambda_{1} q_{1} + \lambda_{2} q_{2} )}} \) are conditional probabilities of being in a certain urn, provided we know that a non-White ball has been drawn. So we have a mathematically precise expression which corresponds exactly to Kaufmann’s intuitive formulas. Remember that The Global Conditional Game in two urns can be simulated with one urn, where the probabilities of White, Green and Red balls have been fixed in an appropriate way. This urn is universal (or: stable) in the sense that it can also be used for computing the probabilities of all globally interpreted conditionals formulated in the language with atomic sentences W,G,R.Footnote 28

4 Local Interpretation

4.1 The Stochastic Graph Representation

If we choose the local interpretation, i.e. option (5), we remain in the urn drawn in the first move until the game is decided. This means that the scenarios leading to a decision differ from those in the global case. As in the global case, we can give a simple graph representation of the game.

Graph 4 (Fig. 4) corresponds to graph 3. It has the same states (START, I, II, WIN, LOSS), but its structure (i.e. its set of edges) is different.

The difference with respect to graph 3 (Fig. 3, illustrating the global case) is that it is no longer possible to get back to START from the states I and II. This is because in the local interpretation, we remain within an urn once we get into it. In particular, if we are already in one of the urns i (i = I, II), there are three possible outcomes (Fig. 4):

-

After drawing a White ball we are again in the same state i (with probability ri)

-

After drawing a Green ball we go to WIN (with probability pi)

-

After drawing a Red ball we go to LOSS (with probability qi)

WIN and LOSS are absorbing states: once we get into either of them, we remain there and the game stops.

The transition probabilities in the graph are given in the following way:

-

pSTART, I = λ1 = 1/4

-

pSTART, II = λ2 = 3/4

-

pI, I = r1 = 2/12

-

pI, WIN = p1 = 9/12

-

pI, LOSS = q1 = 1/12

-

pII, II = r2 = 50/60

-

pII, WIN = p2 = 1/60

-

pII, LOSS = q2 = 9/60

-

pWIN, WIN = 1

-

pLOSS, LOSS = 1Footnote 29

This Markov graph exhibits the dynamics of the game and allows for a very simple computation of the probability of victory. The corresponding system of equations is:

-

P(START) = λ1P(I) + λ2P(II)

-

P(I) = r1P(I) + p1

-

P(II) = r2P(II) + p2

After solving it, we obtain:

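Fact 4.1

\( P(\text{START}) = \lambda_{1} \frac{p_{1}}{1 - r_{1}} + \lambda_{2} \frac{p_{2}}{1 - r_{2}} = \lambda_{1} \frac{p_{1}}{p_{1} + q_{1}} + \lambda_{2} \frac{p_{2}}{p_{2} + q_{2}} = \frac{3}{10} \)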
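The system of equations for the local graph is small enough to solve by hand. The sketch below is our own, and it assumes the transition probabilities listed above. It solves the system exactly with fractions and, as an independent check, by value iteration: the equations are iterated from 0 until they settle.

```python
from fractions import Fraction as F

# Assumed parameters: the transition probabilities listed in Sect. 4.1.
lam1, lam2 = F(1, 4), F(3, 4)
r1, p1, q1 = F(2, 12), F(9, 12), F(1, 12)
r2, p2, q2 = F(50, 60), F(1, 60), F(9, 60)

# Exact solution of P(i) = r_i P(i) + p_i, i.e. P(i) = p_i / (1 - r_i).
P_I = p1 / (1 - r1)
P_II = p2 / (1 - r2)
P_START = lam1 * P_I + lam2 * P_II

# Cross-check: iterate the same equations from 0 (value iteration on the graph).
v_I = v_II = 0.0
for _ in range(1000):
    v_I = float(r1) * v_I + float(p1)
    v_II = float(r2) * v_II + float(p2)
v_START = float(lam1) * v_I + float(lam2) * v_II

print(P_I, P_II, P_START, v_START)
```

The exact values are P(I) = 9/10, P(II) = 1/10 and P(START) = 3/10. The iterated value agrees to within floating-point rounding.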

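The paths in the graph show us which sequences of draws are possible. Of course, in the local situation the sequences are homogeneous: only one index appears in them, since a Green/Red ball from urn I (II) can be preceded only by White balls from the same urn I (II). So the sequences form the following set:

\( \{ {\text{W}}_{1}^{n} {\text{G}}_{1},\ {\text{W}}_{1}^{n} {\text{R}}_{1},\ {\text{W}}_{2}^{n} {\text{G}}_{2},\ {\text{W}}_{2}^{n} {\text{R}}_{2} : n \in \mathbb{N} \} \)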

4.2 The Probability Space

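We construct the probability space \( \Xi_{\upalpha}^{\text{loc}} = (\Omega_{\upalpha}^{\text{loc}}, \mathrm{M}_{\upalpha}^{\text{loc}}, \mathrm{P}_{\upalpha}^{\text{loc}}) \) for The Local Conditional Game.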

Consider again the probability space ΞU2 = (ΩU2, ΜU2, PU2), where ΩU2 is the set of elementary events ΩU2 = {W1, W2, G1, G2, R1, R2} with probabilities λ1r1, λ2r2, λ1p1, λ2p2, λ1q1, λ2q2. ΜU2 is the σ-field of all events, and PU2 is the probability measure on MU2 (cf. Definition 3.2.)

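Definition 4.2 The space \( \Xi_{\upalpha}^{\text{loc}} \) for the locally understood conditional α = ¬W → G is the triple \( \Xi_{\upalpha}^{\text{loc}} = (\Omega_{\upalpha}^{\text{loc}}, \mathrm{M}_{\upalpha}^{\text{loc}}, \mathrm{P}_{\upalpha}^{\text{loc}}) \), where:

1. \( \Omega_{\upalpha}^{\text{loc}} \) is the set of elementary events. These are the sequences of events from the space ΩU2 which decide The Local Conditional Game α;

2. \( \mathrm{M}_{\upalpha}^{\text{loc}} \) is the σ-field of all events;

3. \( \mathrm{P}_{\upalpha}^{\text{loc}} \) is a probability measure on \( \mathrm{M}_{\upalpha}^{\text{loc}} \).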

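Formally: \( \Omega_{\upalpha}^{\text{loc}} = \{ {\text{W}}_{1}^{n} {\text{G}}_{1},\ {\text{W}}_{1}^{n} {\text{R}}_{1},\ {\text{W}}_{2}^{n} {\text{G}}_{2},\ {\text{W}}_{2}^{n} {\text{R}}_{2} : n \in \mathbb{N} \} \) and \( \mathrm{M}_{\upalpha}^{\text{loc}} = \mathcal{P}(\Omega_{\upalpha}^{\text{loc}}) \).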

The probability measure P locα is defined on the elementary events in the following way:

-

$$ {\text{P}}_{\upalpha}^{\rm loc} \left( {{\text{W}}_{ 1}^{\rm n} {\text{G}}_{1} } \right) \, = \lambda_{1} \left( {r_{1} } \right)^{\rm n} p_{1} ,({\text{for n}}\!\in\!{\mathbb{N}}); $$

-

$$ {\text{P}}_{\upalpha}^{\text{loc}} \left( {{\text{W}}_{ 1}^{{\rm n}} {\text{R}}_{1} } \right) \, = \lambda_{1} \left( {r_{1} } \right)^{\text{n}} q_{1} ,({\text{for n}}\!\in\!{\mathbb{N}}); $$

-

$$ {\text{P}}_{\upalpha}^{\text{loc}} \left( {{\text{W}}_{ 2}^{{\rm n}} {\text{G}}_{2} } \right) \, = \lambda_{2} \left( {r_{2} } \right)^{\text{n}} p_{2} ,({\text{for n}}\!\in\!{\mathbb{N}}); $$

-

$$ {\text{P}}_{\upalpha}^{\text{loc}} \left( {{\text{W}}_{ 2}^{{\rm n}} {\text{R}}_{2} } \right) \, = \lambda_{2} \left( {r_{2} } \right)^{\text{n}} q_{2} ,({\text{for n}}\!\in\!{\mathbb{N}}). $$

So there are four types of elementary events in this space, corresponding to possible scenarios of The Local Conditional Game:

-

Urn I has been drawn, and then n balls W1, finally G1;

-

Urn I has been drawn, and then n balls W1, finally R1;

-

Urn II has been drawn, and then n balls W2, finally G2;

-

Urn II has been drawn, and then n balls W2, finally R2;

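The counterpart of ¬W → G in this probability space (i.e. the set of scenarios leading to victory) is:

$$ {\text{WIN}}_{\upalpha}^{\text{loc}} = [\neg W \to G]_{\upalpha}^{\text{loc}} = \{ {\text{W}}_{1}^{n} {\text{G}}_{1},\ {\text{W}}_{2}^{n} {\text{G}}_{2} : n \in \mathbb{N} \} $$

The event “we lose” is:

$$ {\text{LOSS}}_{\upalpha}^{\text{loc}} = [\neg W \to R]_{\upalpha}^{\text{loc}} = \{ {\text{W}}_{1}^{n} {\text{R}}_{1},\ {\text{W}}_{2}^{n} {\text{R}}_{2} : n \in \mathbb{N} \} $$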

The probabilities of victory (and defeat) have already been computed in a simple way with the use of the stochastic graph. As in the case of the global interpretation, we can also compute them directly: after summing up the appropriate geometric series, we obtain the familiar results:

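Fact 4.3

\( {\text{P}}_{\upalpha}^{\text{loc}} (\neg W \to G) = \sum_{n \in \mathbb{N}} \lambda_{1} r_{1}^{n} p_{1} + \sum_{n \in \mathbb{N}} \lambda_{2} r_{2}^{n} p_{2} = \lambda_{1} \frac{p_{1}}{p_{1} + q_{1}} + \lambda_{2} \frac{p_{2}}{p_{2} + q_{2}} \)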

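Fact 4.4

\( {\text{P}}_{\upalpha}^{\text{loc}} (\neg W \to R) = \lambda_{1} \frac{q_{1}}{p_{1} + q_{1}} + \lambda_{2} \frac{q_{2}}{p_{2} + q_{2}} \)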

4.3 Kaufmann’s Equation (The Local Version)

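We can again compare these formalisms and computations with the intuitive (but informal) computations from Kaufmann (2004, 586):

$$ P(\neg W \to G) = P(\neg W \to G \mid \mathrm{I})\,P(\mathrm{I}) + P(\neg W \to G \mid \mathrm{II})\,P(\mathrm{II}) $$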

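In the local interpretation, we have P(I) and P(II), not the conditional probabilities P(I∣¬W) and P(II∣¬W)! We can formalize Kaufmann’s formulas in the following way: instead of P(I) we take \( {\text{P}}_{\text{U2}} ({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1}) = \lambda_{1} \); instead of P(II) we take \( {\text{P}}_{\text{U2}} ({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2}) = \lambda_{2} \). As in the global case, instead of P(¬W → G∣I) and P(¬W → G∣II) we take \( {\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1})) \) and \( {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2} \vee {\text{R}}_{2})) \).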

Finally, we have two facts, which are exact counterparts of Kaufmann’s intuitive formulas:

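Fact 4.5

\( {\text{P}}_{\upalpha}^{\text{loc}} (\neg W \to G) = {\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1}))\,{\text{P}}_{\text{U2}} ({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1}) + {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2} \vee {\text{R}}_{2}))\,{\text{P}}_{\text{U2}} ({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2}) = \frac{p_{1}}{p_{1} + q_{1}} \lambda_{1} + \frac{p_{2}}{p_{2} + q_{2}} \lambda_{2} \)

Fact 4.6

\( {\text{P}}_{\upalpha}^{\text{loc}} (\neg W \to R) = {\text{P}}_{\text{U2}} ({\text{R}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1}))\,{\text{P}}_{\text{U2}} ({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1}) + {\text{P}}_{\text{U2}} ({\text{R}}_{2} | ({\text{G}}_{2} \vee {\text{R}}_{2}))\,{\text{P}}_{\text{U2}} ({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2}) = \frac{q_{1}}{p_{1} + q_{1}} \lambda_{1} + \frac{q_{2}}{p_{2} + q_{2}} \lambda_{2} \)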

To summarize: the computation of the probability of the locally‑interpreted conditional is based on the probabilities of the sentence ¬W → G computed separately in urn I and urn II (which are \( \frac{{p_{1} }}{{(p_{1} + q_{1} )}} \) and \( \frac{{p_{2} }}{{(p_{2} + q_{2} )}} \)). They are then summed up with weights equal to the probabilities of drawing the urn I or II (i.e. λ1 and λ2 respectively).
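The local and global readings share the same within-urn conditionals PU2(Gi∣Gi ∨ Ri). They differ only in the weights placed on the urns. A short sketch makes the contrast concrete. It assumes the parameter values of Sect. 4.1, and the names are ours. It applies the local weights λi and the global posterior weights PU2(urn i∣¬W1 ∧ ¬W2) to the same pair of within-urn values.

```python
from fractions import Fraction as F

# Assumed parameters: the two-urn values listed in Sect. 4.1.
lam = {1: F(1, 4), 2: F(3, 4)}
p = {1: F(9, 12), 2: F(1, 60)}   # Green
q = {1: F(1, 12), 2: F(9, 60)}   # Red

green_in_urn = {i: p[i] / (p[i] + q[i]) for i in lam}      # P_U2(G_i | G_i ∨ R_i)

non_white = sum(lam[i] * (p[i] + q[i]) for i in lam)
weights = {
    "local": dict(lam),                                              # P_U2(I), P_U2(II)
    "global": {i: lam[i] * (p[i] + q[i]) / non_white for i in lam},  # P_U2(urn | ¬W)
}

result = {name: sum(green_in_urn[i] * w[i] for i in lam) for name, w in weights.items()}
print(result)
```

With these values the local reading gives 3/10 and the global reading gives 3/5. Urn I, where Green dominates, receives weight 1/4 locally but 5/8 globally.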

4.4 The Global Versus the Local Interpretation

We have shown that the conditional can be formally interpreted in at least two ways. Both these interpretations rely on different assumptions concerning the rules of The Conditional Game (Fig. 5).

To stress the difference, consider a generalization of the two-urn example: assume that we have n different urns. The main question to decide is what we should do when the antecedent of the conditional is not fulfilled, i.e. when a White ball has been drawn from urn i.Footnote 30 According to the rules, we repeat the draw, but we have at our disposal only those urns which are connected with the urn i via a certain relation R.Footnote 31

If R is the identity relation, it means that every urn i is connected only with itself. So, after drawing White from urn i, we put the ball back and repeat the draw within the urn i. So in this case we have the local interpretation of the conditional.

If R is the full relation, all urns are connected with each other. In the local case, after drawing a White ball we returned it to urn i and restarted the game within that particular urn. Here, by contrast, we select an urn again and draw a ball from it. In this case, we have the global interpretation of the conditional.

These two approaches are in a sense two extremes (and they are based on the “granularity” of the division of the set of urns), and many intermediate approaches are possible.
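The two extreme choices of R can be compared by a Monte Carlo simulation of the game itself. The sketch below is only an illustration, under several assumptions. The parameter values are those of Sect. 4.1. The string flags "identity" and "full" stand for the two relations and are our own naming. Intermediate relations are not modelled.

```python
import random

# Assumed parameters: the two-urn values listed in Sect. 4.1.
LAM = [1 / 4, 3 / 4]                     # P(select urn I), P(select urn II)
URNS = [                                 # (P(White), P(Green), P(Red)) in each urn
    (2 / 12, 9 / 12, 1 / 12),
    (50 / 60, 1 / 60, 9 / 60),
]


def select_urn(rng):
    return rng.choices((0, 1), weights=LAM)[0]


def play(rng, relation):
    """Play ¬W -> G once; True means we win.

    relation == "identity": after White, redraw from the same urn (local reading).
    relation == "full": after White, select an urn afresh (global reading).
    """
    urn = select_urn(rng)
    while True:
        ball = rng.choices("WGR", weights=URNS[urn])[0]
        if ball == "G":
            return True
        if ball == "R":
            return False
        if relation == "full":
            urn = select_urn(rng)


def estimate(relation, trials=100_000, seed=0):
    rng = random.Random(seed)
    wins = sum(play(rng, relation) for _ in range(trials))
    return wins / trials
```

With a fixed seed the estimates are reproducible. They should land close to 3/5 for `estimate("full")` and close to 3/10 for `estimate("identity")`, up to sampling error of roughly ±0.0015.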

What are the important consequences of distinguishing between the local and global interpretation of the conditional? We will indicate only a few of them:

Under the local interpretation, the number of White balls in the urns does not matter. This means that the probability of the conditional does not depend on how probable (or improbable) the antecedent is, i.e. on the probability of drawing a non-White ball. This follows from the fact that the probability of the conditional in each urn depends not on the number of White balls but only on the proportion of Green balls to non-White balls (which is expressed by the formula \( \frac{{p_{i} }}{{(p_{i} + q_{i} )}} \)). Imagine that we put \( 10^{10} \) additional White balls into the urn: the probability of the conditional will not change (although, of course, on average we will have to wait longer for the result). In other words, the degree of counterfactuality of a sentence has no influence on its logical (mathematical) probability, but it influences the magnitude which we might call its “practical probability”. This issue is discussed elsewhere.Footnote 32
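The invariance can be checked directly in terms of ball counts. The sketch below assumes ball counts (2, 9, 1) and (50, 1, 9) for (White, Green, Red) in urns I and II. These are simply the smallest counts that reproduce the probabilities of Sect. 4.1; the text does not state them. The function names are ours. The sketch adds 10^10 White balls to urn I and recomputes both readings.

```python
from fractions import Fraction as F


def local_prob(lam, urns):
    """Local reading: sum_i lambda_i * G_i / (G_i + R_i); White counts never enter."""
    return sum(l * F(g, g + r) for l, (w, g, r) in zip(lam, urns))


def global_prob(lam, urns):
    """Global reading: P(Green) / P(non-White) for the mixture of the urns."""
    green = sum(l * F(g, w + g + r) for l, (w, g, r) in zip(lam, urns))
    non_white = sum(l * F(g + r, w + g + r) for l, (w, g, r) in zip(lam, urns))
    return green / non_white


lam = [F(1, 4), F(3, 4)]
urns = [(2, 9, 1), (50, 1, 9)]                 # (White, Green, Red) counts: an assumption
padded = [(2 + 10**10, 9, 1), (50, 1, 9)]      # 10^10 extra White balls in urn I

print(local_prob(lam, urns), local_prob(lam, padded))
print(global_prob(lam, urns), float(global_prob(lam, padded)))
```

The local value stays at 3/10. The global value drops from 3/5 to roughly 1/10, because urn I now almost never decides the game. This anticipates the point made for the global interpretation.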

Under the global interpretation, the probability of the antecedent is important for estimating the probability of the conditional (again, we mean the probability of the event “non-White was drawn” in the initial space ΞU2 = (ΩU2, ΜU2, PU2)). The following example illustrates this. Urn I contains 100 Green balls, 1 Red ball, and 10100000 White balls, and the probability of drawing this urn is 99/100. Urn II contains 1 Green ball, 100 Red balls and 1 White ball, and the probability of drawing urn II is 1/100. The probability of the (global) conditional ¬W → G is approximately 1/100, because we have virtually no chance of deciding the game within urn I. The game will therefore (with probability almost 1) be decided in urn II. This is a consequence of the fact that drawing a non-White ball from urn I is highly improbable.
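The arithmetic behind "approximately 1/100" can be reproduced exactly. The sketch below takes the White count of urn I as printed (10100000). If that figure is a garbled power of ten, a larger count only pushes the result closer to 1/101, the value of PU2(G2∣G2 ∨ R2) inside urn II alone. The function name is ours. It computes P(Green∣non-White) in the mixture of the two urns, which is the global probability of ¬W → G.

```python
from fractions import Fraction as F


def global_prob(lam, urns):
    """P(Green | non-White) in the mixture of urns, i.e. the globally read ¬W -> G."""
    green = sum(l * F(g, w + g + r) for l, (w, g, r) in zip(lam, urns))
    non_white = sum(l * F(g + r, w + g + r) for l, (w, g, r) in zip(lam, urns))
    return green / non_white


lam = [F(99, 100), F(1, 100)]
urn_I = (10100000, 100, 1)     # (White, Green, Red); White count as printed in the text
urn_II = (1, 1, 100)
p = global_prob(lam, [urn_I, urn_II])
print(float(p))                # close to 1/100
print(float(F(1, 101)))        # P(Green | non-White) inside urn II alone
```

Although urn I is selected 99 times out of 100, it contributes almost nothing. The result stays near the urn II value.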

In particular, it follows from the considerations above that The Global Conditional Game can be reduced to The Conditional Game in one urn Unew. This new urn is universal in the sense characterized by Fact 3.9. Such a reduction is not possible for The Local Conditional Game, because the probability of the locally interpreted conditional is independent of the number of White balls in the urns.

5 Lewis’s Triviality Result: PC = CP?

In the discussion concerning the probabilities of conditionals we have to take into account the PC = CP thesis, and the results of Lewis (1976) (and the ensuing discussion).Footnote 33

Observe first that this thesis was considered to express certain intuitions, which are usually presented through the following oft-quoted claims of Ramsey and van Fraassen:

If two people are arguing ‘If p will q?’ and both are in doubt as to p, they are adding p hypothetically to their stock of knowledge and arguing on that basis about q… We can say that they are fixing their degrees of belief in q given p (Ramsey 1929, 247).

What is the probability that I throw a six if I throw an even number, if not the probability that: if I throw an even number, it will be a six? (van Fraassen, 1976, 273).

Generally speaking, they express the view that the probability of the conditional somehow depends on the conditional probability. Consider the example of drawing balls from one urn (Section 1). If we want to know the probability of the conditional “If the ball is not White, then it is Green”, we have to compute the (usual) conditional probability of “Green was drawn” under the condition “non-White was drawn”.

According to the widely accepted interpretation of the PC = CP thesis, this statement should be written as:

\( {\text{P}}(\neg W \to G) \, =\, {\text{P}}({\text{G}}|\neg {\text{W}}) \)

And of course, in this form it is immediately subject to Lewis’s criticism: accepting standard assumptions concerning the properties of the probability measure and of the counterfactual leads to the (obviously) false claim that:

\( {\text{P}}(\neg W \to G) \, =\, {\text{ P}}({\text{G}})\) Footnote 34

But in the light of the semantics for conditionals proposed here, it becomes obvious that even the very formulation of the thesis is not correct. The probability of the conditional ¬W → G can only be defined in the probability space Ξα, as there is no event corresponding to this sentence within the initial probability space Ξ. Hájek’s numerical arguments concerning a similar example show this clearly enough (Hájek 2011, 4). So, if we are going to formulate the thesis PC = CP for the conditional ¬W → G in a formally proper way, it surely cannot be:

(PC = CP): P(¬W → G) = P(G|¬W), where P is the probability measure in the initial probability space Ξ.

But notice that in fact, the following equality holds (Fact 1.6):

\( ({\mathbf{P}}_{{\neg {\mathbf{W}} \to {\mathbf{G}}}} {\mathbf{C}} \, = \, {\mathbf{CP}})\!\!:{\text{P}}_{{\neg {\text{W}} \to {\text{G}}}} (\neg W \to G) \, = {\text{P}}({\text{G}}|\neg {\text{W}}), \) where on the left side we have the probability function Pα from the probability space Ξα (α = ¬W → G) and on the right side we have the probability function P defined in the initial probability space Ξ.
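For the one-urn game, this equality can be illustrated numerically. The sketch below makes two assumptions: it uses the 20-ball urn mentioned in the notes (10 White, 8 Green, 2 Red), and it takes Pα(¬W → G) to be the sum over the winning sequences WⁿG, which is how the game space is built in this article. The partial sum of that series is compared with the ordinary conditional probability P(G∣¬W) in the initial space.

```python
from fractions import Fraction as F

# One urn: 10 White, 8 Green, 2 Red balls (the 20-ball example mentioned in the notes).
r, p, q = F(10, 20), F(8, 20), F(2, 20)

# P_alpha(¬W -> G): sum over the winning sequences W^n G, i.e. sum_n r^n p.
partial = sum(r ** n * p for n in range(100))
closed = p / (1 - r)

# P(G | ¬W) in the initial space: P(G) / P(¬W).
cond = p / (p + q)

assert closed == cond
assert abs(float(partial) - float(cond)) < 1e-12
print(cond)  # 4/5
```

The two sides live in different probability spaces, yet they agree. This is the precise sense in which the one-urn version of PC = CP holds.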

Two remarks follow:

(1) This formulation corresponds to the intuitions of Ramsey and van Fraassen: it shows how to reduce the difficult problem of estimating the probability of conditionals to the simpler problem of computing conditional probabilities in the initial probability space Ξ;

(2) This thesis can be formally proven: it follows trivially from Fact 1.6.

What about the more complicated two-urn example? In this case, the dependence between the probability of the conditional ¬W → G and the conditional probabilities can be given a weaker and a stronger interpretation.

Under the weaker interpretation, we expect only that calculating the probability of the counterfactual ¬W → G is possible if we know the conditional probabilities P(G∣¬W), calculated separately in urns I and II. This means that we expect that there is a formula giving the probability of the conditional, taking the conditional probabilities as arguments.

Under the stronger interpretation, we expect that the probability of the counterfactual ¬W → G is equal to a certain conditional probability Px(G∣¬W) in a suitably-constructed probability space X.

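Facts 3.6 and 4.3 allow us to formulate the proper versions of the PC = CP principle under the weaker interpretation. On the left side of each equality there is the probability of the conditional α in the appropriate probability space \( \Xi_{\upalpha}^{\text{glob}} \) or \( \Xi_{\upalpha}^{\text{loc}} \). On the right side there is a certain function taking the appropriate conditional probabilities in the space ΞU2 = (ΩU2, ΜU2, PU2) as arguments. The formulas for the conditional ¬W → G have the following forms:

$$ {\text{P}}_{\upalpha}^{\text{glob}} (\neg W \to G) = {\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1}))\,{\text{P}}_{\text{U2}} ({\text{I}} | (\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2})) + {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2} \vee {\text{R}}_{2}))\,{\text{P}}_{\text{U2}} ({\text{II}} | (\neg {\text{W}}_{1} \wedge \neg {\text{W}}_{2})) $$

$$ {\text{P}}_{\upalpha}^{\text{loc}} (\neg W \to G) = {\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1}))\,{\text{P}}_{\text{U2}} ({\text{I}}) + {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2} \vee {\text{R}}_{2}))\,{\text{P}}_{\text{U2}} ({\text{II}}) $$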

where I and II are abbreviations for \( ({\text{W}}_{1} \vee {\text{G}}_{1} \vee {\text{R}}_{1})\, {\text{and}}\, ({\text{W}}_{2} \vee {\text{G}}_{2} \vee {\text{R}}_{2})\) respectively.Footnote 35

Although the right sides of these equalities are rather long, we must agree that they conform to Ramsey’s intuitions: they reduce the probability of the conditional to the conditional probabilities \({\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1}\vee {\text{R}}_{1}))\, {\text{and}}\, {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2}\vee {\text{R}}_{2}))\) summed up with certain weights (which depend on whether the conditional is interpreted locally or globally). This is obvious for the local interpretation; for the global interpretation, it is evident from Fact 3.6. And it is clear that the probability of the conditional ¬W → G (interpreted globally) is equal to the conditional probability in the new urn Unew (Fact 3.8).

For the global interpretation of the conditional, an even stronger version can be formulated in the form: \( {\text{P}}_{{\neg {\text{W}} \to {\text{G}}}}^{\text{glob}} \)C = CPnew, where Pnew is the probability in the suitable probability space Ξnew = (Ωnew, Μnew, Pnew) (cf. Fact 3.8). It should be stressed that this space (according to the remarks at the end of Section 3) has a stable character: it can be used to compute the probabilities of other conditionals built from the atomic sentences W, G, R.Footnote 36

Observe that a natural counterpart of the space Ξnew = (Ωnew, Μnew, Pnew) for the local interpretation does not exist. Of course, we can trivially define an artificial probability space Ξ# = (Ω#, Μ#, P#) with events dubbed W, G, R in such a way that the probability of the locally interpreted conditional ¬W → G equals P#(G∣¬W). But the stability condition will not be fulfilled: this space will not work for other conditionals, e.g. for ¬G → W. This is because only the globally interpreted conditional has the Ramseyian character.

To summarize: when analyzing the relationships between the probability of the conditional and conditional probability, we have to be aware of the fact that the notion of probability can have different meanings (in the sense that it can be defined differently in different probability spaces). Lewis’s arguments are directed only against a particular version of the PC = CP thesis, where there is the same probability measure P on both sides of the equality. But it need not have this form; in particular, this form is not enforced by the task it was meant to fulfill, i.e. the task of reducing the probabilities of the conditionals to (functions of) the conditional probabilities. But exactly this task is fulfilled (without any fear of Lewis’s arguments) by the principles we have given here:

(\( {\text{P}}_{{\neg {\text{W}} \to {\text{G}}}} \)C = CP): concerning The Conditional Game in one urn, where we have the strong interpretation.

(\( {\text{P}}_{{\neg {\text{W}} \to {\text{G}}}}^{\text{glob}} \)C = CPU2): concerning The Two Urn Global Conditional Game—where the probability of the conditional ¬W → G is computed using conditional probabilities in the space ΞU2 = (ΩU2, ΜU2, PU2) with appropriate weights—this is the weak interpretation.

(\( {\text{P}}_{{\neg {\text{W}} \to {\text{G}}}}^{\text{glob}} \)C = CPnew): concerning The Two Urn Global Conditional Game, where the probability of the conditional ¬W → G is computed using conditional probabilities in the space Ξnew = (Ωnew, Μnew, Pnew), i.e. we have the strong interpretation here.

(\( {\text{P}}_{{\neg {\text{W}} \to {\text{G}}}}^{\text{loc}} \)C = CPU2): concerning The Two Urn Local Conditional Game—where the probability of the conditional ¬W → G is computed using conditional probabilities in the space ΞU2 = (ΩU2, ΜU2, PU2) with appropriate weights—of course, they are different than in the global case—so this is the weak interpretation.

6 Summary

The semantics for conditionals given in terms of stochastic graphs (and the corresponding probability spaces) has several advantages:

-

It allows us to exhibit the structure of the problem in a very clear way.

-

It reveals the differences between the global and local interpretations of the conditional.

-

It is mathematically precise and allows for a straightforward computation of the needed probabilities.

But this model reveals its full power when we turn to more complex issues. In our opinion, promising areas of research (where we already have obtained some results) are:

-

Generalizing the notions of the local and global interpretation (we consider a more general case of a certain accessibility relation between the worlds).

-

The description of nested conditionals.Footnote 37

-

Making the models more realistic by considering not only mathematical probability, but also “practical probability”, where the time factor is taken into account (we will not bet on a game which we will almost surely win, but only after a billion years!).

Additionally, there is a clear metaphilosophical gain: our models show how certain vaguely understood philosophical notions can be given formal, fully precise counterparts. They can be called explications (in Carnap’s sense). When we turn to more complex issues, more powerful mathematical methods will be used, and they will provide additional explanatory power for these problems. Our results can also be viewed as a contribution to the idea of mathematical philosophy (Leitgeb 2013). So, in a sense, this paper also contributes to the more general discussion concerning the role of mathematical methods in analyzing philosophical problems.

Notes

We define the appropriate probabilities, but the model also uses the notion of truth-conditions (expressed in terms of the rules of a game). However, we do not discuss the topic here, as the aim of the article is to present the formal model and to discuss the interpretation of the conditional (and its impact on the discussion about PC = CP).

The problem of nested conditionals is discussed in Wójtowicz and Wójtowicz (2019a).

In order to maintain consistency with the upcoming formalisation, we shall capitalise the three colour adjectives henceforth.

This is a much-discussed issue, but we will not go into details here.

M is formally very simple: \( \mathrm{M} = 2^{\Omega} \) (i.e. it is the power set of Ω). Of course, we could also use as the model a (more complicated) 20-element space (with 10 White, 8 Green and 2 Red balls, all equally probable), but we prefer the simpler and more general interpretation.

This reflects the simple tautology of the propositional calculus: \( (\neg {\text{p}} \to {\text{q}}) \leftrightarrow ({\text{p}} \vee {\text{q}})\). But it is widely agreed that the interpretation of a conditional as the material implication is not appropriate.

We admit that this choice might be natural if we do not admit “restarting actuality”, and in particular if we do not want to think in terms of possible worlds. If we think of evaluating the probability or truth value of the conditional in terms of the actual world only, without allowing possible worlds (or alternative scenarios), our approach can be considered problematic. However, it is quite standard in other approaches, e.g. that of van Fraassen: Imagine the possible worlds are balls in an urn, and someone selects a ball, replaces it, selects again and so forth. We say he is carrying out a series of Bernoulli trials (van Fraassen 1976, 279).

Examples of sequences which settle the game are: WWWG (we win with the fourth move); R (we lose with the first move); WWWWWR (we lose with the sixth move) etc.
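The rule behind these examples can be written as a small function. In the Python sketch below, `settle` is a hypothetical helper of ours: it reads a sequence of draws and reports whether, and at which move, the sequence settles the game. A sequence consisting only of White balls, or one that continues after the settling move, does not settle the game.

```python
from typing import Optional, Tuple

def settle(draws: str) -> Optional[Tuple[str, int]]:
    """Return ("win", k) or ("loss", k) if the sequence settles the game exactly at move k.

    Returns None when the game is still open (only White balls so far) or when the
    string continues after the game has already been decided.
    """
    for k, ball in enumerate(draws, start=1):
        if ball not in ("W", "G", "R"):
            raise ValueError(f"unknown ball {ball!r}")
        if ball in ("G", "R"):
            if k != len(draws):
                return None  # extra draws after the game ended
            return ("win" if ball == "G" else "loss", k)
    return None  # no non-White ball yet
```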

In the space Ω = {W, R, G} there are 8 events (as \( M = 2^{\Omega} \)), which correspond to Boolean combinations of the elementary events. But none of these events corresponds to the conditional ¬W → G.

In the general case, the gambler’s ruin process is defined for arbitrary N, which makes the process much more complex (think of describing the possible paths, for instance when N = 10 and n1 = 1!). The gambler’s ruin process is a particular case of a more general random walk process; for a survey of applications see for instance chapter 4.5.1. of Ross (2007).
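For readers who want to see the general case, the Python sketch below uses the textbook gambler’s ruin set-up, which is our assumption and may differ from the notation of the main text: a gambler with fortune i wins one unit with probability p, loses one with probability q = 1 − p, and stops at 0 or N. It computes the probability of reaching N in two ways: by solving the first-step equations x_k = p x_{k+1} + q x_{k−1} iteratively, and by the standard closed form.

```python
def win_probability_iterative(i: int, N: int, p: float, tol: float = 1e-13) -> float:
    """Solve x_k = p*x_{k+1} + q*x_{k-1} with x_0 = 0, x_N = 1 by Gauss-Seidel sweeps."""
    q = 1.0 - p
    x = [0.0] * (N + 1)
    x[N] = 1.0  # boundary conditions: ruin at 0, target reached at N
    while True:
        delta = 0.0
        for k in range(1, N):
            new = p * x[k + 1] + q * x[k - 1]
            delta = max(delta, abs(new - x[k]))
            x[k] = new
        if delta < tol:
            return x[i]

def win_probability_closed_form(i: int, N: int, p: float) -> float:
    """Standard formula: (1 - (q/p)**i) / (1 - (q/p)**N), or i/N in the fair case."""
    q = 1.0 - p
    if abs(p - q) < 1e-15:
        return i / N
    ratio = q / p
    return (1 - ratio ** i) / (1 - ratio ** N)
```

With N = 10 and starting fortune 1, both functions agree for fair and unfair games alike.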

In the general case, when there are n possible states \( s_i \), for i = 1, …, n:

- \( p_{i,j} \) denotes the transition probability from the state \( s_i \) to the state \( s_j \);
- P(i) denotes the probability of finally (i.e. possibly after a sequence of steps) reaching the winning state starting from the state \( s_i \).

The states in this article have names, so we use symbols like \( p_{\text{START},\text{WIN}} \) or P(START).

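This is the transition probability matrix for the graph in Fig. 2:

$$ \begin{array}{c|ccc} & \text{START} & \text{WIN} & \text{LOSS} \\ \hline \text{START} & r & p & q \\ \text{WIN} & 0 & 1 & 0 \\ \text{LOSS} & 0 & 0 & 1 \end{array} $$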
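Absorption probabilities can be read off such a matrix mechanically. Writing P(i) for the probability of eventually reaching WIN from state i, the first-step equations are P(i) = Σ_j p_{i,j} P(j), with P(WIN) = 1 and P(LOSS) = 0. The Python sketch below is our own helper, using exact rational arithmetic by choice. It solves these equations by Gauss–Jordan elimination for a matrix given as a dict of rows, and applies them to the matrix of Fig. 2 with illustrative values r = 1/2, p = 2/5, q = 1/10.

```python
from fractions import Fraction
from typing import Dict

Matrix = Dict[str, Dict[str, Fraction]]

def win_probabilities(P: Matrix, win: str = "WIN", loss: str = "LOSS") -> Dict[str, Fraction]:
    """Solve P(i) = sum_j p_ij P(j), P(win) = 1, P(loss) = 0, for all transient states i."""
    transient = [s for s in P if s not in (win, loss)]
    idx = {s: k for k, s in enumerate(transient)}
    n = len(transient)
    # Augmented system (I - Q) x = b, where Q is the transient block and b_i = p_{i,win}.
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]
    for s in transient:
        i = idx[s]
        A[i][i] += 1
        for t, prob in P[s].items():
            if t in idx:
                A[i][idx[t]] -= prob
            elif t == win:
                A[i][n] += prob
    # Gauss-Jordan elimination (assumes WIN or LOSS is reachable from every transient state).
    for col in range(n):
        pivot = next(row for row in range(col, n) if A[row][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        for row in range(n):
            if row != col and A[row][col] != 0:
                factor = A[row][col] / A[col][col]
                A[row] = [a - factor * b for a, b in zip(A[row], A[col])]
    return {s: A[idx[s]][n] / A[idx[s]][idx[s]] for s in transient}

# Illustrative values (not from the paper): r = 1/2, p = 2/5, q = 1/10.
r, p, q = Fraction(1, 2), Fraction(2, 5), Fraction(1, 10)
FIG2 = {
    "START": {"START": r, "WIN": p, "LOSS": q},
    "WIN": {"WIN": Fraction(1)},
    "LOSS": {"LOSS": Fraction(1)},
}
```

Here `win_probabilities(FIG2)["START"]` equals 4/5, i.e. p/(p + q). The same function also accepts larger graphs, such as the two-urn graphs discussed later.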

The general theory is presented in the Appendix, but we can also justify the equation intuitively. Assume that we are in the state START. What are our chances P(START) of winning? There are two ways of winning: (1) we can win in just one move, with probability p; (2) we can return to the starting position, with probability r, in which case the game is restarted, so the probability of winning from there is again P(START). So the “contributions” of these variants to P(START) are (1) p and (2) rP(START), which gives the equation P(START) = p + rP(START).
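Solving this equation uses only p + q + r = 1 and r < 1:

$$ P(\text{START}) = p + r\,P(\text{START}) \;\Longrightarrow\; (1 - r)\,P(\text{START}) = p \;\Longrightarrow\; P(\text{START}) = \frac{p}{1 - r} = \frac{p}{p + q}. $$

Since p = P(G) and q = P(R), the right-hand side is exactly the conditional probability P(G | ¬W).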

We can think of them as paths within the graph which end in one of the states WIN or LOSS. Each of these paths has a definite probability, which is computed in a very intuitive way: as the draws are independent of each other, we simply multiply the probabilities; for instance, the probability of WWWG is rrrp.
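The multiplication rule can be stated directly in code. A minimal Python sketch, with the per-draw probabilities r, p, q passed in as parameters; the function name is ours.

```python
from fractions import Fraction

def path_probability(path: str, r: Fraction, p: Fraction, q: Fraction) -> Fraction:
    """Probability of a sequence of independent draws, e.g. 'WWWG' -> r*r*r*p."""
    weight = {"W": r, "G": p, "R": q}
    result = Fraction(1)
    for ball in path:
        result *= weight[ball]
    return result
```

With r = 1/2, p = 2/5 and q = 1/10, for instance, `path_probability("WWWG", r, p, q)` is 1/20.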

Observe that our approach differs in this respect from Kaufmann’s approach, which is based on Stalnaker Bernoulli models. Kaufmann presents the construction of a universal probability space in which all possible conditionals (based on the initial space Ω) can be represented. However, formal complications are the price to pay for the generality of this model.

The probability of drawing an infinite sequence of White balls (treated as a sequence of events from the initial probability space Ω) is 0 (it is the limit of \( r^n \), and r < 1), so we do not need to include it in our definition of Ωα.

$$ P_{\alpha}(\Omega_{\alpha}) = P_{\alpha}(\{W^{n}G, W^{n}R : n \in \mathbb{N}\}) = P_{\alpha}(\{W^{n}G : n \in \mathbb{N}\}) + P_{\alpha}(\{W^{n}R : n \in \mathbb{N}\}) = \sum_{n = 0}^{\infty} p r^{n} + \sum_{n = 0}^{\infty} q r^{n} = \frac{p}{1 - r} + \frac{q}{1 - r} = \frac{p}{p + q} + \frac{q}{p + q} = 1. $$
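A quick exact check of this computation: the paths \( W^{n}G \) and \( W^{n}R \) with n < N (games settled within N moves) carry total probability (p + q)(1 − r^N)/(1 − r) = 1 − r^N, which tends to 1. The Python sketch below uses illustrative values of r, p, q that satisfy p + q + r = 1.

```python
from fractions import Fraction

def mass_of_short_paths(N: int, r: Fraction, p: Fraction, q: Fraction) -> Fraction:
    """Total probability of the paths W^n G and W^n R with n < N, i.e. at most N moves."""
    return sum((p * r ** n + q * r ** n for n in range(N)), Fraction(0))
```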

By the way, observe that this semantics also allows us to compute the probabilities of sentences like “I will win The Conditional Game within an even number of moves”, which is a nice feature of the model.
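As a worked example of our own (not taken from the paper), read the sentence as “the game is won at an even-numbered move”. Winning at move k corresponds to the path \( W^{k-1}G \), with probability \( pr^{k-1} \). Winning at an even move therefore has probability \( p(r + r^3 + r^5 + \dots) = pr/(1 - r^2) \). The sketch below checks this closed form against a truncated sum.

```python
from fractions import Fraction

def p_win_at_even_move(r: Fraction, p: Fraction) -> Fraction:
    """Closed form: sum over odd n of p * r**n (paths W^n G of even length n + 1)."""
    return p * r / (1 - r ** 2)

def p_win_at_even_move_truncated(r: Fraction, p: Fraction, max_moves: int) -> Fraction:
    """Sum of p * r**(k - 1) over even k <= max_moves."""
    return sum((p * r ** (k - 1) for k in range(2, max_moves + 1, 2)), Fraction(0))
```

With r = 1/2 and p = 2/5 the closed form gives 4/15. Winning at an odd move has probability p/(1 − r^2) = 8/15, and the two together give p/(1 − r) = 4/5.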

Usually, when we speak of fractions, we have finite sets in mind—but the sets here are infinite (and even uncountable). So perhaps the word “proportion” would be more appropriate.

Kaufmann’s model using Stalnaker Bernoulli spaces covers right-nested conditionals B → (C → D); left-nested conditionals (B → C) → D; conjoined conditionals (A → B) ∧ (C → D); and conditional conditionals (A → B) → (C → D). The model makes it possible to give explicit formulas for these probabilities: \( P^{*}(B \to (C \to D)) = P(CD \mid B) + P(C^{c} \mid B)\,P(D \mid C) \), where \( C^{c} \) is the complement of C and CD is the conjunction (intersection) of the events C and D; P* is the probability in the Stalnaker Bernoulli space. The formula for the conjoined conditional (Kaufmann 2009, 12; see also McGee 1989, 500) is:

$$ \mathrm{P}^{*}((A \to B) \wedge (C \to D)) = \frac{\mathrm{P}(ABCD) + \mathrm{P}(\neg ACD)\,\mathrm{P}(A \to B) + \mathrm{P}(AB\neg C)\,\mathrm{P}(C \to D)}{\mathrm{P}(A \vee C)} $$

These are very important achievements, and a perfect motivation and justification for introducing and investigating the Stalnaker Bernoulli model. The graph model covers all these cases as well, but it simplifies matters and allows for generalizations (see also footnote 37).
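The conjoined-conditional formula can be checked by simulation. In a Stalnaker Bernoulli model, worlds are drawn independently and repeatedly, and X → Y is true of a sequence iff Y holds at the first X-world of the sequence. In particular P*(X → Y) = P(Y | X), which is why P(A → B) in the formula can be read as P(B | A). The Python sketch below assumes a hypothetical joint distribution over the 16 valuations of A, B, C, D (weights 1, …, 16, chosen arbitrarily). It compares the closed form with a Monte Carlo estimate over simulated sequences.

```python
import random
from fractions import Fraction
from itertools import accumulate, product

# Hypothetical distribution over valuations (A, B, C, D); the weights are arbitrary.
WORLDS = list(product([True, False], repeat=4))
WEIGHTS = list(range(1, 17))
CUM_WEIGHTS = list(accumulate(WEIGHTS))
TOTAL = sum(WEIGHTS)

def prob(event) -> Fraction:
    """Exact probability of an event given as a predicate on (a, b, c, d)."""
    return Fraction(sum(w for world, w in zip(WORLDS, WEIGHTS) if event(*world)), TOTAL)

def closed_form() -> Fraction:
    """Kaufmann's formula for P*((A -> B) and (C -> D)), with P(X -> Y) = P(Y | X)."""
    b_given_a = prob(lambda a, b, c, d: a and b) / prob(lambda a, b, c, d: a)
    d_given_c = prob(lambda a, b, c, d: c and d) / prob(lambda a, b, c, d: c)
    numerator = (prob(lambda a, b, c, d: a and b and c and d)
                 + prob(lambda a, b, c, d: (not a) and c and d) * b_given_a
                 + prob(lambda a, b, c, d: a and b and not c) * d_given_c)
    return numerator / prob(lambda a, b, c, d: a or c)

def sample_once(rng: random.Random) -> bool:
    """Draw i.i.d. worlds until the first A-world and the first C-world are both known."""
    b_at_first_a = None  # truth value of B at the first A-world
    d_at_first_c = None  # truth value of D at the first C-world
    while b_at_first_a is None or d_at_first_c is None:
        a, b, c, d = rng.choices(WORLDS, cum_weights=CUM_WEIGHTS)[0]
        if a and b_at_first_a is None:
            b_at_first_a = b
        if c and d_at_first_c is None:
            d_at_first_c = d
    return b_at_first_a and d_at_first_c

def monte_carlo(n: int, seed: int = 0) -> float:
    """Fraction of simulated sequences on which both conditionals are true."""
    rng = random.Random(seed)
    return sum(sample_once(rng) for _ in range(n)) / n
```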

In Kaufmann’s model, a red ball with a black spot plays the role of our Green ball, and a red ball without a spot plays the role of our Red ball. Choosing a red ball (with or without a spot) corresponds to choosing a non-White ball. Kaufmann considers the sentence “If I pick a red ball, it will have a black spot”, which corresponds exactly to our “If the ball is non-White, then it is Green”. As the examples are entirely isomorphic, it is easy to compare the results.

We adopt the terminology of Kaufmann (2004, 2005, 2009, 2015), leaving aside the discussion concerning the detailed relationships between this classification and other possible ones, like the standard subjunctive/indicative classification of conditionals (as exemplified by the famous Oswald–Kennedy sentences (Adams 1970)) or the epistemic/metaphysical distinction of Khoo (2015).

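The transition probability matrix for this graph (Fig. 3) in the general case is:

$$ \begin{array}{c|ccccc} \text{GLOBAL} & \text{START} & \text{I} & \text{II} & \text{WIN} & \text{LOSS} \\ \hline \text{START} & 0 & \lambda_1 & \lambda_2 & 0 & 0 \\ \text{I} & r_1 & 0 & 0 & p_1 & q_1 \\ \text{II} & r_2 & 0 & 0 & p_2 & q_2 \\ \text{WIN} & 0 & 0 & 0 & 1 & 0 \\ \text{LOSS} & 0 & 0 & 0 & 0 & 1 \end{array} $$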
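From this matrix alone one gets P(I) = p1 + r1P(START), P(II) = p2 + r2P(START) and P(START) = λ1P(I) + λ2P(II). Hence P(START) = (λ1p1 + λ2p2)/(1 − λ1r1 − λ2r2). This is our own derivation, not a quotation of the main text. The Python sketch below checks the closed form numerically by repeatedly applying the transition matrix: starting from the indicator vector of WIN, the k-th iterate gives the probability of having been absorbed in WIN within k steps, which converges to the winning probability. The urn parameters in the example are hypothetical.

```python
from typing import List

STATES = ["START", "I", "II", "WIN", "LOSS"]

def global_matrix(l1, l2, r1, p1, q1, r2, p2, q2) -> List[List[float]]:
    """Transition matrix of the GLOBAL graph, rows and columns ordered as in STATES."""
    return [
        [0.0, l1, l2, 0.0, 0.0],    # START: choose urn I or urn II
        [r1, 0.0, 0.0, p1, q1],     # urn I: a White ball sends us back to START
        [r2, 0.0, 0.0, p2, q2],     # urn II: a White ball sends us back to START
        [0.0, 0.0, 0.0, 1.0, 0.0],  # WIN is absorbing
        [0.0, 0.0, 0.0, 0.0, 1.0],  # LOSS is absorbing
    ]

def absorption_in_win(P: List[List[float]], start: int = 0, steps: int = 2000) -> float:
    """Iterate x <- P x from the indicator of WIN; x[start] approaches P(START)."""
    n = len(P)
    win = STATES.index("WIN")
    x = [1.0 if s == win else 0.0 for s in range(n)]
    for _ in range(steps):
        x = [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]
    return x[start]
```

For example, with λ1 = 0.3, λ2 = 0.7, (r1, p1, q1) = (0.5, 0.4, 0.1) and (r2, p2, q2) = (0.2, 0.3, 0.5), both computations give 0.33/0.71 ≈ 0.4648.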

U2 in the index indicates the fact that we have two urns to draw the balls from.

E.g. the probability of \( W_1W_1W_2W_1W_2G_1 \) equals \( (\lambda_1 r_1)^3 (\lambda_2 r_2)^2 (\lambda_1 p_1) \).
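The same product rule for the global game can be written as a small Python function. Before each draw an urn is chosen with probability λ1 or λ2, and then a ball is drawn from it. The function name and the label format (a colour letter followed by the urn index, as in "W1") are our own conventions.

```python
from fractions import Fraction
from typing import Dict, Sequence

def global_path_probability(path: Sequence[str], lam: Dict[str, Fraction],
                            ball: Dict[str, Dict[str, Fraction]]) -> Fraction:
    """Probability of a labelled path such as ["W1", "W2", "G1"] in the global game.

    Each label is a colour (W, G, R) followed by an urn index; every draw contributes
    lam[urn] * ball[urn][colour], because the urn is chosen again before each draw.
    """
    result = Fraction(1)
    for label in path:
        colour, urn = label[0], label[1:]
        result *= lam[urn] * ball[urn][colour]
    return result
```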

Khoo observes: “Kaufmann's theory marks a significant advance in our thinking about conditionals. However, I think it faces some challenges. First, it is incomplete: since Kaufmann never specifies a semantics for conditionals, it remains unclear why conditionals should have local and global interpretations – for instance, is it due to ambiguity or perhaps context-dependence?” Khoo (2016, 8). We remove this weakness.

P(¬W → G∣I) is replaced by \({\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1}\vee {\text{R}}_{1}))\). P(¬W → G∣I) expresses our intuitive degree of belief that we will win the Conditional Game ¬W → G under the assumption that we are in urn I. But winning the game ¬W → G amounts to drawing a Green ball, given that the ball drawn is Green or Red, i.e. not White (and everything takes place within urn I). And this is exactly \({\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1}\vee {\text{R}}_{1}))\).

It does not matter that we have three atomic sentences – the generalization to n atomic sentences X1, … Xn is straightforward. It will also work for a countably infinite language (set of elementary events).

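The transition probability matrix for this graph (Fig. 4) in the general case is:

$$ \begin{array}{c|ccccc} \text{LOCAL} & \text{START} & \text{I} & \text{II} & \text{WIN} & \text{LOSS} \\ \hline \text{START} & 0 & \lambda_1 & \lambda_2 & 0 & 0 \\ \text{I} & 0 & r_1 & 0 & p_1 & q_1 \\ \text{II} & 0 & 0 & r_2 & p_2 & q_2 \\ \text{WIN} & 0 & 0 & 0 & 1 & 0 \\ \text{LOSS} & 0 & 0 & 0 & 0 & 1 \end{array} $$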

Observe that when there are only non-White balls in the urns (i.e. when r1 = 0 and r2 = 0), this distinction would make no sense: the probability of the conditional ¬W → G could be computed in one way only, and it would be equal to λ1p1 + λ2p2 (the formulas from Facts 3.3 and 4.1 both reduce to this simple form when r1 = r2 = 0). This is obvious, because in this case the game would be settled after drawing the first ball from the chosen urn.
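Reading the first-step equations off the two transition matrices gives P_global = (λ1p1 + λ2p2)/(1 − λ1r1 − λ2r2) for the graph of Fig. 3 and P_local = λ1p1/(1 − r1) + λ2p2/(1 − r2) for the graph of Fig. 4. This is our own derivation from the matrices, not a restatement of Facts 3.3 and 4.1. The short Python sketch below compares the two values for hypothetical urn parameters and confirms that both collapse to λ1p1 + λ2p2 when r1 = r2 = 0.

```python
from fractions import Fraction

def p_global(l1, l2, r1, p1, r2, p2):
    """Fig. 3: a White ball sends us back to START, where the urn is chosen again."""
    return (l1 * p1 + l2 * p2) / (1 - l1 * r1 - l2 * r2)

def p_local(l1, l2, r1, p1, r2, p2):
    """Fig. 4: a White ball makes us draw again from the same urn."""
    return l1 * p1 / (1 - r1) + l2 * p2 / (1 - r2)
```

With λ1 = 1/3, λ2 = 2/3, (r1, p1) = (1/2, 2/5) and (r2, p2) = (1/5, 3/10), the global value is 10/21 and the local value is 31/60.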

In the literature concerning counterfactuals, such a relation R is often interpreted as a relationship occurring between the nearest or neighbouring worlds (this can be traced back at least to Stalnaker (1968)).

Here we observe only that it is easy to construct an example where the probability of the conditional ¬W → G is approximately 1, but at the same time we can claim that the game will last almost forever. It is enough to consider one urn containing 1 Red ball, \(10^{10}\) Green balls and \(10^{1000000}\) White balls. The chance of winning is almost 1 (namely \(10^{10}/(10^{10}+1)\)), but we will have to wait very long for our victory: the expected number of moves is the total number of balls divided by the number of non-White ones, i.e. approx. \(10^{999990}\). The details are given in Wójtowicz and Wójtowicz (2019b).
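These magnitudes are easy to check. In the minimal Python sketch below (the function name is ours), the number of draws until the first non-White ball is geometric, with success probability (G + R)/(W + G + R), so its mean is (W + G + R)/(G + R). We report this mean on a log10 scale. Python integers can hold \(10^{1000000}\) exactly, but the mean itself is far too large for a float.

```python
import math
from fractions import Fraction

def one_urn_game(white: int, green: int, red: int):
    """Return (probability of winning, log10 of the expected number of draws)."""
    non_white = green + red
    p_win = Fraction(green, non_white)  # p / (p + q)
    # Draws until the first non-White ball: geometric, with mean total / non_white.
    log10_mean_draws = math.log10(white + non_white) - math.log10(non_white)
    return p_win, log10_mean_draws
```

For the 10/8/2 urn used earlier this gives a winning probability of 4/5 and a mean of 2 draws.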

We do not discuss the history of the thesis or the debate surrounding it here. The thesis has also been known as “Stalnaker’s Thesis” or “Adams’ Thesis” (Stalnaker 1970; Adams 1965, 1966, 1975). Hajek and Hall (1994) and Hall (1994) use the term “Conditional Construal of Conditional Probability”; Hajek and Hall (1994) discuss several versions of it. Using their terminology, we can say that what we defend is closest to the universal version and the universal tailoring version (Hajek and Hall 1994, 76).

Lewis’s result can be formulated as: there is no connective * such that P(X*Y) = P(Y|X) (for every P).

\({\text{P}}_{\text{U2}} ({\text{G}}_{1} | ({\text{G}}_{1} \vee {\text{R}}_{1}))\, {\text{and}}\, {\text{P}}_{\text{U2}} ({\text{G}}_{2} | ({\text{G}}_{2}\vee {\text{R}}_{2}))\) are arguments of these functions!

It can also be generalized in a straightforward way to the case of finitely (or countably infinitely) many atomic sentences.

We can model right-nested conditionals B → (C → D) with graphs in a straightforward way. Think of a toy example of drawing coloured balls, each of which is either Heavy or Light. The conditional “If the ball is Heavy, then if it is not White, it is Green” has two interpretations (deep and shallow), and the difference can be represented by the corresponding graphs, which exhibit the dynamics of the game. The Stalnaker Bernoulli account imposes the shallow interpretation, which is unnatural in some cases: for example, the model gives very counter-intuitive results for sentences like “If the match is wet, then if you strike it, it will light”. The causal random variable model in Kaufmann (2009) covers the deep interpretation (this interpretation agrees with McGee’s account in McGee (1989); without going into details, the deep interpretation imposes the Import–Export principle). But these are two different models, and an advantage of our model is that it can account in a uniform way for both the deep and the shallow interpretation of right-nested conditionals. The method can also be extended to longer right-nested conditionals A1 → (…(An−1 → An)), and to cases where the conditional operators occurring in a long conditional need different interpretations (i.e. some of them are deep and some are shallow). The rules for constructing the appropriate graphs are straightforward; this is impossible to achieve within the Stalnaker Bernoulli model (which by definition works only for the shallow interpretation), and we suppose that such mixed conditionals are not easy to cover within the causal model (which is designed for the deep interpretation). It is also possible to explain the interplay between the local/global and deep/shallow interpretations of the compound conditional. Due to limitations of space it is not possible to give the technical details here; they can be found in Wójtowicz and Wójtowicz (2019a).
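To make the contrast concrete, the Python sketch below computes two values for the wet-match sentence. The shallow value is given by the Stalnaker Bernoulli formula quoted earlier in these notes, P(CD | B) + P(C^c | B)P(D | C). The deep value is P(D | BC), obtained from Import–Export. The toy distribution is entirely hypothetical: a match is wet with probability 1/10 and is struck with probability 1/2, independently. It lights (with probability 9/10) only if it is dry and struck.

```python
from fractions import Fraction
from itertools import product

def toy_match_worlds():
    """Hypothetical joint distribution over (wet, strike, light)."""
    worlds = {}
    for wet, strike, light in product([True, False], repeat=3):
        p = (Fraction(1, 10) if wet else Fraction(9, 10)) * Fraction(1, 2)
        if strike and not wet:
            p *= Fraction(9, 10) if light else Fraction(1, 10)
        elif light:
            p = Fraction(0)  # a wet or unstruck match never lights
        worlds[(wet, strike, light)] = p
    return worlds

WORLDS = toy_match_worlds()

def prob(event) -> Fraction:
    return sum((p for w, p in WORLDS.items() if event(*w)), Fraction(0))

def cond(event, given) -> Fraction:
    return prob(lambda *w: event(*w) and given(*w)) / prob(given)

def wet(w, s, l): return w
def strike(w, s, l): return s
def light(w, s, l): return l

# Shallow reading (Stalnaker Bernoulli): P(CD | B) + P(not C | B) * P(D | C).
shallow = (cond(lambda *w: strike(*w) and light(*w), wet)
           + cond(lambda *w: not strike(*w), wet) * cond(light, strike))
# Deep reading (Import-Export): P(D | B and C).
deep = cond(light, lambda *w: wet(*w) and strike(*w))
```

Here the shallow value is 81/200, while the deep value is 0, which matches the intuition that a wet match will not light.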

The simplest presentation we know of the notions needed from Markov chain theory is in chapter 7 of Bertsekas and Tsitsiklis (2008). Our spider-fly example is a slight modification of the original one.

Formally, a Markov chain is a sequence of random variables \( X_0, \ldots, X_k, \ldots \) with values in the set of states S, which has the Markov property, i.e.

$$ P(X_{n+1} = j \mid X_0 = i_0, X_1 = i_1, \ldots, X_{n-1} = i_{n-1}, X_n = i) = p_{ij} $$

for all times n, all states i, j ∈ S, and all possible sequences \( i_0, i_1, \ldots, i_{n-1} \) of earlier states.

Indeed, in our case it is natural to think of some very concrete actions leading from state to state. But in general it is enough to say that a transition occurs with a certain probability.

So we identify the set of paths generated by the graph by, in a sense, reversing the problem: consider any string of actions, and ask whether it leads from START to an accepting state. Assume the fly starts in state 1, and consider four sequences of actions: (1) RWR; (2) RLRLRLWWL; (3) WWWR; (4) RRRR. We see that (1) RWR leads to the accepting state 3, so this path is in Ω*; (2) RLRLRLWWL leads to the accepting state 0, so this path is in Ω* as well; (3) WWWR leads to state 2 (i.e. the game has not finished yet), so this path is not in Ω*; (4) is not in Ω*: we land in the accepting state 3 already after two R’s, but there are still two R’s left, so the string is too long. The paths generated by the graph are exactly those sequences of actions which lead to an absorbing state in their final step. For formal definitions of a deterministic automaton and the language accepted by the automaton, see for instance Anderson (2006) or the classic Hopcroft et al. (2001).
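The acceptance test can be sketched in Python. The transition rule below is our assumption, reconstructed from the four examples: R moves the fly one state to the right, L moves it one state to the left, W leaves it where it is, and states 0 and 3 are absorbing. A string is accepted (belongs to Ω*) iff it reaches 0 or 3 exactly at its last symbol.

```python
ABSORBING = {0, 3}
MOVES = {"R": +1, "L": -1, "W": 0}  # assumed effect of each action on the fly's position

def final_state(actions: str, start: int = 1):
    """Follow the actions from `start`; return the final state, or None if the string
    continues after an absorbing state has already been reached."""
    state = start
    for action in actions:
        if state in ABSORBING:
            return None  # the game ended before the string did
        state += MOVES[action]
    return state

def accepted(actions: str, start: int = 1) -> bool:
    """True iff the string ends exactly when it first reaches an absorbing state."""
    return final_state(actions, start) in ABSORBING
```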

References

Adams, E. W. (1965). On the logic of conditionals. Inquiry, 8, 166–197.