Abstract

Topic modeling using models such as Latent Dirichlet Allocation (LDA) is a text mining technique to extract human-readable semantic “topics” (i.e., word clusters) from a corpus of textual documents. In software engineering, topic modeling has been used to analyze textual data in empirical studies (e.g., to find out what developers talk about online), but also to build new techniques to support software engineering tasks (e.g., to support source code comprehension). Topic modeling needs to be applied carefully (e.g., depending on the type of textual data analyzed and modeling parameters). Our study aims at describing how topic modeling has been applied in software engineering research with a focus on four aspects: (1) which topic models and modeling techniques have been applied, (2) which textual inputs have been used for topic modeling, (3) how textual data was “prepared” (i.e., pre-processed) for topic modeling, and (4) how generated topics (i.e., word clusters) were named to give them a human-understandable meaning. We analyzed topic modeling as applied in 111 papers from ten highly-ranked software engineering venues (five journals and five conferences) published between 2009 and 2020. We found that (1) LDA and LDA-based techniques are the most frequent topic modeling techniques, (2) developer communication and bug reports have been modeled most often, (3) data pre-processing and modeling parameters vary quite a bit and are often vaguely reported, and (4) manual topic naming (such as deducing names based on frequent words in a topic) is common.



1 Introduction

Text mining is about searching, extracting and processing text to provide meaningful insights from the text based on a certain goal. Techniques for text mining include natural language processing (NLP) to process, search and understand the structure of text (e.g., part-of-speech tagging), web mining to discover information resources on the web (e.g., web crawling), and information extraction to extract structured information from unstructured text and relationships between pieces of information (e.g., co-reference, entity extraction) (Miner et al. 2012). Text mining has been widely used in software engineering research (Bi et al. 2018), for example, to uncover architectural design decisions in developer communication (Soliman et al. 2016) or to link software artifacts to source code (Asuncion et al. 2010).

Topic modeling is a text mining and concept extraction method that extracts topics (i.e., coherent word clusters) from large corpora of textual documents to discover hidden semantic structures in text (Miner et al. 2012). An advantage of topic modeling over other techniques is that it helps analyze long texts (Treude and Wagner 2019; Miner et al. 2012), creates clusters as “topics” (rather than individual words) and is unsupervised (Miner et al. 2012).

Topic modeling has become popular in software engineering research (Sun et al. 2016; Chen et al. 2016). For example, Sun et al. (2016) found that topic modeling had been used to support source code comprehension, feature location and defect prediction. Additionally, Chen et al. (2016) found that many repository mining studies apply topic modeling to textual data such as source code and log messages to recommend code refactoring (Bavota et al. 2014b) or to localize bugs (Lukins et al. 2010).

Topic models such as Latent Semantic Indexing (LSI) (Deerwester et al. 1990) and the probabilistic Latent Dirichlet Allocation (LDA) (Blei et al. 2003b) discover topics in a corpus of textual documents using the statistical properties of word frequencies and co-occurrences (Lin et al. 2014). However, Agrawal et al. (2018) warn about systematic errors in the analysis of LDA topic models that limit the validity of topics. Lin et al. (2014) also advise that classical topic models usually generate sub-optimal topics when applied “as is” to small amounts of text or to short text documents.

Considering the limitations of topic modeling techniques and topic models on the one hand and their potential usefulness in software engineering on the other hand, our goal is to describe how topic modeling has been applied in software engineering research. In detail, we explore the following research questions:

-

RQ1. Which topic modeling techniques have been used and for what purpose? There are different topic modeling techniques (see Section 2), each with their own limitations and constraints (Chen et al. 2016). This RQ aims at understanding which topic modeling techniques have been used (e.g., LDA, LSI) and for what purpose studies applied such techniques (e.g., to support software maintenance tasks). Furthermore, we analyze the types of contributions in studies that used topic modeling (e.g., a new approach as a solution proposal, or an exploratory study).

-

RQ2. What are the inputs into topic modeling? Topic modeling techniques accept different types of textual documents and require the configuration of parameters (see Section 2.1). Carefully choosing parameters (such as the number of topics to be generated) is essential for obtaining valuable and reliable topics (Agrawal et al. 2018; Treude and Wagner 2019). This RQ aims at analyzing types of textual data (e.g., source code), actual documents (e.g., a Java class or an individual Java method) and configured parameters used for topic modeling to address software engineering problems.

-

RQ3. How are data pre-processed for topic modeling? Topic modeling requires that the analyzed text is pre-processed (e.g., by removing stop words) to improve the quality of the produced output (Aggarwal and Zhai 2012; Bi et al. 2018). This RQ aims at analyzing how previous studies pre-processed textual data for topic modeling, including the steps for cleaning and transforming text. This will help us understand whether there are specific pre-processing steps for certain topic modeling techniques or types of textual data.

-

RQ4. How are generated topics named? This RQ aims at analyzing if and how topics (word clusters) were named in studies. Giving meaningful names to topics may be difficult but may be required to help humans comprehend topics. For example, naming topics can provide a high-level view on topics discussed by developers on Stack Overflow (a Q&A website) (Barua et al. 2014) or by mobile app end users in tweets (Mezouar et al. 2018). Analysts (e.g., developers interested in what topics are discussed on Stack Overflow or in app reviews) can then look at the name of the topic (i.e., its “label”) rather than the cluster of words. These labels or names must capture the overarching meaning of all words in a topic. We describe different approaches to naming topics generated by a topic model, such as manual or automated labeling of clusters with names based on the most frequent words of a topic (Hindle et al. 2013).

In this paper, we provide an overview of the use of topic modeling in 111 papers published between 2009 and 2020 in highly ranked venues of software engineering (five journals and five conferences). We identify characteristics and limitations in the use of topic models and discuss (a) the appropriateness of topic modeling techniques, (b) the importance of pre-processing, (c) challenges related to defining meaningful topics, and (d) the importance of context when manually naming topics.

The rest of the paper is organized as follows. In Section 2 we provide an overview of topic modeling. In Section 3 we describe other literature reviews on the topic as well as “meta-studies” that discuss topic modeling more generally. We describe the research method in Section 4 and present the results in Section 5. In Section 6, we summarize our findings and discuss implications and threats to validity. Finally, in Section 7 we present concluding remarks and future work.

2 Topic Modeling

Topic modeling aims at automatically finding topics, typically represented as clusters of words, in a given textual document (Bi et al. 2018). Unlike (supervised) machine learning-based techniques that solve classification problems, topic modeling does not use tags, training data or predefined taxonomies of concepts (Bi et al. 2018). Based on the frequencies of words and frequencies of co-occurrence of words within one or more documents, topic modeling clusters words that are often used together (Barua et al. 2014; Treude and Wagner 2019). Figure 1 illustrates the general process of topic modeling, from a raw corpus of documents (“Data input”) to topics generated for these documents (“Output”). Below we briefly introduce the basic concepts and terminology of topic modeling (based on Chen et al. (2016)):

-

Word w: a string of one or more alphanumeric characters (e.g., “software” or “management”);

-

Document d: a set of n words (e.g., a text snippet with five words: \(w_1\) to \(w_5\));

-

Corpus C: a set of t documents (e.g., nine text snippets: \(d_1\) to \(d_9\));

-

Vocabulary V: a set of m unique words that appear in a corpus (e.g., m = 80 unique words across nine documents);

-

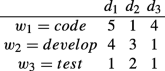

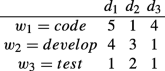

Term-document matrix A: an m by t matrix whose \(A_{i,j}\) entry is the weight (according to some weighting function, such as term frequency) of word \(w_i\) in document \(d_j\). For example, given a matrix A with three words and three documents as

\(A_{1,1} = 5\) indicates that “code” appears five times in \(d_1\), etc.;

-

Topic z: a collection of terms that co-occur frequently in the documents of a corpus. Considering probabilistic topic models (e.g., LDA), z refers to an m-length vector of probabilities over the vocabulary of a corpus. For example, in a vector \(z_1 = (\text{code}: 0.35; \text{test}: 0.17; \text{bug}: 0.08)\),

0.35 indicates that when a word is picked from topic \(z_1\), there is a 35% chance of drawing the word “code”, etc.;

-

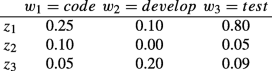

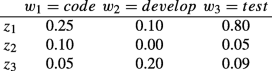

Topic-term matrix ϕ (or T): a k by m matrix with k as the number of topics and \(\phi_{i,j}\) as the probability of word \(w_j\) in topic \(z_i\). Row i of ϕ corresponds to \(z_i\). For example, given a matrix ϕ as

0.05 in the first column indicates that the word “code” appears with a probability of 5% in topic \(z_3\), etc.;

-

Topic membership vector \(\theta_{d_i}\): for document \(d_i\), a k-length vector of probabilities of the k topics. For example, given a vector \(\theta _{d_{i}} = (z_{1}: 0.25; z_{2}: 0.10; z_{3}: 0.08)\),

0.25 indicates that there is a 25% chance of selecting topic \(z_1\) in \(d_i\);

-

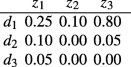

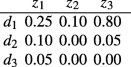

Document-topic matrix 𝜃 (or D): a t by k matrix with \(\theta_{i,j}\) as the probability of topic \(z_j\) in document \(d_i\). Row i of 𝜃 corresponds to \(\theta _{d_{i}}\). For example, given a matrix 𝜃 as

0.10 in the first column indicates that document \(d_2\) contains topic \(z_1\) with a probability of 10%, etc.
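To make this notation concrete, the following minimal Python sketch builds a term-frequency matrix A (rows: words, columns: documents) from a hypothetical three-document corpus, and computes the probability of a word in a document from a topic membership vector and a topic-term matrix as \(p(w \mid d) = \sum_k \theta_{d,k}\,\phi_{k,w}\). The corpus, the values of θ and ϕ, and the helper names `term_document_matrix` and `word_probability` are illustrative assumptions, not taken from any reviewed study.

```python
from collections import Counter

def term_document_matrix(docs):
    """Build an m-by-t term-frequency matrix A from t tokenized documents.

    Rows follow the sorted vocabulary V, so A[i][j] counts word V[i] in document j.
    """
    vocab = sorted({w for doc in docs for w in doc})
    counts = [Counter(doc) for doc in docs]
    matrix = [[c[w] for c in counts] for w in vocab]
    return vocab, matrix

def word_probability(theta_d, phi, word_index):
    """p(w | d) = sum over topics k of theta_d[k] * phi[k][w]."""
    return sum(theta_d[k] * phi[k][word_index] for k in range(len(theta_d)))

# Hypothetical toy corpus of t = 3 documents.
docs = [
    ["code", "code", "test", "bug"],
    ["test", "test", "bug"],
    ["code", "bug", "bug"],
]
vocab, A = term_document_matrix(docs)
print(vocab)  # ['bug', 'code', 'test']
print(A)      # [[1, 1, 2], [2, 0, 1], [1, 2, 0]]

# Hypothetical k = 2 model: one row of theta and a 2-by-3 phi over the same vocabulary.
theta_d = [0.75, 0.25]
phi = [[0.25, 0.5, 0.25], [0.5, 0.25, 0.25]]
print(word_probability(theta_d, phi, vocab.index("code")))  # 0.4375
```

Each row of ϕ and the vector θ sum to 1, so the mixture \(p(w \mid d)\) is itself a probability distribution over the vocabulary.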

2.1 Data Input

Data used as input into topic modeling can take many forms. This requires decisions on what exactly constitutes a document and what the scope of individual documents is (Miner et al. 2012). Therefore, we need to determine which unit of text shall be analyzed (e.g., subject lines of e-mails from a mailing list or the body of e-mails).

To model topics from raw text in a corpus C (see Fig. 1), the data needs to be converted into a structured vector-space model, such as the term-document matrix A. This typically also requires some pre-processing. Although each text mining approach (including topic modeling) may require specific pre-processing steps, there are some common steps, such as tokenization, stemming and removing stop words (Miner et al. 2012). We discuss pre-processing for topic modeling in more detail when presenting the results for RQ3 in Section 5.4.
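The common pre-processing steps named above (tokenization, stop-word removal and stemming), followed by construction of the term-document matrix A, can be sketched in a few lines of Python. The stop-word list, the example sentences and the crude suffix-stripping function `naive_stem` are simplifying assumptions for illustration; studies usually rely on established stop-word lists and stemmers (e.g., Porter's).

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; studies typically use larger published lists.
STOP_WORDS = {"the", "a", "an", "is", "in", "of", "to", "and", "when", "on", "this"}

def tokenize(text):
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def naive_stem(token):
    """Crude suffix stripping, standing in for a real stemmer such as Porter's."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Tokenize, remove stop words, then stem."""
    return [naive_stem(t) for t in tokenize(text) if t not in STOP_WORDS]

raw_docs = [
    "The test fails when parsing the config",
    "Parsing errors in the config loader",
]
docs = [preprocess(d) for d in raw_docs]
vocab = sorted({w for d in docs for w in d})
counts = [Counter(d) for d in docs]
A = [[c[w] for c in counts] for w in vocab]   # m-by-t term-document matrix
print(docs)   # [['test', 'fail', 'pars', 'config'], ['pars', 'error', 'config', 'loader']]
print(vocab)  # ['config', 'error', 'fail', 'loader', 'pars', 'test']
```

Stemming maps “fails” to “fail” and “parsing” to “pars”, so different inflections of a word fall into one row of A; the order of these steps is itself a design choice that can affect the resulting topics.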

2.2 Modeling

Different models can be used for topic modeling. Models typically differ in how they model topics and in their underlying assumptions. For example, besides LDA and LSI mentioned before, other topic modeling techniques include Probabilistic Latent Semantic Indexing (pLSI) (Hofmann 1999). LSI reduces the dimensionality of A using Singular Value Decomposition (SVD) (Deerwester et al. 1990), whereas pLSI replaces SVD with a probabilistic latent-class model fitted with Expectation-Maximization (Hofmann 1999). Furthermore, variants of LDA have been proposed, such as Relational Topic Models (RTM) (Chang and Blei 2010) and Hierarchical Topic Models (HLDA) (Blei et al. 2003a). RTM finds relationships between documents based on the generated topics (e.g., if document d1 contains the topic “microservices”, document d2 contains the topic “containers” and document dn contains the topic “user interface”, RTM may find a link between documents d1 and d2 (Chang and Blei 2010)). HLDA discovers a hierarchy of topics within a corpus, where each lower level in the hierarchy is more specific than the previous one (e.g., a higher topic “web development” may have subtopics such as “front-end” and “back-end”).

Topic modeling techniques need to be configured for a specific problem, objectives and characteristics of the analyzed text (Treude and Wagner 2019; Agrawal et al. 2018). For example, Treude and Wagner (2019) studied parameters, characteristics of text corpora and how the characteristics of a corpus impact the development of a topic modeling technique using LDA. Treude and Wagner (2019) found that textual data from Stack Overflow (e.g., threads of questions and answers) and GitHub (e.g., README files) require different configurations for the number of generated topics (k). Similarly, Barua et al. (2014) argued that the number of topics depends on the characteristics of the analyzed corpora. Furthermore, the values of modeling parameters (e.g., LDA’s hyperparameters α and β, the Dirichlet priors on the per-document topic distributions and the per-topic word distributions, respectively) can also be adjusted depending on the corpus to improve the quality of topics (Agrawal et al. 2018).

2.3 Output

By finding words that are often used together in documents in a corpus, a topic modeling technique creates clusters of words, or topics \(z_k\). Words in such a cluster are usually related in some way, therefore giving the topic a meaning. For example, we can use a topic modeling technique to extract five topics from unstructured documents such as a collection of Stack Overflow posts. One of the clusters generated could include the co-occurring words “error”, “debug” and “warn”. We can then manually inspect this cluster and by inference suggest the label “Exceptions” to name this topic (Barua et al. 2014).
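The first step of such manual naming, inspecting the most probable words of each topic, can be supported by listing the top-n words of each row of the topic-term matrix ϕ. The sketch below assumes a hypothetical vocabulary and ϕ; the helper `top_words` is illustrative, and the label itself is still chosen by a human analyst, as in the “Exceptions” example.

```python
def top_words(phi, vocab, n=3):
    """Return the n most probable words of each topic (each row of phi)."""
    result = []
    for row in phi:
        ranked = sorted(range(len(vocab)), key=lambda j: row[j], reverse=True)
        result.append([vocab[j] for j in ranked[:n]])
    return result

# Hypothetical vocabulary and topic-term matrix (each row sums to 1).
vocab = ["error", "debug", "warn", "layout", "button", "css"]
phi = [
    [0.30, 0.25, 0.20, 0.10, 0.10, 0.05],
    [0.05, 0.05, 0.05, 0.35, 0.30, 0.20],
]
candidates = top_words(phi, vocab)
print(candidates)  # [['error', 'debug', 'warn'], ['layout', 'button', 'css']]
# An analyst would then inspect ['error', 'debug', 'warn'] and pick a label
# such as "Exceptions"; that step is not automated here.
```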

3 Related Work

3.1 Previous Literature Reviews

Sun et al. (2016) and Chen et al. (2016), similar to our study, surveyed software engineering papers that applied topic modeling. Table 1 shows a comparison between our study and prior reviews. As shown in the table, Sun et al. (2016) focused on finding which software engineering tasks have been supported by topic models (e.g., support source code comprehension, feature location, traceability link recovery, refactoring, software testing, developer recommendations, software defects prediction and software history comprehension), and Chen et al. (2016) focused on characterizing how studies used topic modeling to mine software repositories.

Furthermore, as shown in Table 1, in comparison to Sun et al. (2016) and Chen et al. (2016), our study surveys the literature considering other aspects of topic modeling such as data inputs (RQ2), data pre-processing (RQ3), and topic naming (RQ4). Additionally, we searched for papers that applied topic models to any type of data (e.g., Q&A websites) rather than to data in software repositories. We also applied a different search process to identify relevant papers.

Although some of the search venues of these two previous studies and our study overlap, our search focused on specific venues. We also searched papers published between 2009 and 2020, a period which only partially overlaps with the searches presented by Sun et al. (2016) and Chen et al. (2016).

Regarding the data analyzed in previous studies, Chen et al. (2016) analyzed two aspects not covered in our study: (a) tools to implement topic models in papers, and (b) how papers evaluated topic models (note that even though we did not cover this aspect explicitly, we checked whether papers compared different topic models, and if so, what metrics they used to compare topic models). However, unlike Chen et al. (2016), we analyzed (a) the types of contribution of papers (e.g., a new approach); (b) details about the types of data and documents used in topic modeling techniques, and (c) whether and how topics were named. Additionally, we extend the survey of Chen et al. (2016) by investigating hyperparameters (see Section 2.1) of topic models and data pre-processing in more detail. We provide more details and a justification of our research method in Section 4.

3.2 Meta-studies on Topic Modeling

In addition to literature surveys, there are “meta-studies” on topic modeling that address and reflect on different aspects of topic modeling more generally (and are not considered primary studies for the purpose of our review, see our inclusion and exclusion criteria in Section 4). In the following paragraphs, we organize their discussion into three parts: (1) studies about parameters for topic modeling, (2) studies on topic models based on the type of analyzed data, and (3) studies about metrics and procedures to evaluate the performance of topic models. We refer to these studies throughout this manuscript when reflecting on the findings of our study.

Regarding parameters used for topic modeling, Treude and Wagner (2019) performed a broad study on LDA parameters to find optimal settings when analyzing GitHub and Stack Overflow text corpora. The authors found that popular rules of thumb for topic modeling parameter configuration were not applicable to their corpora, which required different configurations to achieve good model fit. They also found that it is possible to predict good configurations for unseen corpora reliably. Agrawal et al. (2018) also performed experiments on LDA parameter configurations and proposed LDADE, a tool to tune the LDA parameters. The authors found that due to LDA topic model instability, using standard LDA with “off-the-shelf” settings is not advisable. We also discuss parameters for topic modeling in Section 2.2.

For studies on topic models based on the analyzed data, researchers have investigated topic modeling involving short texts (e.g., a tweet) and how to improve the performance of topic models that work well with longer text (e.g., a book chapter) (Lin et al. 2014). For example, the study of Jipeng et al. (2020) compared short-text topic modeling techniques and developed an open-source library of the short-text models. Another example is the work of Mahmoud and Bradshaw (2017), who discussed topic modeling techniques specific to source code.

Finally, regarding metrics and procedures to evaluate the performance of topic models, some works have explored how semantically meaningful topics are for humans (Chang et al. 2009). For example, Poursabzi-Sangdeh et al. (2021) discuss the importance of interpretability of models in general (also considering other text mining techniques). Another example is the work of Chang et al. (2009), who presented a method for measuring the interpretability of a topic model based on how well words within topics are related and how distinct topics are from each other. On the other hand, to quantify the interpretability of topics without human evaluation, some studies developed topic coherence metrics. These metrics score the probability of a pair of words from a topic being found together in (a) external data sources (e.g., Wikipedia pages) or (b) the documents used by the model that generated those topics (Röder et al. 2015). Röder et al. (2015) combined different implementations of coherence metrics in a framework. Perplexity is another measure of performance for statistical models in natural language processing, which indicates the uncertainty in predicting a single word (Blei et al. 2003b). This metric is often applied to compare the configurations of a topic modeling technique (e.g., Zhao et al. (2020)). Other studies use perplexity as an indicator of model quality (such as Chen et al. 2019 and Yan et al. 2016b).
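Both kinds of metrics can be sketched briefly. Perplexity over N tokens is \(\exp\!\left(-\frac{1}{N}\sum_{d}\sum_{w \in d}\log p(w \mid d)\right)\) with \(p(w \mid d) = \sum_k \theta_{d,k}\,\phi_{k,w}\), and the UMass coherence measure (one of the measures covered by the framework of Röder et al. (2015)) sums \(\log\frac{D(w_i, w_j) + 1}{D(w_j)}\) over pairs of a topic's top words, where D counts the documents containing the given words and \(w_j\) ranks above \(w_i\). The model values, documents and function names are hypothetical, and for brevity the sketch scores perplexity on the same tokens rather than on held-out data, as evaluations usually do.

```python
import math

def perplexity(docs, theta, phi):
    """exp(-log-likelihood / N) for documents given as lists of word ids.

    Uses p(w | d) = sum_k theta[d][k] * phi[k][w]; lower values mean a better fit.
    """
    log_lik, n_tokens = 0.0, 0
    for d, doc in enumerate(docs):
        for w in doc:
            p = sum(theta[d][k] * phi[k][w] for k in range(len(phi)))
            log_lik += math.log(p)
            n_tokens += 1
    return math.exp(-log_lik / n_tokens)

def umass_coherence(top_words, docs):
    """UMass coherence of one topic; top_words must be ordered by probability
    and each must occur in at least one document (otherwise division by zero)."""
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words):
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for i in range(1, len(top_words)):
        for j in range(i):
            pair = doc_freq(top_words[i], top_words[j])
            score += math.log((pair + 1) / doc_freq(top_words[j]))
    return score

# A model that spreads probability evenly over two words has perplexity 2 (up to rounding).
print(perplexity([[0, 1, 1]], [[0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]))

docs = [["code", "test"], ["code", "bug"], ["code", "bug"]]
print(umass_coherence(["code", "bug"], docs))   # 0.0 (scores closer to 0 are more coherent)
print(umass_coherence(["code", "test"], docs))  # log(2/3), i.e. less coherent
```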

4 Research Method

We conducted a literature survey to describe how topic modeling has been applied in software engineering research. To answer the research questions introduced in Section 1, we followed general guidelines for systematic literature reviews (Kitchenham 2004) and mapping study methods (Petersen et al. 2015). This was to systematically identify relevant works and to ensure the traceability of our findings as well as the repeatability of our study. However, we do not claim to present a fully-fledged systematic literature review (e.g., we did not assess the quality of primary studies) or a mapping study (e.g., we only analyzed papers from carefully selected venues). Furthermore, we used parts of the procedures from other literature surveys on similar topics (Bi et al. 2018; Chen et al. 2016; Sun et al. 2016) as discussed throughout this section.

4.1 Search Procedure

To identify relevant research, we selected high-quality software engineering publication venues. This was to ensure that our literature survey includes studies of high quality that are described at a sufficient level of detail. We identified venues rated as A and A∗ for Computer Science and Information Systems research in the Excellence in Research for Australia (ERA) ranking (ARC 2012). Only one journal was rated B (IST), but we included it due to its relevance for software engineering research. These venues are a subset of venues also searched by related previous literature surveys (Chen et al. 2016; Sun et al. 2016), see Section 3. The list of searched venues includes five journals: (1) Empirical Software Engineering (EMSE); (2) Information and Software Technology (IST); (3) Journal of Systems and Software (JSS); (4) ACM Transactions on Software Engineering & Methodology (TOSEM); (5) IEEE Transactions on Software Engineering (TSE). Furthermore, we included five conferences: (1) International Conference on Automated Software Engineering (ASE); (2) ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM); (3) International Symposium on the Foundations of Software Engineering / European Software Engineering Conference (ESEC/FSE); (4) International Conference on Software Engineering (ICSE); (5) International Workshop/Working Conference on Mining Software Repositories (MSR).

We performed a generic search on SpringerLink (EMSE), Science Direct (IST, JSS), ACM DL (TOSEM, ESEC/FSE, ASE, ESEM, ICSE, MSR) and IEEE Xplore (TSE, ASE, ESEM, ICSE, MSR) using the venue (journal or conference) as a high-level filtering criterion. Considering that the proceedings of ASE, ESEM, ICSE and MSR are published by both ACM and IEEE, we searched these venues on ACM DL and IEEE Xplore to avoid missing relevant papers. We used a generic search string (“topic model[l]ing” and “topic model”). Furthermore, in order to find studies that apply specific topic models but do not mention the term “topic model”, we used a second search string with topic model names (“lsi” or “lda” or “plsi” or “latent dirichlet allocation” or “latent semantic”). This second string was based on the search string used by Chen et al. (2016), who also present a review and analysis of topic modeling techniques in software engineering (see Section 3). We applied both strings to the full text and metadata of papers. We considered works published between 2009 and 2020. The search was performed in March 2021. Limiting the search to the last twelve years allowed us to focus on more mature and recent works.
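The matching behavior of the two search strings can be illustrated with case-insensitive regular expressions. This is only an approximation: each digital library (SpringerLink, Science Direct, ACM DL, IEEE Xplore) uses its own query syntax, and the word-boundary handling below (so that, for instance, “lda” inside another word does not match) is an assumption of this sketch, not a documented property of those search engines. The function name `matched_string` is hypothetical.

```python
import re

# Illustrative only: each digital library has its own query syntax.
GENERIC = re.compile(r"\btopic model", re.IGNORECASE)  # topic model(s), modeling, modelling
SPECIFIC = re.compile(
    r"\b(?:lsi|lda|plsi|latent dirichlet allocation|latent semantic)\b",
    re.IGNORECASE,
)

def matched_string(text):
    """Return which search string a paper's text matches first, or None."""
    if GENERIC.search(text):
        return "first"
    if SPECIFIC.search(text):
        return "second"
    return None

print(matched_string("We apply topic modelling to commit messages"))  # first
print(matched_string("An LDA-based bug triaging approach"))           # second
print(matched_string("Esmeralda: a code review tool"))                # None
```

Checking the generic string first mirrors the fact that the second string was used to find additional papers not already retrieved by the first.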

4.2 Study Selection Criteria

We only considered full research papers since full papers typically report (a) mature and complete research, and (b) more details about how topic modeling was applied. Furthermore, to be included, a paper should either apply, experiment with, or propose a topic modeling technique (e.g., develop a topic modeling technique that analyzes source code to recommend refactorings (Bavota et al. 2014b)), and meet none of the exclusion criteria: (a) the paper does not apply topic models (e.g., it applies other text mining techniques and only cites topic modeling in related or future work, such as the paper by Lian et al. (2020)); (b) the paper focuses on theoretical foundation and configurations for topic models (e.g., it discusses how to tune and stabilize topic models, such as Agrawal et al. (2018) and other meta-studies listed in Section 3.2); and (c) the paper is a secondary study (e.g., a literature review like the studies discussed in Section 3.1). We evaluated inclusion and exclusion criteria by first reading the abstracts and then reading the full texts.

The search with the first search string (see Section 4.1) resulted in 215 papers and the search with the second search string resulted in an additional 324 papers. Applying the filtering outlined above resulted in 114 papers. Furthermore, we excluded three papers from the final set of papers: (a) Hindle et al. (2011), (b) Chen et al. (2012), and (c) Alipour et al. (2013). These papers were earlier and shorter versions of follow-up publications; we considered only the latest publications of these papers (Hindle et al. 2013; Chen et al. 2017; Hindle et al. 2016). This resulted in a total of 111 papers for analysis.

4.3 Data Extraction and Synthesis

We defined data items to answer the research questions and characterize the selected papers (see Table 2). The extracted data was recorded in a spreadsheet for analysis (raw data are available online; see Footnote 1). One of the authors extracted the data and the other authors reviewed it. In case of ambiguous data, all authors discussed it until reaching agreement. To synthesize the data, we applied descriptive statistics and qualitatively analyzed the data as follows:

-

RQ1: Regarding the data item “Technique”, we identified the topic modeling techniques applied in papers. For the data item “Supported tasks”, we assigned to each paper one software engineering task. Tasks emerged during the analysis of papers (see more details in Section 5.2.2). We also identified the general study outcome in relation to its goal (data item “Type of contribution”). When analyzing the type of contribution, we also checked whether papers included a comparison of topic modeling techniques (e.g., to select the best technique to be included in a newly proposed approach). Based on these data items we checked which techniques were the most popular, whether techniques were based on other techniques or used together, and for what purpose topic modeling was used.

-

RQ2: We identified types of data (data item “Type of data”) in selected papers as listed in Section 5.3.1. Considering that some papers addressed one, two or three different types of data, we counted the frequency of each type of data and related it to the documents analyzed. Regarding “Document”, we identified the textual document and (if reported in the paper) its length. For the data item “Parameters”, we identified whether papers described modeling parameters and if so, which values were assigned to them.

-

RQ3: Considering that some papers may not have mentioned any pre-processing, we first checked which papers described data pre-processing. Then, we listed all pre-processing steps found and counted their frequencies.

-

RQ4: Considering the papers that described topic naming, we analyzed how generated topics were named (see Section 5.5). We used three types of approaches to describe how topics were named: (a) Manual - manual analysis and labeling of topics; (b) Automated - use of automated approaches to assign names to topics; and (c) Manual & Automated - a mix of both manual and automated approaches to analyze and name topics. We also described the procedures performed to name topics.

5 Results

5.1 Overview

As mentioned in Section 4.1, we analyzed 111 papers published between 2009 and 2020 (see Appendix A.1 - Papers Reviewed). Most papers were published after 2013. Furthermore, most papers were published in journals (68 papers in total, 32 in EMSE alone), while the remaining 43 papers appeared in conferences (mostly MSR with sixteen papers). Table 3 shows the number of papers by venue and year.

5.2 RQ1: Topic Models Used

In this section, we first discuss which topic modeling techniques are used (Section 5.2.1). Then, we explore why or for what purpose these techniques were used (Section 5.2.2). Finally, we describe the general contributions of papers in relation to their goals (Section 5.2.3).

5.2.1 Topic Modeling Techniques

The majority of the papers used LDA (80 out of 111) or an LDA-based technique (30 out of 111), such as Twitter-LDA (Zhao et al. 2011). The only other topic modeling technique used is LSI. Figure 2 shows the number of papers per topic modeling technique. The total number (125) exceeds the number of papers reviewed (111), because ten papers experimented with more than one technique: Thomas et al. (2013), De Lucia et al. (2014), Binkley et al. (2015), Tantithamthavorn et al. (2018), Abdellatif et al. (2019) and Liu et al. (2020) experimented with LDA and LSI; Chen et al. (2014) experimented with LDA and Aspect and Sentiment Unification Model (ASUM); Chen et al. (2019) experimented with Labeled Latent Dirichlet Allocation (LLDA) and Label-to-Hierarchy Model (L2H); Rao and Kak (2011) experimented with LDA and MLE-LDA; and Hindle et al. (2016) experimented with LDA and LLDA. ASUM, LLDA, MLE-LDA and L2H are techniques based on LDA.

The popularity of LDA in software engineering has also been discussed by others, e.g., Treude and Wagner (2019). LDA is a three-level hierarchical Bayesian model (Blei et al. 2003b). LDA defines several parameters, such as α (the Dirichlet prior that controls the distribution of topics per document), β (the Dirichlet prior that controls the distribution of words per topic) and k (the number of topics to be generated) (Agrawal et al. 2018).
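LDA's three levels can be made concrete by simulating its generative story: each topic draws a word distribution \(\phi_z \sim \mathrm{Dirichlet}(\beta)\), each document draws a topic mixture \(\theta_d \sim \mathrm{Dirichlet}(\alpha)\), and each word position first draws a topic \(z \sim \theta_d\) and then a word \(w \sim \phi_z\) (Blei et al. 2003b, here in the smoothed form that also places a Dirichlet prior on each topic's word distribution). The sketch assumes symmetric priors and a fixed document length (the original model draws the length from a Poisson distribution); the vocabulary, parameter values and function names are hypothetical.

```python
import random

def sample_dirichlet(concentration, dim, rng):
    """Draw from a symmetric Dirichlet distribution via normalized Gamma variates."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(dim)]
    total = sum(draws)
    return [x / total for x in draws]

def generate_corpus(n_docs, doc_len, vocab, k, alpha, beta, seed=0):
    """Generate a toy corpus following LDA's generative story.

    Simplification: every document has the same length doc_len.
    """
    rng = random.Random(seed)
    # One word distribution phi_z per topic, drawn from Dirichlet(beta).
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(k)]
    docs, thetas = [], []
    for _ in range(n_docs):
        theta = sample_dirichlet(alpha, k, rng)  # this document's topic mixture
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(k), weights=theta)[0]        # choose a topic
            doc.append(rng.choices(vocab, weights=phi[z])[0])  # choose a word from it
        docs.append(doc)
        thetas.append(theta)
    return docs, thetas, phi

docs, thetas, phi = generate_corpus(
    n_docs=3, doc_len=8, vocab=["code", "test", "bug", "button", "layout"],
    k=2, alpha=0.5, beta=0.5, seed=42,
)
for doc, theta in zip(docs, thetas):
    print([round(p, 2) for p in theta], doc)
```

Topic modeling runs this story in reverse: given only the documents, inference estimates θ and ϕ. Smaller values of `alpha` concentrate each document on fewer topics, and smaller values of `beta` concentrate each topic on fewer words.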

Thirty-seven (out of 75) papers applied LDA with Gibbs Sampling (GS). Gibbs sampling is a Markov Chain Monte Carlo algorithm that samples from conditional distributions of a target distribution. Used with LDA, it is an approximate stochastic procedure for inferring the topic assignments of words, from which the document-topic (𝜃) and topic-word (ϕ) distributions are estimated while α and β are kept fixed (Griffiths and Steyvers 2004). According to experiments conducted by Layman et al. (2016), Gibbs sampling for LDA parameter estimation resulted in lower perplexity than Variational Expectation-Maximization (VEM) estimation. Perplexity is a standard measure of performance for statistical models of natural language, which indicates the uncertainty in predicting a single word. Therefore, lower values of perplexity mean better model performance (Griffiths and Steyvers 2004).
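As a sketch of what Gibbs sampling does in LDA, the following is a minimal collapsed Gibbs sampler in the style of Griffiths and Steyvers (2004), written in plain Python. Each token's topic is resampled from \(p(z_i = k \mid z_{-i}, w) \propto \frac{n_{k,w} + \beta}{n_k + V\beta}\,(n_{d,k} + \alpha)\), where the counts exclude the token being resampled; 𝜃 and ϕ are then read off the final counts. The hyperparameter defaults, the toy corpus of word ids and the function name `lda_gibbs` are assumptions for illustration. A practical implementation would typically add burn-in, convergence checks and possibly hyperparameter optimization, which this sketch omits.

```python
import random

def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Collapsed Gibbs sampling for LDA, following Griffiths and Steyvers (2004).

    docs: list of documents, each a list of word ids 0..V-1.
    alpha, beta: fixed symmetric Dirichlet hyperparameters.
    Returns (theta, phi): document-topic and topic-word estimates.
    """
    rng = random.Random(seed)
    V = 1 + max(w for doc in docs for w in doc)
    n_dk = [[0] * n_topics for _ in docs]        # topic counts per document
    n_kw = [[0] * V for _ in range(n_topics)]    # word counts per topic
    n_k = [0] * n_topics                         # total tokens per topic
    z = []                                       # current topic of every token

    # Random initial topic assignment for every token.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(n_topics)
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Take the token out of the counts ...
                k = z[d][i]
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # ... resample its topic from the full conditional ...
                weights = [
                    (n_kw[t][w] + beta) / (n_k[t] + V * beta) * (n_dk[d][t] + alpha)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                # ... and add it back under the sampled topic.
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1

    theta = [
        [(n_dk[d][k] + alpha) / (len(doc) + n_topics * alpha) for k in range(n_topics)]
        for d, doc in enumerate(docs)
    ]
    phi = [
        [(n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in range(V)]
        for k in range(n_topics)
    ]
    return theta, phi

# Hypothetical corpus: word ids 0-2 and 3-5 come from two different "themes".
docs = [[0, 1, 2, 0, 1], [1, 2, 0, 2], [3, 4, 5, 3], [4, 5, 3, 5, 4]]
theta, phi = lda_gibbs(docs, n_topics=2)
for row in theta:
    print([round(p, 2) for p in row])
```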

Thirty papers applied modified or extended versions of LDA (“LDA-based” in Fig. 2). Table 4 shows a comparison between these LDA-based techniques. Eleven papers proposed a new extension of LDA to adapt LDA to software engineering problems (hence the same reference in the third and fourth column of Table 4). For example, Xia et al. (2017b) proposed the Multi-feature Topic Model (MTM), which implements a supervised version of LDA, to create a bug triaging approach. The other 19 papers applied existing modifications of LDA proposed by others (third column in Table 4). For example, Hu and Wong (2013) used the Citation Influence Topic Model (CITM), developed by Dietz et al. (2007), which models the influence of citations in a collection of publications.

The other topic modeling technique, LSI (Deerwester et al. 1990), was published in 1990, before LDA, which was published in 2003. LSI is an information retrieval technique that reduces the dimensionality of a term-document matrix using a reduction factor k (number of topics) (Deerwester et al. 1990). Compared to LSI, LDA follows a generative process that is statistically more rigorous (Blei et al. 2003b; Griffiths and Steyvers 2004). Of the 16 papers that used LSI, seven papers compared this technique to others:

-

One paper (Rosenberg and Moonen 2018) compared LSI with two other dimensionality reduction techniques: Principal Component Analysis (PCA) (Wold et al. 1987) and Non-Negative Matrix Factorization (NMF) (Lee and Seung 1999). The authors applied these models to automatically group log messages of continuous deployment runs that failed for the same reasons.

-

Four papers applied LDA and LSI at the same time to compare the performance of these models to the Vector Space Model (VSM) (Salton et al. 1975), an algebraic model for information extraction. These studies supported documentation (De Lucia et al. 2014), bug handling (Thomas et al. 2013; Tantithamthavorn et al. 2018), and maintenance tasks (Abdellatif et al. 2019).

-

Regarding the other two papers, Binkley et al. (2015) compared LSI to Query likelihood LDA (QL-LDA) and other information extraction techniques to find the best model for locating features in source code, and Liu et al. (2020) compared LSI and LDA to the Generative Vector Space Model (GVSM), a deep learning technique, to select the best-performing model for tracing documentation to source code in multilingual projects.
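LSI's dimensionality reduction can be sketched as a truncated singular value decomposition (SVD) of the term-document matrix that keeps only the k largest singular values. The NumPy sketch below is illustrative only. The toy count matrix, the function names, and the use of cosine similarity to rank documents are our assumptions, and real applications often weight the matrix (e.g., with tf-idf) first. A new query, such as a bug report, is "folded in" by projecting it onto the truncated term vectors, so that it can be compared with documents in the reduced space.

```python
import numpy as np

def lsi(term_doc, k):
    """Rank-k LSI of a (terms x documents) matrix via truncated SVD."""
    u, s, vt = np.linalg.svd(term_doc, full_matrices=False)
    u_k, s_k = u[:, :k], s[:k]
    doc_vectors = vt[:k, :].T            # row j: document j in the k-dim latent space
    return u_k, s_k, doc_vectors

def fold_in(term_vector, u_k, s_k):
    """Project a term vector into the latent space: inverse(S_k) @ U_k.T @ q."""
    return (u_k.T @ term_vector) / s_k

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy term-document counts: 5 terms (rows) x 4 documents (columns).
X = np.array([[2, 0, 1, 0],
              [1, 0, 0, 0],
              [0, 3, 0, 1],
              [0, 1, 0, 2],
              [1, 0, 2, 0]], dtype=float)
u_k, s_k, docs_k = lsi(X, k=2)
query = np.array([1, 1, 0, 0, 0], dtype=float)   # a query that uses terms 0 and 1
q_k = fold_in(query, u_k, s_k)
ranking = sorted(range(X.shape[1]), key=lambda j: -cosine(q_k, docs_k[j]))
print(ranking)
```

Folding in one of the original document columns reproduces that document's row of `docs_k`, so queries and documents are compared in the same coordinates.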

5.2.2 Supported Tasks

As mentioned before, we aimed to understand why topic modeling was used in papers, e.g., if topic modeling was used to develop techniques to support specific software engineering tasks, or if it was used as a data analysis technique in exploratory studies to understand the content of large amounts of textual data. We found that the majority of papers aimed at supporting a particular task, but 21 papers (see Table 5) used topic modeling in empirical exploratory and descriptive studies as a data analysis technique.

We extracted the software engineering tasks described in each study (e.g., bug localization, bug assignment, bug triaging) and then grouped them into eight more generic tasks (e.g., bug handling) considering typical software development activities such as requirements, documentation and maintenance (Leach 2016). The specific tasks collected from papers are available online (see Footnote 1). Note that we kept “Bug handling” and “Refactoring” separate rather than merging them into maintenance because of the number of papers (bug handling) and the cross-cutting nature (refactoring) of these categories. Each paper was related to one of these tasks:

-

Architecting: tasks related to architecture decision making, such as selection of cloud or mash-up services (e.g., Belle et al. (2016));

-

Bug handling: bug-related tasks, such as assigning bugs to developers, prediction of defects, finding duplicate bugs, or characterizing bugs (e.g., Naguib et al. (2013));

-

Coding: tasks related to coding, e.g., detection of similar functionalities in code, reuse of code artifacts, prediction of developer behaviour (e.g., Damevski et al. (2018));

-

Documentation: supporting software documentation, e.g., by localizing features in documentation or generating documentation automatically (e.g., Souza et al. (2019));

-

Maintenance: software maintenance-related activities, such as checking the consistency of software versions or investigating changes to or the use of a system (e.g., Silva et al. (2019));

-

Refactoring: supporting refactoring, such as identifying refactoring opportunities and removing bad smells from source code (e.g., Bavota et al. (2014b));

-

Requirements: related to software requirements evolution or recommendation of new features (e.g., Galvis Carreno and Winbladh (2012));

-

Testing: related to identification or prioritization of test cases (e.g., Thomas et al. (2014)).

Table 5 groups papers based on the topic modeling technique and the purpose. Few papers applied topic modeling to support Testing (three papers) and Refactoring (three papers). Bug handling is the most frequent supported task (33 papers). From the 21 exploratory studies, 13 modeled topics from developer communication to identify developers’ information needs: 12 analyzed posts on Stack Overflow, a Q&A website for developers (Chatterjee et al. 2019; Bajaj et al. 2014; Ye et al. 2017; Bagherzadeh and Khatchadourian 2019; Ahmed and Bagherzadeh 2018; Barua et al. 2014; Rosen and Shihab 2016; Zou et al. 2017; Chen et al. 2019; Han et al. 2020; Abdellatif et al. 2020; Haque and Ali Babar 2020) and one paper analyzed blog posts (Pagano and Maalej 2013). Regarding the other eight exploratory studies, three papers investigated web search queries to also identify developers’ information needs (Xia et al. 2017a; Bajracharya and Lopes 2009; 2012); four papers investigated end user documentation to analyse users’ feedback on mobile apps (Tiarks and Maalej 2014; El Zarif et al. 2020; Noei et al. 2018; Hu et al. 2018); and one paper investigated historical “bug” reports of NASA systems to extract trends in testing and operational failures (Layman et al. 2016).

5.2.3 Types of Contribution

For each study, we identified what type of contribution it presents based on the study goal. We used three types of contributions (“Approach”, “Exploration” and “Comparison”, as described below) by analyzing the research questions and main results of each study. A study could contribute either an “Approach” or an “Exploration”, while “Comparison” is orthogonal, i.e., a study that presents a new approach could present a comparison of topic models as part of this contribution. Similarly, a comparison of topic models can also be part of an exploratory study.

-

Approach: a study develops an approach (e.g., technique, tool, or framework) to support software engineering activities based on or with the support of topic models. For example, Murali et al. (2017) developed a framework that applies LDA to Android API methods to discover types of API usage errors, while Le et al. (2017) developed a technique (APRILE+) for bug localization which combines LDA with a classifier and an artificial neural network.

-

Exploration: a study applies topic modeling as the technique to analyze textual data collected in an empirical study (in contrast to, for example, open coding). Studies that contributed an exploration did not propose an approach as described in the previous item, but focused on getting insights from data. For example, Barua et al. (2014) applied LDA to Stack Overflow posts to discover what software engineering topics were frequently discussed by developers; Noei et al. (2018) explored the evolution of mobile applications by applying LDA to app descriptions, release notes, and user reviews.

-

Comparison: the study (which can also contribute an “Approach” or an “Exploration”) compares topic models to other approaches. For example, Xia et al. (2017b) compared their bug triaging approach (based on the so-called Multi-feature Topic Model - MTM) with similar approaches that apply machine learning (Bugzie (Tamrawi et al. 2011)) and SVM-LDA (combining a classifier with LDA (Somasundaram and Murphy 2012)). On the other hand, De Lucia et al. (2014) compared LDA and LSI to define guidelines on how to build effective automatic text labeling techniques for program comprehension.

From the papers that contributed an approach, twenty-two combined a topic modeling technique with one or more other techniques applied for text mining:

-

Information extraction (e.g., VSM) (Nguyen et al. 2012; Zhang et al. 2018; Chen et al. 2020; Thomas et al. 2013; Fowkes et al. 2016);

-

Classification (e.g., Support Vector Machine - SVM) (Hindle et al. 2013; Le et al. 2017; Liu et al. 2017; Demissie et al. 2020; Zhao et al. 2020; Shimagaki et al. 2018; Gopalakrishnan et al. 2017; Thomas et al. 2013);

-

Clustering (e.g., K-means) (Jiang et al. 2019; Cao et al. 2017; Liu et al. 2017; Zhang et al. 2016; Altarawy et al. 2018; Demissie et al. 2020; Gorla et al. 2014);

-

Structured prediction (e.g., Conditional Random Field - CRF) (Ahasanuzzaman et al. 2019);

-

Artificial neural networks (e.g., Recurrent Neural Network - RNN) (Murali et al. 2017; Le et al. 2017);

-

Evolutionary algorithms (e.g., Multi-Objective Evolutionary Algorithm - MOEA) (Blasco et al. 2020; Pérez et al. 2018);

-

Web crawling (Nabli et al. 2018).
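One common way to combine a topic model with clustering is to treat each document's topic distribution (a row of 𝜃) as a feature vector and to cluster these vectors. The plain-Python sketch below runs Lloyd's k-means over hypothetical 𝜃 rows. It is not the pipeline of any specific paper listed above, and the initial centers are chosen by hand for reproducibility.

```python
def kmeans(points, centers, max_iter=20):
    """Lloyd's k-means: assign points to the nearest center, then move centers to the mean."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    centers = [list(c) for c in centers]          # copy, so the inputs are not modified

    def assign():
        return [min(range(len(centers)), key=lambda c: sq_dist(p, centers[c]))
                for p in points]

    labels = assign()
    for _ in range(max_iter):
        for c in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:                           # keep the previous center if a cluster is empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
        new_labels = assign()
        if new_labels == labels:                  # converged
            break
        labels = new_labels
    return labels

# Hypothetical document-topic distributions (rows of theta) for a 3-topic model.
theta = [[0.80, 0.15, 0.05],
         [0.75, 0.20, 0.05],
         [0.10, 0.10, 0.80],
         [0.05, 0.15, 0.80],
         [0.70, 0.25, 0.05]]
print(kmeans(theta, centers=[theta[0], theta[2]]))  # [0, 0, 1, 1, 0]
```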

Pagano and Maalej (2013) was the only study that contributed an exploration that combined LDA with another text mining technique. To analyze how developer communities use blogs to share information, the authors applied LDA to extract keywords from blog posts and then analyzed related “streams of events” (commit messages and releases by time in relation to blog posts), which were created with sequential pattern mining.

Regarding comparisons, we found that (1) 13 out of the 63 papers that contribute an approach also include some form of comparison, and (2) ten out of the 48 papers that contribute an exploration also include some form of comparison. We discuss comparisons in more detail in Section 6.1.2.

5.3 RQ2: Topic Model Inputs

In this section we first discuss the type of data (Section 5.3.1). Then we discuss the actual textual documents used for topic modeling (Section 5.3.2). Finally, we describe which model parameters were used (Section 5.3.3) to configure models.

5.3.1 Types of Data

Types of data help us describe the textual software engineering content that has been analyzed with topic modeling. We identified 12 types of data in selected papers as shown in Table 6. In some papers we identified two or three of these types of data; for example, the study of Tantithamthavorn et al. (2018) dealt with issue reports, log information and source code.

Source code (37 occurrences), issue/bug reports (22 occurrences) and developer communication (20 occurrences) were the most frequent types of data used. Seventeen papers used two to four types of data in their topic modeling technique; twelve of these papers used a combination of source code with another type of data. For example, Sun et al. (2015) generated topics from source code and developer communication to support software maintenance tasks, and in another study, Sun et al. (2017) used topics found in source code and commit messages to assign bug-fixing tasks to developers.

5.3.2 Documents

A document refers to a piece of textual data that can be longer or shorter, such as a requirements document or a single e-mail subject. Documents are concrete instances of the types of data discussed above. Figure 3 shows documents (per type of data) and how often we found them in papers. The most frequent documents are bug reports (12 occurrences), methods from source code (9 occurrences), Q&A posts (9 occurrences) and user reviews (8 occurrences).

We also analyzed document length and found the following:

-

In general, papers described the length of documents in number of words, see Table 7.Footnote 2 On the other hand, two papers (Moslehi et al. 2016, 2020) described their documents’ length in minutes of screencast transcriptions (videos with one to ten minutes, no information about the size of transcripts). Sixteen papers mentioned the actual length of the documents, see Table 7. Ten papers that described the actual document length did that when describing the data used for topic modeling; four papers discussed document length while describing results; and one mentioned document length as a metric for comparing different data sources;

-

Most papers (80 out of 111) did not mention document length, nor did they acknowledge any limitations or the impact of document length on topics.

-

Fifteen papers did not mention the actual document length, but at some point acknowledged the influence of document length on topic modeling. For example, Abdellatif et al. (2019) mentioned that the documents in their data set were “not long”. Similarly, Yan et al. (2016b) did not mention the length of the bug reports used but discussed the impact of the vocabulary size of their corpus on results. Moslehi et al. (2018) mentioned document length as a limitation and acknowledged that using LDA on short documents was a threat to construct validity. According to these authors, using techniques specific to short documents could have improved the outcomes of their topic modeling.

5.3.3 Model Parameters

Topic models can be configured with parameters that impact how topics are generated. For example, LDA has typically been used with symmetric Dirichlet priors over 𝜃 (document-topic distributions) and ϕ (topic-word distributions) with fixed values for α and β (Wallach et al. 2009). Wallach et al. (2009) explored the robustness of a topic model with asymmetric priors over 𝜃 (i.e., varying values for α) and a symmetric prior (fixed value for β) over ϕ. Their study found that such a topic model can capture more distinct and semantically related topics, i.e., the words in clusters are more distinct. Therefore, we checked which parameters and values were used in papers. Overall, we found the following:

-

Eighteen of the 111 papers did not mention parameters (e.g., number of topics k, hyperparameters α and β). Thirteen of these papers used LDA or an LDA-based technique, four used LSI, and Liu et al. (2020) used both LDA and LSI.

-

The remaining 93 papers mention at least one parameter. The most frequent parameters discussed were k, α and β:

-

Fifty-eight papers mentioned actual values for k, α and β;

-

Two papers mentioned actual values for α and β, but no values for k;

-

Twenty-nine papers included actual values for k but not for α and β;

-

Thirty-two (out of 58) papers mentioned other parameters in addition to k, α and β. For example, Chen et al. (2019) applied L2H (in comparison to LLDA), which uses the hyperparameters γ1 and γ2;

-

One paper (Rosenberg and Moonen 2018) that applied LSI mentioned the parameter “similarity threshold” rather than k, α and β.

-

We then had a closer look at the 60 papers that mentioned actual values for hyperparameters α and β:

-

α based on k: The most frequent setting (29 papers) was α = 50/k and β = 0.01 (i.e., α was depending on the number of topics, a strategy suggested by Steyvers and Griffiths (2010) and Wallach et al. (2009)). These values are a default setting in Gibbs Sampling implementations for LDA such as Mallet.Footnote 3

-

Fixed α and β: Five papers set both hyperparameters to 0.01, as suggested by Hoffman et al. (2010). Another eight papers set both hyperparameters to 0.1, a default setting in the Stanford Topic Modeling Toolbox (TMT);Footnote 4 and three other papers fixed α = 0.1 and β = 1 (these three studies applied RTM).

-

Varying α or β: Four papers tested different values for α, where two of these papers also tested different values for β; and one paper varied β but fixed a value for α.

-

Optimized parameters: Four papers obtained optimized values for hyperparameters (Sun et al. 2015; Catolino et al. 2019; Yang et al. 2017; Zhang et al. 2018). These papers applied LDA-GA (as proposed by Panichella et al. (2013)), which uses genetic algorithms to find the best values for LDA hyperparameters. Regarding the actual values chosen for optimized hyperparameters, Catolino et al. (2019) did not mention the values for hyperparameters; Sun et al. (2015) and Yang et al. (2017) mentioned only the values used for k; and Zhang et al. (2018) described the values for k, α and β.
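The effect of the α settings above can be illustrated by sampling document-topic distributions 𝜃 from a Dirichlet prior. The standard-library Python sketch below is only an illustration. The helper names and the asymmetric α values are our own choices, and α = 50/k is included only because it is the heuristic reported above. With α = 50/k, every topic receives the same α value, which gives a symmetric prior whose strength depends on k. An asymmetric prior, as studied by Wallach et al. (2009), gives each topic its own α value, and this shifts the expected topic proportions (E[𝜃_i] = α_i / Σ_j α_j).

```python
import random

def alpha_from_k(k):
    """Heuristic alpha = 50/k, the same value for every topic."""
    return 50.0 / k

def sample_dirichlet(alphas, rng):
    """Draw one probability vector from Dirichlet(alphas) via normalized Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [x / total for x in draws]

def mean_theta(alphas, n_samples=20000, seed=0):
    """Monte Carlo estimate of E[theta]; analytically alphas[i] / sum(alphas)."""
    rng = random.Random(seed)
    sums = [0.0] * len(alphas)
    for _ in range(n_samples):
        for i, p in enumerate(sample_dirichlet(alphas, rng)):
            sums[i] += p
    return [s / n_samples for s in sums]

k = 4
symmetric = [alpha_from_k(k)] * k      # 12.5 for each of the 4 topics
asymmetric = [2.0, 1.0, 0.5, 0.5]      # hypothetical asymmetric prior
print(mean_theta(symmetric))   # each entry close to 0.25
print(mean_theta(asymmetric))  # close to [0.5, 0.25, 0.125, 0.125]
```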

Regarding the values for k we observed the following:

-

The 90 papers that mentioned values for k modeled three (Cao et al. 2017) to 500 (Li et al. 2018; Lukins et al. 2010; Chen et al. 2017) topics;

-

Twenty-four (out of 90) papers mentioned that a range of values for k was tested in order to check the performance of the technique (e.g., Xia et al. (2017b)) or as a strategy to select the best number of topics (e.g., Layman et al. (2016));

-

Although the remaining 66 (out of 90) papers mentioned a single value for k, most of them acknowledged that they had tried several numbers of topics or used the number of topics suggested by other studies.

As can be seen in Table 7, there is no common trend in the values chosen for hyperparameters or k depending on the type of document or the document length.

5.4 RQ3: Pre-processing Steps

Thirteen of the papers did not mention what pre-processing steps were applied to the data before topic modeling. Seven papers only described how the data analyzed were selected, but not how they were pre-processed. Table 8 shows the pre-processing steps found in the remaining 91 papers. Each of these papers mentioned at least one of these steps.

Removing noisy content (76 occurrences), Stemming terms (61 occurrences) and Splitting terms (33 occurrences) were the most used pre-processing steps. The least frequent pre-processing step (Resolving negations) was found only in the studies of Noei et al. (2019) and Noei et al. (2018). Resolving synonyms and Expanding contractions were also less frequent, with three occurrences each.

Table 9 shows the types of noise removal in papers and their frequency. Most of the papers that described pre-processing steps removed stop words (76 occurrences). Stop words are the most common words in a language, such as “a/an” and “the” in English. Removing stop words allows topic modeling techniques to focus on more meaningful words in the corpus (Miner et al. 2012). Eight papers mentioned the stop words list used: Layman et al. (2016) and Pettinato et al. (2019) used the SMART stop words list;Footnote 5 Martin et al. (2015) and Hindle et al. (2013) used the Natural Language Toolkit English stop words list;Footnote 6 Bagherzadeh and Khatchadourian (2019), Ahmed and Bagherzadeh (2018) and Yan et al. (2016b) used the Mallet stop words list;Footnote 7 and Mezouar et al. (2018) used the Moby stop words list.Footnote 8

As can be seen in Table 9, some papers removed words based on the frequency of their occurrence (most or least frequent terms) or length (words shorter than four, three or two letters or long terms). Other papers removed long paragraphs. For example, Henß et al. (2012) removed paragraphs longer than 800 characters because most paragraphs in their data set were shorter than that. We also found two papers that removed short documents: Gorla et al. (2014) removed documents with fewer than ten words, and Palomba et al. (2017) removed documents with fewer than three words. The concept of non-informative content depends on the context of each paper. In general, it refers to any data considered not relevant for the objective of the study. For example, Choetkiertikul et al. (2017), which aimed at predicting bugs in issue reports, removed issues that took too much time to be resolved. Noei et al. (2019) and Fu et al. (2015) removed content (end user reviews and commit messages) that did not describe feedback or cause of change.
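The most frequent pre-processing steps reported above can be chained into a small pipeline: splitting identifiers, removing stop words and short words, stemming, and removing infrequent terms. The sketch below uses only the Python standard library, and several of its parts are hypothetical stand-ins: the tiny stop word list, the minimum word length, the document-frequency threshold, and the suffix-stripping `crude_stem` function. A real study would use a published stop word list, such as those named above, and a proper stemmer such as Porter's.

```python
import re
from collections import Counter

STOP_WORDS = {"a", "an", "the", "is", "to", "of", "and", "in", "when", "on"}  # hypothetical, tiny

def split_identifier(token):
    """Split camelCase, acronyms and snake_case, e.g. getUserName -> get, user, name."""
    words = []
    for part in re.split(r"[_\W]+", token):
        words += re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", part)
    return [w.lower() for w in words]

def crude_stem(word):
    """Hypothetical suffix stripper standing in for a real stemmer (e.g., Porter)."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(docs, min_len=3, min_doc_freq=2):
    """Tokenize, split identifiers, drop stop/short words, stem, then drop rare terms."""
    tokenized = []
    for doc in docs:
        tokens = []
        for raw in doc.split():
            for word in split_identifier(raw):
                if word in STOP_WORDS or len(word) < min_len:
                    continue
                tokens.append(crude_stem(word))
        tokenized.append(tokens)
    # document frequency: the number of documents in which each term occurs
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    return [[t for t in tokens if doc_freq[t] >= min_doc_freq] for tokens in tokenized]

reports = ["NullPointerException when calling getUserName",
           "getUserName returns null on empty session",
           "Session timeout crashes the login page"]
print(preprocess(reports))
```

The order of the steps matters. For example, identifiers must be split before stop words are removed, and rare terms can only be detected after stemming has merged word variants.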

5.5 RQ4: Topic Naming

Topic naming is about assigning labels (names) to topics (word clusters) to give the clusters a human-understandable meaning. Seventy-five papers (out of 111) did not mention whether or how topics were named. These papers only used the word clusters for analysis and did not require a name. For example, Xia et al. (2017a) and Canfora et al. (2014) did not name topics, but mapped the word clusters to the documents (search queries and source code comments) used as input for topic modeling. These papers used the probability of a document belonging to a topic (𝜃) to assign each document to the topic with the highest probability.
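Assigning each document to its most probable topic, as described above, amounts to taking the argmax of each row of the document-topic matrix 𝜃. A minimal sketch with a hypothetical 𝜃 (ties go to the lower topic index):

```python
def dominant_topics(theta):
    """Return, for each document, the index of the topic with the highest probability."""
    return [max(range(len(row)), key=row.__getitem__) for row in theta]

theta = [[0.70, 0.20, 0.10],   # document 0
         [0.05, 0.15, 0.80],   # document 1
         [0.30, 0.45, 0.25]]   # document 2
print(dominant_topics(theta))  # [0, 2, 1]
```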

From the 36 papers (out of 111) that mentioned topic naming (see Table 10), we identified three ways of how they named topics:

-

Automated: Assigning names to word clusters without human intervention;

-

Manual: Manually checking the meaning and the combination of words in a cluster to “deduce” a name, sometimes validated with expert judgment;

-

Manual & Automated: Mix of manual and automated; e.g., topics are manually labeled for one set of clusters to then train a classifier for naming another set of clusters.

Most of the papers (30 out of 36) assigned one name to one topic. However, we identified six papers that used one name for multiple topics (Hindle et al. 2013; Pagano and Maalej 2013; Bajracharya and Lopes 2012; Rosen and Shihab 2016) or labeled a topic with multiple names (Zou et al. 2017; Gao et al. 2018). Two of the papers (Hindle et al. 2013; Bajracharya and Lopes 2012) that assigned one name to multiple topics used predefined labels, and in the other two papers (Pagano and Maalej 2013; Rosen and Shihab 2016) the authors interpreted the words in the clusters to deduce names.

Regarding the papers that assigned multiple names to a topic, Zou et al. (2017) assigned no, one or more names, depending on how many words in the predefined word list matched words in clusters. Gao et al. (2018) used an automated approach to label topics with the three most relevant phrases and sentences from the end user reviews used as input to their topic model. The relevance of phrases and sentences was obtained with the Semantic and Sentiment scores proposed by these authors.
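The predefined-word-list strategy described for Zou et al. (2017) can assign no, one, or several names to a topic. It can be sketched as a keyword-overlap check between a topic's top words and the word list of each candidate label. The label vocabulary and the `min_overlap` threshold below are hypothetical and are not taken from that study.

```python
def name_topic(top_words, label_keywords, min_overlap=1):
    """Return every label whose keyword list shares at least `min_overlap` words with the topic."""
    words = set(top_words)
    return sorted(label for label, keywords in label_keywords.items()
                  if len(words & set(keywords)) >= min_overlap)

LABELS = {  # hypothetical predefined word lists
    "performance": ["slow", "memory", "cpu", "latency"],
    "security": ["password", "encryption", "vulnerability"],
    "usability": ["ui", "button", "confusing", "slow"],
}
print(name_topic(["app", "slow", "memory", "leak"], LABELS))  # ['performance', 'usability']
print(name_topic(["login", "password", "reset"], LABELS))     # ['security']
print(name_topic(["release", "version", "update"], LABELS))   # []
```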

6 Discussion

6.1 RQ1: Topic Modeling Techniques

6.1.1 Summary of Findings

LDA is the most frequently used topic model. Almost all papers (95 out of 111) applied LDA or an LDA-based technique, while nine papers applied LSI to identify topics and seven papers used both LDA and LSI. Regarding the papers that used LDA-based techniques, eleven (out of 30) proposed their own LDA-based technique (Fu et al. 2015; Nguyen et al. 2011; Liu et al. 2017; Cao et al. 2017; Panichella et al. 2013; Yan et al. 2016a; Xia et al. 2017b; Nguyen et al. 2012; Damevski et al. 2018; Gao et al. 2018; Rao and Kak 2011). This may indicate that the default implementation of LDA is not always adequate to support specific software engineering tasks or to extract meaningful topics from all types of data. We discuss topic modeling techniques and their inputs further in Section 6.2.2. Furthermore, we found that topic modeling is used to develop tools and methods to support software engineers and concrete tasks (the most frequently supported task we found was bug handling), but also as a data analysis technique for textual data to explore empirical questions (see, for example, the “oldest” paper in our sample, published in 2009 (Bajracharya and Lopes 2009)).

One aspect that we did not specifically address in this review, but which impacts the applicability of topic models, is their computational overhead. Computational overhead refers to the processing time and computational resources (e.g., memory, CPU) required for topic modeling. As discussed by others, topic modeling can be computationally intensive (Hoffman et al. 2010; Treude and Wagner 2019; Agrawal et al. 2018). However, we found that only a few papers (seven out of 111) mentioned computational overhead at all. Of these seven papers, five mentioned processing time (Bavota et al. 2014b; Zhao et al. 2020; Luo et al. 2016; Moslehi et al. 2016; Chen et al. 2020), one paper mentioned computational requirements and some processing times (e.g., processor, data pre-processing time, LDA processing time and clustering processing time), and one paper only mentioned that their technique ran in “few seconds” (Murali et al. 2017). Hence, based on the reviewed studies, we cannot provide broader insights into the practical applicability and potential constraints of topic modeling with regard to computational overhead.

6.1.2 Comparative Studies

As mentioned in Sections 5.2.1 and 5.2.3, we identified studies that used more than one topic modeling technique and compared their performance. In detail, we found studies that (1) compared topic modeling techniques to information extraction techniques, such as the Vector Space Model (VSM), an algebraic model (Salton et al. 1975) (see Table 11), (2) proposed an approach that uses a topic modeling technique and compared it to other approaches (which may or may not use topic models) with similar goals (see Table 12), and (3) compared the performance of different settings for a topic modeling technique or for a newly proposed approach that utilizes topic models (see Table 13). The “Metric” column of Tables 11, 12 and 13 lists the metrics used in the comparisons to decide which techniques performed “better” (based on the metrics’ interpretation). Metrics in bold were proposed for or adapted to a specific context (e.g., SCORE and Effort reduction), while the other metrics are standard NLP metrics (e.g., Precision, Recall and Perplexity). Details about the metrics used to compare the techniques are provided in Appendix A.2 - Metrics Used in Comparative Studies.

As shown in Table 11, ten papers compared topic modeling techniques to information extraction techniques. For example, Rosenberg and Moonen (2018) compared LSI with two other dimensionality reduction techniques (PCA and NMF) to group log messages of failing continuous deployment runs. Nine out of these ten papers presented explorations, i.e., studies that experimented with different models to discuss their application to specific software engineering tasks, such as bug handling, software documentation and maintenance. Thomas et al. (2013), on the other hand, experimented with multiple models to propose a framework for bug localization in source code that applies the best-performing model.

Four papers in Table 11 (De Lucia et al. 2014; Tantithamthavorn et al. 2018; Abdellatif et al. 2019; Thomas et al. 2013) compared the performance of LDA, LSI and VSM on source code and issue/bug reports. Except for De Lucia et al. (2014), these studies applied Top-k accuracy (see Appendix A.2 - Metrics Used in Comparative Studies) to measure the performance of models, and the best-performing model was VSM. Tantithamthavorn et al. (2018) found that VSM achieves both the best Top-k performance and the least required effort for method-level bug localization. Additionally, according to De Lucia et al. (2014), VSM possibly performed better than LSI and LDA due to the nature of the corpus used in their study: LDA and LSI are ideal for heterogeneous collections of documents (e.g., user manuals from different systems), but in the study by De Lucia et al. (2014) each corpus was a collection of code classes from a single software system.
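Top-k accuracy is commonly defined as the fraction of queries (e.g., bug reports) for which at least one relevant item (e.g., a buggy file or method) appears among the k highest-ranked results. The minimal sketch below follows that definition, and the rankings in it are hypothetical. The exact metric definitions used in the compared studies are summarized in Appendix A.2.

```python
def top_k_accuracy(rankings, relevant, k):
    """Fraction of queries with at least one relevant item among the top-k ranked items.

    rankings: one ranked result list per query.
    relevant: one set of relevant items per query.
    """
    hits = sum(1 for ranked, rel in zip(rankings, relevant) if set(ranked[:k]) & rel)
    return hits / len(rankings)

rankings = [["A.java", "B.java", "C.java"],
            ["C.java", "A.java", "B.java"],
            ["B.java", "C.java", "A.java"]]
relevant = [{"A.java"}, {"B.java"}, {"A.java"}]
print(top_k_accuracy(rankings, relevant, k=1))  # one hit out of three queries
print(top_k_accuracy(rankings, relevant, k=3))  # 1.0
```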

Ten studies proposed an approach that uses a topic modeling technique and compared it to similar approaches (shown in Table 12). In column “Approaches compared” of Table 12, the approach in bold is the one proposed by the study (e.g., Cao et al. 2017) or the topic modeling technique used in their approach (e.g., Thomas et al. 2014). All newly proposed approaches were the best performing ones according to the metrics used.

In addition to the papers mentioned in Tables 11 and 12, four papers compared the performance of different settings for a topic modeling technique or tested which topic modeling technique works best in their newly proposed approach (see Table 13). Biggers et al. (2014) offered specific recommendations for configuring LDA when localizing features in Java source code, and observed that certain configurations outperform others. For example, they found that commonly used heuristics for selecting LDA hyperparameter values (β = 0.01 or β = 0.1) in source code topic modeling are not optimal (similar to what has been found by others, see Section 3.2). The other three papers (Chen et al. 2014; Fowkes et al. 2016; Poshyvanyk et al. 2012) developed approaches that were tested with different settings (e.g., the approach applying LDA or ASUM (Chen et al. 2014)).

Regarding the datasets used by comparative studies, only Rao and Kak (2011) used a benchmarking dataset (iBUGS). Most of the comparative studies (13 out of 24) used source code or issue/bug reports from open source software, which evolve over time. The advantage of using benchmarking datasets rather than “living” datasets (e.g., an open source Java system) is that their data are static and the same across studies. Additionally, data in benchmarking datasets are usually curated. As a result, the results of a replication study can be compared to those of the original study when both use the same benchmarking dataset.

Finally, we highlight that each of the above mentioned comparisons has a specific context. This means that, for example, the type of data analyzed (e.g., Java classes), the parameter setting (e.g., k = 50), the goal of the comparison (e.g., to select the best model for bug localization or for tracing documentation in source code) and pre-processing (e.g., stemming and stop word removal) were different. Therefore, it is not possible to “synthesize” the results from the comparisons across studies by aggregating the different comparisons in different papers, even for studies that appear to have similar goals or use the same topic modeling techniques, such as comparing the same models with similar types of data (such as Tantithamthavorn et al. 2018 and Abdellatif et al. 2019).

6.2 RQ2: Inputs to Topic Models

6.2.1 Summary of Findings

Source code, developer communication and issue/bug reports were the most frequent types of data used for topic modeling in the reviewed papers. Consequently, most of the documents referred to individual or groups of functions or methods, individual Q&A posts, or individual bug reports; another frequent document was an individual user review (more discussions are in Section 6.2.3). We also found that few papers (16 out of 111) mentioned the actual length of documents used for topic modeling (we discuss this more in Section 6.2.2).

Regarding modeling parameters, most of the papers (93 out of 111) explicitly mentioned the configuration of at least one parameter, e.g., k, α or β for LDA. We observed that the setting α = 50/k and β = 0.01 (α depending on the number of topics, β fixed), as suggested by Steyvers and Griffiths (2010) and Wallach et al. (2009), was frequently used (28 out of 93 papers). Additionally, papers that applied LDA mostly used the default parameters of the tools used to implement LDA (e.g., Mallet (see Footnote 3), with α = 50/k and β = 0.01 as defaults). This finding is similar to what has been reported by others; e.g., according to another review by Agrawal et al. (2018), LDA is frequently applied “as is out-of-the-box” or with little tuning. This means that studies may rely on the default settings of the tools used with their topic modeling technique, such as Mallet and TMT, rather than trying to optimize parameters.

6.2.2 Documents and Parameters for Topic Models

Short texts: According to Lin et al. (2014), topic models such as LDA have been widely adopted and successfully used with traditional media like edited magazine articles. However, applying LDA to informal communication text such as tweets, comments on blog posts, instant messages, or Q&A posts may be less successful. Such user-generated content is characterized by very short documents, a large vocabulary and a potentially broad range of topics. As a consequence, there are not enough words in a document to create meaningful clusters, which compromises the performance of topic modeling. This means that probabilistic topic models such as LDA perform sub-optimally when applied “as is” to short documents, even when hyperparameters (α and β in LDA) are optimized (Lin et al. 2014). In our sample, only two papers mentioned the use of an LDA-based technique specifically designed for short documents (Hu et al. 2019; Hu et al. 2018); both applied Twitter-LDA to end user reviews. Furthermore, Moslehi et al. (2018) used a weighting algorithm in documents to generate topics with more relevant words; they also acknowledged that the use of a short text technique could have improved their topic model.

As shown in Table 7, few papers mentioned the actual length of documents. Considering a single document from a corpus, we observed that most papers potentially used short texts (all documents found in papers are shown in Fig. 3). For example, papers used an individual search query (Xia et al. 2017a), an individual Q&A post (Barua et al. 2014), an individual user review (Nayebi et al. 2018), or an individual commit message (Canfora et al. 2014) as a document. Among the papers that mentioned document length, the shortest documents were an individual commit message (9 to 20 words) (Canfora et al. 2014) and an individual method (14 words) (Tantithamthavorn et al. 2018). Both studies applied LDA.

Two approaches to improve the performance of LDA when analyzing short documents are pooling and contextualization (Lin et al. 2014). Pooling refers to aggregating similar (e.g., semantically or temporally related) documents into a single document (Mehrotra et al. 2013). For example, among the papers analyzed, Pettinato et al. (2019) used temporal pooling and combined short log messages into a single document based on their temporal order. Contextualization refers to creating subsets of documents according to a type of context; considering tweets as documents, the context can be defined by time, user, or the hashtags associated with tweets (Tang et al. 2013). For example, Weng et al. (2010) combined all the individual tweets of an author into one pseudo-document (rather than treating each tweet as a document). Therefore, with the contextualization approach, the topic model uses word co-occurrences at the context level instead of the document level to discover topics.
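The two aggregation strategies can be sketched in Python. This is a minimal illustration under our own assumptions, not the procedure of Pettinato et al. (2019) or Weng et al. (2010): the hypothetical `temporal_pooling` groups (timestamp, text) pairs into fixed time windows, and the hypothetical `author_pooling` builds one pseudo-document per author.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def temporal_pooling(messages, window):
    """Pool (timestamp, text) pairs into one pseudo-document per time window.

    Messages are sorted by time; a new pool starts when a message is at least
    `window` after the first message of the current pool.
    """
    pools, current, start = [], [], None
    for ts, text in sorted(messages):
        if start is None or ts - start >= window:
            if current:
                pools.append(" ".join(current))
            current, start = [], ts
        current.append(text)
    if current:
        pools.append(" ".join(current))
    return pools


def author_pooling(posts):
    """Combine all (author, text) posts of each author into one pseudo-document."""
    by_author = defaultdict(list)
    for author, text in posts:
        by_author[author].append(text)
    return {author: " ".join(texts) for author, texts in by_author.items()}


logs = [
    (datetime(2020, 1, 1, 10, 0), "connection opened"),
    (datetime(2020, 1, 1, 10, 2), "request timeout"),
    (datetime(2020, 1, 1, 11, 0), "disk full"),
]
pooled_logs = temporal_pooling(logs, timedelta(minutes=5))
pooled_posts = author_pooling([("alice", "tests fail"), ("bob", "build ok"), ("alice", "fix ci")])
```

The pooled strings would then replace the original short texts as the documents given to a topic model; the window size is a tuning choice that the sketch leaves to the caller.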

Hyperparameters: Table 14 shows the hyperparameter settings and types of data of the papers that mentioned the value of at least one model parameter. In Table 14 we also highlight the topic modeling techniques used. Note that some topic modeling techniques (e.g., RTM) can receive more parameters than the ones listed in Table 14 (e.g., number of documents, similarity thresholds); all parameters mentioned in papers are available online in the raw data of our study (Footnote 1). When comparing hyperparameter settings, topic modeling techniques and types of data, we observed the following:

- Papers that used LDA-GA, an LDA-based technique that optimizes hyperparameters with genetic algorithms, applied it to data from developer documentation or source code;
- LDA was used with all three types of hyperparameter settings across studies. The most common setting was α based on k for developer communication and source code;
- Most of the LDA-based techniques applied fixed values for α and β.
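As an illustration of an α-based-on-k setting, one widely used rule of thumb (an assumed example, not necessarily the formula used by the surveyed papers) is:

$$
\alpha = \frac{50}{k}, \qquad \text{e.g., } k = 20 \Rightarrow \alpha = 2.5, \qquad k = 100 \Rightarrow \alpha = 0.5
$$

With this rule the total prior mass kα stays at 50, so the per-topic prior becomes smaller as k grows; values of α below 1 favor sparse document-topic distributions.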

Most of the papers that applied only LSI as the topic modeling technique did not mention hyperparameters. As LSI is a simpler model than LDA (it has no Dirichlet priors such as α and β), it generally requires only the number of topics k. Nevertheless, one paper that applied LSI to source code mentioned both α and k (Poshyvanyk et al. 2012).

Number of topics: By relating the type of data to the number of topics, we aimed at finding out whether the choice of the number of topics is related to the data used with the topic modeling techniques (see also Table 7). However, both the numbers of topics and the data used in the studies are rather diverse. Therefore, our ability to synthesize practices from previous studies and offer insights on how to choose the number of topics is limited.

Of the 90 papers that mentioned the number of topics (k), 66 papers selected a specific number of topics (e.g., based on previous work with similar data or addressing the same task), while 24 papers used several numbers of topics (e.g., Yan et al. (2016b) used 10 to 120 topics in steps of 10). To illustrate how the number of topics differed even when the same type of data was analyzed with the same topic modeling technique, we looked at studies that applied LDA to textual data from developer communication (mostly Q&A posts) to propose an approach to support documentation. Among these papers, one did not mention k (Henß et al. 2012), one modeled different numbers of topics (k = 10, 20, 30) (Asuncion et al. 2010), one modeled k = 15 (Souza et al. 2019) and another modeled k = 40 (Wang et al. 2015). This illustrates that no common or recommended practice can be derived from the papers.
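A sweep such as the one of Yan et al. (2016b) can be organized as a loop over candidate values of k that keeps the value with the best score. In this Python sketch, the `score` callback is a hypothetical placeholder for training a model with k topics and returning a metric (e.g., topic coherence, where higher is better, or perplexity, where lower is better); no specific topic modeling library is assumed.

```python
from typing import Callable, Dict, Iterable


def select_k(candidates: Iterable[int],
             score: Callable[[int], float],
             higher_is_better: bool = True) -> Dict[str, object]:
    """Score every candidate number of topics and return the best one.

    `score` is a hypothetical callback that would train a topic model with
    k topics and return an evaluation metric for it.
    """
    scores = {k: score(k) for k in candidates}
    if not scores:
        raise ValueError("at least one candidate k is required")
    pick = max if higher_is_better else min
    best_k = pick(scores, key=scores.get)  # ties resolve to the candidate tried first
    return {"best_k": best_k, "scores": scores}


# Candidate values from 10 to 120 in steps of 10.
candidates = list(range(10, 121, 10))
```

For instance, with a toy score function peaking at 40, `select_k(candidates, lambda k: -(k - 40) ** 2)["best_k"]` returns 40. Keeping all scores (not only the best k) makes it possible to report the tested range, which several papers omitted.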

Some papers mentioned that they tested several numbers of topics before selecting the most appropriate value for k (with regard to the studies’ goals), but did not mention the range of values tested. Among the papers that mentioned such a range, four studies (Nayebi et al. 2018; Chen et al. 2014; Layman et al. 2016; Nabli et al. 2018) tested several values for k and used the perplexity of models (see details in Appendix A.2 - Metrics Used in Comparative Studies) to evaluate which value of k generated the best performing model; three studies (Zhao et al. 2020; Han et al. 2020; El Zarif et al. 2020) also selected the number of topics after testing several values for k, but used topic coherence (Röder et al. 2015) to evaluate models. One paper (Haque and Ali Babar 2020) used both perplexity and topic coherence to select a value for k. Topic coherence metrics score the probability of pairs of words from the resulting word clusters being found together in (a) external data sources (e.g., Wikipedia pages) or (b) the documents used by the topic model that generated those word clusters (Röder et al. 2015).
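As an example of option (b), the UMass coherence measure (one of the measures covered by Röder et al. 2015) scores a topic’s top words by how often they co-occur in the modeled documents. The Python sketch below is a plain, unoptimized implementation for illustration, and the toy corpus is invented. Perplexity, in contrast, is the exponential of the negative average per-word log-likelihood of held-out documents, so lower values indicate a better fit.

```python
import math


def umass_coherence(top_words, documents):
    """UMass coherence of one topic, computed on the modeled corpus.

    top_words: the topic's words, ordered by decreasing probability.
    documents: token lists (the documents used to train the topic model).
    Sums log((D(w_m, w_l) + 1) / D(w_l)) over all word pairs with l < m,
    where D(.) counts the documents that contain all the given words.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        return sum(1 for d in doc_sets if all(w in d for w in words))

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            d_l = doc_freq(top_words[l])
            if d_l == 0:
                continue  # skip words missing from the corpus (avoids dividing by zero)
            score += math.log((doc_freq(top_words[m], top_words[l]) + 1) / d_l)
    return score


corpus = [["bug", "crash", "fix"], ["bug", "fix"], ["ui", "button"], ["crash", "log"]]
coherent = umass_coherence(["bug", "fix", "crash"], corpus)
incoherent = umass_coherence(["bug", "button", "log"], corpus)
```

Here `coherent` is about 0.41 and `incoherent` about -1.39; averaging such scores over all topics of a model yields one number per candidate k.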

6.2.3 Supported Tasks, Types of Data and Types of Contribution

We looked into the relationship between the tasks supported by papers, the type of data used and the types of contributions (see Table 15). We observed the following:

- Source code was a frequent type of data in papers; consequently, it appeared for almost all supported tasks except exploratory studies;
- Considering exploratory studies, most papers used developer communication (13 out of 21), followed by search queries and end user communication (three papers each);
- Papers that supported bug handling mostly used issue/bug reports, source code and end user communication;
- Log information was used by papers that supported maintenance, bug handling, and coding;
- Of the papers that supported documentation, three used transcripts of speech;
- Of the four papers that used developer documentation, two supported architecting tasks and the other two supported documentation tasks;
- Regarding the type of data, URLs and transcripts were only used in studies that contributed an approach.

We found that most of the exploratory studies used less structured data. For example, developer communication such as Q&A posts and conversation threads generally does not follow a standardized template. On the other hand, issue reports are typically submitted through forms, which enforce a certain structure.

6.3 RQ3: Data Pre-processing

6.3.1 Summary of Findings

Most of the papers (91 out of 111) pre-processed the textual data before topic modeling. Removing noisy content was the most frequent pre-processing step (as is typical in natural language processing), followed by stemming and splitting words. Miner et al. (2012) consider tokenizing one of the basic data pre-processing steps in text mining. However, compared to other basic pre-processing steps such as stemming, splitting words and removing noise, tokenizing was rarely found in papers (or at least it was not explicitly mentioned).
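Because tokenizing is rarely reported, it may help to see where it sits in a basic pipeline. The following Python sketch tokenizes, lowercases, removes stop words and stems. The stop word list is a tiny illustrative sample, and `crude_stem` is a hypothetical toy suffix stripper standing in for a real stemmer such as Porter’s; neither comes from the analyzed papers.

```python
import re

# Tiny illustrative stop word list; real studies use much longer lists.
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "in", "it"}
# Suffixes tried in this order (longer variants first); a toy, not Porter's rules.
SUFFIXES = ("ment", "ing", "ers", "er", "ed", "s")


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def crude_stem(token):
    """Strip one suffix if at least three characters remain.

    Words ending in "ss" (e.g., "class") are left unchanged.
    """
    for suffix in SUFFIXES:
        if (token.endswith(suffix) and not token.endswith("ss")
                and len(token) - len(suffix) >= 3):
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Tokenize, drop stop words, then stem the remaining tokens."""
    return [crude_stem(t) for t in tokenize(text) if t not in STOP_WORDS]


example = preprocess("The developers are developing the development tools")
```

Here `example` is `["develop", "develop", "develop", "tool"]`. Writing the pipeline down this explicitly also makes it straightforward to report every step, including tokenizing.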

Eight papers (Henß et al. 2012; Xia et al. 2017b; Ahasanuzzaman et al. 2019; Abdellatif et al. 2019; Lukins et al. 2010; Tantithamthavorn et al. 2018; Poshyvanyk et al. 2012; Binkley et al. 2015) tested how pre-processing steps affected the performance of topic modeling or topic model-based approaches. For example, Henß et al. (2012) tested several pre-processing steps (e.g., removing stop words, long paragraphs and punctuation) in e-mail conversations analyzed with LDA. They found that removing such content increased LDA’s capability to grasp the actual semantics of software mailing lists. Ahasanuzzaman et al. (2019) proposed an approach which applies LDA and Conditional Random Field (CRF) to localize concerns in Stack Overflow posts. The authors did not incorporate stemming and stop words removal in their approach because in preliminary tests these pre-processing steps decreased the performance of the approach.

6.3.2 Pre-processing Different Types of Data

Table 16 shows how different types of data were pre-processed. We observed that stemming, removing noise, lowercasing, and splitting words were commonly used for all types of data. Regarding the differences, we observed the following:

- For developer communication, specific types of noisy content were removed: URLs, HTML tags and code snippets. This might be because most of these papers used Q&A posts as documents, which frequently contain hyperlinks and code examples;
- Removing non-informative content was frequently applied to end user communication and end user documentation;
- Expanding contracted terms (e.g., “didn’t” to “did not”) was applied to end user communication and issue/bug reports;
- Removing empty documents and eliminating extra white space were applied only to end user communication. Empty documents occurred in this type of data because no content was left after the removal of stop words (Chen et al. 2014);
- For source code, there was a specific type of noise to be removed: programming-language-specific keywords (e.g., “public”, “class”, “extends”, “if”, and “while”).
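Several of these type-specific steps can be sketched with regular expressions. This Python sketch assumes that Q&A post bodies are HTML with code in `<pre>` or `<code>` elements (as on Stack Overflow) and uses a small hypothetical contraction table; all function names are ours, and the sketch does not cover keyword removal for source code.

```python
import re

# Small, hypothetical contraction table (real lists are longer).
CONTRACTIONS = {"didn't": "did not", "don't": "do not", "can't": "cannot", "it's": "it is"}
CODE_RE = re.compile(r"<(pre|code)\b[^>]*>.*?</\1>", re.DOTALL | re.IGNORECASE)
TAG_RE = re.compile(r"<[^>]+>")
URL_RE = re.compile(r"https?://\S+")


def clean_post(html):
    """Remove code snippets, HTML tags and URLs from a Q&A post body."""
    text = CODE_RE.sub(" ", html)   # code elements first, so their content goes too
    text = TAG_RE.sub(" ", text)    # remaining markup such as <p>
    text = URL_RE.sub(" ", text)    # hyperlinks written as plain text
    return " ".join(text.split())   # eliminate extra white space


def expand_contractions(text):
    """Lowercase the text and expand the contractions listed in CONTRACTIONS."""
    return " ".join(CONTRACTIONS.get(w, w) for w in text.lower().split())


def drop_empty_documents(documents, stop_words):
    """Remove stop words, then discard documents with no tokens left."""
    kept = []
    for doc in documents:
        tokens = [t for t in doc.lower().split() if t not in stop_words]
        if tokens:
            kept.append(tokens)
    return kept
```

Removing code elements before the remaining tags matters: stripping tags first would leave the code text behind as ordinary words.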

Table 16 shows that splitting words, stop words removal and stemming were frequently applied to source code, and most of these studies (15) applied all three steps together. Studies that performed these pre-processing steps on source code mostly used methods, classes, or comments in classes/methods as documents. For example, Silva et al. (2016), who applied LDA, performed these three pre-processing steps on classes from two open source systems using TopicXP (Savage et al. 2010). TopicXP is an Eclipse plug-in that extracts source code, pre-processes it and executes LDA; it implements splitting words, stop words removal and stemming.

Splitting words was the most frequent pre-processing step for source code. Studies used this step to split camel-case identifiers of methods and classes (e.g., the class constructor InvalidRequestTest produces the terms “invalid”, “request” and “test”). For example, Tantithamthavorn et al. (2018) compared LDA, LSI and VSM, applying different combinations of pre-processing steps to the method identifiers given as input to these techniques. The best performing approach was VSM with splitting words, stop words removal and stemming.
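Splitting identifiers and dropping keywords can be sketched as follows. The regular expression, the partial Java keyword set and the stop word set are our assumptions for illustration (the analyzed papers used their own tools, e.g., TopicXP); the sketch only handles camelCase, PascalCase, acronyms, digits and underscores.

```python
import re

# Partial, illustrative set of Java keywords (Java assumed as the target language).
JAVA_KEYWORDS = {"public", "private", "static", "void", "class", "extends",
                 "if", "else", "while", "for", "return", "new"}
ENGLISH_STOP_WORDS = {"a", "an", "the", "of", "to"}
# Acronym before a capitalized word | capitalized or lowercase word | acronym | number
PART_RE = re.compile(r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+")


def split_identifier(identifier):
    """Split an identifier such as InvalidRequestTest into lowercase terms."""
    terms = []
    for chunk in re.split(r"[_\W]+", identifier):  # snake_case and punctuation
        terms.extend(part.lower() for part in PART_RE.findall(chunk))
    return terms


def preprocess_code(source):
    """Drop language keywords, split identifiers, then remove stop words."""
    terms = []
    for token in re.findall(r"[A-Za-z_][A-Za-z0-9_]*", source):
        if token in JAVA_KEYWORDS:
            continue
        terms.extend(t for t in split_identifier(token) if t not in ENGLISH_STOP_WORDS)
    return terms


example = preprocess_code("public class InvalidRequestTest extends TestCase { }")
```

Here `example` is `["invalid", "request", "test", "test", "case"]`. Keywords are matched before splitting so that a keyword is compared against the complete token rather than against fragments of longer identifiers.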

As in studies that used other types of data, removing stop words from source code refers to excluding the most common words of a natural language (e.g., “a/an” and “the” in English). This is different from removing programming language keywords, and studies reported the two as separate steps. Lukins et al. (2010), for example, tested how removing stop words from their documents (comments and identifiers of methods) affected the topics generated by their LDA-based approach. They found that this step did not substantially improve the results.

As mentioned in Section 5.4, stemming is the process of normalizing words into their root forms by identifying and removing prefixes, suffixes and plural endings (e.g., “development”, “developer” and “developing” become “develop”). Regarding stemming in source code, papers normalized identifiers of classes and methods, comments related to classes and methods, test cases or source code files. Three papers tested the effect of this pre-processing step on the performance of their techniques (Tantithamthavorn et al. 2018; Poshyvanyk et al. 2012; Binkley et al. 2015), and one of these papers also tested removing stop words and splitting words (Tantithamthavorn et al. 2018). Poshyvanyk et al. (2012) tested the effect of stemming classes on the performance of their LSI-based approach. The authors concluded that stemming can positively impact feature location by producing topics (“concept lattices” in their study) that effectively organize the results of searches in source code. Binkley et al. (2015) compared the performance of LSI, QL-LDA and other techniques. They also compared stemming (with two different stemmers: Porter Footnote 9 and Krovetz Footnote 10) against no stemming, using methods from five open source systems, and obtained better performance in terms of Mean Reciprocal Rank (MRR, details in Appendix A.2 - Metrics Used in Comparative Studies) without stemming.
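MRR averages, over all queries, the reciprocal of the rank at which the first relevant result appears; in feature location, a query returns a ranked list of methods. The Python sketch below assumes one set of relevant items per query and counts a query with no relevant item among its results as 0; the method names are made up.

```python
def mean_reciprocal_rank(ranked_results, relevant):
    """Mean Reciprocal Rank over a set of queries.

    ranked_results: one ranked list of retrieved items per query.
    relevant: one set of relevant items per query.
    A query whose results contain no relevant item contributes 0.
    """
    if len(ranked_results) != len(relevant):
        raise ValueError("one set of relevant items is needed per query")
    if not ranked_results:
        return 0.0
    total = 0.0
    for results, gold in zip(ranked_results, relevant):
        for rank, item in enumerate(results, start=1):
            if item in gold:
                total += 1.0 / rank  # only the first relevant hit counts
                break
    return total / len(ranked_results)


ranked = [["m3", "m1", "m2"], ["m5", "m4"], ["m7"]]
gold = [{"m1"}, {"m5"}, {"m9"}]
mrr = mean_reciprocal_rank(ranked, gold)
```

Here the reciprocal ranks are 1/2, 1 and 0, so `mrr` is 0.5; a configuration (e.g., with or without stemming) with a higher MRR places relevant methods closer to the top on average.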

Additionally, we found that even though some papers used the same type of data, they pre-processed data differently since they had different goals and applied different techniques. For example, Ye et al. (2017), Barua et al. (2014) and Chen et al. (2019) used developer communication (Q&A posts as documents). Ye et al. (2017) and Barua et al. (2014) removed stop words, code snippets and HTML tags, and Barua et al. (2014) also stemmed words. On the other hand, Chen et al. (2019) removed stop words as well as the least and the most frequent words, and identified bi-grams. Some studies followed advice on data pre-processing from previous studies (e.g., Chen et al. 2017; Li et al. 2018), while others adopted steps that are commonly used in NLP, such as noise removal and stemming (Miner et al. 2012) (e.g., Demissie et al. 2020). This means that the choice of pre-processing steps does not depend only on the characteristics of the type of data given as input to topic modeling techniques.
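The frequency-based steps mentioned for Chen et al. (2019) can be sketched as follows. The thresholds and the simple count-based bi-gram rule are our assumptions for illustration, not the settings of that paper; established toolkits use more elaborate bi-gram scoring.

```python
from collections import Counter


def filter_by_document_frequency(docs, min_df=2, max_df_ratio=0.5):
    """Drop words found in fewer than min_df documents or in more than
    max_df_ratio of all documents (hypothetical default thresholds)."""
    df = Counter(word for doc in docs for word in set(doc))
    n = len(docs)
    keep = {w for w, c in df.items() if c >= min_df and c / n <= max_df_ratio}
    return [[w for w in doc if w in keep] for doc in docs]


def merge_frequent_bigrams(docs, min_count=2):
    """Join adjacent word pairs seen at least min_count times into one token."""
    counts = Counter(pair for doc in docs for pair in zip(doc, doc[1:]))
    frequent = {pair for pair, c in counts.items() if c >= min_count}
    merged_docs = []
    for doc in docs:
        merged, i = [], 0
        while i < len(doc):
            if i + 1 < len(doc) and (doc[i], doc[i + 1]) in frequent:
                merged.append(doc[i] + "_" + doc[i + 1])
                i += 2  # consume both words of the bi-gram
            else:
                merged.append(doc[i])
                i += 1
        merged_docs.append(merged)
    return merged_docs


reviews = [["app", "crash", "login"], ["app", "crash"], ["app", "ui"], ["app", "crash", "ui"]]
filtered = filter_by_document_frequency(reviews, min_df=2, max_df_ratio=0.8)
posts = [["null", "pointer", "exception", "thrown"], ["null", "pointer", "check"], ["stack", "trace"]]
merged = merge_frequent_bigrams(posts)
```

In this toy data, "app" (in every document) and "login" (in one document) are filtered out, and "null pointer" becomes the single token `null_pointer`.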

6.4 RQ4: Assigning Names to Topics

Most papers did not mention whether or how they named topics. The majority of papers that explicitly assigned names to topics (27 out of 36) used a manual approach and relied on human judgment (researchers’ interpretation) of the words in clusters. One paper (Rosen and Shihab 2016) justified its use of a manual approach by arguing that there was no tool that could produce human-readable topics from word clusters. Thus, the authors checked every generated word cluster and the documents used (individual questions from a Q&A website) to make sure they labeled topics appropriately.
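Manual naming usually starts from the most probable words of each topic. The Python sketch below, with a hypothetical topic-word matrix and vocabulary, only lists those words; choosing the name remains a human judgment, which (as in Rosen and Shihab 2016) can be informed by reading the documents behind each topic.

```python
def top_words(topic_word, vocabulary, n=5):
    """Return the n most probable words of each topic.

    topic_word: one list of word probabilities per topic, aligned with vocabulary.
    """
    result = []
    for probs in topic_word:
        ranked = sorted(range(len(vocabulary)), key=lambda i: probs[i], reverse=True)
        result.append([vocabulary[i] for i in ranked[:n]])
    return result


vocab = ["bug", "crash", "ui", "button", "fix"]
topic_word = [
    [0.40, 0.30, 0.05, 0.05, 0.20],  # hypothetical topic 0
    [0.05, 0.05, 0.50, 0.30, 0.10],  # hypothetical topic 1
]
candidates_for_naming = top_words(topic_word, vocab, n=3)
```

A researcher would then inspect lists such as `["bug", "crash", "fix"]` together with sample documents before settling on a name.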

Table 17 shows how topics were named and the type of data analyzed. Table 18 shows how topics were named and the type of contributions they make. We observed the following:

- Studies that modeled topics from developer documentation, transcripts and URLs did not mention topic naming. Studies that contributed both an exploration and a comparison also did not mention topic naming;
- Topics were mostly named in studies that used data from developer communication (ten occurrences) and in exploratory studies (22 occurrences);
- Of the studies that compared topic models or topic modeling-based approaches (see Section 6.1.2), only one study (Yan et al. 2016b) named topics (automatically with predefined labels).